Abstract

This review discusses the challenges in hearing aid design and fitting and the recent developments in advanced signal processing technologies to meet these challenges. The first part of the review discusses the basic concepts and the building blocks of digital signal processing algorithms, namely, the signal detection and analysis unit, the decision rules, and the time constants involved in the execution of the decision. In addition, mechanisms and the differences in the implementation of various strategies used to reduce the negative effects of noise are discussed. These technologies include the microphone technologies that take advantage of the spatial differences between speech and noise and the noise reduction algorithms that take advantage of the spectral difference and temporal separation between speech and noise. The specific technologies discussed in this paper include first-order directional microphones, adaptive directional microphones, second-order directional microphones, microphone matching algorithms, array microphones, multichannel adaptive noise reduction algorithms, and synchrony detection noise reduction algorithms. Verification data for these technologies, if available, are also summarized.

1. Introduction

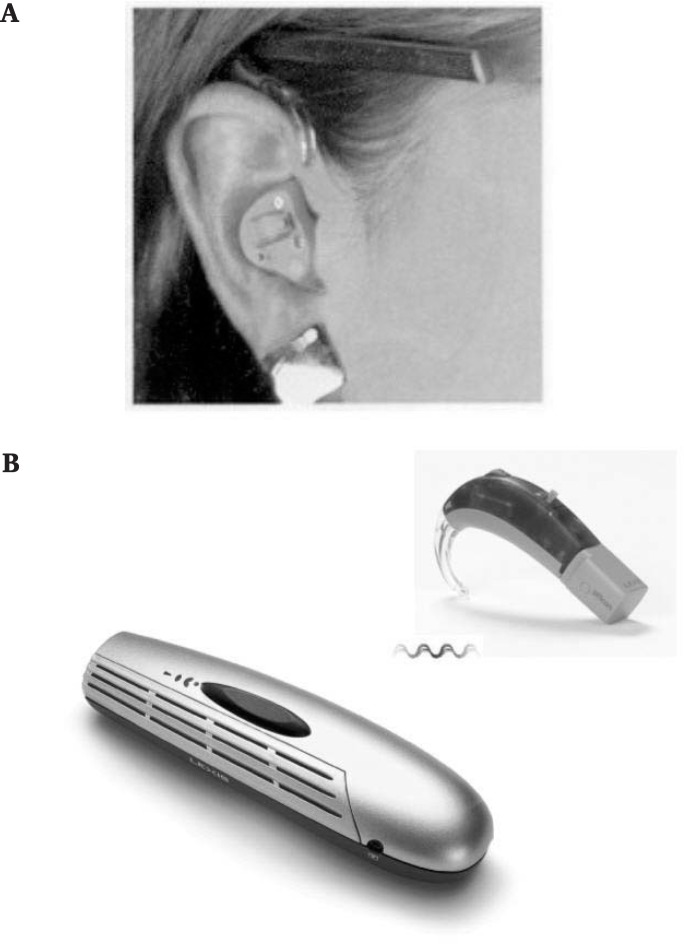

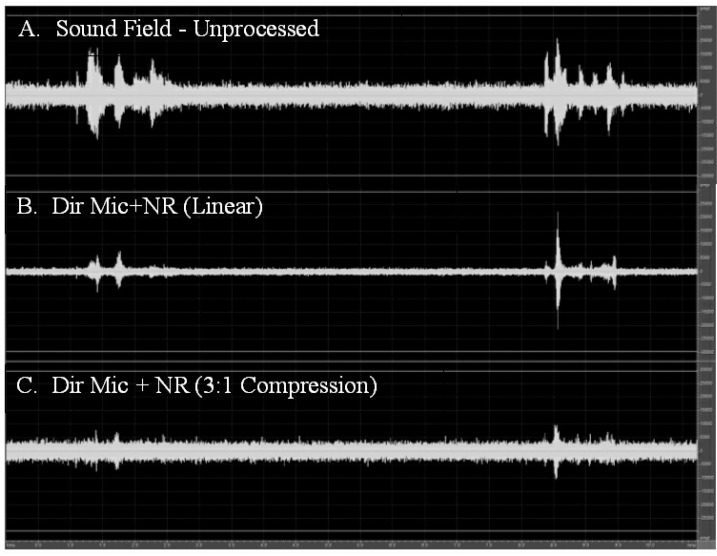

About 10% of the world's population suffers from hearing loss. For these individuals, the most common amplification choice is hearing aids. The hearing aids of today are vastly different from their predecessors because of the application of digital signal processing technologies. With the advances in digital chip designs and the reduction in current consumption, many of the historically unachievable concepts have now been put into practice. This article reviews current hearing aid design and concepts that are specifically attempting to meet the variety of amplification needs of hearing aid users. Some of these technologies and algorithms have been introduced to the consumer market very recently. Validation data on the effectiveness of these technologies, if available, are also discussed.

2. Basics of Hearing Aid Signal Processing Technologies

Four basic concepts in hearing aids and digital signal processing underlie today's advanced signal processing technologies.

2.1. Differentiating Hearing Aids

The first and most basic concept is the differentiation among analog, analog programmable, and digital programmable hearing aids:

In the conventional analog hearing aids, the acoustic signal is picked up by the microphone and converted to an electric signal. The level and the frequency response of the microphone output are then altered by a set of analog filters, and the signal is sent to the receiver. The signal of the analog hearing aids remains continuous throughout the signal processing path.

In analog programmable hearing aids, the electric signal is normally split into two or more frequency channels. The level of the signal in each channel is amplified and processed by a digital control circuit. The parameters of the digital control circuit are programmed via hearing aid fitting software. The signal, however, remains continuous throughout the signal processing path in the analog programmable hearing aids.

In the digital programmable hearing aid (or simply digital hearing aids), the output of the microphone is sampled, quantized, and converted into discrete numbers by analog-to-digital converters. All the signal processing is then carried out in the digital domain by digital filters and algorithms. Upon completion of digital signal processing, the digital signal is converted back to the analog domain by a digital-to-analog converter or a demodulator.
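The sampling and quantizing steps described above can be illustrated with a minimal sketch. The function name, the 16 kHz sampling rate, the 16-bit word length, and the 1 kHz test tone are illustrative assumptions and are not taken from any particular hearing aid.

```python
import math

def sample_and_quantize(signal, duration_s, sample_rate_hz, n_bits):
    """Sample a continuous-time signal (a function of time in seconds with
    values in [-1, 1)) and round each sample to one of 2**n_bits levels."""
    n_levels = 2 ** n_bits
    step = 2.0 / n_levels                         # size of one quantization step
    n_samples = round(duration_s * sample_rate_hz)
    codes = []
    for n in range(n_samples):
        t = n / sample_rate_hz                    # sampling: discrete time instants
        x = signal(t)
        code = int(math.floor(x / step + 0.5))    # quantizing: nearest level
        # Clip to the range an n_bits signed converter can represent.
        code = max(-n_levels // 2, min(n_levels // 2 - 1, code))
        codes.append(code)
    return codes, step

def tone(t):
    """Hypothetical microphone output: a 1 kHz tone at half of full scale."""
    return 0.5 * math.sin(2 * math.pi * 1000 * t)

codes, step = sample_and_quantize(tone, duration_s=0.001,
                                  sample_rate_hz=16000, n_bits=16)
reconstructed = [c * step for c in codes]         # what a D/A stage would rebuild
```

Each integer in `codes` is one of the "discrete numbers" on which the digital filters and algorithms operate; the difference between `reconstructed` and the original tone is the quantization error, which never exceeds half a step for unclipped samples.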

For a detailed explanation of the differences among these hearing aids, please refer to Schweitzer (1997).

2.2. Channels and Bands

Another basic concept of hearing aids is the differentiation between channels and bands. In general, a channel is a signal processing unit for algorithms such as compression, noise reduction, and feedback reduction. The gain control and other functions within the channel operate independently of each other. A band, on the other hand, is a frequency shaping band that is mainly used to control the amount of time-invariant gain in a frequency region. A given channel may have several frequency bands; each is subjected to the same signal processing as the channel in which it resides. Some digital hearing aids have an equal number of channels and bands; for example, the Natura by Sonic Innovations has nine signal processing channels and nine frequency shaping bands. Other digital hearing aids, however, may have different numbers of channels and bands; for example, the Adapto by Oticon has two signal processing channels and seven frequency shaping bands.

In some cases, the above distinctions of the channels and bands are smeared because of a lack of a better term to describe the complexity of the signal processing algorithms implemented in hearing aids. The Oticon Syncro, for example, has four channels of signal processing in the adaptive directional microphone algorithm and eight channels of signal processing for the compression and noise reduction algorithms. To distinguish the two, Oticon chooses to describe the multichannel adaptive directional microphone as a “multiband adaptive directional microphone” and reserve the term channel to describe its compression system and noise reduction algorithm.

With the advances in signal processing technologies, some hearing aids may have many channels and bands, which can become difficult to manage in the hearing aid fitting process. Some manufacturers have grouped the channels and bands into a lesser number of fitting regions to simplify the fitting process. For example, the Canta7 by GNResound has 64 frequency-shaping bands and 14 signal processing channels. These channels and bands are grouped into controls at six frequency regions in the fitting software.

2.3. The Building Blocks of Advanced Signal Processing Algorithms

Recently, many adaptive or automatic features have been implemented in digital hearing aids and most of these features are accomplished by signal processing algorithms. These signal processing algorithms typically have three basic building blocks: a signal detection and analysis unit, a set of decision rules, and an action unit. The signal detection and analysis unit usually has either one or several detectors. These detectors observe the signal for a period of time in an analysis time window and then analyze for the presence or absence of certain pertinent characteristics or calculate a value of relevant characteristics. The output of the detection and analysis unit is subsequently compared with the set of predetermined decision rules. The action unit then executes the corresponding actions according to the decision rules.

An analogy for the building blocks of digital signal processing algorithms can be drawn from the operation of compression systems: The signal detection and analysis unit of the compression system is the level detector that detects and estimates the level of the incoming signal. The set of decision rules in the compression system is the input-output function and the time constants. Specifically, the input-output function specifies the amount of gain at different input levels, whereas the attack and release times determine how fast the change occurs. The action unit carries out the action that is reflected in the output of the compression system.
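The three building blocks in the compression analogy can be sketched in a few lines. This is a simplified illustration rather than any manufacturer's algorithm: the function names, the threshold, the compression ratio, the linear gain, and the smoothing coefficients are all assumed values, levels are expressed in dB relative to full scale, and the attack and release behavior is folded into the level detector for brevity.

```python
import math

def level_detector(samples, attack_coeff, release_coeff):
    """Detection and analysis unit: estimate the signal level in dB."""
    level = 0.0
    levels_db = []
    for x in samples:
        magnitude = abs(x)
        # Rising levels are followed with the attack coefficient,
        # falling levels with the (slower) release coefficient.
        coeff = attack_coeff if magnitude > level else release_coeff
        level = coeff * level + (1.0 - coeff) * magnitude
        levels_db.append(20.0 * math.log10(max(level, 1e-6)))
    return levels_db

def gain_rule_db(level_db, threshold_db=-40.0, ratio=2.0, linear_gain_db=20.0):
    """Decision rule: an input-output function giving the gain per input level."""
    if level_db <= threshold_db:
        return linear_gain_db                     # linear region below threshold
    # Above threshold the output grows by 1/ratio dB per dB of input.
    return linear_gain_db - (level_db - threshold_db) * (1.0 - 1.0 / ratio)

def compress(samples, attack_coeff=0.9, release_coeff=0.999):
    """Action unit: apply the gain selected by the decision rule."""
    levels_db = level_detector(samples, attack_coeff, release_coeff)
    output = []
    for x, level_db in zip(samples, levels_db):
        gain = 10.0 ** (gain_rule_db(level_db) / 20.0)
        output.append(gain * x)
    return output
```

With these assumed settings, an input estimated at -60 dB receives the full 20 dB of gain, whereas an input estimated at -20 dB receives 10 dB. The same detector, rule, and action structure applies to the other adaptive algorithms discussed in this review, with different detectors and different actions.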

2.4. Time Constants of the Advanced Signal Processing Algorithms

Another important concept for advanced signal processing algorithms is the time constants that govern the speed of action. The concept of time constants of an adaptive or automatic algorithm can be described using the example of time constants of a compression system. In a compression system, the attack and release times are defined as the time taken for a predetermined gain change to occur after a specific change in input level. In other words, they tell us how quickly a change in the gain occurs in a compression system when the level of the input changes.

Similarly, the time constants of an adaptive or automatic algorithm tell us the time that the algorithm takes to switch from the default state to another signal processing state (i.e., attack/adaptation/engaging time) and the time that the algorithm takes to switch back to the default state (i.e., release/disengaging time) when the acoustic environment changes. For example, the attack/adaptation/engaging time for an automatic directional microphone algorithm is the time for the algorithm to switch from the default omni-directional microphone to the directional microphone mode when the hearing aid user walks from a quiet street into a noisy restaurant. The release/disengaging time is the time for the algorithm to switch from the directional microphone back to the omni-directional microphone mode when the user exits the noisy restaurant to the quiet street.
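The engaging and disengaging times in the restaurant example can be sketched as a simple timer-based switch. The noise threshold, the two time constants, and the function name are hypothetical; commercial algorithms use proprietary detectors and rules.

```python
def auto_directional(noise_levels_db, frame_s, threshold_db=65.0,
                     engage_s=2.0, disengage_s=5.0):
    """Return the microphone mode ("omni" or "directional") for each frame.

    The mode changes only after the environment has called for the other
    mode continuously for the engaging or disengaging time.
    """
    mode = "omni"                                 # default state
    timer = 0.0
    modes = []
    for level_db in noise_levels_db:
        wants_directional = level_db > threshold_db
        if (mode == "omni") == wants_directional:
            # The environment disagrees with the current mode: keep timing.
            timer += frame_s
            needed = engage_s if mode == "omni" else disengage_s
            if timer >= needed:
                mode = "directional" if mode == "omni" else "omni"
                timer = 0.0
        else:
            timer = 0.0                           # environment matches the mode
        modes.append(mode)
    return modes

# Quiet street (50 dB), noisy restaurant (75 dB), quiet street again,
# analyzed in 1-second frames.
levels = [50.0] * 3 + [75.0] * 4 + [50.0] * 7
modes = auto_directional(levels, frame_s=1.0)
```

In this sketch the switch to the directional mode happens 2 seconds after the noise begins, and the return to the omni-directional mode happens 5 seconds after the noise ends. Shortening either value makes the algorithm more responsive but also more prone to switching on brief level changes.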

For some algorithms, the time constants can also be the switching time from one mode to another. An example is the switching time from one polar pattern to another polar pattern in an adaptive directional microphone algorithm. In addition, time constants can also be associated with tracking speed (e.g., the speed with which a feedback reduction algorithm tracks a change in the feedback path).

The proprietary algorithms from different manufacturers have different time constants, depending on factors such as their hearing aid fitting philosophy, interactions or synergy among other signal processing units, and limitations on signal processing speed. Similar to the dilemma in choosing the appropriate release time in a compression system, there are pros and cons associated with the choices of fast or slow time constants in advanced signal processing algorithms:

Fast engaging and disengaging times can act on the changes in the incoming signal very quickly. Yet they may be overly active and create undesirable artifacts (e.g., the pumping effect generated by a noise reduction algorithm with fast time constants).

Slow engaging and disengaging times may be more stable and have fewer artifacts. However, they may appear to be sluggish and allow the undesirable components of the signal to linger a little longer before any signal processing action is taken.

The general trend in the hearing aid industry is to have variable engaging and disengaging times, similar to the concept of variable release times in a compression system. The exact value of the time constants depends on the characteristics of the incoming signal, the lifestyle of the hearing aid user, and the style and model of the hearing aid, among others.

3. Challenges and Recent Developments in Hearing Aids

Challenge No. 1: Enhancing Speech Understanding and Listening Comfort in Background Noise

Difficulty in understanding speech in noise has been one of the most common complaints of hearing aid users. People with hearing loss often have more difficulty understanding speech in noise than do people with normal hearing. When the ability to understand speech in noise is expressed as the signal-to-noise ratio (SNR) needed to understand 50% of speech (SNR-50), the SNRs-50 of people with hearing loss may be as much as 30 dB higher than those of people with normal hearing. This means that for a given background noise, the speech needs to be as much as 30 dB higher for people with hearing loss to achieve the same level of understanding as people with normal hearing (Baer and Moore, 1994; Dirks et al., 1982; Duquesnoy, 1983; Eisenberg et al., 1995; Festen and Plomp, 1990; Killion, 1997a; Killion and Niquette, 2000; Peters et al., 1998; Plomp, 1994; Tillman et al., 1970; Soede, 2000). The difference in SNRs-50 between people with normal hearing and people with hearing loss is called SNR-loss (Killion, 1997b). The exact amount of SNR-loss depends on the degree and type of hearing loss, the speech materials, and the temporal and spectral characteristics of background noise.

From the signal processing point of view, the relationship between speech and noise can be characterized by their relative occurrences in the temporal, spectral, and spatial domains. Temporally, speech and noise can occur at the same instant or at different instants. Spectrally, speech and noise can have similar frequency content, or they may slightly overlap or have different primary frequency regions. Spatially, noise may originate from the same direction as the targeted speech or from a different spatial angle than the targeted speech. Further, speech and noise can have a constant spatial relationship or their relative positions may vary over time. When a constant spatial relationship exists between speech and noise, both components are fixed in space or both are moving at the same velocity. When their relative position varies over time, the talker, the noise, or both may be moving in space.

One of the most challenging tasks of engineers who design hearing aids is to reduce background noise and to increase speech intelligibility without introducing undesirable distortions. Multiple technologies have been developed in the long history of hearing aids to enhance speech understanding and listening comfort for people with hearing loss. The following section reviews some of the recent developments in two broad categories of noise reduction strategies: directional microphones and noise reduction algorithms.

3.1. Noise Reduction Strategy No. 1: Directional Microphones

Directional microphones are designed to take advantage of the spatial differences between speech and noise. They are second only to personal frequency modulation (FM) or infrared listening systems in improving the SNR for hearing aid users. Directional microphones are more sensitive to sounds coming from the front than sounds coming from the back and the sides. The assumption is that when the hearing aid user engages in conversation, the talker(s) is usually in front and sounds from other directions are undesirable.

In the last several years, many new algorithms have been developed to maintain the performance of directional microphones over time and to maximally attenuate moving or fixed noise source(s) from the back hemisphere. In addition, second-order directional microphones and array microphones with higher directional effects are available to further attenuate noise originating from the back hemisphere. The following section reviews the basics of first-order directional microphones, updates the current research findings, and discusses some of the recent developments in directional microphones.

3.1.1. First-Order Directional Microphones

First-order directional microphones have been implemented in behind-the-ear hearing aids since the 1970s. The performance of modern directional microphones has been greatly improved compared to the earlier generations of directional microphones marketed in the 1970s and 1980s (Killion, 1997b). Now, first-order directional microphones are implemented not only in behind-the-ear hearing aids but also in in-the-ear and in-the-canal hearing aids.

3.1.1.1. How They Work

First-order directional microphones are implemented with either a single-microphone design or a dual/twin-microphone design. In the single-microphone design, the directional microphone has an anterior and a posterior microphone port. The acoustic signal entering the posterior port is acoustically delayed and subtracted from the signal entering the anterior port at the diaphragm of the microphone.

The rationale is that if a sound comes from the front, it reaches the anterior port first and then reaches the posterior port a short time later. Since the sound in the posterior port is delayed by the traveling time between the two microphone ports (i.e., the external delay) and by the acoustic delay network (i.e., the internal delay), the sound from the front is minimally affected. Therefore, the directional microphones have high sensitivity to sounds from the front. However, if a sound comes from the back, it reaches the posterior port first and continues to travel to the anterior port. If the internal delay equals the external delay, sounds entering the posterior port and the anterior port reach the diaphragm at the same time but on opposite sides of the diaphragm, and thus they cancel. Therefore, the sensitivity of the directional microphone to sounds from the back is greatly reduced.

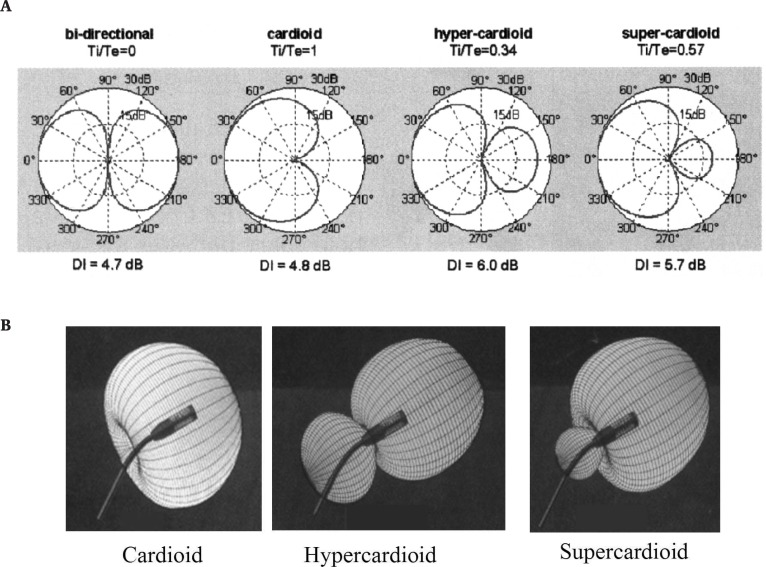

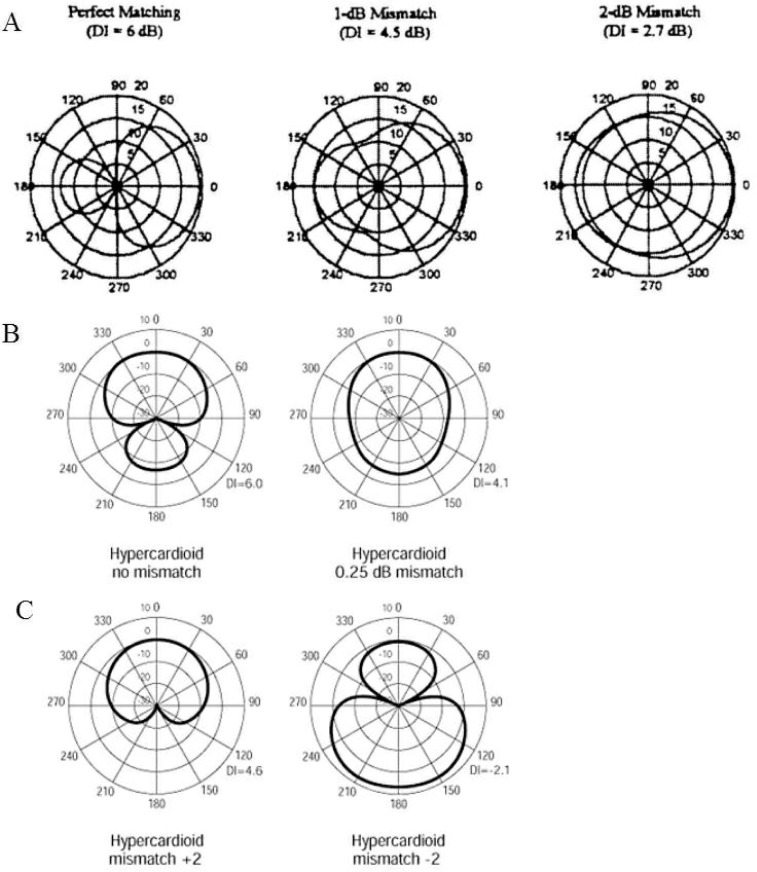

The sensitivity of the directional microphone to sounds coming from different azimuths is usually displayed in a polar pattern. Directional microphones exhibit four distinct types of polar patterns: bipolar (or bidirectional, dipole), hypercardioid, supercardioid, and cardioid (Figure 1A). The least sensitive microphone locations (i.e., nulls) of these polar patterns are at different azimuths relative to the most sensitive location (0° azimuth). Notice that these measurements are made when the directional microphones are free-hanging in a free field where the sound field is uniform, free from boundaries, free from the disturbance of other sound sources, and nonreflective. When the directional microphones are measured in three dimensions, their sensitivity patterns to sounds from different locations are called directional sensitivity patterns (Figure 1B).

Figure 1.

(A) The relationship between the ratio of the internal and external delays and the polar patterns. (Reprinted with permission from Powers and Hamacher, Hear J, 55[10], 2002). (B) The directional sensitivity patterns of directional microphones exhibiting cardioid, hypercardioid, and supercardioid patterns. A commercial stand directional microphone is placed at the center of the directional sensitivity pattern.

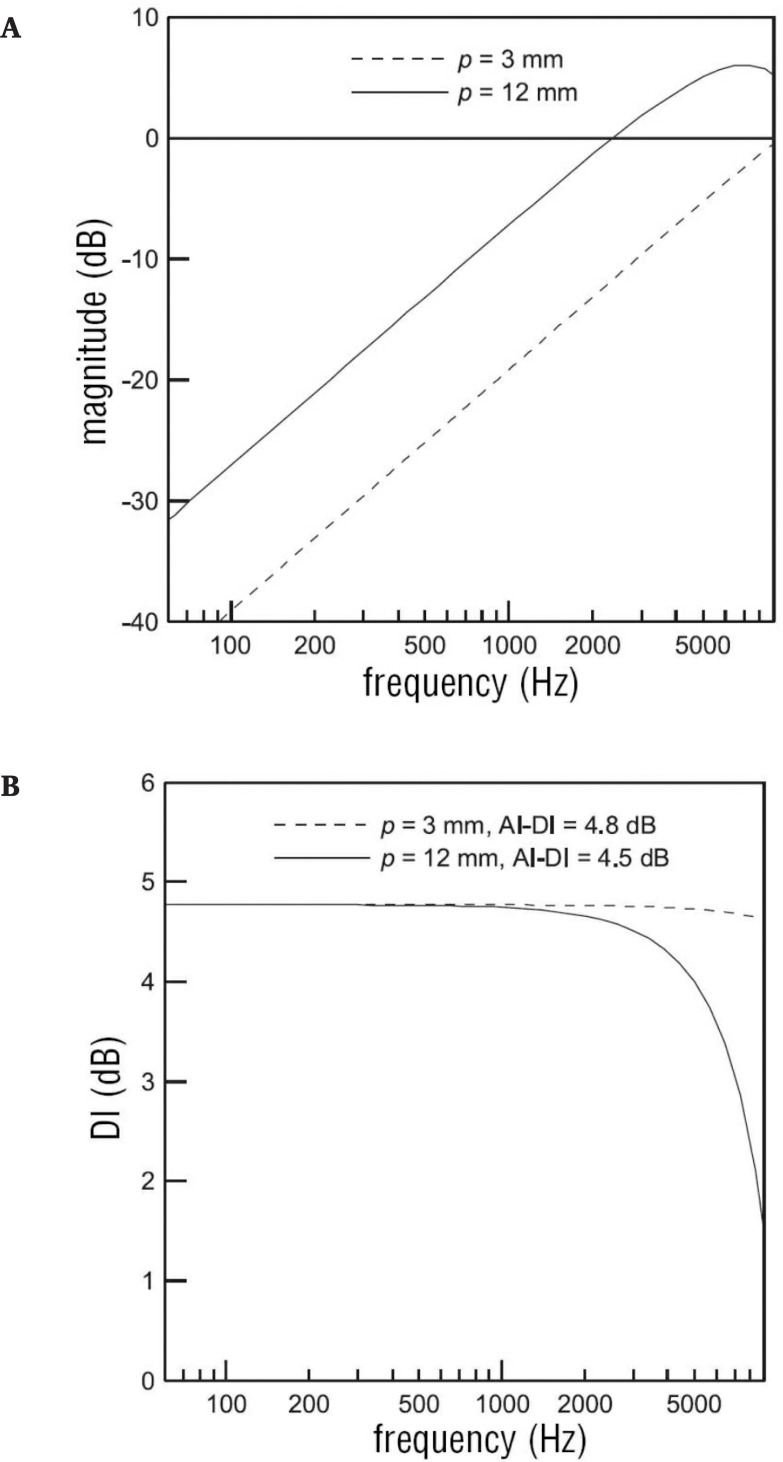

The directional sensitivity patterns of directional microphones are generated by altering the ratio between the internal and external delays. The internal delay is determined by the acoustic delay network placed close to the entrance of the back microphone port. The external delay is determined by the port spacing between the front and back ports, which in turn, is determined by the available space and considerations of the amount of low-frequency gain reduction and the amount of high-frequency directivity (Figure 2).

Figure 2.

(A) The relationship between the port spacing (p) and the amount of low-frequency cut-off for directional microphones that use the delay-and-subtract processing. As port spacing decreases, the cut-off frequency for low-frequency gain reduction increases. This is because sound pressures picked up by the two microphone ports/two omni-directional microphones are subtracted at two adjacent points. As frequency decreases, the wavelength increases, the pressure difference between the two points decreases, and the resultant microphone output becomes smaller after the subtraction. Thus, the cut-off frequency for low-frequency roll-off increases as the microphone port spacing decreases. (B) The relationship between the port spacing (p) and the amount of high-frequency directivity index (DI). As port spacing decreases, the high-frequency directivity index increases. This occurs because as the wavelength of the incoming signal approaches the port spacing, directionality breaks down. The smaller the port spacing, the higher the frequency at which the directionality breaks down (AI-DI = articulation index weighted directivity index). (Courtesy of Oticon, reprinted with permission).
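The low-frequency roll-off described in panel A can be approximated with an idealized plane-wave model of delay-and-subtract processing. The sketch below is not derived from the Oticon data in the figure: the speed of sound, the port spacings, and the function name are assumptions, and the model ignores the microphone's own frequency response.

```python
import math

SPEED_OF_SOUND_M_S = 343.0   # assumed value for air at room temperature

def on_axis_gain_db(freq_hz, port_spacing_m, delay_ratio=1.0):
    """On-axis output of an ideal delay-and-subtract microphone, in dB
    relative to the output of a single omni-directional microphone.

    delay_ratio is the internal delay divided by the external delay.
    """
    external_delay = port_spacing_m / SPEED_OF_SOUND_M_S
    internal_delay = delay_ratio * external_delay
    # |1 - exp(-j*2*pi*f*T)| = 2*|sin(pi*f*T)|, with T the total delay
    # between the two signals for a sound arriving from the front.
    magnitude = 2.0 * abs(math.sin(math.pi * freq_hz *
                                   (internal_delay + external_delay)))
    return 20.0 * math.log10(max(magnitude, 1e-12))

for spacing_m in (0.012, 0.006):
    gains = [round(on_axis_gain_db(f, spacing_m), 1) for f in (250, 500, 1000)]
    print(f"port spacing {spacing_m * 1000:.0f} mm:", gains)
```

In this model the output falls by about 6 dB for every halving of frequency, and halving the port spacing lowers the low-frequency output by roughly another 6 dB, which is consistent with the trend in panel A that smaller port spacings push the roll-off cut-off to higher frequencies.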

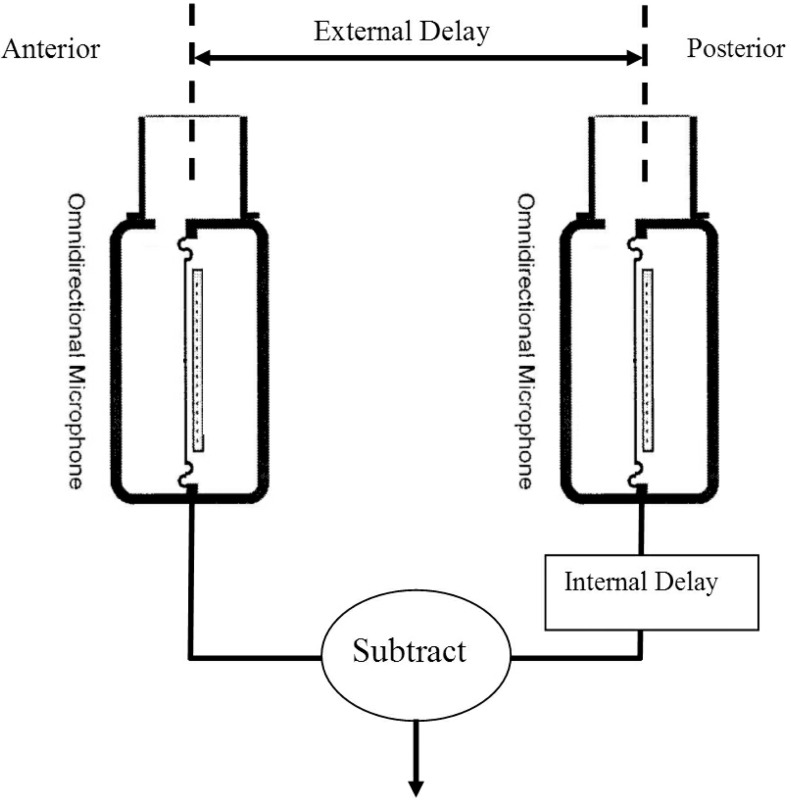

In the dual-microphone design, the directional microphones are composed of two omni-directional microphones that are matched in frequency response and phase (Figure 3). The two omnidirectional microphones are combined by using delay-and-subtract processing, similar to single-microphone directional microphones. The electrical signal generated from the posterior microphone is electrically delayed and subtracted from that of the anterior microphone in an integrated circuit (Buerkli-Halevy, 1987; Preves, 1999; Ricketts and Mueller, 1999). By varying the ratio between the internal and external delays, the polar patterns of the dual-microphone directional microphones can also generate bipolar, cardioid, hypercardioid, or supercardioid patterns.

Figure 3.

A figurative example of how the first-order dual-microphone directional microphone is implemented. The polar pattern of the directional microphone depends on the ratio of the internal and external delays. (Reprinted (modified) with permission from Thompson SC, Hear J 56[11], 2003).
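The dependence of the polar pattern on the ratio of internal to external delay can be sketched with the same idealized plane-wave model. The delay ratios listed for the hypercardioid and supercardioid patterns are the usual textbook values for first-order microphones and are not read from Figure 1A; the function names, port spacing, and speed of sound are likewise assumptions.

```python
import cmath
import math

def polar_response(theta_deg, freq_hz, port_spacing_m, delay_ratio, c=343.0):
    """Output magnitude of an ideal dual-microphone delay-and-subtract
    system for a plane wave arriving from theta_deg (0 = front)."""
    external_delay = port_spacing_m / c
    internal_delay = delay_ratio * external_delay
    omega = 2.0 * math.pi * freq_hz
    # The posterior signal lags the anterior signal by the acoustic travel
    # time along the arrival direction plus the electrical internal delay.
    total_delay = internal_delay + external_delay * math.cos(math.radians(theta_deg))
    return abs(1.0 - cmath.exp(-1j * omega * total_delay))

def null_angle_deg(delay_ratio):
    """Azimuth of the null: the total delay is zero when cos(theta) = -ratio."""
    return math.degrees(math.acos(-delay_ratio))

# Internal/external delay ratios (textbook values, stated as assumptions).
PATTERNS = {
    "bipolar": 0.0,
    "hypercardioid": 1.0 / 3.0,
    "supercardioid": 1.0 / math.sqrt(3.0),
    "cardioid": 1.0,
}

for name, ratio in PATTERNS.items():
    print(f"{name}: null near {null_angle_deg(ratio):.1f} degrees")
```

Because only `delay_ratio` changes between patterns, a dual-microphone system can move its null simply by changing the electrical delay, which is the flexibility that adaptive directional microphone algorithms rely on.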

Although the performances of single-microphone and dual-microphone directional microphones are comparable, most of the high-performance digital hearing aids use dual-microphone directional microphones because of their flexibility. Single-microphone directional microphones have fixed polar patterns after being manufactured because neither the external delay nor the internal delay can be altered. However, dual-microphone directional microphones can have variable polar patterns because their internal delays can be varied by signal processing algorithms. The ability to vary the polar pattern after the hearing aid is made opens doors to the implementation of advanced signal processing algorithms (e.g., adaptive directional microphone algorithms).

The directional effect of the directional microphones can be quantified in several ways:

The front-back ratio is the difference in microphone sensitivity, in dB, between sounds coming from 0° azimuth and sounds coming from 180° azimuth.

The directivity index is the ratio of the microphone output for sounds coming from 0° azimuth to the average of microphone output for sounds from all other directions in a diffuse/reverberant field (Beranek, 1954).

The articulation index-weighted directivity index (AI-DI) is the average of the directivity indexes across frequency bands, with the directivity index in each band weighted by the articulation index weighting of that band for speech intelligibility (Killion et al., 1998).
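The three measures can be illustrated numerically for ideal first-order patterns. The sketch below uses the low-frequency approximation of the delay-and-subtract pattern and the standard textbook form of the directivity index (on-axis power relative to the power averaged over all directions); the function names are hypothetical, and the band weights passed to `ai_di_db` are placeholders rather than published articulation index weights.

```python
import math

def first_order_pattern(theta_rad, delay_ratio):
    """Low-frequency first-order pattern, normalized to 1 at 0 degrees.
    delay_ratio is the internal delay divided by the external delay."""
    return (delay_ratio + math.cos(theta_rad)) / (delay_ratio + 1.0)

def front_back_ratio_db(delay_ratio):
    """Sensitivity at 0 degrees relative to 180 degrees, in dB."""
    front = abs(first_order_pattern(0.0, delay_ratio))
    back = abs(first_order_pattern(math.pi, delay_ratio))
    return float("inf") if back == 0 else 20.0 * math.log10(front / back)

def directivity_index_db(delay_ratio, n_steps=20000):
    """On-axis power over the power averaged across the whole sphere."""
    d_theta = math.pi / n_steps
    average_power = 0.0
    for k in range(n_steps):
        theta = (k + 0.5) * d_theta               # midpoint rule
        # For a pattern symmetric about the front-back axis, the spherical
        # average reduces to 0.5 * integral of E(theta)^2 * sin(theta).
        average_power += (first_order_pattern(theta, delay_ratio) ** 2
                          * math.sin(theta) * d_theta)
    average_power *= 0.5
    return 10.0 * math.log10(1.0 / average_power)

def ai_di_db(di_by_band_db, band_weights):
    """Weighted average of per-band directivity indexes."""
    weighted_sum = sum(d * w for d, w in zip(di_by_band_db, band_weights))
    return weighted_sum / sum(band_weights)
```

Under these assumptions the cardioid pattern (delay ratio 1) has an infinite front-back ratio but a directivity index of only about 4.8 dB, whereas the hypercardioid pattern (delay ratio 1/3) has a 6 dB front-back ratio and a directivity index of about 6.0 dB. This illustrates why the front-back ratio alone is a poor predictor of performance in diffuse noise.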

For a review on the design and evaluation of first-order directional microphones, please refer to the reviews by Ricketts (2001) and Valente (1999).

3.1.1.2. Updates on the Clinical Verification of First Order Directional Microphones

Many factors affect the benefits of directional microphones. Research studies on the effect of directional microphones on speech recognition conducted in laboratory settings showed a large range of SNR-50 improvement, from 1 to 16 dB. The amount of benefit experienced by the hearing aid users depends on the directivity index of the directional microphone; the number, placement, and type of noise sources; the room and environmental acoustics; the relative distance between the talker and listener; the location of the noise relative to the listener; and vent size, among others (Beck, 1983; Hawkins and Yacullo, 1984; Gravel et al., 1999; Kuk et al., 1999; Nielsen and Ludvigsen, 1978; Preves et al., 1999; Ricketts, 2000a; Ricketts and Dhar, 1999; Studebaker et al., 1980; Valente et al., 1995; Wouters et al., 1999).

Normally, the higher the directivity index and AI-DI, the higher the directional benefit provided by the directional microphones (Ricketts, 2000b; Ricketts et al., 2001). Studies by various researchers have also shown that the directivity index or AI-DI can be used to predict the improvements in SNRs-50 provided by the directional microphones with multiple noise sources or relatively diffuse environments (Laugesen and Schmidtke, 2004; Mueller and Ricketts, 2000; Ricketts, 2000a).

The amount of directional benefit of the hearing aids is affected by the number of noise sources and the testing environment. Studies conducted with one noise source placed at the null of the directional microphones (Agnew and Block, 1997; Gravel et al., 1999; Lurquin and Rafhay, 1996; Mueller and John, 1979; Valente et al., 1995; Wouters et al., 2002) showed greater SNR-50 improvements than studies conducted with multiple noise sources or with testing materials recorded in real-world environments (Killion et al., 1998; Preves et al., 1999; Pumfort et al., 2000; Ricketts, 2000a; Ricketts and Dhar, 1999; Valente et al., 2000). In general, 3 to 5 dB of improvement in the SNR-50 is reported in real-world environments with multiple noise sources (Amlani, 2001; Ricketts et al., 2001; Valente et al., 2000; Wouters et al., 1999).

In addition, greater improvements are generally observed in less reverberant environments than in more reverberant environments (Hawkins and Yacullo, 1984; Killion et al., 1998; Ricketts, 2000b; Ricketts and Dhar, 1999; Studebaker et al., 1980; Ricketts and Henry, 2002). Reverberation reduces directional effects because sounds are reflected from surfaces in all directions. The reflected sounds make it impossible for directional microphones to take advantage of the spatial separation between speech and noise. Research studies have also shown that directional microphones are more effective if speech, noise, or both speech and noise are within the critical distance (Ricketts, 2000a; Leeuw and Dreschler, 1991; Ricketts and Hornsby, 2003). Critical distance is the distance at which the level of the direct sound is equal to the level of the reverberant sound. Within the critical distance, the level of the direct sound is higher than the level of the reverberant sound.

Further, the directional effect at the low-frequency region reduces as the vent size increases because vents tend to reduce the gain of the hearing aid below 1000 Hz and allow unprocessed signals from all directions to reach the ear canal. However, when the weightings of articulation index are considered, the decrease in AI-DI was only about 0.4 dB for each 1-mm increase in vent size up to a diameter of 2 mm (Ricketts, 2001; Ricketts and Dittberner, 2002). Although a larger decrease of AI-DI (i.e., 0.8 dB) was observed when the vent size increased from 2 mm to open fitting, the open earmold fitting would still have about a 4 dB higher AI-DI than the omni-directional mode. In general, vents have the greatest effect on hearing aids with high directivity indexes at low frequencies (Ricketts, 2001).

Factors that do not affect the benefit of directional microphones are compression and hearing aid style (Pumfort et al., 2000; Ricketts, 2000b; Ricketts et al., 2001). At first glance, compression and directional microphones seem to act in opposite directions, i.e., directional microphones reduce background noise, which is usually softer than speech, while compression amplifies softer sounds more than louder sounds. In practice, however, sounds from multiple sources occur at the same time and the gain of the compression circuit is determined by the most dominant source or the overall level. If both speech and noise occur at the same instant with a positive SNR, the gain of the hearing aid is determined by the level of the speech, not the noise. Research studies comparing the directional benefits of linear and compression hearing aids did not show any difference in speech understanding ability of hearing aid users if speech and noise coexist at the same instant (Ricketts et al., 2001).

Another factor that does not affect the performance of directional microphones is the hearing aid style (Pumfort et al., 2000; Ricketts et al., 2001). Previous research studies have shown that the omni-directional microphones of the in-the-ear hearing aids have higher directivity indexes than do those of behind-the-ear hearing aids because of the pinna effect (Fortune, 1997; Olsen and Hagerman, 2002), and the SNRs-50 of subjects concur with this finding (Pumfort et al., 2000). However, SNRs-50 of subjects were not significantly different for the directional microphones of the two hearing aid styles (Pumfort et al., 2000; Ricketts et al., 2001). This indicated that directional microphones provide less improvement in speech understanding in an in-the-ear hearing aid than in a behind-the-ear hearing aid. In other words, although the omni-directional microphones of in-the-ear hearing aids are more directional than the omni-directional microphones of the behind-the-ear hearing aids, the performance of the directional microphones implemented in both hearing aid styles was not significantly different (Ricketts, 2001).

Most laboratory tests have shown measurable directional benefits, and many hearing aid users in field evaluation studies also report perceived directional benefit. However, a number of recent field studies reported that a significant percentage of hearing aid users might not perceive the benefits of directional amplification in their daily lives even if the signal processing, venting, and hearing aid style are kept the same in the field trials and laboratory tests (Cord et al., 2002; Mueller et al., 1983; Ricketts et al., 2003; Surr et al., 2002; Walden et al., 2000).

According to the researchers, the discrepancies can be attributed to the relative locations of the signal and noise, acoustic environments, the type and location of noise encountered, subjects’ willingness to switch between directional and omni-directional microphones, and the percentage of time the use of directional microphones is indicated, among others (Cord et al., 2002; Surr et al., 2002; Walden et al., 2000; Walden et al., 2004).

Specifically, directional microphones are designed to be more sensitive to sounds coming from the front than sounds coming from other directions. Many laboratory tests showing the benefit of directional microphones were conducted with speech presented at 0° azimuth and noise from the sides or the back with both speech and noise in close proximity to the hearing aid user. However, hearing aid users reported that the desired signal did not come from the front as much as 20% of the time in daily life (Walden et al., 2003). Studies have indicated that when speech comes from directions other than the front, the use of directional microphones may have a positive, neutral, or negative effect on speech intelligibility, especially for low-level speech from the back (Kuk, 1996; Kuk et al., 2005; Lee et al., 1998; Ricketts et al., 2003).

Two other possible reasons for the discrepancies between laboratory tests and field trials are the acoustic environments, and the type(s) and location(s) of noise encountered. Most laboratory tests are conducted in environments with a reverberation time of less than 600 milliseconds (Amlani, 2001). A wide range of reverberation environments that often have higher reverberation times may be encountered in daily life, however. As directional benefits diminish with increase in reverberation, hearing aid users may not be able to detect the benefits of directional microphones in their everyday life. In addition, the use of non-real-world noise in the laboratory (e.g., speech spectrum noise) at fixed, examiner-determined locations may have exaggerated the benefits of directional microphones.

The need for the user to switch between omni-directional microphones and directional microphones and the percentage of time or situations in which the use of directional microphones is indicated in daily life can also partly account for the laboratory and field evaluation differences. Cord and colleagues (2002) reported that about 23% of the subjects stated that they left their hearing aids in the default omni-directional mode during the field trials because they did not notice much difference in the first few trials of directional microphones. Further, Cord and colleagues reported that subjects who actively engaged in switching between the omni-directional and directional microphones reported that they only used directional microphones about 22% of the time. This indicated that these subjects found omni-directional microphones sufficient about 78% of the time, and subjects may not have had adequate directional microphone usage time to realize the benefit of directional microphones.

In a subsequent study, Cord and colleagues (2004) investigated the predictive factors differentiating the subjects who regularly switched between the omni-directional and directional modes from those who left the hearing aids in the default position. They reported that the two groups did not significantly differ in their degree or configuration of hearing loss, hearing aid settings, directional benefits that they received when tested in the test booth, or the likelihood of encountering situations where bothersome background noise occurs. In other words, there is no reliable evidence that can be used to predict which hearing aid users will switch between the omni-directional and directional microphones and which will leave the hearing aids in the default omni-directional mode. In addition, previous studies also failed to predict directional benefits based on hearing aid users’ audiometric testing results (Jespersen and Olsen, 2003; Ricketts and Mueller, 2000).

3.1.1.3. Updates on the Limitations of First-Order Directional Microphones

With the increase in directional microphone usage in recent years, the limitations of directional microphones have become more apparent to hearing aid engineers and the audiology community. These limitations include relatively higher internal noise, low-frequency gain reduction (roll-off), higher sensitivity to wind noise, and a reduced probability of hearing soft sounds from the back (Kuk et al., 2005; Lee et al., 1998; Ricketts and Henry, 2002; Thompson, 1999).

Two factors contribute to the problem of higher internal noise for the dual-microphone directional microphones. First, the internal noise of the modern omni-directional microphones is about 28 dB SPL. When two omni-directional microphones are combined to make a dual-microphone directional microphone in the delay-and-subtract process, the internal noise of dual microphones is about 3 dB higher than the internal noise of omni-directional microphones (Thompson, 1999). This internal noise is normally masked by environmental sounds and is inaudible to hearing aid users, even in quiet environments. However, the problem arises when a hearing aid manufacturer tries to accommodate the second factor, low-frequency roll-off.

The low-frequency roll-off occurs when low-frequency sounds reaching the two omni-directional microphones are subtracted at similar phase. The amount of low-frequency roll-off is about 6 dB/octave for first-order directional microphones (Thompson, 1999; Ricketts, 2001). The perceptual consequence of the low-frequency roll-off is “tinny” sound quality and under-amplification of low-frequency sounds for hearing aid users with low-frequency hearing loss (Ricketts and Henry, 2002; Walden et al., 2004).
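The 6-dB/octave figure can be checked numerically with an idealized delay-and-subtract model. The sketch below is illustrative only: the 12-mm microphone spacing, the speed of sound, the far-field plane-wave assumption, and the function name `directional_gain_db` are assumptions made here, not values or names taken from the cited studies.

```python
import cmath
import math

SPEED_OF_SOUND_M_PER_S = 343.0  # assumed value for air at room temperature


def directional_gain_db(freq_hz, spacing_m=0.012, delay_ratio=1.0, azimuth_deg=0.0):
    """Gain in dB (re: a single omni microphone) of an idealized
    delay-and-subtract microphone pair for a far-field plane wave."""
    external_delay_s = spacing_m / SPEED_OF_SOUND_M_PER_S
    internal_delay_s = delay_ratio * external_delay_s
    # Total delay between the two signals at the point of subtraction.
    total_delay_s = internal_delay_s + external_delay_s * math.cos(math.radians(azimuth_deg))
    magnitude = abs(1 - cmath.exp(-2j * math.pi * freq_hz * total_delay_s))
    if magnitude == 0:
        return float("-inf")  # perfect null: the two signals cancel completely
    return 20 * math.log10(magnitude)


# Gain for a frontal sound at octave-spaced low frequencies.
for freq_hz in (250, 500, 1000):
    print(freq_hz, "Hz:", round(directional_gain_db(freq_hz), 1), "dB")
```

In this model, each halving of frequency lowers the gain for frontal sounds by roughly 6 dB, because the phase difference between the two microphone signals shrinks in proportion to frequency.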

The common practice to solve this problem is to provide low-frequency equalization so that the low-frequency responses of the directional microphones are similar to that of the omni-directional microphones. Unfortunately, by matching the gain between omni-directional and directional modes, the internal microphone noise is also amplified. Some hearing aid users may find this increase in microphone noise objectionable, especially in quiet environments (Lee and Geddes, 1998; Macrae and Dillon, 1996).

Two practices are adopted to circumvent this dilemma. First, instead of fully compensating for the 6-dB/octave low-frequency roll-off, hearing aid manufacturers may decide to provide partial low-frequency compensation (i.e., 3 dB/octave). Second, the consensus in the audiology community is to stay in the omni-directional mode in quiet environments. Field studies have also shown that subjects either preferred the omni-directional mode or showed no preference between the two modes in quiet environments (Mueller et al., 1983; Preves et al., 1999; Walden et al., 2004).

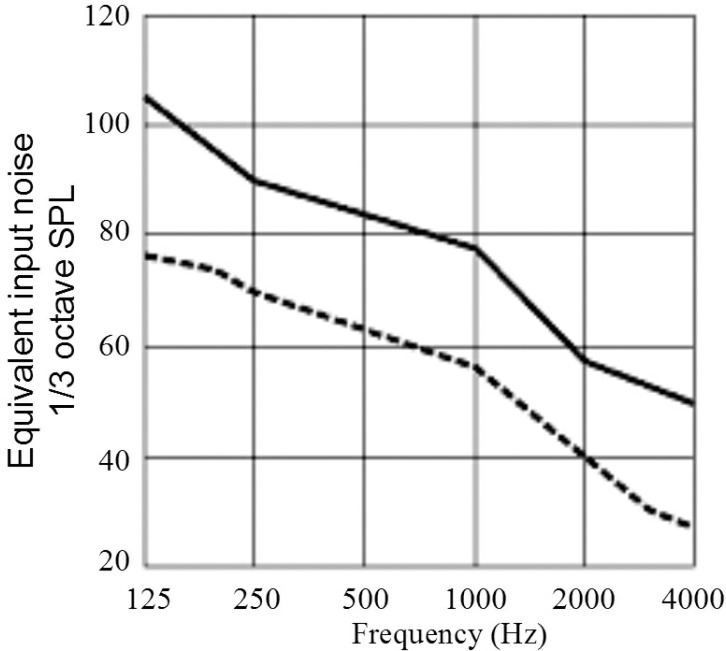

Directional microphones are also more susceptible to wind noise because they have a higher sensitivity to near field signals. When the wind curves around the head, turbulence is created very close to the head. As directional microphones have higher sensitivity to sounds in near field (i.e., sounds within 30 cm), the wind noise level picked up by the directional microphones can be as much as 20 to 30 dB higher than that picked up by an omni-directional microphone (Figure 4) (Kuk et al., 2000; Thompson, 1999). Because wind noise has dominant energy at low frequencies, the negative effect of wind noise is further exacerbated if the directional microphone has low-frequency gain compensation. Again, the strategy is to use omni-directional microphone mode should wind noise be the dominant signal at the microphone input. In addition, some algorithms automatically reduce low frequency amplification when wind noise is detected (Siemens Audiology Group, 2004).

Figure 4.

Directional microphones (solid line) have higher outputs for wind noise than omni-directional microphones (dotted line). (Original data from Dillon et al., 1999. Reprinted with permission from Kuk et al., Hear Rev 7[9], 2000).

Although it is a design objective, the reduced sensitivity of directional microphones to speech or environmental sounds coming from the back hemisphere, especially at low levels, can also be regarded as a limitation (Kuk et al., 2005; Lee et al., 1998). Directional microphones should be used with caution in environments in which audibility of sounds or warning signals from the back hemisphere is desirable.

3.1.1.4. Working with Directional Microphones

Despite these limitations, directional microphones are currently the most effective noise reduction strategy available in hearing aids, second only to personal FM or infrared systems. Several cautions should be exercised when clinicians fit directional microphones:

First, the performance of directional microphones decreases near reflective surfaces, such as a wall or a hand, or in reverberant environments. Hearing aid users therefore need to be counseled to move away from reflective surfaces or to converse at a place with less reverberation, if possible.

Second, the polar patterns of a directional hearing aid and the locations of the nulls when the hearing aid is worn on the user's head can be very different from an anechoic chamber measurement in which the directional microphone is free-hanging in space (Chung and Neuman, 2003; Neuman et al., 2002). Depending on the hearing aid style, the most sensitive angle of the first-order directional microphones may vary from 30° to 45° for in-the-ear hearing aids to 90° for behind-the-ear hearing aids (Fortune, 1997; Neuman et al., 2002; Ricketts, 2000b). If possible, clinicians need to use the polar patterns measured when the hearing aids are worn in the ear so they can counsel the hearing aid users to position themselves so that the most sensitive direction of their directional microphone points to the direction of the desired signal and the most intense noise is located at the direction with the least sensitivity.

Third, clinicians need to be aware that some hearing aids automatically provide low-frequency compensation for the directional microphone mode. Others require the clinician to select the low-frequency compensation in the fitting software. Clinicians also need to determine if low-frequency compensation for the directional microphone mode is appropriate given the hearing aid user's listening needs.

Fourth, Walden and colleagues (2004) recently reported that hearing aid users who actively switch between the omni-directional and directional microphones preferred the omni-directional mode more in relatively quiet listening situations. When noise existed, they preferred the omni-directional mode when the signal source was relatively far away. On the other hand, hearing aid users tended to prefer the directional mode in noisy environments, when speech comes from the front, or when the signal is relatively close to them. Walden and colleagues also noted that counseling hearing aid users to switch to the appropriate microphone mode might increase the success rate of directional hearing aid fitting.

Fifth, although a number of studies have shown that children can also benefit from directional microphones to understand speech in noise (Condie et al., 2002; Gravel et al., 1999; Bohnert and Brantzen, 2004), directional microphones that require manual switching should be used with caution in very young children. Very young children who are starting to learn auditory, speech, and language skills need every opportunity to access auditory stimuli. As directional microphones attenuate sounds from the sides and back, they may reduce the incidental learning opportunities that may help children acquire or develop speech and language skills. In addition, young children probably will not be able to switch between microphone modes, requiring parents or caregivers to assume this responsibility among their other care-giving duties.

As mentioned before, always listening in the directional mode may reduce the chance of detecting warning signals or soft speech from behind, which is crucial to a child's safety. The American Academy of Audiology recommended the use of directional microphones on children with caution, especially on young children who cannot switch between the directional and omni-directional modes (American Academy of Audiology, 2003).

3.1.2. Adaptive Directional Microphones

In the past, all directional microphones had fixed polar patterns. The azimuths of the nulls were kept constant. Noise in the real world, however, may come from different locations and the relative locations of speech and noise may change over time. A directional microphone with a fixed polar pattern may not provide the optimal directional effect in all situations. With the advances in digital technology, directional microphones with variable polar patterns (i.e., adaptive directional microphones) are available in many digital hearing aids. These adaptive directional microphones can vary their polar patterns depending on the location of the noise. The goal is to always have maximum sensitivity to sounds from the frontal hemisphere and minimum sensitivity to sounds from the back hemisphere in noisy environments (Kuk et al., 2002a; Powers and Hamacher, 2004; Ricketts and Henry, 2002). It should be noted that adaptive directional microphones are not the same as the switchless directional microphones implemented in some hearing aids. Adaptive directional microphones automatically vary their polar pattern, whereas switchless directional microphones automatically switch between the omni-directional and directional modes. Most adaptive directional microphones on the market automatically switch between polar patterns and microphone modes, however.

3.1.2.1. How They Work

Most of the adaptive directional microphones implemented in commercially available hearing aids are first-order directional microphones. The physical construction of adaptive directional microphones is identical to that of the dual-microphone directional microphones. The difference is that the signal processing algorithm of the adaptive directional microphones can take advantage of the independent microphone outputs of the omni-directional microphones and vary the internal delay of the posterior microphone. As mentioned before, the polar pattern of a directional microphone can be changed by varying the ratio of the internal and external delays. Because the external delay (determined by the microphone spacing) is fixed after the hearing aid is manufactured, the ratio of the internal and external delays can be changed by varying the internal delay of the posterior microphone. When the ratio is changed from 0 to 1, the polar pattern is varied from bidirectional to cardioid (Powers and Hamacher, 2004).
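The relationship between the delay ratio and the polar pattern can be illustrated with the textbook low-frequency approximation for a first-order delay-and-subtract pair, in which sensitivity is proportional to |r + cos θ|, where r is the ratio of internal to external delay and θ is the azimuth. The function names below are hypothetical, and the approximation ignores head and torso effects; it is a minimal sketch, not a manufacturer's method.

```python
import math


def relative_sensitivity(azimuth_deg, delay_ratio):
    """Idealized first-order sensitivity, normalized to 1 at 0 degrees azimuth."""
    return abs(delay_ratio + math.cos(math.radians(azimuth_deg))) / (1 + delay_ratio)


def null_azimuth_deg(delay_ratio):
    """Azimuth of the null in the 0-180 degree half-plane (mirrored on the other side)."""
    return math.degrees(math.acos(-delay_ratio))


# Delay ratio 0 gives a bidirectional pattern; delay ratio 1 gives a cardioid.
for ratio in (0.0, 0.5, 1.0):
    pattern = [round(relative_sensitivity(az, ratio), 2) for az in (0, 90, 180)]
    print("ratio", ratio, "null at", round(null_azimuth_deg(ratio)), "deg,",
          "sensitivity at 0/90/180 deg:", pattern)
```

Under this approximation the null moves smoothly from 90° (and its mirror image at 270°) toward 180° as the delay ratio increases from 0 to 1.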

Ideally, adaptive directional microphones should always adopt the polar pattern that places the nulls at the azimuths of the dominant noise sources. For example, the adaptive directional microphone should adopt the bidirectional pattern if the dominant noise source is located at the 90° or 270° azimuths and adopt the cardioid pattern if the dominant noise source is located at 180° azimuth. In practice, different hearing aid manufacturers use different calculation methods to estimate the location of the dominant noise source and to vary the internal delay of the directional microphones accordingly. The actual location of the null may vary, depending on the calculation method and the existence of other noise and sounds in the environment.
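One decision rule listed in Table 1 is to adopt the internal delay that yields the minimum output from the directional microphone. A minimal sketch of that idea follows. It assumes the idealized first-order pattern |r + cos θ| and, for illustration only, takes the noise azimuths and powers as known inputs; a real hearing aid would minimize measured output power instead. The function names and the grid search are assumptions, not a description of any commercial algorithm.

```python
import math


def relative_sensitivity(azimuth_deg, delay_ratio):
    """Idealized first-order sensitivity, normalized to 1 at 0 degrees azimuth."""
    return abs(delay_ratio + math.cos(math.radians(azimuth_deg))) / (1 + delay_ratio)


def best_delay_ratio(noise_sources, steps=100):
    """Grid-search the delay ratio in [0, 1] that minimizes modeled noise output power.

    noise_sources: list of (azimuth_deg, relative_power) pairs (hypothetical input).
    """
    def output_power(ratio):
        return sum(power * relative_sensitivity(azimuth, ratio) ** 2
                   for azimuth, power in noise_sources)

    candidates = [i / steps for i in range(steps + 1)]
    return min(candidates, key=output_power)


print(best_delay_ratio([(180, 1.0)]))             # single noise source directly behind
print(best_delay_ratio([(90, 1.0)]))              # single noise source at the side
print(best_delay_ratio([(90, 1.0), (180, 1.0)]))  # two noise sources: a compromise
```

With several noise sources no single null can cancel them all, so the selected ratio is a compromise, which is consistent with the observation that the actual null location depends on the other sounds in the environment.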

The adaptive ability of the adaptive directional microphones is achieved in three to four steps:

signal detection and analysis;

determination of the appropriate operational mode (i.e., omni-directional mode or directional mode);

determination of the appropriate polar pattern; and

execution of the decision.

Table 1 summarizes the characteristics and strategies implemented in adaptive directional microphones of some hearing aids. Notice that the determination of the operational mode is user-determined for GNReSound Canta but automatic for other hearing aids. Another point worth noting is that most adaptive directional microphone algorithms process the signal in a single band. More recently, multichannel adaptive directional hearing aids have been introduced. First introduced in the Oticon Syncro hearing aids, this technology allows different directional sensitivity patterns to occur within multiple channels at the same time.

Table 1.

The Characteristics of Adaptive Directional Microphones Implemented in Selected Commercially Available Hearing Aids*

| | Oticon-Syncro | Phonak-Perseo | ReSound-Canta | Siemens-Triano | Widex-Diva |
|---|---|---|---|---|---|
| Signal Detection and Analysis | | | Front-back ratio detector to estimate the location of the dominant sound source | | |
| Decision Rules for Determining the Microphone Mode | Surround Mode: Split-Directionality Mode: Full-Directionality Mode: | Omni Mode: speech only. Directional Mode: The decision rules for switching to directional microphones can be adjusted by the clinician on the basis of user priority for speech audibility or comfort: | User determined | Omni Mode: Directional Mode: | Omni Mode: Directional Mode: |
| Adaptation Speed for Omni-Directional and Directional Switch | 2–4 sec, depending on the hearing aid's Identity setting, i.e., the life style of the hearing aid user in the fitting software | Variable/programmable by clinician, from 4–10 sec, based on “Audibility” or “Comfort” selections in the hearing aid fitting software | Not applicable because switch is user determined | 6–12 sec, depending on the settings of the listening program | 5–10 sec, depending on the settings of the listening program |
| Decision Rules for Determining the Polar Patterns | | The internal delay that yields the minimum power output from the directional microphone is adopted | The internal delay that yields minimum output from the directional microphone is adopted | The weighted sum of a bidirectional and cardioid pattern is calculated and the internal delay that yields the minimum output (weighted sum) from the directional microphone is adopted | |
| All hearing aids: Any polar pattern with nulls between 90° and 270° is possible | | | | | |
| Adaptation Speed Between Different Polar Patterns | 2 sec/90°, speed may vary depending on the hearing aid's Identity setting | 100 ms between polar patterns | Analysis of environment every 4 ms, changing of polar pattern every 10 ms | 50 ms/90° | Typically less than 5 sec |
| Polar Pattern when Multiple Noise Sources Exist | | Cardioid | Hypercardioid | Hypercardioid | Hypercardioid |
| Low Frequency Equalization | Automatic | Programmable in fitting software via “Contrast” feature | Programmable in fitting software | Automatic | Automatic for each polar pattern |
| Information Source(s) | Oticon, 2004; Flynn, 2004, personal communication | www.Phonak.com (a); Ricketts and Henry (2002); Fabry (2004), personal communication | Groth (2004), personal communication | Powers (2004), personal communication; Powers and Hamacher (2004) | Kuk et al., 2002a; Kuk, 2004, personal communication |
| Clinical Verification | Flynn (2004): compared to the first-order fixed directional microphone implemented in Adapto, Syncro's Full-Directionality mode combined with its noise reduction algorithm yielded about 1–2 dB better SNR-50s for hearing aid users with multiple broadband noise sources in the back hemisphere. It is unclear how much of the improvement is solely generated by the adaptive directional microphone | Unavailable. See text for the evaluation of the first-order adaptive directional microphone implemented in Phonak Claro | Unavailable | Bentler et al. (2004a): the hybrid second-order adaptive directional microphone improved the SNR-50s of hearing aid users by 4 dB. No significant difference in SNR-50s between the first-order and the hybrid second-order adaptive directional microphones. Ricketts et al. (2003): Significant benefit was observed using the second-order directional microphone compared to its fixed directionality mode in moving noise | Valente and Mispagel (2004): Compared to the omni-directional mode, the adaptive directional microphone improved SNR-50s by 7.2 dB if a single noise source was located at 180°. The improvement in SNR-50s decreased to 5.1 dB and 4.5 dB when noise was presented at 90° + 270°, and 90°+180°+270°, respectively |

These hearing aids are selected to demonstrate the range and the differences in implementation methods of adaptive directional microphone algorithms in commercially available hearing aids. SNR = signal-to-noise ratio.

It is apparent in Table 1 that hearing aid manufacturers use different strategies to implement their adaptive directional microphone algorithms. The following discussion explains the similarities and differences among the adaptive directional microphone algorithms from different hearing aid manufacturers or models.

a. Signal Detection and Analysis

In the signal detection and analysis unit, algorithms implemented in different hearing aids may have a different number of signal detectors to analyze different aspects of the incoming signal. Some of the most common detectors are the level detector, modulation detector, spectral content analyzer, wind noise detector, and front-back ratio detector, among others.

i. Level Detector

The level detector in adaptive directional microphone algorithms estimates the level of the incoming signal. Many adaptive directional microphones only switch to directional mode when the level of the signal exceeds a certain predetermined level. At levels lower than the predetermined level, the algorithm assumes that the hearing aid user is in a quiet environment and the directional microphone is not needed. Thus, the hearing aid stays at the omni-directional mode.

ii. Modulation Detector

The modulation detector is commonly used in hearing aids to infer the presence or absence of speech sounds in the incoming signal and to estimate the SNR of the incoming signal. The rationale is that the amplitude of speech has a constantly varying envelope with a modulation rate between 2 and 50 Hz (Rosen, 1992), with a center modulation rate of 4 to 6 Hz (Houtgast and Steeneken, 1985). Noise, on the other hand, usually has a modulation rate outside of this range (e.g., ocean waves at the beach may have a modulation rate of around 0.5 Hz) or it occurs at a relatively steady or unmodulated level (e.g., fan noise).

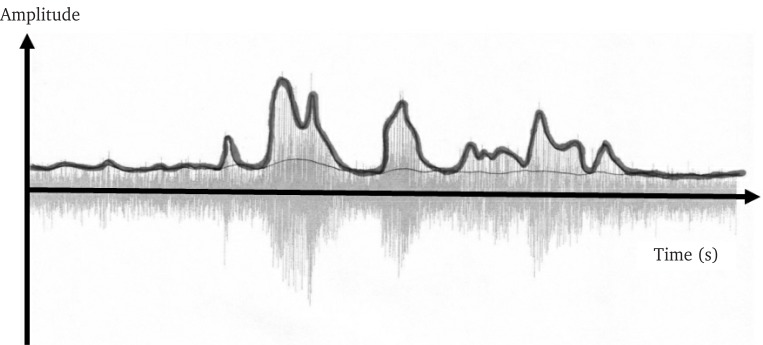

The speech modulation rate of 4 to 6 Hz is associated with the closing and opening of the vocal tract and the mouth. Speech in quiet may have a modulation depth of more than 30 dB, which reflects the difference between the softest consonant (i.e., voiceless /θ/) and the loudest vowel (i.e., /u/). Modulation depth is the level difference between the peaks and troughs of a waveform plotted in the amplitude-time domain (Figure 5). If a competing signal (noise or speech babble) is present in the incoming signal, the modulation depth decreases. Because the amount of amplitude modulation normally decreases with an increase in noise level, the signal detection and analysis unit uses the modulation depth of signals with modulation rates centered at 4 to 6 Hz to estimate the SNR in the incoming signal: the greater the modulation depth, the higher the SNR. Notice that if the competing signal (noise) is a single talker or has a modulation rate close to that of speech, the signal detection and analysis unit cannot differentiate between the desired speech and the noise.

Figure 5.

The modulation detector is composed of a maxima (thick line) and a minima follower (thin line). The maxima follower estimates the level of speech and the minima follower estimates the level of noise. The difference between the two allows the estimation of signal-to-noise ratio in the frequency channel.
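The maxima and minima followers described in the caption can be sketched as two simple envelope trackers operating on a frame-by-frame level contour. Everything specific in the sketch is an assumption made for illustration: the release rate, the 100-Hz frame rate, the synthetic 4-Hz contour swinging between 35 and 65 dB, and the function names. Commercial detectors are proprietary and likely differ.

```python
import math


def modulation_depth_db(levels_db, release_db_per_frame=0.05):
    """Track the peaks and troughs of a level contour; return their final difference in dB."""
    maxima = minima = levels_db[0]
    for level in levels_db:
        # Maxima follower: jumps up to new peaks, decays slowly otherwise.
        maxima = max(level, maxima - release_db_per_frame)
        # Minima follower: drops to new troughs, rises slowly otherwise.
        minima = min(level, minima + release_db_per_frame)
    return maxima - minima


def speech_like_levels(noise_db=None, frames=200, frame_rate_hz=100, mod_rate_hz=4.0):
    """Synthetic 4-Hz level contour (35 to 65 dB), optionally mixed with steady noise."""
    levels = []
    for i in range(frames):
        speech_db = 50 + 15 * math.sin(2 * math.pi * mod_rate_hz * i / frame_rate_hz)
        power = 10 ** (speech_db / 10)
        if noise_db is not None:
            power += 10 ** (noise_db / 10)  # uncorrelated noise adds in power
        levels.append(10 * math.log10(power))
    return levels


print("quiet:", round(modulation_depth_db(speech_like_levels()), 1), "dB")
print("55-dB noise:", round(modulation_depth_db(speech_like_levels(noise_db=55)), 1), "dB")
```

The steady noise fills in the troughs of the contour, so the estimated modulation depth, and with it the inferred signal-to-noise ratio, drops.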

The modulation detector is used in the adaptive directional microphone algorithms of the Oticon Syncro and Phonak Perseo digital hearing aids. However, the results of the modulation detectors are used to make different decisions in the algorithm.

The modulation detector of Perseo analyzes the modulation pattern and the spectral center of gravity to estimate the presence or absence of speech and noise. An analogy for the spectral center of gravity is the center of gravity of an object. The difference is that center of gravity refers to the weight center of the object, whereas spectral center of gravity refers to the frequency center of a sound. The result of the modulation detector in Perseo is then combined with the priority setting (i.e., Audibility or Comfort) and used to determine the appropriate operational mode at that moment.
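A common way to compute a spectral center of gravity is the power-weighted mean frequency of the spectrum. The sketch below uses that definition with a plain discrete Fourier transform; whether Perseo weights by power, amplitude, or something else is not stated in the sources cited here, so the weighting and the function names are assumptions.

```python
import cmath
import math


def power_spectrum(samples, sample_rate_hz):
    """Naive DFT power spectrum from 0 Hz up to the Nyquist frequency."""
    n = len(samples)
    freqs, powers = [], []
    for k in range(n // 2 + 1):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(samples))
        freqs.append(k * sample_rate_hz / n)
        powers.append(abs(coeff) ** 2)
    return freqs, powers


def spectral_center_of_gravity(samples, sample_rate_hz):
    """Power-weighted mean frequency in Hz (assumed definition)."""
    freqs, powers = power_spectrum(samples, sample_rate_hz)
    total = sum(powers)
    if total == 0:
        return 0.0  # silent input: no meaningful center
    return sum(f * p for f, p in zip(freqs, powers)) / total


sample_rate_hz, n = 8000, 256
low_tone = [math.sin(2 * math.pi * 500 * i / sample_rate_hz) for i in range(n)]
mixture = [x + math.sin(2 * math.pi * 1500 * i / sample_rate_hz) for i, x in enumerate(low_tone)]
print(round(spectral_center_of_gravity(low_tone, sample_rate_hz)))
print(round(spectral_center_of_gravity(mixture, sample_rate_hz)))
```

Adding high-frequency energy pulls the center of gravity upward, just as adding weight to one end of an object shifts its balance point.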

Syncro, on the other hand, uses the results of the modulation detector to calculate SNR at the output of the directional microphone. The signal processing algorithm is programmed to seek the operational mode (i.e., the Surround Mode, Split- or Full-Directionality Modes) and the polar patterns to maximize the SNRs of the four frequency bands at the microphone output (Flynn, 2004a). Syncro defines speech as signals with modulation rates ranging from 2 to 20 Hz.

iii. Wind Noise Detector

The wind noise detector is used to detect the presence and the level of wind noise. Although the exact mechanisms used in the algorithms from different manufacturers are unknown, it is possible that the wind noise detectors make use of several physical characteristics of the wind noise and hearing aid microphones to infer the presence or absence of wind. First, directional microphones are more sensitive to sounds coming from the near field than sounds coming from the far field, whereas omni-directional microphones have similar sensitivity to sounds coming from the near field and the far field. To a dual-microphone directional microphone, near field refers to sounds coming from a distance of within 10 times of the microphone spacing; far field refers to sounds coming from a distance of more than 100 times of the microphone spacing. Sounds coming from a distance of between 10 to 100 times of the microphone spacing have the properties of both a near field and a far field (Thompson, 2004, personal communication).

When a sound comes from the far field, the outputs generated at the two omni-directional microphones that form the directional microphone are highly correlated. If the outputs are correlated 100%, the peaks and valleys of the waveforms from the two microphone outputs should coincide when an appropriate amount of delay is applied to one of the microphone outputs during the cross-correlation process. The amount of delay applied depends on the direction of the sound. In other words, the outputs of the two omni-directional microphones have a constant relationship and similar amplitude for a sound coming from the far field.

Because microphone output is highly correlated for sounds from the far field, when the microphone outputs are delayed and subtracted to form a directional microphone, the amplitude of the signal is reduced if the signal comes from the sides or the back hemisphere and not much affected if the signal comes from the front hemisphere. In addition, the directional microphone exhibits a 6-dB/octave roll-off at the low-frequency region for sounds coming from any direction. Assuming that the frequency response of the hearing aid is compensated for low-frequency roll-off, the output of the omni-directional microphone mode is comparable to the output of the directional microphone mode for sounds coming from the far field (Edwards, 2004, personal communication; Thompson, 2004, personal communication).

When wind is blowing, turbulence and eddies are generated close to the head. Wind noise is therefore a sound from the near field. For a sound coming from the near field, the outputs of the two omni-directional microphones that form a directional microphone are poorly correlated. When the outputs of the omni-directional microphones are delayed and subtracted, minimal reduction in amplitude results no matter which direction the sounds are coming from. In fact, the wind noise entering the two microphones is added, which further increases the sensitivity of the directional microphone to wind noise, especially at high frequencies. In addition, the output of the directional microphone does not exhibit a 6-dB/octave roll-off at the low-frequency region; that is, the frequency response of the sounds is similar for the directional and the omni-directional modes. Assuming the same hearing aid with low-frequency compensation, the output of the directional microphone is now much higher than the output of the omni-directional microphone for this near field sound because of the increased sensitivity and the low frequency gain compensation (Edwards, 2004, personal communication; Thompson, 2004, personal communication).

Although the exact mechanisms of wind noise detectors are proprietary to each hearing aid manufacturer, it is possible that one characteristic that the wind noise detector monitors is the difference between the outputs of the omni-directional and directional microphones (Edwards, 2004, personal communication). Using the example with equalized low-frequency gain, the outputs of the omni-directional and directional microphones are comparable for sounds coming from the far field, but the output of the directional microphone is much higher than that of the omni-directional microphone for sounds coming from the near field (wind noise). On the other hand, if the low-frequency gain is not equalized, the output of the directional microphone is lower than the output of the omni-directional microphone for sounds coming from the far field, but the output of the directional microphone is higher than the output of the omni-directional microphone for sounds from the near field.

Another possible strategy to detect wind noise is to use the correlation coefficient to infer the presence of wind noise. The correlation coefficient can be determined by applying several delays to the output of one of the omni-directional microphones and calculating the correlation coefficient between the outputs of the two microphones for each delay time. As mentioned previously, if the microphone outputs are correlated 100%, the peaks and valleys of the waveforms coincide perfectly. If the peaks and valleys of the waveform are slightly mismatched in amplitude or phase, the outputs are said to have a lower correlation coefficient. For sounds in the near field, the correlation coefficient can be close to 0%.

The wind noise detector can make inference based on the degree of correlation between the outputs of the two omni-directional microphones. If the outputs have a high correlation coefficient, the wind noise detector infers that wind noise is absent. If the outputs have a low correlation coefficient, the algorithm infers that wind noise is present (Thompson, 2004, personal communication; Siemens Audiology Group, 2004). According to Oticon, the wind noise detectors in the Syncro hearing aids detect the uncorrelated signals between the microphone outputs that are consistent with the spectral pattern of wind noise to infer the presence or absence of wind noise (Flynn, 2004, personal communication).

In addition, it is possible that a wind noise detector can set different correlation criteria for the coefficients at low- and high-frequency regions for wind noise reduction. High-frequency eddies are normally generated by finer structures around the head (e.g., pinna, battery door of an in-the-ear hearing aid) and low-frequency eddies are generated by larger structures (e.g., the head and the shoulders). As the finer structures are much closer to the hearing aid microphones (in the near field) and the larger structures are further away from the microphone (in the mixed field), high-frequency sounds tend to have a lower correlation coefficient than low-frequency sounds at the microphone output (Thompson, 2004, personal communication). A sample decision rule for the wind noise detector to make use of this acoustic phenomenon can be: wind noise is present in the microphone output if the correlation coefficient is less than 20% at the low-frequency region and less than 35% at the high-frequency region.
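The correlation-based strategy and the sample decision rule above can be sketched as follows. The sketch assumes the two microphone signals have already been split into a low-frequency and a high-frequency band (the filtering is omitted), expresses correlation as a fraction rather than a percentage, and searches only a few sample lags. The function names and the lag range are assumptions; the 20% and 35% criteria are the sample values from the text, not those of any product.

```python
import math
import random


def correlation(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0
    return cov / math.sqrt(var_a * var_b)


def max_lagged_correlation(front, back, max_lag):
    """Highest correlation found over a small range of sample delays."""
    best = -1.0
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:
            a, b = front[lag:], back[:len(back) - lag]
        else:
            a, b = front[:lag], back[-lag:]
        best = max(best, correlation(a, b))
    return best


def wind_noise_present(low_front, low_back, high_front, high_back, max_lag=2,
                       low_threshold=0.20, high_threshold=0.35):
    """Sample rule from the text: both bands must fall below their correlation criteria."""
    return (max_lagged_correlation(low_front, low_back, max_lag) < low_threshold
            and max_lagged_correlation(high_front, high_back, max_lag) < high_threshold)


rng = random.Random(0)
far_front = [rng.gauss(0, 1) for _ in range(2000)]
far_back = [0.0] + far_front[:-1]  # same far-field sound, one sample later
wind_front = [rng.gauss(0, 1) for _ in range(2000)]
wind_back = [rng.gauss(0, 1) for _ in range(2000)]  # independent turbulence per microphone
print(wind_noise_present(far_front, far_back, far_front, far_back))
print(wind_noise_present(wind_front, wind_back, wind_front, wind_back))
```

For brevity the demonstration feeds the same broadband signals to both bands; in practice each band would be filtered separately before the correlation is computed.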

When wind noise is detected, many hearing aids with adaptive directional microphones either remain at or switch to the omni-directional microphone mode to reduce annoyance of the wind noise or to increase the audibility of speech, or both (Kuk et al., 2002b; Oticon, 2004a; Siemens Audiology Group, 2004).

iv. Front-Back Detector

Some adaptive directional microphone algorithms also have a front-back ratio detector that detects the level differences between the front and back microphones and estimates the location of dominant signals (Fabry, 2004, personal communication; Groth, 2004, personal communication; Kuk, 2004, personal communication; Oticon, 2004a). For example, the front-back detector of Oticon Syncro combines the analysis results of the front-back ratio detector and the modulation detector to determine if the dominant speech is located at the back. If a higher modulation depth is detected at the output of the back microphone, the algorithm would remain at or switch to the omni-directional mode (Oticon, 2004a).

b. Determination of Operational Mode

As mentioned, the automatic switching between the omni-directional and directional mode, strictly speaking, can be classified as a different hearing aid feature in addition to adaptive directional microphones. Most hearing aids, however, have incorporated the automatic switching function into their adaptive directional microphone algorithms.

Every hearing aid has its own set of decision rules to determine whether the hearing aid should operate in the omni-directional mode or the directional mode at a given moment (Table 1). Some hearing aids have simple switching rules. For example, the switching is user-determined in GNReSound Canta, whereas Siemens Triano switches to the directional mode when the level of the incoming signal reaches a predetermined level.

Other adaptive directional microphone algorithms take more factors into account in the decision-making process, such as the level of the wind noise, the location of the dominating signal, and the level of environmental noise (Kuk et al., 2002a; Oticon, 2004a). The omni-directional mode is often chosen if wind noise dominates the microphone input, if the front-back ratio detector indicates that the dominant signal is located at the back of the hearing aid user, or if the level of the environmental noise or overall signal is below a predetermined level. The predetermined level is usually between 50 and 68 dB SPL, depending on the particular algorithm (Kuk, 2004, personal communication; Oticon, 2004a; Powers, 2004, personal communication).
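The three conditions described above can be combined into a simple rule. This is a minimal sketch: the function name, the input flags, and the 60 dB SPL default (one arbitrary value inside the 50 to 68 dB SPL range quoted above) are assumptions, and actual products weigh these factors in ways that are not public.

```python
def choose_microphone_mode(level_db_spl, wind_dominant, dominant_signal_at_back,
                           level_threshold_db_spl=60.0):
    """Return "omni" or "directional" from three detector outputs (hypothetical rule)."""
    if wind_dominant:
        return "omni"  # wind noise dominates the microphone input
    if dominant_signal_at_back:
        return "omni"  # keep a talker behind the user audible
    if level_db_spl < level_threshold_db_spl:
        return "omni"  # environment is assumed to be quiet
    return "directional"


print(choose_microphone_mode(72.0, wind_dominant=False, dominant_signal_at_back=False))
print(choose_microphone_mode(72.0, wind_dominant=True, dominant_signal_at_back=False))
print(choose_microphone_mode(48.0, wind_dominant=False, dominant_signal_at_back=False))
```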

Some adaptive directional microphone algorithms have more complex decision rules to determine the switching between the omni-directional and the directional mode. For example, the switching rules of Phonak Perseo can be changed by the clinician based on the hearing aid user's preference for audibility of speech (Audibility) or listening comfort (Comfort) (Table 1). If audibility is chosen, the hearing aid switches to directional mode only when speech-in-noise is detected in the incoming signal. If speech-only or noise-only is detected, the hearing aid remains in the omni-directional mode. However, if comfort is chosen, the hearing aid switches to the directional mode whenever noise is detected in the incoming signal. This means that the hearing aid remains in the omni-directional microphone mode only if speech-only is detected.
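The Audibility and Comfort rules just described amount to a small decision table, which the following sketch writes out. The function name and the string labels for the detected scene are assumptions made for illustration; they are not taken from the manufacturer's software.

```python
VALID_SCENES = {"speech_only", "noise_only", "speech_in_noise"}


def perseo_like_mode(scene: str, priority: str) -> str:
    """Map a detected scene and a clinician-chosen priority to a microphone mode.

    scene:    one of the hypothetical labels in VALID_SCENES
    priority: "audibility" or "comfort"
    """
    if scene not in VALID_SCENES:
        raise ValueError(f"unknown scene: {scene!r}")
    if priority == "audibility":
        # Directional only when speech and noise are detected together.
        return "directional" if scene == "speech_in_noise" else "omni"
    if priority == "comfort":
        # Directional whenever noise is present; omni only for speech alone.
        return "omni" if scene == "speech_only" else "directional"
    raise ValueError(f"unknown priority: {priority!r}")
```

The only scene on which the two settings disagree is noise-only input, where Audibility keeps the omni-directional mode and Comfort switches to the directional mode.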

The adaptive directional microphone algorithm implemented in Oticon Syncro has the most complex decision rules (Table 1). Syncro operates in three distinct directionality modes, namely, surround mode (i.e., omni-directional in all four bands), split-directionality mode (i.e., omni-directional at the lowest band and directional at the upper three bands), and full-directionality mode (i.e., directional in all four bands).

In the decision-making process, the algorithm uses the information from the level detector and the modulation detector in each of the frequency bands as well as two alarm detectors (i.e., the front-back ratio detector and the wind noise detector). The information provided by the alarm detectors takes precedence in the microphone mode selection process. As mentioned before, the signal processing algorithm implemented in Syncro seeks to maximize the SNR at the directional microphone output. Specifically, the algorithm stays in the surround mode if the omni-directional mode provides the best SNR at the microphone output, if the level of the incoming signal is soft to moderate, if the dominant speaker is located at the back, or if strong wind is detected.

The algorithm switches to the split-directionality mode if speech is detected in background noise, if the omni-directional mode at the lowest band and the directional mode in the upper three bands yield the highest SNR, if the incoming signal is at a moderate level, or if a moderate amount of wind noise is detected. The algorithm switches to the full-directionality mode if speech from the front is detected in a high level of background noise, if the SNR is the highest with all four bands in the directional mode, and if no or only a low level of wind noise is detected (Flynn, 2004a).

c. Determination of Polar Pattern(s)

After the adaptive directional microphone algorithm decides that the hearing aid should operate in the directional mode, it needs to decide which polar pattern to adopt at that moment. The common rule for all the adaptive directional microphone algorithms is that the polar pattern always has its most sensitive beam pointing to the front of the hearing aid user. To determine the polar pattern, many algorithms adjust the internal delay so that the resultant output or power is at a minimum (Fabry, 2004, personal communication; Groth, 2004, personal communication; Powers and Hamacher, 2004; Kuk et al., 2002). Oticon Syncro, on the other hand, uses the estimated SNR to guide the decision process for choosing the polar patterns at the four frequency bands. Specifically, the adaptive directional microphone algorithm of Syncro calculates the SNR of each polar pattern with nulls from 180° to 270° at 1° intervals in the four frequency bands. The polar patterns that yield the highest SNR at the directional microphone output at each frequency band are chosen. As most of the adaptive directional microphones do not limit their calculations to bidirectional, hypercardioid, supercardioid, or cardioid patterns, they are capable of generating polar patterns with nulls at any angle(s) from 90° to 270°.
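The power-minimization idea can be sketched with an idealized first-order pattern. The sketch below is an assumption-laden illustration, not a description of any commercial algorithm: it uses the textbook free-field pattern a + (1 − a)·cos θ, which always has unity gain at 0°, and it searches candidate null angles directly instead of adjusting an internal delay. Because this pattern is symmetric about the front-back axis, searching nulls from 90° to 180° also covers their mirror images from 180° to 270°. All function names are hypothetical.

```python
import math


def pattern_gain(a: float, azimuth_deg: float) -> float:
    """Idealized first-order pattern a + (1 - a) * cos(theta); the gain is 1 at 0 degrees."""
    return a + (1.0 - a) * math.cos(math.radians(azimuth_deg))


def weight_for_null(null_deg: float) -> float:
    """Pattern weight a that places a null at null_deg (valid for 90 to 180 degrees)."""
    c = math.cos(math.radians(null_deg))
    return -c / (1.0 - c)


def best_null_angle(noise_sources):
    """Return the null angle (degrees, 1-degree steps) that minimizes the output noise power.

    noise_sources: list of (azimuth_deg, power) pairs describing assumed noise sources.
    """
    def output_power(null_deg: int) -> float:
        a = weight_for_null(null_deg)
        return sum(power * pattern_gain(a, az) ** 2 for az, power in noise_sources)

    return min(range(90, 181), key=output_power)
```

With a single noise source, the search places the null on the source (for example, `best_null_angle([(120.0, 1.0)])` selects 120°, and a source at 240° selects the same pattern because of the mirror symmetry). With several sources of comparable level, no single null can cancel them all, which is consistent with the limited adaptive advantage reported for multiple or diffuse noise sources.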

d. Execution of Decision

After the algorithm decides which operational mode or which polar pattern it needs to adopt, the appropriate action is executed. An important set of parameters in this execution process is the time constants of the adaptive directional microphone algorithm. Similar to the attack-and-release times in compression systems, each adaptive directional microphone algorithm has adaptation/engaging times and release/disengaging times that govern the duration between changes in microphone modes or polar pattern choices. The algorithm implemented in each hearing aid has one set of time constants for switching from the omni-directional mode to the directional mode and another set for adapting to different polar patterns (Table 1). The adaptation time for the algorithms to switch from the omni-directional to the directional mode generally varies from 4 to 10 seconds, depending on the particular algorithm. The adaptation time for an algorithm to change from one polar pattern to another is usually much shorter. It varies from 10 milliseconds to less than 5 seconds, depending on the particular algorithm.
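One simple way to picture the effect of engaging and disengaging times is a hold timer: the mode changes only after the decision rules have requested the new mode continuously for the required duration. This is an assumed mechanism chosen for illustration; the source does not state how any manufacturer implements its time constants, and the class name and default values below are hypothetical.

```python
class ModeSwitcher:
    """Switch between "omni" and "directional" only after a request persists long enough."""

    def __init__(self, engage_s: float = 5.0, release_s: float = 5.0, mode: str = "omni"):
        self.engage_s = engage_s    # time needed to switch omni -> directional
        self.release_s = release_s  # time needed to switch directional -> omni
        self.mode = mode
        self._pending_s = 0.0       # how long a different mode has been requested

    def update(self, requested: str, dt_s: float) -> str:
        """Advance time by dt_s seconds with the currently requested mode; return the active mode."""
        if requested == self.mode:
            # The request agrees with the active mode, so any pending switch is cancelled.
            self._pending_s = 0.0
        else:
            self._pending_s += dt_s
            needed = self.engage_s if requested == "directional" else self.release_s
            if self._pending_s >= needed:
                self.mode = requested
                self._pending_s = 0.0
        return self.mode
```

With `engage_s=4.0`, a directional request must persist for four seconds before the mode changes, so a brief burst of noise leaves the hearing aid in the omni-directional mode.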

One feature of the adaptive directional microphones worth noting is that their adaptation time varies, depending on other settings in the hearing aid listening program. For example, the time constants of Siemens Triano and Widex Diva change with the listening program, whereas the time constants of Phonak Perseo change with the Audibility or Comfort setting. A set of faster time constants is adopted if audibility is chosen as the priority of hearing aid use, and a set of slower time constants is used if comfort is chosen to increase listening comfort.

In addition, the time constants of Oticon Syncro change with the Identity setting of the hearing aid program. The Identity setting is chosen by the clinician during the hearing aid fitting session based on the degree of hearing loss, age, life style, amplification experience, listening preference, and etiology of hearing loss of the hearing aid user. It controls the time constants for the adaptive directional microphones as well as many variables in the compression and noise reduction systems. In general, faster time constants are adopted if the Identity is set at Energetic and slower time constants are adopted if the Identity is set at Calm.

Unlike the adaptive release times implemented in compression systems, none of the time constants of the adaptive directional microphone algorithms varies in response to changes in the characteristics of the incoming acoustic signal. In other words, the time constants of the adaptive directional microphones are preset with the hearing aid settings, but they do not vary with the acoustic environment. Further, the time constants of the adaptive directional microphones are not directly adjustable by the clinician. They are preset with different programming/priority choices but are not available as a stand-alone parameter in the fitting software.

3.1.2.2. Verification and Limitations

a. Clinical Verification

Several researchers have conducted studies to compare the performance of the single-band adaptive directional microphones with the regular directional microphones with fixed polar patterns (Bentler et al., 2004b; Ricketts and Henry, 2002; Valente and Mispagel, 2004). Several inferences can be drawn from these research studies:

The adaptive directional microphones are superior to the fixed directional microphones if noise comes from a relatively narrow spatial angle (Ricketts and Henry, 2002).

The adaptive directional microphones perform similarly to the fixed directional microphones if noise sources span a wide spatial angle or if multiple noise sources from different azimuths coexist (Bentler et al., 2004a). According to Ricketts (personal communication, 2004), when multiple noise sources from different azimuths coexist, the dominant noise source needs to be at least 15 dB greater than the total level of all other noise sources to obtain a measurable adaptive advantage in at least two hearing aids.

When multiple noise sources from different azimuths coexist or the noise field is diffuse, adaptive directional microphones resort to a fixed cardioid or hypercardioid pattern (Table 1). Thus, the relative performance of the adaptive and fixed directional microphones in a diffuse field and for noise from a particular direction depends on the polar pattern of the fixed directional microphone. For example, compared to a fixed directional microphone with a cardioid pattern, the adaptive directional microphone yields better speech understanding if the noise comes from the side (i.e., it changes to bidirectional pattern) and yields similar speech understanding if the noise comes from the back (i.e., it changes to the cardioid pattern) (Ricketts and Henry, 2002).

Adaptive directional microphones have not been reported to be worse than the fixed directional microphones.

Subjective ratings using the Abbreviated Profile of Hearing Aid Benefit (APHAB) scales have shown higher ratings for the adaptive directional microphones compared with the omni-directional microphones after a 4-week trial in real life environments (Valente and Mispagel, 2004).

Oticon has conducted a clinical trial to compare the performance of its hearing aids with a single-band, first-order, fixed directional microphone (Adapto) and a multiband first-order adaptive directional microphone with the noise reduction and compression system active (Syncro) (Flynn, 2004b). The SNR-50s of hearing aid users were tested when speech was presented from 0° azimuth and uncorrelated broadband noises were presented from four locations in the back hemisphere. Flynn reported approximately a 1-dB improvement in the SNR-50 of hearing aid users between the omni-directional modes of the two hearing aids and approximately 2 dB of improvement between the directional modes of the two hearing aids. However, as the noise reduction algorithm was active for the multiband adaptive directional microphones and the two hearing aids have different compression systems, it is unclear how much of the difference was solely due to the differences in the directional microphones.

b. Time Constants

The optimum adaptation speeds between the omni-directional and directional modes or among different polar patterns have not been systematically explored. As noted before, adaptive directional microphone algorithms implemented in different hearing aids have different adaptation speeds for switching the microphone modes and the polar patterns. Some take several seconds to adapt and others claim to adapt almost instantaneously (i.e., in 4 to 5 milliseconds) (Kuk et al., 2000; Powers and Hamacher, 2002; Ricketts and Henry, 2002; Groth, 2004, personal communication).

Similar to the attack-and-release times of a compression system, there are pros and cons associated with having a faster or a slower adaptation time for the adaptive directional microphones. For example, a system with a fast adaptation time can change the polar pattern for maximum noise reduction when the head moves or when a noise source is traveling in the back hemisphere of the hearing aid user. The fast adaptation may be overly active, however, and it may change its polar pattern during the stressed and unstressed patterns of natural speech when a competing speaker and a noise source are located at different locations in the back hemisphere of the hearing aid user. The advantage of a slower adaptation time is that it does not act on every small change in the environment, yet it may not be able to quickly and effectively attenuate a moving noise source, for example, a truck moving from one side to the other behind the hearing aid user.

3.1.3. Second-Order Directional Microphones

Although first-order directional microphones generally provide 3 to 5 dB of improvement in SNR for speech understanding in real-world environments, people with hearing loss often experience a much higher degree of SNR-loss. This means that the benefits provided by first-order directional microphones are insufficient to close the gap between the speech understanding ability of people with hearing loss and that of people with normal hearing in background noise. This limitation prompted the development of a number of instruments, such as second-order directional microphones and array microphones, to provide higher directionality.

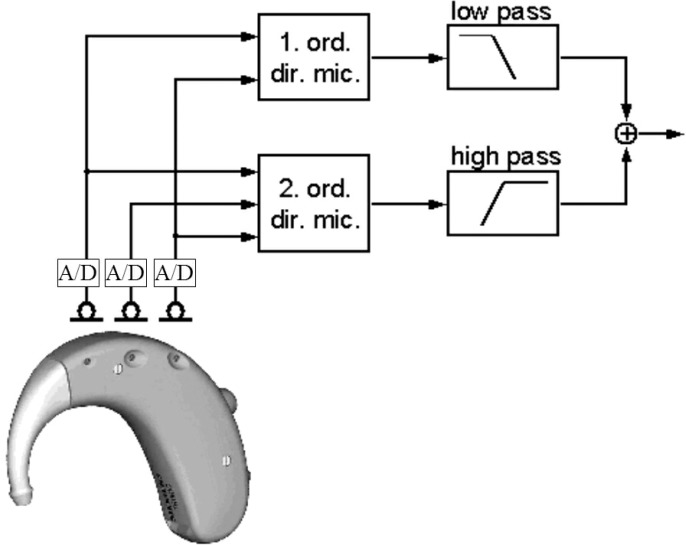

Second-order directional microphones are composed of three matched omni-directional microphones, and they usually have a higher directivity index than the first-order directional microphones. The only commercially available second-order directional microphones to date are implemented in the behind-the-ear Siemens Triano hearing aids. According to Siemens, Triano is implemented with a first-order directional microphone for frequencies below 1000 Hz and a second-order directional microphone above 1000 Hz (Figure 6) (Powers and Hamacher, 2002).

Figure 6.

The implementation of a commercially available hybrid second-order directional microphone. The outputs of the front and back microphones are processed to form a first-order directional microphone (1 ord. dir. mic.), the output of which is low-pass filtered. The outputs of all three microphones are processed to form a second-order directional microphone (2 ord. dir. mic.), the output of which is then high-pass filtered. The low- and high-pass filtered signals are subsequently summed and processed by other signal processing algorithms in the hearing aid. (Reprinted with permission from Powers and Hamacher, Hear J 55[10], 2002).

The reason for this particular setup is that the second-order directional microphone is implemented using delay-and-subtract processing, which yields higher internal microphone noise and a low-frequency roll-off of 12 dB/octave. The steep low-frequency roll-off makes it difficult to amplify the low-frequency region, and any effort to compensate for the roll-off would exacerbate the amount of internal noise.
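The steeper roll-off can be checked numerically with an idealized model. The sketch below assumes that a second-order stage behaves like two cascaded first-order delay-and-subtract stages for an on-axis sound, that the internal delay equals the external delay, and that the port spacing is 10 mm; the spacing, the 100 Hz test frequency, and the function names are illustrative assumptions and do not describe the Triano hardware.

```python
import math

SPEED_OF_SOUND_M_S = 343.0


def on_axis_magnitude(freq_hz: float, order: int, spacing_m: float = 0.010) -> float:
    """On-axis magnitude of `order` cascaded delay-and-subtract stages.

    One stage has magnitude |1 - exp(-j*2*pi*f*T)| = 2*|sin(pi*f*T)|, where T is the
    total delay (external plus an equal internal delay, an assumption of this sketch).
    """
    total_delay_s = 2.0 * spacing_m / SPEED_OF_SOUND_M_S
    single_stage = 2.0 * abs(math.sin(math.pi * freq_hz * total_delay_s))
    return single_stage ** order


def rolloff_db_per_octave(order: int, freq_hz: float = 100.0) -> float:
    """Level change in dB when the frequency doubles from freq_hz to 2 * freq_hz."""
    low = on_axis_magnitude(freq_hz, order)
    high = on_axis_magnitude(2.0 * freq_hz, order)
    return 20.0 * math.log10(high / low)
```

Under these assumptions `rolloff_db_per_octave(1)` is close to 6 dB and `rolloff_db_per_octave(2)` is close to 12 dB. Restoring a flat response would therefore require roughly twice as much low-frequency gain (in dB) for the second-order stage, and that gain would amplify the internal microphone noise as well.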

The first-order directional microphone is used to circumvent the problem by keeping the internal noise manageable. It can also preserve the ability of the hearing aid to provide low-frequency amplification. The second-order directional microphone is used to take advantage of its higher directionality. The directional microphones of Triano can also be programmed to have adaptive directionality.

3.1.3.1. Verification and Limitations

Bentler and colleagues (2004a) measured a random sample of behind-the-ear Triano hearing aids with the hybrid second-order directional microphones and reported that the free-field average directivity index (DI-a) values ranged from 6.5 to 7.8 dB. The DI-a values were calculated from the sum-average of the DI values from 500 to 5000 Hz without frequency weighting. When the Triano hearing aids were worn on a Knowles Electronics Manikin for Acoustic Research (KEMAR), the DI-a values ranged from 4.5 to 6.0 dB.
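The DI-a calculation described above is an unweighted mean of the per-frequency DI values within the 500 to 5000 Hz range, which can be sketched as follows. The source does not list the measurement frequencies, so the frequencies and DI values in the usage example are hypothetical placeholders and not measured data; the function name is likewise an assumption.

```python
from typing import Mapping


def average_directivity_index(
    di_db_by_freq_hz: Mapping[float, float],
    low_hz: float = 500.0,
    high_hz: float = 5000.0,
) -> float:
    """Unweighted mean of the DI values (dB) whose frequencies lie in [low_hz, high_hz]."""
    in_range = [di for freq, di in di_db_by_freq_hz.items() if low_hz <= freq <= high_hz]
    if not in_range:
        raise ValueError("no DI values inside the requested frequency range")
    return sum(in_range) / len(in_range)


# Hypothetical DI values (dB) keyed by frequency (Hz), for illustration only.
example_di = {250.0: 3.0, 500.0: 6.0, 1000.0: 7.0, 2000.0: 8.0, 4000.0: 7.0, 8000.0: 2.0}
example_di_a = average_directivity_index(example_di)
```

Because every frequency in the range carries equal weight, this average does not reflect the relative importance of different frequency regions for speech understanding.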