Abstract

More than 60,000 people worldwide use cochlear implants as a means to restore functional hearing. Although individual performance variability is still high, an average implant user can talk on the phone in a quiet environment. Cochlear-implant research has also matured as a field, as evidenced by the exponential growth in both the patient population and scientific publication. The present report examines current issues related to audiologic, clinical, engineering, anatomic, and physiologic aspects of cochlear implants, focusing on their psychophysical, speech, music, and cognitive performance. This report also forecasts clinical and research trends related to presurgical evaluation, fitting protocols, signal processing, and postsurgical rehabilitation in cochlear implants. Finally, a future landscape in amplification is presented that requires a unique, yet complementary, contribution from hearing aids, middle ear implants, and cochlear implants to achieve a total solution to the entire spectrum of hearing loss treatment and management.

Introduction

Cochlear implants are the only medical intervention that can restore partial hearing to a totally deafened person via electric stimulation of the residual auditory nerve. Twenty years ago, the cochlear implant started as a single-electrode device that was used mainly for enhancing lipreading and providing sound awareness. Today, it is a sophisticated multielectrode device that allows most of its 60,000 users to talk on the phone. The implant candidacy has been expanded to include children as young as 3 months and adults who have significant functional residual hearing, particularly at low frequencies. Commercial and research enterprises have also matured to generate annual revenues of hundreds of millions of dollars and to command attention from multidisciplinary fields including engineering, medicine, and neuroscience.

This review describes what a cochlear implant is and how it works, and discusses its past, present, and future. It also focuses on audiologic and clinical issues, engineering issues, anatomic and physiologic issues, and cochlear-implant performance in basic psychophysics, speech, music, and cognition. Finally, trends in cochlear implants from clinical, research, and system points of view are presented.

History of Cochlear Implants

Development of cochlear implants can be traced back at least 200 years to the Italian scientist Alessandro Volta, who invented the battery (the unit volt was named after him). He used the battery as a research tool to demonstrate that electric stimulation could directly evoke auditory, visual, olfactory, and touch sensations in humans (Volta, 1800). When placing one of the two ends of a 50-volt battery in each of his ears, he observed that

“… at the moment when the circuit was completed, I received a shock in the head, and some moments after I began to hear a sound, or rather noise in the ears, which I cannot well define: it was a kind of crackling with shocks, as if some paste or tenacious matter had been boiling … The disagreeable sensation, which I believe might be dangerous because of the shock in the brain, prevented me from repeating this experiment…”

Safe and systematic studies on the effect of electric stimulation on hearing were not reported for another 150 years, until modern electronic technology emerged. Equipped with vacuum tube based oscillators and amplifiers, Stevens and colleagues identified at least three mechanisms that were responsible for the “electrophonic perception” (Stevens, 1937; Stevens and Jones, 1939; Jones et al., 1940).

The first mechanism was related to the “electromechanical effect,” in which electric stimulation causes the hair cells in the cochlea to vibrate, resulting in a perceived tonal pitch at the signal frequency, as if the hair cells had been acoustically stimulated.

The second mechanism was related to the tympanic membrane's conversion of the electric signal into an acoustic signal, resulting in a tonal pitch perception but at the doubled signal frequency. Stevens and colleagues were able to isolate the second mechanism from the first, because they found that only the original signal pitch was perceived with electric stimulation in patients lacking the tympanic membrane.

The third mechanism was related to direct electric activation of the auditory nerve, because some patients reported a noise-like sensation in response to sinusoidal electrical stimulation, much steeper loudness growth with electric currents, and occasional activation of facial nerves.

The first direct evidence of electric stimulation of the auditory nerve, however, was offered by a group of Russian scientists who reported that electric stimulation caused hearing sensation in a deaf patient whose middle and inner ears were damaged (Andreev et al., 1935).

In France, Djourno and colleagues reported in 1957 successful hearing by using electric stimulation in two totally deafened patients (Djourno and Eyries, 1957; Djourno et al., 1957a; Djourno et al., 1957b). Their success spurred an intensive level of activity in attempts to restore hearing to deaf people on the United States west coast in the 1960s and 1970s (Doyle et al., 1964; Simmons et al., 1965; Michelson, 1971; House and Urban, 1973).

Although the methods were crude compared with today's technology, these early studies identified critical problems and limitations that needed to be considered for successful electric stimulation of the auditory nerve. For example, they observed that, compared with acoustic hearing, electric stimulation of the auditory nerve produced a much narrower dynamic range, much steeper loudness growth, temporal pitch limited to several hundred hertz, and much broader tuning or no tuning at all. Bilger provided a detailed account of these earlier activities (Bilger, 1977a, 1977b; Bilger and Black, 1977; Bilger et al., 1977a, 1977b; Bilger and Hopkinson, 1977).

On the commercial side, in 1984 the House-3M single-electrode implant became the first device approved by the U.S. Food and Drug Administration (FDA) and had several hundred users. The University of Utah developed a six-electrode implant with a percutaneous plug interface and also had several hundred users. The Utah device was called either the Ineraid or Symbion device in the literature and was well suited for research purposes (Eddington et al., 1978; Wilson et al., 1991b; Zeng and Shannon, 1994). The University of Antwerp in Belgium developed the Laura device that could deliver either 8-channel bipolar or 15-channel monopolar stimulation. The MXM laboratories in France also developed a 15-channel monopolar device, the Digisonic MX20. These devices were later phased out and are no longer available commercially. At present, the three major cochlear-implant manufacturers are Advanced Bionics Corporation, U.S.A. (Clarion); MED-EL Corporation, Austria; and Cochlear Corporation, Australia (Nucleus).

Present Status

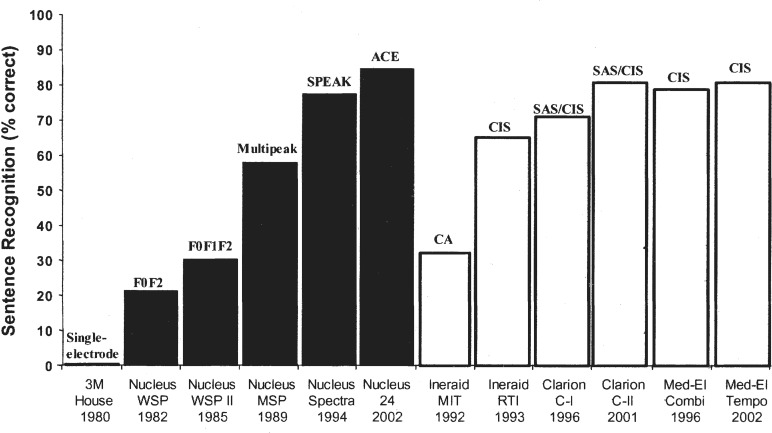

At present, the number of cochlear-implant users has reached 60,000 worldwide, including 20,000 children, and is still growing exponentially. Functionally, the cochlear implant has evolved from the single-electrode device that was used mostly as an aid for lipreading and sound awareness to a modern, multielectrode device that can allow an average user to talk on the telephone. Figure 1 documents the advances made in cochlear implants in terms of speech performance over the past 20 years.

Figure 1.

Speech recognition in cochlear-implant users. The x-axis labels show the type of device, the processor model, the place where the study was conducted, and the year the study was published. The y-axis shows percent correct scores for sentence recognition in quiet. The scores in earlier cochlear implants (House/3M, Nucleus WSP, WSP II, MSP, Ineraid MIT, and RTI) were averaged from investigative studies published in peer-reviewed journals. The scores in later devices were obtained from relatively large-scale company-sponsored clinical trials that had also been published in peer-reviewed journals. Except for the “single-electrode” label on the 3M/House device, the text on top of the bars represents speech processing strategies including SPEAK (Spectral PEAK extraction), ACE (Advanced Combination Encoder), CA (Compressed Analog), CIS (Continuous Interleaved Sampler), and SAS (Simultaneous Analog Stimulation).

The early single-electrode device provided essentially no open-set speech recognition except in a few subjects. The steady improvement at a rate of about 20 percentage points in speech recognition per 5 years since 1980 was particularly apparent with the Nucleus device. Despite the differences in speech processing and electrode design, there appears to be no significant difference in performance among the present cochlear-implant recipients who use different devices.

Cochlear-implant research has also improved and matured as a scientific field. Figure 2 shows the annual number of publications on cochlear implants and hearing aids retrieved from the MEDLINE database. As of January 27, 2004, 2,699 publications in the database were related to cochlear implants. For comparison, the entry “hearing AND aid” yielded 2,740 publications, while that for “auditory” yielded 58,551. The annual number of publications clearly shows an exponential growth pattern, mirroring the growth pattern in the number of cochlear-implant users and also, most likely, in the amount of funding.

Figure 2.

Annual number of publications for cochlear implants (the solid line) and hearing aids (the dashed line). The numbers were obtained by searching entries containing “cochlear AND implant” or “hearing AND aid” in the MEDLINE database (http://www.pubmed.gov). The search was performed on January 27, 2004.

Research in hearing aids clearly started earlier than it did in cochlear implants, as evidenced by the relatively constant 10 to 20 annual publications between the early 1960s and middle 1970s. Hearing aid publications took off in the middle 1970s and have since increased steadily to about 100 publications at present. For comparison, cochlear-implant publications did not start to appear in the database until 1972, when animal studies were conducted with an inner ear electrode implant (Horwitz et al., 1972). The first human study was reported by Dr. William House in 1974 (House, 1974). The implant literature has grown exponentially since the early 1990s and exceeded the number of hearing aid publications in the middle 1990s, reaching 250 publications in the year 2000.

Audiologic and Clinical Issues

Patient Selection Criteria

As another sign of progress and maturity in cochlear implants, the audiologic criteria for cochlear implantation have been continuously relaxed from bilateral total deafness (>110 dB HL) in the early 1980s, to severe hearing loss (>70 dB HL) in the 1990s, and then to the current suprathreshold speech-based criteria (<50% open-set sentence recognition with properly fitted hearing aids). Cochlear implantation for both adults and children has received FDA approval.

In cases of auditory neuropathy, patients with a nearly normal audiogram have received cochlear implants and achieved better performance, particularly in noise (Peterson et al., 2003). In cases of combined acoustic and electric hearing, cochlear implantation with short electrodes has been proposed for patients with significant residual low-frequency hearing (von Ilberg et al., 1999; Gantz and Turner, 2003).

As the performance of the cochlear implant continues to get better, its cost will eventually drop with large volume and the candidate base for cochlear implants will continue to grow and overlap with the traditional hearing aid market. A future hearing-impaired listener may have a choice between hearing aids and cochlear implants, or even both.

Presurgical and Postsurgical Issues

Despite significant research effort, there is no reliable and accurate presurgical predictor of postsurgical performance in cochlear implants. Several factors such as duration and etiology of deafness, as well as presurgical auditory and speech performance, have been shown to be correlated with postsurgical performance (Blamey et al., 1996; van Dijk et al., 1999; Gomaa et al., 2003). However, no presurgical factor accounts for enough of the variability in postsurgical performance to let the physician tell a prospective candidate, with a high level of confidence, how well he or she may do with the implant. Innovative means such as brain imaging and cognitive measures might be used in the future to help increase the accuracy and reliability of presurgical prediction of postsurgical performance (Giraud et al., 2001; Lee et al., 2001; Pisoni and Cleary, 2003).

The cochlear-implant surgical approach and procedure have also been greatly improved and simplified as physicians continue to gain experience, thus minimizing surgical trauma, duration, and potential complications. For example, a procedure that typically required a hospital stay of 1 to 2 days in the early 1980s now takes a few hours on an outpatient basis.

An increased risk of bacterial meningitis with cochlear implants has been a recent concern, particularly for those who have received an implant with a positioner that requires a larger opening in the cochlea (Reefhuis et al., 2003). However, the positioner is no longer being used, and presurgical vaccination and postsurgical monitoring are effective to prevent and treat any potential bacterial infections after a patient receives the implant.

Cost-Utility Analysis and Reimbursement

Cost-utility analysis has been performed in both adults and children to show a favorable comparison of cochlear implants with other life-saving medical devices in terms of improving the implant user's quality of life (Cheng and Niparko, 1999; Palmer et al., 1999; Cheng et al., 2000). These studies, coupled with formal FDA approval, have helped convince many insurance companies to pay full or nearly full device, surgery, and hospital costs for cochlear implants, which may range from US $40,000 to $75,000. However, Medicare still reimburses significantly less (about US $20,000), requiring those who depend on Medicare to pay the remaining cost. In developing countries, the surgical cost is low but the device cost is prohibitively high, thus denying access to cochlear implants for the overwhelming majority of deaf people there.

Educational and Linguistic Issues

A key question in implanting deaf children is whether they can develop normal language in a mainstream educational environment. The evidence is accumulating to support the hypothesis that early implantation promotes the maturation process in the auditory cortices and normal language development.

Two research groups have provided evidence showing delayed cortical maturation without auditory stimulation and additionally, that cochlear implants can restart this maturation process in children who received cochlear implants before about 7 years old (Ponton et al., 1996; Sharma et al., 2002). Correspondingly, language development measures have shown that children with cochlear implants performed significantly better than expected from unimplanted deaf children and approached or reached the rate of language development observed in children with normal hearing (Moog and Geers, 1999; Svirsky et al., 2000).

(Re)habilitation Issues

No formal structured habilitation or rehabilitation protocols have been developed for children or adults who have received cochlear implants. Most patients have received only basic follow-up visits to tune the “map” in the speech processor in their cochlear implant. On average, postlingually deafened cochlear-implant users go through a learning or adaptation process from a few months to as long as a few years, during which their speech performance continues to get better (Tyler et al., 1997). The individual variability is large in terms of both the speed of adaptation and the final plateau performance. The causes of this large individual variability are not clear, possibly reflecting more patient related variables than device related variables (Wilson et al., 1993).

The rapidly growing number of cochlear-implant users has demanded that researchers and educators develop field-tested, effective, structured (re)habilitation programs and protocols, and additionally, train audiologists, speech pathologists, and special education teachers in the skills required to work with adult and child implant users.

Engineering Issues

Taking advantage of explosive growth and innovations in technology, particularly in microelectronics in the last two decades, cochlear implants have evolved from analog to digital, from single electrode to multiple electrodes, from percutaneous plugs to transcutaneous transmission, and from simple modulation to complicated feature extraction processing.

System Design

In normal hearing, sound travels from the outer ear through the middle ear to the cochlea, where it is converted into electric impulses that the brain can understand. Most cases with severe hearing loss involve damage to this conversion of a sound to an electric impulse in the cochlea. A cochlear implant bypasses this natural conversion process by directly stimulating the auditory nerve with electric pulses. Hence, the cochlear implant will have to mimic and replace auditory functions from the external ear to the inner ear.

Figure 3 shows a modern cochlear implant worn on the body. The basic components include a microphone that sends the sound via a wire to the speech processor that is worn externally, where it is converted into a digital signal. The signal travels back to a headpiece, held in place by a magnet attached to the implant on the other side of the skin, that transmits coded radio frequencies across the skin. The implant decodes the signals, converts them into electric currents, and sends them along a wire threaded into the cochlea. The electrodes at the end of the wire stimulate the auditory nerve connected to the central nervous system, where the electrical impulses are interpreted as sound.

Figure 3.

Block diagram for key components in a typical cochlear implant system. First, a microphone (1) picks up the sound and sends it via a wire (2) to the speech processor (3) that is worn behind the ear or on the belt like a pager for older versions. The speech processor converts the sound into a digital signal according to the individual's degree of hearing loss. The signal travels back to a headpiece (4) that contains a coil transmitting coded radio frequencies across the skin. The headpiece is held in place by a magnet attracted to the implant (5) on the other side of the skin. The implant contains another coil that receives the radio frequency signal and also hermetically sealed electronic circuits. The circuits decode the signals, convert them into electric currents, and send them along wires threaded into the cochlea (6). The electrodes at the end of the wire (7) stimulate the auditory nerve (8) connected to the central nervous system, where the electrical impulses are interpreted as sound.

Although the specific components and designs may be different among the implant manufacturers, the general working principles are the same. For example, the microphone can be mounted at the ear level on top of the ear hook, or pinned at the chest level. The shape, color, and radio frequency (RF) of the transmission coil may be different, but the magnetic coupling is identical. The following section discusses in detail speech processors, electrodes, telemetry, and fitting systems in the present cochlear implants.

Speech Processor

The speech processor is the brain in a cochlear implant. It extracts specific acoustic features, codes them via RF transmission, and controls the parameters in electric stimulation. In the earliest single-electrode 3M/House implant, an analog sound waveform was compressed in amplitude and then was simply amplitude modulated to a 16-kHz sinusoidal carrier to serve as the effective electric stimulus.
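
The single-channel scheme described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the source does not specify the compression law or any sampling rate, so the power-law exponent, the 64-kHz sampling rate, and the simple product form of amplitude modulation used here are hypothetical choices, as are the function names `compress` and `modulate`.

```python
import math

def compress(x, exponent=0.3):
    """Sign-preserving power-law compression of one sample in [-1, 1].

    The power-law form and the exponent are assumptions for illustration.
    """
    return math.copysign(abs(x) ** exponent, x)

def modulate(samples, fs, carrier_hz=16000.0, exponent=0.3):
    """Compress each sample, then multiply it by a sinusoidal carrier."""
    if fs <= 2 * carrier_hz:
        raise ValueError("sampling rate must exceed twice the carrier frequency")
    return [
        compress(x, exponent) * math.sin(2 * math.pi * carrier_hz * n / fs)
        for n, x in enumerate(samples)
    ]

# Example: 5 ms of a 500-Hz tone sampled at 64 kHz (hypothetical values).
fs = 64000
tone = [0.5 * math.sin(2 * math.pi * 500 * n / fs) for n in range(320)]
stimulus = modulate(tone, fs)
```

The result is a 16-kHz carrier whose amplitude follows the compressed sound waveform, which corresponds to the single effective electric stimulus described for this device.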

All modern multielectrode implants have been developed to take advantage of the so-called tonotopic organization in the cochlea, namely, the apical part of the cochlea encodes low frequencies while the basal part encodes high frequencies. These implants, therefore, all have implemented a bank of filters to divide speech into different frequency bands, but they differ significantly in their processing strategies to extract, encode, and deliver the right features.
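
As a rough illustration of how a filter bank can follow the tonotopic organization, the sketch below divides a frequency range into logarithmically spaced bands and assigns the lowest band to the most apical electrode. The 200 to 7,000 Hz range, the logarithmic spacing, and the function names are assumptions made for this example; actual devices use manufacturer-specific band allocations.

```python
def band_edges(n_bands, f_low=200.0, f_high=7000.0):
    """Return n_bands + 1 logarithmically spaced band edges in Hz.

    The default frequency range is an assumption for illustration.
    """
    if n_bands < 1 or not 0 < f_low < f_high:
        raise ValueError("need n_bands >= 1 and 0 < f_low < f_high")
    ratio = (f_high / f_low) ** (1.0 / n_bands)
    return [f_low * ratio ** i for i in range(n_bands + 1)]

def frequency_to_electrode_map(n_electrodes, f_low=200.0, f_high=7000.0):
    """Assign one band per electrode; electrode 0 is the most apical."""
    edges = band_edges(n_electrodes, f_low, f_high)
    return [(e, edges[e], edges[e + 1]) for e in range(n_electrodes)]

# Example: an 8-band allocation, apical (low frequency) to basal (high).
for electrode, low_hz, high_hz in frequency_to_electrode_map(8):
    print(f"electrode {electrode}: {low_hz:7.1f} to {high_hz:7.1f} Hz")
```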

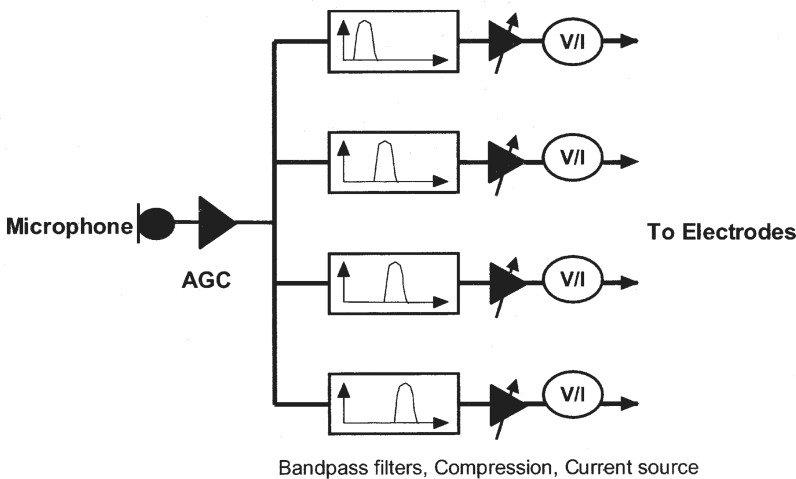

One school of thought was to deliver the band-specific, amplitude-compressed analog waveforms to different electrode locations in the cochlea (Eddington et al., 1978). Figure 4 shows the schematics of a typical compressed-analog strategy. In the Ineraid device, the return or ground electrode is located outside of the cochlea in the temporalis muscle (the so-called monopolar stimulation mode). In the Clarion device, the compressed-analog processing is called the Simultaneous-Analog-Strategy (SAS), in which the return electrode can be an adjacent intracochlear electrode (the so-called bipolar mode).

Figure 4.

Block diagram for the Compressed-Analog cochlear implant speech processor, adapted from Eddington et al., 1978. A microphone picks up the sound and the automatic gain control (AGC) circuit attenuates or amplifies the sound depending on the talker's vocal effort and distance from the receiver. The sound is then divided into four frequency bands by bandpass filters in this particular implementation. The narrow-band signal is compressed in amplitude by gain control to be within the narrow electric dynamic range (see the section on intensity, loudness, and dynamic range in psychophysical performance for details). The compressed band-specific analog signals are converted to currents and finally delivered to different intracochlear electrodes, with the most apical electrode receiving the signal from the lowest frequency band and the most basal electrode receiving the signal from the highest frequency band.

Another school of thought was based on speech production and perception, in which spectral peaks or formants, which reflect the resonance properties of the vocal tract, are extracted and delivered to different electrodes according to the presumed tonotopic relationship between the place of the electrode and its evoked pitch. The earliest version was the F0F2 strategy implemented in the Nucleus Wearable Speech Processor (WSP II) (Clark et al., 1984), in which the fundamental frequency (F0) and the second formant frequency (F2) were extracted, with F0 determining the stimulation rate and F2 determining the location of the stimulating electrode. Subsequently, the first formant (F1) was added in the Nucleus WSP III processor, and up to six spectral peaks were extracted in the Nucleus MPEAK strategy (Skinner et al., 1991).

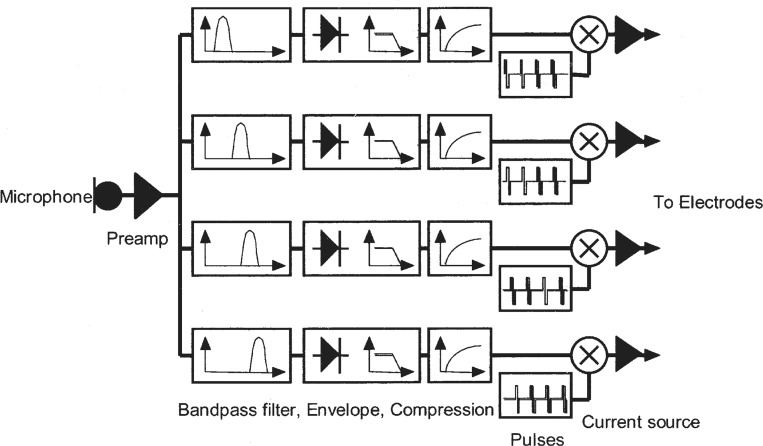

A third school of thought was based on the implicit encoding of temporal envelope cues and could be traced back to earlier work on the speech vocoder (Dudley, 1939). Figure 5 shows the schematic diagram of the Continuous-Interleaved-Sampling (CIS) processor, an implant strategy intended to extract and faithfully deliver the temporal envelope cues (Wilson et al., 1991a).

Figure 5.

Block diagram for the Continuous-Interleaved-Sampling cochlear implant speech processor, whose preprocessing is similar to that of the compressed-analog processor. The envelope is compressed to match the narrow dynamic range in electric stimulation, and the envelope from each subband is then amplitude-modulated to a pulsatile carrier that interleaves with pulsatile carriers from other subbands. Adapted from Wilson et al. (1991a).

CIS preprocessing is similar to the compressed-analog processor, but the band-specific signal is subject to envelope extraction via a rectifier and a low-pass filter with its cutoff frequencies typically set at a range of 160 to 320 Hz. The slowly varying temporal envelopes from 3 to 4 spectral bands can deliver high levels of speech intelligibility in quiet (Shannon et al., 1995). The envelope, again, has to be compressed, usually logarithmically, to match the narrow dynamic range in electric stimulation.
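
The envelope extraction step can be sketched as a full-wave rectifier followed by a low-pass filter. The one-pole filter, the 200-Hz cutoff (chosen from within the 160 to 320 Hz range mentioned above), and the function name `extract_envelope` are assumptions for illustration; real processors may use higher-order filters or other envelope detectors, and the compression stage is not shown here.

```python
import math

def extract_envelope(band_signal, fs, cutoff_hz=200.0):
    """Full-wave rectify a band-limited signal and smooth it with a
    one-pole low-pass filter (an assumed, minimal filter design)."""
    alpha = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / fs)
    envelope = []
    state = 0.0
    for x in band_signal:
        rectified = abs(x)                    # full-wave rectification
        state += alpha * (rectified - state)  # one-pole low-pass smoothing
        envelope.append(state)
    return envelope

# Example: a steady 1-kHz tone at a 16-kHz sampling rate (hypothetical).
fs = 16000
tone = [math.sin(2 * math.pi * 1000 * n / fs) for n in range(1600)]
env = extract_envelope(tone, fs)
```

For a steady tone the output settles near the mean of the rectified waveform, with a small residual ripple that a steeper filter would reduce further.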

To avoid electrode interactions caused by electrical field overlap in simultaneous stimulation, which conceivably can smear the band-specific envelope cues, the envelope from each subband is then amplitude-modulated to a high-rate (>800 Hz) pulsatile carrier that interleaves with pulsatile carriers from other subbands. In other words, only one electrode is being stimulated at any given time.
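
A minimal sketch of the interleaving idea follows: each stimulation frame is divided into one time slot per electrode, so that no two pulses overlap. The apex-to-base firing order, the parameter values, and the function name `interleaved_schedule` are assumptions for illustration only.

```python
def interleaved_schedule(n_electrodes, rate_hz, n_frames, pulse_s):
    """Return (onset_time_s, electrode) pairs with non-overlapping pulses.

    rate_hz is the pulse rate per electrode; pulse_s is the total
    duration of one pulse. The firing order (0, 1, 2, ...) is assumed.
    """
    frame_s = 1.0 / rate_hz          # time between pulses on one electrode
    slot_s = frame_s / n_electrodes  # time available to each electrode
    if pulse_s > slot_s:
        raise ValueError("pulse does not fit in its time slot")
    return [
        (frame * frame_s + electrode * slot_s, electrode)
        for frame in range(n_frames)
        for electrode in range(n_electrodes)
    ]

# Example: 8 electrodes at 1000 pulses per second each, 50-microsecond pulses.
schedule = interleaved_schedule(8, 1000.0, 2, 50e-6)
```

The slot length shows the trade-off directly: raising the per-electrode rate or the number of electrodes shortens the time available for each pulse.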

Variations of the temporal envelope extraction have been developed and implemented. In a typical CIS implementation, the number of bandpass filters is identical to the number of electrodes, ranging from 6 in the earlier implementation in the Ineraid device to 8 in the Clarion CI device, 12 in the MED-EL device, and 22 in the Nucleus 24 device. Alternatively, the number of filters (m) can be greater than the number of stimulating electrodes (n), in which case the envelopes from the 6 to 8 subbands with the highest energy are used to stimulate the corresponding electrodes. This implementation has been called the n-of-m, or peak-picking, strategy and is currently used in the Nucleus SPEAK processor (McDermott et al., 1992).
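
The peak-picking step of an n-of-m strategy can be sketched as selecting the n largest of m subband envelopes in each stimulation frame. The function name `n_of_m` and the example envelope values are hypothetical.

```python
def n_of_m(envelopes, n):
    """Return the indices of the n subbands with the largest envelopes,
    listed in ascending band order."""
    if not 0 < n <= len(envelopes):
        raise ValueError("n must be between 1 and the number of subbands")
    ranked = sorted(range(len(envelopes)),
                    key=lambda i: envelopes[i], reverse=True)
    return sorted(ranked[:n])

# Example: pick 3 of 6 subbands in one frame (hypothetical envelope values).
frame = [0.1, 0.9, 0.3, 0.8, 0.05, 0.7]
selected = n_of_m(frame, 3)
```

Only the electrodes corresponding to the selected subbands would be stimulated in that frame; the selection is repeated for every frame as the spectrum changes.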

To balance the need for high-rate stimulation against the need to minimize electrode interaction, two or more distant electrodes may be stimulated simultaneously with pulsatile carriers; these strategies are called the Paired Pulsatile Sampler (PPS) and Multiple Pulsatile Stimulation (MPS), respectively (Loizou et al., 2003). To take advantage of better temporal envelope representation with high-rate stimulation (>2000 Hz), all manufacturers have recently introduced high-rate stimulation strategies, including HiResolution from Advanced Bionics, ACE from Cochlear Corporation, and Tempo from MED-EL. Figure 6 summarizes these processing strategies on the basis of their different philosophies and implementations. Their corresponding performance in speech can be found in Figure 1.

Figure 6.

Classification scheme for speech processing strategies in cochlear implants.

Electrodes

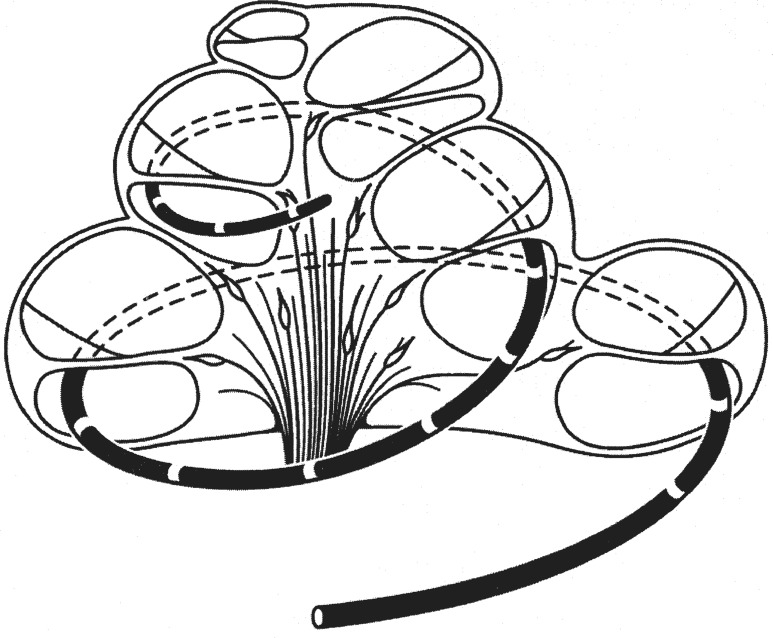

Early electrodes included copper and gold wires, but modern electrodes are all made of platinum or platinum-iridium alloy. These electrodes, however, differ significantly both in their geometric parameters and in how they are stimulated. Figure 7 shows a schematic drawing of an electrode array inserted into the scala tympani (bottom compartment) of the cochlea. In this figure, the electrode array has made two full turns and stayed in the scala tympani inside the cochlea. In reality, the electrode array may not be inserted so deeply; it may have kinks and reversals, or may even penetrate the basilar membrane and end up in the scala vestibuli, the top compartment (Skinner et al., 2002).

Figure 7.

A representative intracochlear array for cochlear implants. The white rings on the black carrier represent electrode contacts, which in turn stimulate the nearby auditory neurons in the modiolus. The electrode array is inserted in the scala tympani and folded into two complete turns.

The electrodes are similar to the rings presented in Figure 7 in the Nucleus device, but are single balls in the Clarion device and double balls (like a dumbbell) in the MED-EL device. The distance between electrode contacts may be fixed at 0.75 mm in the Nucleus device or varied from far to near towards the apical end in the recent Clarion device. The electrode array may be shortened significantly for shallow insertion in combined acoustic and electric stimulation, or split into double arrays to be inserted separately into the first and second turns in case of ossification.

Depending on the location of the return or ground electrode, electrode configurations can vary in the mode of monopolar, bipolar, and tripolar stimulation:

In a monopolar mode, the return electrode is located outside of the cochlea, usually in the temporalis muscle behind the ear, but it can also be attached to the case housing the internal electronics.

In a bipolar mode, the return electrode is an intracochlear electrode adjacent to the stimulating electrode.

In a tripolar mode, the return electrodes are two adjacent electrodes with each receiving half of the current delivered to the stimulating electrode.
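
The three configurations can be summarized by where the return current flows. The sketch below is illustrative only: the function name `return_currents`, the label "EC" for the extracochlear return, the choice of the next-higher electrode index as the bipolar partner, and the refusal to form a tripolar group at the ends of the array are all assumptions.

```python
def return_currents(mode, active, current, n_electrodes):
    """Return {site: signed current}; "EC" denotes the extracochlear return.

    Positive current is delivered by the active electrode and negative
    current is collected by the return electrode(s).
    """
    if not 0 <= active < n_electrodes:
        raise ValueError("active electrode out of range")
    if mode == "monopolar":
        returns = {"EC": -current}
    elif mode == "bipolar":
        neighbor = active + 1  # assumed choice of adjacent return electrode
        if neighbor >= n_electrodes:
            raise ValueError("no adjacent electrode available")
        returns = {neighbor: -current}
    elif mode == "tripolar":
        if active == 0 or active == n_electrodes - 1:
            raise ValueError("tripolar mode needs a neighbor on both sides")
        # Each flanking electrode takes half of the delivered current.
        returns = {active - 1: -current / 2, active + 1: -current / 2}
    else:
        raise ValueError("unknown mode")
    return {active: current, **returns}

# Example: 100 current units on electrode 5 of a 16-electrode array.
tripolar = return_currents("tripolar", 5, 100.0, 16)
```

In every mode the signed currents sum to zero, which reflects that all delivered current is collected by the return path.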

Both modeling work and physiologic data have shown decreased spatial activation from monopolar, bipolar, to tripolar stimulation (Jolly et al., 1996; Bierer and Middlebrooks, 2004). Psychophysical data have shown an increased threshold from monopolar to bipolar stimulation, but whether bipolar stimulation can produce restricted stimulation and better speech performance has been equivocal (Pfingst et al., 1995; Pfingst et al., 2001).

Telemetry

Most modern cochlear implants have already had or will soon have sophisticated telemetry functions that allow close, accurate monitoring and measurement of electrode impedance, electrical field distribution, and nerve activities. The impedance monitoring can detect both open and shorted electrodes, while electrical field distribution and nerve activities allow objective measures of electrode interaction and nerve survival. These measures are becoming increasingly important for the objective fitting of the cochlear implant, particularly in children who cannot provide reliable subjective responses (Brown, 2003).

Fitting Systems

Each implant user has to be individually fitted or mapped to ensure safe and effective electric stimulation. Both the choice of different speech processing strategies and the number of adjustable parameters have increased significantly with the modern devices, allowing an individual user to store multiple maps in the speech processor for listening to different sounds in different environments such as quiet versus noise and speech versus music.

Typically, audiologists spend the most time in measuring the appropriate current values on each individual electrode that reaches the threshold (T-level) and the maximum or most comfortable loudness level (M-level or C-level). This amplitude map needs to be adjusted, particularly in the first several months following the first turn-on of the speech processor, as both the implant system and the implant user are being adapted to electric stimulation.

The goal of amplitude mapping is to optimally convert acoustic amplitudes in speech sounds to electric currents that evoke sensations that are between just audible and the maximal comfortable. Therefore, in addition to the electric dynamic range defined by the T and C levels, two important parameters need to be measured and adjusted for optimal amplitude mapping.

The first parameter is the input acoustic range, which used to be set at 30 dB but is now usually set at 50 to 60 dB to accommodate the amplitude variations in speech sounds (Zeng et al., 2002). The second parameter is the nature and degree of the compression between acoustic and electric amplitudes. Both power and logarithmic compression functions have been used in the current devices. Although the degree of compression shows a relatively minor effect on speech intelligibility, the compression that restores normal loudness sensation tends to provide better speech intelligibility and quality (Fu and Shannon, 1998; Zeng and Galvin, 1999). Usually, the input dynamic range and the compression function are set at the default values suggested by the manufacturers.
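
The amplitude mapping described above can be sketched as a function that takes an acoustic amplitude within the input dynamic range and returns a current between the T and C levels. The specific logarithmic and power-law forms, the exponent, the amplitude normalization, and the function name `map_amplitude` are assumptions for illustration; they are not the manufacturers' actual fitting formulas.

```python
import math

def map_amplitude(a, t_level, c_level, a_max=1.0, idr_db=60.0,
                  law="log", exponent=0.2):
    """Map an acoustic amplitude to a current between T and C levels.

    a_max is the acoustic amplitude mapped to the C level; idr_db is the
    input dynamic range. Inputs outside the range are clipped.
    """
    a_min = a_max * 10.0 ** (-idr_db / 20.0)  # amplitude mapped to T level
    a = min(max(a, a_min), a_max)
    if law == "log":
        # Current grows linearly with the acoustic level in dB.
        p = math.log(a / a_min) / math.log(a_max / a_min)
    elif law == "power":
        p = ((a - a_min) / (a_max - a_min)) ** exponent
    else:
        raise ValueError("law must be 'log' or 'power'")
    return t_level + p * (c_level - t_level)

# Example: T = 100 and C = 200 current units (hypothetical values).
mid_level = map_amplitude(10.0 ** -1.5, 100.0, 200.0)  # 30 dB below a_max
```

With the logarithmic law and a 60-dB input range, an input 30 dB below the maximum lands halfway between the T and C levels. Widening the input dynamic range from 30 to 60 dB spreads the same electric range over a larger span of acoustic amplitudes.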

Unfortunately, audiologists typically spend little or no time on the frequency-to-electrode map, an important fitting parameter. As suggested by physiologic evidence, the auditory nerve is tonotopically organized depending on its location in the cochlea: the nerve innervating the apical cochlea produces pitch sensation corresponding to low frequency and that innervating the basal cochlea corresponds to high frequency (Bekesy, 1960; Liberman, 1982). However, there is simply no guarantee that pitch will be determined by the relative electrode position.

Depending on insertion depth, presence of kinks in the electrode array, and the extent and pattern of nerve survival, the same electrode may produce a different pitch sensation in different individuals, whereas different electrodes may evoke the identical pitch. Even worse, pitch reversal can occur, in which an apical electrode evokes a higher pitch sensation than the more basal electrodes. This mismatched frequency-to-electrode map is one of the major reasons for cochlear-implant users’ poor performance in tests of speech-in-noise and music perception (see sections on speech and music performance).

Finally, an important goal in fitting a cochlear implant is to deactivate electrodes that produce undesirable stimulation. The complications include stimulation of facial and other nerves and muscles, producing vibration, pain, eye twitching, and vestibular responses (Niparko et al., 1991; Stoddart and Cooper, 1999). Occasionally, these complications can be avoided by adjusting the electric stimulation parameters such as the pulse duration and the electrode mode. Guidelines are lacking, so only a few experienced audiologists have ventured into this part of fitting the systems.

Anatomic and Physiologic Issues

The unique nature of electric stimulation is likely to produce perceptual consequences in electric hearing. Highlighted here are the anatomic and physiologic differences between acoustic and electric stimulation that will pave the way to an examination of their perceptual consequences in later sections.

Cochlea and Auditory Nerves

A normal human cochlea has roughly 3,000 inner hair cells that are tuned to different frequencies from 20 to 20,000 Hz. Each of the inner hair cells has 10 to 20 innervating auditory nerve fibers that send information to the central nervous system. In a deafened ear, the number of auditory nerve fibers is likely to be significantly less. The surviving fibers are likely to have an unhealthy status such as shrinkage, loss of dendrites, and demyelination (Linthicum et al., 1991; Nadol et al., 2001).

The degree and pattern of nerve survival is related to the etiology and duration of deafness as well as surgical trauma. Chronic and patterned electric stimulation has been shown to be a potent means of promoting nerve survival in deafened animals, providing further evidence for early intervention of cochlear implants in deaf children (Leake et al., 1999).

Differences Between Acoustic and Electric Stimulation

Unlike acoustic stimulation, electric stimulation activates the auditory nerve directly through membrane potential changes (depolarization). Neither passive nor active mechanical tuning is present in electric stimulation; rather, the excitation pattern is determined by the electric field distribution, the cochlear electrical impedance, and the excitability of the nerve tissues (van den Honert and Stypulkowski, 1987; Abbas and Brown, 1988; Suesserman and Spelman, 1993; Frijns et al., 1996).

Two additional differences are also apparent between acoustic and electric stimulation, producing significant consequences in nerve responses. First, the loss of cochlear compression (caused by the outer hair cell function) produces much steeper rate-intensity functions in electric stimulation than in acoustic stimulation (Javel and Shepherd, 2000).

Second, the lack of stochastic synaptic transmission produces highly synchronized firing in electrically stimulated nerves (Dynes and Delgutte, 1992; Litvak et al., 2001). The steep rate-intensity function is likely to contribute to the narrow dynamic range while the highly synchronized response is likely to contribute to the fine temporal modulation detection in cochlear-implant users (see section on psychophysical performance).

Central Responses to Electric Stimulation

The central auditory system shows a great degree of plasticity in response to deprivation of sensory input and its re-introduction via electric stimulation (Shepherd and Hardie, 2001; Kral et al., 2002). For example, cells in the cochlear nuclei shrink in response to a lack of sensory input and then come back to normal size as a result of electric stimulation. The inferior colliculus has significantly different temporal and spatial properties in response to a different pattern and duration of electric stimulation (Snyder et al., 1990; Vollmer et al., 1999; Moore et al., 2002). The auditory cortex also shows totally different responses to electric stimulation in a cat model of acquired and congenital hearing loss, with the latter having significantly less cortical activity (Klinke et al., 1999).

This finding in animal models parallels human brain imaging data, which also show significantly less cortical activity in prelingually deafened persons than in postlingually deafened persons (Lee et al., 2001). What is more interesting is that brain imaging suggests a strong cross-modality plasticity: cochlear-implant users recruit visual cortex to help perform auditory tasks, particularly among the better implant users (Giraud et al., 2001).

Psychophysical Performance

Because the cochlear implant bypasses the first stage of processing in the cochlea and stimulates the auditory nerve directly, it is important to compare the psychophysical performance between acoustic and electric hearing. The differences in intensive, spectral, and temporal processing are highlighted to provide not only critical information regarding the stimulus coding in electric hearing but also, indirectly, the role of cochlear processing in auditory perception.

Intensity, Loudness and Dynamic Range

A person with normal hearing boasts a 120-dB dynamic range and as many as 200 discriminable steps within this range. In contrast, a cochlear-implant user typically has a 10- to 20-dB dynamic range and 20 discriminable steps (Nelson et al., 1996; Zeng et al., 1998; Zeng and Shannon, 1999). In acoustic hearing, loudness grows as a power function of intensity, whereas loudness tends to grow as an exponential function of electric currents in electric hearing (Zeng and Shannon, 1992, 1994). These differences are likely due to the loss of cochlear processing and have to be accounted for in both the design and fitting of the speech processor in cochlear implants (see section on the engineering issues).
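A short derivation may help show why these two loudness functions matter for the speech processor. The symbols below ($k_a$, $\theta$, $k_e$, $c$) are introduced here for illustration only, and the functions are idealized forms of the power and exponential relations just described, not fitted values from the cited studies.

```latex
% Idealized loudness growth (illustrative constants k_a, \theta, k_e, c):
L_{\text{acoustic}} = k_a \, I^{\theta}, \qquad
L_{\text{electric}} = k_e \, e^{cE}

% Requiring equal loudness, L_acoustic = L_electric, and solving for the current E:
k_a \, I^{\theta} = k_e \, e^{cE}
\;\Longrightarrow\;
E = \frac{\theta}{c}\,\ln I + \frac{1}{c}\,\ln\frac{k_a}{k_e}
```

Under these assumptions, the current that restores normal loudness is a linear function of log intensity, so a mapping of this kind is a logarithmic compression of the acoustic input.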

Frequency, Pitch, and Tonotopic Organization

Frequency is likely to be encoded by both time and place mechanisms in normal-hearing listeners. The time code reflects the auditory nerve's ability to phase lock to an acoustic stimulus for frequencies up to 5,000 Hz, whereas the place code reflects the cochlear filter's property to divide an acoustic stimulus into separate bands. The center frequency of these bands corresponds to different places along the cochlea and is preserved along the entire auditory pathway, including the auditory cortex. The frequency-to-structure map is called tonotopic organization.

In cochlear implants, the time code is mimicked by varying the stimulation rate, whereas the place code is approximated by the electrode position. Psychophysical data have shown that this time code is limited to 300 to 500 Hz and that differences in electrode insertion depth, kinks, and nerve survival patterns compromise the place code (Nelson et al., 1995; Collins et al., 1997; Zeng, 2002).

Temporal Processing

Traditional measures in temporal processing include temporal integration, gap detection, and temporal modulation transfer function. In general, cochlear-implant users perform nearly normally or even slightly better than their normal-hearing counterparts for these tasks. This normalcy, however, is usually achieved after the abnormal intensity processing in electric hearing has been accounted for. For example, a normal-hearing person's temporal integration function has a slope of roughly −3 dB per doubling duration for durations up to 100 to 200 milliseconds (msec). The implant data show a similar time course of integration, but a much shallower temporal integration function, with a slope as flat as −0.06 dB per doubling duration (Donaldson et al., 1997).

This difference in slope between acoustic and electric stimulation is most likely due to the loss of cochlear compression in cochlear implants. Of interest is that the loss of compression has a beneficial consequence in temporal processing, allowing the implant users to detect much smaller amplitude modulations (sometimes 1% or less) than the typical 5% to 10% modulation achieved by the normal-hearing listeners (Shannon, 1992).

Figure 8 shows gap detection data as a final example to illustrate the interaction between temporal and pitch resolution. The data were collected from five Ineraid implant subjects using the most apical electrode in a monopolar mode. The stimuli included two sinusoidal markers with each having a 200-msec duration and 0-msec ramps. The first marker frequency was always 100 Hz, whereas the second marker frequency was systematically varied from 100 to 3,000 Hz (x-axis). The standard had a 0-msec gap between the markers, while the signal always included a gap. The initial value for the gap was large for easy detection, but was adaptively adjusted to converge to a value that corresponded to 79.4% correct level of performance in a two-interval, forced-choice procedure. All stimuli were presented at the most comfortable level. Figure 8 demonstrates a dual-mechanism pattern, in which gap detection was at a minimum of about 4 msec when the second marker frequency was equal to the 100-Hz first marker frequency and then monotonically increased to a plateau value of about 30 msec when the second marker frequency reached 300 Hz and above. The plateau between 300 and 3,000 Hz suggests that listeners with implants cannot discern pitch differences at frequencies above 300 Hz.
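The 79.4% convergence point is the value tracked by a three-down, one-up transformed staircase, which is assumed here since the rule itself is not named above. The sketch below shows where that number comes from and what one staircase update looks like. The function names and the step factor of 1.26 are hypothetical.

```python
def tracked_percent_correct(n_down):
    """Probability correct at which an n-down, 1-up staircase is in equilibrium.

    The track moves down only after n consecutive correct responses, so the
    equilibrium satisfies p ** n = 0.5.
    """
    return 0.5 ** (1.0 / n_down)

def next_gap(gap_ms, correct_run, was_correct, step=1.26, n_down=3):
    """One staircase update; returns (new gap in msec, new run of correct responses)."""
    if not was_correct:
        return gap_ms * step, 0          # one miss: make the gap larger (easier)
    correct_run += 1
    if correct_run == n_down:
        return gap_ms / step, 0          # n correct in a row: make it smaller (harder)
    return gap_ms, correct_run

target = tracked_percent_correct(3)      # about 0.794, i.e., 79.4% correct
```

A two-down, one-up rule would instead track about 70.7% correct, so the choice of rule fixes the point on the psychometric function that the reported threshold represents.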

Figure 8.

Gap detection in electric hearing. The y-axis is the mean gap detection threshold in milliseconds and the x-axis is the second marker frequency (the first marker frequency is always at 100 Hz).

Complex Processing

In terms of temporal masking, both normal-hearing and cochlear-implant listeners show a 10- to 20-msec backward masking effect and a 100- to 200-msec forward masking effect (Shannon, 1990). Simultaneous masking in terms of detection of tone in noise and overshoot has not been reported in the implant literature.

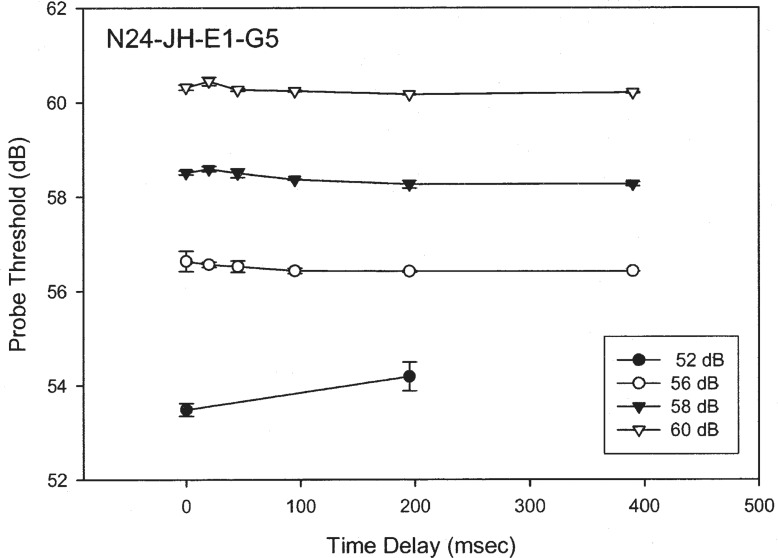

Figure 9 shows preliminary overshoot data from a Nucleus-24 implant subject (unpublished data by Han, Chen, and Zeng at the University of California, Irvine). The masker was a 400-msec, 1,000-Hz biphasic pulse train (100 μsec/phase). The probe was a 10-msec, 1,000-Hz biphasic pulse train (100 μsec/phase) that was presented at various temporal positions from a 0- to 390-msec delay from the onset of the masker. The masker was presented at four levels from threshold to maximal comfortable loudness.

Figure 9.

Overshoot in electric hearing. The y-axis is the detection threshold (dB re: 1 μA) for a brief signal and the x-axis is the delay in milliseconds (msec) from the onset of the masker (see text for details).

There was no sign of any significant overshoot effect, defined by the probe threshold difference between the onset (e.g., 0 msec) and the steady-state (e.g., 200 msec) positions for the probe. Spatially separating the masker and the probe onto two different electrodes did not produce any significant overshoot effect either. The lack of the overshoot effect in cochlear implants may reduce the temporal edge enhancement observed in normal-hearing listeners (Formby et al., 2000), and an attempt to restore this enhancement has been shown to improve speech performance (Vandali, 2001).

Spatial masking in electric stimulation reflects mostly the overlap in electrical fields between electrodes. Figure 10 shows schematically how electrode interactions can reduce the number of independent neural or functional channels. The top panel shows two totally independent electrodes and the bottom panel shows two totally dependent electrodes. The cause for such electrode interactions may be sparse nerve survival distribution, or long distance between the electrodes and the neurons, or both.

Figure 10.

Schematic representation of how two interacting electrodes can reduce the number of independent neural or functional channels, which are represented by an array of arrows. The upper panel shows a case of two totally independent electrodes with each stimulating an independent neural channel (shaded rectangles) via relatively small degree of electric field spread (circles). The lower panel shows a case of two totally dependent electrodes with both stimulating the same neural channel via relatively large degree of electric field spread.

This schematic demonstration should also make a clear distinction between two often-confused concepts in relation to the number of electrodes and the number of channels. If two electrodes are totally dependent, then they are effectively acting as a single channel. Therefore, the number of electrodes is determined physically and is most likely greater than the number of effective channels due to electrode interactions.

Figure 11 shows the consequence of electrode interactions when cochlear implants are fitted (from #4 Quarterly Progress Report, 1999, by Zeng et al. for NIH Contract NO1-DC-92100 on Speech Processors for Auditory Prostheses). We first performed the standard Clarion clinical fitting procedure to establish the T and M levels on each individual electrode (open symbols) for an SAS user. Then we set the T level equal to the M level in the clinical fitting system, and by doing so, we fixed the current-level contour across the electrodes. We changed the overall volume control to move this current-level contour up and down while we used live voice to ask the subject to report at what level the speech was first audible (“speech-adjusted T level”) and was maximally comfortable (“speech-adjusted M level”). Figure 11 shows that the T and M levels were greatly reduced with the new speech-adjusted procedure (solid symbols).

Figure 11.

Changes in electric dynamic ranges caused by electrode interactions. Open symbols represent original T and M levels measured for individual electrodes in isolation. Solid symbols represent modified T and M levels measured using live speech as a calibration signal. The dashed line represents a suggested default setting of the T levels in the SAS processor.

These results suggest that speech-adjusted M-levels are needed to ensure that stimulation not be overly loud when all eight electrodes are simultaneously stimulated with a realistic broad-band signal similar to speech. As a matter of fact, this fitting protocol that takes electrode interactions into account can produce better speech performance and has been adopted by manufacturers in the newer versions of the implant fitting software.

Other complex psychophysical tasks that have been performed in the evaluation of cochlear implants have shown a positive correlation to speech performance. For example, modulation detection and interference in the time domain have been measured and were highly correlated to speech performance (Fu, 2002; Chatterjee, 2003). Likewise, in the spectral domain, the resolution of complex spectral patterns (i.e., rippled noise detection) has been shown to be correlated to speech performance (Henry and Turner, 2003).

Another important area of psychophysical performance is in quantifying the bilateral cochlear-implant user's binaural processing capability. Preliminary data suggested that people with bilateral implants can use the interaural level difference but use the interaural time difference less effectively, with the latter requiring matched pitch between the contralaterally and ipsilaterally stimulated electrodes (van Hoesel and Clark, 1997; Lawson et al., 1998; van Hoesel et al., 2002; Long et al., 2003).

Speech Performance

As the most important sound for human communication, speech has been systematically studied at all levels, from motor control and production to acoustical and perceptual analysis. As a matter of fact, the “brain” in the cochlear implant has been called a speech processor rather than a sound processor.

As the earlier section on speech processor design has indicated, speech perception can be performed using either primarily spectral or temporal cues. Earlier effort focused on extraction of spectral cues, but was less successful because of the significantly degraded spectral processing capability in the current cochlear-implant users (see the section on psychophysical performance). Recent effort has taken advantage of the superior temporal processing capability in electric stimulation and focused on temporal feature extraction and encoding, particularly the encoding of the temporal envelope and fine structure.

Hilbert Envelope and Fine Structure

Let us begin with a formal mathematical definition of the temporal envelope and fine structure and then proceed with examples to illustrate their meanings. The formal definition is based on the Hilbert transform, which (unlike the Fourier transform, which separates a complex signal into low- and high-frequency components) divides a signal into a slowly varying and a rapidly varying component. For a real signal sr(t), an analytic signal s(t) can be generated by the following equation:

$$ s(t) = s_r(t) + i\,s_i(t) $$

where i is the imaginary number (i.e., square root of −1) and si(t) is the Hilbert transform of sr(t).

The Hilbert envelope, denoted here as a(t), is the magnitude of the analytic signal:

$$ a(t) = |s(t)| = \sqrt{s_r^2(t) + s_i^2(t)} $$

The Hilbert fine structure is cos φ(t), where φ(t) is the phase of the analytic signal:

$$ \phi(t) = \arctan\!\left(\frac{s_i(t)}{s_r(t)}\right) $$

The instantaneous frequency of the Hilbert fine structure is:

$$ f(t) = \frac{1}{2\pi}\,\frac{d\phi(t)}{dt} $$

The original real signal sr(t) can be recovered by simply performing:

$$ s_r(t) = a(t)\cos\phi(t) $$

To provide some intuition, let us examine what the Hilbert envelope and fine structure are for a sinusoidal signal [sr(t) = Acos(2πft)]. Its Hilbert transform is orthogonal to the original signal, [si(t) = A sin(2πft)]. Performing simple trigonometry, we can show that a sinusoid's Hilbert envelope is simply the amplitude (A) and its fine structure is simply the cosine waveform [cos(2πft)] with a unit amplitude and an instantaneous frequency (f).
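The sinusoid example can also be checked numerically. The following is a minimal sketch that builds the analytic signal with a plain discrete Fourier transform using only the Python standard library. The function name `analytic_signal`, the sampling rate, and the test tone are illustrative choices, and in practice a library routine such as `scipy.signal.hilbert` would normally be used instead of this slow O(N²) version.

```python
import cmath
import math

def analytic_signal(x):
    """Analytic signal s = x + i*H{x}, via a plain O(N^2) DFT (even N assumed)."""
    n = len(x)
    spectrum = [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
                for k in range(n)]
    # Keep DC and Nyquist, double the positive frequencies, zero the negative ones.
    weights = [1.0 if k in (0, n // 2) else (2.0 if k < n // 2 else 0.0)
               for k in range(n)]
    return [sum(weights[k] * spectrum[k] * cmath.exp(2j * math.pi * k * t / n)
                for k in range(n)) / n
            for t in range(n)]

# Illustrative tone: amplitude 2, 50 Hz, sampled at 1,000 Hz for 10 full cycles.
fs, f, amp = 1000.0, 50.0, 2.0
x = [amp * math.cos(2 * math.pi * f * t / fs) for t in range(200)]

s = analytic_signal(x)
envelope = [abs(v) for v in s]                          # magnitude of s(t)
fine_structure = [math.cos(cmath.phase(v)) for v in s]  # cosine of the phase
# Phase advance per sample, converted to Hz (avoids explicit phase unwrapping).
inst_freq = [cmath.phase(s[t + 1] * s[t].conjugate()) * fs / (2 * math.pi)
             for t in range(len(s) - 1)]
```

For this tone the envelope stays at the amplitude (2), the instantaneous frequency stays at 50 Hz, and multiplying the envelope by the fine structure returns the original samples.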

Figure 12 shows an illustrative example of decomposition of amplitude and frequency modulations. The top panel is a complex signal containing both amplitude and frequency modulations. The middle panel shows the amplitude modulation, and the bottom panel shows the frequency modulation. This example shows that the Hilbert envelope varies more slowly than the Hilbert fine structure.

Figure 12.

An illustrative example of a stimulus waveform containing simple amplitude and frequency modulated tones (top trace). The Hilbert envelope is the amplitude modulation signal (middle trace), whereas the Hilbert fine structure is the frequency modulation signal (bottom trace), showing the instantaneous frequency moving from low in the beginning to high in the middle and back to low at the end.

Temporal and Spectral Processing

Unfortunately, temporal envelope and fine structure are usually loosely defined and often cause confusion in the literature. One confusion is between acoustics and perception, stemming from a functional definition of the temporal cues and their apparent perception via mixed temporal and spectral mechanisms in normal-hearing listeners (Rosen, 1992). Rosen defined three types of temporal cues in speech based on the rate of amplitude fluctuations: (1) envelope (2–50 Hz), (2) periodicity (50–500 Hz), and (3) fine structure (500–10,000 Hz). The envelope cue is likely perceived via a temporal mechanism and the fine structure cue is most likely perceived via a spectral mechanism, whereas the periodicity cue is possibly perceived via both.

Another source of confusion is related to engineering implementation in extracting and encoding temporal cues. Typically in a cochlear implant, the temporal envelope is extracted by passing a stimulus through a nonlinear device such as a half- or full-wave rectifier and then a low-pass filter. Depending on the cutoff frequency of the low-pass filter, the envelope, the periodicity, or even the fine structure cue can be present at the output of the envelope extractor. The confusion arises with the misperception that all we need to do is to increase the cutoff frequency to encode the periodicity and fine structure information. In addition to the perceptual limit of amplitude fluctuations via the purely temporal mechanisms, the higher cutoff frequencies violate a basic rule in demodulation, as distortion products from the nonlinear process will be leaked through the low-pass filter to produce a confounded waveform.
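The rectify-and-filter extractor can be sketched as follows, assuming a full-wave rectifier and a simple one-pole low-pass filter. The function name `extract_envelope`, the filter type, and the test tone are illustrative assumptions, not the design of any particular speech processor.

```python
import math

def extract_envelope(x, fs, cutoff_hz, full_wave=True):
    """Rectify the signal, then smooth it with a one-pole low-pass filter."""
    alpha = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / fs)  # smoothing coefficient
    y, out = 0.0, []
    for v in x:
        r = abs(v) if full_wave else max(v, 0.0)  # full- or half-wave rectifier
        y += alpha * (r - y)                      # one-pole low-pass update
        out.append(y)
    return out

def ripple(env, tail=160):
    """Peak-to-peak fluctuation over the last `tail` samples."""
    return max(env[-tail:]) - min(env[-tail:])

# Illustrative input: a steady 1,000-Hz tone of unit amplitude, 0.5 sec at 16 kHz.
fs = 16000.0
tone = [math.sin(2 * math.pi * 1000.0 * n / fs) for n in range(8000)]

env_low = extract_envelope(tone, fs, cutoff_hz=50.0)     # envelope-range cutoff
env_high = extract_envelope(tone, fs, cutoff_hz=2000.0)  # much higher cutoff
```

For this steady tone, the 50-Hz cutoff yields a nearly flat output close to the rectified mean of 2/π, whereas the 2,000-Hz cutoff passes a large fluctuation at twice the tone frequency. That fluctuation is a product of the rectifier rather than a property of the tone's envelope, which is the leakage problem described above.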

A third confusion stems from overlooking the ear's ability to recover the narrow-band envelope from the broad-band fine structure. A famous example is the high intelligibility of the amplitude-infinitely-clipped speech, which has removed the broad-band amplitude cue but preserved the fine-structure cue via zero crossings (Licklider and Pollack, 1948). Another example is the auditory chimera study, again showing high intelligibility of a chimerized sound with speech fine structure and a flat noise envelope (Smith et al., 2002). Recent studies have shown that the original broad-band fine structure with a flat envelope actually contained the original speech narrow-band envelopes that can be recovered by the auditory filters and contribute to the observed high intelligibility (Zeng et al., 2004a).

Speech Recognition

Speech recognition has been systematically measured as a function of the number of spectral bands in normal-hearing subjects and as a function of the number of electrodes in cochlear-implant subjects (Shannon et al., 1995; Dorman et al., 1997; Fishman et al., 1997; Friesen et al., 1999). With high-context speech materials, temporal envelope cues from as few as 3 spectral bands are sufficient to support nearly perfect speech intelligibility. Most strikingly, even with as many as 22 intracochlear electrodes, the cochlear-implant users performed no better than with 4 to 10 electrodes.

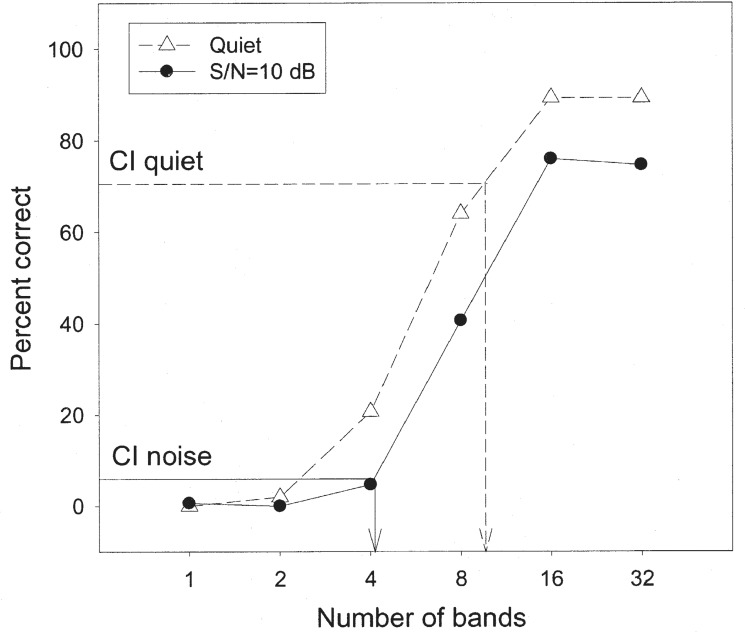

Figure 13 presents recent results from our laboratory (Nie et al., presented at the 2003 ARO meeting), showing percent scores of low-context IEEE sentences as a function of the number of spectral bands (x-axis) in normal-hearing subjects in quiet and at a 10-dB signal-to-noise ratio. Different from previous studies, the masker was a competing sentence from another talker. The cochlear implant performance from 9 subjects is included as the horizontal lines with their corresponding vertical lines indicating the equivalent number of spectral bands.

Figure 13.

Speech recognition in subjects with normal hearing and with cochlear implants. The y-axis is the percent correct score and the x-axis is the number of spectral bands in cochlear implant simulations. The open triangles represent data obtained in quiet and the filled circles represent data obtained in noise. The horizontal dashed line represents cochlear implant performance in quiet while the vertical dashed line represents the equivalent number of spectral bands. The horizontal solid line represents implant performance in noise (10 dB SNR) while the vertical solid line represents equivalent number of spectral bands (see text for details).

Several important points are noteworthy:

First, for these low-context sentences, as many as 16 bands are needed to reach a performance plateau of about 90% correct in the quiet condition. In noise, the performance was decreased by 15 to 20 percentage points, except for the 1- and 2-band conditions, where the floor effect was present.

Second, in quiet, the cochlear-implant subjects performed relatively well at 70% correct, corresponding to an equivalent level of performance with about 10 spectral bands in normal-hearing subjects. However, in noise, the cochlear-implant performance dropped to less than 10% correct, corresponding to an equivalent level of performance with 4 spectral bands in normal-hearing subjects.

Third, we note that the normal-hearing subjects could achieve nearly perfect performance (about 98% correct) with the original unprocessed sentences under the same 10-dB S/N condition. With the processed sentences, however, the performance in noise was only at about 75% correct even with 32 spectral bands, suggesting that the fine structure within each band needs to be encoded for improving the performance in noise.

Bilateral Cochlear Implants and Combined Acoustic and Electric Stimulation

To improve cochlear implant performance in noise, researchers have also recently taken advantage of bilateral cochlear implants, combined acoustic and electric stimulation, and advanced hearing aid technologies. Earlier studies on bilateral cochlear implants often confused the better-ear effect with the true binaural summation and squelch effects.

The better-ear effect is due to the physically improved signal-to-noise ratio as a result of head shadow, while the true binaural effects are physiologically based as a result of complicated neural processing (Hawley et al., 1999). Recent studies on bilateral cochlear implants have largely controlled this better-ear effect and have accordingly shown rather small and nonuniform binaural effects in terms of improvement in sound localization and speech intelligibility in noise (Muller et al., 2002; Tyler et al., 2003; van Hoesel and Tyler, 2003). Although commercial motivation for bilateral cochlear implants is strong, the current scientific evidence for binaural advantage that may be derived from the current bilateral implants is not compelling considering the doubling of the already high cost for a single cochlear implant (Summerfield et al., 2002).

Another increasingly active area of research to improve cochlear implant performance is to combine acoustic and electric stimulation by taking advantage of the best of both worlds. The combined stimulation can be achieved by using a conventional cochlear implant on one side and a hearing aid on the other side (Tyler et al., 2002; Ching et al., 2004; Kong et al., 2004b).

Alternatively, the combined stimulation uses a specially designed short-electrode cochlear implant while preserving the low-frequency acoustic hearing in the apex of the same cochlea (von Ilberg et al., 1999; Gantz and Turner, 2003).

Combined acoustic and electric hearing, while providing minimal improvement in speech recognition in quiet, is effective in improving speech recognition in noise, particularly when the noise is a competing voice. The most striking demonstration of this effect is that the low-frequency acoustic stimulation alone (40 dB HL or better below 500 Hz) provided essentially zero intelligibility, but when combined with the high-frequency electric stimulation, it significantly improved speech intelligibility over that obtained with the electric stimulation alone.

Figure 14 proposes a possible sound segregation and grouping mechanism that explains how the additional low-frequency acoustic cue might improve cochlear implant speech recognition in noise (Kong et al., 2004b). Panel A shows speech temporal envelopes extracted from signal (S) and noise (N), respectively. Panel B shows the combined acoustic stream when both are present. In the absence of any low-frequency cues, a subject has to rely on the weak pitch cue in the temporal envelope to differentiate between the signal and the noise. This produces two closely spaced perceptual streams, making it difficult for the subject to identify which is signal and which is noise (Panel C). On the other hand, the presence of the salient low-frequency acoustic cue is likely to help the subject segregate and then group appropriately the temporal envelopes between the signal and the noise, producing two distinct perceptual streams (Panel D). Research on the combined acoustic and electric stimulation is in its initial stage, but has promise to overcome the greatest weakness in the current cochlear implants, i.e., speech recognition in noise.

Figure 14.

A sound segregation and grouping model for combined acoustic and electric stimulation. Panel A shows speech temporal envelopes from signal (S) and noise (N). Panel B shows the combined envelopes of the signal and the noise. Panel C shows the two closely spaced perceptual streams between the signal and the noise in the presence of the temporal envelope cue alone. Panel D shows the two distinctively separated perceptual streams between the signal and the noise in the presence of the additional low-frequency fine structure cue (the thick solid line).

A third area of emerging research is to apply advanced hearing aid technologies, such as directional microphones, noise reduction algorithms, and automatic switches, to serve as preprocessors to cochlear implant speech processors. Chung et al. (2004) measured cochlear-implant users’ ability to listen to speech in noise using materials pre-processed by hearing aid omnidirectional microphones, or directional microphones, or directional microphones plus noise reduction algorithms. They found that directional microphones significantly improved speech understanding in cochlear-implant users, whereas the addition of noise reduction algorithms significantly improved listening comfort. Further research is needed to better integrate hearing aid and cochlear implant technologies to enhance speech understanding and improve listening comfort in cochlear-implant users.

Speaker and Tone Recognition

This section provides two additional sets of data showing the limitation of the current speech processing strategies and additionally, the importance of encoding the temporal fine structure in cochlear implants. The first experiment measured vowel and speaker recognition using the same stimuli in a group of eight cochlear-implant users (Vongphoe and Zeng, 2004). A clear dichotomy was observed in which the implant subjects were able to recognize most vowels (about 70% correct), but not the speakers who produced these vowels (about 20% correct).

The second experiment compared the performance of Mandarin tone recognition as a function of the number of spectral bands in normal-hearing subjects (Kong et al., presented at the 2003 ARO meeting) and as a function of the number of electrodes in 4 native Mandarin-speaking Nucleus users (Wei et al., 1999; Wei et al., 2004). Figure 15 shows that the normal-hearing subjects were able to use the envelope cue effectively, even with 1 band, producing 70% to 80% correct performance (Fu and Zeng, 2000; Xu et al., 2002), whereas the cochlear-implant subjects performed at chance with 1 electrode.

Figure 15.

Mandarin tone recognition in normal hearing and cochlear implant subjects. The y-axis is the percent correct score for Mandarin tone recognition and the x-axis is either the number of electrodes available to cochlear implant subjects or the number of spectral bands available to normal-hearing subjects. Different open symbols represent individual implant data, and the thick line represents the average implant data. The solid triangles represent simulation data from normal listeners. The chance performance is 25% (the dotted line).

The implant performance peaked at about 70% correct with 10 electrodes and did not improve with additional electrodes. We recently found that the implant performance with tones dropped nearly to the chance level with noise presented at a 5-dB signal-to-noise ratio (Wei, Cao, and Zeng, unpublished data).

Music Performance

As stated before, the cochlear implant has been designed to emphasize speech transmission with little or no attention being paid to other environmental sounds, including music. The consequence of this emphasis is that cochlear-implant users often complain about the poor quality of music perception. Compared with the vast literature in speech perception, research in music perception is relatively rare and mostly descriptive.

Tempo and Rhythm

Cochlear-implant users generally have little or no problem in performing tempo and rhythmic tasks (Gfeller and Lansing, 1991; Kong et al., 2004a). As a matter of fact, they strongly rely on the rhythmic cue to help them perform pitch-related music tasks such as the identification of familiar melodies (Gfeller et al., 1997; Kong et al., 2004a). However, when the rhythmic pattern becomes more complicated, the cochlear-implant users tend to score slightly worse than the normal-hearing subjects (Collins et al., 1994; Gfeller et al., 1997; Kong et al., 2004a), possibly reflecting the reduced capacity in working memory in the cochlear-implant subjects (see section on cognitive performance below).

Pitch, Interval, and Melody

Once the tempo and rhythmic cues are eliminated, the cochlear-implant subjects have tremendous difficulty performing pitch-related tasks. This difficulty occurs for both temporal and spectral pitches, particularly for the latter. Typically, the cochlear-implant subjects can perceive changes in pitch via changes in stimulation rate on a single electrode pair for frequencies up to 300 to 500 Hz (Simmons et al., 1965; Eddington, 1978; Shannon, 1983; Zeng, 2002).

Research has shown that the implant users can use this low-frequency temporal cue to reliably identify pitch interval and melody (Pijl and Schwarz, 1995; McDermott and McKay, 1997; Pijl, 1997). Unfortunately, except for those with the SAS strategy and the obsolete Nucleus WSP processor, current cochlear implants do not encode this low-frequency rate change. The current implant subjects have to extract the pitch information from either the temporal envelope or the spectral pitch associated with electrode position.

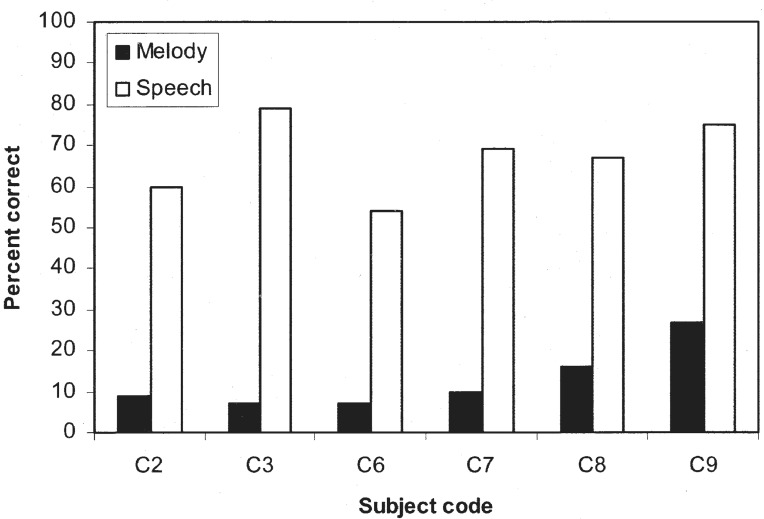

Figure 16 highlights the inadequacy of current pitch encoding schemes from the temporal envelope and electrode position cues by contrasting the difference in performance between speech and music perception (Kong et al., 2004a). The six cochlear-implant subjects were considered average-to-star performers with vowel performance from 54% to 80% correct and IEEE sentence performance in quiet from 16% to 92% correct. In contrast, their melody recognition score (in the absence of rhythmic cues) was essentially at the chance level (8%), except for the Clarion SAS user (subject C9).

Figure 16.

Melody (filled bars) and speech (open bars) recognition in cochlear implant subjects. The speech test was the identification of 12 vowels in a /hVd/ context. C2, C3, and C7 were Nucleus-22 SPEAK users, C6 and C8 were Clarion CIS users, and C9 was a Clarion SAS user.

To improve the current cochlear implant performance in pitch perception, pitch encoding in both temporal and spectral domains needs to be improved. In the temporal domain, the low-frequency pitch may be encoded by dynamically adjusting the stimulating carrier rate, while the boundary of the temporal pitch may be pushed to a higher level by introducing the high-rate or noise conditioner (Zeng et al., 2000; Rubinstein and Hong, 2003).

In the spectral domain, the fitting system has to include better frequency-to-electrode mapping and possibly virtual channels to increase the spectral resolution. The virtual channels can be achieved by stimulating two or more electrodes simultaneously or in fast sequence via pulsatile stimulation. The pitch of the virtual channel is often between that of the two adjacent electrodes when stimulated separately.
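As a rough illustration of the simultaneous-stimulation case, the sketch below divides a fixed total current between two adjacent electrodes. The steering fraction `alpha`, the function names, the current values, and the linear interpolation of place are all illustrative assumptions rather than a description of any particular device or fitting system.

```python
def split_current(total_current_ua, alpha):
    """Divide a total current between two adjacent electrodes.

    alpha = 0 puts all current on the first electrode and alpha = 1 puts
    all of it on the second; intermediate values correspond to the
    hypothetical virtual channels between the two physical contacts.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie between 0 and 1")
    return (1.0 - alpha) * total_current_ua, alpha * total_current_ua


def virtual_channel_place(electrode_a, electrode_b, alpha):
    """Assumed (linear) place of the virtual channel, in electrode units."""
    return (1.0 - alpha) * electrode_a + alpha * electrode_b


# Seven intermediate steps between electrodes 3 and 4 (illustrative values).
steps = 8
for k in range(1, steps):
    alpha = k / steps
    i_a, i_b = split_current(200.0, alpha)
    place = virtual_channel_place(3, 4, alpha)
    print(f"alpha={alpha:.3f}  I3={i_a:6.1f} uA  I4={i_b:6.1f} uA  place={place:.3f}")
```

Whether the perceived pitch actually varies linearly with the current split is an empirical question; the text above states only that the pitch often falls between those of the two electrodes.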

Timbre and Instruments

Cochlear-implant subjects also have a great deal of difficulty in identifying the timbres associated with different musical instruments (Galvin and Zeng, 1998; Gfeller et al., 1998). Figure 17 shows musical instrument identification for both normal hearing and cochlear implant subjects (Galvin and Zeng, 1998). Normal-hearing subjects performed the same task with the original unprocessed stimuli and additionally under conditions simulating 1-, 2-, 4-, and 8-band cochlear implants.

Figure 17.

Musical instrument identification in 3 normal-hearing (filled and open bars) and 5 cochlear implant (the hatched bar) subjects. The filled and the hatched bars represent data from the original, unprocessed stimuli, consisting of the following nine musical instruments: cello, clarinet, oboe, French horn, English horn, saxophone, trumpet, and viola. The subjects were trained on one pitch (a4) with trial-by-trial feedback and tested without feedback on another (c4). The open bars represent data from processed stimuli with their temporal envelopes extracted from 1, 2, 4, and 8 spectral bands.

It was not surprising that the subjects with normal hearing performed significantly better than the subjects with cochlear implants. What was surprising was that performance with the processed stimuli did not improve, and if anything decreased slightly, as the number of bands was increased from 1 to 8. This pattern of results was very different from the speech result, providing yet another piece of evidence for the perceptual dichotomy between speech and music sounds (Smith et al., 2002).

Cognitive Performance

In addition to peripheral factors such as electrode interaction, nerve survival, and basic psychophysical performance, cognitive factors such as learning, memory, and information processing are likely to contribute to the large individual differences observed in current cochlear-implant users. As will be discussed below, both “bottom-up” and “top-down” approaches are needed to fully account for the overall cochlear implant performance.

Individual Variability

The average cochlear implant performance measured by open-set speech recognition has improved steadily over the last two decades from essentially 0% correct with the early single-electrode devices to about 80% correct with the modern multi-electrode devices. Yet, for all cochlear implants, performance varies widely across users, from those deriving minimal benefit to “star” users whose performance in quiet approaches a normal-hearing listener's level of performance. How to account for this large individual variability remains one of the most challenging questions in current cochlear implant research.

Traditionally, researchers have employed a bottom-up approach trying to identify psychophysical factors that may account for this large individual variability. This attempt has achieved a moderate level of success in terms of correlating psychophysical measures to speech performance. For example, temporal processing measures in detecting amplitude modulation and discriminating different temporal patterns were shown to correlate with speech performance in implant users (Cazals et al., 1994; Collins et al., 1994; Fu, 2002). Similarly in Mandarin-speaking cochlear-implant users, rate discrimination and tone recognition were significantly correlated (Wei et al., 1999).

In the spectral domain, electrode discriminability and spectral resolvability were also found to be correlated with speech performance (Nelson et al., 1995; Henry et al., 2000; Henry and Turner, 2003). However, these correlation studies typically did not address the underlying processing mechanisms.

Bottom-Up Versus Top-Down Approaches

To overcome this shortcoming in traditional correlation studies, Svirsky and colleagues have used an innovative approach to predict the individual speech performance from psychophysical measures at a mechanism level (Svirsky, 2000; Teoh et al., 2003). Based on signal detection theory models, Svirsky et al. measured temporal and spectral discriminability in cochlear implants and constructed a perceptual space to predict multidimensional phoneme identification (MPI). The MPI model clearly takes a bottom-up approach. Because the psychophysical measures were related to speech features in voicing, manner, and place of articulation, Svirsky et al. could predict not only the overall speech performance but also the error patterns the individual users might make.
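The general idea of predicting identification from discriminability can be sketched with a toy Monte Carlo simulation. This is not the MPI model itself: the single perceptual dimension, the vowel locations, the use of the just-noticeable difference (JND) as the standard deviation of Gaussian noise, and the nearest-prototype decision rule are all simplifying assumptions made here for illustration.

```python
import random


def simulate_identification(prototypes, jnd, trials=2000, seed=0):
    """Monte Carlo percent correct for a one-dimensional perceptual space.

    prototypes: dict mapping a phoneme label to its assumed location.
    jnd: just-noticeable difference, used here as the standard deviation
         of Gaussian perceptual noise (a simplifying assumption).
    """
    rng = random.Random(seed)
    labels = list(prototypes)
    correct = 0
    for _ in range(trials):
        target = rng.choice(labels)
        percept = rng.gauss(prototypes[target], jnd)
        # Decision rule: respond with the nearest prototype.
        response = min(labels, key=lambda lab: abs(prototypes[lab] - percept))
        if response == target:
            correct += 1
    return 100.0 * correct / trials


# Hypothetical vowel locations along one dimension (arbitrary units).
vowels = {"i": 0.0, "e": 1.0, "a": 2.0, "o": 3.0, "u": 4.0}
for jnd in (0.2, 0.5, 1.0, 2.0):
    print(f"JND={jnd:.1f} -> {simulate_identification(vowels, jnd):.1f}% correct")
```

Tallying each (target, response) pair instead of only the correct responses would yield a predicted confusion matrix, which is the kind of error-pattern prediction described above.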

Alternatively, a top-down approach could be taken to predict speech performance on the basis of frequency of occurrence and acoustic similarity for individual words (Luce and Pisoni, 1998). This neighborhood activation model (NAM) has been used to predict speech performance in both postlingually deafened (Meyer et al., 2003) and pediatric cochlear-implant users (Kirk et al., 1995).
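A simplified version of the frequency-weighted decision rule behind this kind of lexical model can be sketched as follows. The function name and all numerical values are hypothetical, and the use of raw frequency as the weight is an assumption; published versions of the model may transform the frequency counts.

```python
def identification_probability(target, neighbors):
    """Frequency-weighted neighborhood probability rule (simplified).

    target and each neighbor are (stimulus_probability, word_frequency)
    pairs. The stimulus probability stands for acoustic-phonetic
    similarity to the input; the frequency acts as a bias weight.
    """
    target_term = target[0] * target[1]
    competitor_sum = sum(p * f for p, f in neighbors)
    return target_term / (target_term + competitor_sum)


# Hypothetical values: an "easy" word (high frequency, few weak neighbors)
# versus a "hard" word (low frequency, many strong neighbors).
easy = identification_probability((0.8, 100.0), [(0.1, 5.0), (0.1, 10.0)])
hard = identification_probability((0.8, 5.0), [(0.3, 80.0), (0.3, 60.0), (0.2, 40.0)])
print(f"easy word: {easy:.2f}, hard word: {hard:.2f}")
```

In this sketch a word is predicted to be harder to recognize when it is infrequent and has many frequent, similar-sounding neighbors, even if its own acoustic match to the input is good.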

As with the traditional correlation studies, both the bottom-up (MPI) and top-down (NAM) predictions were highly correlated with speech performance. The additional ability to predict performance quantitatively, however, revealed an interesting bias in the results.

Figure 18 summarizes the work of Svirsky et al. and shows schematically the different biases in the predicted performance between the top-down NAM (labeled as lexical model) and the bottom-up MPI (labeled as psychophysical model). The interesting result is that while both measures are highly correlated with speech performance, the psychophysical measure overpredicted speech performance, whereas the lexical measure underpredicted speech performance. In other words, we have probably overestimated the role of basic psychophysical measures but underestimated the brain's power in speech performance. These interesting results demonstrate that we need to take both top-down and bottom-up approaches to fully account for speech performance in cochlear-implant users.

Figure 18.

Prediction of speech performance in cochlear implants. The y-axis is the actual performance, and the x-axis is the predicted performance. The dashed line represents prediction from a bottom-up, psychophysically based model. The dotted line represents prediction from a top-down, cognitive-based model. The solid diagonal line represents perfect prediction without any bias. See text for details.
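The distinction between correlation and bias can be made concrete with a small numerical sketch. The scores below are invented for illustration only and are not taken from the studies cited; they simply show that two sets of predictions can both correlate perfectly with actual performance while erring in opposite directions.

```python
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length lists."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def mean_signed_error(predicted, actual):
    """Average of (predicted - actual); positive means overprediction."""
    return mean(p - a for p, a in zip(predicted, actual))


# Hypothetical percent-correct scores for five listeners.
actual = [20.0, 35.0, 50.0, 65.0, 80.0]
psychophysical = [a + 12.0 for a in actual]  # assumed overprediction
lexical = [a - 12.0 for a in actual]         # assumed underprediction

for name, predicted in (("psychophysical", psychophysical), ("lexical", lexical)):
    r = pearson_r(actual, predicted)
    bias = mean_signed_error(predicted, actual)
    print(f"{name}: r={r:.2f}, bias={bias:+.1f} points")
```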

Short-Term Working Memory

To process spoken language, a listener has to effectively use short-term working memory as an interface between the auditory input and the stored linguistic information in long-term memory. Recently, short-term memory has been used to account for the large individual variability in speech perception by children with cochlear implants (Pisoni, 2000; Geers, 2003; Pisoni and Cleary, 2003). One such measure is forward and backward digit span tests, in which a subject repeats a list of digits either in the same order as presented or in a reversed order. The outcome is simply the length of the digits that can be correctly recalled.
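The scoring of such a test can be sketched in a few lines. The function name and the trial data are hypothetical, and the sketch ignores the stopping rules and repeated trials per list length that clinical protocols typically include.

```python
def digit_span(trials, backward=False):
    """Return the longest list length recalled correctly.

    trials: iterable of (presented, response) pairs, each a sequence of digits.
    backward: if True, a response is correct when it reverses the presented list.
    """
    best = 0
    for presented, response in trials:
        expected = list(reversed(presented)) if backward else list(presented)
        if list(response) == expected:
            best = max(best, len(expected))
    return best


# Hypothetical session: lists grow by one digit per trial.
forward_trials = [
    ([3, 8], [3, 8]),
    ([5, 1, 9], [5, 1, 9]),
    ([7, 2, 6, 4], [7, 2, 6, 4]),
    ([9, 4, 1, 8, 3], [9, 4, 8, 1, 3]),  # order error
]
backward_trials = [
    ([3, 8], [8, 3]),
    ([5, 1, 9], [9, 1, 5]),
    ([7, 2, 6, 4], [4, 6, 2, 6]),  # substitution error
]
print(digit_span(forward_trials), digit_span(backward_trials, backward=True))
```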

On average, children with cochlear implants had a digit span of 5.5 in the forward digit span test and 3.5 in the backward digit span test. The age-matched children with normal hearing had significantly longer digit spans of about 8.0 and 4.5, respectively. Most important, these studies found that the length of digit span, particularly the forward digit span, was significantly correlated with both communication mode and word recognition.

In addition to the short-term working memory measure, other cognitive measures such as attention, categorization, learning, and memory have been suggested for assessing the central cognitive processing efficiency of the initial sensory input provided by a cochlear implant (Pisoni and Cleary, 2004). This line of research will likely provide new information and possibly new diagnostic tools that were not possible with the traditional psychophysical and electrophysiologic measures. One example would be visual attention and memory tests for temporal sequences that could be used presurgically to predict postsurgical performance, a challenging task that has been attempted many times but remains unresolved.

Cognitive Rehabilitation

At present, cochlear-implant users do not have access to structured and guided rehabilitation strategies. Instead, they are simply left alone to figure out how to deal with the distorted sensory input provided by the cochlear implant. Clearly, a cochlear-implant user does not hear what a normal-hearing person would hear. The current electrodes are too few and far between while most likely providing upward spectrally shifted auditory input. Some users are able to adapt to the new mode of stimulation more rapidly and efficiently than are others.

Unlike the somewhat controversial acclimatization effect in hearing aids (Arlinger et al., 1996), there is strong evidence for the existence of such an effect in cochlear implants. As a matter of fact, recent research has started asking pointed questions regarding how much can be learned and what is learned via cochlear implants.

An early study showed that normal-hearing subjects listening to cochlear implant simulations were the most susceptible to spectral warping and shift (Shannon et al., 1998). However, normal-hearing listeners could significantly improve their performance with the spectrally distorted speech stimuli with merely nine 20-minute sessions of training, suggesting that the brain is capable of adapting to the distorted input (Rosen et al., 1999).

Another study was conducted in actual cochlear-implant users, who showed continuous improvement in speech performance with frequency-shifted speech over a 3-month period (Fu et al., 2002). More interestingly, Fu et al. found that the cochlear-implant subjects could immediately return to the same level of performance with the original frequency-to-electrode map, even though they had not listened to the original map for 3 months, suggesting that these subjects had learned two sets of maps in their brain.

A third study found that improved longitudinal performance in cochlear-implant users was mainly due to improved labeling with the distorted stimuli rather than improved psychophysical performance, such as electrode discrimination, suggesting that structured cognitive rehabilitation be introduced to help particularly the less successful cochlear-implant users adapt to and learn the distorted sensory input (Svirsky et al., 2001). How to unlock the brain's power has become an important area of research in cochlear implants.

Trends

That the cochlear implant has matured as a field is evidenced by its exponential growth in the patient population and scientific literature, as well as by the breadth, depth, and, most importantly, quality of its research. Long gone is the early argument on whether cochlear implants would work at all: “the elephant is flying.”1 More than 60,000 cochlear-implant users are functioning effectively with their devices.