Abstract

Advances in technology and expanding candidacy guidelines have motivated many clinics to consider children with precipitously sloping high-frequency hearing loss as candidates for cochlear implants (CIs). A case study is presented of a pediatric CI patient whose hearing thresholds were preserved within 10 dB of preimplant levels (125–750 Hz) after receiving a fully inserted 31.5-mm electrode array at one ear. The primary goal of this study was to explore the possible benefit of using both a hearing aid (HA) and a CI at one ear while using a HA at the opposite ear. The authors find that although the use of bilateral hearing aids with a CI may only provide a slight benefit, careful attention must be paid to the coordinated fitting of devices, especially at the ear with two devices.

Keywords: bimodal, electroacoustic, pediatric, cochlear implant

Introduction

Cochlear implants (CIs) have been available for children since the early 1980s. When CIs were initially approved by the Food and Drug Administration (FDA) for the pediatric population, only children aged 2 years and older with very profound hearing losses (HLs) and little to no benefit from traditional hearing aids (HAs) were considered candidates for implantation. Advances in CI technology have resulted in improved performance and have expanded candidacy to include younger children and those with more residual hearing. Current FDA guidelines consider children aged 1 year or older and children with severe to profound losses with open-set word recognition of 30% correct, or less, to be candidates for CIs.

The ongoing evolution of CI candidacy is reflected in the fact that many clinics are now considering children with precipitously sloping high-frequency HL as potential candidates for CIs. Adult listeners with similar high-frequency HL, who previously received only marginal benefit from HAs, receive significant benefit from speech information provided by a CI (Gifford, Dorman, McKarns, & Spahr, 2007; Hogan & Turner, 1998). The consideration of individuals with precipitous high-frequency loss has, in part, been motivated by some results that suggest that adults with significant high-frequency HL are not able to use acoustic information effectively and that benefit from amplification is severely limited by poor temporal or frequency resolution (Ching, Dillon, & Byrne, 1998; Hogan & Turner, 1998). Although these results may not necessarily hold for young children (Stelmachowicz, Pittman, Hoover, & Lewis, 2001), providing effective audibility of high-frequency speech information, such as that found in the morpheme /s/, may be absolutely critical for language development in children (Kortekaas & Stelmachowicz, 2000; Stelmachowicz, Pittman, Hoover, Lewis, & Moeller, 2004). In addition, for adult listeners with high-frequency HL, there appears to be a substantial complementary benefit from the combination of acoustic hearing and electric hearing (Gifford, Dorman, McKarns, et al., 2007). The results from Gifford et al. and others suggest that providing low-frequency information via a HA in the ear opposite the CI potentially mitigates some of the perceptual difficulties that occur with CI-only use. That is, for listeners who use only a CI, the recognition of speech in the presence of competing talkers and the recognition of musical melodies can still be difficult, even though the recognition of speech in quiet may be excellent (Ching, van Wanrooy, & Dillon, 2007; Gifford, Dorman, McKarns, et al., 2007; Spahr & Dorman, 2004).

Perceptual benefits from this combination of two devices in two ears (CI in one ear and HA in the other) may differ, however, across various outcome measures. For example, Kong, Stickney, and Zeng (2005) examined the effect of low-frequency residual hearing in the nonimplanted ear on word recognition in noise and on melody recognition. Four CI recipients, with aided thresholds at the nonimplanted ear in the mild to severe range below 1,000 Hz, were tested using their HA alone, CI alone, and the two devices combined. Kong et al. (2005) found that while the HA-alone condition resulted in no measurable word recognition in noise, the combined use of the two devices resulted in a significant improvement over the CI alone. For the perception of melodies, they found a trend for better performance with the HA alone compared with the implant alone, and the HA-alone and device-combination results were similar. Similarly, in a CI-simulation study (Chang, Bai, & Zeng, 2006), low-pass filtered speech (<300 Hz) combined beneficially with high-frequency information through a CI simulation to enhance speech recognition in noise. Specifically, when low-frequency information, unintelligible by itself, was combined with the CI simulation of high-frequency information, speech reception thresholds were better than those from the CI simulation alone. In a very recent study (Dorman, Gifford, Spahr, & McKarns, 2008), an even wider range of outcome measures was used. Speech recognition in quiet and in noise, melody recognition, and voice discrimination tests were administered to 15 CI recipients in the HA-alone, implant-alone, and the HA and implant combined conditions. All these participants had a fully implanted electrode array and good low-frequency hearing at the opposite ear (i.e., HA ear).
Their results are similar to those of others: For speech recognition in quiet and in noise, the implant-alone condition resulted in significantly better scores than the HA-alone condition, and the combined condition resulted in scores that were 17 to 23 percentage points greater than those from the implant-alone condition. For melody recognition, the HA-alone condition and the HA and implant combined condition yielded similar scores (∼71% correct recognition), and these were better than those from the implant alone (∼52% correct). Also, scores from the within- and across-gender voice discrimination tests were not significantly different across the three conditions (∼70% and ∼90% correct, respectively).

These studies highlight some of the benefits of the combined use of a CI and a HA in opposite ears, or “bimodal” hearing as it is commonly called (see Ching et al., 2007, for a comprehensive review). In addition to these benefits, a more pragmatic argument for using a HA and an implant in opposite ears is that listening in “real-world” environments may be enhanced by bilateral device use. Bilateral device use may be especially helpful for sound localization and listening to speech in the presence of noise. The possible benefits of binaural hearing have been reviewed extensively and have been examined for the separate contributions from the effects of head shadow, binaural redundancy, and binaural squelch (e.g., see Ching et al., 2007; Dillon, 2001; Litovsky, Parkinson, Arcaroli, & Sammeth, 2006; Schafer, Amlani, Seibold, & Shattuck, 2007).

For the provision of binaural hearing, or its approximation, listeners who are CI candidates are generally thought to have two options. One is using a HA in the ear opposite the CI (bimodal) and the second is using an implant at each ear (bilateral CIs). In a recent review, Ching et al. (2007) compared both bimodal device use and bilateral CIs to unilateral CI use. The study's results were categorized by the following four outcome measures: localization, speech perception, sound quality, and music perception. Results were also separated by listener group—namely, adults and children. Across studies, benefit depended on outcome measure and listener group. Overall, though, most studies report a benefit for both bimodal devices and bilateral implantation over unilateral CI use, and this result was obtained for both adults and children. However, Ching et al. found no evidence to support the efficacy of one type of bilateral device use over the other. One major observation, from examining the studies, was that there are no general procedures to optimize or standardize the fitting of the CIs or HAs, either across individuals or across clinics.

The importance of fitting both the HA and CI in a coordinated manner to achieve the optimal benefit from bimodal device use has been demonstrated (Blamey & Saunders, 2008; Ching et al., 2007; Ching, Incerti, & Hill, 2004). These studies stress that audibility and perceived loudness should be matched across the two ears and devices to maximize benefits. For example, Ching, Psarros, Hill, Dillon, and Incerti (2001) examined 16 children (aged 6–18 years) with a Nucleus 22 or 24 CI who used a HA in the nonimplanted ear. Children were given tests of speech perception and sound localization, and surveys of communicative function. The HAs for the nonimplanted ear were fit initially using National Acoustics Laboratories-Revised Profound targets, and then the frequency responses were further adjusted to balance the loudness between the devices. All children demonstrated benefit compared with the performance with the initial (unadjusted) fittings on at least one of their measures when the fitting of the HA was set to match the loudness of the CI.

Clearly, the bimodal combination of devices across ears seems to provide substantial benefits. In particular, with this combination of acoustic and electric hearing across ears, there are reported improvements in speech perception in noise, recognition of melodies, and localization of sounds compared with unilateral CI use. Although some of these improvements are likely because of the combination of any hearing across ears (such as improvements in localization), other improvements are likely primarily because of the combination of these two types of hearing or stimulation—namely, acoustic and electric. For example, melody recognition and speech perception in noise might be improved because of better fundamental frequency information in acoustic hearing, which may complement electric hearing. Yet bimodal device use is not the only way to combine acoustic and electric hearing. There is also the possibility of using a HA and a CI at the same ear, usually called electroacoustic stimulation (EAS) or, sometimes, hybrid stimulation. Most often, EAS is accomplished by the implantation of short electrode arrays and/or a shorter insertion depth of conventional electrode arrays. As reported by others, this intraaural combination of acoustic and electric hearing also yields many benefits in comparison with CI-only use, particularly for tests that rely on good complex-pitch perception (Gantz & Turner, 2003; Gantz, Turner, Gfeller, & Lowder, 2005; von Ilberg et al., 1999). In a recent review of EAS (Talbot & Hartley, 2008), the wide variety of electrode arrays, insertion depths of the arrays, and manufacturers' devices used in the many EAS studies is evident. In addition, similar to the findings in studies of bimodal devices, several EAS studies emphasize the importance of thoughtful and coordinated fittings of the HA and the CI at one ear (James et al., 2006; Vermeire, Anderson, Flynn, & Van de Heyning, 2008). 
For example, one consideration is whether to provide overlapping or nonoverlapping frequency information from the CI and HA (Gantz & Turner, 2003; James et al., 2006; Kiefer et al., 2005). In a nonoverlapping fitting, the implant frequency range would be restricted to the higher frequencies where little or no residual hearing is measured, and the HA would amplify sounds only in the low frequencies where residual hearing is present. Across studies, there is no consensus regarding the best degree of overlap, and results also varied with outcome measure. Vermeire et al. (2008) emphasize that not only must the fitting of the CI be coordinated with that of the HA, but the HA fitting protocol should depend on the degree and configuration of the acoustic thresholds. That is, the “best” amount of frequency overlap may depend on the degree of residual hearing that can be amplified.

Whereas the benefits of bimodal device use have been well documented for children, little is known about EAS device use with children because FDA-approved EAS/hybrid trials do not include pediatric patients. One problem with EAS- or hybrid-type devices is the expectation that low-frequency hearing will be preserved after implantation surgery. Although low-frequency hearing can be preserved, a substantial proportion of adults (roughly 10% to 30%) who receive EAS devices lose their low-frequency hearing after surgery (Dorman et al., 2009; Fitzgerald et al., 2008; Gstoettner et al., 2004; James et al., 2006; Kiefer et al., 2004). It is unknown whether a similar percentage of pediatric patients would also lose their low-frequency hearing after implant surgery. The only study of EAS devices with children reports that for their nine young participants (ages 4–12 years), four children had fully preserved, four had partially preserved, and one had unusable low-frequency hearing after implant surgery of a 20-mm electrode array (Skarzynski, Lorens, Piotrowska, & Anderson, 2007). And, for those with partial preservation of hearing, neither the audiometric thresholds (pre- and postsurgery) nor the amounts of threshold shift caused by surgery are reported. A second issue with the use of EAS devices is whether low-frequency acoustic hearing remains stable over the long term. This issue of long-term stability is obviously much more important for children than for adults. Yao, Turner, and Gantz (2006) found that rates of deterioration in audiometric thresholds are greater and more variable across individuals and across frequencies for children compared with those for adults. Because children can be expected to use a CI for a longer period of time, short electrode arrays may be practical for adults but not necessarily for pediatric patients.

Yet because of the evolution of CI candidacy, many clinics are now considering children with precipitously sloping high-frequency HL as potential candidates for CIs—though mostly with conventional CIs. This case study reports on a pediatric patient who, after receiving a fully implanted 31.5-mm electrode array at one ear, had hearing thresholds from 125 to 750 Hz within 10 dB of preimplant levels. That is, moderate to severe low-frequency thresholds were essentially preserved. In addition, this pediatric participant had been wearing bilateral HAs and was expected to continue wearing a HA in the ear opposite the CI. This preservation of low-frequency acoustic hearing in an implanted ear presented the unique possibility of exploring a combination of both EAS and bimodal device use in a single pediatric patient. To our knowledge, there have been no reports of such a three-device combination (CI + HA in one ear and HA in the other ear) involving a standard-length electrode array with a full insertion in a pediatric patient. In fact, there are a mere handful of reports of such three-device combinations in adults, and they involve either a short electrode or a shallower insertion.

The results from these few adult studies, using bilateral HAs in addition to a CI, vary with regard to improvements in speech perception for this three-device combination. Recently, Dorman et al. (2009) examined monosyllabic word recognition in quiet only, for 47 adults with bilateral precipitously sloping HL who received either a short 10-mm array (n = 22) or a standard electrode array (n = 25). All participants qualified for EAS use (hearing threshold levels ≤60 dB HL below 500 Hz and thresholds ≥80 dB HL above 2,000 Hz). Fifteen of the 22 participants in the short electrode group had low-frequency hearing preserved after surgery. It is assumed that all participants in the standard-length electrode group lost residual hearing at the implanted ear, though no audiometric data are reported. Across listener groups, average performance for the group with the standard-length electrode array was significantly better than performance for the short electrode group in both the CI ipsilateral condition (CI [standard electrode group] > CI + HA ipsilateral [short electrode group]) and in the condition where the contralateral HA was added (CI + HA contralateral [standard electrode group] > CI + HA ipsilateral plus HA contralateral [short electrode group]). And, within the short electrode listener group, the addition of the HA to the ipsilateral CI ear resulted in only a marginally significant increase in performance over the traditional bimodal fitting (CI + HA ipsilateral plus HA contralateral slightly > CI + HA contralateral). In an earlier study, Gantz et al. (2005) compared monosyllabic word recognition scores in quiet for eight participants with a 10-mm electrode array/CI and bilateral HAs. Word scores for the CI and bilateral HA condition were better than scores for the CI and ipsilateral HA for five of them (the CI + contralateral HA condition was not reported). Improvements in word scores ranged from approximately 2 to 40 percentage points. 
Three of them showed a slight decrease in performance ranging from approximately 10 to 15 percentage points. It is not clear from these studies whether the use of bilateral HAs with a CI provides any significant benefit or detriment, and these results are only of speech perception in quiet. Another study, by Kiefer et al. (2005), refers to “an optimal HA” configuration in conjunction with CI use. However, the exact details (ipsilateral HA, contralateral HA, or bilateral HA, for the “optimal HA”) are not provided for the participants tested. Thus, perceptual results from adults using three devices (CI + bilateral HAs) are minimal: There are very few studies, speech in quiet is the only listening test examined, and HA configurations are unspecified. It could be argued that CI recipients with good low-frequency residual hearing at both ears might be best served using only one HA at the opposite ear and allowing, if possible, “natural” (unaided) low-frequency acoustic hearing at the implanted ear. It could also be argued that redundant, somewhat symmetrical, low-frequency information, provided via HAs from both ears, might be best for CI recipients when in more demanding listening situations. And, it is unknown whether more consistent results and evidence, favoring one device combination over the other, would be obtained if outcome measures other than speech in quiet were used.

This case presented the opportunity to evaluate the use of EAS at the CI ear combined with aided acoustic information at the opposite ear. Additionally, this case presented challenges and questions in fitting and evaluating three devices on a young congenitally deafened child. For example, should the HA at the CI ear be introduced at the time of the CI fitting, or should some period of implant acclimatization be given? Would an interruption in HA use at either ear be considered undesirable or disruptive because this child has consistently worn bilateral HAs? And, as a practical matter, until hybrid processors are commercially available, an EAS fitting will likely require a different HA at the implant ear—specifically, an in-the-ear (ITE) HA would replace a behind-the-ear (BTE) HA. Finally, a wide variety of outcome measures are used to examine the combination of acoustic and electric hearing, both intraaurally and interaurally.

Method

The primary goal of this study was to explore the possible benefit, for a listener with preserved low-frequency hearing, of using a HA and a CI at one ear while using a HA at the other ear. That is, how will listening performance with these three devices compare with performance with two devices, either CI + HA at opposite ears or CI + HA at one ear?

Participant

One female pediatric patient, S1, with Turner's syndrome participated in this single-subject design. At roughly 3 years of age, she was fit bilaterally with BTE HAs. She has received audiological services since that time and has attended an oral school for the deaf for approximately 5 years. After deterioration of her hearing to severe to profound levels at high frequencies in both ears, she was implanted (at age 8 years, 8 months) with a Med-El Pulsar CI 100 device in her right ear (CI) with continual use of a Starkey Destiny 1200 BTE HA at the left ear (HALE).

Surgery was performed at a pediatric CI facility. The following is an extract from the surgical report:

A cochleostomy was done by thinning the bone just inferior to the annulus of the round window with a cutting bur and suction irrigation. Once the bone was thin and appeared gray, it was chipped away with a sharp pick and the inferior edge rasped with a small foot plate rasp. The opening into the basal turn of the cochlea was approximately 0.7 mm. Healon was placed into the basal turn. There was minimal perilymph loss. There was no bleeding into the perilymph nor bone dust in the perilymph. The electrode array was then inserted into the basal turn directing the electrode array in an anterior and slightly medial direction, along the plane of the posterior wall of the ear canal. The electrode was advanced into the cochlea without resistance. The marker on the electrode was approximately 3 mm outside of the cochlea.

The insertion depth for this electrode array was 28.5 mm. At the beginning of the study, the participant was 9 years, 1 month of age, and 5 months postimplantation surgery.

Device Conditions and Test Phases

An alternating schedule of “baseline” and “treatment” everyday device-use was established: baseline, treatment, baseline, treatment. In the baseline device condition, S1 wore her Starkey HA in her left ear (HALE) and her CI, with a baseline map, in her right ear. In the treatment device condition, S1 wore her HA in her left ear (HALE) and both her CI, with a treatment map, and a HA in her right ear (HARE). Each phase lasted 2 to 3 weeks, and at the end of each phase, a battery of speech perception tests was administered. At the end of the baseline phases, S1 was also tested using only the CI in her right ear and then using only her HALE. At the end of the treatment phases, S1 was also tested using only the combination of CI + HARE in her right ear. For these additional conditions when S1 was using devices only in her right ear, S1's left ear was plugged. The battery of tests was conducted in several short sessions of less than 1 hour to limit fatigue and boredom for this young participant. Additionally, during each phase, teachers and parents were asked to report any issues regarding auditory performance in the classroom and at home. None were reported.

Test Battery

A battery of tests was performed with S1: (a) unaided audiometric thresholds; (b) aided audiometric thresholds; (c) consonant-nucleus-consonant (CNC) word tests, both in quiet and in noise (+10 dB signal-to-noise ratio [SNR]); (d) Bamford-Kowal-Bench speech-in-noise (BKB-SIN) test to estimate an SNR for speech reception; (e) two emotion perception tasks; (f) three talker discrimination tasks; and (g) a localization task. This battery represents a large span of tests. Some tests in the battery might be sensitive to device combinations across ears, whereas other tests might be sensitive to device combinations across stimulation types (acoustic + electric) and frequency ranges. In particular, the emotion and talker perception tasks were included because of their presumed reliance on good perception of complex pitch, likely to occur via acoustic hearing, at either or both ears. The speech-perception-in-noise tests also might be sensitive to the presence of good acoustic hearing at low frequencies. Also, the localization task was included for its likely sensitivity to the presence of binaural-like cues across ears, from all devices. The test battery details are as follows:

Frequency-modulated (FM) tones. FM stimuli, at 125, 250, and 500 Hz, and 1, 2, 4, and 6 kHz were produced by an audiometer. Unaided and aided thresholds were obtained using conditioned-play audiometry.

Consonant-nucleus-consonant word test. Fifty-item CNC monosyllabic word lists were selected for measuring open-set word recognition (Peterson & Lehiste, 1962). Words were presented at 60 dB SPL, both in quiet and mixed with multi-talker babble at an SNR of +10 dB. For both the in-quiet and in-noise conditions and for each test phase, a distinct list of 50 words was used.

Bamford-Kowal-Bench speech-in-noise test. The BKB-SIN test was administered using the test manual's guidelines specific to children and CI users (Killion, Niquette, Gudmundsen, Revit, & Banerjee, 2004, 2006). For each condition, sentences (from lists 9–18, developed for CI users) were presented at 65 dB SPL, with the level of noise (four-talker babble) increasing in 3-dB steps. Initially, the SNR was +21 dB but then decreased automatically until an SNR of 0 dB was reached. After each sentence presentation, S1 verbally repeated the sentence as best she could, and keywords were scored. The SNR for a 50%-correct word score (SNR-50) was estimated for each condition using the method described by Killion et al. (2004).
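As an illustration of how a 50%-correct SNR can be estimated from a descending-SNR run like the one described above, the following is a minimal sketch of the general Spearman-Kärber formula. It is not taken from the test manual: the function name, the assumption of an equal number of keywords at every SNR step, and the example score are all hypothetical, and the manual's own scoring constant may differ.

```python
def spearman_karber_snr50(start_snr_db, step_db, words_per_step, total_correct):
    """Estimate the SNR (dB) for 50% correct from a descending-SNR run.

    General Spearman-Karber form (an assumption here, not the manual's exact rule):
        SNR-50 = start + step/2 - step * (total correct) / (words per step)
    """
    if words_per_step <= 0 or step_db <= 0:
        raise ValueError("step_db and words_per_step must be positive")
    if total_correct < 0:
        raise ValueError("total_correct cannot be negative")
    return start_snr_db + step_db / 2.0 - step_db * total_correct / words_per_step


# Hypothetical run: start at +21 dB, 3-dB steps, 3 keywords per sentence,
# 12 keywords repeated correctly over the whole list.
estimate = spearman_karber_snr50(21, 3, 3, 12)
print(f"Estimated SNR-50: {estimate:.1f} dB")
```

A lower SNR-50 means the listener tolerates more noise before word recognition drops to 50%.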

Emotion perception. Two types of emotion perception tasks were administered: emotion identification and emotion discrimination. The materials consisted of three semantically neutral sentence scripts spoken by a single female talker who was instructed to speak with four different emotions (angry, scared, happy, and sad). Three productions were recorded for each sentence script and each emotion (Peters, 2006). For the emotion identification task, a one-interval, four-alternative forced-choice paradigm was used, and 36 trials were presented (each emotion was presented an equal number of times). After each sentence, S1 chose one of the four emotions (via a touch screen) as depicted in four labeled photos of a young girl displaying these emotions. This 36-trial run was repeated at each test phase. For the emotion discrimination task, a two-interval, two-alternative forced-choice paradigm was used, and 24 trials were presented at each test phase. For this task, two sentences were presented in each trial; S1 indicated, again via a touch screen with the response choices depicted by pictures and words, whether the talker spoke the sentences with the “same feeling” or with “different feelings” (there were equal numbers of “same” and “different” trials).

Talker discrimination. Sentence stimuli from the Indiana multi-talker speech database were used to assess talker discrimination abilities (Bent, Buchwald, & Alford, 2007; Bradlow, Torretta, & Pisoni, 1996). Eight female and eight male talkers were chosen from the database recordings of 20 talkers. Three types of talker discrimination tests were conducted: (a) across-gender (male vs. female) test, (b) within-female test, and (c) within-male test. For all three types of discrimination, a two-interval, two-alternative forced-choice paradigm was used. In every trial, the sentences differed in the two intervals. S1 was instructed to respond whether the two sentences were spoken by the same person or by different people. For the across-gender talker discrimination test, the same person trials consisted of either a same female or a same male talker saying two different sentences. For the different people trials, one sentence would be spoken by a male talker and the other by a female talker. For the within-female talker discrimination test, the same person trials consisted of a single female talker saying two different sentences, whereas the different people trials consisted of two different female talkers saying two different sentences. And similarly, for the within-male talker discrimination test, the same person trials consisted of a single male talker saying two different sentences, whereas the different people trials consisted of two different male talkers saying two different sentences. For each of these three types of talker discrimination tests and at each test phase a total of 32 trials were presented, half of which were same person and half of which were different people trials. S1 responded, via a touch screen, with the response choices depicted in both pictures and words.

Speaker localization. For this task, S1 was seated in a sound room with 15 audio speakers arranged in an arc extending from −70° to +70° azimuth, with speakers positioned at 10° intervals. The distance from S1's head to each speaker in the arc was approximately 4 ft 6 in. On each trial, a single-syllable word was presented randomly from 1 of the 10 active speakers (those positioned at ±10°, ±20°, ±30°, ±50°, and ±70° azimuth), at a level of 60 dB SPL (±3 dB). The audio speakers positioned at ±40°, ±60°, and 0° azimuth were inactive. S1 was instructed to indicate the speaker from which the word was heard by pointing to it and saying the identifying number above it (1 through 15). S1 was seated with her head pointed at 0° azimuth (directed at Speaker 8), with Speakers 1 to 7 on her left and Speakers 9 to 15 on her right. She was instructed to return to this position after each trial. Her head movements were not restricted, either physically or with instructions. At each test phase, a total of 100 CNC words were presented, 10 from each active speaker location. S1 was asked only to identify the position of the speaker from which the word was heard, not to repeat or identify the word itself. The root mean square (rms) of the error, in degrees, between the identified speaker and the actual speaker used in the word presentation was calculated for each condition in each test phase.
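The rms localization error described above can be sketched as follows. This is an illustrative sketch only: it assumes that speaker numbers 1 through 15 map linearly onto −70° to +70° in 10° steps (Speaker 8 at 0°) and that errors are pooled over all trials in a condition; the function names and the example responses are hypothetical.

```python
import math


def speaker_azimuth(speaker_number):
    """Map speaker number (1..15) to azimuth in degrees; Speaker 8 is at 0 degrees."""
    if not 1 <= speaker_number <= 15:
        raise ValueError("speaker number must be between 1 and 15")
    return (speaker_number - 8) * 10


def rms_error_degrees(actual_speakers, responded_speakers):
    """Root mean square of (responded - actual) azimuth, pooled over trials."""
    if len(actual_speakers) != len(responded_speakers) or not actual_speakers:
        raise ValueError("need two non-empty lists of equal length")
    squared_errors = [
        (speaker_azimuth(r) - speaker_azimuth(a)) ** 2
        for a, r in zip(actual_speakers, responded_speakers)
    ]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


# Hypothetical four-trial example: errors of 0, 10, 20, and 0 degrees.
actual = [7, 9, 3, 13]
responded = [7, 10, 5, 13]
print(f"rms error: {rms_error_degrees(actual, responded):.1f} degrees")
```

Because the errors are squared before averaging, a few large mislocalizations raise the rms error more than many small ones.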

Procedures. All testing was performed in double- or single-walled sound rooms. For all tests, except the localization task, S1 was positioned at 0° azimuth and 1 meter from the loudspeaker that presented the acoustic stimuli in the sound field. For the three types of tests not commonly used in the clinic—namely, the emotion perception, talker discrimination, and speaker localization tests—a short training and familiarization period was provided before data collection.

Hearing Aid Fitting and Cochlear Implant Maps

Left ear (HALE). S1 continued to use her BTE HA every day throughout this study. Real-ear-to-coupler differences (RECDs) were measured using S1's custom ear mold. Unaided thresholds and RECDs were entered, and output was verified using the AudioScan Verifit system with age-appropriate Desired Sensation Level (DSL) m[i/o] v5.0 targets (Scollie et al., 2005). Gain and output were further adjusted based on reported comfort and audibility of conversational speech and aided thresholds.

Right ear (HARE). At 6 months postimplantation surgery, S1 was fit with a Phonak Extra 33 ITE HA in the right ear, that is, the ear with the Med-El CI. As described above, unaided thresholds were entered, and average age-appropriate RECDs were used to verify output gain using age-appropriate Desired Sensation Level (DSL) m[i/o] v5.0 targets (Scollie et al., 2005). Again, gain and output were further adjusted based on reported comfort and audibility of conversational speech and aided thresholds. However, for this HA, no attempt was made to reach output targets at 2,000 to 6,000 Hz, and gain was reduced in this region when feedback was present.

Right ear (CI). Two maps were programmed into S1's speech processor, a baseline map and a treatment map. The frequency band to electrode assignments for these two maps are provided in Table 1. S1's TEMPO+ processor was programmed with a CIS strategy using a stimulation rate per channel of 1,428.6 pps. In the baseline map, frequencies from 200 to 7,000 Hz are mapped to the 10 active electrodes on S1's array. This baseline map is simply the result of applying a standard Med-El logarithmic-spacing rule for mapping center frequencies to active electrodes. The treatment map assigns frequencies from 400 to 7,190 Hz to the same active electrodes. We chose a treatment map based on recommendations from Vermeire et al. (2008), in which fittings are individualized, and overlap is moderate, at most. The treatment map allows frequencies <400 Hz to be presented only acoustically, frequencies from 400 to 750 Hz to be provided both acoustically and electrically, and frequencies >800 Hz to be represented only electrically.
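To illustrate what a logarithmic-spacing rule of the kind used for the baseline map looks like, the sketch below divides 200 to 7,000 Hz into 10 bands with a constant frequency ratio between adjacent band edges. This is a generic sketch, not the manufacturer's fitting software: the function name is hypothetical, and taking each center as the arithmetic midpoint of its band is an assumption.

```python
def log_spaced_band_edges(low_hz, high_hz, n_bands):
    """Return n_bands + 1 band edges with a constant ratio between neighbors."""
    if low_hz <= 0 or high_hz <= low_hz or n_bands < 1:
        raise ValueError("need 0 < low_hz < high_hz and n_bands >= 1")
    ratio = (high_hz / low_hz) ** (1.0 / n_bands)
    return [low_hz * ratio ** k for k in range(n_bands + 1)]


# Baseline-map range from the text: 200 to 7,000 Hz over 10 active electrodes.
edges = log_spaced_band_edges(200.0, 7000.0, 10)

# Assumption: center frequency taken as the arithmetic midpoint of each band.
centers = [(lo + hi) / 2.0 for lo, hi in zip(edges[:-1], edges[1:])]

for electrode, (lo, hi, center) in enumerate(zip(edges[:-1], edges[1:], centers), start=1):
    print(f"Electrode {electrode:2d}: {lo:7.0f} to {hi:7.0f} Hz (center {center:7.0f} Hz)")
```

Under these assumptions the computed band edges fall within a few hertz of the baseline entries in Table 1. The treatment map does not appear to follow a single fixed ratio, so this sketch does not reproduce it.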

Table 1.

Frequency-Band (Hz) to Electrode Assignments for the Baseline and Treatment Maps Programmed in the Speech Processor; Electrodes #11 and #12 Were Deactivated Soon After Hookup Because of Poor Loudness

| Electrode No. | Status | Baseline Map Center Frequency | Baseline Map Lower Frequency | Baseline Map Upper Frequency | Treatment Map Center Frequency | Treatment Map Lower Frequency | Treatment Map Upper Frequency |
|---|---|---|---|---|---|---|---|
| 1 | On | 243 | 200 | 286 | 471 | 404 | 538 |
| 2 | On | 346 | 285 | 407 | 615 | 538 | 692 |
| 3 | On | 494 | 407 | 581 | 756 | 668 | 844 |
| 4 | On | 705 | 580 | 829 | 993 | 844 | 1,142 |
| 5 | On | 1,006 | 828 | 1,183 | 1,330 | 1,142 | 1,518 |
| 6 | On | 1,436 | 1,182 | 1,689 | 1,819 | 1,518 | 2,120 |
| 7 | On | 2,049 | 1,686 | 2,410 | 2,500 | 2,086 | 2,914 |
| 8 | On | 2,924 | 2,407 | 3,439 | 3,369 | 2,866 | 3,872 |
| 9 | On | 4,172 | 3,434 | 4,906 | 4,586 | 3,938 | 5,234 |
| 10 | On | 5,953 | 4,900 | 7,001 | 6,212 | 5,234 | 7,190 |
| 11 | Off | — | — | — | — | — | — |
| 12 | Off | — | — | — | — | — | — |

Finally, loudness and audibility were balanced across devices using aided thresholds and comfort of monitored conversational speech. For example, when the HARE and CI signals were combined in S1's right ear, the M levels in the treatment map for the CI were decreased by about 50 CUs (current units) across all electrodes to maintain comfort for conversational speech and loud sounds.

Results

Frequency-Modulated Tones

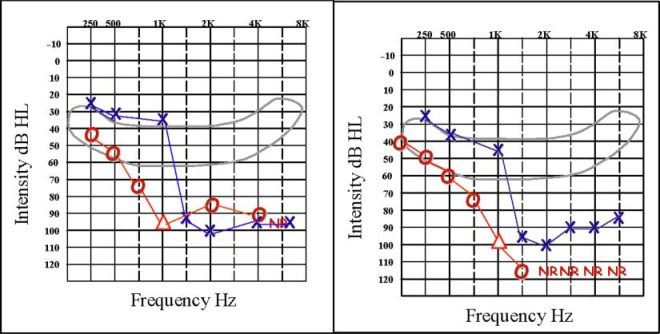

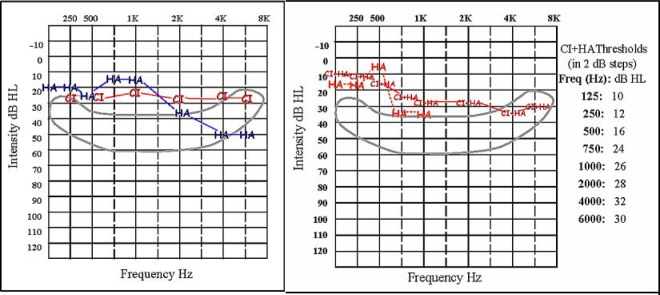

S1's pre- and postsurgery unaided thresholds, for both left and right ears, are shown in Figure 1. These thresholds indicate that S1's hearing in her right ear, in the frequency range from 125 to 1,000 Hz, was essentially preserved after implant surgery. Aided thresholds, for both left and right ears, are shown in the left-hand panel of Figure 2. For these thresholds, S1 wore the CI in her right ear with the baseline map and wore HALE in her left ear. In the right-hand panel of Figure 2 are aided thresholds for S1's right ear only, when S1 wore both the CI and HARE and when S1 wore only HARE. At low frequencies, S1's thresholds using both devices in her right ear are about 10 dB lower than when using only the CI in that ear, with the baseline map; thresholds are also 8 to 10 dB lower with both devices in her right ear than when using only HARE.

Figure 1.

Pre- (left panel) and postsurgery (right panel) unaided thresholds for FM tones for S1's left and right ears.

Figure 2.

Postsurgery aided thresholds for S1. The left panel shows aided thresholds for both ears: for the right ear, using the CI only with the baseline map and for the left ear, using HALE. The right panel shows two sets of aided thresholds for S1's right ear only. The first set are thresholds measured when S1 wore HARE only (indicated by the HA symbols). The second set are thresholds measured when S1 wore both the CI and HARE (indicated by the CI + HA symbols). For these thresholds, the CI was programmed with the treatment map, and 2 dB steps were used; exact values are provided.

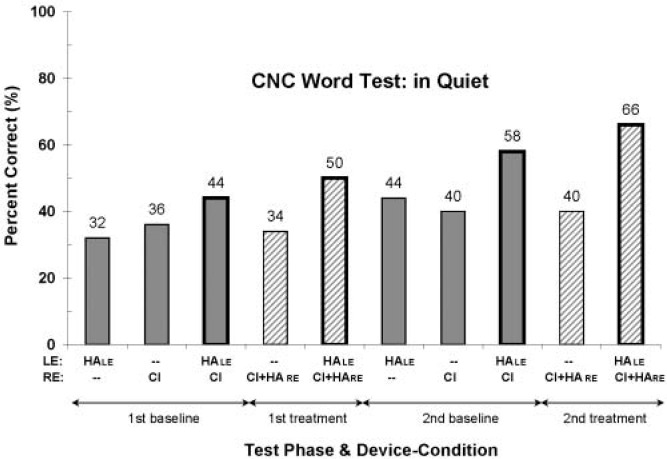

Consonant-Nucleus-Consonant Word Test

Results from the CNC word test conducted in quiet are shown in Figure 3, for each test phase and each device condition. Data in this figure and all subsequent figures are presented in chronological order from left to right. First, there appears to be an overall upward trend in word scores with time, perhaps indicating improvement arising from device experience or some learning and development in this young girl. Second, the scores from S1's two different everyday device-use conditions, shown with bold-bordered bars, are clearly the highest scores in each test phase. These two everyday device-use conditions are also bilateral device-use conditions. Thus, the greater scores for these two conditions may be attributed to device experience, to bilateral information, or to both. Finally, it appears that word scores are best overall for the three-device treatment condition (HALE and CI + HARE), with scores of 50% and 66% correct for the first and second treatment phases, respectively. The scores from the two-device baseline condition (bimodal), 44% and 58% correct, are slightly lower.

Figure 3.

Percentage-correct word scores for the CNC word test in quiet, for each test phase and device condition. Test phases are presented in chronological order, from left to right—namely, the first baseline, first treatment, second baseline, and second treatment phases. The devices used in each condition are indicated in the two rows labeled “LE” and “RE,” shown below the horizontal axis. Filled bars represent data collected at the end of the baseline phases, and striped bars represent data collected at the end of the treatment phases. The bold-bordered bars represent data from the baseline and treatment everyday device-use conditions, which are the bilateral device conditions.

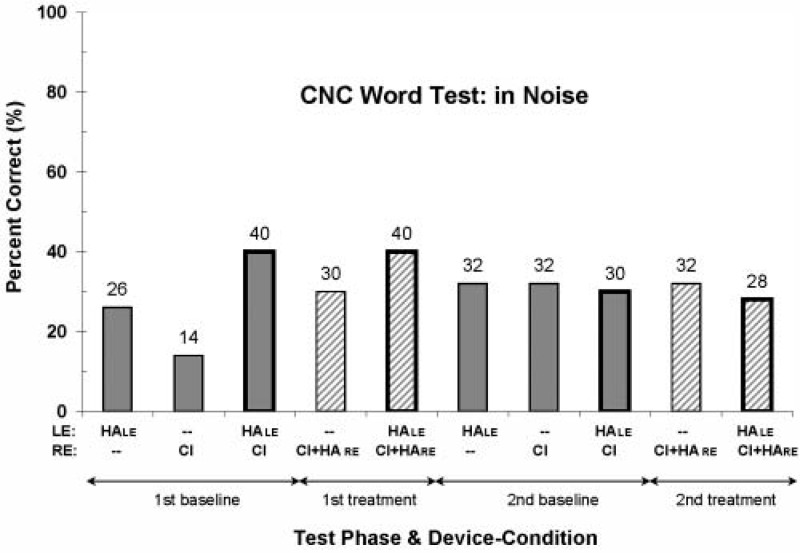

Results from the CNC word test conducted in noise, at an SNR of +10 dB, are shown in Figure 4. In contrast to the in-quiet CNC word scores, these data appear to be more variable. Word scores from the first test phases are similar in pattern to those from the CNC in-quiet tests. In particular, the bilateral device-use or everyday device-use conditions have the highest scores (40%). However, the word scores from the second test phases are nearly constant across all five device conditions. In addition, the scores decreased in the second test phases for the bilateral device-use conditions compared with the first test phase results (40% to 30% and 40% to 28%, respectively, for the baseline and treatment everyday device-use conditions). The reason for this is unknown. However, device malfunction is not a possibility because there was no decrease in scores across the first and second test phases for any of the three unilateral device conditions.

Figure 4.

Percentage-correct word scores for the CNC word test in noise, at a signal-to-noise ratio of +10 dB. See the caption to Figure 3 for additional information.

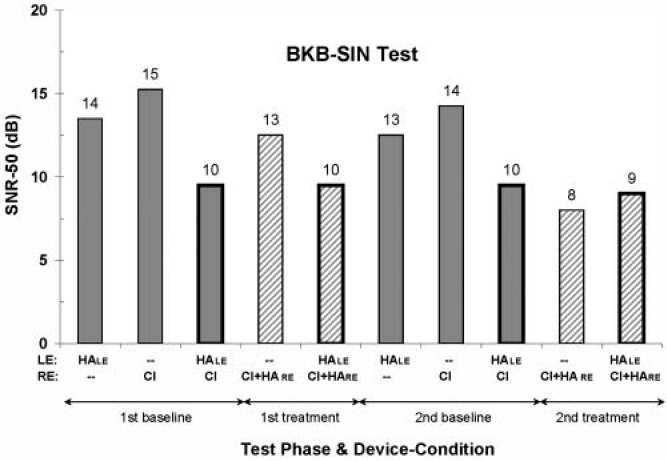

Bamford-Kowal-Bench Speech-in-Noise Test

The SNR-50 values are shown in Figure 5. For this test result, a lower SNR-50 value reflects a better speech-in-noise perceptual ability. Performance is fairly consistent across the first and second test phases, for both the baseline and treatment conditions. In addition, with the exception of the SNR-50 value from the second treatment phase for the unilateral combination CI + HARE, the best scores are found for the bilateral, or everyday, device-use conditions. And although there does not seem to be any difference between the baseline and treatment bilateral device conditions, there does seem to be better performance for two devices versus one. That is, when listening to speech in noise presented from a single spatial location, a combination of CI and HA devices at one ear (average SNR-50 value of 10.5 dB) or across ears (10 dB) yields better performance than does a CI alone (14.5 dB).

Figure 5.

SNR-50 (dB) values from the BKB-SIN test; lower values reflect better performance. See the caption to Figure 3 for additional information.

NOTE: SNR = signal-to-noise ratio; BKB-SIN = Bamford-Kowal-Bench speech-in-noise.

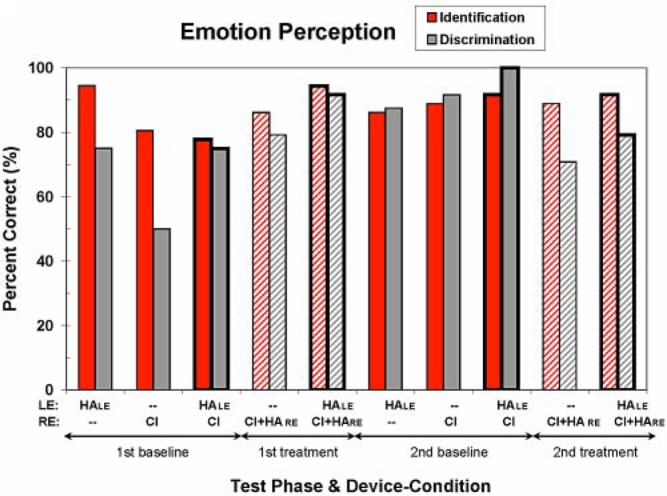

Emotion Perception

Figure 6 shows the results from the two types of emotion perception tasks, namely emotion identification and emotion discrimination. Overall, S1 performs rather well on both tasks in nearly all device conditions, especially in the second test phases. In fact, in the second baseline phase, S1 has a perfect emotion discrimination score using her baseline everyday devices (HALE and CI). However, on average across the two test phases, there is no clear best or poorest device condition for either emotion task. For example, S1's average identification performances for the baseline and treatment everyday device conditions are 85% and 93% correct, respectively, whereas her average discrimination performances are 88% and 85% correct, respectively. S1's chance level of performance for the emotion discrimination task in the first baseline phase with her CI only (50% correct score) is unexplained and seems aberrant in comparison with the other results for these tasks.

Figure 6.

Percentage-correct scores for the two emotion perception tests—emotion identification and emotion discrimination. (See the caption to Figure 3 for additional information.) Chance performance is 25% correct for emotion identification and 50% correct for emotion discrimination.

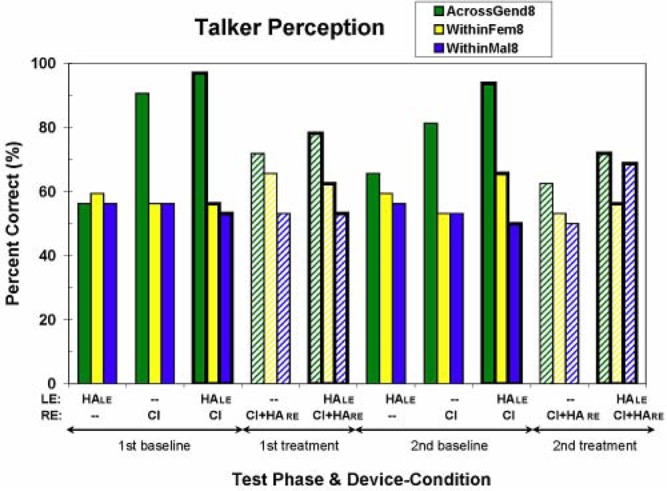

Talker Discrimination

Results from the three talker discrimination tasks are shown in Figure 7. For both within-gender tasks (within-female and within-male tasks) and for all device conditions, performance is not statistically different from chance (see Note 1). There is one exception among these 20 scores, namely the score for the within-male task from the second test phase using the treatment everyday devices of HALE and CI + HARE. In contrast, S1 performs better than chance for the across-gender talker discrimination task. Surprisingly, S1's across-gender scores are much better for the CI-only baseline condition than for the baseline HA-only condition. That is, wearing HALE by itself is not sufficient to score well, though wearing the CI (with the baseline map) by itself is. Also, there seems to be a consistent small improvement for both devices (HALE and CI) over the CI alone. However, for this task, the treatment, that is, adding aided acoustic hearing (via HARE) in the implanted ear, seems to interfere with across-gender talker discrimination ability. Though this behavioral result is not well understood, the physical properties of the stimuli in this task are such that the fundamental frequencies of all 16 talkers (range of eight talkers' average f0: 100–142 Hz and 163–237 Hz, for males and females, respectively) should have been amplified through S1's ITE HA (HARE).

Figure 7.

Percentage-correct scores for the three talker discrimination tests—across-gender, within-female, and within-male discrimination. (See the caption to Figure 3 for additional information.) Chance performance is 50% correct for all three talker discrimination tests.

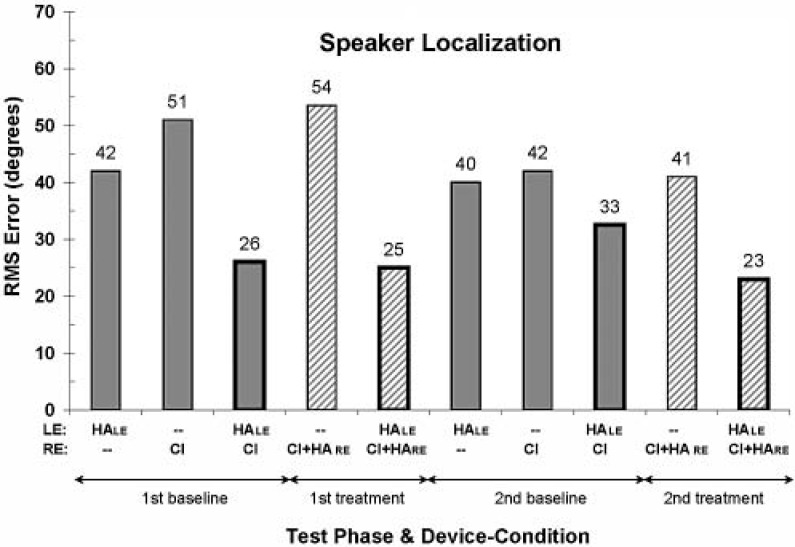

Speaker Localization

The rms error, in degrees, for the speaker localization test is shown in Figure 8. For reference, listeners with normal hearing can perform this particular localization task with rms errors of about 5° or less. For S1, there appears to be an overall trend of decreasing rms error (i.e., an improvement in performance) with time. Across all device-use conditions, the average rms error from the second phase tests is smaller than the average from the first phase tests. Also, as might be expected, the rms error is substantially smaller for the bilateral device-use conditions than for the unilateral device-use conditions. Finally, there appears to be a slight advantage of the treatment bilateral device-use condition (average rms error of 24°) over the baseline bilateral device-use condition (average rms error of 29.5°) for this localization test.

Figure 8.

Root mean square error in degrees for the speaker localization task; lower values reflect better performance. (See the caption to Figure 3 for additional information.) Speaker separation was 10°.

Discussion

In general, the results across outcome measures for S1 are roughly the same, or slightly better, for the treatment device-use condition compared with the baseline device-use condition. That is, use of a HA in S1's implanted ear in addition to a HA in the opposite ear provides, at best, a small extra benefit. Results from the CNC word test in quiet, BKB sentence test in noise, and localization test show a trend of slightly better performance with the addition of the second HA. Although these scores may not reflect a clinically significant increase in benefit, S1 expressed a preference for wearing all three devices and currently wears all three every day. An additional consideration in interpreting the outcomes of this study is the possibility that benefit from the treatment conditions may have been underestimated. When S1 was tested in the baseline unilateral and bilateral conditions (only a CI at her right ear), the CI ear was not plugged. Because S1's unaided thresholds at her CI ear are 40 to 70 dB HL for the 125 to 750 Hz frequency range, it is possible that some high-level, natural, acoustic low-frequency information was received, in addition to information provided by the CI, even when the ITE HA was not used. We chose, however, to leave S1's right ear unplugged as this was her everyday device configuration. That is, S1 did not have a plug in her CI ear when she was not wearing her ITE HA.

S1's perceptual abilities with three versus two devices can be compared, somewhat, with those reported by others. However, there are just two such studies (see Note 2), and in both these studies, short-length CIs are implanted, and the only perceptual test was of CNC words in quiet. More important, these two studies compare different device-use conditions. In the study by Dorman et al. (2009), CNC word scores with three devices (10-mm CI plus bilateral HAs) are compared with scores with two devices (10-mm CI plus HA), where the HA is contralateral to the CI. This is equivalent to comparing results from S1 for her three-device, everyday treatment condition (HALE and CI + HARE) versus those from her two-device, everyday baseline condition (HALE and CI). In the Dorman et al. study of 15 adults, average scores were only slightly better when these listeners used three devices (58% correct; CI plus bilateral HAs) than when they used two devices (50% correct; CI plus contralateral HA, an interaural, two-device combination), though this advantage was not statistically significant for this group of listeners. S1's average CNC score in quiet was 58% with three devices and 51% with two devices used interaurally. This improvement in S1's word-recognition-in-quiet score is similar to the average, though not statistically significant, improvement in scores reported by Dorman et al. for adults. Of course, these results for S1 must be interpreted in the context of a single-subject study. Although no definite conclusions may be drawn regarding the benefits, if any, of using bilateral HAs with a CI, there appears to be no significant detriment. Additionally, S1 reported preferring the sound quality of the additional HA at her implant ear.

The other study of three devices versus two devices compares the use of a 10-mm CI plus bilateral HAs with the two-device combination of a 10-mm CI plus a HA that is ipsilateral to the CI, that is, an intraaural two-device combination (Gantz et al., 2005). This is equivalent to comparing S1's results from her everyday treatment condition (HALE and CI + HARE) with those from the CI + HARE condition. For CNCs in quiet, S1's average score is 58% correct for three devices and 37% correct for the CI plus ipsilateral HA condition. This substantial benefit for S1 is similar to the benefit found for half the listeners in Gantz et al. (2005). In their comparison of three devices with this two-device condition, four adults performed better, with improvements that ranged from about 8 to 40 percentage points. The remaining listeners in this study did not show a benefit for three devices compared with these two devices; three adults performed more poorly, and one adult performed approximately the same. The other device-use conditions were not examined in either study (i.e., there is no comparison of three devices vs. two intraaural devices in Dorman et al., 2009, and no comparison of three devices vs. two interaural devices in Gantz et al., 2005).

S1's perceptual abilities with two devices versus one device (the CI device) can also be compared, somewhat, with those reported by others. When the second device is a HA (i.e., the second device is not another CI), two comparisons are possible—namely, (a) CI plus a HA contralateral to the CI (bimodal) versus the CI alone, and (b) CI plus a HA ipsilateral to the CI (EAS) versus the CI alone. Unlike the mere handful of studies that compare three devices with two devices, there are many studies that compare the use of two devices (CI plus HA) with one (CI) device. Reviews of the many studies of both types of two-device combinations, bimodal (Ching, Incerti, Hill, & van Wanrooy, 2006) and EAS (Talbot & Hartley, 2008), have been published recently.

For S1, there are many tests in this battery for which performance using a CI plus a contralateral HA (bimodal) is better than that using the CI only. These are the CNCs in quiet, CNCs in noise, BKB-SIN, across-gender talker discrimination, and speaker localization tests. This result is consistent, both in trend and in absolute performance levels, with many other reports of the benefits of bimodal device use (Ching et al., 2006). For example, for the localization task, S1 has an rms error of 29.5° when using her HALE and CI compared with an error of 46.5° when using her CI only. For similar speaker arrays, Ching et al. (2004) and Potts (2006) report average rms errors of 36° and 39°, respectively, when their adult listeners used the two-device combination of a CI plus contralateral HA. (Note: S1's rms error is even lower, 24°, for the three-device condition.)

When a HA is added ipsilateral to the CI, the second type of comparison, CI plus ipsilateral HA (EAS) versus CI, can be made. For S1, there are just a few tests in this battery with better performance in the CI + HARE condition than in the CI-only condition. For the CNC words in noise, the BKB-SIN test, and the speaker localization test, there seems to be a small advantage for two devices at the same ear compared with the single CI device. For other tests, CNC words in quiet, emotion discrimination, emotion identification, and within-gender talker discrimination, S1's performance is essentially the same in both conditions. S1's variation in benefit across test measures is also consistent with data from others. Dorman et al. (2008) report variations in benefit, across tests, for two devices at one ear (CI + ipsilateral HA) compared with CI alone or HA alone. For the recognition of speech in noise, average scores from their 15 listeners are significantly better when using both devices than when using either CI alone or HA alone. In contrast, for both within- and across-talker discrimination tests, the average scores from all three conditions (CI alone, ipsilateral HA alone, CI + ipsilateral HA) are all similar. For S1, there is one test, across-gender talker discrimination, in which she performs more poorly with two devices at one ear (CI + ipsilateral HA) compared with the CI-only condition (see Note 3). Although this result may seem unusual, decreases in performance when a HA is added ipsilateral to a CI have been reported by others when individually identified data are presented. For example, in a study by Fraysse et al. (2006) of nine adult CI users, six adults had better scores and three had slightly poorer scores in the two-device condition (CI + ipsilateral HA) compared with the CI-only condition for a CNC word test in quiet. 
This kind of result, poorer performance with both a CI and HA at one ear compared with the CI alone, might reflect a disruptive interaction between information carried in long electrode arrays (28.5 mm for S1 and 17 mm for those in the Fraysse et al., 2006, study) and information carried acoustically.

This enigmatic result, of both benefits and detriments in perception performance because of the addition of a HA at an ear with an implant, highlights the critical importance of well-coordinated fittings of these two devices, regardless of the use of a HA at the opposite ear. Even within a single study and a single outcome measure, the addition of a HA at the same ear as a CI yields both benefits and deficits across listeners (Fraysse et al., 2006). Such variation across listeners has also been noted by Gantz et al. (2005): “Some do better without a hearing aid in the implanted ear because they believe that the hearing aid blocks residual low-frequency hearing” (p. 799). For S1, the CI and ipsilateral HA were adjusted to provide a moderate degree of frequency overlap at her right ear. Given the variability across individuals and studies regarding fitting and benefits (James et al., 2006; Vermeire et al., 2008), it is possible that providing more redundant frequency information may have provided greater benefit for S1. Or, the opposite, completely nonoverlapping frequency information may have provided greater benefit. Clearly, a careful examination of well-coordinated fitting strategies for an implant and a HA at one ear is warranted, for all patients of all ages. Some researchers have begun this examination for 10-mm electrode arrays (e.g., Turner, Gantz, & Reiss, 2008). However, evaluating all possible map and HA configurations is time-consuming and can be difficult especially for young children. In addition, some consideration of these two different types of percepts (acoustic and electric) may be necessary. A recent report on similarity ratings by CI users with residual low-frequency hearing indicates that pure tones delivered acoustically and steady pulse trains delivered to one electrode elicit quite different percepts (McDermott & Sucher, 2006). 
As noted by McDermott and Sucher (2006), “there remains much progress to be made in optimizing the presentation of combined acoustic and electric signals to the sensorineurally impaired ear” (p. 81).

Describing the Combinations of Acoustic and Electric Stimulation

In this case study, S1 wore two devices (one CI and one HA) in the everyday baseline condition and wore three devices (one CI and two HAs) in the everyday treatment condition. In addition, in both these everyday listening conditions, both of S1's ears were receiving information. Though complex, these listening situations should be described clearly, precisely, and parsimoniously. Should terms or labels reflect the number and types of devices that a listener uses? Can our labels simultaneously reflect the unilateral or bilateral nature of the information received? Also, how should unaided, or natural, acoustic hearing be acknowledged?

The words most commonly used to describe combinations of devices, ears, and stimulation are bimodal and EAS. Typically, bimodal refers to a combination of devices across ears (CI at one ear, HA at the other), whereas EAS primarily refers to a combination of types of stimulation (electric + acoustic). Unfortunately, these words have numerous problems. For example, in more general perceptual research, the word bimodal means the use of two sensory modalities, such as audition + vision, or vision + touch, and so on (Allman et al., 2008; Sinnett, Soto-Faraco, & Spence, 2008). Bimodal is also used by psychologists and neuroscientists to refer to individuals fluent in both a spoken and a signed language (Chamberlain & Mayberry, 2008; Emmorey, Borinstein, Thompson, & Gollan, 2008). Even for CI research, bimodal has an ambiguity problem regarding the inter- versus intraaural combination of devices. In fact, recently, some authors have added an adjective, for example, “binaural bimodal,” to reflect the explicit use of two ears (Blamey & Saunders, 2008; Ching et al., 2007). Also, bimodal does not extend well. For example, how would one describe the listening situation of two implants plus two HAs? The term EAS also suffers from the same critical ambiguity as bimodal regarding the inter- versus intraaural nature of the combination of acoustic and electric stimulation. In fact, in recent publications, one finds the term EAS applied to both inter- (Dorman et al., 2008; Gifford, Dorman, McKarns, et al., 2007) and intraaural (Gifford, Dorman, Spahr, & McKarns, et al., 2007; Gstoettner et al., 2006) combinations of electric and acoustic stimulation. In addition, sometimes EAS is confounded with hybrid- or short electrode arrays. 
However, because not all hybrid- or short electrode users have their acoustic hearing preserved (Fitzgerald et al., 2008; Talbot & Hartley, 2008) and because acoustic hearing can be preserved with standard-length electrode arrays, EAS should not be considered synonymous with either hybrid or short electrodes. Additionally, both the terms bimodal and EAS suffer from their inability to acknowledge explicitly the presence of natural (unaided) acoustic hearing, when applicable.

Because of the recently expanded candidacy for CIs and recent improvements in surgical techniques, more CI users can be expected to have preserved acoustic hearing, whether implanted with short electrode arrays or with standard-length arrays (as is the case with S1). Indeed, “preservation of any residual hearing must be a goal of all future CI surgeries” (Gantz et al., 2005, p. 801). Consequently, researchers are compelled to consider aided and unaided combinations of stimulation, both inter- and intraaurally, which were not possible previously. More important, perceptual experiments should be designed and interpreted with a realization that unaided low-frequency hearing in either ear may contribute to good listening performance in CI users with steeply sloping bilateral high-frequency HL. And, as done here, researchers should test and report postsurgery acoustic thresholds in ears with implanted devices, even when implanted with standard-length electrode arrays.

If preserved hearing is possible for standard-length as well as short electrode arrays, this may have a particularly significant implication for pediatric patients. Because long-term stability of any preserved residual hearing is thought to be more variable for children (Yao et al., 2006), implantation of a standard-length array may allow a pediatric patient the option of electrically presented low-frequency information should his or her acoustic low-frequency hearing deteriorate over time. Currently, because of improved surgical techniques used at our pediatric CI center, we have noted an increase in the number of children who have preserved low-frequency hearing with full insertions of standard-length electrode arrays. For these listeners, alternatives to the default CI map need to be considered as well as careful coordination of HA fittings with the CI map, especially when both a HA and CI are at one ear.

In summary, this case study reports on the perceptual benefits of using three devices—a HA and a CI at one ear while using a HA at the opposite ear. The patient was an 8-year-old girl with a fully inserted 31.5-mm electrode array, for whom hearing thresholds were preserved within 10 dB of preimplant levels at low frequencies (125–750 Hz). This is the first report of such a combination of three devices for a pediatric patient and may also be the first report of such a combination of three devices for a patient of any age with a standard-length electrode array. In addition, comparisons of the use of two devices versus one device were made. For these comparisons, the results from this single participant are consistent with those reported by others, in that there is an advantage to using a CI and HA at opposite ears compared with unilateral CI use. For the use of three versus two devices and across the tests in this battery (with the exception of the one talker discrimination task), the patient performed similarly or slightly better with all three devices compared with the two-device combination of an implant and a HA at opposite ears. As suggested by EAS studies with short electrode arrays, combining acoustic and electric hearing in one ear—perhaps especially with full-length electrode arrays—may warrant further exploration of the best coordinated fitting of both the HA and CI.

Recently, for CI users, Ross (2008) espoused the following clinical philosophy “that hearing aid usage be encouraged in the contralateral ear, unless contraindicated by poorer (not equal) performance during bilateral listening.” When residual hearing is preserved in both ears, we suggest an extension to this clinical philosophy for users of one CI. Specifically, HA use should be encouraged at both ears, unless contraindicated by poorer (not equal) performance during the listening condition with all (three) devices.

Acknowledgments

We gratefully acknowledge the generosity of Phonak for the donation of S1's ITE hearing aid. We also thank Central Institute for the Deaf for their cooperation with this study.

Notes

1. There were 32 trials for each talker discrimination task and each device condition in each test phase. From a simple binomial distribution with 32 trials, one would expect with 95% confidence that percentage-correct scores between 33% and 67% would occur strictly from chance or guessing.

2. We limit our discussion to other studies in which a cochlear implant is used in both the three- and two-device listening conditions. Consequently, we exclude the study by Gantz, Turner, and Gfeller (2006), in which the use of three devices (10-mm CI and bilateral HAs) is compared with the use of bilateral HAs.

3. Yet S1 does not seem to be an atypical CI user. When using only her CI, S1's talker discrimination performance is roughly consistent with scores reported for adult CI users. In particular, S1 discriminates talkers within and across gender with accuracies of about 55% and 86% correct, respectively. Spahr and Dorman (2004) report ranges of roughly 55% to 70% and 72% to 100% correct for within- and across-gender discrimination, respectively. Dorman, Gifford, Spahr, and McKarns (2008) report ranges of 68% to 72% and 71% to 100% correct for within- and across-gender discrimination. And Fu et al. (2004) report a range of 70% to 95% correct for across-gender discrimination.
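The chance band quoted in the first note above can be approximated with the normal approximation to the binomial distribution. The sketch below assumes a guessing probability of 0.5, 32 trials, and a two-sided 95% band (z = 1.96). The function name `chance_band` is hypothetical, and the notes do not say which method was actually used, so an exact binomial calculation could give slightly different limits.

```python
import math


def chance_band(n_trials, p_chance=0.5, z=1.96):
    """Approximate two-sided band of proportion-correct scores expected from guessing.

    Uses the normal approximation to the binomial:
    p +/- z * sqrt(p * (1 - p) / n).
    """
    half_width = z * math.sqrt(p_chance * (1.0 - p_chance) / n_trials)
    return p_chance - half_width, p_chance + half_width


lower, upper = chance_band(32)
print(f"Chance band for 32 trials: {100 * lower:.0f}% to {100 * upper:.0f}%")
```

With these assumptions the band rounds to 33% to 67%, which agrees with the range given in the note.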

References

- Allman B. L., Bittencourt-Navarrete R. E., Keniston L. P., Medina A. E., Wang M. Y., Meredith M. A. (2008). Do cross-modal projections always result in multisensory integration? Cerebral Cortex, 18, 2066–2076 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bent T., Buchwald A., Alford W. (2007). Research on spoken language processing: Inter-talker differences in intelligibility for two types of degraded speech. Bloomington: Indiana University [Google Scholar]

- Blamey P. J., Saunders E. (2008). A review of bimodal binaural hearing systems and fitting. Acoustics Australia, 36, 87–92 [Google Scholar]

- Bradlow A. R., Torretta G. M., Pisoni D. B. (1996). Intelligibility of normal speech I: Global and finegrained acoustic-phonetic talker characteristics. Speech Communication, 20, 255–272 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chamberlain C., Mayberry R. I. (2008). American sign language syntactic and narrative comprehension in skilled and less skilled readers: Bilingual and bimodal evidence for the linguistic basis of reading. Applied Psycholinguistics, 29, 367–388 [Google Scholar]

- Chang J. E., Bai J. Y., Zeng F.-G. (2006). Unintelligible low-frequency sound enhances simulated cochlear-implant speech recognition in noise. IEEE Transactions on Biomedical Engineering, 53, 2598–2600 [DOI] [PubMed] [Google Scholar]

- Ching T. Y. C., Dillon H., Byrne D. (1998). Speech recognition of hearing-impaired listeners: Predictions from audibility and the limited role of high-frequency amplification. Journal of the Acoustical Society of America, 103, 1128–1140 [DOI] [PubMed] [Google Scholar]

- Ching T. Y. C., Incerti P., Hill M. (2004). Binaural benefits for adults who use hearing aids and cochlear implants in opposite ears. Ear and Hearing, 25, 9–21 [DOI] [PubMed] [Google Scholar]

- Ching T. Y. C., Incerti P., Hill M., van Wanrooy E. (2006). An overview of binaural advantages for children and adults who use binaural/bimodal hearing devices. Audiology & Neuro-otology, 11 (Suppl. 1), 6–11 [DOI] [PubMed] [Google Scholar]

- Ching T. Y. C., Psarros C., Hill M., Dillon H., Incerti P. (2001). Should children who use cochlear implants wear hearing aids in the opposite ear? Ear and Hearing, 22, 365–380.

- Ching T. Y. C., van Wanrooy E., Dillon H. (2007). Binaural-bimodal fitting or bilateral implantation for managing severe to profound deafness: A review. Trends in Amplification, 11, 161–192.

- Dillon H. (2001). Binaural and bilateral considerations in hearing aid fitting. In Dillon H. (Ed.), Hearing aids (pp. 370–403). New York: Thieme.

- Dorman M. F., Gifford R., Lewis K., McKarns S., Ratigan J., Spahr A., et al. (2009). Word recognition following implantation of conventional and 10-mm hybrid electrodes. Audiology & Neuro-otology, 14, 181–189.

- Dorman M. F., Gifford R. H., Spahr A. J., McKarns S. A. (2008). The benefits of combining acoustic and electric stimulation for the recognition of speech, voice and melodies. Audiology & Neuro-otology, 13, 105–112.

- Emmorey K., Borinstein H. B., Thompson R., Gollan T. H. (2008). Bimodal bilingualism. Bilingualism, 11, 43–61.

- Fitzgerald M. B., Sagi E., Jackson M., Shapiro W. H., Roland J. T., Jr., Waltzman S. B., et al. (2008). Reimplantation of hybrid cochlear implant users with a full-length electrode after loss of residual hearing. Otology & Neurotology, 29, 168–173.

- Fraysse B., Macias A. R., Sterkers O., Burdo S., Ramsden R., Deguine O., et al. (2006). Residual hearing conservation and electroacoustic stimulation with the Nucleus 24 Contour Advance cochlear implant. Otology & Neurotology, 27, 624–633.

- Fu Q.-J., Chinchilla S., Galvin J. J. (2004). The role of spectral and temporal cues in voice gender discrimination by normal-hearing listeners and cochlear implant users. Journal of the Association for Research in Otolaryngology, 5, 253–260.

- Gantz B. J., Turner C. W. (2003). Combining acoustic and electrical hearing. Laryngoscope, 113, 1726–1730.

- Gantz B. J., Turner C., Gfeller K. E. (2006). Acoustic plus electric speech processing: Preliminary results of a multicenter clinical trial of the Iowa/Nucleus Hybrid implant. Audiology & Neuro-otology, 11 (Suppl. 1), 63–68.

- Gantz B. J., Turner C., Gfeller K. E., Lowder M. W. (2005). Preservation of hearing in cochlear implant surgery: Advantages of combined electrical and acoustical speech processing. Laryngoscope, 115, 796–802.

- Gifford R. H., Dorman M. F., McKarns S. A., Spahr A. J. (2007). Combined electric and contralateral acoustic hearing: Word and sentence recognition with bimodal hearing. Journal of Speech, Language, and Hearing Research, 50, 835–843.

- Gifford R. H., Dorman M. F., Spahr A. J., McKarns S. A. (2007). Effect of digital frequency compression (DFC) on speech recognition in candidates for combined electric and acoustic stimulation (EAS). Journal of Speech, Language, and Hearing Research, 50, 1194–1202.

- Gstoettner W. K., Helbig S., Maier N., Kiefer J., Radeloff A., Adunka O. F. (2006). Ipsilateral electric acoustic stimulation of the auditory system: Results of long-term hearing preservation. Audiology & Neuro-otology, 11 (Suppl. 1), 49–56.

- Gstoettner W., Kiefer J., Baumgartner W.-D., Pok S., Peters S., Adunka O. (2004). Hearing preservation in cochlear implantation for electric acoustic stimulation. Acta Oto-Laryngologica, 124, 348–352.

- Hogan C. A., Turner C. W. (1998). High-frequency audibility: Benefits for hearing-impaired listeners. Journal of the Acoustical Society of America, 104, 432–441.

- James C. J., Fraysse B., Deguine O., Lenarz T., Mawman D., Ramos A., et al. (2006). Combined electroacoustic stimulation in conventional candidates for cochlear implantation. Audiology & Neuro-otology, 11 (Suppl. 1), 57–62.

- Kiefer J., Gstoettner W., Baumgartner W., Pok S. M., Tillein J., Ye Q., et al. (2004). Conservation of low-frequency hearing in cochlear implantation. Acta Oto-Laryngologica, 124, 272–280.

- Kiefer J., Pok M., Adunka O., Sturzebecher E., Baumgartner W., Schmidt M., et al. (2005). Combined electric and acoustic stimulation of the auditory system: Results of a clinical study. Audiology & Neuro-otology, 10, 134–144.

- Killion M. C., Niquette P. A., Gudmundsen G. I., Revit L. J., Banerjee S. (2004). Development of a quick speech-in-noise test for measuring signal-to-noise ratio loss in normal-hearing and hearing-impaired listeners. Journal of the Acoustical Society of America, 116, 2395–2405.

- Killion M. C., Niquette P. A., Gudmundsen G. I., Revit L. J., Banerjee S. (2006). Development of a quick speech-in-noise test for measuring signal-to-noise ratio loss in normal-hearing and hearing-impaired listeners [Erratum]. Journal of the Acoustical Society of America, 119, 1888.

- Kong Y. Y., Stickney G. S., Zeng F. G. (2005). Speech and melody recognition in binaurally combined acoustic and electric hearing. Journal of the Acoustical Society of America, 117, 1351–1361.

- Kortekaas R. W., Stelmachowicz P. G. (2000). Bandwidth effects on children's perception of the inflectional morpheme /s/: Acoustical measurements, auditory detection, and clarity rating. Journal of Speech, Language, and Hearing Research, 43, 645–660.

- Litovsky R., Parkinson A., Arcaroli J., Sammeth C. (2006). Simultaneous bilateral cochlear implantation in adults: A multicenter clinical study. Ear and Hearing, 27, 714–731.

- McDermott H. J., Sucher C. M. (2006). Perceptual dissimilarities among acoustic stimuli and ipsilateral electric stimuli. Hearing Research, 218, 81–88.

- Peters K. P. (2006). Emotion perception in speech: Discrimination, identification, and the effects of talker and sentence variability. St. Louis, MO: Program in Audiology and Communication Sciences, Washington University School of Medicine.

- Peterson G. E., Lehiste I. (1962). Revised CNC lists for auditory tests. Journal of Speech and Hearing Disorders, 27, 62–70.

- Potts L. G. (2006). Recognition and localization of speech by adult cochlear implant recipients wearing a digital hearing aid in the non-implanted ear (bimodal hearing). Unpublished PhD dissertation, Department of Speech and Hearing Sciences, Washington University, St. Louis.

- Ross M. (2008). Cochlear implants and hearing aids: Some personal and professional reflections. Journal of Rehabilitation Research and Development, 45, xvii–xxii.

- Schafer E. C., Amlani A. M., Seibold A., Shattuck P. L. (2007). A meta-analytic comparison of binaural benefits between bilateral cochlear implants and bimodal stimulation. Journal of the American Academy of Audiology, 18, 760–776.

- Scollie S., Seewald R., Cornelisse L., Moodie S., Bagatto M., Laurnagaray D., et al. (2005). The desired sensation level multistage input/output algorithm. Trends in Amplification, 9, 159–197.

- Sinnett S., Soto-Faraco S., Spence C. (2008). The co-occurrence of multisensory competition and facilitation. Acta Psychologica, 128, 153–161.

- Skarzynski H., Lorens A., Piotrowska A., Anderson I. (2007). Partial deafness cochlear implantation in children. International Journal of Pediatric Otorhinolaryngology, 71, 1407–1413.

- Spahr A. J., Dorman M. F. (2004). Performance of subjects fit with the Advanced Bionics CII and Nucleus 3G cochlear implant devices. Archives of Otolaryngology–Head & Neck Surgery, 130, 624–628.

- Stelmachowicz P. G., Pittman A. L., Hoover B. M., Lewis D. E. (2001). Effect of stimulus bandwidth on the perception of /s/ in normal- and hearing-impaired children and adults. Journal of the Acoustical Society of America, 110, 2183–2190.

- Stelmachowicz P. G., Pittman A. L., Hoover B. M., Lewis D. E., Moeller M. P. (2004). The importance of high-frequency audibility in the speech and language development of children with hearing loss. Archives of Otolaryngology–Head & Neck Surgery, 130, 556–562.

- Talbot K. N., Hartley D. E. (2008). Combined electroacoustic stimulation: A beneficial union? Clinical Otolaryngology, 33, 536–545.

- Turner C., Gantz B. J., Reiss L. (2008). Integration of acoustic and electrical hearing. Journal of Rehabilitation Research and Development, 45, 769–778.

- Vermeire K., Anderson I., Flynn M., Van de Heyning P. (2008). The influence of different speech processor and hearing aid settings on speech perception outcomes in electric acoustic stimulation patients. Ear and Hearing, 29, 76–86.

- von Ilberg C., Kiefer J., Tillein J., Pfenningdorff T., Hartmann R., Sturzebecher E., et al. (1999). Electric-acoustic stimulation of the auditory system: New technology for severe hearing loss. ORL: Journal for Oto-rhino-laryngology and Its Related Specialties, 61, 334–340.

- Yao W. N., Turner C. W., Gantz B. J. (2006). Stability of low-frequency residual hearing in patients who are candidates for combined acoustic plus electric hearing. Journal of Speech, Language, and Hearing Research, 49, 1085–1090.