Abstract

Purpose

Google Glass provides a platform that can be easily extended to include a vision enhancement tool. We have implemented an augmented vision system on Glass, which overlays enhanced edge information over the wearer’s real world view, to provide contrast-improved central vision to the Glass wearers. The enhanced central vision can be naturally integrated with scanning.

Methods

Google Glass’s camera lens distortions were corrected by image warping. Since the camera and virtual display are horizontally separated by 16mm, and the camera aiming and virtual display projection angles are offset by 10°, the warped camera image had to go through a series of 3D transformations to minimize parallax errors before the final projection to the Glass’ see-through virtual display. All image processing was implemented to achieve near real-time performance. The impacts of the contrast enhancements were measured for three normal vision subjects, with and without a diffuser film to simulate vision loss.

Results

For all three subjects, significantly improved contrast sensitivity was achieved when the subjects used the edge enhancements with a diffuser film. The performance boost is limited by the Glass camera’s performance. The authors assume this is why performance improvements were observed only in the diffuser filter condition (simulating low vision).

Conclusions

Improvements were measured with simulated visual impairments. With see-through augmented reality edge enhancement, a natural visual scanning process is possible, which suggests that the device may provide better visual function in a cosmetically and ergonomically attractive format for patients with macular degeneration.

Keywords: Google Glass, HMD, vision enhancement, vision rehabilitation, contrast enhancement, low vision

Most patients with advanced age-related macular degeneration (AMD) experience reduced visual acuity (VA) and contrast sensitivity (CS) because they have to rely on the residual non-foveal retina to inspect targets of interest. Although patients’ peri-peripheral vision is sufficient to recognize the gist of scenes,1 their quality of life is significantly affected by the impairment.2–4 The reduced visual function has a large impact on emotional well-being,5 and social engagement,6 especially due to its effect on tasks such as face recognition,7, 8 which require the ability to discriminate fine details or small contrast differences.

The low vision enhancement system (LVES) was the first commercial head-mounted vision enhancement system for distance use, utilizing an opaque head mounted display (HMD). It improved visual acuity and contrast sensitivity by converting the camera image into high-contrast, magnified video.9 Later, video-based contrast enhancement and zoom-controlling HMD devices were commercialized, such as the Jordy (Enhanced Vision, CA, USA) and the SightMate (Vuzix, NY, USA). All these devices use full virtual vision HMDs that block the wearer’s natural field of vision.

An alternative approach, augmented reality (AR) using optical see-through HMDs, was proposed by Peli for various vision conditions,10 for example, employing wideband image enhancement to enhance the visibility of edges of the scene, as an alternative to magnification.11, 12 Luo and Peli developed a hybrid see-through/opaque HMD device that superimposed a scene edge-view over the wearer’s natural view in see-through mode, but the display could be made opaque to display only a magnified scene edge-view.13, 14 Using desktop displays, it has been shown that adding high contrast edges to static images15 or videos16 is preferred by patients with AMD,15, 16 and improves the visual search performance of elderly subjects with simulated central visual loss.15, 17

Precise alignment of the augmented edges with the see-through scene is necessary, but difficult to achieve in the HMD application, because the camera and the display are usually shifted relative to each other. The parallax produced by that shift varies with distance to the objects of regard. An on-axis HMD-camera configuration was attempted and achieved excellent edge alignment, but the optical system aligning the camera and display reduced display brightness to such an extent that the augmented edges were too dim to provide a significant boost in visibility.18

Google Inc. (Mountain View, CA) has recently introduced a novel HMD system, Google Glass, which is intended as an interactive personal communication device. Glass provides a hardware and software development platform that can be applied to vision enhancement for patients with impaired vision. It features a wide-field high-resolution camera, a small optical see-through display positioned above the line of sight, a rechargeable battery, and enough computing power for image processing. The Android-based operating system, which is open for custom app development, supports OpenGL GPU computations and camera access.

Here, we report a preliminary exploration of the utility of Google Glass as a visual aid for patients with AMD by developing an app that provides edge enhancement as an augmented view. The real view of the environment through the Glass display is multiplexed with a scaled, cartoonized outline of the view captured by the camera. The user sees enhanced contrast at the location of edges in the real world. We show how the spatial alignment problem between the augmented edges and the real view can be addressed to provide such an augmented view. We also describe how we generate and display augmented edge information, where the edge enhancement method and range of enhancement are user selectable, because the optimal settings of these factors depend on the individual user’s impairment and the immediate task that the user anticipates, as well as the visual environment and lighting conditions. The user interface for controlling system parameters must be simple, intuitive, and quick.

METHODS

Google Glass Hardware

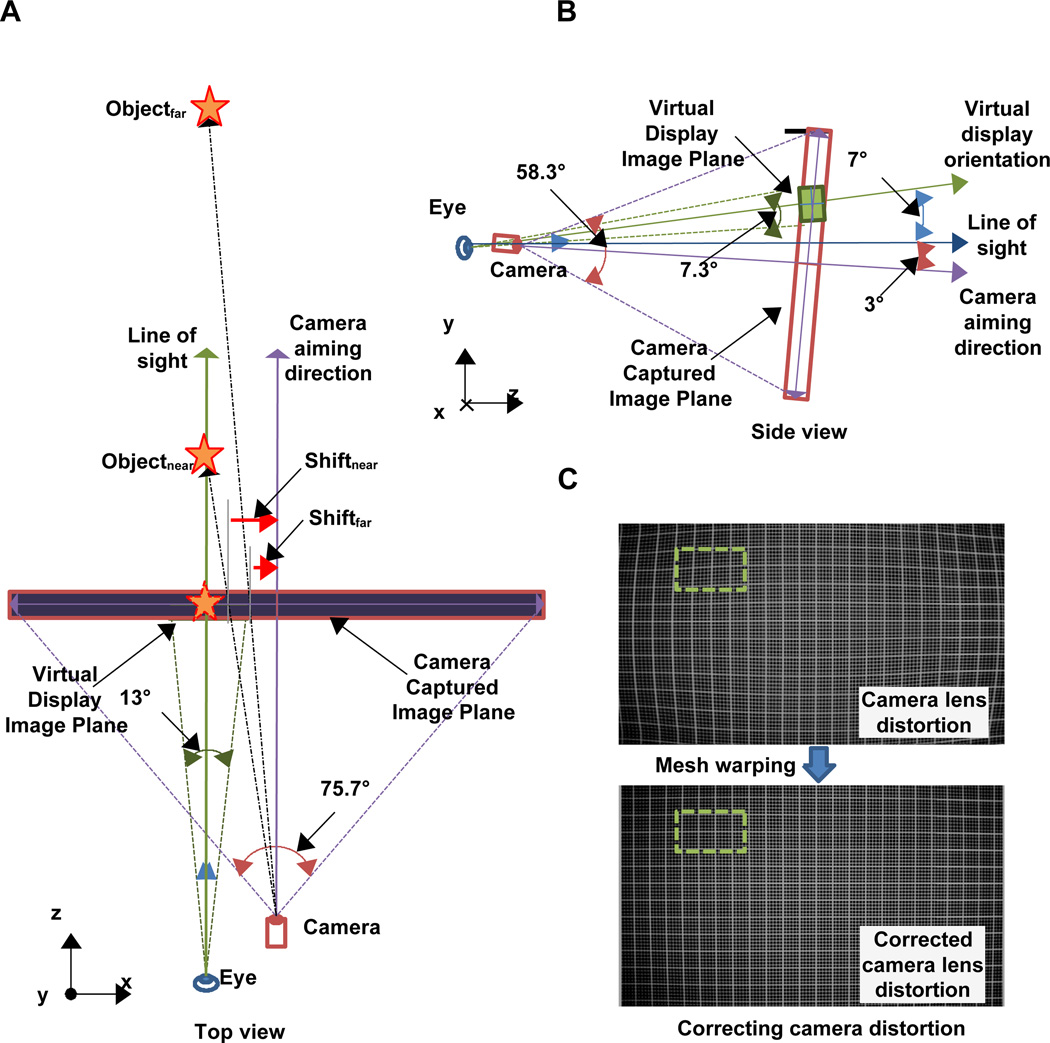

We measured various parameters of the system that are not yet provided by Google. The image and video resolution details were derived from the properties of the files recorded by Glass, and the field of view dimensions were measured by identifying the horizontal and vertical endpoints of the captured image, video frame, and see-through display when the camera was aimed perpendicularly at a marked wall. The wide field of view camera of Google Glass captures 75.7° × 58.3° static images at a resolution of 2528 × 1856 pixels. During video capture, the upper and lower portions of the captured image are cropped to fit the high definition (HD) aspect ratio of 16:9 and then encoded at a resolution of 1280 × 720 pixels (720p) at 30 frames per second. The virtual display is only available for the right eye, covers 13° × 7.3° of the visual field, and has a resolution of 640 × 360 pixels (Fig. 1A). The camera and display are horizontally separated by 16mm but are encased in a single rigid compartment. Therefore, adjusting the position or angle of the display also changes the camera position and aiming angle. The display optics provide a small eye box angled 7° downward, so the virtual display is only visible if the display is positioned at the designated location (7° above the wearer’s line of sight). The camera is aimed 10° downward relative to the virtual display (Fig. 1B). This setting allows a more natural camera aiming direction (3° downward) when the user is standing upright and looking straight into the display by tilting the head down 7°. The outer surface of the see-through display is coated with photochromic material, so that the see-through view is dimmer outdoors (when exposed to ultraviolet (UV) radiation), improving the contrast and visibility of the displayed image. This is similar to Transitions spectacle lenses from Transitions Optical, Inc. (Florida, USA), but the coating is applied to the display’s external surface for better exposure to UV and therefore darkens faster than the spectacle lenses.

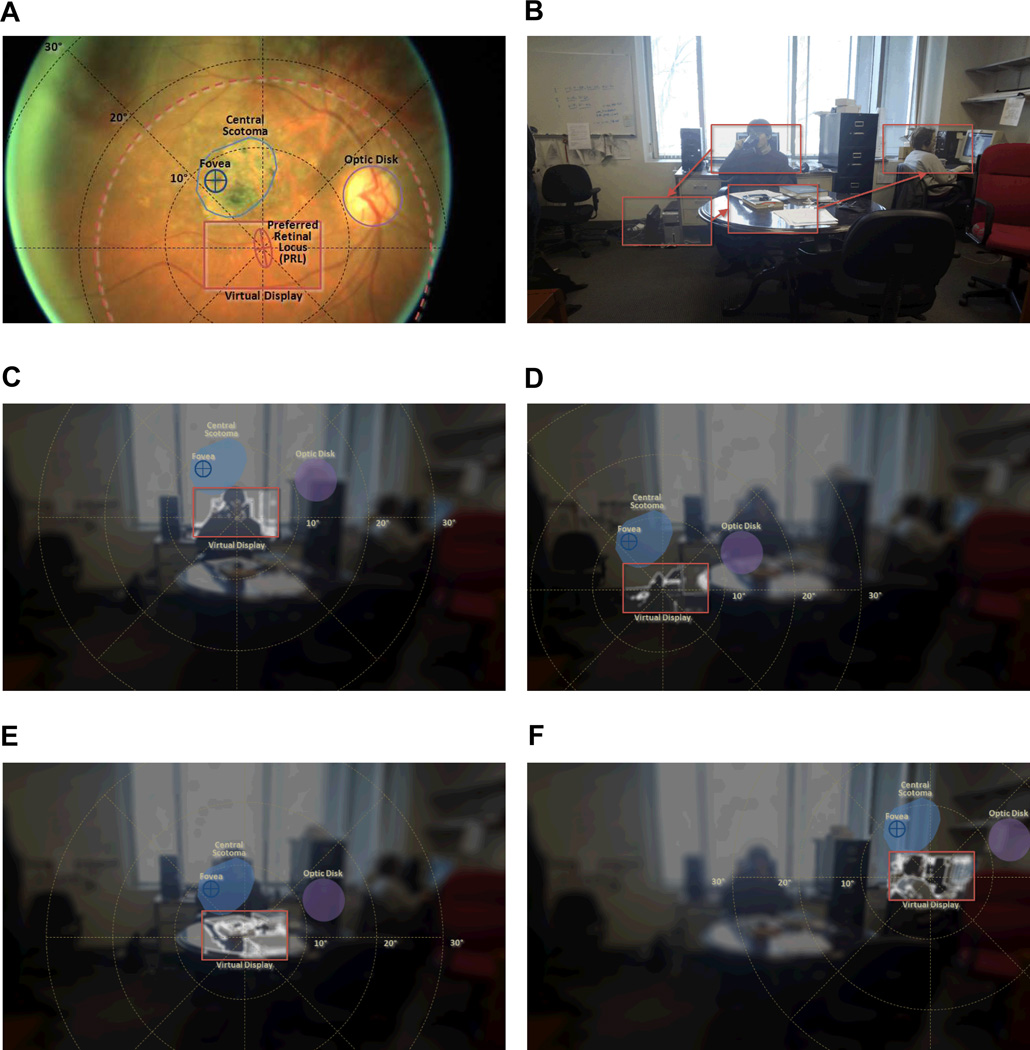

Figure 1.

(A) Schematic (top-down view) of the Google Glass hardware configuration: 2D translation of the displayed image is required depending on the distance to the object of interest (parallax). (B) Schematic (side view) of the Google Glass hardware configuration with the eye in primary position of gaze and the head upright: 10° angular compensation between the virtual display orientation and the camera aiming direction is needed for visual alignment. (C) The Google Glass camera lens distortion, shown by photographing a grid (top), and the distortion-corrected grid (bottom). The dashed rectangle marks the image portion to be displayed on the virtual display. Note that only a small portion of the captured image needs to be rendered. A color version of this figure is available online at www.optvissci.com.

Design Assumptions for Augmented Vision Alignment

Assuming the following conditions makes AR alignment simpler: 1) the virtual display plane is perpendicular to the wearer’s line of sight, and 2) the line of sight passes through the center of the virtual display. In the case of Google Glass, when aiming straight ahead, the wearer must tuck his/her chin down 7° to position the virtual display perpendicular and centered on the line of sight. This is similar to the usage of low vision bioptic telescopes, where the user views the scene through the carrier lens most of the time, but glimpses through the telescope by tilting the head downward.19 Bioptic telescopes are typically set at 10° upwards.

Matching the Size of the Video Image to the See-through View

The fields of view of the camera for video preview and of the virtual display span 75.7° × 42.6° and 13° × 7.3° of visual angle, with 1920 × 1080 and 640 × 360 pixels, respectively. Note that 1080p is the maximum resolution available directly from the camera for video preview, before being encoded to the default video resolution (720p). If the full captured image is drawn to fill the virtual display, as in normal video preview during recording, the displayed image will be minified by a factor of 5.8× compared to the see-through view. To match the size of the AR image to the real world, only a small portion of the captured image (330 × 186 pixels) needs to be displayed on the virtual display (see the cropped-out area marked in Fig. 1C), and this portion must be magnified by a factor of 1.94× to fill the virtual display.
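The size-matching arithmetic above can be reproduced with a few lines of Python. This is a minimal sketch that assumes angle scales linearly with pixel count across the preview frame (ignoring residual lens distortion and perspective) and that the crop keeps the display’s 16:9 aspect ratio; the constant and function names are ours, while the numbers come from the text.

```python
import math

# Values reported in the text (horizontal, vertical).
CAM_FOV_DEG = (75.7, 42.6)   # 1080p video preview
CAM_RES_PX = (1920, 1080)
DISP_FOV_DEG = (13.0, 7.3)   # right-eye virtual display
DISP_RES_PX = (640, 360)


def minification_full_frame() -> float:
    """Minification if the whole preview frame is drawn to fill the display."""
    return CAM_FOV_DEG[0] / DISP_FOV_DEG[0]


def crop_size_px() -> tuple[int, int]:
    """Camera pixels that cover the display's field of view.

    Assumes degrees map linearly to pixels; the height follows the display's
    16:9 aspect ratio and is rounded up.
    """
    width = round(CAM_RES_PX[0] * DISP_FOV_DEG[0] / CAM_FOV_DEG[0])
    height = math.ceil(width * DISP_RES_PX[1] / DISP_RES_PX[0])
    return width, height


def display_magnification() -> float:
    """Scale factor that stretches the crop to the display's 640-pixel width."""
    return DISP_RES_PX[0] / crop_size_px()[0]


# prints: minification 5.8x, crop (330, 186), magnification 1.94x
print(f"minification {minification_full_frame():.1f}x, "
      f"crop {crop_size_px()}, magnification {display_magnification():.2f}x")
```

Deriving the crop height from the aspect ratio rather than from 7.3°/42.6° is a choice made here because it reproduces the quoted 186 rows; the purely angular ratio gives about 185.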

Correcting Camera Lens Distortion

The wide field of view camera on Glass introduces a non-uniform optical distortion (a barrel distortion at the image center, gradually turning into a pincushion distortion towards the periphery), with less distortion in the central field of view (Fig. 1C, top). The distortion becomes more apparent as the eccentricity from the center of the camera increases. In an on-axis camera-display HMD,18 this type of distortion causes few problems, because the higher distortion is farther from the center and the image portion to be used (with large magnification) is mostly away from the distorted field. However, for an off-axis camera-display AR system like Google Glass, correcting distortions at the image periphery is necessary, because the image portion to be displayed on the virtual display (marked with a green dashed line in Fig. 1C) is taken from the more distorted upper-left portion of the captured image (due to the 10° vertical rotational difference and 16mm horizontal disparity between the camera and the virtual display).

We measured the camera distortion by photographing a square grid perpendicularly, at 8 inches. All captured intersections were marked, and the distortion at each intersection was measured as the horizontal and vertical offsets from the corresponding intersection in a uniform grid. Our custom calibration program displays a black/white grid (with intersections marked in red) on a desktop monitor and analyzes the captured grid image by locating those red dots. A corrective mesh was created by subtracting each measured offset from the coordinates of the corresponding intersection of the uniform grid. This corrective mesh supplied the vertices for the texture (fragment shader) process20 and was applied to warp the captured image and compensate for the lens distortion (Fig. 1C, bottom). Unlike the camera, the optical display does not show any apparent spatial distortion, so no additional correction was needed.
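A minimal NumPy sketch of this grid-based correction follows. It is an illustrative stand-in for the OpenGL mesh warp, not the authors’ shader code: it assumes evenly spaced intersections whose outer rows and columns lie on the image border, bilinearly interpolates the measured offsets to every pixel, and uses backward mapping (each corrected pixel p is sampled from the captured image at p plus the offset). When the offset field varies slowly, this undoes the distortion to first order, like drawing a mesh whose vertices are the grid coordinates minus the offsets. All function names are hypothetical.

```python
import numpy as np


def offsets_from_points(measured, ideal):
    """Offsets (dx, dy) of captured intersections from the uniform grid.

    measured, ideal: (rows, cols, 2) arrays of (x, y) pixel positions.
    """
    return np.asarray(measured, float) - np.asarray(ideal, float)


def dense_offsets(grid_offsets, height, width):
    """Bilinearly upsample a coarse (rows, cols, 2) offset grid to every pixel.

    Needs at least a 2 x 2 grid whose outer intersections sit on the border.
    """
    g = np.asarray(grid_offsets, float)
    rows, cols, _ = g.shape
    ys = np.linspace(0, rows - 1, height)  # fractional grid row of each pixel row
    xs = np.linspace(0, cols - 1, width)   # fractional grid column of each pixel column
    y0 = np.clip(np.floor(ys).astype(int), 0, rows - 2)
    x0 = np.clip(np.floor(xs).astype(int), 0, cols - 2)
    fy = (ys - y0)[:, None, None]
    fx = (xs - x0)[None, :, None]
    top = g[y0][:, x0] * (1 - fx) + g[y0][:, x0 + 1] * fx
    bottom = g[y0 + 1][:, x0] * (1 - fx) + g[y0 + 1][:, x0 + 1] * fx
    return top * (1 - fy) + bottom * fy


def undistort(image, grid_offsets):
    """Backward-map each corrected pixel p to captured pixel p + offset(p).

    Nearest-neighbour sampling; source coordinates outside the frame are clamped.
    """
    h, w = image.shape[:2]
    off = dense_offsets(grid_offsets, h, w)
    yy, xx = np.mgrid[0:h, 0:w]
    src_x = np.clip(np.rint(xx + off[..., 0]).astype(int), 0, w - 1)
    src_y = np.clip(np.rint(yy + off[..., 1]).astype(int), 0, h - 1)
    return image[src_y, src_x]
```

A GPU implementation would do the same lookup per fragment with bilinear texture filtering instead of the nearest-neighbour sampling used here for brevity.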

Selection of Displayed Image Portion

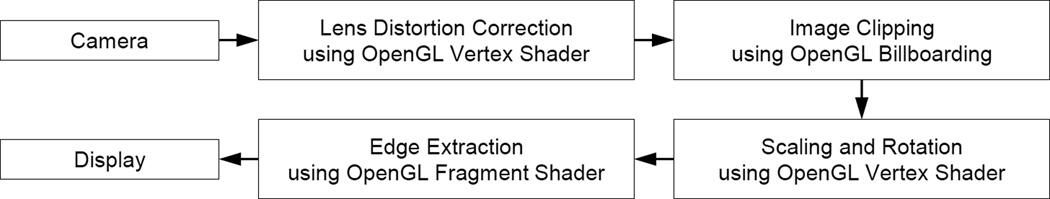

Once the captured image is corrected for camera distortion, it is projected onto the display plane. Since the virtual display plane is tilted upward 10° relative to the camera aiming direction (see Fig. 1B), the image is projected onto a rotated (10° downward) display plane to compensate for the angular difference between the camera aiming direction and the virtual display orientation. An additional 2D translation must be applied to align the magnified image with the see-through view (see Fig. 1A). The displacement between the camera and virtual display axes produces a parallax, so the magnitude of misalignment between the captured image and the view through the virtual display varies with the distance to the aimed object. Since near objects (less than 10ft away) are usually easier for AMD patients to recognize, we set the default parallax correction for 10ft. The user may change the parallax correction for closer, default, or farther distances by swiping the Glass touchpad forward or backward, where forward swiping intuitively corrects for farther distances. The disparity caused by parallax decreases as the distance to the object increases; e.g., for objects at 1ft, 3ft, 10ft, and 30ft, the disparity corrections needed are 3.0°, 1.0°, 0.3°, and 0.1°, respectively. Figure 2 shows the overall image processing flow implemented with OpenGL and shaders,20 where the displayed portion of the image is defined following the lens distortion correction, and edge extraction is applied only to the smaller displayed portion of the image.
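The parallax figures quoted above follow from simple trigonometry on the 16mm camera-display separation. The sketch below assumes parallel camera and display axes and distances measured from the camera, and also computes the residual misalignment left when the correction is fixed at the 10ft default; the helper names are hypothetical.

```python
import math

BASELINE_MM = 16.0   # horizontal camera-display separation (from the text)
MM_PER_FT = 304.8


def parallax_deg(distance_ft: float) -> float:
    """Angular disparity between camera and display for an object at distance_ft.

    Assumes parallel camera and display axes, distance measured from the camera.
    """
    return math.degrees(math.atan(BASELINE_MM / (distance_ft * MM_PER_FT)))


def residual_deg(distance_ft: float, corrected_for_ft: float = 10.0) -> float:
    """Misalignment remaining when the shift is set for corrected_for_ft."""
    return abs(parallax_deg(distance_ft) - parallax_deg(corrected_for_ft))


def to_pixels(angle_deg: float, px_per_deg: float) -> float:
    """Convert an angle to pixels for a given angular resolution."""
    return angle_deg * px_per_deg


for d in (1, 3, 10, 30):
    print(f"{d:>2} ft: parallax {parallax_deg(d):.1f} deg, "
          f"residual with 10 ft setting {residual_deg(d):.2f} deg")
```

Because the parallax angle shrinks toward zero with distance, the residual for anything beyond the 10ft setting can never exceed the 10ft parallax itself (about 0.3°). Multiplying by 640 / 13 converts such angles into virtual display pixels.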

Figure 2.

Block diagram of the image processing flow. Lens distortion correction is applied first, followed by image clipping, which is selected based on the disparity setting. Additional scaling and rotation are applied to compensate for the vertical angular difference between the camera and the display. Edge extraction and enhancement are applied only to the clipped image area (defined by a series of vertex operations).
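The 10° compensation between camera aiming and display orientation can be expressed as a rotation-only homography, H = K R K⁻¹, where K holds the camera intrinsics and R is the pitch rotation. This is a sketch of one standard way to do it, not necessarily the authors’ vertex pipeline; it assumes an ideal pinhole camera after distortion correction (focal length derived from the 75.7° horizontal field of the 1920-pixel video preview), rotation about the camera’s optical center, and image y pointing down. The names are hypothetical.

```python
import numpy as np


def intrinsics(width: int, height: int, hfov_deg: float) -> np.ndarray:
    """Pinhole intrinsics with the principal point at the image centre."""
    f = (width / 2) / np.tan(np.radians(hfov_deg) / 2)
    return np.array([[f, 0.0, width / 2], [0.0, f, height / 2], [0.0, 0.0, 1.0]])


def pitch_homography(K: np.ndarray, pitch_up_deg: float) -> np.ndarray:
    """Homography from camera pixels to a virtual view pitched up by pitch_up_deg.

    Camera frame: x right, y down, z forward. The rows of R are the virtual
    camera's axes expressed in the real camera's frame.
    """
    t = np.radians(pitch_up_deg)
    c, s = np.cos(t), np.sin(t)
    R = np.array([[1.0, 0.0, 0.0], [0.0, c, s], [0.0, -s, c]])
    return K @ R @ np.linalg.inv(K)


def apply_h(H: np.ndarray, x: float, y: float) -> tuple[float, float]:
    """Map one pixel through H and dehomogenise."""
    u, v, w = H @ np.array([x, y, 1.0])
    return u / w, v / w


K = intrinsics(1920, 1080, 75.7)
H = pitch_homography(K, 10.0)
# The camera pixel 10 degrees above the optical axis lands near the view centre.
y_up = 540 - K[1, 1] * np.tan(np.radians(10.0))
print(apply_h(H, 960.0, y_up))
```

In a GPU pipeline the same matrix would typically be folded into the vertex transform, and the parallax shift from the previous step would then be added as a horizontal translation before clipping the 330 × 186 region.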

Edge Enhancement Method

Two types of edge enhancement methods have been implemented so far: positive and negative Laplacian filters.21 The positive Laplacian filter computes the Laplacian (second spatial derivative) of pixel brightness, determines edge locations using a threshold, and suppresses below-threshold responses. The result on a see-through display is enhanced bright edges with clear surroundings (Fig. 3A). The negative Laplacian filter gives the opposite effect: edges become transparent, but the surroundings of the edges are highlighted (Fig. 3B).
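The thresholded Laplacian idea can be sketched in NumPy as below. This is an assumption-laden illustration rather than the app’s shader: it uses a 4-neighbour Laplacian and its magnitude, and for the negative mode it adopts our own hypothetical reading of “surroundings are highlighted” (pixels within one pixel of an edge, but not on it, are lit). A value of 1 marks a bright overlay pixel and 0 leaves the display see-through.

```python
import numpy as np


def laplacian(gray: np.ndarray) -> np.ndarray:
    """4-neighbour discrete Laplacian with replicated borders."""
    p = np.pad(gray.astype(float), 1, mode="edge")
    return (p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:]
            - 4.0 * p[1:-1, 1:-1])


def dilate3x3(mask: np.ndarray) -> np.ndarray:
    """Boolean 3x3 dilation: a pixel is set if any neighbour is set."""
    p = np.pad(mask, 1, mode="constant", constant_values=False)
    h, w = mask.shape
    out = np.zeros_like(mask)
    for dy in range(3):
        for dx in range(3):
            out |= p[dy:dy + h, dx:dx + w]
    return out


def edge_overlay(gray: np.ndarray, threshold: float, mode: str = "positive") -> np.ndarray:
    """Overlay for a see-through display: 1.0 = bright pixel, 0.0 = transparent."""
    edges = np.abs(laplacian(gray)) >= threshold  # suppress sub-threshold responses
    if mode == "positive":
        return edges.astype(float)  # edges bright, surroundings clear
    if mode == "negative":
        # Hypothetical reading: light the pixels bordering edges, leave edges clear.
        return (dilate3x3(edges) & ~edges).astype(float)
    raise ValueError(f"unknown mode: {mode!r}")
```

On a vertical step from dark to bright, the positive mode lights the two columns that straddle the step, while this negative variant lights the columns on either side of them and leaves the step itself transparent.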

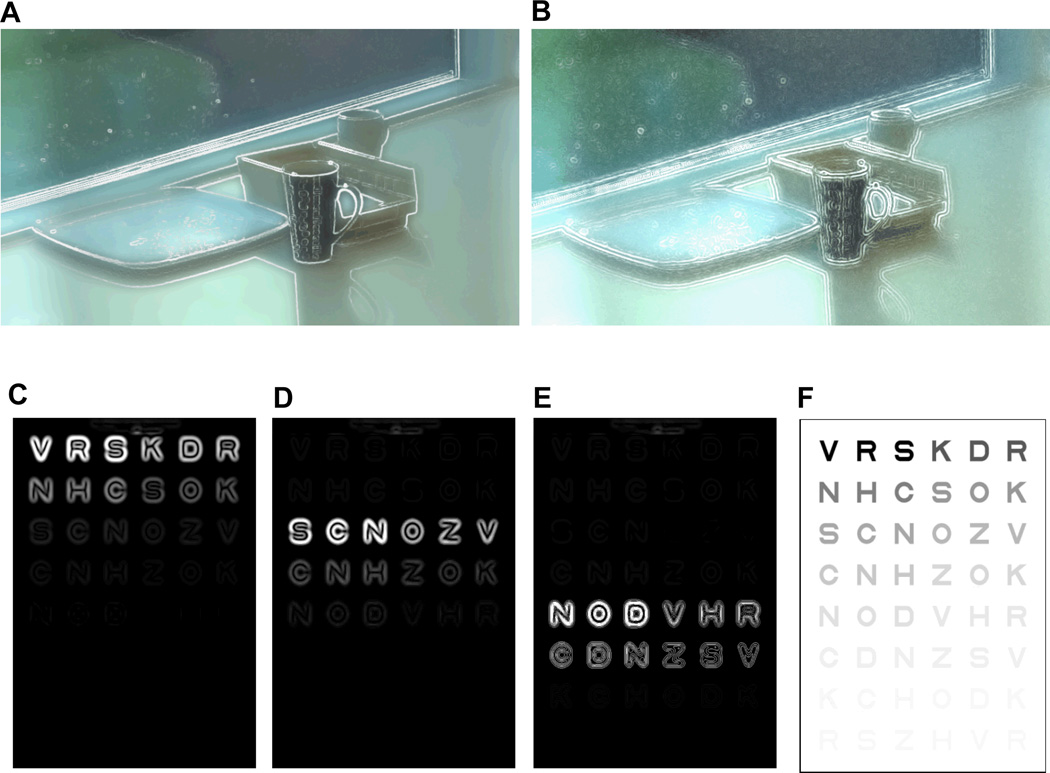

Figure 3.

Illustrations of an edge-enhanced scene with (A) a positive Laplacian edge detection method and (B) a negative Laplacian edge detection method. Note that with the positive Laplacian method, the location of the edge is whitened, but with the negative Laplacian method, the surroundings of edges are whitened. (C–E) Illustrations of the effect of selective (positive) contrast enhancement of a Pelli-Robson virtual contrast sensitivity chart (F), calculated with high, medium, and low level contrast ranges, respectively. Note that only the edge information is shown in (C–E), while the overlaid view of the edges and the see-through view is shown in (A) and (B). Different scaling levels applied to the images in the top and bottom rows cause the apparent difference in thickness of the enhanced edges. A color version of this figure is available online at www.optvissci.com.

Users can choose to enhance one of three contrast-dependent edge levels. Figure 3C–E illustrates the effects of selecting the edge contrast level when enhancing the view of a Pelli-Robson contrast sensitivity chart22 (Fig. 3F). At the high threshold setting, only edges with high contrast are enhanced (Fig. 3C); at the medium setting, only medium-contrast edges are enhanced while high contrast edges are not (Fig. 3D); and at the low setting, only weak edges are enhanced (Fig. 3E). This adjustable enhancement (bi-level thresholding) is desirable because individual AMD patients have varying levels of contrast sensitivity, and most of them may be able to see strong edges even without enhancement. Enhancing already visible edges may not be helpful or desired. It has also been demonstrated that for some object recognition tasks, like face and character recognition, selectively enhancing the contrast of the critical frequency band for a specific contrast range results in better preference or performance.12, 15, 23 Therefore, the user should be able to choose the range of edge contrast for enhancement that fits his/her needs. This approach of selective contrast-level enhancement was described and proposed previously,10, 13, 14, 24, 25 but, to our knowledge, this is the first such implementation on a see-through HMD device.

If the system enhances edges with contrast lower than a single threshold, it typically also increases noise in the image. Therefore, the enhancement level should be defined by two contrast thresholds, upper and lower, where the upper threshold is matched with the user’s contrast sensitivity to increase the contrast of just invisible details, and the lower threshold limits enhancement of image noise (see Fig. 3C–E).
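The two-threshold (band) selection can be sketched as a mask over a per-pixel edge-contrast estimate. The threshold values below are placeholders, since the paper does not report the values it used, and the contrast estimate itself (any map scaled to 0–1) is assumed to be computed elsewhere.

```python
import numpy as np

# Placeholder (lower, upper) bands; the paper does not report its values.
# The upper bound is exclusive; the "high" band has no upper limit.
BANDS = {
    "high": (0.30, np.inf),
    "medium": (0.10, 0.30),
    "low": (0.03, 0.10),
}


def band_mask(edge_contrast: np.ndarray, setting: str) -> np.ndarray:
    """True where an edge's contrast falls inside the selected band.

    The lower threshold rejects noise-level responses; the upper threshold
    skips edges assumed to be visible already without enhancement.
    """
    lower, upper = BANDS[setting]
    return (edge_contrast >= lower) & (edge_contrast < upper)


contrast = np.array([0.02, 0.05, 0.2, 0.5])  # hypothetical per-pixel edge contrast
for name in ("low", "medium", "high"):
    print(name, band_mask(contrast, name))
```

Making the bands adjacent and non-overlapping means each edge is enhanced under at most one setting, which mirrors the description of Fig. 3C–E.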

Contrast Sensitivity Measurement

We conducted a preliminary study to examine the effectiveness of edge enhancement. Three normal vision (NV) subjects (aged 25–45, VA of 20/30 or better) participated and had their contrast sensitivity measured using a Pelli-Robson contrast sensitivity chart22 at 1m, under recommended lighting conditions (the chart was illuminated as uniformly as possible without any glare, and white areas were about 85 cd/m²). NV subjects were used because the effect of edge enhancement depends more on the Glass’ hardware performance (camera and display) than on the individual’s contrast sensitivity threshold. With the maximum enhancement setting, which enhances the full range of edge contrasts, the edges drawn on the virtual display had higher contrast than the highest contrast character on the contrast sensitivity chart (by more than a factor of two, in terms of the pixel values of the chart’s white background and the white AR edges in Fig. 4).

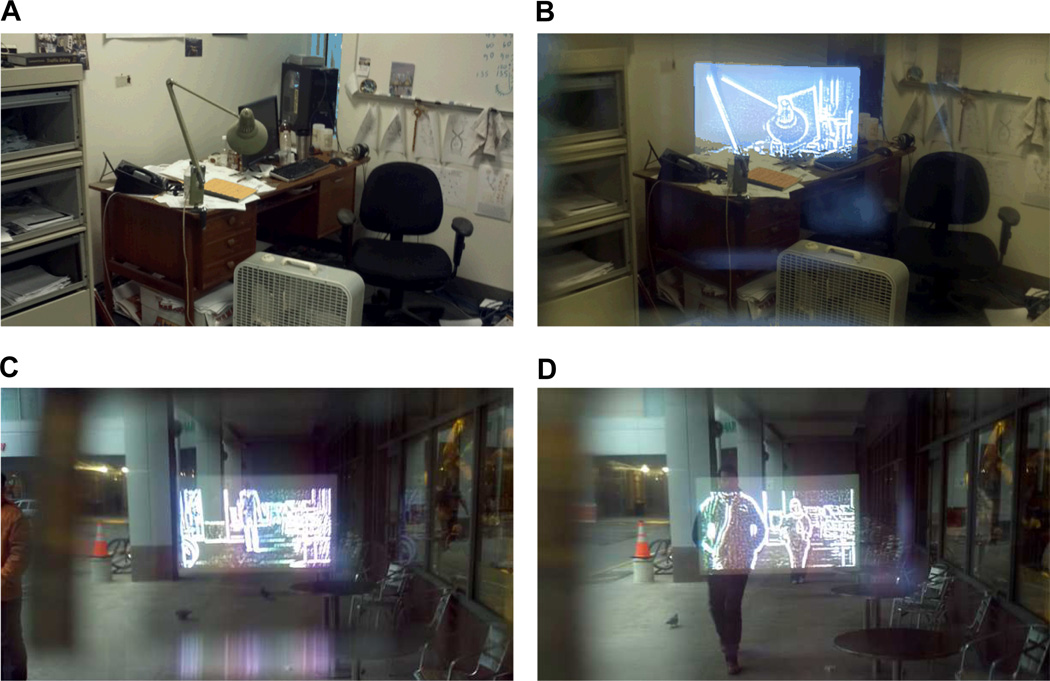

Figure 4.

Alignment of enhanced edges at different distances, photographed through Google Glass. Indoor scenes (A) without AR edge enhancement and (B) with AR edge enhancement (the desk lamp is about 10ft away), showing slight misalignment. Outdoor scenes on a cloudy Boston day (C) with a person standing 50ft away, and (D) with two people approaching from about 20ft and 40ft away. Note that the eye (in this case, a camera used to take the pictures) and the virtual display are not exactly aligned (as can be seen from the position of the virtual display within the frame, especially in (B)), but the AR alignment is robust enough to provide ‘on top’ edges. A color version of this figure is available online at www.optvissci.com.

For each subject, contrast threshold (the lowest visible contrast) was measured under 4 conditions: with and without a heavy diffuser filter (which effectively raises the subjects’ contrast detection threshold by a factor of 4), and with and without edge enhancement. The diffuser filter was inserted between the Glass’ display compartment and the subject’s eye in the Glass-enhanced condition and was held in front of the eye in the unenhanced condition.

RESULTS

Alignment of AR Edges with See-through View

Some spatial misalignment of the off-axis camera-display system is unavoidable. In our implementation, the misalignment is smaller than 0.3° for edges farther than 10ft away (less than 2.5 pixels offset in the original image). This misalignment may go unnoticed because the amount is comparable to the thickness of the enhanced edges. Fig. 4 demonstrates indoor and outdoor AR alignment with the maximum enhancement setting. Some misalignment is visible in Fig. 4B because the misalignment for edges located farther than 10ft is magnified on the virtual display by a factor of 1.94. Note that the misalignment is less visible in Fig. 4C and Fig. 4D, as the offset at farther distances is too small to be visible even with the magnification.

Effects of Edge Enhancement

With the diffuser filter in front of the subjects’ eye, contrast sensitivity improved substantially with AR edge enhancement, from 0.75 to 1.50 log units for all three subjects (Table 1). Without the diffuser, edge enhancement did not change the measured contrast sensitivity (1.50 log units in both conditions). This improvement is bound by the sensitivity of the camera and could not exceed a log contrast sensitivity of 1.50. Thus, the current system may improve letter contrast sensitivity only for patients whose letter contrast sensitivity is worse than 1.50.
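Because log contrast sensitivity is the negative base-10 logarithm of the threshold contrast, the values in Table 1 translate into threshold contrasts and improvement factors as sketched below; the dictionary keys and helper names are ours.

```python
# Log contrast sensitivity from Table 1 (the same for S1, S2 and S3).
TABLE_1 = {
    ("no diffuser", "enhanced"): 1.50,
    ("no diffuser", "unenhanced"): 1.50,
    ("diffuser", "enhanced"): 1.50,
    ("diffuser", "unenhanced"): 0.75,
}


def threshold_contrast(log_cs: float) -> float:
    """Threshold contrast (as a fraction) for a log contrast sensitivity value."""
    return 10 ** (-log_cs)


def gain_factor(log_cs_with: float, log_cs_without: float) -> float:
    """Factor by which the threshold contrast drops between two conditions."""
    return 10 ** (log_cs_with - log_cs_without)


for key, log_cs in TABLE_1.items():
    print(key, f"threshold {threshold_contrast(log_cs):.1%}")
print("diffuser gain", round(gain_factor(TABLE_1[("diffuser", "enhanced")],
                                          TABLE_1[("diffuser", "unenhanced")]), 2))
```

A log contrast sensitivity of 1.50 corresponds to a threshold contrast of about 3.2%, and 0.75 to about 17.8%, so the 0.75 log unit gain with the diffuser is a factor of about 5.6 in threshold contrast. Pelli-Robson scores move in 0.15 log unit steps, so this is the nearest chart step above the nominal factor of 4 (about 0.60 log units) attributed to the diffuser.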

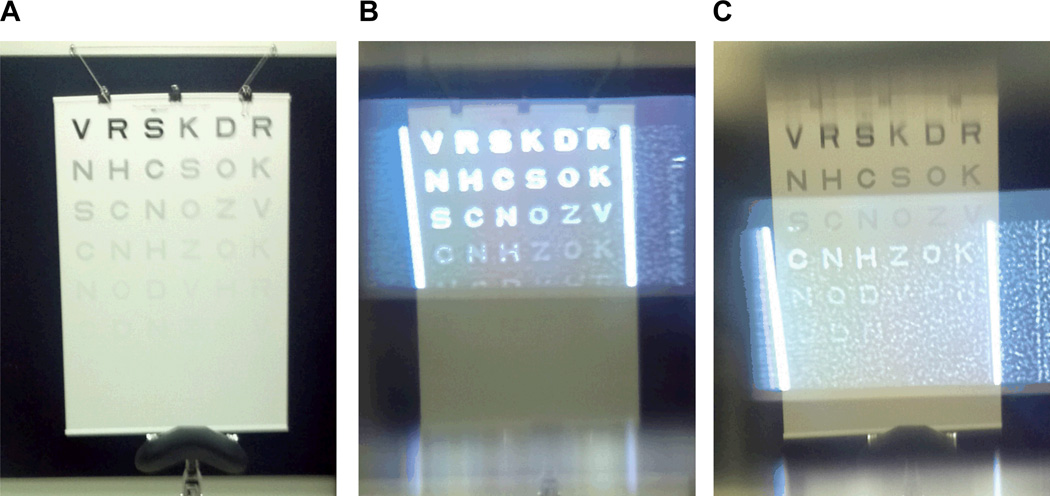

With the maximum edge enhancement setting, the effect of the noise becomes stronger when the scene has low contrast, because the signal-to-noise ratio is decreased. An example of the AR edge enhancement for the contrast sensitivity chart used is shown in Fig. 5.

Figure 5.

(A) Photo of the Pelli-Robson contrast sensitivity chart used. (B) The same chart photographed through the Glass. Strong edges are enhanced when high contrast edges are targeted. (C) When low contrast edges are targeted, camera and other system noise are also enhanced, but the structured edge formation can still be observed. Compare this to the ideal conditions calculated in Fig. 3C–E, which are free of camera noise. Note that the edge-free portion of the display is not completely dark due to leakage of the LCD backlight. A color version of this figure is available online at www.optvissci.com.

DISCUSSION

Utility of Enhancement over a Small Section of the Visual Field

The 13° × 7.3° span of the Glass virtual display may seem too small to provide practical benefits to AMD patients. However, considering that the typical distal field of view through a 3.0× bioptic telescope spans between 6° and 12° in diameter,26 yet delivers functional utility, we can expect at least a similar level of functionality in terms of visual field size. The foveal and parafoveal visual field (< 5° eccentricity) provides the most detailed visual information and is critical for object recognition.27, 28 For patients with central scotomas, the field within or just outside that range serves the same function.

The edge enhancement AR approach has some advantages over bioptic telescope magnification. Bioptic magnification creates a ring scotoma that obstructs instant integration of the central view with the wide peripheral view,29, 30 making image navigation difficult. For example, when a 3.0× bioptic telescope with a 12° field of view is used, a 36° circular area of the retina (shown as a dotted red circle in Fig. 6A) is filled with the 3.0× magnified view of the 12° real-world field. This “ring scotoma” makes it difficult for the user to see the surrounding environment located between 6° and 18° eccentricity when looking through the telescope. This is true even if the telescope is used monocularly. With the Glass AR implementation of contrast enhancement, simultaneous integration of central and peri-peripheral scene perception is possible, with no ring scotoma. This implementation affords the patient the benefit of natural head scanning processes, e.g., planning of scanning and continuous readjustment of the instantaneous aiming direction.
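The ring-scotoma geometry in this example reduces to two multiplications. The sketch assumes a telescope centered on the line of sight, with magnification applied uniformly across its field; the function name is hypothetical.

```python
def ring_scotoma(magnification: float, fov_deg: float) -> tuple[float, float]:
    """Eccentricity band (deg) of the real scene hidden around a telescope view.

    Assumes the telescope is centred on the line of sight: the magnified image
    fills a retinal disc of radius magnification * fov / 2, but shows real-world
    content only out to fov / 2.
    """
    inner = fov_deg / 2                  # edge of the real-world field shown
    outer = magnification * fov_deg / 2  # edge of the magnified retinal image
    return inner, outer


print(ring_scotoma(3.0, 12.0))  # (6.0, 18.0): scene hidden from 6 to 18 deg eccentricity
```

The hidden band grows with magnification, which is why the same 12° telescope field costs more of the surrounding scene at higher powers.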

Figure 6.

Illustration of the utility of the image enhancement app on the Glass for a patient with AMD. (A) A retinal image of a patient (presented upside down to correspond to the visual field orientation of the other figures) with a superimposed perimetry polar grid centered at the PRL. The location of the PRL, as measured by the Nidek MP-1, and the calculated bivariate ellipse including it are shown, together with a frame the size of the Glass display field (Virtual Display) centered on it. A dotted orange circle of 36° diameter centered at the PRL illustrates the retinal span of the 12° field of view of a 3.0× bioptic telescope, which provides a distal field of view similar to that of the Glass display. (B) A room scene taken with the Glass, illustrating a series of possible objects to be examined by the patient using the image-enhanced Glass. (C–F) Sequential scene scanning with edge enhancement at the selected gaze locations. Space-variant imaging (eccentricity-dependent blurring) and HDR conversion are applied to the scene image to illustrate the patient’s perceptual view. Note that, unlike with the bioptic telescope, all surrounding parts of the scene remain visible throughout the scanning process. In these images, the illustrated blur is applied over the enhancement to show the improved visibility provided by the enhanced edges. A color version of this figure is available online at www.optvissci.com.

Fig. 6 illustrates a hypothetical head scanning sequence of an AMD patient using a Google Glass edge enhancement application. In this case, the patient has a central scotoma of about 7° in diameter and fixates with a preferred retinal locus (PRL) below the scotoma (Fig. 6A), as measured by the Nidek MP-1 (Padova, Italy). Although high spatial frequency, low contrast features are not recognizable (as illustrated in the blurred images of Figs. 6C–F), the patient can still see the overall layout of the scene using his residual peri-peripheral vision. Therefore, the patient is able to identify the series of possible objects of interest and plan to examine those objects sequentially with the enhanced view using head scanning, as diagrammed in Fig. 6B.

Space-variant blurring31 was applied to the scene image (representing the 75.7° horizontal camera visual field) to illustrate the reduction in sensitivity with increasing retinal eccentricity. In addition, to illustrate the bright enhanced edges on the virtual display, the brightness of the see-through image was scaled down to fit into the bottom half of the pixel brightness range, while the onscreen edge brightness was fit into the upper half of the range. This produces the illusion of a high dynamic range (HDR)32 AR view, as experienced by the user.
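One plausible way to build the HDR-style illustration described above is to compress the scene into the lower half of an 8-bit range and map overlay edge pixels into the upper half. The exact mapping used for Fig. 6 is not given, so this integer-arithmetic sketch (function name hypothetical) is an assumption.

```python
import numpy as np


def hdr_illustration(scene: np.ndarray, edges: np.ndarray) -> np.ndarray:
    """Scene into 0-127, overlay edge pixels into 128-255 (uint8 in, uint8 out).

    scene, edges: uint8 arrays of the same shape; edges > 0 marks drawn pixels.
    """
    # Widen to uint16 so the multiplication cannot overflow before dividing.
    out = (scene.astype(np.uint16) * 127 // 255).astype(np.uint8)
    on = edges > 0  # pixels where the display draws an edge
    out[on] = (128 + edges[on].astype(np.uint16) * 127 // 255).astype(np.uint8)
    return out
```

Because the two ranges do not overlap, every drawn edge pixel ends up brighter than every scene pixel, which is the visual effect the illustration relies on.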

As scene scanning is executed, detailed observations, aided by the enhanced edge information, can be made at each gaze position (Fig. 6C–F), while the location of the next scanning target can be perceived with peripheral vision, and the gist of the scene is undisturbed. This is a clear benefit of the see-through HMD design that provides enhanced vision near the patient’s PRL while preserving peripheral vision.33

In the transition from Fig. 6D to Fig. 6E, the next scanning target may appear to be blocked in part by the physiological scotoma, but this location usually can be seen by the left eye, illustrating another advantage of this method that benefits from the binocular reduction of scotomas. Although we made an effort to generate perceptual views of a patient, Fig. 6 is still a conceptual illustration of the functionality, and does not represent a veridical simulation. Verification of such a simulation is complex and laborious33–36 and is beyond the scope of this paper.

The sequence of head scanning enables the patient to acquire “central” visual information, while utilizing near peripheral vision, in a process very similar to natural foveation during eye scanning. The natural aspect of this function makes it intuitive and easy to adopt. The same scanning can be applied with smaller head movements to examine details of an object that is slightly larger than the field of view of the display. The ability to fuse the two eyes’ peripheral vision37–39 contributes to the comfortable use of the display, and may enable more extended use than the short glimpses, which are typical of bioptic use.

The lack of magnification also eliminates the vestibular-visual motion signal conflicts, which may cause difficulties in adaptation to the bioptic telescope.40–42 The bioptic telescope depends on magnification, where high spatial frequency image content is converted to low spatial frequencies, whereas the edge enhancement we implemented for Glass enhances the contrast of edges over a wide range of spatial frequencies (wideband enhancement).11, 12

Limitations of Current Implementation

The illustrations of Fig. 6 also point to a known limitation of the current implementation of AR edge enhancement. With the optical see-through display, enhanced edges must be drawn as white/bright pixels, because dark/black edges in the see-through display become transparent. As a result, the current implementation of edge enhancement increases contrast well in dark areas of the scene (as shown in Fig. 6D and 6F), but does not provide the same level of contrast enhancement over bright areas (as shown in Fig. 6C and 6E). A possible solution would be to cover the front (and possibly the top and bottom) of the Glass display compartment with black masking tape, converting it into an opaque display. With this video see-through configuration, contrast enhancement can be achieved easily by displaying not just the edges but the full magnification-matched video image enhanced by bipolar (black and white) edges43, 44 and possibly additional contrast enhancement approaches.11, 45 Such functionality is possible thanks to the small frame-free format of the display and the ease with which it can be taken out of the line of sight when it is not needed. The use of video see-through will also assure perfect alignment of the edges with the natural (photographed) view. The parallax will cause a shift of the whole display image relative to its surroundings, but that shift will be small, and importantly it will not degrade image quality.

It also can be observed that strong edge enhancement may mask weak contrast, high frequency visual features if they are close to the strong edges. A more sophisticated algorithm that considers the proximity of local edges should be explored. The enhancement of only moderate contrast edges discussed above is also a possible solution to this limitation.

CONCLUSIONS

Increased edge contrast has been demonstrated to be preferred by patients with AMD and other low vision conditions when watching TV12, 16, 45 or viewing images.15, 17 Edge enhancement has also been demonstrated to improve performance in search tasks performed on a computer screen.15–17, 45 The utility of this approach for enhancing the natural environment has been suggested, but so far it has not been implemented due to technological limitations. Google Glass appears to overcome many of these limitations and thus may provide a valuable platform for implementing a variety of applications that can aid patients with various low vision conditions: 1) at a very reasonable cost, 2) in a cosmetically acceptable and socially desirable format, and 3) with flexibility that supports further innovation. At this stage, we think that a limitation of Google Glass as a visual aid is its relatively short battery runtime (about 40 min per full charge with our AR edge application). However, this problem can easily be overcome by adding an external power pack. Many applications may be developed on this platform to meet the various needs of low vision patients. We look forward to evaluating the utility of such applications with patients and improving their function.

Table 1.

Measured contrast thresholds, expressed as log contrast sensitivity (log CS).

| Subject | Without diffuser filter, with edge enhancement | Without diffuser filter, without edge enhancement | With diffuser filter, with edge enhancement | With diffuser filter, without edge enhancement |
|---|---|---|---|---|
| S1 | 1.50 | 1.50 | 1.50 | 0.75 |
| S2 | 1.50 | 1.50 | 1.50 | 0.75 |
| S3 | 1.50 | 1.50 | 1.50 | 0.75 |
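Because Table 1 reports log contrast sensitivity, the size of the diffuser-filter effect is easier to judge after converting back to a linear contrast threshold, threshold = 10^(−log CS). The short sketch below does that arithmetic for the two diffuser-filter columns; the function name is illustrative and not from the study.

```python
def threshold_from_log_cs(log_cs: float) -> float:
    """Linear contrast threshold corresponding to a log10 contrast sensitivity."""
    return 10 ** (-log_cs)


with_edges = threshold_from_log_cs(1.50)     # diffuser filter, with edge enhancement
without_edges = threshold_from_log_cs(0.75)  # diffuser filter, without edge enhancement

print(f"with edge enhancement:    {with_edges:.1%}")
print(f"without edge enhancement: {without_edges:.1%}")
print(f"threshold ratio:          {without_edges / with_edges:.1f}x")
```

With the diffuser filter, edge enhancement lowered the measured threshold from about 17.8% to about 3.2% contrast, a 0.75 log-unit (roughly 5.6-fold) improvement, identical for all three subjects.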

ACKNOWLEDGMENTS

Supported in part by NIH Grants R01EY012890, R01EY005957 and by a gift from Google Inc. EP has patent rights, through a patent assigned to the Schepens ERI, for the use of wideband enhancement for impaired vision in HMD. EP is also a consultant to Google Inc. on the Glass project.

REFERENCES

1. Tran TH, Rambaud C, Despretz P, Boucart M. Scene perception in age-related macular degeneration. Invest Ophthalmol Vis Sci. 2010;51:6868–6874. doi: 10.1167/iovs.10-5517.

2. Hassell JB, Lamoureux EL, Keeffe JE. Impact of age related macular degeneration on quality of life. Br J Ophthalmol. 2006;90:593–596. doi: 10.1136/bjo.2005.086595.

3. Cahill MT, Banks AD, Stinnett SS, Toth CA. Vision-related quality of life in patients with bilateral severe age-related macular degeneration. Ophthalmology. 2005;112:152–158. doi: 10.1016/j.ophtha.2004.06.036.

4. Stevenson MR, Hart PM, Montgomery AM, McCulloch DW, Chakravarthy U. Reduced vision in older adults with age related macular degeneration interferes with ability to care for self and impairs role as carer. Br J Ophthalmol. 2004;88:1125–1130. doi: 10.1136/bjo.2003.032383.

5. Lamoureux EL, Pallant JF, Pesudovs K, Tennant A, Rees G, O'Connor PM, Keeffe JE. Assessing participation in daily living and the effectiveness of rehabiliation in age related macular degeneration patients using the impact of vision impairment scale. Ophthalmic Epidemiol. 2008;15:105–113. doi: 10.1080/09286580701840354.

6. Bennion AE, Shaw RL, Gibson JM. What do we know about the experience of age related macular degeneration? A systematic review and meta-synthesis of qualitative research. Soc Sci Med. 2012;75:976–985. doi: 10.1016/j.socscimed.2012.04.023.

7. Peli E, Goldstein RB, Young GM, Trempe CL, Buzney SM. Image enhancement for the visually impaired. Simulations and experimental results. Invest Ophthalmol Vis Sci. 1991;32:2337–2350.

8. Peli E, Lee E, Trempe CL, Buzney S. Image enhancement for the visually impaired: the effects of enhancement on face recognition. J Opt Soc Am (A) 1994;11:1929–1939. doi: 10.1364/josaa.11.001929.

9. Massof RW, Baker FH, Dagnelie G, DeRose JL, Alibhai S, Deremeik JT, Ewart C. Low Vision Enhancement System: improvements in acuity and contrast sensitivity. Optom Vis Sci. 1995;72(suppl.):20.

10. Peli E, Luo G, Bowers A, Rensing N. Applications of augmented vision head-mounted systems in vision rehabilitation. J Soc Inf Disp. 2007;15:1037–1045. doi: 10.1889/1.2825088.

11. Peli E. Wide-band image enhancement. US Patent No. 6,611,618 B1. Washington, DC: U.S. Patent and Trademark Office; 2003.

12. Peli E, Kim J, Yitzhaky Y, Goldstein RB, Woods RL. Wideband enhancement of television images for people with visual impairments. J Opt Soc Am (A) 2004;21:937–950. doi: 10.1364/josaa.21.000937.

13. Luo G, Peli E. Use of an augmented-vision device for visual search by patients with tunnel vision. Invest Ophthalmol Vis Sci. 2006;47:4152–4159. doi: 10.1167/iovs.05-1672.

14. Luo G, Peli E. Development and evaluation of vision rehabilitation devices. Conf Proc IEEE Eng Med Biol Soc. 2011;2011:5228–5231. doi: 10.1109/IEMBS.2011.6091293.

15. Satgunam P, Woods RL, Luo G, Bronstad PM, Reynolds Z, Ramachandra C, Mel BW, Peli E. Effects of contour enhancement on low-vision preference and visual search. Optom Vis Sci. 2012;89:E1364–E1373. doi: 10.1097/OPX.0b013e318266f92f.

16. Wolffsohn JS, Mukhopadhyay D, Rubinstein M. Image enhancement of real-time television to benefit the visually impaired. Am J Ophthalmol. 2007;144:436–440. doi: 10.1016/j.ajo.2007.05.031.

17. Kwon M, Ramachandra C, Satgunam P, Mel BW, Peli E, Tjan BS. Contour enhancement benefits older adults with simulated central field loss. Optom Vis Sci. 2012;89:1374–1384. doi: 10.1097/OPX.0b013e3182678e52.

18. Luo G, Rensing N, Weststrate E, Peli E. Registration of an on-axis see-through head-mounted display and camera system. Opt Eng. 2005;44:024002-1–024002-7.

19. Doherty AL, Bowers AR, Luo G, Peli E. Object detection in the ring scotoma of a monocular bioptic telescope. Arch Ophthalmol. 2011;129:611–617. doi: 10.1001/archophthalmol.2011.85.

20. Bax MR. Real-time lens distortion correction: 3D video graphics cards are good for more than games. Stanford ECJ. 2002;1:9–13.

21. Watt A, Policarpo F. The Computer Image. Essex, England: Addison Wesley Longman Ltd; 1998. pp. 248–249.

22. Solomon JA, Pelli DG. The visual filter mediating letter identification. Nature. 1994;369:395–397. doi: 10.1038/369395a0.

23. Peli E. Limitations of image enhancement for the visually impaired. Optom Vis Sci. 1992;69:15–24. doi: 10.1097/00006324-199201000-00003.

24. Peli E, Luo G, Bowers A, Rensing N. Development and evaluation of vision multiplexing devices for vision impairments. Int J Artif Intell Tools. 2009;18:365–378. doi: 10.1142/S0218213009000184.

25. Pelli D, Robson J, Wilkins A. The design of a new letter chart for measuring contrast sensitivity. Clin Vis Sci. 1988;2:187–199.

26. Nguyen A, Nguyen AT, Hemenger RP, Williams DR. Resolution, field of view, and retinal illuminance of miniaturized bioptic telescopes and their clinical significance. J Vis Rehab. 1993;7:5–9.

27. Strasburger H, Rentschler I, Juttner M. Peripheral vision and pattern recognition: a review. J Vis. 2011;11:13. doi: 10.1167/11.5.13.

28. Pomplun M, Reingold EM, Shen J. Investigating the visual span in comparative search: the effects of task difficulty and divided attention. Cognition. 2001;81:B57–B67. doi: 10.1016/s0010-0277(01)00123-8.

29. Doherty AL, Bowers AR, Luo G, Peli E. The effect of strabismus on object detection in the ring scotoma of a monocular bioptic telescope. Ophthalmic Physiol Opt. 2013;33:550–560. doi: 10.1111/opo.12067.

30. Peli E, Vargas-Martin F. In-the-spectacle-lens telescopic device. J Biomed Opt. 2008;13:034027. doi: 10.1117/1.2940360.

31. Geisler WS, Perry JS. Real-time simulation of arbitrary visual fields. ETRA’02 ACM. 2002:83–87.

32. Reinhard E, Heidrich W, Debevec P, Pattanaik S, Ward G, Myszkowski K. High Dynamic Range Imaging: Acquisition, Display, and Image-Based Lighting, 2nd ed. Burlington, MA: Morgan Kaufmann; 2010.

33. Peli E. Vision multiplexing: an engineering approach to vision rehabilitation device development. Optom Vis Sci. 2001;78:304–315. doi: 10.1097/00006324-200105000-00014.

34. Peli E, Arend L, Labianca AT. Contrast perception across changes in luminance and spatial frequency. J Opt Soc Am (A) 1996;13:1953–1959. doi: 10.1364/josaa.13.001953.

35. Peli E. Contrast sensitivity function and image discrimination. J Opt Soc Am (A) 2001;18:283–293. doi: 10.1364/josaa.18.000283.

36. Peli E, Geri GA. Discrimination of wide-field images as a test of a peripheral-vision model. J Opt Soc Am (A) 2001;18:294–301. doi: 10.1364/josaa.18.000294.

37. Kertesz AE. The effectiveness of wide-angle fusional stimulation in the treatment of convergence insufficiency. Invest Ophthalmol Vis Sci. 1982;22:690–693.

38. Kertesz AE, Hampton DR. Fusional response to extrafoveal stimulation. Invest Ophthalmol Vis Sci. 1981;21:600–605.

39. Kertesz AE, Lee HJ. The nature of sensory compensation during fusional response. Vision Res. 1988;28:313–322. doi: 10.1016/0042-6989(88)90159-9.

40. Demer JL, Porter FI, Goldberg J, Jenkins HA, Schmidt K. Adaptation to telescopic spectacles: vestibulo-ocular reflex plasticity. Invest Ophthalmol Vis Sci. 1989;30:159–170.

41. Chmielowski RJM, Chmielowski PFM. A decentric system for fitting telescopes in home-trial frames. In: Stuen C, Arditi A, Horowitz A, Lang MA, Rosenthal B, Seidman KR, editors. Vision Rehabilitation: Assessment, Intervention, and Outcomes. Lisse, Netherlands: Swets & Zeitlinger; 2000. pp. 191–194.

42. Peli E. The optical functional advantages of an intraocular low-vision telescope. Optom Vis Sci. 2002;79:225–233. doi: 10.1097/00006324-200204000-00009.

43. Peli E. Feature detection algorithm based on a visual system model. Proc IEEE. 2002;90:78–93.

44. Peli E. Recognition performance and perceived quality of video enhanced for the visually impaired. Ophthalmic Physiol Opt. 2005;25:543–555. doi: 10.1111/j.1475-1313.2005.00340.x.

45. Fullerton M, Peli E. Post transmission digital video enhancement for people with visual impairments. J Soc Inf Disp. 2006;14:15–24. doi: 10.1889/1.2166829.