Abstract

Background

Communication while traveling in an automobile is often very difficult for hearing aid users. This is because the automobile/road noise level is usually high, and listeners/drivers often do not have access to visual cues. Since the talker of interest usually is not located in front of the driver/listener, conventional directional processing that places the directivity beam toward the listener’s front may not be helpful, and in fact, could have a negative impact on speech recognition (when compared to omnidirectional processing). Recently, technologies have become available in commercial hearing aids that are designed to improve speech recognition and/or listening effort in noisy conditions where talkers are located behind or beside the listener. These technologies include (1) a directional microphone system that uses a backward-facing directivity pattern (Back-DIR processing), (2) a technology that transmits audio signals from the ear with the better signal-to-noise ratio (SNR) to the ear with the poorer SNR (Side-Transmission processing), and (3) a signal processing scheme that suppresses the noise at the ear with the poorer SNR (Side-Suppression processing).

Purpose

The purpose of the current study was to determine the effect of (1) conventional directional microphones and (2) newer signal processing schemes (Back-DIR, Side-Transmission, and Side-Suppression) on listeners’ speech recognition performance and preference for communication in a traveling automobile.

Research design

A single-blinded, repeated-measures design was used.

Study Sample

Twenty-five adults with bilateral symmetrical sensorineural hearing loss aged 44 through 84 years participated in the study.

Data Collection and Analysis

The automobile/road noise and sentences of the Connected Speech Test (CST) were recorded through hearing aids in a standard van moving at a speed of 70 miles/hour on a paved highway. The hearing aids were programmed to omnidirectional microphone, conventional adaptive directional microphone, and the three newer schemes. CST sentences were presented from the side and back of the hearing aids, which were placed on the ears of a manikin. The recorded stimuli were presented to listeners via earphones in a sound treated booth to assess speech recognition performance and preference with each programmed condition.

Results

Compared to omnidirectional microphones, conventional adaptive directional processing had a detrimental effect on speech recognition when speech was presented from the back or side of the listener. Back-DIR and Side-Transmission processing improved speech recognition performance (relative to both omnidirectional and adaptive directional processing) when speech was from the back and side, respectively. The performance with Side-Suppression processing was better than with adaptive directional processing when speech was from the side. The participants’ preferences for a given processing scheme were generally consistent with speech recognition results.

Conclusions

The finding that performance with adaptive directional processing was poorer than with omnidirectional microphones demonstrates the importance of selecting the correct microphone technology for different listening situations. The results also suggest the feasibility of using hearing aid technologies to provide a better listening experience for hearing aid users in automobiles.

Keywords: Hearing aid, directional microphone, wireless technology, automobile

INTRODUCTION

One of the most common complaints of hearing aid users is difficulty in understanding speech in background noise (Takahashi et al, 2007). Among those noisy listening situations encountered daily, traveling in an automobile is perhaps the most difficult (Jensen and Nielsen, 2005; Wagener et al, 2008; Wu and Bentler, in press). For example, in a study designed to investigate the auditory lifestyle of listeners with hearing impairment, Wu and Bentler (in press) asked 27 adults to carry noise dosimeters for one week to measure the sound/noise level of their daily environments. Participants also used paper-and-pencil journals to record the characteristics of their listening activities. The investigators found that the duration of listening activities involving automobile/traffic was approximately 1 hour per day. Among the 25 pre-defined categories of speech listening activity, car/traffic had the highest mean noise level, ranging from 61.2 to 78.7 dBA.

In addition to high-level noise, lack of visual cues contributes to listening difficulty in automobiles. Visual cues (lipreading) can substantially improve listeners’ speech recognition performance (Sumby and Pollack, 1954; Erber, 1969; Hawkins et al, 1988). However, when the listener is driving, visual cues are often unavailable because the talker is located either behind or beside him/her. Even for passengers who do not need to watch the road, visual cues are not always available. As a result, it is common for clinicians to hear complaints from patients regarding listening difficulty in traveling automobiles.

In order to improve speech understanding in noise, considerable research and development effort has been devoted to noise reduction technologies in both microphone-based techniques (e.g., directional microphones) and processing-based applications (e.g., single-microphone digital noise reduction algorithms). Although some of these technologies have the potential to enhance speech understanding in noise, they are unlikely to help drivers and front-seat passengers communicate in motor vehicles. For example, because listeners usually orient their heads toward the talker of interest, conventional fixed directional systems are designed to place the most sensitive directions (i.e., the beam or lobe of the directivity pattern) toward the listener’s front hemisphere. This is the same for adaptive directional systems that are designed to steer the least sensitive direction (i.e., the null) toward the noise sources located in the listener’s rear hemisphere (Kates, 2008). In the automobile, the talker will not be in front of the driver/front-seat passenger; consequently, conventional fixed and adaptive directional systems cannot generate better SNRs for drivers relative to omnidirectional microphones. Moreover, if the talker is in the back seat, conventional directional systems could decrease the SNR, thus having a detrimental effect on speech recognition. In this case, a listener attempting to understand speech coming from behind could experience a disadvantage of 4–5 dB, compared to an omnidirectional mode (Chalupper et al, 2011; Mueller et al, 2011).

In recent years, several hearing aid technologies have been developed to address the communication situations in which the talker of interest is not located in front of the listener. These technologies may have the potential to help drivers communicate better. For example, to enhance speech arriving from behind, a backward-facing directivity pattern, where the directivity beam aims toward the listener’s back and the null faces the front, could be used (Chalupper et al, 2011; Mueller et al, 2011; Kuk and Keenan, 2012). We refer to this type of directional processing scheme as Back-DIR processing. In practice, Back-DIR is often used with other processing schemes. For example, one commercial hearing aid (Chalupper et al, 2011; Mueller et al, 2011) is designed to use the omnidirectional pattern when the noise level is low. In situations where the overall SPL is higher than a certain level and the stimuli consist of speech and noise, the hearing aid will select the adaptive-directional pattern with beam aiming forward when speech is from in front of the listener, and select the “anti-cardioid” pattern, which is a backward-facing cardioid pattern, when speech is from the back.
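For readers less familiar with directivity patterns, the difference between a forward-facing and a backward-facing pattern can be sketched numerically. The sketch below is an illustration only: it assumes idealized, textbook free-field first-order cardioid shapes, not the measured patterns of any hearing aid, and the function names and the −60 dB floor are hypothetical choices.

```python
import math

def cardioid(azimuth_deg):
    """Idealized forward-facing cardioid: linear sensitivity, 1 at 0 deg, 0 at 180 deg."""
    return 0.5 * (1.0 + math.cos(math.radians(azimuth_deg)))

def anti_cardioid(azimuth_deg):
    """Idealized backward-facing cardioid: the cardioid rotated by 180 deg."""
    return 0.5 * (1.0 - math.cos(math.radians(azimuth_deg)))

def level_db(sensitivity, floor_db=-60.0):
    """Convert linear sensitivity to dB re: the on-axis level, clipped at an arbitrary floor."""
    if sensitivity <= 0.0:
        return floor_db
    return max(floor_db, 20.0 * math.log10(sensitivity))

# Compare the two patterns at the front, sides, and back of the listener.
for az in (0, 90, 180, 270):
    print(az, round(level_db(cardioid(az)), 1), round(level_db(anti_cardioid(az)), 1))
```

In this idealized picture, a talker at 180° falls in the null of the forward-facing pattern and on the beam of the backward-facing one, which is the situation Back-DIR processing is meant to exploit.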

When listening to speech from the side in a diffuse noise field, a different strategy may be required. In this asymmetric listening configuration, the SNR at each ear will be different. For the ear that is nearest to the speech, an omnidirectional pattern could be ideal for maximizing the SNR because this pattern creates a beam facing toward the listener’s side when the hearing aid is worn on the ear. However, for the ear opposite the speech signal, no directivity pattern could effectively improve the SNR because the speech signals are attenuated by the listener’s head.

Currently, two types of signal processing schemes are available in commercial hearing aids to address this asymmetric listening configuration. In the first scheme, wireless technology is used to transmit and share the information between the two aids. When the SNRs at each ear are determined to be significantly different, the algorithms (1) adapt the appropriate directivity pattern in the hearing aid of the ear with the higher (better) SNR to optimize the signal and (2) use the wireless technology to transmit the actual audio signals from that hearing aid to the one with the lower (poorer) SNR (Nyffeler, 2010; Stuermann, 2011). As a result, the SNR at the ear opposite the speech could be improved. We refer to this type of scheme as Side-Transmission processing.

In the second scheme, the speech and noise level on either side of the head is monitored by the wireless exchange of information between the aids. If the SNRs at the two ears are significantly different, a series of adjustments is made to maximize the audibility of the speech in the ear with the more favorable (higher) SNR, including selecting the appropriate directivity pattern. For the ear with the less favorable (lower) SNR, the gain is reduced and the noise reduction processing is fully activated (Schum, 2010). Although this processing scheme may not increase the SNR at the ear opposite the speech, and may not improve speech recognition, it may reduce the noise interference and allow the listener to focus more on the speech in the ear with the better SNR, likely resulting in decreased listening effort. We refer to this scheme as Side-Suppression processing in this article.

The effects of these signal processing schemes have been evaluated in laboratory environments. For example, Mueller et al. (2011) tested 21 adults with hearing impairment in a speech 180°/noise 0° configuration. The Back-DIR processing provided a benefit of 4.3 dB and 9.9 dB when compared to omnidirectional and conventional adaptive directional processing, respectively. Using a more diffuse noise sound field (speech 180°/noise 0°, 90°, 270°), Kuk et al. (2012) observed a Back-DIR benefit of 7 dB and 8 dB in relation to omnidirectional and conventional fixed directional processing, respectively. Nyffeler and Dechant (2009) compared the manual version of Side-Transmission processing and conventional adaptive directional processing in a diffuse noise field for 28 adults. When speech was from the side, Side-Transmission processing provided significant benefit from 1.5 to 4.3 dB. Sockalingam and Holmberg (2010) evaluated the effect of Side-Suppression processing using a two-alternative forced-choice method to assess ten listeners’ preference. The speech was presented at 62 dB SPL from 50° azimuth and noise (white or pulsating noise) was presented at 64 dB SPL from −50° azimuth in a sound treated booth. Participants overwhelmingly reported (86% of the time) that they preferred the condition with Side-Suppression processing enabled.

Although these laboratory data may support the efficacy of these schemes, they may not generalize to the real-world automobile listening environment. Specifically, the key to the success of these schemes is whether the algorithm can accurately estimate the location of the speech/noise source and the SNR, and then respond accordingly. Although the technologies seem to work well in the laboratory, the acoustic characteristics of a moving automobile differ substantially from those used in the previous studies. Noise encountered in motor vehicles includes sound generated by the automobile itself, other traffic, wind, tires against the pavement, reflected sound, and frictional noise (Olson, 1972). Moreover, the reverberation characteristics of a traveling automobile are greater than the conditions used in previous laboratory research. Therefore, it is unknown whether the hearing aid could correctly identify the location of the talker, estimate the SNR at each ear, and appropriately enable/disable necessary processing schemes in the complicated sound environment of a moving automobile. It is also unknown how much real-world benefit the new schemes could provide in automobiles because simplified laboratory testing environments that use discrete noise sources frequently overestimate the benefit of hearing technology (e.g., Ricketts, 2000).

The purpose of the current study, therefore, was to determine the effect of (1) conventional adaptive directional microphones and (2) newer processing schemes (Back-DIR, Side-Transmission, and Side-Suppression) on listeners’ speech recognition performance and preference in automobiles.

METHODS

Participants

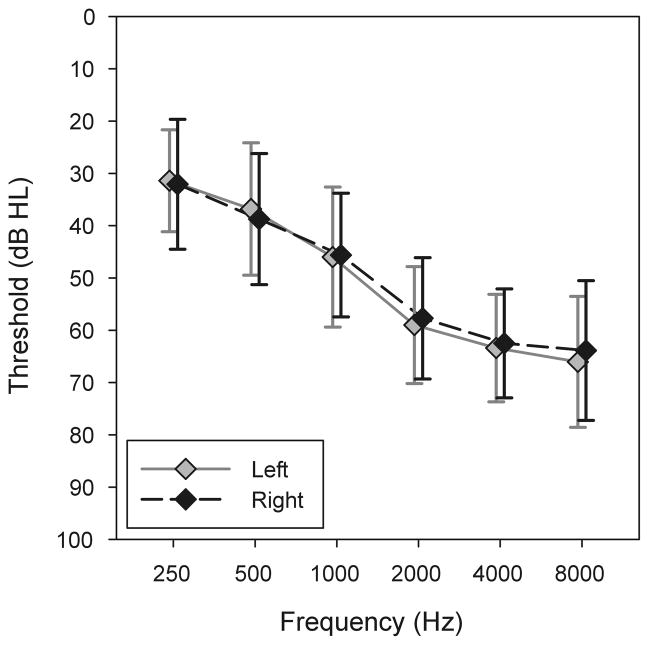

We recruited twenty-five native English-speaking adults (10 males; 15 females) for this study. Participants were eligible for inclusion in this study if their hearing loss met the following criteria: (1) postlingual bilateral downward-sloping sensorineural hearing loss (air-bone gap < 10 dB); (2) hearing thresholds no better than 20 dB HL at 500 Hz, and no worse than 75 dB HL at 3 kHz (re: ANSI, 2004); and (3) hearing symmetry within 15 dB for all test frequencies. This range of hearing loss was chosen to ensure that amplification provided benefit to listeners. Participants’ ages ranged from 44 to 84 years with a mean of 70.5 years (SD = 9.1). The mean pure tone thresholds are shown in Figure 1. Of the 25 participants, 23 were experienced hearing aid users. Participants were considered experienced users if they had at least six months of regular hearing aid use, for no less than 6 hours per day, for the past 12 months. The processing scheme/gain/output of the user’s previous hearing aids was not tested or considered a factor in this investigation.

Figure 1.

Mean hearing thresholds for participants. Error bars indicate one standard deviation.

Hearing aids

Because no commercial hearing aid can conduct all three newer processing schemes, three hearing aid models from different manufacturers were used, denoted as HA1, HA2, and HA3. HA1 was equipped with the Back-DIR technology; HA2 had the capability of conducting Side-Transmission and Back-DIR processing; and HA3 had the Side-Suppression processing scheme. All three models were mini behind-the-ear hearing aids with slim tubes and dome tips. This style of hearing aid was chosen because of its frequent use by individuals with mild-to-moderate sloping hearing loss. In order to record stimuli (see below), the hearing aids were programmed to fit a bilateral, symmetrical sloping hearing loss (thresholds 25, 30, 35, 45, 65, and 65 dB HL for octave frequencies from 250 Hz to 8000 Hz). Three test conditions were saved into different programs in each hearing aid model. In the first program the hearing aid microphone was set to the omnidirectional mode (referred to as the OMNI program). The conventional adaptive directional processing, which restricted the directivity null toward the listener’s rear hemisphere, was used in the second program (the DIR program). The new processing scheme from each manufacturer under test was enabled in the third program (the NewTech program): HA1: Back-DIR; HA2: Back-DIR and Side-Transmission; HA3: Side-Suppression. Note that in the NewTech program, the new processing scheme was not a fixed feature; the scheme was activated adaptively only in certain acoustic environments. For example, HA1 will activate the Back-DIR processing scheme when speech is from the back, while selecting the conventional, forward-facing directional processing scheme when speech is from the front. HA2 will conduct Back-DIR and Side-Transmission processing when speech is from the back and side, respectively, in the NewTech program.

The hearing aids were coupled to the ears of a manikin (Knowles Electronics Manikin for Acoustic Research; KEMAR) using closed domes. Closed domes were chosen to ensure that adequate gain could be reached for the low frequencies for the given hearing loss. In situ responses were measured using a probe-microphone hearing aid analyzer (Audioscan Verifit) with a 65 dB SPL speech signal (the “carrot passage”) presented from the manikin’s 0° azimuth. The gains of each program were adjusted to produce real-ear aided responses equivalent (±3 dB) to those prescribed by the NAL-NL1 (National Acoustic Laboratories, Non-Linear, version 1; Dillon 1999) for the sloping hearing loss used to program the hearing aids. For all models, the compression processing, digital noise reduction algorithms, and the maximum output limits were set to default by the fitting software without further modification. The feedback suppression system was activated only when feedback was observed (one model). The frequency lowering feature was disabled on the one model that employed it.

To verify the effect of the new processing schemes, the hearing aids were set to the NewTech program and the directivity patterns were measured in an anechoic chamber. The hearing aids were placed on the ears of KEMAR, which was suspended in the middle of the anechoic chamber. Speech and noise were presented at 70 and 68 dBA, respectively, from two loudspeakers. Three configurations were created: speech/noise at 0°/180°, 270°/90°, and 180°/0°. A third loudspeaker, which moved horizontally around KEMAR, presented a transient signal to the hearing aid from different azimuths. The hearing aid output was recorded. The aid’s response to the transient signal was extracted using a phase-inversion technique (Hagerman and Olofsson, 2004) and was then used to derive the directivity pattern (see Wu and Bentler, 2011, for the details of the methodology and equipment). The directivity patterns were measured from the two ears simultaneously.
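The extraction step can be illustrated with a minimal sketch of the phase-inversion idea. It assumes two otherwise identical recordings in which only the polarity of the transient probe is inverted, and that the hearing aid processes both presentations in the same way. The function and variable names are hypothetical and the sample values are synthetic; this is not the analysis code used in the study.

```python
def separate_by_phase_inversion(rec_normal, rec_inverted):
    """Split two recordings into probe and background estimates.

    rec_normal   = background + probe
    rec_inverted = background - probe   (probe polarity flipped)
    """
    if len(rec_normal) != len(rec_inverted):
        raise ValueError("recordings must have the same length")
    # Subtracting cancels the background; adding cancels the probe.
    probe = [(a - b) / 2.0 for a, b in zip(rec_normal, rec_inverted)]
    background = [(a + b) / 2.0 for a, b in zip(rec_normal, rec_inverted)]
    return probe, background

# Synthetic example: a short click embedded in a slowly varying background.
true_background = [0.5, 0.25, -0.25, -0.5, 0.0]
true_probe = [0.0, 1.0, -0.5, 0.0, 0.0]
rec_a = [b + p for b, p in zip(true_background, true_probe)]
rec_b = [b - p for b, p in zip(true_background, true_probe)]

est_probe, est_background = separate_by_phase_inversion(rec_a, rec_b)
```

Repeating the extraction for each azimuth of the moving loudspeaker would give the level of the probe response as a function of angle, from which a directivity pattern could be plotted.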

Results are shown in Figure 2. The directivity patterns of the OMNI program are also shown on the top of the figure as a reference. For HA1, the cardioid and “anti-cardioid” patterns were observed in the speech 0°/noise 180° and speech 180°/noise 0° configurations, respectively. For HA2, the directivity patterns of the two hearing aids were identical in the speech 270°/noise 90° configuration, indicating that the audio signals were transmitted from left aid to the right aid (note that the omnidirectional pattern was chosen by the algorithms to maximize the SNR at the left ear). The anti-cardioid pattern was observed in the speech 180°/noise 0° configuration. For HA3 in the speech 270°/noise 90° configuration, although the directivity of the right aid at mid-to-low frequencies (e.g., 1 kHz) stayed in the omnidirectional pattern, the patterns at higher frequencies (e.g., 2 and 4 kHz) switched to a dipole-like pattern and reduced the noise from the right side by approximately 8 dB. Note that in the speech 180°/noise 0° configuration, the omnidirectional pattern was observed. This result was reasonable because HA3 did not have Back-DIR processing and the omnidirectional pattern was likely the best pattern to increase the level of speech from behind. In general, the results from all hearing aid models in the NewTech program were consistent with what was expected.

Figure 2.

Bilateral planar directivity patterns of hearing aids set to the OMNI program (top of the figure) and the NewTech program (first row to third row). The speech and noise presentation angles are shown at the left side of the figure. The data shown in all plots of a given hearing aid are referenced to the on-axis level (0°) in the Speech 0°/Noise 180° condition of that hearing aid (first row).

The hearing aids were also set to the DIR program and the directivity patterns were measured in the speech 0°/noise 180° configuration. The directivity patterns were similar to those shown in the first row of Figure 2. All patterns were fairly close across the three hearing aids, except that HA3 tended to select the omnidirectional pattern at mid-to-low frequencies.

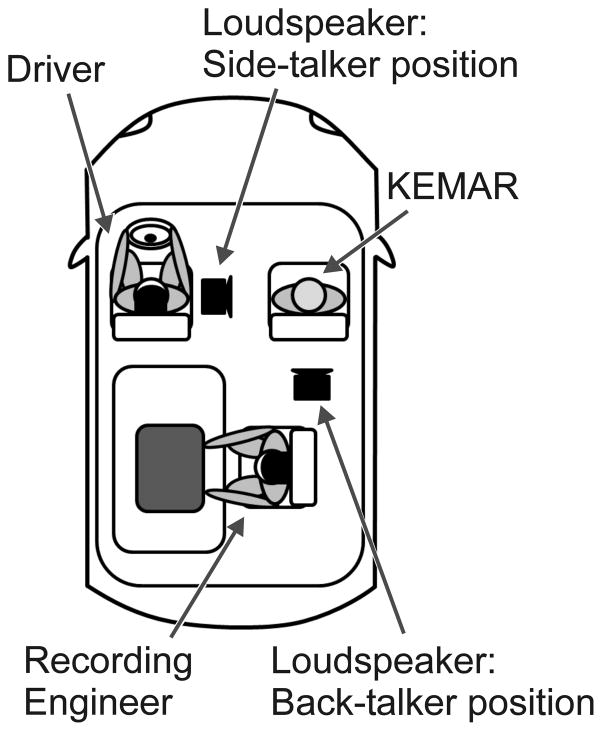

Stimuli recording

The automobile/road noise, together with the Connected Speech Test (CST; Cox et al, 1987; Cox et al, 1988) sentences, was recorded bilaterally from hearing aids coupled to KEMAR’s ears, while KEMAR was positioned on the passenger side of a 2009 Ford 150E van (see Figure 3). This customized van was chosen because it had enough voltage sources for all equipment and had enough space to accommodate the loudspeaker at different locations. KEMAR’s head was aligned with the headrest of the passenger seat. The loudspeaker that presented speech stimuli was placed either at 270° or 180° azimuth relative to the manikin. The distance between the center of the manikin’s head and the loudspeaker was 50 cm, the maximal KEMAR-loudspeaker distance allowed by the van. The CST was chosen because it was designed to approximate everyday conversations.

Figure 3.

Illustration of equipment setup in the van, top view.

The recordings were made on Iowa Highway I-80 between Exits 249 and 284. The van’s speed was set at 70 miles/hour, which was the speed limit of that section of the highway. Before each day’s recording, the speech and noise levels were calibrated. The van was driven eastbound and westbound on the highway several times. The level of the noise was measured from both of KEMAR’s ears without hearing aids. Because the manikin’s right ear was closer to the window, the road noise level at that ear (~78 dBA) was higher than at the left ear (~75 dBA). After the noise level had been determined, the speech level was set to achieve a −1 dB SNR at the ear with the higher (better) SNR. This SNR was chosen in accordance with the data of Pearsons et al. (1976), in which typical SNRs across a variety of real-world environments were measured. In the back-talker position, although the noise was higher at the right ear, speech was also higher at that ear (presumably due to the reflection of speech off the window). Therefore, the SNR was determined by the right ear. For the side-talker position, the speech level was set according to the noise level at the left ear. Figure 4 shows the mean one-third octave level of speech and noise across different days of calibration. The small variations across days indicated that the long-term average level of the noise was quite stable.
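The level-setting rule described above amounts to simple arithmetic. The sketch below assumes the approximate noise levels reported in this section (about 78 dBA at the right ear and 75 dBA at the left ear) and takes the reference ear for each talker position as given in the text; the variable and function names are hypothetical.

```python
TARGET_SNR_DB = -1.0  # SNR targeted at the reference ear

# Approximate unaided noise levels at KEMAR's ears (dBA), as reported in the text.
noise_dba = {"left": 75.0, "right": 78.0}

# Ear used to set the speech level for each talker position, as described in the text.
reference_ear = {"back": "right", "side": "left"}

def target_speech_level(talker_position):
    """Speech level (dBA) at the reference ear that yields the target SNR."""
    ear = reference_ear[talker_position]
    return noise_dba[ear] + TARGET_SNR_DB

for position in ("back", "side"):
    print(position, reference_ear[position], target_speech_level(position))
```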

Figure 4.

Long-term average spectra of automobile/road noise and speech measured at the manikin’s ear. The numbers shown in the graph represent the mean speech or noise levels across different days of recording. Error bars indicate one standard deviation.

After the calibration, the hearing aids were placed on the manikin’s ears and were set to a given program. The hearing aids were powered by batteries without connecting to the programming interface. The van was driven on the highway to record the sound. In an effort to make the noise level equal across hearing aid models and conditions, the driver kept a constant speed using cruise control and stayed in the right lane whenever possible. Across all recordings, the windows of the van were closed. The air conditioning fan was turned on to the same position. The recordings were made during the summer on sunny days on dry roads, between 10 am and 5 pm. Also, the same section of the road was used across hearing aids and conditions. Specifically, between Exit 249 and 284, the back-talker condition was always recorded driving eastbound and the side-talker condition was always recorded driving westbound. Despite these efforts and the fact that the long-term level was stable (Figure 4a), the noise level still varied from moment to moment due to the variation of traffic and wind. To ensure the constant status of the hearing aid algorithms, the CST sentences were concatenated with a one-second pause between each sentence and then presented to the manikin.

The speech signals were generated from a laptop computer with an M-Audio ProFire 610 sound interface, routed via a Stewart Audio PA-100B amplifier, and then presented from a Tannoy i5 AW loudspeaker. The loudspeaker was placed at the same height as KEMAR’s head. The hearing aids’ output signals were routed through a G.R.A.S. Ear Simulator Type RA0045, a Type 26AC preamplifier, a Type AR12 power module, and the M-Audio ProFire 610 sound interface, to the computer. The recorded signals were digitized at a 44.1 kHz sampling rate and 16-bit resolution. The whole set of CST sentences was recorded with the automobile/road noise in 18 conditions (three hearing aid models x three hearing aid programs x two talker positions).

Stimuli processing and presentation

The stimuli were played to the participants via a pair of insert earphones in the laboratory (described under Procedures). To simulate a driver’s listening condition, the stimuli recorded from KEMAR’s left/right ear when positioned in the passenger seat during recording were presented to the listener’s right/left ear. The stimuli were first processed to eliminate the effect of the earphone and playback system so as to ensure that the levels and the spectra of the sounds heard by listeners were identical to what occurred in the manikin’s ear in the van. The stimuli were played to KEMAR using the laboratory playback system and earphones and were re-recorded from KEMAR’s ears using the same recording equipment used in the van. The levels and spectra of the re-recorded stimuli were then compared to those of the original recorded stimuli. The difference between the two recordings was used to design an inverse filter. The filter was then applied to the original recorded stimuli to make the playback system acoustically transparent.
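One way to picture the inverse-filter step is as a set of per-band corrections. The sketch below assumes that the original and re-recorded stimuli have each been summarized as band levels in dB and that the playback chain behaves linearly. The band values are invented for illustration and the names are hypothetical. The actual filter design method is not specified in the text, so this shows only the target gains that such a filter would need to approximate.

```python
def inverse_filter_gains(original_db, rerecorded_db):
    """Per-band gain (dB) that cancels the coloration added by the playback chain."""
    if len(original_db) != len(rerecorded_db):
        raise ValueError("band lists must have the same length")
    return [o - r for o, r in zip(original_db, rerecorded_db)]

def apply_gains(levels_db, gains_db):
    """Apply per-band gains (dB) to per-band levels (dB)."""
    return [lvl + g for lvl, g in zip(levels_db, gains_db)]

# Hypothetical band levels (dB SPL); not data from the study.
original = [62.0, 65.0, 60.0, 52.0]     # recorded in the van
rerecorded = [64.0, 64.0, 57.0, 55.0]   # same stimuli after laboratory playback

gains = inverse_filter_gains(original, rerecorded)

# Coloration introduced by the playback chain, band by band.
playback_effect = [r - o for o, r in zip(original, rerecorded)]

# Pre-filter the original stimuli, then pass them through the playback chain.
prefiltered = apply_gains(original, gains)
heard = apply_gains(prefiltered, playback_effect)
```

With these assumptions, `heard` matches `original` in every band, which is what "acoustically transparent" means here.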

After applying the filter, the stimuli were further processed to compensate for each participant’s hearing loss. For the 65 dB SPL speech input, the NAL-NL1 targets generated by the Audioscan Verifit for an individual’s hearing loss and the targets for the sloping hearing loss that was used to program the hearing aid were compared. The differences were then used to create a filter, one for each ear of the listener, to shape the spectra of the stimuli, such that the outputs of the hearing aids met an individual’s NAL-NL1 targets for a 65 dB SPL speech input.

Procedures

After participants agreed to participate in the study and signed the consent form, their pure tone thresholds were measured and the speech-in-noise stimuli were processed to compensate for individual hearing loss according to the NAL-NL1 prescription. The participant was then seated in the middle of a sound treated booth and the processed CST sentences with noise were presented via earphones. Participants were asked to complete two tasks. The first task was a speech recognition test. During testing, the listener was asked to repeat the CST sentences. One pair of CST passages was presented and scored in each condition. Each passage consisted of nine or ten sentences. Scoring was based on the number of key words repeated by the listener out of 25 key words per passage, totaling 50 key words per condition. The CST was administered in the 18 conditions mentioned above. The test order of the conditions was randomized.

The second task assessed listeners’ preference of technology. The three programs (OMNI, DIR, and NewTech) were compared within each hearing aid model and within each talker position using the forced-choice paired-comparison paradigm. In each comparison, two recordings of the same CST sentence, which were recorded through two different programs of a given hearing aid model in a given talker position, were presented. The sentence was randomly chosen from the whole corpus of the CST sentences. After listening to the samples, the listeners were asked to express their preference based on overall impression, that is, which hearing aid program they would choose to use if they were driving an automobile for a long period of time. For a given pair of programs, the comparison was repeated ten times. The presentation order of programs was counterbalanced. In total, each participant made 180 judgments (three hearing aid models x three comparisons between three programs x two talker positions x ten repetitions).
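The condition and trial counts can be checked by enumerating the design. The sketch below is illustrative only: the text states that presentation order was counterbalanced but does not say how, so the alternation across repetitions used here is an assumption, and the variable names are hypothetical.

```python
from itertools import combinations, product

models = ["HA1", "HA2", "HA3"]
programs = ["OMNI", "DIR", "NewTech"]
positions = ["back", "side"]
repetitions = 10

# 18 recording conditions: model x program x talker position.
conditions = list(product(models, programs, positions))

# Paired-comparison trials: every pair of programs within each model and position.
trials = []
for model, position in product(models, positions):
    for first, second in combinations(programs, 2):
        for rep in range(repetitions):
            # Assumed counterbalancing: swap the presentation order on odd repetitions.
            order = (first, second) if rep % 2 == 0 else (second, first)
            trials.append((model, position, order))

print(len(conditions), len(trials))  # prints: 18 180
```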

For all tests, stimuli were generated by a PC and a LynxTwo sound interface, routed to a GSI-61 audiometer, and then presented through a pair of Sennheiser IE8 insert earphones. Before the experiment commenced, the stimuli were presented to listeners so that they could adjust the level as if they were adjusting the volume of their hearing aids. Three participants asked to increase the stimulus level by 2 dB and six participants asked to reduce the level by 2 to 6 dB. All measurements were completed in one session lasting approximately two hours.

RESULTS

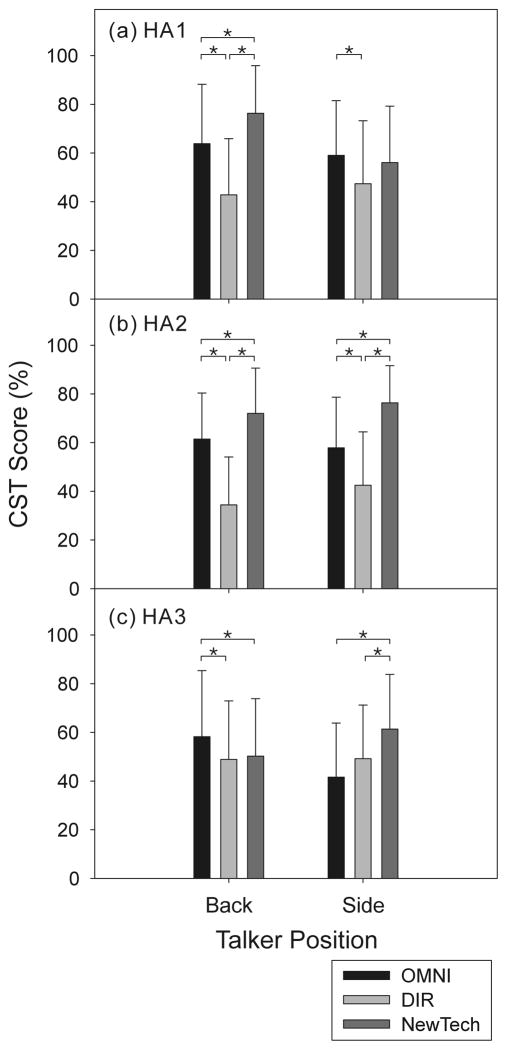

The mean CST score of each combination of hearing aid model, hearing aid program, and talker position is shown in Figure 5. Since the comparison between hearing aid models was not the interest of the current study, a two-factor, repeated-measures analysis of variance (ANOVA) was performed within each hearing aid model. The within-subjects independent variables were hearing aid program (OMNI, DIR, or NewTech) and talker position (back or side), and the dependent variable was the CST score. Original scores were transformed into rationalized arcsine units to homogenize the variance before analysis (Studebaker, 1985). The interaction between hearing aid program and talker position was significant for all three models (HA1: p < 0.0001; HA2: p = 0.037; HA3: p < 0.0001). Because of the significant interaction, the effect of program was examined separately for each talker position. A significant main effect of program was observed at both talker positions for all models (Table 1).
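The rationalized arcsine transform can be sketched as follows, using the formula commonly attributed to Studebaker (1985) and the 50 key words scored per condition in this study. This is an illustration under those assumptions, with a hypothetical function name, and not the authors' analysis script.

```python
import math

def rau(correct, total):
    """Rationalized arcsine unit for `correct` items out of `total`."""
    if not 0 <= correct <= total:
        raise ValueError("correct must be between 0 and total")
    # Arcsine transform (in radians) of the score.
    theta = (math.asin(math.sqrt(correct / (total + 1)))
             + math.asin(math.sqrt((correct + 1) / (total + 1))))
    # Linear rescaling so that mid-range values resemble percent correct.
    return (146.0 / math.pi) * theta - 23.0

for score in (0, 15, 25, 30, 45, 50):
    print(score, round(rau(score, 50), 1))
```

The transformed values track percent correct closely in the middle of the range and stretch the scale near 0% and 100%, where the variance of raw proportions shrinks.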

Figure 5.

Mean CST score in each test condition. Error bars indicate one standard deviation.

Table 1.

Effect of technology (OMNI, DIR, or NewTech program) on CST score.

| Hearing Aid Model | Talker Position | df | F | p |
|---|---|---|---|---|
| HA1 | Back | 2, 48 | 57.07 | <0.0001 |
| HA1 | Side | 2, 48 | 4.67 | 0.014 |
| HA2 | Back | 2, 48 | 132.38 | <0.0001 |
| HA2 | Side | 2, 48 | 61.97 | <0.0001 |
| HA3 | Back | 2, 48 | 5.29 | 0.0084 |
| HA3 | Side | 2, 48 | 18.27 | <0.0001 |

Post hoc analyses of the effect of hearing aid program with Bonferroni correction for multiple comparisons were conducted. The comparisons that reached the significance level (i.e., adjusted p < 0.05) are shown in Figure 5 as the star symbols. In general, the DIR program had a detrimental effect on CST score in relation to the OMNI program in all comparisons, except for HA3 in the side-talker position. For HA1, which was equipped with Back-DIR processing, the CST performance with the NewTech program was significantly better than with the OMNI and DIR programs when speech was from the back. For HA2, which used both Back-DIR and Side-Transmission processing schemes, NewTech had the best performance in both talker positions. For HA3, which had Side-Suppression processing, the performance with NewTech was better than OMNI and DIR in the side-talker position while worse than OMNI in the back-talker position.
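The Bonferroni adjustment itself is straightforward and can be sketched as below. The example assumes three pairwise program comparisons per hearing aid model and talker position. The unadjusted p values are invented for illustration and are not results from this study, and the function name is hypothetical.

```python
def bonferroni_adjust(p_values):
    """Multiply each p value by the number of comparisons, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]

# Hypothetical unadjusted p values for the three pairwise program comparisons.
raw = {"OMNI vs DIR": 0.004, "NewTech vs OMNI": 0.020, "NewTech vs DIR": 0.400}

adjusted = dict(zip(raw, bonferroni_adjust(list(raw.values()))))

# Comparisons that remain significant after adjustment (adjusted p < 0.05).
significant = [name for name, p in adjusted.items() if p < 0.05]
```

In this invented example, the second comparison would be significant without adjustment (0.020) but not after it, which shows how the correction guards against false positives across multiple comparisons.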

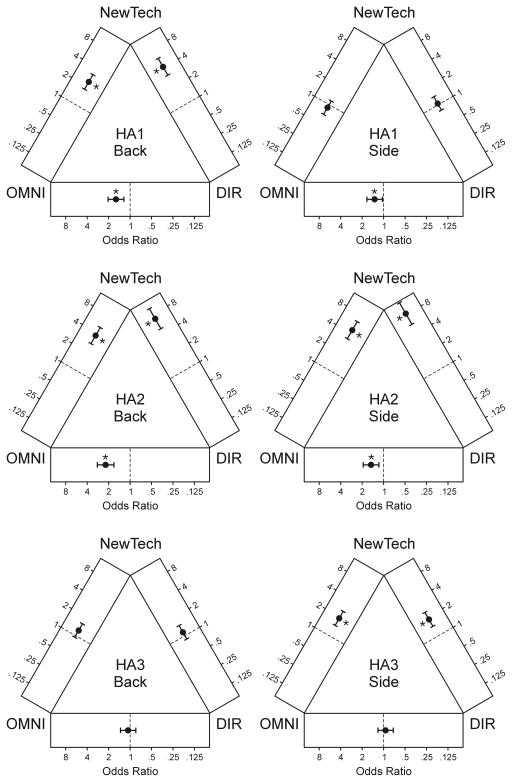

A logistic regression was performed to analyze the paired comparison data to determine if listeners preferred one hearing aid program over another. The analysis was conducted for each hearing aid model and talker position separately. Results are shown in Figure 6. Each triangle represents the results from a given hearing aid model in a given talker position. The data shown at the sides of the triangles represent the odds ratio of preferring a given hearing aid program. An odds ratio is the probability of a given program being preferred divided by the probability of another program being preferred. If a program was preferred five times out of ten comparisons (i.e., 50% of the time), the odds ratio would be one. Basically, the closer the solid circle is to the corner of the triangle, the more likely the listeners preferred the hearing aid program shown at that corner.
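The odds ratio described above can be illustrated with raw counts from a single two-program comparison. The sketch below is descriptive only: the values plotted in Figure 6 come from the logistic regression, which is not reproduced here, and the function name is hypothetical.

```python
def preference_odds(times_preferred, n_comparisons):
    """Odds of a program being preferred: times preferred divided by times not preferred."""
    times_not_preferred = n_comparisons - times_preferred
    if times_not_preferred == 0:
        return float("inf")
    return times_preferred / times_not_preferred

# A program preferred 2, 5, or 8 times out of ten comparisons.
for wins in (2, 5, 8):
    print(wins, preference_odds(wins, 10))
```

A program preferred five times out of ten gives odds of one, as stated in the text; values above one indicate that the program was preferred more often than the alternative.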

Figure 6.

Results of preference judgment from the back-talker position (left column) and side-talker position (right column) of each hearing aid model. Solid circles represent the odds ratio of preferring the OMNI program (in the comparison of OMNI vs. DIR) and the NewTech program (in the comparisons of NewTech vs. OMNI and NewTech vs. DIR). Error bars indicate the 95% confidence interval.

The comparisons that reached the significance level were marked by the star symbols in Figure 6. In general, the results were consistent with the speech recognition results. DIR was less often preferred than OMNI in both talker positions except for HA3. For HA1, NewTech was preferred more often than OMNI and DIR only in the back-talker position. For HA2, listeners preferred NewTech in both talker positions. For HA3, NewTech was preferred only in the side-talker position.

DISCUSSION

The results of this study revealed that the automobile was a difficult listening environment. The van/road noise tested in this study was as high as 78 dBA. At this noise level, we expect that the SNR for listening to conversational speech would be low, around −1 dB (Pearsons et al, 1976). At this unfavorable SNR, our listeners could only understand approximately 60% of the speech using the omnidirectional amplification program (Figure 5). Therefore, unless the talkers constantly raise their voices or the listeners turn their heads toward the talkers (which is unlikely for drivers), listeners with hearing impairment would have great difficulty following the conversation.

Note that the automobile/road noise observed in this study could be relatively high because (1) the van was heavier and, therefore, noisier than most family cars and (2) the van speed used in recording was high (70 miles/hr). To determine whether such high noise levels also occur in other automobiles at lower speeds, the noise level of five different sedan car models was measured using a sound level meter. When the speed of these five vehicles was set to 35 miles/hr, the noise level ranged from 60 to 69 dBA, with a mean of 64.4 dBA. When the speed was set to 65 miles/hr, the noise level ranged from 64 to 73 dBA, with a mean of 67.9 dBA. Therefore, although modern family cars would be quieter, the noise level is still high and the SNR would still be low (poor), resulting in a difficult listening environment. Listening would be even more difficult with open windows because of wind noise or if the radio was turned on.

The effect of hearing aid processing schemes

When the aids were set to the omnidirectional mode, listeners’ mean CST performance was similar across hearing aid models and talker positions (~60% correct), except for HA3 in the side-talker position (41.6% correct). A repeated-measures ANOVA and post hoc analyses confirmed that the performance in the HA3/OMNI/side-talker condition was significantly poorer than any of the other five OMNI conditions (all adjusted p < 0.002). Since the mean CST score for HA3 in the OMNI/back-talker condition (58.2% correct; Figure 5c) was close to the other two hearing aid models, it is unlikely the poorer performance with HA3 in the side-talker position was due to hearing aid algorithms. One possible explanation was the variation in the road noise. As mentioned, although the long-term average noise level was quite stable (Figure 4a), the road traffic changed from moment to moment. It is possible that when the stimuli of the HA3/OMNI/side-talker condition were recorded, the traffic was busier than that in other conditions. To examine this possibility, the SNR of the stimuli recorded in each test condition was estimated. Specifically, the noise level at the one-second pauses between sentences and the combined speech and noise level were calculated. The speech level was then derived using power subtraction. The SNR was calculated and averaged across two ears. Table 2 shows the results. The SNR was first found to be highly correlated with the CST score shown in Figure 5 (r = 0.92, p < 0.0001), suggesting the validity of the SNR estimation. The result that the SNR of the stimuli used in the HA3/OMNI/side-talker condition (−6.53 dB) was lower (poorer) than those used in other OMNI/side-talker conditions (−4.73 and −4.91 dB) supported the explanation of inconsistent road noise.
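The power-subtraction step described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' analysis code: the function names are hypothetical, the example levels are invented, and averaging the two ears in dB is an assumption because the text does not state how the averaging was done.

```python
import math


def db_to_power(level_db):
    """Convert a level in dB to a relative power value."""
    return 10.0 ** (level_db / 10.0)


def estimate_snr_db(combined_db, noise_db):
    """Estimate SNR (dB) from the speech-plus-noise level and the noise-only level.

    Speech power is obtained by power subtraction:
        speech power = (speech + noise power) - noise power
    """
    speech_power = db_to_power(combined_db) - db_to_power(noise_db)
    if speech_power <= 0:
        raise ValueError("combined level must exceed the noise level")
    speech_db = 10.0 * math.log10(speech_power)
    return speech_db - noise_db


def mean_snr_across_ears(left, right):
    """Average the per-ear SNR estimates (assumption: a simple mean in dB)."""
    return (estimate_snr_db(*left) + estimate_snr_db(*right)) / 2.0


# Invented example levels (dB): (speech + noise level, noise level in the pauses).
left_ear = (73.0, 70.0)
right_ear = (71.0, 70.0)
print(f"Left ear SNR:  {estimate_snr_db(*left_ear):.2f} dB")
print(f"Right ear SNR: {estimate_snr_db(*right_ear):.2f} dB")
print(f"Mean SNR:      {mean_snr_across_ears(left_ear, right_ear):.2f} dB")
```

Note that the estimate becomes unstable when the combined level is only slightly above the noise level, because the subtraction then leaves a very small speech power.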

Table 2.

Estimated signal-to-noise ratio in each test condition.

| Hearing aid | Back-talker: OMNI (dB) | Back-talker: DIR (dB) | Back-talker: NewTech (dB) | Side-talker: OMNI (dB) | Side-talker: DIR (dB) | Side-talker: NewTech (dB) |
|---|---|---|---|---|---|---|
| HA1 | −1.18 | −4.83 | 0.42 | −4.73 | −7.22 | −5.16 |
| HA2 | −4.07 | −8.23 | 0.16 | −4.91 | −8.14 | 1.07 |
| HA3 | −5.22 | −5.00 | −5.68 | −6.53 | −5.37 | −4.54 |
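To make the Table 2 values easier to compare, the short sketch below computes the difference in estimated SNR between the NewTech and OMNI programs for each hearing aid and talker position. The SNR values are copied from Table 2; the derived differences are simple arithmetic added here for illustration and were not reported by the authors.

```python
# Estimated SNRs (dB) copied from Table 2, ordered as (OMNI, DIR, NewTech).
table2 = {
    ("HA1", "back"): (-1.18, -4.83, 0.42),
    ("HA2", "back"): (-4.07, -8.23, 0.16),
    ("HA3", "back"): (-5.22, -5.00, -5.68),
    ("HA1", "side"): (-4.73, -7.22, -5.16),
    ("HA2", "side"): (-4.91, -8.14, 1.07),
    ("HA3", "side"): (-6.53, -5.37, -4.54),
}

# SNR change of NewTech relative to OMNI; positive means a better SNR with NewTech.
newtech_re_omni = {
    condition: round(newtech - omni, 2)
    for condition, (omni, _dir, newtech) in table2.items()
}

for (aid, position), diff in newtech_re_omni.items():
    print(f"{aid}, {position}-talker: NewTech re OMNI = {diff:+.2f} dB")
```

The differences are positive for HA1 (back), HA2 (back and side), and HA3 (side), and slightly negative for HA1 (side) and HA3 (back), which appears to be in line with the pattern of CST results described in the text.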

Our research revealed that, for HA1 and HA2, the conventional adaptive directional processing had a detrimental effect on speech recognition and was not preferred by listeners. This negative effect was larger when speech was from the back. The performance with HA3 in the DIR program was not worse than OMNI when speech was from the side (Figure 5c). This result was due to the low performance in the OMNI program, which likely resulted from the noisier traffic as mentioned previously, rather than to the effect of algorithms. These results clearly indicated that clinicians should not assume that listeners’ performance in noisy environments with directional processing will always be better than that with omnidirectional processing.

The data further indicated that the three new signal processing schemes could improve speech understanding in real-world automobile listening situations. When speech was from the back, Back-DIR processing of HA1 and HA2 in the NewTech program was activated and speech recognition performance increased by 12.5% and 10.6% (relative to OMNI), respectively. The benefit was even larger when compared to DIR (33.5% and 37.6%, respectively). However, note that the magnitude of benefit shown here was much smaller than the 5 to 9 dB benefit reported by laboratory studies (Chalupper et al, 2011; Mueller et al, 2011; Kuk and Keenan, 2012), assuming that 1 dB benefit represents an increase of 8 to 10% in speech understanding (Cox et al, 1988; Dillon, 2001). The reduction in benefit is likely because (1) the reverberation in the van was higher than that in the laboratory test setting and (2) the benefit measured using the CST was affected by the ceiling/floor effect.
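The comparison with laboratory results can be made concrete with a small calculation. The sketch below applies the conversion stated in the text (1 dB of benefit corresponding to an 8 to 10% increase in speech understanding) to the 5 to 9 dB laboratory benefit and sets the result against the observed gains relative to OMNI. The linear extrapolation is a simplification, and the function name `expected_gain_percent` is hypothetical.

```python
def expected_gain_percent(benefit_db, percent_per_db):
    """Linear conversion from SNR benefit (dB) to percentage-point gain."""
    return benefit_db * percent_per_db


# Laboratory benefit of 5 to 9 dB, converted at 8 to 10% per dB.
low = expected_gain_percent(5, 8)
high = expected_gain_percent(9, 10)

# Observed back-talker gains relative to OMNI for HA1 and HA2 (from the text).
observed = {"HA1": 12.5, "HA2": 10.6}

print(f"Expected from laboratory data: {low} to {high} percentage points")
for aid, gain in observed.items():
    print(f"{aid} observed: {gain} percentage points")
```

Under this linear assumption the expected gain is 40 to 90 percentage points, several times the observed 10.6 to 12.5 points. The upper end also approaches the limit of what a percent-correct score can show, which is consistent with the ceiling/floor explanation offered above.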

The Side-Transmission scheme improved CST performance for HA2 in the side-talker position. The benefit in CST score was 18.5% (re: OMNI) and 33.8% (re: DIR). The result that the performance with Side-Transmission processing was better than with omnidirectional processing warrants some further comment. In the anechoic chamber (Figure 2), the Side-Transmission scheme in the speech 270°/noise 90° configuration chose an omnidirectional pattern for the ear with the better SNR (left ear). This was also the case in a directivity pattern measure conducted later using a speech 270°/noise 0°, 90°, 180°, 270° configuration. If in the van the left hearing aid had selected the omnidirectional pattern in the NewTech/side-talker condition, the SNR in KEMAR’s left ear in the OMNI program would be identical to that in the NewTech program. If listeners’ performance was determined by the SNR of the left ear (i.e., better ear listening), the mean CST score in the OMNI and NewTech programs would be the same. It is therefore likely that the better performance involved binaural processing. Specifically, binaural masking level difference (BMLD) refers to the phenomenon that the detection of a signal in noise is improved when the phase differences of the signal at the two ears are not the same as those of the masking noise (Robinson and Jeffress, 1963; Levitt and Rabiner, 1967).

The effect of this binaural processing is maximized when there is no difference in the level of signal or noise between the ears. For example, increasing the noise level at one ear relative to the other ear by 10 dB could nearly eliminate the BMLD (Weston and Miller, 1965). In the side-talker position, because the attenuation of the speech due to head shadow was as high as 6 dB (Figure 4c), in the OMNI program the effect of binaural processing would be very small. The performance was mainly determined by the better ear SNR. When Side-Transmission processing was enabled, both ears were listening to the same speech and noise signals processed by the left hearing aid, with a delay due to wireless transmission (approximately 5 ms, derived from the measure shown in Figure 2). In addition to the processed sounds, automobile/road noise (especially at mid to low frequencies) would enter the ear canal through the ear dome. Because (1) the speech levels at the two ears were similar (so were noise levels) and (2) the phase differences of the speech at the two ears (5 ms delay) were not the same as those of the automobile/road noise (less correlated, especially at mid to low frequencies), listeners might be able to use binaural processing to extract more speech cues from noise, resulting in better performance. This speculation was consistent with the study by Richards et al. (2006), in which the benefit of transferring signals between two hearing aids was modeled and examined.

The effect of Side-Suppression processing of HA3 in the side-talker position was less conclusive. When compared to OMNI, the NewTech program resulted in a higher CST score (Figure 5c) and was more often preferred (Figure 6). Because of the higher road noise level in the OMNI condition (Table 2), it is unclear if the Side-Suppression processing really provided benefit relative to omnidirectional processing. The CST performance with the NewTech program was also better than that with the DIR program (Figure 5c). However, because the better ear SNR determined the performance (as previously discussed), the better performance with NewTech was unlikely due to the Side-Suppression processing scheme reducing the noise at the ear with the poorer SNR. It is more likely that, for the ear with the better SNR (i.e., KEMAR’s left ear), the directivity pattern selected in the NewTech program provided a higher (better) SNR than that selected in the DIR program. This speculation was supported by the directivity pattern measure conducted in the anechoic chamber using a speech 270°/noise 0°, 90°, 180°, 270° configuration. The measure suggested that, for the left aid, a hypercardioid-like pattern was selected in HA3’s DIR program while an omnidirectional pattern was chosen in the NewTech program. Because the omnidirectional pattern had the directivity beam facing toward the speech source (270°, see the directivity pattern of HA2/Left/Speech 270°/Noise 90° in Figure 2 as an example), this pattern provided a better SNR than the hypercardioid pattern, resulting in a higher CST score in the NewTech condition than in the DIR condition.

Real world applications and study limitations

While, in general, the findings regarding the effect of new processing schemes were encouraging, the present study has some limitations concerning real world application. Firstly, during the stimuli recording, the CST sentences were presented continuously and the road noise was relatively stable on the highway. As a result, the hearing aid algorithms had enough time and information to determine the location of the talker and decide the best setting. That said, if the speech was dissimilar to continuous discourse and the road noise was intermittent (e.g., stop-and-go traffic), the algorithms may not perform as well. Secondly, when there is more than one talker (e.g., spouse talking at the side and kids talking in the back), the algorithms may result in different patterns of focus and attenuate the speech that the listener is trying to understand or not adapt quickly enough to capture key words from short phrases. Thirdly, although many of the paired-comparison results reached the significance level, the odds ratios of some comparisons were very close to unity (i.e., no preference; see Figure 6). Therefore, it could be difficult for some listeners to detect and appreciate the benefit of the technologies in the real world.

Another consideration regarding real-world application was that the current study did not test the possible negative impact on localization ability. Specifically, it has been shown that adaptive directional processing could degrade localization cues (Van den Bogaert et al, 2006; Van den Bogaert et al, 2008). The diotic-like listening created by Side-Transmission processing would very likely compromise the listener’s localization ability. Therefore, although the new processing schemes could improve speech understanding in automobiles, they may reduce the ability of the driver to localize important sounds while driving, such as sirens.

CONCLUSIONS

The primary findings of the present study are: (1) conventional adaptive directional systems could have a detrimental effect on speech understanding in automobiles relative to omnidirectional processing; and (2) signal processing schemes that employ unique directivity patterns and wireless technology to transmit acoustic signals or communicate data between hearing aids could enhance communication in automobiles. These results suggest that (1) it is critical to use the right technologies in the right situations in order to obtain benefit from hearing aid technologies, and (2) it is feasible to use hearing aid technologies to provide a better listening experience for hearing aid users in automobiles. The study also highlights how critical it is for clinicians to thoroughly counsel hearing aid users as to when and how to use such technologies properly, and how important it is for patients to try different hearing aid settings/technologies to maximize their performance in the real world.

Acknowledgments

Sources of support: This work was supported by a research grant from Siemens Hearing Instruments, Inc.

The authors thank Dr. Sepp Chalupper for his contributions in research design. The authors also thank Dr. Gus Mueller for his helpful suggestions and comments on an early version of this paper. The authors thank Dr. Bruce Tomblin for letting the research team use the van, which is property of the NIH/NIDCD Outcomes of Children with Hearing Loss (OCHL) study. The authors are also grateful to Dr. Xuyang Zhang for his statistical support.

ABBREVIATIONS

- BMLD: binaural masking level difference

- CST: Connected Speech Test

- DIR: a test condition in which conventional adaptive directional processing was programmed

- KEMAR: Knowles Electronics Manikin for Acoustic Research

- NewTech: a test condition in which newer signal processing schemes were programmed

- OMNI: a test condition in which omnidirectional processing was programmed

- SNR: signal-to-noise ratio

Footnotes

Declaration of interest: The authors report no conflicts of interest. The authors alone are responsible for the content and writing of the paper.

Portions of this paper were presented at the annual meeting of the American Academy of Audiology, 2012, Boston, MA.

References

- ANSI. Specification for audiometers (ANSI S3.6). New York: American National Standards Institute; 2004.

- Chalupper J, Wu YH, Weber J. New algorithm automatically adjusts directional system for special situations. Hear J. 2011;64(1):26–33.

- Cox RM, Alexander GC, Gilmore C. Development of the Connected Speech Test (CST). Ear Hear. 1987;8(5):119S–126S. doi: 10.1097/00003446-198710001-00010.

- Cox RM, Alexander GC, Gilmore C, Pusakulich KM. Use of the Connected Speech Test (CST) with hearing-impaired listeners. Ear Hear. 1988;9(4):198–207. doi: 10.1097/00003446-198808000-00005.

- Dillon H. NAL-NL-1: a new prescriptive fitting procedure for nonlinear hearing aids. Hear J. 1999;52(4):10–16.

- Dillon H. Hearing Aids. New York: Thieme; 2001.

- Erber NP. Interaction of audition and vision in the recognition of oral speech stimuli. J Speech Hear Res. 1969;12(4):423–425. doi: 10.1044/jshr.1202.423.

- Hagerman B, Olofsson A. A method to measure the effect of noise reduction algorithms using simultaneous speech and noise. Acta Acoust. 2004;90(2):356–361.

- Hawkins DB, Montgomery AA, Mueller HG, Sedge R. Noise as a public health problem: Proceedings of the 5th International Congress on Noise as a Public Health Problem, held in Stockholm, August 21-28, 1988. Stockholm: Swedish Council for Building Research; 1988. Assessment of speech intelligibility by hearing impaired listeners; pp. 241–246.

- Jensen NS, Nielsen C. Auditory ecology in a group of experienced hearing-aid users: Can knowledge about hearing-aid users’ auditory ecology improve their rehabilitation? In: Rasmussen AN, Poulsen T, Andersen T, Larsen CB, editors. Hearing Aid Fitting. Kolding, Denmark: The Danavox Jubilee Foundation; 2005. pp. 235–260.

- Kates JM. Digital hearing aids. San Diego: Plural Publishing; 2008.

- Kuk F, Keenan D. Efficacy of a reverse cardioid directional microphone. J Am Acad Audiol. 2012;23(1):64–73. doi: 10.3766/jaaa.23.1.7.

- Levitt H, Rabiner LR. Predicting binaural gain in intelligibility and release from masking for speech. J Acoust Soc Am. 1967;42(4):820–829. doi: 10.1121/1.1910654.

- Mueller HG, Weber J, Bellanova M. Clinical evaluation of a new hearing aid anti-cardioid directivity pattern. Int J Audiol. 2011;50(4):249–254. doi: 10.3109/14992027.2010.547992.

- Nyffeler M. 2012 Auto ZoomControl: Automatic change of focus to speech signals of interest. 2010 Apr 9; Retrieved April 9th, 2012, from http://www.phonakpro.com/content/dam/phonak/b2b/C_M_tools/Library/Field_Study_News/en/FSN_2010_September_GB_AutoZoomControl.pdf.

- Nyffeler M, Dechant S. Field study on user control of directional focus: Benefits of hearing the facets of a full life. Hear Rev. 2009;16(1):24–28.

- Olson N. Survey of motor vehicle noise. J Acoust Soc Am. 1972;52(5A):1291–1306. doi: 10.1121/1.1913246.

- Pearsons KS, Bennett RL, Fidell S. Speech levels in various environments. Report to the Office of Resources and Development, Environmental Protection Agency. BBN Report # 3281. Cambridge, MA: Bolt, Beranek and Newman; 1976.

- Richards VM, Moore BCJ, Launer S. Potential benefits of across-aid communication for bilaterally aided people: Listening in a car. Int J Audiol. 2006;45(3):182–189. doi: 10.1080/14992020500250054.

- Ricketts T. Impact of noise source configuration on directional hearing aid benefit and performance. Ear Hear. 2000;21(3):194–205. doi: 10.1097/00003446-200006000-00002.

- Robinson DE, Jeffress LA. Effect of varying the interaural noise correlation on binaural signal detection. J Acoust Soc Am. 1963;35(12):1947–1952.

- Schum DJ. The Audiology in Agil - A Whitepaper. 2010 Retrieved April 9th, 2012, from http://www.oticonusa.com/SiteGen/Uploads/Public/Downloads_Oticon/Agil/101386_1.pdf.

- Sockalingam R, Holmberg M. Evidence of the effectiveness of a spatial noise management system. Hear Rev. 2010;17(9):44–47.

- Studebaker GA. A “rationalized” arcsine transform. J Speech Hear Res. 1985;28(3):455–462. doi: 10.1044/jshr.2803.455.

- Stuermann B. auto ZoomControl: Objective and subjective benefits with auto ZoomControl. 2011 Retrieved April 9th, 2012, from http://www.phonakpro.com/content/dam/phonak/b2b/C_M_tools/Library/Field_Study_News/en/FSN_auto_ZoomControl_benefits_210x297_EN_V1.00_2011-01.pdf.

- Sumby WH, Pollack I. Visual contribution to speech intelligibility in noise. J Acoust Soc Am. 1954;26(2):212–215.

- Takahashi G, Martinez CD, Beamer S, Bridges J, Noffsinger D, Sugiura K, Bratt GW, Williams DW. Subjective measures of hearing aid benefit and satisfaction in the NIDCD/VA follow-up study. J Am Acad Audiol. 2007;18(4):323–349. doi: 10.3766/jaaa.18.4.6.

- Van den Bogaert T, Doclo S, Wouters J, Moonen M. The effect of multimicrophone noise reduction systems on sound source localization by users of binaural hearing aids. J Acoust Soc Am. 2008;124(1):484–497. doi: 10.1121/1.2931962.

- Van den Bogaert T, Klasen TJ, Moonen M, Van Duen L, Wouters J. Horizontal localization with bilateral hearing aids: Without is better than with. J Acoust Soc Am. 2006;119(1):515–526. doi: 10.1121/1.2139653.

- Wagener KC, Hansen M, Ludvigsen C. Recording and classification of the acoustic environment of hearing aid users. J Am Acad Audiol. 2008;19(4):348–370. doi: 10.3766/jaaa.19.4.7.

- Weston PB, Miller JD. Use of noise to eliminate one ear from masking experiments. J Acoust Soc Am. 1965;37(4):638–646. doi: 10.1121/1.1909385.

- Wu YH, Bentler RA. A method to measure hearing aid directivity index and polar pattern in small and reverberant enclosures. Int J Audiol. 2011;50(6):405–416. doi: 10.3109/14992027.2010.551219.

- Wu YH, Bentler RA. Do older adults have social lifestyles that place fewer demands on hearing? J Am Acad Audiol. doi: 10.3766/jaaa.23.9.4. in press.