Abstract

OBJECTIVE

A high quality screening mammography program should find breast cancer when it exists, when it’s small, and ensure that suspicious findings receive prompt follow-up. The Mammography Quality Standards Act (MQSA) guidelines related to tracking outcomes are insufficient for assessing quality of care. We used data from a quality improvement project to determine whether mammography screening facilities could show that they met certain quality benchmarks beyond those required by MQSA.

METHODS

Participating facilities (N=52) provided aggregate data on screening mammograms performed in calendar year 2009 and on the corresponding diagnostic follow-up, including loss to follow-up, timing of diagnostic imaging and biopsy, cancer detection rates, and the proportion of cancers detected as minimal and early stage tumors.

RESULTS

The percentage of institutions meeting each benchmark varied from 27% to 83%. Facilities with American College of Surgeons or National Comprehensive Cancer Network designation were more likely to meet benchmarks pertaining to cancer detection and early detection, and Disproportionate Share facilities were less likely to meet benchmarks pertaining to timeliness of care.

CONCLUSIONS

Results suggest a combination of care quality issues and incomplete tracking of patients. To accurately measure quality of the breast cancer screening process, it is critical that there be complete tracking of patients with abnormal screening mammograms so that results can be interpreted solely in terms of quality of care. The Mammography Quality Standards Act guidelines for tracking outcomes and measuring quality indicators should be strengthened to better assess quality of care.

INTRODUCTION

In the United States, non-Hispanic (nH) Black women are more likely than nH White women to die from breast cancer despite being less likely to be diagnosed with the disease. In Chicago, this disparity is especially large: breast cancer mortality in Chicago is 63% higher for nH Black women than for nH White women, one of the highest documented disparities in the country [1]. There are many potential contributors to the disparity, including established differences in tumor aggressiveness [2–4], access to and utilization of mammography [5], and timeliness and quality of treatment [6]. Central to this paper is the question of whether variation in the quality and effectiveness of mammography could also contribute to this disparity [7]. A Task Force was established in Chicago in 2007 to explore this possibility [1], in addition to examining the other factors outlined above.

Indications of problems with the quality of mammography were first seen in the mid-1980s. A study known as the Nationwide Evaluation of X-Ray Trends (NEXT) [8], conducted by state radiation control agencies in cooperation with the Food and Drug Administration (FDA), found that image quality at perhaps as many as one-third of facilities was less than desirable. In response, the Mammography Quality Standards Act (MQSA) was enacted in 1992 in an attempt to improve the quality of breast cancer screening with mammography nationwide. It set out basic standards that a facility needed to meet in order to be certified under the Act, including standards related to mammography machine calibration, maintenance and quality control, and staff qualifications. The Act was reauthorized in 1999 to update experience and continuing education requirements for medical physicists and radiologic technologists and to clarify equipment standards. With this update, each facility was additionally required to have a system in place to ensure that mammogram results were communicated to patients in a timely manner and in terms that a lay person would understand [9]. Federal regulations derived under the authority of the Act, effective in 2002, spelled out further quality assurance measures. These included a requirement for a medical outcomes audit to follow up on the disposition of all positive mammograms and to correlate pathology results with the interpreting physician’s findings [10]. These outcome analyses must be performed individually for each interpreting physician and collectively for all interpreting physicians at the facility.

While the regulations state that these provisions are “designed to ensure the reliability, clarity, and accuracy of the interpretation of mammograms”, in practice they do not require rigorous patient tracking, for several reasons. First, a facility is only required to obtain pathology and surgical reports and review screening and diagnostic mammograms for those cases that “subsequently become known to the facility”. There is no requirement for due diligence in actively determining whether a patient with an abnormal mammogram is subsequently diagnosed with breast cancer. Second, the regulations only require analysis of mammograms interpreted as “suspicious” or “highly suggestive of malignancy” (i.e., BI-RADS 4 and 5) rather than all abnormal results, including those designated “incomplete” (i.e., BI-RADS 0). Thus, FDA guidance documents acknowledge that a screening program that never classifies a patient as BI-RADS 4 or 5 (e.g., facilities that conduct exclusively screening, or those that do not use BI-RADS 4 or 5 categories to interpret their screening mammograms) will have no required patients to track and thus no patients to include in the required audit. Third, MQSA law and regulations do not require facilities to separate screening from diagnostic mammogram results, without which measures of screening quality become meaningless [10]. Fourth, MQSA law and regulations do not specify which quality metrics need to be included in the medical audits for facilities or individual radiologists, leaving this to the discretion of each facility and radiology practice [10].

Besides MQSA guidelines, other organizations have recommended additional strategies to improve the quality of mammography. The National Cancer Policy Board (NCPB) was asked by Congress to review the adequacy of MQSA, which was due for reauthorization in 2007. NCPB published a report through the Institute of Medicine in 2005 recommending that the required medical audit component of MQSA be standardized, and that institutions voluntarily participate in a more advanced medical audit [11]. NCPB reiterated the importance of separating screening mammography data from diagnostic mammography data. The American College of Radiology also recommends that facilities meet certain additional quality benchmarks above and beyond the MQSA guidelines pertaining to proportion of abnormal screening mammograms (recall rate), timeliness of follow-up, extent of screen-detection (i.e., cancer detection rate for screening mammograms), and ability to detect small and early stage tumors [11]. To date, none of these recommendations have been incorporated into MQSA guidelines.

It is unclear to what extent institutions are tracking, or attempting to track, data beyond what is necessary to meet minimum MQSA requirements, and whether the data are of sufficient quality to support statements about screening mammography quality. Very little research has examined how institutions track patients whose mammogram results were abnormal, especially institutions that perform screening mammography but do not generally perform biopsies, or how successful they are in obtaining follow-up information from other institutions. We used data collected from mammography screening facilities as part of a quality improvement project to determine whether facilities were able to collect the data needed to calculate certain quality metrics and to show that they met certain benchmarks pertaining to the quality of the mammography process.

MATERIALS AND METHODS

Data for these analyses were collected by the Chicago Breast Cancer Quality Consortium (Consortium), a project of the Metropolitan Chicago Breast Cancer Task Force [1]. The Consortium was created in 2008 to address Chicago’s breast cancer mortality disparity through quality improvement. Its aim was to recruit institutions that screen for, diagnose and treat breast cancer, and to measure and improve the quality of breast care they provide. Participating facilities included lower-resourced facilities and those serving predominantly minority or underserved patients. Expert advisory boards were established for both mammography screening quality and breast cancer treatment quality to identify measures that were both high priority and feasible to estimate from aggregate data collected from facilities. All participating institutions obtained a signed data sharing agreement and Institutional Review Board (IRB) approval for the study from their institution; institutions that lacked an IRB used the Rush University IRB. Electronic data collection forms were designed to collect data on the screening mammography process for screening mammograms conducted during calendar year 2009. A series of webinars was conducted to familiarize staff at each institution with the data collection form and submission process, and to emphasize specific points pertaining to the quality data. Emphasis was placed on submitting patient-level rather than procedure-level counts and on explicitly accounting for missing data, issues that arose during a pilot collection of screening mammogram data for calendar year 2006.

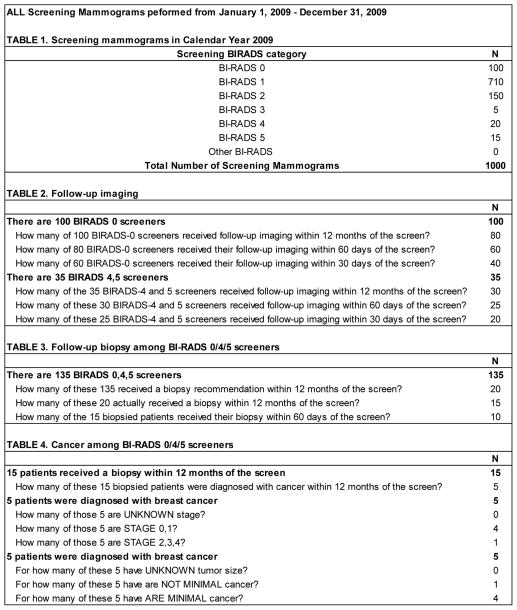

The screening mammography process was defined as the entire process from the initial screening mammogram through diagnostic follow-up imaging, biopsy, and breast cancer diagnosis. Figure 1 lists the requested aggregate counts pertaining to patients screened at each institution during calendar year 2009. The data collection instrument was created in a spreadsheet, with each bolded section of Figure 1 in its own table. Auto-calculated cells and data validation checks were built into the instrument to help guide data collection and entry. For example, in the purely hypothetical data in Figure 1, among 1000 screened patients there were 135 abnormal screens, resulting in 15 biopsied patients and 5 diagnosed breast cancers, of which 4 were known to be early stage and minimal cancers.

FIGURE 1.

Example of the data collection instrument used to collect counts pertaining to mammography screening processes at individual institutions. Data shown are illustrative and do not come from an actual institution.
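The ratios implied by the hypothetical Figure 1 counts can be worked through directly. The short Python sketch below is illustrative only: the variable names are ours, and it assumes that the 135 abnormal screens, 15 biopsies and 5 cancers are patient-level counts, as the data collection instructions required.

```python
# Hypothetical counts from the illustrative Figure 1 example
# (not data from any real facility).
screened = 1000
abnormal = 135   # screens interpreted as BI-RADS 0, 4 or 5
biopsied = 15    # patients biopsied after an abnormal screen
cancers = 5      # breast cancers diagnosed after an abnormal screen

recall_rate = abnormal / screened          # recall rate
ppv1 = cancers / abnormal                  # cancer if abnormal screen (PPV1)
ppv3 = cancers / biopsied                  # cancer if biopsied (PPV3)
cdr_per_1000 = cancers / screened * 1000   # cancer detection rate per 1000 screens

print(f"Recall rate: {recall_rate:.1%}")     # 13.5%
print(f"PPV1: {ppv1:.1%}")                   # 3.7%
print(f"PPV3: {ppv3:.1%}")                   # 33.3%
print(f"CDR: {cdr_per_1000:.1f} per 1000")   # 5.0 per 1000
```

All four values fall inside the benchmark ranges defined below (recall 5–14%, PPV1 3–8%, PPV3 15–40%, cancer detection 3–10 per 1000).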

From these data we estimated the following 11 measures of the screening process. Benchmarks for these measures were established by consulting American College of Radiology benchmarks and clinical experts in these fields who participate on our Mammography Quality Advisory Board. The benchmarks also take into account population-based estimates and ranges for these measures from the Breast Cancer Surveillance Consortium [12].

The following six measures of mammogram interpretation and diagnostic follow-up were calculated for all participating facilities:

Recall rate: The proportion of screening mammograms interpreted as abnormal (BI-RADS 0, 4 or 5). The benchmark for recall rate was met if no less than 5% and no greater than 14% of screening mammograms were interpreted as abnormal.

Not lost at imaging: The proportion of abnormal screening mammograms receiving follow-up diagnostic imaging within 12 months of the screening mammogram (benchmark of 90% and above).

Timely follow-up imaging: The receipt of diagnostic imaging within 30 days of an abnormal screen, among those receiving diagnostic imaging within 12 months of the screen (benchmark of 90% and above).

Biopsy recommendation rate: The proportion of abnormal screening mammograms resulting in a recommendation for biopsy (benchmark of 8–20%).

Not lost at biopsy: The proportion of women with a biopsy recommendation that received a biopsy within 12 months of the abnormal screen (benchmark of 70% and above).

Timely biopsy: The receipt of a biopsy within 60 days of the abnormal screen, among those receiving a biopsy within 12 months of the screen (benchmark of 90% and above).
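The follow-up measures in this list (all except recall rate, which is a simple ratio of abnormal to total screens) share one subtlety: the two timeliness measures are conditional, using as denominator only patients who received imaging, or a biopsy, within 12 months. A minimal Python sketch, assuming hypothetical field names for the aggregate counts (they are not the labels on the actual data collection form), returns no value when a needed count is missing, matching the rule that facilities with missing data could not show that they met a benchmark.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class FollowUpCounts:
    """Hypothetical aggregate patient counts for one facility (field names are illustrative)."""
    abnormal: Optional[int]            # patients with an abnormal screen (BI-RADS 0, 4 or 5)
    imaged_12mo: Optional[int]         # ... with diagnostic imaging within 12 months
    imaged_30d: Optional[int]          # ... of whom imaged within 30 days of the screen
    biopsy_recommended: Optional[int]  # ... with a biopsy recommendation
    biopsied_12mo: Optional[int]       # ... of whom biopsied within 12 months of the screen
    biopsied_60d: Optional[int]        # ... of whom biopsied within 60 days of the screen


def ratio(num: Optional[int], den: Optional[int]) -> Optional[float]:
    """num/den, or None if either count is missing or the denominator is zero."""
    if num is None or den is None or den == 0:
        return None
    return num / den


def follow_up_measures(c: FollowUpCounts) -> dict[str, Optional[float]]:
    return {
        "not_lost_at_imaging": ratio(c.imaged_12mo, c.abnormal),
        # Conditional: denominator is patients imaged within 12 months.
        "timely_imaging": ratio(c.imaged_30d, c.imaged_12mo),
        "biopsy_recommendation_rate": ratio(c.biopsy_recommended, c.abnormal),
        "not_lost_at_biopsy": ratio(c.biopsied_12mo, c.biopsy_recommended),
        # Conditional: denominator is patients biopsied within 12 months.
        "timely_biopsy": ratio(c.biopsied_60d, c.biopsied_12mo),
    }


# Hypothetical facility that did not report biopsy timing.
example = FollowUpCounts(abnormal=100, imaged_12mo=90, imaged_30d=81,
                         biopsy_recommended=10, biopsied_12mo=8, biopsied_60d=None)
print(follow_up_measures(example))
```

With these made-up counts, imaging follow-up (90/100) and timely imaging (81/90) both sit exactly at the 90% benchmark, while timely biopsy is unavailable, so that facility could not show it met the timely biopsy benchmark.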

The following three measures of cancer detection were calculated for facilities that reported at least 1,000 screening mammograms during calendar year 2009:

Cancer if abnormal screen: The proportion of abnormal screens that received a breast cancer diagnosis within 12 months of the screen, also known as PPV1 (benchmark of 3–8%).

Cancer if biopsied: The proportion of patients biopsied following an abnormal screen that received a breast cancer diagnosis within 12 months of the screen, also known as PPV3 (benchmark of 15–40%).

Cancer detection rate: The number of breast cancers detected following an abnormal screen for every 1000 screening mammograms performed (benchmark of 3–10 per 1000).

The following two measures of early cancer detection were calculated for facilities that reported at least 10 screen-detected breast cancers during calendar year 2009:

Proportion minimal: The proportion of screen-detected breast cancers that were either in situ or no greater than 1 cm in largest diameter (benchmark of >30%). Breast cancers with unknown minimal status were excluded from both numerator and denominator of this measure. While we attempted to collect information on lymph node status for minimal cancers, many institutions were unable to provide these data reliably, so we did not include lymph node status in our definition of minimal cancer.

Proportion early stage: The proportion of screen-detected breast cancers that were either in situ or stage 1 (benchmark of >50%). Breast cancers with unknown stage were excluded from both numerator and denominator of this measure.
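Both early-detection measures drop cancers of unknown status from numerator and denominator, and apply only to facilities with at least 10 screen-detected cancers. A minimal Python sketch of those two rules follows; the function and argument names are hypothetical, and we assume the 10-cancer threshold counts all reported screen-detected cancers, including those of unknown status.

```python
from typing import Optional


def early_detection_proportion(n_cancers: int, n_meeting: int, n_unknown: int,
                               min_cancers: int = 10) -> Optional[float]:
    """Proportion of screen-detected cancers that are minimal (or early stage).

    n_cancers: all screen-detected cancers reported by the facility
    n_meeting: cancers known to be minimal (or known to be in situ/stage 1)
    n_unknown: cancers whose size (or stage) is unknown
    """
    # Facilities reporting fewer than `min_cancers` cancers are not evaluated.
    if n_cancers < min_cancers:
        return None
    # Cancers of unknown status are removed from numerator and denominator.
    n_known = n_cancers - n_unknown
    if n_known <= 0:
        return None
    return n_meeting / n_known


# Hypothetical facility: 12 cancers, 5 known minimal, 2 of unknown size.
minimal = early_detection_proportion(12, 5, 2)
print(minimal, minimal is not None and minimal > 0.30)  # 0.5 True
```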

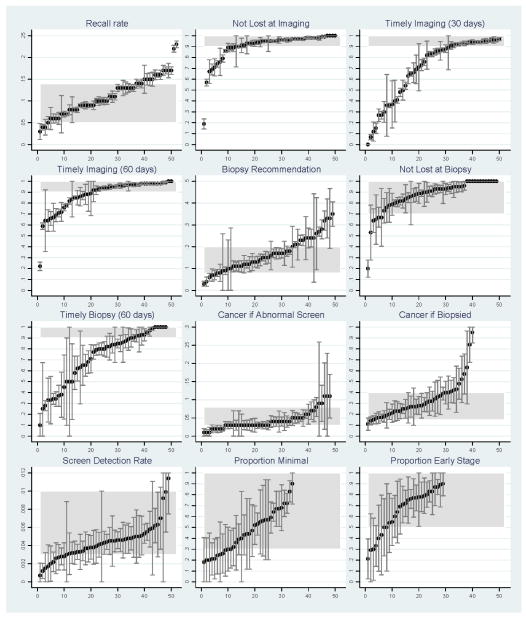

We calculated each of the above 11 measures separately for each institution when both numerator and denominator data were available. Facility estimates for each measure were then plotted along with corresponding 95% confidence intervals to depict both the range of values encountered and the stability of those estimates. Each plot was overlaid with a shaded area identifying values within the acceptable range for the benchmark (Figure 2). Next, for each measure, we calculated the percentage of facilities that were able to show that they met each benchmark. Facilities with incomplete or missing data on a given measure were defined as not able to show they met that benchmark. These results are presented in Table 1.

FIGURE 2.

Screening process measures and 95% confidence intervals for each institution, against benchmark ranges (shaded area). For each graph, facilities are ordered from smallest to largest value of the corresponding measure, therefore the ordering of specific facilities differs from graph to graph.
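The text does not state how the 95% confidence intervals in Figure 2 were computed. As an assumption, the sketch below uses the Wilson score interval, one common choice for a binomial proportion, together with a benchmark check that judges the point estimate (also an assumption) and treats a missing estimate as not met. Bounds are inclusive here; for the strict benchmarks (>30% minimal, >50% early stage) this differs only when the estimate equals the boundary exactly.

```python
import math
from typing import Optional

Z_95 = 1.959963984540054  # two-sided 95% standard normal quantile


def wilson_ci(successes: int, n: int, z: float = Z_95) -> tuple[float, float]:
    """Wilson score interval for the binomial proportion successes/n."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = successes / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return max(0.0, centre - half_width), min(1.0, centre + half_width)


def meets_benchmark(estimate: Optional[float],
                    low: Optional[float] = None,
                    high: Optional[float] = None) -> bool:
    """True if the point estimate lies in [low, high]; a missing estimate counts as not met."""
    if estimate is None:
        return False
    if low is not None and estimate < low:
        return False
    if high is not None and estimate > high:
        return False
    return True


# Hypothetical facility: 27 of 30 imaged patients seen within 30 days.
lo, hi = wilson_ci(27, 30)
print(f"{27 / 30:.1%} (95% CI {lo:.1%} to {hi:.1%})")
print(meets_benchmark(27 / 30, low=0.90))  # True
```

Small denominators give wide intervals, which is why estimates for facilities with few cancers were the least stable.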

Table 1.

Percentage of facilities able to show they met specific benchmarks for mammography screening during Calendar Year 2009

| Measure | Benchmark | Shown to meet benchmark (N) | Shown to meet benchmark (%) |
|---|---|---|---|
| All facilities (N=52) | | | |
| Recall rate | 5–14% | 36 | 69 |
| Not lost at imaging | ≥90% | 38 | 73 |
| Timely diagnostic imaging | ≥90% | 32 | 62 |
| Biopsy recommendation rate | 8–20% | 29 | 56 |
| Not lost at biopsy | ≥70% | 43 | 83 |
| Timely diagnostic biopsy | ≥90% | 14 | 27 |
| Cancer detection (N=45 facilities with at least 1000 screens) | | | |
| Cancer if abnormal screen (PPV1) | 3–8% | 34 | 67 |
| Cancer if biopsied (PPV3) | 15–40% | 29 | 56 |
| Cancer detection rate | 3–10 per 1000 | 34 | 73 |
| Early detection (N=41 facilities with at least 10 detected cancers) | | | |
| Proportion minimal | >30% | 22 | 54 |
| Proportion early stage | >50% | 22 | 54 |

Facility designation and benchmarks met

We tabulated the percentage of facilities that met each benchmark by facility designation status as assigned by the American College of Surgeons Commission on Cancer (ACS CoC) (http://www.facs.org/cancer/index.html) and the National Comprehensive Cancer Network (NCCN) (http://www.nccn.com/cancer-center.html). We compared the 24 facilities with ACS CoC designation to the 28 facilities without such designation on each benchmark. We also compared the 10 facilities with NCCN designation to the 42 facilities without such designation. Facilities were also categorized by whether they were hospitals meeting the criteria for disproportionate share hospitals (N=12) or public facilities serving predominantly uninsured patients (N=6). These 18 facilities (hereafter collectively referred to as disproportionate share facilities) were compared with the remaining 34 facilities on benchmarks met.
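Tables 2 and 3 report P-values for differences between facility groups in the percentage meeting each benchmark, but the text does not name the test used. For small 2×2 tables of facility counts, Fisher's exact test is one standard option; the sketch below implements its two-sided form with the standard library only. It is an assumption, not necessarily the authors' method, and counts back-calculated from the rounded percentages in the tables need not reproduce the published P-values.

```python
from math import comb


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher exact P-value for the 2x2 table [[a, b], [c, d]].

    Illustrative layout: rows = designated / not designated,
    columns = met benchmark / did not meet benchmark.
    """
    row1, row2 = a + b, c + d
    col1 = a + c
    total = comb(row1 + row2, col1)

    def prob(x: int) -> float:
        # Hypergeometric probability of x 'met' facilities in row 1, margins fixed.
        return comb(row1, x) * comb(row2, col1 - x) / total

    p_obs = prob(a)
    lo, hi = max(0, col1 - row2), min(row1, col1)
    # Sum the probabilities of all tables no more likely than the observed one
    # (small relative tolerance guards against floating-point ties).
    p = sum(prob(x) for x in range(lo, hi + 1) if prob(x) <= p_obs * (1 + 1e-7))
    return min(1.0, p)


# Hypothetical groups: 8 of 10 designated vs 3 of 10 other facilities met a benchmark.
print(fisher_exact_two_sided(8, 2, 3, 7))
```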

RESULTS

Fifty-two mammography facilities contributed data on a total of 330,806 screening mammograms (mean 6,362, range 136–23,898). These facilities represented 27% of facilities providing mammography in the six-county area in 2009. In the city of Chicago, about half (25/49) of mammography facilities participated. There was wide variation across facilities in the measures calculated (Table 1). The percentage of institutions meeting each benchmark varied from a low of 27% (receipt of a timely biopsy, defined as within 60 days of the screen) to a high of 83% (receipt of a biopsy if recommended) (Table 1).

The benchmark for recall rate was met by approximately 70% of facilities (Table 1); among facilities outside the benchmark range, more fell above it than below it (Figure 2). The benchmarks for not lost to follow-up at diagnostic imaging and biopsy were met by 73% and 83% of facilities, respectively (Table 1).

The benchmark for timely diagnostic imaging among patients not lost to follow-up was met by 62% of facilities. The remaining facilities showed a wide range of suboptimal values: some were fairly close to meeting the benchmark, but many others fell far below the benchmark of 90% or more within 30 days of the screen. A similar pattern was observed for timeliness of biopsy. For both measures, estimates were stable enough to suggest real deficiencies in timeliness of care (Figure 2).

Between one-half and three-fourths of facilities could show that they met benchmarks for screen-detected cancers among abnormal screens (PPV1), among biopsied patients (PPV3), and for all screens combined (cancer detection rate). For many institutions, the cancer rate among abnormal screens tended to be below or near the low end of the benchmark range, and many institutions were likewise below or at the low end of the benchmark range for cancer detection rate. While roughly half of institutions could show that they met benchmarks for early detection of breast cancer (proportion minimal and proportion early stage), the stability and precision of these estimates were limited by the small number of cancers detected at many institutions (Figure 2).

Facility designation and benchmarks met

Twenty-four of our 52 participating facilities were accredited through the ACS CoC, and 10 of these 24 were also accredited by the National Consortium of Breast Centers (NCBC). Facilities accredited through either body were more likely than non-accredited facilities to meet benchmarks related to biopsy and cancer detection (Table 2). Most notably, 79% of ACS CoC centers met the benchmark for early stage detection, compared with only 14% of facilities not accredited through ACS CoC (80% vs. 36%, respectively, for NCBC accreditation). Disproportionate share facilities, on the other hand, were less likely than other facilities to meet specific benchmarks, including those related to follow-up and timeliness of imaging and timeliness of biopsy (Table 3).

Table 2.

Percentage of facilities meeting each benchmark, by facility designation.

| Measure | ACS CoC: No (N=28) | ACS CoC: Yes (N=24) | NCBC: No (N=42) | NCBC: Yes¹ (N=10) |
|---|---|---|---|---|
| Recall rate | 68 | 71 | 67 | 80 |
| Not lost at imaging | 71 | 75 | 71 | 80 |
| Timely imaging | 64 | 58 | 60 | 70 |
| Biopsy recommendation | 57 | 54 | 57 | 50 |
| Not lost at biopsy | 75 | 92 + | 81 | 90 |
| Timely biopsy | 18 | 38 + | 19 | 60 ** |
| Cancer among biopsied | 39 | 75 * | 52 | 70 |
| Cancer among abnormal screens | 57 | 79 * | 64 | 80 |
| Cancer detection rate | 68 | 79 | 71 | 80 |
| Early stage cancers | 14 | 79 *** | 36 | 80 ** |
| Minimal cancers | 32 | 67 ** | 40 | 80 * |

Significance: + P≤0.20; * P≤0.10; ** P<0.01; *** P<0.001.

¹ Of the 24 ACS CoC-accredited centers, 10 are also accredited through NCBC.

Table 3.

Percentage of facilities meeting each benchmark, by facility designation as disproportionate share

| Measure | Not disproportionate share (N=34), % shown to meet | Disproportionate share (N=18), % shown to meet | P-value |
|---|---|---|---|
| Recall rate | 76 | 56 | 0.12 |
| Not lost at imaging | 82 | 56 | 0.04 |
| Timely imaging | 76 | 33 | 0.002 |
| Biopsy recommendation | 59 | 50 | |
| Not lost at biopsy | 85 | 78 | |
| Timely biopsy | 35 | 11 | 0.06 |
| Cancer among biopsied | 62 | 44 | |
| Cancer among abnormal screens | 85 | 33 | 0.001 |
| Cancer detection rate | 76 | 67 | |
| Early stage cancers | 50 | 33 | |
| Minimal cancers | 53 | 39 | |

P-values >0.20 are suppressed. Six public facilities were included in the definition of disproportionate share.

DISCUSSION

A high quality screening mammography program should find breast cancer when it exists, find it early, when it is small and treatment can be more effective, and ensure that a woman whose mammogram shows something suspicious receives prompt follow-up. The goal of this study was to examine the extent to which institutions are able to collect the data required to measure mammography quality, and whether they could demonstrate, according to established benchmarks, that they were performing high quality screening mammography.

Whether a facility met a particular benchmark can reflect two distinct processes: the quality of care and the quality of the data submitted to the Consortium. Certain benchmarks, such as proportion minimal, proportion early stage and cancer detection rate, are also likely to be sensitive to the patient mix, in particular how regularly the patient population is screened and its age distribution. However, looking at these measures together can give a reasonable indication of whether quality issues are present. For instance, at a disproportionate share facility, where the patient population is likely to be less regularly screened, one may expect the cancer detection rate to be higher. With such a population mix, however, one also expects the proportion of minimal and early stage cancers detected to be lower. If, on the other hand, both the cancer detection rate and the proportion of minimal and early stage cancers are low, this could imply that some cancers are being missed. Facilities do not readily have data on how well screened their patient population is, and this is a limitation of our analysis.

The ability to accurately measure the quality of breast-related health care provided to patients depends crucially on the extent to which institutions are able to collect the accurate data required to measure mammography quality. For facilities that fulfill only the minimum requirements of MQSA, accuracy of data collection is potentially threatened by insufficient follow-up of abnormal screening results, and possibly by incomplete or nonexistent differentiation between screening and diagnostic mammograms. Facilities contributing data for these analyses used a wide range of systems for tracking screening mammogram results and diagnostic follow-up, from rudimentary ad hoc methods to state-of-the-art commercial electronic mammography databases. In addition, while many institutions had no staff time allocated to tracking abnormal mammograms, others allocated a full-time staff member for this purpose. This lack of standardization in data collection systems and staffing resources would be expected to produce variation in the quality of data submitted to this or any similar quality improvement effort. Different measures pose different levels of difficulty for data collection and tracking, and what follows is a description of our results interpreted in the context of possible data collection inaccuracies.

Recall rate was defined as the proportion of screening mammograms interpreted as BI-RADS category 0, 4, or 5, which by definition require diagnostic follow-up imaging and/or biopsy. According to the American College of Radiology, the percentage of patients recalled following a screening mammogram should be 10% or less [13]. The federal government is considering instituting a reimbursement policy that favors recall rates not exceeding 14%. Some research studies suggest that recall rates of around 5% achieve the best trade-off between sensitivity and positive predictive value [14, 15]. For these analyses, the benchmark for recall rate was met if no less than 5% and no greater than 14% of screening mammograms were interpreted as abnormal. We observed institutions at both ends of the spectrum, with recall rates that were too low or too high, and these results could be used to determine whether there is a need to improve the quality of mammography interpretation. For example, a low recall rate could be a function of a highly screened population, but could also suggest insensitive interpretation of screening mammograms. Too high a recall rate could be a function of an infrequently screened population or a patient population with less access to prior images, but could also suggest that too many patients are being worked up, resulting in excessive morbidity and financial costs.

Loss to follow-up at either follow-up imaging or biopsy could simply reflect patients who leave the facility to obtain follow-up care elsewhere. However, for a facility to measure and improve the effectiveness of its screening program, obtaining this follow-up information from other institutions or healthcare providers is critical.

The benefits of routine screening could be diminished by long delays in receiving follow-up care, and we observed institutions with apparent deficits in timeliness of care. A qualitative analysis of a subset of sites revealed that all sites reported following MQSA requirements to send screening results to patients and follow-up letters to those with abnormal results, but fewer than half attempted to contact all screening patients with abnormal results by telephone (Weldon C et al., unpublished results). This general observation could help to explain why timeliness of follow-up appeared problematic for many sites [16].

To assess the effectiveness of detecting early and minimal-sized cancers, we asked for the proportion of screen-detected breast cancers that were minimal and the proportion that were early stage, excluding from our calculations facilities detecting fewer than 10 cancers. Only about half of the remaining facilities were shown to meet each of these two benchmarks. Insufficient rates of early detection could be a function of an infrequently screened population, but could also suggest that many women are having early stage breast cancers missed on a prior mammogram, only to have them detected on a subsequent mammogram at a later stage. This is in line with other research by our group, in which prior images from women diagnosed with breast cancer were analyzed for potentially missed breast cancer. That research found higher rates of poorer quality imaging and potentially missed detection for publicly insured women, poor women and women with less education, indicating that these groups were accessing lower quality mammography [17, 18].

There are other limitations to our study in addition to those noted above. Only 27% of facilities in the six-county area and half of mammography facilities in the city of Chicago participated in this voluntary effort. Nonetheless, participants represented a range of public, private and academic facilities not typically seen in mammography quality efforts of this type, which tend to be heavily weighted toward academic and higher-resource facilities. It is possible that institutions more confident in the quality of their data were more likely than others to participate, in which case these results might be overly optimistic regarding the ability of institutions more generally to meet quality benchmarks.

In conclusion, we found that most institutions are not tracking additional data beyond what is necessary to meet minimum MQSA requirements. The minimum MQSA requirements, in and of themselves, are not useful for understanding an institution’s mammography screening process, either from the perspective of the quality of radiologists’ interpretations or from the perspective of timeliness of follow-up and linkage to biopsy services where necessary. As a result, while the data were generally consistent with many quality deficits, these same data were frequently insufficient to make definitive statements about mammography quality. The measures likely least affected by insufficient tracking were those pertaining to recall of abnormal screening results and timeliness of diagnostic imaging and biopsy, all of which were, at least in theory, based on well-defined denominators. These measures strongly suggest that recall is sometimes too low or too high, and that timeliness is a real problem across many facilities. To tease apart issues of tracking from issues of care quality, it is crucial that complete tracking be performed so that results can be interpreted solely in terms of quality of care. This will only happen in a general sense if MQSA is reauthorized and its guidelines for tracking outcomes and measuring quality indicators are strengthened to better reflect actual quality of care, as previously suggested by the National Cancer Policy Board in 2005 [11].

Acknowledgments

This work was funded by a grant to the University of Illinois at Chicago from the Agency for Healthcare Research and Quality (Grant # 1 R01 HS018366-01A1), and by grants to the Metropolitan Chicago Breast Cancer Task Force from Susan G. Komen for the Cure and the Avon Foundation for Women. We would like to thank all of the institutions and their staff who collected data for this effort.

References

1. Ansell D, Grabler P, Whitman S, Ferrans C, Burgess-Bishop J, Murray LR, Rao R, Marcus E. A community effort to reduce the black/white breast cancer mortality disparity in Chicago. Cancer Causes Control. 2009;20:1681–8. doi: 10.1007/s10552-009-9419-7.

2. Carey LA, Perou CM, Livasy CA, Dressler LG, Cowan D, Conway K, Karaca G, Troester MA, Tse CK, Edmiston S, Deming SL, Geradts J, Cheang MCU, Nielsen TO, Moorman PG, Earp HS, Millikan RC. Race, breast cancer subtypes, and survival in the Carolina Breast Cancer Study. JAMA. 2006;295:2492–502. doi: 10.1001/jama.295.21.2492.

3. Cunningham JE, Montero AJ, Garrett-Mayer E, Berkel HJ, Ely B. Racial differences in the incidence of breast cancer subtypes defined by combined histologic grade and hormone receptor status. Cancer Causes Control. 2010;21:399–409. doi: 10.1007/s10552-009-9472-2.

4. Ooi SL, Martinez ME, Li CI. Disparities in breast cancer characteristics and outcomes by race/ethnicity. Breast Cancer Res Treat. 2011;127:729–38. doi: 10.1007/s10549-010-1191-6.

5. Sabatino SA, CRJ, Uhler RJ, Breen N, Tangka F, Shaw KM. Disparities in mammography use among US women aged 40–64 years, by race, ethnicity, income, and health insurance status, 1993 and 2005. Med Care. 2008;46:692–700. doi: 10.1097/MLR.0b013e31817893b1.

6. Elmore JG, Nakano CY, Linden HM, Reisch LM, Ayanian JZ, Larson EB. Racial inequities in the timing of breast cancer detection, diagnosis, and initiation of treatment. Med Care. 2005;43:141–8. doi: 10.1097/00005650-200502000-00007.

7. Rauscher GH, Allgood KL, Whitman S, Conant E. Disparities in screening mammography services by race/ethnicity and health insurance. J Womens Health. 2012;21:154–60. doi: 10.1089/jwh.2010.2415.

8. Gray JE. Mammography (and Radiology?) is still Plagued with Poor Quality in Photographic Processing and Darkroom Fog. Radiology. 1994;191(2):318–19. doi: 10.1148/radiology.191.2.8153299.

9. Mammography Quality Standards Reauthorization Act of 1998 (MQSRA) (Public Law 105–248). Available at http://www.fda.gov/RadiationEmittingProducts/MammographyQualityStandardsActandProgram/Regulations/ucm110823.htm [accessed 5/18/2012].

10. Monsees BS. The Mammography Quality Standards Act: An overview of the regulations and guidance. Radiologic Clinics of North America. 2000;38(4):759–72. doi: 10.1016/s0033-8389(05)70199-8.

11. Nass S, Ball J, editors. Improving Breast Imaging Quality Standards. Committee on Improving Mammography Quality Standards, National Cancer Policy Board. Washington, DC: The National Academies Press; 2005.

12. Breast Cancer Surveillance Consortium. Website: breastscreening.cancer.gov [accessed 2/4/2013].

13. Rosenberg RD, Yankaskas BC, Abraham LA, et al. Performance benchmarks for screening mammography. Radiology. 2006;241:55–66. doi: 10.1148/radiol.2411051504.

14. Schell MJ, Yankaskas BC, Ballard-Barbash R, et al. Evidence-based target recall rates for screening mammography. Radiology. 2007;243:681–9. doi: 10.1148/radiol.2433060372.

15. Yankaskas BC, Cleveland RJ, Schell MJ, Kozar R. Association of recall rates with sensitivity and positive predictive values of screening mammography. Am J Roentgenol. 2001;177:543–9. doi: 10.2214/ajr.177.3.1770543.

16. Goel A, George J, Burack RC. Telephone Reminders Increase Re-Screening in a County Breast Screening Program. Journal of Health Care for the Poor and Underserved. 2008;19(2):512–521. doi: 10.1353/hpu.0.0025.

17. Rauscher GH, Conant EF, Khan J, Berbaum ML. Mammogram image quality as a potential contributor to disparities in breast cancer stage at diagnosis: an observational study. BMC Cancer. 2013. doi: 10.1186/1471-2407-13-208. (In press)

18. Rauscher GH, Khan J, Berbaum ML, Conant EF. Potentially missed detection with screening mammography: does the quality of radiologist’s interpretation vary by patient socioeconomic advantage/disadvantage? Annals of Epidemiology. 2012;23(4):210–214. doi: 10.1016/j.annepidem.2013.01.006.