Abstract

Purpose.

Detecting and recognizing three-dimensional (3D) objects is an important component of the visual accessibility of public spaces for people with impaired vision. The present study investigated the impact of environmental factors and object properties on the recognition of objects by subjects who viewed physical objects with severely reduced acuity.

Methods.

The experiment was conducted in an indoor testing space. We examined detection and identification of simple convex objects by normally sighted subjects wearing diffusing goggles that reduced effective acuity to 20/900. We used psychophysical methods to examine the effect on performance of important environmental variables: viewing distance (10 to 24 feet, or 3.05–7.32 m) and illumination (overhead fluorescent and artificial window), and of object variables: shape (boxes and cylinders), size (heights of 2 to 6 feet, or 0.61–1.83 m), and color (gray and white).

Results.

Object identification was significantly affected by distance, color, height, and shape, as well as interactions between illumination, color, and shape. A stepwise regression analysis showed that 64% of the variability in identification could be explained by object contrast values (58%) and object visual angle (6%).

Conclusions.

When acuity is severely limited, illumination, distance, color, height, and shape influence the identification and detection of simple 3D objects. These effects can be explained in large part by the impact of these variables on object contrast and visual angle. Basic design principles for improving object visibility are discussed.

This research examines the effects of illumination, viewing distance, color, height, and shape on the visibility of simple convex objects in low-resolution viewing conditions. Basic design principles for improving object visibility are discussed.

Introduction

The visual accessibility of a space has been defined as “the effectiveness with which vision can be used to travel safely through the space and to pursue the intended activities in the space.”1 A major issue for visual accessibility is the degree to which the objects within spaces are easily and safely identifiable and detectable by people with visual impairment. Visual accessibility includes two major issues: safety and navigability. Much of today's landscape architecture and building architecture has been built with insufficient consideration for the increasing visually impaired population. Simple means for enhancing visibility of objects and improving the accessibility of architectural spaces have been described by Arditi and Brabyn.2 For example, these authors noted that enhancing edge contrast of steps and other obstacles would improve visibility for those with impaired vision. An ultimate goal of our research on visual accessibility is to create design tools and theoretical support for the creation and optimization of visually accessible spaces. This would include architectural design principles for making key features of spaces more visible for a majority of visually impaired users, and would provide suggestions to designers for how to create and evaluate existing, retrofitted, and new construction projects in residential and public spaces.

The research presented here examines the effects of illumination, viewing distance, color, height, and shape on the identification and detectability of simple convex objects, boxes and cylinders, with severely reduced visual acuity. We tested normally sighted participants with artificially reduced acuity (wearing goggles fitted with Bangerter occlusion foils [model number <0.1; Fresnel Prism & Lens Co., Eden Prairie, MN]) rather than low-vision subjects in order to minimize individual variability. Related research in our lab is examining the generalization of results obtained with artificial acuity reduction to real low vision (Bochsler TM, Legge GE, Gage R, Kallie CS, manuscript submitted, 2012). Our purpose was to address the impact of important visual variables on object visibility under conditions of low spatial resolution and image contrast, which are generic factors that are often a consequence of low vision. We chose simple convex objects, a simple uniform gray background and gray floor layout, and artificial acuity reduction in this experiment to avoid some of the complexity associated with natural environments and real visual deficits. We reasoned that once we understood the results presented here, we would be better prepared to perform future experiments with visually impaired participants, and understand the effects of more complex real or realistically rendered environments.

We tested the effects of two environmental variables, illumination and viewing distance, and three object variables, color, height, and shape. We briefly review the rationale for examining these factors in the following subsections.

Illumination

Overall changes of illumination within a scene can have major effects on the visibility of objects. Kuyk et al.3 studied the performance of 88 low-vision subjects who walked through an obstacle course. When overall illumination was reduced from photopic to mesopic levels, the mean time to complete the obstacle course and the mean number of contacts with obstacles doubled. Another study found that under low illumination, negotiating a step was more difficult for elders than for younger individuals, even though the elders had good clinical visual acuities.4

Because we are concerned with architectural design principles, we focused on lighting arrangement rather than overall illumination level. Architects make decisions on the location and type of lighting in indoor spaces, while keeping overall illumination at required photopic levels. The Lighting Handbook5 recommends a wide range of illuminance values for different spaces and circumstances, including: safety and environmental requirements, adaptation, usage and performance requirements, activity levels, and the most common age of users. As an example, recommended illuminance values for educational building lobbies range between 25 and 200 lux.5

We reasoned that the differences in illumination (diffuse illumination versus localized directional illumination) would produce an effect on object identification and detectability. Specifically, we predicted that the even illumination provided by overhead fluorescent luminaires would produce less object visibility than the direct illumination from our artificial windows. Many people have the intuition that flat ambient illumination is better than directional lighting, and in some cases (e.g., to minimize glare) this intuition is likely to be correct. However, diffuse illumination might lower contrasts for objects. Directional lighting is more likely to produce visible luminance gradients on curved surfaces from self-occlusions between the surface and light source. In our previous study on the visibility of ramps and steps, we found relatively weak effects of lighting arrangement, but one directional lighting condition (far window condition) produced slightly better performance than overhead lighting.1 In the present study, we predicted that there would be overall increases of contrasts in and around the target objects with window illumination, due to the directional nature of the light source, and consequently, we predicted that window illumination would produce better human performance.

Distance

Viewing distance effects are important for walking. As illustrated by Sedgwick, it is ecologically important for people to estimate the distances of objects that are resting on the ground.6 Pedestrians must be able to see an object (or obstacle) early enough to avoid a collision and to plan a route around it. A number of studies showed that people slow down,4,7,8 or display more careful stepping behaviors,9 when experiencing reductions in vision. Those findings suggest that people slow down in order to maintain necessary reaction times to avoid collisions. The detection distance problem has also been studied in the context of orientation and mobility (O&M) training efficacy.10,11 Goodrich and Ludt10 noted the importance of detecting obstacles early enough to avoid incidents. In one training study,11 the authors were able to train the majority of visually impaired pedestrians to detect drop-offs, obstacles placed on the ground, and overhangs at sufficient distances to avoid collisions.

Under low-resolution viewing, visibility at distances that allow reasonable response times during walking is important for visual accessibility. We predicted that closer objects would be more detectable and recognizable than distant objects because of their larger angular size on the retina.

Color

Color can also play an important role in low-vision object recognition,12 and possibly object detection. In a study comparing normally sighted individuals and AMD participants, those with AMD showed an increased ability to identify objects in scenes with color (versus gray scale equivalents).13 Similarly, a meta-analysis (examining a variety of recognition tasks including both normal and low-vision experiments) of color (versus gray scale) and object recognition showed that color enhances object recognition.14 In the two aforementioned studies, color referred to hue and saturation. In the present study, “color” refers to gray and white objects.

In our study, we tested the effects of gray and white objects against a gray background, predicting that white objects would be more detectable, on average, than gray objects. We made this prediction because white objects create higher edge contrast with our gray background (under the majority of our lighting conditions). Internal contrast may also be more visible on white objects than on the darker (gray) objects. All else being equal, luminance ratios, and hence internal contrasts, should be the same across gray and white objects. However, it is possible that the same contrast on a brighter surface may be slightly more visible than on a darker surface. For example, using the Atick and Redlich contrast sensitivity model,15 for a sinusoidal contrast grating of 10 cycles per degree, a 10% contrast gradient is easily visible at 10 cd/m², but at 1 cd/m², the gradient is close to threshold visibility, and becomes invisible at 0.1 cd/m².

Height

In the present study, the objects were placed on the ground plane. They varied in height from 2 to 6 feet (0.61–1.83 m). Similar to our prediction for distance, we predicted that taller objects would be more identifiable and detectable than shorter objects, due to their larger visual angles.

Shape

We restricted attention to convex objects (i.e., objects without any concavities) to avoid complications of self-occlusion within concavities. We chose boxes and cylinders because they possess two different basic surface types: flat and curved. When flat objects are illuminated from afar (e.g., a box illuminated by a luminaire located a few meters away), each of the reflecting flat surfaces of the box will have relatively uniform surface luminance and, hence, low internal contrast. However, a cylinder of the same size and position, seen from the same viewpoint will produce a higher luminance gradient across its curved surface, potentially producing an internal contrast cue. For this reason, if internal contrast were relevant to object detection, we would expect cylinders to be more identifiable and detectable than boxes.

Interactions

We have made predictions about the main effects of our independent variables. In addition, from informal observations of our stimuli, we expected to find noteworthy interactions, which will be discussed in the Results section.

Contrast

We based some of our predictions listed above on contrast because of its presumptive importance in identification and detection. Even mild contrast reductions in everyday scenes, especially those with veiling light producing glare, can have detrimental effects on object detection.16 Arditi and Brabyn2 noted that universal design principles for visual accessibility should address (and increase) contrast for obstacles and landmark features. They specifically noted that using lighting and color to increase contrast for steps and curbs is necessary to make those objects more visible for both visually impaired and normally sighted people.

Contrast between object and background has been studied in AMD patients, showing that enhanced contrast between object and background had a positive effect on detection of objects in two-dimensional (2D) images.17,18 Kuyk et al.3 noted that their low-vision subjects made more contacts with low contrast objects than high contrast objects while walking through an obstacle course.

To summarize, we were interested in the impact of low resolution (low acuity) on real world object visibility. We investigated the impact on object recognition and detection of important environmental variables (lighting arrangement and viewing distance) and object variables (shape, color, and size). In making predictions, we were guided by the expectation that stimulus contrast and angular size would determine performance.

Methods

Participants

Eleven normally sighted young adults (University of Minnesota-Twin Cities college undergraduates) were recruited on campus and were paid for their participation. All participants had normal or corrected-to-normal visual acuity and no known eye problems. Participants gave informed consent. The experimental protocol was approved by the University of Minnesota's institutional review board and followed the tenets of the Declaration of Helsinki.

Materials

Environment.

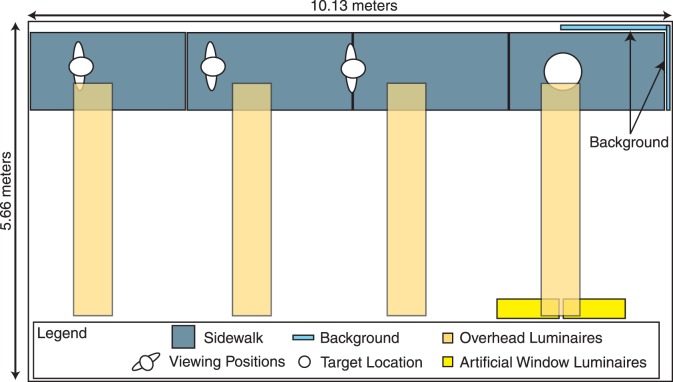

A 4-foot wide by 32-foot long by 16-inch high (1.22-m wide by 9.75-m long by 0.41-m high) sidewalk was constructed out of gray painted 4 by 8 feet (1.22 by 2.44 meter) wooden staging platforms. Gray paint was Valspar satin light gray porch and floor enamel (The Valspar Corporation, Chicago, IL). The platforms were placed lengthwise along the long side of a 10.13 by 5.66 m classroom in a layout shown in Figure 1 (schematic of room, objects, and viewing positions), and Figure 2 (photographs from the 24-foot, or 7.32-m viewing location). Polyester “silver gray” colored, felt fabric was hung at the end of the sidewalk forming the visual background for the target objects. The felt was nearly the same color as the gray paint. A 1.37 by 2.29 m piece of felt was hung at the far end, and a 1.27 by 2.29 m piece was hung on the adjacent side wall near the end (seen in Figs. 1–3). The purpose of the felt was to create a uniform background, reducing contrast cues brought on by irregular background features.

Figure 1.

Experimental Setup. This diagram shows the dimensions and essential objects in our testing space.

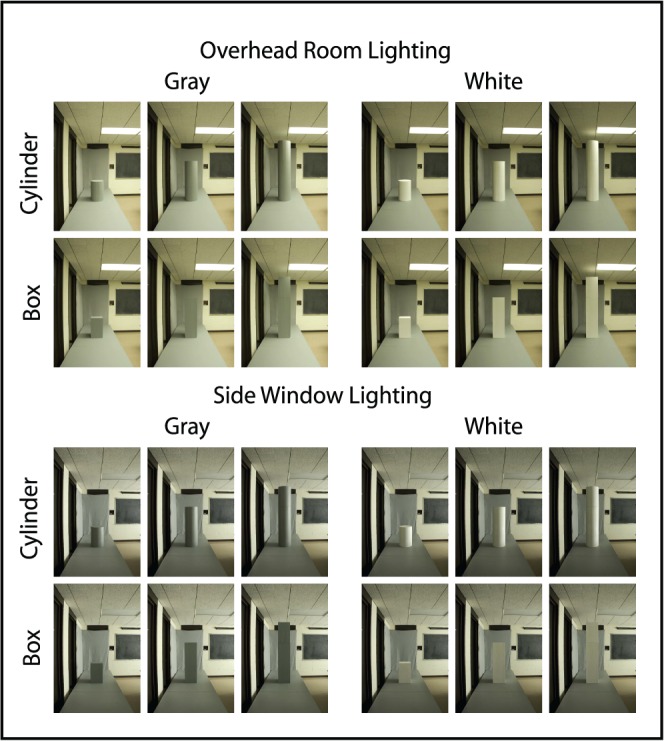

Figure 2.

Stimuli. This figure shows the experimental stimuli from one viewing distance, including the two lighting conditions, two colors, two shapes, and three heights.

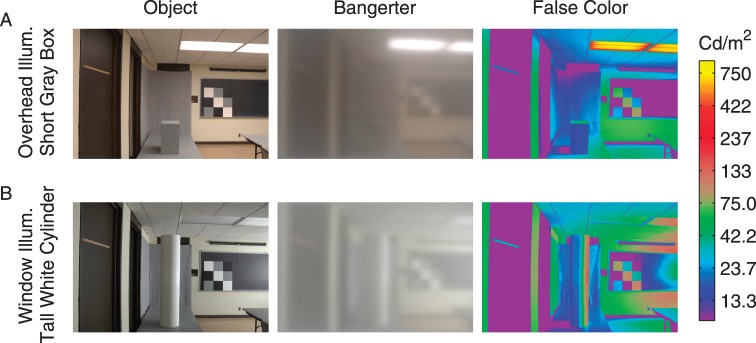

Figure 3.

Bangerter-filtered and false-colored stimuli. (A) shows one of the least detectable conditions (overhead illumination, short gray box), and (B) shows one of the most detectable conditions (window illumination, tall white cylinder). The same scenes are shown through a digital Bangerter filter approximation (center column, for demonstration purposes), illustrating what our research subjects saw through the goggles. The Bangerter photos are based on a digital approximation of the physical filters. Finally, luminance-mapped false color images are shown in the right column. All images were created using Anyhere Software; blur filtered images were created using a Nikon SLR, Anyhere Software, and Matlab.

Blur.

Using two overlapping Bangerter occlusion foils (model number <0.1; Fresnel Prism & Lens Co.), blur goggles were constructed from a pair of welding goggles. Individual foils were found to decrease acuity and contrast sensitivity,19 and our double layering of the foils produced an approximate Snellen acuity of 20/900, determined from measurements with the Lighthouse Distance Visual Acuity Chart, and Pelli-Robson contrast sensitivity of 0.6, previously measured in our lab.1 This level of severe acuity reduction would be categorized as “profound low vision” by ICD-9-CM (International Classification of Disease, Ninth Revision, Clinical Modification),20 and by the International Council of Ophthalmology (ICO).21 We chose to study severe blur for two reasons. First, a population-based study of 2520 older adults indicated that people were not disabled in mobility tasks unless acuity was poorer than 20/200.22 Second, pilot testing with milder blur (one Bangerter occlusion foil [model number <0.1; Fresnel Prism & Lens Co.]) with an equivalent acuity of 20/140 yielded performance levels too close to ceiling in our task to be useful. We note that, although people with profound low vision often use nonvisual mobility aids such as a white cane or dog guide, they also use their remaining vision. O&M specialists have often noted the preference of people to rely on residual vision, sometimes to their detriment.11
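The Snellen fractions quoted above can be restated as minimum angle of resolution (MAR) and logMAR using the standard convention that 20/20 corresponds to a MAR of 1 arcmin. The following is a minimal sketch of that conversion; the function names are illustrative and are not taken from the study.

```python
import math

def snellen_to_mar(numerator: float, denominator: float) -> float:
    """Minimum angle of resolution in arcmin (20/20 corresponds to 1 arcmin)."""
    return denominator / numerator

def snellen_to_logmar(numerator: float, denominator: float) -> float:
    """Base-10 logarithm of the MAR."""
    return math.log10(snellen_to_mar(numerator, denominator))

# Acuity levels mentioned in the text (labels are descriptive only).
for label, denominator in [("two foils", 900), ("one foil", 140), ("mobility criterion", 200)]:
    mar = snellen_to_mar(20, denominator)
    logmar = snellen_to_logmar(20, denominator)
    print(f"20/{denominator} ({label}): MAR = {mar:.1f} arcmin, logMAR = {logmar:.2f}")
```

On this scale, the double-foil condition corresponds to a MAR of 45 arcmin, which is 4.5 times coarser than the 20/200 criterion.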

Digital approximations of two objects and scenes viewed through the goggles are shown in Figure 3 (All images were created using Anyhere Software [in the public domain, http://www.anyhere.com]; blur filtered images were created using a Nikon SLR [Nikon, Inc., Melville, NY], Anyhere Software, and Matlab [MATLAB R2011a; MathWorks, in the public domain, http://www.mathworks.com/products/matlab/]).

Stimuli.

Solid boxes and cylinders were constructed out of expanded polystyrene using a locally fabricated nichrome hot wire foam cutter. We reasoned that for a fair comparison of the effects of shape, boxes and cylinders should be matched for volumes and heights. In the real world, for example, a 120-L trash container has the same volume and height regardless of whether it has a rectangular or circular footprint. Boxes had square bases with 0.365-m sides, and cylinders were 0.406 m in diameter. Three object heights were 2, 4, and 6 feet (0.61, 1.22, and 1.83 m). Our short objects were similar in size to a typical bench, while medium height objects were similar in size to trash receptacles, and the tall objects were representative of larger objects such as people and structural building components. Photographs of the 12 objects are shown under the two lighting conditions (described in the next subsection) in Figure 2.
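As a rough check on the volume matching, the footprint areas implied by the stated dimensions can be compared directly. This sketch assumes ideal geometry (a perfect square base and a perfect circular base) and uses only the dimensions given above; the variable names are illustrative.

```python
import math

BOX_SIDE_M = 0.365            # side of the square base, from the text
CYLINDER_DIAMETER_M = 0.406   # cylinder diameter, from the text
HEIGHTS_M = (0.61, 1.22, 1.83)

box_area = BOX_SIDE_M ** 2
cylinder_area = math.pi * (CYLINDER_DIAMETER_M / 2) ** 2
area_ratio = box_area / cylinder_area  # equal heights, so this is also the volume ratio

for height in HEIGHTS_M:
    box_liters = box_area * height * 1000
    cylinder_liters = cylinder_area * height * 1000
    print(f"h = {height:.2f} m: box = {box_liters:.0f} L, cylinder = {cylinder_liters:.0f} L")

print(f"box / cylinder volume ratio = {area_ratio:.3f}")
```

Under these assumptions the two shapes agree in volume to within a few percent at every height.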

Box orientation was frontal planar, providing minimal internal object contrasts when illuminated from 2 m or more. Cylinders, by nature, reveal almost 180° of their profile from any viewing angle at moderate distances, providing an opportunity for self-occlusions with direct lighting, thereby maximizing internal contrast values. We chose only one orientation for boxes (frontal planar) because it produced a worst-case scenario for internal contrast. Other viewing angles of boxes would increase internal contrast.

Illumination.

Many residential, commercial, and institutional spaces make use of both artificial and natural lighting. Objects and spaces vary dramatically in their visual appearance across different lighting situations. We reasoned that two common lighting conditions would include typical institutional overhead fluorescent illumination and side illumination from windows (configurations shown in Fig. 1). Overhead lighting was pre-existing, fitted with recessed acrylic prismatic four-lamp SP41 fluorescent luminaires (GE Lighting, Cleveland, OH).

We simulated window lighting with two movable box-shaped luminaires built out of sheet metal and painted flat white on the inside, each containing twelve 25-W fluorescent tubes. A calendered acrylic sheet (Evonik Cyro LLC, Parsippany, NJ) was fixed to the 0.91 by 0.91-m apertures, which were 0.25 m in front of the fluorescent tubes. Tubes were SP65 fluorescents (GE Lighting). The windows produced room illumination similar to north-facing windows overlooking a sunny, snow-covered landscape. Mean luminance of each window was 785 cd/m².

Luminance levels for two of our viewing conditions, including targets and background, are shown in Figure 3, where false color images show pixel-based luminance levels of the target objects and backgrounds. The checkerboard pattern to the side of the target objects was used for photometric calibration and analysis. The average luminance for our experimental stimuli ranged between 34 and 77 cd/m².

Procedure.

Before the experiment, participants were shown all 12 objects without goggles. There were two lighting conditions and three distances, making six blocks. Viewing distances were 10, 17, and 24 feet (3.05, 5.18, and 7.32 m). Prior to the first trial of each block, at least one object of each height, shape, and color was presented to the participant without blur goggles at the seated testing position. This preview was intended to ensure that the participant was familiar with the appearance of objects for the condition in question. Following the preview, the participant put on earmuffs (Peltor; 3M, St. Paul, MN) with amplified white noise and the goggles. The earmuffs attenuated sound by 29 dB, and the white noise further masked any sounds made by the experimenter while placing objects on the stage. Viewing was monocular, with the dominant eye chosen by a pointing task. An opaque lens on the blur goggles occluded the other eye. We chose monocular vision because many visually impaired people rely on their better eye for visual tasks.

Before each trial, the participant was asked to turn his/her head toward the wall while the next test object was placed in position. Once the object was positioned, the participant was asked to turn his/her head and view the object for up to 4 seconds. After approximately 4 seconds, the participant was asked to turn away and respond, which rarely happened because participants almost always responded before the end of the 4-second viewing interval.

There were 15 trials in each block, including 12 objects (three heights by two colors by two shapes) and three catch trials with no objects present. Trials in each block were randomized for each subject, and blocks were randomized between subjects to reduce order effects. There were four judgments on each trial: first, a present/absent judgment for detection; second, a confidence rating on detection (from 1, not confident, to 5, very confident); third, a shape choice (box or cylinder) for identification; and fourth, a height choice (short, medium, or tall) for identification. Although confidence ratings were recorded and d-prime was measured for the detection task, they were not analyzed in the present paper. We found that d-prime measurements correlated highly with hit rate, and in order to examine the pattern of results more closely, we opted for a confusion matrix analysis instead. If the subject gave an “absent” response, they were not required to report on shape or height. Feedback was not given.
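The block structure described above can be sketched as follows. This is an illustration of the design only, not the software used in the experiment; the function name, the use of `None` to mark a catch trial, and the fixed random seed are assumptions made for the example.

```python
import itertools
import random

HEIGHTS = ("short", "medium", "tall")
COLORS = ("gray", "white")
SHAPES = ("box", "cylinder")
N_CATCH_TRIALS = 3  # trials with no object present

def make_block(rng: random.Random) -> list:
    """Return one randomized block: 12 object trials plus 3 catch trials (None)."""
    trials = list(itertools.product(HEIGHTS, COLORS, SHAPES))
    trials += [None] * N_CATCH_TRIALS
    rng.shuffle(trials)
    return trials

block = make_block(random.Random(0))  # seed chosen only to make the example repeatable

# 11 subjects x 6 blocks (2 lighting conditions x 3 distances) x 12 objects per block
n_subjects, n_blocks = 11, 6
object_trials_total = n_subjects * n_blocks * (len(block) - N_CATCH_TRIALS)
print(len(block), object_trials_total)
```

The total of 792 object trials is consistent with the trial counts (n) reported with the main-effect means, where each level of a two-level factor has n = 396 and each level of a three-level factor has n = 264.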

Photometry.

A Minolta CS-100 Colorimeter (Konica Minolta Sensing Americas, Inc., Ramsey, NJ) was used to sample luminance values across the viewable surfaces of the objects. A luminance profile was measured and Michelson contrasts were estimated for four boundary locations around the object (top, bottom, left, and right), and also internal to the facing surface of the object.
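For reference, Michelson contrast between two luminance samples is the difference of the two luminances divided by their sum. The sketch below applies that standard definition to one pair of samples per location; the luminance values are hypothetical placeholders, not measurements from this study.

```python
def michelson_contrast(luminance_a: float, luminance_b: float) -> float:
    """Michelson contrast, (Lmax - Lmin) / (Lmax + Lmin), for two luminances in cd/m^2."""
    l_max, l_min = max(luminance_a, luminance_b), min(luminance_a, luminance_b)
    if l_min < 0 or l_max + l_min == 0:
        raise ValueError("luminances must be non-negative and not both zero")
    return (l_max - l_min) / (l_max + l_min)

# Hypothetical (object, background) sample pairs at the four boundaries, plus the
# brightest and dimmest samples within the facing surface for internal contrast.
samples = {
    "top": (60.0, 40.0),
    "bottom": (55.0, 45.0),
    "left": (41.0, 40.0),
    "right": (70.0, 40.0),
    "internal": (72.0, 38.0),
}
contrasts = {name: michelson_contrast(a, b) for name, (a, b) in samples.items()}
max_contrast = max(contrasts.values())
print(contrasts, max_contrast)
```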

Analysis.

Proportions correct for identification were calculated for each subject and factor combination (illumination, distance, color, height, and shape). Identification proportions correct were arcsine transformed for a 5-factor, within-subjects interaction model ANOVA. We restricted our model to the five main factors and selected interactions that we found particularly interesting. Interaction plots and means tables were constructed for graphical and tabular viewing and post hoc analysis. Confusion matrix false color maps were created to examine the distribution of confusable object types, and to show errors of identification that still had correct object detections. Identification proportion correct was correlated with six Michelson contrast measurements and angular size for objects. Internal contrast was measured from the brightest and dimmest luminance samples within each object. Boundary contrasts were measured between the object and background from adjacent luminance samples at four locations on the perimeter of the object (top, bottom, left, and right).
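The exact form of the arcsine transform is not given here. A common variance-stabilizing choice for proportions is the arcsine of the square root, sketched below as an assumption rather than as the transform actually applied in this analysis.

```python
import math

def arcsine_transform(proportion: float) -> float:
    """Arcsine square-root transform of a proportion in [0, 1], in radians."""
    if not 0.0 <= proportion <= 1.0:
        raise ValueError("proportion must lie in [0, 1]")
    return math.asin(math.sqrt(proportion))

# The transform stretches values near 0 and 1 relative to the middle of the range.
for p in (0.0, 0.05, 0.5, 0.95, 1.0):
    print(f"p = {p:.2f} -> {arcsine_transform(p):.3f} rad")
```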

Results

We focused primarily on object identification performance, represented by the proportion of correctly identified objects. An identification response was scored correct only if the subject identified both the shape (box or cylinder) and the height (short, medium, or tall) correctly. We will first discuss the identification results in detail, with an ANOVA for the five main factors and selected interactions. Later, we will examine the data with a confusion matrix analysis, and finally show significant correlations between identification performance and the important variables of contrast and visual angle.

ANOVA Main Effects on Identification

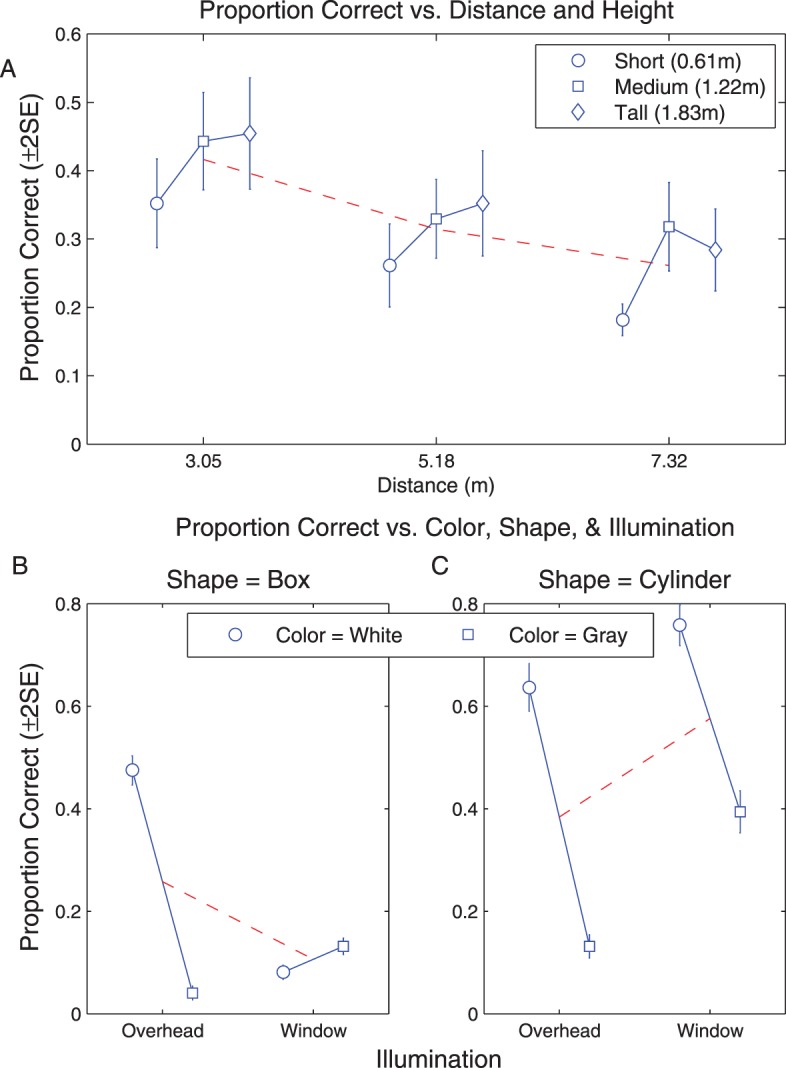

There were significant main effects for viewing distance, F(2, 766) = 11.19, P < 0.0001, and height, F(2, 766) = 5.80, P = 0.0031 (Fig. 4). Performance decreased with increasing distance. Mean proportions correct for 10, 17, and 24 feet (3.05, 5.18, and 7.32 m) were 0.42, 0.31, and 0.26, respectively. Mean proportions correct for short, medium, and tall objects were 0.26, 0.36, and 0.36, respectively. See Figure 4A and Table 1 for graphical and numerical results. We predicted increasing detectability for increasing heights and decreasing distances. The main effects support our predictions. No significant interaction effects for distance and height were found, F(4, 766) = 0.21, P = 0.9303. We will return to distance and height in the correlation analysis below, where we will combine distance and height in an analysis of the effect of visual angle.

Figure 4.

Interaction Plots. (A) shows identification proportion correct versus distance and height. (B, C) show proportion correct versus illumination, shape, and color. Error bars represent ±2 SE.

Table 1.

Main Effects (Marginal Means)

| Category | Effect | Mean | n |
| --- | --- | --- | --- |
| Illumination | Overhead | 0.321 | 396 |
| Illumination | Window | 0.341 | 396 |
| Distance | 3.05 m | 0.417 | 264 |
| Distance | 5.18 m | 0.314 | 264 |
| Distance | 7.32 m | 0.261 | 264 |
| Color | White | 0.487 | 396 |
| Color | Gray | 0.174 | 396 |
| Height | Medium | 0.364 | 264 |
| Height | Tall | 0.364 | 264 |
| Height | Short | 0.265 | 264 |
| Shape | Box | 0.182 | 396 |
| Shape | Cylinder | 0.480 | 396 |

n refers to the number of trials used to calculate each mean.

Surprisingly, our prediction of a main effect of lighting arrangement (i.e., illumination) was not confirmed, F(1, 766) = 0.55, P = 0.4587 (Fig. 4); identification performance did not differ significantly for overhead and “window” lighting. But there were strong interaction effects for illumination by color, and illumination by shape. These interactions can be seen in Tables 2 and 3, as well as Figure 4. We will return to these informative interaction effects, and report their statistics, after addressing the remaining main effects.

Table 2.

Distance by Height Interaction Means

| Distance | Height | Mean | n |
| --- | --- | --- | --- |
| 3.05 m | Medium | 0.443 | 88 |
| 3.05 m | Tall | 0.455 | 88 |
| 3.05 m | Short | 0.352 | 88 |
| 5.18 m | Medium | 0.330 | 88 |
| 5.18 m | Tall | 0.352 | 88 |
| 5.18 m | Short | 0.261 | 88 |
| 7.32 m | Medium | 0.318 | 88 |
| 7.32 m | Tall | 0.284 | 88 |
| 7.32 m | Short | 0.182 | 88 |

Table 3.

Illumination by Color by Shape Interaction Means

| Illumination | Color | Shape | Mean | n |
| --- | --- | --- | --- | --- |
| Overhead | White | Box | 0.475 | 99 |
| Overhead | White | Cylinder | 0.636 | 99 |
| Overhead | Gray | Box | 0.0404 | 99 |
| Overhead | Gray | Cylinder | 0.131 | 99 |
| Window | White | Box | 0.0808 | 99 |
| Window | White | Cylinder | 0.758 | 99 |
| Window | Gray | Box | 0.131 | 99 |
| Window | Gray | Cylinder | 0.394 | 99 |

The main effect for object color (gray or white) was evident, F(1, 766) = 132.04, P < 0.0001, as shown in Figure 4. For example, in three out of the four illumination by shape pairings, white was easier to detect than gray (Figs. 4B, 4C). Mean proportions correct for white and gray were 0.49 and 0.17, respectively, as shown in Table 1.

Similarly, a main effect for shape, F(1, 766) = 119.57, P < 0.0001, was also evident. Side-by-side comparison of two graphs (Figs. 4B, 4C) shows that cylinders (Fig. 4C) were easier to detect than boxes (Fig. 4B). Mean proportions correct for boxes and cylinders were 0.18 and 0.48, respectively, as shown in Table 1.

A main effect for subject, F(10, 766) = 3.70, P = 0.0001, was also observed.

ANOVA Interaction Effects on Identification

Three two-way interactions were significant: illumination by color, F(1, 766) = 33.01, P < 0.0001; illumination by shape, F(1, 766) = 39.71, P < 0.0001; and color by shape, F(1, 766) = 19.79, P < 0.0001. The three-way interaction, illumination by color by shape, was also significant, F(1, 766) = 9.93, P = 0.0017 (Figs. 4B, 4C). Most notable are the two-way interaction between shape and illumination (two red dashed lines in Figs. 4B, 4C), and the three-way interaction between color, shape, and illumination (four solid blue lines in Figs. 4B, 4C). All eight values for the three-way interaction are shown in Table 3 and Figures 4B and 4C. The three-way interaction is noteworthy because it represents a simple demonstration of the effects of three important variables (illumination, color, and geometry), which we argue must be considered together to predict visibility. We will return to this important issue in the Discussion.
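One way to account for the 766 error degrees of freedom reported in the F tests is sketched below. The list of model terms is inferred from the tests reported in this section (five main factors, subject, and five interactions), and treating each of the 792 object trials as one observation is an assumption; the actual model specification may have differed.

```python
from math import prod

LEVELS = {
    "illumination": 2, "distance": 3, "color": 2,
    "height": 3, "shape": 2, "subject": 11,
}
# Interaction terms for which F tests are reported in the text.
INTERACTIONS = (
    ("distance", "height"),
    ("illumination", "color"),
    ("illumination", "shape"),
    ("color", "shape"),
    ("illumination", "color", "shape"),
)

def term_df(factors: tuple) -> int:
    """Degrees of freedom of a main effect or interaction: product of (levels - 1)."""
    return prod(LEVELS[name] - 1 for name in factors)

n_observations = 11 * 6 * 12  # subjects x blocks x objects per block
model_df = sum(term_df((name,)) for name in LEVELS) + sum(term_df(t) for t in INTERACTIONS)
residual_df = n_observations - 1 - model_df
print(model_df, residual_df)
```

Under these assumptions the model uses 25 degrees of freedom, leaving 766 for error, which matches the denominator reported throughout.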

Summary of ANOVA Findings

The most important findings from the ANOVA were: (1) cylinders were always easier to identify than boxes, (2) although white was generally easier to see than gray, under window lighting, gray boxes were easier to identify than white boxes, even though the background was always gray, (3) as expected, performance was better for larger objects and nearer viewing distances, and (4) performance did not differ between overhead and “window” lighting.
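Because every cell in Table 3 is based on the same number of trials (n = 99), the marginal means in Table 1 can be recovered by simple averaging of the Table 3 cell means, and the color reversal for boxes under window lighting can be read off directly. The sketch below uses only the values from Table 3; the helper name is illustrative.

```python
FACTORS = ("illumination", "color", "shape")

# Cell means copied from Table 3.
table3 = {
    ("overhead", "white", "box"): 0.475,
    ("overhead", "white", "cylinder"): 0.636,
    ("overhead", "gray", "box"): 0.0404,
    ("overhead", "gray", "cylinder"): 0.131,
    ("window", "white", "box"): 0.0808,
    ("window", "white", "cylinder"): 0.758,
    ("window", "gray", "box"): 0.131,
    ("window", "gray", "cylinder"): 0.394,
}

def marginal_mean(factor: str, level: str) -> float:
    """Average the cell means sharing one level of a factor (valid for equal cell sizes)."""
    index = FACTORS.index(factor)
    values = [mean for key, mean in table3.items() if key[index] == level]
    return sum(values) / len(values)

for factor, level in [("illumination", "overhead"), ("illumination", "window"),
                      ("color", "white"), ("color", "gray"),
                      ("shape", "box"), ("shape", "cylinder")]:
    print(f"{factor:12s} {level:9s} {marginal_mean(factor, level):.3f}")

# White minus gray for boxes: positive under overhead lighting, negative under window lighting.
white_advantage_boxes = {
    light: table3[(light, "white", "box")] - table3[(light, "gray", "box")]
    for light in ("overhead", "window")
}
print(white_advantage_boxes)
```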

Confusion Matrix Analysis

The preceding analysis dealt with correct identification of the stimuli. In this section, we report all possible stimulus/response combinations with confusion matrix false color maps (Fig. 5). The confusions include correct identifications (which were analyzed above, and labeled “Hits” here), Correct Rejections, False Alarms, Misses, and Identification Errors. The remaining cells include shape confusions, height confusions and joint shape and height confusions, which were left as unlabeled cells in Figure 5.
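The outcome categories can be illustrated with a small classification sketch. Here a stimulus or response is either `None` (no object) or a (shape, height) pair, and any detected but misidentified object is grouped under a single identification-error label; this grouping, the encoding, and the example trials are assumptions for illustration and are not data from the study.

```python
from collections import Counter

def classify(stimulus, response) -> str:
    """Label one trial: H (hit), I (identification error), M (miss),
    CR (correct rejection), or FA (false alarm)."""
    if stimulus is None:
        return "CR" if response is None else "FA"
    if response is None:
        return "M"
    return "H" if response == stimulus else "I"

# Hypothetical (stimulus, response) pairs, one per outcome category.
trials = [
    (("box", "short"), ("box", "short")),       # detected and identified
    (("box", "short"), ("cylinder", "short")),  # detected, shape confused
    (("cylinder", "tall"), None),               # object present but reported absent
    (None, None),                               # catch trial, reported absent
    (None, ("box", "medium")),                  # catch trial, object reported
]
counts = Counter(classify(stimulus, response) for stimulus, response in trials)
print(counts)
```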

Figure 5.

Confusion matrix false color maps (by shape, height, and color). Figure 5 shows confusion matrix color maps for proportion correct for the two object types, three heights, and two colors. H, Hits; CR, Correct Rejections; FA, False Alarms; M, Miss; I, Identification errors; NO, No Object; G, Gray; W, White; B, Box; C, Cylinder; 1, Short; 2, Medium; 3, Tall.

There are three main findings from the confusion matrix analysis. First, detection errors were mostly misses and not false alarms, that is, subjects sometimes failed to see objects that were present, but rarely reported seeing objects on the catch trials. Second, most of the confusions were between shapes, and few confusions were between heights, or between shapes and heights. Third, it is interesting to see how the miss rate changed with lighting condition, object shape, and object color. In the overhead matrices (Figs. 5A–C), there were many misses for gray objects, and few misses for white objects. However, in the window matrices (Figs. 5D–F), the pattern of misses is different, where boxes have many misses and cylinders have far fewer misses. This finding further indicates illumination, color, and geometry, collectively, as important variables to predict visibility.

Correlation Analysis

We computed the correlations between identification proportion correct and several measures of stimulus contrast. We found that as much as 58.3% of the variability in proportion correct performance was accounted for by Michelson contrast. Not surprisingly, the left edge Michelson contrast accounted for very little (2.46%), because illumination came primarily from the right side of the objects under both overhead and window illumination (see Fig. 2). Michelson contrasts at the top edge and bottom edge of the objects accounted for 43.1% and 42.1% of the variance, respectively. Michelson contrast on the right edge of the objects and internal to the front surface of the object accounted for 57.7% and 57.5% of the variance, respectively. The variance accounted for by the maximum of the measured Michelson contrast values was 58.3%. Maximum Michelson contrast refers to the highest of the five measured contrast values for a given target and viewing condition.
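For readers who want to reproduce this kind of measure, the following minimal sketch computes Michelson contrast, (Lmax − Lmin)/(Lmax + Lmin), for five measurement locations and takes their maximum. The luminance values are invented for illustration and are not data from the study; the function and variable names are likewise hypothetical.

```python
def michelson_contrast(lum_a: float, lum_b: float) -> float:
    """Michelson contrast (Lmax - Lmin) / (Lmax + Lmin) for two luminances."""
    l_max, l_min = max(lum_a, lum_b), min(lum_a, lum_b)
    if l_max + l_min == 0:
        return 0.0  # two black regions: no contrast
    return (l_max - l_min) / (l_max + l_min)


# Hypothetical luminance pairs in cd/m^2 (NOT measured values from the study).
# Each pair is the luminance on either side of an edge, or of two regions
# within the front surface for the "internal" measure.
measurements = {
    "left": (20.0, 21.0),
    "right": (30.0, 10.0),
    "top": (25.0, 15.0),
    "bottom": (24.0, 16.0),
    "internal": (28.0, 12.0),
}

contrasts = {name: michelson_contrast(a, b) for name, (a, b) in measurements.items()}
max_contrast = max(contrasts.values())  # highest of the five measured values
print(contrasts, max_contrast)
```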

Visual angle, computed from the height and distance of each object, accounted for 5.8% of the variability in identification. A stepwise regression analysis of all seven predictors (i.e., six contrasts plus visual angle) showed that two predictors, internal contrast and visual angle, together accounted for 63.8% of the variability in identification performance. The remaining contrast values were highly correlated with one another, and therefore did not explain significantly more of the variability.
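The logic of this analysis can be sketched in a few lines. The example below is a simplified illustration, not the analysis software used in the study: it assumes the standard formula 2·atan(h/2d) for visual angle, and it replaces significance-based stepwise entry with a greedy forward selection that adds the predictor giving the largest gain in R² until the gain falls below a hypothetical threshold (`min_gain`). The data are synthetic and noise-free.

```python
import math
from itertools import product


def visual_angle_deg(height: float, distance: float) -> float:
    """Visual angle (deg) of an object of given height at given distance (same units)."""
    return math.degrees(2.0 * math.atan(height / (2.0 * distance)))


def r_squared(predictors, y):
    """R^2 of a least-squares fit of y on the predictor columns plus an intercept."""
    rows = [[1.0] + [col[i] for col in predictors] for i in range(len(y))]
    p = len(rows[0])
    # Augmented normal equations [X'X | X'y].
    a = [[sum(r[j] * r[k] for r in rows) for k in range(p)]
         + [sum(r[j] * yi for r, yi in zip(rows, y))] for j in range(p)]
    # Gauss-Jordan elimination with partial pivoting
    # (assumes the predictors are not exactly collinear).
    for c in range(p):
        piv = max(range(c, p), key=lambda r: abs(a[r][c]))
        a[c], a[piv] = a[piv], a[c]
        for r in range(p):
            if r != c:
                f = a[r][c] / a[c][c]
                a[r] = [x - f * z for x, z in zip(a[r], a[c])]
    beta = [a[j][p] / a[j][j] for j in range(p)]
    mean_y = sum(y) / len(y)
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    ss_res = sum((yi - sum(b * x for b, x in zip(beta, r))) ** 2
                 for r, yi in zip(rows, y))
    return 1.0 - ss_res / ss_tot


def forward_select(candidates, y, min_gain=0.01):
    """Greedily add the predictor with the largest R^2 gain until gain < min_gain."""
    chosen, best_r2 = [], 0.0
    remaining = dict(candidates)
    while remaining:
        scores = {name: r_squared([candidates[c] for c in chosen] + [col], y)
                  for name, col in remaining.items()}
        name = max(scores, key=scores.get)
        if scores[name] - best_r2 < min_gain:
            break
        chosen.append(name)
        best_r2 = scores[name]
        del remaining[name]
    return chosen, best_r2


# Synthetic design: two contrasts x two (height, distance) pairs in feet x small offsets.
design = list(product([0.2, 0.6], [(2.0, 10.0), (6.0, 10.0)], [-0.02, 0.02]))
internal = [c for c, _, _ in design]
angle = [visual_angle_deg(h, d) for _, (h, d), _ in design]
right_edge = [c + e for c, _, e in design]  # nearly redundant with internal contrast
y = [0.1 + 1.0 * c + 0.005 * a for c, a in zip(internal, angle)]  # made-up performance

chosen, r2 = forward_select(
    {"internal": internal, "right_edge": right_edge, "visual_angle": angle}, y)
print(chosen, r2)
```

In this synthetic case the right-edge contrast is left out because it is almost redundant with internal contrast, which mirrors the reason given above for why the remaining contrast measures added little.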

Discussion

We are interested in object recognition in low-resolution (low-acuity) viewing because of its potential relevance to low vision and visual accessibility.

We identified several variables that we expect to be important in low-resolution object recognition, including illumination, distance, color, height, and shape. In addition to main effects, we have identified important interactions that occur in real spaces. Our findings suggest three relatively simple heuristics for designing visually accessible spaces, as noted in the following three paragraphs.

First, and of most importance, contrast is a dominating factor for detection and identification of convex objects with blurred vision: the higher the contrast, the better the visibility.

The emphasis on contrast raises a second, more subtle point. We would typically think of producing good visibility of an object by selecting its surface reflectance (color) to be different from the background (e.g., the white objects seen against the gray background in our experiments). However, contrast between object and background is also strongly dependent on the lighting arrangement. Consider, for example, the case in our experiment in which performance was actually better for a gray object on a gray background than for a white object on a gray background (results shown in Fig. 4B, window). The interesting point here, from a design perspective, is that the typical notion that target/background contrast is always improved by increasing the reflectance difference between object and background can sometimes be wrong. This is why the interaction effects are important to consider for visual saliency. Examples shown in Figure 2 illustrate this interesting and instructive reversal. This type of situation can occur in a variety of overhead and window illumination settings, such as the common occurrence when a low-reflectance object receives more direct illumination than a high-reflectance object.

Third, for detection and identification of objects with blurred vision, the angular size of objects plays an ecologically important role. In our experiment, we found that larger and closer objects are easier to see. In some cases, it may be possible to predict the viewing distance at which a person with a given level of acuity would be able to recognize an object or object feature of a given angular size. Given information on typical pedestrian travel paths and walking speeds, such predictions could be useful in assessing the likelihood that hazards would go undetected visually. We included a concrete example in Legge et al.,1 where we illustrated the relationship between viewing distance and acuity for detecting a step down.
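A purely geometric version of such a prediction can be sketched as follows. The sketch assumes that a Snellen acuity of 20/N corresponds to a minimum angle of resolution (MAR) of N/20 arcmin, and that a feature becomes recognizable once it subtends some multiple of the MAR. That multiple (`size_factor`) is a hypothetical parameter, not a value established in this study, and the sketch ignores contrast, which the present results show to be the dominant factor.

```python
import math


def mar_arcmin(snellen_denominator: float, snellen_numerator: float = 20.0) -> float:
    """Minimum angle of resolution in arcmin for a Snellen fraction (20/20 -> 1 arcmin)."""
    return snellen_denominator / snellen_numerator


def max_viewing_distance(feature_size: float, snellen_denominator: float,
                         size_factor: float) -> float:
    """Distance (same units as feature_size) at which the feature subtends
    size_factor x MAR. size_factor is a hypothetical recognition criterion."""
    critical_angle_deg = size_factor * mar_arcmin(snellen_denominator) / 60.0
    half_angle_rad = math.radians(critical_angle_deg) / 2.0
    return feature_size / (2.0 * math.tan(half_angle_rad))


# Example: a 2-ft-tall object viewed with 20/900 acuity (MAR = 45 arcmin),
# under the illustrative assumption that it must subtend 5 x MAR.
distance_ft = max_viewing_distance(2.0, 900.0, size_factor=5.0)
print(f"Geometric distance limit: {distance_ft:.1f} ft")
```

Combined with typical walking speeds, a distance limit of this kind could be converted into the time available to react to a hazard, but any such estimate would need to be checked against measured performance.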

Kuyk et al.3 and Hassan et al.23 both examined the number of collisions as a function of object height in studies in which low-vision subjects walked through an obstacle course. This paradigm differs from ours, in which subjects were asked to recognize objects from a distance. Kuyk et al.3 tested a heterogeneous group of veterans. They found between-subject main effects and interactions for number of collisions as a function of object type (head-level objects, floor-level walk-around objects, and step-over objects). Hassan et al.23 tested AMD patients and found no significant differences from a group of age-matched normally sighted controls in walking speed and number of obstacle contacts. The lack of difference between the groups and lack of an effect of obstacle height may indicate that the acuity of the AMD subjects was adequate to detect the obstacles when closely approached. The stronger effect of object height in our study is likely due to the greater importance of object angular size in measures of object visibility at a distance.

In our study, the data indicate that cylinders are much more visible than boxes (i.e., curved surfaces are more visible than frontal-planar flat surfaces). Our finding that cylinders were more visible than boxes with overhead and window illumination suggests that curves may be more generally visible than planes, as long as the surface normal of the object curves away from the source of illumination and that the illumination of the space is not completely diffuse. Further studies on orientations of flat objects and placements of curved objects in a variety of illuminations should be performed in order to determine the generalizability of our findings.

Do we expect our findings to generalize to low vision? Findings from our previous studies of the visibility of ramps and steps suggest the affirmative. In our initial study with the steps and ramps,1 48 normally sighted subjects were tested on the recognition of five targets: step up, step down, ramp up, ramp down, and a flat surface. They wore blurring goggles, constructed from Bangerter occlusion foils, that reduced acuity to approximately 20/140 (mild blur) or 20/900 (severe blur). The effects of variation in lighting were milder than expected. Performance declined for the largest viewing distance, but exhibited a surprising reversal for nearer viewing. Of relevance to pedestrian safety, the step up was more visible than the step down. In a second study,24 we investigated the impact of two additional factors expected to facilitate the recognition of steps and ramps during low-acuity viewing: surface texture and locomotion. We found that coarse texture on the ground plane reduced the visibility of ramps and steps, but self-locomotion (walking) enhanced visibility. In a third study, we conducted similar measurements on a group of 16 low-vision subjects with heterogeneous eye conditions and a wide range of acuities (Bochsler TM, Legge GE, Gage R, Kallie CS, manuscript submitted, 2012). As expected, overall performance decreased with acuity. More importantly, the qualitative pattern of results was very similar for the low-vision subjects and the normals with artificial acuity reduction. In particular, the low-vision subjects showed the same performance differences across target type (e.g., step up was much more visible than step down), benefited from locomotion, and exhibited poorer performance with texture. These parallel findings between normal and low vision encourage us to expect that similar parallels would exist for object recognition.

While we expect the qualitative features of our results to generalize to people with reduced acuity associated with low vision, we mention three caveats, as noted previously in Bochsler et al.24: first, the Bangerter blur foils reduce acuity and contrast sensitivity for normally sighted subjects, but are not necessarily representative of any particular form of low vision. Second, we studied monocular viewing to simplify the optical arrangements for our subjects, and to simplify potential extension of the findings to low vision. Many people with mild or severe low vision have unequal vision status (acuities and other visual characteristics) in the two eyes, with performance determined primarily by the better eye.25 Third, our subjects knew that one of the five targets was present in each trial, and where to look for it, but low-vision pedestrians navigating unfamiliar locations in the real world do not always know when and where obstacles will appear in their path. Such uncertainties pose challenges for mobility not present in our study. We also note that our study focused on severely reduced acuity, but it is known that severe field restriction has a major impact on low-vision mobility.3

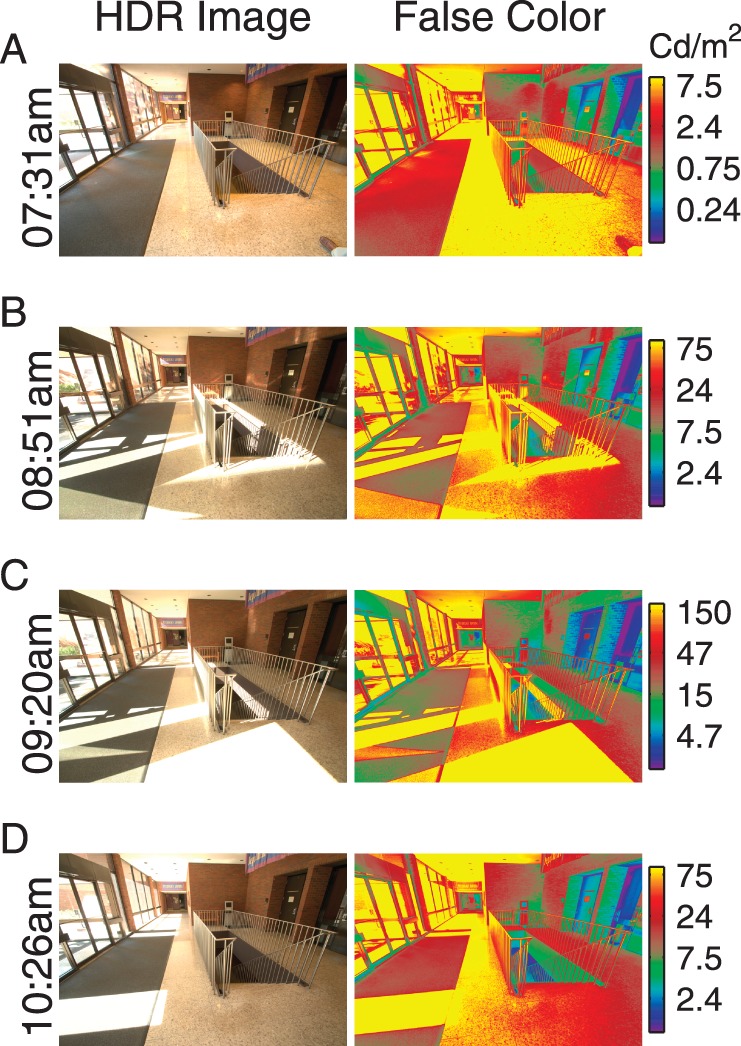

A surprising result in our study was the lack of an effect of the lighting arrangement. We think that this null result was due to the limited range of lighting conditions tested. As a counterpoint to our null finding, we conclude with an example illustrating how variations in natural lighting (sunlight) in a public space can dramatically affect the visibility of key features (Fig. 6). Figure 6, produced with high dynamic range (photometric) photography (Anyhere Software), shows a lobby at four different times during a single July morning. The Michelson contrast between the floor mat and the floor is 0.67, while the Michelson contrast along the edge of the shaft of sunlight is 0.88. The pattern of sun and shade produces high contrasts, which may interfere with visual detection of object features such as the floor mat. Of more importance to safety, the complex pattern of light and dark may make it difficult to identify the open stairwell. This example illustrates another limitation of our study: we did not examine the potential interfering effects of high-contrast cast shadows on object detection and identification.

Figure 6.

Lobby. Figure 6 shows a lobby outside the experimental testing room. Images were recorded on a sunny morning in late July. Before direct sunlight reaches the lobby (A), the dominating contrast features on the floor are along the carpet and around the stairwell, thereby indicating the presence of each. However, as the rising sun passes through the lobby (B–D), the dominating contrasts are along the borders of the shafts of sunlight, which can mask informative features of the space, such as the carpet on the left and the hazardous stairwell on the right. High dynamic range and false color images were created using Anyhere Software.

Do we expect our results to generalize to more complex and realistic environments and obstacles? In this study, we have articulated how to manipulate simple design features that can greatly enhance object visibility. We expect that the design features that accounted for much of the variability in identification performance in the present study, internal and edge contrast, and object angular size, would carry over to more complex and realistic environments. Objects with simple contours are found throughout the architectural landscape. Placing them in an environment such as the one showcased in Figure 6 would be a good step toward examining object visibility in naturally complex environments.

In summary, the reflectance of objects and their immediate backgrounds, object shape, illumination, and lighting arrangement work interactively to affect the visibility of objects, and should be considered collectively in evaluating visual accessibility.

Acknowledgments

The authors thank Allen M.Y. Cheong and Heejung Park for assistance with experimental setup and data collection; Robert A. Shakespeare, William B. Thompson, Gregory J. Ward, and the Minnesota Supercomputing Institute for providing computational advice and code; Amy A. Kalia for constructing blur foil glasses; Paul R. Schrater and Daniel J. Kersten for advice on experimental design, analysis, and image processing; Lawrence G. Kallie and Holly J. Kallie for providing computer and photographic equipment; and Jennifer M. Scholz for editing advice.

Footnotes

Supported by a grant from the National Institutes of Health (EY017835).

Disclosure: C.S. Kallie, None; G.E. Legge, None; D. Yu, None

References

- 1. Legge GE, Yu D, Kallie CS, Bochsler TM, Gage R. Visual accessibility of ramps and steps. J Vis. 2010;10:8.

- 2. Arditi A, Brabyn J. Signage and Wayfinding. In: Silverstone B, Lang MA, Rosenthal B, Faye EE, eds. Lighthouse Handbook on Vision Impairment and Vision Rehabilitation. New York: Oxford University Press; 2000:637–650.

- 3. Kuyk T, Elliott JL, Biehl J, Fuhr PS. Environmental variables and mobility performance in adults with low vision. J Am Optom Assoc. 1996;67:403–409.

- 4. Alexander NB, Ashton-Miller JA, Giordani B, Guire K, Schultz AB. Age differences in timed accurate stepping with increasing cognitive and visual demand: a walking trail making test. J Gerontol A Biol Sci Med Sci. 2005;60:1558–1562.

- 5. DiLaura D, Houser K, Mistrick R, Steffy G, eds. The Lighting Handbook: Reference & Application. 10th ed. New York: Illuminating Engineering Society of North America; 2011.

- 6. Sedgwick A. Space Perception. In: Boff KR, Kaufman L, Thomas JP, eds. Handbook of Perception and Human Performance. New York: John Wiley & Sons; 1986:21-1–21-57.

- 7. Fuhr PS, Liu L, Kuyk TK. Relationships between feature search and mobility performance in persons with severe visual impairment. Optom Vis Sci. 2007;84:393–400.

- 8. Klein BEK, Klein R, Lee KE, Cruickshanks KJ. Performance-based and self-assessed measures of visual function as related to history of falls, hip fractures, and measured gait time: The Beaver Dam Eye Study. Ophthalmology. 1998;105:160–164.

- 9. Vale A, Scally A, Buckley JG, Elliott DB. The effects of monocular refractive blur on gait parameters when negotiating a raised surface. Ophthalmic Physiol Opt. 2008;28:135–142.

- 10. Goodrich GL, Ludt R. Assessing visual detection ability for mobility in individuals with low vision. Vis Impair Res. 2003;5:57–71.

- 11. Ludt R, Goodrich GL. Change in visual perceptual detection distances for low vision travelers as a result of dynamic visual assessment and training. J Vis Impair Blind. 2002;96:7–21.

- 12. Wurm LH, Legge GE, Isenberg LM, Luebker A. Color improves object recognition in normal and low vision. J Exp Psychol Hum Percept Perform. 1993;19:899–911.

- 13. Boucart M, Despretz P, Hladiuk K, Desmettre T. Does context or color improve object recognition in patients with low vision? Vis Neurosci. 2008;25:685–691.

- 14. Bramão I, Reis A, Petersson KM, Faísca L. The role of color information on object recognition: a review and meta-analysis. Acta Psychologica. In Press.

- 15. Atick JJ, Redlich AN. What does the retina know about natural scenes? Neural Comput. 1992;4:196–210.

- 16. Brabyn J, Schneck M, Haegerstrom-Portnoy G, Lott L. Functional vision: “real world” impairment examples from the SKI Study. Vis Impair Res. 2004;6:35–44.

- 17. Bordier C, Petra J, Dauxerre C, Vital-Durand F, Knoblauch K. Influence of background on image recognition in normal vision and age-related macular degeneration. Ophthalmic Physiol Opt. 2011;31:203–215.

- 18. Tran THC, Guyader N, Guerin A, Despretz P, Boucart M. Figure ground discrimination in age-related macular degeneration. Invest Ophthalmol Vis Sci. 2011;52:1655–1660.

- 19. Odell NV, Leske DA, Hatt SR, Adams WE, Holmes JM. The effect of Bangerter Filters on optotype acuity, vernier acuity, and contrast sensitivity. J AAPOS. 2008;12:555–559.

- 20. Anon. The International classification of diseases, 9th revision, clinical modification: ICD-9-CM. Annotated. Ann Arbor: Commission on Professional and Hospital Activities; 1986.

- 21. Colenbrander A. Assessment of functional vision and its rehabilitation. Acta Ophthalmol. 2010;88:163–173.

- 22. West SK, Rubin GS, Broman AT, et al. How does visual impairment affect performance on tasks of everyday life? The SEE Project. Salisbury Eye Evaluation. Arch Ophthalmol. 2002;120:774–780.

- 23. Hassan SE, Lovie-Kitchin JE, Woods RL. Vision and mobility performance of subjects with age-related macular degeneration. Optom Vis Sci. 2002;79:697–707.

- 24. Bochsler TM, Legge GE, Kallie CS, Gage R. Seeing steps and ramps with simulated low acuity: impact of texture and locomotion. Optom Vis Sci. 2012;89:E1299–1307.

- 25. Kabanarou SA, Rubin GS. Reading with central scotomas: is there a binocular gain? Optom Vis Sci. 2006;83:789–796.