Abstract

The replicator equation is the first and most important game dynamics studied in connection with evolutionary game theory. It was originally developed for symmetric games with finitely many strategies. Properties of these dynamics are briefly summarized for this case, including the convergence to and stability of the Nash equilibria and evolutionarily stable strategies. The theory is then extended to other game dynamics for symmetric games (e.g., the best response dynamics and adaptive dynamics) and illustrated by examples taken from the literature. It is also extended to multiplayer, population, and asymmetric games.

Keywords: Nash equilibrium, evolutionarily stable strategy (ESS), dynamic stability

Game dynamics model how individuals or populations change their strategy over time based on payoff comparisons. This contrasts with classical noncooperative game theory that analyzes how rational players will behave through static solution concepts such as the Nash equilibrium (NE) (i.e., a strategy choice for each player whereby no individual has a unilateral incentive to change his or her behavior). In general, game dynamics assume that strategies with higher payoff do better. As we will see, the limiting behavior of these dynamics (i.e., the evolutionary outcome) is often a NE with additional stability properties.

The most important game dynamics is the replicator equation, defined for a single species by Taylor and Jonker (1) and named by Schuster and Sigmund (2). The replicator equation is the first game dynamics studied in connection with evolutionary game theory, a theory that was developed by Maynard Smith and Price (3) (see also ref. 4) from the biological perspective in order to predict the evolutionary outcome of population behavior without a detailed analysis of such biological factors as genetic or population size effects. With payoff translated as fitness (i.e., reproductive success), the frequency of a strategy in a large, well-mixed single species changes under the (continuous-time) replicator equation at a per capita rate equal to the difference between its expected payoff and the average payoff of the population (Eq. 1). If each strategy payoff is constant (in particular, independent of strategy frequency), the ultimate outcome of evolution is that everyone plays the strategy with highest payoff, a result that is true for all game dynamics and not only the replicator equation. In biological terms, we have Darwin’s survival of the fittest through natural selection.

Of more interest is what happens when individual payoff depends on the actions of others (i.e., when there is an actual game involved). An early success of evolutionary game theory is then the result that an evolutionarily stable strategy (ESS) is dynamically stable for the single species replicator equation described above (1, 5). The ESS, an intuitive concept of uninvadability originally generalizing the “unbeatable” sex ratios analyzed by Hamilton (6), can be defined solely in terms of payoff comparisons (4, 7). Strategic (i.e., game-theoretic) reasoning has since taken on an increasingly prominent role in predicting both individual and population behaviors in biological systems (8).

Evolutionary game theory has long since expanded beyond its biological roots and become increasingly important for analyzing human and/or social behavior. Here, changes in strategy frequencies do not result from natural selection; rather, individuals (or societies) alter their behavior based on payoff consequences. The replicator equation then emerges from, for instance, individuals making rational decisions on how to imitate observed strategies that currently receive higher payoff. Depending on what information these decision makers have (and how they use this information), a vast array of other game dynamics are possible (9–11).

Evolutionary game theory and its corresponding game dynamics have also expanded well beyond their initial emphasis on single-species (i.e., symmetric) games with a finite set of (pure) strategies where payoffs result from random one-time interactions between pairs of individuals (also called “two-player symmetric normal form games” or, more simply, “matrix games”). In this paper, we briefly highlight some features of matrix games at the beginning of the following section before generalizing to other classes of symmetric games. These are population games, games with a continuum of pure strategies, and multiplayer games. We then consider two-player asymmetric games, including extensive form games (where pairs of individuals have a series of interactions with each other and the set of actions available at later interactions may depend on what choices were made in earlier ones) as well as asymmetric population games and games with continuous strategy spaces.

Symmetric Games

Matrix games have a finite set of m pure strategies, $S=\{e_1,\dots,e_m\}$, available for individuals to play. The payoff matrix A has entries $a_{ij}=\pi(e_i,e_j)$ (the payoff to $e_i$ when playing against $e_j$), for $i,j=1,\dots,m$. To obtain the continuous-time, pure-strategy replicator equation (Eq. 1) following the original fitness approach (1), the per capita growth rate in the number $n_i$ of individuals using strategy $e_i$ at time t is taken as the expected payoff of $e_i$ from a single interaction with a random individual in the large population. That is, $\dot n_i=n_i\sum_j a_{ij}p_j\equiv n_i\,\pi(e_i,p)$, where p is the population state in the (mixed) strategy simplex $\Delta^m$ with $p_i=n_i/\sum_j n_j$ the proportion of the population using strategy $e_i$ at time t. A straightforward calculus exercise yields the replicator equation on $\Delta^m$:

$$\dot p_i=p_i\big(\pi(e_i,p)-\pi(p,p)\big),\qquad i=1,\dots,m, \tag{1}$$

where $\pi(p,p)=\sum_i p_i\,\pi(e_i,p)$ is the average payoff of an individual chosen at random (i.e., the population mean payoff). From the theory of dynamical systems, trajectories of [1] leave the interior of $\Delta^m$ forward invariant, as well as each of its faces (12).
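As a concrete illustration of Eq. 1, the following Python sketch (NumPy assumed) evaluates the replicator vector field of a matrix game and integrates it with a fixed-step fourth-order Runge–Kutta scheme. The helper names `replicator_rhs` and `integrate`, the step size, and the 2×2 payoff matrix are hypothetical choices made only for illustration; this particular matrix has a unique interior rest point at (1/2, 1/2).

```python
import numpy as np

def replicator_rhs(p, A):
    """Right-hand side of Eq. 1 for a matrix game with payoff matrix A."""
    payoffs = A @ p              # pi(e_i, p) = (A p)_i
    mean_payoff = p @ payoffs    # pi(p, p) = p . A p
    return p * (payoffs - mean_payoff)

def integrate(p0, A, t_end=50.0, dt=0.01):
    """Fixed-step RK4 integration of Eq. 1 (a sketch, not an adaptive ODE solver)."""
    p = np.asarray(p0, dtype=float)
    for _ in range(int(round(t_end / dt))):
        k1 = replicator_rhs(p, A)
        k2 = replicator_rhs(p + 0.5 * dt * k1, A)
        k3 = replicator_rhs(p + 0.5 * dt * k2, A)
        k4 = replicator_rhs(p + dt * k3, A)
        p = p + dt * (k1 + 2 * k2 + 2 * k3 + k4) / 6.0
    return p

# Hypothetical Hawk-Dove-type payoffs: e_2 does better against e_1 and vice versa.
A = np.array([[0.0, 3.0],
              [1.0, 2.0]])
p_final = integrate([0.9, 0.1], A)
print(p_final, p_final.sum())   # close to [0.5 0.5], total 1.0 up to rounding
```

Because the components of the vector field sum to zero whenever the frequencies sum to one, the integrator keeps the total frequency at 1 up to rounding, a numerical counterpart of the forward invariance of the simplex.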

Theorem 1. The replicator equation for a matrix game satisfies:

a) A stable rest point is a NE.

b) A convergent trajectory in the interior of the strategy space evolves to a NE.

c) A strict NE is locally asymptotically stable.

Theorem 1 is the Folk Theorem of Evolutionary Game Theory (9, 12, 13) applied to the replicator equation [see SI Appendix for definitions of technical terms in the statement of the theorem (SI Appendix, section 1) and throughout the paper]. The three conclusions are true for many matrix game dynamics (in either discrete or continuous time) and serve as a benchmark to test dynamical systems methods applied to general game dynamics and to nonmatrix evolutionary games such as those considered in the remaining sections of this paper.

The Folk Theorem means that biologists can predict the evolutionary outcome of their stable systems by examining NE behavior of the underlying game. It is as if individuals in these systems are rational decision makers when in reality it is natural selection through reproductive fitness that drives the system to its stable outcome. This has produced a paradigm shift toward strategic reasoning in population biology. Its profound influence on the analysis of behavioral ecology is greater than that of earlier game-theoretic methods applied to biology, such as Fisher’s (14) argument [see also Darwin (15) and Hamilton (6)] for the prevalence of the 50:50 sex ratio in diploid species and Hamilton’s (16) theory of kin selection.

The importance of strategic reasoning in population biology is further enhanced by Theorem 2 that is based on the intuitive concept of an ESS (3) defined by Maynard Smith (ref. 7, p. 10) as a “strategy such that, if all members of a population adopt it, then no mutant strategy could invade the population under the influence of natural selection.” Maynard Smith goes on to say on the same page that the “definition of an ESS as an uninvadable strategy can be made more precise … if precise assumptions are made about the evolving population.” In a matrix game, if most individuals in the population use $p^*\in\Delta^m$ with the rest using a mutant strategy p, then the mutant will go extinct in this two-strategy model (SI Appendix, section 1) if and only if

i) $\pi(p,p^*)\le\pi(p^*,p^*)$ (NE condition) and

ii) $\pi(p,p)<\pi(p^*,p)$ if $\pi(p,p^*)=\pi(p^*,p^*)$ (stability condition).

$p^*$ is then an ESS if it satisfies these two conditions for all other $p\in\Delta^m$.
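The two conditions can be checked mechanically for a given resident strategy and a given mutant. The sketch below (NumPy assumed; the function names `payoff` and `resists_mutant` and the 2×2 payoff matrix are hypothetical) tests them against a finite grid of mutants. Passing on a grid is numerical evidence, not a proof, that the resident is an ESS.

```python
import numpy as np

def payoff(x, y, A):
    """pi(x, y) = x . A y for (mixed) strategies x and y."""
    return float(np.asarray(x) @ A @ np.asarray(y))

def resists_mutant(A, p_star, p, tol=1e-12):
    """Conditions i) and ii) of the text for resident p_star and mutant p."""
    ne_gap = payoff(p_star, p_star, A) - payoff(p, p_star, A)
    if ne_gap < -tol:      # i) fails: the mutant does strictly better against p_star
        return False
    if ne_gap > tol:       # i) holds strictly, so ii) is not needed
        return True
    # p is an alternative best reply to p_star: ii) requires pi(p, p) < pi(p_star, p)
    return payoff(p, p, A) < payoff(p_star, p, A) - tol

A = np.array([[0.0, 3.0],
              [1.0, 2.0]])                 # hypothetical Hawk-Dove-type game
p_star = np.array([0.5, 0.5])
mutants = [np.array([x, 1.0 - x]) for x in np.linspace(0.0, 1.0, 21) if abs(x - 0.5) > 1e-9]
print(all(resists_mutant(A, p_star, p) for p in mutants))              # True on this grid
print(resists_mutant(A, np.array([1.0, 0.0]), np.array([0.0, 1.0])))   # False: e_1 is not a NE
```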

Theorem 2.

a) $p^*$ is an ESS of a matrix game if and only if $\pi(p^*,p)>\pi(p,p)$ for all $p\in\Delta^m$ sufficiently close (but not equal) to $p^*$.

b) An ESS is a locally asymptotically stable rest point of the replicator equation.

c) An ESS in the interior of $\Delta^m$ is a globally asymptotically stable rest point of the replicator equation.

The equivalent condition for an ESS contained in Theorem 2a is the more useful characterization when generalizing the ESS concept to other evolutionary games. It is called “locally superior” (17), “neighborhood invader strategy” (NIS) (18), or “neighborhood superior” (19). One reason for the different names for this concept is that there are several ways to generalize local superiority to other evolutionary games and these have different stability consequences.

The most elegant proof (5) of the stability statements in Theorem 2 b and c shows that $V(p)=\prod_i p_i^{p_i^*}$, where the product is taken over $\{i: p_i^*>0\}$, is a strict local Lyapunov function [i.e., $V(p^*)>V(p)$ and $\dot V(p)=V(p)\big(\pi(p^*,p)-\pi(p,p)\big)>0$ for all p sufficiently close but not equal to an ESS $p^*$]. It is tempting to add these stability statements to the Folk Theorem because they remain valid for many matrix game dynamics through the use of other Lyapunov functions. There are several reasons to avoid this temptation.
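The Lyapunov property can be observed numerically. The sketch below (NumPy assumed) evaluates $V(p)=\prod_i p_i^{p_i^*}$, with the product over the support of the ESS, along a forward-Euler approximation of the replicator equation. The 2×2 Hawk–Dove-type matrix, whose interior ESS is (1/2, 1/2), the step size, and the starting point are hypothetical choices; the recorded values should increase toward $V(p^*)=1/2$.

```python
import numpy as np

A = np.array([[0.0, 3.0],
              [1.0, 2.0]])          # hypothetical Hawk-Dove-type game
p_star = np.array([0.5, 0.5])       # its interior ESS

def replicator_rhs(p):
    f = A @ p
    return p * (f - p @ f)

def V(p):
    """V(p) = product over the support of p_star of p_i ** p_star_i."""
    s = p_star > 0
    return float(np.prod(p[s] ** p_star[s]))

p, dt = np.array([0.95, 0.05]), 0.01
values = [V(p)]
for _ in range(2000):               # forward Euler with a small step
    p = p + dt * replicator_rhs(p)
    values.append(V(p))

print(values[0], values[-1], V(p_star))   # V rises from about 0.218 toward 0.5
```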

First, these statements are not true for discrete-time matrix game dynamics (SI Appendix, section 1), as shown already for three-strategy games that exhibit cyclic dominance. Second, global stability of an interior ESS in these games is not true for important classes of game dynamics such as the monotone selection dynamics (17). Furthermore, the three-strategy games of Example 1 demonstrate that trajectories for matrix games may converge to a NE that is not an ESS or approach a heteroclinic cycle around the boundary of the strategy simplex.

Example 1 (Generalized Rock–Scissors–Paper Game): Consider the three-strategy rock–scissors–paper (RSP) matrix game with payoff matrix A given by

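$$A=\begin{array}{c|ccc} & R & S & P\\ \hline R & 0 & b_2 & -a_3\\ S & -a_1 & 0 & b_3\\ P & b_1 & -a_2 & 0\end{array} \tag{2}$$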

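All such games with positive parameters $a_i$ and $b_i$ exhibit cyclic dominance, whereby R beats S (i.e., R strictly dominates S in the two-strategy game based on these two strategies), S beats P, and P beats R. This dominance implies that there is no NE on the boundary of $\Delta^3$. In fact, the unique NE for [2] is a completely mixed strategy $p^*$ in the interior of $\Delta^3$. For the game shown in Fig. 1, $p^*$ is globally asymptotically stable under the replicator equation but is not an ESS: since $p^*$ is an interior NE, every $p\in\Delta^3$ satisfies the NE condition i with equality, $\pi(p,p^*)=\pi(p^*,p^*)$, yet some such p has $\pi(p,p)\ge\pi(p^*,p)$, so the stability condition ii fails.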

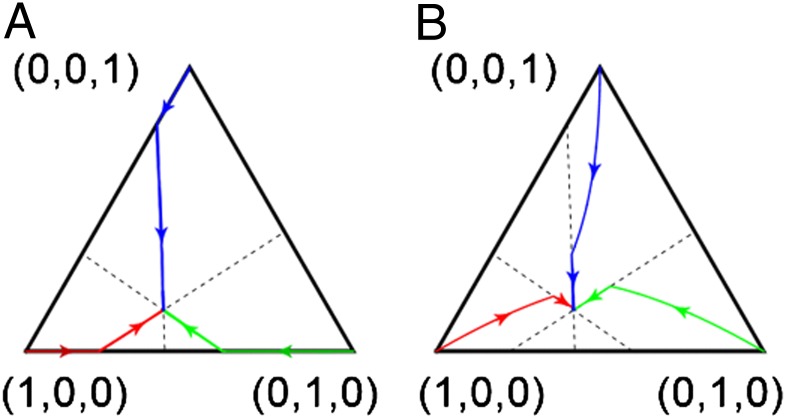

Fig. 1.

Trajectories of the replicator equation for the RSP game with payoff matrix 2.

The matrix game with payoff matrix $-A$ also exhibits cyclic dominance, and its trajectories under the replicator equation are the same as in Fig. 1 except that the direction is reversed. That is, all interior trajectories of [1] (except the one initially at $p^*$) approach a heteroclinic cycle around the boundary that joins the three pure strategies in the order $R\to S\to P\to R$. It is also well known (12) that the standard RSP game (with all $a_i=b_i=1$ in [2]) has periodic orbits of [1] around the unique NE $p^*=(1/3,1/3,1/3)$.
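The RSP claims can be explored numerically. The Python sketch below (NumPy assumed) builds a generalized RSP matrix under an assumed layout (rows and columns ordered R, S, P; the winner of each pairing gets some $b_i>0$ and the loser $-a_i<0$), solves for the interior rest point, and integrates the replicator equation with fixed-step RK4. The parameter choice $a=(4,2,4)$, $b=(2,6,4)$ is an illustrative assumption and not necessarily the game of Fig. 1; for it, the interior NE is $(10,8,11)/29$, the pure strategy R is an alternative best reply that violates the ESS stability condition, and a trajectory started at (0.5, 0.3, 0.2) approaches the NE. The second part checks that $p_1p_2p_3$ is conserved for the standard RSP game, which is why its interior orbits are closed.

```python
import numpy as np

def rsp_matrix(a, b):
    """Generalized RSP payoffs, rows/columns ordered (R, S, P); a_i, b_i > 0 assumed.

    Assumed layout: R gets b2 against S, S gets b3 against P, P gets b1 against R,
    and each loser gets -a_i, so R beats S, S beats P and P beats R.
    """
    a1, a2, a3 = a
    b1, b2, b3 = b
    return np.array([[0.0,  b2, -a3],
                     [-a1, 0.0,  b3],
                     [ b1, -a2, 0.0]])

def interior_ne(A):
    """Completely mixed rest point: solve A p = c * 1 together with sum(p) = 1."""
    m = A.shape[0]
    K = np.zeros((m + 1, m + 1))
    K[:m, :m] = A
    K[:m, m] = -1.0              # column for the unknown common payoff c
    K[m, :m] = 1.0               # probabilities sum to one
    rhs = np.zeros(m + 1)
    rhs[m] = 1.0
    return np.linalg.solve(K, rhs)[:m]

def rk4(p, A, t_end, dt=0.01):
    """Fixed-step RK4 for the replicator equation (Eq. 1)."""
    f = lambda q: q * (A @ q - q @ A @ q)
    for _ in range(int(round(t_end / dt))):
        k1 = f(p)
        k2 = f(p + 0.5 * dt * k1)
        k3 = f(p + 0.5 * dt * k2)
        k4 = f(p + dt * k3)
        p = p + dt * (k1 + 2 * k2 + 2 * k3 + k4) / 6.0
    return p

# Illustrative parameter choice (an assumption, not necessarily the game of Fig. 1).
A = rsp_matrix(a=(4.0, 2.0, 4.0), b=(2.0, 6.0, 4.0))
p_star = interior_ne(A)                       # equals (10, 8, 11) / 29
e1 = np.array([1.0, 0.0, 0.0])
# e1 is an alternative best reply to the interior NE p_star, yet
# pi(e1, e1) = 0 >= pi(p_star, e1) = -10/29, so stability condition ii) fails.
not_ess = e1 @ A @ e1 >= p_star @ A @ e1
p_late = rk4(np.array([0.5, 0.3, 0.2]), A, t_end=500.0, dt=0.02)

# Standard RSP (all a_i = b_i = 1): p1 * p2 * p3 is a constant of motion,
# so interior orbits are closed curves around (1/3, 1/3, 1/3).
A_std = rsp_matrix((1.0, 1.0, 1.0), (1.0, 1.0, 1.0))
q0 = np.array([0.6, 0.3, 0.1])
q_late = rk4(q0, A_std, t_end=20.0)
print(p_star * 29, not_ess, p_late, np.prod(q0), np.prod(q_late))
```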

Cyclic behavior is common not only in biology (e.g., predator–prey systems) but also in human behavior (e.g., business cycles, the emergence and subsequent disappearance of fads, etc.). Thus, it is not surprising that evolutionary game dynamics include cycles as well. In fact, as the number of strategies increases, even richer dynamical behavior such as chaotic trajectories can emerge (12).

What may be more surprising are the many classes of matrix games (10) for which these complicated dynamics do not appear (e.g., potential, stable, supermodular, zero-sum, doubly symmetric games), and for these the evolutionary outcome is often predicted through rationality arguments underlying Theorems 1 and 2. The emphasis in the remainder of the paper is on such situations for nonmatrix games.

Before doing so, it is important to mention that the replicator equation for doubly symmetric matrix games is formally equivalent to the continuous-time model of natural selection at a single (diploid) locus with m alleles (12, 20). Specifically, if $a_{ij}=a_{ji}$ is the fitness of the genotype carrying alleles i and j and $p_i$ is the frequency of allele i in the population, then [1] is the continuous-time selection equation of population genetics (14). It can then be shown that population mean fitness $\pi(p,p)$ is increasing (this is one part of the fundamental theorem of natural selection). Furthermore, the locally asymptotically stable rest points of [1] correspond precisely to the ESSs of the symmetric payoff matrix A, and all trajectories in the interior of $\Delta^m$ converge to a NE of A (20). Analogous results hold for the nonoverlapping generation (viability) selection model (12).
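The increase of mean fitness can be checked numerically. In the sketch below (NumPy assumed), a random symmetric 4×4 fitness matrix stands in for the genotype fitnesses $a_{ij}=a_{ji}$ (hypothetical values), and the selection equation is stepped forward with a small Euler step. For a symmetric matrix the continuous-time derivative of $\pi(p,p)=p\cdot Ap$ is $2\sum_i p_i\big(\pi(e_i,p)-\pi(p,p)\big)^2\ge0$, and with a small enough step the discrete increments are nonnegative as well.

```python
import numpy as np

rng = np.random.default_rng(0)
W = rng.uniform(0.5, 1.5, size=(4, 4))
A = (W + W.T) / 2                 # hypothetical symmetric fitnesses a_ij = a_ji

p = np.full(4, 0.25)              # initial allele frequencies
dt = 0.005
mean_fitness = [p @ A @ p]
for _ in range(4000):             # forward Euler steps of Eq. 1 (the selection equation)
    f = A @ p
    p = p + dt * p * (f - p @ f)
    mean_fitness.append(p @ A @ p)

increments = np.diff(mean_fitness)
print(mean_fitness[0], mean_fitness[-1], increments.min())
```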

Population Games.

Symmetric population games are the most straightforward generalizations of matrix games. In a symmetric population game at fixed population size with finitely many pure strategies, the payoff $\pi(e_i,p)$ of strategy $e_i$ is an arbitrary continuous function of the population state $p\in\Delta^m$. Matrix games then correspond to the case where $\pi(e_i,p)$ is linear in the components of p. If payoff is nonlinear, these are called “playing-the-field” evolutionary games (7). In either case, equating fitness to reproductive success again leads to the replicator equation (Eq. 1) on $\Delta^m$. The Folk Theorem is true for these population games as is Theorem 2b when an ESS is defined as locally superior using Theorem 2a. [The use of the term ESS becomes problematic for nonmatrix games because it often has several different possible meanings. For instance, an ESS as in Theorem 2b is called a “local ESS” by Hofbauer and Sigmund (9) to emphasize that the condition $\pi(p^*,p)>\pi(p,p)$ holds only for p that are sufficiently close (but not equal) to $p^*$. When there is ambiguity, we will instead use the phrase “locally superior” or “neighborhood superior” as appropriate.] On the other hand, an interior ESS need no longer be globally asymptotically stable (compare with Theorem 2c) because there may be more than one such ESS.

Population games have important applications to biology [e.g., sex-ratio game; Maynard Smith (7)] as well as to human behavior [e.g., congestion games; Sandholm (10)]. The following example, taken from behavioral ecology, illustrates that important game dynamics other than the replicator equation arise naturally.

Example 2 (Habitat Selection Game and Ideal Free Distribution): The foundation of the habitat selection game for a single species was laid by Fretwell and Lucas (21) before evolutionary game theory appeared. They were interested in predicting how a species (specifically, a bird species) of fixed population size should distribute itself among several resource patches if individuals would move to patches with higher fitness. They argued the outcome would be an ideal free distribution (IFD) defined as a patch distribution whereby the fitness of all individuals in any occupied patch would be the same and at least as high as what would be their fitness in any unoccupied patch (otherwise some individuals would move to a different patch). If there are H patches (or habitats) and an individual’s pure strategy $e_i$ corresponds to being in patch i (for $i=1,\dots,H$), we have a population game by equating the payoff of $e_i$ to the fitness in this patch. The verbal description of an IFD in this “habitat selection game” is then none other than that of a NE.

If patch fitness is decreasing in patch density (i.e., in the population size in the patch), Fretwell and Lucas proved that there is a unique IFD at each fixed total population size. Moreover, the IFD is an ESS that is globally asymptotically stable under the replicator equation (22). To see this, let $p\in\Delta^H$ be a distribution among the patches and $\pi(e_i,p)$ be the fitness in patch i. Then $\pi(e_i,p)$ depends only on the proportion $p_i$ in this patch [i.e., has the form $\pi(e_i,p)=\pi_i(p_i)$]. Because the vector field $\big(\pi_1(p_1),\dots,\pi_H(p_H)\big)$ is the gradient of the real-valued function $F(p)=\sum_{i=1}^{H}\int_0^{p_i}\pi_i(u)\,du$ defined on $\Delta^H$, we have a potential game (SI Appendix, section 1.1). Following Sandholm (10), it is a strictly stable game and so has a unique ESS, which is globally asymptotically stable under the replicator equation (as well as many other game dynamics).
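For decreasing patch fitness, the IFD can be computed by searching for the common fitness level of the occupied patches. The sketch below (NumPy assumed) does this by bisection for hypothetical linear fitness functions $\pi_i(p_i)=r_i-s_ip_i$ with $s_i>0$; the function name, the linear form, and the parameter values are all illustrative assumptions. It then runs the replicator equation with the same payoffs to show it approaching the same point, as the global stability result predicts.

```python
import numpy as np

def ideal_free_distribution(r, s, tol=1e-12):
    """IFD for hypothetical linear patch fitness pi_i(p_i) = r_i - s_i * p_i with s_i > 0.

    Bisection on the common fitness level lam: patches with r_i > lam are occupied
    with pi_i(p_i) = lam, the others stay empty, and the proportions sum to one.
    """
    r, s = np.asarray(r, dtype=float), np.asarray(s, dtype=float)
    occupancy = lambda lam: np.maximum(0.0, (r - lam) / s)
    lo, hi = r.min() - s.max() - 1.0, r.max()   # total occupancy > 1 at lo, = 0 at hi
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if occupancy(mid).sum() > 1.0:
            lo = mid
        else:
            hi = mid
    p = occupancy(0.5 * (lo + hi))
    return p / p.sum()

r = np.array([3.0, 2.0, 0.5])        # hypothetical patch qualities
s = np.array([2.0, 1.0, 1.0])        # hypothetical crowding effects
ifd = ideal_free_distribution(r, s)  # (2/3, 1/3, 0): both occupied patches have fitness 5/3

# The replicator equation with pi(e_i, p) = r_i - s_i p_i approaches the same point.
p = np.full(3, 1.0 / 3.0)
for _ in range(5000):                # forward Euler, dt = 0.01
    fit = r - s * p
    p = p + 0.01 * p * (fit - p @ fit)
print(ifd, p)
```

Patch 3 stays empty because its fitness when unoccupied (0.5) is below the common level 5/3 of the occupied patches, exactly the IFD condition stated above.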

Although Fretwell and Lucas (21) did not attach any dynamics to their model, movement among patches is discussed implicitly. Following Krivan et al. (22), let $I_{ij}(p)$ be the probability an individual in patch j moves to patch i per unit time if the current patch distribution is p (here $I_{jj}(p)$ is the probability of staying in patch j, so each column of $I(p)$ sums to 1). Then the corresponding continuous-time migration (or dispersal) dynamics in vector form is

$$\dot p=I(p)\,p-p, \tag{3}$$

where $I(p)$ is the migration matrix with entries $I_{ij}(p)$. The following result (22) is proved using a decreasing Lyapunov function.

Theorem 3. Suppose patch fitness is a decreasing function of patch density in a single-species habitat selection game. Then any migration dynamics 3 that satisfies the following two conditions evolves to the unique IFD.

-

a)

Individuals never move to a patch with lower fitness.

-

b)

If there is a patch with higher fitness than some occupied patch, some individuals move to a patch with highest fitness.

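We illustrate Theorem 3 when there are three patches. Suppose that at p, patch fitnesses are ordered $\pi_1(p_1)>\pi_2(p_2)>\pi_3(p_3)$ and consider the two migration matrices

$$I_1(p)=\begin{pmatrix}1&1&1\\0&0&0\\0&0&0\end{pmatrix},\qquad I_2(p)=\begin{pmatrix}1&1/3&1/3\\0&2/3&1/3\\0&0&1/3\end{pmatrix}.$$

$I_1(p)$ corresponds to a situation where individuals who move go to patch 1 because they know it has highest fitness. The corresponding game dynamics

$$\dot p=BR(p)-p, \tag{4}$$

where $BR(p)$ is the best response strategy to p, is called the best response dynamics (SI Appendix, section 1.1).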

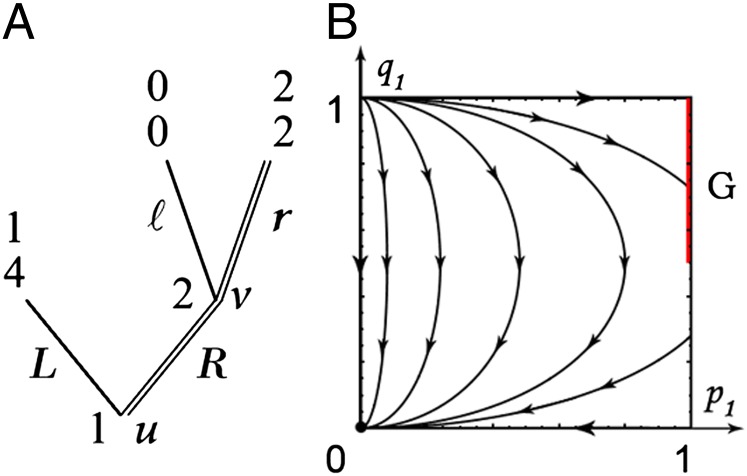

On the other hand, $I_2(p)$ models individuals who only gain fitness information by sampling one patch at random, moving to this patch if it has higher fitness than their current patch [e.g., an individual in patch 2 moves if it samples patch 1 and otherwise stays in its own patch (with probabilities 1/3 and 2/3, respectively)]. Several trajectories for each of these two migration dynamics are illustrated in Fig. 2 (see also SI Appendix, Fig. S1). As can be seen, all converge to the IFD as they must by Theorem 3, even though their paths to this rational outcome are quite different.

Fig. 2.

Trajectories for the three-patch habitat selection game with patch fitness decreasing in patch density. (A) Best response dynamics with migration matrices of the form $I_1(p)$ and (B) dynamics for nonideal animals with migration matrices of the form $I_2(p)$.
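The two migration rules described above (all movers go to a best patch; each individual samples one patch at random and moves only if it is better) can be simulated directly. The sketch below (NumPy assumed) takes the migration dynamics in the form $\dot p=I(p)p-p$ with a column-stochastic $I(p)$, which is an assumption consistent with the best response dynamics [4]. The linear patch fitness $\pi_i(p_i)=r_i-s_ip_i$, its parameters, the rule labels, and the function names are hypothetical. With forward Euler, both runs end near the IFD (2/3, 1/3, 0) of these payoffs, up to chattering of order `dt` caused by the discontinuous rules.

```python
import numpy as np

r = np.array([3.0, 2.0, 0.5])       # hypothetical patch fitness pi_i(p_i) = r_i - s_i p_i
s = np.array([2.0, 1.0, 1.0])

def migration_matrix(p, rule):
    """Column-stochastic I(p); entry [i, j] is the probability of going from patch j to i."""
    fit = r - s * p
    H = len(p)
    I = np.zeros((H, H))
    for j in range(H):
        if rule == "best_response":       # movers know the best patch (ties: lowest index)
            I[np.argmax(fit), j] = 1.0
        else:                             # "sample_one": sample one of the H patches uniformly
            for i in range(H):
                if fit[i] > fit[j]:
                    I[i, j] = 1.0 / H     # move only if the sampled patch is strictly better
            I[j, j] = 1.0 - I[:, j].sum() # otherwise stay in patch j
    return I

def migrate(p0, rule, t_end=20.0, dt=0.001):
    """Forward Euler for the migration dynamics dp/dt = I(p) p - p."""
    p = np.array(p0, dtype=float)
    for _ in range(int(round(t_end / dt))):
        p = p + dt * (migration_matrix(p, rule) @ p - p)
    return p

p_br = migrate([1/3, 1/3, 1/3], "best_response")
p_rs = migrate([0.1, 0.2, 0.7], "sample_one")
print(p_br, p_rs)    # both near the IFD (2/3, 1/3, 0), up to O(dt) chattering
```

When the fitness order is patch 1 > patch 2 > patch 3, `migration_matrix(p, "sample_one")` reproduces the matrix with columns (1, 0, 0), (1/3, 2/3, 0), and (1/3, 1/3, 1/3) described in the text.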

Finally, suppose information is gained by sampling a random individual, moving to its patch with probability proportional to the fitness difference only if the sampled individual has higher fitness. It is well known (13) that this “proportional imitation rule” leads to the replicator equation (SI Appendix, section 1.1). Since the proportional imitation rule satisfies the two conditions of Theorem 3, the unique IFD is globally asymptotically stable under [1].
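The link between proportional imitation and the replicator equation is a short computation. If an $e_i$-player samples an $e_j$-player with probability $p_j$ and switches with probability $\lambda\max(\pi_j-\pi_i,0)$, the net flow into strategy i is $\lambda p_i\sum_j p_j(\pi_i-\pi_j)=\lambda p_i(\pi_i-\bar\pi)$, which is $\lambda$ times the right-hand side of Eq. 1. The sketch below (NumPy assumed) checks this identity numerically at random population states; the habitat payoffs, the rate `lam`, and the function names are hypothetical.

```python
import numpy as np

def imitation_flow(p, fitness, lam=0.1):
    """Expected rate of change of p under the proportional imitation rule.

    A player of strategy i samples a random individual (strategy j with prob. p_j)
    and switches to j with probability lam * max(pi_j - pi_i, 0); lam is assumed
    small enough for this to be a probability.
    """
    f = fitness(p)
    flow = np.zeros_like(p)
    for i in range(len(p)):
        for j in range(len(p)):
            rate = lam * p[i] * p[j] * max(f[j] - f[i], 0.0)   # mass moving from i to j
            flow[i] -= rate
            flow[j] += rate
    return flow

def replicator(p, fitness, lam=0.1):
    """lam times the right-hand side of Eq. 1."""
    f = fitness(p)
    return lam * p * (f - p @ f)

r = np.array([3.0, 2.0, 0.5])       # hypothetical habitat-selection payoffs
s = np.array([2.0, 1.0, 1.0])
fitness = lambda p: r - s * p

rng = np.random.default_rng(1)
samples = rng.dirichlet(np.ones(3), size=5)
max_gap = max(np.abs(imitation_flow(q, fitness) - replicator(q, fitness)).max() for q in samples)
print(max_gap)      # zero up to floating-point rounding
```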

Fretwell and Lucas (21) briefly consider their IFD concept when patch fitness increases with patch density when density is low (the so-called Allee effect). Although Theorem 3 no longer applies, these habitat selection games are still potential games (but not strictly stable). Thus, all interior trajectories under many game dynamics (including the replicator equation and best response dynamics) converge to a NE (10). Several NE are already possible for two-patch models, some of which are locally asymptotically stable and some not. There is a difference of opinion whether to define IFD as any of these NE or restrict the concept to only those that are locally superior and/or asymptotically stable (23).

Habitat selection games also provide a natural setting for the effect of evolving population sizes, a topic of obvious importance in population biology that has so far received little attention in models of social behavior. A population-migration dynamics emerges if population size N evolves through fitness taken literally as reproductive success (SI Appendix, section 1.1). As discussed in SI Appendix, section 1.1, if patch fitness is positive when unoccupied, decreases with patch density and eventually becomes negative, then the system evolves to carrying capacity whenever the migration matrix satisfies the two conditions in Theorem 3 for each N. In particular, the evolutionary outcome is independent of the time scale of migration compared with that of changing population size, a notable result since it is often not true when two dynamical processes are combined.

Games with Continuous Strategy Spaces.

Game dynamics when players can choose from a continuum of pure strategies S become complicated, especially if individual payoff depends on the population state [which is now a distribution P in the set $\Delta(S)$ of probability measures on S]. One approach to avoid these complications is to assume that the population is always monomorphic at its mean $x\in S$. When S is a convex compact subset of $\mathbb{R}$ (i.e., a closed and bounded interval), it is further assumed that x evolves through trait substitution in the direction y of nearby mutants that can invade due to their higher payoff than x when playing against this monomorphism. If $\pi(y,x)$ is the payoff of a mutant using strategy y in an otherwise monomorphic population x, then x increases (decreases) if $\pi(y,x)$ is an increasing (decreasing) function of y for y close to x.

The most elementary dynamics to model these assumptions is called the “canonical equation of adaptive dynamics” which has the form (up to a change in time scale)

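$$\dot x=\left.\frac{\partial\pi(y,x)}{\partial y}\right|_{y=x}. \tag{5}$$

A rest point $x^*$ [i.e., $\partial\pi(y,x^*)/\partial y\,\big|_{y=x^*}=0$] is convergence stable (24) if it is asymptotically stable under [5]. $x^*$ is convergence stable if and only if $\dfrac{\partial^2\pi}{\partial y^2}+\dfrac{\partial^2\pi}{\partial y\,\partial x}<0$ at $(y,x)=(x^*,x^*)$. On the other hand, $x^*$ is a neighborhood-strict NE if and only if $\dfrac{\partial^2\pi}{\partial y^2}<0$ at $(x^*,x^*)$ (SI Appendix, section 1.2).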

Thus, under adaptive dynamics, a strict NE is not necessarily attainable from nearby populations (i.e., it need not be convergence stable) and, conversely, a convergence stable rest point need not be a NE. That is, parts a and c of the Folk Theorem are not true. A convergence stable $x^*$ that is not a neighborhood-strict NE is called an “evolutionary branching point” (25) since, in a dimorphic population with some individuals using pure strategies on either side of $x^*$, these nearby strategies evolve away from $x^*$ [as can be shown by the second-order Taylor polynomial expansion of $\pi(y,x)$ about $(x^*,x^*)$]. For this reason, only those $x^*$ that satisfy both conditions i and ii given by

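i) $\dfrac{\partial^2\pi}{\partial y^2}(x^*,x^*)<0$ (neighborhood-strict NE condition) and

ii) $\Big(\dfrac{\partial^2\pi}{\partial y^2}+\dfrac{\partial^2\pi}{\partial y\,\partial x}\Big)(x^*,x^*)<0$ (convergence stability condition)

are considered to be stable for models based on adaptive dynamics.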

Conditions i and ii are equivalent, respectively, to $\pi(y,x^*)<\pi(x^*,x^*)$ for all $y\ne x^*$ sufficiently close to $x^*$, and to $\pi(y,x)>\pi(x,x)$ whenever x is close to $x^*$ and y is near x but closer to $x^*$. These define the concept of a continuously stable strategy (CSS) in terms of static payoff comparisons, as introduced earlier by Eshel (26) to generalize the ESS to one-dimensional (1D) continuous strategy spaces. A CSS is then stable for models based on adaptive dynamics.
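Both the canonical equation and the classification into CSS or branching point need only local derivatives of $\pi(y,x)$. The plain-Python sketch below estimates them by finite differences (an illustrative numerical choice), runs the canonical equation [5] with forward Euler, and labels the resulting rest point. The quadratic payoffs are hypothetical: both have rest point $x^*=1$, but only the first satisfies condition i.

```python
def selection_gradient(pi, x, h=1e-5):
    """Central-difference estimate of d pi(y, x) / dy at y = x: the right-hand side of Eq. 5."""
    return (pi(x + h, x) - pi(x - h, x)) / (2 * h)

def adaptive_dynamics(pi, x0, t_end=50.0, dt=0.01):
    """Forward Euler for the canonical equation dx/dt = d pi(y, x)/dy at y = x."""
    x = x0
    for _ in range(int(round(t_end / dt))):
        x += dt * selection_gradient(pi, x)
    return x

def classify(pi, x, h=1e-4):
    """Finite-difference second derivatives at (x, x) and the resulting label."""
    d_yy = (pi(x + h, x) - 2 * pi(x, x) + pi(x - h, x)) / h**2
    d_yx = (pi(x + h, x + h) - pi(x + h, x - h) - pi(x - h, x + h) + pi(x - h, x - h)) / (4 * h**2)
    strict_ne = d_yy < 0                      # condition i
    convergence_stable = d_yy + d_yx < 0      # condition ii
    if strict_ne and convergence_stable:
        return "CSS"
    if convergence_stable:
        return "evolutionary branching point"
    if strict_ne:
        return "neighborhood-strict NE that is not convergence stable"
    return "neither condition holds"

# Hypothetical quadratic payoffs pi(y, x) of a mutant y in a resident population x.
css_payoff = lambda y, x: -y**2 + x * y + y                   # d_yy = -2, d_yx = 1
branching_payoff = lambda y, x: 0.5 * y**2 - 2 * x * y + y    # d_yy = 1, d_yx = -2

x_css = adaptive_dynamics(css_payoff, x0=0.0)
x_branch = adaptive_dynamics(branching_payoff, x0=0.0)
print(x_css, classify(css_payoff, x_css))              # about 1.0, CSS
print(x_branch, classify(branching_payoff, x_branch))  # about 1.0, evolutionary branching point
```

Both runs converge to $x^*=1$ because both payoffs give the same selection gradient $1-x$; adaptive dynamics alone cannot tell them apart, which is why condition i is imposed separately.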

However, a CSS need not be stable under the replicator equation (Eq. 6) for continuous strategy spaces. When payoffs result from pairwise interactions between individuals and $\pi(x,y)$ is interpreted as the payoff to x against y, then the expected payoff to x in a random interaction is $\pi(x,P)=\int_S\pi(x,y)\,P(dy)$, where P is the probability measure on S corresponding to the current distribution of the population’s strategies. With $\pi(P,P)=\int_S\pi(x,P)\,P(dx)$ the mean payoff of the population and B a Borel subset of S, the replicator equation (27)

$$\frac{dP_t(B)}{dt}=\int_B\big(\pi(x,P_t)-\pi(P_t,P_t)\big)\,P_t(dx) \tag{6}$$

has a unique solution given any initial $P_0$ in the infinite-dimensional space $\Delta(S)$ of Borel probability measures over the strategy space S (28) (SI Appendix, section 1.2). The replicator equation describes the evolution of the population strategy distribution $P_t$. From this perspective, the canonical equation becomes a heuristic tool that approximates the evolution of the population mean by ignoring effects due to the diversity of strategies in the population.

From SI Appendix, section 1.2, to guarantee that $x^*$ is stable under [6], $x^*$ must be a neighborhood-strict NE as well as a NIS, defined as $\pi(x^*,x)>\pi(x,x)$ for all $x\ne x^*$ near $x^*$ (29). These two conditions are equivalent to $x^*$ being neighborhood superior [i.e., $\pi(x^*,P)>\pi(P,P)$ for all distributions P whose support is sufficiently close to $x^*$ but not equal to $\{x^*\}$] (19).
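The gap between CSS and neighborhood superiority is easy to see for quadratic payoffs. For a hypothetical $\pi(y,x)=ay^2+bxy+cy$ with rest point $x^*$ of [5], one finds $\pi(x,x^*)-\pi(x^*,x^*)=a(x-x^*)^2$ and $\pi(x^*,x)-\pi(x,x)=-(a+b)(x-x^*)^2$, so a neighborhood-strict NE needs $a<0$ and a NIS needs $a+b<0$, whereas convergence stability needs $2a+b<0$. The sketch below (NumPy assumed; the function name and both payoff functions are illustrative) checks these conditions on a grid for two CSSs with $x^*=1$, only one of which is a NIS.

```python
import numpy as np

def static_checks(pi, x_star, radius=0.1, n=201):
    """Grid check, over points x != x_star within `radius`, of
    neighborhood-strict NE:  pi(x, x_star) < pi(x_star, x_star)
    NIS:                     pi(x_star, x) > pi(x, x)
    where pi(y, x) is the payoff to y against (a population at) x."""
    xs = x_star + np.linspace(-radius, radius, n)
    xs = xs[np.abs(xs - x_star) > 1e-12]
    strict_ne = all(pi(x, x_star) < pi(x_star, x_star) for x in xs)
    nis = all(pi(x_star, x) > pi(x, x) for x in xs)
    return strict_ne, nis

# Hypothetical quadratic payoffs, both with rest point x* = 1 of the canonical
# equation and both CSSs (a = -1 < 0 and 2a + b < 0).
superior = lambda y, x: -y**2 + 0.5 * x * y + 1.5 * y   # a + b = -0.5 < 0
css_only = lambda y, x: -y**2 + 1.5 * x * y + 0.5 * y   # a + b = +0.5 > 0

print(static_checks(superior, 1.0))   # (True, True): neighborhood superior
print(static_checks(css_only, 1.0))   # (True, False): a CSS that is not a NIS
```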

The replicator equation as well as concepts of neighborhood-strict NE, NIS, and neighborhood superiority have straightforward generalizations to multidimensional continuous strategy spaces where the stability results remain true (19). The CSS and canonical equation of adaptive dynamics have also been generalized (30, 31), but these depend on the direction(s) in which mutants are more likely to appear. If $x^*$ is stable for all such directions (called “strong convergence stability”), the corresponding CSS has strong stability properties similar to those of the ESS for matrix games.

Multiplayer Games.

Matrix games are special types of population games where individuals interact in two-player contests. In multiplayer games with a finite set S of pure strategies, interactions are formed among n players where $n\ge3$ is fixed. For example, if $n=3$, the expected payoff to $e_i$ in a random interaction if the population has state $p\in\Delta^m$ is

$$\pi(e_i,p)=\sum_{j,k=1}^{m}a_{i,jk}\,p_jp_k,$$

where the payoff $a_{i,jk}$ to $e_i$ in the symmetric three-player game is assumed to depend on the other two players but not their order [i.e., $a_{i,jk}=a_{i,kj}$].

Multiplayer games are then a class of population games where payoffs are nonlinear in the population state. Thus, by the section Population Games, the Folk Theorem and Theorem 2b hold for any multiplayer game with finitely many pure strategies under the replicator equation (Eq. 1). Bukowski and Miekisz (32) (see also ref. 8) characterize an ESS in terms of uninvadability [i.e., local superiority as in Theorem 2a]. Then, for three-player games, $p^*$ is an ESS if and only if, for all $p\ne p^*$,

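i) $\pi(p;p^*,p^*)\le\pi(p^*;p^*,p^*)$ (NE condition),

ii) if $\pi(p;p^*,p^*)=\pi(p^*;p^*,p^*)$, then $\pi(p;p^*,p)\le\pi(p^*;p^*,p)$,

iii) if $\pi(p;p^*,p^*)=\pi(p^*;p^*,p^*)$ and $\pi(p;p^*,p)=\pi(p^*;p^*,p)$, then $\pi(p;p,p)<\pi(p^*;p,p)$,

where $\pi(p;q,r)=\sum_{i,j,k}p_i\,a_{i,jk}\,q_jr_k$. That is, an ESS satisfies the NE condition i as well as two stability conditions ii and iii.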
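The three conditions translate directly into code once the payoffs are stored as a tensor with entries $a_{i,jk}$. In the sketch below (NumPy assumed; the function names and the game are hypothetical), $e_1$ always earns 0 while $e_2$ earns $+1$ against two $e_1$ opponents and $-1$ against two $e_2$ opponents, so $\pi(e_2,p)=2p_1-1$. The payoff advantage of $e_1$, namely $1-2p_1$, decreases through zero at $p_1=1/2$, and the check confirms that $(1/2,1/2)$ resists nearby and distant mutants, with condition ii doing the work.

```python
import numpy as np

def pi3(p, q, r, T):
    """pi(p; q, r) = sum_{i,j,k} p_i a_{i,jk} q_j r_k, with T[i, j, k] = a_{i,jk}."""
    return float(np.einsum("i,ijk,j,k->", p, T, q, r))

def resists_mutant_3(T, p_star, p, tol=1e-12):
    """Conditions i)-iii) of the text for resident p_star against mutant p."""
    d0 = pi3(p_star, p_star, p_star, T) - pi3(p, p_star, p_star, T)
    if abs(d0) > tol:                 # decided by the NE condition i)
        return d0 > 0
    d1 = pi3(p_star, p_star, p, T) - pi3(p, p_star, p, T)
    if abs(d1) > tol:                 # decided by condition ii)
        return d1 > 0
    return pi3(p_star, p, p, T) - pi3(p, p, p, T) > tol   # condition iii)

# Hypothetical two-strategy, three-player game (indices 0 and 1 stand for e_1 and e_2).
T = np.zeros((2, 2, 2))
T[1, 0, 0], T[1, 1, 1] = 1.0, -1.0    # symmetric in (j, k) as required

p_star = np.array([0.5, 0.5])
mutants = [np.array([0.5 + h, 0.5 - h]) for h in (-0.4, -0.1, -0.01, 0.01, 0.1, 0.4)]
print(all(resists_mutant_3(T, p_star, p) for p in mutants))             # True
print(resists_mutant_3(T, np.array([1.0, 0.0]), np.array([0.0, 1.0])))  # False: e_1 is not a NE
```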

Bukowski and Miekisz (32) go on to classify the ESS structure of all three-player two-strategy games, showing in particular that an interior ESS need not be globally asymptotically stable (compare with Theorem 2c). In fact, they also provide an example of a four-player two-strategy supersymmetric game [i.e., $a_{i,jkl}$ is the same for all permutations of the fixed indices $i,j,k,l$] that has two interior ESSs. Furthermore, for all two-strategy multiplayer games, $p^*$ is locally asymptotically stable if and only if $p^*$ is an ESS.

The above discussion assumes that the multiplayer game has finitely many pure strategies. In the following example, this is no longer the case.

Example 3 (Public Goods Game): The public goods game (PGG) is a multiplayer game where each player is given an initial endowment $E>0$ and then decides how much x of this endowment to contribute to a common pool (i.e., $0\le x\le E$). All contributions to the common pool are multiplied by a factor $r>1$ and then evenly distributed among all n players. A player’s payoff is then the remainder of his endowment plus what he receives from the public pool. If he contributes x and the other $n-1$ players contribute $x_1,\dots,x_{n-1}$, respectively, his payoff is given as

$$\pi(x;x_1,\dots,x_{n-1})=E-x+\frac{r}{n}\Big(x+\sum_{i=1}^{n-1}x_i\Big). \tag{7}$$

It is assumed that $r<n$, and so each player receives only part of his own contribution to the common pool (i.e., $rx/n<x$ for $x>0$).

The only NE is for each player to contribute 0 (i.e., to free-ride), since $\pi(0;x_1,\dots,x_{n-1})-\pi(x;x_1,\dots,x_{n-1})=(1-r/n)\,x>0$ for all $x>0$. On the other hand, if all players contribute E, each receives $rE$, which exceeds the payoff E that each receives when everyone free-rides and is the highest payoff that all players can obtain simultaneously. PGG is the multiplayer version of the prisoner’s dilemma (PD) game (33), with free-riding (respectively, contributing E) corresponding to defect (respectively, cooperate). PGG and PD have been used as the standard examples of social dilemmas to investigate the evolution of cooperation in human and other societies (33, 34), from both a theoretical and an empirical perspective.

Since PGG has a continuous strategy space, the Folk Theorem may no longer apply (as we saw above). It is therefore important to analyze stability under game dynamics such as the replicator equation and adaptive dynamics. From [7], the expected payoff of an individual playing y in a group whose other players are chosen at random from a population with state P is $\pi(y,P)=E-y+\frac{r}{n}\big(y+(n-1)\bar x\big)$, where $\bar x=\int x\,P(dx)$ is the average contribution of an individual in the population (SI Appendix, section 1.3). Furthermore, the population mean payoff is $\pi(P,P)=E+(r-1)\bar x$.

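Following Cressman et al. (35), the evolution of $\bar x$ under the replicator equation (Eq. 6) is $\dot{\bar x}=-(1-r/n)\,\mathrm{Var}(P_t)\le0$, where $\mathrm{Var}(P_t)$ is the variance of $P_t$, with equality if and only if P is a distribution that has all its weight on some $x\in[0,E]$. Since $P_t$ has the same support as $P_0$ for all t, $\bar x$ evolves to the smallest element in the support of $P_0$. In particular, if there are some free-riders in the original population distribution, $P_t$ evolves to $\delta_0$ (the distribution with all its weight on 0) in the weak topology. This result also follows from the fact that $\pi(0,P)>\pi(P,P)$ if $P\ne\delta_0$ [i.e., 0 is (globally) neighborhood superior in PGG].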

The corresponding adaptive dynamics is $\dot x=r/n-1<0$ for x in the interior of $[0,E]$, and so x evolves to 0 for this game dynamics as well. That is, neither game dynamics predicts that cooperative behavior will emerge in the PGG social dilemma.
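The decline of the mean contribution can be reproduced with a discretized version of the replicator equation, in which a finite grid of contribution levels stands in for $[0,E]$ (an approximation chosen for illustration). In the sketch below (NumPy assumed), $E$, $r$, $n$, the grid, and the uniform initial distribution are hypothetical. Because payoffs are linear in the own contribution, the first Euler step changes the mean by exactly `dt` times $-(1-r/n)$ times the initial variance, and the mean keeps falling toward 0.

```python
import numpy as np

E, r, n = 1.0, 3.0, 5                 # hypothetical endowment, factor (1 < r < n), group size
xs = np.linspace(0.0, E, 51)          # grid of contribution levels standing in for [0, E]
w = np.full(xs.size, 1.0 / xs.size)   # initial distribution P_0: uniform on the grid

def payoffs(w):
    """pi(y, P) = E - y + (r / n) * (y + (n - 1) * mean contribution), for each grid y."""
    mean_x = w @ xs
    return E - xs + (r / n) * (xs + (n - 1) * mean_x)

dt = 0.01
means = [w @ xs]
for _ in range(5000):                 # forward Euler for the discretized replicator equation
    f = payoffs(w)
    w = w + dt * w * (f - w @ f)
    means.append(w @ xs)

print(means[0], means[-1])            # mean contribution falls from 0.5 toward 0
```

On this grid the approach is gradual: the weights evolve roughly like $\exp\!\big(-(1-r/n)\,x\,t\big)$, so mass piles up near 0 without reaching it in finite time.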

Asymmetric Games

An asymmetric game is a multiplayer game where the players are assigned roles with a certain probability and, to each role, there is a set of strategies. If there is only one role, then we have a symmetric game. Here we concentrate on two-player two-role asymmetric games with finite pure strategy sets $S=\{e_1,\dots,e_m\}$ and $T=\{f_1,\dots,f_n\}$, respectively. These are also called “two-species games” (roles correspond to species) with intraspecific (respectively, interspecific) interactions among players in the same role (respectively, different roles). We also assume that the expected payoffs $\pi_1(e_i;p,q)$ and $\pi_2(f_j;p,q)$ to $e_i$ in species 1 and to $f_j$ in species 2 are linear in the components of the population states $p\in\Delta^m$ and $q\in\Delta^n$. One interpretation of linearity is that each player engages in one intraspecific and one interspecific random pairwise interaction per unit time.

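The corresponding replicator equation on the $(m+n-2)$-dimensional strategy space $\Delta^m\times\Delta^n$ is then

$$\dot p_i=p_i\big(\pi_1(e_i;p,q)-\pi_1(p;p,q)\big),\qquad \dot q_j=q_j\big(\pi_2(f_j;p,q)-\pi_2(q;p,q)\big), \tag{8}$$

where, for example, $\pi_1(p;p,q)=\sum_i p_i\,\pi_1(e_i;p,q)$ is the mean payoff of species 1. The Folk Theorem is valid under [8], where a NE is a strategy pair $(p^*,q^*)$ such that $\pi_1(p;p^*,q^*)\le\pi_1(p^*;p^*,q^*)$ for all $p\in\Delta^m$ and $\pi_2(q;p^*,q^*)\le\pi_2(q^*;p^*,q^*)$ for all $q\in\Delta^n$ (it is strict if both inequalities are strict whenever $p\ne p^*$ and $q\ne q^*$, respectively).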

To generalize Theorem 2, consider the uninvadability approach of Maynard Smith and Price (3) for the resident–mutant system, where residents and mutants use strategy pairs $(p^*,q^*)$ and $(p,q)$, respectively. $(p^*,q^*)$ is called a “two-species ESS” (36) if it is locally asymptotically stable under the corresponding 2D (mixed-strategy) replicator equation for all $(p,q)\ne(p^*,q^*)$. The following result (9, 12, 20) corresponds to Theorem 2.

Theorem 4.

a) $(p^*,q^*)$ is a two-species ESS if and only if

$$\text{either}\quad\pi_1(p^*;p,q)>\pi_1(p;p,q)\quad\text{or}\quad\pi_2(q^*;p,q)>\pi_2(q;p,q) \tag{9}$$

for all strategy pairs $(p,q)$ that are sufficiently close (but not equal) to $(p^*,q^*)$.

b) A two-species ESS is a locally asymptotically stable rest point of the replicator equation (Eq. 8).

c) A two-species ESS in the interior of $\Delta^m\times\Delta^n$ is a globally asymptotically stable rest point of the replicator equation.

Condition 9 for $(p^*,q^*)$ is thus the logical extension to two-species games of local superiority. It has been further extended to asymmetric games having continuous strategy spaces where it is called “neighborhood superior” (19) when applied to pure strategy pairs and distributions with nearby support. A neighborhood superior pair $(x^*,y^*)$ is then stable under the measure-theoretic replicator equation that extends [6] to two-species systems. A related condition (called “neighborhood half-superior”) corresponds to the CSS concept and stability under adaptive dynamics (19, 31).

The theory with finitely many pure strategies has also been extended to two-species population games with nonlinear payoffs (10). For the habitat selection game with two competitive species, individual fitness decreases as the density of either species in its patch increases. Krivan et al. (22) show that the IFD [defined as a distribution where the fitness of species 1 in all patches occupied by this species are equal and at least as high as in any patch unoccupied by this species (and the same for species 2)] is not always stable unless it is also a two-species ESS. This result brings into question how the single-species IFD of Fretwell and Lucas (21) should be defined for two (or more) species.

Asymmetric games with no intraspecific interactions (i.e., the probability individuals in the same role interact is 0) were considered early on by Selten (37), who called them “truly asymmetric games.” With two players, two roles, and our linearity assumption on expected payoffs, these become bimatrix games because $\pi_1(e_i;p,q)=e_i\cdot Aq$ and $\pi_2(f_j;p,q)=f_j\cdot Bp$, where, for example, A is the $m\times n$ matrix whose entry $a_{ij}$ is the payoff to $e_i$ when playing against $f_j$.

It is well known (12) that a strategy pair $(p^*, q^*)$ is a locally asymptotically stable rest point of the bimatrix replicator equation (Eq. 8) if and only if it is a strict NE (SI Appendix, section 2). Moreover (38), $(p^*, q^*)$ is a strict NE if and only if it is a two-species ESS [e.g., for a mutant pair $(p, q^*)$ with $p \neq p^*$ close to $p^*$, species 2 residents and mutants play the same strategy, so condition 9 requires $p^* \cdot A q^* > p \cdot A q^*$]. Unfortunately, many bimatrix games have no strict NE, and so game dynamics may not predict a NE outcome for them. However, more can be said when these bimatrix normal form games come from a corresponding extensive form (e.g., Theorem 5 in the following section).
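Because a strict NE is necessarily a pure strategy pair, the strict NE of a bimatrix game (and hence, by the results just cited, its two-species ESSs and the locally asymptotically stable rest points of Eq. 8) can be found by checking each pure pair. A minimal sketch, assuming the convention that A[i, j] and B[j, i] are the payoffs to players 1 and 2 when player 1 uses pure strategy i and player 2 uses pure strategy j; the function name is hypothetical.

```python
import numpy as np


def strict_pure_ne(A: np.ndarray, B: np.ndarray) -> list:
    """Pure strategy pairs (i, j) that are strict NE of the bimatrix game.

    A has shape (n, m): A[i, j] is player 1's payoff for (i, j).
    B has shape (m, n): B[j, i] is player 2's payoff for (i, j).
    """
    n, m = A.shape
    strict = []
    for i in range(n):
        for j in range(m):
            # i must do strictly better than every other row against column j,
            # and j strictly better than every other column against row i.
            best_for_1 = np.all(A[i, j] > np.delete(A[:, j], i))
            best_for_2 = np.all(B[j, i] > np.delete(B[:, i], j))
            if best_for_1 and best_for_2:
                strict.append((i, j))
    return strict


# Matching pennies: no strict NE, so Eq. 8 has no asymptotically stable rest point.
A_mp = np.array([[1, -1], [-1, 1]])
print(strict_pure_ne(A_mp, -A_mp.T))   # []

# A coordination game with two strict NE.
A_co = np.array([[2, 0], [0, 1]])
print(strict_pure_ne(A_co, A_co.T))    # [(0, 0), (1, 1)]
```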

Asymmetric Extensive Form Games.

Extensive form games, whose decision trees describe a finite series of interactions between the same two players (with the set of actions available at later interactions possibly depending on the choices made previously), were introduced alongside normal form games by von Neumann and Morgenstern (39). From an evolutionary game perspective, differences from normal form intuition already emerge for games of perfect information (SI Appendix, section 2.1) with short decision trees, as illustrated in this section (see also ref. 13).

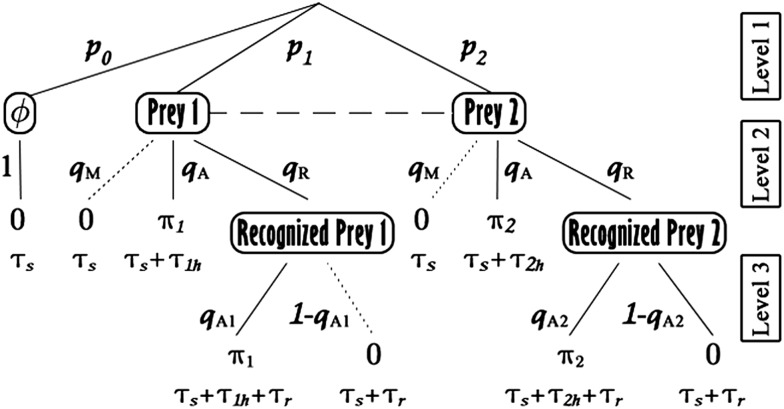

Consider the elementary perfect information game of Fig. 3A, which goes by several names [e.g., the "chain store game" (38) and the "entry deterrence game" (17)]. Player 1 has one decision node u, where he chooses between the actions L and R. If he takes action L, player 1 gets payoff 1 and player 2 gets 4. If he takes action R, then we reach the decision node v of player 2, who then chooses between ℓ and r, leading to both players receiving payoff 0 or both receiving payoff 2, respectively.

Fig. 3.

The extensive form (A) of the chain store game and trajectories (B) of the replicator equation (Eq. 8).

The corresponding bimatrix normal form [e.g., 1 and 4 are the payoffs to players 1 and 2, respectively, when they play $(L, \ell)$]

$$\begin{array}{c|cc} & \ell & r \\ \hline L & 1,\,4 & 1,\,4 \\ R & 0,\,0 & 2,\,2 \end{array}$$

has two NE outcomes. One is the two-species ESS and strict NE pair $(R, r)$. On the other hand, if player 1 chooses L, then player 2 is indifferent to what strategy he uses since his payoff is always 4. Furthermore, player 1 is no better off by playing R with positive probability if and only if player 2 plays ℓ at least half the time (R then yields at most $2 \times 1/2 = 1$, the payoff from L). Thus,

$$G = \{(L, \lambda \ell + (1 - \lambda) r) : 1/2 \le \lambda \le 1\}$$

is a set of NE (called a "NE component" since it is a connected set of NE that is not contained in any larger connected set of NE), all corresponding to the same NE outcome; namely, the path L that leads to payoffs 1 and 4.
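The NE claims above can be checked numerically from the payoffs of Fig. 3A. The sketch below, a minimal illustration with hypothetical function names, stores both players' payoffs with rows (L, R) and columns (ℓ, r) and tests the NE condition that no pure strategy does strictly better than the current mixed strategy.

```python
import numpy as np

# Chain store game: rows are player 1's actions (L, R), columns are
# player 2's actions (l, r).
A = np.array([[1.0, 1.0],    # payoffs to player 1
              [0.0, 2.0]])
B = np.array([[4.0, 4.0],    # payoffs to player 2
              [0.0, 2.0]])


def is_nash(p, q, tol=1e-12) -> bool:
    """(p, q) are mixed strategies of players 1 and 2 (probability vectors).

    For a bimatrix game it suffices to compare against pure deviations."""
    p, q = np.asarray(p, float), np.asarray(q, float)
    u1 = A @ q     # player 1's payoff to L and to R against q
    u2 = p @ B     # player 2's payoff to l and to r against p
    return bool(u1.max() <= p @ u1 + tol and u2.max() <= q @ u2 + tol)


def in_component_G(lam: float) -> bool:
    """Is (L, lam*l + (1 - lam)*r) a NE?"""
    return is_nash([1.0, 0.0], [lam, 1.0 - lam])


print([in_component_G(lam) for lam in (0.4, 0.5, 0.75, 1.0)])  # [False, True, True, True]
print(is_nash([0, 1], [0, 1]), is_nash([0, 1], [1, 0]))          # True False
```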

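The trajectories of the replicator equation (Eq. 8) are shown in Fig. 3B. Write $p_L$ for the probability that player 1 plays L and $q_\ell$ for the probability that player 2 plays ℓ. Since L always yields 1, R yields $2(1 - q_\ell)$, and r yields $2(1 - p_L)$ more than ℓ, Eq. 8 reduces to

$$\dot p_L = p_L (1 - p_L)(2 q_\ell - 1), \qquad \dot q_\ell = -2\, q_\ell (1 - q_\ell)(1 - p_L).$$

All points on the vertical edge $p_L = 1$ are rest points, although only those in G are limit points of interior trajectories. The six results below for this example follow since $q_\ell$ is always strictly decreasing and $p_L$ is strictly increasing (decreasing) if and only if $q_\ell > 1/2$ ($q_\ell < 1/2$) along any interior trajectory. They hold in general by Theorem 5 (13).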
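For the chain store game, writing $p_L$ for the probability that player 1 plays L and $q_\ell$ for the probability that player 2 plays ℓ, the replicator equation (Eq. 8) reduces to two coupled two-strategy equations that can be integrated numerically to reproduce the qualitative picture of Fig. 3B. This is a minimal forward-Euler sketch; the step size, horizon, and function names are arbitrary, hypothetical choices. It also uses a fact that follows from these equations by direct differentiation: $q_\ell(1 - q_\ell)/p_L^2$ is constant along interior trajectories, which predicts where each trajectory ends up.

```python
import math


def replicator_rhs(pL: float, ql: float):
    """Replicator equation for the chain store game (Fig. 3A).

    pL: probability player 1 plays L; ql: probability player 2 plays l."""
    u_L, u_R = 1.0, 2.0 * (1.0 - ql)                  # player 1's payoffs to L and R
    u_l, u_r = 4.0 * pL, 4.0 * pL + 2.0 * (1.0 - pL)  # player 2's payoffs to l and r
    # With two strategies, x' = x (1 - x) (payoff difference).
    return pL * (1.0 - pL) * (u_L - u_R), ql * (1.0 - ql) * (u_l - u_r)


def simulate(pL: float, ql: float, dt: float = 0.01, steps: int = 10_000):
    """Forward-Euler integration up to time dt * steps (interior start)."""
    for _ in range(steps):
        dp, dq = replicator_rhs(pL, ql)
        pL, ql = pL + dt * dp, ql + dt * dq
    return pL, ql


def predicted_limit(pL0: float, ql0: float):
    """Limit point predicted by the invariant K = ql (1 - ql) / pL**2."""
    K = ql0 * (1.0 - ql0) / pL0 ** 2
    if ql0 > 0.5 and K <= 0.25:
        return 1.0, (1.0 + math.sqrt(1.0 - 4.0 * K)) / 2.0   # a point of G
    return 0.0, 0.0                                           # the strict NE (R, r)


for start in [(0.9, 0.9), (0.5, 0.9), (0.5, 0.2)]:
    print(start, simulate(*start), predicted_limit(*start))
```

The first start converges to a point of G with $q_\ell \approx 0.87$, while the other two converge to $(R, r)$, even though the second starts with player 2 playing ℓ 90% of the time.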

1) Every NE outcome is a single terminal node.

2) Every NE component G includes a pure strategy pair.

3) The outcomes of all elements of G are the same.

4) Every interior trajectory converges to a NE.

5) If a NE component is interior attracting, it includes the subgame perfect NE (SPNE) defined below.

6) A NE is locally asymptotically stable if and only if it is a strict SPNE if and only if it is pervasive (i.e., it reaches every player decision point).

Theorem 5. These six results are true for all generic perfect information games without moves by nature.

Some game theorists argue that these games have only one rational NE outcome and that it can be found by backward induction. This procedure starts at a final player decision node (i.e., a player decision node with no player decision nodes following it) and determines the unique action that maximizes the payoff of the player who moves there, in the subgame rooted at this node. The game tree is then truncated at this node by replacing it with a terminal node whose payoffs to the two players are those produced by this action. Continuing until no player decision nodes remain yields the SPNE. Equivalently, the strategy pair constructed by backward induction induces a NE in every subgame (i.e., in the subtree rooted at any player decision node). It is a pure strategy pair, indicated by the double lines in the decision tree of Fig. 3A. If a NE is not subgame perfect, then this perspective argues that it relies on an incredible threat at some player decision node, such as player 2 threatening to force payoff 0 by playing ℓ if node v is reached (38).
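The backward induction procedure just described amounts to a post-order recursion over the game tree: each subgame is solved before the node above it. A minimal sketch for two-player games of perfect information without moves by nature; the classes `Terminal` and `Decision` and the function `backward_induction` are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass
from typing import Dict, Tuple, Union


@dataclass
class Terminal:
    payoffs: Tuple[float, float]           # (payoff to player 1, payoff to player 2)


@dataclass
class Decision:
    name: str                              # label of the decision node, e.g. "u"
    player: int                            # 0 for player 1, 1 for player 2
    actions: Dict[str, Union["Decision", Terminal]]


def backward_induction(node, plan=None):
    """Return (payoffs, plan): the payoffs reached by the backward-induction
    strategies and the action chosen at every decision node, on or off the
    path of play. Ties are broken in favor of the first action listed, which
    never matters in a generic game."""
    if plan is None:
        plan = {}
    if isinstance(node, Terminal):
        return node.payoffs, plan
    best_action, best_payoffs = None, None
    for action, child in node.actions.items():
        payoffs, _ = backward_induction(child, plan)   # solve the subgame first
        if best_payoffs is None or payoffs[node.player] > best_payoffs[node.player]:
            best_action, best_payoffs = action, payoffs
    plan[node.name] = best_action
    return best_payoffs, plan


# Chain store game of Fig. 3A.
chain_store = Decision("u", 0, {
    "L": Terminal((1, 4)),
    "R": Decision("v", 1, {"l": Terminal((0, 0)), "r": Terminal((2, 2))}),
})
print(backward_induction(chain_store))   # ((2, 2), {'v': 'r', 'u': 'R'})
```

Because the plan records an action at every decision node, including unreached ones, it describes a full strategy pair rather than just the path of play; here it recovers the SPNE $(R, r)$.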

Results 5 and 6 support this argument, although, as the decision tree becomes more complex than that of Fig. 3A, the SPNE (component) need no longer be stable (13). Instability of the SPNE often arises when a player has two (or more) decision nodes along a path in the tree, or when the game does not have perfect information, as in the following example.

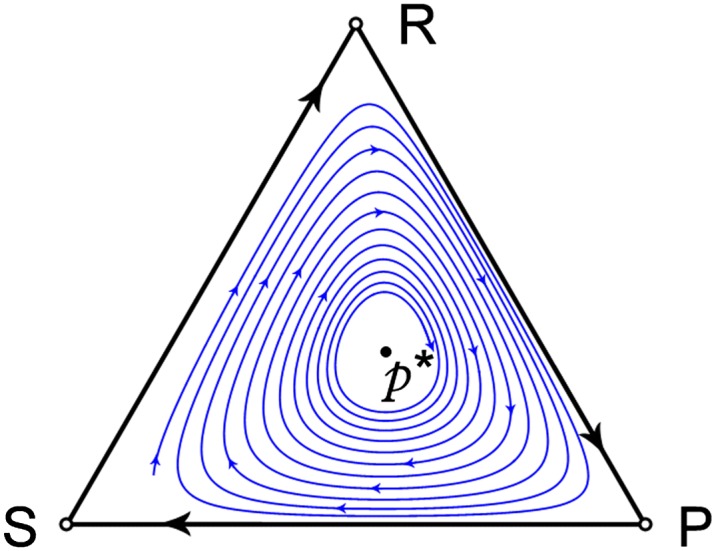

Example 4 (The Prey Recognition Game): Two prey types are distributed among a large number of patches (or microhabitats), with at most one prey in each patch. A patch chosen at random by a single predator contains a prey of type i (i = 1, 2) with a given probability and is otherwise empty (i.e., contains no prey) (Fig. 4, level 1). If the predator finds a prey while spending searching time in the chosen patch, it decides immediately whether to attack, to move to another microhabitat to begin a new search, or to spend recognition time determining the type of prey encountered, choosing these three options with probabilities that sum to 1. The horizontal dashed line in Fig. 4 joining these two encounter events (called an "information set") indicates that this decision must be made without knowing the type of prey.

Fig. 4.

The decision tree for the prey recognition game. In the reduced tree, the dotted edges are deleted.

If the predator spends recognition time and determines that the encountered prey is of type i, it must then decide whether or not to attack this prey (Fig. 4, level 3). The total predator nutritional value per unit time, f, then depends on these decision probabilities, on the probabilities of finding each prey type, on the searching and recognition times, and on the handling time and nutritional value of each prey type [10] (SI Appendix, section 2.2).
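To make the trade-off behind Eq. 10 concrete, the sketch below computes an intake rate under an explicit assumption that may differ in detail from Eq. 10: the rate is the expected nutritional value gained per patch visit divided by the expected duration of a visit (search time, plus recognition and handling times when incurred), with no travel time between patches. All parameter names and values are hypothetical.

```python
def intake_rate(p, e, h, tau_s, tau_r, attack, recognize, r):
    """Hypothetical nutritional value per unit time (renewal-rate form).

    p[i]        probability that a patch holds prey type i (index 0: prey 1, 1: prey 2)
    e[i], h[i]  nutritional value and handling time of prey type i
    tau_s       search time spent in each patch visited
    tau_r       recognition time
    attack, recognize  probabilities of attacking at once or recognizing on
                encounter (1 - attack - recognize = moving on)
    r[i]        probability of attacking a recognized prey of type i
    """
    gain = sum(p[i] * e[i] * (attack + recognize * r[i]) for i in range(2))
    time = tau_s + sum(
        p[i] * (attack * h[i] + recognize * (tau_r + r[i] * h[i]))
        for i in range(2)
    )
    return gain / time


params = dict(p=(0.3, 0.3), e=(10.0, 1.0), h=(1.0, 1.0), tau_s=1.0)
for tau_r in (0.1, 1.0):
    attack_all = intake_rate(**params, tau_r=tau_r, attack=1.0, recognize=0.0, r=(1.0, 1.0))
    selective = intake_rate(**params, tau_r=tau_r, attack=0.0, recognize=1.0, r=(1.0, 0.0))
    print(tau_r, round(attack_all, 4), round(selective, 4))
```

With these hypothetical values, recognizing and attacking only prey 1 yields about 2.21 versus 2.06 for attacking everything when recognition is quick (0.1), but only about 1.58 when recognition is slow (1.0).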

Optimal foraging theory (40) postulates that the predator maximizes f as a function of its decision probabilities. Game-theoretic methods can be used to find this optimal foraging behavior (41). Specifically, the agent normal form of Fig. 4 is a three-player foraging game in which player 1 chooses among attacking, moving on, and recognizing at the information set, player 2 chooses whether to attack at the node labeled "recognized prey 2," and player 3 chooses whether to attack at "recognized prey 1." Optimal foraging behavior is a NE of this game.

At a NE, the predator should never move to another patch when it first finds a prey since, by abandoning this prey, it wastes the time spent searching for it. Furthermore, if prey type 1 is more profitable than type 2 (i.e., it yields a higher nutritional value per unit handling time, as assumed throughout this example), then the predator must attack any prey 1 that it recognizes (SI Appendix, section 2.2). Thus, we assume that the predator never moves on at the information set and always attacks a recognized prey 1, and we analyze the truncated foraging game obtained by deleting the three edges indicated by dotted lines in Fig. 4.

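Following Cressman et al. (41), if the profitabilities of the two prey types are nearly equal or recognition time is long, the only NE behavior of player 1 is to attack any prey immediately upon encounter. In fact, the only NE component is then the set G of strategy combinations in which player 1 always attacks immediately, whatever player 2 would do at the (unreached) node "recognized prey 2."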

Otherwise, a subset of G (see the red line segment in SI Appendix, Fig. S2) is a NE component, and another NE outcome emerges in which the predator spends the time to recognize the type of prey it has encountered and then attacks only the more profitable type (i.e., always recognizing and never attacking a recognized prey 2 is a NE). This latter NE is strict and is the only one that corresponds to optimal foraging behavior. It is also the only NE outcome that is locally asymptotically stable under the adaptive dynamics [11], which keep the unit square of the truncated game's strategy pairs invariant (SI Appendix, section 2.2 and Fig. S2).
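The following sketch illustrates how a gradient-type adaptive dynamics can keep the unit square invariant: each strategy component x moves according to x(1 − x) times the partial derivative of the intake rate, so the boundary is never crossed. This form, the intake-rate formula (expected nutritional value per patch visit over expected visit time, with no travel time), the variable names, and the parameter values are all assumptions for illustration and may not match Eq. 11 exactly. Here x is the probability of attacking immediately (1 − x is recognizing) and y is the probability of attacking a recognized prey 2; a recognized prey 1 is always attacked.

```python
def intake_rate(x, y, tau_r, p=(0.3, 0.3), e=(10.0, 1.0), h=(1.0, 1.0), tau_s=1.0):
    """Hypothetical intake rate in the truncated game: expected nutritional
    value per patch visit divided by expected visit duration.

    x: probability of attacking at once (else the prey is recognized)
    y: probability of attacking a recognized prey 2 (prey 1 is always attacked)
    """
    r = (1.0, y)
    gain = sum(p[i] * e[i] * (x + (1 - x) * r[i]) for i in range(2))
    time = tau_s + sum(p[i] * (x * h[i] + (1 - x) * (tau_r + r[i] * h[i]))
                       for i in range(2))
    return gain / time


def adaptive_step(x, y, tau_r, dt=0.01, eps=1e-6):
    """One Euler step of x' = x(1-x) df/dx, y' = y(1-y) df/dy.

    The factors x(1-x) and y(1-y) vanish on the edges, so the unit square is
    invariant. Partial derivatives use central finite differences."""
    fx = (intake_rate(x + eps, y, tau_r) - intake_rate(x - eps, y, tau_r)) / (2 * eps)
    fy = (intake_rate(x, y + eps, tau_r) - intake_rate(x, y - eps, tau_r)) / (2 * eps)
    return x + dt * x * (1 - x) * fx, y + dt * y * (1 - y) * fy


def simulate(x, y, tau_r, steps=10_000):
    for _ in range(steps):
        x, y = adaptive_step(x, y, tau_r)
    return x, y


for tau_r in (0.1, 1.0):
    print(tau_r, simulate(0.5, 0.5, tau_r))
```

Under these assumptions, with a short recognition time the trajectory from (0.5, 0.5) approaches (0, 0), the selective strategy; with a long one it approaches the edge x = 1, where immediate attack is played and y stops changing, loosely mirroring the segment G.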

Although the prey recognition game is not a conventional extensive form game, because payoffs are nonlinear in the predator's strategy (and the game has a continuous strategy space), the dynamics of Eq. 11 are remarkably similar to those of the replicator equation for the chain store game (Fig. 3B and SI Appendix, Fig. S2).

Discussion

The replicator equation and other deterministic game dynamics have become essential tools over the past 40 years in applying evolutionary game theory to behavioral models in the biological and social sciences. The theory that we have summarized for these dynamics assumes large homogeneous populations with random interactions. Stochastic effects (e.g., based on finite populations) and the effects of nonrandom interactions (e.g., games on graphs), which have become increasingly important in game dynamic models (10, 11), are beyond the scope of this paper.

The examples given in this paper show that static game-theoretic solution concepts (e.g., NE and ESS) play a central role in predicting the evolutionary outcome of game dynamics. Conversely, game dynamics that arise naturally in analyzing behavioral evolution lead to a more thorough understanding of issues connected to the static concepts. That is, both the classical and evolutionary approaches to game theory benefit through this interplay between them.

For instance, the prey recognition game illustrates anew the potential of game-theoretic methods for gaining a better understanding of issues that arise in behavioral ecology. Here, it suggests how the predator "learns" its optimal foraging behavior through game dynamics, a question that is rarely considered in optimal foraging theory. On the other hand, the analysis of this game also raises important questions for game-theoretic applications to human behavior. Specifically, this example considers the effect that the time spent on different interactions has on rational behavior, an aspect that becomes increasingly central when individuals have a series of interactions such as those modeled by an extensive form.

Supplementary Material

Acknowledgments

The assistance of Cong Li with the figures is appreciated. This work was partially supported by the Natural Sciences and Engineering Research Council of Canada, the National Science Foundation of China (Grant 31270439), and the National Basic Research Program of China (973 Program, 2013CB945000).

Footnotes

The authors declare no conflict of interest.

This paper results from the Arthur M. Sackler Colloquium of the National Academy of Sciences, “In the Light of Evolution VIII: Darwinian Thinking in the Social Sciences,” held January 10–11, 2014, at the Arnold and Mabel Beckman Center of the National Academies of Sciences and Engineering in Irvine, CA. The complete program and audio files of most presentations are available on the NAS website at www.nasonline.org/ILE-Darwinian-Thinking.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1400823111/-/DCSupplemental.

References

- 1. Taylor PD, Jonker L. Evolutionarily stable strategies and game dynamics. Math Biosci. 1978;40(1):145–156.

- 2. Schuster P, Sigmund K. Replicator dynamics. J Theor Biol. 1983;100(3):533–538.

- 3. Maynard Smith J, Price G. The logic of animal conflict. Nature. 1973;246:15–18.

- 4. Maynard Smith J. The theory of games and the evolution of animal conflicts. J Theor Biol. 1974;47(1):209–221. doi: 10.1016/0022-5193(74)90110-6.

- 5. Hofbauer J, Schuster P, Sigmund K. A note on evolutionary stable strategies and game dynamics. J Theor Biol. 1979;81(3):609–612. doi: 10.1016/0022-5193(79)90058-4.

- 6. Hamilton WD. Extraordinary sex ratios. Science. 1967;156:477–488. doi: 10.1126/science.156.3774.477.

- 7. Maynard Smith J. Evolution and the Theory of Games. Cambridge, UK: Cambridge Univ Press; 1982.

- 8. Broom M, Rychtar J. Game-Theoretic Models in Biology. Boca Raton, FL: CRC Press; 2013.

- 9. Hofbauer J, Sigmund K. Evolutionary game dynamics. Bull Am Math Soc. 2003;40:479–519.

- 10. Sandholm WH. Population Games and Evolutionary Dynamics. Cambridge, MA: MIT Press; 2010.

- 11. Sigmund K, editor. Evolutionary Game Dynamics. Proceedings of Symposia in Applied Mathematics. Vol 69. Providence, RI: American Mathematical Society; 2011.

- 12. Hofbauer J, Sigmund K. Evolutionary Games and Population Dynamics. Cambridge, UK: Cambridge Univ Press; 1998.

- 13. Cressman R. Evolutionary Dynamics and Extensive Form Games. Cambridge, MA: MIT Press; 2003.

- 14. Fisher R. The Genetical Theory of Natural Selection. Oxford: Clarendon Press; 1930.

- 15. Darwin C. The Descent of Man and Selection in Relation to Sex. London: John Murray; 1871.

- 16. Hamilton WD. The genetical evolution of social behaviour. II. J Theor Biol. 1964;7(1):17–52. doi: 10.1016/0022-5193(64)90039-6.

- 17. Weibull J. Evolutionary Game Theory. Cambridge, MA: MIT Press; 1995.

- 18. Apaloo J. Revisiting matrix games: The concept of neighborhood invader strategies. Theor Popul Biol. 2006;69(3):235–242. doi: 10.1016/j.tpb.2005.11.006.

- 19. Cressman R. CSS, NIS and dynamic stability for two-species behavioral models with continuous trait spaces. J Theor Biol. 2010;262(1):80–89. doi: 10.1016/j.jtbi.2009.09.019.

- 20. Cressman R. The Stability Concept of Evolutionary Game Theory. Lecture Notes in Biomathematics. Vol 94. Heidelberg: Springer; 1992.

- 21. Fretwell DS, Lucas HL. On territorial behavior and other factors influencing habitat distribution in birds. Acta Biotheor. 1969;19(1):16–36.

- 22. Krivan V, Cressman R, Schneider C. The ideal free distribution: A review and synthesis of the game-theoretic perspective. Theor Popul Biol. 2008;73(3):403–425. doi: 10.1016/j.tpb.2007.12.009.

- 23. Morris D. Measure the Allee effect: Positive density effect in small mammals. Ecology. 2002;83(1):14–20.

- 24. Christiansen FB. On conditions for evolutionary stability for a continuously varying character. Am Nat. 1991;138(1):37–50.

- 25. Doebeli M, Dieckmann U. Evolutionary branching and sympatric speciation caused by different types of ecological interactions. Am Nat. 2000;156(S4):S77–S101. doi: 10.1086/303417.

- 26. Eshel I. Evolutionary and continuous stability. J Theor Biol. 1983;103(1):99–111. doi: 10.1006/jtbi.1996.0312.

- 27. Bomze IM. Cross entropy minimization in uninvadable states of complex populations. J Math Biol. 1991;30(1):73–87.

- 28. Oechssler J, Riedel F. Evolutionary dynamics on infinite strategy spaces. Econ Theory. 2001;17(1):141–162.

- 29. Apaloo J. Revisiting strategic models of evolution: The concept of neighborhood invader strategies. Theor Popul Biol. 1997;52(1):71–77. doi: 10.1006/tpbi.1997.1318.

- 30. Lessard S. Evolutionary stability: One concept, several meanings. Theor Popul Biol. 1990;37(1):159–170.

- 31. Leimar O. Multidimensional convergence stability. Evol Ecol Res. 2009;11(2):191–208.

- 32. Bukowski M, Miekisz J. Evolutionary and asymptotic stability in symmetric multi-player games. Int J Game Theory. 2004;33(1):41–54.

- 33. Fehr E, Gachter S. Cooperation and punishment in public goods experiments. Am Econ Rev. 2000;90(4):980–994.

- 34. Rand DG, Dreber A, Ellingsen T, Fudenberg D, Nowak MA. Positive interactions promote public cooperation. Science. 2009;325:1272–1275. doi: 10.1126/science.1177418.

- 35. Cressman R, Song JW, Zhang BY, Tao Y. Cooperation and evolutionary dynamics in the public goods game with institutional incentives. J Theor Biol. 2012;299:144–151. doi: 10.1016/j.jtbi.2011.07.030.

- 36. Cressman R. Beyond the symmetric normal form: Extensive form games, asymmetric games and games with continuous strategy spaces. In: Sigmund K, editor. Evolutionary Game Dynamics, Proceedings of Symposia in Applied Mathematics. Vol 69. Providence, RI: American Mathematical Society; 2011. pp. 27–59.

- 37. Selten R. A note on evolutionarily stable strategies in asymmetric animal conflicts. J Theor Biol. 1980;84(1):93–101. doi: 10.1016/s0022-5193(80)81038-1.

- 38. Selten R. The chain-store paradox. Theory Decis. 1978;9(2):127–159.

- 39. von Neumann J, Morgenstern O. Theory of Games and Economic Behavior. Princeton: Princeton Univ Press; 1944.

- 40. Stephens DW, Krebs JR. Foraging Theory. Princeton: Princeton Univ Press; 1986.

- 41. Cressman R, Křivan V, Brown JS, Garay J. Game-theoretic methods for functional response and optimal foraging behavior. PLoS ONE. 2014;9(2):e88773. doi: 10.1371/journal.pone.0088773.