Abstract

We present a methodology for dealing with recent challenges in testing global hypotheses using multivariate observations. The proposed tests target situations, often arising in emerging applications of neuroimaging, where the sample size n is relatively small compared with the observations' dimension K. We employ adaptive designs that allow the test statistics to be modified sequentially as data accumulate. The adaptations are optimal in the sense of maximizing the predictive power of the test at each interim analysis while still controlling the Type I error. Optimality is obtained by a general result applicable to typical adaptive design settings. Further, we prove that the potentially high-dimensional design space of the tests can be reduced to a low-dimensional projection space, enabling simpler power analysis studies, including comparisons with alternative tests. We illustrate the substantial improvement in efficiency that the proposed tests can offer over standard tests, especially when n is smaller than or only slightly larger than K. The methods are also studied empirically using both simulated data and data from an EEG study, where the use of prior knowledge substantially increases the power of the test. Supplementary materials for this article are available online.

Keywords: Adaptive design, Multivariate test, Neuroimaging, Power analysis

Supplementary materials for this article are available online. Please go to www.tandfonline.com/r/JASA

1. INTRODUCTION

In this work, we develop novel methodology for dealing with recent challenges in testing global hypotheses using multivariate observations. The classical approach to this problem, Hotelling's T² test (Hotelling 1931), can efficiently detect effects in every direction of the multivariate space when the sample size n is sufficiently large. However, the T² test becomes inefficient as n approaches the observation dimension K and inapplicable once n is smaller than K. This loss of efficiency, the price paid for searching in every direction of the alternative space, seems particularly wasteful (but avoidable) when prior knowledge about the direction of the effect is available. Motivated by such settings, which often arise in the increasingly important field of neuroimaging, we develop tests that are powerful in studies with n ≫ K but can also be efficient when n is close to or smaller than K.

The proposed tests employ adaptive designs, which allow sequential modifications of the test statistic based on accumulated data. Such adaptive designs have a natural, though not exclusive, application in clinical trials. A large literature on the subject (e.g., Bauer and Köhne 1994; Proschan and Hunsberger 1995; Lehmacher and Wassmer 1999; Müller and Schäfer 2001; Brannath, Posch, and Bauer 2002; Liu, Proschan, and Pledger 2002; Brannath, Gutjahr, and Bauer 2012) deals with the derivation of flexible procedures that allow adaptations of the initial design without inflating the Type I error rate. Some sequential designs (e.g., Denne and Jennison 2000) also permit design adaptations, but these must be preplanned and independent of the interim test statistics. Adaptive designs are employed for many kinds of adaptation, including sample size recalculation (Lehmacher and Wassmer 1999; Mehta and Pocock 2011), treatment or hypothesis selection (Kimani, Stallard, and Hutton 2009), and sample allocation to treatments (Zhu and Hu 2010). Although many authors have stressed the potential for test statistic adaptation (e.g., Bauer and Köhne 1994; Bretz et al. 2009), only a few papers address the subject (Lang, Auterith, and Bauer 2000; Kieser, Schneider, and Friede 2002). Furthermore, various approaches for adaptive designs in multiple testing are available (see Bretz et al. 2009). These methods can efficiently detect a small number of independently significant outcomes. However, it is well known that standard multiple testing methods (e.g., the Bonferroni and Simes tests) become conservative and inefficient in settings, such as typical neuroimaging studies, where strong dependencies and a large number of outcomes are present (D'Agostino and Russell 2005).

Like the tests developed by O'Brien (1984), Läuter, Glimm, and Kropf (1998), and Minas et al. (2012), the proposed tests are based on linear combinations of the observation vectors. The crucial element in this approach is the weighting vector that reduces the observation vectors to scalar linear combinations. It defines the direction in which we search for effects, and it can substantially affect both the Type I and the Type II error rates of the tests. O'Brien proposed deriving the weighting vector under the assumption of a uniform mean structure, while Läuter et al. showed that if the weighting vector is derived from the sums-of-products matrix of the observations, the Type I error is controlled and high power is attained under certain factorial structures. On the other hand, the tests in Minas et al. (2012) can attain high power independently of the mean and covariance structure, but part of the sample is used in a separate pilot study to learn the weighting vector.

In this work, linear combination test statistics, initially constructed using weighting vectors derived from prior information, are sequentially updated based on the observed data at subsequent interim analyses of an adaptive design. Early termination of the study (due to early acceptance or rejection of the null hypothesis at an interim analysis), which is often of interest, especially in clinical trials, is also possible within our approach. Our methods provide a formal framework for optimally using prior information in constructing test statistics, as has been suggested, but not implemented, in earlier papers (Pocock, Geller, and Tsiatis 1987; Läuter, Glimm, and Kropf 1996; Tang, Gnecco, and Geller 1989a).

While our tests maintain the two prime targets of adaptive designs, namely flexibility and Type I error control (Brannath et al. 2012), we also focus on attaining power optimality. Specifically, we employ the predictive power approach proposed by Spiegelhalter, Abrams, and Myles (2002) to derive optimal tests that maximize the predictive power of the test at each interim analysis. The proof techniques may also be useful for deriving optimal adaptive designs in more general settings; for example, as we illustrate in Section 3, Lemma 3.1 could be used to derive optimal designs for regression analysis.

The power performance of a multivariate test, whose design space may be high dimensional, can be hard to illustrate and interpret. Therefore, power analysis of multivariate tests is typically restricted to a limited part of the design space. We tackle this problem by reexpressing the 𝒪(K²)-dimensional design space as a lower-dimensional, easily interpretable space that still suffices to determine power. The crucial step is to identify a measure of the angular distance between the selected and the optimal weighting vectors and to prove that it is sufficient for computing power. These results provide a broad understanding of the behavior of linear combination tests and allow us to extend earlier work on power analysis of single-stage (Pocock, Geller, and Tsiatis 1987; Follmann 1996; Logan and Tamhane 2004) and sequential (Tang, Gnecco, and Geller 1989b; Tang, Geller, and Pocock 1993) linear combination tests beyond low-dimensional observations or specific mean and covariance structures.

We perform extensive simulation studies to explore and compare the proposed and alternative single-stage and sequential procedures throughout the design space. We show that linear combination tests outperform Hotelling's T² test whenever the angular distance between the selected and optimal weighting vectors is below a certain threshold, which, especially for sample sizes close to K, can be rather high. We further show that, in contrast to linear combination tests with fixed weighting vectors, such as O'Brien's OLS test, the adaptive linear combination tests can attain high power even when the weighting vector selected at the planning stage is orthogonal to the true optimal one (where, of course, a nonadaptive test would have no power beyond the nominal level). The advantages of the proposed tests are also illustrated through a real example taken from an EEG depression study (Läuter, Glimm, and Kropf 1996).

This article is organized as follows. In Section 2, we formulate the class of linear combination tests, and in Section 3 we derive the power-optimal tests within this class. In Section 4, we present results that characterize power through low-dimensional summaries of the design parameters. In Section 5, we discuss the main results of extensive simulation studies, based on these results, that explore power and compare the proposed tests with alternative global tests under various conditions, and in Section 6 we apply our procedures to an EEG depression study. Section 7 gives a short summary and discussion of the results. Technical lemmas and proofs are provided in Supplementary Material A, and further illustrations of the simulation studies in Supplementary Material B.

2. FORMULATION OF J-STAGE LINEAR COMBINATION TESTS

In the following, we formulate J-stage linear combination z- and t-tests and define their error rate functions. We assume that the K-dimensional observation vectors Yij = (Yij1, …, YijK)ᵀ of subjects i = 1, 2, …, nj participating in stage j, j = 1, 2, …, J, of the study are independent and identically distributed Gaussian random vectors,

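$$ Y_{ij} \overset{\text{iid}}{\sim} N_K(\mu, \Sigma), \qquad i = 1, \dots, n_j, \; j = 1, \dots, J, \tag{2.1} $$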

with mean μ = (μ1, …, μK)ᵀ and positive definite covariance matrix Σ = (σkk′), k, k′ = 1, …, K. In medical applications, the mean vector is often interpreted as the treatment effect. We wish to test the global null hypothesis of no treatment effect, H0 : μ = 0 = (0, 0, …, 0)ᵀ, against the two-sided alternative H1 : μ ≠ 0. The methods that follow apply equally to the two-sample test with a common covariance matrix, but we continue with the one-sample presentation to simplify notation.

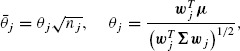

The observation vectors Yij, i = 1, 2, …, nj, of the jth stage are projected onto the nonzero weighting vector wj = (wj1, wj2, …, wjK)ᵀ, and the projection magnitudes form the linear combinations Lij = wjᵀYij, i = 1, 2, …, nj, j = 1, 2, …, J. The stagewise z and t statistics for testing H0 against H1 using the random sample of linear combinations Lij, i = 1, …, nj, when Σ is known or unknown, are respectively

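$$ Z_j = \frac{\sqrt{n_j}\,\bar{L}_j}{\sigma_j}, \qquad T_j = \frac{\sqrt{n_j}\,\bar{L}_j}{s_j}. \tag{2.2} $$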

Here, $\sigma_j^2 = w_j^{\mathsf T}\Sigma w_j$ is the variance of the linear combinations Lij, and $\bar{L}_j$ and $s_j^2$ are their sample mean and sample variance, respectively. Under assumption (2.1), the stagewise z and t statistics Zj and Tj, j = 1, 2, …, J, follow normal and noncentral t distributions, respectively, Zj ∼ N(θj, 1) and Tj ∼ tνj(θj), with location parameter

$$ \theta_j = \frac{\sqrt{n_j}\, w_j^{\mathsf T}\mu}{(w_j^{\mathsf T}\Sigma w_j)^{1/2}} \tag{2.3} $$

and νj = nj − 1. Under H0, the z and t statistics are standard normal and Student's t random variables, that is, Zj ∼ N(0, 1) and Tj ∼ tνj. The two-sided stagewise p-values of the z- and t-tests are, respectively, pzj = 2Φ(−|Zj|) and ptj = 2Ψνj(−|Tj|), where Φ(·) and Ψνj(·) are the cumulative distribution functions of the standard normal distribution and of Student's t-distribution with νj degrees of freedom, respectively.
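As a minimal sketch of (2.2) and (2.3), assuming the stage-j data are held in an nj × K NumPy array, the stagewise statistics, their two-sided p-values, and the location parameter can be computed as follows. The function names are illustrative, not taken from the article.

```python
import numpy as np
from scipy import stats


def stagewise_tests(y, w, sigma=None):
    """Stagewise linear-combination t test and, if Sigma is known, z test.

    y     : (n_j, K) array of stage-j observation vectors Y_ij
    w     : (K,) nonzero weighting vector w_j
    sigma : (K, K) known covariance matrix, or None if Sigma is unknown
    """
    y = np.asarray(y, dtype=float)
    w = np.asarray(w, dtype=float)
    n = y.shape[0]
    L = y @ w                               # L_ij = w_j^T Y_ij
    L_bar = L.mean()
    s = L.std(ddof=1)                       # sample standard deviation s_j
    T = np.sqrt(n) * L_bar / s
    result = {"T": T, "p_t": 2 * stats.t.cdf(-abs(T), df=n - 1)}
    if sigma is not None:
        sd = np.sqrt(w @ sigma @ w)         # sigma_j = (w_j^T Sigma w_j)^{1/2}
        Z = np.sqrt(n) * L_bar / sd
        result.update(Z=Z, p_z=2 * stats.norm.cdf(-abs(Z)))
    return result


def location_parameter(w, mu, sigma, n):
    """theta_j = sqrt(n_j) w^T mu / (w^T Sigma w)^{1/2}, as in (2.3)."""
    return np.sqrt(n) * (w @ mu) / np.sqrt(w @ sigma @ w)


rng = np.random.default_rng(0)
K, n = 5, 20
mu, sigma = np.full(K, 0.3), np.eye(K)
y = rng.multivariate_normal(mu, sigma, size=n)
w = np.ones(K)
print(stagewise_tests(y, w, sigma))
print(location_parameter(w, mu, sigma, n))
```

The t statistic here is the ordinary one-sample t statistic of the scalar sample Lij, so it can be cross-checked against `scipy.stats.ttest_1samp`.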

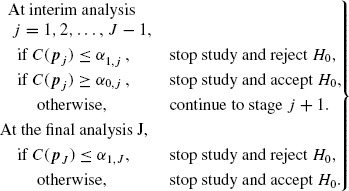

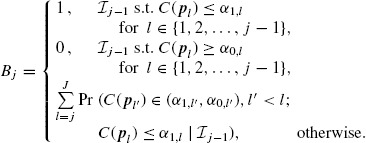

At the jth analysis, j = 1, 2, …, J, performed after the jth stage of the study, a combination function $C(\mathbf{p}_{(j)})$ is used to combine the stagewise p-values $\mathbf{p}_{(j)} = (p_1, \dots, p_j)$ of stages 1 to j (each pl being either pzl or ptl). Rejection and acceptance critical values α1,j and α0,j (0 ≤ α1,j ≤ α < α0,j ≤ 1, j = 1, 2, …, J) are used to decide whether to stop the study early and either reject or accept H0, respectively. Specifically, the J-stage sequential design has the following form:

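$$ \begin{aligned} &j = 1, \dots, J-1: && \text{reject } H_0 \text{ if } C(\mathbf{p}_{(j)}) \le \alpha_{1,j};\ \text{accept } H_0 \text{ if } C(\mathbf{p}_{(j)}) > \alpha_{0,j};\ \text{otherwise continue to stage } j+1;\\ &j = J: && \text{reject } H_0 \text{ if } C(\mathbf{p}_{(J)}) \le \alpha_{1,J};\ \text{otherwise accept } H_0. \end{aligned} \tag{2.4} $$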

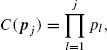

Several combination functions are proposed in the literature. Bauer and Köhne (1994) suggested the use of Fisher's product combination function

$$ C(\mathbf{p}_{(j)}) = \prod_{l=1}^{j} p_l, \tag{2.5} $$

while Lehmacher and Wassmer (1999) suggested the inverse normal combination function. These two combination functions are the most commonly used in the literature (Bretz et al. 2009). The formulation and results that follow use Fisher's product function in (2.5), but our results apply equally to other combination functions, including the inverse normal.

Herein, we refer to the J-stage tests with linear combination stagewise z and t statistics as the J-stage z- and t-tests, respectively. The power function, that is, the probability of rejecting H0, of the J-stage z- or t-test is $\beta = \sum_{j=1}^{J} \beta_j$, where β1 = Pr(p1 ≤ α1,1) is the first-stage power function and

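$$ \beta_j = \Pr\big(\alpha_{1,l} < C(\mathbf{p}_{(l)}) \le \alpha_{0,l},\ l = 1, \dots, j-1;\ C(\mathbf{p}_{(j)}) \le \alpha_{1,j}\big) \tag{2.6} $$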

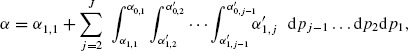

are the jth stage power functions, j = 2, 3, …, J (β, βj denoting βz, βzj or βt, βtj, respectively). The boundaries α1,j, α0,j are chosen to satisfy the Type I error equation

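$$ \alpha_{1,1} + \sum_{j=2}^{J} \int_{\alpha_{1,1}}^{\alpha_{0,1}} \int_{\alpha'_{1,2}}^{\alpha'_{0,2}} \cdots \int_{\alpha'_{1,j-1}}^{\alpha'_{0,j-1}} \alpha'_{1,j} \, dp_{j-1} \cdots dp_2 \, dp_1 = \alpha, \tag{2.7} $$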

where α′1,j = α1,j / (p1p2 ⋯ pj−1) and α′0,j = α0,j / (p1p2 ⋯ pj−1) are the conditional rejection and acceptance boundaries, respectively, of stage j, j = 2, 3, …, J.
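For J = 2 with Fisher's product, the Type I error equation (2.7) reduces to α1,1 + c log(α0,1/α1,1) = α, where c = α1,2 is the final rejection boundary (assuming c ≤ α1,1, so that the conditional boundary c/p1 never exceeds 1 on the continuation region). The sketch below solves for c and checks the result by simulating independent U(0, 1) stagewise p-values under H0. The boundary values α1,1 = 0.0233 and α0,1 = 0.5 are illustrative choices, and the helper names are hypothetical.

```python
import numpy as np


def fisher_final_boundary(alpha, alpha11, alpha01):
    """Solve (2.7) for J = 2 with Fisher's product: alpha11 + c*log(alpha01/alpha11) = alpha."""
    c = (alpha - alpha11) / np.log(alpha01 / alpha11)
    # c <= alpha11 guarantees c / p1 <= 1 on the continuation region p1 > alpha11
    if not 0.0 < c <= alpha11:
        raise ValueError("these stage-1 boundaries admit no valid final boundary")
    return c


def two_stage_reject(p1, p2, alpha11, alpha01, c):
    """Design (2.4) for J = 2 with C = p1 * p2; works elementwise on arrays."""
    p1, p2 = np.asarray(p1), np.asarray(p2)
    early_reject = p1 <= alpha11
    continue_ = (p1 > alpha11) & (p1 <= alpha01)
    return early_reject | (continue_ & (p1 * p2 <= c))


alpha, alpha11, alpha01 = 0.05, 0.0233, 0.5
c = fisher_final_boundary(alpha, alpha11, alpha01)
print(round(c, 4))  # 0.0087

# Monte Carlo check: under H0 the stagewise p-values are independent U(0, 1)
rng = np.random.default_rng(1)
p = rng.uniform(size=(200_000, 2))
print(two_stage_reject(p[:, 0], p[:, 1], alpha11, alpha01, c).mean())
```

The simulated rejection rate should be close to the nominal α = 0.05, up to Monte Carlo error.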

3. OPTIMAL J-STAGE z AND t-TESTS

The crucial elements of these J-stage linear combination z- and t-tests are the stagewise weighting vectors wj. In this section we develop a methodology for deriving these weighting vectors optimally. The next lemma is the first step toward computing the weighting vectors that maximize the power of the z- and t-tests.

Lemma 3.1.

Under (2.1), the power of the J-stage z and t-tests in (2.4) with combination function as in (2.5) is nondecreasing in the absolute value of θj in (2.3), j = 1, 2, …, J.

It can be shown straightforwardly that the above result also holds for one-sided stagewise tests and for the inverse normal combination function. The proof of the lemma is surprisingly complex because, for some range of values of θj, an increase in |θj| decreases the probability of continuing to the next stage, and therefore the power of the subsequent stages, $\beta^{(j+1)} = \sum_{l=j+1}^{J} \beta_l$, decreases. In Supplementary Material A, we prove that even for this range of values of |θj|, the decrease (in absolute value) in β(j+1) is bounded above by the increase in βj.

Besides being crucial for deriving Theorem 3.1, the above result can also be useful in more general adaptive design settings. For example, Lemma 3.1 shows that investigators who wish to apply an adaptive z- or t-test and to maximize its power need only maximize the location parameters of the stagewise test statistics, sequentially and separately. For instance, suppose one wishes to conduct an adaptive design study to explore the relationship between an observation variable Y and a set of covariates X, described by Yj = Xjbj + ej, $e_j \sim N_{n_j}(0, \sigma^2 I_{n_j})$, j = 1, 2, …, J, independent. Then our results show that, to maximize with respect to the experimental design the power of the J-stage test whose stagewise statistics are the classical z and t statistics, it is sufficient to maximize $X_j^{\mathsf T}X_j$, j = 1, 2, …, J, which agrees with the standard practice of deriving optimal designs.

Considering the J-stage linear combination z- and t-tests, Lemma 3.1 implies that to maximize their power with respect to the weighting vectors wj, it is sufficient to maximize |θj|, j = 1, 2, …, J. Using this result, we next derive the power-optimal weighting vector.

Theorem 3.1.

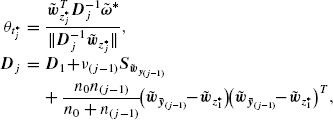

Under (2.1), the power of the J-stage z- and t-tests in (2.4) with combination function as in (2.5) is maximized with respect to the weighting vectors wj, j = 1, 2, …, J, if and only if the latter are proportional to

$$ \omega^{*} = \Sigma^{-1}\mu. \tag{3.1} $$

Theorem 3.1 thus provides the power-optimal weighting vector ω* for the J-stage linear combination tests. In Section 4, we show that ω*, which expresses the multivariate treatment effect standardized with respect to the covariance matrix Σ, is central in characterizing the power of these tests. However, the optimal vector ω* depends on the unknown parameters μ and Σ and is therefore also unknown. In the next section, we develop a methodology for selecting the weighting vectors wj in practice. We propose using the information on μ and Σ available at each interim analysis to select wj, j = 1, 2, …, J, optimally, where optimality is expressed here in terms of predictive power. The sources of this information are the data collected in the stages completed before each interim analysis, together with prior information extracted from previous studies and expert clinical opinion. Predictive power allows this information to be incorporated into our procedures in a natural and plausible way. Note that, as we also explain in the next section, if Equation (2.7) is satisfied, the Type I error of these tests is controlled.

3.1. The Proposed z* and t* Tests

Prior information, ℐ0, is used to inform standard conjugate multivariate priors for the observation mean and covariance matrix. We use the Gaussian–inverse–Wishart prior

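$$ (\mu \mid \Sigma) \sim N_K(m_0, \Sigma / n_0), \qquad \Sigma \sim IW_{K\times K}(\nu_0, S_0^{-1}), \tag{3.2} $$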

where m0 represents a prior estimate of the value of μ and n0 corresponds to the number of observations on which this prior estimate is based, while ν0 and S0 respectively represent the degrees of freedom and the (positive definite) scale matrix of the inverse-Wishart prior.

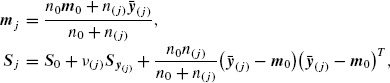

Under this standard Bayesian model (see Gelman et al. 2004), the posterior distribution of μ and Σ given the information set ℐj = {ℐ0, y(j)}, consisting of the prior information ℐ0 and the data y(j) = [y1 y2 … yj] collected up to the jth interim analysis, is (μ | Σ, ℐj) ∼ NK(mj, Σ/n(j)) and (Σ | ℐj) ∼ IWK×K(ν(j), Sj⁻¹). Here,

|

(3.3) |

and ν(j) = n0 + n(j) − 1, with $n_{(j)} = n_1 + n_2 + \dots + n_j$ and $\bar{y}_{(j)} = \sum_{l=1}^{j}\sum_{i=1}^{n_l} y_{il} / n_{(j)}$ respectively the sample size and sample mean of y(j). Because the prior estimate S0 is positive definite, the posterior estimates Sj are also positive definite; positive definiteness of S0 is required for our procedures to be applicable.
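For concreteness, the following sketch shows the textbook conjugate Gaussian–inverse-Wishart update of Gelman et al. (2004) for a posterior location m and scale matrix S, assuming the prior (3.2) and the IW(ν, S⁻¹) parameterization used above. The exact bookkeeping in (3.3) (e.g., of the degrees of freedom) may differ in details, so treat this as an assumption; the name `niw_update` is hypothetical.

```python
import numpy as np


def niw_update(m0, n0, S0, y):
    """Conjugate update of (m, n, S) given data y of shape (n, K).

    Returns the posterior location m, its effective sample size n0 + n,
    and the posterior scale matrix S (textbook Gaussian-inverse-Wishart form).
    """
    y = np.atleast_2d(np.asarray(y, dtype=float))
    n = y.shape[0]
    y_bar = y.mean(axis=0)
    resid = y - y_bar
    scatter = resid.T @ resid                       # within-sample sums of products
    m = (n0 * m0 + n * y_bar) / (n0 + n)            # weighted average of prior and data
    d = (y_bar - m0)[:, None]
    S = S0 + scatter + (n0 * n / (n0 + n)) * (d @ d.T)
    return m, n0 + n, S


rng = np.random.default_rng(2)
K = 3
m0, n0, S0 = np.zeros(K), 4.0, np.eye(K)
y1 = rng.normal(1.0, 1.0, size=(10, K))
y2 = rng.normal(1.0, 1.0, size=(8, K))

# Updating stage by stage gives the same posterior as updating with y(2) at once
m1, k1, S1 = niw_update(m0, n0, S0, y1)
m2, k2, S2 = niw_update(m1, k1, S1, y2)
m_all, k_all, S_all = niw_update(m0, n0, S0, np.vstack([y1, y2]))
print(np.allclose(m2, m_all), np.allclose(S2, S_all))
```

Because S0 is positive definite and the added terms are positive semidefinite, every updated scale matrix stays positive definite, in line with the remark above.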

We wish to use this information to select the weighting vectors wj optimally, where optimality is expressed in terms of the predictive power of the test. Predictive power (Spiegelhalter, Abrams, and Myles 2002) is, in the present context, the power of the J-stage z- and t-tests averaged over the distribution of the model parameters given an information set. The predictive power for the first stage given the prior information set ℐ0 is B1 = Pr(p1 ≤ α1,1 | ℐ0), and for the jth stage, j = 2, 3, …, J, given the information set ℐj−1 it is

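$$ B_j = \Pr\big(C(\mathbf{p}_{(j)}) \le \alpha_{1,j} \mid \mathcal{I}_{j-1}\big) = \int \Pr\big(p_j \le \alpha'_{1,j} \mid \mu, \Sigma\big)\, dP(\mu, \Sigma \mid \mathcal{I}_{j-1}). \tag{3.4} $$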

The next result presents the weighting vectors that we suggest using for the stagewise linear combination z- and t-tests.

Theorem 3.2.

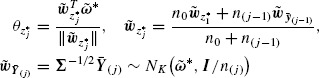

Under (2.1) and (3.2), the jth stage predictive power Bzj, j = 1, 2, …, J, of the J-stage z-test in (3.4) is maximized with respect to the weighting vector wj if and only if wj is proportional to

$$ w^{z*}_j = \Sigma^{-1} m_{j-1}. \tag{3.5} $$

Similarly, as we prove in Supplementary Material A, as n(j−1) → ∞, the jth stage predictive power Btj, j = 1, 2, …, J, of the J-stage t-test in (3.4) is maximized with respect to the weighting vector wj if and only if wj is proportional to

$$ w^{t*}_j = S_{j-1}^{-1} m_{j-1}, \tag{3.6} $$

where mj and Sj are as in (3.3). The proposed J-stage tests, henceforth called the (adaptive) z*- and t*-tests, proceed as follows. For the jth analysis, j = 1, 2, …, J: (i) obtain wz*j or wt*j using (3.5) or (3.6); (ii) set wj equal to wz*j or wt*j and compute the stage j statistic Zj or Tj as in (2.2); (iii) calculate the stage j p-value, pzj = 2Φ(−|Zj|) or ptj = 2Ψνj(−|Tj|); (iv) use all the observed p-values to perform the combination test in (2.4).
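Steps (i) to (iv) can be sketched for a two-stage t*-test (J = 2) as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: it takes the weighting vectors as S_{j−1}⁻¹m_{j−1} from (3.6), updates (m, S) with the textbook conjugate formulas (our assumption for (3.3)), and uses Fisher's product with the illustrative boundaries α1,1 = 0.0233, α0,1 = 0.5 and final boundary c ≈ 0.0087, which solve (2.7) for α = 0.05. All function names are hypothetical.

```python
import numpy as np
from scipy import stats


def t_pvalue(y, w):
    """Two-sided p-value of the stagewise linear-combination t-test, (2.2)."""
    L = y @ w
    n = L.size
    T = np.sqrt(n) * L.mean() / L.std(ddof=1)
    return 2 * stats.t.cdf(-abs(T), df=n - 1)


def niw_update(m0, n0, S0, y):
    """Assumed textbook conjugate update of the prior location and scale matrix."""
    n, y_bar = y.shape[0], y.mean(axis=0)
    resid, d = y - y_bar, (y_bar - m0)[:, None]
    m = (n0 * m0 + n * y_bar) / (n0 + n)
    S = S0 + resid.T @ resid + (n0 * n / (n0 + n)) * (d @ d.T)
    return m, n0 + n, S


def two_stage_t_star(y1, y2, m0, n0, S0, alpha11=0.0233, alpha01=0.5, c=0.0087):
    """Sketch of a two-stage adaptive t*-test with Fisher's product combination.

    Returns (reject H0?, p1, p2); p2 is None if the study stops after stage 1.
    """
    w1 = np.linalg.solve(S0, m0)             # (3.6), j = 1: w1 proportional to S_0^{-1} m_0
    p1 = t_pvalue(y1, w1)
    if p1 <= alpha11:
        return True, p1, None                 # early rejection
    if p1 > alpha01:
        return False, p1, None                # early acceptance
    m1, n1, S1 = niw_update(m0, n0, S0, y1)   # learn from stage-1 data
    w2 = np.linalg.solve(S1, m1)             # (3.6), j = 2: w2 proportional to S_1^{-1} m_1
    p2 = t_pvalue(y2, w2)
    return bool(p1 * p2 <= c), p1, p2


rng = np.random.default_rng(3)
K = 6
m0, n0, S0 = np.ones(K), 5.0, 10.0 * np.eye(K)
y1 = rng.normal(0.4, 1.0, size=(15, K))
y2 = rng.normal(0.4, 1.0, size=(15, K))
print(two_stage_t_star(y1, y2, m0, n0, S0))
```

Because w2 depends on the data only through y1, the stage-2 p-value is still uniform under H0 given y1, which is why the combination test keeps its level.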

Importantly, given the prior information and the observed data y(j−1) (if any), the weighting vectors wz*j and wt*j are fixed before yj is collected; hence, under the standard conditions described in the following theorem, the Type I error of the z*- and t*-tests is preserved.

Theorem 3.3.

Under (2.1) and for α1,j, α0,j, j = 1, 2, …, J, satisfying Equation (2.7), the Type I error of the z*- and t*-tests is preserved at the nominal α level.

4. POWER CHARACTERIZATION (POC)

To study the performance of a test, we primarily need to explore the relationship between its power function and the design parameters. These might include the critical values, the sample size(s), and the model parameters. The critical values and the sample sizes are scalars, so it is straightforward to visualize power across all their possible values (e.g., using simulations), and their relation to power can then be easily described and understood. In univariate settings, this is also the case for the model parameters. In the multivariate setting, however, the model parameters can be high dimensional, so it is not practically feasible to visualize power over the whole design space. Power analysis is then typically restricted to a limited range of structures of the model parameters. This might suffice for power analysis in specific settings, but it has obvious limitations for understanding the general behavior of a testing procedure.

In the following, we address this problem in the context of linear combination tests and provide a solution. We first consider the J-stage linear combination z- and t-tests with fixed weighting vectors, which, apart from providing a method for simple and efficient power analysis of tests such as the OLS test of O'Brien (1984; see Logan and Tamhane 2004; Pocock, Geller, and Tsiatis 1987; Tang, Geller, and Pocock 1993 for earlier work), also provides the intuition for the results concerning the z*- and t*-tests. Note that in Section 4, the critical values and sample sizes (including the “prior” sample sizes) are assumed to be fixed and are described by the design vector d = (α0,1, α0,2, …, α0,J, α1,1, α1,2, …, α1,J, ν0, n0, n1, …, nJ).

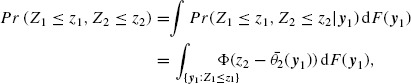

To provide greater insight into the subsequent results, it is also worth noting the joint distribution of the stagewise linear combination z statistics Zj, j = 1, 2, …, J, here for J = 2,

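$$ \Pr(Z_1 \le z_1, Z_2 \le z_2) = \int_{\{y_1:\, Z_1(y_1) \le z_1\}} \Phi\big(z_2 - \theta_2(y_1)\big)\, dF(y_1), $$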

where F(y1) is the cdf of the first-stage data y1 and θ2(y1) is the location parameter as in (2.3). For the linear combination tests with fixed weighting vector this parameter does not depend on y1, that is, θ2(y1) = θ2, while for the adaptive z*- and t*-tests θ2(y1) depends on y1 through the weighting vectors in (3.5) or (3.6), respectively. The next section further characterizes the effect of the weighting vector, through the parameters θj, on the power function. Note that the power function can easily be derived from the joint distribution of the stagewise statistics by replacing the zj with suitable rejection or acceptance boundaries. In Supplementary Material A, we show that the above expression generalizes easily to any J > 1 and that, by replacing Φ(·) with the cdf of the Student's t-distribution Ψ(·), we can derive the joint distribution of Tj, j = 1, 2, …, J.

4.1. PoC for the J-Stage z and t-Tests With Fixed Weighting Vectors

To compute the power of the J-stage z- and t-tests with fixed weighting vectors wj = w, it is sufficient to know the design vector d and the stagewise location parameters θj in (2.3), which in this case are also fixed (and equal, θj = θ, when the stage sample sizes nj are equal). These can be reexpressed as

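$$ \theta_j = \frac{\sqrt{n_j}\, w^{\mathsf T}\mu}{(w^{\mathsf T}\Sigma w)^{1/2}} = \frac{\sqrt{n_j}\, \tilde{w}^{\mathsf T}\tilde{\omega}^{*}}{\lVert \tilde{w} \rVert} = \sqrt{n_j}\, \lVert \tilde{\omega}^{*} \rVert \cos\big(\mathrm{ang}(\tilde{w}, \tilde{\omega}^{*})\big), \tag{4.1} $$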

where ang(w̃, ω̃*) denotes the angle, measured in radians at the origin, between the vectors w̃ and ω̃*. Here, $\tilde{w} = \Sigma^{1/2} w$ and $\tilde{\omega}^{*} = \Sigma^{1/2}\omega^{*} = \Sigma^{-1/2}\mu$ are the standardized selected and optimal weighting vectors. In particular, the latter expresses the standardized multivariate treatment effect, generalizing the univariate (K = 1) standardized treatment effect μ/σ. For weighting vector selection, the first expression in (4.1) implies that a weighting vector that increases the mean and/or decreases the variance of the linear combination gives higher power. The ambiguity in this statement, which trades the mean off against the variance, is resolved by the standardization in the second expression, which implies that weighting vector selection can be viewed as a process of learning the standardized optimal weighting vector ω̃*.

The last expression in (4.1) establishes two scalar measures that, together with d, are sufficient to determine power. The first is the magnitude of ω̃*, $\lVert\tilde{\omega}^{*}\rVert = (\mu^{\mathsf T}\Sigma^{-1}\mu)^{1/2} = D_{\mu,\Sigma}$, which is the Mahalanobis distance between the distributions of the observations Yij under the null and the alternative hypotheses. The Mahalanobis distance generalizes the univariate signal-to-noise ratio and can be interpreted as a measure of deviation from the null hypothesis; in medical settings, it is a well-known global measure of the strength of the treatment effect. The second, cos(ang(w̃, ω̃*)), measures the angular distance between the selected and the optimal weighting vectors, in other words, how far our weighting vector selection is from the optimal choice. Under this representation it becomes clear that, for fixed weighting vectors, the location parameter is, up to the factor $\sqrt{n_j}$, a measure (Dμ,Σ) of the strength of the treatment effect scaled down by a measure (cos(ang(w̃, ω̃*))) of the distance between the parameters and their prior estimates. These results are formally stated in the next theorem.
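The equalities in (4.1) are easy to check numerically. The sketch below, with an arbitrary positive definite Σ and illustrative μ and w, evaluates the three expressions and confirms that the optimal vector Σ⁻¹μ of Theorem 3.1 attains θ = √n Dμ,Σ. The function names are illustrative.

```python
import numpy as np


def sym_sqrt(A):
    """Symmetric square root of a positive definite matrix via eigh."""
    vals, vecs = np.linalg.eigh(A)
    return vecs @ np.diag(np.sqrt(vals)) @ vecs.T


def theta_three_ways(w, mu, sigma, n):
    """Evaluate the three expressions for theta in (4.1)."""
    direct = np.sqrt(n) * (w @ mu) / np.sqrt(w @ sigma @ w)
    root = sym_sqrt(sigma)
    w_t = root @ w                                   # w~ = Sigma^{1/2} w
    omega_t = np.linalg.solve(root, mu)              # omega~* = Sigma^{-1/2} mu
    standardized = np.sqrt(n) * (w_t @ omega_t) / np.linalg.norm(w_t)
    D = np.sqrt(mu @ np.linalg.solve(sigma, mu))    # Mahalanobis distance D_{mu,Sigma}
    cos = (w_t @ omega_t) / (np.linalg.norm(w_t) * np.linalg.norm(omega_t))
    return direct, standardized, np.sqrt(n) * D * cos


rng = np.random.default_rng(4)
K, n = 8, 25
A = rng.normal(size=(K, K))
sigma = A @ A.T + K * np.eye(K)                     # an arbitrary positive definite Sigma
mu = rng.normal(0.0, 0.3, size=K)
w = rng.normal(size=K)
print(theta_three_ways(w, mu, sigma, n))
# The optimal vector omega* = Sigma^{-1} mu has cos = 1, i.e. theta = sqrt(n) * D
print(theta_three_ways(np.linalg.solve(sigma, mu), mu, sigma, n))
```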

Theorem 4.1.

The design vector d, the Mahalanobis distance $D_{\mu,\Sigma} = (\mu^{\mathsf T}\Sigma^{-1}\mu)^{1/2}$, and the angle ang(w̃, ω̃*) between the vectors $\tilde{w} = \Sigma^{1/2}w$ and $\tilde{\omega}^{*} = \Sigma^{-1/2}\mu$ are sufficient to determine the power function β of the J-stage linear combination z- and t-tests with fixed weighting vectors wj = w.

4.2. PoC for the z*-Test

The sequential adaptation of the weighting vector complicates the relation between the power function and the design parameters. However, analogous results can be derived using a similar methodology, in two steps: the first standardizes the procedure, similarly to (4.1), and the second establishes a rotation invariance property of the power function. The next lemma is a direct consequence of the standardization step, which summarizes μ, Σ, and m0 by the vectors ω̃* and w̃z*1.

Lemma 4.1.

The design vector d, the standardized optimal weighting vector $\tilde{\omega}^{*} = \Sigma^{-1/2}\mu$, and the standardized first-stage weighting vector $\tilde{w}^{z*}_1 = \Sigma^{1/2} w^{z*}_1$, with $w^{z*}_1$ as in (3.5), are sufficient to determine the power function βz*.

In the above result, we make use of the fact that the location parameter, θz*j, of the z*-test can be written as

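$$ \theta^{z*}_j = \frac{\sqrt{n_j}\,(\tilde{w}^{z*}_j)^{\mathsf T}\tilde{\omega}^{*}}{\lVert \tilde{w}^{z*}_j \rVert}, \qquad \tilde{w}^{z*}_j = \Sigma^{-1/2} m_{j-1} = \frac{n_0\, \tilde{w}^{z*}_1 + n_{(j-1)}\, \Sigma^{-1/2}\bar{y}_{(j-1)}}{n_0 + n_{(j-1)}}, \tag{4.2} $$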

which implies that the adaptive selection of the weighting vectors can be reexpressed as the adaptive estimation of the vector ω̃*. Under this standardization, we can proceed to the rotation invariance step, which yields the next lemma.

Lemma 4.2.

The power, βz*, of the z*-test is invariant to rotations of the weighting vector w̃z*1 around the optimal weighting vector ω̃*.

The idea behind Lemma 4.2 is that if w̃z*1 is rotated around ω̃*, that is, w̃z*1 is replaced by ẇz*1 = Rw̃z*1, where R is a rotation matrix with rotation axis ω̃* (so that Rω̃* = ω̃*), the rejection region of the test changes. However, the new rejection region is simply a rotation of the initial one: for each point, say w̃y(j), in the initial rejection region, there is a unique point, say ẇy(j), in the rotated rejection region such that ẇy(j) = Rw̃y(j). Because the spherical Gaussian distribution of the standardized sample means, w̃Y(j) ∼ NK(ω̃*, I/n(j)), is unchanged by such a rotation (its mean satisfies Rω̃* = ω̃* and its covariance satisfies RIRᵀ/n(j) = I/n(j)), the probability of the rejection region, that is, the power of the z*-test, remains the same. The next theorem is a direct consequence of Lemmas 4.1 and 4.2.
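The geometric fact behind Lemma 4.2 can be checked numerically. The sketch below, an illustration rather than part of the proof, builds a random proper rotation R of ℝ^K that fixes a given axis (standing in for ω̃*) and checks that R leaves the axis fixed, preserves the angle of another vector (standing in for w̃z*1) to the axis, and maps a spherical Gaussian centred on the axis to the same distribution. The construction via QR decompositions is our choice, and the names are hypothetical.

```python
import numpy as np


def rotation_about_axis(axis, rng):
    """Random proper rotation R (det +1) of R^K with R @ axis == axis."""
    u = axis / np.linalg.norm(axis)
    K = u.size
    Q, _ = np.linalg.qr(u[:, None], mode="complete")   # Q[:, 0] = +/-u; other columns span u's complement
    Qr, Rr = np.linalg.qr(rng.normal(size=(K - 1, K - 1)))
    Qr = Qr * np.sign(np.diag(Rr))                      # random orthogonal matrix on the complement
    if np.linalg.det(Qr) < 0:
        Qr[:, 0] = -Qr[:, 0]                            # enforce a proper rotation
    B = np.eye(K)
    B[1:, 1:] = Qr
    return Q @ B @ Q.T


def angle(a, b):
    cos = a @ b / (np.linalg.norm(a) * np.linalg.norm(b))
    return np.arccos(np.clip(cos, -1.0, 1.0))


rng = np.random.default_rng(5)
omega = rng.normal(size=5)          # plays the role of omega~*
w1 = rng.normal(size=5)             # plays the role of w~z*_1
R = rotation_about_axis(omega, rng)
print(np.allclose(R @ omega, omega), np.isclose(angle(R @ w1, omega), angle(w1, omega)))

# A spherical Gaussian centred on the axis keeps its mean (omega) and covariance (I) under R
Z = omega + rng.normal(size=(100_000, 5))
RZ = Z @ R.T
print(np.round(RZ.mean(axis=0) - omega, 2))
```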

Theorem 4.2.

The design vector d, the Mahalanobis distance Dμ,Σ, and the angle ang(ω̃*, w̃z*1) between the vectors ω̃* and w̃z*1 are sufficient to determine the power function βz*.

The above theorem states that the dependence of the power function on the model parameters and their prior estimates is described simply by a scalar measure of the strength of the treatment effect and a scalar measure of the distance between the parameters and their prior estimates. It provides a sufficient description of power that is based on easily interpretable summaries and is considerably lower dimensional (importantly, not depending on K; see Table 1). This allows us to perform power analysis of the adaptive J-stage z*-test in a simple way, potentially covering the whole design space.

Table 1.

Model and prior parameters of the z* and t*-tests, respectively, and their dimension

| z*-test parameters | Dimension | t*-test parameters | Dimension |
|---|---|---|---|
| μ, Σ, m0 | (K² + 5K)/2 | μ, Σ, m0, S0 | K² + 3K |
| ω̃*, w̃z*1 | 2K | ω̃*, w̃z*1, D1 | (K² + 5K)/2 |
| Dμ,Σ, ang(ω̃*, w̃z*1) | 2 | c*, cz*1, λ1 | 3K |
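The dimension counts in Table 1 follow from counting free parameters, with a symmetric K × K matrix contributing K(K + 1)/2. A small sketch (the helper name is hypothetical) that reproduces the formulas:

```python
def table1_dimensions(K):
    """Free-parameter counts in Table 1; a symmetric K x K matrix has K(K+1)/2."""
    sym = K * (K + 1) // 2
    z_star = {"mu, Sigma, m0": 2 * K + sym,
              "omega~*, w~z*_1": 2 * K,
              "D, angle": 2}
    t_star = {"mu, Sigma, m0, S0": 2 * K + 2 * sym,
              "omega~*, w~z*_1, D_1": 2 * K + sym,
              "c*, c_z*_1, lambda_1": 3 * K}
    return z_star, t_star


z, t = table1_dimensions(50)
print(z)
print(t)
```

For the z*-test the final summary has fixed dimension 2 for every K, while for the t*-test it still grows linearly in K.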

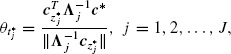

4.3. PoC for the t* Test

The need to estimate the unknown Σ substantially increases the dimension and complexity of the design space. Because the weighting vectors wt*j are obtained by sequentially estimating Σ as well as μ, the power analysis needs to account for both estimation procedures. For this, we write the weighting vector w̃t*j, j = 1, 2, …, J, in (3.6) as

| (4.3) |

where w̃z*j is the jth standardized weighting vector of the z*-test in (4.2). Here the Σ-deviation matrix Dj measures the deviation of the estimate Sj−1 in (3.3) from the parameter Σ. The weighting vector w̃t*j is thus written as the product of the inverse of the matrix Dj, which accounts for the estimation of Σ, and the vector w̃z*j, which accounts for the estimation of μ taking Σ as known. We next follow the same steps as in Section 4.2 to derive the PoC of the t*-test. The standardization step yields the next lemma, which summarizes μ and Σ and their prior estimates m0 and S0 by the vectors ω̃* and w̃z*1 and the matrix D1, all of which have a clear interpretation.

Lemma 4.3.

The design vector d, the matrix D1 in (4.3) and the vectors ω̃* and w̃z*1 are sufficient to determine the power function βt*.

Here, we use the fact that the location parameter θt*j and the Σ-deviation matrix Dj can be written as

|

(4.4) |

and that w̃z*j can be written as the weighted average in (4.2). Here, $S_{\tilde{w}y(j)} = \Sigma^{-1/2} S_{y(j)} \Sigma^{-1/2}$ is the covariance matrix of the sample w̃yil, i = 1, 2, …, nl, l = 1, 2, …, j, where, importantly, $\tilde{w}y_{il} = \Sigma^{-1/2} Y_{il} \sim N_K(\tilde{\omega}^{*}, I)$.

As in the previous section, we next establish the invariance of the power function under certain rotations of the prior estimates. For this, let V = [v1 v2 … vK] be the matrix whose columns are the orthonormal eigenvectors of D1, and let Λ1 = diag(λ1) be the diagonal matrix whose diagonal λ1 = (λ11, λ12, …, λ1K)ᵀ is the vector of the corresponding eigenvalues (λ11 ≥ λ12 ≥ … ≥ λ1K > 0). We can then write D1 = VΛ1Vᵀ, w̃z*1 = Vcz*1, and ω̃* = Vc*, where

$$ c^{z*}_1 = V^{\mathsf T}\tilde{w}^{z*}_1, \qquad c^{*} = V^{\mathsf T}\tilde{\omega}^{*}. \tag{4.5} $$
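The change of coordinates in (4.5) is a plain eigendecomposition. The sketch below uses an arbitrary positive definite matrix in place of D1 (an assumption for illustration), orders the eigenvalues decreasingly as in the text, and checks that applying D1⁻¹ to w̃z*1 amounts to dividing the coordinates cz*1 by the eigenvalues λ1k, which is how λ1⁻¹ enters the t*-test's weighting vector. Eigenvectors are determined only up to sign, so the entries of c* and cz*1 are determined only up to simultaneous sign changes in each coordinate. The function name is hypothetical.

```python
import numpy as np


def eigen_coordinates(D1, w_tilde_z1, omega_tilde):
    """Coordinates (4.5) of w~z*_1 and omega~* in the eigenbasis of D_1."""
    lam, V = np.linalg.eigh(D1)              # eigh returns ascending eigenvalues
    order = np.argsort(lam)[::-1]            # reorder: lambda_11 >= ... >= lambda_1K
    lam, V = lam[order], V[:, order]
    return lam, V, V.T @ w_tilde_z1, V.T @ omega_tilde


rng = np.random.default_rng(6)
K = 4
A = rng.normal(size=(K, K))
D1 = A @ A.T + np.eye(K)                     # an illustrative positive definite D_1
w_tilde_z1, omega_tilde = rng.normal(size=K), rng.normal(size=K)
lam, V, c_z1, c_star = eigen_coordinates(D1, w_tilde_z1, omega_tilde)
print(lam)

# D_1^{-1} w~z*_1 = V diag(1/lambda_1) c_z*_1: eigenvalues rescale the coordinates
print(np.allclose(V @ (c_z1 / lam), np.linalg.solve(D1, w_tilde_z1)))
```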

The rotation invariance property of the t*-test is described in the next lemma.

Lemma 4.4.

The power function βt* is invariant to simultaneous rotations of the vector w̃z*1 and the eigenvectors of the matrix D1 around the optimal weighting vector ω̃*.

The proof of Lemma 4.4 is similar to that of Lemma 4.2, albeit rather more complex. The next theorem is a direct consequence of Lemmas 4.3 and 4.4.

Theorem 4.3.

The design vector d, the vector of eigenvalues λ1 of the matrix D1 in (4.3), and the vectors cz*1 and c* in (4.5) are sufficient to determine the power function βt*.

As we can see in Table 1, the last result reduces the dimension of the design space of the t*-test substantially, allowing us to explore power across the design space. While the design space, due to the covariance matrix estimation, still depends on K, it is reduced from order K2 to order K.

Furthermore, this reduction provides an understanding of how the selection of the weighting vector affects power. This becomes clearer if we consider that θt*j in (4.4) can be written as

|

where

|

Here, c̄y(j) and Scy(j) are the sample mean and sample covariance matrix of the transformed observation vectors cy(j) = [cy1 cy2 … cyj], where cyl, l = 1, 2, …, j, is the matrix with columns $c_{y_{il}} = V^{\mathsf T}\tilde{w}y_{il} \sim N_K(c^{*}, I)$, i = 1, 2, …, nl. These expressions show that the distance of the prior estimates m0, S0 from the model parameters μ, Σ can be expressed through the distances of the vectors cz*1 and λ1⁻¹ = (1/λ11, …, 1/λ1K)ᵀ from c*, which are directly reflected in power through θt*j (see the next section for more information).

In the special case where the first-stage Σ-deviation matrix is proportional to the identity matrix, that is, D1 ∝ I (λ11 = λ12 = … = λ1K), the design space can be reduced further, as the next result shows.

Theorem 4.4.

For D1 = c−1I, the design vector d, the constant c, the Mahalanobis distance Dμ,Σ, and the angle ang(w̃z*1, ω̃*) are sufficient to determine the power function βt*.

The last theorem shows that, for D1 ∝ I, the prior Σ-deviation matrix D1 does not change the directions of the w̃z*j, so the relation of βt* to the model parameters and their prior estimates can be described simply by the scalars Dμ,Σ and ang(w̃z*1, ω̃*). In the next section, we use this result and the results of Theorems 4.2 and 4.3 to perform power analysis studies.
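A minimal sketch of the two scalars in Theorem 4.4, assuming that the standardized vectors are w̃ = Σ1/2w and ω̃* ∝ Σ−1/2μ (this standardization is our assumption for illustration). Under it, the angle needs no matrix square roots, because w̃ᵀω̃* = wᵀμ, ‖w̃‖² = wᵀΣw and ‖Σ−1/2μ‖ = Dμ,Σ. The function names are hypothetical.

```python
import numpy as np

def mahalanobis(mu, Sigma):
    """D_{mu,Sigma} = sqrt(mu^T Sigma^{-1} mu)."""
    return float(np.sqrt(mu @ np.linalg.solve(Sigma, mu)))

def standardized_angle(w, mu, Sigma):
    """ang(Sigma^{1/2} w, Sigma^{-1/2} mu) in degrees."""
    cos = (w @ mu) / (np.sqrt(w @ Sigma @ w) * mahalanobis(mu, Sigma))
    return float(np.degrees(np.arccos(np.clip(cos, -1.0, 1.0))))

Sigma = np.array([[2.0, 0.5], [0.5, 1.0]])     # hypothetical covariance
mu = np.array([1.0, -0.5])                     # hypothetical mean
w_opt = np.linalg.solve(Sigma, mu)             # weights proportional to Sigma^{-1} mu
```

With w ∝ Σ−1μ the angle is 0°, and with the uniform weights 1 under Σ = I and μ = e1 in K = 10 dimensions it is arccos(1/√10) ≈ 71.6°.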

5. EMPIRICAL STUDIES

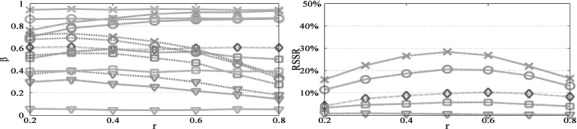

To explore the properties of the adaptive z*- and t*-tests and of alternative global tests, and to compare them, we present empirical studies that make use of the results in Theorems 4.2, 4.3, and 4.4.

In addition to the z*- and t*-tests, we consider linear combination z- and t-tests with fixed weighting vectors, a class that includes the OLS z- and t-tests of O'Brien (1984). We also consider the likelihood-ratio χ2-test and Hotelling's T2-test, with statistics χ2 = nȲᵀΣ⁻¹Ȳ and T2 = n(n − K)ȲᵀS⁻¹Ȳ / [K(n − 1)], which follow the noncentral χ2 and F distributions with K and (K, n − K) degrees of freedom, respectively, and noncentrality parameter nD²μ,Σ. We consider both single-stage and sequential J-stage designs for all these tests. Finally, we also consider the two-step, single-stage linear combination z+ and t+ tests proposed in Minas et al. (2012). Note that the latter tests can be derived as special cases of the z*- and t*-tests with J = 2, (α1,1, α0,1) = (0, 1), and C(p2) = p2.
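The two global statistics can be computed directly from an n × K data matrix. The sketch below implements χ2 = nȲᵀΣ⁻¹Ȳ for known Σ and the F-scaled Hotelling statistic n(n − K)ȲᵀS⁻¹Ȳ / [K(n − 1)] with the unbiased sample covariance S; the function names are hypothetical. As a sanity check, under H0 the null means should be close to K (χ2 with K degrees of freedom) and (n − K)/(n − K − 2) (F with (K, n − K) degrees of freedom).

```python
import numpy as np

def chi2_statistic(Y, Sigma):
    """Likelihood-ratio statistic n * ybar^T Sigma^{-1} ybar (Sigma known)."""
    n = Y.shape[0]
    ybar = Y.mean(axis=0)
    return float(n * ybar @ np.linalg.solve(Sigma, ybar))

def hotelling_f_statistic(Y):
    """F-scaled Hotelling statistic n(n-K) ybar^T S^{-1} ybar / (K(n-1)).

    Under H0 it follows F(K, n-K); it is undefined for n <= K.
    """
    n, K = Y.shape
    if n <= K:
        raise ValueError("Hotelling's T2 requires n > K")
    ybar = Y.mean(axis=0)
    S = np.cov(Y, rowvar=False)            # unbiased sample covariance
    return float(n * (n - K) * ybar @ np.linalg.solve(S, ybar) / (K * (n - 1)))

rng = np.random.default_rng(3)
chi_null = [chi2_statistic(rng.standard_normal((20, 3)), np.eye(3)) for _ in range(4000)]
f_null = [hotelling_f_statistic(rng.standard_normal((20, 3))) for _ in range(4000)]
```

Under an alternative with mean μ, Ȳ ∼ NK(μ, Σ/n), so the noncentrality of both statistics is nμᵀΣ⁻¹μ = nD²μ,Σ.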

A range of experiments is performed under different values of the design parameters. The power function of J-stage (J > 1) tests is not analytically tractable, so power is approximated by the rejection rate over a large number of simulated replications of a single experiment, here R = 10,000. Furthermore, to study the reduction in sample size due to early stopping, we also empirically compute the rate of sample size reduction (RSSR),

$$\mathrm{RSSR} = \frac{n_T - E(N)}{n_T},$$

where nT = n1 + n2 + … + nJ is the total sample size, N is the sample size used in a single replication of the study, and E(N) is its expected value. Note that single-stage tests have RSSR = 0, in contrast to sequential tests, which allow for early stopping and can thus have nonzero RSSR.
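Taking RSSR = (nT − E(N))/nT, the empirical version replaces E(N) by the average sample size over the simulated replications. The sketch below does this, together with E(N) for a two-stage design that stops after stage 1 with some probability. The stopping probability 0.471 and the list of replication sizes are hypothetical inputs, chosen to match the two-stage EEG design reported in Section 6 (n1 = 10, n2 = 9, RSSR = 22.3%, E(N) ≅ 15).

```python
import numpy as np

def rssr(sample_sizes, n_total):
    """Empirical rate of sample size reduction, 1 - mean(N) / n_T.

    sample_sizes holds the sample size N used in each simulated replication
    (smaller than n_total whenever the study stopped early).
    """
    return 1.0 - float(np.mean(sample_sizes)) / n_total

def expected_n_two_stage(n1, n2, p_stop_first):
    """E(N) for a two-stage design stopping after stage 1 with prob p_stop_first."""
    return n1 + n2 * (1.0 - p_stop_first)

# Hypothetical replications: 471 of 1000 stop after stage 1 (N = 10), the rest use N = 19.
N = [10] * 471 + [19] * 529
```

Here E(N) ≈ 14.76, so RSSR ≈ 4.24/19 ≈ 0.223.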

5.1 Simulation Data Examples

We next summarize the main results of a comprehensive study of the power behavior of the above tests in relation to the design parameters (further illustrations are included in Supplementary Material B). First, larger values of Dμ,Σ and/or nT result in higher power for all tests considered, except for the z- and t-tests with fixed weighting vectors w̃ orthogonal to ω̃*, for which β = α. Regarding the prior sample size, the results indicate that for n0 ∈ (0.5n1, 0.75n1) the prior estimates are influential without dominating the accumulated data when the weighting vector is selected, whereas larger values of n0 make the z*- and t*-tests behave more like the z- and t-tests with a fixed weighting vector. Furthermore, the simulation examples confirm that larger acceptance critical values α0,j increase the power of multistage tests, especially when the potential power gain in subsequent stages is large, at the expense of a lower chance of early acceptance. They also confirm that more power is gained if larger rejection critical values α1,j are allocated to stages with larger potential power gain, while RSSR increases for larger α1,j in early stages.
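To make the roles of α1,1 and α0,1 concrete, the sketch below assumes a Bauer and Köhne (1994) style two-stage design with Fisher's product combination (an assumption for illustration, not necessarily the exact combination used here): reject at stage 1 if p1 ≤ α1,1, stop for acceptance if p1 > α0,1, and otherwise reject at stage 2 if p1p2 ≤ c. The level condition α1,1 + c·ln(α0,1/α1,1) = α fixes c, and a simulation with independent uniform p-values under H0 confirms the Type I error. Function names are hypothetical.

```python
import math
import numpy as np

def product_critical_value(alpha, alpha1, alpha0):
    """c such that alpha1 + c * ln(alpha0 / alpha1) = alpha (Fisher product)."""
    return (alpha - alpha1) / math.log(alpha0 / alpha1)

def two_stage_reject(p1, p2, alpha1, alpha0, c):
    """Vectorized decision: early rejection, early acceptance, or product test."""
    early_reject = p1 <= alpha1
    continue_ = (p1 > alpha1) & (p1 <= alpha0)
    return early_reject | (continue_ & (p1 * p2 <= c))

alpha, alpha1, alpha0 = 0.05, 0.01, 1.0
c = product_critical_value(alpha, alpha1, alpha0)
rng = np.random.default_rng(4)
p1, p2 = rng.uniform(size=200_000), rng.uniform(size=200_000)  # H0: independent U(0, 1)
type_I = float(two_stage_reject(p1, p2, alpha1, alpha0, c).mean())
```

With α1,1 = 0.01 and α0,1 = 1 this gives c = 0.04/ln(100) ≈ 0.0087, which appears to coincide with the second-stage level quoted in Section 6.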

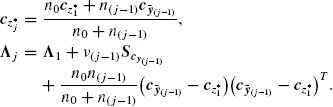

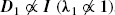

We also consider how power depends on the allocation of the sample size to stages (Figure 1). For the sequential z- and χ2-tests, the results show that higher power is achieved if the sample allocation mirrors the α-rate allocation. The z*- and t*-tests generally attain higher efficiency for nearly balanced allocations. For w̃z*1 close to (far from) the optimal ω̃*, slightly higher power is attained by assigning more of the sample to early (late) stages. Small to moderate allocation ratios r are more appropriate for the z+ test, since no α is spent in the first stage. Further, as for the χ2-test, the z*-test achieves higher RSSR for r = 0.5.
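The effect of sample allocation on a sequential fixed-weight z-test can be explored by Monte Carlo. With standardized weights at angle θ to ω̃*, the stage-j statistic is N(√nj·Dμ,Σ·cos θ, 1), which also explains why β = α for orthogonal weights. The sketch below assumes Fisher's product combination with early rejection at α1,1 and acceptance at α0,1, and treats r as the fraction of nT allocated to stage 1; both are illustrative assumptions, and `sequential_z_power` is a hypothetical name.

```python
import math
from statistics import NormalDist
import numpy as np

_phi = np.vectorize(NormalDist().cdf)      # standard normal CDF, elementwise

def sequential_z_power(theta_deg, D, n_total, r, alpha=0.05, alpha1=0.01,
                       alpha0=1.0, reps=20_000, seed=0):
    """Monte Carlo power of a two-stage fixed-weight one-sided z-test.

    Stage j uses z_j ~ N(sqrt(n_j) * D * cos(theta), 1), p_j = 1 - Phi(z_j),
    combined by Fisher's product (assumed); r = share of n_total in stage 1.
    """
    rng = np.random.default_rng(seed)
    n1 = round(r * n_total)
    n2 = n_total - n1
    shift = D * math.cos(math.radians(theta_deg))
    z1 = rng.standard_normal(reps) + math.sqrt(n1) * shift
    z2 = rng.standard_normal(reps) + math.sqrt(n2) * shift
    p1, p2 = 1.0 - _phi(z1), 1.0 - _phi(z2)
    c = (alpha - alpha1) / math.log(alpha0 / alpha1)
    reject = (p1 <= alpha1) | ((p1 > alpha1) & (p1 <= alpha0) & (p1 * p2 <= c))
    return float(reject.mean())

p0 = sequential_z_power(0, 0.65, 60, 0.5)    # Figure 1 style settings: D = 0.65, nT = 60
p60 = sequential_z_power(60, 0.65, 60, 0.5)
p90 = sequential_z_power(90, 0.65, 60, 0.5)
```

Varying `r` for a fixed angle gives a power-versus-allocation curve for this simplified, non-adaptive test.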

Figure 1.

Power (left panel) and RSSR (right panel) versus sample allocation ratio. We plot the sequential χ2-test (magenta) and the z* (green –– line), sequential z (cyan –), and z+ (orange −.) tests with first-stage/fixed/first-step weighting vector at 0° (×), 30° (○), 60° (□), and 90° (∇) angle to the optimal. The remaining design parameters are J = 2, K = 10, α = 0.05, α1,1 = 0.01, α0,1 = 1, nT = 60, n0 = 0.5n1, Dμ,Σ = 0.65.

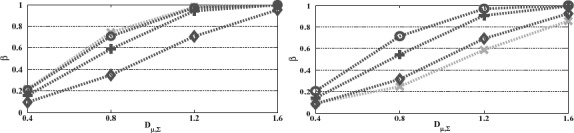

Before we proceed to comparisons, it is worth considering how the t*-test is affected by Σ being unknown and thus estimated. First, in the case D1 ∝ I (λ1 ∝ 1 = (1, 1, …, 1)ᵀ), which, as Theorem 4.4 shows, is a somewhat easier case to consider, the variability due to estimating Σ is substantially reduced, and we thus generally expect w̃t*j to be closer to w̃z*j. On the other hand, if λ1 is not proportional to 1, the direction of λ1 has more influence on w̃t*j, with double-edged consequences (see Figure 2). That is, compared with the situation λ1 ∝ 1, the distance of the w̃t*j from the optimal can be larger (left panel) but also smaller (right panel), depending on how close the direction of λ1−1 = (1/λ11, …, 1/λ1K)ᵀ is to the optimal direction c*.

Figure 2.

Power of the t*-test versus Mahalanobis distance for various c*, cz1*, λ1. In the left panel, c* = cz1* ∝ 1, while in the right panel c* = e1 = (1, 0, …, 0)ᵀ and cz1* ∝ 1; for λ1 = 1 (green −×− line), these give φ = ang(c*, Λ1−1cz1*) = ang(c*, λ1−1) = 0° and 72°, respectively. In both panels, values λ1 ≠ 1 are also chosen to give φ = 25° (dark green –o– line), 45° (dark green −+− line), and 65° (dark green –⋄– line). The remaining design parameters are J = 2, K = 10, α = 0.05, α1,1 = 0.01, α0,1 = 1, nT = 20, r = 0.5, n0 = 0.75n1, ν0 = n0 − 1.

Finally, throughout our simulations of the t*-test, cos(ang(c*, Λ1−1czj)) proved to be a robust, albeit not sufficient, summary of the distance between the model parameters and their prior estimates (see Supplementary Material B, Section 2.1, Figure 7). For this reason, and also to reduce complexity, in the comparisons that follow we focus on the case λ1 ∝ 1 (particularly, as we explain later, in cases resembling the right panel of Figure 2), for various values of the summary cos(ang(c*, Λ1−1cz1*)).

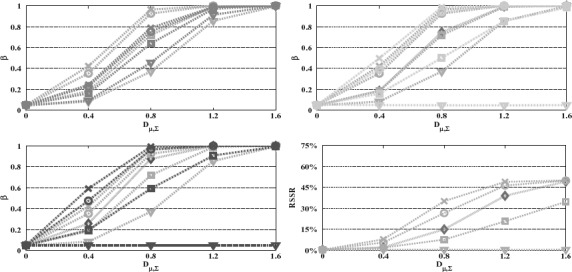

In terms of comparisons, first note that, for fixed design parameters, single-stage tests attain higher power than multistage tests, but at the expense of not allowing early stopping and thus no sample size reduction (RSSR = 0). Furthermore, for fixed design parameters, the linear combination test whose weighting vector (either fixed or initial) equals the optimal weighting vector ω* attains the maximum power and provides an upper bound for all the other procedures presented, including Hotelling's T2-test, as proved in Minas et al. (2012, Corollary 1). Compared with the z-tests with fixed weighting vectors w, as Figure 3 shows, the adaptive z*-test loses some power for w̃ (= w̃z*1) close to the optimal but gains substantial power for w̃ far from the optimal, importantly avoiding the problem of z-tests having only trivial power (β = α) for w̃ orthogonal to the optimal. This result emphasizes that, even though the power of the proposed tests remains sensitive to the prior information used to select the weighting vector, they are less sensitive to the initial selection of the weighting vector than the z- and t-tests, in which the weighting vector is fixed. The adaptive z*-test also has substantially higher power than the z+ test for small angles to the optimal and slightly lower power for large angles. Finally, the power of the single-stage and sequential χ2-tests is approximately equal to that of the z*-test with w̃z*1 at angles of 60° and 45°, respectively, to ω̃*. Note that, as the results in Figure 3 confirm, all the considered tests control the Type I error at the nominal level α = 0.05.
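For a single-stage one-sided linear combination z-test, power has a closed form: the statistic is N(√n·Dμ,Σ·cos θ, 1), where θ is the angle between the standardized weighting vector and the optimal one, so power equals 1 − Φ(z1−α − √n·Dμ,Σ·cos θ). The sketch below (hypothetical function name, standard library only) shows that power is maximized at θ = 0 and drops to exactly α at θ = 90°. It covers only the fixed-weight z-test, not the adaptive z*-test.

```python
import math
from statistics import NormalDist

def z_test_power(n, D, theta_deg, alpha=0.05):
    """Power of the single-stage one-sided linear combination z-test.

    The z statistic is N(sqrt(n) * D * cos(theta), 1), theta being the angle
    between the standardized weighting vector and the optimal one.
    """
    N = NormalDist()
    shift = math.sqrt(n) * D * math.cos(math.radians(theta_deg))
    return 1.0 - N.cdf(N.inv_cdf(1.0 - alpha) - shift)

powers = [z_test_power(30, 0.65, t) for t in (0, 30, 60, 90)]
```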

Figure 3.

Power and RSSR versus Mahalanobis distance. We plot the z*-test (green ––) together with the tests z+ (orange –.) (top left), sequential z (cyan −) and χ2 (magenta) (top right), single-stage z (blue –) and χ2 (red) (bottom left), and sequential χ2 (bottom right). The linear combination z*/z/z+ tests are performed with first-stage/fixed/first-step weighting vectors at 0° (×), 30° (○), 60° (□), and 90° (∇) angle to the optimal. The remaining design parameters are J = 2, K = 10, α = 0.05, α1,1 = 0.01, α0,1 = 1, nT = 30, r = 0.5, n0 = 0.75n1, ν0 = n0 − 1.

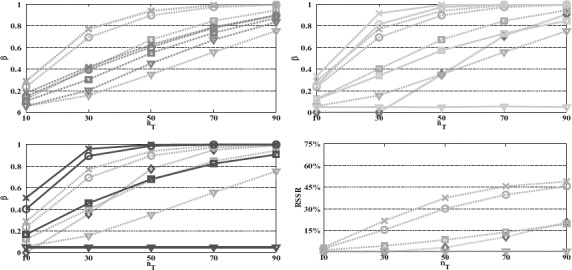

For unknown Σ, we consider comparisons for the case D1 = I, which, by Theorem 4.4, can be performed in a similar way to the case of known Σ. For the simulations in Figure 4, the case D1 = I can be thought of as representative of λ1−1 fairly distant from c* (right panel of Figure 2), since we take c* = e1, resulting in cos(ang(c*, λ1−1)) = 1/√K (≅ 0.26, an angle of about 75°, for K = 15). As expected, the power of all tests is lower than that of their counterparts for known Σ (with the same design parameters), but the pattern of power differences across tests remains the same, except for Hotelling's T2-test, which, in contrast to the χ2-test, depends strongly on the sample size.

Figure 4.

Power and RSSR versus the total sample size nT. We plot the t*-test (green —.) together with the tests t+ (orange −.) (top left), sequential t (cyan –) and T2 (magenta) (top right), single-stage t (blue −) and T2 (red) (bottom left), and sequential T2 (bottom right). The linear combination t*/t/t+ tests are performed with first-stage/fixed/first-step weighting vectors at 0° (×), 30° (○), 60° (□), and 90° (∇) angle to the optimal. The remaining design parameters are K = 15, J = 2, α = 0.05, α1,1 = 0.01, α0,1 = 1, r = 0.5, n0 = 6, ν0 = n0 − 1, Dμ,Σ = 0.7.

As Figure 4 illustrates, for nT ≤ K or nT slightly larger than K (here, nT = 10–30 for K = 15), the T2-test is, respectively, inapplicable or very inefficient, with power lower than that of the t*-test even for angles close to orthogonal. As the sample size becomes considerably larger than K (nT > 50), the power of the T2-test increases sharply, reaching levels comparable to those of the χ2-test. For instance, for the design parameters in Figure 4 and large sample sizes, the single-stage and sequential T2-tests, like the χ2-tests, have power close to that of the t*-test with angles of 60° and 45°, respectively.
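The reason the T2-test is inapplicable for n ≤ K is that the sample covariance S has rank at most n − 1, so it cannot be inverted; for n slightly above K it is invertible but the reference F(K, n − K) distribution has very few denominator degrees of freedom. The sketch below checks the rank for K = 15 and a few sample sizes, using simulated standard normal data as a placeholder.

```python
import numpy as np

rng = np.random.default_rng(5)
K = 15
ranks = {}
for n in (10, 15, 16, 30):
    Y = rng.standard_normal((n, K))          # placeholder data, n observations
    S = np.cov(Y, rowvar=False)              # K x K sample covariance
    ranks[n] = int(np.linalg.matrix_rank(S)) # equals min(n - 1, K) almost surely
```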

6. APPLICATION TO AN EEG STUDY

We consider an application to an electroencephalogram (EEG) study whose results are reported in Läuter, Glimm, and Kropf (1996). As Läuter et al. describe, the data were collected from nT = 19 depressive patients at the beginning and at the end of a six-week therapy. For demonstration, K = 9 variables are used, representing the changes in absolute theta power in EEG channels 3–8 and 17–19 during each patient's therapy. Table 2 presents the means, standard deviations, and correlation matrix of the data. Note that although an increase is indicated in all channels, none of the channel-wise p-values (mink pk = 0.04) falls below the Bonferroni-corrected threshold α/K ≅ 0.0056 at the α = 5% significance level. Hotelling's T2-test also fails to reject H0 (pT2 = 0.261). In contrast, the SS and PC t-tests proposed by Läuter et al. reject H0 at the 5% significance level (pSS = 0.0489, pPC = 0.0487).
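The Bonferroni comparison above is a one-line calculation; the sketch below makes it explicit using only the numbers stated in the text (α = 0.05, K = 9 channels, smallest channel-wise p-value 0.04).

```python
alpha, K = 0.05, 9
threshold = alpha / K              # Bonferroni-corrected per-channel level, about 0.0056
min_p = 0.04                       # smallest channel-wise p-value reported above
any_rejection = min_p <= threshold # no channel is significant after correction
```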

Table 2.

Means, standard deviations, correlations, and their prior estimates for the EEG depression study presented in Läuter, Glimm, and Kropf (1996). In the correlation rows (labeled by channel), entries below the diagonal are the prior correlations R0 and entries above the diagonal are the sample correlations Ry.

| ch. | 3 | 4 | 5 | 6 | 7 | 8 | 17 | 18 | 19 |
|---|---|---|---|---|---|---|---|---|---|
| ȳk | 0.8710 | 1.5890 | 1.0370 | 1.1460 | 0.8510 | 0.8530 | 1.4220 | 0.7510 | 0.9950 |
| m0,k | 0.5 | 3.50 | 1 | 2 | 2 | 2 | 2 | 2 | 2 |
| sy,k | 2.9494 | 3.5121 | 2.3637 | 2.2490 | 2.2760 | 2.0706 | 3.2624 | 2.6382 | 2.3593 |
| s0,k | 1.5 | 2.5 | 1 | 2 | 2 | 2 | 2 | 2 | 2 |
| 3 | 1 | 0.9262 | 0.8115 | 0.7959 | 0.5786 | 0.4902 | 0.9323 | 0.4896 | 0.5312 |
| 4 | 0.8 | 1 | 0.6270 | 0.7835 | 0.3357 | 0.4450 | 0.9313 | 0.2778 | 0.4892 |
| 5 | 0.8 | 0.7 | 1 | 0.7882 | 0.8492 | 0.7173 | 0.7347 | 0.7145 | 0.7611 |
| 6 | 0.7 | 0.8 | 0.7 | 1 | 0.6020 | 0.7924 | 0.8180 | 0.6334 | 0.7783 |
| 7 | 0.5 | 0.4 | 0.7 | 0.55 | 1 | 0.6155 | 0.4639 | 0.6833 | 0.5992 |
| 8 | 0.4 | 0.5 | 0.55 | 0.7 | 0.6 | 1 | 0.5177 | 0.5983 | 0.7833 |
| 17 | 0.9 | 0.9 | 0.75 | 0.75 | 0.45 | 0.45 | 1 | 0.4048 | 0.5711 |
| 18 | 0.45 | 0.45 | 0.65 | 0.65 | 0.7 | 0.7 | 0.5 | 1 | 0.4445 |
| 19 | 0.75 | 0.75 | 0.8 | 0.8 | 0.65 | 0.65 | 0.8 | 0.7 | 1 |

We perform a power analysis by setting the design parameters as in the above study, that is, nT = 19, K = 9, μ = ȳ, Σ = Sy, α = 0.05. For these design parameters, the power of Hotelling's T2-test is βT2 ≅ 0.68 (Dμ,Σ = 1.15). This is larger than the power of the SS and PC tests, which is βtSS ≅ 0.52 and βtPC ≅ 0.51, respectively (the contrasting outcomes of the tests actually performed on these data are due to the different shapes of the t and F distributions). The latter power values are very close to the power of the OLS t-test of O'Brien (1984), βtOLS ≅ 0.52, which uses the uniform weighting vector wOLS ∝ 1, giving an angle ang(w̃OLS, ω̃*) ≅ 71°. Since the single-stage t-test with a weighting vector equal to the optimal one has power βt ≅ 1, there is clearly considerable scope for improvement.

Since the study was performed, there has been considerable research into EEG studies of depressive patients. There is now literature (see, e.g., Davidson et al. 2002) indicating that left-frontal hypoactivation and right-frontal hyperactivation are present in such subjects. This suggests that a nonuniform prior over these frontal regions should be used. Using prior information based on such evidence, the adaptive t*-test can attain high power. For example, the prior estimates given in Table 2 agree with the evidence in the literature; further, the prior correlation structure is set to be roughly consistent with the distances between the channels, that is, larger distances have smaller correlations, with larger correlations in the highly active frontal regions (in accordance with the literature).

This prior estimate gives ang(w̃t*1, ω̃*) = 37.27°, which is much smaller than the angle under the uniform weighting vector. For a two-stage design (J = 2) with balanced sample allocation, n1 = 10, n2 = 9, α allocation α1,1 = 0.01, α2 = 0.0087, no early acceptance (α0,1 = 1), prior sample size n0 = 7 = 0.7n1, ν0 = 6 (see the previous section), and the remaining design parameters as in the original study, the t*-test has power βt* = 0.84 with RSSR = 22.3% (E(N) ≅ 15). A substantial power improvement is also obtained over the t+ test, which, for n0 = 6, n1 = 13, n2 = 6 (r = 0.3) and the remaining design parameters as above, has power βt+ ≅ 0.64.
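The plug-in quantities in this example can be recomputed from Table 2. The sketch below rebuilds Sy and the prior covariance S0 from the standard deviations and the upper (Ry) and lower (R0) triangles of the correlation block, then computes Dμ,Σ = (ȳᵀSy⁻¹ȳ)1/2 and the standardized angles of two weighting vectors to ω̃*. It assumes the standardized angle ang(Σ1/2w, Σ−1/2μ), and, as a hypothetical stand-in for the first-stage t* weights, the plug-in rule wprior = S0⁻¹m0; the actual t* weighting may differ, so `ang_prior` need not equal the reported 37.27°.

```python
import numpy as np

# Table 2 correlation block: diagonal 1, above diagonal = Ry, below = R0.
M = np.array([
    [1,    0.9262, 0.8115, 0.7959, 0.5786, 0.4902, 0.9323, 0.4896, 0.5312],
    [0.8,  1,      0.6270, 0.7835, 0.3357, 0.4450, 0.9313, 0.2778, 0.4892],
    [0.8,  0.7,    1,      0.7882, 0.8492, 0.7173, 0.7347, 0.7145, 0.7611],
    [0.7,  0.8,    0.7,    1,      0.6020, 0.7924, 0.8180, 0.6334, 0.7783],
    [0.5,  0.4,    0.7,    0.55,   1,      0.6155, 0.4639, 0.6833, 0.5992],
    [0.4,  0.5,    0.55,   0.7,    0.6,    1,      0.5177, 0.5983, 0.7833],
    [0.9,  0.9,    0.75,   0.75,   0.45,   0.45,   1,      0.4048, 0.5711],
    [0.45, 0.45,   0.65,   0.65,   0.7,    0.7,    0.5,    1,      0.4445],
    [0.75, 0.75,   0.8,    0.8,    0.65,   0.65,   0.8,    0.7,    1],
])
ybar = np.array([0.8710, 1.5890, 1.0370, 1.1460, 0.8510, 0.8530, 1.4220, 0.7510, 0.9950])
s_y = np.array([2.9494, 3.5121, 2.3637, 2.2490, 2.2760, 2.0706, 3.2624, 2.6382, 2.3593])
m0 = np.array([0.5, 3.5, 1, 2, 2, 2, 2, 2, 2])
s0 = np.array([1.5, 2.5, 1, 2, 2, 2, 2, 2, 2])

upper, lower = np.triu(M, 1), np.tril(M, -1)
R_y = upper + upper.T + np.eye(9)          # sample correlation matrix
R_0 = lower + lower.T + np.eye(9)          # prior correlation matrix
S_y = np.outer(s_y, s_y) * R_y             # sample covariance
S_0 = np.outer(s0, s0) * R_0               # prior covariance

def angle_to_optimal(w, mu, Sigma):
    """ang(Sigma^{1/2} w, Sigma^{-1/2} mu) in degrees."""
    D = np.sqrt(mu @ np.linalg.solve(Sigma, mu))
    cos = (w @ mu) / (np.sqrt(w @ Sigma @ w) * D)
    return float(np.degrees(np.arccos(np.clip(cos, -1.0, 1.0))))

D_hat = float(np.sqrt(ybar @ np.linalg.solve(S_y, ybar)))   # plug-in D_{mu,Sigma}
ang_ols = angle_to_optimal(np.ones(9), ybar, S_y)           # uniform OLS weights
w_prior = np.linalg.solve(S_0, m0)                           # hypothetical plug-in prior weights
ang_prior = angle_to_optimal(w_prior, ybar, S_y)
```

`D_hat` and `ang_ols` can be compared against the reported Dμ,Σ = 1.15 and ang(w̃OLS, ω̃*) ≅ 71°, allowing for the rounding of the tabulated values.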

7. DISCUSSION

The methods developed in this work demonstrate that linear combination tests provide a strong alternative to Hotelling's classical T2 global test, especially in the setting, common in important recent applications of clinical neuroscience, where the available sample size n is small compared with the observation dimension K. It is also shown that adaptive linear combination tests provide power robustness across the set of alternative hypotheses, since they can correct initial selections of the weighting vector that are far from the optimal one. The adaptive J-stage z*- and t*-tests achieve high power for large n, independently of the initial selection of the weighting vector, but, most importantly, they can also achieve high power when n is limited.

The proposed tests achieve optimality in the sense of maximizing the predictive power of the test at each interim analysis. Predictive power has been used for sample size calculation (O'Hagan and Stevens 2001), treatment selection (Kimani, Stallard, and Hutton 2009) and to select the component-wise significance levels in multiple testing (Westfall, Krishen, and Young 1998). It is a useful tool for incorporating prior information into the design of a study, particularly as such studies can often be viewed as a decision-making process. The application in Section 6 provides an example of a setting in which prior information is available and can substantially improve the performance of existing tests.

Our methods attain optimality without undermining the two main targets of adaptive designs: flexibility and test specificity. This allows future developments of the proposed tests to consider further optimal design adaptations. Incorporating other adaptive design techniques, such as sample size reassessment, into our methodology could further improve the performance of the proposed tests.

The power characterization in Section 4 provides a tool for understanding, and to some extent alleviating, the complexities of multivariate tests, especially those based on response dimension reduction. The possibly high-dimensional model parameters and their prior estimates are reduced to low-dimensional summaries that are still sufficient to compute power. Importantly, these summaries have interpretations directly related to the strength of the treatment effect and to the effect of the dimension reduction on power. They provide a method for performing simple power analyses and also for understanding the behavior of linear combination tests.

The methods used to derive the power characterization are also interesting in their own right. They can be described generally in two steps: standardization and rotation invariance. The first step, standardization, is a prevalent technique for reexpressing statistical models in standard-deviation units and eliminating correlations. Here, it allows us to reexpress the selection of the weighting vector, which involves estimating the unknown model parameters, as a procedure of learning a single vector, namely the optimal weighting vector. The second step, establishing a rotation invariance property of the power function, allows us to identify the measure quantifying the angular distance between the selected and the optimal weighting vectors, further reducing the design space. Whether these results can be derived under more relaxed modeling assumptions is an area of ongoing research.

SUPPLEMENTARY MATERIALS

Additional supplementary material is provided in the following documents:

Supplement A: Technical results. Technical details, lemmas, and proofs.

Supplement B: Extended simulation examples. Examples from the extensive simulation studies performed to study the power of the considered tests.

REFERENCES

- Bauer P., Köhne K. Evaluation of Experiments With Adaptive Interim Analyses. Biometrics. 1994;50:1029–1041.

- Brannath W., Gutjahr G., Bauer P. Probabilistic Foundation of Confirmatory Adaptive Designs. Journal of the American Statistical Association. 2012;107:824–832.

- Brannath W., Posch M., Bauer P. Recursive Combination Tests. Journal of the American Statistical Association. 2002;97:236–244.

- Bretz F., Koenig F., Brannath W., Glimm E., Posch M. Adaptive Designs for Confirmatory Clinical Trials. Statistics in Medicine. 2009;28:1181–1217. doi: 10.1002/sim.3538.

- D'Agostino R. B., Russell H. K. Multiple Endpoints, Multivariate Global Tests. New York: Wiley; 2005.

- Davidson R. J., Pizzagalli D., Nitschke J. B., Putnam K. Depression: Perspectives From Affective Neuroscience. Annual Review of Psychology. 2002;53:545–574. doi: 10.1146/annurev.psych.53.100901.135148.

- Denne J. S., Jennison C. A Group Sequential T-test With Updating of Sample Size. Biometrika. 2000;87:125–134.

- Follmann D. A Simple Multivariate Test for One-Sided Alternatives. Journal of the American Statistical Association. 1996;91:854–861.

- Gelman A., Carlin J. B., Stern H. S., Rubin D. B. Bayesian Data Analysis. Boca Raton, FL: Chapman & Hall; 2004.

- Hotelling H. The Generalization of Student's Ratio. The Annals of Mathematical Statistics. 1931;2:360–378.

- Kieser M., Schneider B., Friede T. A Bootstrap Procedure for Adaptive Selection of the Test Statistic in Flexible Two-Stage Designs. Biometrical Journal. 2002;44:641–652.

- Kimani P. K., Stallard N., Hutton J. L. Dose Selection in Seamless Phase II/III Clinical Trials Based on Efficacy and Safety. Statistics in Medicine. 2009;28:917–936. doi: 10.1002/sim.3522.

- Lang T., Auterith A., Bauer P. Trendtests With Adaptive Scoring. Biometrical Journal. 2000;42:1007–1020.

- Läuter J., Glimm E., Kropf S. New Multivariate Tests for Data With an Inherent Structure. Biometrical Journal. 1996;38:1–23.

- Läuter J. Multivariate Tests Based on Left-Spherically Distributed Linear Scores. The Annals of Statistics. 1998;26:1972–1988.

- Lehmacher W., Wassmer G. Adaptive Sample Size Calculations in Group Sequential Trials. Biometrics. 1999;55:1286–1290. doi: 10.1111/j.0006-341x.1999.01286.x.

- Liu Q., Proschan M. A., Pledger G. W. A Unified Theory of Two-Stage Adaptive Designs. Journal of the American Statistical Association. 2002;97:1034–1041.

- Logan B. R., Tamhane A. C. On O'Brien's OLS and GLS Tests for Multiple Endpoints. Lecture Notes-Monograph Series. 2004;47:76–88.

- Mehta C. R., Pocock S. J. Adaptive Increase in Sample Size When Interim Results are Promising: A Practical Guide With Examples. Statistics in Medicine. 2011;30:3267–3284. doi: 10.1002/sim.4102.

- Minas G., Rigat F., Nichols T. E., Aston J. A. D., Stallard N. A Hybrid Procedure for Detecting Global Treatment Effects in Multivariate Clinical Trials: Theory and Applications to fMRI Studies. Statistics in Medicine. 2012;31:253–268. doi: 10.1002/sim.4395.

- Müller H.-H., Schäfer H. Adaptive Group Sequential Designs for Clinical Trials: Combining the Advantages of Adaptive and of Classical Group Sequential Approaches. Biometrics. 2001;57:886–891. doi: 10.1111/j.0006-341x.2001.00886.x.

- O'Brien P. C. Procedures for Comparing Samples With Multiple End-points. Biometrics. 1984;40:1079–1087.

- O'Hagan A., Stevens J. W. Bayesian Assessment of Sample Size for Clinical Trials of Cost-Effectiveness. Medical Decision Making. 2001;21:219–230. doi: 10.1177/0272989X0102100307.

- Pocock S. J., Geller N. L., Tsiatis A. A. The Analysis of Multiple End-Points in Clinical-Trials. Biometrics. 1987;43:487–498.

- Proschan M. A., Hunsberger S. A. Designed Extension of Studies Based on Conditional Power. Biometrics. 1995;51:1315–1324.

- Spiegelhalter D., Abrams K. R., Myles J. Bayesian Approaches to Clinical Trials and Health-Care Evaluation. Chichester: Wiley; 2002.

- Tang D.-I., Geller N. L., Pocock S. J. On the Design and Analysis of Randomized Clinical Trials With Multiple Endpoints. Biometrics. 1993;49:23–30.

- Tang D.-I., Gnecco C., Geller N. L. An Approximate Likelihood Ratio Test for a Normal Mean Vector With Nonnegative Components With Application to Clinical Trials. Biometrika. 1989a;76:577–583.

- Tang D.-I. Design of Group Sequential Clinical Trials With Multiple Endpoints. Journal of the American Statistical Association. 1989b;84:776–779.

- Westfall P. H., Krishen A., Young S. S. Using Prior Information to Allocate Significance Levels for Multiple Endpoints. Statistics in Medicine. 1998;17:2107–2119. doi: 10.1002/(sici)1097-0258(19980930)17:18<2107::aid-sim910>3.0.co;2-w.

- Zhu H. J., Hu F. F. Sequential Monitoring of Response-Adaptive Randomized Clinical Trials. The Annals of Statistics. 2010;38:2218–2241.
