The enormous scale of medical knowledge and the unrelenting pace of advances in science have made continuing medical education (CME) a necessity for health care systems and physicians. The advantages of online CME formats over traditional courses, conferences and workshops are increasingly recognized, especially with regard to accessibility, time and cost in the current climate of resource limitations. This study investigated the utility of online CME for emergency physicians in Nova Scotia.

Keywords: CME, E-learning modules, Hand trauma, Online learning

Abstract

BACKGROUND:

The enormity of modern medical knowledge and the rapidity of change have created increased need for ongoing or continuing medical education (CME) for physicians. Online CME is attractive for its availability at any time and any place, low cost and potentially increased effectiveness compared with traditional face-to-face delivery.

OBJECTIVE:

To determine whether online CME modules are an effective method for delivering plastic surgery CME to primary care physicians.

METHODS:

A needs assessment survey was conducted among all emergency and family physicians in Nova Scotia. Results indicated that this type of program was appealing, and that hand trauma-related topics were most desired for CME. SoftChalk 7 Lesson Builder (SoftChalk LLC, www.softchalk.com) was used to construct a multimedia e-learning module that was distributed along with a pretest, post-test and feedback questionnaire. Quantitative (pre- and post-test scores) and qualitative (feedback responses) data were analyzed.

RESULTS:

The 32 participants who completed the study indicated that it was a positive and enjoyable experience, and that there was a need for more resources like this. Compared with pretest scores, there was a significant gain in knowledge following completion of the module (P=0.001).

CONCLUSION:

The present study demonstrated that an e-learning format is attractive for this population and effective in increasing knowledge. This positive outcome will lead to development of additional modules.

Abstract

BACKGROUND:

Because of the breadth of modern medical knowledge and the rapid pace of change, physicians have a growing need for continuing medical education (CME). Online CME is attractive because it is accessible at any time and in any place, is inexpensive and may be more effective than classroom courses.

OBJECTIVE:

To determine whether online CME modules are effective for delivering plastic surgery CME to primary care physicians.

METHODS:

A needs assessment survey was distributed to all emergency and family physicians in Nova Scotia. According to the results, this type of program was appealing, and the most desired CME topics related to hand trauma. SoftChalk 7 Lesson Builder (SoftChalk LLC, www.softchalk.com) was used to build a multimedia e-learning module, which was distributed together with a pretest, a post-test and a feedback questionnaire. Quantitative data (pretest and post-test results) and qualitative data (feedback responses) were analyzed.

RESULTS:

The 32 participants who completed the study reported a positive and enjoyable experience and felt that more resources of this kind were needed. Compared with pretest results, knowledge had increased considerably after completion of the module (P=0.001).

CONCLUSION:

The present study demonstrates that e-learning is attractive to this population and increases their knowledge. Given these positive results, additional modules will be created.

To engage in lifelong learning is a commitment that all physicians make. Because staying current with rapid changes in medical science is not an easy task for a busy clinician, continuing medical education (CME) programs are important channels for promotion of such learning. Not only is CME an activity that physicians participate in for personal and professional satisfaction, it is also mandated by many regulatory bodies as part of maintenance of competence, accreditation and licensure.

Traditionally, CME has taken the form of face-to-face learning through courses, conferences or workshops. These sessions lead to increased knowledge, but may not have significant impact on physician behaviour or patient outcomes. Face-to-face learning has positive aspects such as social interaction and the ability to ask real-time questions of the presenter. Limitations of the traditional approach include accessibility (time, travel) and cost (1).

Online CME has been gaining in popularity over the past decade. An online format offers several important advantages over traditional CME, including ease of access, flexible timing, low cost and potentially enhanced effectiveness (2). Because of these advantages and its consequent rise in popularity, online CME is considered to be a major innovation that could lead to abandonment of traditional face-to-face CME programs (3). Investigation of online CME in 2008 found that there was a rapid increase in online CME, but it still amounted to only 6.9% to 8.8% of all CME available. The authors predicted exponential growth in the next few years, with up to 50% of CME being delivered online by 2015 (3).

The effectiveness of online CME has been studied extensively. In a randomized controlled trial, Weston et al (4) evaluated the potential of online CME to improve quality of care and found evidence that online CME can improve the effectiveness of physician practices. In comparing participants and nonparticipants in 114 different Internet-based CME activities, Casebeer et al (5) found that individuals who participated were more likely to make evidence-based clinical choices in practice. Ryan et al (6) demonstrated that their traditional face-to-face CME delivery and a new online version were equally effective. Fordis et al (7) compared online instruction with live workshops in a randomized controlled trial. They found the outcomes to be comparable, but with advantages for online delivery that included flexible timing, ease of access and greater adaptability to individual learning styles. The common theme that emerges from a review of the literature is that online CME is at least as effective as traditional CME, with some suggestion that it could prove to be better if studied appropriately (2).

While some online CME resources exclusively for plastic surgeons exist (8), there is a lack of information available to those on the front lines (ie, emergency department physicians) who are responsible for initial diagnoses, temporization and appropriate referrals. To characterize this situation, Anzarut et al (9) assessed educational needs of physicians making referrals to plastic surgeons in Edmonton, Alberta. They found that there was a clear need for CME in this group and identified the most popular topics.

The present study was designed to determine whether online CME is an effective way to educate emergency physicians in Nova Scotia about relevant plastic surgery topics.

METHODS

Needs assessment

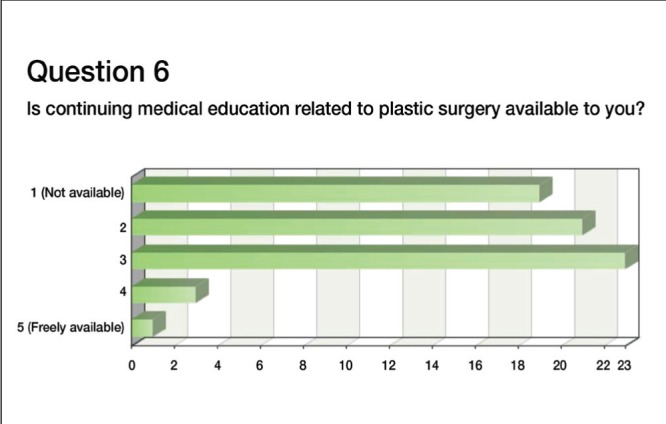

The needs assessment consisted of a survey (Opinio 6.5.1, Objectplanet) distributed to all family and emergency physicians registered with the Dalhousie University Continuing Medical Education Department (Halifax, Nova Scotia). The survey was designed following the recommended steps for a needs assessment survey (10,11). It consisted of 34 questions covering demographic information, nature of practice and opinions regarding CME delivery; the remaining questions concerned what would be desirable for CME module content. The questions were selected based on personal experience and a study by Anzarut et al (9) investigating a similar needs situation in Alberta. Figure 1 shows a sample survey question and responses. A summary of the 67 responses to the needs assessment survey is shown in Table 1. The key findings were that few or no resources were available for plastic surgery CME, that hand trauma management education was the most needed and that online CME was appealing.

Figure 1) Sample question and response from needs assessment report

TABLE 1.

Summary of needs assessment survey results

| Question | Response |
|---|---|
| Sex | 33 male, 34 female |
| Age, years, mean (range) | 55.5 (28–67) |
| Is plastic surgery CME currently available? | No (58%) |
| How valuable would a plastic surgery CME program be? | Valuable or very valuable (78%) |
| Ideal length of a CME module? | 15 min to 30 min (76%) |

CME: Continuing medical education

Module design

Results of the needs assessment survey showed that topics related to hand trauma were most desired (Table 2); therefore, a Hand Trauma CME series was developed. SoftChalk 7 Lesson Builder (SoftChalk LLC, www.softchalk.com) was used to construct the online learning module. Content was selected based on the needs assessment, and the pilot version was designed as a ‘Basic Techniques Module’ to serve as a foundation for subsequent, more specific modules. It covered hand anatomy, hand examination, local anesthetic blocks of the hand and splinting. Because these are hands-on skills, the module relied on video content for illustration. A series of 10 videos was made with plastic surgery resident volunteers and edited with iMovie software (Apple Inc, USA).

TABLE 2.

Top 10 desired continuing medical education topics from the needs assessment survey

| Topic | Response of 4 or 5 on the five-point scale, % |
|---|---|
| Hand infections | 89 |
| Splinting for hand injuries | 88 |
| Burn dressings | 83 |
| Extensor tendon injury | 80 |
| Local anesthetics in hand injuries | 76 |
| Phalangeal fractures | 75 |
| Skier’s thumb | 73 |
| Metacarpal fractures | 72 |
| Nasal fractures | 72 |
| Management of minor burns | 70 |

Implementation

The module was assembled and published using SoftChalk Connect for public access (softchalk-cloud.com/lesson/serve/TIv50aQ3UAunsZ/html). The content outline is shown in Table 3.

TABLE 3.

Outline of module content

| Page | Content |
|---|---|
| Page 1 | Welcome note and introduction |
| Page 2 | Module outline |
| Page 3 | Review of Hand Anatomy: Background information and a video demonstrating and explaining basic hand anatomy |
| Page 4 | Hand Examination: Background information and a video demonstrating a “Trauma Hand Exam” that is quick and comprehensive |
| Page 5 | Local Anesthetics: Background information and 3 videos (preparation for block administration, how to perform the single injection volar digital block and how to perform the metacarpal block) |
| Page 6 | Splinting for Hand Injuries: Background information and 5 videos (preparation of materials, volar splint, ulnar gutter splint, dorsal splint, thumb spica splint) |
| Page 7 | Information on the next module and contact information for questions or clarification |

Ethics approval was obtained from the QE II Health Sciences Centre Research Ethics Board (Halifax, Nova Scotia). This was a mixed-methods study, designed to capture quantitative (test scores) and qualitative (participant experience) data.

Participants

The target audience was emergency physicians and family doctors who participate in emergency room shifts in Nova Scotia. However, because of limitations of the distribution method, many physicians who do not perform emergency work (eg, office-based family physicians) were included.

Evaluation instruments

To study the effectiveness of the module in terms of knowledge acquisition, a pretest of 20 multiple-choice questions was developed based on the topics covered in the module. The test was piloted on plastic surgery residents and medical students to assess accuracy and validity. The same questions were used as a post-test to reduce the risk of bias. The results were used to quantify any knowledge increases attributable to participation in the module.

A feedback questionnaire was distributed along with the post-test to gather participants’ opinions on the module. Likert scales were used to measure opinions on topics such as module content and length. Open-ended questions requiring a narrative response were used to gather participants’ input on issues such as relevance to practice, impact on practice and how this learning activity compared with previous traditional CME experiences.

Data collection

The Dalhousie CME department e-mail distribution list was used to send an introductory letter and a hyperlink for the pretest survey to all registered family and emergency physicians in Nova Scotia. On completion of the pretest, a hyperlink to the online module was sent to participants. Following completion of the module, a final hyperlink to the post-test and feedback questionnaire was sent. The data collection period was six weeks, with a reminder sent at four weeks. Data were collected using Opinio software.

Analysis

Pre- and post-test scores were assessed for statistical significance using the paired Student’s t test and analyzed in Excel (Microsoft Corporation, USA). Likert scale responses were collected and analyzed using descriptive statistics and comments were recorded.
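The paired comparison described above can be sketched in a few lines of Python. This is a minimal illustration and not the Excel analysis used in the study; the function name `paired_t` and the six score pairs are hypothetical and are not study data.

```python
import math
import statistics


def paired_t(pre, post):
    """Return (mean difference, t statistic, degrees of freedom) for paired scores."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    # Work on the within-participant differences (post minus pre).
    diffs = [b - a for a, b in zip(pre, post)]
    n = len(diffs)
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample SD of the differences
    if sd_diff == 0:
        raise ValueError("all differences are identical; t is undefined")
    t_stat = mean_diff / (sd_diff / math.sqrt(n))
    return mean_diff, t_stat, n - 1


# Hypothetical scores out of 20 for six matched participants (not study data).
pre_scores = [12, 14, 15, 11, 16, 13]
post_scores = [16, 17, 18, 15, 19, 17]

mean_diff, t_stat, df = paired_t(pre_scores, post_scores)
print(f"mean gain = {mean_diff:.2f}, t = {t_stat:.2f}, df = {df}")
```

Converting the t statistic to a P value requires the t distribution with n − 1 degrees of freedom; a statistics package (for example, SciPy's `scipy.stats.ttest_rel`) or a spreadsheet function can supply it.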

RESULTS

Pre- and post-testing

Pre- and post-test results are summarized in Table 4. Pretest responses were received from 68 physicians; post-test responses were received from 32. Matching of responses was performed using e-mail addresses provided by respondents and 25 complete data sets were available for statistical analysis. Some who completed the post-test evidently did not complete the pretest, or used a different e-mail address. The online module was accessed 311 times during the study period, but there may have been multiple accesses by the same individual. Mean (± SD) pretest score was 13.88±2.40 of 20 and mean post-test score was 17.24±1.64 of 20. There was a statistically significant difference between mean pre- and post-test scores (P<0.001).
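The matching step can be illustrated with a short Python sketch. It assumes that each response is stored as an e-mail address with a score and that addresses are compared after trimming whitespace and lowercasing; that normalization rule, the function names and the sample addresses are assumptions made for illustration, not details reported for the study.

```python
def normalize(email):
    """Assumed normalization: ignore surrounding whitespace and letter case."""
    return email.strip().lower()


def match_pairs(pretest, posttest):
    """Pair pre- and post-test scores by e-mail address.

    pretest, posttest: dicts mapping e-mail address -> score.
    Returns (matched, unmatched), where matched maps a normalized address to
    (pre score, post score) and unmatched lists post-test addresses that have
    no pretest counterpart.
    """
    pre_by_email = {normalize(e): s for e, s in pretest.items()}
    matched = {}
    unmatched = []
    for email, post_score in posttest.items():
        key = normalize(email)
        if key in pre_by_email:
            matched[key] = (pre_by_email[key], post_score)
        else:
            unmatched.append(key)
    return matched, unmatched


# Hypothetical responses (not study data).
pretest = {"a.smith@example.org": 13, "B.Jones@example.org ": 15, "c.lee@example.org": 12}
posttest = {"A.Smith@example.org": 17, "b.jones@example.org": 18, "d.roy@example.org": 16}

matched, unmatched = match_pairs(pretest, posttest)
print(len(matched), "complete pairs;", len(unmatched), "post-test without pretest")
```

A respondent who used a different address for the two tests ends up in `unmatched`, which is one way the number of complete data sets can fall below the number of post-test responses.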

TABLE 4.

Statistical analysis of pre- and post-test scores (n=25)

| | n | Mean ± SD | P* |
|---|---|---|---|
| Pretest | 25 | 13.88±2.40 | |
| Post-test | 25 | 17.24±1.64 | <0.001 |

*Paired t test

Feedback questionnaire

The feedback questionnaire consisted of some questions with Likert rating scales and some that required narrative responses. A summary of representative participant responses is provided for illustrative purposes.

Most responders believed that this learning experience was relevant. The majority were positive responses such as “very relevant”, “Very relevant as I am an ERP in a regional center”, “Extremely excellent resource. Very succinct, not too long”, and “Very applicable”. In terms of effect on practice, most responses included comments such as: “Will try doing volar digital blocks”, “better understanding of splinting techniques”, “Better exam technique” and “Much more comfort in dealing with hand trauma”. When asked “How would you describe your learning in this online module compared to workshops or conferences you have previously attended in person?”, most believed the online module format was superior to previously attended workshops or conferences, with comments such as: “Better”, “Format nice as can do it on own time”, “This is an excellent format – useful, very practical information accessible at home”, “I found this to be an effective and enjoyable way to learn” and “Very good and quick”.

DISCUSSION

The present study demonstrated that an online learning module is a feasible method to teach basic hand trauma management to family and emergency physicians. Comparative analysis of the pre- and post-module test scores showed a significant increase in knowledge (P<0.001). The feedback questionnaire responses from participants were generally positive. Results of the present study were consistent with positive knowledge acquisition and high participant satisfaction similar to findings in previous studies of online CME (4,7,12).

The challenges encountered in the present study were also consistent with other investigations of online CME. Curran and Fleet (2) noted that the three main challenges to online CME are ensuring learners have the requisite computer skills and hardware, attracting learners, and developing a valid and appropriate curriculum. Whether some physicians did not participate because of lack of computer or Internet skills is unknown, but likely.

Promoting participation is a problem with any voluntary activity and an external incentive of some kind is useful. Initial plans called for having this activity formally accredited, but there were many requirements that were not possible to meet for this pilot module. Using existing Dalhousie University CME Department e-mail distribution appeared to be a logical approach for the present project; however, many of the addresses were no longer valid and there was no assurance that the individuals who would benefit most from this intervention were included in the list.

The target population for the present module was physicians who treat hand injuries. This essentially includes full-time emergency department (ED) physicians and family physicians who work shifts in their local ED. The actual number that comprises this group is difficult to determine. While >800 e-mail invitations were sent, only 68 responses to the pretest and 32 responses to the post-test were received. This is a very low response rate. Factors contributing to the low response rate included the large number of undeliverable messages (>100) and the fact that all family physicians, regardless of whether they performed emergency work, were included on the list. These factors put the number of responses in perspective. Interestingly, the number of responses to the pretest was nearly identical to the number of responses received for the needs assessment survey in 2011, which was sent using the same e-mail distribution list (68 versus 67). It is reasonable to consider that this group is representative of physicians who received the invitation, found the topic relevant, and had the inclination and technological ability to complete the module. There is almost certainly an element of selection bias leading to overly positive results, with physicians who use computers frequently being more likely to complete an online survey. Given this limitation, it is not possible to generalize the results of the present study to the entire population of ED physicians in the province. The results, limited as they may be, carry a clear message that the modality is appealing and the content useful.
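To put these figures in perspective, the response-rate arithmetic can be sketched as follows. Because the text reports only lower bounds (>800 invitations, >100 undeliverable messages), the values 800 and 100 are used here as illustrative stand-ins, so the resulting percentages are rough approximations rather than reported results. The response counts are the 68 pretest and 32 post-test responses given in the Results; the function name is hypothetical.

```python
def response_rate(responses, invited, undeliverable=0):
    """Fraction of delivered invitations that produced a response."""
    delivered = invited - undeliverable
    if delivered <= 0:
        raise ValueError("no delivered invitations")
    return responses / delivered


# Stand-in values: the study reports ">800" invitations and ">100" undeliverable.
INVITED = 800
UNDELIVERABLE = 100

crude = response_rate(68, INVITED)                      # pretest, all invitations
adjusted = response_rate(68, INVITED, UNDELIVERABLE)    # pretest, delivered only
completion = response_rate(32, INVITED, UNDELIVERABLE)  # post-test, delivered only

print(f"crude pretest rate:     {crude:.1%}")
print(f"adjusted pretest rate:  {adjusted:.1%}")
print(f"adjusted post-test rate: {completion:.1%}")
```

Even after excluding undeliverable messages, the rate remains below 10% under these assumptions, and the denominator still includes family physicians who do not perform emergency work.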

A barrier to participation may also have been the requirement to complete the pre- and post-tests. This provided useful quantitative data for assessment of the effect of the module, but it may have discouraged some participants. One physician commented that she was embarrassed by her poor level of pretest knowledge, and that she did not want to submit it. To promote better participation in future modules, questions may be included for formative purposes rather than as formal requirements.

There were technical challenges in the execution of the module. The platform used, SoftChalk, is easy to use and ideal for this type of e-learning. The module was hosted on the SoftChalk Connect website which was publicly accessible. There were some problems with video playback on the site – some potential participants were unable to view the videos or experienced other glitches with the website. The root of these problems is unclear, but may represent software incompatibility issues or a limitation of the SoftChalk website. These technical difficulties will be addressed before the release of future modules. For example, hosting the module on a website through Dalhousie University may be better because this website may be able to handle the memory requirements for smooth video playback.

CONCLUSION

A needs-based online CME module was developed for physicians who manage hand trauma in Nova Scotia. By comparing pre- and post-test scores the module was shown to effectively increase knowledge. Feedback results indicate that participant satisfaction is high and comments suggest that further modules in this series would be useful and desirable. The positive results of the present pilot study will facilitate and justify further module development to address other areas of deficiency identified through the needs assessment. Our approach may also serve as a template for other medical specialties to develop online CME.

Acknowledgments

The author thanks Dr Nina Hynick for her assistance with SoftChalk module design, and Drs Simon Frank and Peter Davison for their assistance with video production and data analysis.

Footnotes

DISCLOSURES: The author has no financial disclosures or conflicts of interest to declare.

REFERENCES

1. Davis DA, Thomson MA, Oxman AD, Haynes RB. Changing physician performance. A systematic review of the effect of continuing medical education strategies. JAMA. 1995;274:700–5. doi: 10.1001/jama.274.9.700.

2. Curran VR, Fleet L. A review of evaluation outcomes of web-based continuing medical education. Med Educ. 2005;39:561–7. doi: 10.1111/j.1365-2929.2005.02173.x.

3. Harris JM Jr, Sklar BM, Amend RW, Novalis-Marine C. The growth, characteristics, and future of online CME. J Contin Educ Health Prof. 2010;30:3–10. doi: 10.1002/chp.20050.

4. Weston CM, Sciamanna CN, Nash DB. Evaluating online continuing medical education seminars: Evidence for improving clinical practices. Am J Med Qual. 2008;23:475–83. doi: 10.1177/1062860608325266.

5. Casebeer L, Brown J, Roepke N, et al. Evidence-based choices of physicians: A comparative analysis of physicians participating in Internet CME and non-participants. BMC Med Educ. 2010;10:42. doi: 10.1186/1472-6920-10-42.

6. Ryan G, Lyon P, Kumar K, Bell J, Barnet S, Shaw T. Online CME: An effective alternative to face-to-face delivery. Med Teach. 2007;29:e251–e257. doi: 10.1080/01421590701551698.

7. Fordis M, King JE, Ballantyne CM, et al. Comparison of the instructional efficacy of Internet-based CME with live interactive CME workshops: A randomized controlled trial. JAMA. 2005;294:1043–51. doi: 10.1001/jama.294.9.1043.

8. Lalonde DH, Handley A, Sullivan D, Rohrich RJ. Taking CME to a new level in plastic and reconstructive surgery. Plast Reconstr Surg. 2008;122:1275–8. doi: 10.1097/PRS.0b013e3181845b44.

9. Anzarut A, Singh P, Cook G, Domes T, Olson J. Continuing medical education in emergency plastic surgery for referring physicians: A prospective assessment of educational needs. Plast Reconstr Surg. 2007;119:1933–9. doi: 10.1097/01.prs.0000259209.56609.83.

10. Kern DE, Thomas PA, Hughes MT. Curriculum Development for Medical Education: A Six-Step Approach. Baltimore: JHU Press; 2009.

11. Shannon S. Needs assessment for CME. Lancet. 2003;361:974. doi: 10.1016/S0140-6736(03)12765-1.

12. Cook DA, Levinson AJ, Garside S, Dupras DM, Erwin PJ, Montori VM. Internet-based learning in the health professions: A meta-analysis. JAMA. 2008;300:1181–96. doi: 10.1001/jama.300.10.1181.