Abstract

Purpose

To respond to increased public and programmatic demand to address underenrollment of clinical translational research studies, the authors examine participant recruitment practices at Clinical and Translational Science Award sites (CTSAs) and make recommendations for performance metrics and accountability.

Method

The CTSA Recruitment and Retention taskforce developed and, in 2010, invited representatives at 46 CTSAs to complete an online 48-question survey querying CTSA accrual and recruitment outcomes, practices, evaluation methods, policies, and perceived gaps in related knowledge/practice. Descriptive statistical and thematic analyses were conducted.

Results

Forty-six respondents representing 44 CTSAs completed the survey. Recruitment conducted by study teams was the most common practice reported (78–91%, by study type); 39% reported their institution offered recruitment services to investigators. Respondents valued study feasibility assessment as a successful practice (39%); their desired additional resources included feasibility assessments (49%) and participant registries (44%). None reported their institution systematically required justification of feasibility; some indicated relevant information was considered prior to IRB review (30%) or contract approval (22%). All respondents’ IRBs tracked study progress, but only 10% of respondents could report outcome data for timely accrual. Few reported written policies addressing poor accrual or provided data to support recruitment practice effectiveness.

Conclusions

Many CTSAs lack the necessary framework to support study accrual. Recommendations to enhance accrual include articulating institutional expectations and policy for routine recruitment planning; providing recruitment expertise to inform feasibility assessment and recruitment planning; and developing interdepartmental coordination and integrated informatics infrastructure to drive the conduct, evaluation, and improvement of recruitment practices.

Poor participant accrual into clinical trials at academic health centers (AHCs) in the United States incurs financial costs to institutions, industry, and taxpayers, puts participants at risk, delays scientific progress, and impedes medical discovery.1 The pervasive problem of underenrollment in both clinical trials and investigator-initiated protocols is well recognized, but there are few published data describing accrual at Clinical and Translational Science Award (CTSA) sites or across the national CTSA consortium. Programmatic demand for performance metrics and accountability among CTSA sites (CTSAs) has brought increased scrutiny to institutional performance,1,2 yet there are currently no metrics or benchmarks specific to accrual. A recent assessment of the costs of underenrollment of clinical trials at one AHC estimated the direct losses to exceed $1 million annually.3 Including indirect costs and investigator-initiated studies would substantially increase this figure, and the opportunity costs to patients are incalculable. Thus, in 2009, the CTSA Recruitment and Retention (RR) taskforce, a subcommittee of the national CTSA Regulatory Knowledge and Support Key Function Committee (RKS KFC), was convened to assess current policies and practices at CTSAs and to make recommendations to enhance recruitment and accrual efforts.

For decades, industry has used centralized professional recruitment services--incorporating recruitment expertise, economies of scale, and data-driven marketing approaches--to optimize recruitment in commercially managed trials.4 However, at academic research institutions, recruitment traditionally has been left in the hands of individual investigators, who may have neither the resources nor the expertise to effectively execute accrual. RR taskforce members hypothesized that the lack of integration of data, policy, and practice related to recruitment and retention, and the scarcity of data in support of effective recruitment and retention practices, were impeding efforts to build infrastructure at their CTSAs to support successful accrual. To determine whether CTSAs have adequate policies, infrastructure, and processes in place to measure accrual outcomes, to address underenrollment, and to effectively improve recruitment/accrual outcomes for investigators, the RR taskforce in 2010 fielded a CTSA consortium-wide survey focused on recruitment and retention practices. Here, we describe the results of the survey and provide recommendations for improving accrual at CTSAs.

Method

The 15-member RR taskforce was convened by the chair of the RKS KFC in July 2009. All of this study’s authors were taskforce members.

Definition and scope of terms

The evaluation of recruitment or accrual outcomes is complicated by the absence of common definitions of scope and practice. Within the working definition we developed for the RR taskforce survey, the scope of “recruitment” encompasses a broad and interdisciplinary set of activities conducted across the protocol’s life span, leading up to and culminating in complete study enrollment (i.e., “accrual”). It extends beyond creating advertisements or prescreening volunteers. Recruitment activities start with refinement of the protocol design to balance burdens and benefits to participants5 and to include (1) assessment of feasibility as judged by incorporation of data concerning a broad set of factors that potentially impact the target population’s availability or willingness to participate, and (2) assessment of the availability of resources to effect recruitment. Some of the variables that affect feasibility include the restrictiveness of eligibility criteria; competing forces that affect the availability of target populations4; the time, effort, resources, and availability of the research team; the availability of staff with recruitment expertise for call management and prescreening; the resources afforded the recruiter; and the availability of infrastructure, tools, and data to rationally optimize recruitment activities as they are conducted.6 Feasibility is ultimately an assessment of the likelihood of attaining timely accrual. Our working definition for the outcome of “accrual” is the completion of enrollment of the projected target number of evaluable participants within the projected time frame. 
This more expansive definition of recruitment, as a multi-step process for which effectiveness is measured against the final outcome of study accrual, incorporates elements of psychology, customer service, marketing, efficiency, and performance improvement, and aligns with both successful commercial recruitment practices and the CTSA goals of accelerating translational science.

Survey development

Employing a broad and interdisciplinary definition of recruitment to capture the full scope of activities contributing to participant recruitment and accrual, the RR taskforce developed a 48-question survey to examine recruitment practices, infrastructure, and evaluation at CTSAs. (For the final survey, see Supplemental Digital Appendix 1.) Most questions included both fixed-answer responses and comment fields. One of the authors (R.K.) created the initial draft, which was revised iteratively by the taskforce members. The draft survey instrument was pilot-tested for face and content validity by taskforce members and colleagues at their institutions and then refined based on those findings. The final survey was endorsed by the RKS KFC prior to fielding. The survey was submitted to the Rockefeller University Institutional Review Board (IRB) and was determined to be exempt from IRB review.

Survey design

The survey was specifically designed to assess: (1) the outcome of accrual, defined as the fraction of studies at the responding CTSA that attained complete study enrollment within 6 months of the projected timeline; (2) utilization and perceived success of common recruitment and retention practices; (3) availability of institutional resources and infrastructure to support recruitment; (4) evaluation methods for recruitment and retention practices; (5) institutional policy relevant to study accrual; and (6) perceived gaps in knowledge related to recruitment and retention practices. Many survey questions distinguished between studies that were sponsored (i.e., decision-making authority lies with industry/pharmaceutical company or other outside collaborators and not with the principal investigator [PI]), nonsponsored (i.e., the main decision-making authority lies with the PI), or at a dedicated center (e.g., concentrated in a cancer center, vaccine center, HIV/AIDS center).
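
The accrual outcome in item (1) can be made concrete with a short sketch. The Python below is illustrative only: the `Study` record, its field names, and the reading of "within 6 months" as "no later than six calendar months after the target date" are assumptions introduced here, not part of the survey instrument.

```python
import calendar
from dataclasses import dataclass
from datetime import date
from typing import Optional, Sequence


@dataclass
class Study:
    """Hypothetical per-study record; field names are illustrative only."""
    target_n: int                 # projected number of evaluable participants
    target_date: date             # projected date for completing enrollment
    enrolled_n: int               # evaluable participants enrolled to date
    completed_on: Optional[date]  # date the target was reached, if it ever was


def add_months(d: date, months: int) -> date:
    """Shift a date by whole calendar months, clamping to the month's last day."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(d.day, last_day))


def accrued_on_time(study: Study, grace_months: int = 6) -> bool:
    """True if the full target was met within the grace window (assumed: after the target date)."""
    if study.completed_on is None or study.enrolled_n < study.target_n:
        return False
    return study.completed_on <= add_months(study.target_date, grace_months)


def timely_accrual_fraction(studies: Sequence[Study]) -> float:
    """Fraction of studies with complete and timely enrollment."""
    if not studies:
        raise ValueError("no studies to evaluate")
    return sum(accrued_on_time(s) for s in studies) / len(studies)


# Made-up records for illustration; not survey data.
example = [
    Study(40, date(2009, 8, 31), 40, date(2010, 2, 28)),  # met target inside the window
    Study(40, date(2009, 8, 31), 40, date(2010, 3, 1)),   # met target one day too late
    Study(40, date(2009, 8, 31), 25, None),               # never met target
]
print(timely_accrual_fraction(example))
```

An institution able to produce the four fields per study could, in principle, report this fraction directly; the grace window is a parameter so that other thresholds can be examined.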

A particular focus of the survey was whether study feasibility assessment and recruitment planning were conducted in advance of study approval or initiation. Fulfilling these functions can encompass a variety of activities. The survey asked about methods used for determination of enrollment targets and timelines, methods used for any feasibility assessments related to targets and timelines, and provision of any relevant institutional resources to investigators to support assessments of feasibility. The survey also asked whether the IRB or contract office requires demonstration of feasibility or adequate recruitment budget prior to IRB or contract review, and whether the IRB requires a target date for meeting the accrual target or has any processes in place to track or referee the submission of studies competing for the same patient population. Free-text space was provided for respondents to describe applicable requirements.

The survey also asked about the availability and administration of participant/patient registries and the ability to track participant enrollment outcomes--whether tracking is in place, whether the tracking enables assessment of the time elapsed from approval to the first enrollment, and whether studies meet enrollment targets within a specified time frame.
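
As a rough illustration of the tracking described above, the following sketch summarizes the time elapsed from approval to first enrollment. The record layout and function names are hypothetical, and the choice of the median as the summary statistic is an assumption made for this example rather than something specified in the survey.

```python
from datetime import date
from statistics import median
from typing import Dict, List, Optional, Sequence, Tuple

# (approval date, first-enrollment date or None if no participant has enrolled)
Record = Tuple[date, Optional[date]]


def days_to_first_enrollment(records: Sequence[Record]) -> List[int]:
    """Elapsed days from approval to first enrollment, skipping studies with no enrollment."""
    return [(first - approved).days for approved, first in records if first is not None]


def summarize(records: Sequence[Record]) -> Dict[str, Optional[float]]:
    """Counts of tracked studies plus the median elapsed time, when any study has enrolled."""
    elapsed = days_to_first_enrollment(records)
    return {
        "studies": len(records),
        "with_enrollment": len(elapsed),
        "median_days": median(elapsed) if elapsed else None,
    }


# Made-up records for illustration; not survey data.
records = [
    (date(2009, 1, 5), date(2009, 2, 4)),
    (date(2009, 3, 1), date(2009, 6, 9)),
    (date(2009, 4, 1), None),
]
print(summarize(records))
```

Studies with no enrolled participant are counted but excluded from the elapsed-time summary, which keeps "not yet enrolling" distinct from "slow to enroll".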

Other survey items asked specifically whether remedies are suggested or assistance is provided to investigators for underaccruing studies, and whether penalties are applied through the contracts office or the IRB to investigators who persistently conduct underaccruing studies. In addition, the survey asked how many studies over the prior 3 years did not fully accrue within 6 months of the stated target date and whether a written policy is in place to close studies for nonaccrual.

At the time of the survey in 2010, there were no CTSA consensus standards, definitions, or metrics related to measures of study accrual or outcomes of recruitment activities.7 To collect information about accrual outcome assessment, the survey asked directly about any evaluation methods used and the source of information for reports of attaining accrual targets or timeliness (e.g., data/source, experience, opinion). For any recruitment practices described, the survey explicitly asked respondents to describe the evaluation methods used to assess the value of those practices.

Survey fielding

The survey was fielded via an online platform (SurveyMonkey, www.surveymonkey.com) in March–April 2010. The survey was deployed through the RKS KFC representatives associated with each of the 46 then-funded CTSAs. To encourage accurate and complete responses, the instructions indicated that respondents should collaborate as needed with institutional colleagues to complete the survey. Printable worksheet copies were provided to allow respondents to compile input from multiple sources before submitting their final response online. The RKS KFC representative submitted the final online survey response for each institution. Nonresponders were contacted once to encourage response.

Analysis

Survey responses were analyzed using descriptive statistics for fixed responses. Free-text comments were analyzed for face content and coded with simple descriptive terms, using the respondents’ own words when appropriate and compiling like terms (e.g., registry + database, recruitment core + centralized services + consultation service, successful practices + proven practices, measures of success + metrics). The initial coding was conducted by one author (R.K.); that coding was reviewed by the convened RR taskforce during data analysis conference calls. When a given respondent provided the same information by both fixed response and free-text response for the same question, only the fixed response was included in tallies. Comments containing more than one theme were coded for all their themes.
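
The tallying rules above can be sketched in a few lines of Python. This is a minimal illustration, not the taskforce's actual procedure: the data layout, the function names, and the choice of which like term serves as the canonical code are assumptions made here.

```python
from collections import Counter
from typing import Iterable, Set, Tuple

# Like terms compiled under one code, following the examples in the text.
# Which term is treated as canonical is an assumption of this sketch.
LIKE_TERMS = {
    "database": "registry",
    "centralized services": "recruitment core",
    "consultation service": "recruitment core",
    "proven practices": "successful practices",
    "metrics": "measures of success",
}


def normalize(theme: str) -> str:
    """Map a raw theme onto its compiled code."""
    key = theme.strip().lower()
    return LIKE_TERMS.get(key, key)


def tally_question(responses: Iterable[Tuple[Set[str], Set[str]]]) -> Counter:
    """Tally one question; each item is (fixed choices, free-text themes) for one respondent."""
    counts: Counter = Counter()
    for fixed, free_text in responses:
        fixed_codes = {normalize(t) for t in fixed}
        free_codes = {normalize(t) for t in free_text}
        counts.update(fixed_codes)
        # Information repeated in free text is not counted again,
        # but every remaining theme in a multi-theme comment is.
        counts.update(free_codes - fixed_codes)
    return counts


# Made-up responses for illustration; not survey data.
responses = [
    ({"registry"}, {"database", "metrics"}),            # "database" repeats the fixed answer
    (set(), {"recruitment core", "registry"}),          # comment with two themes
    ({"recruitment core"}, {"consultation service"}),   # free text repeats the fixed answer
]
print(tally_question(responses))
```

Dividing each count by the number of respondents to the question then gives the percentages reported alongside the counts.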

Results

Forty-six individuals representing 44 (96%) of the 46 surveyed CTSAs returned 46 responses; two CTSAs submitted two responses, each describing a different set of practices at separate institutions within their multi-institutional CTSA.* Of the 46 respondents, 26 (57%) were university officials, senior executives, department/core directors, or IRB chairs; 11 (24%) were recruitment or administrative core coordinators or managers; 5 (11%) were senior faculty; and 4 (9%) were research subject advocates. (For a list of respondents’ self-reported positions, see Supplemental Digital Appendix 2.) All respondents were directly engaged with their CTSA.

Accrual success

Across the CTSAs, there were no uniform definitions or practices for collecting or analyzing accrual data and few respondents could report on the outcome of successful accrual (fraction of studies with complete and timely enrollment). Of the 46 respondents, 37% (17) indicated specific time periods in response to the questions about elapsed time from sponsored and nonsponsored protocol approval to first enrolled subject, while most (63%, 29) selected “do not know.” Although half of the respondents (50%, 23) indicated that their IRB tracked enrollment, only 9% (4) could report the accrual outcome, that is, the fraction of protocols at their CTSA for which stated accrual targets were met within 6 months of the target date. Across a series of questions regarding the tracking of accrual progress, all 46 respondents indicated that at least one entity tracked enrollment (e.g., the IRB, the cancer center, the utilization committee, the sponsor), yet, for both sponsored and non-sponsored studies, a consistent majority (74–78%) reported there were no mechanisms to track the number of studies failing to accrue within 6 months of target or the number of studies closed for nonaccrual.

Specific recruitment practices and models for recruitment support

More than half of the 46 respondents (57%, 26) reported their institutions had no “particularly successful or valuable recruitment or retention practices” to share. Ten respondents (22%) collectively described 15 practices they deemed valuable, including metrics for tracking recruitment activities and performance (n = 2 practices), data-based feasibility assessment practices (n = 3), use of the ResearchMatch8,9 participant registry (n = 3), and recruitment cores providing support services to investigators (n = 7).

When asked to indicate the most commonly utilized approaches to recruitment for studies that were sponsored, non-sponsored, or conducted at a dedicated center, respondents’ top three choices, regardless of study type, included the research teams’ own efforts, participant self-referral from advertisements and Web sites, and referral by a primary caregiver (Table 1). Through both fixed-answer and free-text responses, 57% (26) of the 46 respondents reported the existence of investigator-managed or departmental-level databases listing research participants as a resource for recruitment. Twenty-four percent (11) of the respondents reported hosting an institution-wide registry for which there was an IRB-approved mechanism to identify and re-contact patients/participants for future research.

Table 1.

Recruitment Methods Reported as Most Commonly Used at CTSAs, by Type of Clinical Protocol, Results of a 2010 CTSA Consortium-Wide Survey (N = 46 Respondents From 44 CTSAs)<sup>a</sup>

| Participant recruitment method | Sponsored studies<sup>b</sup>, No. (%) of respondents | Non-sponsored studies<sup>c</sup>, No. (%) of respondents | Studies at a dedicated center (e.g., vaccine or cancer center)<sup>d</sup>, No. (%) of respondents |
|---|---|---|---|
| By individual research teams (PI and coordinator/nurses) | 40 (87) | 42 (91) | 36 (78) |
| Self-referral via Web sites and advertisements | 35 (76) | 33 (72) | 29 (63) |
| Referral by a primary caregiver | 29 (63) | 30 (65) | 30 (65) |
| Mixed model: by individual teams, and by central resources | 11 (24) | 7 (15) | 12 (26) |
| Referral through a volunteer registry | 8 (17) | 11 (24) | 7 (15) |
| By a central recruitment office/team at the center | 1 (2) | 1 (2) | 6 (13) |
| By a subcontract to an outside recruiting agency | 1 (2) | 1 (2) | 0 |
| Don’t know | 1 (2) | 1 (2) | 0 |

Abbreviations: CTSA indicates Clinical and Translational Science Award; CTSAs, CTSA sites.

<sup>a</sup> Respondents were asked to indicate the three types of recruitment most commonly utilized for the specific study types at their institution; there are no common standards for aggregating these data. They were encouraged to access local content experts and/or host the available institution-local version of the survey to obtain accurate data.

<sup>b</sup> Defined in the survey instructions as follows: “‘Sponsored’ protocols are studies for which the main decision-making authority lies with industry/pharmaceutical, or other outside collaborators and not with the Principal Investigator.”

<sup>c</sup> Defined in the survey instructions as follows: “‘Non-sponsored’ protocols are studies for which the main decision-making authority is not held by an outside sponsor and is usually held by the Principal Investigator.”

<sup>d</sup> No additional definition was provided for this study type.

Despite common recognition among the respondents that one important component of successful accrual is feasibility assessment, none reported having an institutional policy to require or enforce the conduct of routine specific feasibility assessment to justify accrual targets for either sponsored or non-sponsored studies. However, respondents reported that if any feasibility information was provided, it was considered by the IRB during protocol review (30%, 14 of 46) or before finalizing clinical trial contracts (22%, 10). Neither standards for the conduct of feasibility assessments nor methods for the formal review of feasibility information at the IRB level were described by respondents. Ten (22%) respondents indicated investigators were offered some type of feasibility assessments prior to review at their institutions. A majority of the 46 respondents reported that at their institutions, accrual targets were chosen, without any basis provided, by sponsors for sponsored studies (80%, 37) and by investigators for non-sponsored studies (70%, 32).

Fourteen of the 46 respondents (30%) indicated there was an institutional process in place to help investigators to evaluate feasibility before submitting a protocol to the IRB. Their free-text descriptions of those feasibility assessments included departmental- or center-level review (n = 7 respondents); ad hoc review by recruitment core staff or peers (n = 5); and use of a repository or patient database query to assess participant availability (n = 2).

Institutional resources for recruitment

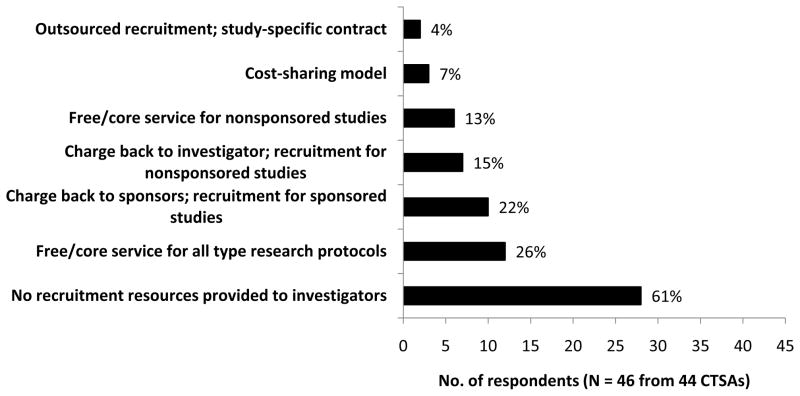

In response to a question about financial models for providing recruitment services, 26% (12) of the 46 respondents reported their CTSAs offer free recruitment consultation services to all investigators and 15% (7) indicated investigators or departments are charged for some or all recruitment services. However, 61% (28) indicated no resources are offered to investigators to support recruitment (Figure 1). Fifteen respondents provided free-text comments describing their institutions’ recruitment services; seven reported assistance offered with recruitment planning through a core or consultation service, advertising, use of registries prior to study initiation, and provision of tracking or metrics. Less commonly offered were help with feasibility assessments or community outreach.

Figure 1.

Institutional recruitment resources to assist investigators in accrual of clinical research studies: Frequency and financial model, results of a Clinical and Translational Science Award (CTSA) consortium-wide survey, 2010. The survey question stated: “If recruitment services are provided to investigators from a center or central service, what is the financial model?” The 46 respondents representing 44 CTSAs selected one or more options from among the choices listed along the vertical axis. The figure shows the frequency of the utilization of different service models and the general handling of the cost of the services, including instances where services were free or no services were provided.

Evaluation of recruitment activities

When asked to give their opinion as to which three aspects of recruitment activities contributed most to the success of recruitment at their CTSAs, respondents selected the quality of the recruitment plan overall (52%, 24 of 46), the conduct of a feasibility assessment (39%, 18), and the nature of the studies being offered (33%, 15) as the most important factors (Table 2). The ranking of the top two did not vary with the respondent’s seniority or role. In their free-text comments, respondents did not provide any objective measures of the effectiveness of their practices.

Table 2.

Factors Contributing to Successful Clinical Study Recruitment, Results of a 2010 CTSA Consortium-Wide Survey (N = 46 Respondents From 44 CTSAs)

| Factors affecting recruitment success | No. (%) of respondents selecting the factor as most important<sup>a</sup> |
|---|---|
| Quality of recruitment plan overall | 24 (52) |
| Feasibility of recruitment assessment performed before starting recruitment | 18 (39) |
| The nature of the studies (e.g., trials offering novel treatments) | 15 (33) |
| Relationship established with coordinator | 13 (28) |
| Relationship established with investigator | 10 (22) |
| Financial compensation for participants | 10 (22) |
| Recruitment of prior volunteers through coordinators | 9 (20) |
| Adequate budget | 9 (20) |
| Referral of participants by their personal physicians | 8 (17) |
| Nature/quality of first interaction (telephone pre-screen, scheduling, etc.) | 5 (11) |
| Recruitment of willing volunteers from a participant registry | 5 (11) |
| Through referral/collaboration with private practitioners | 4 (9) |
| Quality of informed consent discussion with investigator | 2 (4) |
| Quality of informed consent discussion with coordinator | 1 (2) |
| Quality of advertising | 1 (2) |
| Quality of recruitment services from support center | 0 |
| Other (please specify)<sup>b</sup> | 4 (9) |

Abbreviations: CTSA indicates Clinical and Translational Science Award; CTSAs, CTSA sites.

<sup>a</sup> Respondents were asked, “What do you believe are the three most important elements of successful recruitment at your CTSA?” Successful recruitment was operationally defined as timely accrual. There are currently no common standards or definitions for evaluating or aggregating this information. Respondents relied on local infrastructure, reporting, and expertise to compile responses.

<sup>b</sup> The four respondents who selected this response option provided text descriptions of elements of successful recruitment plans: “having dedicated recruitment experts support the research team”; “a research-informed public”; “having access to target populations”; “the participant’s relationship with the research team.”

Institutional policies regarding recruitment and retention

Although 43% (20) of the 46 respondents reported that their IRBs required investigators to indicate a target date for completion of accrual, none reported that their institution required justification for the selection of target dates or a feasibility assessment. As noted above, half of respondents (50%, 23) reported that the IRB tracked whether protocols progressed toward enrollment. Further, in the event of underaccrual, 63% (29) said that recruitment assistance in the form of advice, consultation, or increased online visibility was offered to investigators to improve accrual. Most respondents indicated that their institutions had no formal policy for closing underaccruing sponsored studies (52%, 24) or non-sponsored studies (72%, 33), although 20% (9) said sponsors themselves closed their poorly accruing studies and some said the utilization review or contracts office closed sponsored (7%, 3) and non-sponsored (9%, 4) studies. Eighty-seven percent (40) responded there were no penalties to investigators for hosting poorly accruing studies.

Regarding current practices in participant retention, 96% (44) of the 46 respondents reported they had no successful retention practices or outcome data to share. Forty-six percent (21) reported that retention activities were conducted only by the research team; 17% (8) described participant newsletters, appreciation events, tokens, reminder calls, and cards as research team practices to support retention. Thirty-nine percent (18) reported there were no institutional initiatives to enhance retention. Respondents felt the top contributors to successful retention at their institutions were convenience factors such as the ease of parking and waiting time (61%, 28), the quality of the relationship with the coordinator (57%, 26), the nature of the study (41%, 19), and the use of reminder calls and e-mails (41%, 19) (Table 3).

Table 3.

Factors Contributing to Successful Clinical Study Retention, Results of a 2010 CTSA Consortium-Wide Survey (N = 46 Respondents From 44 CTSAs)

| Factors affecting successful retention | No. (%) of respondents selecting the factor as most important<sup>a</sup> |
|---|---|
| Comfort/convenience factors: distance of commute to research site, availability of parking, ease of finding research clinic, waiting time | 28 (61) |
| Quality of the relationship established with the coordinator | 26 (57) |
| The nature of the study itself (e.g., trials offering novel treatments) | 19 (41) |
| Reminder calls/e-mails before return visits | 19 (41) |
| Quality of the relationship established with investigator | 10 (22) |
| Financial compensation for participants | 8 (17) |
| Participant’s prior experience in research studies | 7 (15) |
| Involvement and buy-in from the referring physician | 7 (15) |
| Participant appreciation efforts (gifts, gatherings, acknowledgements) | 5 (11) |
| No-placebo protocols | 2 (4) |
| Retention prediction assessment | 2 (4) |
| Referral of participants from personal physicians | 1 (2) |
| Other (please specify)<sup>b</sup> | 4 (9) |

Abbreviations: CTSA indicates Clinical and Translational Science Award; CTSAs, CTSA sites.

<sup>a</sup> Respondents were asked, “What are the three most important elements of successful retention [of study participants] at your CTSA?” There are no definitions or consensus practices for assessing retention practices. Respondents relied on local infrastructure, reporting, and expertise to compile responses.

<sup>b</sup> The four respondents who selected this response option provided text descriptions of successful retention practices: “the quality of the relationship with the research team and reminder calls”; “the design of the protocol itself”; “compensation”; “the study purpose.”

Perceived gaps in practice or knowledge

Respondents were asked to indicate up to three practices that they felt would, if made available at their institutions, enhance recruitment or enhance retention. To improve recruitment, respondents chose feasibility assessments (49%, 22 of 45), access to participant registries (44%, 20), and improvements to overall recruitment plans (40%, 18) (Table 4). These were the top three selections regardless of the respondent’s role or seniority. Thirty-two respondents also provided free-text comments suggesting ways to improve retention of participants; these included sharing research results and study progress with participants (31%, 10 of 32), assuring adequate or free parking (28%, 9), attending to the quality of the relationships with research team members (22%, 7), appointment reminders (13%, 4), and the development of researcher-directed retention training and protocol-specific retention plans (6%, 2).

Table 4.

Recruitment Planning Practices Wish List, Results of a 2010 CTSA Consortium-Wide Survey (N = 45 Respondents From 44 CTSAs)

| Recruitment service or factor | No. (%) of respondents selecting service or factor<sup>a</sup> |
|---|---|
| Feasibility of recruitment assessment performed before starting recruitment | 22 (49) |
| Recruitment of willing volunteers from a participant registry | 20 (44) |
| Quality of recruitment plan overall | 18 (40) |
| Adequate budget | 12 (27) |
| Quality of recruitment services from support center | 10 (22) |
| Referral of participants from personal physicians | 6 (13) |
| Nature/quality of first interaction (telephone pre-screen, scheduling, etc.) | 6 (13) |
| Quality of advertising | 5 (11) |
| Recruitment of prior volunteers through coordinators | 5 (11) |
| Relationship established with investigator | 4 (9) |
| The nature of the studies (e.g., trials offering novel treatments) | 2 (4) |
| Relationship established with coordinator | 2 (4) |
| Financial compensation for participants | 2 (4) |
| Quality of informed consent discussion with investigator | 1 (2) |
| Quality of informed consent discussion with coordinator | 1 (2) |
| Other (please specify)<sup>b</sup> | 10 (22) |

Abbreviations: CTSA indicates Clinical and Translational Science Award; CTSAs, CTSA sites.

<sup>a</sup> Respondents were asked to render an informed opinion from the list of services and factors above to answer the following question: “Select up to three additional activities that you think would enhance recruitment at your center if they could be provided.”

<sup>b</sup> Among the respondents’ comments, additional themes raised included training and retention of research coordinators, incorporating a participant-centered perspective in the design of the research study, better engagement of clinicians/practitioners/providers to refer patients to research, and helping research teams understand the impact of their interactions on participants.

When asked what recruitment models, practices, or metrics they most wanted to learn more about, 20 respondents provided free-text comments asking for metrics of successful practices (55%, 11), details of how to conduct specific recruitment practices (50%, 10), models for creating a recruitment core (40%, 8), means to tailor recruitment to specific studies or populations (35%, 7), information on setting up registries or databases (15%, 3), and means to achieve outreach through social media networks (15%, 3). Regarding the retention models, practices, or metrics they most wanted to learn about, 17 respondents commented, asking for retention metrics (35%, 6), successful organizational models and tools for retention (35%, 6), methods of predicting or measuring retention (18%, 3), and information on tailoring retention methods to study characteristics (12%, 2).

Discussion

We report here the results of a 2010 national survey of CTSAs about practices relating to clinical research recruitment and accrual. Major aspects of recruitment practices were assessed: accrual outcomes; utilization and perceived success of common recruitment and retention practices; institutional resources and infrastructure to support recruitment; evaluation methods for recruitment practices; relevant institutional policy and expectations regarding study feasibility and accrual; and perceived gaps in knowledge or practice.

All 46 respondents indicated that the IRBs at the 44 CTSAs they represented collected ongoing enrollment data and a majority indicated an enrollment target date was required. However, few reported their CTSAs required any justification for enrollment targets, and only 13% (6) reported their CTSAs had the ability to track accrual as an outcome of successful recruitment, that is, whether a study achieved timely and complete accrual. Few respondents could report how many studies had no accrual or were closed for poor accrual. For many of the questions, many respondents indicated their institutions did not track the data queried. More than 50% of the respondents said there were no policies in place to manage underaccruing studies and 87% said there were no administrative actions (penalties) consistently taken to address or limit underenrolling studies. Although 50% of the respondents reported that underenrolling studies were referred for some type of recruitment assistance or advice, few provided any measures of the effectiveness of any recruitment assistance or practices conducted at their institution.

These data suggest that most CTSAs have not created the basic framework for the systematic assessment of accrual. Federal regulations require that investigators report and IRBs monitor ongoing enrollment data at continuing review, yet one can infer from our results that CTSAs have not accessed or leveraged these data. Enrollment data appear to exist in silos and without standard definitions or milestones. Challenges to data analysis may also be present, such as variation in the operational definition of when enrollment starts or inflation of enrollment targets to accommodate screen failures and attrition. These barriers to the use of accrual data could be lowered by the development of consensus definitions and recommended best practices for projecting and tracking accrual.
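To illustrate what a shared operational definition might look like in practice, the following Python sketch classifies a study from a single enrollment snapshot. The record fields, the status labels, and the rule that a study is underaccrued once the snapshot date passes the target date are illustrative assumptions only; they are not definitions proposed by the taskforce or reported by survey respondents.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class StudyAccrual:
    """Hypothetical per-study snapshot; all field names are assumptions."""
    target_enrollment: int  # participants needed to complete the study
    enrolled: int           # participants enrolled as of the snapshot
    target_date: date       # date by which the target should be met
    as_of: date             # date the snapshot was taken


def accrual_fraction(study: StudyAccrual) -> float:
    """Fraction of the enrollment target reached (0.0 if no target is set)."""
    if study.target_enrollment <= 0:
        return 0.0
    return study.enrolled / study.target_enrollment


def accrual_status(study: StudyAccrual) -> str:
    """Classify a study under the assumed definitions described above."""
    if study.target_enrollment > 0 and study.enrolled >= study.target_enrollment:
        return "target met"
    if study.as_of > study.target_date:
        # Past the target date without reaching the target.
        if study.enrolled == 0:
            return "zero accrual, past target date"
        return "underaccrued, past target date"
    return "accruing"


example = StudyAccrual(
    target_enrollment=100,
    enrolled=25,
    target_date=date(2014, 1, 1),
    as_of=date(2014, 3, 1),
)
print(accrual_fraction(example), accrual_status(example))
```

A snapshot like this cannot show whether a met target was met on time; that would require recording the date on which the target was reached, which is one reason consensus milestones matter.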

The ability to formulate and execute a successful plan for study accrual depends on having multiple integrated resources in place. Recently, following their report of the institutional impact of underenrollment,3 Kitterman et al10 reported their two-year experience with an institutional program designed to heighten awareness of investigators’ self-identified barriers to accrual. They reported wide variance across departments in improvement or worsening of accrual rates without significant net change in the overall rate of low enrolling studies.

Key elements of provision of integrated support include leadership and policies to set and reinforce expectations of successful accrual, resources and expertise to guide and support the planning and conduct of recruitment efforts, and a commitment to evaluate the effectiveness of recruitment efforts. Timely accrual is the most appropriate primary outcome measure. To date, many CTSAs have enhanced select infrastructure and support for investigators in the form of laboratory cores, standardization and centralization of training, and provision of protocol development and research coordination services.11,12 Consortium-wide efforts have focused on means to streamline study approval.13 Given the critical role of successful recruitment in all clinical research protocols, the RR taskforce makes the following recommendations to the CTSA consortium to achieve the goal of improving participant accrual:

Recommendation 1: Institutional leadership must make a clear statement that timely and effective recruitment is an expectation of the clinical research enterprise. Policies should be developed to support that expectation

There must be a major change in culture at academic institutions regarding the approach to clinical research in order to effect the needed change in productivity. Policies should address approaching study selection rationally, setting accrual targets based on data, and requiring accountability for accrual performance. Many CTSA leaders may be well positioned to facilitate addressing these challenges at the institutional level.

Accrual performance outcomes are the key benchmark for the delivery of recruitment services, yet at many institutions, accrual targets are not consistently based on rational or systematic feasibility assessments. For both sponsored and investigator-initiated studies, a robust assessment of feasibility takes into account multiple factors affecting accrual, such as the availability of and appeal the study holds for the target population, and operational factors, such as competing priorities and protocols, available personnel and resources, past experience in similar circumstances, and the opportunity costs of conducting the study. Overestimation of the anticipated accrual rate results in wasted allocation of resources and effort, whereas underestimation may leave a study team underresourced to meet the demands of conducting the study.

However, to make accrual outcome assessment a meaningful practice consortium-wide, institutions must share common definitions and standards for tracking and analyzing accrual data. Recently the CTSA Evaluation Committee engaged CTSA leadership regarding outcome metrics, drafted working definitions, and initiated a metrics pilot project to assess the feasibility of capturing accrual data across the consortium.14 It will be important to couple this top-down approach with engagement of the recruitment professionals already on the front lines as they have a broad fund of knowledge relevant to this agenda.

The value of different approaches to feasibility analysis should also be based on assessments of the effectiveness of those approaches. Many survey respondents selected feasibility assessments as among the practices of greatest value to current and future recruitment support, and they asked for best practices and metrics. Despite the widespread embrace of feasibility assessments as key to successful recruitment, there are no consensus best practices, and there is a lack of institutional requirements for rational justification of enrollment targets. Interestingly, in a study of 14 cancer centers among which the study zero-accrual rate exceeded 50%, the implementation of a requirement for a simple preliminary feasibility assessment--specifically, to demonstrate the availability of a minimal set of eligible patients--virtually eliminated the incidence of non-enrolling studies by merely focusing attention and intention on the issue.15 Some tools are already at hand within the consortium to enable broader application and testing of feasibility approaches. Several informatics-rich institutional models for assessing aspects of feasibility have been published recently.16–18 The nationwide ResearchMatch database is but one example of a volunteer registry that can be formally queried to assess participant availability.8 A fully comprehensive feasibility assessment would utilize both information gleaned from informatics queries of participant registries or patient databases and recruitment expertise to assist investigators to minimize protocol burdens, maximize benefits and incentives, reduce protocol complexity and preserve scientific goals and integrity.

Recommendation 2: Institutions should provide investigators access to recruitment expertise through consultation and/or core services to support effective prospective recruitment planning and conduct

In a 2010 report, the Institute of Medicine identified structured, consistent support for investigators to carry out translational research, including the provision of recruitment services, as a critical component in transforming clinical research.1 In its 2013 report on the CTSAs, the Institute of Medicine again noted that low or slow accrual presents a significant barrier to the conduct of translational research.2 Successful accrual often requires more effort specifically focused on recruitment than can be spared by a busy research coordinator with a broad scope of duties.12 Further, in the modern era, recruitment implementation requires expertise in marketing, social networking, registry management, advertising graphics and placement, branding, internet presence, community engagement and outreach, call management, customer service, and other special services. Planning for successful recruitment requires systematic formal assessment early, during the protocol development process, of issues such as the availability of the target patient population15,18; the presence of competing protocols across the institution and region; the operational feasibility of the study design in terms of staffing, space, intensity, burdens, and incentives; the budget for recruitment marketing; call management; and the availability of the research team to conduct study visits.4,6 The coordination of these data streams and activities is often beyond the scope of the investigator; thus, the provision of recruitment expertise by the institution is key.

Finally, it is critical to recognize the role of prospective planning in ensuring that recruitment support costs are anticipated and well-budgeted through effective institutional mechanisms to allow utilization of available recruitment services, with economies of scale where possible. At many institutions, the CTSA may be the logical home for centralized professional recruitment consultation and services. The review criteria for the most recent CTSA funding announcement19 ask whether proposed resources and services address “critical barriers for translational researchers and research teams locally.” Recent Institute of Medicine recommendations also call for accountability to common metrics for research performance outcomes.2 Concrete steps to systematically eliminate barriers to effective recruitment and accrual by providing recruitment expertise would be responsive to these recommendations.

Recommendation 3: Robust ongoing evaluation of recruitment and accrual activities should be an essential part of institutional expectations. Evaluation efforts should be supported by appropriate integrated data infrastructure and analysis resources to assess and improve performance outcomes

In our survey, only one-third of respondents indicated their institution offered some form of recruitment services for investigators (e.g., consultation, planning, feasibility assessments, registry management, advertising, call management, tracking and analysis of outcome metrics). Since the fielding of the survey, the prevalence of recruitment cores and participant and patient registries has increased steadily although there is no centralized listing to track their growth. Two years after the survey, 7 CTSAs presented participant registries at a national meeting,20 and in late March 2014, 87 institutions affiliated with 52 CTSAs were listed as participating in the national ResearchMatch registry.21 In 2014, one of the authors (R.K.) reviewed the public websites of the 62 funded CTSAs, where the descriptions of the recruitment services offered to investigators to enhance participant recruitment ranged from isolated access to ResearchMatch to local and disease-specific patient registries, informatics-assisted feasibility searches of patient databases, recruitment tools/templates, and expert consultation or services.

Despite this encouraging recent proliferation of recruitment cores and services, it is unknown whether institutions have used their new platforms to study and optimize their practices as there are few published data demonstrating the value and effectiveness of these services. Notably, ResearchMatch provides partnering institutions with private dashboards illustrating the effectiveness of their own ResearchMatch recruitment activities along the enrollment continuum8; the efficiency of ResearchMatch overall has been reported.9 Contemporaneously, there have been creative approaches to incorporate research subject advocacy and community outreach into recruitment strategies, and dedicated recruitment portals and registries have been promoted to educate the public about research opportunities.9,22 In addition, cross-consortium efforts have been made to develop validated instruments to understand participant motivations.23,24 However, demonstrations of the utility and effectiveness of these models at enhancing recruitment outcomes are lacking. RR taskforce members (including RK, SM-B, CR, HK, CDH, AD, RH) report that their recruitment cores typically capture detailed information about their callers and activities, but lack either necessary infrastructure or collaboration from research teams to routinely reconcile referral data with enrollment outcomes. We are unaware of any publications that evaluate the effectiveness of recruitment core models, yet effectiveness data are crucial when attempting to justify funding requests for support of recruitment activities in grants or within institutions, or when allocating resources internally. The support of leadership across multiple domains is required for effective service models, practices, and activities to be evaluated and disseminated.

Similarly, evidence is needed to support survey respondents’ message that participant registries are a valuable resource for accrual. As noted above, there has been a recent surge in the development of participant registries at CTSAs, which have reported a wide range of experiences in realizing the full potential of such registries.20 Some registries are configured to allow potential participants to search for protocols of interest and request additional information,25 whereas others are configured to match eligible participants to protocols in a blinded fashion based on participant profiles.8,9 Centralized research participant registries provide a potential platform for optimizing approaches to recruitment, understanding the relative merits of different approaches for different target populations, and for linking recruitment practices to accrual outcomes. The most broadly available registry is the national ResearchMatch registry with enrollment of 53,975 as of March 23, 2014.8 Data shared with us by one co-author (R.H.) indicate that, although some enrollments are underreported, of the 12,761 volunteers contacted by researchers at that author’s university as a result of being “matched” within ResearchMatch, 4,289 (34%) were confirmed to have enrolled in studies at the CTSA.26 To date, however, few other registries have published data on their effectiveness.
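As a worked check of the figures above, the following minimal Python sketch computes the confirmed enrollment rate from the two counts reported for ResearchMatch at one university. The function name and its validation rules are hypothetical and introduced only for illustration. Because the text notes that some enrollments are underreported, the result is best read as a lower bound.

```python
def confirmed_enrollment_rate(contacted: int, confirmed_enrolled: int) -> float:
    """Share of contacted volunteers with a confirmed study enrollment."""
    if contacted <= 0:
        raise ValueError("contacted must be positive")
    if not 0 <= confirmed_enrolled <= contacted:
        raise ValueError("confirmed_enrolled must be between 0 and contacted")
    return confirmed_enrolled / contacted


# Counts reported in the text for one university's ResearchMatch activity.
rate = confirmed_enrollment_rate(contacted=12_761, confirmed_enrolled=4_289)
print(f"{rate:.1%}")  # 33.6%, consistent with the 34% reported in the text
```

The same calculation, applied per recruitment channel, is the kind of referral-to-enrollment reconciliation that recruitment cores would need in order to compare the yield of different practices.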

The lack of evaluation of various recruitment activities and of overall accrual success is almost universal even though IRB procedures uniformly require a statement of the enrollment target; this and the routine collection of accrual data as part of continuing review are required by federal guidance.27 Similarly, standard financial practices in contracting offices require capture of data on the returns and losses accruing from clinical trials. The paucity of outcome data offered by survey respondents highlights how opportunities to leverage data across functions and departments are missed in the absence of intentional efforts to bridge departmental silos. The opportunity exists at every CTSA to assess the effectiveness of recruitment practices by analyzing existing data sources, across departments and functions, to routinely track study accrual and to respond as needed to improve accrual. Such integration requires recognition at the leadership level of the need to provide resources for common data access infrastructure and processes as well as policies to require such evaluations. Coordination and partnerships between CTSA core directors, IRB officials, and contract and clinical trial staff are critical to the development and implementation of effective recruitment practices, including those related to feasibility assessments. The benefit of such a systematic approach will be the ability to provide recruitment resources in a manner demonstrated to be cost-effective.

Limitations

There were several limitations to the survey. Because most recruitment activities are delegated to research teams, our senior-level respondents, even if well informed, may have been limited in their ability to represent the full spectrum of recruitment and retention practices and issues across their CTSAs. In anticipation of this possibility, at the time of the survey fielding, we also offered hosting and analysis of a second, investigator-level version of the survey by which CTSAs could survey their own investigators to ascertain the breadth of practices specific to their institutions. Only two CTSAs used the local survey; their data were returned to them and results have not been reported. A second limitation is that the survey did not include any ascertainment of outreach directed to participants or communities. Emerging data support the important role of participant-centered values in study recruitment and retention.23,24,28 However, the focus of the current survey was to obtain broad observations of recruitment practices, support, and evaluation efforts at CTSAs. Comprehensive attention to specific practices and tests of their effectiveness in multiple contexts are logical next steps in what must be a multi-step process for improving recruitment at CTSAs.

Conclusions

The 46 respondents from 44 CTSAs who completed the RR taskforce survey reported that approximately one-third of CTSAs offer some recruitment-related support to investigators such as consultations, management of participant registries, conduct of feasibility assessments, and provision of expertise, tools, and services to execute, track, and document effective recruitment. Few institutions to date have been able to evaluate recruitment success or the effectiveness and value of recruitment services due to a lack of policy, definitions, and standard evaluation practices. The financial accountability to funding agencies and ethical accountability to participants demand that recruitment and accrual be conducted robustly, systematically, and successfully, leveraging the talents and infrastructure of the CTSAs. In alignment with the CTSA funding mechanism, CTSA Integrated Home Leadership is expected to “evaluate the effectiveness of their plan for ensuring high quality and efficient human subjects research, including the appropriateness of study design, recruitment, feasibility and timely closure of futile studies.”19 Based on the results of this survey, the RR taskforce recommends that CTSA leaders establish formal expectations for timely recruitment, support infrastructure for delivery of recruitment services and for data capture, and foster a culture of data-driven decision-making. Piloting expert recruitment consultation services, collecting performance data systematically, analyzing data to establish benchmarks, improving and evaluating the cost-effectiveness of practices, and formalizing accountability will hasten the identification of the most valuable and effective recruitment models and practices for dissemination.

Acknowledgments

The authors wish to acknowledge members of the Recruitment and Retention Taskforce who contributed to the design of the survey: Lara Brontheim, Sara Kukuljian, Liz Martinez, Halia Melnyk, Andrea Nassen, Jennelle Quenneville, Scott Serician, Stacy Sirrocco, and Stephanie Solomon. The authors also wish to thank Dr. Jody Sachs, formerly of the National Center for Research Resources, for administrative support and encouragement; Dr. Daniel Rosenblum, of the National Center for Advancing Translational Sciences, for his careful reading of the manuscript; and Ms. Tyler-Lauren Rainer and Ms. Anika Khan for technical assistance.

Funding/Support: This project has been funded in whole or in part with federal funds from the National Center for Research Resources and the National Center for Advancing Translational Sciences (NCATS), National Institutes of Health (NIH), through the Clinical and Translational Science Award (CTSA) Program, including grants numbered UL1TR000043, UL1TR000128, UL1TR000042, UL1TR000083, UL1TR000448, UL1TR000090, TR000424, UL1TR000064, UL1TR000436, and UL1TR000071. The manuscript was approved by the CTSA Consortium Publications Committee.

Biographies

Dr. Kost is clinical research officer and director of the Regulatory Knowledge and Support Core, The Rockefeller University Center for Clinical and Translational Science, New York, New York. She was co-chair of the CTSA Consortium’s Regulatory Knowledge and Support Key Function Committee and Recruitment and Retention Taskforce at the time the work was conducted.

Ms. Mervin-Blake is assistant director for recruitment and special projects, Duke University Clinical Translational Science Institute, Durham, North Carolina. She was director of operational programs and research recruitment, University of North Carolina Translational and Clinical Sciences Institute, Chapel Hill, North Carolina, at the time the research was conducted.

Ms. Hallarn is program director, Clinical Trials Recruitment Center, Clinical and Translational Science, The Ohio State University, Columbus, Ohio.

Mr. Rathmann was director, Recruitment Enhancement Core, Institute of Clinical and Translational Science Regulatory Support Center, Center for Clinical Studies, Washington University School of Medicine in St. Louis, St. Louis, Missouri, at the time the research was conducted.

Mr. Kolb is research participant advocate, Clinical and Translational Science Institute, University of Florida, Gainesville, Florida.

Dr. Dennison Himmelfarb is associate professor, Department of Health Systems and Outcomes, Johns Hopkins University School of Nursing, and Division of Health Sciences Informatics, Johns Hopkins University School of Medicine, Baltimore, Maryland.

Ms. D’Agostino is assistant vice president, Sponsored Programs and Pre-Award Management, and assistant director, Clinical and Translational Science Award Regulatory Knowledge and Support Resource, The University of Texas Medical Branch at Galveston, Galveston, Texas.

Mr. Rubinstein is executive director for research services, University of Rochester Clinical Translational Science Institute, Rochester, New York.

Dr. Dozier is associate professor, Department of Public Health Sciences, University of Rochester, Rochester, New York.

Dr. Schuff is professor of medicine and is co-director, Investigator Support and Integration Services, Oregon Clinical and Translational Research Institute, Oregon Health & Science University, Portland, Oregon. She was co-chair of the CTSA Consortium’s Regulatory Knowledge and Support Key Function Committee at the time the research was conducted.

Footnotes

The terms “CTSAs” and “institutions” are used to refer both to the CTSA institutions (i.e., individual entities within multi-entity CTSAs) and to the CTSAs (i.e., the grant awardees, which can include multiple entities or institutions) represented in the survey responses.

Other disclosures: None reported.

Ethical approval: The research project and survey were assessed by the Rockefeller University Institutional Review Board and found to be exempt from review.

Previous presentations: Preliminary and partial data from this work were presented to a limited CTSA audience at the 4th Annual Clinical Research Management Conference; Bethesda, Maryland; June 21, 2011.

References

- 1.English RA, Lebovitz Y, Griffin RB. Transforming Clinical Research in the United States: Challenges and Opportunities: Workshop Summary. Washington, DC: The National Academies Press; 2010. [Accessed March 19, 2014]. Forum on Drug Discovery, Development, and Translation. http://www.nap.edu/openbook.php?record_id=12900.

- 2.Leshner AI, Terry SF, Schultz AM, Liverman CT, editors. The CTSA Program at NIH: Opportunities for Advancing Clinical and Translational Research. Washington, DC: The National Academies Press; 2013. [Accessed 2013]. http://www.iom.edu/reports/2013/the-ctsa-program-at-nih-opportunities-for-advancing-clinical-and-translational-research.aspx.

- 3.Kitterman DR, Cheng SK, Dilts DM, Orwoll ES. The prevalence and economic impact of low-enrolling clinical studies at an academic medical center. Acad Med. 2011;86(11):1360–1366. doi: 10.1097/ACM.0b013e3182306440.

- 4.Anderson DL. A Guide to Patient Recruitment and Retention. Boston, Mass: Thomson CenterWatch; 2004.

- 5.Getz KA, Wenger J, Campo RA, Seguine ES, Kaitin KI. Assessing the impact of protocol design changes on clinical trial performance. Am J Ther. 2008;15(5):450–457. doi: 10.1097/MJT.0b013e31816b9027.

- 6.Kost RG, Rainer T-L, Melendez C, Corregano L, Coller BS. A Data-Rich Recruitment Core to Support Clinical Translational Research. Unpublished manuscript. 2014.

- 7.Sufian M Program Officer, National Center for Research Resources, NIH Coordinator for the CTSA Evaluation Key Function Committee. Evaluation KFC had neither recommended nor endorsed any CTSA metrics for assessing recruitment or accrual. Personal communication with RG Kost in response to query to the committee leadership. Feb, 2012.

- 8.ResearchMatch. [Accessed March 23, 2014]; www.researchmatch.org.

- 9.Harris PA, Scott KW, Lebo L, Hassan N, Lightner C, Pulley J. ResearchMatch: A national registry to recruit volunteers for clinical research. Acad Med. 2012;87(1):66–73. doi: 10.1097/ACM.0b013e31823ab7d2.

- 10.Kitterman DR, Dilts DM, Zell A, Ramsey KM, Samuels ME, Orwoll ES. Solving Study Low Enrollment: An Update and Identification of Strategic Interventions. Poster presented at: 6th Annual Clinical Research Management Conference; Washington, DC. June 2–4, 2013. Oregon Health Sciences University.

- 11.Rosenblum D, Alving B. The role of the clinical and translational science awards program in improving the quality and efficiency of clinical research. Chest. 2011;140(3):764–767. doi: 10.1378/chest.11-0710.

- 12.Speicher LA, Fromell G, Avery S, et al. The Critical Need for Academic Health Centers to Assess the Training, Support, and Career Development Requirements of Clinical Research Coordinators: Recommendations from the Clinical and Translational Science Award Research Coordinator Taskforce. Clin Transl Sci. 2012;5(6):470–475. doi: 10.1111/j.1752-8062.2012.00423.x.

- 13.Drazner MK, Cobb N. Efficiency of the IRB Review Process at CTSA Sites. Paper presented at the CTSA 4th Annual Clinical Research Management Workshop; New Haven, Connecticut. June 4, 2012.

- 14.Trochim WM. CTSA Common Metrics Pilot. Webinar: Update from the Evaluation Key Function Committee (KFC) to the Clinical Research Management KFC. June 2013.

- 15.Durivage H, Bridges K. Clinical trial metrics: Protocol performance and resource utilization from 14 cancer centers. J Clin Oncol. 2009;27(15S):6557.

- 16.Embi PJ, Jain A, Clark J, Harris CM. Development of an Electronic Health Record-based Clinical Trial Alert System to Enhance Recruitment at the Point of Care. AMIA Annu Symp Proc. 2005;2005:231–235. www.ncbi.nlm.nih.gov/pmc/articles/PMC1560758/. Accessed April 1, 2014.

- 17.Embi PJ, Jain A, Harris CM. Physicians’ perceptions of an electronic health record-based clinical trial alert approach to subject recruitment: a survey. BMC Med Inform Decis Mak. 2008;8:13. doi: 10.1186/1472-6947-8-13.

- 18.Ferranti JM, Gilbert W, McCall J, Shang H, Barros T, Horvath MM. The design and implementation of an open-source, data-driven cohort recruitment system: the Duke Integrated Subject Cohort and Enrollment Research Network (DISCERN). J Am Med Inform Assoc. 2012;19(e1):e68–e75. doi: 10.1136/amiajnl-2011-000115.

- 19.National Center for Advancing Translational Sciences. [Accessed March 26, 2014];Funding Opportunity Announcement, Institutional Clinical and Translational Science Award (U54) 2012 Jul; RFA-TR-12-006. http://grants.nih.gov/grants/guide/rfa-files/RFA-TR-12-006.html.

- 20.Recruitment Breakout Session Presentations. 5th Annual Clinical Research Management Workshop; June 2012; [Accessed March 19, 2014]. https://www.ctsacentral.org/sites/default/files/files/CRM_Recruitment_breakout.pdf.

- 21.Gregor C. Research services consultant, Vanderbilt Institute for Clinical and Translational Research. Personal communication with R Kost. Mar 27, 2014.

- 22.Winkler S. Making Sausages from Silos. Paper presented at: CTSA Regulatory Knowledge Key Function Committee Meeting; Bethesda, MD. January 24, 2011.

- 23.Kost R, Lee L, Yessis J, Coller B, Henderson D. Assessing Research Participants’ Perceptions of their Clinical Research Experiences. Clin Transl Sci. 2011;4(6):403–413. doi: 10.1111/j.1752-8062.2011.00349.x.

- 24.Yessis JL, Kost RG, Lee LM, Coller BS, Henderson DK. Development of a Research Participants’ Perception Survey to Improve Clinical Research. Clin Transl Sci. 2012;5(6):452–460. doi: 10.1111/j.1752-8062.2012.00443.x.

- 25.Washington University School of Medicine Research Participant Registry. [Accessed March 19, 2014]; https://vfh.wustl.edu/

- 26.Hallarn R. Program director, Recruitment and Retention, Ohio State University Medical Center Center for Clinical Translational Science. Personal communication with RG Kost. Mar 24, 2014.

- 27.U.S. Department of Health and Human Services. [Accessed March 25, 2014];Protection of human subjects. 45 CFR §46. Revised January 15, 2009. Effective July 14, 2009. http://www.hhs.gov/ohrp/humansubjects/guidance/45cfr46.html.

- 28.Kost RG, Lee LM, Yessis J, Wesley RA, Henderson DK, Coller BS. Assessing Participant-Centered Outcomes to Improve Clinical Research. N Engl J Med. 2013;369(23):2179–2181. doi: 10.1056/NEJMp1311461.
