Abstract

INTRODUCTION

Adherence to Advanced Cardiac Life Support (ACLS) guidelines during in-hospital cardiac arrest (IHCA) is associated with improved outcomes, but current evidence shows that sub-optimal care is common. Successful execution of such protocols during IHCA requires rapid patient assessment and the performance of a number of ordered, time-sensitive interventions. Accordingly, we sought to determine whether the use of an electronic decision support tool (DST) improves performance during high-fidelity simulations of IHCA.

METHODS

After IRB approval was granted and written informed consent was obtained, 47 senior medical students were enrolled. All participants were ACLS certified and within one month of graduation. Each participant was issued an iPod Touch device with a DST installed that contained all ACLS management algorithms. Participants managed two scenarios of IHCA, using the DST in one scenario and prohibited from using it in the other. All participants managed the same two scenarios. Simulation sessions were video recorded and graded by trained raters using previously validated checklists.

RESULTS

Performance of correct management steps was significantly greater with the DST than without (84.7% v. 73.8%, p < 0.001), and participants committed significantly fewer additional errors when using the DST (2.5 v. 3.8 errors, p < 0.012).

CONCLUSION

Use of an electronic DST provided a significant improvement in the management of simulated IHCA by senior medical students as measured by adherence to published guidelines.

INTRODUCTION

The practice of hospital-based and perioperative medicine requires the knowledge and application of many diverse acute care skills, including the management of in-hospital cardiac arrest (IHCA). The presence of Advanced Cardiac Life Support (ACLS) trained providers and adherence to published guidelines for the management of cardiac arrest are associated with improved outcomes.1–6 However, management skills and appropriate application of ACLS guidelines have been shown to quickly fade after training.7–9 Furthermore, current evidence shows that sub-optimal resuscitation is common in both medical and surgical patients.3,4,10–13

During rapidly evolving or deteriorating patient conditions, there is often insufficient time for physicians to re-familiarize themselves with current guidelines. Although both paper and electronic aids may facilitate guideline adherence during the management of acute patient instabilities or cardiac arrest,14–16 there is also evidence that cognitive aids can negatively affect the way in which providers deliver care during cardiac arrest.17,18 Therefore, we sought to determine whether the use of an electronic decision support tool (DST) that dynamically guides a provider through American Heart Association (AHA) ACLS protocols would improve performance during high-fidelity simulations that require the management of acute dysrhythmias and IHCA.

METHODS

Study Design

After IRB approval was granted, written informed consent was obtained from 47 ACLS-certified senior medical students one month prior to graduation (26 females, 20 males). Each participant was issued an iPod Touch™ device (Apple Inc., Cupertino, CA) on which the DST was installed. Participants were given a brief orientation (~30 min) to the DST user interface, the simulation environment, the simulator mannequin (SimMan 2G®, Laerdal, Inc., Stavanger, Norway), and all equipment to be used during the study (defibrillator, monitors, etc.).

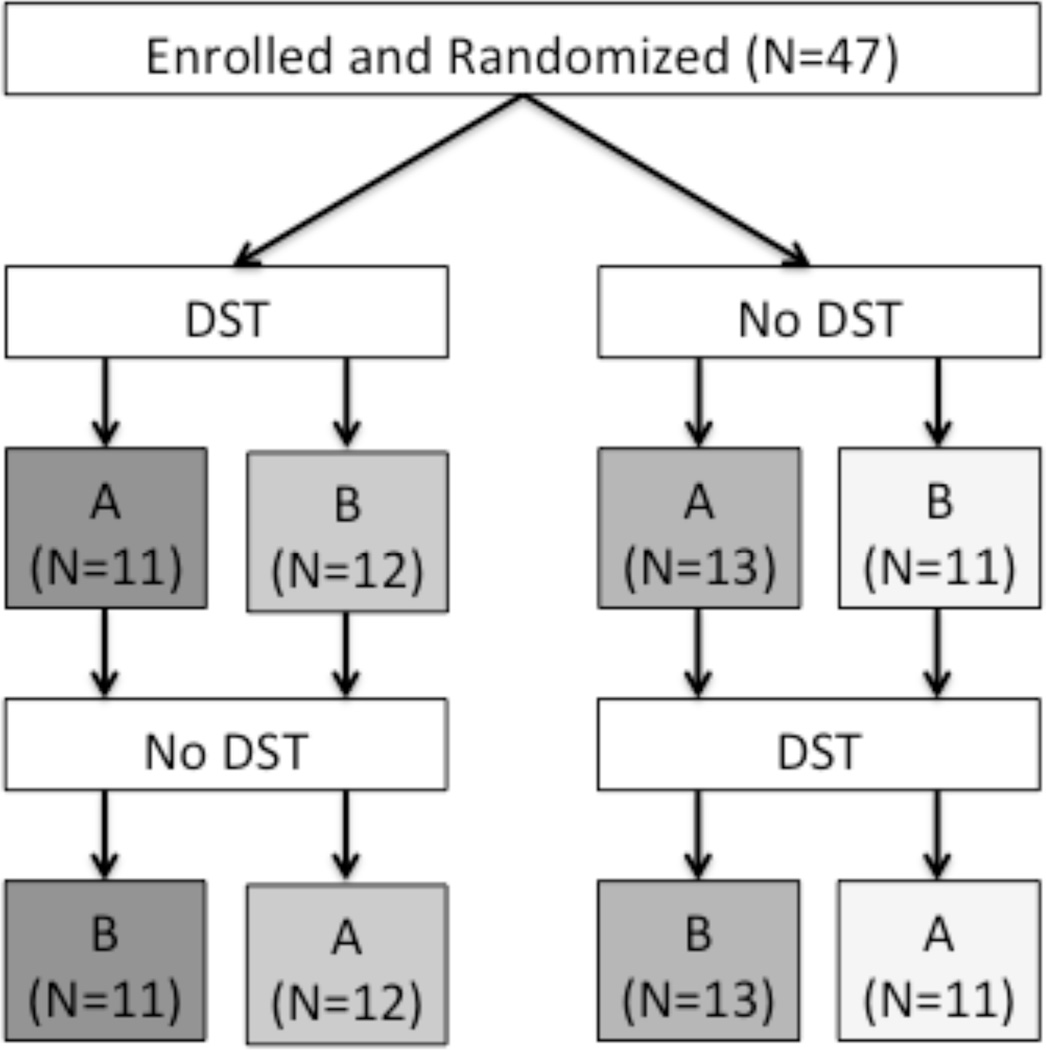

Each participant managed two high-fidelity simulation scenarios involving IHCA in a crossover design (see Figure 1). To minimize bias from any difference in scenario difficulty or in the order of DST use, participants were assigned to one of the four combinations shown in Figure 1 using a random number generator. The scenarios were constructed according to AHA testing standards for Megacode scenarios, in which a participant manages an acute dysrhythmia in a pulsatile state, then a shockable pulseless state, and then a non-shockable pulseless state. In Scenario A, the patient presented with unstable bradycardia that progressed to ventricular fibrillation and finally to asystole. In Scenario B, the patient presented with a stable narrow-complex tachycardia that progressed to pulseless monomorphic ventricular tachycardia and finally to pulseless electrical activity (PEA).

Figure 1.

This figure illustrates participant randomization and allocation. Participants were randomized to one of four pathways, which is equivalent to randomizing both the order of DST use and the order of Megacode scenarios. After managing the first Megacode scenario, each participant managed the remaining scenario under the opposite condition: with the DST if the first scenario had been managed without it, and vice versa. [DST = decision support tool; A = Megacode scenario A; B = Megacode scenario B].
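The four pathways in Figure 1 can be expressed as a small allocation routine. The sketch below assumes simple (unblocked) randomization with Python's `random` module; the paper states only that a random number generator was used, so the sequence encoding, the `allocate` function, and the seed are hypothetical.

```python
import random

# Hypothetical encoding of the four crossover sequences in Figure 1.
# Each entry is (first scenario, DST used in the first session?).
# The second session always uses the other scenario with DST use flipped.
SEQUENCES = [
    ("A", True),   # A with DST, then B without DST
    ("A", False),  # A without DST, then B with DST
    ("B", True),   # B with DST, then A without DST
    ("B", False),  # B without DST, then A with DST
]


def allocate(participant_ids, seed=None):
    """Randomly assign each participant to one of the four sequences.

    Returns {participant_id: [(scenario, dst_used), (scenario, dst_used)]},
    listing the two sessions in the order they are managed.
    """
    rng = random.Random(seed)  # seeded generator so the allocation is reproducible
    plan = {}
    for pid in participant_ids:
        first_scenario, dst_first = rng.choice(SEQUENCES)
        second_scenario = "B" if first_scenario == "A" else "A"
        plan[pid] = [(first_scenario, dst_first), (second_scenario, not dst_first)]
    return plan
```

For example, `allocate(range(1, 48), seed=2012)` produces a plan for 47 participants. Simple randomization like this can leave the four arms unequal in size; a blocked scheme (shuffling a list that repeats each sequence equally often) would balance them. In either case, every participant manages both scenarios and uses the DST exactly once.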

We performed all simulation sessions in a setting that replicated a patient room on a general care ward at our institution. The code cart and defibrillator used during these events replicated those in clinical use at our institution, as did the available medications, intravenous (IV) fluids, IV lines, medical gases, and airway devices. Each participant was told to assume the role of the physician team leader responding to a code in the university hospital. All other roles (chest compressions, airway manager, drug administration, and defibrillator manager) were played by a standardized code team composed of simulation center staff trained to give consistent, scripted responses.

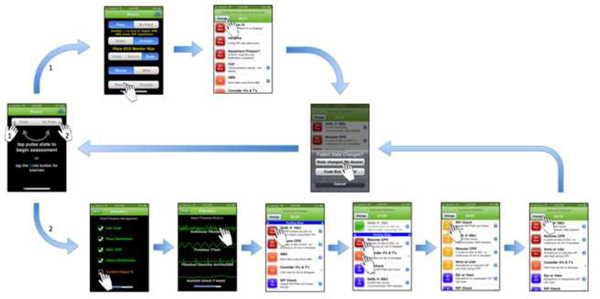

The DST was programmed for Apple iOS and deployed on the iPod™ devices noted above. The Appendix shows screenshots of the DST screens that the user navigates during a brief patient assessment and subsequent management. The management steps programmed into the DST were based on the 2005 AHA ACLS update.

Analysis and Grading

Each session was video recorded with a multi-camera system and B-line Medical® software (B-line Medical, Washington, D.C.) and then graded by two experienced raters using previously validated scoring checklists derived from AHA training manuals.19 A score for the percentage of correct actions performed was recorded. Because the percentage of correct steps captures only errors of omission (i.e., failing to perform a prescribed action), errors of commission (e.g., shocking a patient in asystole) were recorded as a separate error count.
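The distinction between the two outcome measures can be made concrete with a small data structure. This is a hypothetical sketch, not the validated checklist of reference 19: the class, the item names, and the error labels are invented for illustration, and averaging the two raters' scores for each session is an assumption about how the averaged scores used in the analysis were formed.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class SessionScore:
    """One rater's grading of one simulation session (hypothetical structure)."""
    # Checklist item -> True if the prescribed action was performed correctly.
    checklist_items: Dict[str, bool]
    # Actions taken that should not have been taken (errors of commission).
    commission_errors: List[str] = field(default_factory=list)

    def percent_correct(self) -> float:
        # Errors of omission lower this score: an item that was never
        # performed is simply recorded as False.
        performed = sum(self.checklist_items.values())
        return 100.0 * performed / len(self.checklist_items)

    def error_count(self) -> int:
        # Errors of commission are invisible to percent_correct,
        # so they are tallied separately.
        return len(self.commission_errors)


def average_raters(r1: SessionScore, r2: SessionScore) -> Tuple[float, float]:
    """Average two raters' (percent correct, error count) for the same session."""
    return ((r1.percent_correct() + r2.percent_correct()) / 2,
            (r1.error_count() + r2.error_count()) / 2)
```

A participant who performs three of four prescribed items but also shocks a patient in asystole scores 75% correct with one error of commission; neither number alone describes the performance.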

Global performance on each scenario was compared so that any bias due to scenario difficulty could be taken into account. Because there was no demonstrable difference in global performance between Scenario A and Scenario B, no further adjustment for scenario difficulty was needed in the analysis model. The analysis used each participant's averaged correct-action and error-count scores from two scenarios: the one managed with the DST and the one managed without it. A further sensitivity analysis accounting for whether the DST was used first or second found no difference. Therefore, percent correct actions and error counts were analyzed with ANOVA to compare performance with and without the DST. Data are presented as mean ± 95% CI for continuous variables, and p < 0.05 was considered significant.
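The Methods state only that an ANOVA was used. Assuming it was a one-way repeated-measures ANOVA with DST use as the single within-subject factor (an assumption; the exact model is not reported), the sketch below computes that F statistic from each participant's paired scores and, for comparison, the paired t statistic. With two within-subject conditions the two tests are equivalent, since F = t². The function name and the example scores are hypothetical; a p-value would come from the F distribution with (1, n - 1) degrees of freedom, for example via `scipy.stats.f.sf`.

```python
import math
from typing import Sequence, Tuple


def paired_t_and_rm_anova(with_dst: Sequence[float],
                          without_dst: Sequence[float]) -> Tuple[float, float]:
    """Return (t, F) for two within-subject conditions.

    t is the paired t statistic; F is the one-way repeated-measures ANOVA
    statistic with df = (1, n - 1). With exactly two conditions, F == t**2.
    """
    n = len(with_dst)
    if n != len(without_dst) or n < 2:
        raise ValueError("need two equal-length samples with n >= 2")

    # Paired t-test on per-participant differences (DST minus no DST).
    diffs = [a - b for a, b in zip(with_dst, without_dst)]
    mean_d = sum(diffs) / n
    sd_d = math.sqrt(sum((d - mean_d) ** 2 for d in diffs) / (n - 1))
    t = mean_d / (sd_d / math.sqrt(n))

    # Repeated-measures ANOVA: split the total sum of squares into
    # condition, subject, and residual (condition x subject) parts.
    k = 2
    scores = list(with_dst) + list(without_dst)
    grand = sum(scores) / (k * n)
    cond_means = [sum(with_dst) / n, sum(without_dst) / n]
    subj_means = [(a + b) / k for a, b in zip(with_dst, without_dst)]
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    ss_total = sum((x - grand) ** 2 for x in scores)
    ss_error = ss_total - ss_cond - ss_subj
    f = (ss_cond / (k - 1)) / (ss_error / ((k - 1) * (n - 1)))
    return t, f


# Hypothetical percent-correct scores for five participants.
t, f = paired_t_and_rm_anova([80, 85, 90, 75, 88], [70, 78, 85, 72, 80])
print(f"t = {t:.3f}, F = {f:.3f}")
```

Removing the between-subject sum of squares is what makes the crossover design efficient: each participant serves as their own control, so stable differences in ability between students do not inflate the error term.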

RESULTS

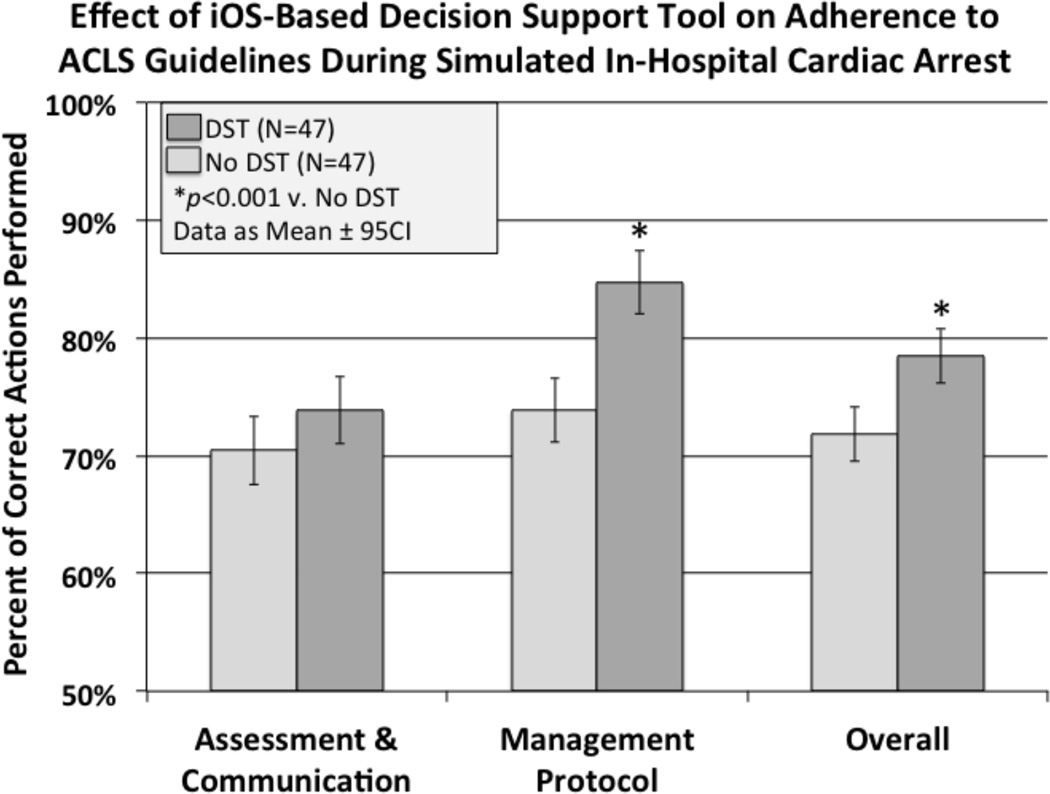

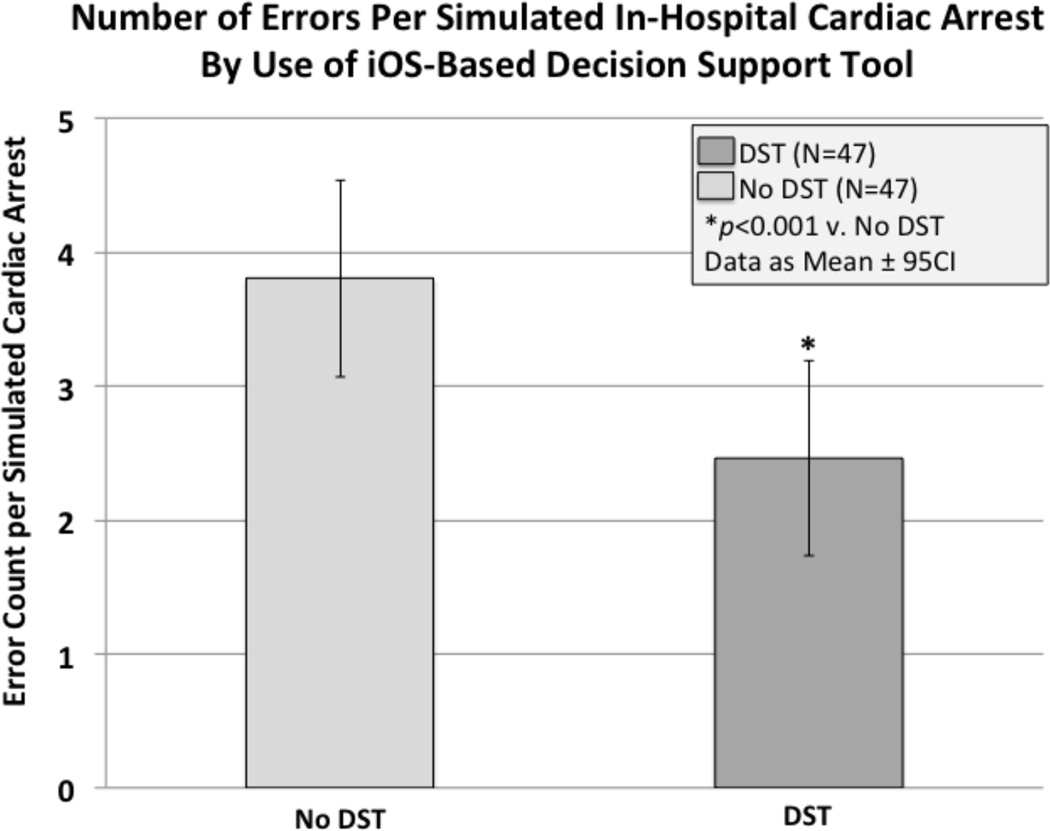

Overall, as seen in Figure 2, the percentage of correct checklist items during high-fidelity simulation of IHCA was significantly higher with the iOS-based DST than with management from memory alone (78.4% v. 71.8%, P < 0.001). Analysis by checklist item type showed that the percentage of correct management steps was significantly improved with the DST (84.7% v. 73.8%, P < 0.001), whereas the percentage of correct patient assessment steps was not significantly different (73.8% v. 70.4%, P = 0.103). In addition to performing a greater percentage of correct guideline actions, participants made fewer errors of commission, such as inappropriate defibrillation or incorrect medication administration, when using the DST (2.5 v. 3.8 errors, P < 0.012; see Figure 3). Time to first defibrillation was similar with and without the DST (63.6 v. 65.7 seconds, respectively; P = 0.808), suggesting that use of the DST did not delay timely delivery of care.

Figure 2.

This figure illustrates the difference in performance based upon use of the DST as measured by the percentage of correct actions completed during a simulated IHCA. Of note, the DST did not make a significant difference in assessment of the patient, but did significantly improve adherence to the management protocol. [DST = decision support tool]

Figure 3.

This figure illustrates the difference in performance based upon use of the DST as measured by the number of errors committed during a simulated IHCA. Only errors of commission, such as defibrillating when not indicated, are included; errors of omission are reflected in the percentage of correct actions completed shown in Figure 2. [DST = decision support tool]

DISCUSSION

As noted above, adherence to published ACLS guidelines during in-hospital cardiac arrest is often suboptimal with respect to individual aspects such as time to defibrillation and quality of CPR.3,4,10,12,13 Furthermore, we have recently shown that greater overall adherence to published ACLS guidelines throughout the entire resuscitation event is associated with a much greater likelihood of achieving return of spontaneous circulation (ROSC).20 We therefore investigated whether an electronic DST that dynamically guides a provider through published AHA ACLS protocols would improve performance during high-fidelity simulations requiring management of acute dysrhythmias and IHCA. The results of this study demonstrate that the DST we tested significantly improved adherence to, and reduced deviations from, published guidelines. Our recent findings from a retrospective analysis of IHCA at our institution suggest that the 11% absolute improvement in adherence to guidelines reported in this simulation study would likely be clinically relevant, possibly improving the odds of ROSC by approximately 30%.20
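The 11% figure appears to correspond to the difference in correct management steps reported in the Results (84.7% v. 73.8%). Assuming so, it is an absolute difference in percentage points, and the relative improvement over the no-DST condition is somewhat larger:

$$
\Delta_{\text{abs}} = 84.7\% - 73.8\% = 10.9 \text{ percentage points} \approx 11\%,
\qquad
\Delta_{\text{rel}} = \frac{10.9}{73.8} \approx 0.148 = 14.8\%.
$$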

Additionally, the DST tested in this study builds on the previous report of a smartphone cognitive aid used in simulations of IHCA,16 because it actively prompts the user with the appropriate decision points and actions to consider based on prior decisions and management steps logged by the user (see Appendix for an example). It is therefore a dynamic management tool for IHCA events. Among DSTs in current healthcare use, those deployed within the electronic medical record have been shown to improve adherence to guidelines and, in some reports, to improve patient outcomes in non-crisis settings.22–24 Furthermore, mobile technology (e.g., automated text-message reminders) has been shown to improve adherence to guidelines among clinicians and patients in the management of several conditions.25–27 Although these DSTs and mobile technology tools show benefit, none of them is designed for bedside use in crisis situations. Our report thus extends prior work on the effect of DSTs on guideline adherence to high-fidelity simulation of crisis situations. Future research is needed to test the DST in the clinical setting.

Another important consideration in testing and deploying technology is that it often changes the way people work, and not always for the better. New technology may distract the clinician or add extra work, thereby impeding timely IHCA management. Zanner et al. found that a mobile phone application not only failed to improve the BLS performance of laypersons but actually slowed the performance of those with pre-existing BLS knowledge.17 Nelson et al. also found that residents using pocket cards were more likely to choose an incorrect pediatric cardiac arrest treatment algorithm, and suggested that successful cognitive aids should help guide this decision-making.18 We therefore used a human factors approach to streamline our dynamic DST to assist with rapid decision-making and to reinforce the timely application of crucial management steps. We believe this attention to human factors during application development is largely responsible for our ability to demonstrate improved overall performance without slowing IHCA management. In contrast to these previous studies, our students completed a greater number of correct actions in the same amount of time while using the DST, and time to first defibrillation was similar with and without it.

Our results demonstrated that the greatest effect of the DST during simulated IHCA was on adherence to management guidelines rather than on patient assessment. This is similar to a recent report by Neal et al. on the effect of a cognitive aid on the management of simulated local anesthetic systemic toxicity.28 In that study and ours, the grading checklist contained steps dealing with both patient assessment and patient management. In both studies, use of the cognitive aid made a significant difference in the management steps, but not in items related to patient assessment, such as systematically checking neurological responsiveness, adequacy of airway/breathing, and presence of intravenous access. The difference in assessment may not have reached significance because patient assessment may occur through a mental process that is more difficult to affect with a cognitive aid. Alternatively, failure to detect a difference in patient assessment may reflect the difficulty video observers face in grading assessment items. During these acute physiologic disturbances, participants may have adequately considered assessment items without verbalizing them, limiting the observers' ability to record any difference in the assessment process. Of note, to avoid grading bias in favor of the DST condition, the graders did not see assessments that may have been selected on the electronic DST but not verbalized. The treatment steps require more definitive action by the participant, which may have allowed more precise grading and made improvement with the DST easier to demonstrate. Although we were unable to detect a significant improvement in patient assessment with the DST, it is ultimately the correct treatment steps that translate into better clinical outcomes.3,4,10

There are several potential limitations of the present study. First, the study population consisted of ACLS-certified graduating medical students, which may limit the generalizability of our results to more experienced clinicians. This concern is partially addressed by several studies reporting that ACLS certification, not training level, is the factor of importance,1,29 but medical students may also be more comfortable using smartphone applications than older clinicians. Second, we isolated the performance of the team leader for analysis. Future studies may benefit from testing the impact of a DST on the performance of the entire team.30,31 While direction given by the team leader is important, it is the combined actions of the entire team that matter most to clinical outcomes. In fact, it may be even more beneficial to have an otherwise non-tasked team member focus solely on navigating the DST in the context of real-time events. Third, performance with the DST plateaued below 100% compliance, even though all of the appropriate management steps were presented to the team leader. This highlights the need for ongoing human factors assessment of the technology: a limitation of the user interface may have contributed, or the orientation to the DST may have been too brief. Future studies are needed to determine how to raise adherence to 100% of recommended protocol items and reduce the number of errors (i.e., inappropriate deviations from protocol). Finally, although we provided orientation on the use of the DST before the study, we did not formally assess the students' proficiency with the DST before the study.

CONCLUSION

The dynamic decision support tool investigated in this study increased adherence to ACLS protocols during high-fidelity simulation of IHCA without delaying treatment, as measured by time to first defibrillation. Further research is needed to determine whether this effect extends to team performance in the clinical environment and whether such improved clinical performance translates into improved patient outcomes.

Acknowledgements

Foundation for Anesthesia Education and Research (FAER), Research in Education Grant (PI: McEvoy) provided funded research time. FAER was not involved in the study design or data analysis.

South Carolina Clinical & Translational Research Institute, Medical University of South Carolina’s CTSA, supported by National Institutes of Health/National Center for Research Resources Grant Numbers UL1TR000062/UL1RR029882 provided biostatistical resources.

Appendix

Shown are a series of application screenshots illustrating the user interface. Although dynamic branching logic is built in throughout the application, the first screenshot shows an early prompt for clinical assessment of the pulse. If the user indicates that the patient has a pulse (finger 1), they are directed down a pathway (starting at arrow 1) that includes different assessment and treatment items than if the user selects a pulseless state (finger 2 and arrow 2). As the user selects assessment items and/or checks off treatments as completed or started, the application continuously updates to show the next algorithm steps, with prioritization and ongoing timing cues. At any time and from any screen, the clinician may indicate that the patient's rhythm has changed and quickly navigate back to the basic pulse and rhythm assessment.
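The branching behavior described above can be illustrated with a deliberately simplified sketch. It is not the authors' implementation and not a complete encoding of the AHA algorithms: the `Rhythm` enum, the `next_prompts` function, and all prompt wording are hypothetical. It shows only the structure described in this Appendix: a pulse/no-pulse branch, a shockable/non-shockable split for pulseless rhythms, removal of checked-off items, and re-entry at the pulse and rhythm assessment when the rhythm changes.

```python
from enum import Enum
from typing import FrozenSet, List, Optional


class Rhythm(Enum):
    VF = "ventricular fibrillation"
    PULSELESS_VT = "pulseless ventricular tachycardia"
    ASYSTOLE = "asystole"
    PEA = "pulseless electrical activity"


# Pulseless rhythms for which defibrillation is indicated.
SHOCKABLE = {Rhythm.VF, Rhythm.PULSELESS_VT}


def next_prompts(has_pulse: bool,
                 rhythm: Optional[Rhythm] = None,
                 completed: FrozenSet[str] = frozenset()) -> List[str]:
    """Return the prompts still outstanding for the current branch (hypothetical).

    Calling this again with new inputs models the "rhythm has changed"
    shortcut: the user simply re-enters at the pulse/rhythm assessment.
    """
    if has_pulse:
        # Pulse present (finger 1 / arrow 1): acute dysrhythmia pathways.
        prompts = ["Assess airway, breathing, and IV access",
                   "Identify bradycardia or tachycardia",
                   "Assess hemodynamic stability"]
    elif rhythm is None:
        # Pulseless (finger 2 / arrow 2), rhythm not yet identified.
        prompts = ["Start CPR", "Attach monitor/defibrillator", "Identify rhythm"]
    elif rhythm in SHOCKABLE:
        prompts = ["Defibrillate", "Resume CPR immediately",
                   "Recheck rhythm after CPR cycle"]
    else:
        # Non-shockable rhythm: delivering a shock here would be an
        # error of commission, so no shock prompt is offered.
        prompts = ["Continue CPR (no shock)", "Give vasopressor per algorithm",
                   "Consider reversible causes"]
    # Drop prompts the user has already checked off, keeping priority order.
    return [p for p in prompts if p not in completed]
```

Keeping the prompt lists ordered and filtering out completed items is one simple way to obtain the "next steps with prioritization" behavior: whatever remains at the head of the list is the highest-priority outstanding action.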

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

Conflict of Interest Statement:

None of the authors have any financial or personal relationships that could have any influence on this research or this manuscript.

IRB information:

MUSC IRB II - HR#19835

Contributor Information

Larry C Field, Department of Anesthesia & Perioperative Medicine, Medical University of South Carolina, 167 Ashley Avenue, Suite 301, Charleston, SC 29425, field@musc.edu, phone: 843-876-5744, fax: 843-876-5746.

Matthew D McEvoy, Department of Anesthesiology, Vanderbilt University, 2301 Vanderbilt University Hospital, Nashville, Tennessee 37232-7237, matthew.d.mcevoy@vanderbilt.edu.

Jeremy C Smalley, Department of Orthopedics, Medical University of South Carolina, 167 Ashley Avenue, Suite 301, Charleston, SC 29425, smalley@musc.edu.

Carlee A Clark, Department of Anesthesia & Perioperative Medicine, Medical University of South Carolina, 167 Ashley Avenue, Suite 301, Charleston, SC 29425, clarca@musc.edu.

Michael B McEvoy, Department of Anesthesia & Perioperative Medicine, Medical University of South Carolina, 167 Ashley Avenue, Suite 301, Charleston, SC 29425, mcevoym@musc.edu.

Horst Rieke, Department of Anesthesia & Perioperative Medicine, Medical University of South Carolina, 167 Ashley Avenue, Suite 301, Charleston, SC 29425, riekeh@musc.edu.

Paul J Nietert, Department of Medicine, Division of Biostatistics and Epidemiology, Medical University of South Carolina, 135 Cannon Street, Room 303J, Charleston, SC 29425, nieterpj@musc.edu.

Cory M Furse, Department of Anesthesia & Perioperative Medicine, Medical University of South Carolina, 167 Ashley Avenue, Suite 301, Charleston, SC 29425, furse@musc.edu.

REFERENCES

1. Moretti MA, Cesar LA, Nusbacher A, Kern KB, Timerman S, Ramires JA. Advanced cardiac life support training improves long-term survival from in-hospital cardiac arrest. Resuscitation. 2007 Mar;72(3):458–465. doi: 10.1016/j.resuscitation.2006.06.039.

2. Sodhi K, Singla MK, Shrivastava A. Impact of advanced cardiac life support training program on the outcome of cardiopulmonary resuscitation in a tertiary care hospital. Indian journal of critical care medicine : peer-reviewed, official publication of Indian Society of Critical Care Medicine. 2011 Oct;15(4):209–212. doi: 10.4103/0972-5229.92070.

3. Chan PS, Krumholz HM, Nichol G, Nallamothu BK. American Heart Association National Registry of Cardiopulmonary Resuscitation I. Delayed time to defibrillation after in-hospital cardiac arrest. The New England journal of medicine. 2008 Jan 3;358(1):9–17. doi: 10.1056/NEJMoa0706467.

4. Mhyre JM, Ramachandran SK, Kheterpal S, Morris M, Chan PS. American Heart Association National Registry for Cardiopulmonary Resuscitation I. Delayed time to defibrillation after intraoperative and periprocedural cardiac arrest. Anesthesiology. 2010 Oct;113(4):782–793. doi: 10.1097/ALN.0b013e3181eaa74f.

5. Girotra S, Spertus JA, Li Y, et al. Survival trends in pediatric in-hospital cardiac arrests: an analysis from get with the guidelines-resuscitation. Circulation. Cardiovascular quality and outcomes. 2013 Jan;6(1):42–49. doi: 10.1161/CIRCOUTCOMES.112.967968.

6. Girotra S, Nallamothu BK, Spertus JA, et al. Trends in survival after in-hospital cardiac arrest. The New England journal of medicine. 2012 Nov 15;367(20):1912–1920. doi: 10.1056/NEJMoa1109148.

7. Smith KK, Gilcreast D, Pierce K. Evaluation of staff's retention of ACLS and BLS skills. Resuscitation. 2008 Jul;78(1):59–65. doi: 10.1016/j.resuscitation.2008.02.007.

8. Settles J, Jeffries PR, Smith TM, Meyers JS. Advanced cardiac life support instruction: do we know tomorrow what we know today? Journal of continuing education in nursing. 2011 Jun;42(6):271–279. doi: 10.3928/00220124-20110315-01.

9. Gass DA, Curry L. Physicians' and nurses' retention of knowledge and skill after training in cardiopulmonary resuscitation. Canadian Medical Association journal. 1983 Mar 1;128(5):550–551.

10. Chan PS, Nichol G, Krumholz HM, Spertus JA, Nallamothu BK. American Heart Association National Registry of Cardiopulmonary Resuscitation I. Hospital variation in time to defibrillation after in-hospital cardiac arrest. Archives of internal medicine. 2009 Jul 27;169(14):1265–1273. doi: 10.1001/archinternmed.2009.196.

11. Perkins GD, Boyle W, Bridgestock H, et al. Quality of CPR during advanced resuscitation training. Resuscitation. 2008 Apr;77(1):69–74. doi: 10.1016/j.resuscitation.2007.10.012.

12. Abella BS, Sandbo N, Vassilatos P, et al. Chest compression rates during cardiopulmonary resuscitation are suboptimal: a prospective study during in-hospital cardiac arrest. Circulation. 2005 Feb 1;111(4):428–434. doi: 10.1161/01.CIR.0000153811.84257.59.

13. Abella BS, Alvarado JP, Myklebust H, et al. Quality of cardiopulmonary resuscitation during in-hospital cardiac arrest. JAMA : the journal of the American Medical Association. 2005 Jan 19;293(3):305–310. doi: 10.1001/jama.293.3.305.

14. Merchant RM, Abella BS, Abotsi EJ, et al. Cell phone cardiopulmonary resuscitation: audio instructions when needed by lay rescuers: a randomized, controlled trial. Annals of emergency medicine. 2010 Jun;55(6):538–543. e531. doi: 10.1016/j.annemergmed.2010.01.020.

15. Semeraro F, Marchetti L, Frisoli A, Cerchiari EL, Perkins GD. Motion detection technology as a tool for cardiopulmonary resuscitation (CPR) quality improvement. Resuscitation. 2012 Jan;83(1):e11–e12. doi: 10.1016/j.resuscitation.2011.07.043.

16. Low D, Clark N, Soar J, et al. A randomised control trial to determine if use of the iResus(c) application on a smart phone improves the performance of an advanced life support provider in a simulated medical emergency. Anaesthesia. 2011 Apr;66(4):255–262. doi: 10.1111/j.1365-2044.2011.06649.x.

17. Zanner R, Wilhelm D, Feussner H, Schneider G. Evaluation of M-AID, a first aid application for mobile phones. Resuscitation. 2007 Sep;74(3):487–494. doi: 10.1016/j.resuscitation.2007.02.004.

18. Nelson KL, Shilkofski NA, Haggerty JA, Saliski M, Hunt EA. The use of cognitive AIDS during simulated pediatric cardiopulmonary arrests. Simulation in healthcare : journal of the Society for Simulation in Healthcare. 2008 Fall;3(3):138–145. doi: 10.1097/SIH.0b013e31816b1b60.

19. McEvoy MD, Smalley JC, Nietert PJ, et al. Validation of a detailed scoring checklist for use during advanced cardiac life support certification. Simulation in healthcare : journal of the Society for Simulation in Healthcare. 2012 Aug;7(4):222–235. doi: 10.1097/SIH.0b013e3182590b07.

20. McEvoy MD, Field LC, Moore HE, Smalley JC, Nietert PJ, Scarbrough S. The Effect of Adherence to ACLS Protocols on Survival of Event in the Setting of In-Hospital Cardiac Arrest. Resuscitation. 2013. doi: 10.1016/j.resuscitation.2013.09.019. In submission.

21. Milani RV, Lavie CJ, Dornelles AC. The impact of achieving perfect care in acute coronary syndrome: the role of computer assisted decision support. American heart journal. 2012 Jul;164(1):29–34. doi: 10.1016/j.ahj.2012.04.004.

22. Litvin CB, Ornstein SM, Wessell AM, Nemeth LS, Nietert PJ. Adoption of a clinical decision support system to promote judicious use of antibiotics for acute respiratory infections in primary care. International journal of medical informatics. 2012 Aug;81(8):521–526. doi: 10.1016/j.ijmedinf.2012.03.002.

23. Litvin CB, Ornstein SM, Wessell AM, Nemeth LS, Nietert PJ. Use of an Electronic Health Record Clinical Decision Support Tool to Improve Antibiotic Prescribing for Acute Respiratory Infections: The ABX-TRIP Study. Journal of General Internal Medicine. 2012 Nov;28(6):810–816. doi: 10.1007/s11606-012-2267-2.

24. Haut ER, Lau BD, Kraenzlin FS, et al. Improved prophylaxis and decreased rates of preventable harm with the use of a mandatory computerized clinical decision support tool for prophylaxis for venous thromboembolism in trauma. Archives of surgery. 2012 Oct;147(10):901–907. doi: 10.1001/archsurg.2012.2024.

25. Free C, Phillips G, Watson L, et al. The effectiveness of mobile-health technologies to improve health care service delivery processes: a systematic review and meta-analysis. PLoS medicine. 2013 Jan;10(1):e1001363. doi: 10.1371/journal.pmed.1001363.

26. Free C, Phillips G, Galli L, et al. The effectiveness of mobile-health technology-based health behaviour change or disease management interventions for health care consumers: a systematic review. PLoS medicine. 2013 Jan;10(1):e1001362. doi: 10.1371/journal.pmed.1001362.

27. Devries KM, Kenward MG, Free CJ. Preventing smoking relapse using text messages: analysis of data from the txt2stop trial. Nicotine & tobacco research : official journal of the Society for Research on Nicotine and Tobacco. 2013 Jan;15(1):77–82. doi: 10.1093/ntr/nts086.

28. Neal JM, Hsiung RL, Mulroy MF, Halpern BB, Dragnich AD, Slee AE. ASRA checklist improves trainee performance during a simulated episode of local anesthetic systemic toxicity. Regional anesthesia and pain medicine. 2012 Jan-Feb;37(1):8–15. doi: 10.1097/AAP.0b013e31823d825a.

29. Dane FC, Russell-Lindgren KS, Parish DC, Durham MD, Brown TD. In-hospital resuscitation: association between ACLS training and survival to discharge. Resuscitation. 2000 Sep;47(1):83–87. doi: 10.1016/s0300-9572(00)00210-0.

30. Arriaga AF, Bader AM, Wong JM, et al. Simulation-based trial of surgical-crisis checklists. The New England journal of medicine. 2013 Jan 17;368(3):246–253. doi: 10.1056/NEJMsa1204720.

31. Ziewacz JE, Arriaga AF, Bader AM, et al. Crisis checklists for the operating room: development and pilot testing. Journal of the American College of Surgeons. 2011 Aug;213(2):212–217. e10. doi: 10.1016/j.jamcollsurg.2011.04.031.