Abstract

Background

Impact factor (if) is often used as a measure of journal quality. The purpose of the present study was to determine whether trials with positive outcomes are more likely to be published in journals with higher ifs.

Methods

We reviewed 476 randomized phase iii cancer trials published in 13 journals between 1995 and 2005. Multivariate logistic regression models were used to investigate predictors of publication in journals with high ifs (compared with low and medium ifs).

Results

A positive outcome had the strongest association with publication in high-if journals [odds ratio (or): 4.13; 95% confidence interval (ci): 2.67 to 6.37; p < 0.001]. Other associated factors were a larger sample size (or: 1.06; 95% ci: 1.02 to 1.10; p = 0.001), intention-to-treat analysis (or: 2.53; 95% ci: 1.56 to 4.10; p < 0.001), North American authors (or for European authors: 0.36; 95% ci: 0.23 to 0.58; or for international authors: 0.41; 95% ci: 0.20 to 0.82; p < 0.001), adjuvant therapy trial (or: 2.58; 95% ci: 1.61 to 4.15; p < 0.001), shorter time to publication (or: 0.84; 95% ci: 0.77 to 0.92; p < 0.001), uncommon tumour type (or: 1.39; 95% ci: 0.90 to 2.13; p = 0.012), and hematologic malignancy (or: 3.15; 95% ci: 1.41 to 7.03; p = 0.012).

Conclusions

Cancer trials with positive outcomes are more likely to be published in journals with high ifs. Readers of medical literature should be aware of this “impact factor bias,” and investigators should be encouraged to submit reports of trials of high methodologic quality to journals with high ifs regardless of study outcomes.

Keywords: Impact factor, cancer trials, publication, predictive factors

1. INTRODUCTION

The impact factor (if) of a journal for a given year is calculated by dividing the number of citations that year to articles published in the journal in the preceding 2 years by the number of “citable” articles published in those 2 years1.
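As an illustration (the symbols below are introduced here for convenience and are not taken from Journal Citation Reports), the if for year $Y$ can be written as:

$$
\mathrm{IF}_{Y} = \frac{C_{Y}(Y-1) + C_{Y}(Y-2)}{N(Y-1) + N(Y-2)}
$$

where $C_{Y}(X)$ is the number of citations received in year $Y$ by articles that the journal published in year $X$, and $N(X)$ is the number of citable articles that the journal published in year $X$. Under this definition, the 2005 if divides the citations received in 2005 by articles published in 2003 and 2004 by the number of citable articles published in 2003 and 2004.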

A compilation of ifs is published annually by Thomson–Reuters as Journal Citation Reports.

Although if was not designed to measure journal quality, and the ifs of journals publishing in various medical disciplines will vary, articles published in medical journals with a high if are often perceived to be of high quality and clinical relevance2. In addition, if can be affected by editorial practices. For example, the if can be increased by including a higher percentage of review articles, which are cited more often than other types of articles3.

Regardless of the many caveats associated with if, busy clinicians might, because of time constraints, read only journals with high ifs. Their opinions and clinical practices are therefore more likely to be influenced by articles published in such journals. In a review of published emergency medicine studies, the strongest predictor of citations per year was the if of the publishing journal4. Furthermore, implicitly or explicitly, ifs can influence committees in assessing academic promotions, merit, funding, grants, and appointments3,5,6.

In a review of neonatal literature, Littner et al.7 recently showed that randomized phase iii clinical trials (rcts) with negative results are more likely than those with positive results to be published in journals with lower ifs. Whether that observation is true in oncology as well is not clear. The primary objective of the present study was to determine whether oncology rcts with statistically significant positive results are more likely than those with negative results to be published in journals with higher ifs.

2. METHODS

2.1. Identification of Studies

Using the oncology section of Journal Citation Reports for 2005, journals with an if exceeding 3 were identified (n = 51). Another 3 journals in the general or internal medicine category that frequently publish oncology rcts were also identified. We then limited our scope to journals that had published at least 3 original rcts during the period of interest (n = 13). The identified journals publish nearly all the oncology rcts in the English language8–11.

Journal ifs were arbitrarily categorized as low, medium, or high according to Journal Citation Reports for 2005. The low group (if: <10) included Annals of Oncology, Annals of Surgical Oncology, British Journal of Cancer, Breast Cancer Research and Treatment, Clinical Cancer Research, European Journal of Cancer, International Journal of Radiation Oncology · Biology · Physics, and Leukemia. The medium group (if: 10–20) included the Journal of Clinical Oncology and the Journal of the National Cancer Institute. The high group (if: >20) consisted of JAMA (The Journal of the American Medical Association), The Lancet, and the New England Journal of Medicine.
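The grouping above can be sketched as a small function. This is a minimal illustration of the stated cut-offs, not the code used in the study; the function name `if_category` is hypothetical, and the example values in the comments are made up rather than actual journal ifs.

```python
def if_category(impact_factor: float) -> str:
    """Map a journal impact factor to the low/medium/high groups described above.

    Cut-offs follow the text: low is below 10, medium is 10 to 20 inclusive,
    and high is above 20.
    """
    if impact_factor > 20:
        return "high"
    if impact_factor >= 10:
        return "medium"
    return "low"


# Made-up example values, not real journal impact factors.
for value in (4.5, 12.0, 25.0):
    print(value, if_category(value))
```

Because journals were selected only if their if exceeded 3, the low group in practice covers ifs between 3 and 10.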

Phase iii trials were defined as rcts of cancer therapy published in one of the identified journals between 1995 and 2005. Trials with fewer than 100 patients per arm were excluded because their statistical power is sufficient to detect only large differences in treatments, and they would thus be more likely to have a negative primary outcome. The rcts were identified in a manual search of each journal. Exclusion criteria were a pediatric population (<18 years of age); investigation of supportive care or palliative care, education, diagnostic imaging, screening or prevention; evaluation of different dosing schedules of the same regimen; and evaluation of bone marrow transplantation. Articles were also excluded if they involved only meta-analyses, subset analyses, overviews, or pooled data from two or more trials. For multiple reports (the same trial published more than one time), the first publication was arbitrarily chosen for inclusion in the study.

2.2. Data Collection

For each identified trial, the information collected included year of publication; journal of publication; cancer type; intent of therapy; type of therapy; duration of accrual; number of patients randomized; number of treatment groups; use of any blinding; sample size calculation; nationality of authors; method of analysis; time to publication; and whether an independent committee reviewed response data. The source of trial funding was categorized as “non-industry” (for sponsorship by a government, foundation, or other nonprofit agency), “industry” (for full or partial sponsorship by a pharmaceutical company), or “not stated.” If not explicitly stated, the primary endpoint of the rct was recorded as the outcome used in the sample size calculation. Data describing the sample size calculation (present or absent) and the type of analysis [intention-to-treat (itt) or other] were used to assess quality of reporting. The nationality of authors was defined as North American, European, international, or other using the affiliations of the authors or the cooperative group. The term “international” was used if authors came from more than one region.

A trial was classified as positive if an experimental arm was statistically superior to the control arm (p ≤ 0.05) for the primary endpoint. Studies for which the primary endpoint was not explicitly defined were classified as positive or negative based on overall survival. Noninferiority studies were classified as positive if the criteria for noninferiority were met; otherwise, they were classified as negative.
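The classification rule above can be expressed as a short sketch. The function and parameter names are hypothetical, and the handling of a missing p value (returned here as "undetermined") is an assumption: the text does not describe how undetermined trials were assigned.

```python
from typing import Optional


def classify_outcome(
    p_value: Optional[float] = None,
    favours_experimental: bool = False,
    noninferiority_design: bool = False,
    noninferiority_met: bool = False,
    alpha: float = 0.05,
) -> str:
    """Classify a trial as positive or negative for its primary endpoint."""
    if noninferiority_design:
        # Noninferiority trials are positive only if their criteria were met.
        return "positive" if noninferiority_met else "negative"
    if p_value is None:
        # Assumption: no usable p value means the outcome cannot be classified.
        return "undetermined"
    if favours_experimental and p_value <= alpha:
        # Experimental arm statistically superior to control (p <= 0.05).
        return "positive"
    return "negative"


print(classify_outcome(p_value=0.03, favours_experimental=True))
print(classify_outcome(noninferiority_design=True, noninferiority_met=False))
```

For trials without an explicitly defined primary endpoint, the p value passed in would be the one for overall survival, as stated above.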

Data collection was carried out by one investigator (PAT). As a measure of data integrity, a second investigator (SW) evaluated a random sample of 61 publications, with an overall disagreement rate of 1.21%. Differences were resolved by consensus.

2.3. Statistical Analysis

Study characteristics are summarized using descriptive statistics such as median, proportion, and frequency. Time to publication was calculated by subtracting the year that enrollment was completed from the year of publication. Categories of predictors were grouped for statistical power after data collection, but before analysis. Breast, colorectal, lung, and prostate cancers were grouped as “common” tumours. Intent of therapy was grouped as adjuvant or other, trial results as positive or negative, primary endpoints as overall survival or other, source of funding as industry or other, and method of statistical analysis as itt or other.
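The derived variables described above are simple to compute. The following sketch uses hypothetical function names and made-up example years; the three tumour groups mirror the categories reported in Tables ii and iii.

```python
COMMON_TUMOURS = {"breast", "colorectal", "lung", "prostate"}


def time_to_publication(publication_year: int, enrollment_completed_year: int) -> int:
    """Years from completion of enrollment to publication."""
    return publication_year - enrollment_completed_year


def tumour_group(cancer_type: str) -> str:
    """Collapse a cancer type into the common / hematologic / other groups."""
    site = cancer_type.strip().lower()
    if site in COMMON_TUMOURS:
        return "common"
    if site == "hematologic":
        return "hematologic"
    return "other"


# Made-up example: enrollment completed in 1998, report published in 2002.
print(time_to_publication(2002, 1998))
print(tumour_group("Breast"), tumour_group("Gynecologic"))
```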

Potential predictors of publication in high-if journals were defined before data analysis and included cancer type, type of therapy, intent of therapy, sample size, accrual rate, primary endpoint, nationality of authors, publication year, duration of accrual, time to publication, presence of an independent review committee, inclusion of the sample size calculation, itt analysis, industry funding, and positive outcome. Because of the potential influence of journal-specific editorial practices over inclusion of a sample size calculation, supportive analyses were performed excluding that variable. Predictors of publication in high-if journals (compared with low- and medium-if journals) were investigated using univariate and multivariate logistic regression models. Linear regression was also performed using actual if as the outcome. Results for both methods were similar, and so only the logistic regression results are presented. Multivariate models were constructed using a forward stepwise selection process.
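For readers less familiar with logistic regression output, the sketch below shows how an odds ratio and its 95% confidence interval are conventionally obtained from a model coefficient and its standard error. It assumes a Wald-type interval, which the text does not confirm was the method used, and the function name is hypothetical. The coefficient and standard error in the example are back-calculated from the reported odds ratio for positive outcome purely for illustration.

```python
import math
from typing import Tuple


def odds_ratio_with_ci(beta: float, se: float, z: float = 1.96) -> Tuple[float, float, float]:
    """Return (odds ratio, lower limit, upper limit) for a logistic coefficient.

    Uses a Wald interval: exp(beta - z * se) to exp(beta + z * se).
    """
    return math.exp(beta), math.exp(beta - z * se), math.exp(beta + z * se)


# Illustration only: recover a coefficient and standard error from the
# reported estimate (or: 4.13; 95% ci: 2.67 to 6.37), then rebuild the interval.
beta = math.log(4.13)
se = (math.log(6.37) - math.log(2.67)) / (2 * 1.96)
estimate, lower, upper = odds_ratio_with_ci(beta, se)
print(f"or = {estimate:.2f}, 95% ci: {lower:.2f} to {upper:.2f}")
```

An interval that is symmetric on the log scale has limits whose product equals the square of the odds ratio, which is a quick consistency check for reported estimates.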

Because if can vary by discipline, analyses were performed in two ways: cluster analyses assumed that trials conducted within the same tumour site were correlated, and a comparison of only medium-if journals with low-if journals excluded general medical journals (New England Journal of Medicine, JAMA, The Lancet).

Tests for trends over time were performed using the Cochran–Armitage test12. Because the consort statement13–15 was initially published in 1996 and then updated in 2000, rcts were grouped by publication year (1995–1997, 1998–2000, and 2001–2005) for the analysis of reporting quality over time. All tests were two-sided, and a p value of 0.05 or less was considered statistically significant.
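A minimal sketch of the Cochran–Armitage test for trend in proportions follows. It uses the common large-sample form with equally spaced scores and a normal approximation. The software and exact variant used in the study are not stated, so this is an assumption, and the counts in the example are made up and are not study data.

```python
import math
from typing import Optional, Sequence, Tuple


def cochran_armitage_trend(
    events: Sequence[int],
    totals: Sequence[int],
    scores: Optional[Sequence[float]] = None,
) -> Tuple[float, float]:
    """Return (z statistic, two-sided p value) for a trend across ordered groups."""
    if scores is None:
        scores = list(range(len(totals)))  # equally spaced scores 0, 1, 2, ...
    if not (len(events) == len(totals) == len(scores)):
        raise ValueError("events, totals, and scores must have the same length")

    n_total = sum(totals)
    p_bar = sum(events) / n_total  # pooled proportion under the null hypothesis

    # Score-weighted sum of observed minus expected events.
    t_stat = sum(s * (r - n * p_bar) for s, r, n in zip(scores, events, totals))
    variance = p_bar * (1 - p_bar) * (
        sum(n * s * s for s, n in zip(scores, totals))
        - sum(n * s for s, n in zip(scores, totals)) ** 2 / n_total
    )
    if variance == 0:
        raise ValueError("trend is undefined when the variance is zero")

    z = t_stat / math.sqrt(variance)
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail
    return z, p_value


# Made-up counts for three ordered periods (not data from this study).
z, p = cochran_armitage_trend(events=[10, 20, 30], totals=[100, 100, 100])
print(f"z = {z:.3f}, p = {p:.4f}")
```

In the present setting, the three ordered groups would be the publication periods, and the events would be the number of reports in each period that include a given quality indicator.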

3. RESULTS

3.1. Trends and Characteristics of the RCTs

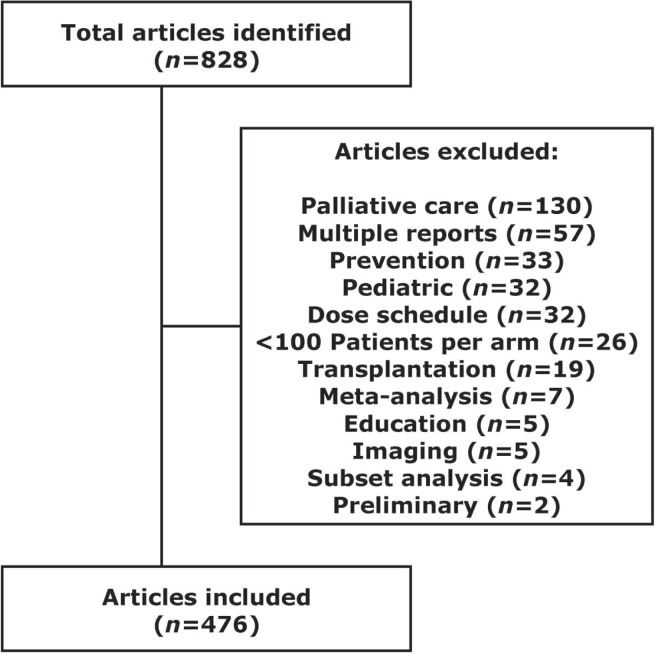

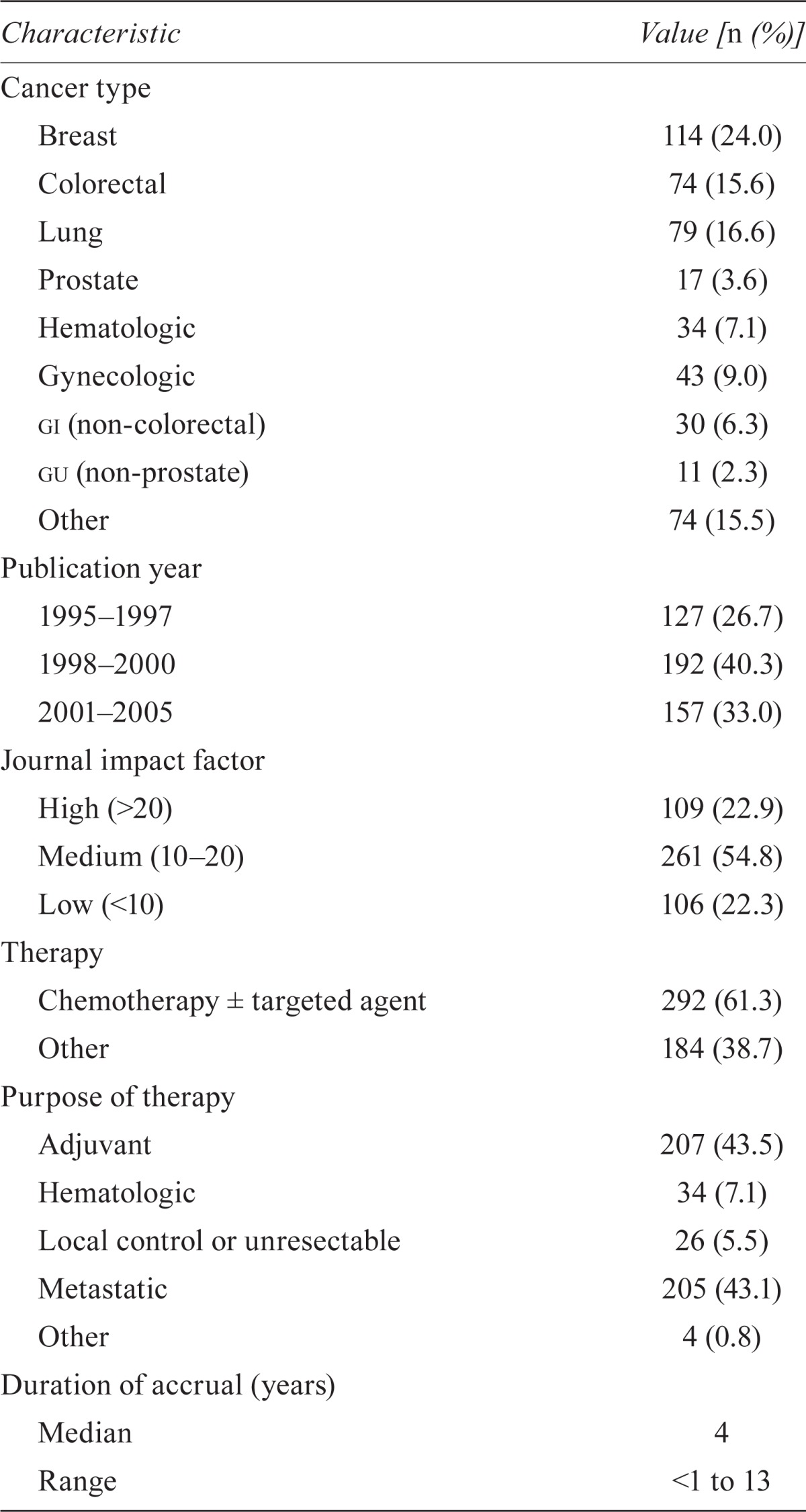

In total, 828 rcts were identified, and 476 met the inclusion criteria. Figure 1 lists the reasons for exclusion. Table i summarizes the characteristics of eligible rcts. More than half the studies were published in journals with a medium if (n = 261, 54.8%), and the most common cancer site was the breast (n = 114, 24.0%). Median time to publication was 4 years (range: 1–18 years). Across all years, publications from European authors accounted for 52.9% of the total.

Figure 1.

consort flow diagram for included and excluded randomized controlled trials.

TABLE I.

Characteristics of 476 trials

| Characteristic | Value [n (%)] |
|---|---|
| Cancer type | |
| Breast | 114 (24.0) |
| Colorectal | 74 (15.6) |
| Lung | 79 (16.6) |
| Prostate | 17 (3.6) |
| Hematologic | 34 (7.1) |
| Gynecologic | 43 (9.0) |
| gi (non-colorectal) | 30 (6.3) |
| gu (non-prostate) | 11 (2.3) |
| Other | 74 (15.5) |
| Publication year | |
| 1995–1997 | 127 (26.7) |
| 1998–2000 | 192 (40.3) |
| 2001–2005 | 157 (33.0) |
| Journal impact factor | |
| High (>20) | 109 (22.9) |
| Medium (10–20) | 261 (54.8) |
| Low (<10) | 106 (22.3) |
| Therapy | |
| Chemotherapy ± targeted agent | 292 (61.3) |
| Other | 184 (38.7) |
| Purpose of therapy | |
| Adjuvant | 207 (43.5) |
| Hematologic | 34 (7.1) |
| Local control or unresectable | 26 (5.5) |
| Metastatic | 205 (43.1) |
| Other | 4 (0.8) |
| Duration of accrual (years) | |
| Median | 4 |
| Range | <1 to 13 |
| Outcome | |
| Positive | 162 (34.0) |
| Negative | 283 (59.5) |
| Noninferiority | 29 (6.1) |
| Undetermined | 2 (0.4) |
| Independent response review | |
| Yes | 138 (29.0) |
| No | 338 (71.0) |
| Funding | |
| Industry | 183 (38.5) |
| Other | 177 (37.2) |
| Not stated | 116 (24.4) |
| Sample size calculation | |
| Yes | 406 (85.3) |
| No | 70 (14.7) |
| Blinding | |
| Yes | 44 (9.2) |
| No | 432 (90.8) |
| Analysis method | |
| Intention-to-treat | 360 (75.6) |
| Treatment received | 6 (1.3) |
| Not stated | 110 (23.1) |
| Primary endpoint | |
| Overall survival | 221 (46.4) |
| Other | 219 (46.0) |
| Not stated | 36 (7.6) |
| Region | |
| North America | 132 (27.7) |
| Europe | 252 (52.9) |
| International | 67 (14.1) |
| Other | 25 (5.3) |
| Time to publication (years) | |
| Median | 4 |
| Range | 1–18 |

gi = gastrointestinal; gu = genitourinary.
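As a simple consistency check on Table i, the counts within a characteristic should sum to the 476 included trials. The sketch below does this for two characteristics, using counts copied from the table; the variable and function names are hypothetical.

```python
TOTAL_TRIALS = 476

# Counts copied from Table i.
table_i = {
    "Journal impact factor": {"High (>20)": 109, "Medium (10-20)": 261, "Low (<10)": 106},
    "Outcome": {"Positive": 162, "Negative": 283, "Noninferiority": 29, "Undetermined": 2},
}


def percentages(counts: dict, total: int = TOTAL_TRIALS) -> dict:
    """Convert category counts to percentages of all trials, to one decimal place."""
    return {label: round(100 * n / total, 1) for label, n in counts.items()}


for characteristic, counts in table_i.items():
    # Every trial should appear in exactly one category per characteristic.
    assert sum(counts.values()) == TOTAL_TRIALS, characteristic
    print(characteristic, percentages(counts))
```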

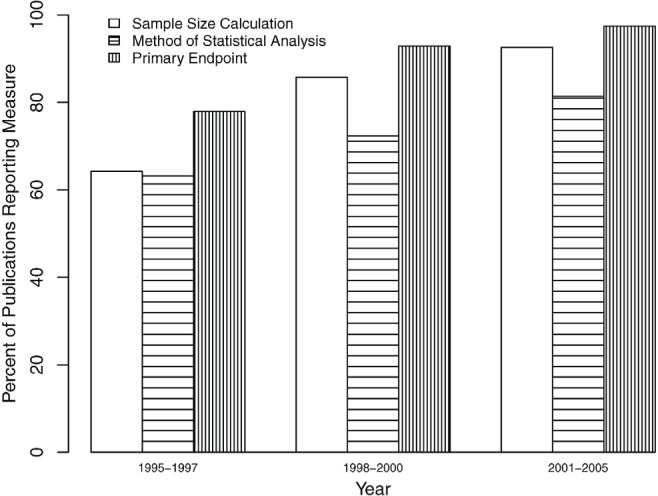

With respect to quality of reporting, 70 articles (14.7%) contained no explicit description of the sample size calculation. The method of statistical analysis was treatment received in 6 articles (1.3%) and not stated in 110 articles (23.1%), and the primary endpoint was not stated in 36 articles (7.6%). However, the reporting of those measures improved significantly (p < 0.001) over time (Figure 2).

Figure 2.

Trends in report quality indicators for randomized controlled trials in oncology.

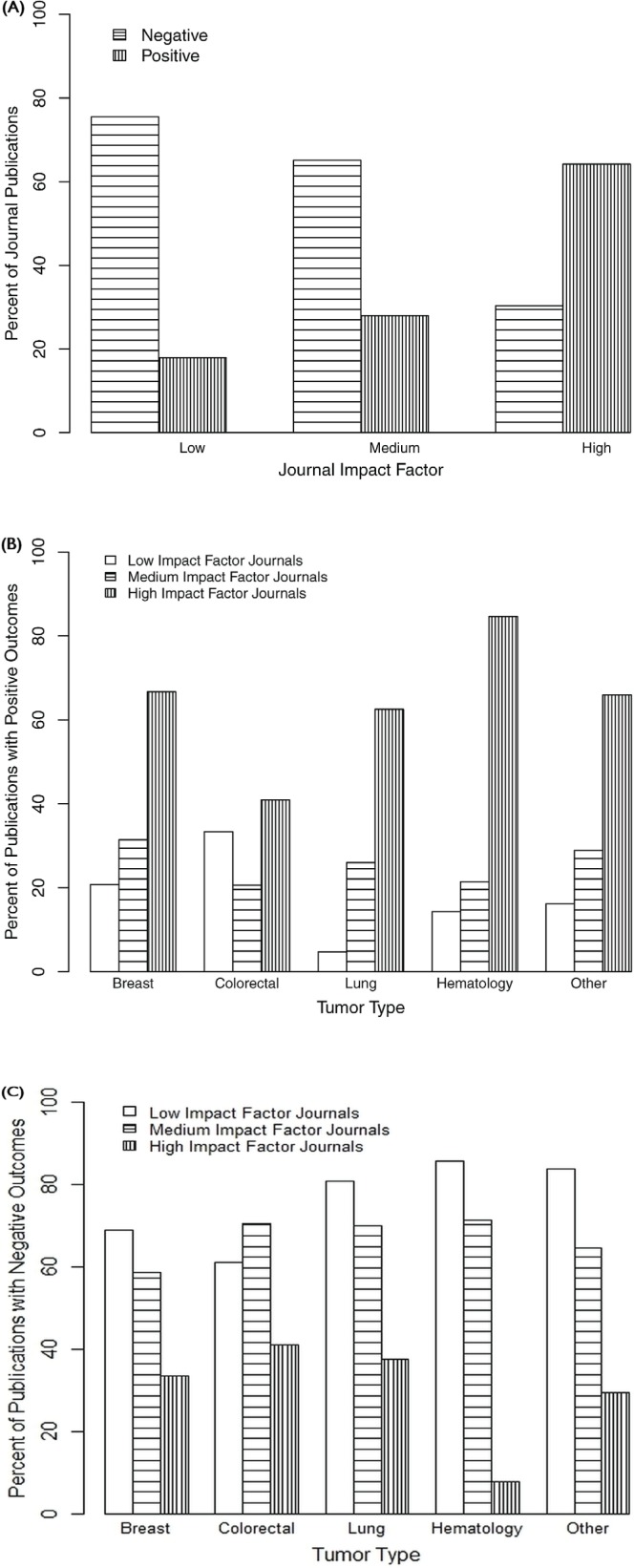

The study outcome was classified as positive (statistically significant results favouring the experimental arm) in 162 trials (34.0%). Approximately 75% of the 106 trials published in low-if journals had a negative outcome, as did 65% of the 261 trials published in medium-if journals. However, more than 60% of the 109 trials published in high-if journals had positive outcomes [Figure 3(A)]. By tumour site, the proportion of trials with positive outcomes was higher in high-if journals than in medium- and low-if journals [Figure 3(B)].

Figure 3.

(A) Distribution of publications by outcome (positive or negative) and impact factor. (B) Distribution of trials with a positive outcome by tumour type and impact factor. (C) Distribution of trials with a negative outcome by tumour type and impact factor.

3.2. Predictors of Publication in High-IF Journals

Table ii summarizes univariate predictors of publication in higher-if journals. Factors that remained significant in the multivariate model (Table iii) were outcome [positive vs. negative: odds ratio (or): 4.13; 95% confidence interval (ci): 2.67 to 6.37; p < 0.001], larger sample size (p = 0.001), itt analysis (p < 0.001), nationality of authors (p < 0.001), trials of adjuvant therapy (p < 0.001), time to publication (p < 0.001) and tumour type (uncommon tumour type or: 1.39; 95% ci: 0.90 to 2.13; hematologic malignancy or: 3.15; 95% ci: 1.41 to 7.03; p = 0.012).

TABLE II.

Univariate analysis for predictors of publication in journals with a higher impact factor

| Predictor | All topics: or | All topics: 95% ci | All topics: p Value | Oncology only: or | Oncology only: 95% ci | Oncology only: p Value |
|---|---|---|---|---|---|---|
| Cancer type | | | 0.030 | | | 0.84 |
| Common | Reference | | | Reference | | |
| Hematologic | 2.04 | 1.03 to 4.04 | | 0.81 | 0.31 to 2.08 | |
| Other | 1.49 | 1.02 to 2.18 | | 1.08 | 0.65 to 1.78 | |
| Type of therapy | | | <0.001 | | | 0.56 |
| Chemotherapy | 0.49 | 0.34 to 0.71 | | 1.16 | 0.71 to 1.87 | |
| Intent of therapy | | | <0.001 | | | 0.15 |
| Adjuvant | 2.27 | 1.56 to 3.19 | | 1.43 | 0.88 to 2.31 | |
| Sample size | | | <0.001 | | | 0.040 |
| Per 100 patients | 1.08 | 1.05 to 1.12 | | 1.08 | 1.00 to 1.15 | |
| Accrual rate | | | <0.001 | | | 0.030 |
| Per 100 patients | 1.19 | 1.09 to 1.30 | | 1.22 | 1.02 to 1.45 | |
| Primary endpoint | | | 0.64 | | | 1.00 |
| Overall survival | 0.92 | 0.65 to 1.30 | | 1.00 | 0.64 to 1.58 | |
| Independent review committee | | | 0.56 | | | 0.059 |
| Yes | 0.89 | 0.61 to 1.31 | | 0.63 | 0.38 to 1.02 | |
| Analysis | | | <0.001 | | | 0.014 |
| Intention-to-treat | 2.59 | 1.71 to 3.91 | | 1.85 | 1.14 to 3.01 | |
| Industry-funded | | | 0.42 | | | 0.033 |
| Yes | 1.16 | 0.81 to 1.65 | | 1.69 | 1.04 to 2.74 | |
| Nationality of authors | | | 0.021 | | | <0.001 |
| North American | Reference | | | Reference | | |
| European | 0.55 | 0.37 to 0.83 | | 0.17 | 0.08 to 0.35 | |
| International | 0.85 | 0.48 to 1.50 | | 0.34 | 0.14 to 0.86 | |
| Non-English-speaking | 0.48 | 0.21 to 1.09 | | 0.16 | 0.05 to 0.49 | |
| Publication year | | | 0.60 | | | 0.33 |
| Later | 1.02 | 0.96 to 1.08 | | 1.04 | 0.96 to 1.12 | |
| Duration of study | | | 0.030 | | | 0.38 |
| Per year | 1.09 | 1.01 to 1.17 | | 0.96 | 0.87 to 1.05 | |
| Outcome | | | <0.001 | | | 0.033 |
| Positive | 4.52 | 3.01 to 6.80 | | 1.89 | 1.05 to 3.37 | |
| Time to publication | | | 0.003 | | | 0.37 |
| Per year | 0.90 | 0.83 to 0.96 | | 0.96 | 0.88 to 1.05 | |

TABLE III.

Multivariate analysis for predictors of publication in journals with a higher impact factor

| Predictor | All topics: or | All topics: 95% ci | All topics: p Value | Oncology only: or | Oncology only: 95% ci | Oncology only: p Value |
|---|---|---|---|---|---|---|
| Outcome | | | <0.001 | | | 0.025 |
| Positive | 4.13 | 2.67 to 6.37 | | 2.03 | 1.10 to 3.77 | |
| Sample size | | | 0.001 | | | |
| Per 100 patients | 1.06 | 1.02 to 1.10 | | | | |
| Analysis | | | <0.001 | | | 0.008 |
| Intention-to-treat | 2.53 | 1.56 to 4.10 | | 2.22 | 1.23 to 4.02 | |
| Nationality of authors | | | <0.001 | | | <0.001 |
| North American | Reference | | | Reference | | |
| European | 0.36 | 0.23 to 0.58 | | 0.13 | 0.06 to 0.29 | |
| International | 0.41 | 0.20 to 0.82 | | 0.17 | 0.06 to 0.48 | |
| Non-English-speaking | 0.50 | 0.20 to 1.24 | | 0.13 | 0.04 to 0.43 | |
| Intent of therapy | | | <0.001 | | | 0.044 |
| Adjuvant | 2.58 | 1.61 to 4.15 | | 1.76 | 1.02 to 3.03 | |
| Time to publication | | | <0.001 | | | |
| Per year | 0.84 | 0.77 to 0.92 | | | | |
| Tumour type | | | 0.012 | | | |
| Common | Reference | | | | | |
| Hematologic | 3.15 | 1.41 to 7.03 | | | | |
| Other | 1.39 | 0.90 to 2.13 | | | | |
| Industry-funded | | | | | | 0.027 |
| Yes | | | | 1.89 | 1.07 to 3.34 | |

3.2.1. Supportive Analyses

After excluding the general medical journals (New England Journal of Medicine, The Lancet, JAMA), only journals with low and medium ifs remained. Tables ii and iii present results from the logistic regression analyses (univariate and multivariate respectively). In the multivariate analysis, itt analysis (p = 0.008), positive outcome (p = 0.025), adjuvant therapy trial (p = 0.044), and nationality of authors (p < 0.001) were associated with publication in the medium-if journals Journal of Clinical Oncology and Journal of the National Cancer Institute. Interestingly, industry funding also entered the multivariable model (p = 0.027), although it must be noted that automated selection processes are known to occasionally produce false-positive results. A cluster analysis gave results similar to those for the overall analysis, and thus for brevity, those results are not presented.

4. DISCUSSION

We reviewed 476 phase iii randomized cancer trials published between 1995 and 2005, finding that trials with statistically significant positive outcomes were more likely to be published in high-if journals. More than 60% of the trials published in high-if journals had positive outcomes; only 25% of the trials published in low-if journals had positive outcomes. Those results are consistent with results in earlier reviews of trials in hematology and neonatology7,16. Taken together, those findings suggest an “impact factor bias” in publication, an observation that is concerning, given that trials published in journals with high ifs are, by definition, more widely read and cited. Thus, the more frequent publication of trials with positive outcomes in those journals will not only provide a biased view of the literature to readers, but will also likely exert more influence on the practice patterns of clinicians. Highly cited studies do not always constitute the new “gold standard,” because they could potentially be followed by trials demonstrating contradictory or stronger effects17.

Gluud et al.18 examined the relationships between journal if, trial quality, and study outcome in 530 hepatobiliary rcts. However, their review did not include data about the primary endpoint, and the median sample size was only 45—meaning that most of those rcts would not be powered for survival. The percentage of positive trials was 71% in their series, much higher than the 34% observed in our study or the 33% observed in a review by Yanada et al.16. Gluud et al.18 did not observe any association between if and study outcome, but it is likely that differences with respect to primary endpoints in hepatobiliary trials account for the observed high prevalence of positive trials and the lack of an association between study outcome and if.

“Publication bias” refers to the publication or non-publication of research findings depending on the nature and direction of those findings19. It has been well documented that, compared with rcts reporting negative results, those showing significantly positive results are more likely to be published quickly20–23. In a review of outcome reporting bias studies, three publications found that, compared with trials having nonsignificant outcomes, those with statistically significant outcomes had better odds of being fully reported24. The same studies found that, in 4%–50% of published trials, at least one primary outcome had been changed, introduced, or omitted since registration of the original protocol25. Another possible manifestation of publication bias is the increased likelihood that trials with positive outcomes will be published in journals with higher ifs.

Previous studies have indicated that failure to publish stems predominantly from lack of submission or loss of interest by investigators because of negative results, rather than from rejection of manuscripts23,26–28. Two groups have systematically evaluated the characteristics of submitted manuscripts associated with publication in JAMA29 and in the British Medical Journal, The Lancet, and the Annals of Internal Medicine30. Positive results were reported in 51.4% of studies submitted to JAMA and in 87% of studies submitted to the other three major journals. However, submitted manuscripts were not more likely to be published if they reported positive outcomes, demonstrating a lack of bias at the editorial level for those journals. Investigators should therefore be encouraged to submit trials that have negative outcomes, but that were conducted with high methodologic standards, to high-if journals.

The consort statement13–15 was created to improve the quality of reporting of rcts and was first published in 1996. We found that data about the essential elements of rcts such as primary endpoint or sample size calculation were missing in 7.6% and 14.7% of publications respectively. Although 4 of the 13 journals selected for the present study have not formally endorsed the consort statement (International Journal of Radiation Oncology · Biology · Physics, Leukemia, Breast Cancer Research and Treatment, and British Journal of Cancer), only 42 of the publications in our study (8.8%) were published in those journals. In our analysis, reporting of the primary endpoint, the sample size calculation, and the method of statistical analysis improved with time. The quality of reporting is a reflection both of the submission and of editorial practices.

Pharmaceutical company involvement in medical research is an area of growing concern. The source of funding can influence research design and outcome8,9,31,32. In a multivariate analysis of rcts in breast, colorectal, and non-small-cell lung cancer, a significant p value for the primary endpoint and industry sponsorship were each independently associated with endorsement of the experimental arm8. We found that industry funding was not associated with publication in high-if journals, which is consistent with an earlier study focusing on economic analyses in oncology33. When the analysis was limited to oncology journals, industry funding was associated in logistic regression, but not in linear regression, with publication in the Journal of Clinical Oncology or the Journal of the National Cancer Institute. In our study, rcts with partial or full funding from a pharmaceutical company were categorized as “industry.” The absence of a “mixed” category might have influenced our results.

Trials assessing adjuvant therapy and those involving uncommon tumour types were more likely to be published in high-if journals. Whether that likelihood reflects a preference of the submitting authors or of the editorial boards is unknown. Such trials might be perceived to be of higher clinical importance and might therefore be more likely to be published in high-if journals.

North American authors were more likely to publish in higher-if journals. One potential explanation for that observation is that two of the three high-if journals in the present study are published in North America, and several analyses have suggested the presence of an editorial bias toward accepting manuscripts whose corresponding author lives in the country where the publishing journal is located30,34,35. Alternatively, authors might be more likely to submit manuscripts to journals that are published in their country of residence.

Our review encompasses only the 1995–2005 period. Temporal changes in “impact factor bias” since the conduct of the study—specifically in the era of targeted therapy—are unknown and are a major limitation of this project.

One potential limitation of the present study is that we did not use a formal scale, such as the Jadad scale36, to assess the quality of rcts included in the present analysis. Currently, there is no commonly accepted measure of quality for rcts in oncology, and it is difficult to apply scales developed for other medical specialties (such as the Jadad scale) because many rcts in oncology are not double-blinded. Furthermore, assessment of methodologic quality depends on the particular scale used37. We applied a component method instead. Two key components that have been used by other groups were selected: analysis according to the itt principle, and description of a sample size calculation38,39.

Another limitation of our study is the inclusion of only 13 English-language journals. It is likely that some oncology rcts are published in journals not included in the present study, although it is also likely that the number of those publications would be small. It is important to note that we arbitrarily classified journals with an if of 3–10 as the low-if group. In other scientific disciplines, those ifs would be considered high. The inclusion of disease-specific oncology journals—namely, Leukemia and Breast Cancer Research and Treatment—in the low-if group could potentially lead to overrepresentation of those diseases. However, only 8 trials that met our inclusion criteria were published in those two journals, and thus the effect was minimal. In addition, we did not assess the clinical implications of the study intervention. Trials that have the potential to be practice-changing and that address important questions are more likely to be published in high-impact journals regardless of the outcome of the trial.

5. CONCLUSIONS

Our study demonstrates that an “impact factor bias” affects the publication of oncology rcts. Trials with positive outcomes are more likely to be published in journals with higher ifs, independent of other measures of methodologic quality. Investigators should be encouraged to submit trials of high methodologic quality to high-if journals regardless of the study outcomes.

6. CONFLICT OF INTEREST DISCLOSURES

This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors. The authors have no financial conflicts of interest to disclose.

7. REFERENCES

- 1.Garfield E. Citation analysis as a tool in journal evaluation. Science. 1972;178:471–9. doi: 10.1126/science.178.4060.471. [DOI] [PubMed] [Google Scholar]

- 2.Seglen PO. Why the impact factor of journals should not be used for evaluating research. BMJ. 1997;314:498–502. doi: 10.1136/bmj.314.7079.497. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Adam D. The counting house. Nature. 2002;415:726–9. doi: 10.1038/415726a. [DOI] [PubMed] [Google Scholar]

- 4.Callaham M, Wears RL, Weber E. Journal prestige, publication bias, and other characteristics associated with citation of published studies in peer-reviewed journals. JAMA. 2002;287:2847–50. doi: 10.1001/jama.287.21.2847. [DOI] [PubMed] [Google Scholar]

- 5.Jimenez–Contreras E, Delgado Lopez–Cozar E, Ruiz–Perez R, Fernandez VM. Impact-factor rewards affect Spanish research. Nature. 2002;417:898. doi: 10.1038/417898b. [DOI] [PubMed] [Google Scholar]

- 6.Taubes G. Measure for measure in science. Science. 1993;260:884–6. doi: 10.1126/science.8493516. [DOI] [PubMed] [Google Scholar]

- 7.Littner Y, Mimouni FB, Dollberg S, Mandel D. Negative results and impact factor: a lesson from neonatology. Arch Pediatr Adolesc Med. 2005;159:1036–7. doi: 10.1001/archpedi.159.11.1036. [DOI] [PubMed] [Google Scholar]

- 8.Booth CM, Cescon DW, Wang L, Tannock IF, Krzyzanowska MK. Evolution of the randomized controlled trial in oncology over three decades. J Clin Oncol. 2008;26:5458–64. doi: 10.1200/JCO.2008.16.5456. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Peppercorn J, Blood E, Winer E, Partridge A. Association between pharmaceutical involvement and outcomes in breast cancer clinical trials. Cancer. 2007;109:1239–46. doi: 10.1002/cncr.22528. [DOI] [PubMed] [Google Scholar]

- 10.Tam VC, Hotte SJ. Consistency of phase iii clinical trial abstracts presented at an annual meeting of the American Society of Clinical Oncology compared with their subsequent full-text publications. J Clin Oncol. 2008;26:2205–11. doi: 10.1200/JCO.2007.14.6795. [DOI] [PubMed] [Google Scholar]

- 11.You B, Gan HK, Pond GR, Chen EX. Consistency in reporting of primary endpoints (pep) from registration to publication for modern randomized oncology phase iii trials [abstract 6031] J Clin Oncol. 2010;28 doi: 10.1200/JCO.2011.37.0890. [Available online at: http://meeting.ascopubs.org/cgi/content/abstract/28/15_suppl/6031; cited May 25, 2014] [DOI] [PubMed] [Google Scholar]

- 12.Armitage P. Tests for linear trends in proportions and frequencies. Biometrics. 1955;11:375–86. doi: 10.2307/3001775. [DOI] [Google Scholar]

- 13.Begg C, Cho M, Eastwood S, et al. Improving the quality of reporting of randomized controlled trials. The consort statement. JAMA. 1996;276:637–9. doi: 10.1001/jama.1996.03540080059030. [DOI] [PubMed] [Google Scholar]

- 14.Moher D, Schulz KF, Altman DG. The consort statement: revised recommendations for improving the quality of reports of parallel-group randomised trials. Lancet. 2001;357:1191–4. doi: 10.1016/S0140-6736(00)04337-3. [DOI] [PubMed] [Google Scholar]

- 15.Schulz KF, Altman DG. Moher D on behalf of the consort Group. consort 2010 statement: updated guidelines for reporting parallel group randomized trials. Ann Intern Med. 2010;152:726–32. doi: 10.7326/0003-4819-152-11-201006010-00232. [DOI] [PubMed] [Google Scholar]

- 16.Yanada M, Narimatsu H, Suzuki T, Matsuo K, Naoe T. Randomized controlled trials of treatments for hematologic malignancies: study characteristics and outcomes. Cancer. 2007;110:334–9. doi: 10.1002/cncr.22776. [DOI] [PubMed] [Google Scholar]

- 17.Ioannidis JP. Contradicted and initially stronger effects in highly cited clinical research. JAMA. 2005;294:218–28. doi: 10.1001/jama.294.2.218. [DOI] [PubMed] [Google Scholar]

- 18.Gluud LL, Sorensen TI, Gotzsche PC, Gluud C. The journal impact factor as a predictor of trial quality and outcomes: cohort study of hepatobiliary randomized clinical trials. AM J Gastroenterol. 2005;100:2431–5. doi: 10.1111/j.1572-0241.2005.00327.x. [DOI] [PubMed] [Google Scholar]

- 19.Sterne JE, Moher D. Chapter 10: Addressing reporting biases. In: Higgins JPT, Green S, editors. Cochrane Handbook for Systematic Reviews of Interventions. Chichester, UK: John Wiley and Sons; 2009. Ver. 5.0.2. [Google Scholar]

- 20.Dickersin K. The existence of publication bias and risk factors for its occurrence. JAMA. 1990;263:1385–9. doi: 10.1001/jama.1990.03440100097014. [DOI] [PubMed] [Google Scholar]

- 21. Easterbrook PJ, Berlin JA, Gopalan R, Matthews DR. Publication bias in clinical research. Lancet. 1991;337:867–72. doi: 10.1016/0140-6736(91)90201-Y.

- 22. Hopewell S, Loudon K, Clarke MJ, Oxman AD, Dickersin K. Publication bias in clinical trials due to statistical significance or direction of trial results. Cochrane Database Syst Rev. 2009:MR000006. doi: 10.1002/14651858.MR000006.pub3.

- 23. Krzyzanowska MK, Pintilie M, Tannock IF. Factors associated with failure to publish large randomized trials presented at an oncology meeting. JAMA. 2003;290:495–501. doi: 10.1001/jama.290.4.495.

- 24. Dwan K, Altman DG, Arnaiz JA, et al. Systematic review of the empirical evidence of study publication bias and outcome reporting bias. PLoS One. 2008;3:e3081. doi: 10.1371/journal.pone.0003081.

- 25. Dwan K, Altman DG, Cresswell L, Blundell M, Gamble CL, Williamson PR. Comparison of protocols and registry entries to published reports for randomised controlled trials. Cochrane Database Syst Rev. 2011:MR000031. doi: 10.1002/14651858.MR000031.pub2.

- 26. De Bellefeuille C, Morrison CA, Tannock IF. The fate of abstracts submitted to a cancer meeting: factors which influence presentation and subsequent publication. Ann Oncol. 1992;3:187–91. doi: 10.1093/oxfordjournals.annonc.a058147.

- 27. Timmer A, Hilsden RJ, Cole J, Hailey D, Sutherland LR. Publication bias in gastroenterological research: a retrospective cohort study based on abstracts submitted to a scientific meeting. BMC Med Res Methodol. 2002;2:7. doi: 10.1186/1471-2288-2-7.

- 28. Camacho LH, Bacik J, Cheung A, Spriggs DR. Presentation and subsequent publication rates of phase I oncology clinical trials. Cancer. 2005;104:1497–504. doi: 10.1002/cncr.21337.

- 29. Olson CM, Rennie D, Cook D, et al. Publication bias in editorial decision making. JAMA. 2002;287:2825–8. doi: 10.1001/jama.287.21.2825.

- 30. Lee KP, Boyd EA, Holroyd–Leduc JM, Bacchetti P, Bero LA. Predictors of publication: characteristics of submitted manuscripts associated with acceptance at major biomedical journals. Med J Aust. 2006;184:621–6. doi: 10.5694/j.1326-5377.2006.tb00418.x.

- 31. Djulbegovic B, Lacevic M, Cantor A, et al. The uncertainty principle and industry-sponsored research. Lancet. 2000;356:635–8. doi: 10.1016/S0140-6736(00)02605-2.

- 32. Lexchin J, Bero LA, Djulbegovic B, Clark O. Pharmaceutical industry sponsorship and research outcome and quality: systematic review. BMJ. 2003;326:1167–70. doi: 10.1136/bmj.326.7400.1167.

- 33. Knox KS, Adams JR, Djulbegovic B, Stinson TJ, Tomor C, Bennet CL. Reporting and dissemination of industry versus non-profit sponsored economic analyses of six novel drugs used in oncology. Ann Oncol. 2000;11:1591–5. doi: 10.1023/A:1008309817708.

- 34. Ernst E, Kienbacher T. Chauvinism. Nature. 1991;352:560. doi: 10.1038/352560b0.

- 35. Link AM. US and non-US submissions: an analysis of reviewer bias. JAMA. 1998;280:246–7. doi: 10.1001/jama.280.3.246.

- 36. Jadad AR, Moore RA, Carroll D, et al. Assessing the quality of reports of randomized clinical trials: is blinding necessary? Control Clin Trials. 1996;17:1–12. doi: 10.1016/0197-2456(95)00134-4.

- 37. Juni P, Witschi A, Bloch R, Egger M. The hazards of scoring the quality of clinical trials for meta-analysis. JAMA. 1999;282:1054–60. doi: 10.1001/jama.282.11.1054.

- 38. Huwiler–Muntener K, Juni P, Junker C, Egger M. Quality of reporting of randomized trials as a measure of methodologic quality. JAMA. 2002;287:2801–4. doi: 10.1001/jama.287.21.2801.

- 39. Soares HP, Daniels S, Kumar A, et al. Bad reporting does not mean bad methods for randomised trials: observational study of randomised controlled trials performed by the Radiation Therapy Oncology Group. BMJ. 2004;328:22–4. doi: 10.1136/bmj.328.7430.22.