Abstract

Purpose:

Intensity-modulated adaptive radiotherapy (ART) has been the focus of considerable research and development work due to its potential therapeutic benefits. However, in light of its unique quality assurance (QA) challenges, no robust framework for its clinical implementation has yet been described. Moreover, recent position papers by ASTRO and AAPM have firmly endorsed pretreatment patient-specific IMRT QA, which limits the feasibility of online ART. The authors aim to address these obstacles by applying failure mode and effects analysis (FMEA) to identify high-priority errors and appropriate risk-mitigation strategies for clinical implementation of intensity-modulated ART.

Methods:

An experienced team of two clinical medical physicists, one clinical engineer, and one radiation oncologist was assembled to perform a standard FMEA for intensity-modulated ART. A set of 216 potential radiotherapy failures compiled by the forthcoming AAPM task group 100 (TG-100) was used as the basis. Of the 216 failures, 127 were identified as most relevant to an ART scheme. Using the associated TG-100 FMEA values as a baseline, the team considered how the likelihood of occurrence (O), outcome severity (S), and likelihood of the failure going undetected (D) would change for ART. New risk priority numbers (RPN) were calculated. Failures characterized by RPN ≥ 200 were identified as potentially critical.

Results:

FMEA revealed that ART RPN increased for 38% (n = 48/127) of potential failures, with 75% (n = 36/48) attributed to failures in the segmentation and treatment planning processes. Forty-three of 127 failures were identified as potentially critical. Risk-mitigation strategies include implementing a suite of quality control and decision support software, specialty QA software/hardware tools, and an increase in specially trained personnel.

Conclusions:

Results of the FMEA-based risk assessment demonstrate that intensity-modulated ART introduces different (but not necessarily more) risks than standard IMRT and may be safely implemented with the proper mitigations.

Keywords: adaptive radiotherapy, ART, FMEA, patient safety, QA

I. INTRODUCTION

Adaptive radiotherapy (ART) has garnered considerable attention for years. In a departure from traditional treatment schemes that employ a single static plan, ART incorporates anatomical feedback to guide dynamic plan adaptation throughout treatment.1 By monitoring geometric or biological variations in the changing anatomy and modifying the plan accordingly, both normal tissue avoidance and target coverage can be optimized. Because of these potential advantages, there has been strong interest in ART as a potentially superior treatment technique for patients who experience substantial anatomical changes during treatment, including weight loss or gain, tumor shrinkage or growth, anatomical deformation and motion, and even metabolic or functional changes of the tumor. Publications on tools, techniques, and potential benefits of ART are plentiful. Investigational studies demonstrating significant improvement in treatment efficacy using adaptive techniques1,2 have motivated clinical implementation. The first reports of ART in a clinical setting are now emerging and reveal dosimetric advantages in the pelvis and head and neck.1,2

Despite the many promising studies and the sophisticated technology supporting clinical use of ART, its unique quality assurance (QA) challenges pose a major barrier. Standard forms of QA, such as detailed pretreatment plan reviews, are impractical when imaging, planning, and treatment delivery occur within minutes, not days or weeks. There are no clear answers as to how ART (especially intensity-modulated ART) will practicably fit within a quality assurance scheme that is both safe and efficient. Recent position papers by ASTRO and AAPM have firmly endorsed pretreatment patient-specific IMRT QA, which places online intensity-modulated ART at odds with the customary, time-tested practice of traditional phantom-based IMRT QA. Furthermore, there is considerable uncertainty surrounding the potential risk and impact of ART-based errors. While analyses of radiotherapy error records from the last few decades have shed light on the origin and management of common treatment errors,3 no such data exist for ART. Because adaptive techniques can involve an expedited timeframe from planning to delivery, introduce additional sources of potential failure, and entail unique QA challenges that are not well handled by current QA tools, there is a common belief that ART is inherently riskier than standard radiotherapy. However, there are no data demonstrating the magnitude or distribution of these risks throughout the ART process.

Given the scope of these challenges and the degree of risk uncertainty, no robust quality management strategy has been established for ART. The lack of a framework for the safe implementation of ART continues to deter its practice, while the lack of ART practice limits the implementation of data-driven quality management. We aim to address this void by using a process-based approach, recently endorsed by community experts as a means to optimize radiotherapy safety strategies,4–6 to evaluate the QA and safety needs for implementation of ART. Since ART-based error data do not yet exist, process-based analysis was performed using expert-based data from the forthcoming AAPM task group 100 (TG-100) as a baseline. Failure mode and effects analysis (FMEA) was used to identify and quantify risks for potential errors occurring during ART. For simplicity, a single scheme was considered here: online intensity-modulated ART with an integrated imaging, planning, and treatment system. Through evaluation of the ART risk profile, vulnerabilities in the ART process were identified, and quality control (QC) strategies to address high-priority QA and safety needs are discussed.

II. METHODS AND MATERIALS

II.A. FMEA

FMEA involves the identification of process-based failure modes and their associated risks. Risk assessment is achieved by establishing (1) the probability of occurrence for each possible failure (O), (2) the severity of the failure effect if unmitigated (S), and (3) the probability that the failure will go undetected (D). Each factor is rated from 1 (low probability or severity) to 10 (high probability or severity), and the three ratings are multiplied to obtain a single risk priority number (RPN):

$$\mathrm{RPN} = O \times S \times D \qquad (1)$$
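Equation (1) is simple enough to express directly. The sketch below is a minimal illustration, not part of TG-100: the function name `risk_priority_number` and the explicit range check are assumptions added here to enforce the 1 to 10 rating scale described above.

```python
def risk_priority_number(o: int, s: int, d: int) -> int:
    """Return RPN = O x S x D after checking each rating is an integer from 1 to 10.

    Hypothetical helper for illustration; the range check mirrors the 1-10 FMEA scale.
    """
    for name, value in (("O", o), ("S", s), ("D", d)):
        if not isinstance(value, int) or not 1 <= value <= 10:
            raise ValueError(f"{name} must be an integer from 1 to 10, got {value!r}")
    return o * s * d


# The scale bounds the RPN between 1 and 1000.
print(risk_priority_number(1, 1, 1))     # 1
print(risk_priority_number(10, 10, 10))  # 1000
```

Because the RPN is a product, a single high rating (for example, a very high D for an error that is hard to catch) can raise the priority of a failure that is rare or only moderately severe.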

II.B. FMEA for ART

An experienced team composed of two clinical medical physicists, one clinically trained engineer, and one radiation oncologist was assembled. FMEA was executed for an online intensity-modulated ART scheme performed on an integrated (i.e., sharing a single database) planning, onboard imaging, and treatment device equipped with some form of automated or semiautomated segmentation/planning software. A set of 216 radiotherapy failures compiled by the forthcoming AAPM TG-100 was used as the basis for analysis6 (Saiful Huq, personal communication, March 13, 2013).

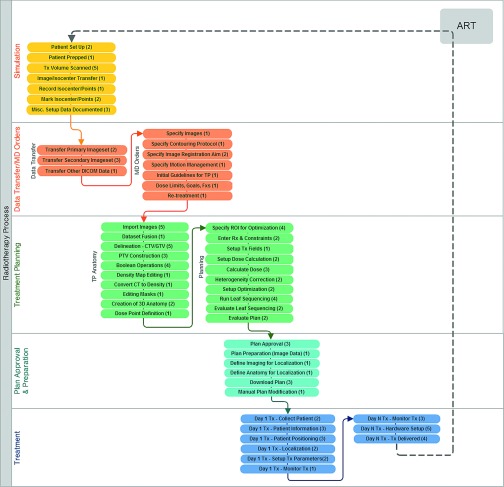

The team first identified the failure modes most relevant to the ART process. To isolate ART-specific failures, it was assumed that the initial simulation and planning were performed error-free. Onboard imaging and subsequent adaptive planning were regarded as the ART simulation and treatment planning processes (Fig. 1). Failure modes related to simulation, data transfer, treatment planning (including directives, image fusion, anatomical segmentation, etc.), plan approval and preparation, and treatment were included. Failure modes identified as low priority and unrelated to the method of treatment (standard IMRT or ART) were omitted from analysis. These included failure modes relating to initial entry of patient/treatment information into a written or electronic chart, specific choice or properties of immobilization devices, supplemental pretreatment imaging (for example, PET), initial “judgment-based” planning decisions (for example, initial choice of treatment machine or beam energy), minor inconveniences in plan optimization, evaluation of delivery system limitations (for example, gantry/table collisions), formal completion of the prescription, and the ability of the planning system to support anatomical models and 4D data. In total, 127 of the 216 failures were identified as most relevant and of high priority to an intensity-modulated ART scheme. A summary of these failure modes is presented in Table I.

FIG. 1.

Flow diagram of major intensity-modulated ART processes and subprocesses. The number of failure modes per subprocess is included in parentheses.

TABLE I.

Summary of failure modes analyzed for FMEA.

| Process | Potential failure modes |
|---|---|
| Simulation | Incorrect/inadequate immobilization, patient preparation or documentation |
| | Faulty image data |
| Data transfer (images and other DICOM data) | Errors in data transfer between imaging systems and TPS (human, software, hardware) |
| Treatment planning directive (MD orders) | Incorrect/inadequate specification of image sets, protocols, registration goals, guidelines, dose limits, or fractionation for planning or delineation |
| | Incorrect/inadequate documentation of previous treatments |
| TP anatomy | Errors in image import and registration |
| | Incorrect/inadequate critical and target structure delineation and construction |
| | Inaccurate density map/correction |
| | Errors in creating, using, editing, or saving anatomical models |
| | Incorrect/inadequate dose point definitions |
| Treatment planning | Incorrect/inappropriate region of interest (ROI) volumes for optimization |
| | Incorrect/incomplete/inappropriate prescription, objectives, planning/treatment techniques, beam energy, machine, isocenter, or dose calculation parameters |
| | Errors in optimization, dose calculation, heterogeneity correction, leaf sequencing, or plan evaluation |
| Plan approval and preparation | Bad or incorrect plan approved |
| | Incorrect/inadequate localization images and protocols planned |
| | Errors in plan information indicated for treatment |
| Treatment | Patient medical condition changes between prescription and treatment |
| | Incorrect patient, records, plan data, isocenter, position, treatment parameters/accessories |
| | Errors in setup, immobilization, localization, treatment parameter data, machine operation, or treatment delivery |

Each of the 127 failures was then evaluated for likelihood of occurrence (O), outcome severity (S), and likelihood of going undetected (D). The FMEA rating scale proposed by AAPM TG-100 was used for scoring.7 Replicating the methodology employed for TG-100, it was assumed that no specialty QA tools (including patient-specific QA) or additional staffing were used for ART. Factors relating to increased pressures (e.g., time constraints and real-time distractions) were considered, and their effects on O and D values were taken into account. The team also considered changes in the overall severity of failures due to error accumulation over multiple fractions. Using the associated TG-100 values for standard IMRT as a baseline, the team assigned new O, S, and D values to each potential failure by consensus, and new RPN values were calculated.
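The rescoring step can be pictured as pairing each failure mode's baseline (standard IMRT) ratings with its consensus ART ratings and recomputing the RPN. The data model below is a hypothetical sketch, and the example scores are invented for illustration; they are not values from TG-100 or from this study.

```python
from dataclasses import dataclass

Scores = tuple[int, int, int]  # (O, S, D)


@dataclass
class FailureMode:
    """One failure mode scored for standard IMRT (baseline) and re-scored for ART."""
    description: str
    imrt: Scores  # baseline ratings, e.g. taken from the TG-100 scoring
    art: Scores   # consensus ratings assigned for the ART scheme

    @staticmethod
    def rpn(scores: Scores) -> int:
        o, s, d = scores
        return o * s * d

    @property
    def delta_rpn(self) -> int:
        """Positive when ART raises the risk priority of this failure mode."""
        return self.rpn(self.art) - self.rpn(self.imrt)


# Invented scores: less time for review makes a contouring error harder to catch (D rises).
fm = FailureMode("Target contour not edited to match daily anatomy", imrt=(5, 7, 6), art=(6, 7, 7))
print(FailureMode.rpn(fm.imrt), FailureMode.rpn(fm.art), fm.delta_rpn)  # 210 294 84
```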

Failures characterized by O ≥ 6 (at least moderate occurrence), S ≥ 7 (serious injury or death), and D ≥ 5 (at least a moderate chance of going undetected), which together yield a minimum RPN of 6 × 7 × 5 = 210, were categorized as high priority. For simplicity, the team designated failures with an RPN ≥ 200 as potentially critical. Finally, quality control tools, resources, and processes were identified for points of critical failure.
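The relationship between the two criteria can be checked directly. The functions below are a hypothetical sketch; the example ratings (3, 8, 9) are invented to show a case that the simplified RPN rule captures but the three-floor rule does not.

```python
def is_high_priority(o: int, s: int, d: int) -> bool:
    """Stated high-priority criterion: O >= 6, S >= 7, and D >= 5."""
    return o >= 6 and s >= 7 and d >= 5


def is_potentially_critical(o: int, s: int, d: int, threshold: int = 200) -> bool:
    """Simplified criterion adopted in the study: RPN >= 200."""
    return o * s * d >= threshold


# Every high-priority failure clears the RPN threshold, since 6 * 7 * 5 = 210 >= 200 ...
print(is_high_priority(6, 7, 5), is_potentially_critical(6, 7, 5))  # True True
# ... but the converse does not hold: a rare yet hard-to-detect failure can also exceed 200.
print(is_high_priority(3, 8, 9), is_potentially_critical(3, 8, 9))  # False True
```

In other words, the RPN ≥ 200 rule is somewhat broader than the three-floor definition of high priority, which errs on the side of flagging more failures for review.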

III. RESULTS

III.A. Overall trends

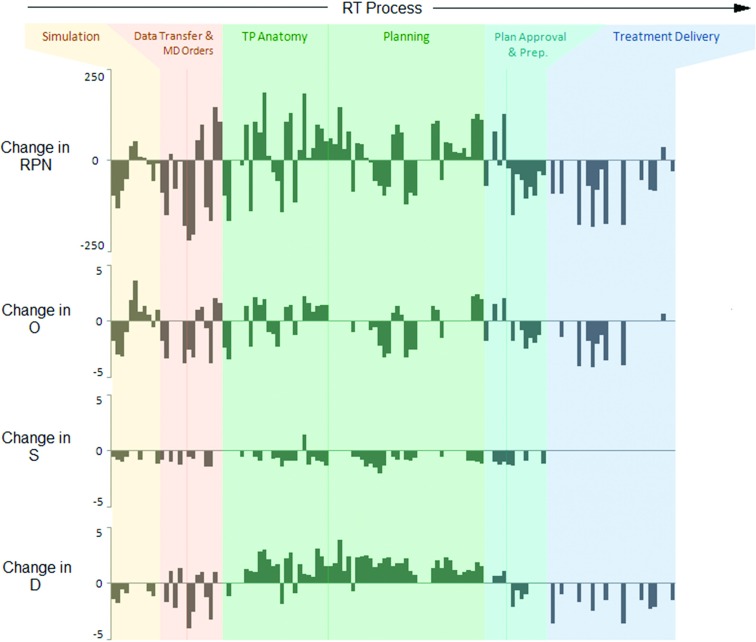

ART demonstrated a wider range and higher maximum of O, D, and RPN values compared to standard IMRT (Table II). RPN values increased for 38% (n = 48/127) of potential failures, with 75% (n = 36/48) attributed to failures in the segmentation and treatment planning processes. Increased O values were observed for 26% (n = 33/127) of potential failures, with 36% (n = 12/33) attributed to failures in segmentation. S values increased for only 1% of potential failures. D values increased (i.e., decreased probability of detection) for 44% (n = 56/127) of potential failures, with 86% (n = 48/56) attributed to failures in the segmentation and treatment planning processes. This was largely due to increased time constraints, user inattention, and inadequate training of onsite personnel.
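The percentages quoted above follow directly from the reported counts. The short check below recomputes them; the labels paraphrase the text, and no data beyond the reported counts are used.

```python
# (label, count, total) as reported in the text above.
reported = [
    ("RPN increased", 48, 127),
    ("  ...in segmentation/planning", 36, 48),
    ("O increased", 33, 127),
    ("  ...in segmentation", 12, 33),
    ("D increased", 56, 127),
    ("  ...in segmentation/planning", 48, 56),
]


def percent(count: int, total: int) -> int:
    """Whole-number percentage; none of these shares falls exactly on .5."""
    return round(100 * count / total)


for label, count, total in reported:
    print(f"{label}: {count}/{total} = {percent(count, total)}%")
```

The printed values (38%, 75%, 26%, 36%, 44%, and 86%) agree with those in the text.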

TABLE II.

Mean, standard deviation (σ), minimum, maximum, and range of occurrence (O), severity (S), detectability (D), and RPN values for the 127 failure modes under standard IMRT and intensity-modulated ART.

| Statistic | O (IMRT) | S (IMRT) | D (IMRT) | RPN (IMRT) | O (ART) | S (ART) | D (ART) | RPN (ART) |
|---|---|---|---|---|---|---|---|---|
| Mean ± σ | 5 ± 1 | 7 ± 1 | 6 ± 1 | 188 ± 60 | 4 ± 2 | 7 ± 1 | 6 ± 2 | 174 ± 105 |
| Minimum | 2 | 3 | 2 | 46 | 1 | 3 | 1 | 11 |
| Maximum | 7 | 9 | 8 | 366 | 8 | 9 | 9 | 441 |
| Range | 5 | 6 | 6 | 320 | 7 | 6 | 8 | 430 |

III.B. Process-specific trends

There was a reduction in O and D values for simulation processes and treatment planning directives due to the availability of prior knowledge of immobilization, imaging, and treatment directives given for the initial plan. For the majority of segmentation and planning failures, RPN values increased (Fig. 2). This was largely due to tighter time constraints and user inattention. O and D values were reduced for some failures due to the availability of the initial treatment plan as a reference. These included failures in specifying optimization goals, planning constraints, prescription information, dose calculation parameters, and beam energy.

FIG. 2.

Change in RPN, O, S, and D values for intensity-modulated ART (relative to standard IMRT) for each potential failure. (TP = Treatment planning).

Increased RPN values for plan approval failures were attributed to increased time constraints, user inattention, and inadequate training of onsite personnel. Plan preparation, however, experienced reduced RPN values. Failure occurrence was deemed to decrease for many plan preparation failure modes (preparation of localization images, transferring the plan to the delivery system, etc.) due to system integration. The probability of detecting these errors was improved since the image and treatment data were handled by staff immediately before transfer to the machine, making any discrepancies more obvious than they otherwise would be.

Reduction of treatment delivery RPN values largely reflected fewer daily setup, positioning, and localization errors with ART. The integrated nature of the ART system reduced the likelihood of inconsistencies between the planning and treatment systems. RPN values for severe, systematic delivery system failures showed no substantial change.

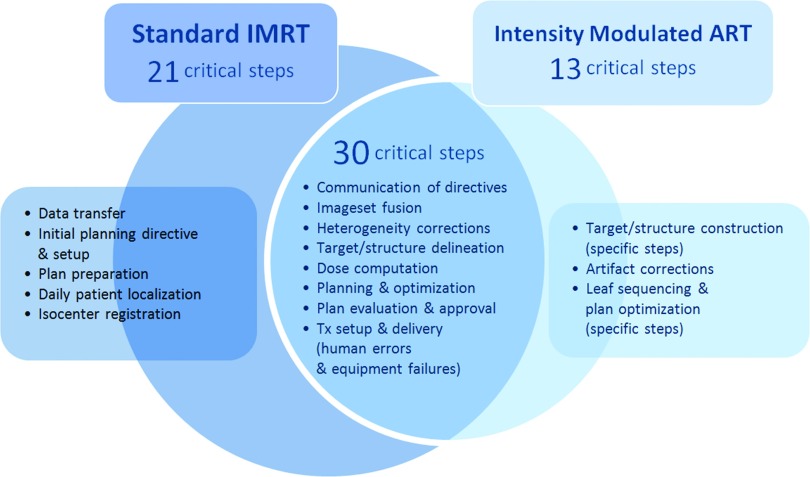

III.C. Critical failures

Forty-three of the 127 potential failures analyzed for intensity-modulated ART were identified as potentially critical (RPN ≥ 200). Under the same criterion, 51 failures were identified as potentially critical for standard IMRT. ART introduced 13 new critical failures, while 30 critical failures were common to ART and standard IMRT (Fig. 3). For the majority of these common failures (n = 23/30), RPN values were higher for ART than for standard IMRT. Delineation errors, optimization errors, and equipment failures during treatment delivery remained among the highest ranked failures for both standard IMRT and intensity-modulated ART because of the difficulty of detecting them. Most failures were elevated to a “critical” rating because of increased time constraints, real-time distractions, and inadequate training of onsite personnel.
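The comparison summarized in Fig. 3 amounts to set operations on the failure modes that exceed the RPN threshold under each scheme. The sketch below assumes hypothetical failure-mode names and invented RPN values; only the threshold of 200 comes from the study.

```python
def critical_sets(imrt_rpn: dict[str, int], art_rpn: dict[str, int], threshold: int = 200):
    """Return (common, ART-only, IMRT-only) sets of failure modes with RPN >= threshold."""
    crit_imrt = {fid for fid, rpn in imrt_rpn.items() if rpn >= threshold}
    crit_art = {fid for fid, rpn in art_rpn.items() if rpn >= threshold}
    return crit_imrt & crit_art, crit_art - crit_imrt, crit_imrt - crit_art


# Invented RPNs for four failure modes, for illustration only.
imrt = {"delineation": 252, "optimization": 240, "patient setup": 224, "plan approval": 180}
art = {"delineation": 315, "optimization": 280, "patient setup": 96, "plan approval": 245}

common, art_only, imrt_only = critical_sets(imrt, art)
print(sorted(common))     # ['delineation', 'optimization']
print(sorted(art_only))   # ['plan approval']
print(sorted(imrt_only))  # ['patient setup']
```

Applied to the study's counts, the same partition gives 30 common and 13 ART-only critical failures (43 in total for ART), which implies that 51 - 30 = 21 failures were critical for standard IMRT only.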

FIG. 3.

Processes with occurrence of common and unique critical failures for intensity-modulated ART and standard IMRT. (Tx = treatment).

III.D. Quality control strategies

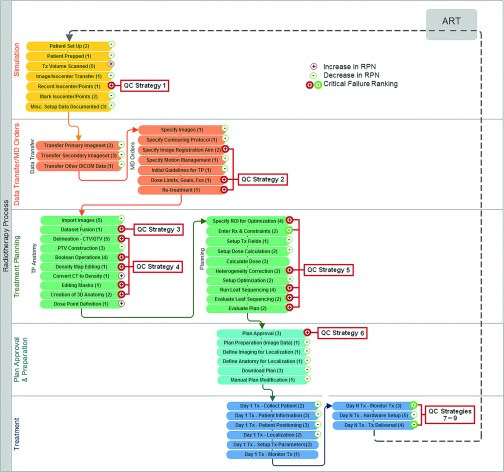

Nine major points in the overall ART process were identified as vulnerable to critical failures. Control strategies for each critical failure are listed in Table III and referenced in the process map displayed in Fig. 4. Key strategies are discussed below.

TABLE III.

Mitigation strategies for ART processes with critical failures.

| | Failure | QC strategy | Prototypes and commercial tools |
|---|---|---|---|
| (1) | Isocenter documentation | Automated isocenter capture, checklists, monitoring trends in daily patient shifts | |
| (2) | Miscommunication of planning directives and failure to properly account for dose accumulation | Well-defined protocols, stable clinical workflow, staff training, integrated record management, electronic physician order, and whiteboard systems | Santanam (Ref. 8), Mallalieu (Ref. 9) |
| (3) | Poor dataset fusion | Automated fusion tools, specialty training for onsite staff | |
| (4) | Incorrect target/structure delineation and construction | Automated contour integrity verification software | ImSimQAcontour, StructSure (not specifically designed for ART) |
| (5) | Poor plan optimization and/or incorrect dose computation | Automated software verifying dose computation, leaf sequencing, and plan integrity | RadCalc (LifeLine Software), IMSure (Standard Imaging), muCheck (Oncology Data Systems Imaging), Sun (Ref. 16), Xing (Ref. 24), Yang (Ref. 12) |
| (6) | Poor plan review | Automated comparisons between planning goals and achieved goals, decision support software | Zhu (Ref. 13), Moore (Ref. 14) |
| (7) | Incorrect interpretation of plan data for treatment delivery | Independent verification software comparing data indicated by the planning system to data read by the delivery system | QAPV (IHE-RO) (Ref. 15) |
| (8) | Failures in treatment parameter setup on treatment machine | Simulated delivery, pretreatment (running gantry rotations and MLC patterns without dose output) | Sun (Ref. 16), QUASAR™ Automated Delivery QA Software (Modus Medical) |
| | | Retrospective MLC QA, post-treatment | |
| (9) | Failures occurring during treatment delivery | Transmission detectors | In vivo EPID dosimetry, DAVID harp chamber, MatriXXEvolution, investigational transmission detectors [Islam (Ref. 19), Goulet (Ref. 20), Wong (Ref. 21)] |
| | | Real-time MLC/gantry monitoring | Jiang (Ref. 22) |

FIG. 4.

Flow diagram of major intensity-modulated ART processes and subprocesses. The number of failure modes per subprocess is included in parentheses. Each subprocess is annotated to indicate an increase or decrease in the average RPN of the associated failure modes. Critical failures are indicated by bold symbols. Process points necessitating QC strategies are also indicated. Corresponding QC strategies are listed in Table III. QC = quality control.

Failures associated with isocenter documentation and communication of planning and fusion directives for adaptive treatment (including the proper documentation and interpretation of tracked dose accumulation from fraction to fraction) necessitate a combination of well-documented protocols, stable clinical workflow, staff training, and a reliable record management system. Electronic physician ordering and whiteboard systems are also an effective means for mitigating communication failures.8,9 Quality control measures for dataset fusion include both automated fusion tools and trained manual inspection.10

Although automated segmentation tools are likely to become commonplace in online ART to increase efficiency and reduce human error, automated quality control software is still necessary to detect segmentation errors. A system that inspects and compares the position, size, shape, and volume of newly contoured structures against previously contoured structure(s) would enable an evaluation of contour accuracy using quantifiable metrics. Commercial software tools such as ImSimQAcontour (Modus Medical Devices, Inc.) and StructSure (Standard Imaging, Inc.) already offer similar capabilities designed to test interuser and intersystem agreement, and could be extended to detect recontouring errors. For application to ART, appropriate metrics and tolerances for mobile and deformable structures must be established. One option is to use a clinical database of acceptable ranges. Tolerances could also be constructed based on physiologic models. Alternatively, a redundancy check using a separate, independent autosegmentation system could be employed.
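The position, size, and overlap comparison described above can be sketched with structures represented as sets of voxel indices. This is a minimal illustration under stated assumptions: isotropic voxels, a single previous contour as the reference, and placeholder tolerances. It does not reproduce ImSimQAcontour or StructSure, and, as noted above, real tolerances for mobile and deformable structures would have to be established clinically. The Dice coefficient stands in here as a combined measure of shape and position agreement.

```python
from math import dist

Voxel = tuple[int, int, int]


def centroid(mask: set[Voxel]) -> tuple[float, ...]:
    """Mean voxel index along each axis."""
    return tuple(sum(v[axis] for v in mask) / len(mask) for axis in range(3))


def compare_contours(current: set[Voxel], reference: set[Voxel], voxel_mm: float = 1.0,
                     max_volume_change: float = 0.2, max_shift_mm: float = 5.0,
                     min_dice: float = 0.7) -> list[str]:
    """Compare a newly contoured structure with a previous one; return any warnings.

    All tolerances are placeholders, not validated clinical values.
    Assumes isotropic voxels of size voxel_mm.
    """
    if not reference:
        raise ValueError("reference structure is empty")
    if not current:
        return ["current structure is empty"]
    warnings = []
    volume_change = abs(len(current) - len(reference)) / len(reference)
    if volume_change > max_volume_change:
        warnings.append(f"volume changed by {volume_change:.0%}")
    shift_mm = dist(centroid(current), centroid(reference)) * voxel_mm
    if shift_mm > max_shift_mm:
        warnings.append(f"centroid shifted {shift_mm:.1f} mm")
    dice = 2 * len(current & reference) / (len(current) + len(reference))
    if dice < min_dice:
        warnings.append(f"Dice overlap {dice:.2f}")
    return warnings


reference = {(x, y, z) for x in range(10) for y in range(10) for z in range(10)}
shifted = {(x + 8, y, z) for (x, y, z) in reference}
print(compare_contours(reference, reference))  # []
print(compare_contours(shifted, reference))    # ['centroid shifted 8.0 mm', 'Dice overlap 0.20']
```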

Automated quality control software will also be instrumental in detecting planning errors and facilitating more effective plan review. Peng et al.11 have described the use of a commercial monitor unit verification tool for online ART QA. Yang and Moore12 have described a more comprehensive tool for automated verification of plan integrity, which includes specific error detection for contours (empty, incomplete, etc.), beams (inconsistencies in isocenter, type, etc.), dose calculation parameters, IMRT optimization, and other plan components. The authors found that implementation of their tool led to a decrease in plan-related failures in the clinic, and the tool is now in routine use in their clinical practice. This type of comprehensive automated analysis is well suited to rapid error detection in adaptive plans.
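A rule-based checker of the kind described by Yang and Moore can be sketched as a list of independent checks over the plan. The data model, the rules, and their thresholds below are hypothetical and cover only a small subset of the categories listed above (empty contours, isocenter and technique consistency across beams, and one dose calculation parameter); they are not taken from the authors' tool.

```python
from dataclasses import dataclass, field


@dataclass
class Beam:
    name: str
    isocenter_mm: tuple[float, float, float]
    technique: str  # e.g. "IMRT"


@dataclass
class Plan:
    structure_voxels: dict[str, int]  # structure name -> number of contoured voxels
    beams: list[Beam] = field(default_factory=list)
    dose_grid_mm: float = 3.0


def check_plan(plan: Plan, iso_tol_mm: float = 0.5, max_grid_mm: float = 3.0) -> list[str]:
    """Run a few rule-based integrity checks; thresholds are placeholders."""
    issues = []
    for name, n_voxels in plan.structure_voxels.items():
        if n_voxels == 0:
            issues.append(f"structure '{name}' is empty")
    if not plan.beams:
        issues.append("plan has no beams")
    else:
        first = plan.beams[0]
        for beam in plan.beams[1:]:
            offset = max(abs(a - b) for a, b in zip(beam.isocenter_mm, first.isocenter_mm))
            if offset > iso_tol_mm:
                issues.append(f"beam '{beam.name}' isocenter differs from '{first.name}'")
        if len({beam.technique for beam in plan.beams}) > 1:
            issues.append("beams use inconsistent techniques")
    if plan.dose_grid_mm > max_grid_mm:
        issues.append(f"dose grid {plan.dose_grid_mm} mm exceeds {max_grid_mm} mm")
    return issues


plan = Plan(
    structure_voxels={"PTV": 5400, "Rectum": 0},
    beams=[Beam("B1", (0.0, 0.0, 0.0), "IMRT"), Beam("B2", (0.0, 12.0, 0.0), "IMRT")],
    dose_grid_mm=4.0,
)
for issue in check_plan(plan):
    print(issue)
```

Keeping each rule independent means a new check can be added without touching the others, which suits the incremental way such tools tend to grow in the clinic.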

Plan review failures (i.e., approval of poor plans) can be partially mitigated by the quality control software just described; however, those tools are designed specifically to address technical planning errors. Decision support software enabling easily interpreted automated comparisons between planning goals and achieved goals should be standard in ART planning software. A more sophisticated solution is to incorporate an automated check against a database of quality-rated plans. This approach to plan evaluation has been explored using various methodologies, including machine learning13 and Pareto-front type modeling.14 Moore et al.14 demonstrated that the clinical implementation of such a feedback system during planning of head and neck and prostate cases could improve tissue sparing and planning efficiency. This approach could expedite and stabilize the plan review process by establishing a robust baseline for achievable dosimetric goals specific to the site, planning technique, patient geometry, and other parameters.
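The simplest form of decision support described above, an automated goals-versus-achieved comparison, can be sketched as follows. Structure names, metrics, and dose values are hypothetical, not clinical recommendations; computing the achieved metrics from a dose distribution, and checking against a database of quality-rated plans, are outside this sketch.

```python
from dataclasses import dataclass


@dataclass
class Goal:
    structure: str
    metric: str      # e.g. "D95%" or "Dmax"
    limit_gy: float
    kind: str        # "min": achieved must be >= limit; "max": achieved must be <= limit


def review(goals: list[Goal], achieved_gy: dict[tuple[str, str], float]) -> list[str]:
    """Summarize each planning goal as PASS, FAIL, or MISSING for the reviewer."""
    lines = []
    for g in goals:
        if g.kind not in ("min", "max"):
            raise ValueError(f"unknown goal kind {g.kind!r}")
        value = achieved_gy.get((g.structure, g.metric))
        if value is None:
            lines.append(f"MISSING {g.structure} {g.metric}")
            continue
        met = value >= g.limit_gy if g.kind == "min" else value <= g.limit_gy
        sign = ">=" if g.kind == "min" else "<="
        lines.append(f"{'PASS' if met else 'FAIL'} {g.structure} {g.metric}: "
                     f"{value:.1f} Gy ({sign} {g.limit_gy:.1f} Gy)")
    return lines


# Hypothetical goals and achieved values, not clinical recommendations.
goals = [Goal("PTV", "D95%", 60.0, "min"),
         Goal("SpinalCord", "Dmax", 45.0, "max"),
         Goal("Parotid_L", "Dmean", 26.0, "max")]
achieved = {("PTV", "D95%"): 60.4, ("SpinalCord", "Dmax"): 46.2}
for line in review(goals, achieved):
    print(line)
```

Reporting a missing metric separately from a failed one matters in a time-pressured setting, since a goal that was never evaluated should not silently read as satisfied.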

Alternatives to the traditional patient-specific phantom-based IMRT QA process (which was assumed to be absent) should be considered for detecting treatment parameter interpretation and setup failures. Software- or user-based plan data interpretation errors that affect accurate delivery of the intended plan could be detected with independent software. The QAPV (Quality Assurance with Plan Veto) profile under development by the IHE-RO group (Integrating the Healthcare Enterprise-Radiation Oncology)15 would ideally support such a solution. The QAPV framework is designed to compare treatment plan parameters read by the delivery system with plan parameters indicated by the planning system in order to identify discrepancies. In the absence of such a secondary verification check, additional measures would be needed to verify that treatment parameters are correctly loaded by the treatment machine. One approach is to perform a “simulated” treatment before delivery, which would entail running the treatment machine through the planned gantry angles and multileaf collimator (MLC) patterns without activating the radiation beam. Comparison of machine delivery log files with the planned patterns could reveal failures, a practice that has been shown to be both feasible and effective for postdelivery verification.16 This approach is also much less resource-intensive than physical measurement-based QA. Retrospective post-treatment QA (simulated or physical phantom-based) could be adequate for delivery systems that demonstrate time-tested stability. It is important to note, however, that these methods (patient-specific IMRT QA included) do not detect clinical planning errors such as suboptimal or poor dosimetry, which collectively yielded higher RPN values than treatment parameter setup errors for both standard IMRT and ART.
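The comparison of a simulated (beam-off) delivery against the plan can be sketched as a control-point-by-control-point check of gantry angle and leaf positions. This assumes the log file has already been parsed into the same structure as the plan; real delivery logs use vendor-specific formats, and the tolerances below are placeholders rather than recommended values. The sketch is not an implementation of QAPV.

```python
from dataclasses import dataclass


@dataclass
class ControlPoint:
    gantry_deg: float
    leaves_mm: list[float]


def angular_diff(a: float, b: float) -> float:
    """Smallest absolute difference between two angles in degrees (e.g. 359.6 vs 0.0 -> 0.4)."""
    d = abs(a - b) % 360.0
    return min(d, 360.0 - d)


def compare_delivery(planned: list[ControlPoint], logged: list[ControlPoint],
                     leaf_tol_mm: float = 1.0, gantry_tol_deg: float = 1.0) -> list[str]:
    """List every control point where the logged machine state departs from the plan."""
    if len(planned) != len(logged):
        return [f"control point count differs: planned {len(planned)}, logged {len(logged)}"]
    findings = []
    for i, (plan_cp, log_cp) in enumerate(zip(planned, logged)):
        gantry_error = angular_diff(plan_cp.gantry_deg, log_cp.gantry_deg)
        if gantry_error > gantry_tol_deg:
            findings.append(f"CP {i}: gantry off by {gantry_error:.1f} deg")
        if len(plan_cp.leaves_mm) != len(log_cp.leaves_mm):
            findings.append(f"CP {i}: leaf count differs")
            continue
        for leaf, (p_mm, l_mm) in enumerate(zip(plan_cp.leaves_mm, log_cp.leaves_mm)):
            if abs(l_mm - p_mm) > leaf_tol_mm:
                findings.append(f"CP {i}, leaf {leaf}: off by {abs(l_mm - p_mm):.1f} mm")
    return findings


planned = [ControlPoint(0.0, [10.0, -10.0]), ControlPoint(90.0, [12.0, -8.0])]
logged = [ControlPoint(359.6, [10.2, -10.0]), ControlPoint(90.2, [15.0, -8.0])]
print(compare_delivery(planned, logged))  # ['CP 1, leaf 0: off by 3.0 mm']
```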

Pretreatment verification is also not necessarily sensitive to failures occurring during delivery, which were among the highest rated critical failures for both standard IMRT and ART. In an analysis performed by Ford et al.,17 EPID dosimetry was found to be one of the most effective of all commonly available QA methods, able to detect errors that even pretreatment phantom-based IMRT QA missed. Investigational studies demonstrate that real-time EPID dosimetry could even be used to detect errors as they occur,18 enabling immediate intervention. Transmission detectors that can be mounted on the gantry head for online treatment monitoring have also been under development by many groups,19–21 and could play a key QA role for ART. Commercially available devices include the MatriXXEvolution transmission detector (IBA Dosimetry, Germany), which is marketed as an arc treatment QA device, and the DAVID harp chamber device (PTW, Germany), which is specifically designed for real-time dosimetric monitoring. Although not based on direct dosimetric measurements, post-treatment or real-time monitoring of MLC log files is a simpler solution. This approach has been demonstrated by several groups,16,22 and log-file QA software is now commercially available for clinical use (Modus Medical Devices, Inc.). It has been suggested that supplementing MLC log file analysis with independent dose calculations may be a better QA method than traditional measurement-based approaches because of its increased sensitivity to dose calculation, heterogeneity, and beam modeling errors.16
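Real-time monitoring with immediate intervention can be sketched as a loop that compares each delivered segment's measured detector signal with a predicted signal and holds the beam at the first out-of-tolerance result. The prediction model, the per-segment signal stream, and the 5% cumulative tolerance are assumptions for illustration; the sketch does not model the interface of any of the devices named above.

```python
from typing import Iterable, Iterator


def monitor(stream: Iterable[tuple[float, float]], rel_tol: float = 0.05) -> Iterator[str]:
    """Yield a status for each (expected, measured) segment; stop at the first hold.

    The check uses the cumulative signal so far, which damps noise in small segments.
    """
    cum_expected = cum_measured = 0.0
    for i, (expected, measured) in enumerate(stream):
        cum_expected += expected
        cum_measured += measured
        if cum_expected > 0:
            deviation = (cum_measured - cum_expected) / cum_expected
            if abs(deviation) > rel_tol:
                yield f"segment {i}: HOLD beam, cumulative deviation {deviation:+.1%}"
                return
        yield f"segment {i}: ok"


# Invented signals in arbitrary units; segment 2 under-delivers by 20%.
segments = [(100.0, 101.0), (100.0, 99.0), (100.0, 80.0), (100.0, 100.0)]
for status in monitor(segments):
    print(status)
```

Comparing cumulative rather than per-segment signal reduces false alarms from noisy low-signal segments, but it also dilutes an error that occurs late in delivery (here, a 20% error in one segment appears as a 6.7% cumulative deviation), so a practical monitor might apply both tests.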

Many of these tools are not yet commercially available; however, the need for them is widely recognized. Most (if not all) of these tools could be of value for standard IMRT as well. As these tools continue to be developed, data demonstrating their efficacy will offer valuable insight into their usefulness for ART.

IV. DISCUSSION

Evaluation of the ART risk profile suggests that intensity-modulated ART introduces different (but not necessarily more) risks than standard IMRT. While ART was more vulnerable to failures in planning and delivery, most of the critical failures were deemed high-risk for both ART and standard IMRT. Critical risks unique to intensity-modulated ART were deemed manageable with proper mitigation. Furthermore, risks associated with patient positioning and localization failures were substantially reduced, illustrating a primary advantage of adaptive techniques. In many cases, systematic errors accumulating over multiple fractions were deemed less severe for ART. It was reasoned that a systematic error occurring for standard IMRT would affect every treatment fraction, leading to a larger cumulative error, whereas a systematic error occurring for ART would affect only the fractions for which the affected ART plan was used.
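The reasoning about error accumulation can be made explicit with a simple linear model. Assume a systematic dose error δ per affected fraction, a course of N fractions, and an ART scheme in which the erroneous adapted plan is used for k of those fractions; these symbols are introduced here for illustration and were not part of the FMEA scoring.

$$
E_{\text{IMRT}} = N\,\delta, \qquad E_{\text{ART}} = k\,\delta, \qquad 1 \le k \le N .
$$

For a hypothetical 30-fraction course in which a new plan is generated every fraction and the error is confined to a single adapted plan (k = 1), the cumulative error is δ under ART versus 30δ under standard IMRT. The advantage shrinks as k grows: an error that persists across replanning, such as a faulty reference contour propagated to later fractions or a delivery system fault, yields k close to N.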

Examining the risks of intensity-modulated ART with respect to the risks of standard IMRT is intended to offer clinical context, not to draw direct comparisons between the overall levels of risk of these two treatment techniques. Overall risk will depend on cumulative error and on the frequency and nature of plan adaptation, which may be difficult to quantify. The use of FMEA, however, enables identification and comparison of process-specific risks. Using the recommended FMEA values of TG-100 provides a well-developed and standardized baseline for analysis and allows the QA needs of standard and adaptive treatment to be compared. The use of a standardized baseline is an important feature of this work, since risk values can be assigned subjectively by different groups performing an FMEA. However, a major limitation of the prospective FMEA presented here is that it relies on expert opinion. It is important to appreciate that every clinic is unique. Prior to implementing ART in practice, each clinic should develop its own process map to identify potential error pathways. This is not only an essential component for characterizing the risk profile of an individual practice but also opens a dialogue for identifying and managing new risks that emerge with the implementation of new tools and technologies. The value of a process-based framework is that it is easily extended as new tools and resources are introduced; however, it does not guarantee risk-free implementation of new procedures. As ART is brought into practice, data-driven analysis will provide a more quantitative assessment of risk. For example, Ford et al.17 have demonstrated how a clinical error database can be used to identify common failures and assess the effectiveness of quality control strategies for standard radiotherapy.

While process-based quality management is a relatively new practice for the radiotherapy field, it offers a pragmatic approach to a complex problem. When viewed within the traditional quality management framework, developing an implementation strategy for ART is daunting. Conventional QA practices generally embody a micromanagement strategy which emphasizes detection of technical errors and device-based testing. This is problematic in an environment of increasingly complex systems, and some conventional QA methods are becoming impractical. For example, there is now evidence that phantom-based IMRT QA is one of the least effective routine QA measures,17 and some have suggested that software tools could potentially replace measurement-based pretreatment QA as RT equipment becomes increasingly stable.16,23 This position is controversial and will undoubtedly continue to be a point of contention.

At the very least, the development of alternative strategies, such as onboard QA devices and quality control software, indicates that investigators and vendors alike are taking measures to overcome such challenges. Furthermore, the recent advocacy of process-based approaches for RT quality management4–6 indicates an appreciation for the shifting QA paradigm by community leaders. This study demonstrates the value of such a process-based technique in facilitating the safe clinical implementation of adaptive treatment.

ACKNOWLEDGMENTS

This publication was supported by the Washington University Institute of Clinical and Translational Sciences Grant No. UL1 TR000448, Subaward No. TL1 TR000449, from the National Center for Advancing Translational Sciences. The content is solely the responsibility of the authors and does not necessarily represent the official views of the NIH. The authors also report the following possible conflicts of interest (those not listed reported no potential conflicts): Dr. Camille Noel: Recipient of grant from the National Institutes of Health (NIH). Dr. Parag Parikh: Recipient of grants/grants pending from ViewRay, Inc., Varian Medical Systems; recipient of payment for lectures including service on speakers’ bureaus from Varian Medical Systems. Dr. Sasa Mutic: Recipient of payment for consultancy for ViewRay, Inc.; recipient of grants/grants pending from Varian Medical Systems, ViewRay, Inc.; recipient of payment for lectures including service on speakers’ bureaus from Varian Medical Systems.

REFERENCES

1. Wu Q. J., Li T., Wu Q., and Yin F. F., “Adaptive radiation therapy: Technical components and clinical applications,” Cancer J. (Sudbury, MA) 17, 182–189 (2011). 10.1097/PPO.0b013e31821da9d8

2. Jaffray D. A., “Image-guided radiotherapy: From current concept to future perspectives,” Nat. Rev. Clin. Oncol. 9, 688–699 (2012). 10.1038/nrclinonc.2012.194

3. Yeung T. K., Bortolotto K., Cosby S., Hoar M., and Lederer E., “Quality assurance in radiotherapy: Evaluation of errors and incidents recorded over a 10 year period,” Radiother. Oncol. 74, 283–291 (2005). 10.1016/j.radonc.2004.12.003

4. Williamson J. F., Dunscombe P. B., Sharpe M. B., Thomadsen B. R., Purdy J. A., and Deye J. A., “Quality assurance needs for modern image-based radiotherapy: Recommendations from 2007 interorganizational symposium on ‘quality assurance of radiation therapy: challenges of advanced technology’,” Int. J. Radiat. Oncol., Biol., Phys. 71, S2–S12 (2008). 10.1016/j.ijrobp.2007.08.080

5. Terezakis S. A., Pronovost P., Harris K., Deweese T., and Ford E., “Safety strategies in an academic radiation oncology department and recommendations for action,” Jt. Comm. J. Qual. Patient Saf. 37, 291–299 (2011).

6. Huq M. S., Fraass B. A., Dunscombe P. B., Gibbons J. P., Jr., Ibbott G. S., Medin P. M., Mundt A., Mutic S., Palta J. R., Thomadsen B. R., Williamson J. F., and Yorke E. D., “A method for evaluating quality assurance needs in radiation therapy,” Int. J. Radiat. Oncol., Biol., Phys. 71, S170–S173 (2008). 10.1016/j.ijrobp.2007.06.081

7. Thomadsen B. R., Dunscombe P., Ford E., Huq S., Pawlicki T., and Sutlief S., “Risk assessment using TG-100 methodology Table 4-3,” Quality and Safety in Radiotherapy: Learning New Approaches in Task Group 100 and Beyond, AAPM Monograph No. 36 (AAPM, Madison, WI, 2013), pp. 95–112.

8. Santanam L., Brame R. S., Lindsey A., Dewees T., Danieley J., Labrash J., Parikh P., Bradley J., Zoberi I., Michalski J., and Mutic S., “Eliminating inconsistencies in simulation and treatment planning orders in radiation therapy,” Int. J. Radiat. Oncol., Biol., Phys. 85, 484–491 (2013). 10.1016/j.ijrobp.2012.03.023

9. Mallalieu L. B., Kapur A., Sharma A., Potters L., Jamshidi A., and Pinsky J., “An electronic whiteboard and associated databases for physics workflow coordination in a paperless, multi-site radiation oncology department,” Med. Phys. 38, 3755 (2011). 10.1118/1.3613130

10. Sharpe M. and Brock K. K., “Quality assurance of serial 3D image registration, fusion, and segmentation,” Int. J. Radiat. Oncol., Biol., Phys. 71, S33–S37 (2008). 10.1016/j.ijrobp.2007.06.087

11. Peng C., Chen G., Ahunbay E., Wang D., Lawton C., and Li X. A., “Validation of an online replanning technique for prostate adaptive radiotherapy,” Phys. Med. Biol. 56, 3659–3668 (2011). 10.1088/0031-9155/56/12/013

12. Yang D. and Moore K. L., “Automated radiotherapy treatment plan integrity verification,” Med. Phys. 39, 1542–1551 (2012). 10.1118/1.3683646

13. Zhu X., Ge Y., Li T., Thongphiew D., Yin F. F., and Wu Q. J., “A planning quality evaluation tool for prostate adaptive IMRT based on machine learning,” Med. Phys. 38, 719–726 (2011). 10.1118/1.3539749

14. Moore K. L., Brame R. S., Low D. A., and Mutic S., “Experience-based quality control of clinical intensity-modulated radiotherapy planning,” Int. J. Radiat. Oncol., Biol., Phys. 81, 545–551 (2011). 10.1016/j.ijrobp.2010.11.030

15. Noel C. E., Gutti V. R., Bosch W., Mutic S., Ford E., Terezakis S., and Santanam L., “Quality assurance with plan veto: Reincarnation of a record and verify system and its potential value,” Int. J. Radiat. Oncol., Biol., Phys. 88, 1161–1166 (2014). 10.1016/j.ijrobp.2013.12.044

16. Sun B., Rangaraj D., Boddu S., Goddu M., Yang D., Palaniswaamy G., Yaddanapudi S., Wooten O., and Mutic S., “Evaluation of the efficiency and effectiveness of independent dose calculation followed by machine log file analysis against conventional measurement based IMRT QA,” J. Appl. Clin. Med. Phys. 13 (2012).

17. Ford E. C., Terezakis S., Souranis A., Harris K., Gay H., and Mutic S., “Quality control quantification (QCQ): A tool to measure the value of quality control checks in radiation oncology,” Int. J. Radiat. Oncol., Biol., Phys. 84, e263–e269 (2012). 10.1016/j.ijrobp.2012.04.036

18. Fuangrod T., O’Connor D. J., McCurdy B. M. C., and Greer P. B., “Development of EPID-based real time dose verification for dynamic IMRT,” World Acad. Sci., Eng. Technol. 80, 609 (2011).

19. Islam M. K., Norrlinger B. D., Smale J. R., Heaton R. K., Galbraith D., Fan C., and Jaffray D. A., “An integral quality monitoring system for real-time verification of intensity modulated radiation therapy,” Med. Phys. 36, 5420–5428 (2009). 10.1118/1.3250859

20. Goulet M., Gingras L., and Beaulieu L., “Real-time verification of multileaf collimator-driven radiotherapy using a novel optical attenuation-based fluence monitor,” Med. Phys. 38, 1459–1467 (2011). 10.1118/1.3549766

21. Wong J. H., Fuduli I., Carolan M., Petasecca M., Lerch M. L., Perevertaylo V. L., Metcalfe P., and Rosenfeld A. B., “Characterization of a novel two dimensional diode array, the ‘magic plate’, as a radiation detector for radiation therapy treatment,” Med. Phys. 39, 2544–2558 (2012). 10.1118/1.3700234

22. Jiang Y., Gu X., Men C., Jia X., Li R., Lewis J., and Jiang S., “Real-time dose reconstruction for treatment monitoring [abstract],” Med. Phys. 37, 3400 (2010). 10.1118/1.3469288

23. Pawlicki T., Yoo S., Court L. E., McMillan S. K., Rice R. K., Russell J. D., Pacyniak J. M., Woo M. K., Basran P. S., Shoales J., and Boyer A. L., “Moving from IMRT QA measurements toward independent computer calculations using control charts,” Radiother. Oncol. 89, 330–337 (2008). 10.1016/j.radonc.2008.07.002

24. Xing L. and Li J. G., “Computer verification of fluence map for intensity modulated radiation therapy,” Med. Phys. 27, 2084–2092 (2000). 10.1118/1.1289374