Abstract

For decades, psychologists and neuroscientists have hypothesized that the ability to perceive emotions on others’ faces is inborn, pre-linguistic, and universal. Concept knowledge about emotion has been assumed to be epiphenomenal to emotion perception. In this paper, we report findings from three patients with semantic dementia that cannot be explained by this “basic emotion” view. These patients, who have substantial deficits in semantic processing abilities, spontaneously perceived pleasant and unpleasant expressions on faces, but not discrete emotions such as anger, disgust, fear, or sadness, even in a task that did not require the use of emotion words. Our findings support the hypothesis that discrete emotion concept knowledge helps transform perceptions of affect (positively or negatively valenced facial expressions) into perceptions of discrete emotions such as anger, disgust, fear and sadness. These findings have important consequences for understanding the processes supporting emotion perception.

The ability to perceive discrete emotions such as anger, disgust, fear, sadness, etc. in other people is a fundamental part of social life. Without this ability, people lack empathy for loved ones, make poor social judgments in the boardroom and classroom, and have difficulty avoiding those who mean them harm. The dominant paradigm in emotion research for the past 40 years, called the “basic emotion” approach, assumes that humans express and detect in others discrete emotions such as anger (i.e., a scowl), sadness (i.e., a pout), fear (i.e., wide eyes), disgust (i.e., a wrinkled nose), or happiness (i.e., a smile) (Ekman et al., 1987; Izard, 1971; Matsumoto, 1992; Tracy & Robins, 2008). Scientists largely assume that this detection ability is inborn, universal across all cultures, and psychologically primitive (i.e., it cannot be broken down into more basic psychological processes). Concept knowledge about discrete emotion that is represented in language is assumed to be irrelevant to the ability to perceive discrete emotion in faces (Ekman & Cordaro, 2011). This “basic emotion” view is a standard part of the psychology curriculum taught at universities in the Western world, and drives research in a range of disciplines including cognitive neuroscience (Sprengelmeyer et al., 1998), interpersonal communication and conflict negotiation (Kuppens et al., in press) and psychopathology (Fu et al., 2008; Kohler et al., 2010). The US government also relies on this framework to train security personnel to identify the covert intentions of people who pose a threat to its citizens (Burns, 2010; Weinberger, 2010).

Emotion concepts shape discrete emotion perception

Despite the prevalence of the basic emotion view, growing evidence suggests that discrete emotion perception is not psychologically basic, and in fact depends on more “elemental” psychological processes such as 1) perceptions of basic affective valence in faces (i.e., detecting facial behaviors that correspond to positive v. negative v. neutral feelings), and 2) the ability to make meaning of those affective facial behaviors using concept knowledge about discrete emotion (i.e., the set of concepts about discrete emotion that a person knows, which are relevant to a given culture; Barrett, Lindquist, et al., 2007; Lindquist & Gendron, 2013).1

A growing literature demonstrates that accessible emotion concept knowledge shapes how individuals make meaning of affective facial expressions as instances of discrete emotions such as “anger,” “disgust,” “fear,” etc. For instance, two-year old children, who possess only the rudimentary concepts of “sad” and “happy,” can correspondingly only perceive faces in terms of affective valence (e.g., they categorize all unpleasant faces, including scowling, pouting, wide eyed and wrinkle nosed faces as “sad” and all smiling faces as “happy”). Yet as children gradually acquire additional discrete emotion concepts over the course of development (e.g., “anger,” “disgust,” “fear”), they are subsequently able to perceive unpleasant faces (pouts, scowls, wide eyes, wrinkled noses) as instances of distinct discrete emotion categories. For instance, by age seven, when children have learned the meanings of “sad,” “anger,” “fear” and “disgust” they reliably perceive pouting faces as “sad,” scowling faces as “anger,” wide eyed faces as “fear,” and wrinkle nosed faces as “disgust” (Widen & Russell, 2010).

The role of concept knowledge in emotion perception is not limited to early development, however. Evidence suggests that even healthy adults rely on concept knowledge during emotion perception tasks, regardless of whether that task explicitly involves labeling of faces or not. For instance, healthy adults from most cultures can easily select the word that best matches the expression with a relatively high degree of agreement (e.g., the word “anger” would best match a scowling face; Elfenbein & Ambady, 2002) when emotion word labels are explicitly given in an experimental task (e.g., a posed facial expression is presented with a set of emotion adjectives). Yet it is possible to dramatically impair discrete emotion perception—and thus reduce accuracy on a task such as this—by merely manipulating those adults’ ability to access the meaning of discrete emotion words. This even occurs in tasks that do not explicitly require labeling of faces. For instance, disrupting access to the meaning of discrete emotion concepts such as “anger,” “disgust,” or “fear,” by having participants repeat other words during the discrete emotion perception task (called “verbal overshadowing”), impairs participants’ ability to distinguish between facial portrayals of anger and fear as categorically different emotional expressions (Roberson & Davidoff, 2000). The simple removal of discrete emotion words from the experimental task produces a similar effect (Fugate et al., 2010). An experimental manipulation that temporarily renders the meaning of an emotion word inaccessible—called semantic satiation—also reduces the speed and accuracy of discrete emotion perception (Lindquist et al., 2006). For instance, after repeating a relevant discrete emotion word (e.g., “anger”) out loud 30 times until the meaning of the word becomes temporarily inaccessible, participants are slower and less accurate to judge that two scowling faces match one another in emotional content (Lindquist et al. 2006). 
Since the emotion judgment task used in Lindquist et al. (2006) might implicitly require use of discrete emotion words, we replicated and extended these findings more recently using a perceptual priming task that does not require the use of emotion words. We found that following semantic satiation of a relevant discrete emotion word (e.g., “anger”), a face posing discrete emotion (e.g., a scowling face) is literally seen differently by participants. For instance, the emotional face perceived when the meaning of an emotion word is inaccessible (e.g., a scowling face perceived after semantic satiation of the word “anger”) does not perceptually prime itself on a later presentation (e.g., the same scowling face perceived when the word “anger” is accessible; Gendron et al., 2012). Although these careful experimental manipulations produced data consistent with the hypothesis that concept knowledge about emotion shapes instances of positive and negative facial muscle movements into perceptions of discrete emotions, a powerful test of this hypothesis is to examine discrete emotion perception in people who have naturally occurring and permanently impaired concept knowledge.

A case study of discrete emotion perception in semantic dementia

In the present report, we assessed discrete emotion perception in individuals with a neurodegenerative disease that impairs access to and use of concept knowledge. Semantic dementia is a progressive neurodegenerative disease—one form of primary progressive aphasia (Gorno-Tempini et al., 2011b)—that results in notable impairments in concept knowledge availability and use (Hodges & Patterson, 2007). Research on semantic dementia has traditionally documented impairments to conceptual knowledge for objects, plants, and animals (Bozeat et al., 2002; Hodges et al., 2000; Lambon Ralph et al., 2009). Such impairments are associated with relatively focal (typically left-lateralized) neurodegeneration in the anterior temporal lobes, which are hypothesized to be hubs in a distributed network subserving semantic memory and conceptual knowledge (Binder et al., 2009; Lambon Ralph et al., 2009; Visser et al., 2010). Early in the course of the disease, semantic dementia is associated with the very specific inability to understand the meaning of words, amidst normal visual processing, executive control, comportment and behavior.

Sometimes termed the “temporal lobe” variant of frontotemporal dementia (FTD), semantic dementia is a sub-class of the broader diagnosis of frontotemporal dementia. As such, some patients can develop a broader set of lesions in frontal or other temporal lobe regions affecting brain areas involved in other psychological processes such as executive control (e.g., dorsolateral prefrontal cortex) or visuo-spatial processing (e.g., hippocampus, perirhinal cortex). Due to the heterogeneity inherent in frontotemporal dementia, we carefully selected three patients who had specific anatomical and behavioral profiles of semantic dementia; this allowed us to perform a very precise test of our hypothesis about emotion perception. First, we selected patients with a specific neurodegeneration pattern: we selected only those patients with relatively focal lesions to the anterior temporal lobes. Second, we selected patients displaying specific behavioral patterns: we performed a host of neuropsychological tests and control experimental tasks to ensure that the patients in our sample had semantic deficits but relatively preserved executive function and visuospatial processing. To rule out that patients had more general affective abnormalities that might result in impaired discrete emotion perception, we also relied on clinical assessments to ensure that patients had normal comportment and behavioral approach and avoidance. Together, these rigorous inclusion criteria allowed us to perform a strong test of the hypothesis that impaired conceptual knowledge results in impaired discrete emotion perception.

Growing evidence documents deficits in emotion perception in semantic dementia (Calabria et al., 2009; Rosen et al., 2004), but no research to date has specifically addressed the hypothesis that impairments in concept knowledge contribute to impairments in discrete emotion perception. Patients with semantic dementia have difficulties labeling facial expressions of emotion (Calabria et al., 2009; Rosen et al., 2004), but such findings are typically interpreted as evidence that patients can understand the meaning of emotional faces but perform poorly on experimental tasks due to an inability to manipulate labels (Miller et al., 2012). If this were the case, language would have an impact on the communication of discrete emotion perception, but not the understanding of emotional facial behaviors. Yet the possibility remains that concept knowledge plays a much more integral role in discrete emotion perception by helping to transform perceptions of affective facial expressions into perceptions of discrete emotions. In this view, conceptual knowledge for emotion is necessary for discrete emotion perception to proceed. To test this hypothesis, we use a case study approach to test emotion perception abilities in three patients with semantic dementia. Because these three patients have relatively isolated impairments in semantic memory without impairments in executive function, visuospatial abilities, comportment, or behavioral approach or avoidance, they provide an opportunity to perform a targeted test of the hypothesis that concept knowledge is necessary for discrete emotion perception.

The Present Study

To assess whether concept knowledge is important to normal discrete emotion perception, we designed a task in which patients with semantic dementia (who have difficulty using semantic labels) could demonstrate their discrete emotion perception abilities without relying on linguistic emotion concepts. We thus designed a sorting task that would assess spontaneous emotion perception and not emotion labeling per se. Patients were presented with pictures of scowling, pouting, wide eyed, wrinkle nosed, and smiling faces, as well as posed neutral faces and were asked to freely sort the pictures into piles representing as many categories as were meaningful. We used posed depictions of discrete emotions (the standard in most scientific studies of emotion perception) because they are believed to be the clearest and most universally recognizable signals of discrete emotions by basic emotion accounts (Ekman et al., 1987; Matsumoto, 1992).

To rule out that patients had other deficits that would impair their performance on the discrete emotion sort task, we also asked them to perform a number of control sort tasks. In these control sort tasks, patients were asked to sort the faces into six piles anchored with six numbers (a number anchored sort), to sort the faces into six piles anchored with six posed discrete emotional facial expressions (a face anchored sort), to sort the faces into six piles anchored with six emotion category words (a word anchored sort), or to free sort the faces by identity (an identity sort). Whereas the number and word anchored sorts ruled out the alternate hypothesis that patients could perform a discrete sort when cued to the correct number or names of categories, the face anchored and identity sorts ruled out the more general alternative interpretations that 1) patients did not understand how to sort pictures, 2) they had visual impairments that prevented them from perceiving differences between faces (i.e., prosopagnosia or more general visuospatial deficits), or 3) they had cognitive impairments in executive function that would cause them to perform poorly on any sorting task. Patients’ performance on these sorts, along with our neuropsychological findings (see supplementary materials), rules out alternate explanations of patients’ performance on the discrete emotion sort tasks.

In line with our hypothesis that normal discrete emotion perception relies on concept knowledge of emotion, we predicted that patients’ semantic deficits would be associated with difficulty perceiving same-valence discrete emotional facial expressions (e.g., anger vs. fear vs. disgust vs. sadness). Yet mirroring the developmental findings that infants and young children, who have limited conceptual knowledge of emotion, can detect positive and negative affect on faces (for a review see, Widen & Russell, 2008b), we predicted that patients with semantic dementia would have relatively preserved perception of positive vs. negative vs. neutral expressions (i.e., affective valence). This hypothesis would be supported in our study if healthy control adults, who have access to conceptual knowledge of emotion, spontaneously produced six piles for anger, disgust, fear, sadness, happiness and neutral expressions and rarely confused multiple negative faces for one another (e.g., would not treat pouting, scowling, wide-eyed and wrinkled nose faces as members of the same category by placing them in the same pile). Patients, on the other hand, would spontaneously sort faces into piles corresponding to positive, negative, and neutral affect and would additionally make errors in which they confused multiple negative faces for one another (e.g., would treat pouting, scowling, wide-eyed and wrinkled nose faces as members of the same category by placing them in the same pile)2. In contrast, a basic emotion hypothesis would predict that emotion perception is a psychologically primitive process evolved from ancestral primate communicative displays (Lewis, 1993; Sauter et al., 2010) that does not rely on concept knowledge (cf., Ekman & Cordaro, 2011). If this hypothesis were supported, we would not observe a difference between control participants’ and patients’ sorts. 
At the very least, if patients were impaired in discrete emotion perception as compared to controls, a basic emotion view would not predict that patients would show maintained perception of affect (positive, negative and neutral valence). According to the basic emotion view, the perception of discrete emotion is more psychologically fundamental than the perception of affect, meaning that discrete emotion perception should precede affect perception (e.g., a person has to know that a face is fearful to know it’s negative; Keltner & Ekman, 2000).

Methods

Participants

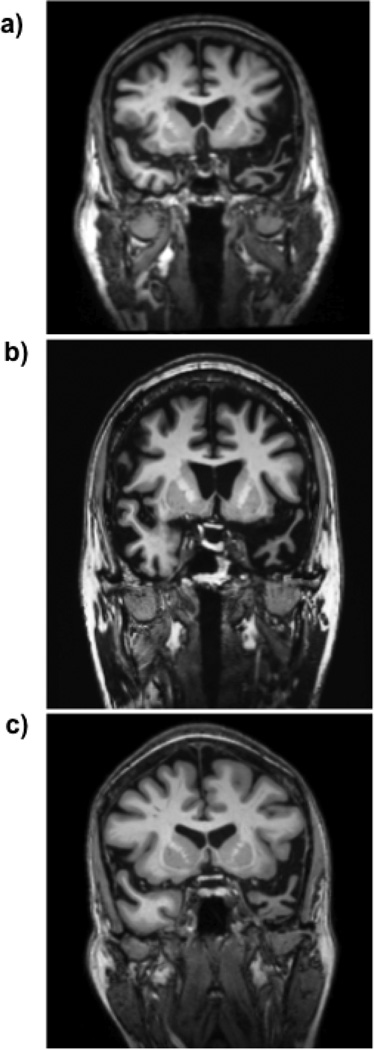

We studied three patients (EG, a 70-year old right-handed male; FZ, a 64-year old right-handed male; and CP, a 53-year old right-handed female) with a relatively rare form of neurodegenerative disorder known as semantic dementia. Each patient was diagnosed with semantic dementia by a team of neurologists based on their behavioral symptoms, neuroanatomy, and performance on neuropsychological assessments. Each patient presented to the clinic with gradually progressive problems recalling the meaning of words (i.e., anomia) and was found to have a semantic memory deficit on neuropsychological testing. Neuropsychological tests were collected in a hospital clinic; we rely on the neuropsychological data collected in that setting. Neuropsychological tests revealed that patients had relatively specific semantic deficits amidst normal intellectual abilities, executive function, and visuospatial performance. No patient exhibited evidence of impaired recognition of visual objects (i.e., visual agnosia) or faces (i.e., prosopagnosia). See supplementary online materials (SOM) for case histories and neuropsychological test results confirming the specificity of each patient’s semantic impairments. Consistent with the diagnosis of semantic dementia, structural Magnetic Resonance Imaging revealed relatively focal left temporal pole atrophy in each patient’s brain (see Figure 1, a–c).

Figure 1. MRI scans of patients EG, FZ and CP.

T1-weighted MRI scans of (a) patient EG, (b) patient FZ, and (c) patient CP showing left-lateralized anterior temporal lobe atrophy; images are shown in radiological orientation (the left hemisphere appears on the right hand side of the image).

Patients’ performance was compared to the performance of 44 age-matched control participants (Mage = 74.14, SDage = 5.89), who also performed the discrete emotional free sort. Control participants were recruited from the community and participated at a local university. We did not include a patient control sample because it was difficult to find a sample of patients with another form of dementia whose performance would demonstrate a clear double dissociation on the sort tasks we employed. For instance, patients with other types of neurodegenerative diseases (e.g., Behavioral Variant Frontotemporal Dementia; Alzheimer’s Disease) can perform poorly on the type of sort task we used for numerous reasons including attentional deficits, behavioral impulsivity, or impairments in affective valuation. As a result, we compared the performance of our semantic dementia sample directly to healthy controls, while also instituting multiple control tasks within the semantic dementia sample to rule out alternate explanations for our findings. This approach was warranted for several reasons. First, the aim of our study was not to unveil new defining characteristics of semantic dementia, but rather to isolate those characteristics already known to exist in order to test a specific hypothesis about emotion perception. Second, we instituted a number of within-subjects control tasks with our patient sample to rule out other explanations of our findings. Notably, both of these approaches are taken in other published case studies of patients with semantic dementia (Lambon Ralph et al., 2010).

Materials

Face sort stimuli

The set contained posed, caricatured, pictures of facial expressions corresponding to 6 different emotion categories (anger=scowl, sadness=pout, disgust=wrinkled nose, fear=wide eyes, happiness=smile, neutral=relaxed facial muscles). Images were selected from the IASLab Face Set (www.affective-science.org) and the Research Network on Early Experience and Brain Development Face Set (2006, NimStim Face Stimulus Set; http://www.macbrain.org/resources.htm). A long version of the task contained 20 identities and a short version contained 6. All identities were European American and an equal number of male and female identities were used. Patients did not sort faces systematically by gender on the emotion sort tasks, so we do not discuss the gender of faces further.

Number anchors

Six pieces of 8.5 × 11 paper containing the numbers 1–6 served as pile anchors.

Face anchors

Six pictures of a single woman from the NimStim Face Stimulus set (Tottenham et al., 2009) posing a scowl, wrinkled nose, wide eyes, pout, smile and a posed neutral face served as pile anchors.

Word anchors

Six pieces of 8.5 × 11 paper containing the words “anger,” “disgust,” “fear,” “happiness,” “sadness” and “neutral” served as pile anchors.

Procedure

The discrete emotion perception tasks were designed to probe changes in affective processing and/or discrete emotion perception. The free sort procedure was designed to identify the categories that patients spontaneously perceived in posed facial expressions with minimal constraints and without asking patients to explicitly use emotion words. As such, we asked participants to sort into the categories they found “meaningful.” This open-ended instruction necessarily ensured that the emotion categories hypothesized to guide perception were not explicitly invoked by the experimental procedure at the outset of the task. Fortunately, the performance of control participants ensured that alternate explanations of the free sort findings were not possible. All control participants immediately understood that discrete emotion categories were the most meaningful way to sort the faces (despite the fact that other categories such as identity and gender were also possible). Furthermore, testing patients on subsequent control tasks ensured that patients did not have difficulty following instructions, as they were able to sort by perceptual features of the expressions when cued. Importantly, additional control tasks (constraining the number of piles patients were told to make, and cueing patients with the names of emotion categories) did not help their performance, which would be predicted if impaired performance was simply due to the interpretation of open-ended instructions. These additional tasks thus helped to clarify the interpretation that patients’ semantic deficits led to impairments in discrete emotion perception. Finally, the identity sort ruled out that patients did not understand how to sort faces, had a visual impairment that prevented them from perceiving differences between faces (i.e., prosopagnosia or other visuospatial deficits), or had cognitive impairments in executive function that would cause them to perform poorly on any sorting task.

Emotional free sort

Participants were handed the face sort stimuli and were asked to freely make piles to represent the number of meaningful categories they perceived in the set. Patients were told, “I am going to give you a pile of pictures. What I want you to do is organize them into groups that are meaningful to you. You can create as many piles as you need to. At the end, each pile should be sorted so that only one type of picture is included. It is sometimes helpful to look through the set of pictures first before you begin sorting. This is not timed, so feel free to take as long as you need. You can also change the piles while you are sorting or at the end—it is up to you. Do you have any questions?” Patients were then asked to sort the pictures into piles on the table. Following completion of the sort, the researcher asked the patient several questions including 1) “Can you tell me about how you sorted the pictures?” Since all patients indicated that they had sorted by feeling, the researcher next asked 2) “How confident are you that all of the people in each pile feel exactly the same way? Not confident, somewhat confident or very confident?” Next, the researcher went through each pile with the participant and asked, “What is in this pile?” If the patient responded with emotion or affect words (e.g., “happy,” “disgust,” “fear” or “good,” “bad”), the researcher asked the patient to label the facial action on the next round by asking, “What expression is on these people’s faces?” If the patient never used an emotion word to describe the pile, the researcher prompted, “What emotion are the people in this pile feeling?” In the present report, all patients understood the instructions well and each immediately sorted the faces into piles representing the affective meaning of the face (positive, negative or neutral). Once the content of each pile was recorded, the researcher shuffled the faces and moved on to the next task.

Number anchored sort

The researcher indicated that the patient should make six piles by laying down the six number anchors. This control task cued the patient to the fact that there were six perceptual categories in the face set and ruled out the alternate interpretation that participants merely did not understand that we wanted them to sort into six piles. Patients were told, “Now I want you to again sort these pictures based on feeling, but this time I am going to ask you to make 6 piles. I have 6 different numbers that I will lay out for you so that you can keep track of how many piles you create. Again, I want you to sort based on feeling. In each pile, there should be only people who feel the same way. At the end, each pile you’ve made should have pictures of only people who feel the same way. Are these instructions clear?”

Face anchored sort

The researcher indicated that the patient should make six piles by laying down the six face anchors. This control task cued the patient to the fact that we were asking them to sort into six categories based on the perceptual features of the faces in the set. It ruled out the alternate interpretations that participants merely did not understand that we wanted them to sort based on the facial expressions or that patients had difficulty visually detecting differences in the expressions. Patients were told, “Now I want you to again sort these pictures based on feeling, but this time I am going to start the piles for you. Here are six different pictures of the same woman. The woman feels differently in each of the pictures. Again, I want you to sort the pictures into these piles I have already started based on feeling. In each pile, there should be only people who feel the same way. At the end of your sorting, each pile you’ve made should have pictures of only people who feel the same. Are these instructions clear?” After patients made piles, the task proceeded as in the emotion free sort.

Word anchored sort

The researcher indicated that the patient should make six piles by laying down the six word anchors. This cued the patient to the names of the six emotion categories in the face set. Adding words to the experimental task typically helps healthy adults become more accurate at discrete emotion perception (Russell, 1994). The word-anchored sort task therefore ruled out several important alternative explanations of our data. First, it ruled out the possibility that patients were able to perceive and sort by discrete emotion but had not done so in the free sort task because the instructions were too vague or because they found other categories to be more relevant. Second, this control task ruled out the possibility that participants had intact concept knowledge but performed poorly on the free sort because they didn’t have the ability or the motivation to spontaneously access those emotion concepts.

To start the sort task, the researcher stated, “Now I want you to again sort these pictures based on feeling, but this time I am going tell you what should be in each of the 6 piles. I have 6 different words that I will lay out for you so that you can keep track of the piles. In each pile, there should be only people who feel the same way. In this pile, I want you to sort people who feel happy. In this pile, I want you to sort people who feel neutral. In this pile, I want you to sort people who feel angry. In this pile, I want you to sort people who feel fearful. In this pile, I want you to sort people who feel sad. In this pile, I want you to sort people who feel disgusted. At the end of your sorting, each pile you’ve made should have pictures of only people who feel the same way. Are these instructions clear?” After patients made piles, the task proceeded like the emotion free sort.

Identity free sort

The researcher instructed the patient to sort into piles based on identity. If patients were unable to do this accurately, then this would be evidence that they had other cognitive or visual deficits that would make them unsuitable participants for the emotion perception task. The researcher stated, “In this pile there are pictures of a bunch of people. There are several pictures of each person in the pile. What I would like you to do is to sort the pictures into piles based on their identity. You can create as many new piles as you need to. At the end, each pile you’ve made should have pictures of only 1 person in it. Are these instructions clear?” When the patient finished, the researcher asked, “How confident are you that all of the people in each pile are the same exact person? Not confident, somewhat confident or very confident?”

Data Analysis

To assess patients’ performance, we computed the number of piles they created on the emotional free sort and the percentage of errors (where patients confused one type of face for another). Errors were the percentage of faces portraying a different expression from the predominant expression in the same pile (e.g., the number of scowling and wide eyed faces in a pile consisting predominantly of pouting faces). Error types were computed by determining the overall number of within-valence (e.g., one negative face confused with another) or cross-valence (e.g., one negative face confused with a neutral face) errors. See Table 1 for a list of error types.
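The scoring rule described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' scoring script: the function name `score_sort`, the `VALENCE` mapping, and the example piles are hypothetical. It assumes that each error percentage is taken over all faces in the set, which is consistent with the values in Table 1 (e.g., 1.66% is about 2 of 120 faces if the long version contains 20 identities posing 6 expressions each), and that ties for the predominant expression are broken by first occurrence.

```python
from collections import Counter

# Valence of each posed expression category, following the categories in the text.
VALENCE = {
    "anger": "NEG", "disgust": "NEG", "fear": "NEG", "sadness": "NEG",
    "happiness": "POS", "neutral": "NEUT",
}

def score_sort(piles):
    """Return (number of piles, error % by type, total error %).

    `piles` is a list of piles; each pile is a list of expression labels.
    Percentages use the total number of faces as denominator (an assumption).
    """
    errors = Counter()
    total_faces = sum(len(pile) for pile in piles)
    for pile in piles:
        # Predominant expression = most frequent label in the pile.
        # Ties fall to the label seen first, an arbitrary choice made here.
        predominant, _ = Counter(pile).most_common(1)[0]
        for face in pile:
            if face != predominant:
                # Error type is "<valence of face>-<valence of pile>", e.g. NEG-NEG.
                errors[f"{VALENCE[face]}-{VALENCE[predominant]}"] += 1
    pct_by_type = {k: 100 * v / total_faces for k, v in errors.items()}
    total_pct = 100 * sum(errors.values()) / total_faces
    return len(piles), pct_by_type, total_pct

# Hypothetical valence-based sort of 14 faces (made-up data, not a patient's sort).
example_piles = [
    ["sadness", "sadness", "sadness", "anger", "anger", "fear", "fear", "disgust"],
    ["happiness", "happiness", "happiness", "neutral"],
    ["neutral", "neutral"],
]
n_piles, pct_by_type, total_pct = score_sort(example_piles)
```

In this made-up example, the five non-pouting faces in the first pile count as within-valence (NEG-NEG) errors and the neutral face among the smiles counts as a cross-valence (NEUT-POS) error.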

Table 1.

Emotion Free Sort Performance

| | # piles | NEG-NEUT | POS-NEUT | NEG-NEG | NEUT-NEG | POS-NEG | NEG-POS | NEUT-POS | % Total Errors |
|---|---|---|---|---|---|---|---|---|---|
| EGa | 3 | 1.66% | 0% | 46.67%* | 5.83% | 0% | 1.66% | 5.83%* | 61.66%* |
| FZb | 4 | 0% | 0% | 44.44%* | 0% | 0% | 0% | 0% | 44.44% |
| CPb | 4 | 0% | 0% | 36.11%* | 0% | 0% | 2.77% | 0% | 38.89% |
| 44 OAa | 7.82 (SD=2.99) | 2.88% | 0.13% | 21.72% | 2.80% | 0.27% | 0.55% | 1.69% | 30.04% |

a: long variant of free sort

b: short variant of free sort

*: indicates statistically different from controls (p<.05)

Abbreviations:

SD: standard deviation

OA: older adults

NEG-NEUT: errors in which negative faces were put in a pile of predominantly neutral faces

POS-NEUT: errors in which positive faces were put in a pile of predominantly neutral faces

NEG-NEG: errors in which one type of negative face was put in a pile consisting predominantly of another negative face

NEUT-NEG: errors in which neutral faces were put in a pile of predominantly negative faces

POS-NEG: errors in which positive faces were put in a pile of predominantly negative faces

NEG-POS: errors in which negative faces were put in a pile of predominantly positive faces

NEUT-POS: errors in which neutral faces were put in a pile of predominantly positive faces

We used a modified t-test (Anderson et al., in press) to statistically compare patients’ error percentages to those of the 44 control participants. This method is frequently used in case studies to compare an individual patient’s score to the scores of a control sample.
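For readers unfamiliar with single-case statistics, one widely used modified t-test is that of Crawford and Howell (1998), which treats the control mean and standard deviation as sample estimates rather than population parameters: t = (x - M) / (SD × sqrt((n + 1) / n)), with n - 1 degrees of freedom. The sketch below assumes this is the form of the test used here; the function name is hypothetical. Because Table 1 reports a control standard deviation only for the number of piles, the example uses that measure purely as an arithmetic illustration. It is not an analysis reported in this paper.

```python
import math

def crawford_howell_t(case_score, control_mean, control_sd, n_controls):
    """Modified t statistic comparing one case to a small control sample.

    Returns (t, degrees_of_freedom).
    """
    # The (n + 1) / n term inflates the SD to reflect sampling error
    # in the control mean and standard deviation.
    standard_error = control_sd * math.sqrt((n_controls + 1) / n_controls)
    t = (case_score - control_mean) / standard_error
    return t, n_controls - 1

# Illustration only: EG's 3 piles vs. controls (M = 7.82, SD = 2.99, n = 44).
t_stat, df = crawford_howell_t(3, 7.82, 2.99, 44)
print(f"t({df}) = {t_stat:.2f}")  # prints "t(43) = -1.59"
```

The 43 degrees of freedom in this sketch match those reported for the patients’ NEG-NEG comparisons below (e.g., t(43)=2.78).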

Results and Discussion

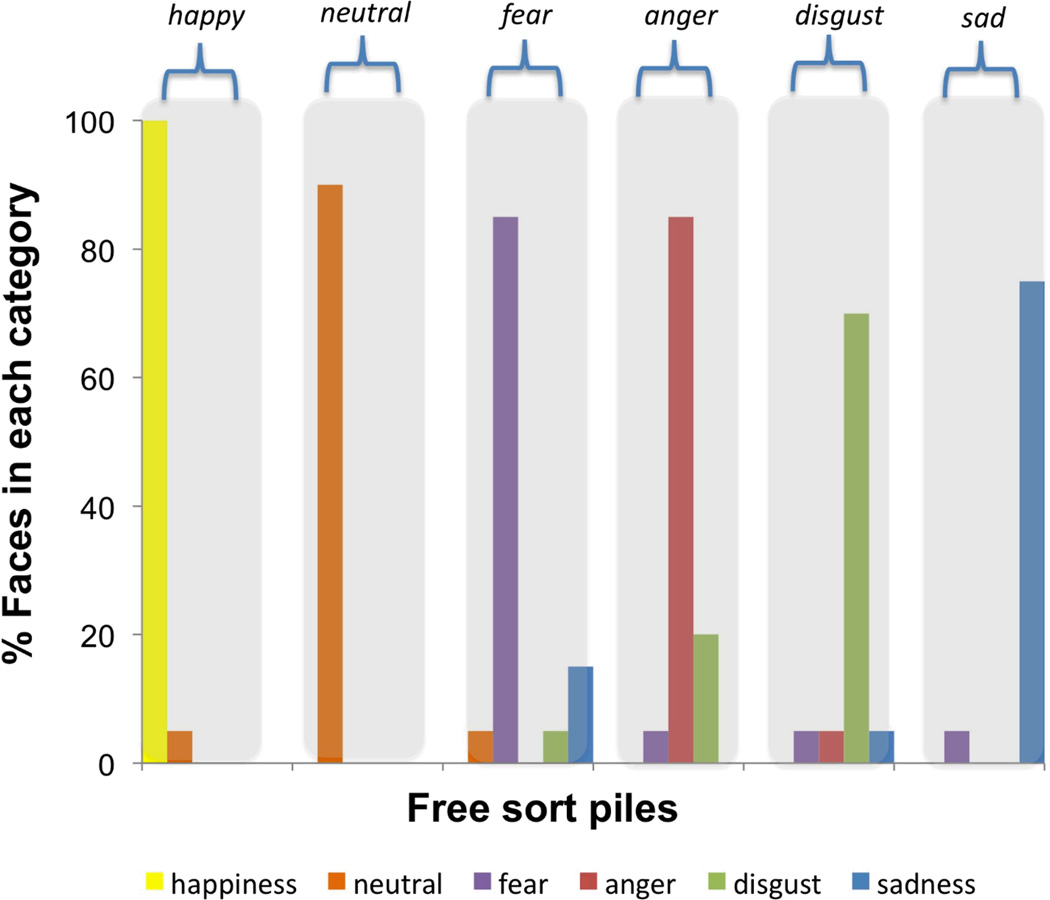

Consistent with our hypothesis that concept knowledge is necessary for normal emotion perception, control participants, who had intact concept knowledge for discrete emotion, spontaneously perceived scowling, pouting, wide-eyed, wrinkle-nosed, smiling, and neutral faces as instances of anger, sadness, fear, disgust, happiness, and neutral emotion, respectively. This occurred despite the open-ended nature of our instructions to sort the faces into the categories they found most “meaningful.” Control participants on average produced six (or more) piles to represent the discrete emotion categories in the set and tended not to confuse negative faces with one another in their piles (i.e., had low NEG-NEG error rates; see Table 1). The majority of control participants (61%) spontaneously produced either six or seven piles and 96% of control participants produced six or more. Only two control participants (4%) produced fewer than six piles on the sort task; one participant produced four piles and one produced five. Notably, no control participants produced three piles. That only one individual from the control sample (2.2%) spontaneously produced four piles on the sort task, and none produced three, stands in stark contrast to the fact that 100% of our patient sample produced four or fewer piles on the sort task. See Table 1 for controls’ and patients’ mean error rates and Figure 3 for an example of a control participant’s performance.

Figure 3. Example of a control participant’s performance on the free sort task.

A 69-year-old man made six piles to represent the six categories.

In contrast to control participants, and as predicted, patients with semantic dementia, who have impaired concept knowledge, did not spontaneously perceive discrete emotion on faces. The patients in our case study demonstrated preserved affect perception, however, consistent with our hypothesis that affective processing would be intact even in the presence of impaired conceptual knowledge. One interpretation of these findings is that patients were able to perceive discrete emotion on faces, but merely thought that affect was the more “meaningful” category. Yet patients’ performance on the various control tasks effectively rules out this alternative interpretation. For instance, no patient was able to sort by discrete emotion when asked to sort the faces into six categories, or when explicitly asked to sort into piles for “anger,” “disgust,” “fear,” “sadness,” “happiness” or “neutral.” Another interpretation of our findings is that affect perception was merely easier for patients than discrete emotion perception. Again, the performance of the control participants, along with the performance of the patients on the various control tasks, rules out this alternative interpretation. If affect perception were easier than discrete emotion perception, then control participants could also have taken the “easy” route and sorted faces by affect, but they did not. More to the point, patients continued to sort by affect on the control tasks, even when these tasks provided extra structure and removed cognitive load by cuing patients to the number, appearance, and even names of the discrete emotion categories. The most parsimonious explanation of our findings is thus that patients had a preserved ability to perceive affect but were unable to perceive discrete emotion on faces.

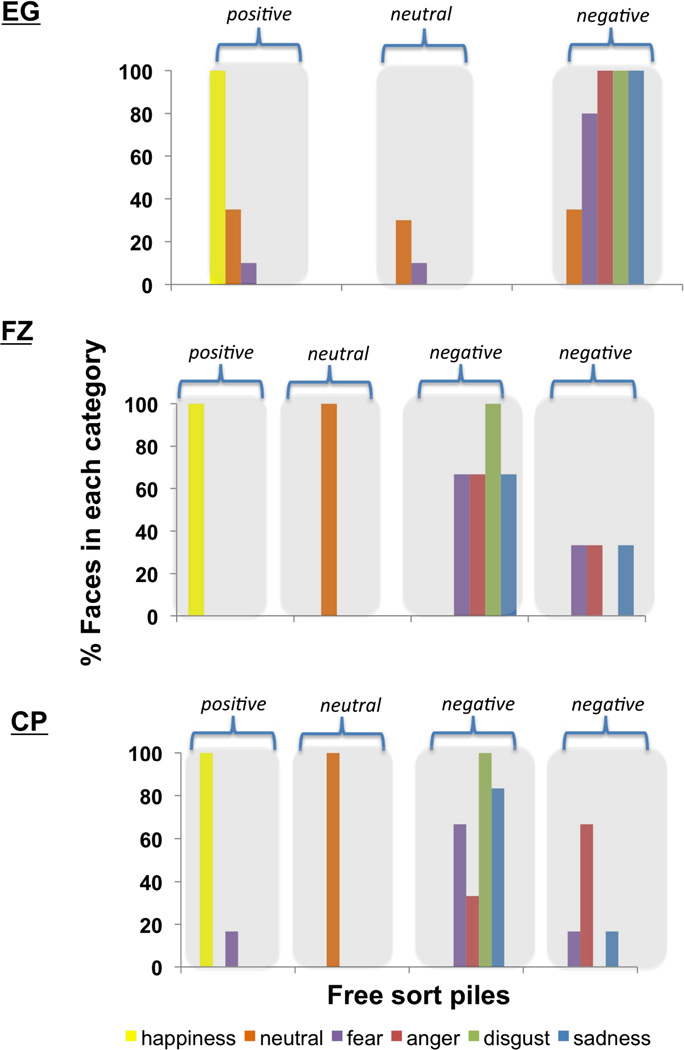

We begin by discussing the findings from patient EG, who was our first case, and as a result, performed fewer control tasks than subsequent cases. We next discuss the findings from patients FZ and CP, who performed all the control tasks in our battery.

Patient EG

Emotional free sort

Consistent with our hypothesis that concept knowledge is necessary for discrete emotion perception, but not affect perception, EG free sorted emotional facial expressions into three piles (see Figure 4) that he later labeled “happy,” “nothing” and “rough.” Compared to controls, EG made more errors in which he confused negative faces (scowling, pouting, wide-eyed and wrinkle-nosed faces) with each other [t(43)=2.78, p<.01; see Table 1], indicating that he could not perceive the differences between expressions for anger, disgust, fear, and sadness.

Figure 4. Patients’ performance on the emotion free sort task.

In EG’s free sort, the first pile contained predominantly happy faces, the second pile contained predominantly neutral faces and the third pile contained predominantly negative faces (scowling, pouting, wide-eyed and wrinkle-nosed faces). In FZ’s free sort, the first pile contained all happy faces, the second pile contained all neutral faces, and the third and fourth piles contained all negative faces. In CP’s free sort, the first pile contained predominantly happy faces, the second pile contained all neutral faces, and the third and fourth piles contained all negative faces.

Face-anchored sort

EG’s inability to distinguish negative discrete emotional expressions from one another was not due to an inability to detect the facial actions of pouting, scowling, wide-eyes, etc. or a general inability to perform any sort task. EG performed the control face-anchored sort task perfectly; he could detect perceptual differences in the expressions and match a scowl to a scowl, a pout to a pout, and so forth. In light of these findings, his performance on the free sort indicated that without access to emotion concept knowledge, he did not understand the psychological meaning of facial expressions at a level more nuanced than simple affective valence.

Patients FZ and CP

Emotional free sort

Both FZ and CP performed similarly to EG on the emotional free sort. FZ produced 4 piles (see Figure 4), which he labeled “happy,” “sad,” “normal,” and a fourth pile that he variously called “sad,” “mad,” and “questioning” at different points throughout the study (indicating that what these faces shared in common was negative valence). Like EG, FZ made more errors in which he confused negative faces with one another (scowling, pouting, wide-eyed and wrinkle-nosed faces) than did controls [t(43)=2.53, p<.02; see Table 1], but he never confused negative faces for positive (smiling) faces.

CP made four piles (see Figure 4), which she labeled “funny/happy,” “regular,” “not up,” and “really not up at all.” Like EG and FZ, CP made more errors in which she confused negative faces with one another (scowling, pouting, wide-eyed and wrinkle-nosed faces) than did controls [t(43)=1.60, p<.058; one-tailed; see Table 1], but she rarely confused negative faces with positive (smiling) faces. Although CP produced one pile that contained predominantly scowling faces on the emotion free sort, we do not think this is evidence that she understood the category of anger. First, CP did not produce this pile spontaneously. She began the task by sorting faces into three piles representing positive, neutral and negative affect (piles 3 and 4 were a single pile), but she randomly split this negative pile into two negative piles following a cue from researchers that she could check her piles before moving on. The fact that she split her pile into additional piles following a cue from the researchers suggests that she might have realized that there should be more categories in the set (even if she could not perceive them). Second, the names that CP spontaneously used to label these two piles imply that she did not see the faces in pile 3 as categorically different from the faces in pile 4. Rather, the labels “not up” and “really not up” suggest that she experienced the faces in her two negative piles as differing in intensity of unpleasantness (although the faces she placed in this pile were not rated as more intense by a separate group of healthy individuals). Finally, as we discuss below, CP did not show a consistent pattern of distinguishing scowling faces from other negative faces in the subsequent sort tasks that she and FZ performed.

At first blush, it might also appear that both FZ and CP were able to specifically perceive disgust because they placed all wrinkled-nose faces in a single pile in the free sort task, but it is unlikely that they were displaying discrete emotion perception. When asked to perform later sorts (e.g., the number- and word-anchored sorts), neither FZ nor CP continued to place wrinkled-nose faces into a single pile (e.g., see Figure 5 for a depiction of CP’s number-anchored sort, where she placed wrinkled-nose faces in three of the four negative piles she created), indicating instability in their perception of these faces.

Face-anchored sort

Like EG, FZ and CP completed the face-anchored sort to ensure that they could in fact distinguish the perceptual differences on the negative faces.³ Both FZ and CP performed better on this task than they had on the emotion free sort task (see Table 2), indicating that their performance on the emotion free sort was unlikely to stem from the inability to detect perceptual differences on the faces. Like EG, their performance suggested that they could detect differences between facial expressions but did not understand the psychological meaning beyond basic affective valence.

Table 2.

Performance on All Emotion Sort Tasks

| | # piles | NEG-NEUT | POS-NEUT | NEG-NEG | NEUT-NEG | POS-NEG | NEG-POS | NEUT-POS | % Total Errors |
|---|---|---|---|---|---|---|---|---|---|
| FZ | | | | | | | | | |
| Free sort | 4 | 0% | 0% | 44.44% | 0% | 0% | 0% | 0% | 44.44% |
| Number anchor | 5 | 0% | 0% | 33.33% | 0% | 0% | 2.78% | 2.78% | 38.89% |
| Face anchor | 8 | 0% | 0% | 19.44% | 0% | 0% | 0% | 0% | 19.44% |
| Word anchor | 6 | 0% | 0% | 27.78% | 0% | 0% | 2.78% | 0% | 30.56% |
| CP | | | | | | | | | |
| Free sort | 4 | 0% | 0% | 36.11% | 0% | 0% | 2.77% | 0% | 38.89% |
| Number anchor | 6 | 2.86% | 0% | 34.29% | 0% | 0% | 0% | 2.86% | 39.00% |
| Face anchor | 6 | 5.71% | 0% | 17.14% | 0% | 0% | 0% | 0% | 22.00% |
| Word anchor | 6 | 5.71% | 0% | 31.43% | 0% | 0% | 0% | 0% | 36.00% |

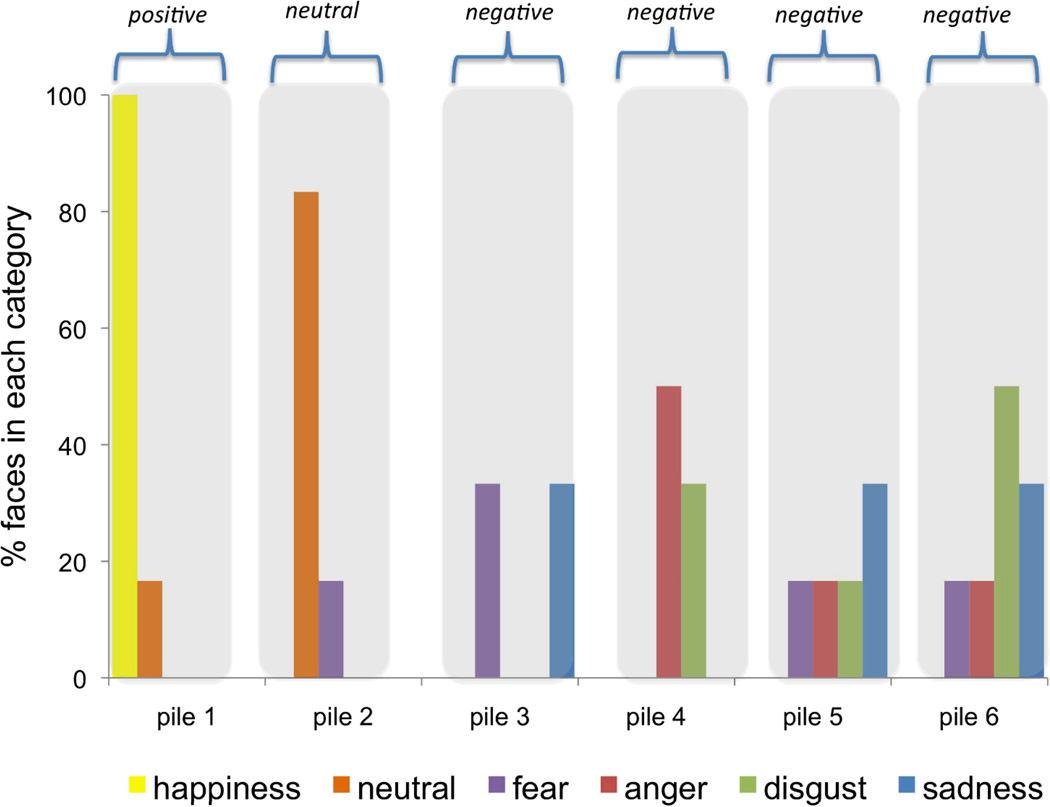

Number-anchored sort

FZ and CP performed the number-anchored sort to provide additional support for the interpretation that they did not perceive six meaningful categories in the test stimuli (even when they were cued to the correct number). FZ made five piles corresponding to affect (one pile for smiling faces, one for neutral faces, and three piles containing various mixes of negative faces in which he confused scowling, pouting, wide-eyed and wrinkle-nosed faces; see Table 2 for errors). CP made six piles corresponding to affect (one pile for smiling faces, one for neutral faces, and four piles containing various mixes of negative faces in which she confused scowling, pouting, wide-eyed and wrinkle-nosed faces; see Table 2 for errors). Notably, neither patient seemed to think that six categories were appropriate for describing the perceptual categories present in the face set. FZ chose not to use the sixth anchor during his sort and CP spontaneously asked why we had asked her to sort the stimuli into so many piles. This number-anchored sort also allowed us to observe the instability in both patients’ negative piles across sort tasks. For instance, although CP produced one pile in the free sort that contained more scowling faces than the other pile, these scowling faces were distributed across three negative piles in the number-anchored sort, indicating that she did not in fact perceive them as members of a single coherent emotion category (see Figure 5).

Figure 5.

When asked to sort faces into six piles anchored with the numbers 1–6, CP created one pile for positive faces, one for neutral faces and four for negative faces. This task indicated the instability in her sorting from one instance to the next.

The instability in sorting that FZ and CP demonstrated from one task to the next is similar to the instability in sorting that was observed in the patient LEW, who became aphasic after a stroke (Roberson et al., 1999). LEW produced different piles when asked to sort faces across three different instances. These findings suggest that without access to the meaning of words, patients cannot make reliable psychological interpretations of discrete emotional facial expressions across instances. In comparison to the earlier work with LEW, our findings are novel in that our patients demonstrated stable affect perception across sort tasks, even as they could not reliably distinguish sadness, fear, disgust, and anger from one another across tasks. Moreover, unlike LEW, who had deficits in lexical retrieval but not semantic memory, our patients’ lack of discrete emotion concept knowledge availability provides the best test of the hypothesis that discrete emotion concept knowledge is necessary for discrete emotion perception (but not affect perception).

Word-anchored sort

FZ and CP next performed the word-anchored sort to address the possibilities that 1) they did not find discrete emotion categories to be the most “meaningful” categories in the set, but could sort by these categories when prompted, or 2) they were merely unable to spontaneously retrieve the words to support discrete emotion perception, but could perform the task if we provided the correct words for them. Adding emotion words to a discrete emotion perception task almost always improves healthy adults’ performance: they are much more “accurate” at detecting the discrete emotional meaning of a facial action (e.g., a scowl) when asked to select the meaning from a list of words (e.g., “anger,” “disgust,” “fear,” “happiness,” “sadness”) than when they are asked to spontaneously generate the label themselves (Russell, 1994). Adults remember facial expressions as being more intense exemplars of a particular discrete emotion (e.g., happiness) when they have previously paired that facial expression with a word (e.g., “happy”) than a non-word (e.g., a nonsense word) (Halberstadt et al., 2009; Halberstadt & Niedenthal, 2001). Providing labels for facial expressions can also impose the perception of categories where none existed before in healthy adults (Fugate et al., 2010). Even young children are more accurate when asked to match a face (e.g., scowl) to a word (e.g., “anger”) than when asked to match a face to another face depicting the same expression (e.g., another scowl) (Russell & Widen, 2002). Yet anchoring the piles with emotion words did not improve FZ’s and CP’s performance (see Table 2); their error rate increased above the level observed in the face-anchored sort. Words did not help FZ and CP because they did not understand their meaning (e.g., CP spontaneously asked “what is anger?” as if she had never encountered the word before). Even our attempts to describe a word’s meaning to patients (e.g., “anger is a feeling you have when someone does something bad to you”) did not help, and patients could not use this information to make meaning of the facial expressions posed in the photographs. These findings confirm that our patients did in fact have impaired concepts for emotion.

Identity sort

Finally, FZ and CP performed the identity sort to rule out alternative interpretations that they did not understand the instructions of a sort task, had visual deficits that impaired performance on the emotional sort tasks, or had general executive impairments that would interfere with any sorting task. Both FZ and CP sorted the faces perfectly by identity (producing 0 errors), ruling out the possibility that their performance on the previous tasks was caused by other cognitive or perceptual deficits unrelated to emotion concept knowledge.

Conclusion

Our findings are consistent with rapidly growing evidence that emotion concept knowledge supports the normal perception of discrete emotion categories such as anger, disgust, fear, sadness, etc. (for reviews see Barrett, 2011; Barrett, Lindquist, et al., 2007; Lindquist & Gendron, 2013; Roberson et al., 2010; Widen, 2013; Widen & Russell, 2008b). Previous findings from our labs indicate that temporarily impairing access to and use of emotion concept knowledge in healthy young individuals impairs discrete emotion categorization (Lindquist et al., 2006), influencing even the formation of emotion percepts from the structural features of posed faces (Gendron et al., 2012). By contrast, adding words to a task helps healthy participants perceive categorical boundaries between posed affective facial expressions where they otherwise could not (Fugate et al. 2010). Children (Widen & Russell, 2003) and adults (Nook et al., in prep) are more accurate at pairing a scowl with the word “anger” than with another scowling face, suggesting that words signifying concept knowledge might actually add something to the perception of a discrete emotion, transforming a percept of a negative face into a discrete percept of anger. Even neuroimaging evidence is consistent with the idea that concept knowledge plays a role in constructing instances of discrete emotion: brain areas involved in the representation of semantic knowledge such as the medial prefrontal cortex, anterior temporal lobe, medial temporal lobe, and ventrolateral prefrontal cortex (Binder et al., 2009) are routinely involved in both emotional perceptions and experiences across the neuroimaging literature (Lindquist, Wager, Kober, et al., 2012). Our data thus add a crucial dimension to this literature by demonstrating that adults with semantic impairment due to anterior temporal lobe neurodegeneration cannot perceive anger, sadness, fear, or disgust as discrete emotions in people’s faces.

Previous research has documented general decreases in discrete emotion perception accuracy in patients with semantic dementia when they are asked to pair faces with words (Calabria et al., 2009; Miller et al., 2012; Rosen et al., 2002). To date, these data have been interpreted with the understanding that language is epiphenomenal to emotion: deficits observed on discrete emotion perception tasks are thought to stem from difficulties labeling stimuli, not from difficulties in discrete emotion perception per se (e.g., Miller et al., 2012). Our findings show that patients’ inability to perceive discrete emotion is directly linked to their semantic impairments in a task that did not require the use of emotion words, and that this inability cannot be attributed to other deficits such as loss of executive control, prosopagnosia, visuospatial impairments, or affective deficits.

Our findings might, at first blush, seem inconsistent with other recent evidence from frontotemporal dementia (FTD) patients (including, but not limited to, semantic dementia patients) that specific patterns of neurodegeneration spanning frontal, temporal, and limbic regions are associated with impairments in labeling specific emotions (Kumfor et al., 2013). For instance, Kumfor et al. found that across patients with semantic dementia and other variants of FTD, deficits in labeling wide-eyed faces as “fear” were relatively more associated with neurodegeneration in the amygdala whereas deficits in labeling wrinkled-nose faces as “disgust” were relatively more associated with neurodegeneration in the insula. Although the authors took this as evidence for the biological basicness of certain discrete emotion categories, these findings might not ultimately be at odds with our own findings. Because the authors looked for areas of neurodegeneration that correlated with impairments in the perception of specific discrete emotions (while controlling for relationships between brain areas and impairments in perceiving other discrete emotions) they were not likely to reveal brain areas, such as the anterior temporal lobe, that are general to impairments in perceiving all negative discrete emotions. Growing evidence demonstrates that concept knowledge is represented in a “hub and spokes” manner (Patterson et al., 2007), in which the anterior temporal lobe serves as a “hub” to a set of “spokes” consisting of patterns of brain activity spanning other regions involved in sensation, motor behavior, affect and language, such as those investigated by Kumfor and colleagues. 
Although speculation at this point, Kumfor et al.’s (2013) findings and our own might thus be evidence for both “spokes” and “hubs” in the representation of emotion concept knowledge—concept knowledge about certain discrete emotions might be supported by distributed and somewhat distinctive patterns of brain activity, but these patterns might converge functionally in the anterior temporal lobe. Although research to date has not explicitly assessed the distributed patterns of brain activity involved in representing perceptions of different discrete emotions, meta-analytic evidence from our own lab suggests that it is quite distributed (Lindquist et al., 2012). Other evidence from cognitive neuroscience is suggestive that a hub and spokes formation might represent emotion knowledge. fMRI studies demonstrate distributed patterns of brain activity associated with perceptions of other semantic categories such as bicycles, bottles, athletes, etc. (Huth et al., 2012), but focal lesions to the anterior temporal lobes (as occurs in semantic dementia) impair perception of these categories (Lambon Ralph et al., 2010). Future research should thus investigate the extent to which concept knowledge of emotion is represented by distributed, multi-modal, brain areas united by an amodal hub in the anterior temporal lobe.

Importantly, our findings demonstrate something that previous findings assessing emotion perception in semantic dementia patients have not: despite their semantic impairments, patients in our case study were still able to perceive affective valence in faces. These findings suggest that perceptions of affective valence are more psychologically basic than (i.e., superordinate to) perceptions of discrete emotion and counter basic emotion views claiming that valence is a descriptive “umbrella” term that is applied to a face after it is perceived as an instance of discrete emotion (e.g., that a person needs to know that a face is fearful to know it is unpleasant; Keltner & Ekman, 2000). It is possible to argue that affect perceptions are just easier than discrete emotion perceptions. Then again, it is equally possible to claim that valence perception is more difficult than discrete emotion perception (because it involves seeing similarity across perceptually distinct facial expressions). Regardless, patients persisted in making valence distinctions, even when subsequent control tasks provided extra structure and removed cognitive load by cuing patients to the number, appearance, and even names of the discrete emotion categories, suggesting that affect perceptions were all patients were capable of.

The finding that affect is superordinate to judgments of discrete emotion is consistent with several sources of data. First, this finding is consistent with behavioral research in healthy adults demonstrating that the dimension of valence describes similarities in discrete emotion categories (Russell & Barrett, 1999). Second, this finding is consistent with evidence showing that infants, toddlers, and non-human primates, who lack sophisticated language capacity, can perceive affective valence in faces, voices, and bodies even when they do not reliably distinguish discrete emotional expressions from one another (for a discussion, see Lindquist, Wager, Bliss-Moreau, et al., 2012). Only as children acquire the meaning of emotion words such as “anger,” “fear,” “sadness” and “disgust” with normal development do they acquire the ability to reliably distinguish between scowling, wide-eyed, pouting and wrinkle-nosed faces as expressions of these categories (Widen & Russell, 2008a). Third, this finding is consistent with cross-cultural evidence that all cultures perceive valence on faces, even amidst differences in the specific discrete emotions they perceive on faces (Russell, 1991) or even differences in whether discrete emotions are perceived at all (Gendron et al., under revision). Finally, similar to other “last in, first out” theories of development vs. neurodegeneration, our findings are consistent with other research on semantic dementia documenting the progressive “pruning” of concepts over the course of disease progression from the semantically subordinate level (e.g., lion vs. tiger) to the basic level (e.g., cat vs. dog) to the superordinate level (e.g., animal vs. plant) (Hodges et al., 1995; Rogers et al., 2006). To our knowledge, our findings are the first to demonstrate a similar pattern of “pruning” for emotion concepts. 
Although it is not addressable in the present study, future research might use longitudinal methods to specifically investigate the “pruning” hypothesis as it pertains to discrete emotion v. valence perception. If valence judgments rely on concept knowledge, and valence concepts are superordinate to discrete emotion concepts, then we might expect valence perception to diminish over the course of neurodegeneration, following discrete emotion perception. Yet if intact valence perception relies on other psychological mechanisms besides concept knowledge per se (e.g., “mirroring” or mimicry of others’ affective states that occurs in the so-called “mirroring” network of the brain; Spunt & Lieberman, 2012) then it is possible that valence perception will be maintained over the course of the disease.

Implications

The observation that people with semantic dementia have preserved affect perception but impaired discrete emotion perception has important implications for both basic and applied science. These findings cannot be accommodated by “basic emotion” accounts (Ekman et al., 1987; Keltner & Ekman, 2000; Lewis, 1993; Matsumoto et al., 2008; Sauter et al., 2010) which assume that emotional expressions are “psychological universals and constitute a set of basic, evolved functions, that are shared by all humans” and which have evolved from early primate communication (cf., Sauter et al., 2010). Although our findings run contrary to basic emotion models, our study was not designed to specifically test other models of emotion, such as appraisal models, that themselves make no predictions about the role of language and conceptual knowledge in emotion perception. Appraisal models make specific predictions about the role of appraisal “checks” (Scherer & Ellgring, 2007) or “action tendencies” (Frijda, 1987) for the production of facial expressions. Aside from evidence suggesting that healthy adults make appraisal-consistent personality inferences about scowling, pouting and smiling faces (Hareli & Hess, 2010), there is little evidence specifically assessing the role of appraisal checks in emotion perception. Relatively more studies have addressed the role of action tendencies during emotion perception, with most studies focusing on the perceiver’s general approach v. avoidance behaviors following the perception of discrete emotion in a posed face (e.g., wide-eyed v. scowling faces; Adams et al., 2006; Marsh et al., 2005). It thus remains a possibility that patients could sort by appraisal checks or action tendencies if prompted to do so, but such a question is beyond the scope of our study. 
Our data demonstrate that participants did not spontaneously sort faces based on appraisals (e.g., whether the person expressing emotion has control over the situation, whether the person expressing emotion finds the situation certain) or action tendencies (e.g., whether the person expressing emotion is likely to flee the situation or would make the perceiver want to flee the situation). Nor did any of the patients in our sample make comments related to appraisals or action tendencies when sorting faces (e.g., none said “he looks uncertain” or “he is going to run away”). One possibility is that the content of appraisals concerning the situation (e.g., knowing whether the situation in which emotion occurs is certain, controllable, etc.) and knowledge about which action tendencies accompany certain emotions are part of the discrete emotion concept knowledge (Barrett & Lindquist, 2008; Barrett, Mesquita, et al., 2007; Lindquist & Barrett, 2008) that becomes impaired in semantic dementia. This hypothesis would be important to test in future research.

In conclusion, our data suggest that 1) the perception of affect and 2) the categorization that is supported by emotion concept knowledge (Barrett, 2006; Barrett, Lindquist, et al., 2007; Lindquist & Gendron, 2013; Russell, 2003) are both important “ingredients” in normal emotion perception. These findings augment the growing argument that discrete emotions are psychologically constructed events that are flexibly produced in the mind of a perceiver and dependent on context, culture and language, rather than innate modules for specific invariant categories (Barrett, 2006, 2012; Barrett, Lindquist, et al., 2007; Lindquist & Gendron, 2013). Accordingly, it seems worth investigating in future research whether the discrete emotion perception deficits that have been documented in aging (Ruffman et al., 2008) and in patients with neuropsychiatric disorders (e.g., autism; Baron-Cohen & Wheelwright, 2004; e.g., schizophrenia; Kohler et al., 2010; e.g., Alzheimer’s Disease; Phillips et al., 2010) originate from changes in more fundamental psychological processes (e.g., conceptual processing and/or affective processing). Finally, the results presented here suggest that the theories of discrete emotion being disseminated in textbooks and scientific papers throughout the Western world, and being used to train security agents and other government officials, should be refined by considering the role of discrete emotion concept knowledge in discrete emotion perception.

Figure 2. Example of face stimuli.

Examples of face stimuli from the IASLab set used in the face sort tasks.

Acknowledgements

We thank Derek Isaacowitz and Jenny Stanley for their assistance in collecting control data. This research was supported by a National Institutes of Health Director’s Pioneer award (DP1OD003312) to Lisa Feldman Barrett, a National Institute on Aging grant (R01-AG029840), an Alzheimer’s Association grant to Bradford Dickerson, and a Harvard University Mind/Brain/Behavior Initiative Postdoctoral Fellowship to Kristen Lindquist. We appreciate the commitment of our participants and their families to this research.

Footnotes

1. In this paper, as in our prior work, we use the terms “affect” or “affective valence” to refer to hedonic tone (i.e., positivity, negativity, and neutrality). We use the term “discrete emotion” to refer to instances of specific emotion categories such as anger, disgust, fear, etc.

2. Unfortunately, since there were not multiple basic-level categories within the superordinate category of “positive,” we could not conduct a comparable analysis for positive faces. This is because most basic emotion accounts consider happiness to be the only positive basic emotion.

3. FZ and CP performed the face-anchored sort after the number-anchored sort, although we discuss their performance on the face-anchored sort first for ease of comparison with EG (who did not perform a number-anchored sort). Otherwise, the control sorts are discussed in the order in which they were implemented during the testing session.

K.A.L. and M.G. contributed equally to manuscript preparation and should be considered joint first authors. L.F.B. and B.C.D. contributed equally and should be considered joint senior authors.

References

- Baron-Cohen S, Wheelright S. The empathy quotient: An investigation of adults with asperger syndrome or high functioning autism, and normal sex differences. Journal of Autism and Developmental Disorders. 2004;34:163–175. doi: 10.1023/b:jadd.0000022607.19833.00. [DOI] [PubMed] [Google Scholar]

- Barrett LF. Solving the emotion paradox: Categorization and the experience of emotion. Personality and Social Psychology Review. 2006;10:20–46. doi: 10.1207/s15327957pspr1001_2.

- Barrett LF. Emotions are real. Emotion. 2012;12:413–429. doi: 10.1037/a0027555.

- Barrett LF, Lindquist KA, Gendron M. Language as context for the perception of emotion. Trends in Cognitive Sciences. 2007;11:327–332. doi: 10.1016/j.tics.2007.06.003.

- Binder JR, Desai RH, Graves WW, Conant LL. Where is the semantic system? A critical review and meta-analysis of 120 functional neuroimaging studies. Cerebral Cortex. 2009;19:2767–2796. doi: 10.1093/cercor/bhp055.

- Bozeat S, Lambon Ralph MA, Patterson K, Hodges JR. When objects lose their meaning: What happens to their use? Cognitive, Affective, & Behavioral Neuroscience. 2002;2:236–251. doi: 10.3758/cabn.2.3.236.

- Burns B. TSA SPOT program: Still going strong. 2010. Retrieved from http://blog.tsa.gov/2010/05/tsa-spot-program-still-going-strong.html.

- Calabria M, Cotelli M, Adenzato M, Zanetti O, Miniussi C. Empathy and emotion recognition in semantic dementia: A case report. Brain and Cognition. 2009. doi: 10.1016/j.bandc.2009.02.009.

- Crawford JR, Howell DC. Comparing an individual’s test score against norms derived from small samples. The Clinical Neuropsychologist. 1998;12:482–486.

- Ekman P, Cordaro D. What is meant by calling emotions basic. Emotion Review. 2011;3:364–370.

- Ekman P, Friesen WV, O’Sullivan M, Chan A, Diacoyanni-Tarlatzis I, Heider K, et al. Universals and cultural differences in the judgments of facial expressions of emotion. Journal of Personality and Social Psychology. 1987;53:712–717. doi: 10.1037//0022-3514.53.4.712.

- Elfenbein HA, Ambady N. On the universality and cultural specificity of emotion recognition: A meta-analysis. Psychological Bulletin. 2002;128:203–235. doi: 10.1037/0033-2909.128.2.203.

- Fu CH, Mourao-Miranda J, Costafreda SG, Khanna A, Marquand AF, Williams SCR, et al. Pattern classification of sad facial processing: Toward the development of neurobiological markers in depression. Biological Psychiatry. 2008;63:656–662. doi: 10.1016/j.biopsych.2007.08.020.

- Fugate J, Gouzoules H, Barrett LF. Reading chimpanzee faces: Evidence for the role of verbal labels in categorical perception of emotion. Emotion. 2010;10:544–544. doi: 10.1037/a0019017.

- Gendron M, Lindquist KA, Barsalou LW, Barrett LF. Emotion words shape emotion percepts. Emotion. 2012;12:314–325. doi: 10.1037/a0026007.

- Gorno-Tempini ML, Hillis AE, Weintraub S, Kertesz A, Mendez M, Cappa SF, et al. Classification of primary progressive aphasia and its variants. Neurology. 2011;76:1006–1014. doi: 10.1212/WNL.0b013e31821103e6.

- Halberstadt J, Niedenthal PM. Effects of emotion concepts on perceptual memory for emotional expressions. Journal of Personality and Social Psychology. 2001:587–598.

- Halberstadt J, Winkielman P, Niedenthal PM, Dalle N. Emotional conception: How embodied emotion concepts guide perception and facial action. Psychological Science. 2009;20:1254–1261. doi: 10.1111/j.1467-9280.2009.02432.x.

- Hodges JR, Bozeat S, Lambon Ralph MA, Patterson K, Spatt J. The role of conceptual knowledge in object use: Evidence from semantic dementia. Brain. 2000;123:1913–1925. doi: 10.1093/brain/123.9.1913.

- Hodges JR, Graham N, Patterson K. Charting the progression in semantic dementia: Implications for the organization of semantic memory. Memory. 1995;3:463–495. doi: 10.1080/09658219508253161.

- Hodges JR, Patterson K. Semantic dementia: A unique clinicopathological syndrome. Lancet Neurology. 2007;6:1004–1014. doi: 10.1016/S1474-4422(07)70266-1.

- Izard CE. The face of emotion. Vol. 23. New York: Appleton-Century-Crofts; 1971.

- Keltner D, Ekman P. Facial expressions of emotion. In: Haviland-Jones JM, Lewis M, editors. Handbook of emotions. Vol. 3. New York: Guilford; 2000. pp. 236–249.

- Kohler CG, Walker JB, Martin EA, Healey KM, Moberg PJ. Facial emotion perception in schizophrenia: A meta-analytic review. Schizophrenia Bulletin. 2010;36:1009–1019. doi: 10.1093/schbul/sbn192.

- Lambon Ralph MA, Pobric G, Jefferies E. Conceptual knowledge is underpinned by the temporal pole bilaterally: Convergent evidence from rTMS. Cerebral Cortex. 2009;19:832–838. doi: 10.1093/cercor/bhn131.

- Lambon Ralph MA, Sage K, Jones RW, Mayberry EJ. Coherent concepts are computed in the anterior temporal lobes. Proceedings of the National Academy of Sciences. 2010;107:2717–2722. doi: 10.1073/pnas.0907307107.

- Lindquist KA, Barrett LF, Bliss-Moreau E, Russell JA. Language and the perception of emotion. Emotion. 2006;6:125–138. doi: 10.1037/1528-3542.6.1.125.

- Lindquist KA, Gendron M. What’s in a word: Language constructs emotion perception. Emotion Review. (in press)

- Lindquist KA, Wager TD, Bliss-Moreau E, Kober H, Barrett LF. What are emotions and where are they in the brain? Behavioral and Brain Sciences. 2012:175–184. doi: 10.1017/s0140525x1100183x.

- Matsumoto D. American-Japanese cultural differences in the recognition of universal facial expressions. Journal of Cross-Cultural Psychology. 1992;23:72–83.

- Matsumoto D, Keltner D, Shiota MN, O’Sullivan M, Frank M. Facial expressions of emotion. In: Haviland-Jones JM, Lewis M, Barrett LF, editors. Handbook of emotions. 3rd ed. New York: Guilford; 2008. pp. 211–234.

- Miller LA, Hsieh S, Lah S, Savage S, Hodges JR, Piguet O. One size does not fit all: Face emotion processing impairments in semantic dementia, behavioural-variant frontotemporal dementia and Alzheimer’s disease are mediated by distinct cognitive deficits. Behavioural Neurology. 2012;25:53–60. doi: 10.3233/BEN-2012-0349.

- Morris MW, Keltner D. How emotions work: An analysis of the social functions of emotional expression in negotiations. Review of Organizational Behavior. 2000;22:1–50.

- Phillips LH, Scott C, Henry JD, Mowat D, Bell JS. Emotion perception in Alzheimer’s disease and mood disorder in old age. Psychology and Aging. 2010;25:38–47. doi: 10.1037/a0017369.

- Roberson D, Damjanovic L, Kikutani M. Show and tell: The role of language in categorizing facial expression of emotion. Emotion Review. 2010;2:255–260.

- Roberson D, Davidoff J. The categorical perception of colors and facial expressions: The effect of verbal interference. Memory & Cognition. 2000;28:977–986. doi: 10.3758/bf03209345.

- Roberson D, Davidoff J, Braisby N. Similarity and categorisation: Neuropsychological evidence for a dissociation in explicit categorisation tasks. Cognition. 1999;71:1–42. doi: 10.1016/s0010-0277(99)00013-x.

- Rogers TT, Ivanoiu A, Patterson K, Hodges JR. Semantic memory in Alzheimer’s disease and the frontotemporal dementias: A longitudinal study of 236 patients. Neuropsychology. 2006;20:319–335. doi: 10.1037/0894-4105.20.3.319.

- Rosen HJ, Pace-Savitsky K, Perry RJ, Kramer JH, Miller BL, Levenson RW. Recognition of emotion in the frontal and temporal variants of frontotemporal dementia. Dementia and Geriatric Cognitive Disorders. 2004;17:277–281. doi: 10.1159/000077154.

- Rosen HJ, Perry RJ, Murphy J, Kramer JH, Mychack P, Schuff N, et al. Emotion comprehension in the temporal variant of frontotemporal dementia. Brain. 2002;125:2286–2286. doi: 10.1093/brain/awf225.

- Ruffman T, Henry JD, Livingstone V, Phillips LH. A meta-analytic review of emotion recognition and aging: Implications for neuropsychological models of aging. Neuroscience and Biobehavioral Reviews. 2008;32:863–881. doi: 10.1016/j.neubiorev.2008.01.001.

- Russell JA. Is there universal recognition of emotion from facial expression? A review of the cross-cultural studies. Psychological Bulletin. 1994;115:102–141. doi: 10.1037/0033-2909.115.1.102.

- Russell JA. Core affect and the psychological construction of emotion. Psychological Review. 2003;110:145–145. doi: 10.1037/0033-295x.110.1.145.

- Russell JA, Barrett LF. Core affect, prototypical emotional episodes, and other things called emotion: Dissecting the elephant. Journal of Personality and Social Psychology. 1999;76:805–819. doi: 10.1037//0022-3514.76.5.805.

- Russell JA, Widen SC. Words versus faces in evoking preschool children’s knowledge of the causes of emotions. International Journal of Behavioral Development. 2002;26:97–97.

- Sauter DA, Eisner F, Ekman P, Scott SK. Cross-cultural recognition of basic emotions through nonverbal emotional vocalizations. Proceedings of the National Academy of Sciences. 2010;107:2408–2412. doi: 10.1073/pnas.0908239106.

- Shariff AF, Tracy JL. What are emotion expressions for? Current Directions in Psychological Science. 2011;20:395–399.

- Sprengelmeyer R, Rausch M, Eysel UT, Przuntek H. Neural structures associated with recognition of facial expressions of basic emotions. Proceedings of the Royal Society B: Biological Sciences. 1998;265:1927–1927. doi: 10.1098/rspb.1998.0522.

- Tracy JL, Robins RW. The automaticity of emotion recognition. Emotion. 2008;8:81–95. doi: 10.1037/1528-3542.8.1.81.

- Visser M, Jefferies E, Lambon Ralph MA. Semantic processing in the anterior temporal lobes: A meta-analysis of the functional neuroimaging literature. Journal of Cognitive Neuroscience. 2010;22:1083–1094. doi: 10.1162/jocn.2009.21309.

- Weinberger S. Airport security: Intent to deceive? Nature. 2010;465:412–415. doi: 10.1038/465412a.

- Widen SC. Children’s interpretation of other’s facial expressions. Emotion Review. (in press)

- Widen SC, Russell JA. Children acquire emotion categories gradually. Cognitive Development. 2008a;23:291–312.

- Widen SC, Russell JA. Young children’s understanding of others’ emotions. In: Haviland-Jones JM, Lewis M, Barrett LF, editors. Handbook of emotions. 3rd ed. New York: Guilford; 2008b. pp. 348–363.

- Widen SC, Russell JA. Differentiation in preschooler’s categories of emotion. Emotion. 2010;10:651–661. doi: 10.1037/a0019005.