Abstract

Objectives:

The objectives of this review are to summarize the current training modalities and assessment tools used in urological robotic surgery and to propose principles to guide the formation of a comprehensive robotics curriculum.

Materials and Methods:

The PubMed database was systematically searched for relevant articles, and their citations were used to broaden the search. These articles were reviewed and summarized with a focus on novel developments.

Results:

A multitude of training modalities, including didactic, dry lab, wet lab, and virtual reality, have been developed. The use of these modalities can be divided into basic skills-based exercises and more advanced procedure-based exercises. Clinical training has largely followed traditional methods of surgical teaching, with the exception of the unique development of tele-mentoring for the da Vinci interface. Tools to assess both real-life and simulator performance have been developed, including adaptations of Fundamentals of Laparoscopic Surgery and Objective Structured Assessment of Technical Skill, and novel tools such as Global Evaluative Assessment of Robotic Skills.

Conclusions:

A standardized curriculum for robotic surgery built from these different entities remains elusive. Selection of training modalities and assessment tools should be based on validity supported by performance data and on practical feasibility. Comparative assessment of different modalities (cross-modality validity) can help strengthen the development of common skill sets. Constant data collection must occur to guide continuing curriculum improvement.

Keywords: Curriculum, learning, robotic surgery, training

INTRODUCTION

Urology is often at the forefront of technological developments in medicine. Perhaps the clearest example of this is the ever-expanding use of robotic surgery in the field. As of 2011, four out of five radical prostatectomies in the United States were robot assisted.[1] In 2012, a 25% increase in the total number of da Vinci robotic procedures was reported compared with 2011.[2] Given the rising demand for urologists proficient in robotics, graduating residents are now expected to be experienced in the approach. Additionally, urologists practicing in the community are finding the need to adopt robotics into their practices. Even urologists experienced in open surgery require at least 200-250 robotic prostatectomy cases to reach the proficiency they hold with traditional modalities.[3]

In this review, the major modalities and utilization of these training tools for teaching robotics will be discussed. Relevant assessment tools available today for determining proficiency will also be summarized. Finally, the principles that should guide the development of a training curriculum using these building blocks will be proposed.

Training modalities

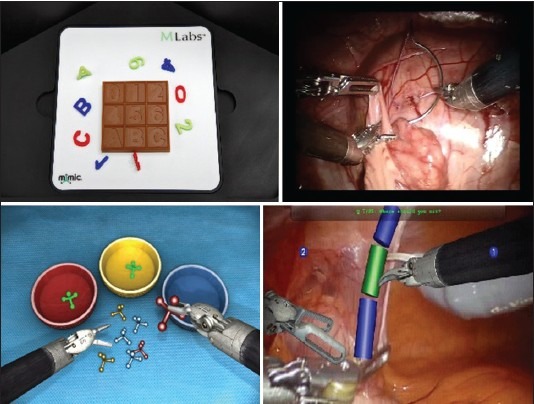

Tools for training surgeons include preclinical and clinical modalities. Within preclinical modalities, traditional methods such as didactics, inanimate exercises, tissue labs, and animal/cadaveric models have been utilized. Novel modalities such as virtual reality (VR) have been developed and are rapidly increasing in popularity [Figure 1]. Clinical experience remains the traditional method of training with observation, assisting in the operating room, and direct clinical work with mentorship. The current status and use of these modalities are reviewed here.

Figure 1.

Upper Left: inanimate tasks/dry lab (Mimic Technologies), Upper Right: Animal in vivo[10], Lower Left: Virtual reality (Mimic Technologies), Lower Right: Augmented reality (Mimic Technologies)[30]

Preclinical training

Didactic

Learners need a clear understanding of the involved pathology, physiology, and technology before acquiring technical skills.[4] Currently, a fully validated and standardized course for robotic surgery training remains elusive, but the American Urological Association (AUA) has developed a “Basics of Urologic Laparoscopy and Robotics” module within its core curriculum.[5] The objectives of this course include familiarity with robotic surgery indications, instrumentation, procedures, and postoperative care, as well as diagnosing and managing complications. This basic course represents a starting point for further development of a standardized robotic surgery training curriculum.

Inanimate tasks/dry lab

Inanimate tasks that introduce the learner to instruments and their basic functions are commonly used in laparoscopy training and have been extended to robotic surgery. Advantages of this modality include ease of access and low cost if a robot is already available.[6] Fundamentals of Laparoscopic Surgery (FLS) is a well-known, widely used, and validated assessment tool for laparoscopic surgical proficiency. It encompasses a curriculum of dry lab exercises with scoring based on time, error rate, and successful completion of the objective. The AUA has formally adapted this curriculum for urology in its Basic Laparoscopic Urologic Surgery (BLUS) curriculum. BLUS uses both traditional FLS exercises and a novel urology-specific exercise (clip-applying). As in FLS, assessment is tailored to each task.[7] While there is no robotics-specific FLS-based curriculum to date, FLS concepts have been used to assess robotic performance in the literature.[8]

Goh et al. developed four training exercises derived from the deconstruction of a robotic prostatectomy.[9] Construct validity and learning in novices were demonstrated in a small cohort. A study from the University of Southern California (USC) externally confirmed the construct validity and demonstrated good correlation between VR and in vivo robotic performance for these tasks (cross-modality validity).[10] Also at USC, Ramos et al. found that dry lab exercises derived from the Mimic VR platform had excellent construct validity and showed moderate performance correlation with the corresponding VR exercises.[11]

A group from the University of Texas developed and validated a curriculum for robotic surgery based on inanimate exercises. Initially, a list of 23 deconstructed skills from robotic operations was generated and used to develop nine exercises, each scored on time to completion and an exercise-specific error rate. A series of studies evaluated these exercises and showed face, content, and construct validity, in addition to economic feasibility for use in a robotics curriculum.[8,12,13]
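As an illustration of how a time-and-error score of this kind might be computed, the following Python sketch uses a hypothetical formula: a cutoff time minus the completion time minus a weighted error penalty, floored at zero. The `ExerciseSpec` fields, the cutoff, the penalty weight, and the proficiency threshold are all illustrative assumptions and are not values taken from the cited studies.

```python
from dataclasses import dataclass


@dataclass
class ExerciseSpec:
    """Scoring parameters for one dry lab exercise (all values hypothetical)."""
    name: str
    cutoff_seconds: float         # attempts slower than this score zero
    error_penalty_seconds: float  # seconds deducted per exercise-specific error
    proficiency_score: float      # assumed expert-derived passing score


def exercise_score(spec, completion_seconds, errors):
    """Return cutoff - time - penalty * errors, never below zero."""
    if completion_seconds < 0 or errors < 0:
        raise ValueError("time and error count must be non-negative")
    raw = (spec.cutoff_seconds
           - completion_seconds
           - spec.error_penalty_seconds * errors)
    return max(0.0, raw)


def is_proficient(spec, completion_seconds, errors):
    """True when the attempt meets the assumed proficiency score."""
    return exercise_score(spec, completion_seconds, errors) >= spec.proficiency_score


# Hypothetical exercise definition and one trainee attempt.
suturing = ExerciseSpec("running suture", cutoff_seconds=600,
                        error_penalty_seconds=10, proficiency_score=400)
attempt_score = exercise_score(suturing, completion_seconds=150, errors=2)
print(suturing.name, attempt_score, is_proficient(suturing, 150, 2))
```

Combining time and errors into a single number makes it straightforward to set one proficiency level per exercise, at the cost of hiding whether a low score reflects slowness or inaccuracy.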

Ex vivo/tissue lab

Exercises using ex vivo tissue are commonly used in surgical training to bridge inanimate and in vivo exercises. Unfortunately, there are few documented and validated exercises in this modality for robotic surgery training. Tissue-only robots are prohibitively expensive at most institutions and not widely available.

Marecik et al. used intestinal tissue to compare hand sewing and robotic sewing of an intestinal anastomosis by residents. The study showed that both quality and time to completion with the robot improved with repetition.[14] Hung et al. developed an ex vivo model for robot-assisted partial nephrectomy using a porcine kidney with an embedded Styrofoam ball. Face, content, and construct validity were shown with this model.[15]

In vivo/cadaver

Like other training tools, the use of in vivo and cadaver models has been adapted from traditional surgical training. Cadavers provide an environment with accurate anatomy, while in vivo animal models provide a surrogate for perfused and living tissue.[4] These models have been adapted for robotic surgery training courses, as noted by Schreuder et al.[6] Research into developing this modality has been more limited. A method for simulating pseudotumors and pseudothrombi in both live porcine models and cadavers by infusing gelatin, Metamucil, and methylene blue into the renal vein has been developed.[16]

Virtual reality

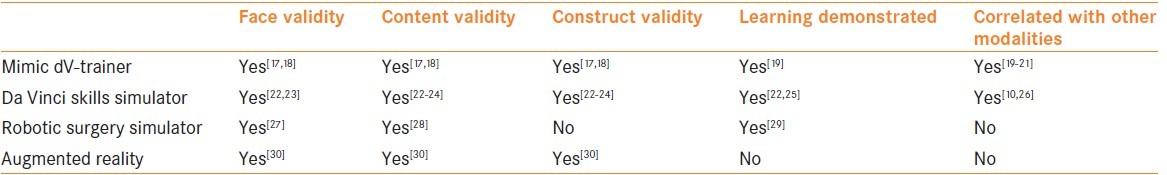

Virtual reality (VR) has been increasingly utilized given its low-stakes environment and its ability to simulate multiple exercises in different settings. Available simulators include the Mimic dV-Trainer (MdVT, Mimic Technologies, Seattle, WA, USA), da Vinci Skills Simulator (dVSS, Intuitive Surgical, Sunnyvale, CA, USA), and Robotic Surgery Simulator (RoSS, Simulated Surgical Systems, Williamsville, NY, USA). These simulators have undergone various stages of validation and have shown varying levels of educational impact [Table 1].

Table 1.

Summary: Validation of robotic simulators

Mimic dV-trainer

The MdVT is one of the most widely studied and validated models.[17,18] The console is a tabletop unit with foot pedals simulating the da Vinci Surgeon Console. Its proprietary scoring system (MScore) tracks user performance and errors to generate individual and overall scoring metrics for users. Lerner et al. demonstrated that training on the MdVT improved skill with inanimate tasks on the da Vinci Surgical System.[19] Another study found a strong correlation between performance on the MdVT and actual robotic performance on dry lab tasks, suggesting the MdVT could be used as an assessment tool for robotic skills.[20] A similar study demonstrated clear correlation between MdVT total task time and total errors and da Vinci Surgical System total task time and errors for dry lab exercises.[21]

da Vinci skills simulator

The dVSS is a “backpack” accessory that allows the da Vinci Surgical System to be used as a Mimic VR simulator outside operating hours. Face, content, and construct validity and learning have previously been demonstrated for this system.[22,23,24,25] Additionally, it has been suggested to have better face and content validity than the MdVT and is simpler to use.[24] A subjective comparison at a 2012 AUA course found the dVSS to be more beneficial to training than either the MdVT or RoSS.[4]

The concurrent and predictive validity of the dVSS with ex vivo exercises was studied at USC.[26] Baseline simulator performance significantly correlated with study baseline and final ex vivo tissue performance. A 10-week intervening training program on the simulator was found to significantly improve ex vivo performance in those with low baseline scores. Additionally, the dVSS has been shown to have cross-modality validity (correlation) when compared to inanimate tasks and in vivo performance.[10]

A recent study from USC investigated the correlation of simulator training and clinical performance amongst residents and fellows. Performance was evaluated using simulation metrics on the dVSS and Global Evaluative Assessment of Robotic Skills (GEARS) for clinical tasks. Interestingly, this initial study showed no significant correlation between dVSS and clinical performance. Of note, all study participants had already spent time on the dVSS. The authors concluded that perhaps more complex training simulations are needed, as currently only basic skills are practiced.[31]

Robotic surgery simulator

The RoSS is a stand-alone system that is designed to mimic the da Vinci Surgical System. It features full VR surgical procedures although these have not been validated. Abboudi et al. reviewed the face and content validity of this simulator.[27,28,32] Learning has also been demonstrated in the form of less time taken to complete robotic dry lab tasks with RoSS training.[29] No studies of construct validation for this modality have been published to date.

Augmented reality

Augmented reality (AR) is a novel simulation modality that combines elements of VR and didactics to create an immersive, interactive, virtual experience. It is in the initial stages of development and validation and has currently been prototyped for kidney surgery at USC. In AR, subjects are shown 3D video footage of robotic surgery and manipulate overlaid virtual instruments to identify anatomy, demonstrate technical skills, and learn the steps of the operation. An initial study has demonstrated face, content, and construct validity.[30]

Clinical training

Observation and assistance

Observational learning occurs through watching live surgeries or watching videos with or without an instructor. Its role in robotic surgery has changed little from its role in traditional surgery teaching. Graduation from observation to bedside assistant is suggested.[33]

Assisting in surgeries is a logical and necessary bridge between observation and surgical autonomy. In robotic surgery, it has been proposed that trainees start clinical training as the bedside assistant to the console surgeon. This is thought to provide knowledge of the functionality and limitations of the robot and different strategies and techniques used in different procedures.[33]

Operating under mentorship

Operating under mentorship is defined as performance of procedures by the trainee on the surgeon console under the supervision and, if necessary, the assistance of an expert robotic surgeon. Experts provide verbal instruction to the trainee and take over the operation if necessary or at technically advanced steps.[33] A nuance of robotic surgery that can make this challenging is that many robots have only one surgeon console. Thus, the expert may not have immediate control of the operation while the trainee is operating.[33] A solution to this problem lies in the use of an additional “mentoring” console that allows the expert to operate at the same time as the trainee.

Telementoring represents an additional form of mentorship under study. It allows an expert surgeon to remotely observe a robotic surgery in real-time, providing verbal advice and guidance to the operating trainee as needed. In more advanced models, the expert may indicate target areas on the visual display or even take control of the camera and instruments. In particular, the da Vinci Surgical System has features under investigation that may facilitate this modality.[34] Current challenges facing this modality include latency and bandwidth constraints and unclear medico-legal responsibilities.[35]

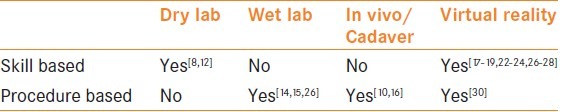

Skills and procedure-based training

Once training modalities have been defined, it is important to examine how they are being used in robotic training. Training exercises for each modality can be broken into skills- vs. procedure-based [Table 2]. An individual “skill” is an entity that has been deconstructed from one or more robotic procedures. Examples of skills include depth perception, dissection, retraction, cutting, and suturing.[12] Exercises that are skills-based focus on fundamental motions and concepts required to complete an operation or step of an operation.

Table 2.

Validation of skills-based vs. procedure-based exercises

Exercises that train by procedure seek to replicate an actual operation or step of an operation, incorporating multiple pertinent skills. Examples of this include ex vivo partial nephrectomy and bladder cystotomy repair.[26]

Cross-modality performance correlation

In order to create a coherent robotic surgery curriculum, a correlation between skill-based and procedure-based exercises must be demonstrated ("cross-modality" validity). Several groups have demonstrated correlation between skills-based exercises across modalities.[20,21] However, correlation of skills-based and procedure-based exercises, within a modality or across modalities, has received less attention.

USC has published a series of studies examining this concept. One paper captured baseline ex vivo performance in three procedure-based exercises in two groups of trainees. One group then underwent training in skills-based exercises on the dVSS. The ex vivo procedure-based exercises were completed later by the same groups. Training on the dVSS improved performance on ex vivo nephrectomy, cystotomy, and bowel resection models, showing initial concurrent and predictive validity of performance on the simulator.[26] A subsequent study primarily examined the cross-modality validity (correlation) of inanimate, VR, and in vivo training platforms. Performance on both the skills-based inanimate and VR exercises was found to correlate with performance on the procedure-based in vivo exercises.[10]
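Cross-modality correlation of this kind is typically quantified with a correlation coefficient computed over paired scores from the same trainees. The sketch below shows one way this might be done with a Spearman rank correlation written in plain Python. The choice of Spearman rather than another coefficient is an assumption made for illustration, and the score lists are hypothetical rather than data from the cited studies.

```python
from statistics import mean


def average_ranks(values):
    """Return 1-based ranks; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across the run of values tied with the one at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        tied_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = tied_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one variable is constant")
    return cov / (var_x * var_y) ** 0.5


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("need two paired samples of at least three scores")
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical paired scores for five trainees on two modalities.
vr_scores = [55, 62, 70, 81, 90]
in_vivo_scores = [12, 15, 14, 22, 25]
rho = spearman(vr_scores, in_vivo_scores)
print(f"Spearman rho between VR and in vivo scores: {rho:.2f}")
```

A rank-based coefficient is convenient here because simulator scores and expert ratings sit on different scales and need not be linearly related.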

Assessment of robotic performance

The ability to assess performance on each modality and correlate this with an overall standard is vital to a successful robotics curriculum. Several tools have been proposed to evaluate performance on both the actual robotic system and on simulators. These include both traditional and novel approaches, utilizing objective metrics and subjective evaluation by experts.

Assessment systems can be divided into those used to evaluate performance on the actual robot and those used to evaluate performance in simulators. Objective Structured Assessment of Technical Skill (OSATS), Global Evaluative Assessment of Robotic Skills (GEARS), and kinematic tracking all represent systems designed to evaluate clinical robotic performance. Scoring metrics that are incorporated into simulator software are used in evaluation of simulator performance.

Assessment of live robotic performance

One of the oldest and most widely used systems for assessing operative performance is OSATS.[36] This method can include procedure-specific checklists, detailed global rating, and determination of pass/fail. A form of OSATS modified for robotic surgery was used to show a significant improvement in handling of the patient side manipulator in suturing in regard to tissue respect, suture spacing, and minimum throws on knots.[37]

GEARS is a new system specifically used for the evaluation of robotic surgery. Development of GEARS stemmed from the Global Operative Assessment of Laparoscopic Skills (GOALS) system.[38] GEARS consists of six domains derived from deconstruction of multiple robotic procedures, each scored on a five-point scale by an expert. The initial paper showed validity as an assessment system and consistency and reliability among raters.[39] In another study, GEARS was used to evaluate in vivo performance on a porcine model. Cross-modality validity between GEARS scores and both inanimate tasks and VR performance was demonstrated.[10] Furthermore, the applicability of GEARS to assess dry lab performance was investigated in another study at USC, where a moderate correlation between performances was found.[11]
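The structure of a GEARS rating (six domains, each rated from 1 to 5) can be represented in a few lines of Python. In the sketch below, the domain names follow the original GEARS publication, while the function names, the averaging across raters, and the example ratings are hypothetical and serve only as an illustration.

```python
# The six GEARS domains, each rated by an expert on a 1-5 scale.
GEARS_DOMAINS = (
    "depth_perception",
    "bimanual_dexterity",
    "efficiency",
    "force_sensitivity",
    "autonomy",
    "robotic_control",
)


def gears_total(ratings):
    """Sum the six domain ratings into a total between 6 and 30."""
    missing = [d for d in GEARS_DOMAINS if d not in ratings]
    if missing:
        raise ValueError(f"missing domains: {missing}")
    for domain in GEARS_DOMAINS:
        if ratings[domain] not in range(1, 6):
            raise ValueError(f"{domain} must be an integer from 1 to 5")
    return sum(ratings[d] for d in GEARS_DOMAINS)


def mean_total_across_raters(rating_sheets):
    """Average the total score over several raters (illustrative choice)."""
    if not rating_sheets:
        raise ValueError("at least one rating sheet is required")
    return sum(gears_total(sheet) for sheet in rating_sheets) / len(rating_sheets)


# Hypothetical ratings of one trainee by two expert raters.
rater_a = {"depth_perception": 4, "bimanual_dexterity": 3, "efficiency": 3,
           "force_sensitivity": 4, "autonomy": 5, "robotic_control": 4}
rater_b = {"depth_perception": 4, "bimanual_dexterity": 4, "efficiency": 3,
           "force_sensitivity": 3, "autonomy": 4, "robotic_control": 4}
print(gears_total(rater_a), gears_total(rater_b),
      mean_total_across_raters([rater_a, rater_b]))
```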

A novel study that utilized and compared multiple assessment systems recorded the stereo video, instrument motion, and button/pedal events for operations on the actual da Vinci robot.[40] These data were analyzed using both OSATS and physical workspace measures. Shorter distance traveled, smaller volumes handled, and faster task performance were seen in experts. Progression toward expert standards was seen with each repetition by trainees. OSATS scoring was found to be significantly and positively correlated with performance on workspace metrics.
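To make workspace measures of this type concrete, the following Python sketch derives task time, distance traveled, and a workspace volume from time-stamped instrument tip positions. The sample format and the use of an axis-aligned bounding box as a stand-in for the volume handled are assumptions made for illustration, and are not the method of the cited study.

```python
import math


def path_length(points):
    """Total distance traveled along consecutive 3D positions."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


def bounding_box_volume(points):
    """Volume of the axis-aligned box enclosing all positions (a simplification)."""
    xs, ys, zs = zip(*points)
    return (max(xs) - min(xs)) * (max(ys) - min(ys)) * (max(zs) - min(zs))


def workspace_metrics(samples):
    """Summarize a recording given as (time_s, x, y, z) tuples in time order."""
    if len(samples) < 2:
        raise ValueError("at least two samples are required")
    times = [s[0] for s in samples]
    points = [s[1:] for s in samples]
    return {
        "task_time": times[-1] - times[0],
        "path_length": path_length(points),
        "volume": bounding_box_volume(points),
    }


# Hypothetical instrument tip recording: (seconds, x, y, z) in arbitrary units.
recording = [(0, 0, 0, 0), (1, 3, 4, 0), (2, 3, 4, 12)]
metrics = workspace_metrics(recording)
print(metrics)
```

Under the pattern described above, lower values on all three metrics would be expected for experts than for trainees performing the same task.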

Assessment of performance in simulators

Simulator performance evaluation is largely based on objective motion and time-based metrics.[10,11,22,23,25] However, these metrics and scoring systems are developed without knowing their clinical significance. This presents a unique challenge to researchers and educators as these metrics must then be reverse validated. A group using the MdVT was able to demonstrate construct validity for errors and that the overall scoring metric correlated with actual robotic performance.[21] Perrenot et al. also used the MdVT and suggested time and economy of motion were the most relevant metrics, as they were able to demonstrate statistically significant construct validity for these.[20]
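Construct validity, understood here as the ability of a metric to separate experts from novices, can be examined by comparing the two groups on that metric. The Python sketch below computes a simple rank-based effect size: the proportion of expert-novice pairs in which the expert scores higher, with ties counted as half. This is a descriptive illustration under assumed data, not a significance test and not the analysis used in the cited studies.

```python
def probability_of_superiority(experts, novices):
    """Fraction of expert-novice pairs in which the expert has the higher score.

    Ties count as half a pair. A value near 0.5 suggests the metric does not
    separate the groups; a value near 1.0 suggests experts consistently score higher.
    """
    if not experts or not novices:
        raise ValueError("both groups must contain at least one score")
    wins = 0.0
    for e in experts:
        for n in novices:
            if e > n:
                wins += 1.0
            elif e == n:
                wins += 0.5
    return wins / (len(experts) * len(novices))


# Hypothetical overall simulator scores (higher is better).
expert_scores = [85, 90, 78]
novice_scores = [60, 72, 80]
separation = probability_of_superiority(expert_scores, novice_scores)
print(f"Proportion of pairs favoring experts: {separation:.2f}")
```

For metrics where lower is better, such as time or error counts, the two arguments can simply be swapped.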

In the initial paper from USC showing face, content, and construct validity of the dVSS, 11 simulation metrics were used to show construct validity for ten exercises.[22] A later USC study found that dVSS performance had cross-modality validity with inanimate and in vivo performance.[10]

A different group showed that for novices, learning occurred on the metrics of overall score, time to completion, instrument collisions, instruments out of view, and critical errors in the first ten repetitions of an exercise.[25] A separate study examining the dVSS metrics found 11 to be unique in regard to the operative skill assessed.[23] Using these metrics, construct validity was demonstrated for eight exercises.

Assessment of performance on hybrid simulators has expanded beyond use of simple metrics. A novel augmented reality platform can assess performance by using a combination of metrics within steps and multiple choice questioning about anatomic and technical components of a procedure. This system has been shown to have initial construct validity.[30]

CONCLUSIONS

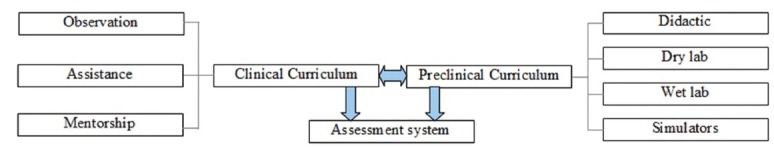

There is a variety of training modalities and assessment tools available for robotic surgery. These building blocks will have to be used to develop a data-based curriculum for teaching robotic surgery. Incorporation of specific modalities and assessment tools should be based on real data demonstrating validity, learning, and practical feasibility. Comparative assessment of different modalities (cross-modality correlation of performance or cross-modality validity) can be performed to ensure that each component works to develop the same or complementary skill sets. Improvement on one modality should correspond to improvement on another [Figure 2].

Figure 2.

Flowchart of a robotics curriculum

Furthermore, having one common assessment tool across modalities may facilitate teaching skills and identifying points of weakness. For example, GEARS has now been validated not only in live clinical cases,[39] but also in dry lab and in vivo training.[10,11] Additionally, a correlation between Mimic simulation metrics and corresponding global assessment metrics has been elucidated.[10]

A proposed curriculum must have data collected from the date of its implementation. As it would be nearly impossible for a study of a curriculum to include a control group of no training, continuous data collection should be used to guide curriculum evolution. Utilizing these principles, a real, standardized, and evidence-based curriculum that evolves continuously to meet the changing demands of robotic surgery in urology is within reach.

Footnotes

Source of Support: Nil

Conflict of Interest: None declared.

REFERENCES

- 1. Phillips C. Tracking the Rise of Robotic Surgery for Prostate Cancer. NCI Cancer Bulletin [Internet]. 2011 Aug 8 [Last cited on 2013 May 25]. p. about 5. Available from: http://www.cancer.gov/ncicancerbulletin/080911/page4 .

- 2. Intuitive Surgical [Internet]. Sunnyvale (CA); c1995-2013 [updated 2013 Jan]. Company Profile; [about 3 screens] [Last cited on 2013 May 25]. Available from: http://www.intuitivesurgical.com/company/profile.html .

- 3. Herrell SD, Smith JA. Robotic-assisted laparoscopic prostatectomy: What is the learning curve? Urology. 2005;66(Suppl 5):105–7. doi: 10.1016/j.urology.2005.06.084.

- 4. Liss MA, McDougall EM. Robotic surgical simulation. Cancer J. 2013;19:124–9. doi: 10.1097/PPO.0b013e3182885d79.

- 5. American Urological Association [Internet]. Linthicum (MD); Urology Core Curriculum; [about 2 screens] [Last cited on 2013 May 25]. Available from: http://www.auanet.org/education/modules/core .

- 6. Schreuder HW, Wolswijk R, Zweemer RP, Schijven MP, Verheijen RH. Training and learning robotic surgery, time for a more structured approach: A systematic review. BJOG. 2012;119:137–49. doi: 10.1111/j.1471-0528.2011.03139.x.

- 7. Sweet RM, Beach R, Sainfort F, Gupta P, Reihsen T, Poniatowski LH, et al. Introduction and validation of the American Urological Association Basic Laparoscopic Urologic Surgery skills curriculum. J Endourol. 2012;26:190–6. doi: 10.1089/end.2011.0414.

- 8. Dulan G, Rege RV, Hogg DC, Gilberg-Fisher KM, Arain NA, Tesfay ST, et al. Proficiency-based training for robotic surgery: Construct validity, workload, and expert levels for nine inanimate exercises. Surg Endosc. 2012;26:1516–21. doi: 10.1007/s00464-011-2102-6.

- 9. Goh A, Joseph R, O’Malley M, Miles B, Dunkin B. Development and validation of inanimate tasks for robotic surgical skills assessment and training. J Urol. 2010;183(Suppl 4):e516.

- 10. Hung AJ, Jayaratna IS, Teruya K, Desai MM, Gill IS, Goh AC. Comparative assessment of three standardized robotic surgery training methods. BJU Int. 2013;112:864–71. doi: 10.1111/bju.12045.

- 11. Ramos P, Montez J, Ng C, Dunn M, Gill I, Hung A. Validation of dry lab exercises for robotic training using global assessment tool. American Urological Association Annual Meeting. 2013.

- 12. Dulan G, Rege RV, Hogg DC, Gilberg-Fisher KM, Arain NA, Tesfay ST, et al. Developing a comprehensive, proficiency-based training program for robotic surgery. Surgery. 2012;152:477–88. doi: 10.1016/j.surg.2012.07.028.

- 13. Dulan G, Rege RV, Hogg DC, Gilberg-Fisher KK, Tesfay ST, Scott DJ. Content and face validity of a comprehensive robotic skills training program for general surgery, urology, and gynecology. Am J Surg. 2012;203:535–9. doi: 10.1016/j.amjsurg.2011.09.021.

- 14. Marecik SJ, Prasad LM, Park JJ, Jan A, Chaudhry V. Evaluation of midlevel and upper-level residents performing their first robotic-sutured intestinal anastomosis. Am J Surg. 2008;195:333–7. doi: 10.1016/j.amjsurg.2007.12.013.

- 15. Hung AJ, Ng CK, Patil MB, Zehnder P, Huang E, Aron M, et al. Validation of a novel robotic-assisted partial nephrectomy surgical training model. BJU Int. 2012;110:870–4. doi: 10.1111/j.1464-410X.2012.10953.x.

- 16. Eun D, Bhandari A, Boris R, Lyall K, Bhandari M, Menon M, et al. A novel technique for creating solid renal pseudotumors and renal vein-inferior vena caval pseudothrombus in a porcine and cadaveric model. J Urol. 2008;180:1510–4. doi: 10.1016/j.juro.2008.06.005.

- 17. Lendvay TS, Casale P, Sweet R, Peters C. VR robotic surgery: Randomized blinded study of the dV-Trainer robotic simulator. Stud Health Technol Inform. 2008;132:242–4.

- 18. Sethi AS, Peine WJ, Mohammadi Y, Sundaram CP. Validation of a novel virtual reality robotic simulator. J Endourol. 2009;23:503–8. doi: 10.1089/end.2008.0250.

- 19. Lerner MA, Ayalew M, Peine WJ, Sundaram CP. Does training on a virtual reality robotic simulator improve performance on the da Vinci surgical system? J Endourol. 2010;24:467–72. doi: 10.1089/end.2009.0190.

- 20. Perrenot C, Perez M, Tran N, Jehl JP, Felblinger J, Bresler L, et al. The virtual reality simulator dV-Trainer® is a valid assessment tool for robotic surgical skills. Surg Endosc. 2012;26:2587–93. doi: 10.1007/s00464-012-2237-0.

- 21. Lee JY, Mucksavage P, Kerbl DC, Huynh VB, Etafy M, McDougall EM. Validation study of a virtual reality robotic simulator-role as an assessment tool? J Urol. 2012;187:998–1002. doi: 10.1016/j.juro.2011.10.160.

- 22. Hung AJ, Zehnder P, Patil MB, Cai J, Ng CK, Aron M, et al. Face, content and construct validity of a novel robotic surgery simulator. J Urol. 2011;186:1019–24. doi: 10.1016/j.juro.2011.04.064.

- 23. Lyons C, Goldfarb D, Jones SL, Badhiwala N, Miles B, Link R, et al. Which skills really matter? Proving face, content, and construct validity for a commercial robotic simulator. Surg Endosc. 2013;27:2020–30. doi: 10.1007/s00464-012-2704-7.

- 24. Liss MA, Abdelshehid C, Quach S, Lusch A, Graversen J, Landman J, et al. Validation, correlation, and comparison of the da Vinci trainer™ and the daVinci surgical skills simulator™ using the Mimic™ software for urologic robotic surgical education. J Endourol. 2012;26:1629–34. doi: 10.1089/end.2012.0328.

- 25. Brinkman WM, Luursema JM, Kengen B, Schout BM, Witjes JA, Bekkers RL. da Vinci skills simulator for assessing learning curve and criterion-based training of robotic basic skills. Urology. 2013;81:562–6. doi: 10.1016/j.urology.2012.10.020.

- 26. Hung AJ, Patil MB, Zehnder P, Cai J, Ng CK, Aron M, et al. Concurrent and predictive validation of a novel robotic surgery simulator: A prospective, randomized study. J Urol. 2012;187:630–7. doi: 10.1016/j.juro.2011.09.154.

- 27. USC Institute of Urology. Does virtual performance correlate with clinical skills in robotics? Investigating concurrent validity of da Vinci simulation with clinical performance. American Urological Association Annual Meeting. 2013.

- 28. Seixas-Mikelus SA, Kesavadas T, Srimathveeravalli G, Chandrasekhar R, Wilding GE, Guru KA. Face validation of a novel robotic surgical simulator. Urology. 2010;76:357–60. doi: 10.1016/j.urology.2009.11.069.

- 29. Seixas-Mikelus SA, Stegemann AP, Kesavadas T, Srimathveeravalli G, Sathyaseelan G, Chandrasekhar R, et al. Content validation of a novel robotic surgical simulator. BJU Int. 2011;107:1130–5. doi: 10.1111/j.1464-410X.2010.09694.x.

- 30. Abboudi H, Khan MS, Aboumarzouk O, Guru KA, Challacombe B, Dasgupta P, et al. Current status of validation for robotic surgery simulators-a systematic review. BJU Int. 2013;111:194–205. doi: 10.1111/j.1464-410X.2012.11270.x.

- 31. Stegemann AP, Ahmed K, Syed JR, Rehman S, Ghani K, Autorino R, et al. Fundamental skills of robotic surgery: A multi-institutional randomized controlled trial for validation of a simulation-based curriculum. Urology. 2013;81:767–74. doi: 10.1016/j.urology.2012.12.033.

- 32. USC Institute of Urology. Novel augmented reality video simulation for robotic partial nephrectomy surgery training. American Urological Association Annual Meeting. 2013.

- 33. Guzzo TJ, Gonzalgo ML. Robotic surgical training of the urologic oncologist. Urol Oncol. 2009;27:214–7. doi: 10.1016/j.urolonc.2008.09.019.

- 34. Challacombe B, Wheatstone S. Telementoring and telerobotics in urological surgery. Curr Urol Rep. 2010;11:22–8. doi: 10.1007/s11934-009-0086-8.

- 35. Santomauro M, Reina GA, Stroup SP, L’Esperance JO. Telementoring in robotic surgery. Curr Opin Urol. 2013;23:141–5. doi: 10.1097/MOU.0b013e32835d4cc2.

- 36. Martin JA, Regehr G, Reznick R, MacRae H, Murnaghan J, Hutchison C, et al. Objective structured assessment of technical skill (OSATS) for surgical residents. Br J Surg. 1997;84:273–8. doi: 10.1046/j.1365-2168.1997.02502.x.

- 37. Suh I, Mukherjee M, Oleynikov D, Siu KC. Training program for fundamental surgical skill in robotic laparoscopic surgery. Int J Med Robot. 2011;7:327–33. doi: 10.1002/rcs.402.

- 38. Vassiliou MC, Feldman LS, Andrew CG, Bergman S, Leffondré K, Stanbridge D, et al. A global assessment tool for evaluation of intraoperative laparoscopic skills. Am J Surg. 2005;190:107–13. doi: 10.1016/j.amjsurg.2005.04.004.

- 39. Goh AC, Goldfarb DW, Sander JC, Miles BJ, Dunkin BJ. Global evaluative assessment of robotic skills: Validation of a clinical assessment tool to measure robotic surgical skills. J Urol. 2012;187:247–52. doi: 10.1016/j.juro.2011.09.032.

- 40. Kumar R, Jog A, Vagvolgyi B, Nguyen H, Hager G, Chen CC, et al. Objective measures for longitudinal assessment of robotic surgery training. J Thorac Cardiovasc Surg. 2012;143:528–34. doi: 10.1016/j.jtcvs.2011.11.002.