Significance

Forecasting is a vital part of strategic intelligence, offering policy makers indications about probable future conditions and aiding sound decision making. Nevertheless, there has not been a concerted effort to study the accuracy of intelligence forecasts over a large set of assessments. This study applied quantitative measures of forecasting accuracy commonly used in other areas of expert forecasting to over 1,500 strategic intelligence forecasts spanning approximately 6 y of intelligence production by a strategic assessment unit. The findings revealed a high level of discrimination and calibration skill. We also show how calibration may be improved through postforecast transformations. The study illustrates the utility of proactively applying scoring rules to intelligence forecasts as a means of outcome-based quality control.

Keywords: forecasting, prediction, intelligence analysis, quality control, recalibration

Abstract

The accuracy of 1,514 strategic intelligence forecasts abstracted from intelligence reports was assessed. The results show that both discrimination and calibration of forecasts were very good. Discrimination was better for senior (versus junior) analysts and for easier (versus harder) forecasts. Miscalibration was mainly due to underconfidence such that analysts assigned more uncertainty than needed given their high level of discrimination. Underconfidence was more pronounced for harder (versus easier) forecasts and for forecasts deemed more (versus less) important for policy decision making. Despite the observed underconfidence, there was a paucity of forecasts in the least informative 0.4–0.6 probability range. Recalibrating the forecasts substantially reduced underconfidence. The findings offer cause for tempered optimism about the accuracy of strategic intelligence forecasts and indicate that intelligence producers aim to promote informativeness while avoiding overstatement.

Strategic intelligence assists high-level decision makers, such as senior government leaders, in understanding the geopolitical factors shaping the world around them. Such intelligence can help decision makers anticipate future events, avoid strategic surprises, and make informed decisions. Policy-neutral intelligence that is timely, relevant, and accurate can be of significant value to decision makers (1). Although not all intelligence is predictive, forecasts are an important part of intelligence, serving to reduce uncertainty about future events for decision makers (2). Forecasts compose a substantial part of the type of judgment that Sherman Kent (widely regarded as the father of modern intelligence analysis) identified as most informative—namely, judgments that go beyond the information given (3). A question arising, then, is how good are intelligence analysts at forecasting geopolitical events?

The answer to that question should be of value to multiple stakeholders. First, intelligence consumers should want to know how good the forecasts they receive actually are. This should guide the weight they assign to them and the trust they place in their sources. Second, intelligence managers directly accountable for analytic quality control should want to know how well their analysts are doing. An objective scorecard might mitigate accountability ping-pong pressures in which the intelligence community reactively shifts its tolerance levels for false-positive and false-negative errors to “now get it right” (4). Third, analysts, an intellectually curious breed, should want to know how good their forecasts are. Beyond satisfying curiosity, receiving objective performance feedback on a regular basis can encourage adaptive learning by revealing judgment characteristics (e.g., overconfidence) that would be hard to detect from case-specific reviews (5). Finally, citizens should want to know how well the intelligence community is doing. Not only does personal security depend on national security, but intelligence is also a costly enterprise, running into the tens of billions of dollars each year in the United States (6).

Despite good reasons to proactively track the accuracy of intelligence forecasts, intelligence organizations seldom keep an objective scorecard of forecasting accuracy (7). There are many reasons why they do not do so. First, analysts seldom use numeric probabilities, which lend themselves to quantitative analyses of accuracy (8). Many analysts, including Kent’s poets (3), are resistant to the prospect of doing so (9). Second, intelligence organizations do not routinely track the outcomes of forecasted events, which are needed for objective scorekeeping. Third, only recently have behavioral scientists offered clear guidance to the intelligence community on how to measure the quality of human judgment in intelligence analysis (10–13). Finally, there may be some apprehension within the community regarding what a scorecard might reveal.

Such apprehension is understandable. The intelligence community has been accused of failing to connect the dots (14), which would seem to be a requisite skill for good forecasting. Nor does literature on human forecasting accuracy inspire high priors for success. It has long been known that people are miscalibrated in their judgment (15), tending toward overconfidence (16). Tetlock’s study of political experts’ forecasts showed that although experts outperformed undergraduates, even the best human forecasters—the Berlinian foxes—were left in the dust compared with the savvier statistical models he tested (17), recalling earlier studies showing how statistical models outperform human experts’ predictions (18, 19).

Nevertheless, it would be premature to draw a pessimistic conclusion about the accuracy of intelligence forecasting without first-hand, quantitative examination of a sufficient number of actual intelligence forecasts. In addition, some experts, such as meteorologists (20, 21) and bridge players (22), are very well calibrated. Intelligence analysts share some similarities with these experts. For instance, their forecasts are a core product of their expertise. This is not true of all experts, not even those who make expert probabilistic judgments. For instance, physicians may be overconfident (23), but if their patients recover, they are unlikely to question their forecasts. The same is true for lawyers and their clients (24). In these cases, the success of experts’ postforecast interventions will matter most to clients. However, analysts do not make policy decisions or intervene directly. Their expertise is shown directly by the quality of their policy-neutral judgments.

Here we report the findings of a long-term field study of the quality of strategic intelligence forecasts where each forecast was expressed with a numeric probability of event occurrence (from 0/10 to 10/10). We examined an extensive range of intelligence reports produced by a strategic intelligence unit over an approximate 6-y period (March 2005 to December 2011). From each report, every judgment was coded for whether it was a forecast, given that not all judgments are predictive (e.g., some are explanatory). The judgments were generally intended to be as specific as possible, reflecting the fact that the intelligence assessments were written to assist policy makers in reaching decisions. Examples of actual forecasts (edited to remove sensitive information) include the following: “It is very unlikely [1/10] that either of these countries will make a strategic decision to launch an offensive war in the coming six months” and “The intense distrust that exists between Country X and Group Y is almost certain [9/10] to prevent the current relationship of convenience from evolving into a stronger alliance.” In cases, such as the latter example, where a resolution time frame is not specified in the forecast itself, it was inferable from the time frame of the report.

Subject matter experts were used to track the outcomes of the forecasted events, enabling us to quantify several aspects of forecast quality. Those aspects are roughly grouped into two types of indices. The first, discrimination [also called resolution (25)], refers to how well a forecaster separates occurrences from nonoccurrences. The second, calibration [or reliability (25)], refers to the degree of correspondence between a forecaster’s subjective probabilities and the observed relative frequency of event occurrences. We use multiple methods for assessing discrimination and calibration, including receiver operating characteristic (ROC) curve analysis (26), Brier score decomposition (25, 27, 28), and binary logistic models for plotting calibration (29, 30), thus allowing for a wide range of comparison with other studies.

We examined discrimination and calibration for the overall set of forecasts and whether forecast quality was influenced by putative moderating factors, including analyst experience, forecast difficulty, forecast importance, and resolution time frame, all of which were coded by experts. Would senior analysts forecast better than junior analysts? The literature offers mixed indications. For example, although expertise benefitted the forecasts of bridge players (22), it had no effect on expert political forecasters (17). Would easier forecasts be better than harder forecasts? Calibration is often sensitive to task difficulty such that harder problems yield overconfidence that is attenuated or even reversed (producing underconfidence) for easier problems, a pattern commonly termed the hard–easy effect (31). Would forecast quality be better for forecasts assessed by expert coders as more (versus less) important for policy decision making? This question is of practical significance because although intelligence organizations strive for accuracy in all their assessments, they are especially concerned about getting it right on questions of greatest importance to their clients. Finally, would accuracy depend on whether the resolution time frame was shorter (0–6 mo) or longer (6 mo to about 1 y)? Longer time frames might offer better odds of the predicted event occurring, much as a larger dartboard would be easier than a smaller one to hit.

In addition to these descriptive aims, we were interested in the possible prescriptive value of the work. Prior research has shown that forecasting quality may be improved through postforecast transformations. Some approaches require the collection of additional forecasts. For example, using small sets of related probability estimates, Karvetski, Olson, Mandel, and Twardy (32) were able to improve discrimination and calibration skill by transforming judgments so that they minimized internal incoherence. Other studies have shown that calibration can be improved through transformations that do not require collecting additional judgments (33–35). To the extent that forecasts in this study were miscalibrated, we planned to explore the potential benefit and usability of transformations that do not require the collection of additional forecasts.

Results

Discrimination.

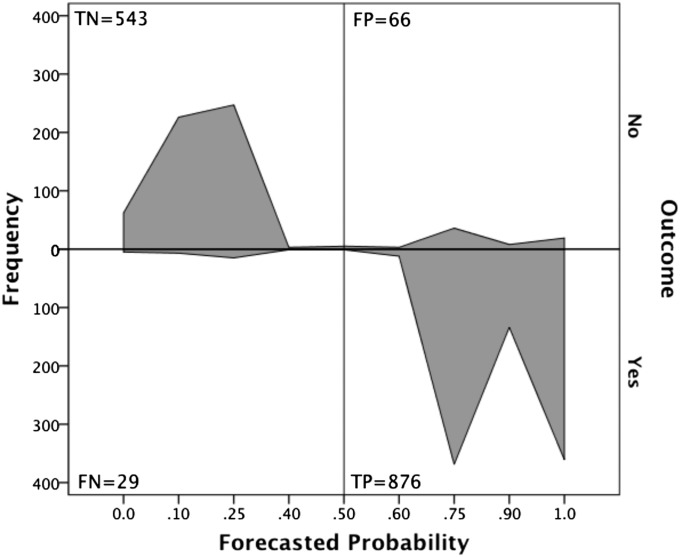

Fig. 1 shows a discrimination diagram of the forecast–outcome cross-tabulation data. The data are arrayed such that the four quadrants of the diagram show the frequency of true positives (TP), false positives (FP), false negatives (FN), and true negatives (TN) when forecast probabilities ≤0.5 indicate event nonoccurrence and probabilities >0.5 indicate event occurrence. Fig. 1 shows that most forecasts (94%) were correctly classified as either TP or TN cases. As well, few cases were in the middle (0.4–0.6) range of the probability scale. This is advantageous because such high-uncertainty forecasts are less informative for decision makers.

Fig. 1.

Discrimination diagram. FN, false negatives; FP, false positives; TN, true negatives; TP, true positives.
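The cross-tabulation behind such a diagram can be sketched in a few lines of Python. This is a minimal illustration, not the procedure used in the study: the function name `cross_tabulate` and the toy data are hypothetical, and only the 0.5 cutoff rule is taken from the text.

```python
def cross_tabulate(forecasts, outcomes, threshold=0.5):
    """Count TP, FP, FN, and TN cases.

    Forecast probabilities above the threshold are read as predicting
    occurrence; probabilities at or below it as predicting nonoccurrence.
    """
    counts = {"TP": 0, "FP": 0, "FN": 0, "TN": 0}
    for f, o in zip(forecasts, outcomes):
        predicted_occurrence = f > threshold
        if predicted_occurrence and o == 1:
            counts["TP"] += 1
        elif predicted_occurrence and o == 0:
            counts["FP"] += 1
        elif not predicted_occurrence and o == 1:
            counts["FN"] += 1
        else:
            counts["TN"] += 1
    return counts


# Hypothetical toy data, not drawn from the study.
toy_forecasts = [0.9, 0.75, 0.1, 0.25, 0.6, 0.4, 1.0, 0.0]
toy_outcomes = [1, 1, 0, 0, 0, 1, 1, 0]

counts = cross_tabulate(toy_forecasts, toy_outcomes)
proportion_correct = (counts["TP"] + counts["TN"]) / len(toy_forecasts)
print(counts, proportion_correct)
```

The proportion of correctly classified cases is simply the share of forecasts falling in the TP and TN quadrants.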

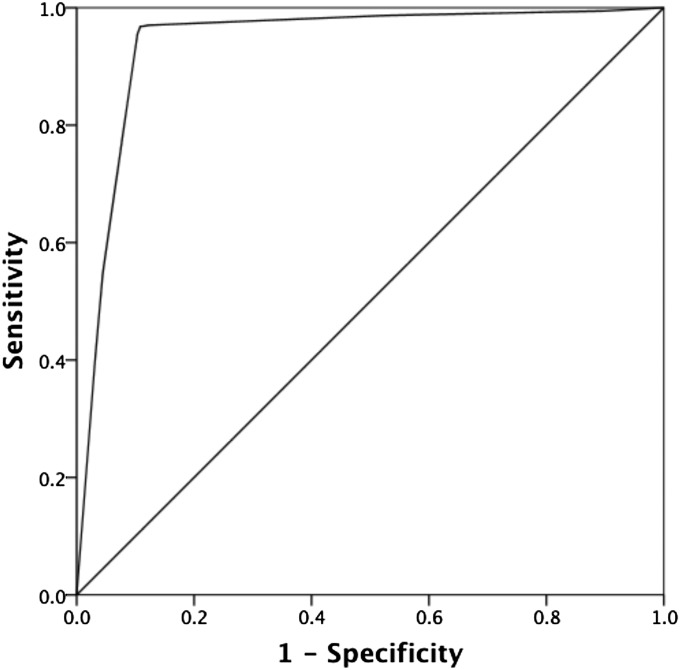

Fig. 2 shows the ROC curve, plotting the TP rate (sensitivity) as a function of the FP rate (1 − specificity). A useful measure of discrimination skill is the area under the ROC curve, A (25), the proportion of the total area of the unit square defined by the two axes of the ROC curve. A can range from 0.5 (i.e., the area covered by the 45° no-discrimination line) to 1.0 (perfect discrimination). A was 0.940 (SE = 0.007), which is very good.

Fig. 2.

ROC curve.
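For readers who wish to compute A on their own data, the sketch below uses the equivalence between the area under the empirical ROC curve and the probability that a randomly chosen occurrence received a higher forecast than a randomly chosen nonoccurrence, with ties counted as one half. The function name `roc_area` and the toy data are hypothetical; the study itself may have used different software and a different estimator of A and its SE.

```python
def roc_area(forecasts, outcomes):
    """Area under the empirical ROC curve via pairwise comparison.

    Every (occurrence, nonoccurrence) pair scores 1 if the occurrence had
    the higher forecast, 0.5 if the two forecasts were tied, and 0 otherwise.
    """
    occurred = [f for f, o in zip(forecasts, outcomes) if o == 1]
    did_not_occur = [f for f, o in zip(forecasts, outcomes) if o == 0]
    if not occurred or not did_not_occur:
        raise ValueError("need at least one occurrence and one nonoccurrence")
    score = 0.0
    for f_pos in occurred:
        for f_neg in did_not_occur:
            if f_pos > f_neg:
                score += 1.0
            elif f_pos == f_neg:
                score += 0.5
    return score / (len(occurred) * len(did_not_occur))


# Hypothetical toy data, not drawn from the study.
toy_forecasts = [0.9, 0.75, 0.1, 0.25, 0.6, 0.4, 1.0, 0.0]
toy_outcomes = [1, 1, 0, 0, 0, 1, 1, 0]
area = roc_area(toy_forecasts, toy_outcomes)
print(area)
```

The pairwise loop is quadratic in the number of forecasts, which is acceptable for a data set of this size; a rank-based computation would scale better.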

To assess the effect of the putative moderators, Ai was compared across levels of each moderator using the following Z-score equation (36):

$$Z = \frac{A_1 - A_2}{\sqrt{SE_1^2 + SE_2^2}} \tag{1}$$

Discrimination was significantly higher for senior analysts (A1 = 0.951, SE = 0.008) than for junior analysts (A2 = 0.909, SE = 0.017), Z = 2.24, P = 0.025. Likewise, discrimination was higher for easier forecasts (A1 = 0.966, SE = 0.010) than for harder forecasts (A2 = 0.913, SE = 0.011), Z = 3.57, P < 0.001. Discrimination did not differ by importance or resolution time frame (P > 0.25).
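The reported comparisons can be checked from the published areas and standard errors alone. The sketch below assumes that the Z score is the difference between the two areas divided by the square root of the sum of their squared standard errors (the form for independent samples), and that P values are two-tailed; the function name `compare_areas` is hypothetical.

```python
import math


def compare_areas(a1, se1, a2, se2):
    """Z score and two-tailed P value for the difference between two ROC areas.

    Assumes the two areas come from independent sets of forecasts.
    """
    z = (a1 - a2) / math.sqrt(se1 ** 2 + se2 ** 2)
    # Two-tailed P under the standard normal: 2 * (1 - Phi(|z|)) = erfc(|z| / sqrt(2)).
    p = math.erfc(abs(z) / math.sqrt(2))
    return z, p


# Values reported in the text.
z_experience, p_experience = compare_areas(0.951, 0.008, 0.909, 0.017)
z_difficulty, p_difficulty = compare_areas(0.966, 0.010, 0.913, 0.011)

print(f"senior vs. junior: Z = {z_experience:.2f}, P = {p_experience:.3f}")
print(f"easier vs. harder: Z = {z_difficulty:.2f}, P = {p_difficulty:.4f}")
```

Under these assumptions the computed Z scores round to the values given above.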

Finally, discrimination was also very good when the forecast probabilities were taken into account using Brier score decomposition. The Brier score, B, is the squared deviation between a forecast and the outcome coded 0 (nonoccurrence) or 1 (occurrence). The mean Brier score is a proper scoring rule for probabilistic forecasts:

$$\bar{B} = \frac{1}{N}\sum_{i=1}^{N}(f_i - o_i)^2 \tag{2}$$

where $N$ is the total number of forecasts (1,514 in this study); $f_i$ is the subjective probability assigned to the $i$th forecast; and $o_i$ is the outcome of the $i$th event, coded 0 or 1. However, because $\bar{B}$ is affected not only by forecaster skill but also by the uncertainty of the forecasting environment, it is usually decomposed into indices of variance (VI), discrimination (DI), and calibration (CI):

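$$\bar{B} = VI - DI + CI \tag{3}$$

where

$$VI = \bar{o}(1 - \bar{o}) \tag{4}$$

$$DI = \frac{1}{N}\sum_{k=1}^{K} N_k(\bar{o}_k - \bar{o})^2 \tag{5}$$

and

$$CI = \frac{1}{N}\sum_{k=1}^{K} N_k(f_k - \bar{o}_k)^2 \tag{6}$$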

In Eqs. 4–6, $\bar{o}$ is the base rate or overall relative frequency of event occurrence, $K$ is the number of forecast categories (9 in this study), $N_k$ is the number of forecasts in category $k$, $f_k$ is the subjective probability assigned to forecasts in category $k$, and $\bar{o}_k$ is the relative frequency of event occurrence in category $k$. In this study, $\bar{B}$ = 0.074, VI = 0.240, and DI = 0.182. Because DI is upper-bounded by VI, it is common to normalize discrimination:

$$\eta^2 = \frac{DI}{VI} \tag{7}$$

An adjustment is sometimes made to this measure (27). However, it had no effect in this study. Normalized discrimination was very good, with forecasts explaining 76% of outcome variance, η² = 0.758.
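The decomposition can be illustrated with a short Python sketch. It assumes the standard Murphy partition of the mean Brier score, with forecasts grouped by their distinct probability values; the function name `brier_decomposition` and the toy data are hypothetical and are not drawn from the study.

```python
from collections import defaultdict


def brier_decomposition(forecasts, outcomes):
    """Return the mean Brier score and its VI, DI, CI, and eta-squared indices.

    Forecasts are grouped into categories by their distinct probability values.
    """
    n = len(forecasts)
    base_rate = sum(outcomes) / n
    mean_brier = sum((f - o) ** 2 for f, o in zip(forecasts, outcomes)) / n

    # Collect the outcomes observed in each forecast category.
    by_category = defaultdict(list)
    for f, o in zip(forecasts, outcomes):
        by_category[f].append(o)

    vi = base_rate * (1 - base_rate)
    di = sum(len(obs) * (sum(obs) / len(obs) - base_rate) ** 2
             for obs in by_category.values()) / n
    ci = sum(len(obs) * (f - sum(obs) / len(obs)) ** 2
             for f, obs in by_category.items()) / n
    return {"B": mean_brier, "VI": vi, "DI": di, "CI": ci, "eta2": di / vi}


# Hypothetical toy data, not drawn from the study.
toy_forecasts = [0.1, 0.1, 0.1, 0.1, 0.9, 0.9, 0.9, 0.9, 0.5, 0.5]
toy_outcomes = [0, 0, 0, 1, 1, 1, 1, 0, 1, 0]
indices = brier_decomposition(toy_forecasts, toy_outcomes)
print(indices)

# The values reported in the text satisfy the same identity.
reported_brier = 0.240 - 0.182 + 0.016   # VI - DI + CI
reported_eta2 = 0.182 / 0.240            # DI / VI
print(round(reported_brier, 3), round(reported_eta2, 3))
```

Because the categories are the distinct forecast values, the identity between the mean Brier score and VI − DI + CI holds exactly (up to floating-point error) rather than approximately.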

Calibration.

The calibration index, CI, which sums the squared deviations of forecasts and the relative frequencies of event occurrence for each forecast category, is perfect when CI = 0. In this study, CI = 0.016. An alternative measure of calibration called calibration-in-the-large (28), CIL, is the squared deviation between the mean forecast and the base rate of event occurrence over all categories:

$$CIL = (\bar{f} - \bar{o})^2 \tag{8}$$

Calibration in the large was virtually nil, CIL = 3.60e−5. Thus, both indices showed that forecasts were well calibrated.

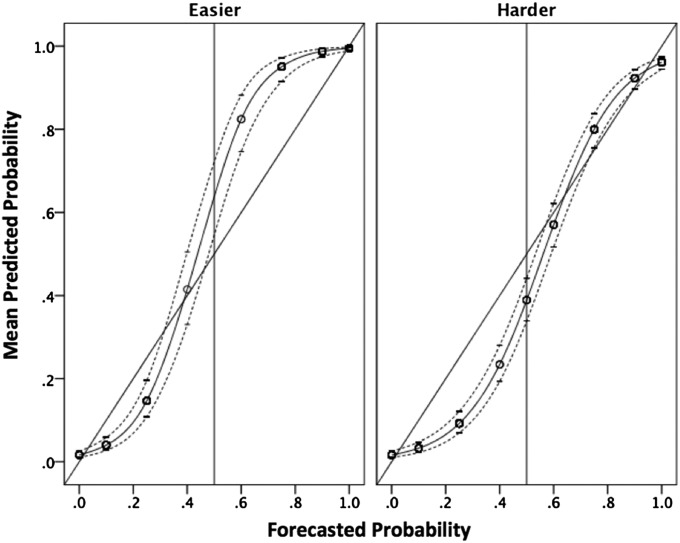

Calibration curves were modeled using a generalized linear model with a binary logistic link function. Event outcomes were first modeled on forecast, difficulty, importance, experience, resolution time frame, and all two-way interactions with forecast. Two predictors were significant: forecast and the forecast × difficulty interaction. Using forecast and difficulty as predictors, the model fit for forecast only and forecast with the interaction term were compared using Akaike information criterion (AIC) (37). The model including the interaction term improved model fit (AIC = 118.14) over the simpler model (AIC = 148.47). Fig. 3 shows the calibration curves plotting mean predicted probability of the event occurring as a function of forecast and difficulty. It is evident that in both the easier and harder sets of forecast, miscalibration was mainly due to underconfidence, as revealed by the characteristic S-shape of the curves. This pattern of calibration is also referred to as underextremity bias because the forecasts are not as extreme as they should be (38). In the easier set, underconfidence is more pronounced for forecasts above 0.5, whereas underconfidence is more pronounced for forecasts below 0.5 in the harder set.

Fig. 3.

Model-based calibration curves. Dotted lines show 95% confidence intervals.

A signed calibration of confidence index (39), CCI, was computed as follows, such that negative values indicated underconfidence and positive values indicated overconfidence:

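$$CCI = \frac{1}{N}\left[\sum_{k:\, f_k > 0.5} N_k(f_k - \bar{o}_k) + \sum_{k:\, f_k < 0.5} N_k(\bar{o}_k - f_k)\right] \tag{9}$$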

This measure omits the small number of cases where fk = 0.5. In this study, CCI = −0.076 (s = 0.096), therefore indicating underconfidence. The 99% confidence interval on the estimate ranged from −0.082 to −0.070, placing zero well outside its narrow range. Given the nonnormality of subsample distributions of underconfidence, we examined the effect of the moderators on underconfidence using Mann–Whitney U tests. There was no effect of experience or resolution time frame (P > 0.15). However, underconfidence was greater for harder forecasts (median = −0.106) than for easier forecasts (median = −0.044), Z = −13.39, P < 0.001. Underconfidence was also greater for forecasts of greater importance (median = −0.071) than for forecasts of lesser importance (median = −0.044), Z = −4.49, P < 0.001. Given that difficulty and importance were positively related (r = 0.17, P = 0.001), we tested whether each had unique predictive effects on underconfidence using ordinal regression. Both difficulty (b = 1.26, SE = 0.10, P < 0.001) and importance (b = 0.32, SE = 0.12, P = 0.007) predicted underconfidence, together accounting for roughly an eighth of the variance in underconfidence (Nagelkerke pseudo-R² = 0.124).
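The reported confidence interval can be approximately reproduced from the summary statistics. The sketch below assumes a normal-theory interval for a mean and uses n = 1,514; the latter is an assumption made for illustration, because the measure omits the small number of cases with a forecast of 0.5, so the exact n is slightly smaller. The function name `mean_confidence_interval` is hypothetical.

```python
import math
from statistics import NormalDist


def mean_confidence_interval(mean, sd, n, level=0.99):
    """Normal-theory confidence interval for a mean."""
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 2.576 for a 99% interval
    half_width = z * sd / math.sqrt(n)
    return mean - half_width, mean + half_width


# CCI and s as reported in the text; n = 1,514 is an assumption (see above).
lower, upper = mean_confidence_interval(-0.076, 0.096, 1514)
print(f"99% CI: [{lower:.3f}, {upper:.3f}]")
```

With these inputs the interval rounds to the bounds given in the text, which suggests that the omitted cases have little influence on its width.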

Sensitivity.

The high degree of forecasting quality raises questions about the sensitivity of the results to excluded cases. There were two types of exclusions we explored: cases with ambiguous outcomes and cases without numeric probabilities. The first type accounted for about 20% of numeric forecasts and typically occurred when the event in question was not defined crisply enough to make an unambiguous determination of outcome. In such cases, the coders either assigned partial scores indicating evidence in favor of occurrence or nonoccurrence, or else an unknown code. We dummy coded partial nonoccurrences as 0.25, unknown cases as 0.50, and partial occurrences as 0.75 and recomputed the mean Brier score. The resulting value was 0.090. A comparable analysis was undertaken to assess the effect of excluding the roughly 16% of forecasts that did not have numeric probabilities assigned. In these cases, terms such as could or might were used, and these were deemed to be too imprecise to assign numeric equivalents. For the present purpose, we assume that such terms are likely to be interpreted as being a fifty-fifty call (40), and accordingly, we assign a probability of 0.50 to those cases. The recomputed mean Brier score was 0.097. Given that both recomputed Brier scores are still very good, we can be confident that the results are not due to case selection biases.
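The recoding used in the first sensitivity analysis can be sketched as follows. The numeric values for partial and unknown outcomes follow the text; the label strings, the function name `mean_brier_with_partial_outcomes`, and the toy data are hypothetical.

```python
# Numeric codes for the outcome categories, following the recoding described above.
OUTCOME_VALUES = {
    "nonoccurrence": 0.0,
    "partial_nonoccurrence": 0.25,
    "unknown": 0.50,
    "partial_occurrence": 0.75,
    "occurrence": 1.0,
}


def mean_brier_with_partial_outcomes(forecasts, outcome_labels):
    """Mean Brier score when ambiguous outcomes receive fractional codes."""
    squared_errors = [
        (f - OUTCOME_VALUES[label]) ** 2
        for f, label in zip(forecasts, outcome_labels)
    ]
    return sum(squared_errors) / len(squared_errors)


# Hypothetical toy data, not drawn from the study.
toy_forecasts = [0.9, 0.1, 0.75, 0.25]
toy_labels = ["occurrence", "nonoccurrence", "partial_occurrence", "unknown"]
toy_score = mean_brier_with_partial_outcomes(toy_forecasts, toy_labels)
print(toy_score)
```

The second sensitivity analysis works the other way around: the outcomes stay binary and the forecasts lacking numeric probabilities are assigned a value of 0.50 before the mean Brier score is recomputed.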

Recalibration.

Given that forecasts exhibited underextremity bias, we applied a transformation that made them more extreme. Following refs. 33–35, which draw on Karmarkar’s earlier formulation (41), we used the following transformation:

$$t = \frac{f^a}{f^a + (1 - f)^a} \tag{10}$$

where t is the transformed forecast and a is a tuning parameter. When a > 1, the transformed probabilities are made more extreme. We varied a by 0.1 increments from 1 to 3 and found that the optimal value for minimizing CI was 2.2. Fig. S1 plots CI as a function of a. Fig. S2 shows the transformation function when a = 2.2. Recalibrating the forecasts in this manner substantially improved calibration, CI = 0.0018. To assess the degree of improvement, it is useful to consider the square root of CI in percentage format, which is the mean absolute deviation from perfect calibration. This value is 12.7% for the original forecasts and 4.2% for the transformed forecasts, a 66.9% decrease in mean absolute deviation.
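A minimal Python sketch of the transformation follows. It assumes the usual one-parameter form in which the forecast raised to the power a is divided by the sum of that quantity and the complement of the forecast raised to the same power; the function name `karmarkar` is hypothetical. Searching for the optimal a would additionally require the forecast and outcome data, which are not reproduced here.

```python
def karmarkar(f, a):
    """One-parameter transformation of a probability f.

    a > 1 pushes forecasts toward 0 or 1, a = 1 leaves them unchanged,
    and a < 1 pulls them toward 0.5.
    """
    numerator = f ** a
    return numerator / (numerator + (1.0 - f) ** a)


# Effect of a = 2.2 on the nine scale points used by the intelligence unit.
scale_points = [0.0, 0.1, 0.25, 0.4, 0.5, 0.6, 0.75, 0.9, 1.0]
transformed = [karmarkar(f, 2.2) for f in scale_points]
for f, t in zip(scale_points, transformed):
    print(f"{f:.2f} -> {t:.3f}")
```

The function is symmetric about 0.5, so forecasts of 0.5 are left unchanged and a forecast and its complement are moved by equal amounts in opposite directions.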

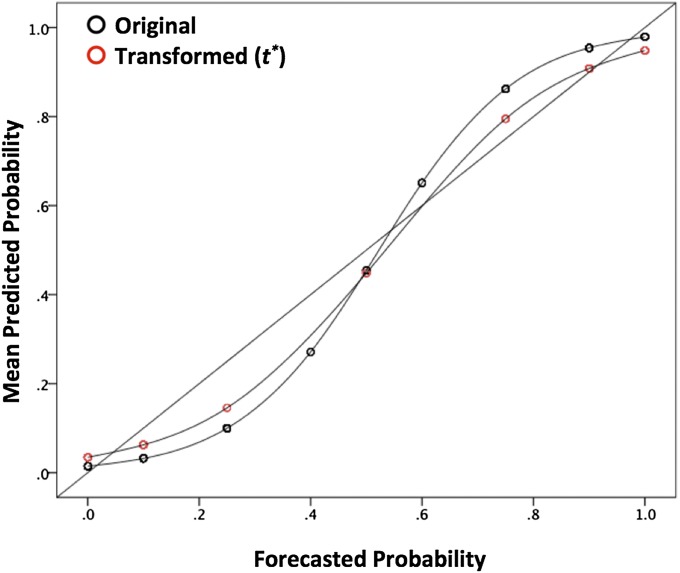

Although the Karmarkar transformation improved calibration, the resulting forecast probabilities have no analog on the numeric scale used by the intelligence unit that we studied. To assess whether a more feasible recalibration method that uses the original scale points may be of value, the transformed values were mapped onto their closest original scale point, resulting in a remapping of the original forecasts, fk, to the new transformed forecasts, t*k, as follows for [fk, t*k]: [0, 0], [0.1, 0], [0.25, 0.1], [0.4, 0.25], [0.5, 0.5], [0.6, 0.75], [0.75, 0.9], [0.9, 1], and [1, 1]. With this feasibility adjustment, CI = 0.0021. The mean absolute deviation from perfect calibration was 4.6%, a slight difference from the optimized Karmarkar transformation. Fig. 4 shows the calibration curve for the 1,514 forecasts before and after remapping, whereas Fig. S3 shows the curves before and after the Karmarkar transformation was applied. The calibration curves for the transformed forecasts shown in the two figures are almost indistinguishable.

Fig. 4.

Calibration curves before and after recalibration to t*.
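The remapping step can be sketched in Python as follows. The sketch assumes the one-parameter transformation t = f^a / (f^a + (1 − f)^a) with a = 2.2 and snaps each transformed value to the nearest point on the original nine-point scale; the function names `karmarkar` and `remap_to_scale` are hypothetical.

```python
# The nine probability values available on the unit's scale.
SCALE = [0.0, 0.1, 0.25, 0.4, 0.5, 0.6, 0.75, 0.9, 1.0]


def karmarkar(f, a):
    """One-parameter transformation; a > 1 makes forecasts more extreme."""
    numerator = f ** a
    return numerator / (numerator + (1.0 - f) ** a)


def remap_to_scale(f, a=2.2, scale=SCALE):
    """Transform a forecast, then snap it to the closest original scale point."""
    t = karmarkar(f, a)
    return min(scale, key=lambda point: abs(point - t))


remapping = [(f, remap_to_scale(f)) for f in SCALE]
for original, remapped in remapping:
    print(f"{original} -> {remapped}")
```

Under these assumptions the sketch yields the same nine pairs listed in the text, so in practice the adjustment amounts to a fixed lookup table applied to each forecast.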

Discussion

Our findings warrant tempered optimism about the quality of strategic intelligence forecasts. The forecasts fared exceptionally well in terms of discrimination and calibration, and these results were not particularly sensitive to case exclusions. The results provide a stark comparison with Tetlock’s (17) findings. Whereas the best political forecasters in his sample explained about 20% of the outcome variance, forecasts in this study explained 76% of the variance in geopolitical outcomes. Likewise, the mean absolute deviation from perfect calibration (the square root of the calibration index) was 25% smaller in our study than in Tetlock’s, reflecting better calibration. Experience had no effect in his study, but analytic experience led to a practically beneficial improvement in discrimination in this study. Not only did senior analysts discriminate better than junior analysts, they produced 68% of the forecasts despite constituting less than half of the analyst sample (Materials and Methods). Finally, whereas experts in Tetlock’s study showed overconfidence, forecasts in this study were underconfident. Although unbiased confidence is optimal, the cost of overconfidence in intelligence will usually outweigh that of underconfidence. Underconfident forecasting occurs when analysts discriminate better than they think they can. Although underconfidence reduces informational value for decision makers by expressing forecasts too timidly, it signals good judgment. Overconfidence, in contrast, indicates that forecasts are communicated with unwarranted certainty, implying that they are more error-prone than experts predict.

Several of the findings suggest that intelligence producers adhere to a professional norm of promoting informativeness while avoiding overstatement. First, there were few uninformative forecasts near maximum uncertainty (probabilities of 0.4–0.6). If analysts were only concerned with playing it safe, we would likely have seen a bulge rather than a trough in that region. Second, underconfidence was more pronounced when the issues were relatively complex and more important for policy makers. These variations are consistent with a norm of caution, which both difficulty and consequence of judgment should heighten. Indeed, the effect of difficulty may otherwise appear surprising given that harder problems usually produce greater overconfidence (30). As well, this pattern (greater underconfidence for harder forecasts) does not support the explanation that underconfidence in this study was mainly due to easy forecasting problems. A norm of caution may serve the intelligence community well in the absence of systematic feedback on the forecasting quality. With such feedback, adjustments to cautionary normative pressure could lead to crisper indications as analysts and their managers become aware of characteristics such as good discrimination (5). That would, in turn, better serve the aim of reducing uncertainty in the mind of the decision maker (2).

A deeper account of the present findings might trace normative pressures for cautious, yet informative assessments to analysts’ accountability to multiple, skeptical audiences. Accountability pressure has been shown to reduce overconfidence in predicting personality attributes (42), reduce overattribution bias (43), deepen information processing (44), and improve awareness of the informational determinants of one’s choices (45). As Arkes and Kajdasz (46) have already noted about a preliminary summary of our findings presented to the National Research Council Committee on Behavioral and Social Science Research to Improve Intelligence Analysis for National Security, the strategic analysts who made the forecasts studied here had much more severe accountability pressures than experts in Tetlock’s (17) study and in other academic research. Experts in such studies know that their forecasts will be anonymously analyzed in the aggregate. In contrast, analysts are personally accountable to multiple, skeptical audiences. They have to be able to defend their assessments to their managers. Together with those managers, they need to be able to speak truth to power. Analysts are acutely aware of the multiple costs of getting it wrong, especially on the most important issues. The flipside of accountability pressure is feedback, which may also benefit accuracy. Given that analysts’ assessments must be vetted by managers and may receive input from peers, they may also benefit from the underlying team process, as has recently been verified in a controlled geopolitical forecasting tournament (47).

As noted earlier, underconfidence, as a source of miscalibration, is the lesser of two evils. It is also to a large extent correctable. We showed that miscalibration could be attenuated postforecast in ways that are organizationally usable. Using the Karmarkar transformation as a guide, we remapped forecasts to the organizational scale value that had come closest to the transformed value. This procedure yielded a substantial 63.8% decrease in mean absolute deviation from perfect calibration. If intelligence organizations were able to discern stable biases in forecasting, such corrective measures could potentially be implemented between forecast production and finished intelligence dissemination to consumers.

Of course, recalibration, like any model-fitting exercise, requires caution. Aside from the philosophical matter of whether the transformed values still represent subjective probabilities (48), care ought to be taken not to overfit or overgeneralize the approach. This is especially likely in cases where the recalibration rules are based on small sets of forecasts or where attempts are made to generalize to other contexts. Accordingly, we would not advise a different intelligence organization to similarly make their forecasts more extreme without having first studied their own forecast characteristics. A further complication may arise if intelligence consumers have already learned to recalibrate a source’s forecasts. For instance, if policy makers know that the forecasts they receive from a given source tend to be underconfident, they may intuitively adjust for that bias by decoding the forecast in more extreme terms (33). Thus, a change in procedure that resulted in debiased forecasts would somehow have to be indicated to consumers so that they do not continue to correct the corrected forecasts. What this work suggests, however, is that at least in principle, such correctives are doable.

Materials and Methods

Forecast data were acquired from internal versions of intelligence reports produced by 15 strategic intelligence analysts who composed the analytic team of the Middle East and Africa Division within the Intelligence Assessment Secretariat (IAS) over the study period. IAS is the Canadian government’s strategic intelligence analysis unit, providing original, policy-neutral assessments on foreign developments and trends for the Privy Council Office and senior clients across government. The analysts were subject matter experts, normally with postgraduate degrees and in many cases extensive experience working or traveling in their regions of responsibility (especially for senior analysts).

The internal versions of reports included numerical probabilities assigned to unconditional forecasts, which could take on values of 0, 1, 2.5, 4, 5, 6, 7.5, 9, or 10 (out of 10). Such numbers were only assigned to judgments that were unconditional forecasts and they were not included in finished reports. Analysts within the division over the period of examination made the initial determination of whether their judgments were unconditional forecasts, conditional forecasts (e.g., of the form “if X happens, then Y is likely to occur”), or explanatory judgments (e.g., judgments of the probable cause of a past or ongoing geopolitical event), and those were subsequently reviewed and, if necessary, discussed with the analyst’s manager, who had the final say. Typically, each page of an intelligence report will contain several judgments of these various types, and these judgments represented a wide range of unique events or situations.

In cases where the same judgment appeared multiple times, only the first judgment was entered into the database. For example, if a forecast appeared as a key judgment (i.e., a select set of judgments highlighted at the start of a report) and was then repeated later in the report, only the first appearance was counted. Moreover, a single analyst initially would produce a given forecast, which would subsequently be reviewed by the analyst’s manager. The drafting process also normally involves extensive consultation with other experts and government officials. Thus, the forecasts that appear in intelligence reports are seldom the result of a single individual arriving at his or her judgment in isolation. It is more accurate to regard an analytical forecast as an organizational product reflecting the input of the primary analyst, his or her manager, and possibly a number of peer analysts.

From a total set of 3,881 judgments, classifications were as follows: 59.7% (2,315) were unconditional forecasts, 15.0% were conditional forecasts, and 25.3% were explanatory judgments. From the unconditional forecasts, on which we focus, 83.8% (1,943) had numeric probabilities assigned. The remaining cases were not assigned numeric probabilities, because the verbal expressions of uncertainty used were deemed too imprecise to warrant a numeric translation.

Two subject matter experts unaffiliated with the division coded outcomes. Each coder handled a distinct set of forecasts, although both coded the same 50 forecasts to assess reliability, which was at 90% agreement. Although having unambiguous, mutually exclusive and exhaustive outcome possibilities for all forecasts is desirable (17), such control was impossible here. In 80.4% (i.e., 1,562/1,943) of cases, forecasts were articulated clearly enough that the outcome could be coded as an unambiguous nonoccurrence or occurrence. In the remaining cases, however, the outcome was ambiguous, usually because the relevant forecast lacked sufficient precision and actual conditions included a mix of pro and con evidence. In such cases, outcomes were assigned to “partial nonoccurrence,” “partial occurrence,” or “unknown” categories, depending on the coders’ assessments of the balance of evidence. However, the primary analyses are restricted to the unambiguous set. Note that outcomes themselves could represent either commissions and affirmed actions (e.g., President X will be elected) or omissions and negated actions (e.g., President X will not be elected). We further excluded 30 forecast cases that were made by teams of analysts and an additional 18 cases for which full data on the coded moderator variables were unavailable. The final set of cases thus included 1,514 forecasts (Dataset S1).

Experts also coded the four moderating variables examined here. A.B. coded analyst experience, assigning each analyst to either a junior or senior category. In general, analysts with 0–4 y of experience as an analyst in the IAS were coded as junior, and those with more than 4 y of experience were coded as senior. However, this rule was modified on occasion if other factors were in play, such as whether the analyst had prior experience in a similar strategic assessment unit or extensive experience working or traveling in their region of responsibility. Among the 15 analysts, seven were coded as senior (accounting for 1,027 or roughly 68% of the forecasts), and two of those became senior over the course of the study (those two thus contributed to both the junior and senior groupings). Expert coders coded the remaining three variables: forecast difficulty (easier/harder), forecast importance (lesser/greater), and resolution time frame (less than/greater than 6 mo). A.B. reviewed the codes, and discrepant assignments were resolved by discussion. Further details on the procedures for coding these variables are available in Supporting Information.
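As a rough illustration of the default experience rule described above, the following sketch encodes the 4-y threshold together with a manual override. The function name, the `override` parameter, and the treatment of the override as a simple label are assumptions made for illustration; in the study, departures from the default rule reflected the coder's judgment about factors such as prior experience in a similar unit, which this sketch does not model.

```python
from typing import Optional

VALID_LEVELS = ("junior", "senior")


def code_experience(years_in_unit: float, override: Optional[str] = None) -> str:
    """Assign a junior/senior label from years of experience as an analyst.

    Default rule from the text: 0-4 y is junior, more than 4 y is senior.
    `override` is a hypothetical stand-in for the coder's case-by-case
    judgment and, when given, replaces the default label.
    """
    if years_in_unit < 0:
        raise ValueError("years_in_unit must be non-negative")
    if override is not None:
        if override not in VALID_LEVELS:
            raise ValueError(f"override must be one of {VALID_LEVELS}")
        return override
    return "junior" if years_in_unit <= 4 else "senior"


# An analyst who crosses the threshold during the study contributes
# forecasts to both groupings, one label per forecast date.
labels = [code_experience(y) for y in (3.5, 4.0, 4.5)]
print(labels)
```

Applying the rule per forecast, rather than per analyst, is one way to accommodate the two analysts who became senior during the study period.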

Acknowledgments

We thank Quan Lam, Jamie Marcus, Philip Omorogbe, and Karen Richards for their research assistance. This work was supported by Defence Research and Development Canada’s Joint Intelligence Collection and Analytic Capability Project and Applied Research Program 15dm Project and by Natural Sciences and Engineering Research Council of Canada Discovery Grant 249537-07.

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1406138111/-/DCSupplemental.

References

- 1. Treverton GF. In: Analyzing Intelligence: Origins, Obstacles, and Innovations. George RZ, Bruce JB, editors. Washington, DC: Georgetown Univ Press; 2008. pp. 91–106.

- 2. Fingar T. In: Intelligence Analysis: Behavioral and Social Scientific Foundations. Fischhoff B, Chauvin C, editors. Washington, DC: Natl Acad Press; 2011. pp. 3–27.

- 3. Kent S. 1964. Words of estimative probability. Available at https://www.cia.gov/library/center-for-the-study-of-intelligence/csi-publications/books-and-monographs/sherman-kent-and-the-board-of-national-estimates-collected-essays/6words.html.

- 4. Tetlock PE, Mellers BA. Intelligent management of intelligence agencies: beyond accountability ping-pong. Am Psychol. 2011;66(6):542–554. doi: 10.1037/a0024285.

- 5. Rieber S. Intelligence analysis and judgmental calibration. Int J Intell CounterIntell. 2004;17(1):97–112.

- 6. Reuters (October 28, 2011) Fiscal 2011 U.S. intelligence budget was 54.6 bln. Available at http://www.reuters.com/article/2011/10/28/usa-intelligence-budget-idUSN1E79R1CN20111028.

- 7. Betts RK. 2007. Enemies of Intelligence: Knowledge and Power in American National Security (Columbia Univ Press, New York), 241 pp.

- 8. Horowitz MC, Tetlock PE. 2012. Trending upward: How the intelligence community can better see into the future. Foreign Policy (Sept. 6). Available at http://www.foreignpolicy.com/articles/2012/09/06/trending_upward.

- 9. Weiss C. Communicating uncertainty in intelligence and other professions. Int J Intell CounterIntell. 2008;21(1):57–85.

- 10. Fischhoff B, Chauvin C, editors. Intelligence Analysis: Behavioral and Social Scientific Foundations. Washington, DC: Natl Acad Press; 2011. 338 pp.

- 11. Derbentseva N, McLellan L, Mandel DR. 2011. Issues in Intelligence Production: Summary of Interviews with Canadian Intelligence Managers (Def Res and Dev Can, Toronto), Tech Rep 2010-144.

- 12. National Research Council. Field Evaluation in the Intelligence and Counterintelligence Context: Workshop Summary. Washington, DC: Natl Acad Press; 2010. 114 pp.

- 13. National Research Council. Intelligence Analysis for Tomorrow: Advances from the Behavioral and Social Sciences. Washington, DC: Natl Acad Press; 2011. 102 pp.

- 14. National Commission on Terrorist Attacks upon the United States. The 9/11 Commission Report: Final Report of the National Commission on Terrorist Attacks upon the United States. Washington, DC: US Gov Print Off; 2004. 585 pp.

- 15. Lichtenstein S, Fischhoff B, Phillips LD. In: Judgment Under Uncertainty: Heuristics and Biases. Kahneman D, Slovic P, Tversky A, editors. New York: Cambridge Univ Press; 1982. pp. 306–334.

- 16. Fischhoff B, Slovic P, Lichtenstein S. Knowing with certainty: The appropriateness of extreme confidence. J Exp Psychol Hum Percept Perform. 1977;3(4):552–564.

- 17. Tetlock PE. Expert Political Judgment: How Good Is It? How Can We Know? Princeton: Princeton Univ Press; 2005. 321 pp.

- 18. Dawes RM. The robust beauty of improper linear models in decision making. Am Psychol. 1979;34(7):571–582.

- 19. Meehl PE. Clinical versus Statistical Prediction: A Theoretical Analysis and a Review of the Evidence. Minneapolis: Univ Minn Press; 1954. 149 pp.

- 20. Murphy AH, Winkler RL. Probability forecasting in meteorology. J Am Stat Assoc. 1984;79(387):489–500.

- 21. Charba JP, Klein WH. Skill in precipitation forecasting in the National Weather Service. Bull Am Meteorol Soc. 1980;61(12):1546–1555.

- 22. Keren G. Facing uncertainty in the game of bridge: A calibration study. Organ Behav Hum Decis Process. 1987;39(1):98–114.

- 23. Berner ES, Graber ML. Overconfidence as a cause of diagnostic error in medicine. Am J Med. 2008;121(5) Suppl:S2–S23. doi: 10.1016/j.amjmed.2008.01.001.

- 24. Goodman-Delahunty J, Granhag PA, Hartwig M, Loftus EF. Insightful or wishful: Lawyers’ ability to predict case outcomes. Psychol Public Policy Law. 2010;16(2):133–157.

- 25. Murphy AH. A new vector partition of the probability score. J Appl Meteorol. 1973;12(4):595–600.

- 26. Swets JA. Form of empirical ROCs in discrimination and diagnostic tasks: Implications for theory and measurement of performance. Psychol Bull. 1986;99(2):181–198.

- 27. Yaniv I, Yates JF, Smith JEK. Measures of discrimination skill in probabilistic judgment. Psychol Bull. 1991;110(3):611–617.

- 28. Yates JF. Judgment and Decision Making. Englewood Cliffs, NJ: Prentice Hall; 1990. 430 pp.

- 29. Cox DR. Two further applications of a model for binary regression. Biometrika. 1958;45(3-4):562–565.

- 30. Budescu DV, Johnson TR. A model-based approach for the analysis of the calibration of probability judgments. Judgm Decis Mak. 2011;6(8):857–869.

- 31. Lichtenstein S, Fischhoff B. Do those who know more also know more about how much they know? Organ Behav Hum Perform. 1977;20(2):159–183.

- 32. Karvetski CW, Olson KC, Mandel DR, Twardy CR. Probabilistic coherence weighting for optimizing expert forecasts. Decis Anal. 2013;10(4):305–326.

- 33. Shlomi Y, Wallsten TS. Subjective recalibration of advisors’ probability estimates. Psychon Bull Rev. 2010;17(4):492–498. doi: 10.3758/PBR.17.4.492.

- 34. Turner BM, Steyvers M, Merkle EC, Budescu DV, Wallsten TS. Forecast aggregation via recalibration. Mach Learn. 2014;95(3):261–289.

- 35. Baron J, Mellers BA, Tetlock PE, Stone E, Ungar LH. Two reasons to make aggregated probability forecasts more extreme. Decis Anal. 2014;11(2):133–145.

- 36. Pearce J, Ferrier S. Evaluating the predictive performance of habitat models developed using logistic regression. Ecol Modell. 2000;133(3):225–245.

- 37. Akaike H. A new look at the statistical model identification. IEEE Trans Automat Contr. 1974;19(6):716–723.

- 38. Koehler DJ, Brenner L, Griffin D. In: Heuristics and Biases: The Psychology of Intuitive Judgment. Gilovich T, Griffin D, Kahneman D, editors. Cambridge, UK: Cambridge Univ Press; 2002. pp. 686–715.

- 39. Wallsten TS, Budescu DV, Zwick R. Comparing the calibration and coherence of numerical and verbal probability judgments. Manage Sci. 1993;39(2):176–190.

- 40. Fischhoff B, Bruine de Bruin W. Fifty-fifty=50%? J Behav Decis Making. 1999;12(2):149–163.

- 41. Karmarkar US. Subjectively weighted utility: A descriptive extension of the expected utility model. Organ Behav Hum Perform. 1978;21(1):61–72.

- 42. Tetlock PE, Kim JI. Accountability and judgment processes in a personality prediction task. J Pers Soc Psychol. 1987;52(4):700–709. doi: 10.1037//0022-3514.52.4.700.

- 43. Tetlock PE. Accountability: A social check on the fundamental attribution error. Soc Psychol Q. 1985;48(3):227–236.

- 44. Chaiken S. Heuristic versus systematic information processing and the use of source versus message and cues in persuasion. J Pers Soc Psychol. 1980;39(5):752–766.

- 45. Hagafors R, Brehmer B. Does having to justify one's decisions change the nature of the judgment process? Organ Behav Hum Perform. 1983;31(2):223–232.

- 46. Arkes HR, Kajdasz J. In: Intelligence Analysis: Behavioral and Social Scientific Foundations. Fischhoff B, Chauvin C, editors. Washington, DC: Natl Acad Press; 2011. pp. 143–168.

- 47. Mellers B, et al. Psychological strategies for winning geopolitical forecasting tournaments. Psychol Sci. 2014;25(5):1106–1115. doi: 10.1177/0956797614524255.

- 48. Kadane J, Fischhoff B. A cautionary note on global recalibration. Judgm Decis Mak. 2013;8(1):25–28.
