Significance

A major challenge for visual perception is to select behaviorally relevant objects from scenes containing a large number of distracting objects that compete for limited processing resources. Here, we show that such competitive interactions in human visual cortex are reduced when distracters are positioned in commonly experienced configurations (e.g., a lamp above a dining table), leading to improved detection of target objects. This indicates that the visual system can exploit real-world regularities to group objects that typically co-occur; a plate flanked by a fork and a knife can be grouped into a dining set. This interobject grouping effectively reduces the number of competing objects and thus can facilitate the selection of targets in cluttered but regular environments.

Keywords: object perception, visual regularity, biased competition, chunking, natural scenes

Abstract

In virtually every real-life situation humans are confronted with complex and cluttered visual environments that contain a multitude of objects. Because of the limited capacity of the visual system, objects compete for neural representation and cognitive processing resources. Previous work has shown that such attentional competition is partly object based, such that competition among elements is reduced when these elements perceptually group into an object based on low-level cues. Here, using functional MRI (fMRI) and behavioral measures, we show that the attentional benefit of grouping extends to higher-level grouping based on the relative position of objects as experienced in the real world. An fMRI study designed to measure competitive interactions among objects in human visual cortex revealed reduced neural competition between objects when these were presented in commonly experienced configurations, such as a lamp above a table, relative to the same objects presented in other configurations. In behavioral visual search studies, we then related this reduced neural competition to improved target detection when distracter objects were shown in regular configurations. Control studies showed that low-level grouping could not account for these results. We interpret these findings as reflecting the grouping of objects based on higher-level spatial-relational knowledge acquired through a lifetime of seeing objects in specific configurations. This interobject grouping effectively reduces the number of objects that compete for representation and thereby contributes to the efficiency of real-world perception.

In daily life, humans are confronted with complex and cluttered visual environments that contain a large amount of visual information. Because of the limited capacity of the visual system, not all of this information can be processed concurrently. Consequently, elements within a visual scene are competing for neural representation and cognitive processing resources (1, 2). Such competitive interactions can be observed in neural responses when multiple stimuli are presented at the same time. Single-cell recordings in monkey visual cortex revealed that activity evoked by a neuron's preferred stimulus is suppressed when a nonpreferred stimulus is simultaneously present in the neuron's receptive field (3–5). Corresponding evidence for mutually suppressive interactions among competing stimuli has been obtained from human visual cortex using functional magnetic resonance imaging (fMRI) (6).

According to biased competition theory, these competitive interactions occur between objects rather than between the parts of a single object (1). This idea of object-based competition is supported by behavioral studies showing that judgments on two properties of one object are more accurate than judgments on the same properties distributed over two objects (7). However, the degree of competition among objects is strongly influenced by contextual factors, such as stimulus similarity (8–10), geometric relationships between stimuli (11), and perceptual grouping (12, 13). For example, competitive interactions in human visual cortex are greatly reduced when multiple single stimuli form an illusory contour and hence can be perceptually grouped into a single gestalt (12).

Whereas the attentional benefit of grouping based on low-level cues is well established, much less is known about object grouping at more conceptual levels. Many objects in real-world scenes occupy regular and predictable locations relative to other objects. For example, a bathroom sink is typically seen together with a mirror in a highly regular spatial arrangement. When considering highly regular object pairs like these, it becomes clear that the world can be carved up at different levels: based on low-level cues such as those specified by gestalt laws, but also based on conceptual knowledge and long-term visual experience; a plate flanked by a fork and a knife is both a dinner plate set and three separate objects.

In the present fMRI and behavioral studies, we asked whether grouping based on real-world regularities modulates attentional competition. We hypothesized that objects that appear in frequently experienced configurations are, to some extent, grouped, resulting in reduced competition between these objects. To test this prediction, we presented pairs of common everyday objects either in their typical, regular configuration (e.g., a lamp above a table) or in an irregular configuration (e.g., a lamp below a table). Our findings indicate that grouping of objects based on real-world regularities effectively reduces the number of competing objects, leading to reduced neural competition and more efficient visual perception.

Results

fMRI Experiment.

To measure competitive interactions between objects in human visual cortex, we followed the rationale of classical single-cell recording studies that indexed competition as the difference between neural activity evoked by a neuron's preferred stimulus presented in isolation and neural activity evoked by a neuron's preferred stimulus presented together with nonpreferred stimuli (3–5). The more strongly the nonpreferred stimuli compete for representation, the more the neuron's response will be reduced. For example, an increase in the number of nonpreferred stimuli would lead to a decrease in the response to (and representation of) the neuron's preferred stimulus.

Because of the relatively poor spatial resolution of fMRI, the preferred stimulus in our study was the category houses, capitalizing on the finding that a region in the parahippocampal cortex (the parahippocampal place area, PPA; Fig. 1C) responds preferentially to houses relative to other objects (14, 15). To induce competition, the house stimuli were presented together with pairs of common everyday objects—nonpreferred stimuli for the PPA. The pairs were presented either in their regular, commonly experienced configuration or in an irregular configuration, where pairs were vertically reversed (Fig. 1A). Thus, displays with regular and irregular object pairs differed only with regard to the relative spatial position of the single objects within pairs, whereas all other stimulus aspects were identical. This allowed us to test for differences in neural competition as a function of the relative spatial positions of the objects: if regularly positioned objects are grouped, effectively reducing the number of competing nonpreferred elements, they should compete less with houses than irregularly positioned objects. This would predict stronger PPA responses to houses presented together with the regular than with the irregular object pairs.

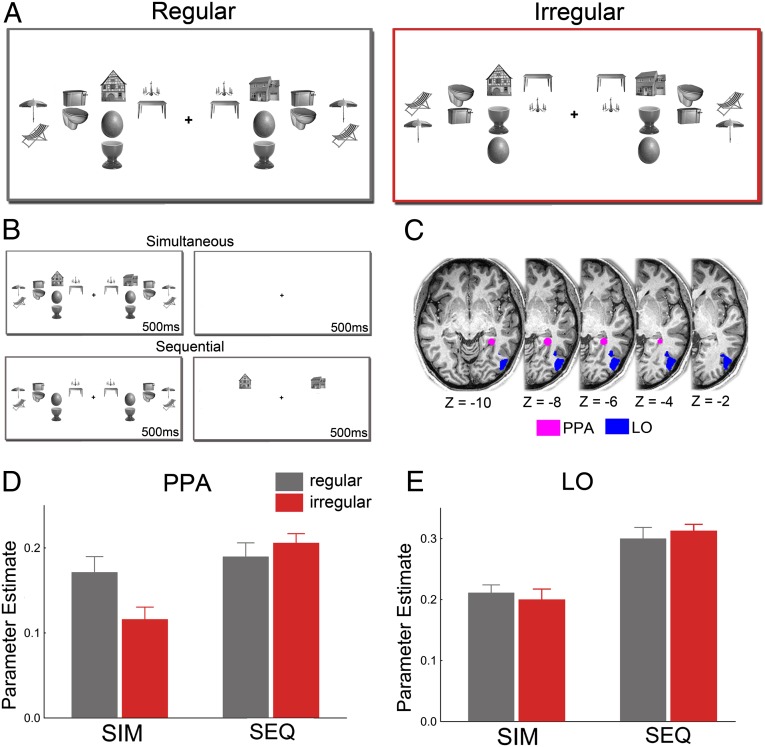

Fig. 1.

Increased house-evoked activity in PPA when simultaneously presented object distracters are positioned according to real-world regularities. (A) In each display, two house stimuli were surrounded by a total of eight object pairs. The configurations of the objects were either regular (Left) or irregular (Right) relative to their real-world configurations. (B) Attentional competition was manipulated by either presenting the houses and the surrounding pairs simultaneously for 500 ms, followed by a 500-ms blank screen, or sequentially, for 500 ms each. (C) Location of right-hemispheric PPA and LO in a representative participant. (D) When houses and object pairs were presented simultaneously (SIM), house-selective PPA showed stronger responses when the object pairs were positioned according to real-world regularities than when they were not, indicating reduced attentional competition. No such difference was observed in the absence of competition between houses and objects—when houses and object pairs were presented sequentially (SEQ). (E) In contrast to house-selective PPA, responses in object-selective LO were not modulated by pair configuration.

Importantly, to ensure that response differences between the regular and irregular conditions reflected differences in attentional competition rather than differential responses to the regular and irregular object pairs themselves, we additionally included conditions in which the house and object stimuli were presented sequentially (6, 8, 12, 13). Competitive interactions among houses and object pairs are expected to occur in the simultaneous condition but not in the sequential condition (6, 8, 12, 13). By including the sequential condition, we controlled for possible differences in the responses evoked by the regular and irregular object pairs themselves: The object arrays presented in the simultaneous and sequential conditions are identical. We designed the experiment in this way because our interest was in the competition between the object arrays (nonpreferred stimuli for PPA) and the houses (preferred stimuli for PPA), rather than in differences between regular and irregular object pairs themselves. The critical test, therefore, is the interaction between presentation order (simultaneous, sequential) and pair configuration (regular, irregular). While viewing the displays, participants were engaged in a fixation task that was unrelated to the house and object stimuli.
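
The critical interaction described above can be expressed as a difference of differences computed per participant. The following is a minimal sketch, assuming one response estimate per participant and condition; the function name and the example numbers are hypothetical and are not data from this study. For a 2 × 2 within-subject design, the interaction F[1, n − 1] equals the square of the one-sample t statistic computed on these difference scores.

```python
import math
from statistics import mean, stdev


def interaction_t(sim_reg, sim_irr, seq_reg, seq_irr):
    """One-sample t test on the per-participant difference of differences.

    Each argument is a list with one response estimate per participant.
    Returns (t, df); the 2 x 2 within-subject interaction F equals t ** 2.
    """
    # (regular - irregular) under simultaneous presentation, minus the
    # same difference under sequential presentation.
    scores = [
        (a - b) - (c - d)
        for a, b, c, d in zip(sim_reg, sim_irr, seq_reg, seq_irr)
    ]
    n = len(scores)
    t = mean(scores) / (stdev(scores) / math.sqrt(n))
    return t, n - 1


# Hypothetical values for four participants (illustration only).
t, df = interaction_t(
    sim_reg=[1.2, 0.9, 1.5, 1.1],
    sim_irr=[1.0, 0.8, 1.1, 0.9],
    seq_reg=[1.6, 1.3, 1.8, 1.4],
    seq_irr=[1.6, 1.2, 1.7, 1.5],
)
print(f"t[{df}] = {t:.2f}, F[1,{df}] = {t ** 2:.2f}")
```

A positive t here would indicate that the regular-minus-irregular difference is larger under simultaneous than under sequential presentation, which is the pattern predicted by reduced competition.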

Results showed that activity in functionally defined PPA was stronger to houses presented together with regularly positioned objects than to houses presented together with irregularly positioned objects (t[22] = 2.24, P = 0.035; Fig. 1D), indicating reduced competition from regularly positioned objects. Importantly, in the absence of competitive interactions among houses and objects—when the house and object displays were presented sequentially (Fig. 1B)—no difference between the object conditions was observed (t[22] = 0.96, P = 0.35; presentation order × pair configuration interaction: F[1,22] = 6.35, P = 0.019; Fig. 1D). This indicates that the differential PPA responses in the simultaneous presentation condition reflected differences in competitive interactions between houses and objects rather than differential responses to regular and irregular object arrays themselves. These results generalized to alternative PPA definition procedures (SI Text), and were also obtained in analyses of event-related time course data (SI Text).

These results were specific to the PPA. Responses in object-selective lateral occipital cortex (LO; Fig. 1C) were generally lower in the simultaneous condition than in the sequential condition (F[1,22] = 27.17, P < 0.001). Unlike in the PPA, however, the competition effect was not modulated by the configuration of the object pairs (presentation order × pair configuration interaction: F[1,22] = 1.16, P = 0.29; Fig. 1E). The activation pattern observed in LO was significantly different from the pattern in PPA (three-way interaction including region: F[1,22] = 5.59, P = 0.027). Additional face-selective control regions showed the same pattern of results as LO (SI Text).

Together, these fMRI results indicate that competitive interactions between preferred (houses in PPA) and nonpreferred (objects in PPA) stimuli are reduced when objects are positioned according to real-world regularities.

Visual Search Experiments.

To test whether the reduced competition observed at the neural level leads to behavioral facilitation, we modified the displays of the fMRI experiment for use in a behavioral paradigm aimed at measuring accuracy of visual perception. In a series of visual search experiments (Fig. 2A), participants located single target objects surrounded by pairs of distracter objects (Fig. 2B), which were positioned in either their regular or irregular configuration. We used the same object arrays that were used in the fMRI experiment, but replaced the house stimuli with uniquely nameable everyday objects as targets (Methods). The search displays were presented briefly (200 ms) and participants were instructed to indicate as accurately as possible whether the target object appeared to the left or to the right of fixation.

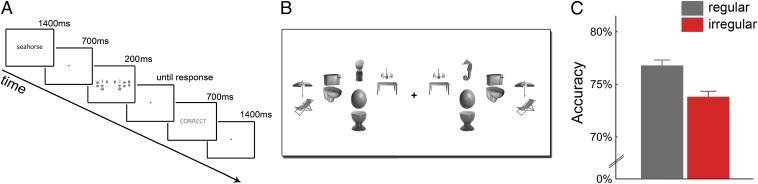

Fig. 2.

Enhanced visual search performance when distracters are positioned according to real-world regularities. (A) The visual search paradigm consisted of a word cue that corresponded to a single target object. Participants indicated whether the target was on the right or on the left side of a briefly presented cluttered visual display. (B) Search arrays were the same displays as in the fMRI experiment, but houses were replaced by the search target on one side and a single distracter on the other side. Again, all distracter pairs could be presented in a regular or irregular configuration. (C) Regular distracter pairs led to higher accuracy than irregular pairs.

Accuracy in localizing the target object was higher when the pairs of distracter objects were presented in their regular configurations relative to when they were presented in irregular configurations (t[16] = 2.88, P = 0.011; Fig. 2C), with no difference in response times (t[16] = 0.70, P = 0.50). This suggests that distracters positioned according to real-world regularities are more efficiently processed, leaving more resources for target detection in visual search. These results were replicated in a response-time–based version of this experiment, showing shallower search slopes for distracter pairs presented in regular compared with irregular configurations (SI Text).

To control for potential low-level differences between regular and irregular object pairs, in a second experiment we added conditions with inverted distracter pairs (Fig. 3A). Inversion preserves all low-level differences between regular and irregular conditions but disrupts higher-level grouping. The benefit of regularly positioned distracters was again found for upright displays (t[13] = 4.49, P = 0.0064; Fig. 3B) but, crucially, not for inverted displays (t[13] = 0.071, P = 0.94; interaction: F[1,13] = 5.57, P = 0.035). Response times did not differ significantly between conditions (all F[1,13] < 0.40, P > 0.50). This rules out the possibility that low-level visual differences between the distracter arrays accounted for the effect.

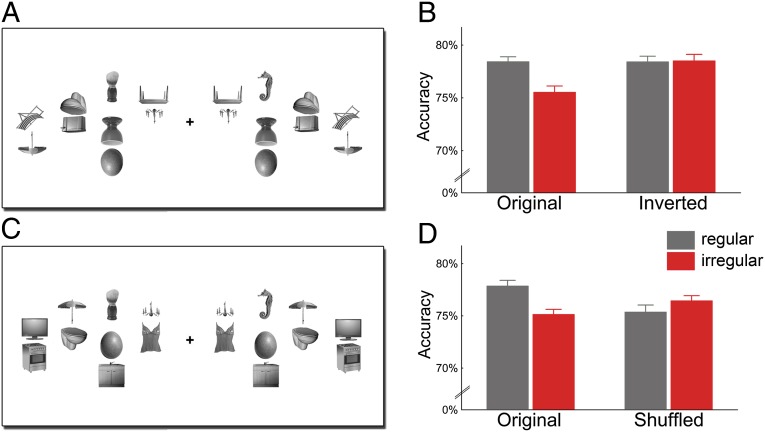

Fig. 3.

Improved detection of targets among regularly positioned distracters cannot be explained by low-level grouping: when the distracter pairs were inverted (A), regular and irregular distracters led to comparable target detection accuracy (B). Also the relative position of single objects cannot account for the effect: when the top objects were interchanged between pairs (shuffled condition, C), the accuracy benefit for regular configurations disappeared (D). Original conditions are independent replications of the first experiment.

For a second control experiment, we generated new object pairs by shuffling the top objects of the original regular and irregular pairs (Fig. 3C). These shuffled pairs did not follow real-world regularities, but the specific locations at which single objects were presented were identical to the original pairs. Results again showed a benefit for regularly relative to irregularly positioned distracter pairs (t[17] = 2.96, P = 0.0088) but no corresponding benefit for the shuffled pairs (t[17] = 0.85, P = 0.41; interaction: F[1,17] = 5.63, P = 0.030; Fig. 3D). Again, response times did not differ between conditions (all F[1,17] < 1.40, P > 0.20). Thus, the specific position of single objects is not sufficient to explain the effect.

Together, these visual search experiments demonstrate improved perception of target objects when distracter objects are positioned according to real-world regularities, thus providing behavioral evidence for reduced competition from regularly positioned distracters.

Discussion

Our findings demonstrate that the visual system exploits learned regularities to perceptually group objects that typically co-occur in specific configurations. Through this process, the effective number of objects that compete for representation is reduced. These findings have implications for attentional selection in real-world situations where multiple but often regularly positioned distracter objects compete for visual representation.

Previous studies have demonstrated that contextual factors can reduce competitive interactions among simple, artificial stimuli that were perceptually grouped based on physical similarity, geometric relationships, or gestalt principles (12, 13). Distracters that can be grouped based on such low-level cues can be rejected at once rather than on an item-by-item basis, leading to enhanced target detection (16–22). For example, when distracters can be grouped by color, search performance depends on the number of distracter groups rather than on the number of individual distracters in each group (17). Our results show that benefits of grouping are not limited to grouping based on low-level cues, but that these can also be observed for grouping based on knowledge about the typical spatial relations between objects in our visual environment.

The present way of measuring neural competition closely resembles the logic of monkey electrophysiology work on attentional competition (3–5), in that we recorded neural activity to a region’s preferred stimuli in the presence of competing nonpreferred stimuli. Reduced neural competition from nonpreferred stimuli was reflected in an increased PPA response to the region’s preferred house stimuli when the PPA’s nonpreferred object stimuli could be grouped based on real-world regularities. The sequential presentation condition, in which houses and objects did not compete for attention, provided an important control, showing that the increased PPA response was not driven by response differences between the regular and irregular object pairs themselves.

This raises the interesting question of whether there are brain regions that differentially respond to regular and irregular object pairs. None of our regions of interest (ROIs) showed such a difference, and no regions were found in a whole-brain analysis testing for the main effect of pair configuration (SI Text). Previous work that tested for response differences as a function of action relations between objects (e.g., a hammer positioned to hit a nail) provided evidence for greater LO activity to interacting objects than to noninteracting objects (23, 24). Patient and transcranial magnetic stimulation studies further showed that action relationships are processed independently of attentional influences from parietal cortex (25, 26). Together with the absence of grouping effects in LO in the current study, these previous findings suggest a special status of object grouping based on action relations (26). Future studies are needed to test this notion, directly comparing effects of grouping based on real-world regularities, action cues (23, 24), and more basic perceptual cues (27–30).

Beneficial effects of grouping are not limited to object perception and attentional competition but have also been observed in studies of visual working memory (VWM). Similar to its effects on attention, low-level grouping has been shown to enhance VWM capacity (31, 32). Recent studies have started to investigate VWM grouping based on statistical regularities in relative stimulus positions (33): stimuli that appeared in regular combinations were better remembered (34, 35), as if they had been compressed into a single VWM representation. An interesting avenue for future study will be to test whether VWM capacity is similarly enhanced for real-world object pairs like those used here, as suggested by accurate memory for objects in natural scenes (36).

The reduced competition from regularly positioned objects demonstrated in the present study may constitute a powerful neural and perceptual mechanism to contend with the multitude of visual information contained in real-world scenes. The present findings could thus contribute to the understanding of perceptual efficiency in real-world scenes: Target detection in natural scenes is surprisingly efficient considering the large number of distracter objects present in real-world environments (37). As an explanation for this efficiency, it has been proposed that scene context guides attention to likely target locations (38, 39). For example, we look above the sink when searching for a mirror. Such contextual guidance can stem from implicit or explicit memory for specific target locations within a specific context (38–40), global scene properties (41, 42), and also from relations between target and nontarget objects (43, 44). At a general level, the current results might similarly reflect the learning of real-world correlational structure. However, our study differs from previous work in that it addressed the grouping of distracter objects independently of their role in guiding attention toward the search target, as the targets were completely unrelated to the distracters. Thus, such high-level grouping of objects forms an additional mechanism likely to support efficient target detection in cluttered real-world environments. Future studies are needed to extend our findings to attentional selection in real-world scenes. Because scenes contain a large number of objects that occur in regularly positioned groups of two or more objects, grouping of items according to real-world regularities might operate on many objects at the same time to greatly enhance the efficiency of real-world perception.

Methods

fMRI Experiment.

Participants.

Twenty-five participants (eight male, mean age 25.5 y, SD = 4.9) took part in the experiment. All procedures were carried out in accordance with the Declaration of Helsinki and were approved by the ethical committee of the University of Trento. Two participants were excluded from all analyses: One due to excessive head movement, and one because we were unable to define functional regions of interest at the adopted statistical threshold.

Stimuli.

The stimulus set consisted of 12 object pairs of everyday objects with a typical spatial configuration in the vertical direction, such as a lamp above a dining table, a mirror above a bathroom sink, or an air vent above a stove. The pairs could be placed in their typical configuration (regular condition) or vertically interchanged (irregular condition). For each single object, two different exemplars were collected, resulting in four different exemplar combinations for each pair, and thus a total of 48 regular and 48 irregular pairs. Additionally, 36 images of houses were used. Each display contained four different object pairs and a house on each side of fixation. The pairs on the right side of fixation were always the perfect mirror image of the pairs left of fixation, whereas the house’s position was mirrored but two different house exemplars were presented on each side. Single objects subtended a visual angle of ∼1.5°. For each side, objects were placed in a jittered 4 × 4 grid, with the house stimulus always appearing in one of the four central locations of the grid (i.e., second or third row and second or third column). The nearest objects to fixation appeared with a horizontal offset of 2°. To control for interdisplay variability, each particular display (i.e., each particular combination of exemplars and positions) was used once in each condition. Stimuli were presented using the Psychtoolbox (45) and projected on a translucent screen at the end of the scanner bore. Participants viewed the screen through a pair of tilted mirrors mounted on the head coil.
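
The size of the stimulus set follows from crossing the two exemplars of the top object with the two exemplars of the bottom object within each pair. A minimal sketch of this count is given below; the integer labels for pairs and exemplars and the function name are hypothetical placeholders, not the actual stimulus files.

```python
from itertools import product

N_PAIRS = 12               # object pairs, as stated in the text
EXEMPLARS_PER_OBJECT = 2   # exemplars collected per single object


def exemplar_combinations(n_pairs=N_PAIRS, n_exemplars=EXEMPLARS_PER_OBJECT):
    """List every (pair, top exemplar, bottom exemplar) combination."""
    return [
        (pair, top, bottom)
        for pair in range(n_pairs)
        for top, bottom in product(range(n_exemplars), repeat=2)
    ]


combos = exemplar_combinations()
per_pair = len(combos) // N_PAIRS
print(per_pair, len(combos))  # 4 combinations per pair, 48 in total
```

The same 48 combinations exist once in the regular and once in the irregular arrangement, because the two conditions differ only in which object is on top.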

Main Experiment Procedure.

Attentional competition was manipulated using an event-related variant of the sequential/simultaneous paradigm (6). In the simultaneous condition, the whole display was presented for 500 ms, followed by a blank period of 500 ms. In the sequential condition, the house stimuli and the pair stimuli were presented in direct succession for 500 ms each (with the house appearing first in half of the trials and the surrounding pairs appearing first in the other half). Trials were separated by a 1,500-ms intertrial interval. Thus, the stimulation summed over a trial was the same in both conditions. However, whereas the simultaneous presentation of the house stimuli and the surrounding object stimuli was expected to induce competitive interactions, no such competition should be present when the stimuli were presented sequentially (6). Importantly, we manipulated the regularity of the object pairs: In the regular condition, all pairs were presented in their typical configuration (e.g., lamp above a dining table), whereas in the irregular condition, all pairs were presented with individual object positions interchanged (e.g., lamp below a dining table). The resulting four conditions were randomly intermixed within each run. There was a total of eight runs, each lasting approximately 5 min and consisting of 120 trials, of which 20% were fixation-only trials. Participants were instructed to maintain fixation at a central cross throughout the experiment and to respond to small size changes of the fixation cross (size increases of ∼15%). Participants detected the changes with high accuracy (92.3% correct, SE = 0.9%), and there were no significant differences between conditions (presentation order × pair configuration ANOVA, all F[1,22] < 0.31, P > 0.55). Similarly, response times did not differ between conditions (all F[1,22] < 2.81, P > 0.10).
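
The timing and trial counts given above can be checked with simple arithmetic. The sketch below assumes that fixation-only trials last as long as stimulus trials and that stimulus trials are divided evenly among the four conditions; neither assumption is stated explicitly in the text, and all variable names are illustrative.

```python
STIMULATION_MS = 1000      # 500 ms + 500 ms in both presentation conditions
INTERTRIAL_MS = 1500
TRIALS_PER_RUN = 120
FIXATION_ONLY_SHARE = 0.20
N_CONDITIONS = 4           # presentation order x pair configuration
N_RUNS = 8

# Assumption: every trial, including fixation-only trials, has this length.
trial_ms = STIMULATION_MS + INTERTRIAL_MS
run_minutes = TRIALS_PER_RUN * trial_ms / 60_000

fixation_trials = round(TRIALS_PER_RUN * FIXATION_ONLY_SHARE)
stimulus_trials = TRIALS_PER_RUN - fixation_trials
# Assumption: stimulus trials are split evenly across the four conditions.
per_condition_per_run = stimulus_trials // N_CONDITIONS
per_condition_total = per_condition_per_run * N_RUNS

print(run_minutes, fixation_trials, per_condition_per_run, per_condition_total)
```

Under these assumptions, a run of 120 trials lasts 5 min, which matches the stated run duration.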

Functional Localizer Procedure.

In addition to the eight experimental runs, participants completed two functional localizer runs of 5 min each. Participants performed a one-back task while viewing images of faces, houses, everyday objects (different exemplars than in the main experiment), and scrambled objects. Each stimulus category included 36 individual exemplars. Within each run, there were four blocks of each stimulus category and four blocks of fixation baseline, with all blocks lasting 16 s. Block order was randomized for the first 10 blocks and then mirror reversed for the remaining 10 blocks. Each nonfixation block included two one-back stimulus repetitions. To find the maximally selective voxels for the house stimuli, we used the same house exemplars as in the main experiment.
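
One way to generate a block order of the kind described above is sketched here. It assumes that each of the five block types (four stimulus categories plus fixation) occurs twice in the randomized first half, which the text does not specify; the function and label names are hypothetical.

```python
import random

BLOCK_TYPES = ["faces", "houses", "objects", "scrambled", "fixation"]
BLOCK_S = 16  # block duration in seconds


def localizer_block_order(rng):
    """Return a 20-block order: a shuffled first half plus its mirror image."""
    # Assumption: each block type occurs twice in the first 10 blocks.
    first_half = BLOCK_TYPES * 2
    rng.shuffle(first_half)
    return first_half + first_half[::-1]


order = localizer_block_order(random.Random(0))
print(len(order), len(order) * BLOCK_S)  # 20 blocks, 320 s
```

Mirror reversing the first half guarantees that every block type appears four times per run and that, on average, each type occupies the same position within the run.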

fMRI Data Acquisition.

Imaging was conducted on a Bruker BioSpin MedSpec 4T head scanner (Bruker BioSpin), equipped with an eight-channel head coil. T2*-weighted gradient-echo echo-planar images were collected as functional volumes for the main experimental runs and the functional localizer runs (repetition time = 2.0 s, echo time = 33 ms, 73° flip angle, 3 × 3 × 3 mm voxel size, 1-mm gap, 34 slices, 192 mm field of view, 64 × 64 matrix size). A T1-weighted image (MPRAGE; 1 × 1 × 1 mm voxel size) was obtained as a high-resolution anatomical reference.

fMRI Preprocessing.

All neuroimaging data were analyzed using MATLAB and SPM8. The volumes were realigned, coregistered to the structural image, resampled to a 2 × 2 × 2 mm grid and spatially normalized to the Montreal Neurological Institute (MNI)-305 template (as included in SPM8). Functional volumes were then smoothed using a 6-mm full-width half-maximum Gaussian kernel. All analyses were performed on the smoothed data.

fMRI Data Analysis—Functional Localizer.

The blood-oxygen-level–dependent (BOLD) signal of each voxel in each participant in the localizer runs was modeled using four regressors, one for each stimulus category (faces, houses, objects, and scrambled objects), and six regressors for the movement parameters obtained from the realignment procedure. Functional ROIs were defined in individual participants using t contrasts. House-selective PPA (14, 15) was localized with the houses > objects contrast. Object-selective LO (46) was localized with the objects > scrambled contrast. Bilateral LO and PPA ROIs were defined as spheres of 4-mm radius (including 33 voxels) around the peak MNI coordinates of activation [left PPA: x = −22.1 (1.4), y = −43.4 (2.1), z = −7.9 (1.2); right PPA: x = 25.0 (1.3), y = −45.1 (1.4), z = −6.5 (1.0); left LO: x = −45.9 (1.0), y = −79.0 (1.1), z = −3.0 (2.0); right LO: x = 46.6 (1.1), y = −77.0 (1.1), z = −5.0 (1.8); SEs in parentheses], with the threshold set at P < 0.01, uncorrected. We chose these relatively small spherical ROIs to maximize selectivity in PPA (SI Text shows results in the peak voxel of PPA).
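
The stated ROI size of 33 voxels can be reproduced by counting grid points inside a 4-mm sphere on the 2 × 2 × 2 mm grid used after resampling. The sketch below assumes that the sphere is centered on a voxel center and that a voxel is included when its center lies within the radius; the inclusion rule actually applied by the ROI software may differ, and the function name is hypothetical.

```python
from itertools import product


def voxels_in_sphere(radius_mm, voxel_mm):
    """Count grid voxels whose centers lie within radius_mm of the center voxel."""
    reach = int(radius_mm // voxel_mm)
    offsets = range(-reach, reach + 1)
    return sum(
        1
        for dx, dy, dz in product(offsets, repeat=3)
        if (dx * dx + dy * dy + dz * dz) * voxel_mm ** 2 <= radius_mm ** 2
    )


print(voxels_in_sphere(radius_mm=4, voxel_mm=2))  # 33
```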

fMRI Data Analysis—Main Experiment.

For the main experiment, the BOLD signal of each voxel in each participant was estimated with 11 regressors in a general linear model: 4 regressors for the experimental conditions, 1 regressor for the fixation-only trials, and 6 regressors for the movement parameters obtained from the realignment procedure. All models included an intrinsic temporal high-pass filter of 1/128 Hz to correct for slow scanner drifts. ROI analysis was done using the MARSBAR toolbox for SPM8 (47). For each ROI and each hemisphere, we estimated response magnitudes from the general linear model beta values of the conditions of interest relative to the beta values of the fixation-only trials. For each ROI, responses were then averaged across hemispheres.
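
The computation of response magnitudes described above amounts to subtracting the fixation-only beta from each condition beta within a hemisphere and then averaging the two hemispheres. The following minimal sketch illustrates this for one ROI in one participant; the condition labels, the data layout, and the beta values are hypothetical and do not reproduce the original MATLAB analysis.

```python
from statistics import mean

CONDITIONS = ["sim_regular", "sim_irregular", "seq_regular", "seq_irregular"]


def response_magnitudes(betas_by_hemisphere):
    """Condition betas relative to fixation, averaged across hemispheres.

    betas_by_hemisphere maps a hemisphere name to a dict of ROI-averaged
    beta values keyed by condition name plus "fixation".
    """
    return {
        cond: mean(
            betas[cond] - betas["fixation"]
            for betas in betas_by_hemisphere.values()
        )
        for cond in CONDITIONS
    }


# Hypothetical beta values for one ROI in one participant (illustration only).
example = {
    "left": {"sim_regular": 2.0, "sim_irregular": 1.5, "seq_regular": 2.5,
             "seq_irregular": 2.5, "fixation": 0.5},
    "right": {"sim_regular": 3.0, "sim_irregular": 2.5, "seq_regular": 3.5,
              "seq_irregular": 3.5, "fixation": 1.0},
}
print(response_magnitudes(example))
```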

Visual Search Experiments.

Participants.

Seventeen participants (six male; mean age 22.7 y, SD = 2.5) volunteered for behavioral experiment 1, 14 participants (two male; mean age 22.2 y, SD = 2.9) for experiment 2, and 18 participants (one male; mean age 22.8 y, SD = 2.2) for experiment 3.

Stimuli.

We used the same displays as in the fMRI experiment, but replaced the houses with a single target object on one side of the display and a single nontarget object on the other side. For this purpose, an additional 100 uniquely nameable everyday objects were collected (taken from an online database; 48).

Procedure.

In each trial, participants localized a single target object presented in the left or in the right hemifield. Each trial started with a word (e.g., “seahorse”) displayed for 1,400 ms, indicating the object participants had to localize. After 700 ms, a search array was displayed for 200 ms. Each array contained four different object pairs and one single object on each side of fixation. The pairs on the right of fixation were always the perfect mirror image of the pairs left of fixation, whereas the single objects’ positions were mirrored but the single objects (i.e., target and nontarget object) differed between the two sides. One of the single objects was always the target item, with the target position (left versus right) randomly varying, whereas the overall probability for each side was fixed at 50%. Each single object appeared equally often in each condition as a target or a nontarget, with no specific target–nontarget pair being repeated multiple times throughout the experiment. To control for the variability between displays, each particular distracter array was shown once in each condition (i.e., each particular combination of distracter pairs and their positions). Participants used the left and right arrow keys on a keyboard to indicate as accurately as possible, without speed pressure, on which side the target object had appeared. After entering their response, participants received feedback. Trials were separated by an intertrial interval of 1,400 ms. The experiments were divided into blocks of 50 trials. The order of the first half of blocks was counterbalanced between subjects, and the order of the second half was generated by mirror reversing this order. In each block, the object pairs appeared in either the regular or the irregular configuration. In experiment 1, participants completed eight blocks of the task. 
In experiment 2, we exactly replicated experiment 1, and additionally included blocks with inverted object pairs, in which all distracter pairs were presented upside down (i.e., rotated by 180°), whereas the single objects appeared in normal orientation. This inverted condition was included to control for the potential influence of low-level grouping effects, as inversion disrupts the object pairs’ configuration, although all low-level properties are identical to the original upright pairs. In experiment 3, blocks with “shuffled” pairs were included, in which the top and bottom items of the pairs were recombined into new pairs. These shuffled pairs (e.g., computer screen above stove) did not form typical spatial configurations, whereas the actual position of individual objects was identical to the original upright pairs. Thus, the inclusion of this shuffled condition allowed us to control for the potential influence of the actual position of single objects within pairs.
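
One way to construct shuffled pairs of this kind is sketched below in Python. This is an illustrative assumption, not the authors' procedure: the function name `shuffle_pairs`, the cyclic-shift strategy, and the object names are hypothetical. The sketch keeps every top item on top and every bottom item on the bottom, and only changes which items are paired.

```python
import random


def shuffle_pairs(pairs, rng):
    """Recombine (top, bottom) pairs so that no original pair stays intact.

    Top items keep the top position and bottom items keep the bottom position.
    A random nonzero cyclic shift of the bottom items gives every top item a
    new partner, assuming all bottom items are distinct.
    """
    if len(pairs) < 2:
        raise ValueError("need at least two pairs to recombine")
    tops = [top for top, _ in pairs]
    bottoms = [bottom for _, bottom in pairs]
    shift = rng.randrange(1, len(pairs))  # 1 .. len(pairs) - 1, never 0
    shifted_bottoms = bottoms[shift:] + bottoms[:shift]
    return list(zip(tops, shifted_bottoms))


# Hypothetical regular pairs (top item, bottom item).
regular_pairs = [
    ("lamp", "table"),
    ("computer screen", "keyboard"),
    ("pot", "stove"),
    ("mirror", "sink"),
]

shuffled_pairs = shuffle_pairs(regular_pairs, random.Random(1))
```

Because only the pairing changes, any difference between the regular and shuffled conditions cannot be attributed to where individual objects appear within a pair.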

Acknowledgments

We thank Paul Downing and Sabine Kastner for comments on an earlier version of the manuscript. The research was funded by the Autonomous Province of Trento, call “Grandi Progetti 2012,” project “Characterizing and improving brain mechanisms of attention” (ATTEND).

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1400559111/-/DCSupplemental.

References

- 1. Desimone R, Duncan J. Neural mechanisms of selective visual attention. Annu Rev Neurosci. 1995;18:193–222. doi: 10.1146/annurev.ne.18.030195.001205.

- 2. Kastner S, Ungerleider LG. The neural basis of biased competition in human visual cortex. Neuropsychologia. 2001;39(12):1263–1276. doi: 10.1016/s0028-3932(01)00116-6.

- 3. Moran J, Desimone R. Selective attention gates visual processing in the extrastriate cortex. Science. 1985;229(4715):782–784. doi: 10.1126/science.4023713.

- 4. Miller EK, Gochin PM, Gross CG. Suppression of visual responses of neurons in inferior temporal cortex of the awake macaque by addition of a second stimulus. Brain Res. 1993;616(1-2):25–29. doi: 10.1016/0006-8993(93)90187-r.

- 5. Rolls ET, Tovee MJ. The responses of single neurons in the temporal visual cortical areas of the macaque when more than one stimulus is present in the receptive field. Exp Brain Res. 1995;103(3):409–420. doi: 10.1007/BF00241500.

- 6. Kastner S, De Weerd P, Desimone R, Ungerleider LG. Mechanisms of directed attention in the human extrastriate cortex as revealed by functional MRI. Science. 1998;282(5386):108–111. doi: 10.1126/science.282.5386.108.

- 7. Duncan J. Selective attention and the organization of visual information. J Exp Psychol Gen. 1984;113(4):501–517. doi: 10.1037//0096-3445.113.4.501.

- 8. Beck DM, Kastner S. Stimulus context modulates competition in human extrastriate cortex. Nat Neurosci. 2005;8(8):1110–1116. doi: 10.1038/nn1501.

- 9. Beck DM, Kastner S. Stimulus similarity modulates competitive interactions in human visual cortex. J Vis. 2007;7(2):1–12. doi: 10.1167/7.2.19.

- 10. Knierim JJ, van Essen DC. Neuronal responses to static texture patterns in area V1 of the alert macaque monkey. J Neurophysiol. 1992;67(4):961–980. doi: 10.1152/jn.1992.67.4.961.

- 11. Kapadia MK, Ito M, Gilbert CD, Westheimer G. Improvement in visual sensitivity by changes in local context: Parallel studies in human observers and in V1 of alert monkeys. Neuron. 1995;15(4):843–856. doi: 10.1016/0896-6273(95)90175-2.

- 12. McMains SA, Kastner S. Defining the units of competition: Influences of perceptual organization on competitive interactions in human visual cortex. J Cogn Neurosci. 2010;22(11):2417–2426. doi: 10.1162/jocn.2009.21391.

- 13. McMains S, Kastner S. Interactions of top-down and bottom-up mechanisms in human visual cortex. J Neurosci. 2011;31(2):587–597. doi: 10.1523/JNEUROSCI.3766-10.2011.

- 14. Epstein R, Kanwisher N. A cortical representation of the local visual environment. Nature. 1998;392(6676):598–601. doi: 10.1038/33402.

- 15. Aguirre GK, Zarahn E, D’Esposito M. An area within human ventral cortex sensitive to “building” stimuli: Evidence and implications. Neuron. 1998;21(2):373–383. doi: 10.1016/s0896-6273(00)80546-2.

- 16. Banks WP, Prinzmetal W. Configurational effects in visual information processing. Percept Psychophys. 1976;19(4):361–367.

- 17. Bundesen C, Pedersen LF. Color segregation and visual search. Percept Psychophys. 1983;33(5):487–493. doi: 10.3758/bf03202901.

- 18. Donnelly N, Humphreys GW, Riddoch MJ. Parallel computation of primitive shape descriptions. J Exp Psychol Hum Percept Perform. 1991;17(2):561–570. doi: 10.1037//0096-1523.17.2.561.

- 19. Humphreys GW, Quinlan PT, Riddoch MJ. Grouping processes in visual search: Effects with single- and combined-feature targets. J Exp Psychol Gen. 1989;118(3):258–279. doi: 10.1037//0096-3445.118.3.258.

- 20. Rauschenberger R, Yantis S. Perceptual encoding efficiency in visual search. J Exp Psychol Gen. 2006;135(1):116–131. doi: 10.1037/0096-3445.135.1.116.

- 21. Roelfsema PR, Houtkamp R. Incremental grouping of image elements in vision. Atten Percept Psychophys. 2011;73(8):2542–2572. doi: 10.3758/s13414-011-0200-0.

- 22. Wolfe JM, Bennett SC. Preattentive object files: Shapeless bundles of basic features. Vision Res. 1997;37(1):25–43. doi: 10.1016/s0042-6989(96)00111-3.

- 23. Kim JG, Biederman I. Where do objects become scenes? Cereb Cortex. 2011;21(8):1738–1746. doi: 10.1093/cercor/bhq240.

- 24. Roberts KL, Humphreys GW. Action relationships concatenate representations of separate objects in the ventral visual system. Neuroimage. 2010;52(4):1541–1548. doi: 10.1016/j.neuroimage.2010.05.044.

- 25. Kim JG, Biederman I, Juan CH. The benefit of object interactions arises in the lateral occipital cortex independent of attentional modulation from the intraparietal sulcus: A transcranial magnetic stimulation study. J Neurosci. 2011;31(22):8320–8324. doi: 10.1523/JNEUROSCI.6450-10.2011.

- 26. Riddoch MJ, Humphreys GW, Edwards S, Baker T, Willson K. Seeing the action: Neuropsychological evidence for action-based effects on object selection. Nat Neurosci. 2003;6(1):82–89. doi: 10.1038/nn984.

- 27. Altmann CF, Bülthoff HH, Kourtzi Z. Perceptual organization of local elements into global shapes in the human visual cortex. Curr Biol. 2003;13(4):342–349. doi: 10.1016/s0960-9822(03)00052-6.

- 28. Fang F, Kersten D, Murray SO. Perceptual grouping and inverse fMRI activity patterns in human visual cortex. J Vis. 2008;8(7):1–9. doi: 10.1167/8.7.2.

- 29. Kim JG, Biederman I. Greater sensitivity to nonaccidental than metric changes in the relations between simple shapes in the lateral occipital cortex. Neuroimage. 2012;63(4):1818–1826. doi: 10.1016/j.neuroimage.2012.08.066.

- 30. Murray SO, Kersten D, Olshausen BA, Schrater P, Woods DL. Shape perception reduces activity in human primary visual cortex. Proc Natl Acad Sci USA. 2002;99(23):15164–15169. doi: 10.1073/pnas.192579399.

- 31. Woodman GF, Vecera SP, Luck SJ. Perceptual organization influences visual working memory. Psychon Bull Rev. 2003;10(1):80–87. doi: 10.3758/bf03196470.

- 32. Xu Y. Understanding the object benefit in visual short-term memory: The roles of feature proximity and connectedness. Percept Psychophys. 2006;68(5):815–828. doi: 10.3758/bf03193704.

- 33. Brady TF, Tenenbaum JB. A probabilistic model of visual working memory: Incorporating higher order regularities into working memory capacity estimates. Psychol Rev. 2013;120(1):85–109. doi: 10.1037/a0030779.

- 34. Brady TF, Konkle T, Alvarez GA. Compression in visual working memory: Using statistical regularities to form more efficient memory representations. J Exp Psychol Gen. 2009;138(4):487–502. doi: 10.1037/a0016797.

- 35. Olson IR, Jiang Y, Moore KS. Associative learning improves visual working memory performance. J Exp Psychol Hum Percept Perform. 2005;31(5):889–900. doi: 10.1037/0096-1523.31.5.889.

- 36. Hollingworth A. Scene and position specificity in visual memory for objects. J Exp Psychol Learn Mem Cogn. 2006;32(1):58–69. doi: 10.1037/0278-7393.32.1.58.

- 37. Wolfe JM, Alvarez GA, Rosenholtz R, Kuzmova YI, Sherman AM. Visual search for arbitrary objects in real scenes. Atten Percept Psychophys. 2011;73(6):1650–1671. doi: 10.3758/s13414-011-0153-3.

- 38. Chun MM. Contextual cueing of visual attention. Trends Cogn Sci. 2000;4(5):170–178. doi: 10.1016/s1364-6613(00)01476-5.

- 39. Wolfe JM, Võ ML-H, Evans KK, Greene MR. Visual search in scenes involves selective and nonselective pathways. Trends Cogn Sci. 2011;15(2):77–84. doi: 10.1016/j.tics.2010.12.001.

- 40. Brockmole JR, Henderson JM. Recognition and attention guidance during contextual cueing in real-world scenes: Evidence from eye movements. Q J Exp Psychol (Hove). 2006;59(7):1177–1187. doi: 10.1080/17470210600665996.

- 41. Neider MB, Zelinsky GJ. Scene context guides eye movements during visual search. Vision Res. 2006;46(5):614–621. doi: 10.1016/j.visres.2005.08.025.

- 42. Torralba A, Oliva A, Castelhano MS, Henderson JM. Contextual guidance of eye movements and attention in real-world scenes: The role of global features in object search. Psychol Rev. 2006;113(4):766–786. doi: 10.1037/0033-295X.113.4.766.

- 43. Bar M. Visual objects in context. Nat Rev Neurosci. 2004;5(8):617–629. doi: 10.1038/nrn1476.

- 44. Võ ML-H, Wolfe JM. The interplay of episodic and semantic memory in guiding repeated search in scenes. Cognition. 2013;126(2):198–212. doi: 10.1016/j.cognition.2012.09.017.

- 45. Brainard DH. The psychophysics toolbox. Spat Vis. 1997;10(4):433–436.

- 46. Malach R, et al. Object-related activity revealed by functional magnetic resonance imaging in human occipital cortex. Proc Natl Acad Sci USA. 1995;92(18):8135–8139. doi: 10.1073/pnas.92.18.8135.

- 47. Brett M, Anton J-L, Valabregue R, Poline J-B. Region of interest analysis using an SPM toolbox. Neuroimage. 2002;16(2):1140–1141.

- 48. Brady TF, Konkle T, Alvarez GA, Oliva A. Visual long-term memory has a massive storage capacity for object details. Proc Natl Acad Sci USA. 2008;105(38):14325–14329. doi: 10.1073/pnas.0803390105.
