Abstract

We have proposed that haptic activation of the shape-selective lateral occipital complex (LOC) reflects a model of multisensory object representation in which the role of visual imagery is modulated by object familiarity. Supporting this, a previous functional magnetic resonance imaging (fMRI) study from our laboratory used inter-task correlations of blood oxygenation level-dependent (BOLD) signal magnitude and effective connectivity (EC) patterns based on the BOLD signals to show that the neural processes underlying visual object imagery (objIMG) are more similar to those mediating haptic perception of familiar (fHS) than unfamiliar (uHS) shapes. Here we employed fMRI to test a further hypothesis derived from our model, that spatial imagery (spIMG) would evoke activation and effective connectivity patterns more related to uHS than fHS. We found that few of the regions conjointly activated by spIMG and either fHS or uHS showed inter-task correlations of BOLD signal magnitudes, with parietal foci featuring in both sets of correlations. This may indicate some involvement of spIMG in HS regardless of object familiarity, contrary to our hypothesis, although we cannot rule out alternative explanations for the commonalities between the networks, such as generic imagery or spatial processes. EC analyses, based on inferred neuronal time series obtained by deconvolution of the hemodynamic response function from the measured BOLD time series, showed that spIMG shared more common paths with uHS than fHS. Re-analysis of our previous data, using the same EC methods as those used here, showed that, by contrast, objIMG shared more common paths with fHS than uHS. Thus, although our model requires some refinement, its basic architecture is supported: a stronger relationship between spIMG and uHS compared to fHS, and a stronger relationship between objIMG and fHS compared to uHS.

1. INTRODUCTION

Many studies have shown that various visual cortical regions are active during haptic perception (see Amedi et al., 2005a; Sathian & Lacey, 2007; Lacey & Sathian, 2011, for reviews). Among such regions, the most extensively researched is the lateral occipital complex (LOC), a visually shape-selective region in the ventral visual pathway (Malach et al., 1995). The LOC is also haptically shape-selective for both 3D (Amedi et al. 2001; Zhang et al. 2004; Stilla & Sathian 2008) and 2D shapes (Stoesz et al. 2003; Prather, Votaw & Sathian, 2004), but is not activated during object recognition triggered by object-specific sounds (Amedi et al. 2002), unless the task demands shape processing (James et al., 2011) or when auditory object recognition is mediated by a visual-auditory sensory substitution device that converts shape information into an auditory ‘soundscape’ (Amedi et al., 2007); it is hence thought to be a processor of geometric shape.

An intuitive explanation for haptically-evoked activation of visual cortex is mediation by visual imagery (Sathian et al., 1997). The LOC is active during mental imagery of familiar objects previously explored haptically by blind individuals or visually by sighted individuals (De Volder et al. 2001), and during recall of geometric and material object properties from memory (Newman, Klatzky, Lederman & Just, 2005). In addition, individual differences in ratings of the vividness of visual imagery strongly predicted haptic shape-selective activation magnitudes in the right LOC (Zhang et al. 2004). One argument that has been advanced against the visual imagery hypothesis depends on the observation that the congenitally blind show shape-related activity in the same regions as the sighted: since the congenitally blind do not have visual imagery, such imagery, it is argued, cannot account for the activations seen in the sighted (Pietrini et al., 2004). However, the fact that the blind do not have visual imagery during haptic perception is certainly no reason to exclude this possibility in the sighted, particularly in view of the extensive evidence for cross-modal plasticity in studies of visual deprivation (Pascual-Leone, Amedi, Fregni & Merabet, 2005; Sathian, 2005; Sathian & Stilla, 2010). Another argument against the role of visual imagery is that activation magnitude in the LOC during visual imagery was found to be only about 20% of that during haptic shape perception, implying that visual imagery is relatively unimportant to this process (Amedi et al., 2001). However, this study did not monitor performance on the visual imagery task and so the lower LOC activity during imagery could simply mean that participants were not performing the task consistently or were not maintaining their visual images throughout the imagery scan.

We recently tested the visual imagery hypothesis, predicting that, if visual imagery mediated LOC recruitment during haptic shape (HS) perception, these two conditions would activate common areas with activation magnitudes being correlated across conditions, and that the two conditions would also show similar patterns of effective connectivity (EC). In contrast to earlier studies, visual imagery was verified by monitoring participants’ performance on a visual imagery task requiring a same-different judgment. Comparing this visual imagery task to HS perception of familiar objects yielded an extensive network of common regions, including bilateral LOC and a number of prefrontal areas, many of which showed significant, positive inter-task correlations (Lacey et al., 2010). When visual imagery was compared to HS perception of unfamiliar objects, however, there were very few common regions, with only one (in the intraparietal sulcus [IPS]) showing a significant, positive inter-task correlation (Lacey et al., 2010). Examination of EC within the cortical networks involved in visual imagery and HS perception revealed that the visual imagery network strongly resembled the familiar (fHS), but not the unfamiliar, haptic shape (uHS) network (Deshpande et al., 2010a). In sum, we demonstrated that visual imagery is strongly linked to haptic perception of familiar objects but only weakly associated with haptic perception of unfamiliar objects. We also noted that the visual imagery and fHS tasks probably engaged visual object imagery (objIMG) rather than spatial imagery (spIMG) (Kozhevnikov et al., 2002, 2005; Blajenkova et al., 2006; Blazhenkova & Kozhevnikov, 2009), and that the latter might underpin the uHS task.

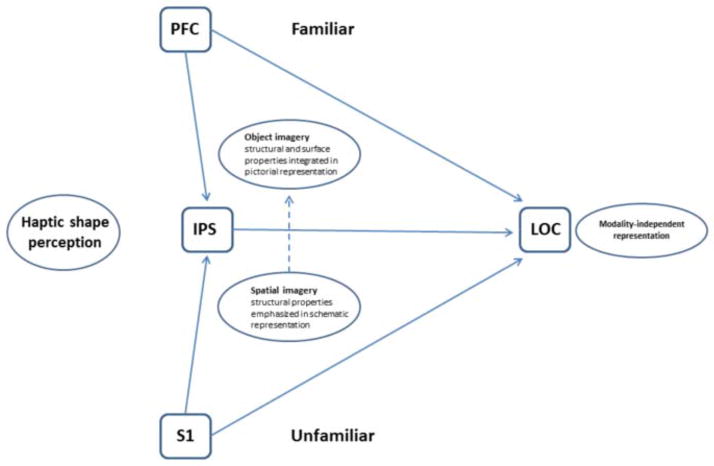

Based on these findings, we proposed a conceptual framework for visuo-haptic object representation that integrates the visual imagery and multisensory approaches (Lacey et al. 2009). In this model, the LOC contains a representation that is independent of the input sensory modality and is flexibly accessible via either bottom-up or top-down pathways, depending on object familiarity (or other task attributes). For familiar objects, global shape can be inferred relatively easily, perhaps from distinctive features that suffice to retrieve a visual image, and so the model emphasizes top-down contributions from parietal and prefrontal regions that drive objIMG during fHS perception. By contrast, because there is no stored representation of an unfamiliar object, its global shape has to be computed by exploring it in its entirety; uHS may therefore rely more on bottom-up pathways from somatosensory cortex to the LOC. Since parietal cortex in and around the IPS is implicated in visuo-haptic perception of both shape and location (Stilla & Sathian 2008; Sathian et al., 2011), the model also implicates these parietal regions in processing the relative spatial locations of object parts to assemble a global object representation from its component parts, facilitated by spIMG.

Here, we tested the aspect of the model dealing with spIMG in relation to fHS and uHS. We compared a spIMG task, in which participants mentally construct a novel shape from its component parts, to fHS and uHS. We predicted that activation magnitude during the spIMG task, particularly in regions in and around the IPS, would correlate across participants with relevant behavioral variables: accuracy on the spIMG task and individual preference for spatial imagery as measured by the Object-Spatial Imagery and Verbal Questionnaire (OSIVQ) (Blazhenkova & Kozhevnikov, 2009). We expected, further, that there would be more common regions, and more inter-task correlations of activation magnitude, between spIMG and uHS, compared to spIMG and fHS. We also hypothesized that the EC network for spIMG would more closely resemble that of uHS than fHS. Our earlier study (Deshpande et al., 2010a) used EC analyses of the measured blood oxygenation level-dependent (BOLD) signal time series, whereas in the present study, the hemodynamic response function (HRF) was deconvolved from the measured BOLD signals to yield inferred neuronal time series (Havlicek et al., 2011) on which the EC analyses were performed. The latter method removes the smoothing introduced by the HRF and has the advantage of being impervious to inter-regional as well as inter-individual variations of the HRF. Both simulations (Ryali, Supekar, Chen & Menon, 2011) and experimental data (David et al., 2008) have demonstrated improvements in Granger causality (GC)-based effective connectivity metrics obtained from deconvolved as compared to raw BOLD data. To allow proper evaluation of our model, we re-analyzed our earlier data (Deshpande et al., 2010a) using the same methods as here. We expected that, in contrast to spIMG, visual object imagery (objIMG) would have more paths in common with fHS than uHS.

2. MATERIALS AND METHODS

2.1 Participants

Twelve neurologically normal participants (6 males, 6 females; mean age 22 years 7 months) took part after giving informed consent. All participants were right-handed, based on the validated subset of the Edinburgh handedness inventory (Raczkowski et al., 1974). We excluded participants with calluses on their hands, and those for whom American English was a second/non-native language, because the control task for the spIMG task relied on verbal stimuli. The Institutional Review Board of Emory University approved all procedures.

2.2 Procedures

Participants performed three tasks, as detailed below: a spIMG task that was completed in one session and two haptic tasks (fHS, uHS), which were completed in either one or two sessions (6 participants each) in order to accommodate individuals’ schedules. The order of the tasks was counterbalanced across participants; within each task, the order of runs was also counterbalanced.

2.2.1 Spatial imagery task

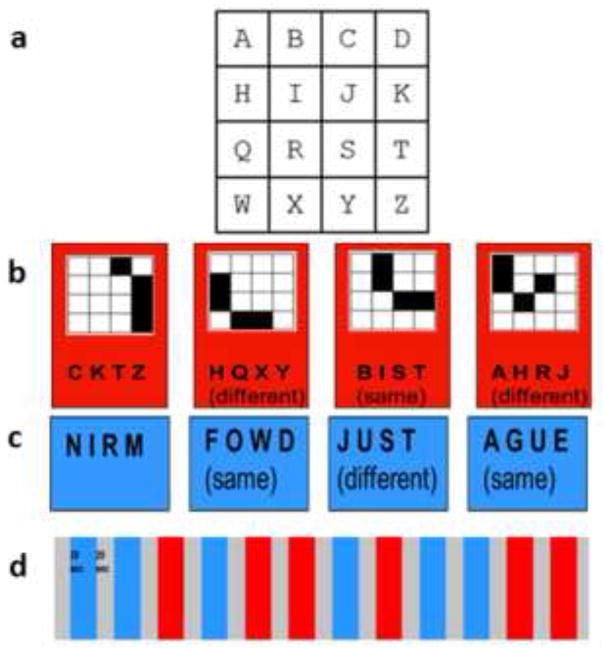

The spIMG task required imagining a previously memorized 4×4 matrix with one letter in each position (Figure 1a). Participants imagined the shape that would result if four cells in the matrix, cued by an auditory four-letter string, were filled in, and performed a one-back same/different discrimination on the imagined shape. Thus, participants had to compute global shape using the spatial relationships between component parts, analogous to the processes hypothesized for uHS in our model (see above). Participants were trained on this task, the day before the fMRI scan for the task. No time limit was set for this and no instructions were provided regarding the method of memorization, to allow for spontaneous use of individually preferred strategies. We first asked participants to memorize the lettered matrix and then, to test for accurate memorization, asked them to identify the four-letter sequences that formed all the horizontal rows, vertical columns, 2×2 squares, and diagonal lines. They next had to describe the shapes represented by the four-letter sequences, read aloud by the experimenter, for all the horizontal rows, vertical columns, and the 2×2 squares in the four corners of the grid (note that none of these shapes appeared in the main task). If errors were made, participants were allowed more time to study the matrix. When all these questions could be answered correctly, participants were given a practice run of the actual task, as described below, to accustom them to generating images cued by four-letter strings at the speed of the actual experiment and without feedback about errors. The shapes in ‘same’ trials were represented by letter sequences that differed from their predecessors (Figure 1b), thus ensuring that participants had to construct mental images of the shapes and could not perform the task solely on the basis of the letters. 
Since changing the letters necessarily changed the location of the shape in the matrix (Figure 1b), participants were instructed that they should make their decisions based on the shapes they constructed, ignoring their locations in the matrix. ‘Different’ shapes were, of course, necessarily represented by different letters (Figure 1b). None of the letter strings resulted in a real word, thus restricting the possibility of verbal strategies, although some were pronounceable non-words, e.g., ‘B-I-S-T’ (Figure 1b). In the control task, participants also heard four-letter strings that made either a real word or a non-word (WnW) and made a one-back same/different word category discrimination (Figure 1c). This control task was essentially the same as that used for objIMG in our previous study (Lacey et al., 2010).

Figure 1.

Stimuli and imaging paradigm for spIMG runs: (a) 4×4 lettered matrix; (b) example 4-trial spIMG block showing letter strings and imagined shapes for ‘same’ and ‘different’ trials; (c) letter strings for the word/non-word task (a ‘same’ response was required if both strings represented the same category, word or non-word); (d) each run contained interleaved spIMG and WnW blocks (see text for details).

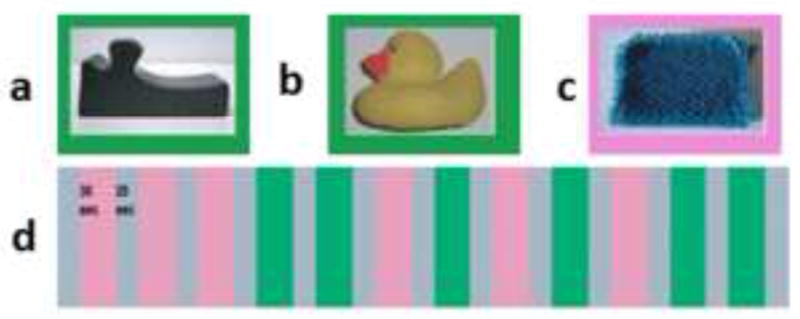

2.2.2 Haptic shape tasks

In both tasks, participants were presented with a series of objects and performed a one-back same/different shape discrimination, as in our previous study (Lacey et al., 2010). In the uHS task, the shape stimuli were 42 three-dimensional, meaningless wooden blocks with smooth, painted surfaces, measuring approximately 5×5×2.5 cm, and varying in shape (e.g., Figure 2a). These abstract objects were previously unfamiliar to participants who thus had no haptic or visual information about them stored in memory. In the fHS task, the shape stimuli were 42 three-dimensional familiar objects, for example, a rubber duck (Figure 2b), a plastic spoon, a toy car, etc. All the familiar objects were MRI-safe or had been rendered so by the removal of all metallic parts. The control task for both fHS and uHS was a haptic texture (HT) task. It comprised a one-back same/different texture discrimination in which the 42 stimuli were 4×4×0.3 cm cardboard substrates onto which textured fabric or upholstery was glued (e.g., Figure 2c). The shape stimuli were all of the same texture in the uHS task; in the fHS task, they were grouped in blocks of similarly textured stimuli, in order to minimize textural cues. The texture stimuli were all of the same shape. Both shape and texture stimuli were consistently presented in a fixed orientation and participants were told not to rotate or re-orient stimuli during exploration.

Figure 2.

Example stimuli and imaging paradigm for HS runs with either (a) unfamiliar or (b) familiar objects and (c) textures; (d) each run contained interleaved HS and HT blocks (see text for details).

2.2.3 Object and spatial imagery preferences

After the final scan session, participants completed the OSIVQ (Blazhenkova & Kozhevnikov, 2009). In order to classify them as either object or spatial imagers, we subtracted the spatial imagery score from the object imagery score for each participant: negative OSIVQ difference scores therefore denoted a preference for spatial imagery while positive scores denoted a preference for object imagery (Lacey, Lin & Sathian, 2011). These difference scores were used to examine correlations between imagery preference and activation magnitude during the spIMG task.
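The difference-score classification can be illustrated with a minimal sketch. The function name, participant IDs, and scores below are hypothetical and serve only to show the sign convention (object score minus spatial score); they are not data from the study.

```python
def imagery_preference(object_score, spatial_score):
    """Return (difference, label), where difference = object - spatial.

    Positive differences denote an object imagery preference and
    negative differences a spatial imagery preference.
    """
    diff = object_score - spatial_score
    if diff > 0:
        label = "object"
    elif diff < 0:
        label = "spatial"
    else:
        label = "none"
    return diff, label


# Hypothetical OSIVQ subscale scores: (object, spatial)
example_scores = {"P01": (3.8, 2.9), "P02": (2.5, 3.6)}

preferences = {
    pid: imagery_preference(obj, spa)
    for pid, (obj, spa) in example_scores.items()
}

for pid, (diff, label) in preferences.items():
    print(pid, round(diff, 2), label)
```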

2.2.4 Functional imaging

Participants lay supine in the scanner. They were blindfolded and instructed to keep their eyes closed. They held a fiberoptic response box in the right hand and used the second and third digits to press appropriate buttons indicating a ‘same’ or ‘different’ response. Auditory stimuli and cues were presented through headphones that attenuated external sounds by 20 dB to muffle scanner noise. The onset of each active block was preceded by an auditory cue (‘grid’ for spIMG, ‘words’ for WnW, ‘shape’ for HS, ‘texture’ for HT) to instruct the participant as to which task was to be performed. Each rest block was preceded by the auditory cue ‘rest’.

In the spatial imagery session, participants completed 4 runs consisting of 6 blocks each of the spIMG and WnW tasks, pseudorandomly distributed through each run, with an equal number of ‘same’ and ‘different’ correct responses in each run (Figure 1d). Each block contained 4 trials (making a total of 96 trials for each task). In each block, participants heard 4 four-letter strings, each lasting 4s, with 3s between strings to respond. Both tasks involved one-back same/different comparisons (either shape or word category) and therefore required three responses in each 28s block (72 responses for each task). There was a 20s rest period at the start and end of each run and between blocks; each run therefore lasted 596s. The stimuli were presented, and responses recorded, via Presentation software (Neurobehavioral Systems Inc., Albany, California).
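The timing figures for the imagery session follow from the block structure, as the short check below illustrates. The variable names are ours; the numerical inputs are those stated in the text.

```python
# Inputs stated in the text for the spatial imagery session
RUNS = 4
BLOCKS_PER_TASK_PER_RUN = 6
TASKS = 2             # spIMG and WnW
TRIALS_PER_BLOCK = 4
STRING_S = 4          # duration of each four-letter string (s)
RESPONSE_S = 3        # interval between strings for responding (s)
REST_S = 20           # rest period (s)

# Each block: 4 trials of (4 s string + 3 s response window)
block_s = TRIALS_PER_BLOCK * (STRING_S + RESPONSE_S)

# 12 active blocks per run, with rest at the start, the end, and between blocks
active_blocks = TASKS * BLOCKS_PER_TASK_PER_RUN
rest_periods = active_blocks + 1
run_s = active_blocks * block_s + rest_periods * REST_S

# One-back comparisons: the first trial of each block needs no response
trials_per_task = RUNS * BLOCKS_PER_TASK_PER_RUN * TRIALS_PER_BLOCK
responses_per_task = RUNS * BLOCKS_PER_TASK_PER_RUN * (TRIALS_PER_BLOCK - 1)

print(block_s, run_s, trials_per_task, responses_per_task)
```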

In the haptic tasks, the procedure was the same as in Lacey et al. (2010). Participants were blindfolded and had their eyes closed during haptic exploration. They were never allowed to see the haptic stimuli. A block design paradigm similar to that for the imagery session was used, except for the following details (Figure 2d). Active blocks were of 30s duration. On each trial, an experimenter standing at the scanner aperture placed a stimulus directly into the participant’s right hand; the stimulus was then explored for 4s, after which the object was removed. There was a 1s inter-stimulus interval during which responses were made using the left hand. Each type of active block was repeated six times, giving 36 shape and 36 texture trials in each run. Two runs each were performed for uHS and fHS. Thus, there were 72 shape and 72 texture trials in each 2-run sequence, with an approximately equal number of ‘same’ and ‘different’ correct responses in each run. The sequence and timing of object presentation and exploration were guided by preprogrammed instructions displayed to the experimenter on a computer screen using Presentation software.

2.2.5 MR scanning

MR scans were performed on a 3 Tesla Siemens Trio whole body scanner (Siemens Medical Solutions, Malvern, PA), using a 12-channel head coil. T2*-weighted functional images were acquired using a single-shot, gradient-recalled, echoplanar imaging (EPI) sequence for BOLD contrast. For all functional scans, 29 axial slices of 4mm thickness were acquired using the following parameters: repetition time (TR) 2000ms, echo time (TE) 30ms, field of view (FOV) 220mm, flip angle (FA) 90°, in-plane resolution 3.4×3.4mm, and in-plane matrix 64×64. High-resolution 3D anatomic images were acquired during both haptic and imagery sessions, using an MPRAGE sequence (TR 2300ms, TE 3.9ms, inversion time 1100ms, FA 8°) comprising 176 sagittal slices of 1mm thickness (FOV 256mm, in-plane resolution 1×1mm, in-plane matrix 256×256). Once magnetic stabilization was achieved in each run, the scanner triggered the computer running Presentation software so that the sequence of experimental trials was synchronized with scan acquisition. The order of imagery and haptic sessions was counterbalanced across participants and genders.

2.2.6 Image processing and analysis

Image processing and analysis was performed using BrainVoyager QX v1.6.3 and v1.9.10 (Brain Innovation, Maastricht, Netherlands). In individual analysis, each participant’s functional runs were real-time motion-corrected utilizing Siemens 3D-PACE (prospective acquisition motion correction). Functional images were preprocessed utilizing sinc interpolation for slice scan time correction, trilinear-sinc interpolation for intra-session alignment of functional volumes, and high-pass temporal filtering to 3 cycles per run to remove slow drifts in the data without affecting task-related effects (since there were 6 blocks of each task per run). Anatomic 3D images were processed, co-registered with the functional data, and transformed into Talairach space (Talairach & Tournoux, 1988).

For group analysis, the transformed data were spatially smoothed with an isotropic Gaussian kernel (full-width half-maximum 4mm). The 4mm filter is within the 3–6mm range recommended to reduce the possibility of blurring together activations that are in fact anatomically and/or functionally distinct (White et al., 2001). This is particularly important given our hypothesis of inter-task overlapping activations. This filter size is comparable to that used in other studies of the LOC (Amedi et al., 2005b [4mm]; James et al., 2002 [6mm]; Stilla and Sathian, 2008 [4mm]). Runs were normalized using the ‘z-baseline’ option in BrainVoyager which uses time-points where the predictor values are at, or close to, zero (the default criterion of ≤0.1 was used); this reduces inter-subject variance. Statistical analysis of group data used general linear models (GLM) treating participants as a random factor (so that the degrees of freedom equal n-1, i.e. 11), followed by pairwise contrasts (spIMG – WnW and u/fHS – HT). Correction for multiple comparisons was achieved using the false discovery rate (FDR) method within a group whole brain mask (12 participant average) (spIMG task, FDR q<0.001, t11>6.55; HS tasks, FDR q<0.02, t11>5.36); different FDR thresholds were used for the spIMG and HS tasks in order to produce comparably focal activations and thus allow better anatomical specification. Activations were localized with respect to 3D cortical anatomy with the help of an MRI atlas (Duvernoy, 1999).

In order to test for the existence of significant inter-task overlaps in the spIMG and u/fHS-evoked activations in each experiment, we carried out conjunction analyses to find voxels active on both the spIMG > WnW and the u/fHS > HT contrasts. This is a rigorous test of overlap, requiring the presence of significant activations in both spIMG and u/fHS tasks. Correction of these conjunction analyses for multiple comparisons was performed by imposing a threshold for the volume of clusters comprising contiguous voxels that passed a voxel-wise threshold of p<0.01, within the group whole brain mask, using a 3D extension (implemented in BrainVoyager QX) of the Monte Carlo simulation procedure described by Forman et al. (1995), with 1000 iterations. For the spIMG-uHS conjunction, the cluster threshold was 43 voxels and the FWHM estimate was 2.42 voxels; for the spIMG-fHS conjunction, the cluster threshold was 41 voxels and the FWHM estimate was 2.38 voxels (all voxel numbers refer to 3×3×3 mm functional voxels as resampled in BrainVoyager). To examine the relationships between activations evoked by the spIMG and u/fHS tasks, we created regions of interest (ROIs) centered on the center of gravity of the conjoint activation t-maps. The ROIs were constrained to be no larger than 125 mm³ (5×5×5 mm cube). Within these ROIs, the z-baseline normalized beta weights for the spIMG and u/fHS conditions (relative to baseline) were determined for each participant. Taking these beta weights as indices of activation strengths, inter-task, across-subject correlations were run on them. 
Note that, although the correlations were examined on conjointly active ROIs, the problem of circularity (Kriegeskorte, Lindquist, Nichols, Poldrack & Vul, 2010) is avoided because these correlations were based on inter-individual variance in activation magnitude relative to baseline, whereas the activation analyses were based on regions commonly active across individuals, in both tasks relative to their respective controls.
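As an illustration of the inter-task, across-subject correlation for a single ROI, the following minimal sketch computes a Pearson correlation between two sets of per-participant beta weights and converts it to a t statistic with n − 2 degrees of freedom. The beta values are invented placeholders, not data from this study, and the helper names are ours.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def r_to_t(r, n):
    """t statistic for a correlation r from n observations (df = n - 2)."""
    df = n - 2
    return r * math.sqrt(df / (1.0 - r ** 2))


# Hypothetical beta weights for one ROI, one value per participant (n = 12)
spimg_betas = [0.9, 1.4, 0.7, 1.8, 1.1, 0.5, 1.6, 1.2, 0.8, 1.9, 1.0, 1.3]
uhs_betas = [1.1, 1.5, 0.9, 1.6, 0.8, 0.7, 1.9, 1.0, 1.2, 1.7, 0.9, 1.4]

r = pearson_r(spimg_betas, uhs_betas)
t = r_to_t(r, len(spimg_betas))
print(round(r, 2), round(t, 2))
```

Each participant contributes one pair of values per ROI, so the correlation reflects inter-individual variance in activation magnitude rather than the group-level contrast used to define the ROI.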

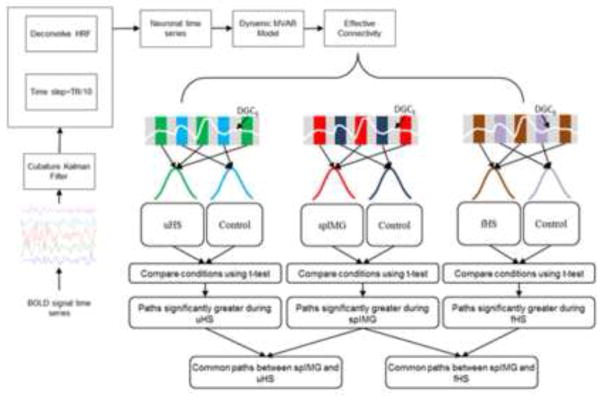

2.2.7 EC analyses

Granger causality analysis (GCA) is an exploratory method that can be used to study directional influences between different brain regions. The principle underlying GCA is that, if past values of time series A help predict the future values of time series B, then a directional causal influence from time series A to time series B can be inferred (Granger, 1969). Numerous earlier studies from our group and others (Deshpande, LaConte, James, Peltier, & Hu, 2009; Deshpande, Hu, Stilla, & Sathian, 2008; Abler, et al., 2006; Roebroeck, Formisano, & Goebel, 2005; Sathian, et al., 2011) characterized the Granger causal relationship between the BOLD signal time series from different brain regions using multivariate autoregressive (MVAR) models. However, estimating GC metrics from raw BOLD signal time series can potentially lead to confounded causality measures, due to the spatial variability of the HRF (Deshpande, Sathian, & Hu, 2010b; David, et al., 2008). The obtained connectivity metrics can be improved by deconvolving the HRF from the raw BOLD signal time series and using the deconvolved time series to obtain the GC metrics. Such blind hemodynamic deconvolution removes the smoothing effect of the HRF and also its inter-subject and inter-regional variability (Handwerker, Ollinger, & D’Esposito, 2004). Both simulations (Ryali et al., 2011) and experimental data (David et al., 2008) have demonstrated improvements in GC-based effective connectivity metrics obtained from deconvolved as compared to raw BOLD data. Therefore, in this study the BOLD signal time series from selected ROIs were deconvolved using a cubature Kalman filter-based blind method (Havlicek et al., 2011). Then the dynamic MVAR model (of order 5, obtained from the Akaike (Akaike, 1974) and Bayesian (Schwarz, 1978) information criteria) was applied to the set of hidden neuronal variables obtained after deconvolution. 
Since the independent variables in our model were not significantly correlated with the error term, there was no endogeneity bias. Also, the deconvolved time series were input to the model without having to artificially slice the time series, by permitting the MVAR model coefficients to vary as a function of time to obtain dynamic GC metrics. The resulting connectivity time series were then time-sliced to obtain condition-specific EC values. Both recent simulations (Wen, Rangarajan, & Ding, 2013) and experimental results (Katwal, Gore, Gatenby, & Rogers, 2013) indicate that MVAR modeling methodology is very reliable for making inferences about path directionality and significance. Further details of the method have been presented earlier (Sathian, Deshpande & Stilla, 2013).
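The principle underlying GCA can be illustrated with a deliberately simplified sketch. This is not the dynamic, order-5 MVAR model applied to deconvolved time series in this study: it assumes a static, bivariate, order-1 autoregressive model fitted by ordinary least squares to simulated data in which series A drives series B at a lag of one sample. All function names and parameter values are illustrative assumptions.

```python
import math
import random


def resid_var_one(y, x):
    """Residual variance of y regressed on a single regressor x (no intercept)."""
    b = sum(xi * yi for xi, yi in zip(x, y)) / sum(xi * xi for xi in x)
    return sum((yi - b * xi) ** 2 for xi, yi in zip(x, y)) / len(y)


def resid_var_two(y, x1, x2):
    """Residual variance of y regressed on x1 and x2 (no intercept)."""
    s11 = sum(a * a for a in x1)
    s22 = sum(b * b for b in x2)
    s12 = sum(a * b for a, b in zip(x1, x2))
    s1y = sum(a * c for a, c in zip(x1, y))
    s2y = sum(b * c for b, c in zip(x2, y))
    det = s11 * s22 - s12 * s12
    b1 = (s1y * s22 - s12 * s2y) / det  # solve the 2x2 normal equations
    b2 = (s11 * s2y - s12 * s1y) / det
    return sum((c - b1 * a - b2 * b) ** 2 for a, b, c in zip(x1, x2, y)) / len(y)


def granger(source, target):
    """ln(restricted / full residual variance) for an order-1 model of target."""
    y, own_past, src_past = target[1:], target[:-1], source[:-1]
    restricted = resid_var_one(y, own_past)        # target's own past only
    full = resid_var_two(y, own_past, src_past)    # plus the source's past
    return math.log(restricted / full)


# Simulated data (assumption): A is white noise, and B depends on A one step back
rng = random.Random(0)
n = 2000
a = [rng.gauss(0, 1) for _ in range(n)]
b = [0.0] + [0.8 * a[t - 1] + rng.gauss(0, 1) for t in range(1, n)]

gc_a_to_b = granger(a, b)  # expected to be clearly positive
gc_b_to_a = granger(b, a)  # expected to be near zero
print(gc_a_to_b, gc_b_to_a)
```

Adding the past of A reduces the prediction error for B, whereas adding the past of B does little for A, which is the asymmetry that licenses a directional inference from A to B.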

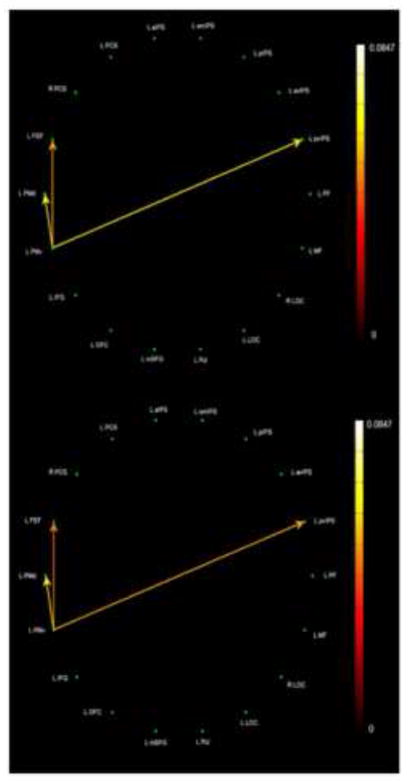

BOLD signal time series were extracted from 31 ROIs for each participant. The rationale for ROI selection is given in the Results. The dynamic GC metrics obtained across all the participants were populated into different samples corresponding to the task of interest (uHS, fHS or spIMG) and corresponding control conditions (i.e. the time-slicing referred to above). Empirical chi-squared tests of independence confirmed that connectivity values for any given path in the experimental and control conditions were independent (all p values < .05). t-tests were then performed between the condition-specific samples to obtain paths significantly stronger during the tasks of interest (relative to the corresponding control tasks), and thence, paths which were common between the spIMG & uHS tasks and the spIMG & fHS tasks were deduced. Figure 3 shows a schematic of the connectivity analysis.
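The final step, deducing the paths shared between tasks, amounts to intersecting the sets of directed paths that were significantly stronger in each task than in its control. The sketch below assumes that significance testing has already been done; the ROI labels are generic placeholders and do not correspond to results of this study.

```python
# Hypothetical sets of directed (source, target) paths that were significantly
# stronger during each task than during its control task
spimg_paths = {("ROI_A", "ROI_B"), ("ROI_C", "ROI_D"), ("ROI_E", "ROI_A")}
uhs_paths = {("ROI_C", "ROI_D"), ("ROI_E", "ROI_A"), ("ROI_F", "ROI_B")}
fhs_paths = {("ROI_A", "ROI_B"), ("ROI_G", "ROI_B")}

# Paths common to two tasks; direction matters, so (A, B) differs from (B, A)
common_spimg_uhs = spimg_paths & uhs_paths
common_spimg_fhs = spimg_paths & fhs_paths

print(sorted(common_spimg_uhs))
print(sorted(common_spimg_fhs))
```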

Figure 3.

Schematic showing the steps in the EC analyses.

3. RESULTS

3.1 Psychophysics

In the spIMG task, mean (± SEM) accuracy was 81.2 ± 3.4% with a mean reaction time (RT) of 1481 ± 82ms. In the WnW task, mean accuracy was 81.8 ± 2.6% with a mean RT of 1244 ± 72ms. Paired t-tests (two-tailed) showed a significant difference between these tasks in RTs (t11 = 3.35, p = .006) but not accuracy (t11 = −.18, p = .86). Mean accuracy for the uHS task was 91.2 ± 1.4% and for the HT task 93.4 ± 1.3%; this difference was significant (t11 = −2.99, p = .01). For the fHS task, mean accuracy was 98 ± .7% and for the HT task 97.3 ± 1%; this difference was not significant (t11 = .62, p = .55). Although there were small differences in accuracy between tasks, accuracy was excellent in all tasks. Since the haptic trials involved exploration of objects handed to participants by an experimenter, haptic RTs were not measured.

Since spIMG RTs and uHS accuracy showed significant differences compared to the respective control tasks, we ran correlation maps (p <.05; equivalent to r10 > .58; cluster-corrected within the group whole brain mask) between these psychophysical measures and activation magnitude (z-baseline normalized beta value) in the corresponding task. For spIMG RTs, only cerebellar/pontine regions showed significant correlations (cluster threshold = 194 voxels; FWHM = 1.89) (Supplementary Table 1); none of these overlapped with the cerebellar regions seen on the spIMG > WnW contrast. For uHS accuracy, there were no significantly correlated regions at the chosen statistical threshold. Thus, we conclude that the observed differences in task performance are unlikely to confound the main activation analyses. (Although accuracy on fHS and spIMG was not significantly different from their respective control tasks, we ran the correlation maps for completeness – Supplementary Table 2).
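The stated equivalence between the p threshold and the correlation threshold can be checked from the relationship between r and t. The worked example below assumes a two-tailed test at α = .05 with df = n − 2 = 10, for which the tabulated critical value is t ≈ 2.228.

```latex
r_{\mathrm{crit}} = \frac{t_{\mathrm{crit}}}{\sqrt{t_{\mathrm{crit}}^{2} + df}}
                  = \frac{2.228}{\sqrt{2.228^{2} + 10}}
                  \approx 0.576
```

Rounded to two decimal places, this gives the threshold of .58 quoted above.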

For the HS and HT tasks, there were no blocks in which all trials were associated with incorrect responses; there were 7 such blocks for the spIMG task and 4 for the WnW task. Since these constituted only 2.4% and 1.3%, respectively, of the total number of blocks in these tasks (288 across the entire experiment) and since all participants reported actively trying to solve the tasks, these blocks were not removed for the analyses.

3.2 Activations during spIMG

(See Appendix for all anatomical abbreviations). Relative to the WnW task, the spIMG task activated the right LOC and multiple bilateral foci in the IPS, as well as other parietal and frontal foci. These activations are detailed in Supplementary Table 3; representative activations are displayed in Supplementary Figure 1.

3.3 Activations during HS

Relative to the HT task, the uHS task activated the right LOC while the fHS task activated the LOC bilaterally. Both HS tasks activated multiple bilateral parietal foci in the IPS, although these were fewer and smaller for fHS. The uHS task additionally activated the PCG and PCS of left primary somatosensory cortex (S1), consistent with participants exploring objects with their right hand, while fHS activated several right frontal regions. Activations on the HS tasks are detailed in Supplementary Tables 4 (uHS) and 5 (fHS) with representative activations displayed in Supplementary Figures 2 and 3, respectively.

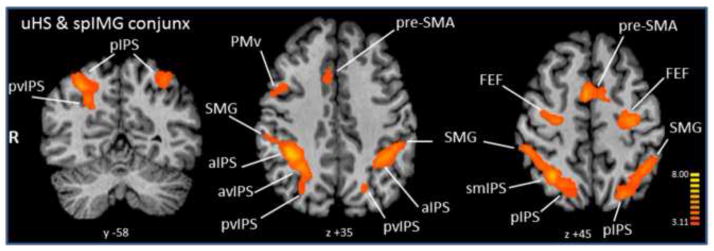

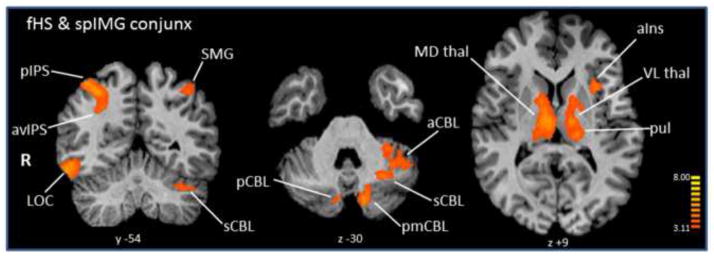

3.4 Conjoint activations during spIMG and HS

Conjunction analyses showed common areas between spIMG and both HS tasks in multiple bilateral IPS foci as well as bilateral SMG and FEF, and right premotor areas. The spIMG-uHS conjoint activations are listed in Table 1, with representative areas shown in Figure 4. The fHS conjunction with spIMG (Table 2, Figure 5) was distinguished by the presence of right LOC and multiple bilateral cerebellar and thalamic areas that were not seen in the spIMG-uHS conjunction. Selected BOLD signal time-courses from each task are shown in Supplementary Figure 4.

Table 1.

Conjunction analysis of activations during spIMG and uHS. R, L: right, left; x, y, z: Talairach coordinates for the centers of gravity of the activations; r, p: correlation coefficients and associated p values for correlations between beta weights of spIMG- and HS-evoked activations relative to baseline (only significant correlations shown).

| Region | x | y | z | r | p |
|---|---|---|---|---|---|
| pre-SMA | 1.7 | 7.9 | 44.4 | | |
| R FEF* | 26.1 | −9.4 | 53.6 | .62 | .03 |
| R PMv | 47.2 | 0.3 | 29.6 | | |
| R SMG | 42.5 | −40.7 | 43.1 | | |
| R smIPS | 29.3 | −54.3 | 43.6 | | |
| R aIPS | 33.9 | −41.5 | 34.3 | | |
| R pIPS | 19.8 | −64.0 | 46.3 | | |
| R avIPS | 25.6 | −56.0 | 32.9 | | |
| R pvIPS* | 26.7 | −71.4 | 30.5 | .64 | .02 |
| L FEF | −26.5 | −11.5 | 51.3 | | |
| L SMG | −40.5 | −48.1 | 43.5 | | |
| L aIPS | −37.6 | −43.7 | 35.5 | | |
| L pIPS | −28.9 | −64.2 | 46.9 | | |
| L pvIPS | −20.1 | −68.4 | 39.0 | | |

*ROIs included in EC analyses.

Figure 4.

Conjunction analysis of activations during spIMG and uHS. Talairach plane given below each slice; radiologic convention used (right hemisphere on left). Color t-scale on right.

Table 2.

Conjunction analysis of activations during spIMG and fHS. (Key as for Table 1)

| Region | x | y | z | r | p |
|---|---|---|---|---|---|
| pre-SMA | 2.6 | 11.9 | 44.5 | | |
| R aCing | 9.3 | 15.2 | 35.9 | | |
| R SPG | 6.8 | −62.3 | 55.8 | | |
| R FEF | 24.1 | −7.2 | 53.7 | | |
| R SMG (2 foci) | 46.3 | −36.0 | 41.5 | | |
| | 52.3 | −33.5 | 33.4 | | |
| R aIPS | 34.3 | −41.0 | 36.2 | | |
| R pIPS | 27.9 | −59.1 | 48.3 | .60 | .03 |
| R AG* | 27.8 | −61.3 | 38.7 | .62 | .03 |
| R PMd | 32.0 | −4.1 | 39.4 | | |
| R PMv | 41.0 | 3.1 | 28.3 | | |
| R IFG | 40.0 | 20.6 | 22.7 | | |
| R MD thal | 6.0 | −15.3 | 11.9 | | |
| R VL thal | 8.1 | −7.2 | 6.1 | | |
| R LP thal | 11.2 | −16.6 | 14.0 | | |
| R pul | 13.9 | −22.4 | 11.3 | | |
| R LOC | 49.6 | −50.7 | −11.7 | | |
| L FEF | −25.5 | −9.6 | 51.8 | | |
| L smIPS/LaIPS | −32.2 | −59.5 | 51.5 | | |
| L aIPS | −39.7 | −41.5 | 36.8 | | |
| L SMG | −42.0 | −49.2 | 46.0 | | |
| L aIns | −29.5 | 12.4 | 8.0 | | |
| L VL thal | −11.2 | −13.5 | 7.1 | | |
| L LP thal | −11.9 | −15.6 | 12.0 | | |
| L pul* | −15.1 | −23.2 | 11.7 | .76 | .004 |
| CBL vermis | −0.4 | −65.2 | −15.8 | | |
| L aCBL* (2 foci) | −30.9 | −40.5 | −28.7 | .72 | .008 |
| | −38.1 | −50.3 | −28.2 | | |
| L sCBL* | −29.4 | −59.2 | −27.0 | .58 | .04 |
| L pmCBL | −14.3 | −73.4 | −28.4 | | |
| R pCBL | 6.2 | −74.6 | −26.7 | | |

Figure 5.

Conjunction analysis of activations during spIMG and fHS. Details as in Figure 4.

3.5 Inter-task correlations

We examined across-subject correlations of activation magnitude between spIMG and each of the HS tasks in the ROIs revealed by the corresponding conjunction analysis. As shown in Tables 1 & 2, relatively few of the conjunction foci demonstrated significant inter-task correlations (all positive), with a few more between spIMG and fHS (Table 2) than between spIMG and uHS (Table 1). Parietal cortical foci featured in both sets of correlations: the right pvIPS for spIMG-uHS and the right pIPS and AG for spIMG-fHS, while there were no inter-task correlations for the LOC in either case. These results suggest some involvement of spIMG in both fHS and uHS, which is not in accordance with our model.
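As a minimal sketch of the across-subject correlation described above, the following computes a Pearson coefficient between per-subject activation magnitudes for two tasks in a single ROI. The function name `pearson_r` and the beta values are hypothetical and serve only to illustrate the calculation; they are not data from this study, and the actual analysis software is not specified in this section.

```python
import math

def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences of per-subject values."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two equal-length sequences with at least 3 values")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when one sequence is constant")
    return sxy / math.sqrt(sxx * syy)

# Hypothetical beta weights (task vs. baseline) for one ROI, one value per subject.
# These numbers are illustrative placeholders, NOT values from the study.
toy_spimg_betas = [0.10, 0.35, 0.22, 0.48, 0.15, 0.60, 0.30, 0.41, 0.05, 0.52, 0.27, 0.38]
toy_hs_betas = [0.20, 0.30, 0.25, 0.55, 0.10, 0.50, 0.45, 0.35, 0.15, 0.40, 0.20, 0.50]

r = pearson_r(toy_spimg_betas, toy_hs_betas)
print(f"toy inter-task correlation: r = {r:.2f}")
```

Each ROI from a conjunction analysis would be treated in the same way, with one pair of values per participant.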

3.6 Correlations between spatial imagery activations and behavioral variables

We ran correlation maps (p < .05; equivalent to r(10) > .58; cluster-corrected within the group whole brain mask) between the activation magnitudes from the spIMG > baseline contrast (z-baseline normalized beta value) and accuracy on the spIMG and HS tasks as well as the OSIVQ difference scores. As predicted, spIMG accuracy was positively correlated with activation magnitudes in bilateral parietal foci: avIPS, POF and SPG, as well as bilateral aCa and RSP (Supplementary Table 2a); there were no negatively correlated areas at the chosen statistical threshold. Activation magnitudes from the fHS > baseline contrast were positively correlated with accuracy in the left PCS, SMG, and left parietal foci (Supplementary Table 2b); there were no negatively correlated areas at the chosen statistical threshold. There were no correlated areas for the uHS task. OSIVQ difference scores were negatively correlated with the activation magnitudes of foci in the caudate head and putamen bilaterally (Supplementary Table 6); since the difference scores run from negative (spatial imagery preference) to positive (object imagery preference) values, this means that activation magnitudes decreased as the preference for spatial imagery decreased and that for object imagery increased. No areas were positively correlated with OSIVQ difference scores at the chosen statistical threshold, and notably, there were no significant correlations in IPS regions (contrary to our prediction).
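The equivalence between the p threshold and the correlation threshold can be checked with the standard conversion from a critical t value to a critical r. The sketch below assumes a two-tailed test with 10 degrees of freedom (the two-tailed assumption is ours; the section states only the degrees of freedom), and the critical t value of 2.228 is taken from a standard t table. The function name `critical_r` is hypothetical.

```python
import math

def critical_r(t_crit, df):
    """Smallest |r| reaching significance, given the critical t for df degrees of freedom."""
    return t_crit / math.sqrt(t_crit ** 2 + df)

# Assumption: two-tailed alpha = .05 with df = 10; 2.228 is the standard table value.
T_CRIT_DF10 = 2.228
r_crit = critical_r(T_CRIT_DF10, 10)
print(f"critical r for df = 10: {r_crit:.3f}")
```

Under these assumptions the result is approximately .576, which rounds to the threshold of .58 quoted above.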

3.7 EC analyses

After eliminating duplicate ROIs and those with non-selective timecourses (i.e., those equally active during the task of interest and its control task), the 31 ROIs entered into the EC analyses were: 6 showing a positive inter-task correlation of activation magnitudes between spIMG and u/fHS (Tables 1 and 2); 2 each from the contrasts fHS > uHS and uHS > fHS (Supplementary Table 7a & b), of which one each also showed an inter-task correlation with spIMG; 5 from the conjunction of uHS and fHS (Supplementary Table 7c); 9 regions in which accuracy on the spIMG task was positively correlated with activation magnitudes from the contrast spIMG > baseline (Supplementary Table 2a); 4 in which OSIVQ difference scores were negatively correlated with that contrast (Supplementary Table 6); one L aIPS region in which accuracy on the fHS task was positively correlated with activation magnitudes from the contrast fHS > baseline (Supplementary Table 2b); and bilateral LOC for the spIMG > WnW contrast (the right LOC from Supplementary Table 3; the left LOC did not survive correction but was included because of its centrality in the model). Paths that were significantly stronger (p < 0.05) in the tasks of interest (spIMG, fHS, and uHS) than in their respective control conditions are shown in Supplementary Figures 5–7. Since the connectivities were themselves obtained from a multivariate model, the connectivities of different paths at any given instant are not independent (note that the connectivities obtained from different conditions for the same paths do form independent samples, as discussed above). Therefore, the tests performed on different paths are not independent; hence, no multiple comparisons correction was performed.
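The ROI tally above can be verified with simple arithmetic. The sketch below assumes that the listed category counts are those remaining after duplicate removal, which is our reading of the text; the dictionary keys are shorthand labels of our own, not terms used in the analysis.

```python
# Category counts as listed in the text; labels are hypothetical shorthand.
roi_counts = {
    "inter-task correlation, spIMG with uHS/fHS": 6,
    "contrast fHS > uHS": 2,
    "contrast uHS > fHS": 2,
    "conjunction of uHS and fHS": 5,
    "spIMG accuracy correlation": 9,
    "OSIVQ difference-score correlation": 4,
    "fHS accuracy correlation (L aIPS)": 1,
    "bilateral LOC, spIMG > WnW": 2,
}

total_rois = sum(roi_counts.values())
print(total_rois)
```

On this reading, the categories sum to the 31 ROIs stated in the text.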

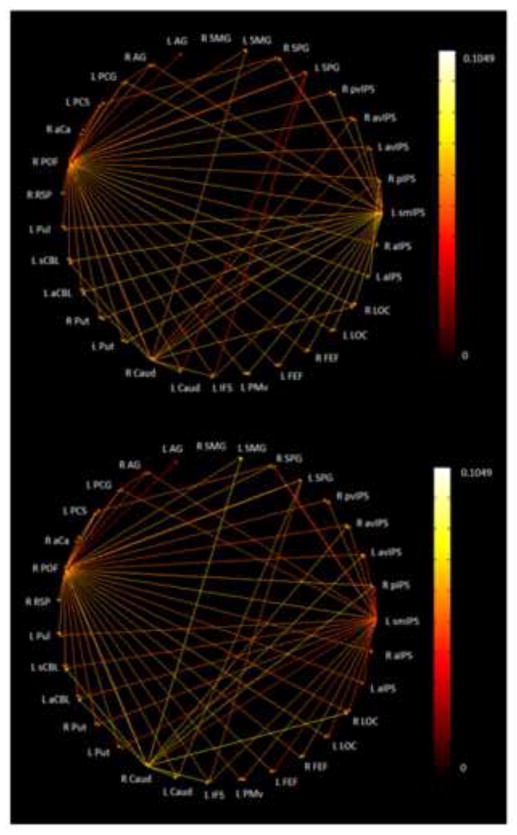

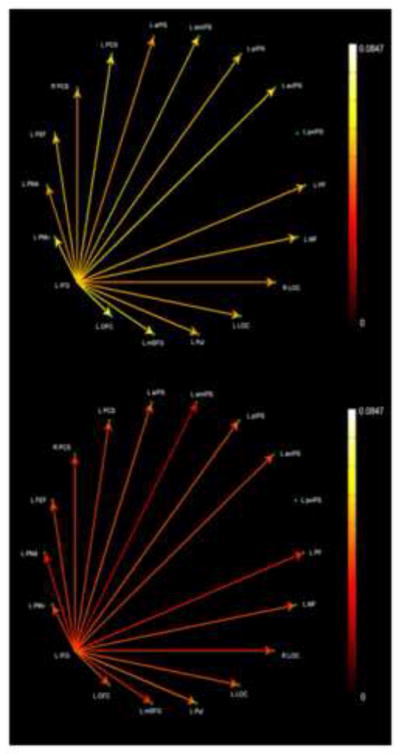

Figure 6 shows the paths which were significantly stronger (p<0.05) during both the spIMG and uHS tasks as compared to their respective control tasks while Fig. 7 shows the same for the spIMG and fHS tasks. Note that, in each case, the network is virtually identical whether paths are represented by spIMG or the HS task path weights. It is apparent that more significant paths were common between spIMG and uHS than between spIMG and fHS. In the EC network for spIMG and uHS (Figure 6), the main outputs were from the right POF and the left smIPS, both of which drove bilateral LOC, as predicted by the role for parietal cortical foci in our model. S1 ROIs (left PCG, PCS), in addition to input from one or other of the main drivers, were also driven by right SPG (with a path going to left PCS), and a path from the left PCS targeted early visual cortex (right aCa) while the left PCG projected to the right AG. The right caudate focus projected to the right LOC, left IFS, and multiple parietal and subcortical foci while the left SPG drove the left caudate and IFS. By contrast, the common paths for spIMG and fHS (Figure 7) were dominated by output from the right POF, which projected to every other ROI except the right SMG. The left smIPS in this case only projected to two other parietal foci, while the left PCG projected to the left caudate. Notably, the right AG was the only parietal focus demonstrating an inter-task correlation (between spIMG and fHS) that also featured distinctively in the EC networks (receiving a projection from the left PCG in the spIMG and uHS networks, but not the fHS network). Thus, overall, the uHS and spIMG networks shared more interactions between parietal cortical foci, the LOC and S1 compared to the paths shared by the fHS and spIMG networks: this is broadly consistent with our model.
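The notion of a path being common to two tasks can be illustrated as a set intersection over directed (source, target) pairs, each set holding the paths that were significantly stronger than in the corresponding control task. This is a toy sketch only: the node labels A to E and all path sets are hypothetical placeholders, not the networks of Figures 6 and 7, and the function name `common_paths` is our own.

```python
def common_paths(sig_task_a, sig_task_b):
    """Directed paths that are significant in both tasks (set intersection)."""
    return set(sig_task_a) & set(sig_task_b)

# Hypothetical sets of significant directed paths over placeholder nodes A-E.
toy_spimg = {("A", "B"), ("A", "C"), ("B", "D"), ("E", "D")}
toy_uhs = {("A", "B"), ("A", "C"), ("B", "D"), ("C", "E")}
toy_fhs = {("A", "B"), ("D", "E")}

shared_with_uhs = common_paths(toy_spimg, toy_uhs)
shared_with_fhs = common_paths(toy_spimg, toy_fhs)

# Direction matters: ("E", "D") and ("D", "E") are different paths.
print(len(shared_with_uhs), len(shared_with_fhs))
```

In this toy case the first pair of tasks shares three paths and the second shares one, mirroring the kind of comparison made between the two common networks.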

Figure 6.

Paths significantly stronger during both spIMG and uHS tasks as compared to the corresponding control task: (top) edge color represented by spIMG task path weights; (bottom) edge color represented by uHS task path weights.

Figure 7.

Paths significantly stronger during both spIMG and fHS tasks as compared to the corresponding control task: (top) edge color represented by spIMG task path weights; (bottom) edge color represented by fHS task path weights.

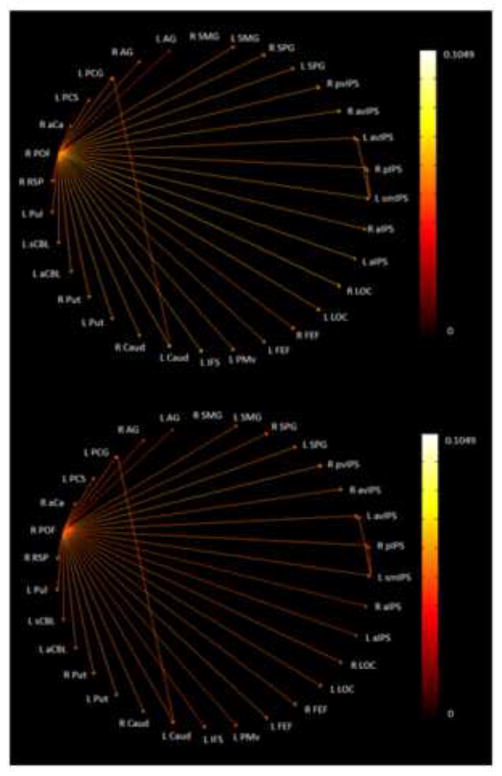

In order to compare all aspects of the model on the same basis, we re-analyzed the data from Deshpande et al. (2010a) using the same EC methods described here. Fig. 8 shows the rather sparse network common to objIMG and uHS in which L PMv is the single source, driving only a limited number of targets: L PMd, FEF, and pvIPS. Fig. 9 shows the common objIMG and fHS network; here, L IFG is the single source but drives every other ROI except for pvIPS. Thus, objIMG and fHS clearly engaged a more extensive common network (Fig. 9) compared to the sparse network that was shared by objIMG and uHS (Fig. 8). By contrast, spIMG and uHS engaged a more extensive common network (Fig. 6) than that seen for spIMG and fHS (Fig. 7). These asymmetries are in keeping with our model.

Figure 8.

Paths significantly stronger during both objIMG and uHS tasks as compared to the corresponding control task: (top) edge color represented by objIMG task path weights; (bottom) edge color represented by uHS task path weights.

Figure 9.

Paths significantly stronger during both objIMG and fHS tasks as compared to the corresponding control task: (top) edge color represented by objIMG task path weights; (bottom) edge color represented by fHS task path weights.

4. DISCUSSION

Our proposed model of multisensory object representation conceptualizes haptic activation of the visually shape-selective LOC as being associated with objIMG during fHS, but depending on spIMG during uHS (Lacey et al., 2009). Consistent with this model, we previously found that there were more conjointly active regions, with more inter-task correlations of activation magnitude, for objIMG and fHS than there were for objIMG and uHS (Lacey et al., 2010). In addition, objIMG and fHS shared similar effective connectivity networks that were markedly different from the network involved in uHS (Deshpande et al., 2010a). Here we tested whether spIMG and uHS have a similar preferential relationship to that seen for objIMG and fHS.

Activation analyses of the present study showed that there were more conjointly active regions (31 vs 14), and more inter-task correlations (5 vs 2), for spIMG and fHS (Table 2) than for spIMG and uHS (Table 1) although 3 of the 5 correlated spIMG-fHS regions were subcortical or cerebellar. Moreover, parietal foci featured in both sets of correlations (1 of the 2 cortical regions in each case). Thus, while the activation analyses in our previous study showed clear evidence for a preferential relationship between objIMG and fHS (Lacey et al., 2010), the activation analyses of the present study did not support such a relationship for spIMG and uHS. Rather, these analyses suggest that spIMG might be relevant to HS perception, at some level, regardless of familiarity, and thus dictate adjustments to our model. Relevant to this idea, Jäncke et al. (2001) found activity in bilateral AG and pIPS for haptic exploration of unfamiliar meaningless objects and for the haptic reconstruction of such objects from plasticine lumps (each compared to a motor control condition). The right AG foci for haptic exploration and construction in this earlier study were very close to the right AG focus (spIMG-fHS correlation) of the present study (Euclidean distances of 7mm and 4mm), while the right pIPS foci of Jäncke et al. (2001) for haptic exploration and construction were slightly further away from our right pIPS focus (spIMG-fHS correlation) (Euclidean distances of 8mm and 12mm). Note, too, that the left AG/pIPS focus related to haptic exploration and construction (Jäncke et al., 2001) is close to both of the correlated left IPS regions in Lacey et al. (2010) for uHS and fHS, at Euclidean distances of 7mm and 9mm. 
Additionally, the right pvIPS (spIMG-uHS correlation) and right pIPS (spIMG-fHS correlation) foci of the present study are near the corresponding foci (Euclidean distance ~5.5 mm and 14mm respectively) that were haptically location-selective in an earlier study from our laboratory, requiring judgments of the position of a bump on a card – analogous to locating a part of an object (Sathian et al., 2011). However, these parietal foci for haptic exploration and construction were not active during mental imagery of haptic exploration and construction (compared to actual exploration and construction) and largely showed stronger activity during actual haptic exploration and construction when compared to the imagery condition (Jäncke et al., 2001). We should note, too, that the imagery task in Jäncke et al. (2001) required participants to imagine haptically constructing objects and thus likely involved elements of both object and spatial, as well as motor, imagery. Thus, while these findings might fit with the idea of spIMG being involved in both uHS and fHS, they are also consistent with the possibility that the underlying commonality might reflect spatial processing important for HS perception, rather than spatial imagery per se.
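The Euclidean distances quoted here are straight-line distances between foci in Talairach space. As a minimal sketch, the function below (the name `euclidean_mm` is hypothetical) computes such a distance; because the coordinates of the earlier studies are not reproduced in this paper, the example instead uses the right FEF foci from Tables 1 and 2 of the present study.

```python
import math

def euclidean_mm(focus_a, focus_b):
    """Straight-line distance in mm between two (x, y, z) Talairach coordinates."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(focus_a, focus_b)))

# Right FEF centers of gravity taken from Table 1 (spIMG-uHS) and Table 2 (spIMG-fHS).
r_fef_table1 = (26.1, -9.4, 53.6)
r_fef_table2 = (24.1, -7.2, 53.7)

distance = euclidean_mm(r_fef_table1, r_fef_table2)
print(f"{distance:.1f} mm")
```

For these two foci the distance is roughly 3 mm, which indicates that the two conjunction analyses located the right FEF in nearly the same place.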

Another possibility emerges from the study of Mechelli et al. (2004), who found both prefrontal and parietal regions contributing to visual imagery. Crucially, the parietal foci, unlike the prefrontal foci, were insensitive to image content (and therefore, presumably, also to content familiarity) and were, instead, considered to reflect generic imagery processes such as image generation (Mechelli et al., 2004). Since such processes are common to both object and spatial imagery, it would not be surprising to find such areas correlated with either spatial imagery in the present study, or object imagery as in Lacey et al. (2010), but by itself this finding would not necessarily be indicative of the type of imagery involved. In agreement with this line of thought, object and spatial imagers could be distinguished by differential activity in LOC but not superior parietal cortex during an object imagery task (Motes et al., 2008), suggesting that parietal cortex supports generic imagery processing unrelated to individual preferences for imagery type. However, the parietal foci in Mechelli et al. (2004) were all in the left hemisphere, presumably because the (object) imagery task used in that study was verbally cued, whereas the correlated parietal foci in the present study were all in the right hemisphere. Thus, we cannot confidently rule in or rule out the possibility that generic imagery processes, rather than specifically spatial imagery processes, account for the observed parietal cortical correlations between spIMG and uHS/fHS in the present study.

In order to evaluate the model in terms of connectivity, we reanalyzed the data from our earlier study (Deshpande et al., 2010a) using the same EC methods as here so that we could make direct comparisons between objIMG and spIMG. These analyses revealed that spIMG and uHS engaged a more extensive and complex common network (Fig. 6) than that for spIMG and fHS (Fig. 7), whereas objIMG and fHS shared a more extensive network (Fig. 9) than that for objIMG and uHS (Fig. 8). Thus, the broad architecture of the full model is supported by the EC networks showing quite different relationships for spIMG-uHS and objIMG-fHS, despite the results of the activation analyses of the present study.

At a more detailed level, the model predicts greater involvement of somatosensory and parietal cortex for uHS compared to fHS, since unfamiliar objects must be explored in their entirety, in order to compute global shape from the spatial relationships between component parts, whereas this may not be essential for fHS (Lacey et al., 2009). Supporting this, the spIMG-uHS network showed that LOC, bilaterally, is driven by the right POF and left smIPS, with complex cross-talk between IPS foci, notably the left smIPS which drove the avIPS bilaterally and the right pIPS and pvIPS, consistent with the important role of parietal cortex in spatial imagery (e.g. Mellet et al., 1996; Schicke et al., 2006; Sack et al., 2008). By comparison, these complex IPS interactions are almost completely absent from the spIMG-fHS network (which essentially consisted of the right POF as a driver of all other regions except the right SMG). Similarly, in accordance with model predictions, the spIMG-uHS network showed paths between parietal and somatosensory foci (from the left smIPS to the left PCG, the right SPG to the left PCS, the left PCG to the right AG and POF, and the left PCS to the right POF) that are entirely absent from the spIMG-fHS network. In addition, there was a cluster of paths in the spIMG-uHS network between early visual cortex in the right aCa, parietal cortex in the right POF, and somatosensory foci in the left PCS and PCG; again, this cluster was entirely absent from the spIMG-fHS network. Thus, the spIMG-uHS network is consistent with our model proposing that the component parts of an unfamiliar object are explored, providing information that is processed through somatosensory-parietal connections, and assembled via spatial imagery processes that depend on intra-parietal connections into global shape representations in the LOC.

Other aspects of the spIMG-uHS network, while not explicitly predicted, are nevertheless explicable by reference to the model. In this respect, it is interesting to note the cluster of connections between basal ganglia and cerebellar and cortical foci: the right caudate head driving left cerebellar and multiple bilateral IPS foci as well as the right POF and LOC, and also receiving input from the left SPG; and bilateral putamen being driven by the left smIPS and right POF: these paths are, again, entirely absent from the spIMG-fHS network. Since the basal ganglia are involved in planning and execution of voluntary movement (e.g., Jankowski, Scheef, Hüppe & Boecker, 2009), this cluster could reflect greater coordination of haptic object exploration and manipulation for unfamiliar, compared to familiar, objects. As we previously suggested, global shape can be inferred more easily for familiar objects from a few distinctive parts or even a single diagnostic part, whereas for unfamiliar objects global shape has to be computed from an exploration of the complete object (Lacey et al., 2009). Note that Jankowski et al. (2009) found that the caudate head was bilaterally involved in planning novel movement sequences, as would be the case for unfamiliar objects, and that their right caudate head focus was within a Euclidean distance of less than 4mm from that reported here (after translating from MNI to Talairach coordinates). However, the caudate nucleus, and basal ganglia more generally, are also involved in higher cognitive functions (Middleton & Strick, 2000; Grahn, Parkinson & Owen, 2009) via cortico-subcortico-cortical loops (see Alexander, Crutcher & DeLong, 1990; Kotz, Anwander, Axer & Knösche, 2013). Here, it may be relevant to note, for example, that dopamine receptor availability in the caudate and putamen is positively correlated with spatial task performance (Reeves et al., 2005). 
Apart from these subcortical connections, the left PMv driving the left PMd, FEF and pvIPS in the spIMG-uHS network may similarly reflect greater haptic exploration for unfamiliar, compared to familiar, objects. Increased haptic exploration may also have been required for the unfamiliar objects used in this experiment because, unlike the familiar objects, they did not vary in texture. However, it is hard to quantify what additional haptic exploration was occasioned by the lack of texture cues over and above that required by unfamiliarity. Texture might have provided important material cues that account for slightly greater accuracy in the fHS task but, arguing against this, the familiar objects were grouped to minimize texture differences within each block of the fHS task, thus favoring reliance on shape cues alone. Additionally, accuracy differences between the fHS and uHS tasks, albeit significant (see Supplementary Results), were quite minor. We did not measure haptic RTs and the fHS task might have been performed faster than the uHS task. However, it would not be surprising if familiarity facilitates the speed of object recognition. In any case, we think it improbable that minor differences of texture or accuracy could account for the substantial network differences we observed between uHS and fHS.

By contrast to the bottom-up somatosensory and parietal paths involved in spIMG and uHS, the relationship between objIMG and fHS is characterized by top-down paths from frontal cortex (Lacey et al., 2009; Deshpande et al., 2010a). This is supported by the reanalysis of our earlier EC data, showing that the left IFG drives activity in the LOC bilaterally in the objIMG-fHS network, with no such paths observed in the sparse objIMG-uHS network.

Because of the differences in EC methods, it is difficult to directly compare the networks reported here with our earlier study (Deshpande et al., 2010a). We note that, although S1 foci are involved in the spIMG-uHS but not the spIMG-fHS network, the direct drive of LOC by S1 (right PCS) seen in the uHS network derived from the BOLD time series in our earlier study (Deshpande et al., 2010a, Fig. 1) is not supported by the present results which are based on the inferred neuronal time series after HRF deconvolution. We therefore propose to amend the description of our model slightly to show indirect drive of LOC from S1, mediated via spatial imagery processes in parietal cortex, rather than direct drive of LOC from S1 as originally proposed (Lacey et al., 2009, p272). In this respect, it is interesting to note that LOC activity is only directly correlated between tasks for objIMG and fHS, not for objIMG and uHS (Lacey et al., 2010) and not for spIMG and HS regardless of familiarity (present results). This suggests that, while object imagery for familiar objects may directly involve global object representations in LOC (with the help of prefrontal cortical processes), spatial imagery may operate primarily at the level of part-based representations in parietal cortex. A recent study found HS-selective activity for familiar objects in numerous ventral visual regions, including the LOC, as well as primary visual cortex (Snow, Strother & Humphreys, 2014), but acknowledged that visual imagery could not be ruled out as an explanation. Imagery-mediated activation of these visual areas by haptic stimuli would be in agreement with our model and, in the case of primary visual cortex, would suggest that haptic inputs can access the most fundamental component of the most detailed model of visual imagery, since primary visual cortex is the putative site of the ‘visual buffer’ in which images occur and can be inspected or scanned (Kosslyn, Thompson & Ganis, 2006; Kosslyn, 1994). 
This might well involve somatosensory-occipital cortical pathways, perhaps via parietal cortex. Cortical pathways between primary somatosensory and primary visual cortices have previously been demonstrated in the macaque (Négyessy, Nepusz, Kocsis & Bazsó, 2006) and these surely merit further examination.

Object familiarity exists along a continuum, rather than a dichotomy, and the same is true for individual preferences for object and spatial imagery in both the visual (Kozhevnikov et al., 2002, 2005; Blajenkova et al., 2006; Blazhenkova et al., 2009) and the haptic (Lacey et al., 2011) modalities; it is therefore likely that the relationship between imagery type and object familiarity is also continuous rather than dichotomous, although this is yet to be tested. The present results and those of our previous studies, together with the arguments set out above, suggest that the model proposed in Lacey et al. (2009) is broadly correct and well-supported, particularly by the task-specific EC analyses reported here. However, the inter-task correlations between spIMG and HS in the present study, as outlined above, do not fit the model, as they were present regardless of familiarity. We suggest that they could be explained by one or more of three possibilities: (i) involvement of a modicum of spatial imagery in HS, regardless of object familiarity (in the case of fHS, this might simply be to process enough component parts to trigger retrieval of an object image from long-term memory) (e.g., Lacey et al., 2009); (ii) involvement of generic imagery processes in all three tasks (e.g., Mechelli et al., 2004); (iii) spatial processing in all three tasks unrelated to spatial imagery per se (e.g., Jäncke et al., 2001). Further work will be necessary to definitively distinguish between these three possibilities. Since our proposed model argued that the global shape of unfamiliar objects has to be computed by exploring them in their entirety while that of familiar objects can be inferred from a few, perhaps distinctive and diagnostic, parts (Lacey et al., 2009), it would seem prudent to modify the model to allow for the aforementioned three possibilities. Accordingly, we propose a revised model, as shown in Figure 10, which attempts to reconcile all the available data. 
The revised model retains the asymmetry between object and spatial imagery in being more relevant, respectively, to familiar vs. unfamiliar HS perception, but admits that spatial imagery may also have a role for familiar objects. As pointed out above, this apparent role might also be ascribed to alternative possibilities such as generic imagery processes or generic spatial processing; hence the dotted arrow linking spatial imagery and the familiar half of the model. This revised model merits further testing, particularly in regard to individual differences in the relationship between object familiarity and object-spatial imagery during HS perception.

Figure 10.

Revised schematic model of haptic object representation. In this model, LOC activity during haptic shape perception is modulated by object familiarity and imagery type. For unfamiliar objects, the LOC interacts with a complex network involving S1 and spatial imagery processes in parietal cortex in and around the IPS. For familiar objects, the LOC is driven mainly top-down from prefrontal cortex via object imagery processes. To the extent that parietal cortex is involved regardless of familiarity, this may reflect (i) a limited role for spatial imagery or (ii) generic imagery processes or (iii) general spatial processing unrelated to imagery. While this schematic focuses on haptics, a similar schematic is thought to apply to vision. The LOC thus houses an object representation that is flexibly accessible, both bottom-up and top-down, and which is modality-independent (Lacey et al., 2007, 2011).


HIGHLIGHTS.

- Spatial imagery and haptic shape perception share some commonality

- Object familiarity modulates similarity of haptic shape and spatial imagery networks

- Spatial imagery network more similar to unfamiliar than familiar shape network

- Visual object imagery network more similar to familiar than unfamiliar shape network

- Findings support proposed model of haptic shape perception in relation to imagery

Acknowledgments

Support to KS from the National Institutes of Health (RO1 EY012440) and the Veterans Administration is gratefully acknowledged.

Anatomical abbreviations

Prefixes

- a: anterior

- av: anteroventral

- i: inferior

- p: posterior

- pm: posteromedial

- pv: posteroventral

- s: superior

- sm: superomedial

Regions

- AG: angular gyrus

- Ca: calcarine sulcus

- Caud: caudate

- CBL: cerebellum

- Cing: cingulate

- FEF: frontal eye fields

- IFG: inferior frontal gyrus

- IFS: inferior frontal sulcus

- Ins: insula

- IPS: intra-parietal sulcus

- LOC: lateral occipital complex

- MFG: middle frontal gyrus

- PCG: postcentral gyrus

- PCS: postcentral sulcus

- PMd: dorsal premotor cortex

- PMv: ventral premotor cortex

- POF: parieto-occipital fissure

- pre-SMA: pre-supplementary motor area

- pul: pulvinar

- put: putamen

- RSP: retrosplenial cortex

- SFG: superior frontal gyrus

- SMG: supramarginal gyrus

- SPG: superior parietal gyrus

- thal: thalamus

- MD: mediodorsal nucleus

- LP: lateral posterior nucleus

- VL: ventrolateral nucleus


References

- Abler B, Roebroeck A, Goebel R, Hose A, Schönfeldt-Lecuona C, Hole G, Walter H. Investigating directed influences between activated brain areas in a motor-response task using fMRI. Magnetic Resonance Imaging. 2006;24:181–185. doi: 10.1016/j.mri.2005.10.022. [DOI] [PubMed] [Google Scholar]

- Akaike H. A new look at the statistical model identification. IEEE Transactions on Automatic Control. 1974;19:716–723. [Google Scholar]

- Alexander GE, Crutcher MD, DeLong MR. Basal ganglia-thalamocortical circuits: parallel substrates for motor, oculomotor, “prefrontal” and “limbic” functions. Progress in Brain Research. 1990;85:119–146. [PubMed] [Google Scholar]

- Amedi A, Jacobson G, Hendler T, Malach R, Zohary E. Convergence of visual and tactile shape processing in the human lateral occipital complex. Cerebral Cortex. 2002;12:1202–1212. doi: 10.1093/cercor/12.11.1202.

- Amedi A, Malach R, Hendler T, Peled S, Zohary E. Visuo-haptic object-related activation in the ventral visual pathway. Nature Neuroscience. 2001;4:324–330. doi: 10.1038/85201.

- Amedi A, Malach R, Pascual-Leone A. Negative BOLD differentiates visual imagery and perception. Neuron. 2005b;48:859–872. doi: 10.1016/j.neuron.2005.10.032.

- Amedi A, Stern WM, Camprodon JA, Bermpohl F, Merabet L, et al. Shape conveyed by visual-to-auditory sensory substitution activates the lateral occipital complex. Nature Neuroscience. 2007;10:687–689. doi: 10.1038/nn1912.

- Amedi A, von Kriegstein K, van Atteveldt NM, Beauchamp MS, Naumer MJ. Functional imaging of human crossmodal identification and object recognition. Experimental Brain Research. 2005a;166:559–571. doi: 10.1007/s00221-005-2396-5.

- Blajenkova O, Kozhevnikov M, Motes MA. Object-spatial imagery: a new self-report imagery questionnaire. Applied Cognitive Psychology. 2006;20:239–263.

- Blazhenkova O, Kozhevnikov M. The new object-spatial-verbal cognitive style model: Theory and measurement. Applied Cognitive Psychology. 2009;23(5):638–663.

- David O, Guillemain I, Saillet S, Reyt S, Deransart C, Segebarth C, Depaulis A. Identifying Neural Drivers with Functional MRI: An Electrophysiological Validation. PLoS Biology. 2008;6(12):2683–2697. doi: 10.1371/journal.pbio.0060315.

- Deshpande G, Hu X, Lacey S, Stilla R, Sathian K. Object familiarity modulates effective connectivity during haptic shape perception. NeuroImage. 2010a;49:1991–2000. doi: 10.1016/j.neuroimage.2009.08.052.

- Deshpande G, Hu X, Stilla R, Sathian K. Effective connectivity during haptic perception: A study using Granger causality analysis of functional magnetic resonance imaging data. NeuroImage. 2008;40:1807–1814. doi: 10.1016/j.neuroimage.2008.01.044.

- Deshpande G, LaConte S, James G, Peltier S, Hu X. Multivariate Granger causality analysis of brain networks. Human Brain Mapping. 2009;30(4):1361–1373. doi: 10.1002/hbm.20606.

- Deshpande G, Sathian K, Hu X. Effect of hemodynamic variability on Granger causality analysis of fMRI. NeuroImage. 2010b;52(3):884–896. doi: 10.1016/j.neuroimage.2009.11.060.

- De Volder AG, Toyama H, Kimura Y, Kiyosawa M, Nakano H, et al. Auditory triggered mental imagery of shape involves visual association areas in early blind humans. NeuroImage. 2001;14:129–139. doi: 10.1006/nimg.2001.0782.

- Duvernoy HM. The human brain. Surface, blood supply and three-dimensional sectional anatomy. Springer; New York: 1999.

- Forman SD, Cohen JD, Fitzgerald M, Eddy WF, Mintun MA, et al. Improved assessment of significant activation in functional magnetic resonance imaging (fMRI): use of a cluster-size threshold. Magnetic Resonance in Medicine. 1995;33:636–647. doi: 10.1002/mrm.1910330508.

- Grahn JA, Parkinson JA, Owen AM. The role of the basal ganglia in learning and memory: neuropsychological studies. Behavioural Brain Research. 2009;199:53–60. doi: 10.1016/j.bbr.2008.11.020.

- Granger CWJ. Investigating causal relations by econometric models and cross-spectral methods. Econometrica. 1969;37:424–438.

- Handwerker D, Ollinger J, D’Esposito M. Variation of BOLD hemodynamic responses across subjects and brain regions and their effects on statistical analyses. NeuroImage. 2004;21:1639–1651. doi: 10.1016/j.neuroimage.2003.11.029.

- Havlicek M, Friston K, Jan J, Brazdil M, Calhoun V. Dynamic modeling of neuronal responses in fMRI using cubature Kalman filtering. NeuroImage. 2011;56(4):2109–2128. doi: 10.1016/j.neuroimage.2011.03.005.

- James TW, Humphrey GK, Gati JS, Servos P, Menon RS, et al. Haptic study of three-dimensional objects activates extrastriate visual areas. Neuropsychologia. 2002;40:1706–1714. doi: 10.1016/s0028-3932(02)00017-9.

- James TW, Stevenson RW, Kim S, VanDerKlok RM, James KH. Shape from sound: evidence for a shape operator in the lateral occipital cortex. Neuropsychologia. 2011;49:1807–1815. doi: 10.1016/j.neuropsychologia.2011.03.004.

- Jäncke L, Kleinschmidt A, Mirzazade S, Shah NJ, Freund HJ. The role of the inferior parietal cortex in linking the tactile perception and manual construction of object shapes. Cerebral Cortex. 2001;11:114–121. doi: 10.1093/cercor/11.2.114.

- Jankowski J, Scheef L, Hüppe C, Boecker H. Distinct striatal regions for planning and executing novel and automated movement sequences. NeuroImage. 2009;44:1369–1379. doi: 10.1016/j.neuroimage.2008.10.059.

- Katwal SB, Gore JC, Gatenby JC, Rogers BP. Measuring relative timings of brain activities using fMRI. NeuroImage. 2013;66:436–448. doi: 10.1016/j.neuroimage.2012.10.052.

- Kosslyn SM. Image and Brain: The Resolution of the Imagery Debate. Cambridge, MA: MIT Press; 1994.

- Kosslyn SM, Thompson WL, Ganis G. The Case for Mental Imagery. New York: Oxford University Press Inc; 2006.

- Kotz SA, Anwander A, Axer H, Knösche TR. Beyond cytoarchitectonics: the internal and external connectivity structure of the caudate nucleus. PLoS ONE. 2013;8:e70141. doi: 10.1371/journal.pone.0070141.

- Kozhevnikov M, Hegarty M, Mayer RE. Revising the visualiser-verbaliser dimension: evidence for two types of visualisers. Cognition & Instruction. 2002;20:47–77.

- Kozhevnikov M, Kosslyn SM, Shephard J. Spatial versus object visualisers: a new characterisation of cognitive style. Memory & Cognition. 2005;33:710–726. doi: 10.3758/bf03195337.

- Kriegeskorte N, Lindquist MA, Nichols TE, Poldrack RA, Vul E. Everything you never wanted to know about circular analysis, but were afraid to ask. Journal of Cerebral Blood Flow & Metabolism. 2010;30:1551–1557. doi: 10.1038/jcbfm.2010.86.

- Lacey S, Campbell C, Sathian K. Vision and touch: Multiple or multisensory representations of objects? Perception. 2007;36:1513–1521. doi: 10.1068/p5850.

- Lacey S, Flueckiger P, Stilla R, Lava M, Sathian K. Object familiarity modulates the relationship between visual object imagery and haptic shape perception. NeuroImage. 2010;49:1977–1990. doi: 10.1016/j.neuroimage.2009.10.081.

- Lacey S, Lin JB, Sathian K. Object and spatial imagery dimensions in visuo-haptic representations. Experimental Brain Research. 2011;213(2–3):267–273. doi: 10.1007/s00221-011-2623-1.

- Lacey S, Sathian K. Multisensory object representation: insights from studies of vision and touch. Progress in Brain Research. 2011;191:165–176. doi: 10.1016/B978-0-444-53752-2.00006-0.

- Lacey S, Tal N, Amedi A, Sathian K. A putative model of multisensory object representation. Brain Topography. 2009;21:269–274. doi: 10.1007/s10548-009-0087-4.

- Malach R, Reppas JB, Benson RR, Kwong KK, Jiang H, et al. Object-related activity revealed by functional magnetic resonance imaging in human occipital cortex. Proceedings of the National Academy of Sciences USA. 1995;92:8135–8139. doi: 10.1073/pnas.92.18.8135.

- Mechelli A, Price CJ, Friston KJ, Ishai A. Where bottom-up meets top-down: neuronal interactions during perception and imagery. Cerebral Cortex. 2004;14:1256–1265. doi: 10.1093/cercor/bhh087.

- Mellet E, Tzourio N, Crivello F, Joliot M, Denis M, Mazoyer B. Functional anatomy of spatial imagery generated from verbal instructions. Journal of Neuroscience. 1996;16:6504–6512. doi: 10.1523/JNEUROSCI.16-20-06504.1996.

- Middleton FA, Strick PL. Basal ganglia output and cognition: evidence from anatomical, behavioral, and clinical studies. Brain & Cognition. 2000;42:183–200. doi: 10.1006/brcg.1999.1099.

- Motes MA, Malach R, Kozhevnikov M. Object-processing neural efficiency differentiates object from spatial visualizers. NeuroReport. 2008;19:1727–1731. doi: 10.1097/WNR.0b013e328317f3e2.

- Négyessy L, Nepusz T, Kocsis L, Bazsó F. Prediction of the main cortical areas and connections involved in the tactile function of the visual cortex by network analysis. European Journal of Neuroscience. 2006;23:1919–1930. doi: 10.1111/j.1460-9568.2006.04678.x.

- Newman SD, Klatzky RL, Lederman SJ, Just MA. Imagining material versus geometric properties of objects: an fMRI study. Cognitive Brain Research. 2005;23:235–246. doi: 10.1016/j.cogbrainres.2004.10.020.

- Pascual-Leone A, Amedi A, Fregni F, Merabet LB. The plastic human brain. Annual Review of Neuroscience. 2005;28:377–401. doi: 10.1146/annurev.neuro.27.070203.144216.

- Pietrini P, Furey ML, Ricciardi E, Gobbini MI, Wu WHC, et al. Beyond sensory images: Object-based representation in the human ventral pathway. Proceedings of the National Academy of Sciences USA. 2004;101:5658–5663. doi: 10.1073/pnas.0400707101.

- Prather SC, Votaw JR, Sathian K. Task-specific recruitment of dorsal and ventral visual areas during tactile perception. Neuropsychologia. 2004;42:1079–1087. doi: 10.1016/j.neuropsychologia.2003.12.013.

- Raczkowski D, Kalat JW, Nebes R. Reliability and validity of some handedness questionnaire items. Neuropsychologia. 1974;12:43–47. doi: 10.1016/0028-3932(74)90025-6.

- Reeves SJ, Grasby PM, Howard RJ, Bantick RA, Asselin MC, Mehta MA. A positron emission tomography (PET) investigation of the role of striatal dopamine (D2) receptor availability in spatial cognition. NeuroImage. 2005;28:216–226. doi: 10.1016/j.neuroimage.2005.05.034.

- Roebroeck A, Formisano E, Goebel R. Mapping directed influence over the brain using Granger causality and fMRI. NeuroImage. 2005;25:230–242. doi: 10.1016/j.neuroimage.2004.11.017.

- Ryali S, Supekar K, Chen T, Menon V. Multivariate dynamical systems models for estimating causal interactions in fMRI. NeuroImage. 2011;54:807–823. doi: 10.1016/j.neuroimage.2010.09.052.

- Sack AT, Jacobs C, De Martino F, Staeren N, Goebel R, Formisano E. Dynamic premotor-to-parietal interactions during spatial imagery. Journal of Neuroscience. 2008;28:8417–8429. doi: 10.1523/JNEUROSCI.2656-08.2008.

- Sathian K. Visual cortical activity during tactile perception in the sighted and the visually deprived. Developmental Psychobiology. 2005;46:279–286. doi: 10.1002/dev.20056.

- Sathian K, Deshpande G, Stilla R. Neural changes with tactile learning reflect decision-level reweighting of perceptual readout. Journal of Neuroscience. 2013;33:5387–5398. doi: 10.1523/JNEUROSCI.3482-12.2013.

- Sathian K, Lacey S. Journeying beyond classical somatosensory cortex. Canadian Journal of Experimental Psychology. 2007;61:254–264. doi: 10.1037/cjep2007026.

- Sathian K, Lacey S, Stilla R, Gibson GO, Deshpande G, et al. Dual pathways for haptic and visual perception of spatial and texture information. NeuroImage. 2011;57:462–475. doi: 10.1016/j.neuroimage.2011.05.001.

- Sathian K, Stilla R. Cross-modal plasticity of tactile perception in blindness. Restorative Neurology and Neuroscience. 2010;28:271–281. doi: 10.3233/RNN-2010-0534.

- Sathian K, Zangaladze A, Hoffman JM, Grafton ST. Feeling with the mind’s eye. NeuroReport. 1997;8:3877–3881. doi: 10.1097/00001756-199712220-00008.

- Schicke T, Muckli L, Beer AL, Wibral M, Singer W, Goebel R, Rösler F, Röder B. Tight covariation of BOLD signal changes and slow ERPs in the parietal cortex in a parametric spatial imagery task with haptic acquisition. European Journal of Neuroscience. 2006;23:1910–1918. doi: 10.1111/j.1460-9568.2006.04720.x.

- Schwarz G. Estimating the dimension of a model. Annals of Statistics. 1978;6:461–464.

- Snow JC, Strother L, Humphreys GW. Haptic shape processing in visual cortex. Journal of Cognitive Neuroscience. 2014;26:1154–1167. doi: 10.1162/jocn_a_00548.

- Stilla R, Sathian K. Selective visuo-haptic processing of shape and texture. Human Brain Mapping. 2008;29:1123–1138. doi: 10.1002/hbm.20456.

- Stoesz MR, Zhang M, Weisser VD, Prather SC, Mao H, Sathian K. Neural networks active during tactile form perception: common and differential activity during macrospatial and microspatial tasks. International Journal of Psychophysiology. 2003;50:41–49. doi: 10.1016/s0167-8760(03)00123-5.

- Talairach J, Tournoux P. Co-planar stereotaxic atlas of the brain. Thieme Medical Publishers; New York: 1998.

- Wen X, Rangarajan G, Ding M. Is Granger causality a viable technique for analyzing fMRI data? PLoS ONE. 2013;8(7):e67428. doi: 10.1371/journal.pone.0067428.

- White T, O’Leary D, Magnotta V, Arndt S, Flaum M, Andreasen NC. Anatomic and functional variability: The effects of filter size in group fMRI data analysis. NeuroImage. 2001;13:577–588. doi: 10.1006/nimg.2000.0716.

- Zhang M, Weisser VD, Stilla R, Prather SC, Sathian K. Multisensory cortical processing of object shape and its relation to mental imagery. Cognitive, Affective & Behavioral Neuroscience. 2004;4:251–259. doi: 10.3758/cabn.4.2.251.
