Abstract

This paper presents a novel virtual reality navigation (VRN) input device, called the VRNChair, that offers an intuitive and natural way to interact with virtual reality (VR) environments. Traditionally, VR navigation tests are performed using stationary input devices such as keyboards or joysticks. However, in immersive VR experiments, such as our recent VRN assessment, the user may experience kinetosis (motion sickness) as a result of the mismatch between the vestibular response and the optical flow. In addition, prior experience with a joystick or other computer input devices may bias the accuracy of participants' performance in VR experiments. Therefore, we have designed a VR navigational environment that is operated using a wheelchair (VRNChair). The VRNChair translates the movement of a manual wheelchair into input for any VR environment. We evaluated the VRNChair by testing 34 young individuals in two groups performing the same navigational task with either the VRNChair or a joystick; in addition, one older individual (55 years) performed the same experiment with both a joystick and the VRNChair. The results indicate that the VRNChair does not change the accuracy of performance, thus removing the plausible bias of joystick experience. More importantly, it significantly reduces the effect of kinetosis. While we developed the VRNChair for our spatial cognition study, it may find application in many other studies involving neuroscience, neurorehabilitation, physiotherapy, and/or simply the gaming industry.

Keywords: virtual reality, navigation, kinetosis, spatial cognition, neurorehabilitation

Introduction

In the past decade, owing to advances in computing power, virtual reality (VR) experiments have become much more popular. A VR environment allows the natural world to be emulated for different purposes by replicating real-world tasks, such as navigation; movement in a VR environment mimics traversing a real environment. Experiments in VR offer repeatability and the flexibility to modify the testing environment. In contrast, modifying real (natural) environments for research purposes is costly, time-consuming, and in some cases infeasible, so repeated testing that requires different environmental settings may not be possible in a natural environment. Moreover, monitoring movement parameters such as a participant's position and trajectory is fairly complex in a natural environment but relatively simple in VR.1

Interactive VR simulation requires input devices to receive commands from participants. In VR navigation, the input commands are mainly the desired movements that the participant applies to the simulator. To engender a naturalistic experience in VR experiments, the physical action performed on the input device should correspond closely to the movement it commands in the simulator.

Several ordinary computer input devices are used for navigation in VR experiments. The joystick is one of the most common; it consists of a handle pivoted on a base. A sensor mounted at the pivot reports the angle and the amount of the handle's deviation, and the computer receives this magnitude and angle as input commands. A joystick is operated by tilting its handle: pushing the handle forward/backward commands the connected device to move in that direction, while pushing it left/right commands the connected device to rotate left/right. Joysticks are used in many applications, such as aircraft, industrial machines, and computer games.
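As a minimal sketch of the mapping just described, the function below splits a joystick deflection, given as a magnitude and an angle, into a forward/backward command and a yaw (rotation) command. The angle convention (measured from straight ahead, positive toward the right), the normalized 0 to 1 magnitude, and the name `joystick_to_command` are assumptions made for illustration, not properties of any particular device.

```python
import math


def joystick_to_command(magnitude: float, angle_deg: float) -> tuple[float, float]:
    """Split a joystick deflection into (forward, yaw) commands.

    Illustrative conventions (assumptions, not a specific device):
      magnitude -- normalized deflection in [0, 1]
      angle_deg -- direction of the deflection, measured from straight ahead,
                   positive toward the right (0 = forward, 180 = backward)
    Returns forward in [-1, 1] (+ = move forward) and yaw in [-1, 1] (+ = rotate right).
    """
    magnitude = max(0.0, min(1.0, magnitude))  # clamp to the valid range
    theta = math.radians(angle_deg)
    forward = magnitude * math.cos(theta)  # forward/backward component -> translation
    yaw = magnitude * math.sin(theta)      # left/right component -> rotation
    return forward, yaw


# A diagonal deflection blends translation and rotation, producing a turn.
forward, yaw = joystick_to_command(1.0, 45.0)
print(f"forward={forward:.3f}, yaw={yaw:.3f}")  # forward=0.707, yaw=0.707
```

The diagonal case corresponds to the "turning toward left or right" row of Table 1: both components are non-zero, so the device moves and rotates at the same time.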

For VR navigation, the joystick is preferred over other input devices such as the keyboard arrow keys or a mouse because it allows smooth transitions between translational (forward/backward) and rotational movements. However, a joystick is not convenient for people who have little experience with such devices or with computers in general. More importantly, using a joystick for navigation in a VR environment can cause kinetosis (motion sickness).

Kinetosis occurs when the vestibular response and visual information are out of sync. During navigation in the real world, the human brain perceives motion through several senses, mainly vision, the vestibular system, and proprioception; together, these allow one to estimate one's position and orient oneself in the surrounding environment.2 Optical flow provides a perception of velocity as well as of the body's balance relative to the environment (mainly through the horizon line and the perspective point).3 In other words, it provides information about motion by tracking visual features and cues in the environment and comparing their position and direction in successive frames; the perception of motion is based on the relative movement of these features. Unlike optical flow, the vestibular organ senses inertial information: its semicircular canals respond to angular (rotational) acceleration and its otolith organs to linear acceleration, in three dimensions about the X, Y, and Z axes.4 Using this information, the brain estimates the new position from the applied inertial forces and the elapsed time, a process called inertial navigation.5
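In engineering terms, inertial navigation amounts to integrating acceleration twice over time. The sketch below illustrates this with simple explicit Euler integration in one dimension; the constant sample period, the noise-free acceleration samples, and the function name `dead_reckon` are illustrative assumptions, not a model of the vestibular system.

```python
def dead_reckon(accelerations, dt, v0=0.0, x0=0.0):
    """Estimate position by integrating acceleration twice (explicit Euler).

    accelerations -- sequence of acceleration samples (m/s^2), assumed noise-free
    dt            -- constant sample period (s), an assumption of this sketch
    Returns the estimated position after each sample.
    """
    v, x = v0, x0
    positions = []
    for a in accelerations:
        v += a * dt  # velocity is the integral of acceleration
        x += v * dt  # position is the integral of velocity
        positions.append(x)
    return positions


# 1 s of constant 1 m/s^2 acceleration sampled at 100 Hz, followed by 1 s of coasting.
track = dead_reckon([1.0] * 100 + [0.0] * 100, dt=0.01)
print(round(track[99], 3), round(track[-1], 3))  # 0.505 1.505
```

Because position is obtained by double integration, any small bias in the acceleration samples grows quadratically in the position estimate over time (drift).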

To date, VR simulators are mainly able to stimulate only the visual sense of the participant, providing the illusion of navigation in a virtual environment. When the simulation is sufficiently naturalistic, the participant experiences immersion in VR.6 However, the asynchrony between the optical flow and the vestibular response in current virtual reality navigation (VRN) environments can cause kinetosis, especially in older adults.7 This was the main problem we faced when running the first version of our VRN assessment on individuals aged 40 years and older.8

In our pilot study,8 as well as in another study,9 about 28–30% of the participants experienced kinetosis during the VRN experiment, which prevented them from continuing. Therefore, the objective of this study was to design a novel input device to replace the joystick and thereby resolve the kinetosis problem during VR navigation. In addition, navigating a VR environment with a joystick requires some familiarity with joysticks and computer games in general, which may bias the accuracy of participants' performance across age groups with different exposure to gaming.

In this study, we designed a motion capture unit for a wheelchair, to be used with the enhanced version of our recent VRN experiment8 for testing spatial cognition. The wheelchair essentially replaces the joystick or keyboard for navigating in the VR domain. The resulting technology, called “VRNChair” (a VR navigational environment operated using a wheelchair), addresses both of the problems mentioned above: it provides a naturalistic navigation input command, and it significantly reduces the kinetosis effect. While we developed the VRNChair for our current spatial cognition study, it may have applications in many other studies involving neuroscience, neurorehabilitation, physiotherapy, and/or simply the gaming industry.

Methods

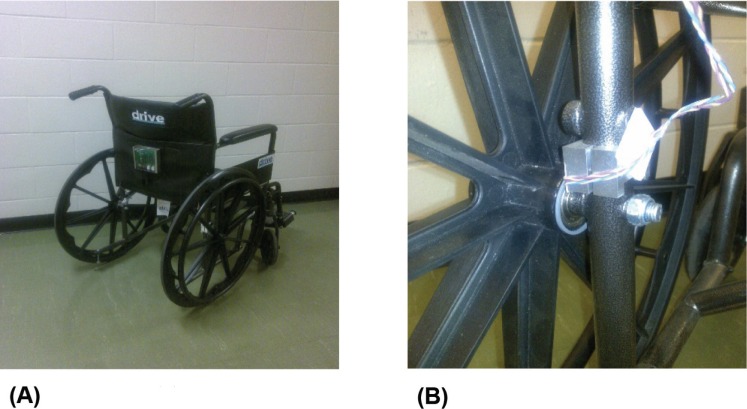

Wheelchairs come in different designs. The most common one has two large main wheels, one on each side at the center of the wheelchair, and two smaller wheels (casters), one on each side at the front. We used this type of wheelchair, specifically the “Drive Medical, Silver Sport 2–Dual Axle” wheelchair, to build the VRNChair. The participant sits in the wheelchair (the VRNChair), while a laptop is placed on a wooden tray resting on the wheelchair handlebars. The participant then moves in the VR environment by moving the VRNChair.

The VRNChair has a custom-designed motion capture unit, which contains two encoders and a microcontroller. The microcontroller estimates the magnitude and direction of the wheelchair's movement and reports it to the computer as the navigation input command with ultra-low latency (less than two milliseconds over a USB 2.0 interface). In general terms, the motion capture unit is an input device that acquires and estimates properties of the motion of a given system. It sends the acquired motion information of the VRNChair to the computer as a human interface device (HID). Using the HID standard to interface the device with a computer eliminates the need for additional drivers; it also makes the device suitable for other commercial applications and games.

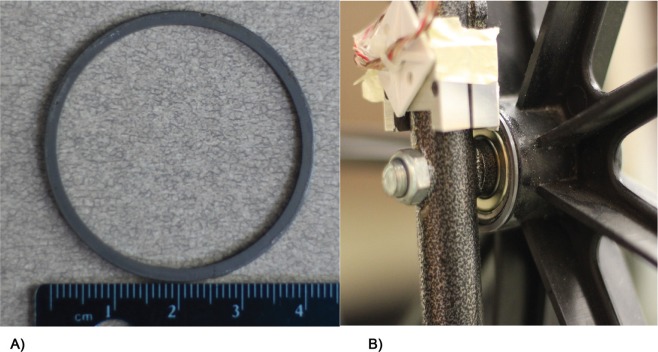

To transfer the wheelchair's motion to the motion capture unit, the VRNChair's main wheels are coupled with custom-made off-axis magnetic encoders. Each magnetic encoder consists of a special multi-pole magnetic ring composed of 128 magnets (Fig. 1) facing a smart Hall sensor. When the VRNChair moves, the wheels rotate the magnetic rings past the Hall sensors, which generate incremental pulses corresponding to the amount of rotation of each wheel (Fig. 2).
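To make the relationship between encoder pulses and wheel travel concrete, the sketch below converts a signed pulse count into wheel rotation and rim distance. It assumes one count per magnet of the 128-magnet ring, rolling without slip, and a hypothetical main-wheel diameter of 0.61 m (24 in); the actual counts per revolution depend on how the Hall sensor decodes the ring, and the wheel size is not stated in the text.

```python
import math

MAGNETS_PER_RING = 128             # from the text: 128 magnets per ring
COUNTS_PER_REV = MAGNETS_PER_RING  # ASSUMPTION: one count per magnet
WHEEL_DIAMETER_M = 0.61            # HYPOTHETICAL main-wheel diameter (24 in)


def counts_to_rotation_deg(counts: int, counts_per_rev: int = COUNTS_PER_REV) -> float:
    """Wheel rotation (degrees) corresponding to a signed pulse count."""
    return 360.0 * counts / counts_per_rev


def counts_to_distance_m(counts: int,
                         counts_per_rev: int = COUNTS_PER_REV,
                         wheel_diameter_m: float = WHEEL_DIAMETER_M) -> float:
    """Distance rolled by the wheel rim (m), assuming no slip."""
    return math.pi * wheel_diameter_m * counts / counts_per_rev


# Resolution of the encoder: distance travelled per single count.
print(f"{counts_to_distance_m(1) * 1000:.1f} mm per count")
```

Under these assumptions, each count corresponds to roughly 15 mm of rim travel; decoding every edge of a quadrature signal would multiply the counts per revolution and refine that resolution accordingly.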

Figure 1.

(A) Top view of the multi-pole magnetic ring; (B) position of the smart Hall sensor.

Figure 2.

(A) Back view of the VRNChair, (B) left wheel with magnetic encoder.

The encoders mounted on the wheels produce a quadrature output (channels A and B), which encodes both the magnitude and the direction of the movement. By counting the pulses received from each encoder over a constant time interval (ie, approximating the time derivative of the wheel's angular displacement), the angular velocity of each wheel can be estimated. The resulting wheel velocities are then used to estimate the motion of the VRNChair, which is passed to the VR software.
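A common way to decode a quadrature (A/B) signal and turn it into a velocity is sketched below: a state-transition table yields +1 or −1 for each valid edge, and the signed count accumulated over a fixed window, divided by the window length, estimates angular velocity. This is a generic x4 decoder written for illustration; the VRNChair firmware is not described at this level of detail, and the window length and counts per revolution are assumptions.

```python
import math

# Transition table for a x4 quadrature decoder. The 2-bit state is (A << 1) | B and the
# table is indexed by (previous_state << 2) | new_state. Forward Gray-code steps
# (00 -> 01 -> 11 -> 10 -> 00) give +1, reverse steps give -1, and "no change" or an
# invalid two-bit jump gives 0.
_QUAD_TABLE = (0, +1, -1, 0,
               -1, 0, 0, +1,
               +1, 0, 0, -1,
               0, -1, +1, 0)


def decode_quadrature(samples):
    """Return the signed edge count for a sequence of (A, B) logic samples."""
    if not samples:
        return 0
    count = 0
    prev = (samples[0][0] << 1) | samples[0][1]
    for a, b in samples[1:]:
        state = (a << 1) | b
        count += _QUAD_TABLE[(prev << 2) | state]
        prev = state
    return count


def angular_velocity(count, window_s, counts_per_rev):
    """Angular velocity (rad/s) from the signed count accumulated over a fixed window."""
    return 2.0 * math.pi * count / (counts_per_rev * window_s)


forward_cycle = [(0, 0), (0, 1), (1, 1), (1, 0), (0, 0)]
print(decode_quadrature(forward_cycle))        # 4
print(decode_quadrature(forward_cycle[::-1]))  # -4
```

The sign of the count gives the direction of rotation, and its magnitude per window gives the speed, which is why a single quadrature channel pair is enough to recover both.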

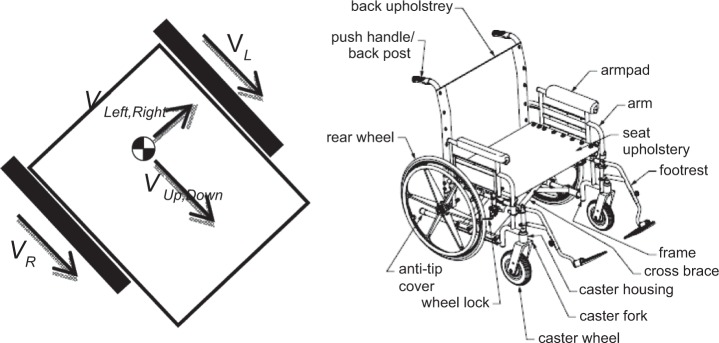

The VRNChair can translate ($V_{\mathrm{Up,Down}}$), rotate ($V_{\mathrm{Left,Right}}$), or combine both motions. The velocities of the left and right wheels are represented by the vectors $V_L$ and $V_R$, which together induce the two types of motion at the center of the wheelchair, $V_{\mathrm{Up,Down}}$ and $V_{\mathrm{Left,Right}}$. The difference between the velocities of the two wheels produces rotational velocity, whereas the velocity common to both wheels produces translational movement. Based on this relationship between the velocity of each wheel and the motion of the wheelchair, a kinematic model was developed in which $V_{\mathrm{Up,Down}}$ and $V_{\mathrm{Left,Right}}$ are calculated from $V_L$ and $V_R$. Thus, when $V_L$ and $V_R$ point in the same direction, their sum moves the wheelchair forward or backward; when they point in opposite directions, the wheelchair rotates.

| (1) |

| (2) |
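For orientation, the textbook differential-drive model is consistent with the qualitative description above: translational velocity is the mean of the two wheel velocities, and the yaw rate is their difference divided by the track width. The sketch below implements that standard model and its inverse with a hypothetical track width of 0.55 m; it may differ in scaling from Eqs. (1) and (2) and should not be read as the authors' exact equations.

```python
TRACK_WIDTH_M = 0.55  # HYPOTHETICAL distance between the two main wheels (m)


def wheel_to_body(v_left: float, v_right: float, track_width: float = TRACK_WIDTH_M):
    """Forward kinematics of a standard differential drive.

    v_left, v_right -- rim speeds of the left/right main wheels (m/s), + = rolling forward
    Returns (v_up_down, omega):
      v_up_down -- translational speed of the chair centre (m/s), + = forward
      omega     -- yaw rate (rad/s), + = counter-clockwise (turning left)
    """
    v_up_down = (v_left + v_right) / 2.0      # common component -> translation
    omega = (v_right - v_left) / track_width  # difference -> rotation
    return v_up_down, omega


def body_to_wheel(v_up_down: float, omega: float, track_width: float = TRACK_WIDTH_M):
    """Inverse kinematics: wheel rim speeds that produce a given chair motion."""
    v_left = v_up_down - omega * track_width / 2.0
    v_right = v_up_down + omega * track_width / 2.0
    return v_left, v_right


# Wheels pushed in opposite directions: pure rotation on the spot.
print(wheel_to_body(-0.5, 0.5))
```

Equal wheel speeds give pure translation, opposite speeds give pure rotation, and anything in between gives a curved path, matching the three rows of Table 1.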

The output of the kinematic model described above was used as the input for movement in the VR environment. Figure 3 shows a schematic of the wheelchair used as the base platform for the VRNChair.

Figure 3.

Velocity vectors and the schematic description of the wheelchair used for the VRNChair.12

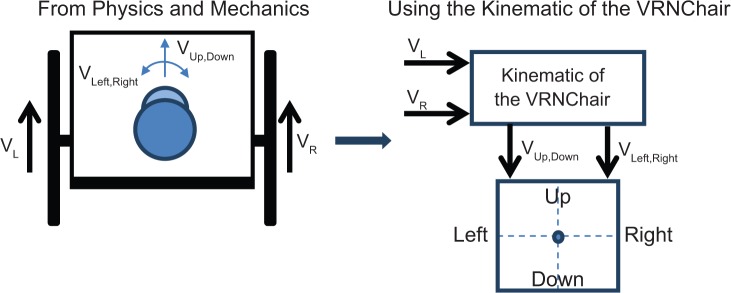

Based on the proposed kinematic model and the wheel velocities acquired by the encoders of both main wheels, the motion capture unit estimates the overall movement of the VRNChair. The estimated motion is passed through a low-pass filter to improve the quality of the estimate and suppress unwanted noise. Figure 4 shows the kinematic model of the wheelchair used for the VRNChair.
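The type of low-pass filter is not stated. As one simple possibility suited to a microcontroller, the sketch below implements a first-order IIR filter (exponential smoothing) and a helper that derives its smoothing factor α from a desired cutoff frequency using the usual RC-filter discretization. The cutoff and sample rate are assumptions to be tuned.

```python
import math


class LowPassFilter:
    """First-order IIR low-pass filter: y[n] = y[n-1] + alpha * (x[n] - y[n-1])."""

    def __init__(self, alpha: float):
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.y = None  # no output until the first sample arrives

    def update(self, x: float) -> float:
        if self.y is None:
            self.y = x  # start from the first sample to avoid a start-up transient
        else:
            self.y += self.alpha * (x - self.y)
        return self.y


def alpha_for_cutoff(cutoff_hz: float, sample_hz: float) -> float:
    """Smoothing factor for a given -3 dB cutoff (RC-filter discretization)."""
    dt = 1.0 / sample_hz
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)
    return dt / (rc + dt)


# Example: a hypothetical 10 Hz cutoff at a 1 kHz update rate.
lpf = LowPassFilter(alpha_for_cutoff(10.0, 1000.0))
smoothed = [lpf.update(v) for v in (0.0, 1.0, 1.0, 1.0)]
```

A smaller α removes more jitter but adds lag, so for an input device with a latency budget of a few milliseconds the cutoff is a trade-off between smoothness and responsiveness.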

Figure 4.

VRNChair kinematic model.

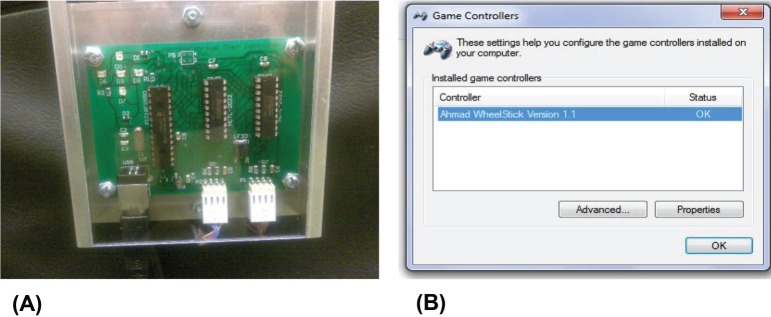

The participant moves the VRNChair by applying force to the wheels; when the VRNChair is used as a walker, it is moved using the push handles. Because of the friction between the wheels and the ground, any motion of the VRNChair produces a corresponding rotation of the wheels; the relation between the wheel rotations and the motion of the VRNChair obeys the inverse kinematic model. The main function of the motion capture unit is to estimate the actual motion of the VRNChair and present the result to the host computer as a velocity vector; in effect, it emulates a standard joystick on the host computer. Figure 5 depicts the motion capture unit and the HID control panel (Game Controllers) in Microsoft Windows®.
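As a hypothetical illustration of the final step, presenting the motion estimate as a joystick, the sketch below scales the chair's translational speed and yaw rate into two signed 8-bit axes and packs them into a two-byte report. The full-scale speeds, the axis assignment, the sign conventions, and the byte layout are all assumptions; a real HID device's layout is fixed by its own report descriptor.

```python
import struct

# HYPOTHETICAL full-scale values: speeds that map to a fully deflected axis.
MAX_SPEED_MPS = 1.5  # translational speed (m/s)
MAX_YAW_RADPS = 2.0  # yaw rate (rad/s)


def to_axis(value: float, full_scale: float) -> int:
    """Scale a value to a signed 8-bit joystick axis in [-127, 127], saturating."""
    scaled = round(127 * value / full_scale)
    return max(-127, min(127, scaled))


def make_report(v_up_down: float, omega: float) -> bytes:
    """Pack chair motion into a HYPOTHETICAL 2-byte report: X = rotation, Y = translation.

    Axis assignment and signs are illustrative; a real device's layout and
    conventions are defined by its HID report descriptor.
    """
    x = to_axis(omega, MAX_YAW_RADPS)
    y = to_axis(v_up_down, MAX_SPEED_MPS)
    return struct.pack("<bb", x, y)  # two signed bytes


print(make_report(0.75, 0.0).hex())
```

Saturating at full scale mirrors a physical joystick, whose handle cannot be deflected beyond its mechanical limit.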

Figure 5.

(A) Top view of the VRNChair controller; (B) Microsoft® Windows Control Panel (Game Controller) view of the VRNChair.

The VR experiment should be calibrated such that the distance traversed by the VRNChair in the physical environment is reflected by a scaled-down distance in VR (a logarithmic relationship in our design). Rotation, however, should be calibrated such that a 360° rotation of the VRNChair in the physical environment produces exactly 360° of rotation in the virtual environment; this is important for naturalistic navigation in VR.
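The exact logarithmic mapping is not given, nor whether it is applied to displacement or to speed. The sketch below shows one possible sign-preserving form applied to a displacement, d_VR = K · ln(1 + |d_phys| / D0), with hypothetical constants K = D0 = 1 m chosen so that the VR distance is always smaller than the physical one, alongside the 1:1 rotation mapping that the text requires.

```python
import math

K_M = 1.0   # HYPOTHETICAL output scale (VR metres)
D0_M = 1.0  # HYPOTHETICAL reference distance (physical metres)


def physical_to_vr_distance(d_phys: float) -> float:
    """Scaled-down, logarithmic, sign-preserving mapping of a physical displacement."""
    return math.copysign(K_M * math.log1p(abs(d_phys) / D0_M), d_phys)


def physical_to_vr_heading(yaw_phys_deg: float) -> float:
    """Rotation is mapped 1:1, so 360 degrees physical = 360 degrees in VR."""
    return yaw_phys_deg % 360.0


for d in (0.5, 1.0, 10.0):
    print(d, round(physical_to_vr_distance(d), 3))
```

Keeping rotation at exactly 1:1 means that the heading seen in VR always matches the heading felt by the vestibular system, even though translation is compressed.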

Verification

The physical inputs given by the participant result in the same motion in both the physical and virtual environments when using the VRNChair but not when using a joystick, as illustrated in Table 1.

Table 1.

Comparison between the usage of a joystick and the VRNChair.

| DESIRED MOVEMENT | USING JOYSTICK | USING VRNCHAIR |
|---|---|---|
| Forward or backward translation | Tilting the joystick handle away from or toward the user | Moving the VRNChair forward or backward |
| Rotation (yaw axis) | Tilting the joystick handle to the left or right | Turning the VRNChair’s wheels in opposite directions |
| Turning toward the left or right | Tilting the joystick handle diagonally | Turning one of the VRNChair’s wheels faster than the other |

To investigate whether the VRNChair reduces kinetosis and the bias due to joystick experience, 17 individuals (26 ± 5.2 years, all male) performed the VR navigation task described by Byagowi and Moussavi8 with a joystick, and another 17 age-matched individuals (27.6 ± 3.2 years, all male) performed the same experiment with the VRNChair; the performance of the two groups was then compared. All participants were engineering graduate students at the University of Manitoba, to ensure that both groups had comparable exposure to VR technologies. We recruited only male students because gender may be another independent variable affecting the results; adjusting for a possible gender effect would require a much larger sample, which is beyond the scope of this verification study. Furthermore, we used two separate groups of age-matched male participants to remove a possible learning bias, ie the effect of the order in which the joystick and the VRNChair are used to perform the experiment.

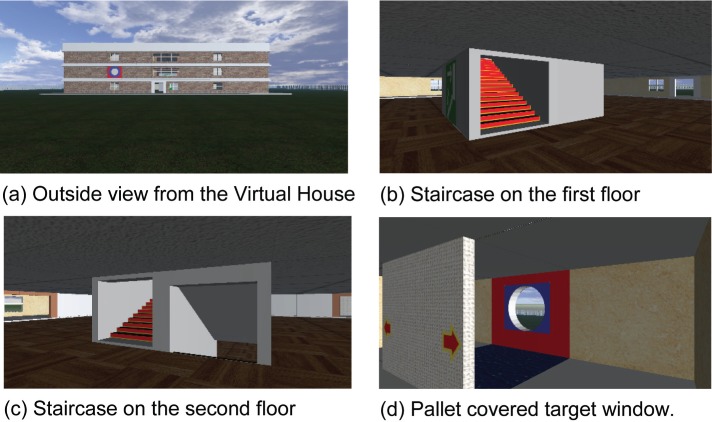

In addition, we tested one 55-year-old male performing the task with both a joystick and the VRNChair. The joystick used in the experiments was a Logitech Attack 3 joystick.10 The VR navigation experiment, presented from a first-person view, consisted of crossing the lawn of a three-story building, entering the building, and approaching a specified target window on the second floor; this was repeated for four different target windows. In each trial, the target window was assigned randomly. The target window was shown to the participant from outside the building by rotating the entire building in VR; the participant was then instructed to go inside and find it. For details, please see Ref. 8. Figure 6 shows the VR environment used for this experiment.

Figure 6.

VR environment used for the experiment.

The navigation trajectory in the VR experiment was recorded and compared between the joystick and the VRNChair. The VR simulator stores the absolute position of the participant in the VR environment at 5 ms intervals, relative to a fixed reference point inside the VR environment. The participant's trajectory is therefore derived from the navigational input that the VR simulator receives from the VRNChair or the joystick. Note that when the VRNChair is used as the input device, unwanted movements may occur during operation, so the recorded trajectory contains noise and jitter. To compare the joystick and VRNChair trajectories fairly, we filtered the trajectories of both.
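The trajectory filter is not specified. A centred moving average followed by a polyline path-length computation is one straightforward way to smooth 5 ms position samples and measure traversed distance; the window length and the function names below are assumptions made for illustration.

```python
import math


def moving_average(points, window=21):
    """Smooth a list of (x, y) samples with a centred moving average.

    The window shrinks near the ends so the output has the same length as the input.
    window is a HYPOTHETICAL choice (21 samples = about 105 ms at 5 ms sampling).
    """
    half = window // 2
    smoothed = []
    for i in range(len(points)):
        lo, hi = max(0, i - half), min(len(points), i + half + 1)
        seg = points[lo:hi]
        smoothed.append((sum(p[0] for p in seg) / len(seg),
                         sum(p[1] for p in seg) / len(seg)))
    return smoothed


def path_length(points):
    """Total distance along a polyline of (x, y) positions."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


# A straight 2 m path with +/-5 cm side-to-side jitter at every sample.
jittery = [(i * 0.01, 0.05 * (-1) ** i) for i in range(200)]
print(round(path_length(jittery), 2), round(path_length(moving_average(jittery)), 2))
```

The example shows why filtering matters for a fair comparison: jitter adds sideways segments to every step, so an unfiltered trajectory can greatly overstate the distance actually traversed.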

Spatial performance was measured as described by Zen et al,11 with error scores of 4 and 2 assigned for side and corner errors, respectively. A side error was defined as visiting a target window on the wrong side of the building; a corner error was defined as being on the correct side of the building but choosing the wrong (left/right) corner. If the participant made three or more side errors, they were considered “totally lost” and an error score of 10 was assigned. The sum of the errors over the four navigation trials was taken as the navigation error; thus, the maximum error for each experiment was 40 (four trials, each with a maximum error score of 10).
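The scoring rule above can be sketched as follows. Two details are assumptions of this sketch rather than statements in the text: errors are counted per wrong window visited within a trial, and a trial's score is capped at 10, which is consistent with the stated maximum of 40 over four trials.

```python
SIDE_ERROR = 4      # from the text
CORNER_ERROR = 2    # from the text
TOTALLY_LOST = 10   # from the text: three or more side errors
MAX_PER_TRIAL = 10  # ASSUMPTION: per-trial cap implied by the maximum of 40 over 4 trials


def trial_error(side_errors: int, corner_errors: int) -> int:
    """Error score for one trial."""
    if side_errors >= 3:
        return TOTALLY_LOST
    raw = SIDE_ERROR * side_errors + CORNER_ERROR * corner_errors
    return min(raw, MAX_PER_TRIAL)


def navigation_error(trials) -> int:
    """Sum of per-trial scores; trials is a list of (side_errors, corner_errors)."""
    return sum(trial_error(s, c) for s, c in trials)


# Example: one wrong-side visit, one wrong-corner visit, two perfect trials.
print(navigation_error([(1, 0), (0, 1), (0, 0), (0, 0)]))  # 6
```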

To test for statistical differences between the performances of the two groups, a paired t-test was applied to the error score and to the distance traversed and time spent, averaged over the four trials, of the participants in the two groups. The study was approved by the Biomedical Ethics Board of the University of Manitoba, and all participants gave written consent prior to the experiment.

Results

None of the 34 young participants in this study experienced kinetosis when using either the joystick or the VRNChair. The spatial errors of the two groups were very similar: 6.4 ± 8.5 and 7.1 ± 7.5 for the groups navigating with the joystick and the VRNChair, respectively. Seven participants in the joystick group and six in the VRNChair group completed the experiment with zero error; the maximum spatial errors were 30 and 24 in the joystick and VRNChair groups, respectively. There was no statistically significant difference between the navigational errors of the two groups (P > 0.68).

The mean and standard deviation of the traversed distance were 38.3 ± 13.3 m and 43.5 ± 13.3 m for the joystick and VRNChair groups, respectively; the difference between the two groups was not statistically significant (P > 0.26). The mean and standard deviation of the time spent to finish a trial were 65.5 ± 20.0 and 99.9 ± 32.8 seconds for the joystick and VRNChair groups, respectively; this difference was statistically significant (P < 0.006). This was expected, as navigating with the VRNChair is inherently slower than navigating with a joystick. Table 2 summarizes the experimental results.
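A paired test needs per-participant data, which are not reported here. As a rough consistency check on the summary statistics only (not the analysis used in this study), the sketch below computes a pooled-variance two-sample t statistic for each measure, with n = 17 per group, and compares it with the two-sided 5% critical value of Student's t for 32 degrees of freedom (about 2.037, from standard tables). The time SD for the joystick group is taken from the text (20.0 s); Table 2 lists 20.2 s.

```python
import math


def pooled_t(mean1, sd1, n1, mean2, sd2, n2):
    """Two-sample (pooled-variance) t statistic and degrees of freedom from summary stats."""
    df = n1 + n2 - 2
    sp2 = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df  # pooled variance
    se = math.sqrt(sp2 * (1.0 / n1 + 1.0 / n2))             # standard error of the difference
    return (mean2 - mean1) / se, df


T_CRIT_DF32 = 2.037  # two-sided 5% critical value of Student's t, 32 df (tabulated)

# (mean, SD) for the joystick and VRNChair groups, as reported in the Results.
comparisons = {
    "error score": ((6.4, 8.5), (7.1, 7.5)),
    "distance (m)": ((38.3, 13.3), (43.5, 13.3)),
    "time (s)": ((65.5, 20.0), (99.9, 32.8)),
}
for name, ((m1, s1), (m2, s2)) in comparisons.items():
    t, df = pooled_t(m1, s1, 17, m2, s2, 17)
    print(f"{name}: t({df}) = {t:.2f}, significant at 5%: {abs(t) > T_CRIT_DF32}")
```

With these inputs the check gives |t| of about 0.25 for error score and 1.14 for distance (both below 2.037) and about 3.69 for time (above it), the same pattern of significance as reported above.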

Table 2.

Experimental comparison between the usage of a joystick and the VRNChair.

| STUDY SUBJECTS (ALL MALES) | AGE (YEARS) | TOTAL SPATIAL NAVIGATION ERROR | TRAVERSED DISTANCE IN A TRIAL (m) | TIME SPENT TO FINISH A TRIAL (SECONDS) |
|---|---|---|---|---|
| Joystick group | 27.6 ± 3.2 | 6.4 ± 8.5 | 38.3 ± 13.3 | 65.5 ± 20.2 |
| VRNChair group | 26 ± 5.2 | 7.1 ± 7.5 | 43.5 ± 13.3 | 99.9 ± 32.8 |
| One older subject, joystick | 55 | 37 | 55.0 | 87.5 |
| One older subject, VRNChair | 55 | 13 | 41.7 | 134.7 |

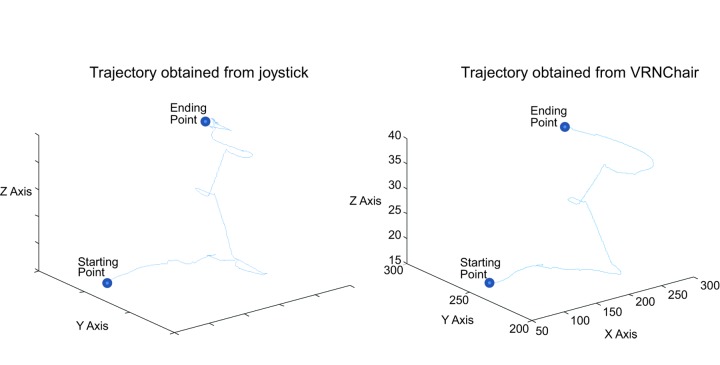

We also tested one older adult (55 years, male) with both the joystick and the VRNChair. His error scores were 37 and 13 when using the joystick and the VRNChair, respectively. Figure 7 shows his trajectories for one trial of the same VRN experiment with the joystick and with the VRNChair. The traversed distances for this trial were 61.95 m (VR domain) and 42.42 m for the joystick and the VRNChair, respectively. Averaged over the four trials, his traversed distances were 55 and 41.7 m, and his time spent was 87.5 and 134.7 seconds, for the joystick and the VRNChair, respectively. Interestingly, the younger participants on average traversed longer distances with the VRNChair than with the joystick. The reversed difference for the older participant might be due to his lack of previous joystick experience, which is also consistent with his higher error score when using the joystick. However, this cannot be generalized, as we tested only one middle-aged participant; we could not repeat the experiment with older adults because many of them reported headache and nausea when performing the experiment with the joystick, so we did not continue.

Figure 7.

Trajectories obtained from a participant using a joystick and the VRNChair.

When the joystick was used as the navigation input device, about 30% of the participants aged 35 years and older in our other ongoing spatial assessment study experienced kinetosis; this percentage was higher among people aged 50 years and older. That was the main motivation for designing the VRNChair. Since we replaced the joystick with the VRNChair, more than 370 participants aged 50 years and older have used it for navigation [unpublished data]; only about 2% of them complained of mild dizziness and nausea after using the VRNChair. We acknowledge that, owing to the asynchrony between the participants' peripheral vision and what they see on the screen during the VRN experiment, some may still experience a reduced degree of kinetosis.

Discussion

The VRNChair merges the sense of motion perceived through vision (optical flow) with the participant's inertial sense of motion: the visual motion presented by the VR environment matches the inertial sensation produced by the physical motion of the VRNChair. Therefore, the main cause of kinetosis in VR navigation experiments is addressed.

The VR simulator uses the motion information acquired from the input device as navigation commands. Both the joystick and the VRNChair are recognized by the host computer as HIDs. Because the VRNChair is a standard HID device, no additional driver is required on the host computer. In addition, the VRNChair can be used with any simulator or commercial game that supports a standard joystick.

In our VR spatial navigation study, participants' performance is measured by their spatial accuracy (visiting the correct side and corner of the building), the distance traversed from the starting point to the target window, and the time spent reaching the target in the VR environment. In general, lack of experience with joysticks is expected to affect the spatial performance of older adults compared with that of young adults; the result of our one older adult (55 years) is consistent with this. Therefore, joystick skill may skew the overall results instead of reflecting the participant's age-related cognitive skills. On the other hand, we found that the spatial accuracy and traversed distance of the younger adults (the participants of this study) did not differ significantly between the VRNChair and the joystick. Therefore, using the VRNChair can reduce the bias of prior exposure to the input device (ie, the joystick) across age groups.

In addition to its use as an input device for VR experiments, the VRNChair can be used for rehabilitation and leisure exercise. People who become disabled and must learn to use a wheelchair can use the VRNChair to adapt to their new primary mode of transportation. For example, a recently disabled person who is uncomfortable crossing the street in a wheelchair could first become accustomed to the required movements in a realistic VR environment, in a safe location of their choosing, by crossing a virtual street designed to mimic the sights and sounds of a real one. Similarly, computer games can be designed or modified to promote movement and exercise among wheelchair users. Such games can take advantage of the physical motion of the VRNChair to immerse users in intricate virtual environments, encouraging them to move about with the VRNChair, which is a form of exercise. The VRNChair thus gives wheelchair users the opportunity to experience VR in a more realistic manner.

Conclusion

The VRNChair technology successfully provides an input device for navigational commands and addresses two major problems of VR navigation: kinetosis and the effect of differing gaming experience across generations. Because the VRNChair requires participants to move physically, much as they would when walking (or when propelling a wheelchair while sitting in it), to navigate the VR environment, they receive both vestibular stimulation and optical flow: the vestibular stimulation comes from the physical movement in the real world, and the optical flow from the simulated movement in the VR environment. By providing a more naturalistic input method for VR navigation experiments, the VRNChair significantly reduces the kinetosis effect. In addition, it removes the bias of gaming and computer experience across ages and thus provides a more accurate means of measuring spatial and other cognitive capabilities.

Footnotes

Author Contributions

Conceived and designed the experiments: AB, DM, ZM. Analyzed the data: AB, ZM. Wrote the first draft of the manuscript: AB, DM. Contributed to the writing of the manuscript: AB, DM, ZM. Agree with manuscript results and conclusions: AB, ZM. Jointly developed the structure and arguments for the paper: AB, ZM. Made critical revisions and approved final version: ZM. All authors reviewed and approved the final manuscript.

DISCLOSURES AND ETHICS

As a requirement of publication the authors have provided signed confirmation of their compliance with ethical and legal obligations including but not limited to compliance with ICMJE authorship and competing interests guidelines, that the article is neither under consideration for publication nor published elsewhere, of their compliance with legal and ethical guidelines concerning human and animal research participants (if applicable), and that permission has been obtained for reproduction of any copyrighted material. This article was subject to blind, independent, expert peer review. The reviewers reported no competing interests.

ACADEMIC EDITOR: Raphael Pinaud, Editor in Chief

COMPETING INTERESTS: Authors disclose no potential conflicts of interest.

FUNDING: Authors disclose no funding sources.

REFERENCES

- 1. Sauzéon H, Arvind Pala P, Larrue F, Wallet G, Déjos M, Zheng X, Guitton P, N’Kaoua B. The use of virtual reality for episodic memory assessment: effects of active navigation. Exp Psychol. 2011;59(II):99–108. doi: 10.1027/1618-3169/a000131.

- 2. Whishaw IQ, Hines DJ, Wallace DG. Dead reckoning (path integration) requires the hippocampal formation: evidence from spontaneous exploration and spatial learning tasks in light (allothetic) and dark (idiothetic) tests. Behav Brain Res. 2001;127:49–69. doi: 10.1016/s0166-4328(01)00359-x.

- 3. Byagowi A. Man’s Vision Based SLAM by Using Visual Odometry and Perspective Images. Fifth International Conference on Computational Intelligence, Robotics and Autonomous Systems; 2008; Linz, Austria.

- 4. Massot S, Schneider A, Chacron MJ, Cullen KE. The vestibular system implements a linear‐nonlinear transformation in order to encode self‐motion. PLoS Biol. 2012;10(7):e1001365. doi: 10.1371/journal.pbio.1001365.

- 5. Stovall SH. Basic Inertial Navigation. China Lake, California: Naval Air Warfare Center Weapons Division; 1997.

- 6. Mueller C, Luehrs M, Baecke S, Adolf D, Luetzkendorf R, Luchtmann M, Bernarding J. Building virtual reality fMRI paradigms: a framework for presenting immersive virtual environments. J Neurosci Methods. 2012;209(2):290–298. doi: 10.1016/j.jneumeth.2012.06.025.

- 7. Ohyama S, Nishiike S, Watanabe H, Matsuoka K, Akizuki H, Takeda N, Harada T. Autonomic responses during motion sickness induced by virtual reality. Auris Nasus Larynx. 2007;34(3):303–306. doi: 10.1016/j.anl.2007.01.002.

- 8. Byagowi A, Moussavi Z. Design of a Virtual Reality Navigational (VRN) experiment for assessment of egocentric spatial cognition. Engineering in Medicine and Biology Society (EMBS), 2012 Annual International Conference of the IEEE; 2012; San Diego, California.

- 9. Moffat S, Zonderman AB, Resnick SM. Age differences in spatial memory in a virtual environment navigation task. Neurobiol Aging. 2001;22(5):787–796. doi: 10.1016/s0197-4580(01)00251-2.

- 10. Logitech. Logitech Attack™ 3 Joystick. 2012. http://www.logitech.com/en-ca/gaming/joysticks/302.

- 11. Zen D, Byagowi A, Garcia Campuzano MT, Kelly D, Lithgow B, Moussavi Z. The perceived orientation in people with and without Alzheimer’s. 6th International IEEE EMBS Conference on Neural Engineering; 2013; San Diego, California.

- 12. Drive Medical Design and Manufacturing. 2012. https://www.drivemedical.com; https://www.drivemedical.com/catalog/product_info.php?cPath=87_144&products_id=978.

- 13. Byagowi A. Man’s vision based SLAM by using visual odometry and perspective images. Fifth International Conference on Computational Intelligence, Robotics and Autonomous Systems; 2008; Linz, Austria.

- 14. Lin C, Chuang S, Chen Y, Ko L, Liang S, Jung T. EEG effects of motion sickness induced in a dynamic virtual reality environment. Conf Proc IEEE Eng Med Biol Soc. 2007;2007:3872–3875. doi: 10.1109/IEMBS.2007.4353178.