Abstract

A central question about spatial attention is whether it is referenced relative to the external environment or to the viewer. This question has received great interest in recent psychological and neuroscience research, with many, but not all, studies finding evidence for a viewer-centered representation. However, these previous findings were confined to computer-based tasks that involved stationary viewers. Because natural search behaviors differ from computer-based tasks in viewer mobility and spatial scale, it is important to understand how spatial attention is coded in the natural environment. To this end, we created an outdoor visual search task in which participants searched a large (690 square feet), concrete, outdoor space to report which side of a coin on the ground faced up. They began search in the middle of the space and were free to move around. Attentional cuing by statistical learning was examined by placing the coin in one quadrant of the search space on 50% of the trials. As in computer-based tasks, participants learned and used these regularities to guide search. However, cuing could be referenced to either the environment or the viewer. The spatial reference frame of attention shows greater flexibility in the natural environment than previously found in the lab.

Keywords: Spatial attention, visual statistical learning, spatial reference frame, probability cuing

Introduction

In perceiving and understanding the world, spatial attention allows us to prioritize goal-relevant locations and objects. An important feature of spatial attention is its spatial reference frame: attended locations may be coded relative to the viewer, relative to the external environment, or relative to both. Which reference frame is used has important functional consequences. People often move in their environment. If attention is viewer-centered, then it will likely change its environmental location when the viewer moves. But if attention is environment-centered, then it will likely stay in the same environmental locations following viewer movements. Understanding the spatial reference frame of attention is also theoretically important. Most researchers agree that attention reflects diverse cognitive and brain mechanisms. However, exactly how to subdivide attention remains a contentious issue (Awh, Belopolsky, & Theeuwes, 2012; Chun, Golomb, & Turk-Browne, 2011; Desimone & Duncan, 1995; Egeth & Yantis, 1997). Understanding whether different spatial reference frames are used to support different forms of attention is valuable for identifying subsystems of attention.

Spatial reference frame of attention

Several studies have examined the spatial reference frame of attention by asking people to move their eyes between the initial attentional cue and the subsequent probing of attention. Results from these studies are mixed, with many, but not all, finding evidence for a viewer-centered (particularly retinotopic) representation of attention (Abrams & Pratt, 2000; Burr & Morrone, 2012; Cavanagh, Hunt, Afraz, & Rolfs, 2010; Golomb, Chun, & Mazer, 2008; Jiang & Swallow, 2013a, 2013b; Mathôt & Theeuwes, 2010; Pertzov, Zohary, & Avidan, 2010; Wurtz, 2008). However, these studies have investigated relatively transient forms of attention that rise and dissipate within several hundred milliseconds. Yet attentional preferences in the real world could accumulate through repeated experience with the same environment. Indeed, laboratory experiments have demonstrated that people develop relatively stable attentional preferences for important locations under these circumstances (probability cuing; Druker & Anderson, 2010; Geng & Behrmann, 2002; Jiang, Swallow, Rosenbaum, & Herzig, 2013; Miller, 1988; Umemoto, Scolari, Vogel, & Awh, 2010). Because it takes time to form an environment-centered (e.g., spatiotopic) representation of space (Burr & Morrone, 2012), this form of sustained, experience-driven attention could rely on the external environment as its preferred reference frame.

However, recent studies on incidentally learned attention have found that it predominantly uses a viewer-centered frame of reference (Jiang & Swallow, 2013a). In one study, participants searched for a target on a display laid flat on a table. Unbeknownst to the participants, across multiple trials the target was more often found in one quadrant of the display than in the other quadrants. Although participants did not notice this, they became faster at finding the target when it appeared in the target-“rich” quadrant than in the “sparse” quadrants. After acquiring an attentional bias toward the rich quadrant, participants stood up and walked to an adjacent side of the table, producing a 90° change in viewpoint (Jiang & Swallow, 2013a). Results showed that the previously acquired attentional bias persisted for several hundred trials. Critically, the attentional bias moved to new environmental locations when the participant moved, suggesting that it relied on a viewer-centered reference frame (see also Jiang, Swallow, & Sun, 2014).

The egocentric nature of incidentally learned attention additionally manifested as a failure to prioritize environmentally rich locations that were random relative to the viewer’s perspective (Jiang, Swallow, & Capistrano, 2013; Jiang & Swallow, submitted). Participants in one study conducted visual search on a display laid flat on a stand. They moved to a random side of the display before each trial. Although the target was most often placed in a fixed quadrant of the display, participants were not faster in finding the target in the rich quadrant compared with the sparse quadrants (Jiang, Swallow, & Capistrano, 2013). However, when the target-rich quadrant was consistently referenced relative to the participants (e.g., it was always in the participants’ lower right visual field) participants were able to prioritize the rich quadrant (Jiang & Swallow, submitted). These findings indicated that incidentally learned attention was viewer-centered and was not updated by the viewers’ locomotion.

The viewer-centered representation poses several challenges for interpretation. The visual search tasks used above are reminiscent of natural behaviors such as foraging, where the searchers frequently change their location during the search task. Given that locations that are rich in food or other environmental resources should be independent of the searcher’s viewpoint, it is puzzling that incidentally learned attention would remain egocentric when evolutionary pressures should encourage the formation of environment-centered representations. How can we reconcile the empirical finding that incidentally learned attention is egocentric with the prediction that it should be environment-centered? One potential answer is that the nature of spatial representation differs between laboratory tasks and more naturalistic tasks. As reviewed next, laboratory tasks and naturalistic tasks differ significantly in their computational demands. These differences can potentially change how spatial attention is coded. To explain the discrepancy between the empirical findings in the lab and theoretical considerations of natural search and foraging behavior, it is necessary to examine the spatial reference frame of attention in a large-scale, natural environment.

Unique computational demands in large-scale natural environments

Several psychologists have championed an ecological approach to the study of human cognition (Droll & Eckstein, 2009; Gibson, 1986; Hayhoe, Shrivastava, Mruczek, & Pelz, 2003; Jovancevic, Sullivan, & Hayhoe, 2006; Neisser, 1976; Simons & Levin, 1998), with some researchers questioning whether laboratory findings are replicable in the real world (Kingstone, Smilek, Ristic, Kelland Friesen, & Eastwood, 2003). Although there are many reasons to assume that findings discovered in the lab will generalize to the real world, significant and potentially important differences exist between laboratory and real-world situations. These differences motivated us to examine spatial attention and learning in a large-scale, natural environment.

Consider the task of searching for a friend’s face in a crowded airport. Here, the search space is large and extends to distant regions, rather than being confined to a nearby computer monitor (as in the lab). Differences in the size and distance of the search region may affect how spatial attention is allocated. First, when the entire search space is within the searcher’s field of view, visual search primarily involves covert shifts of attention and overt eye movements. When the search region extends beyond one’s field of view, the searcher often needs to turn their body or head and may even need to move to new locations to fully explore it. In this situation, search relies more heavily on locomotion and entails active updating of the searcher’s location and heading. Second, in the laboratory the computer monitor is within arm’s reach, positioning the search items close enough for the searcher to readily act upon them (they are in “action space”). In contrast, most locations in the real world are beyond the searcher’s reach and therefore fall into “perception space,” where they can be perceived, but not readily acted upon. Because near and far locations may be attended differently (Brozzoli, Gentile, Petkova, & Ehrsson, 2011; Vatterott & Vecera, 2013), conclusions drawn from computerized tasks may not generalize to the real world.

A potential difference between attending to items in perception space and action space is the spatial reference frame used to code their locations. When perceiving objects, people often code the spatial properties of an object in an allocentric (e.g., object- or environment-centered) coordinate system (Bridgeman, Kirch, & Sperling, 1981). In contrast, when performing visuomotor action, people typically code the spatial properties of an object in an egocentric coordinate system (Goodale & Haffenden, 1998). Although spatial attention affects both visual perception and visuomotor action, changes from perception space to action space may correspond to a change in whether the attended locations are predominantly coded relative to the viewer or relative to an external reference frame (Obhi & Goodale, 2005; Wraga, Creem, & Proffitt, 2000).

To our knowledge, few studies have systematically examined spatial attention in a large, open space in the natural environment. The closest are a study of visual search in a mailroom (Foulsham, Chapman, Nasiopoulos, & Kingstone, 2014) and a set of studies of foraging behavior in a medium-sized room (Smith, Hood, & Gilchrist, 2008, 2010). In the mailroom search study, Foulsham and colleagues tracked participants’ eye movements while they searched for a pre-specified mail slot in a university faculty mailroom. They found that the mailroom search task relied on participants’ knowledge about what the target mail slot looked like, but was relatively insensitive to the bottom-up saliency of the slot (Foulsham et al., 2014; Tatler, Hayhoe, Land, & Ballard, 2011). They concluded that “principles from visual search in the laboratory influence gaze in natural behavior” (p. 8).

A second set of studies specifically examined the spatial reference frame used to code attended locations. Smith et al. (2010) tested participants in a room (4 × 4 m) that had lights embedded in the floor and that contained few environmental landmarks or visual features to guide attention. Participants’ task was to find the light that switched on to a pre-specified color. However, without telling the participants, Smith et al. (2010) placed the target light frequently (80% probability) on one side of the room. Participants learned this regularity and used it to guide foraging when both their starting position and the “rich” region were fixed in the environment (i.e., they found the target faster when it was on the “rich” side rather than the “sparse” side). In contrast, when the location of the rich region was variable relative to either the environment or the forager, the search advantage diminished. For example, when the rich side was fixed in the room but the participants’ starting position was randomized, there was little advantage for the rich side. In addition, no advantage was observed when the starting position was random and the rich region was fixed relative to the participant (e.g., always on the left side of their starting position). These findings suggest that stability in both the environmental and egocentric reference frames is necessary for learning to attend to important spatial locations.

Although the studies by Smith and colleagues (2008, 2010) could serve as a bridge between computer-based tasks and natural search, several features limit the conclusions that can be drawn from them. First, participants were tested in an indoor space that included few environmental landmarks. In natural search tasks, environmental cues could facilitate spatial updating and allocentric coding of the attended locations. Second, the target and distractor lights were indistinguishable until they were switched on, removing features that could attract or visually guide attention. This raises doubts about the utility of this task in characterizing major drivers of attention because both stimulus-driven attention and top-down attentional guidance would have played a limited role in this task (Egeth & Yantis, 1997). Finally, published studies using this paradigm administered no more than 80 trials per experiment, raising concerns about statistical power. The mailroom search task was visually guided and had indoor environmental cues, but it involved a single-trial design not suitable for investigating learning (Foulsham et al., 2014).

The current study characterizes the spatial reference frame of attention in a large, outdoor environment. By testing how people update their attentional bias when searching from different perspectives, our study serves as a bridge between research on spatial cognition (Mou, Xiao, & McNamara, 2008; Wang & Spelke, 2000) and visual attention (Nobre & Kastner, 2014; see Jiang, Swallow, & Sun, 2014 for additional discussions). However, unlike spatial cognition studies in which participants explicitly report their spatial memory after perspective changes, in our study participants were not required to remember the search environment. The formation of any spatial representations was simply a byproduct of performing visual search. Our study has three goals.

First, the methodological goal is to establish an experimental paradigm that quantifies visual search behavior in an external environment. Participants were tested in an outdoor space with nearly unlimited access to environmental landmarks (Figure 1). The search space was large: 8 × 8 m (64 m², about 690 square feet), necessitating head movement and locomotion. The task was to find a small coin on the ground and identify which side of the coin was up. Although we did not place distractors on the ground, naturally occurring visual distraction (e.g., patches of dirt, blowing leaves, cracks, bugs and debris) made detection of the coin difficult. This setup allowed us to examine visual search behavior relatively quickly: A 75-min testing session included about 200 trials, which was more than twice as many as in previous large-scale search studies.

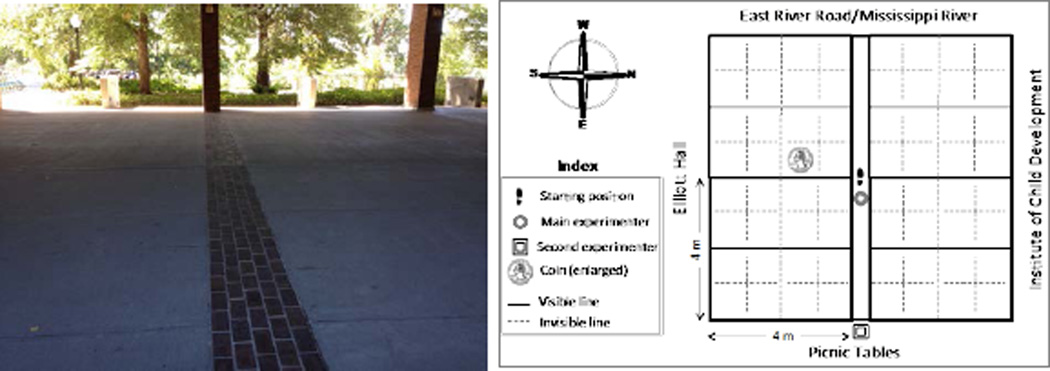

Figure 1.

Left. A photograph of the search space looking west from the second experimenter’s perspective. Right. An illustration of the search space and locations of major players.

The second goal is to address the theoretical question about the spatial reference frame of attention. We first established that similar to laboratory findings, natural search behavior was sensitive to statistical regularities in the environment (Druker & Anderson, 2010; Geng & Behrmann, 2002; Jiang, Swallow, Rosenbaum, & Herzig, 2013; Miller, 1988; Umemoto et al., 2010). We then asked whether large-scale search, in which the search region extends beyond proximal locations, was also egocentric.

By constructing a new paradigm and addressing the theoretical question raised above, the current study achieved our third goal of empirically characterizing spatial attention and relating it to everyday behavior.

Method

Overview

In each of three experiments, participants were asked to search for a coin in a large, outdoor area, and to report which side of the coin was facing up. The search space was large and the coin was small and masked by the concrete, making search relatively difficult. As in previous experiments on location probability cuing, the coin was more likely to be in one quadrant of the search region than in others. Across experiments we manipulated whether the “rich” region was consistently located relative to the environment, relative to the viewer, or both.

Participants

Forty-eight students from the University of Minnesota participated in this study for extra course credit or cash payment. Their ages ranged from 18 to 35 years. All participants were naïve to the purpose of the study and completed just one experiment. Participants had normal or corrected-to-normal visual acuity and could walk unassisted. Participants signed an informed consent form before the study.

There were 16 participants in each of three experiments. The sample size was chosen based on previous visual search studies that examined location probability learning (e.g., Jiang, Swallow, Rosenbaum et al., 2013, N = 8). These studies revealed large effect sizes (e.g., Cohen’s d = 1.60 in Jiang, Swallow, Rosenbaum et al., 2013’s Experiment 3), yielding an a priori statistical power of over .95 with a sample size of 16.
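The power claim can be checked informally by simulation. The sketch below is not the authors' calculation: it assumes a two-tailed one-sample t-test on each participant's rich-minus-sparse difference score, an alpha of .05, and normally distributed scores, none of which the text specifies. The function name `simulated_power` and its parameters are hypothetical, and the critical value 2.131 is the tabled two-tailed .05 cutoff for 15 degrees of freedom.

```python
import random
import statistics


def simulated_power(d=1.60, n=16, t_crit=2.131, n_sims=5000, seed=1):
    """Estimate power of a two-tailed one-sample t-test by Monte Carlo.

    d      : assumed standardized effect size (Cohen's d from the cited study)
    n      : participants per experiment
    t_crit : two-tailed .05 critical value for t with n - 1 = 15 df (assumed alpha)
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_sims):
        # Difference scores in standard-deviation units: mean d, SD 1.
        sample = [rng.gauss(d, 1.0) for _ in range(n)]
        mean = statistics.fmean(sample)
        sd = statistics.stdev(sample)
        t = mean / (sd / n ** 0.5)
        if abs(t) > t_crit:
            hits += 1
    return hits / n_sims


if __name__ == "__main__":
    print(f"Estimated power: {simulated_power():.3f}")
```

Under these assumptions the estimate should sit well above the .95 threshold stated in the text; a smaller true effect in the outdoor task would lower it accordingly.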

Testing space

The study was conducted in an open area underneath a large overhang of Elliott Hall, which houses the Psychology Department at the University of Minnesota. The overhang partially shielded participants from direct sun exposure or rain and was approximately 3.5 meters above the ground. Other than being shielded from above and slightly occluded by distant pillars, participants had full access to the outdoor environment in all directions around them. On the west side were a pedestrian sidewalk and East River Road, beyond which were trees and the Mississippi River. On the east side were several picnic tables and other University buildings. The south side of the search space was the main wing of Elliott Hall. The north side was a parking lot beyond which was the Institute of Child Development (Figure 1).

The ground was made of light gray concrete slabs, each measuring approximately 2 × 4 m. The search space consisted of 8 concrete slabs. A narrow strip (0.5 meters wide) of red bricks divided the space along the East-West direction (Figure 1). Centering on the red bricks, the search space could be naturally divided into four equal-sized regions (“quadrants”), each measuring 4 × 4 m. The entire search space was 64 m² (excluding the 0.5-meter wide red bricks along the median). The border of the space was marked by colored chalk and was visible from the center.

This part of campus was relatively free of pedestrian traffic. Most pedestrians avoided entering the search space and on occasions when they did, were quick to exit it. We allowed naturally occurring visual distraction to remain on the ground. These included debris, cracks, paint blobs, chalk marks, bugs, blowing leaves, and sunlight patches.

Participants were instructed to dress warmly for the outdoor experiment, which was conducted during the daytime and only when the outdoor temperature was comfortable and when there was no strong wind or blowing rain.

Materials and Equipment

The search space was divided into an invisible 8 × 8 matrix, yielding 64 possible target locations. The target could be placed in the approximate center of each cell. Participants stood at the center of the red bricks (the center of the search space) before each trial. A MacBook laptop was placed about 1 foot away from the participant on the bricks. Participants used an Apple Magic Mouse to make the response, which was recorded by the laptop via its long-range Bluetooth wireless connection. The Magic Mouse provided more reliable timing than a standard USB wireless mouse, especially at long distances between the mouse and the computer. The trial sequence was pre-generated and saved as PDF files. The PDF files were either printed out or sent to a smart phone. The experimenter relied on the printout or smart phone images to tell participants which direction to face and to determine where the coin should be placed and which side should be up.
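As an illustration of the 8 × 8 matrix, the following sketch maps a grid cell to its approximate center and to one of the four quadrants. The coordinate origin (north-west corner), the row/column ordering, the compass labels for the quadrants, and the function names are assumptions made for this example. The 0.5-meter brick strip is ignored, so each cell is treated as 1 × 1 m.

```python
GRID_SIZE = 8                  # 8 x 8 matrix of candidate coin locations
SIDE_M = 8.0                   # side length of the search space in meters
CELL_M = SIDE_M / GRID_SIZE    # 1 m per cell when the brick strip is ignored


def cell_center(row, col):
    """Return (x, y) in meters from the north-west corner (assumed origin).

    row 0 is the northernmost row and col 0 the westernmost column; both
    conventions are hypothetical and chosen only for illustration.
    """
    if not (0 <= row < GRID_SIZE and 0 <= col < GRID_SIZE):
        raise ValueError("row and col must be between 0 and 7")
    return ((col + 0.5) * CELL_M, (row + 0.5) * CELL_M)


def cell_quadrant(row, col):
    """Return the quadrant label ('NW', 'NE', 'SW', 'SE') containing a cell."""
    if not (0 <= row < GRID_SIZE and 0 <= col < GRID_SIZE):
        raise ValueError("row and col must be between 0 and 7")
    north_south = "N" if row < GRID_SIZE // 2 else "S"
    west_east = "W" if col < GRID_SIZE // 2 else "E"
    return north_south + west_east
```

Each quadrant then contains 16 of the 64 cells, which matches the four equal-sized regions described for the testing space.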

The target was a US quarter (diameter 2.4 cm) or a similarly sized zinc coin. A piece of paper was taped on each side of the coin to reduce the sound the coin would make when contacting the concrete floor. The paper was black on one side of the coin and silver on the other. These colors were chosen because they did not pop out from the concrete and they appeared equally distinct from the gray concrete. The coin was not easy to spot partly because the search space was more than 70,000 times the area of the coin.
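The size figures quoted in the text can be checked with a few lines of arithmetic. This is only a sanity check under simplifying assumptions: the coin is treated as a perfect circle of diameter 2.4 cm, the search space as exactly 8 × 8 m, and the variable names are illustrative.

```python
import math

SIDE_M = 8.0              # side of the square search space, in meters
SQFT_PER_M2 = 10.7639     # square feet per square meter
COIN_DIAMETER_CM = 2.4    # diameter of a US quarter as given in the text

area_m2 = SIDE_M ** 2                                     # 64 square meters
area_sqft = area_m2 * SQFT_PER_M2                         # roughly 689 square feet
coin_area_cm2 = math.pi * (COIN_DIAMETER_CM / 2) ** 2     # area of the coin face
area_ratio = (area_m2 * 10_000) / coin_area_cm2           # search area / coin area

if __name__ == "__main__":
    print(f"Search space: {area_m2:.0f} m^2 = {area_sqft:.0f} sq ft")
    print(f"Search space is about {area_ratio:,.0f} times the coin's area")
```

With these assumptions the ratio comes out comfortably above the 70,000 lower bound stated in the text.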

To ensure that participants could not hear the experimenter’s footsteps when the coin was placed, participants wore earplugs throughout the experiment that dampened sound by 30 dB. In addition, once participants were in position they closed their eyes. The experimenter left his/her original position only after participants had closed their eyes. The experimenter wore quiet shoes, was swift in placing the coin and returning to the original location, and minimized extraneous sounds or motion that might give away their whereabouts. Pilot testing and queries after experiments verified that participants were unaware of where the experimenter had been when their eyes were closed.

Procedure

At the beginning of each testing trial, the participant returned to the center of the search space (marked by a footprint drawing) and faced one of four directions as indicated by the experimenter: the River, Elliott Hall, Picnic Tables, or Child Development Building. Once the participant was in position, the experimenter asked them to close their eyes. The experimenter then swiftly but quietly placed the coin in the search space. The location of the coin and the side that was up (black or silver) were specified by the printout/smart phone. The experimenter returned to the original location on the red bricks and shouted “Go!” Participants then clicked the left side of the Bluetooth mouse. A beep indicated that the trial had started and the computer’s timer had been engaged. Participants opened their eyes to start search. They were allowed to freely move in the search space, which they often did (the results section will describe the movement pattern). Participants carried the Bluetooth mouse with them. Once they found the coin, they could respond immediately by clicking the left (for “black”) or right (for “silver”) side of the mouse to report the coin’s color. This stopped the computer’s timer. Participants had 30 s to complete each trial. Trials in which participants failed to respond within 30 s were assigned the maximum value (30 s) as the response time. The percentage of trials that timed out was 2.2% in Experiment 1, 2.0% in Experiment 2, and 2.4% in Experiment 3. The computer sounded a tone to indicate whether the response was correct. Participants then returned to the center of the search space for the next trial. To speed up the transition between trials and to reduce physical strain on the main experimenter, a second experimenter ran in and picked up the coin from the preceding trial while the main experimenter placed the coin for the next trial. The second experimenter always returned to a fixed location outside the search space at the start of the next trial. Only one coin was on the ground during a trial.

One unexpected difficulty in executing this study was the physical strain exerted on the experimenters, who needed to bend down and place the coin rapidly and quietly 192 times in the 75-minute testing session. In later phases of our research we improved the procedure by substituting the US quarters with zinc coins. The experimenters relied on a magnetic pickup tool to help place and retrieve the coin. Half of the participants in each experiment were tested using the improved setup. This change did not affect the experimental procedure from the participants’ perspective.

Design

Participants were tested in 192 trials in each experiment. One participant in Experiment 3 completed only 185 trials before the computer ran out of battery. We manipulated the direction the participants faced at the beginning of the trial and where the coin was likely to be located. The search space was divided into four equal-sized regions centered on the starting position of the participant.

In Experiment 1 participants always faced the same direction at the beginning of the search trial. The coin was in one “rich” region of the space on 50% of the trials, and in each of the three “sparse” regions on 16.7% of the trials. The region that was “rich” was fixed in the external environment (e.g., the quadrant nearest the River and Elliott Hall) and relative to the direction the participant faced at the beginning of the trial (e.g., their front left). Exactly which quadrant was “rich” was counterbalanced across participants, but remained the same for a given participant throughout the experiment. The main question was whether there would be a search advantage when the coin was in the rich quadrant rather than the sparse quadrants.

In Experiments 2 and 3 we dissociated the viewer-centered and environment-centered reference frames by changing the facing direction of the participants on each trial. Participants always started search from the center of the space. However, on each trial they faced a randomly selected direction (River, Picnic Tables, Elliott Hall, or Child Development). In Experiment 2, the target-rich quadrant was fixed in the environment (e.g., always near the River and Elliott Hall) regardless of which direction the participants faced at the beginning of the trial, which was uncorrelated with the target-rich region. In Experiment 3, the target-rich quadrant was tied to the participants’ starting direction (e.g., always to the right and back side of the participants). The target-rich quadrant was completely random relative to the external environment. These two experiments tested whether learning of frequently attended locations depends on environmental stability, viewpoint consistency, or both.
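The three designs can be summarized in a short trial-list generator. This is a minimal sketch, not the authors' actual sequence-generation procedure: the quadrant and direction indexing, the balancing of facing directions within each quadrant, the dictionary keys, and the function name `make_trials` are all assumptions introduced for illustration. Quadrants and facing directions are numbered clockwise so that a viewer-relative quadrant `v` seen while facing direction `f` corresponds to environmental quadrant `(v + f) % 4`.

```python
import random

N_TRIALS = 192
# Facing directions listed clockwise (north, east, south, west per the text).
DIRECTIONS = ["Child Development", "Picnic Tables", "Elliott Hall", "River"]
# Environmental quadrants 0..3 clockwise: NE, SE, SW, NW (assumed labels).
# Viewer quadrants 0..3 clockwise: front-right, back-right, back-left, front-left.


def make_trials(experiment, rich=0, fixed_facing=0, seed=None):
    """Build a shuffled trial list for Experiment 1, 2, or 3 (hypothetical sketch).

    rich         : index of the rich quadrant, in environmental coordinates for
                   Experiments 1 and 2 and viewer coordinates for Experiment 3
    fixed_facing : facing direction used on every trial of Experiment 1
    """
    if experiment not in (1, 2, 3):
        raise ValueError("experiment must be 1, 2, or 3")
    rng = random.Random(seed)
    trials = []
    for quadrant in range(4):
        # 50% of trials in the rich quadrant, one sixth in each sparse quadrant.
        n = N_TRIALS // 2 if quadrant == rich else N_TRIALS // 6
        for i in range(n):
            # Assumption: facing directions are balanced within each quadrant.
            facing = fixed_facing if experiment == 1 else i % 4
            if experiment == 3:
                viewer_q = quadrant                  # rich region tied to viewer
                env_q = (quadrant + facing) % 4
            else:
                env_q = quadrant                     # rich region tied to environment
                viewer_q = (quadrant - facing) % 4
            trials.append({
                "facing": DIRECTIONS[facing],
                "env_quadrant": env_q,
                "viewer_quadrant": viewer_q,
                "cell_in_quadrant": rng.randrange(16),   # one of 16 grid cells
                "side_up": rng.choice(["black", "silver"]),
            })
    rng.shuffle(trials)
    return trials
```

With facing directions balanced in this way, the rich quadrant in Experiment 2 falls equally often in each viewer-relative quadrant, and the rich quadrant in Experiment 3 falls equally often in each environmental quadrant, which is the dissociation the two experiments rely on.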

Participants received no information about the target’s location probability. However, after earplugs were removed at the end of the experiment we asked them several questions. First, was the coin’s location random or was it more often found in some parts of the search space than others? Regardless of their answer, we then informed participants that the coin’s location was not random. They were led to the center of the search space and were asked to select the region where the target was most often found. Finally, participants were free to comment on any strategies they might have used in the experiment.

Videos of the experimental procedure can be found at: http://jianglab.psych.umn.edu/LargeScaleSearch/LargeScaleSearch.html

Results

1. Accuracy

Incorrect responses were relatively infrequent. Most of the recorded errors were attributed to two technical problems. (1) The Magic Mouse, which had no distinctive left and right buttons, mistakenly assigned the participants’ response to the wrong button; (2) The participant started the trial before the experimenter was ready, and was told to skip the trial by clicking the wrong button. Even though we could not tell which of the error trials were technical errors and which were participant errors, overall error rates were low in all experiments. Error rates, including technical errors, were 4.6% in Experiment 1, 5.1% in Experiment 2, and 2.6% in Experiment 3. Error trials were excluded from the RT analyses.

2. Experiment 1

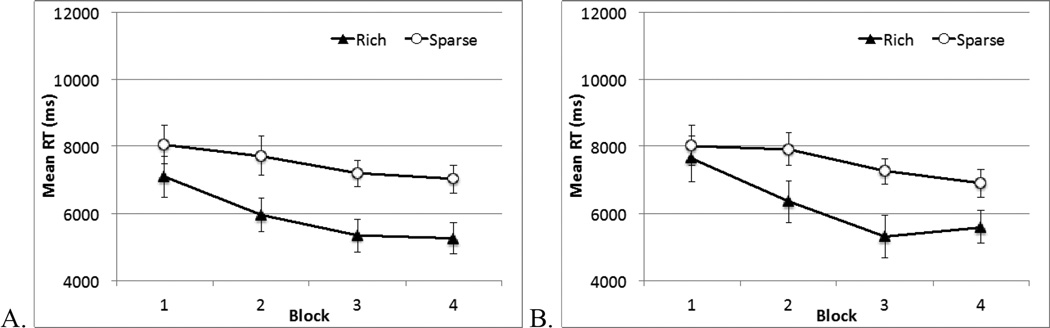

We divided the experiment into four blocks of trials and calculated mean RT for each block, separately for trials in which the coin was in the rich quadrant and the sparse quadrants (Figure 2A). Participants found the coin faster when it appeared in the rich quadrant, leading to a significant main effect of target location, F(1, 15) = 25.01, p < .001, ηp² = .63. The main effect of block was significant, F(3, 45) = 5.58, p = .002, ηp² = .26, showing faster RT in later blocks. The interaction between target location and block was not significant, F(3, 45) = 1.17, p > .30. Similar to previous studies, probability cuing occurred rapidly and became noticeable during the first block of 48 trials (Jiang, Swallow, Rosenbaum, et al., 2013; Smith et al., 2010; Umemoto et al., 2010). RT was not faster in the very first trial in which the target was in the rich quadrant than in the very first trial in which it was in the sparse quadrants (RT in the rich quadrant on the first trial: mean = 7429 ms, median = 6124 ms; RT in a sparse quadrant on the first trial: mean = 7415 ms, median = 5469 ms), t(15) = 0.01, p > .50. Similar results were found in the next two experiments (ps > .50 when comparing the first trial in which the target was in the sparse or rich conditions). The early emergence of the location probability effect likely reflected early learning rather than other extraneous factors that were controlled by careful counterbalancing and randomization.

Figure 2.

Results from Experiment 1. (A) Data from all trials. (B) Data including only trials in which the target was in a different region than in the preceding trial. Error bars show ±1 S.E. of the mean.
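The block-wise summary plotted in Figure 2A can be sketched as follows. The trial record layout (keys `rt_s`, `timed_out`, `error`, `rich`) and the function name are hypothetical. The sketch assumes four blocks of 48 trials in presentation order, excludes error trials, and assigns timed-out trials the 30 s maximum, as described in the Procedure. Whether timed-out trials were also counted as errors is not stated, so here they are kept.

```python
from statistics import fmean

TIMEOUT_S = 30.0          # maximum response time assigned to timed-out trials
TRIALS_PER_BLOCK = 48     # 192 trials split into four blocks


def block_means(trials):
    """Mean RT (seconds) per (block, condition) for one participant.

    trials : list of dicts in presentation order with hypothetical keys
             'rt_s' (float or None), 'timed_out' (bool), 'error' (bool),
             and 'rich' (bool, coin in the rich quadrant).
    """
    cells = {}
    for index, trial in enumerate(trials):
        if trial["error"]:
            continue                      # error trials are excluded from RT analyses
        rt = TIMEOUT_S if trial["timed_out"] else trial["rt_s"]
        block = index // TRIALS_PER_BLOCK
        condition = "rich" if trial["rich"] else "sparse"
        cells.setdefault((block, condition), []).append(rt)
    return {key: fmean(values) for key, values in cells.items()}
```

The resulting per-participant cell means would then feed a repeated-measures analysis with target location and block as factors, which is the analysis reported in the text.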

The data reported above showed that visually guided search behavior in the natural world was sensitive to statistical regularities. Could this result be attributed to short-term, repetition priming of the target’s location? Previous laboratory studies showed that targets are found faster when their location is repeated on consecutive trials (Maljkovic & Nakayama, 1996; Walthew & Gilchrist, 2006). Although the coin rarely appeared in the same location on consecutive trials in this experiment (there were 64 locations), it did appear in the same quadrant as the previous trial about a quarter of the time. Because repetition was more likely for the rich than for the sparse quadrants, it could produce differential task performance in these two conditions. A further analysis that included only trials in which the target was in a different quadrant than on the preceding trial (when short-term repetition priming is counter-productive) was therefore performed. This analysis removed 29.0% of the trials: 23.1% from the rich condition and 5.9% from the sparse condition. The results were similar to the full dataset (Figure 2B). RT was significantly faster in the rich quadrant than the sparse quadrants, F(1, 15) = 14.74, p < .002, ηp² = .50, and significantly faster in later blocks than earlier blocks, F(3, 45) = 6.03, p < .002, ηp² = .29. These two factors showed a significant interaction, as the preference for the rich quadrant became stronger in later blocks, F(3, 45) = 2.89, p < .05, ηp² = .16. Thus, location probability cuing does not depend on immediate quadrant repetitions.
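The repetition control amounts to a simple filter over the trial sequence. The sketch below is illustrative only: the function name is hypothetical, and keeping the first trial (which has no predecessor) is an assumption, since the text does not say how it was handled.

```python
def non_repeat_indices(quadrants):
    """Return indices of trials whose quadrant differs from the preceding trial.

    quadrants : sequence of quadrant labels in presentation order.
    The first trial has no predecessor and is kept (an assumption).
    """
    keep = [0] if len(quadrants) > 0 else []
    for i in range(1, len(quadrants)):
        if quadrants[i] != quadrants[i - 1]:
            keep.append(i)
    return keep


if __name__ == "__main__":
    sequence = ["NE", "NE", "SW", "SW", "NW"]
    print(non_repeat_indices(sequence))   # trials 0, 2, and 4 survive the filter
```

Applying the block-wise RT summary only to the surviving trials gives the kind of analysis shown in Figure 2B.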

3. Experiment 2

Participants in Experiment 2 faced a random direction at the start of the visual search trial. Although the coin was more often placed in a fixed region of the environment (e.g., nearest to the River and Elliott Hall), the rich region was random relative to the participants at the beginning of the trial.

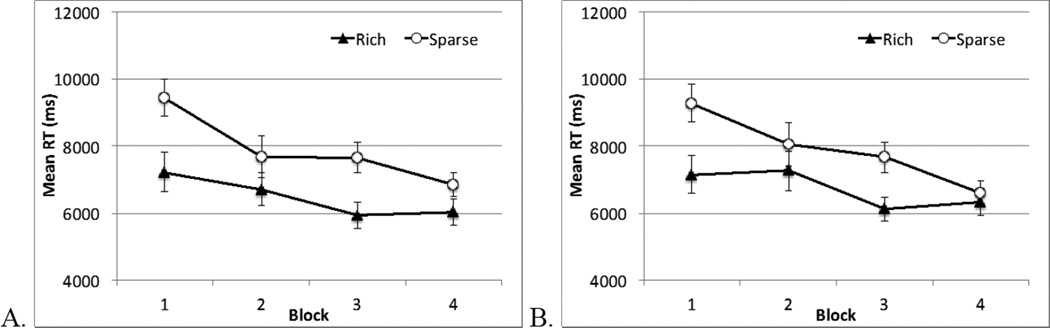

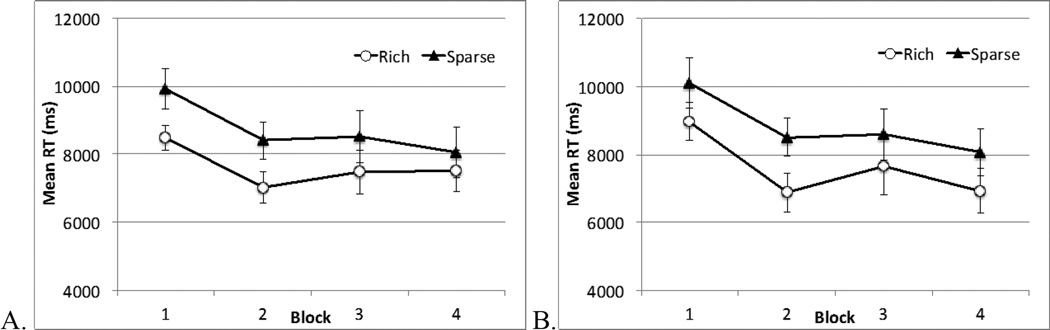

In contrast to the computer-based search task (Jiang, Swallow, & Capistrano, 2013), the location probability manipulation influenced search even when the rich region was randomly positioned relative to the participant. When data from all trials were considered (Figure 3A), RT was faster when the target was in the environmentally rich region rather than the sparse regions, F(1, 15) = 15.98, p < .001, ηp² = .52. Overall RT also became faster as the experiment progressed, F(3, 45) = 14.08, p < .001, ηp² = .48. A significant interaction between the two factors showed that the preference for the rich quadrant became smaller as the experiment progressed, F(3, 45) = 3.73, p = .018, ηp² = .20. To ensure that the advantage in the rich quadrant was not due to short-term priming from location repetition, in an additional analysis we only included trials in which the target’s quadrant on the current trial differed from that on the preceding trial (Figure 3B). This analysis removed 31.7% of the trials (24.6% from the rich condition and 6.1% from the sparse condition). Similar to the full dataset, search was significantly faster in the rich condition than the sparse condition, F(1, 15) = 17.09, p < .001, ηp² = .53, and this effect became smaller as the experiment progressed, resulting in a significant interaction between condition and block, F(3, 45) = 3.04, p = .039, ηp² = .17.

Figure 3.

Results from Experiment 2. The rich region was environmentally stable but random relative to the participants at the beginning of the trial. (A) Data from all trials. (B) Data from trials in which the target was in a different region than in the preceding trial. Error bars show ±1 S.E. of the mean.

Experiment 2 presented evidence for environment-centered learning of attended locations in a large-scale search task. Participants were able to prioritize an environmentally rich region of the search space, even though that region was randomly located relative to their perspective at the start of the trial. This learning emerged rapidly but declined somewhat as the experiment progressed.

4. Experiment 3

Is it possible for participants to acquire visual statistics that are stable relative to their starting position, even though those statistics are random in the environment? Note that this question is not redundant to that of Experiment 2. Environmental stability and viewpoint consistency may each be a sufficient condition or they may both be necessary. That is, evidence for an environment-centered coding from Experiment 2 does not preclude the possibility that viewpoint consistency alone could be sufficient for probability cuing. In Experiment 3 the coin appeared equally often in any of the four regions in the environment. However, it was more often placed in one region relative to the direction that the participant faced at the beginning of the trial (e.g., behind them and to their right).
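The dissociation between the two reference frames in this design can be made concrete with a small sketch. The coding below is hypothetical and not taken from the study: it assumes four cardinal facing directions and labels quadrants in clockwise order, so that a region fixed relative to the viewer maps onto different environmental regions as the facing direction changes.

```python
# Hypothetical coding, all in clockwise order (an assumption of this sketch).
ENV_QUADRANTS = ["NE", "SE", "SW", "NW"]
VIEWER_QUADRANTS = ["front-right", "back-right", "back-left", "front-left"]
FACINGS = ["N", "E", "S", "W"]


def viewer_quadrant(env_index: int, facing_index: int) -> int:
    """Convert an environment-centered quadrant to a viewer-centered one."""
    return (env_index - facing_index) % 4


def env_quadrant(viewer_index: int, facing_index: int) -> int:
    """Convert a viewer-centered quadrant to an environment-centered one."""
    return (viewer_index + facing_index) % 4


if __name__ == "__main__":
    rich_viewer = VIEWER_QUADRANTS.index("back-right")
    for f, facing in enumerate(FACINGS):
        env = ENV_QUADRANTS[env_quadrant(rich_viewer, f)]
        print(f"facing {facing}: viewer-centered back-right is the {env} region")
```

With equally likely facing directions, a viewer-centered rich region visits each environmental region equally often, which matches the requirement that the coin appear equally often in the four regions of the environment.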

One difficulty with this design, however, is that some participants were likely to search the locations that were in front of them first. As a result, search should be faster for regions that are in front of the participant, regardless of whether they were rich or sparse, and front-back differences could swamp effects of location probability. The front-back imbalance was not an issue in Experiment 2 because the target-rich region could be anywhere relative to the participants. Therefore in Experiment 3 we focused our analyses on trials in which the front-back confound was removed [footnote1].

Figure 4A shows data from the rich region and the directionality-matched sparse region. For half of the participants the rich region was in front, whereas for the other half the rich region was behind. Table 1 lists mean RT for these two groups of participants.

Figure 4.

Results from Experiment 3. The rich region was defined relative to the participants’ facing direction at the start of the trial. We included only data from the sparse region that had the same front-back direction as the rich region. (A) Data from all trials. (B) Data from trials in which the target was in a different quadrant than the preceding trial’s quadrant in the viewer-centered space. Error bars show ±1 S.E. of the mean.

Table 1.

Mean RT (ms) from Experiment 3. The sparse quadrant matched the rich quadrant in directionality relative to the viewer (both front, or both back). There were 8 participants in each group. Standard error of the mean is shown in parentheses.

| Directionality | Condition | Block 1 | Block 2 | Block 3 | Block 4 |
|---|---|---|---|---|---|
| Front | Rich | 8011 (465) | 5852 (610) | 5969 (564) | 6082 (502) |
| Front | Sparse | 8858 (779) | 7473 (734) | 7081 (994) | 6452 (712) |
| Back | Rich | 8960 (563) | 8199 (425) | 8976 (870) | 8939 (881) |
| Back | Sparse | 10986 (753) | 9336 (746) | 9973 (968) | 9656 (1047) |
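As a reading aid, the size of the rich-region advantage in Table 1 can be computed directly from the tabulated means. The sketch below only subtracts the reported group means (sparse minus rich, in ms); the variable and function names are illustrative, and no inferential statistics are implied.

```python
from typing import Dict, List

# Mean RT (ms) per block, copied from Table 1.
TABLE_1: Dict[str, Dict[str, List[int]]] = {
    "Front": {
        "Rich": [8011, 5852, 5969, 6082],
        "Sparse": [8858, 7473, 7081, 6452],
    },
    "Back": {
        "Rich": [8960, 8199, 8976, 8939],
        "Sparse": [10986, 9336, 9973, 9656],
    },
}


def rich_advantage(group: Dict[str, List[int]]) -> List[int]:
    """Sparse minus rich mean RT for each block (positive = rich is faster)."""
    return [sparse - rich for rich, sparse in zip(group["Rich"], group["Sparse"])]


if __name__ == "__main__":
    for name, group in TABLE_1.items():
        print(name, rich_advantage(group))
```

In every block and in both groups the difference is positive, that is, mean RT was numerically shorter in the rich region.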

We conducted an ANOVA using directionality (front or behind) as a between-subject factor, target location (rich or sparse) and block (1–4) as within-subject factors. This analysis included only data from the sparse quadrant that matched the rich quadrant in directionality. The main effect of directionality was significant, F(1, 14) = 22.94, p < .001, ηp2 = .62, with faster RT for regions in front rather than behind the participants. However, directionality did not interact with other factors. The main effect of target region was significant, F(1, 14) = 5.89, p = .029, ηp2 = .30, showing faster RT in the rich region than the sparse regions. In addition, RT improved as the experiment progressed, leading to a significant main effect of block, F(3, 42) = 3.52, p = .023, ηp2 = .20. None of the other effects were significant, all Fs < 1.
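For readers who wish to check the reported effect sizes, partial eta squared can be recovered from an F ratio and its degrees of freedom using the standard identity ηp2 = (F × df_effect) / (F × df_effect + df_error). The sketch below applies this identity to the three significant effects above; the function name is illustrative.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    numerator = f_value * df_effect
    return numerator / (numerator + df_error)


if __name__ == "__main__":
    # (label, F, df_effect, df_error) as reported for Experiment 3.
    effects = [
        ("directionality", 22.94, 1, 14),
        ("target region", 5.89, 1, 14),
        ("block", 3.52, 3, 42),
    ]
    for label, f_value, df_effect, df_error in effects:
        eta = partial_eta_squared(f_value, df_effect, df_error)
        print(f"{label}: {eta:.2f}")
```

The values obtained this way agree with the reported effect sizes to two decimal places.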

Because Experiment 3 involved a viewer-centered manipulation, the rich condition was no more likely than the sparse condition to include trials in which the coin repeatedly appeared in the same region of external space. However, repetition in the viewer-centered space happened more often in the rich condition than the sparse condition. We therefore analyzed data from trials in which the current trial’s target was in a different quadrant than the preceding trial’s quadrant in the viewer-centered space (e.g., on trial N-1 the target was in the viewer’s upper left, on trial N the target was in the viewer’s upper right). Similar to the earlier analysis, only directionality-matched data were included. Altogether 27.8% of the data were removed, 25.8% from the rich condition and 2.0% from the sparse condition. As shown in Figure 4B, participants were significantly faster in the rich condition than the sparse condition, even on trials when the target’s quadrant in a viewer-centered space did not repeat, F(1, 14) = 5.08, p < .05, ηp2 = .27.

5. Across-experiment comparisons

All three experiments revealed location probability learning. However, it is possible that either the lack of viewer-consistency (Experiment 2) or environmental stability (Experiment 3) interfered with learning, relative to when the two reference frames were aligned (Experiment 1). To address this question we performed two planned comparisons. First, we compared Experiment 2 with Experiment 1 to examine whether the lack of viewpoint consistency interfered with learning. An ANOVA using experiment as a between-subject factor (Experiment 1 or 2), target location (rich or sparse quadrants) and block (1–4) as within-subject factors revealed just one significant effect involving experiment: the three-way interaction among the three factors, F(3, 90) = 3.79, p < .013, ηp2 = .11. Specifically, when viewpoint was consistent (Experiment 1), the preference for the rich quadrant increased over time, but when viewpoint was inconsistent (Experiment 2), the preference for the rich quadrant decreased over time.

Next, we compared Experiment 3 with Experiment 1 to test whether the lack of environmental stability (Experiment 3) interfered with location probability learning. This analysis included just the directionality-matched data from both experiments. Participants in Experiment 3 were significantly slower overall, F(1, 30) = 4.34, p < .05, ηp2 = .13. However, experiment did not interact with quadrant condition or block, all ps > .25.

6. Recognition

Did participants become aware of where the target was likely to be found? Table 2 lists the recognition responses. When asked whether they thought the coin was more often in some places than in others, the vast majority (42 of 48) said no, and only 1 participant reported deliberately searching the rich quadrant first. However, probability cuing was not strictly implicit either. A strict criterion of implicit learning is chance-level performance on forced-choice recognition tests (Smyth & Shanks, 2008; Stadler & Frensch, 1998). The vast majority of the participants were able to identify the rich quadrant. Chi-square tests showed that recognition rates were significantly above chance in Experiment 1 (p < .001) and Experiment 2 (p < .001) but not in Experiment 3 (p > .50). Although recognition rate was high, participants did not spontaneously notice and use the statistical information to guide visual search. From our own observations, participants who said the coin’s location was random (42 of 48 participants) took several seconds to identify (often correctly) the rich region when asked to do so. The type of learning exhibited in this study therefore fell into a gray area between strictly implicit learning and explicit learning. We will discuss the implications of this finding later.

Table 2.

The number of people whose answers indicated explicit knowledge for each question during the recognition test (out of 16 in each experiment)

| Experiment | Coin was more often in one quadrant than others | Identified the rich region in forced choice |
|---|---|---|
| 1 | 3 | 11 |
| 2 (environment) | 2 | 14 |
| 3 (viewer) | 2 | 5 |
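The comparison of forced-choice recognition against chance can be sketched from the counts in Table 2. This is an illustration rather than the original analysis: it assumes a chance level of 25% (one of four quadrants) and a one-degree-of-freedom chi-square goodness-of-fit test, for which the p value equals erfc(sqrt(χ²/2)). With an expected count of only 4 correct responses, the chi-square approximation is rough, and an exact binomial test may be preferable.

```python
import math
from typing import Tuple


def chi_square_vs_chance(correct: int, n: int, chance: float = 0.25) -> Tuple[float, float]:
    """Goodness-of-fit chi-square (df = 1) for correct vs. incorrect choices.

    The default chance level of 0.25 is an assumption (four quadrants).
    """
    wrong = n - correct
    expected_correct = n * chance
    expected_wrong = n * (1 - chance)
    chi2 = ((correct - expected_correct) ** 2 / expected_correct
            + (wrong - expected_wrong) ** 2 / expected_wrong)
    # Survival function of the chi-square distribution with 1 df.
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


if __name__ == "__main__":
    # Number of participants (out of 16) who identified the rich region (Table 2).
    counts = {"Experiment 1": 11, "Experiment 2": 14, "Experiment 3": 5}
    for name, correct in counts.items():
        chi2, p_value = chi_square_vs_chance(correct, 16)
        print(f"{name}: chi2 = {chi2:.2f}, p = {p_value:.4f}")
```

Under these assumptions the statistic is about 16.3 for Experiment 1, 33.3 for Experiment 2, and 0.33 for Experiment 3, in line with the pattern of significance reported above.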

A few participants reported trying to find a pattern in the coin’s location early in the experiment. However, upon failing to detect the pattern they reported settling on idiosyncratic search patterns. One person reported looking at where the coin was found on the last trial, consistent with short-term priming. However, one other person reported avoiding the region where the coin was found last, consistent with inhibition of return. Some participants reported using strategies that were viewer-centered, such as looking to the front left before sweeping clockwise, or searching the regions in front before turning to the back.

7. Qualitative description of search behavior

Observations during testing showed that participants used a combination of locomotion, body and/or head turns, and eye movements during search. Locomotion in the search space was common earlier in the experiment, but it gradually shifted to body and/or head turns. Far locations were associated with more movement in the search space.

One concern is that participants could have decided to align their viewer-centered and environment-centered reference frames before initiating search. For example, a participant might always turn to face south after hearing the “Go” signal to start search. We did not observe any participant adopting this strategy. In addition, a small subset of the participants wore a head-mounted camera in the experiment to verify these observations. Sample footage can be found at the following website: http://jianglab.psych.umn.edu/LargeScaleSearch/LargeScaleSearch.html.

General Discussion

We designed a new experimental paradigm to characterize the spatial reference frame of attention in a large-scale, natural environment. Participants searched for a coin and identified its color in an outdoor space (8 × 8 m) that afforded them unlimited access to existing external landmarks. In addition, they were free to move in the space and to turn their body and head. Because the search space was large and search could not be accomplished with eye movements or covert shifts of attention alone, the allocation of spatial attention may not be the same as that observed in tasks administered on a computer screen. The main findings and their theoretical implications are as follows.

In three experiments visual search in the large-scale task exhibited sensitivity to statistical regularities in the location of the target coin. When the target was more often placed in one region of the environment, participants were faster finding it in the rich region. Learning emerged relatively quickly, often as early as the first block of 48 trials. By the end of the experiment (less than 200 trials), search was 10–30% faster in the rich region than in the sparse regions.

The natural search task showed evidence of environment-centered and viewer-centered representations of attention. Location probability cuing was observed when the target-rich region was consistent relative to both the environment and the viewer (Experiment 1), when it was consistent relative to the environment but not to the initial facing direction of the viewer (Experiment 2), as well as when it was consistent relative to the initial facing direction of the viewer but not to the environment (Experiment 3). Subtle differences were also noted in these experiments. The attentional preference for the rich quadrant was less sustained in Experiment 2 relative to Experiment 1, suggesting that viewpoint consistency was useful in sustaining location probability learning. Probability cuing was less robust in Experiment 3 than in Experiment 1, suggesting that environmental stability was useful in producing stable effects. Despite these differences, all three experiments revealed large and significant location probability learning.

These findings differed qualitatively from both computer-based studies and an indoor floor-light foraging study (Jiang, Swallow, & Capistrano, 2013; Smith et al., 2010). When tested on a computer monitor laid flat on a stand, participants were unable to acquire location probability cuing for regions that were environmentally rich but variable relative to their perspective. Viewer-centered learning in location probability cuing occurs even when participants performed a realistic, but computer-based task (e.g., finding a traffic icon on a satellite map of a University campus; Jiang & Swallow, submitted). In contrast, the floor-light foraging task used in other studies showed no evidence of viewer-centered learning. When the starting position was random, participants failed to prioritize the rich side of search space when it was consistently located to their left or right (Smith et al., 2010). In the outdoor large-scale search task, however, either environmental stability or viewpoint consistency is sufficient for location probability learning. Therefore, spatial attention appeared to be more flexibly referenced in the natural environment than in other settings.

In Experiment 3, the rich region was always in a consistent part of the space relative to the viewer’s facing direction at the beginning of the trial. However, because participants were free to move in the search space, the relationship between their viewpoint and the rich quadrant would change as soon as the participant moved (e.g., by walking to a new location or turning their head/body). Any learning here would have to reflect the consistent relationship between their initial facing direction and the rich region. Indeed, significant effects were found only after separating trials according to whether the rich quadrant was in front of, or behind, the participants. The fact that learning occurred despite this difficulty suggests that movements through the search space were sensitive to learning where the target was likely to be located. Participants could be learning which direction to move at the start of the trial (such as sweeping the visual field in a counterclockwise direction). In the laboratory the viewer-centered component of attention is primarily retinotopic (e.g., Golomb et al., 2008) or head-centered (Jiang & Swallow, 2013b). The viewer-centered component in the natural environment may also be head/eye-centered, although it may also include body-centered representations. The exact format of viewer-centered attention should be further investigated in the future.

The environment-centered learning shown in Experiment 2 suggests that humans are capable of acquiring visual statistical learning even when their viewpoint changed from trial to trial. The way that they accomplish this learning, however, is unclear. There are several possibilities. One is that participants could have encoded the target’s location relative to the external world, perhaps by using the geometry of the large space or salient landmarks. Alternatively, participants could have encoded the target’s location in a viewer-centered map that included environmental landmarks or that was updated with movements through space. Finally, participants might have acquired several viewer-centered representations that were retrieved based on salient landmarks (e.g., when facing Elliott Hall the target was more likely to be in front and to the right, but when facing the river the target was more likely to be in front and to the left). Although environment-centered representations seem more parsimonious than either type of viewer-centered representations, it is empirically difficult to fully dissociate these possibilities (Wang & Spelke, 2000). In fact, a similar debate in object recognition remains contentious (Biederman & Gerhardstein, 1993; Logothetis & Pauls, 1995; Tarr & Bülthoff, 1995).

Regardless of the nature of the representations and computations used to support environment-centered coding, this study is the first to demonstrate that statistical learning can tolerate changes in viewpoint. This finding is importantly different from, and contradictory to, findings from search tasks performed on a computer monitor (Jiang & Swallow, submitted; Jiang, Swallow, & Capistrano, 2013). Combined, these data suggest that statistical learning and its influence on spatial attention are supported by multiple systems. Some may be viewpoint specific and others may be viewpoint invariant.

Why was spatial attention more flexibly referenced in the large, outdoor environment than in laboratory, computer-based tasks? There are several possibilities. First, the outdoor environment has richer environmental cues in a greater range of depth (e.g., the Mississippi River, large buildings, and other landmarks) that may facilitate environment-centered coding. Second, in the outdoor task participants had greater explicit access to the target’s location probability, especially when the rich quadrant was environmentally stable. This may increase the flexibility of how spatial attention can be coded (see Jiang, Swallow, & Capistrano, 2013; Jiang, Swallow, & Sun, 2014, for additional evidence that explicit awareness mediates the nature of spatial coding). Finally, owing to increased locomotion and head/body turns, the outdoor search task involves more diverse forms of attentional movement than those shown in computer-based tasks. It is possible that whereas premotor or oculomotor movements of attention are viewer-centered, other forms of attentional movements (e.g., those that involve locomotion and head/body rotation) may be more environment-centered (Jiang & Swallow, 2013b). Future studies are needed to pinpoint factors that contribute to a change in reference frame between large-scale outdoor tasks and small-scale computer-based tasks. Regardless of the answer to this question, the current study helps resolve some puzzling findings in previous research. Our findings showed that when tested in the natural environment, visual statistical learning exhibits great flexibility in acquiring environmental statistics. Not only can people acquire an attentional bias toward important locations defined relative to their own perspective, but they also can extract environmental statistics that are unstable due to self-movement. Our findings therefore provide some of the most compelling evidence for the utility of statistical learning in everyday attention and human performance. Whether such learning is possible in the complete absence of awareness, however, is an important question to test in the future.

Our study shows that conclusions drawn based on computerized tasks may not always generalize to more naturalistic tasks. These findings echo the call of several psychologists who have championed an ecological approach to the study of human cognition (Gibson, 1979; Kingstone et al., 2003; Neisser, 1976; Simons & Levin, 1998). At the very least, our findings call for a re-evaluation of how the factors that are controlled in a laboratory setting might obscure the way attention and other cognitive processes work in naturalistic settings (Kingstone et al., 2003).

The new experimental paradigm used here may be fruitfully used in future studies to characterize spatial attention in large-scale spaces. The paradigm has several strengths. Unlike the indoor floor-light search task, this paradigm tests visually guided search behavior in a relatively small amount of time. In addition, the outdoor testing environment enhances the similarities between the testing environment and those encountered in everyday search tasks. Finally, the setup is simple. It does not require expensive or heavy equipment and therefore can be widely used by many laboratories.

A major disadvantage of this paradigm is its reliance on humans to place the target. This led to several seconds of pauses between trials and exerted physical strain on the experimenters. These disadvantages may be addressed with virtual reality. However, although the current technology used in virtual reality provides good visual and proprioceptive cues, it does not produce good proximal cues such as the feeling of the feet on the ground and the change in air pressure when moving. In rats, place cells fire less vigorously in a virtual reality environment than in the real world (Ravassard et al., 2013). This difference may change the degree to which environmental stability can support coding of attended locations.

The tradeoff between the ease of administration and realism needs to be explored in future studies. One contribution of the current study is that it establishes a basis for comparison with future virtual reality studies. Such comparison may inform us about the relative importance of different locomotion cues in spatial attention.

Summary & Conclusion

We designed a new experimental paradigm that tested spatial attention in a large-scale natural environment. Like computerized tasks, large-scale search showed sensitivity to statistical regularities in the visual environment. Participants learned to prioritize locations that had a high probability of containing the target, and they did so often without deliberate intention. However, unlike computerized tasks or indoor foraging tasks, participants were able to reference attended locations relative to either the external environment or their own facing direction. These differences between large-scale and computer-based search tasks suggest that spatial attention may rely on several distinct mechanisms that are recruited according to the task and search context. Some forms of attention, such as those relying primarily on the oculomotor or premotor systems of attention, may be egocentric. Other forms of attention, such as those that support large-scale locomotion and shift of attention, may be more flexibly referenced. Our study calls for future investigations that extend computerized findings to large-scale environments. It is no longer adequate to assume that findings in the lab generalize to more natural analogs.

Acknowledgments

This study was supported in part by NIH MH102586. Y.V.J. and B.Y.W. made equal contribution to the design and execution of the study. Author contributions are as follows. Y.V.J. and K.M.S. conceptualized the study, all authors designed the study, Y.V.J., B.Y.W., and D.M. set up the study and performed the experiments, Y.V.J. and B.Y.W. analyzed the data, Y.V.J. and K.M.S. wrote the paper. We are grateful for research assistants who served as the second experimenter in this study: Anthony Asaad, Joe Enabnit, Hyejin Lee, Youngki Hong, Ryan Smith, Bryce Palm, and Tegan Carr. Thanks to Gordon Legge for discussion and Fang Li for the suggestion to use magnetic tools.

Footnotes

When data from all regions were included in Experiment 3, mean RT in the rich condition was 7786, 7025, 7472, and 7510 ms across the four blocks. Mean RT in the sparse condition was 9230, 8084, 7840, and 7180 ms across the four blocks. None of the effects involving condition were significant, all ps > .10.

References

- Abrams RA, Pratt J. Oculocentric coding of inhibited eye movements to recently attended locations. Journal of Experimental Psychology: Human Perception and Performance. 2000;26(2):776–788. doi: 10.1037//0096-1523.26.2.776.

- Awh E, Belopolsky AV, Theeuwes J. Top-down versus bottom-up attentional control: a failed theoretical dichotomy. Trends in Cognitive Sciences. 2012;16(8):437–443. doi: 10.1016/j.tics.2012.06.010.

- Biederman I, Gerhardstein PC. Recognizing depth-rotated objects: evidence and conditions for three-dimensional viewpoint invariance. Journal of Experimental Psychology: Human Perception and Performance. 1993;19(6):1162–1182. doi: 10.1037//0096-1523.19.6.1162.

- Bridgeman B, Kirch M, Sperling A. Segregation of cognitive and motor aspects of visual function using induced motion. Perception & Psychophysics. 1981;29(4):336–342. doi: 10.3758/bf03207342.

- Brozzoli C, Gentile G, Petkova VI, Ehrsson HH. FMRI adaptation reveals a cortical mechanism for the coding of space near the hand. Journal of Neuroscience. 2011;31(24):9023–9031. doi: 10.1523/JNEUROSCI.1172-11.2011.

- Burr DC, Morrone MC. Constructing stable spatial maps of the world. Perception. 2012;41(11):1355–1372. doi: 10.1068/p7392.

- Cavanagh P, Hunt AR, Afraz A, Rolfs M. Visual stability based on remapping of attention pointers. Trends in Cognitive Sciences. 2010;14(4):147–153. doi: 10.1016/j.tics.2010.01.007.

- Chun MM, Golomb JD, Turk-Browne NB. A taxonomy of external and internal attention. Annual Review of Psychology. 2011;62:73–101. doi: 10.1146/annurev.psych.093008.100427.

- Desimone R, Duncan J. Neural mechanisms of selective visual attention. Annual Review of Neuroscience. 1995;18:193–222. doi: 10.1146/annurev.ne.18.030195.001205.

- Droll J, Eckstein M. Gaze control and memory for objects while walking in a real world environment. Visual Cognition. 2009;17(6–7):1159–1184.

- Druker M, Anderson B. Spatial probability aids visual stimulus discrimination. Frontiers in Human Neuroscience. 2010;4. doi: 10.3389/fnhum.2010.00063.

- Egeth HE, Yantis S. Visual attention: control, representation, and time course. Annual Review of Psychology. 1997;48:269–297. doi: 10.1146/annurev.psych.48.1.269.

- Foulsham T, Nasiopoulos E, Kingstone A. Top-Down and Bottom-Up Aspects of Active Search in a Real-World Environment. Canadian Journal of Experimental Psychology. 2014;68(1):8–19. doi: 10.1037/cep0000004.

- Geng JJ, Behrmann M. Probability cuing of target location facilitates visual search implicitly in normal participants and patients with hemispatial neglect. Psychological Science. 2002;13(6):520–525. doi: 10.1111/1467-9280.00491.

- Gibson JJ. The Ecological approach to visual perception. Hillsdale (N.J.): Lawrence Erlbaum Associates; 1986.

- Golomb JD, Chun MM, Mazer JA. The native coordinate system of spatial attention is retinotopic. Journal of Neuroscience. 2008;28(42):10654–10662. doi: 10.1523/JNEUROSCI.2525-08.2008.

- Goodale MA, Haffenden A. Frames of reference for perception and action in the human visual system. Neuroscience and Biobehavioral Reviews. 1998;22(2):161–172. doi: 10.1016/s0149-7634(97)00007-9.

- Hayhoe MM, Shrivastava A, Mruczek R, Pelz JB. Visual memory and motor planning in a natural task. Journal of Vision. 2003;3(1):49–63. doi: 10.1167/3.1.6.

- Jiang YV, Swallow KM. Body and head tilt reveals multiple frames of reference for spatial attention. Journal of Vision. 2013a;13(13):9. doi: 10.1167/13.13.9.

- Jiang YV, Swallow KM. Spatial reference frame of incidentally learned attention. Cognition. 2013b;126(3):378–390. doi: 10.1016/j.cognition.2012.10.011.

- Jiang YV, Swallow KM, Capistrano CG. Visual search and location probability learning from variable perspectives. Journal of Vision. 2013;13(6):13. doi: 10.1167/13.6.13.

- Jiang YV, Swallow KM, Rosenbaum GM, Herzig C. Rapid acquisition but slow extinction of an attentional bias in space. Journal of Experimental Psychology: Human Perception and Performance. 2013;39(1):87–99. doi: 10.1037/a0027611.

- Jiang YV, Swallow KM, Sun L. Egocentric coding of space for incidentally learned attention: Effects of scene context and task instructions. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2014;40(1):233–250. doi: 10.1037/a0033870.

- Jovancevic J, Sullivan B, Hayhoe M. Control of attention and gaze in complex environments. Journal of Vision. 2006;6(12):1431–1450. doi: 10.1167/6.12.9.

- Kingstone A, Smilek D, Ristic J, Kelland Friesen C, Eastwood JD. Attention, researchers! It is time to take a look at the real world. Current Directions in Psychological Science. 2003;12(5):176–180.

- Logothetis NK, Pauls J. Psychophysical and physiological evidence for viewer-centered object representations in the primate. Cerebral Cortex. 1995;5(3):270–288. doi: 10.1093/cercor/5.3.270.

- Maljkovic V, Nakayama K. Priming of pop-out: II. The role of position. Perception & Psychophysics. 1996;58(7):977–991. doi: 10.3758/bf03206826.

- Mathôt S, Theeuwes J. Gradual remapping results in early retinotopic and late spatiotopic inhibition of return. Psychological Science. 2010;21(12):1793–1798. doi: 10.1177/0956797610388813.

- Miller J. Components of the location probability effect in visual search tasks. Journal of Experimental Psychology: Human Perception and Performance. 1988;14(3):453–471. doi: 10.1037//0096-1523.14.3.453.

- Mou W, Xiao C, McNamara TP. Reference directions and reference objects in spatial memory of a briefly viewed layout. Cognition. 2008;108(1):136–154. doi: 10.1016/j.cognition.2008.02.004.

- Neisser U. Cognition and reality: principles and implications of cognitive psychology. San Francisco: W.H. Freeman; 1976.

- Nobre AC, Kastner S. The Oxford handbook of attention. Oxford: Oxford University Press; 2014.

- Obhi SS, Goodale MA. The effects of landmarks on the performance of delayed and real-time pointing movements. Experimental Brain Research. 2005;167(3):335–344. doi: 10.1007/s00221-005-0055-5.

- Pertzov Y, Zohary E, Avidan G. Rapid formation of spatiotopic representations as revealed by inhibition of return. Journal of Neuroscience. 2010;30(26):8882–8887. doi: 10.1523/JNEUROSCI.3986-09.2010.

- Ravassard P, Kees A, Willers B, Ho D, Aharoni D, Cushman J, … Mehta MR. Multisensory control of hippocampal spatiotemporal selectivity. Science. 2013;340(6138):1342–1346. doi: 10.1126/science.1232655.

- Simons DJ, Levin DT. Failure to detect changes to people during a real-world interaction. Psychonomic Bulletin & Review. 1998;5(4):644–649.

- Smith AD, Hood BM, Gilchrist ID. Visual search and foraging compared in a large-scale search task. Cognitive Processing. 2008;9(2):121–126. doi: 10.1007/s10339-007-0200-0.

- Smith AD, Hood BM, Gilchrist ID. Probabilistic cuing in large-scale environmental search. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2010;36(3):605–618. doi: 10.1037/a0018280.

- Smyth AC, Shanks DR. Awareness in contextual cuing with extended and concurrent explicit tests. Memory & Cognition. 2008;36(2):403–415. doi: 10.3758/mc.36.2.403.

- Stadler MA, Frensch PA. Handbook of implicit learning. Thousand Oaks: Sage; 1998.

- Tarr MJ, Bülthoff HH. Is human object recognition better described by geon structural descriptions or by multiple views? Comment on Biederman and Gerhardstein (1993). Journal of Experimental Psychology: Human Perception and Performance. 1995;21(6):1494–1505. doi: 10.1037//0096-1523.21.6.1494.

- Tatler BW, Hayhoe MM, Land MF, Ballard DH. Eye guidance in natural vision: reinterpreting salience. Journal of Vision. 2011;11(5):5. doi: 10.1167/11.5.5.

- Umemoto A, Scolari M, Vogel EK, Awh E. Statistical learning induces discrete shifts in the allocation of working memory resources. Journal of Experimental Psychology: Human Perception and Performance. 2010;36(6):1419–1429. doi: 10.1037/a0019324.

- Vatterott DB, Vecera SP. Prolonged disengagement from distractors near the hands. Frontiers in Psychology. 2013;4:533. doi: 10.3389/fpsyg.2013.00533.

- Walthew C, Gilchrist ID. Target location probability effects in visual search: an effect of sequential dependencies. Journal of Experimental Psychology: Human Perception and Performance. 2006;32(5):1294–1301. doi: 10.1037/0096-1523.32.5.1294.

- Wang RF, Spelke ES. Updating egocentric representations in human navigation. Cognition. 2000;77(3):215–250. doi: 10.1016/s0010-0277(00)00105-0.

- Wraga M, Creem SH, Proffitt DR. Perception-action dissociations of a walkable Müller-Lyer configuration. Psychological Science. 2000;11(3):239–243. doi: 10.1111/1467-9280.00248.

- Wurtz RH. Neuronal mechanisms of visual stability. Vision Research. 2008;48(20):2070–2089. doi: 10.1016/j.visres.2008.03.021.