Abstract

How we perceive emotional signals from our environment depends on our personality. Alexithymia, a personality trait characterized by difficulties in emotion regulation, has been linked to aberrant brain activity during visual emotional processing. Whether alexithymia also affects the brain’s perception of emotional speech prosody is currently unknown. We used functional magnetic resonance imaging to investigate the impact of alexithymia on hemodynamic activity in three a priori regions of the prosody network: the superior temporal gyrus (STG), the inferior frontal gyrus and the amygdala. Twenty-two subjects performed an explicit task (emotional prosody categorization) and an implicit task (metrical stress evaluation) on the same prosodic stimuli. Irrespective of task, alexithymia was associated with a blunted response of the right STG and the bilateral amygdalae to angry, surprised and neutral prosody. Individuals with difficulty describing feelings deactivated the left STG and the bilateral amygdalae to a lesser extent in response to angry than to neutral prosody, suggesting that they perceived angry prosody as relatively more salient than neutral prosody. In conclusion, alexithymia may be associated with a generally blunted neural response to speech prosody. Such restricted prosodic processing may contribute to the problems in social communication associated with this personality trait.

Keywords: alexithymia, emotional prosody, amygdala, superior temporal gyrus, inferior frontal gyrus

INTRODUCTION

The perception and interpretation of emotional signals is an important part of social communication. Body gestures and posture, facial expressions and tone of voice provide crucial insight into another person’s mind (Van Kleef, 2009). However, how emotional signals are perceived and interpreted may differ considerably across individuals, and the same emotional signal can evoke different responses in different people (Hamann and Canli, 2004; Ormel et al., 2013). Indeed, recent neuroimaging studies indicate that personality modulates the brain’s response to emotional signals in our environment (e.g. Canli et al., 2001; Hooker et al., 2008; Brück et al., 2011a; Brühl et al., 2011; Frühholz et al., 2011).

Difficulties interpreting emotions lie at the core of alexithymia (‘no words for feelings’), a personality construct referring to a specific deficit in emotional processing (Sifneos, 1973). Individuals scoring high on alexithymia have difficulty identifying, analyzing and verbalizing their feelings, reading emotions from faces (e.g. Parker et al., 2005; Prkachin et al., 2009; Swart et al., 2009) and describing others’ emotional experiences (Bydlowski et al., 2005). These difficulties in the cognitive processing of emotions, which constitute the cognitive alexithymia dimension, may be accompanied by a reduced capacity to experience emotional arousal (affective alexithymia dimension). Flattened affect paired with diminished empathy for the feelings of others (Guttman and Laporte, 2002; Grynberg et al., 2010) may lead others to perceive alexithymic individuals as cold and distant (Spitzer et al., 2005) and interpersonally indifferent (Vanheule et al., 2007), resulting in problems in social life.

In the past decade, neuroimaging studies have begun to reveal the neural basis of the emotion processing deficits associated with alexithymia. Using functional magnetic resonance imaging (fMRI), these studies have demonstrated aberrant brain activity in individuals scoring high on alexithymia across a variety of emotional processing tasks (for a meta-analysis, see van der Velde et al., 2013), such as the viewing of facial and bodily expressions of emotion (Berthoz et al., 2002; Mériau et al., 2006; Kugel et al., 2008; Pouga et al., 2010), empathy for pain (Bird et al., 2010) and the imagery of autobiographical emotional events (Mantani et al., 2005). Such differences were found not only during explicit processing (i.e. when participants were asked to explicitly evaluate the emotional dimension of the stimuli) but also during implicit processing (i.e. when participants were asked to attend to a dimension other than emotion or were unaware of the emotional dimension; see De Houwer, 2006), such as during the brief presentation of masked emotional faces (Leweke et al., 2004; Kugel et al., 2008; Duan et al., 2010; Reker et al., 2010; see Grynberg et al., 2012, for a review).

Previous research into the neural basis of alexithymia has mostly focused on the processing of visual emotional stimuli such as facial or bodily expressions of emotion or emotional pictures and videos. Surprisingly, the impact of alexithymia on the perception of emotional prosody (the melody of speech) has received little attention, despite the importance of prosody for conveying emotion through the voice in everyday conversation. In an electroencephalography (EEG) study using a cross-modal affective priming paradigm, we tested the impact of alexithymia on the N400 component, an index of perceived mismatches in affective meaning, in response to music and speech (Goerlich et al., 2011). Alexithymia correlated negatively with N400 amplitudes for mismatching music and speech, suggesting that people scoring high on this personality trait may be less sensitive to aurally perceived emotions. A reduced sensitivity to emotional speech prosody in alexithymia was confirmed in a further EEG study, which additionally showed that alexithymia affected not only the explicit but also the implicit perception of emotional prosody (Goerlich et al., 2012).

Taken together, these findings suggest that alexithymia is linked to differences in the way the brain processes emotions conveyed through the voice. However, the brain regions underlying such differences remain unknown as neuroimaging studies with the necessary higher spatial resolution to identify them are currently lacking. Therefore, this fMRI study aimed to investigate how alexithymia affects the neural processing of emotional prosody. As the impact of alexithymia may vary depending on whether attention is directed toward the emotional prosodic dimension or not, we investigated both explicit processing (participants categorized emotional prosody, i.e. attention was directed toward the emotional dimension) and implicit processing of the same prosodic stimuli (participants evaluated the metrical stress position, i.e. attention was directed toward a different dimension than emotional prosody).

The neural network underlying emotional prosody perception has been investigated by numerous studies over the past decades (for a recent review, see e.g. Kotz and Paulmann, 2011). A recent meta-analysis of the lesion literature concluded that the right hemisphere is relatively more involved in prosodic processing than the left (Witteman et al., 2011). Regarding a specific network for prosodic processing, converging evidence suggests the involvement of fronto-temporal regions and subcortical structures (for a recent meta-analysis, see Witteman et al., 2012). The processing of emotional prosody has been proposed to involve three phases (Schirmer and Kotz, 2006). In the initial phase, basic acoustic properties are extracted, a process presumably mediated by the primary auditory cortex and adjacent middle superior temporal gyrus (STG) (Wildgruber et al., 2009). In the second phase, the extracted acoustic information is integrated into an emotional percept or ‘gestalt’. This process takes place in the superior temporal cortex (STC), with the laterality and anterior–posterior distribution of activity within the STC being sensitive to stimulus-specific features (phonetic medium, valence) as well as to task conditions such as attentional focus (for meta-analyses, see Frühholz and Grandjean, 2013; Witteman et al., 2012). In the third and final phase, emotional prosody is explicitly evaluated and integrated with other cognitive processes in the inferior frontal gyrus (IFG) (Brück et al., 2011b). 
With respect to the involvement of subcortical structures, the amygdala has been implicated by several studies in emotional prosody processing (Sander et al., 2005; Wildgruber et al., 2008; Ethofer et al., 2009; Wiethoff et al., 2009; Leitman et al., 2010; Brück et al., 2011a; Mothes-Lasch et al., 2011; Jacob et al., 2012; Frühholz and Grandjean, in press, but see Adolphs and Tranel, 1999; Adolphs et al., 2001; Wildgruber et al., 2005; Witteman et al., 2012), with the role ascribed to the amygdala in this context being the initial detection of emotional salience and relevance (Kotz and Paulmann, 2011). The amygdala is a crucial structure for the processing of emotions in general (see Armony, 2013, for a recent review) and has further been suggested to act as a general detector of personal significance (Sander et al., 2003). Amygdala responsiveness seems to depend on personality characteristics, as has recently been demonstrated for the case of neuroticism (Brück et al., 2011a), and several studies reported a reduced responsiveness of the amygdala during the implicit and explicit processing of visual emotional information in alexithymia (Leweke et al., 2004; Kugel et al., 2008; Pouga et al., 2010).

Taken together, previous evidence suggests that the STG, the IFG and the amygdala are involved in the neural processing of emotional prosody. Therefore, these three regions were chosen as a priori regions of interest (ROIs) for the present study. The aim of the study was to investigate the impact of alexithymia on the neural processing of emotional prosody under explicit as well as under implicit task conditions. Given previous evidence of a reduced sensitivity to emotional prosody and a reduced responsiveness of the amygdala to visual emotional information in alexithymia, we hypothesized diminished ROI activity during the implicit and explicit processing of emotional prosody with higher levels of alexithymia.

METHODS

Participants

A total of 22 subjects (nine males; mean age 24.8 ± 5.3 years) participated in the study. Three subjects performed at chance level on the implicit task while performing normally on the explicit task; their data were therefore excluded from analyses of the implicit task. All participants were right-handed as determined by the Edinburgh Handedness Inventory (mean 88.11 ± 11.32, minimum 67). Participants were native speakers of Dutch, had normal or corrected-to-normal vision, no hearing problems and no current or past psychiatric or neurological disorders. All participants gave informed consent prior to the experiment and received compensation for participation. The study was approved by the local ethics committee and conducted in accordance with the Declaration of Helsinki.
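The handedness values above are consistent with the Edinburgh laterality quotient, which runs from −100 (consistently left-handed) to +100 (consistently right-handed). A minimal sketch of that quotient, assuming the reported values are laterality quotients and that the right- and left-hand preference points have already been tallied (the tallying step and the function name are illustrative, not taken from the study):

```python
def laterality_quotient(right_points: float, left_points: float) -> float:
    """Edinburgh laterality quotient: 100 * (R - L) / (R + L).

    Returns a value in [-100, 100]; positive values indicate right-hand preference.
    """
    total = right_points + left_points
    if total <= 0:
        raise ValueError("at least one preference point is required")
    return 100.0 * (right_points - left_points) / total


# Hypothetical tallies: 17 points for the right hand, 3 for the left.
print(laterality_quotient(17, 3))  # 70.0
```

Under this formula, even the lowest score in the sample (67) reflects a clear right-hand preference.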

Materials

Bisyllabic pseudowords (see Appendix A) were generated. All pseudowords obeyed Dutch phonotactics and were verified to be free of semantic content. Two professional actors (one male and one female) spoke each pseudoword with neutral, (pleasantly) surprised and angry prosody, with stress on the first and on the second syllable. Stimuli were recorded at 16-bit resolution and a 44.1 kHz sampling rate in a soundproof booth. Surprised and angry prosody were chosen in addition to neutral prosody to sample a positive and a negative emotion that are both considered approach emotions (thus avoiding a confound of the approach–withdrawal dimension). Items were intensity-normalized (i.e. mean intensity did not differ between categories) and had a mean duration of 756 ± 65 ms. In line with previous literature (Scherer, 2003), the emotional categories differed from neutral prosody in mean F0 and F0 variability, and anger additionally differed in intensity variability (Table 1). The validity of the intended prosodic contrasts was verified by a panel of five healthy volunteers, who classified each stimulus in a forced-choice task (categories: angry, surprised, happy, sad, neutral and other). Only pseudowords whose prosodic category was classified correctly by at least 80% of the raters for both actors were selected.
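The selection rule can be made concrete. With five raters, an 80% criterion means that at least four of the five must choose the intended category, and an item is kept only if this holds for the recordings of both actors. A minimal sketch; the data layout, the pseudowords `bafo` and `kemi` and the rater labels are invented for illustration:

```python
from collections import defaultdict

ACTORS = ("male", "female")  # one male and one female actor, as in the text


def passes(labels, intended, min_percent=80):
    """True if at least min_percent % of raters chose the intended category."""
    correct = sum(label == intended for label in labels)
    return correct * 100 >= min_percent * len(labels)


def select_items(ratings, min_percent=80):
    """Keep (pseudoword, prosody) pairs that pass the criterion for every actor.

    ratings maps (pseudoword, intended_prosody, actor) -> list of rater labels.
    """
    verdicts = defaultdict(dict)
    for (word, prosody, actor), labels in ratings.items():
        verdicts[(word, prosody)][actor] = passes(labels, prosody, min_percent)
    return sorted(item for item, by_actor in verdicts.items()
                  if set(by_actor) == set(ACTORS) and all(by_actor.values()))


# Hypothetical ratings from a five-person panel.
example_ratings = {
    ("bafo", "angry", "male"): ["angry"] * 4 + ["other"],   # 4/5 = 80%: passes
    ("bafo", "angry", "female"): ["angry"] * 5,              # 5/5: passes
    ("kemi", "angry", "male"): ["angry"] * 3 + ["sad"] * 2,  # 3/5 = 60%: fails
    ("kemi", "angry", "female"): ["angry"] * 5,
}

print(select_items(example_ratings))  # [('bafo', 'angry')]
```

Comparing `correct * 100` with `min_percent * len(labels)` keeps the threshold test in integer arithmetic, so exactly 4 of 5 (80%) passes without floating-point rounding concerns.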

Table 1.

Acoustic properties of the prosodic stimuli per emotional category

| | Neutral | Anger | Surprise |
|---|---|---|---|
| Mean intensity (dB) | 79.45 | 79.27 | 80.64 |
| Mean variation (s.d.) of intensity (dB) | 8.84 | 10.74 | 8.83 |
| Mean F0 (Hz) | 180.73 | 281.35 | 282.46 |
| Mean variation (s.d.) of F0 (Hz) | 44.78 | 78.56 | 101.19 |
| Mean total duration (s) | 0.79 | 0.76 | 0.72 |

Two categorization tasks, one implicit and one explicit, were created using identical stimuli. In the implicit task, the subjects’ attention was directed toward a non-emotional dimension: they decided whether the metrical stress fell on the first or the second syllable of the pseudoword. In the explicit task, attention was directed toward the emotional prosody dimension: subjects decided whether the pseudowords were spoken with neutral, angry or surprised intonation. Tasks and instructions were identical except for the words that instructed participants to respond to either non-emotional (implicit task) or emotional (explicit task) prosodic characteristics.

From the pool of validated stimuli, 32 items of each emotional category were selected, half of which had metrical stress on the first syllable, the other half on the second syllable. Speaker gender was balanced across items.

Procedure

Each subject completed both tasks (12 min each). The implicit task was always presented first to prevent subjects from explicitly attending to the emotional dimension of the stimuli during this task. Subjects were told that they would hear nonsense words and were instructed to classify each word on the task-relevant dimension (emotion or metrical stress) as quickly and accurately as possible with a right-hand button press. The assignment of categories to response buttons was counterbalanced across subjects. Participants were told that they could respond while the stimulus was still playing (i.e. reaction time (RT) was measured from stimulus onset).

Subjects first practiced the tasks in the scanner with simulated scanner noise until they reached at least 75% accuracy. The experimental run then started, comprising a total of 96 trials (32 items per emotional category). Throughout the experiment, a black fixation cross was presented in the center of a gray background. Auditory stimuli were presented binaurally through MR-compatible headphones, and each trial ended 2 s after stimulus onset. Stimuli were presented in an event-related fashion with a jittered inter-stimulus interval (between 4 and 8 s). The order of stimulus presentation was pseudo-random, with no more than two consecutive presentations of the same stimulus category. Subjects were instructed to keep their gaze on the fixation cross throughout the experiment. Stimulus presentation was controlled using E-Prime 1.2, and stimuli were presented at 16-bit resolution and a 44.1 kHz sampling rate at a comfortable intensity level. All subjects reported that the stimuli could be perceived clearly despite the scanner noise.
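The ordering constraint and the jitter can be sketched as follows. This is an illustration under stated assumptions, not the study's E-Prime script: categories are drawn one trial at a time, weighted by how many items each has left, and a category is skipped whenever it would create a third consecutive repetition; the 4 to 8 s interval is assumed to be drawn uniformly and added after each 2-s trial. Simple rejection sampling (reshuffling until the constraint holds) is avoided because valid shuffles of 96 trials are rare.

```python
import random

CATEGORIES = ("neutral", "angry", "surprised")
ITEMS_PER_CATEGORY = 32  # 96 trials in total, as in the text
MAX_RUN = 2              # at most two consecutive trials of one category


def max_run_length(seq):
    """Length of the longest run of identical consecutive entries."""
    longest = run = 1
    for prev, cur in zip(seq, seq[1:]):
        run = run + 1 if cur == prev else 1
        longest = max(longest, run)
    return longest


def make_order(rng, max_restarts=1000):
    """Build a category sequence trial by trial without exceeding MAX_RUN repeats.

    Categories are drawn with probability proportional to their remaining items;
    if only the blocked category is left, the sequence is restarted.
    """
    n_trials = len(CATEGORIES) * ITEMS_PER_CATEGORY
    for _ in range(max_restarts):
        remaining = {c: ITEMS_PER_CATEGORY for c in CATEGORIES}
        order = []
        while len(order) < n_trials:
            last = order[-MAX_RUN:]
            blocked = last[0] if len(last) == MAX_RUN and len(set(last)) == 1 else None
            allowed = [c for c, n in remaining.items() if n > 0 and c != blocked]
            if not allowed:
                break  # dead end: start over
            choice = rng.choices(allowed, weights=[remaining[c] for c in allowed])[0]
            order.append(choice)
            remaining[choice] -= 1
        else:
            return order
    raise RuntimeError("could not satisfy the run-length constraint")


def make_onsets(n_trials, rng, trial_s=2.0, isi_range=(4.0, 8.0)):
    """Onset times in seconds; each 2-s trial is followed by a uniform jitter (assumption)."""
    onsets, t = [], 0.0
    for _ in range(n_trials):
        onsets.append(round(t, 3))
        t += trial_s + rng.uniform(*isi_range)
    return onsets


rng = random.Random(2013)  # fixed seed so the example is reproducible
order = make_order(rng)
onsets = make_onsets(len(order), rng)
print(order[:6], onsets[:3])
```

This sampler yields balanced, constraint-satisfying sequences but does not draw uniformly from all valid sequences; for counterbalancing purposes that is usually acceptable.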

Twenty-item Toronto Alexithymia Scale

The 20-item Toronto Alexithymia Scale (TAS-20) is the most widely used measure of alexithymia, with demonstrated validity, reliability and stability (Bagby et al., 1994a,b). A validated Dutch translation of the scale was used (Kooiman et al., 2002). The TAS-20 consists of 20 self-report items rated on a five-point Likert scale (1: strongly disagree, 5: strongly agree), five of which are negatively keyed (reverse-scored). It comprises three subscales: (1) difficulty identifying feelings (e.g. ‘I often don’t know why I’m angry’), (2) difficulty describing feelings (e.g. ‘I find it hard to describe how I feel about people’) and (3) externally oriented thinking (e.g. ‘I prefer talking to people about their daily activities rather than their feelings’). Possible scores range from 20 to 100, with higher scores indicating higher degrees of alexithymia.
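Scoring the TAS-20 amounts to reverse-keying the five negatively keyed items (a rating x becomes 6 − x) and summing all 20 items. A minimal sketch; the item numbers in `REVERSE_KEYED` follow the commonly published TAS-20 key and are an assumption here that should be checked against the scoring instructions of the Dutch version (Kooiman et al., 2002):

```python
# Item numbers (1-based) of the five negatively keyed TAS-20 items.
# ASSUMPTION: commonly published TAS-20 key; verify for the Dutch version.
REVERSE_KEYED = frozenset({4, 5, 10, 18, 19})
CLINICAL_CUTOFF = 61  # cut-off for clinical alexithymia (Taylor et al., 1997)


def tas20_total(responses):
    """Total TAS-20 score (20-100) from 20 ratings on a 1-5 scale, item 1 first."""
    if len(responses) != 20:
        raise ValueError("the TAS-20 has exactly 20 items")
    total = 0
    for item, rating in enumerate(responses, start=1):
        if not 1 <= rating <= 5:
            raise ValueError(f"item {item}: rating {rating} is outside 1-5")
        # Reverse-key negatively keyed items so that higher always means more alexithymic.
        total += 6 - rating if item in REVERSE_KEYED else rating
    return total


def meets_clinical_cutoff(total):
    return total >= CLINICAL_CUTOFF


print(tas20_total([3] * 20))  # 60: the midpoint rating is unchanged by reverse keying
```

Because every item contributes 1 to 5 points after reverse keying, the total necessarily lies between 20 and 100.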

State-Trait Anxiety Inventory

The State-Trait Anxiety Inventory (STAI) (Spielberger et al., 1970) differentiates between the temporary condition of ‘state anxiety’ and the more general and long-standing quality of ‘trait anxiety’. For this study, the trait anxiety version of the STAI (T-STAI) was used to control for trait anxiety, which has been reported to be closely linked to alexithymia (Berthoz et al., 1999). The T-STAI evaluates relatively stable aspects of anxiety proneness (general states of calmness, confidence and security) and thus reflects a general tendency to respond with anxiety to perceived threats in the environment. The scale consists of 20 items rated from 1 (almost never) to 4 (almost always), so possible scores range from 20 to 80; higher scores indicate more trait anxiety.

fMRI data acquisition

Imaging data were acquired on a Philips 3.0 T Achieva MRI scanner with an eight-channel SENSE head coil for radiofrequency transmission and reception (Philips Medical Systems, Best, The Netherlands). For each task, whole-brain fMRI data were acquired using T2*-weighted gradient-echo echo-planar imaging (EPI) with the following scan parameters: 355 volumes (the first five were discarded to allow the signal to reach equilibrium); 38 axial slices acquired in ascending order; repetition time (TR) = 2200 ms; echo time (TE) = 30 ms; flip angle = 80°; FOV = 220 × 220 mm; 2.75 mm isotropic voxels with a 0.25 mm slice gap. A high-resolution anatomical image (T1-weighted ultra-fast gradient-echo acquisition; TR = 9.75 ms; TE = 4.59 ms; flip angle = 8°; 140 axial slices; FOV = 224 × 224 mm; in-plane resolution 0.875 × 0.875 mm; slice thickness = 1.2 mm) and a high-resolution T2*-weighted gradient-echo EPI scan (TR = 2.2 s; TE = 30 ms; flip angle = 80°; 84 axial slices; FOV = 220 × 220 mm; in-plane resolution 1.96 × 1.96 mm; slice thickness = 2 mm) were additionally acquired for registration to standard space.

fMRI data preprocessing

Prior to analysis, all fMRI data sets were visually inspected to ensure that no gross artifacts were present. Data were analyzed using the FMRIB Software Library (FSL) (www.fmrib.ox.ac.uk/fsl), version 4.1.3. The following preprocessing steps were applied to the EPI data sets: motion correction, removal of non-brain tissue, spatial smoothing with a Gaussian kernel of 8 mm full width at half maximum, grand-mean intensity normalization of the entire 4D data set by a single multiplicative factor, and high-pass temporal filtering with a cutoff of 70 s (i.e. removing fluctuations slower than 1/70 ≈ 0.014 Hz). Each functional data set was registered to the high-resolution EPI image, the high-resolution EPI image to the T1-weighted image, and the T1-weighted image to the 2 mm isotropic MNI-152 standard space image (T1-weighted standard brain averaged over 152 subjects; Montreal Neurological Institute, Montreal, QC, Canada). The resulting transformation matrices were then combined to obtain a native-to-MNI transformation matrix and its inverse (MNI to native space).
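Combining the registration steps is a composition of affine transforms (functional to high-resolution EPI, high-resolution EPI to T1, T1 to MNI): multiplying the three 4 × 4 matrices gives the native-to-MNI transform, and inverting the product gives MNI to native. A minimal, dependency-free sketch with hypothetical matrices; it treats each registration as a generic world-coordinate affine and does not reproduce FSL's own matrix-file conventions:

```python
IDENTITY = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]


def matmul(a, b):
    """Product of two 4x4 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def compose(*transforms):
    """compose(C, B, A) == C @ B @ A: A is applied first, C last."""
    result = IDENTITY
    for t in transforms:
        result = matmul(result, t)
    return result


def invert_affine(m):
    """Invert [[A, b], [0, 1]] as [[inv(A), -inv(A) b], [0, 1]] via the 3x3 adjugate."""
    a = [row[:3] for row in m[:3]]
    b = [m[i][3] for i in range(3)]
    det = (a[0][0] * (a[1][1] * a[2][2] - a[1][2] * a[2][1])
           - a[0][1] * (a[1][0] * a[2][2] - a[1][2] * a[2][0])
           + a[0][2] * (a[1][0] * a[2][1] - a[1][1] * a[2][0]))
    if abs(det) < 1e-12:
        raise ValueError("affine is singular")
    # inv[i][j] = cofactor(j, i) / det; the cyclic indexing supplies the signs.
    inv = [[(a[(j + 1) % 3][(i + 1) % 3] * a[(j + 2) % 3][(i + 2) % 3]
             - a[(j + 1) % 3][(i + 2) % 3] * a[(j + 2) % 3][(i + 1) % 3]) / det
            for j in range(3)] for i in range(3)]
    t = [-sum(inv[i][k] * b[k] for k in range(3)) for i in range(3)]
    return [inv[i] + [t[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def transform_point(m, point):
    x, y, z = point
    return tuple(m[i][0] * x + m[i][1] * y + m[i][2] * z + m[i][3] for i in range(3))


# Hypothetical matrices (mm); real ones come from the registration step.
func_to_hires_epi = [[1, 0, 0, 2.0], [0, 1, 0, -3.0], [0, 0, 1, 5.0], [0, 0, 0, 1]]
hires_epi_to_t1 = [[1.1, 0, 0, 0], [0, 1.1, 0, 0], [0, 0, 1.1, 0], [0, 0, 0, 1]]
t1_to_mni = [[0, -1, 0, 10.0], [1, 0, 0, -4.0], [0, 0, 1, 2.0], [0, 0, 0, 1]]

native_to_mni = compose(t1_to_mni, hires_epi_to_t1, func_to_hires_epi)
mni_to_native = invert_affine(native_to_mni)
```

Concatenating once and then inverting means a point only needs a single matrix multiplication in either direction, rather than three chained resamplings.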

Data analysis

Behavioral data analysis

Accuracy (ACC, proportion of correct responses) and reaction times (RT, correct responses only) were entered as dependent variables in analyses of covariance (ANCOVAs) with Task (Explicit vs Implicit) and Emotion (Neutral vs Angry vs Surprised) as within-subjects factors, Sex as a between-subjects factor, and TAS-20 alexithymia and T-STAI anxiety scores as covariates.

fMRI data analysis

Data analysis was performed using FEAT (FMRI Expert Analysis Tool) Version 5.98, part of FSL. In native space, the fMRI time series was analyzed in an event-related fashion using the General Linear Model with local autocorrelation correction applied (Woolrich et al., 2004).

At the first level, the onsets of each stimulus category (neutral, angry and surprised) were modeled separately for both runs as events with a duration of 800 ms. Each effect was modeled on a trial-by-trial basis using a square-wave function convolved with a canonical hemodynamic response function and its temporal derivative. Error trials were included in the model but excluded from the contrasts of interest. At the second level, a whole-brain analysis across both tasks examined the main effect of emotional (angry and surprised) vs neutral prosody, using a cluster-forming threshold of z > 2.3 (P < 0.01) and a cluster-corrected significance threshold of PFDR < 0.05.
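The first-level regressor described above can be sketched as an 800-ms boxcar convolved with a hemodynamic response function, plus its temporal derivative as a second regressor. The HRF below is an SPM-style double gamma (peak shape 6, undershoot shape 16, ratio 1/6), used here as an assumption; FEAT's own HRF options and resampling differ in detail, and the onset time is hypothetical:

```python
import math

DT = 0.1              # model resolution in seconds
TR = 2.2              # repetition time from the acquisition section
EVENT_DURATION = 0.8  # event duration used in the first-level model


def gamma_pdf(t, shape):
    """Gamma density with unit scale; zero for t <= 0."""
    if t <= 0:
        return 0.0
    return t ** (shape - 1) * math.exp(-t) / math.gamma(shape)


def double_gamma_hrf(t):
    """ASSUMED SPM-style shape: positive gamma (shape 6) minus undershoot (shape 16) / 6."""
    return gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0


def boxcar(onsets, duration, n_samples):
    """Square wave that is 1 during each event and 0 elsewhere."""
    signal = [0.0] * n_samples
    for onset in onsets:
        start, stop = round(onset / DT), round((onset + duration) / DT)
        for i in range(start, min(stop, n_samples)):
            signal[i] = 1.0
    return signal


def convolve(signal, kernel):
    """Causal discrete convolution, truncated to len(signal)."""
    out = [0.0] * len(signal)
    for i, s in enumerate(signal):
        if s:
            for j, k in enumerate(kernel[: len(signal) - i]):
                out[i + j] += s * k
    return out


def temporal_derivative(x):
    """Central differences (one-sided at the edges), in units per second."""
    n = len(x)
    deriv = []
    for i in range(n):
        lo, hi = max(i - 1, 0), min(i + 1, n - 1)
        deriv.append((x[hi] - x[lo]) / ((hi - lo) * DT))
    return deriv


n_samples = round(80 / DT)                                               # 80 s of model time
kernel = [double_gamma_hrf(i * DT) * DT for i in range(round(32 / DT))]  # 32-s HRF kernel
onsets = [10.0]                                                          # hypothetical onset (s)
regressor = convolve(boxcar(onsets, EVENT_DURATION, n_samples), kernel)
derivative = temporal_derivative(regressor)
regressor_at_tr = regressor[:: round(TR / DT)]                           # one value per volume
```

The derivative regressor lets the model absorb small shifts in response latency, and sampling the high-resolution model once per TR gives one value per acquired volume.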

ROI analyses

ROI analyses were performed on three a priori regions: the STG (mid and posterior parts), the IFG (pars opercularis) and the amygdala (all bilaterally). Anatomical ROIs for these regions were defined using the Harvard-Oxford cortical and subcortical structural atlases (http://www.fmrib.ox.ac.uk/fsl/data/atlas-descriptions.html#ho). For each participant and task, mean z-scores within each ROI were extracted for each stimulus category (anger, surprise and neutral) against baseline using Featquery (http://www.fmrib.ox.ac.uk/fsl/feat5/featquery.html). These z-transformed parameter estimates indicate how well the mean signal of each ROI is explained by the model. To identify emotion-specific ROI activity, mean z-scores were additionally extracted for the contrasts angry vs neutral and surprised vs neutral prosody.
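The ROI summary described above reduces to averaging a z-statistic map over the voxels of an anatomical mask, separately per hemisphere and condition. A minimal sketch on a flattened toy volume; it does not reproduce Featquery's interface, and splitting bilateral masks by the sign of each voxel's MNI x coordinate (negative x is left) is an assumption, since the text does not state how hemispheres were separated:

```python
from statistics import fmean


def roi_mean_z(z_values, in_roi, x_mm, hemisphere):
    """Mean z over ROI voxels in one hemisphere.

    z_values, in_roi and x_mm are parallel per-voxel sequences (a flattened volume).
    In MNI space negative x is left and positive x is right; x == 0 is excluded.
    """
    if hemisphere not in ("left", "right"):
        raise ValueError("hemisphere must be 'left' or 'right'")
    on_side = (lambda x: x < 0) if hemisphere == "left" else (lambda x: x > 0)
    selected = [z for z, inside, x in zip(z_values, in_roi, x_mm) if inside and on_side(x)]
    if not selected:
        raise ValueError("the mask selects no voxels in this hemisphere")
    return fmean(selected)


# Toy volume with five voxels (hypothetical values).
z_angry_vs_baseline = [1.0, 2.0, 3.0, 4.0, 0.0]
amygdala_mask = [1, 1, 0, 1, 1]
x_coordinates = [-24, -20, -30, 22, 26]

for side in ("left", "right"):
    print(side, roi_mean_z(z_angry_vs_baseline, amygdala_mask, x_coordinates, side))
```

Running the same function on each condition's z-map (angry, surprised and neutral vs baseline, or the emotional vs neutral contrasts) yields one value per participant, ROI, hemisphere and condition, which is the shape of data entered into the RM-ANCOVAs.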

Statistical analyses of ROI data

The first analysis entered the z-scores against baseline as the dependent variable in a repeated-measures (RM) ANCOVA with Task (Explicit vs Implicit), ROI (STG vs IFG vs Amygdala), Hemisphere (Left vs Right) and Emotion (Angry vs Surprised vs Neutral) as within-subject factors, Sex as a between-subject factor, and TAS-20 alexithymia and T-STAI anxiety scores as covariates. This analysis served to identify the impact of alexithymia on ROI activity in response to angry, surprised and neutral prosody compared with baseline (scanner noise).
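As a worked check, the degrees of freedom reported in the Results follow from this design. In an ANCOVA, the error term loses one degree of freedom per group of the between-subject factor (Sex: g = 2) and one per covariate (TAS-20 and T-STAI: c = 2), and a within-subject effect with k levels has k − 1 numerator degrees of freedom and (k − 1) times the error degrees of freedom. This is a reconstruction from the reported statistics, not a statement from the original analysis description:

$$
df_{\text{error}} = N - g - c = 19 - 2 - 2 = 15, \qquad
F\bigl(k - 1,\; (k - 1)\,df_{\text{error}}\bigr) =
\begin{cases}
F(1,\,15), & k = 2,\\
F(2,\,30), & k = 3.
\end{cases}
$$

With N = 19 (the 22 participants minus the three excluded from the implicit task), this reproduces F(1,15) for two-level effects such as Task and Hemisphere and F(2,30) for effects with two numerator degrees of freedom, such as those involving the three-level factor ROI.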

In the second analysis, emotional prosody (angry and surprised) was contrasted with neutral prosody in an RM-ANCOVA using the mean z-scores of the contrasts angry vs neutral and surprised vs neutral prosody as the dependent variable; all other factors were identical to those in the first analysis. Following the common practice in the alexithymia literature of contrasting emotional with neutral stimuli, this analysis served to identify the impact of alexithymia on ROI activity specifically in response to emotional (angry/surprised) relative to neutral prosody.

For both analyses, follow-up tests and Pearson’s correlations were conducted to identify the sources of the observed effects. As this study aimed to investigate the influence of alexithymia on prosodic processing, results are reported with a focus on main effects of, and interactions with, TAS-20 alexithymia scores (the effects of Task and Emotion will be reported elsewhere).

RESULTS

Behavioral data

TAS-20 alexithymia scores ranged from 26 to 60 (mean: 42.18 ± 7.45); thus, no participant reached the cut-off for clinical alexithymia (score ≥61; Taylor et al., 1997). Alexithymia scores were unrelated to age (r = −0.143, P = 0.526) and did not differ between male and female participants (t = 1.19, P = 0.245). TAS-20 scores correlated significantly with T-STAI anxiety scores (r = 0.498, P = 0.018), indicating more trait anxiety in individuals with higher alexithymia scores. T-STAI scores were therefore included in all analyses as a covariate of no interest to control for the impact of trait anxiety.
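The P values reported for Pearson correlations can be checked from r and the sample size, because t = r·√(n − 2)/√(1 − r²) follows a t distribution with n − 2 degrees of freedom under the null hypothesis. A dependency-free sketch that evaluates the two-tailed p-value with the exact finite series available for integer degrees of freedom (function names are illustrative):

```python
import math


def t_two_tailed_p(t, df):
    """Two-tailed p-value P(|T| >= |t|) for Student's t with a positive integer df.

    Uses the exact finite series for integer df with theta = atan(|t| / sqrt(df)).
    """
    if df < 1 or int(df) != df:
        raise ValueError("df must be a positive integer")
    theta = math.atan(abs(t) / math.sqrt(df))
    s, c = math.sin(theta), math.cos(theta)
    c2 = c * c
    term, total = 1.0, 1.0
    if df % 2 == 1:
        # Odd df: P(|T| < t) = (2/pi) * (theta + sin*cos * [1 + (2/3)c^2 + (2*4)/(3*5)c^4 + ...])
        for k in range(1, (df - 3) // 2 + 1):
            term *= c2 * (2 * k) / (2 * k + 1)
            total += term
        inside = 2.0 / math.pi * (theta + (s * c * total if df > 1 else 0.0))
    else:
        # Even df: P(|T| < t) = sin * [1 + (1/2)c^2 + (1*3)/(2*4)c^4 + ...]
        for k in range(1, (df - 2) // 2 + 1):
            term *= c2 * (2 * k - 1) / (2 * k)
            total += term
        inside = s * total
    return 1.0 - inside


def pearson_p(r, n):
    """Two-tailed p-value for Pearson's r computed from n pairs."""
    if not -1.0 < r < 1.0:
        raise ValueError("r must lie strictly between -1 and 1")
    t = r * math.sqrt(n - 2) / math.sqrt(1.0 - r * r)
    return t_two_tailed_p(t, n - 2)


print(round(pearson_p(0.498, 22), 3))  # 0.018
```

Applied to the values in this paragraph, the sketch reproduces P = 0.018 for the TAS-20/T-STAI correlation (r = 0.498, n = 22) and P ≈ 0.526 for the correlation with age (r = −0.143).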

Analysis of the accuracy data revealed no significant effect of Task [F(1,15) = 2.695, P = 0.121]. Neither TAS-20 nor T-STAI scores had a significant effect on accuracy [TAS-20: F(1,15) = 1.290, P = 0.274; T-STAI: F < 1], suggesting that alexithymia did not impair the identification of neutral, angry and surprised prosody (explicit task) or of metrical stress (implicit task). Analysis of the RT data likewise revealed no significant main effects or interactions.

fMRI data

Whole-brain analysis

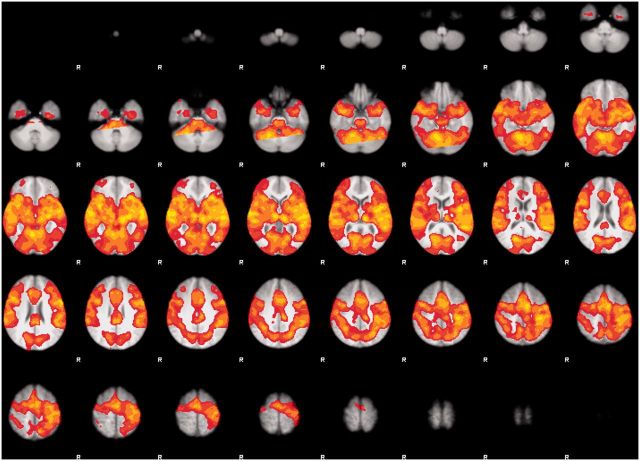

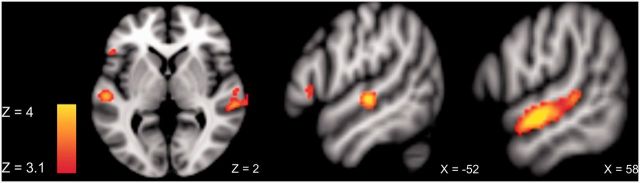

The whole-brain cluster-corrected (PFDR < 0.05) analysis for emotional > neutral prosody across both tasks revealed a large cluster in the right STG (Table 2). Figure 1 shows the global brain activity for prosodic stimuli compared with baseline (scanner noise) across tasks and Figure 2 shows the specific brain activity for emotional compared to neutral prosody across tasks.

Table 2.

Results of the whole-brain cluster-corrected analysis for emotional vs neutral prosody across tasks

| Area | Cluster size (voxels) | PFDR value | Z score | Peak x | Peak y | Peak z |
|---|---|---|---|---|---|---|
| Right STG | 673 | 0.05 | 4.48 | 60 | −10 | −6 |
| | | | 3.95 | 62 | 0 | −10 |
| | | | 3.95 | 64 | 4 | −10 |
| | | | 3.71 | 60 | −30 | 2 |
| | | | 3.47 | 72 | −28 | 4 |
| | | | 3.42 | 52 | −42 | 8 |

Fig. 1.

Global brain activity during prosodic processing across tasks at the threshold Puncorr < 0.001.

Fig. 2.

ROI activity for emotional > neutral prosody across tasks at the threshold Puncorr < 0.001.

ROI analyses

Prosody vs baseline

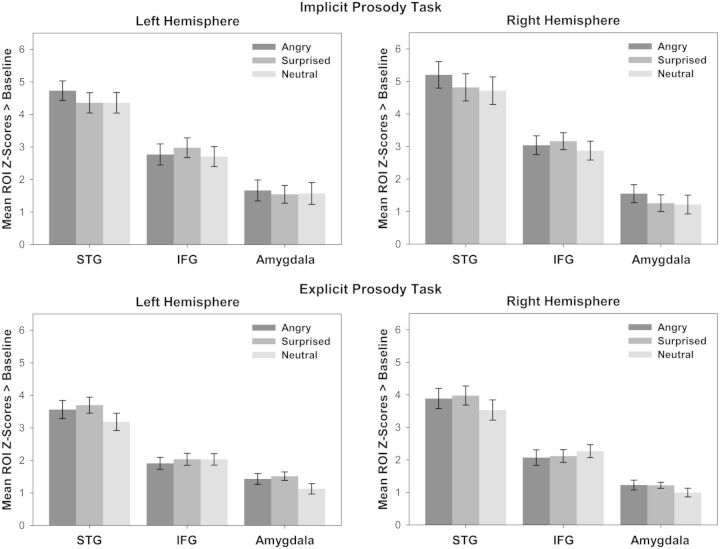

Figure 3 shows the mean ROI activity (STG, IFG and amygdala) in the left and right hemisphere in response to angry, surprised and neutral prosody vs baseline in the implicit (top panel) and the explicit (bottom panel) prosody task. An RM-ANCOVA with the z-scores of angry, surprised and neutral prosody vs baseline as the dependent variable revealed a significant main effect of TAS-20 alexithymia scores [F(1,15) = 7.119, P = 0.018]. Neither T-STAI scores nor Sex had a significant effect (F < 1). There was no main effect of Emotion and no Emotion × alexithymia interaction, suggesting that the effects applied to neutral as well as to angry and surprised prosody. There were significant main effects of ROI [F(2,30) = 3.663, P = 0.038] and Hemisphere [F(1,15) = 4.785, P = 0.045], as well as a three-way ROI × Hemisphere × TAS-20 interaction [F(2,30) = 3.698, P = 0.037].

Fig. 3.

Left-hemispheric (left) and right-hemispheric (right) ROI activity (mean z-scores) in response to angry, surprised and neutral prosody compared with baseline, in the implicit (top) and the explicit prosody task (bottom). Error bars indicate the standard error of the mean.

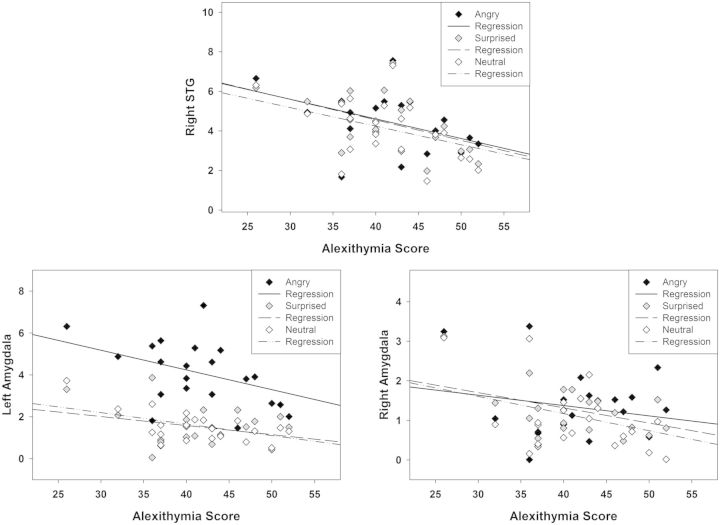

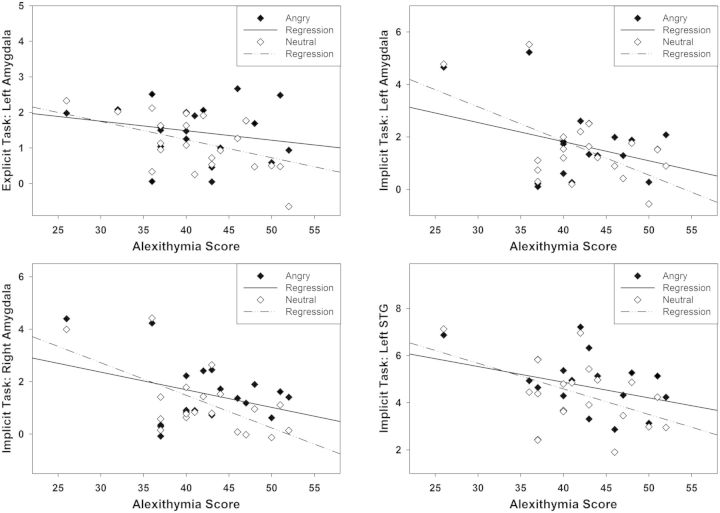

Follow-up analyses for each ROI showed a significant main effect of TAS-20 scores for the amygdala [F(1,15) = 8.302, P = 0.011], suggesting reduced activity of the left and right amygdala for neutral, angry and surprised prosody in individuals with higher alexithymia scores. For the STG, there was a trend toward a main effect of TAS-20 scores [F(1,15) = 3.883, P = 0.068]. Furthermore, a main effect of Hemisphere [F(1,15) = 8.353, P = 0.011] and a Hemisphere × TAS-20 interaction [F(1,15) = 8.740, P = 0.010] were found, indicative of reduced activity of the right STG for neutral, angry and surprised prosody with increasing alexithymia scores. Pearson’s correlations confirmed these effects. Figure 4 visualizes the correlations of alexithymia with activity of the left and right amygdala and the right STG for neutral, angry and surprised prosody vs baseline. Additional Pearson’s correlations with the three alexithymia facets showed that none of these correlations was driven by a single facet; rather, they reflected the personality construct as a whole. For the IFG, there was no significant main effect of, or interaction with, TAS-20 alexithymia scores.

Fig. 4.

Alexithymia correlations with ROI activity in response to angry, surprised and neutral prosody vs baseline across tasks. Top panel: Negative correlation of alexithymia with activity of the right STG. Bottom panel: Negative correlation of alexithymia with activity of the left and right amygdala.

Emotional vs neutral prosody

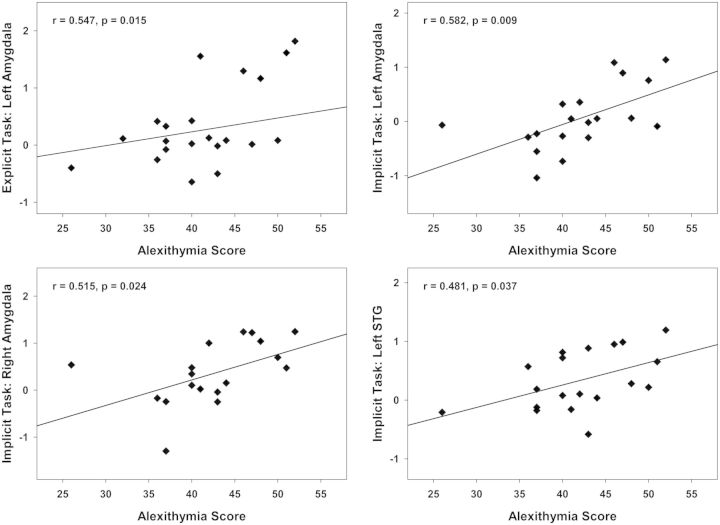

A second RM-ANCOVA with the z-scores of angry vs neutral and surprised vs neutral prosody as the dependent variable was conducted to identify emotion-specific effects of alexithymia. It revealed a trend toward a main effect of TAS-20 alexithymia scores [F(1,15) = 4.027, P = 0.063] and no main effect of T-STAI scores or Sex (F < 1). There was a significant Task × ROI × Hemisphere × Emotion × TAS-20 interaction [F(2,30) = 7.989, P = 0.002]. Follow-up Pearson’s correlations showed a positive correlation of alexithymia with activity of the left amygdala during the explicit evaluation of angry (>neutral) prosody (r = 0.547, P = 0.015). During the implicit perception of angry (>neutral) prosody, alexithymia correlated significantly with activity of the left (r = 0.582, P = 0.009) and right amygdala (r = 0.515, P = 0.024), and with activity of the left STG (r = 0.481, P = 0.037). A comparable pattern was observed in the right STG, although this correlation was only marginally significant (r = 0.403, P = 0.087). Figure 5 shows the significant correlations of alexithymia with activity of the bilateral amygdalae and the left STG for angry vs neutral prosody.

Fig. 5.

Alexithymia correlations with ROI activity for angry prosody directly contrasted to neutral prosody. Top panel: Positive correlation of alexithymia with activity of the left amygdala in the explicit (left) and the implicit task (right). Bottom panel: Positive correlations of alexithymia with activity of the right amygdala (left) and the left STG (right) in the implicit task.

However, rather than indicating higher ROI activity with increasing alexithymia scores, these seemingly positive correlations arose because, with increasing alexithymia scores, ROI deactivation became relatively stronger for neutral prosody (vs baseline) than for angry prosody (vs baseline), as visualized in Figure 6. Thus, individuals with higher alexithymia scores deactivated the left STG and the bilateral amygdalae less in response to angry prosody than in response to neutral prosody, suggesting that they perceived angry prosody as relatively more salient than neutral prosody.
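The reasoning in this paragraph (a positive correlation with the angry-minus-neutral contrast can arise even though activity to both conditions decreases with alexithymia) can be illustrated with made-up numbers. In the synthetic data below, which are not the study's data, both conditions decline with the score and neutral declines more steeply, so their difference rises:

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Synthetic data for eight hypothetical participants (NOT the study's data).
tas = [30, 34, 38, 42, 46, 50, 54, 58]
noise_angry = [0.05, -0.10, 0.08, -0.02, 0.04, -0.06, 0.03, -0.01]
noise_neutral = [-0.04, 0.06, -0.03, 0.05, -0.07, 0.02, 0.01, -0.05]

# Both conditions are deactivated more with higher scores; neutral more steeply.
angry = [-0.01 * (t - 30) + e for t, e in zip(tas, noise_angry)]
neutral = [-0.03 * (t - 30) + e for t, e in zip(tas, noise_neutral)]
angry_minus_neutral = [a - n for a, n in zip(angry, neutral)]

print(f"r(TAS, angry)         = {pearson_r(tas, angry):+.2f}")                # negative
print(f"r(TAS, neutral)       = {pearson_r(tas, neutral):+.2f}")              # negative
print(f"r(TAS, angry-neutral) = {pearson_r(tas, angry_minus_neutral):+.2f}")  # positive
```

Each condition correlates negatively with the score against baseline, yet the difference score correlates positively, which is why the contrast-based correlations have to be read together with the condition-wise ones.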

Fig. 6.

Comparison between alexithymia correlations with ROI activity for neutral prosody > baseline (dashed lines) and angry prosody > baseline (solid lines), demonstrating stronger ROI deactivation for neutral than for angry prosody. Top panel: Negative correlation of alexithymia with activity of the left amygdala in the explicit (left) and the implicit task (right). Bottom panel: Negative correlations of alexithymia with activity of the right amygdala (left) and the left STG (right) in the implicit task.

Additional Pearson’s correlations with the three alexithymia facets showed that the correlation with the left amygdala in the explicit and implicit task and the correlation with the left STG in the implicit task were mainly driven by the facet ‘difficulty describing feelings’.

DISCUSSION

The aim of this study was to investigate the impact of alexithymia on the neural processing of emotions conveyed by speech prosody. Our initial analysis contrasting prosody to baseline showed that activity of the right STG and the bilateral amygdalae for angry, surprised and neutral prosody was reduced with increasing scores on alexithymia, both during implicit and explicit emotional processing. When specifically contrasting emotional vs neutral prosody, we observed a relatively stronger deactivation of the left STG and the bilateral amygdalae for neutral compared with angry prosody, particularly in individuals with difficulty describing their feelings.

This study is the first to investigate the neural basis of emotional prosody processing in alexithymia. Our finding of modulated brain activity in response to emotional prosody is consistent with previous findings of alexithymia-related deficits in visual emotional processing (e.g. Berthoz et al., 2002; Kano et al., 2003; Karlsson et al., 2008; Pouga et al., 2010) and suggests that such aberrant emotional processing extends to the auditory domain. Our results further support previous EEG findings indicating a reduced sensitivity to the emotional qualities of speech prosody in alexithymia (Goerlich et al., 2011, 2012) and extend these findings by suggesting that such blunted processing may be localized to the STG and the amygdalae. In line with our finding of deactivation in these regions, the same regions have previously been found to be less responsive to facial expressions of emotion in alexithymia (for a review, see Grynberg et al., 2012). Reduced STG activity has been found during the implicit (masked) processing of surprised faces (Duan et al., 2010) and of happy and sad faces (Reker et al., 2010). Deactivation of the amygdala has been observed in response to fearful bodily expressions (Pouga et al., 2010) and during the implicit and explicit processing of emotional faces (Leweke et al., 2004; Kugel et al., 2008). The present findings extend these results by suggesting that, with increasing alexithymia scores, the amygdalae and the STG also show a blunted response to emotions conveyed through the voice. In addition, they indicate that in the auditory domain, too, alexithymia affects emotional processing not only when attention is explicitly directed toward the emotional dimension but also when emotion is processed implicitly.

In line with a previous behavioral study on prosodic processing in alexithymia (Swart et al., 2009), we observed no alexithymia-related differences at the behavioral level. Differences at the neural or electrophysiological level in the absence of behavioral differences have repeatedly been observed in alexithymia during the processing of visual emotional information (Franz et al., 2004; Mériau et al., 2006; Vermeulen et al., 2008). In our previous EEG studies (Goerlich et al., 2011, 2012), we likewise observed no significant alexithymia-related differences in behavioral performance for angry and sad prosody, despite significant differences at the electrophysiological level. Similarly, a recent study of prosodic processing as a function of neuroticism found differences at the neural level in the absence of behavioral differences (Brück et al., 2011a). Thus, deficits in emotional prosody processing in a non-clinical alexithymia sample such as the current one may be subtle. They can be detected at the neural level even though they do not tend to surface at the behavioral level. Such a subtle deficit is not surprising, considering that alexithymic individuals are generally high-functioning, socially adapted people with a pronounced tendency toward social conformity.

Consistent with this interpretation, alexithymia was associated with reduced activity in the STG and the amygdala, regions involved in earlier phases of emotional prosody processing (Wildgruber et al., 2009; Brück et al., 2011b; Kotz and Paulmann, 2011), but not in the IFG. The IFG is thought to mediate the final phase of emotional prosody processing, in which emotional prosodic information is explicitly evaluated and integrated with other cognitive processes (Brück et al., 2011b). The lack of an alexithymia-related modulation of IFG activity may be related to participants' good performance and to the absence of behavioral differences as a function of alexithymia. Taken together, alexithymia modulated neural activity in areas involved in earlier phases of emotional prosody perception but not at the final stage. This pattern suggests that alexithymia may predominantly affect earlier stages of prosodic processing. These processing differences may be compensated for at the final (pre-response execution) stage, resulting in adequate behavioral performance.

Interestingly, individuals with high alexithymia scores showed reduced ROI activity for neutral prosody as well as for emotional (angry and surprised) prosody. This occurred irrespective of whether they attended to the emotional aspects of the stimuli. In fact, direct comparisons between angry and neutral prosody revealed that alexithymia was associated with a significantly stronger deactivation of the left STG and the bilateral amygdalae for neutral than for angry prosody. This association was driven particularly by the alexithymia facet difficulty describing feelings. On the one hand, this suggests that individuals with difficulty describing feelings also perceive an angry tone of voice as relatively more salient and attention-capturing than a neutral one. This is in line with the well-known phenomenon that emotional stimuli capture attention more than neutral ones (for a review on emotional attention, see Vuilleumier, 2005). On the other hand, it indicates that individuals with difficulty describing their feelings may assign less personal significance to human voices conveying emotions, and even less to voices with neutral prosody. This would be the opposite of the pattern seen in psychopathological conditions such as borderline personality disorder, in which neutral faces can be perceived as threatening (Wagner and Linehan, 1999) and elicit the same degree of amygdala hyperactivity as emotional faces (Donegan et al., 2003). It would likewise be the opposite of the pattern in schizophrenia, in which amygdala deactivations for fearful compared with neutral faces in fact resulted from increased amygdala activity for neutral faces (Hall et al., 2008). Recent evidence suggests that the role of the amygdala is not restricted to emotional stimuli. Rather, this structure may act as a more general relevance detector for salient, personally and socially relevant, or novel stimuli (for reviews, see Armony, 2013; Jacobs et al., 2012; Sander et al., 2003). For instance, amygdala activity was found to be higher for socially relevant neutral stimuli than for non-social neutral stimuli (Vrtička et al., 2012). Following this line of reasoning, our finding of diminished neural responses to human voices regardless of emotionality may hint at a more general deficit in alexithymia. Such a deficit would affect the processing of socially relevant information in general, including speech prosody, and not only the processing of emotional information. However, this hypothesis is speculative and remains to be tested in future studies.

Limitations

While the use of the TAS-20 facilitates comparison of our results with previous findings, this scale assesses only the cognitive dimension of alexithymia. Recent evidence suggests that the affective dimension of alexithymia, which concerns emotional experience, may differentially affect emotional processing (Moormann et al., 2008; Bermond et al., 2010). In addition, this study controlled for the influence of trait anxiety, but alexithymia may also be associated with depression (Picardi et al., 2011), which was not assessed here. Depression may in turn alter the perception of emotional prosody (Naranjo et al., 2011; for a review, see Garrido-Vásquez et al., 2011). Future studies on prosodic perception in alexithymia may therefore benefit from taking both alexithymia dimensions into account and from additionally controlling for levels of depression.

Conclusions

Alexithymia appears to be associated with a blunted response of the STG and the amygdalae to speech prosody. This diminished response does not seem to be specific to emotional prosody, because it also occurs for neutral prosody. This hints at the possibility of a more general deficit in the processing of socially relevant information in alexithymia. Such neural alterations in the processing of speech prosody may contribute to the problems in social communication associated with this personality trait.

Appendix A. Pseudowords

KONPON

DINPIL

DULDIN

KONDON

DULDUN

DALDAN

PALDAN

DALPAL

REFERENCES

- Adolphs R, Tranel D. Intact recognition of emotional prosody following amygdala damage. Neuropsychologia. 1999;37:1285–92. doi: 10.1016/s0028-3932(99)00023-8.

- Adolphs R, Tranel D, Damasio H. Emotion recognition from faces and prosody following temporal lobectomy. Neuropsychology. 2001;15:396–404. doi: 10.1037//0894-4105.15.3.396.

- Armony JL. Current emotion research in behavioral neuroscience: the role(s) of the amygdala. Emotion Review. 2013;5:104–15.

- Bagby R, Parker J, Taylor G. The twenty-item Toronto Alexithymia Scale: I. Item selection and cross-validation of the factor structure. Journal of Psychosomatic Research. 1994a;38:23–32. doi: 10.1016/0022-3999(94)90005-1.

- Bagby R, Taylor G, Parker J. The twenty-item Toronto Alexithymia Scale: II. Convergent, discriminant, and concurrent validity. Journal of Psychosomatic Research. 1994b;38:33–40. doi: 10.1016/0022-3999(94)90006-x.

- Bermond B, Bierman D, Cladder M, Moormann P, Vorst H. The cognitive and affective alexithymia dimensions in the regulation of sympathetic responses. International Journal of Psychophysiology. 2010;75:227–33. doi: 10.1016/j.ijpsycho.2009.11.004.

- Berthoz S, Artiges E, Van de Moortele PF, et al. Effect of impaired recognition and expression of emotions on frontocingulate cortices: an fMRI study of men with alexithymia. The American Journal of Psychiatry. 2002;159:961–7. doi: 10.1176/appi.ajp.159.6.961.

- Berthoz S, Consoli S, Perez-Diaz F, Jouvent R. Alexithymia and anxiety: compounded relationships? A psychometric study. European Psychiatry. 1999;14:372–8. doi: 10.1016/s0924-9338(99)00233-3.

- Bird G, Silani G, Brindley R, White S, Frith U, Singer T. Empathic brain responses in insula are modulated by levels of alexithymia but not autism. Brain. 2010;133:1515–25. doi: 10.1093/brain/awq060.

- Brück C, Kreifelts B, Kaza E, Lotze M, Wildgruber D. Impact of personality on the cerebral processing of emotional prosody. NeuroImage. 2011a;58:259–68. doi: 10.1016/j.neuroimage.2011.06.005.

- Brück C, Kreifelts B, Wildgruber D. Emotional voices in context: a neurobiological model of multimodal affective information processing. Physics of Life Reviews. 2011b;8:383–403. doi: 10.1016/j.plrev.2011.10.002.

- Brühl A, Viebke M, Baumgartner T, Kaffenberger T, Herwig U. Neural correlates of personality dimensions and affective measures during the anticipation of emotional stimuli. Brain Imaging and Behavior. 2011;5:86–96. doi: 10.1007/s11682-011-9114-7.

- Bydlowski S, Corcos M, Jeammet P, et al. Emotion-processing deficits in eating disorders. International Journal of Eating Disorders. 2005;37:321–9. doi: 10.1002/eat.20132.

- Canli T, Zhao Z, Desmond JE, Kang E, Gross J, Gabrieli JDE. An fMRI study of personality influences on brain reactivity to emotional stimuli. Behavioral Neuroscience. 2001;115:33–42. doi: 10.1037/0735-7044.115.1.33.

- De Houwer J. What are implicit measures and why are we using them. In: Wiers RW, Stacy AW, editors. The Handbook of Implicit Cognition and Addiction. Mahwah, NJ: Erlbaum; 2006. pp. 219–44.

- Donegan HN, Sanislow CA, Blumberg HP, et al. Amygdala hyperreactivity in borderline personality disorder: implications for emotional dysregulation. Biological Psychiatry. 2003;54:1284–93. doi: 10.1016/s0006-3223(03)00636-x.

- Duan X, Dai Q, Gong Q, Chen H. Neural mechanism of unconscious perception of surprised facial expression. NeuroImage. 2010;52:401–7. doi: 10.1016/j.neuroimage.2010.04.021.

- Ethofer T, Kreifelts B, Wiethoff S, et al. Differential influences of emotion, task, and novelty on brain regions underlying the processing of speech melody. Journal of Cognitive Neuroscience. 2009;21:1255–68. doi: 10.1162/jocn.2009.21099.

- Franz M, Schaefer R, Schneider C, Sitte W, Bachor J. Visual event-related potentials in subjects with alexithymia: modified processing of emotional aversive information? American Journal of Psychiatry. 2004;161:728–35. doi: 10.1176/appi.ajp.161.4.728.

- Frühholz S, Ceravolo L, Grandjean D. Specific brain networks during explicit and implicit decoding of emotional prosody. Cerebral Cortex. 2011;22:1107–17. doi: 10.1093/cercor/bhr184.

- Frühholz S, Grandjean D. Multiple subregions in superior temporal cortex are differentially sensitive to vocal expressions: a quantitative meta-analysis. Neuroscience and Biobehavioral Reviews. 2013a;37:24–35. doi: 10.1016/j.neubiorev.2012.11.002.

- Frühholz S, Grandjean D. Amygdala subregions differentially respond and rapidly adapt to threatening voices. Cortex. 2013b;49:1394–1403. doi: 10.1016/j.cortex.2012.08.003.

- Garrido-Vásquez P, Jessen S, Kotz SA. Perception of emotion in psychiatric disorders: on the possible role of task, dynamics, and multimodality. Social Neuroscience. 2011;6:515–36. doi: 10.1080/17470919.2011.620771.

- Goerlich KS, Aleman A, Martens S. The sound of feelings: electrophysiological responses to emotional speech in alexithymia. PLoS One. 2012;7:e36951. doi: 10.1371/journal.pone.0036951.

- Goerlich KS, Witteman J, Aleman A, Martens S. Hearing feelings: affective categorization of music and speech in alexithymia, an ERP study. PLoS One. 2011;6:e19501. doi: 10.1371/journal.pone.0019501.

- Grynberg D, Luminet O, Corneille O, Grezes J, Berthoz S. Alexithymia in the interpersonal domain: a general deficit of empathy? Personality and Individual Differences. 2010;49:845–50.

- Grynberg D, Chang B, Corneille O, et al. Alexithymia and the processing of emotional facial expressions (EFEs): systematic review, unanswered questions and further perspectives. PLoS One. 2012;7:e42429. doi: 10.1371/journal.pone.0042429.

- Guttman H, Laporte L. Alexithymia, empathy, and psychological symptoms in a family context. Comprehensive Psychiatry. 2002;43:448–55. doi: 10.1053/comp.2002.35905.

- Hall J, Whalley HC, McKirdy JW, et al. Overactivation of fear systems to neutral faces in schizophrenia. Biological Psychiatry. 2008;64:70–3. doi: 10.1016/j.biopsych.2007.12.014.

- Hamann S, Canli T. Individual differences in emotion processing. Current Opinion in Neurobiology. 2004;14:233–38. doi: 10.1016/j.conb.2004.03.010.

- Hooker C, Verosky S, Miyakawa A, Knight R, D'Esposito M. The influence of personality on neural mechanisms of observational fear and reward learning. Neuropsychologia. 2008;46:2709–24. doi: 10.1016/j.neuropsychologia.2008.05.005.

- Jacob H, Kreifelts B, Brück C, Erb M, Hösl F, Wildgruber D. Cerebral integration of verbal and nonverbal emotional cues: impact of individual nonverbal dominance. NeuroImage. 2012;61:738–47. doi: 10.1016/j.neuroimage.2012.03.085.

- Jacobs RH, Renken R, Aleman A, Cornelissen FW. The amygdala, top-down effects, and selective attention to features. Neuroscience and Biobehavioral Reviews. 2012;36:2069–84. doi: 10.1016/j.neubiorev.2012.05.011.

- Kano M, Fukudo S, Gyoba J, et al. Specific brain processing of facial expressions in people with alexithymia: an H2 15O-PET study. Brain. 2003;126:1474–84. doi: 10.1093/brain/awg131.

- Karlsson H, Näätänen P, Stenman H. Cortical activation in alexithymia as a response to emotional stimuli. The British Journal of Psychiatry. 2008;192:32–8. doi: 10.1192/bjp.bp.106.034728.

- Kooiman C, Spinhoven P, Trijsburg R. The assessment of alexithymia: a critical review of the literature and a psychometric study of the Toronto Alexithymia Scale-20. Journal of Psychosomatic Research. 2002;53:1083–90. doi: 10.1016/s0022-3999(02)00348-3.

- Kotz S, Paulmann S. Emotion, language, and the brain. Language and Linguistics Compass. 2011;5:108–25.

- Kugel H, Eichmann M, Dannlowski U, et al. Alexithymic features and automatic amygdala reactivity to facial emotion. Neuroscience Letters. 2008;435:40–4. doi: 10.1016/j.neulet.2008.02.005.

- Leitman DI, Wolf DH, Ragland JD, et al. “It’s not what you say but how you say it”: a reciprocal temporo-frontal network for affective prosody. Frontiers in Human Neuroscience. 2010;4:19–31. doi: 10.3389/fnhum.2010.00019.

- Leweke F, Stark R, Milch W, et al. Patterns of neuronal activity related to emotional stimulation in alexithymia. Psychotherapy and Psychosomatic Medicine. 2004;54:437–44. doi: 10.1055/s-2004-828350.

- Mantani T, Okamoto Y, Shirao N, Okada G, Yamawaki S. Reduced activation of posterior cingulate cortex during imagery in subjects with high degrees of alexithymia: a functional magnetic resonance imaging study. Biological Psychiatry. 2005;57:982–90. doi: 10.1016/j.biopsych.2005.01.047.

- Mériau K, Wartenburger I, Kazzer P, et al. A neural network reflecting individual differences in cognitive processing of emotions during perceptual decision making. NeuroImage. 2006;33:1016–27. doi: 10.1016/j.neuroimage.2006.07.031.

- Moormann P, Bermond B, Vorst H, Bloemendaal A, Teijn S, Rood L. New Avenues in Alexithymia Research: the Creation of Alexithymia Types. In: Emotion Regulation: Conceptual and Clinical Issues. New York, NY: Springer Science + Business Media; 2008. pp. 27–42.

- Mothes-Lasch M, Mentzel H, Miltner W, Straube T. Visual attention modulates brain activation to angry voices. The Journal of Neuroscience. 2011;31:9594–8. doi: 10.1523/JNEUROSCI.6665-10.2011.

- Naranjo C, Kornreich C, Campanella S, et al. Major depression is associated with impaired processing of emotion in music as well as in facial and vocal stimuli. Journal of Affective Disorders. 2011;128:243–51. doi: 10.1016/j.jad.2010.06.039.

- Ormel J, Bastiaansen JA, Riese H, et al. The biological and psychological basis of neuroticism: current status and future directions. Neuroscience and Biobehavioral Reviews. 2013;37:59–72. doi: 10.1016/j.neubiorev.2012.09.004.

- Parker PD, Prkachin KM, Prkachin GC. Processing of facial expressions of negative emotion in alexithymia: the influence of temporal constraint. Journal of Personality. 2005;73:1087–107. doi: 10.1111/j.1467-6494.2005.00339.x.

- Picardi A, Fagnani C, Gigantesco A, Toccaceli V, Lega I, Stazi M. Genetic influences on alexithymia and their relationship with depressive symptoms. Journal of Psychosomatic Research. 2011;71:256–63. doi: 10.1016/j.jpsychores.2011.02.016.

- Pouga L, Berthoz S, de Gelder B, Grèzes J. Individual differences in socioaffective skills influence the neural bases of fear processing: the case of alexithymia. Human Brain Mapping. 2010;31:1469–81. doi: 10.1002/hbm.20953.

- Prkachin G, Casey C, Prkachin K. Alexithymia and perception of facial expressions of emotion. Personality and Individual Differences. 2009;46:412–7.

- Reker M, Ohrmann P, Rauch AV, et al. Individual differences in alexithymia and brain response to masked emotion faces. Cortex. 2010;46:658–67. doi: 10.1016/j.cortex.2009.05.008.

- Sander D, Grafman J, Zalla T. The human amygdala: an evolved system for relevance detection. Reviews in the Neurosciences. 2003;14:303–16. doi: 10.1515/revneuro.2003.14.4.303.

- Sander D, Grandjean D, Pourtois G, et al. Emotion and attention interactions in social cognition: brain regions involved in processing anger prosody. NeuroImage. 2005;28:848–58. doi: 10.1016/j.neuroimage.2005.06.023.

- Scherer KR. Vocal communication of emotion: a review of research paradigms. Speech Communication. 2003;40:227–56.

- Schirmer A, Kotz SA. Beyond the right hemisphere: brain mechanisms mediating vocal emotional processing. Trends in Cognitive Sciences. 2006;10:24–30. doi: 10.1016/j.tics.2005.11.009.

- Sifneos PE. The prevalence of ‘alexithymic’ characteristics in psychosomatic patients. Psychotherapy & Psychosomatics. 1973;22:255–62. doi: 10.1159/000286529.

- Spielberger CD, Gorsuch RL, Lushene RE. STAI Manual for the State-Trait Anxiety Inventory. Palo Alto: Consulting Psychologists Press; 1970.

- Spitzer C, Siebel-Jurges U, Barnow S, Grabe HJ, Freyberger HJ. Alexithymia and interpersonal problems. Psychotherapy & Psychosomatics. 2005;74:240–6. doi: 10.1159/000085148.

- Swart M, Kortekaas R, Aleman A. Dealing with feelings: characterization of trait alexithymia on emotion regulation strategies and cognitive-emotional processing. PLoS One. 2009;4:e5751. doi: 10.1371/journal.pone.0005751.

- Taylor GJ, Bagby RM, Parker JDA. Disorders of Affect Regulation: Alexithymia in Medical and Psychiatric Illness. Cambridge: Cambridge University Press; 1997.

- van der Velde J, Servaas MN, Goerlich KS, et al. Neural correlates of alexithymia: A meta-analysis of emotion processing studies. Neuroscience & Biobehavioral Reviews. 2013;37:1774–85. doi: 10.1016/j.neubiorev.2013.07.008.

- Vanheule S, Desmet M, Meganck R, Bogaerts S. Alexithymia and interpersonal problems. Journal of Clinical Psychology. 2007;63:109–17. doi: 10.1002/jclp.20324.

- Van Kleef G. How emotions regulate social life: the emotions as social information (EASI) model. Current Directions in Psychological Science. 2009;18:184–8.

- Vermeulen N, Luminet O, de Sousa MC, Campanella S. Categorical perception of anger is disrupted in alexithymia: Evidence from a visual ERP study. Cognition and Emotion. 2008;22:1052–67.

- Vrtička P, Sander D, Vuilleumier P. Lateralized interactive social content and valence processing within the human amygdala. Frontiers in Human Neuroscience. 2012;6:358. doi: 10.3389/fnhum.2012.00358.

- Vuilleumier P. How brains beware: neural mechanisms of emotional attention. Trends in Cognitive Sciences. 2005;9:585–94. doi: 10.1016/j.tics.2005.10.011.

- Wagner AW, Linehan MM. Facial expression recognition ability among women with borderline personality disorder: implications for emotion regulation? Journal of Personality Disorders. 1999;13:329–44. doi: 10.1521/pedi.1999.13.4.329.

- Wiethoff S, Wildgruber D, Grodd W, Ethofer T. Response and habituation of the amygdala during processing of emotional prosody. Neuroreport. 2009;20:1356–60. doi: 10.1097/WNR.0b013e328330eb83.

- Wildgruber D, Ethofer T, Grandjean D, Kreifelts B. A cerebral network model of speech prosody comprehension. International Journal of Speech-Language Pathology. 2009;11:277–81.

- Wildgruber D, Ethofer T, Kreifelts B, Grandjean D. Cerebral processing of emotional prosody: a network model based on fMRI studies. SP-2008. 2008. pp. 217–22. http://isca-speech.org/archive/sp2008/papers/2p08_217.pdf.

- Wildgruber D, Riecker A, Hertrich I, et al. Identification of emotional intonation evaluated by fMRI. NeuroImage. 2005;24:1233–41. doi: 10.1016/j.neuroimage.2004.10.034.

- Witteman J, Van Heuven VJP, Schiller NO. Hearing feelings: a quantitative meta-analysis on the neuroimaging literature of emotional prosody perception. Neuropsychologia. 2012;50:2752–63. doi: 10.1016/j.neuropsychologia.2012.07.026.

- Witteman J, van IJzendoorn M, van de Velde D, van Heuven VJP, Schiller NO. The nature of hemispheric specialization for linguistic and emotional prosodic perception: a meta-analysis of the lesion literature. Neuropsychologia. 2011;49:3722–38. doi: 10.1016/j.neuropsychologia.2011.09.028.

- Woolrich M, Jenkinson M, Brady J, Smith S. Fully Bayesian spatio-temporal modelling of FMRI data. IEEE Transactions on Medical Imaging. 2004;23:213–31. doi: 10.1109/TMI.2003.823065.