Abstract

Processing information from faces is crucial to understanding others and to adapting to social life. Many studies have investigated responses to facial emotions to provide a better understanding of the processes and the neural networks involved. Moreover, several studies have revealed abnormalities of emotional face processing and their neural correlates in affective disorders. The aim of this study was to investigate whether early visual event-related potentials (ERPs) are affected by the emotional skills of healthy adults. Unfamiliar faces expressing the six basic emotions were presented to 28 young adults while visual ERPs were recorded. No specific task was required during the recording. Participants also completed the Social Skills Inventory (SSI), which measures social and emotional skills. The results confirmed that early visual ERPs (P1, N170) are affected by the emotions expressed by a face and also showed that N170 and P2 are correlated with the emotional skills of healthy subjects. While N170 is sensitive to the subject’s emotional sensitivity and expressivity, P2 is modulated by the subject’s ability to control their emotions. We therefore suggest that N170 and P2 could be used as individual markers to assess strengths and weaknesses in emotional areas and could inform further investigations of affective disorders.

Keywords: facial expression of emotion, visual ERPs, emotional skills, healthy adults

INTRODUCTION

Facial expressions are assumed to be one of the main aspects of face processing involved in social interaction. Faster than language, facial emotions allow us to infer states of mind and to understand the feelings and intentions of others, enabling us to establish interpersonal connections early in life. By adulthood, individuals are not only highly proficient and very quick at recognising expressions of emotion in others (De Sonneville et al., 2002; Ekman, 2003) but also able to identify even very subtle expressions of emotion (Calder et al., 1996). Because of their importance in everyday life, responses to emotional faces have attracted considerable interest in the fields of psychology, neuroscience and psychiatry.

Emotional skills involve the ability to carry out (i) accurate decoding and understanding of emotional messages and (ii) accurate reasoning about emotions, together with the ability to use emotions and emotional knowledge to enhance thought. In this view, the first of these skills could rely more on emotional sensitivity (ES), whereas the second could be more closely linked to emotional expressivity (EE) and emotional control (EC). A more complete understanding of emotional ability requires an appreciation of how its measures relate to life outcomes, i.e. what do emotional abilities (or emotional intelligence) predict, or fail to predict, in life? (For a review, see Mayers et al., 2008.) The most consistent findings reported in the literature relate emotional intelligence to social functioning. Some studies have addressed the ways in which emotions facilitate thinking and action. For example, emotions allow people to be better decision makers (Lyubomirsky et al., 2005), helping them select the most relevant response in terms of survival and social benefits (Damasio, 1998). In addition, higher emotional abilities lead to greater self-perceived social competence and less use of destructive interpersonal strategies (Brackett et al., 2004; Lopes et al., 2004). Moreover, individuals with higher emotional skills are perceived more positively by others (Brackett et al., 2006; Lopes et al., 2005). At the individual level, emotional abilities also contribute to better psychological well-being (Matthews et al., 2002; Bastian et al., 2005; Gohm et al., 2005). Thus, emotional abilities may be considered part of the mechanisms driving individual and social adaptation. Having briefly outlined the consequences that differences in emotional skills may have, we now summarise what we regard as the key results concerning the neural correlates of face and emotion processing.

Over the past 15 years, several electrophysiological studies have investigated the timing of face processing (Vuilleumier and Pourtois, 2007). A ‘face-sensitive’ component, referred to as the N170 (Bentin et al., 1996; George et al., 1996), as well as more sensory visual ERPs (P1, P2), appear to be related to face processing at different time points. Although the P1 is not ‘face specific’, this component, which reflects the first stages of visual processing (detection) (Taylor and Pang, 1999; Batty and Taylor, 2002) and is recorded as early as 90–130 ms at occipital sites, has a shorter latency in response to faces than to objects (Taylor et al., 2001). Seventy milliseconds later, at occipito-temporal sites, the N170 has also been shown to be larger for pictures of faces than for other object categories (Bentin et al., 1996; Ganis et al., 2012; Itier and Taylor, 2004) and to be modulated by face size, orientation and inversion (Itier et al., 2006; Chammat et al., 2010; Jacques and Rossion, 2010). Finally, at the same sites as P1 and at around 200–250 ms, the P2 component may reflect a later stage of face processing, corresponding to the perception of second-order configural information (the spatial relationships between the different facial features) (Itier and Taylor, 2004; Boutsen et al., 2006).

Emotion recognition and face processing are highly intertwined. Thus, it is not surprising that numerous electroencephalographic (EEG) and magnetoencephalographic (MEG) studies have found emotional modulation of the N170 (Batty and Taylor, 2003; Streit et al., 2003; Caharel et al., 2005; Morel et al., 2009). For example, the amplitude of the N170 in response to fearful faces is greater than to neutral or surprised faces (Batty and Taylor, 2003; Ashley et al., 2004; Rotshtein et al., 2010), and positive emotions evoke an N170 significantly earlier than negative emotions (Batty and Taylor, 2003). P1 is also affected by facial emotion (Batty and Taylor, 2003; Eger et al., 2003; Pourtois et al., 2004; Brosch et al., 2008), suggesting that emotional faces may be processed extremely rapidly. For example, fear evoked a delayed P1 component compared with positive emotions (happy and surprised faces), whereas happy faces evoked an earlier P1 than the three negative emotions (fear, disgust and sadness) as early as childhood (Batty and Taylor, 2006). P1 amplitude was also modulated by emotion in adults, increasing in response to sad (Chammat et al., 2010), fearful (Pourtois et al., 2004, 2005; Rotshtein et al., 2010) and happy faces (Duval et al., 2011) compared with neutral faces. Fearful faces also evoked a larger P1 response than happy faces (Pourtois et al., 2004, 2005). It therefore seems that the processing of facial expressions of emotion begins as early as 100–170 ms. Moreover, recent findings have also revealed later emotion-related modulations of the P2 (200–250 ms) (Holmes et al., 2003; Ashley et al., 2004; Eimer and Holmes, 2007; Rotshtein et al., 2010; Yang et al., 2010), the EPN (Early Posterior Negativity: 240–280 ms) (Junghofer et al., 2001; Sato et al., 2001; Schupp et al., 2004) and the LPP (Late Positive Potential: 400–800 ms) (Krolak-Salmon et al., 2001; Sato et al., 2001; Batty and Taylor, 2003; Schupp et al., 2004).
Early and late ERP components affected by facial emotions are thought to reflect distinct cognitive processes. Specifically, early components such as P1, P2 and the EPN are thought to reflect early selective visual processing of emotional stimuli, whereas late components such as the LPP are thought to reflect more strategic, high-level processes such as decision making, response criteria and sustained, elaborate analysis of emotional content (Batty and Taylor, 2003; Ashley et al., 2004; Schupp et al., 2004; Moser et al., 2006), and to be driven by motivational salience (Hajcak et al., 2010).

Men and women seem to process emotions differently. From infancy through adulthood, reviews of the literature on sex differences have revealed a female advantage in the ability to distinguish between and interpret facial expressions as well as other non-verbal emotional cues (Boyatzis et al., 1993; Leppanen and Hietanen, 2001; Lewin et al., 2001; Lewin and Herlitz, 2002; Grispan et al., 2003). In addition to these behavioural differences, neuroimaging studies have also reported gender differences in cerebral activity in response to emotional stimuli (Batty and Taylor, 2006; Lee et al., 2010; Proverbio et al., 2010). Sex differences have therefore been reported in both the behavioural and the cerebral processing of emotion, suggesting that emotional skills might interact with brain activity.

Difficulties in face processing and emotional face processing have been reported in almost all affective disorders, such as autism spectrum disorders (ASD) (Hobson, 1986; Deruelle et al., 2004; Clark et al., 2008; Wright et al., 2008), major depression (Surguladze et al., 2004; Sterzer et al., 2011) and bipolar disorders (BD) (Addington and Addington, 1998; Malhi et al., 2007; Rosen and Rich, 2010). Behaviourally, these alterations include impairments in the recognition and labelling of facial affect and/or an attentional bias towards negative emotional cues, which could explain some of the social interaction problems in such patients. Moreover, recent studies have revealed abnormalities in the timing of the neural activations involved in face and emotion perception in these affective disorders. Both the P1 and the N170 components exhibited atypical latencies and amplitudes in people with autism perceiving faces expressing emotions, compared with control subjects (Dawson et al., 2004; O'Connor et al., 2005; De Jong et al., 2008; Akechi et al., 2010; Batty et al., 2011). Moreover, dipole source analysis revealed that the activation of the generators underlying face-related ERPs (visual cortex, fusiform gyrus, medial prefrontal lobe) was significantly weaker and/or slower in autism than in controls during both explicit and implicit emotion-processing tasks (Wong et al., 2008). Other results have provided evidence of dysfunctional ERP patterns underlying facial affect processing in BD, with patients exhibiting reduced N170 and P1 amplitudes compared with controls (Degabriele et al., 2011). Finally, abnormalities in the early visual processing of faces and emotions have also been reported in major depression, with larger P1 amplitudes for sad cues in patients than in controls (Dai and Feng, 2009).

Therefore, both emotional behaviour and ERP responses to facial emotion appear to be affected in affective disorders, again suggesting that emotional skills interact with brain activity.

The aim of the current study was to investigate the relationship between visual ERPs in response to facial expressions of emotion and individual differences in emotional skills in healthy adults. Previous studies on healthy people have revealed differential modulation of visual ERPs according to the type of emotion conveyed by the stimuli (exogenous factor). Moreover, in light of previous findings on sex differences and affective disorders, we hypothesise that these components should also be influenced by the subjects’ emotional abilities (endogenous factor).

METHODS

Participants

Twenty-eight young adults with typical development and normal or corrected-to-normal vision were tested (14 males; aged 21–30 years, M = 23.75 ± 2 years). None of the participants reported suffering from social disorders (including depressive syndrome) or taking medication that could affect their mood or cerebral activity. All subjects provided informed written consent prior to their participation in the study.

Stimuli, task and assessments of emotional skills

We used EEG recordings and the Social Skills Inventory (SSI) (Riggio, 1989) to investigate the relationship between characteristics of visual ERPs in response to expression of facial emotion and individual differences in emotional skills.

For the social and emotional assessments, participants completed the SSI (Riggio, 1989), which measures social and emotional sensitivity, expressivity and control. The SSI is a 90-item self-report instrument widely used in psychological research to assess the basic social skills that underlie social competence. It evaluates social and emotional skills and identifies social strengths and weaknesses. Subjects respond to the 90 items on a 5-point scale ranging from ‘Not at all like me’ to ‘Exactly like me’, indicating the extent to which each item describes them. Scores are recorded for each of the scales, and a combined score indicates overall social ability. The SSI assesses skills on six distinct factors (15 items per factor): EE, ES, EC, Social Expressivity, Social Sensitivity and Social Control. Once these six primary skill scores have been calculated, the SSI also provides an Equilibrium Index (EI) score indicating the balance between the different factors. An EI score over 39 suggests a satisfactory balance between the SSI dimensions and is indicative of strong overall social skills, whereas an EI score under 39 indicates a significant imbalance between the social skill dimensions and general ineffectiveness in communication skills (Riggio, 2003). In this study, we were especially interested in the emotional scores and the EI. On the emotional dimension, EE assesses the ability to encode and transmit emotional messages, ES evaluates skill in decoding and understanding emotional messages, and EC corresponds to the degree to which the respondent is able to regulate their non-verbal display of felt emotions efficiently.
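To make the scale structure concrete, the minimal sketch below tallies raw scale scores for one respondent by summing the 15 responses (1–5) that belong to each of the six SSI scales. It rests on stated assumptions: the published item-to-scale key and any reverse-keyed items are not reproduced here, so the function takes responses already grouped by scale, and it does not compute the EI, whose formula is not given in this section. The abbreviations SE, SS and SC for the three social scales and the function name `score_scales` are introduced only for the example.

```python
from typing import Dict, List

# Six SSI scales; SE, SS and SC (social scales) are labels chosen for this example.
SCALES = ("EE", "ES", "EC", "SE", "SS", "SC")
ITEMS_PER_SCALE = 15


def score_scales(responses: Dict[str, List[int]]) -> Dict[str, int]:
    """Sum 1-5 responses per scale (assumes no reverse-keyed items).

    `responses` maps each scale label to the 15 answers for that scale.
    """
    scores = {}
    for scale in SCALES:
        items = responses[scale]
        if len(items) != ITEMS_PER_SCALE:
            raise ValueError(f"{scale}: expected {ITEMS_PER_SCALE} items, got {len(items)}")
        if any(not 1 <= x <= 5 for x in items):
            raise ValueError(f"{scale}: responses must lie on the 1-5 scale")
        scores[scale] = sum(items)
    return scores
```

Under this summing assumption each raw scale score ranges from 15 to 75; a respondent answering every item at the midpoint (3) would obtain 45 on each scale.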

For the ERP task, 210 black and white photographs of adult faces expressing the six basic emotions (Batty and Taylor, 2003b), as well as neutral faces (Figure 1), were presented twice in three blocks, in random order. Each block contained 70 different facial stimuli, 10 of each expression (5 male and 5 female faces). Mean luminance and local contrast were equalised across expressions (Batty and Taylor, 2003). All photographs were 11 × 8 cm and were presented with Presentation software on a light grey background, on a computer screen 120 cm in front of the subject (visual angle of the stimuli = 3.8°), for 500 ms with a random ISI (1200–1600 ms). Subjects were comfortably seated and were told only that they would be shown a set of photographs. To ensure that subjects paid attention to the stimuli, we asked them to fixate a cross that preceded each stimulus. They were naïve regarding the specific issues being investigated. The total recording time was 15 min, including breaks.
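The visual angle quoted above follows from stimulus size s and viewing distance d via θ = 2·arctan(s / 2d). The short check below assumes that 8 cm is the width and 11 cm the height of the photographs (the orientation is not stated); under that assumption, the reported 3.8° corresponds to the 8 cm dimension, while the 11 cm dimension would subtend about 5.2°.

```python
import math


def visual_angle_deg(size_cm: float, distance_cm: float) -> float:
    """Full visual angle (degrees) subtended by an object of size_cm at distance_cm."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))


width_deg = visual_angle_deg(8, 120)    # assumed width of the photographs
height_deg = visual_angle_deg(11, 120)  # assumed height of the photographs
```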

Fig. 1.

Examples of faces used as stimuli, expressing the six basic emotional expressions (sadness, fear, disgust, anger, surprise and happiness) and neutral. All of the faces were correctly classified by emotion by at least 80% of 20 observers (who did not serve as subjects in this experiment). See Batty and Taylor (2003a).

Electrophysiological acquisition and analysis

EEG data were recorded from 18 Ag/AgCl active electrodes placed on the scalp according to the international 10–20 system (Oostenveld and Praamstra, 2001): Fz, Cz, F3, C3, O1, T3, T5 and their homologous locations on the right hemiscalp. Additional electrodes were placed at M1 and M2 (left and right mastoid sites), and the vertical electro-oculogram (EOG) was recorded with electrodes at the superior and inferior orbital ridges. A nose electrode served as the reference during recording, and an average reference was computed offline (Picton et al., 2000; Joyce and Rossion, 2005). Impedance was kept below 5 kΩ.
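Offline average re-referencing, as mentioned above, subtracts from every channel the mean of all channels at the same time point. The minimal sketch below assumes that only scalp EEG channels (not the EOG) are passed in; which channels entered the average is not stated here, and the function name is illustrative rather than part of any EEG package.

```python
from statistics import fmean
from typing import Dict, List


def average_reference(data: Dict[str, List[float]]) -> Dict[str, List[float]]:
    """Re-reference every channel to the mean of all channels at each sample.

    `data` maps channel names to equally long voltage traces recorded
    against the original (nose) reference.
    """
    channels = list(data)
    n_samples = len(data[channels[0]])
    # Mean across channels at each time point: the new reference signal.
    common = [fmean(data[ch][t] for ch in channels) for t in range(n_samples)]
    return {ch: [v - c for v, c in zip(data[ch], common)] for ch in channels}
```

A useful sanity check is that, after re-referencing, the channels sum to zero at every sample.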

The EEG and EOG were amplified with a band-pass filter (0.1–70 Hz) and digitised at a sampling rate of 500 Hz. The whole experiment was controlled by a Compumedics NeuroScan EEG system (Synamps amplifier, Scan 4.3 and Stim2 software). EEG segments contaminated by ocular or motor activity were rejected before averaging. Trials were then averaged according to the emotion expressed by the faces (a minimum of 50 trials per subject per emotion was required) over a 1100 ms analysis period, including a 100 ms pre-stimulus baseline, and were digitally filtered (0–30 Hz). The ELAN software package for analysis and visualisation of EEG–ERPs was used (Aguera et al., 2011). The latencies of the visual ERPs (P1, N170 and P2) were measured for each subject at the electrode sites of interest. ERP amplitudes were measured at these latencies over both hemispheres, within a 40 or 60 ms time window around the peak of the grand average waveform, as recommended in international ERP guidelines (Picton et al., 2000). P1 and P2 were measured at O1 and O2 (between 80 and 120 ms and between 190 and 250 ms, respectively); N170 was measured at T5 and T6 (between 120 and 180 ms).
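The averaging and measurement steps described above (baseline correction over the 100 ms pre-stimulus interval, averaging per emotion with a 50-trial threshold, then peak measurement in component-specific windows) can be sketched in plain Python. This is a simplified illustration, not the ELAN implementation: it assumes artefact rejection and the 30 Hz filter have already been applied, that each epoch starts at −100 ms and spans 1100 ms (550 samples at 500 Hz), and that the peak is the single most positive (P1, P2) or most negative (N170) sample in the window. All names are hypothetical. With 30 photographs per expression each shown twice, at most 60 trials per emotion were available, so the threshold tolerated up to 10 rejected trials per condition.

```python
from statistics import fmean
from typing import List, Optional, Tuple

FS_HZ = 500                # sampling rate reported above
SAMPLE_MS = 1000 / FS_HZ   # 2 ms between samples
EPOCH_START_MS = -100      # epochs start 100 ms before stimulus onset
MIN_TRIALS = 50            # minimum accepted trials per subject and emotion

# Measurement windows (ms) and expected polarity (+1 positive, -1 negative).
WINDOWS = {"P1": (80, 120, +1), "N170": (120, 180, -1), "P2": (190, 250, +1)}


def time_ms(index: int) -> float:
    """Latency (ms, relative to stimulus onset) of a sample index."""
    return EPOCH_START_MS + index * SAMPLE_MS


def baseline_correct(epoch: List[float]) -> List[float]:
    """Subtract the mean of the 100 ms pre-stimulus samples."""
    n_base = int(-EPOCH_START_MS / SAMPLE_MS)  # 50 samples at 500 Hz
    base = fmean(epoch[:n_base])
    return [v - base for v in epoch]


def average_epochs(epochs: List[List[float]]) -> Optional[List[float]]:
    """Baseline-correct and average the artefact-free epochs of one condition.

    Returns None when fewer than MIN_TRIALS epochs survived rejection.
    """
    if len(epochs) < MIN_TRIALS:
        return None
    corrected = [baseline_correct(e) for e in epochs]
    return [fmean(column) for column in zip(*corrected)]


def peak_in_window(erp: List[float], component: str) -> Tuple[float, float]:
    """Return (latency_ms, amplitude) of the component's peak in its window."""
    start, end, polarity = WINDOWS[component]
    indices = [i for i in range(len(erp)) if start <= time_ms(i) <= end]
    best = max(indices, key=lambda i: polarity * erp[i])
    return time_ms(best), erp[best]
```

Measuring the mean amplitude over a 40–60 ms window around the grand-average peak, as described above, would replace the single-sample amplitude returned by `peak_in_window`.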

Data were analysed using repeated-measures analysis of variance (ANOVA) with sex (2: men, women) as the between-subject factor and emotion (7: sadness, fear, disgust, anger, surprise, happiness and neutral) and hemisphere (2: right, left) as within-subject factors. Degrees of freedom were corrected using the Huynh–Feldt epsilon. Significant effects were followed by Newman–Keuls post hoc comparisons to determine where the differences lay. Then, to address the study’s aims, correlations were computed to investigate how early visual ERPs were related to the subjects’ emotional abilities. Pearson correlations were computed between the amplitude and latency of the N170, P1 and P2 components and four SSI scores: the three emotional subscores (EE, ES, EC) and the EI.
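For reference, the Huynh–Feldt correction can be written out as follows. This is the textbook form for a mixed design with N subjects in g groups and a within-subject factor with k levels, where S is the (pooled within-group) covariance matrix of k − 1 orthonormal contrasts of that factor; the exact variant implemented by the analysis software is not stated, so treat this as a sketch. The Greenhouse–Geisser estimate comes first, then the Huynh–Feldt adjustment (capped at 1), and both degrees of freedom of the F test are multiplied by the resulting epsilon:

$$\hat{\varepsilon} = \frac{\left[\operatorname{tr}(\mathbf{S})\right]^{2}}{(k-1)\,\operatorname{tr}(\mathbf{S}^{2})}, \qquad \tilde{\varepsilon} = \min\!\left\{1,\; \frac{N(k-1)\,\hat{\varepsilon} - 2}{(k-1)\left[N - g - (k-1)\,\hat{\varepsilon}\right]}\right\}$$

$$F \sim F\!\left(\tilde{\varepsilon}\,(k-1),\; \tilde{\varepsilon}\,(k-1)(N-g)\right)$$

For the emotion factor, k = 7, N = 28 and g = 2 give the uncorrected degrees of freedom (6, 156) reported in the Results; with ε (H–F) = 0.78, as reported for P1 latency, the corrected degrees of freedom become (4.68, 121.68). For the two-level hemisphere factor, sphericity holds trivially and the correction leaves the degrees of freedom unchanged.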

RESULTS

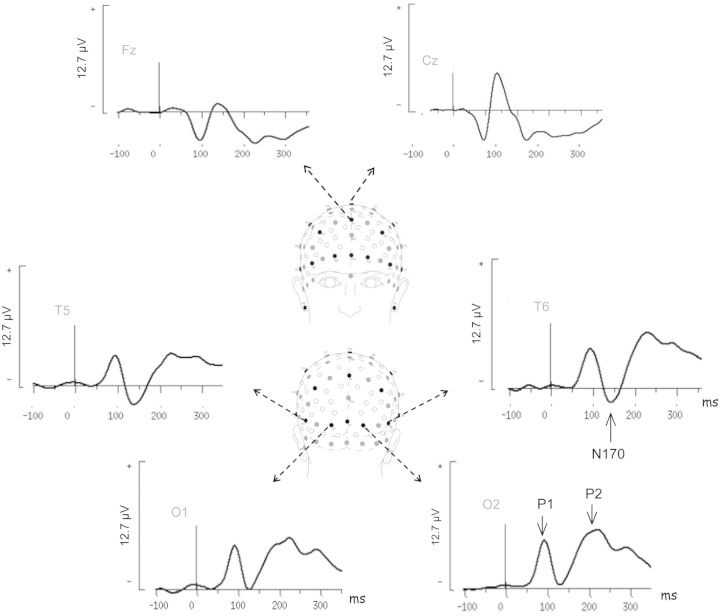

Different stages of processing were assessed by measuring the P1, P2 and N170 ERP components previously shown to be differentially sensitive to faces over posterior temporo-occipital regions (Figure 2). Statistical results and post hoc comparisons are summarised in Tables 1 and 2, respectively.

Fig. 2.

Grand average ERPs for neutral faces at six electrodes (O1, O2, T5, T6, Fz and Cz). The arrow indicates the components measured: P1 and P2 were recorded at occipital sites (O1 and O2) and N170 at temporal sites (T5 and T6). The head image indicating electrode positions and labels in the 10–20 system (black circles) was taken from Oostenveld and Praamstra (2001).

Table 1.

Summary analysis of ANOVA and Pearson correlations

| | P1 amplitude | P1 latency | N170 amplitude | N170 latency | P2 amplitude | P2 latency |
|---|---|---|---|---|---|---|
| ANOVA | | | | | | |
| Emotion | P = 0.044* | P = 0.025* | P = 0.09 | P < 0.001* | NS | NS |
| Sex | P = 0.006* | NS | NS | P = 0.012* | NS | NS |
| Hemisphere | NS | NS | NS | NS | NS | NS |
| Pearson | | | | | | |
| EE | NS | NS | P = 0.035* | NS | NS | NS |
| ES | NS | NS | NS | P = 0.036* | NS | NS |
| EC | NS | NS | NS | NS | P = 0.039* | NS |
| EI | NS | NS | NS | NS | NS | NS |

ERP data were submitted to repeated-measures ANOVA with emotion (7), sex (2) and hemisphere (2) as factors. Pearson correlations were computed between the ERP characteristics (amplitude and latency) averaged across all emotions and the SSI emotion subscores (EE, ES, EC, EI).

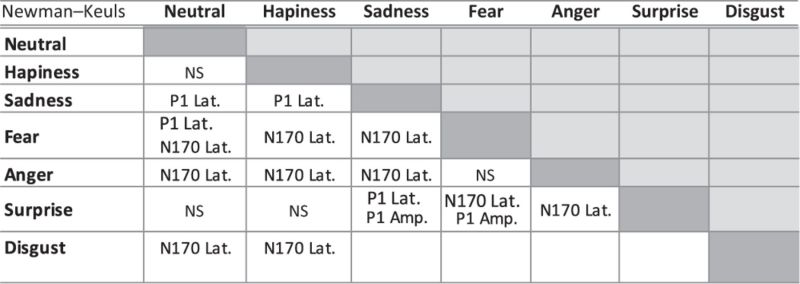

Table 2.

Summary analysis of Newman–Keuls post hoc comparisons


Significant effects of emotion revealed by ANOVA analyses were followed by Newman-Keuls post hoc comparisons to determine where the differences lay.

ANOVA analysis: effects of hemisphere, sex and emotions expressed by faces

P1 had a mean latency of 95.3 ± 8.7 ms and a mean amplitude of 5.4 ± 3.7 µV, both of which varied with the emotion expressed by the faces. An effect of emotion was found on P1 latency [F (6,156) = 2.49, P = 0.025, ε (H–F) = 0.78]; post hoc analyses revealed that sadness evoked a later P1 response than disgust (P < 0.05), neutral (P < 0.01), surprise (P < 0.02) or happiness (P < 0.03), and that fearful faces evoked a later P1 response than neutral faces (P < 0.02) (Table 2). The overall effect of emotion on P1 amplitude [F (6,156) = 2.22, P = 0.045, ε (H–F) = 0.98] was driven by a smaller P1 response to surprised faces than to sad (P < 0.01), disgusted (P < 0.02) or fearful faces (P < 0.04) (Figure 3A and Table 2). For P1 amplitude, there was a main effect of sex [F (1, 26) = 8.65, P = 0.006], with women having a larger P1 response (7.2 ± 3.5 µV) than men (3.6 ± 2.8 µV). No hemisphere effect was observed on P1. No significant interaction, or trend towards an interaction, between sex and emotion was found.

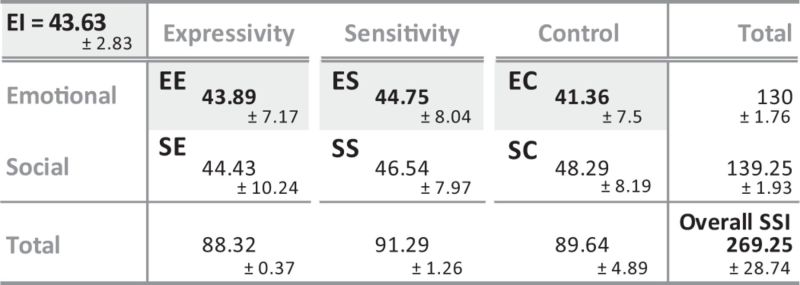

Table 3.

Mean SSI profile of the 28 healthy young adults


The participants were all in the 'normal' range defined by the SSI (cut-off: 37.30 < EE < 56.36; 36.71 < ES < 58.25; 37.58 < EC < 54.25 and 39.25 < EI < 45.71). Only the emotional scores (EE; ES; EC) and the EI were investigated.

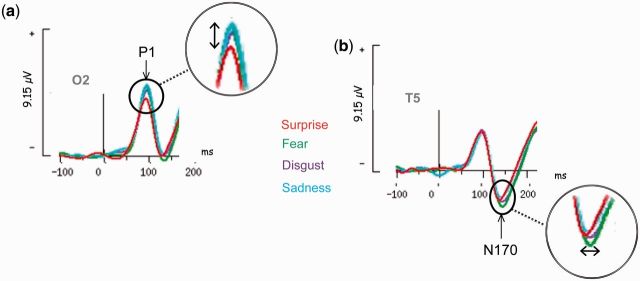

Fig. 3.

Emotional effects. (A) Grand average ERPs for surprise, disgust, fear and sadness at the right occipital electrode (O2). An overall effect of emotion was found on the amplitude of this early visual component (P1); for example, P1 evoked by surprised faces was smaller than P1 recorded for disgusted, sad and fearful faces. (B) Grand average ERPs for surprise, disgust, fear and sadness at a left temporo-occipital electrode (T5). An overall effect of emotion was found on N170 latency; for example, N170 evoked by fearful faces peaked later than the component recorded for surprised faces.

N170 latency (146 ± 10.2 ms) varied according to the emotion displayed [F (6,156) = 7.75, P = 0.00002, ε (H–F) = 0.74]. N170 latency to faces expressing fear, disgust and anger was delayed compared with happy (P < 0.01), surprised (P < 0.02), sad (P < 0.02) and neutral (P < 0.01) faces (Figure 3B and Table 2). N170 latency was also sensitive to sex [F (1, 26) = 7.243, P = 0.012], with N170 peaking earlier in women (142.2 ± 7.8 ms) than in men (150.5 ± 10.8 ms). No effects of emotion (P = 0.09), sex or hemisphere were found on N170 amplitude (−3.03 ± 2.3 µV). No significant interaction, or trend towards an interaction, between sex and emotion was found.

We also measured the characteristics of the P2. ANOVA revealed no effects of emotion, sex or hemisphere on either the latency (209.3 ± 18.2 ms) or the amplitude (5.73 ± 3.8 µV) of this component, and no significant interaction, or trend towards an interaction, between sex and emotion.

Pearson correlation: effect of SSI emotional subscores

To address the study’s aims, correlations were used to investigate how early visual ERPs were related to the subjects’ emotional abilities. Pearson correlations were computed between the amplitude and latency of the N170, P1 and P2 components averaged across all emotions and the EE, ES, EC and EI scores of the SSI. The mean SSI scores obtained are reported in Table 3. No correlation was found between the different emotional subscores (EE, ES, EC), and a sex effect was found only on the EC score (Mann–Whitney, Z = 2.59; P = 0.009), with women scoring lower on this aspect of emotional processing.
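The sex difference on EC was assessed with a Mann–Whitney test. As a minimal sketch of the usual large-sample form of that test (assuming the normal approximation without a tie correction of the variance; the variant actually used is not stated), U is computed from rank sums and converted to z, and a two-sided P then follows from the standard normal distribution. The function names are illustrative, and the sign of z depends on which group is passed first. For reference, z = 2.59 corresponds to a two-sided P of about 0.0096.

```python
import math
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks, with tied values sharing the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def mann_whitney_z(x: Sequence[float], y: Sequence[float]) -> float:
    """Normal approximation to the Mann-Whitney U test (no tie correction)."""
    n1, n2 = len(x), len(y)
    ranks = average_ranks(list(x) + list(y))
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    mean_u = n1 * n2 / 2
    sd_u = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    return (u1 - mean_u) / sd_u


def two_sided_p_from_z(z: float) -> float:
    """Two-sided p-value from the standard normal distribution."""
    return math.erfc(abs(z) / math.sqrt(2))
```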

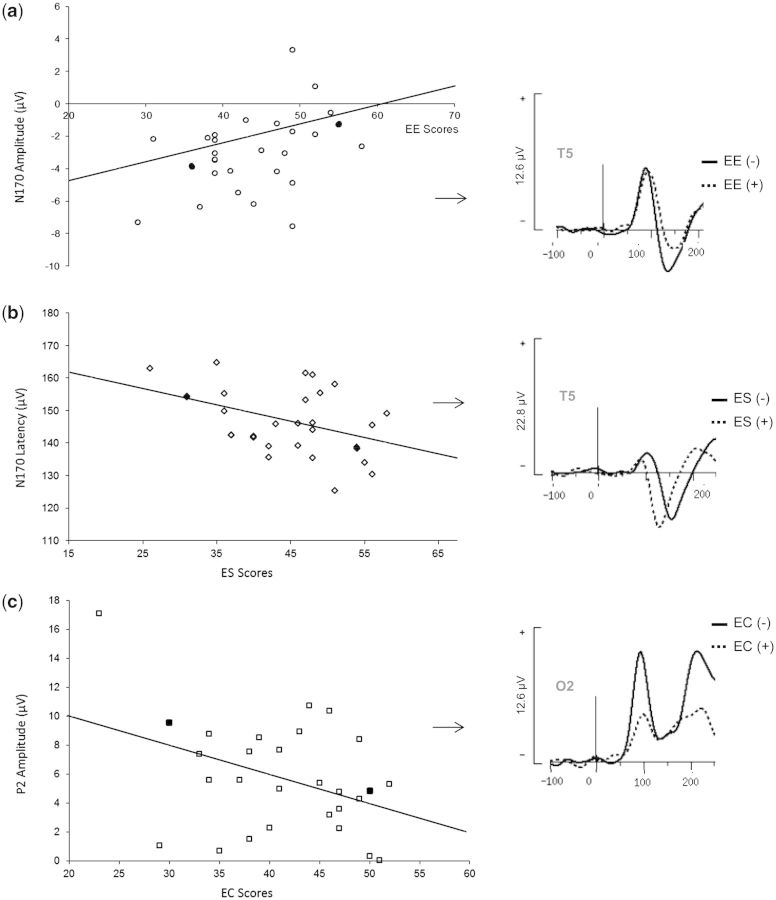

The Pearson analysis revealed that neither the amplitude nor the latency of P1 was correlated with the EE, ES, EC or EI score of the SSI, but it did reveal significant correlations between N170 characteristics and the subjects’ emotional abilities. N170 amplitude was more negative for subjects with low EE subscores than for subjects with high EE subscores (r = 0.39, P < 0.04) (Figure 4A). Pearson correlations also showed that higher ES subscores were associated with shorter N170 latency (r = −0.39, P < 0.04) (Figure 4B). Moreover, the correlation between the mean P2 amplitude for all emotions and EC was significant (r = −0.39; P < 0.04): the P2 component decreased with greater EC abilities (Figure 4C).
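The significance of a Pearson r with n = 28 subjects (df = 26) follows from t = r√(n − 2)/√(1 − r²). The sketch below computes r, the corresponding t, and a two-sided P by numerically integrating Student's t density with Simpson's rule, using only the Python standard library; the function names are illustrative. With r = 0.39 it gives t ≈ 2.16 and a two-sided P of about 0.04, in line with the values reported above (the published r values are rounded, so the match is approximate).

```python
import math
from statistics import fmean
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation of two equally long samples."""
    mx, my = fmean(x), fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def t_from_r(r: float, n: int) -> float:
    """t statistic for H0: rho = 0, with n - 2 degrees of freedom."""
    return r * math.sqrt((n - 2) / (1 - r * r))


def t_pdf(x: float, df: int) -> float:
    """Density of Student's t distribution with df degrees of freedom."""
    log_c = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
             - 0.5 * math.log(df * math.pi))
    return math.exp(log_c - (df + 1) / 2 * math.log1p(x * x / df))


def two_sided_p(t: float, df: int, steps: int = 2000) -> float:
    """Two-sided p-value: 1 - 2 * integral of the t density over [0, |t|].

    The integral is evaluated with Simpson's rule (steps must be even).
    """
    h = abs(t) / steps
    total = t_pdf(0.0, df) + t_pdf(abs(t), df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    return 1 - 2 * total * h / 3
```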

Fig. 4.

Correlation between emotional subscores of the SSI and visual ERP characteristics. (A) N170 amplitude was more negative for subjects with low EE subscores than for subjects with high EE subscores (N170 Amp. = −8.9 + 0.13 * EE). (B) Higher ES subscores were associated with shorter N170 latency (N170 Lat. = 168.9 – 0.51 * ES). (C) The P2 component became smaller with greater EC skills (P2 Amp. = 14.1 – 0.21 * EC). For each correlation, the two filled points correspond to two subjects selected because of their representative patterns. These two subjects are referred to as (+) and (−) according to their emotional scores, and their individual ERP waveforms are presented to the right of the correlation plot.

When ANOVA revealed an emotion effect, Pearson correlations were also computed for ERPs averaged separately for each facial expression. None of the ERP components evoked by a particular emotion was correlated with the SSI emotion scores (EE, ES, EC, EI). Pearson correlations reached significance only when the ERPs evoked by neutral and emotional faces were pooled.

DISCUSSION

Summary results

The presentation of faces expressing the six different emotions produced significant effects both in terms of amplitude and latency starting at the P1 component (around 95 ms). Fifty milliseconds later (at a mean latency of 146 ms), the N170 component showed differential modulation according to the emotions expressed by a face. Moreover, N170 was significantly modulated by the emotional abilities of the subjects (expressivity and sensitivity scores). Finally, at 210 ms, while no emotional effect was shown, the amplitude of the P2 component was correlated with the ability of the subjects to control their emotions (EC scores).

Early visual ERPs were modulated by facial emotions (exogenous factor)

The timing of emotional processing is still a matter of debate. Several studies have failed to find an early effect of emotion (Lang et al., 1990; Munte, 1998; Swithenby et al., 1998), suggesting that emotions are processed quite late, in agreement with Bruce and Young’s model (Bruce and Young, 1986). However, studies in the past 10 years have reported that brain activity can be affected early by the emotions conveyed by faces. Comparing neutral with emotional faces and positive with negative emotions, early effects in response to emotions have been recorded between 110 and 250 ms (Marinkovic and Halgren, 1998; Krolak-Salmon et al., 2001; Pizzagalli et al., 2002). These studies did not reveal any specific emotional effect (i.e. differences from one emotion to another) before 400–750 ms (Marinkovic and Halgren, 1998; Munte, 1998; Krolak-Salmon et al., 2001), consistent with emotions being processed quite late, and after identity (Munte, 1998).

However, given the role of emotions in ecological behaviour, it seems likely that fear or anger needs to be distinguished from happiness very rapidly. For example, a recent study showed that participants were slower to make an eye movement away from an angry face presented at fixation than from either a neutral or a happy face (Belopolsky et al., 2010). This finding suggests that differential emotion processing may occur very early, possibly before 100 ms, when efficient processing is crucial for survival.

In agreement with this essential role of the detection and recognition of emotions for survival, the present study revealed differences in the latency and amplitude of early visual ERPs in response to emotional expressions. P1 was later for sadness than for happiness and smaller for surprised than for sad faces, and N170 was later for fear, disgust and anger than for the other emotions, confirming that the encoding of facial emotion starts as early as 100 ms and is still ongoing 50 ms later. If one takes the survival argument to its conclusion, it seems counter-intuitive that some negative expressions that may signal danger elicit longer N170 latencies. However, if a subcortical pathway is activated for negative emotions, sending information rapidly to different levels of the ventral pathway (Adolphs, 2002), then N170 latency may be later for these emotions because it incorporates information arriving from subcortical processing (Batty and Taylor, 2003). These findings are in agreement with other recent studies in which the P1 and N170 components were differentially modulated by the perception of different facial expressions of emotion (Batty and Taylor, 2003, 2006; Eger et al., 2003; Pourtois et al., 2004; Williams et al., 2004; Caharel et al., 2007; Dennis et al., 2009; Utama et al., 2009). Variation in the differential emotional effects found across studies may be due to task differences, which can mask individual effects of emotion. Indeed, studies that have reported early emotional effects have used an implicit emotional task, in which the instructions did not mention emotional expression (Batty and Taylor, 2003; Eger et al., 2003; Pourtois et al., 2004; Williams et al., 2004; Caharel et al., 2007; Campanella et al., 2012). For example, using pairs of morphed faces during an implicit oddball task (delayed same–different matching task), Campanella et al. (2002) reported emotional modulations of the N170 component.
In contrast, studies that revealed a late emotional effect involved an explicit task, in which subjects were instructed to pay attention to facial expressions (Marinkovic and Halgren, 1998; Munte, 1998; Krolak-Salmon et al., 2001). These results are consistent with the ecological role of emotion: when no emotional processing is required, facial emotion automatically and rapidly modulates visual responses (as early as P1), whereas when emotional processing is expected, the effect appears later, abolishing the ecological effect. The differences in emotional effects between studies could also be due to habituation, as most studies have used only a few faces, shown repeatedly to subjects, without considering the effects of habituation (Breiter et al., 1996; Feinstein et al., 2002).

Early visual ERPs were influenced by individual emotional skills (endogenous factor)

Abnormalities in the behavioural and cerebral processing of emotional faces have been reported in affective disorders, such as autism spectrum disorder (ASD) (Hobson, 1986; McPartland et al., 2004; Wong et al., 2008; Batty et al., 2011), major depression (Surguladze et al., 2004; Dai and Feng, 2009; Sterzer et al., 2011) and BD (Degabriele et al., 2011; for review see Rosen and Rich, 2010). Affective disorders are therefore characterised by impaired emotional capacities and abnormal early visual ERP patterns, notably of the N170. Informed by this literature, in this study we explored the link between individual emotional skills and cerebral responses to emotional stimuli in healthy adults.

Our findings revealed that the N170 and P2 components evoked by faces expressing emotions are correlated with emotional sensitivity, expressivity and control in healthy adults. This study thus provides evidence of a relationship between the characteristics of visual ERPs and people’s emotional skills. Variability in N170 and P2 patterns might reflect heterogeneity in personal characteristics in the general population and might reveal strengths and weaknesses in emotional skills. Very few studies have investigated the sensitivity of ERPs to individual factors (McPartland et al., 2004; Carlson and Iacono, 2006; Lewis et al., 2006; Carlson et al., 2009; Dennis et al., 2009; Cheung et al., 2010; Hileman et al., 2011; Yuan et al., 2011). For example, P300 responses were reported to be associated with both cognitive and socio-emotional factors (Carlson and Iacono, 2006) and to be a possible index of the developmental level of decision-making skills in affective–motivational situations (Carlson et al., 2009). Other studies have investigated the relationship between ERP components, face processing and social behaviour. P1 and N170 components evoked by faces appeared to be influenced by differences in social personality traits (Cheung et al., 2010), especially in the case of social anxiety (Rossignol et al., 2012), by recognition memory for faces (McPartland et al., 2004), by social cognitive skills (Hileman et al., 2011) and by emotion regulation (Dennis et al., 2009). For example, smaller P1 amplitude and more negative N170 amplitude in response to upright faces were associated with better social skills in children with typical development (Hileman et al., 2011). Hileman et al. examined ERP measurements in relation to social skills more broadly in children, whereas the current study specifically examined ERP measurements in relation to adult emotional skills.

In our study, less negative N170 amplitude was associated with higher EE, and shorter N170 latency with higher ES. Moreover, lower P2 amplitude was correlated with higher EC. That emotional skills affected the N170 and the P2 components differently is consistent with reports that these components index separate stages of face processing when no emotion is expressed (Linkenkaer-Hansen et al., 1998; Liu et al., 1999; Taylor et al., 2001; Itier and Taylor, 2002; Latinus and Taylor, 2006). Latinus and Taylor (2006) proposed a model that links the P1, N170 and P2 to distinct stages of face processing. In this model, face processing starts with an analysis of general low-level features, which may correspond to the P1. This first stage is followed by a structural analysis of the face based on first-order relational configuration and holistic processing, which may be reflected in the N170 (Jeffreys, 1993; Bentin et al., 1996, 2006; George et al., 1996; Eimer, 1998; Jemel et al., 1999; Rossion et al., 1999; Sagiv and Bentin, 2001; Latinus and Taylor, 2006; Zion-Golumbic and Bentin, 2007; Eimer et al., 2011). This second stage was proposed to lead to face detection. Another widely held view is that the face sensitivity of the N170 reflects the level of expertise in face recognition (Rossion et al., 2002) or some combination of processing effort and expertise (Hileman et al., 2011). Finally, the P2 component may correspond to second-order configural processing that leads to final face recognition (confirmation) (Latinus and Taylor, 2006) and may also be a neurophysiological correlate of 'learning/control' processes (Latinus and Taylor, 2005). It should be noted, however, that the processes underlying visual ERPs in response to faces without emotional expression remain controversial.
For example, some studies have associated the N170 (or the M170 in MEG) with the analysis of individual face parts (Harris and Nakayama, 2008; Eimer et al., 2011). Moreover, although ERPs have been used to investigate the timing of emotional processing, the processes reflected by the early visual ERPs (P1, N170, P2) in response to emotional faces have been investigated only recently. In an elegant study, Utama et al. (2009) presented morphed images of seven facial expressions (neutral, anger, happiness, disgust, sadness, surprise and fear) at 10 graded intensity levels (i.e. 'affective valence') while recording the P1 and N170. They proposed that rapid detection of facial emotions occurs within the first 100 ms, as the P1 component was closely associated with correct detection of the emotions, and that more detailed processing (identification), notably intensity assessment, occurs shortly afterwards, in the time window of the N170 component (Utama et al., 2009). These results agree with an earlier finding suggesting that the N170 may reflect the perception of intensity (Sprengelmeyer and Jentzsch, 2006). The detection of facial emotion and the assessment of its intensity therefore appear to rely on different neural mechanisms (Utama et al., 2009), which offers a possible interpretation of the differential emotional effects we observed on the P1 and the N170.
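The amplitude and latency effects discussed above are conventionally measured on each participant's averaged waveform as the most extreme value within a component-specific search window: the maximum for the positive P1 and P2, the minimum for the negative-going N170. The Python sketch below illustrates this generic peak-picking procedure only. The function name `peak_in_window`, the 2 ms sampling step, the toy waveform and the search windows are hypothetical and are not the parameters or software used in this study.

```python
import math
from typing import List, Sequence, Tuple


def peak_in_window(
    times_ms: Sequence[float],
    amplitudes_uv: Sequence[float],
    window_ms: Tuple[float, float],
    polarity: str,
) -> Tuple[float, float]:
    """Return (latency_ms, amplitude_uv) of the extreme sample inside a window.

    polarity="pos" returns the maximum (e.g. P1, P2);
    polarity="neg" returns the minimum (e.g. the negative-going N170).
    """
    if polarity not in ("pos", "neg"):
        raise ValueError("polarity must be 'pos' or 'neg'")
    start, end = window_ms
    # Keep only the samples whose time point falls inside the search window.
    in_window = [(t, a) for t, a in zip(times_ms, amplitudes_uv) if start <= t <= end]
    if not in_window:
        raise ValueError("no samples inside the search window")
    pick = max if polarity == "pos" else min
    # Compare samples by amplitude; the matching time point is the peak latency.
    return pick(in_window, key=lambda sample: sample[1])


# Hypothetical toy waveform: a positive deflection centred on 90 ms and a
# negative deflection centred on 140 ms, sampled every 2 ms from 0 to 300 ms.
times: List[float] = [float(t) for t in range(0, 301, 2)]
wave: List[float] = [
    4.0 * math.exp(-(((t - 90.0) / 15.0) ** 2))
    - 6.0 * math.exp(-(((t - 140.0) / 15.0) ** 2))
    for t in times
]

p1_latency, p1_amplitude = peak_in_window(times, wave, (60.0, 120.0), "pos")
n170_latency, n170_amplitude = peak_in_window(times, wave, (110.0, 200.0), "neg")
print(f"P1:   {p1_latency:.0f} ms, {p1_amplitude:.2f} uV")
print(f"N170: {n170_latency:.0f} ms, {n170_amplitude:.2f} uV")
```

Because the N170 is negative-going, a "smaller" N170 in this scheme is a less negative minimum, and a shorter latency simply means that the extreme sample occurs earlier within the window.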

In light of this previous work, we propose that high EE and ES skills reflect greater face expertise and stronger configural face processing, two mechanisms that have been linked to the N170. Such abilities may engage more automatic and efficient networks, leading to faster and less effortful identification of facial emotion. This may explain why the N170 was earlier and smaller in response to faces expressing emotions in subjects with high ES and EE. Finally, the visual P2 component has been reported to reflect second-order configural processing (Latinus and Taylor, 2006) and to be sensitive to learning and control processes (Latinus and Taylor, 2005), with P2 amplitude decreasing after a learning/control task. In our study, P2 amplitude decreased as EC ability increased. On the basis of our results and previous investigations, we therefore suggest that expertise resulting from efficient learning of emotions facilitates the development of high EC in healthy subjects. The greater a subject's ability to control his or her emotions, the less confirmation of the previously identified emotion is required, resulting in a smaller P2.

Some articles have suggested that individual skills or personality factors may be related to the sex differences observed in ERPs (Campanella et al., 2004, 2012). We agree that the respective contributions of sex and personality factors to ERP modulations in response to emotional cues should be considered together. Indeed, better non-verbal abilities and socio-emotional skills are reported in females than in males (Hall, 1978 for a meta-analysis; McClure, 2000; Hall et al., 2006; Hall and Schmid Mast, 2008). This well-known behavioural female advantage may be attributable to women's greater tendency to experience, express and dwell on their emotions. Men, on the other hand, are viewed as tending to suppress or avoid both the experience and the expression of emotions. According to this view, men and women should differ in their ES, EE and EC scores. However, in this study, we found no significant effect of sex on SSI scores except for EC, with women scoring lower on this aspect of emotional processing. This result is in line with studies reporting gender differences in emotion regulation strategies (for review see Nolen-Hoeksema, 2012) but runs counter to the widely held view of females as the more sensitive and expressive sex. We also found that participant sex influenced P1 amplitude and N170 latency but not the P2 component, which, according to our results, is the ERP wave related to EC skills. Moreover, although the N170 peaked earlier in women than in men, N170 latency was correlated only with ES, which did not differ between the sexes. Taken together, these data suggest that the link observed here between ERP modulations and individual emotional skills is not driven by sex differences. In other words, the sex differences measured on the P1 and N170 components do not appear to be linked to specific personality traits of women and men.
These results are consistent with previous studies showing that female-specific socio-emotional sensitivity is indexed neurophysiologically mainly by modulations of the N2 and P3b components, and suggesting that gender differences in the processing of emotional stimuli may originate at the attentional level of information processing (Campanella et al., 2004; Li et al., 2008; Lithari et al., 2010). From this perspective, the sex effects on the P1 and N170 found here may relate more to differences between men and women in the perceptual processing of face representations than to emotion-specific processing.

We have shown here that early visual ERPs are influenced by individual emotional skills (an endogenous factor) during emotional face processing. Besides the need for independent replication of these results, several limitations should be considered. First, the relatively small sample (n = 28) for a study of individual differences, combined with some modest associations (correlations of about 0.40), means that these results should be regarded as preliminary. Larger samples in future experiments would address this concern. Second, we chose to focus on early visual ERP components (P1/N170/P2). Later post-perceptual components that are modulated by the emotional dimensions of faces could also be examined for correlations with emotional skills, such as the P300 (Krolak-Salmon et al., 2001; Campanella et al., 2002), the LPP (400–800 ms, centro-parietal regions) (Sato et al., 2001; Schupp et al., 2004) and the EPN (240–280 ms, temporo-occipital regions) (Junghofer et al., 2001; Sato et al., 2001; Schupp et al., 2004). Third, no overt behavioural task was given to the participants, making it difficult to determine whether they engaged in implicit or explicit processing during the EEG recording. We acknowledge that this is a weakness of the current study. However, we chose this paradigm because we intend to use the same protocol with children with pervasive developmental disorders, which requires a very short and passive paradigm. How implicit vs explicit processing of emotion relates to individual emotional skills requires further investigation. Finally, although the introduction and discussion review ERP modulations associated with extreme individual profiles, as in affective disorders, we did not screen our participants for these clinical factors.
Although the SSI subscores were within normal cut-offs, suggesting that our participants' emotional and social profiles are typical, specific clinical tools such as depression or anxiety rating scales would have strengthened our data by helping to ensure that the ERP modulations observed here are driven by individual emotional skills rather than by clinical factors.
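The point that correlations of about 0.40 in a sample of 28 are only preliminary can be made concrete with the textbook Fisher z-transform confidence interval for a Pearson correlation. The Python sketch below is an illustration under that method's usual assumptions (approximately bivariate-normal data, independent participants); the function names are hypothetical, and this is not the analysis reported in this study. For r = 0.40 and n = 28 it gives an approximate 95% interval of about 0.03 to 0.67, so the data are compatible with anything from a negligible to a fairly strong association.

```python
import math
from statistics import NormalDist
from typing import Tuple


def pearson_r_ci(r: float, n: int, level: float = 0.95) -> Tuple[float, float]:
    """Approximate confidence interval for Pearson's r via Fisher's z-transform."""
    if not -1.0 < r < 1.0:
        raise ValueError("r must lie strictly between -1 and 1")
    if n <= 3:
        raise ValueError("n must be greater than 3")
    z = math.atanh(r)                                # Fisher z-transform of r
    se = 1.0 / math.sqrt(n - 3)                      # approximate standard error of z
    crit = NormalDist().inv_cdf(0.5 + level / 2.0)   # about 1.96 for 95%
    # Build the interval on the z scale, then map both bounds back to r.
    return math.tanh(z - crit * se), math.tanh(z + crit * se)


def t_statistic(r: float, n: int) -> float:
    """t value for testing r against zero, with n - 2 degrees of freedom."""
    return r * math.sqrt(n - 2) / math.sqrt(1.0 - r ** 2)


# Values taken from the limitation above: r of about 0.40 with n = 28.
low, high = pearson_r_ci(0.40, 28)
print(f"95% CI for r = 0.40, n = 28: [{low:.2f}, {high:.2f}]")
print(f"t(26) = {t_statistic(0.40, 28):.2f}")
```

The same correlation corresponds to t(26) of about 2.23, which clears the conventional two-tailed 0.05 threshold for a single test (critical t of about 2.06) by only a small margin.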

CONCLUSIONS AND FUTURE DIRECTIONS

In conclusion, consistent with the extensive literature, our findings confirm that early ERPs are sensitive to the nature of the emotion expressed by a face as early as 90 ms (P1) and again at 140 ms (N170). Moreover, this study examined the relationship between visual ERPs and the subject's emotional abilities: the N170 and P2 components were significantly modulated by endogenous factors (emotional sensitivity, expressivity and control). We therefore suggest that the N170 and P2 could be used as individual markers to assess strengths and weaknesses in emotional areas. Finally, this study provides information for further investigations of affective disorders. Future studies should continue to examine how visual ERP components relate to individual differences in emotional skills in autism. Clinically, such markers could be used to target areas of emotional impairment and to track the evolution of these impairments over the course of therapeutic interventions (e.g. Pallanti et al., 1999; Habel et al., 2010). These results highlight the importance of understanding individual differences in socio-emotional motivation in both typical and atypical populations.

Conflict of Interest

None declared.

Acknowledgments

The authors thank all the subjects for their time and effort spent participating in this study. Special thanks are due to Sylvie Roux for her valuable help with the experimental design and analyses. This work was supported by grants from the Foundation ORANGE.

References

- Addington J, Addington D. Facial affect recognition and information processing in schizophrenia and bipolar disorder. Schizophrenia Research. 1998;32(3):171–81. doi: 10.1016/s0920-9964(98)00042-5.

- Adolphs R. Neural systems for recognizing emotion. Current Opinion in Neurobiology. 2002;12(2):169–77. doi: 10.1016/s0959-4388(02)00301-x.

- Aguera PE, Jerbi K, Caclin A, Bertrand O. ELAN: a software package for analysis and visualization of MEG, EEG, and LFP signals. Computational Intelligence and Neuroscience. 2011;2011:158970. doi: 10.1155/2011/158970.

- Akechi H, Senju A, Kikuchi Y, Tojo Y, Osanai H, Hasegawa T. The effect of gaze direction on the processing of facial expressions in children with autism spectrum disorder: an ERP study. Neuropsychologia. 2010;48(10):2841–51. doi: 10.1016/j.neuropsychologia.2010.05.026.

- Ashley V, Vuilleumier P, Swick D. Time course and specificity of event-related potentials to emotional expressions. Neuroreport. 2004;15(1):211–6. doi: 10.1097/00001756-200401190-00041.

- Bastian VA, Burns NR, Nettelbeck T. Emotional intelligence predicts life skills, but not as well as personality and cognitive abilities. Personality and Individual Differences. 2005;39:1135–45.

- Batty M, Meaux E, Wittemeyer K, Roge B, Taylor MJ. Early processing of emotional faces in children with autism: an event-related potential study. Journal of Experimental Child Psychology. 2011;109:430–44. doi: 10.1016/j.jecp.2011.02.001.

- Batty M, Taylor MJ. Visual categorization during childhood: an ERP study. Psychophysiology. 2002;39(4):482–90. doi: 10.1017.S0048577202010764.

- Batty M, Taylor MJ. Early processing of the six basic facial emotional expressions. Brain Research. Cognitive Brain Research. 2003;17(3):613–20. doi: 10.1016/s0926-6410(03)00174-5.

- Batty M, Taylor MJ. The development of emotional face processing during childhood. Developmental Science. 2006;9(2):207–20. doi: 10.1111/j.1467-7687.2006.00480.x.

- Belopolsky AV, Devue C, Theeuwes J. Angry faces hold the eyes. Visual Cognition. 2010;19(1):27–36.

- Bentin S, Allison T, Puce A, Perez E, McCarthy G. Electrophysiological studies of face perception in humans. Journal of Cognitive Neuroscience. 1996;8(6):551–65. doi: 10.1162/jocn.1996.8.6.551.

- Bentin S, Golland Y, Flevaris A, Robertson LC, Moscovitch M. Processing the trees and the forest during initial stages of face perception: electrophysiological evidence. Journal of Cognitive Neuroscience. 2006;18(8):1406–21. doi: 10.1162/jocn.2006.18.8.1406.

- Boutsen L, Humphreys GW, Praamstra P, Warbrick T. Comparing neural correlates of configural processing in faces and objects: an ERP study of the Thatcher illusion. NeuroImage. 2006;32(1):352–67. doi: 10.1016/j.neuroimage.2006.03.023.

- Boyatzis CJ, Chazan E, Ting CZ. Preschool children's decoding of facial emotions. Journal of Genetic Psychology. 1993;154(3):375–82. doi: 10.1080/00221325.1993.10532190.

- Brackett M, Mayer JD, Warner RM. Emotional intelligence and the prediction of behavior. Personality and Individual Differences. 2004;36:1387–402.

- Brackett MA, Rivers SE, Shiffman S, Lerner N, Salovey P. Relating emotional abilities to social functioning: a comparison of self-report and performance measures of emotional intelligence. Journal of Personality and Social Psychology. 2006;91(4):780–95. doi: 10.1037/0022-3514.91.4.780.

- Breiter HC, Etcoff NL, Whalen PJ, et al. Response and habituation of the human amygdala during visual processing of facial expression. Neuron. 1996;17(5):875–87. doi: 10.1016/s0896-6273(00)80219-6.

- Brosch T, Sander D, Pourtois G, Scherer KR. Beyond fear: rapid spatial orienting toward positive emotional stimuli. Psychological Science. 2008;19(4):362–70. doi: 10.1111/j.1467-9280.2008.02094.x.

- Bruce V, Young A. Understanding face recognition. British Journal of Psychology. 1986;77(Pt 3):305–27. doi: 10.1111/j.2044-8295.1986.tb02199.x.

- Caharel S, Bernard C, Thibaut F, et al. The effects of familiarity and emotional expression on face processing examined by ERPs in patients with schizophrenia. Schizophrenia Research. 2007;95(1–3):186–96. doi: 10.1016/j.schres.2007.06.015.

- Caharel S, Courtay N, Bernard C, Lalonde R, Rebai M. Familiarity and emotional expression influence an early stage of face processing: an electrophysiological study. Brain and Cognition. 2005;59(1):96–100. doi: 10.1016/j.bandc.2005.05.005.

- Calder AJ, Young AW, Perrett DI, Etcoff NL, Rowland D. Categorical perception of morphed facial expressions. Visual Cognition. 1996;3:81–117.

- Campanella S, Falbo L, Rossignol M, et al. Sex differences on emotional processing are modulated by subclinical levels of alexithymia and depression: a preliminary assessment using event-related potentials. Psychiatry Research. 2012;197(1–2):145–53. doi: 10.1016/j.psychres.2011.12.026.

- Campanella S, Quinet P, Bruyer R, Crommelinck M, Guerit JM. Categorical perception of happiness and fear facial expressions: an ERP study. Journal of Cognitive Neuroscience. 2002;14(2):210–27. doi: 10.1162/089892902317236858.

- Campanella S, Rossignol M, Mejias S, et al. Human gender differences in an emotional visual oddball task: an event-related potentials study. Neuroscience Letters. 2004;367(1):14–18. doi: 10.1016/j.neulet.2004.05.097.

- Carlson SM, Zayas V, Guthormsen A. Neural correlates of decision making on a gambling task. Child Development. 2009;80(4):1076–96. doi: 10.1111/j.1467-8624.2009.01318.x.

- Carlson SR, Iacono WG. Heritability of P300 amplitude development from adolescence to adulthood. Psychophysiology. 2006;43(5):470–80. doi: 10.1111/j.1469-8986.2006.00450.x.

- Chammat M, Foucher A, Nadel J, Dubal S. Reading sadness beyond human faces. Brain Research. 2010;1348:95–104. doi: 10.1016/j.brainres.2010.05.051.

- Cheung CH, Rutherford HJ, Mayes LC, McPartland JC. Neural responses to faces reflect social personality traits. Social Neuroscience. 2010;5(4):351–9. doi: 10.1080/17470911003597377.

- Clark TF, Winkielman P, McIntosh DN. Autism and the extraction of emotion from briefly presented facial expressions: stumbling at the first step of empathy. Emotion. 2008;8(6):803–9. doi: 10.1037/a0014124.

- Dai Q, Feng Z. Deficient inhibition of return for emotional faces in depression. Progress in Neuro-Psychopharmacology and Biological Psychiatry. 2009;33(6):921–32. doi: 10.1016/j.pnpbp.2009.04.012.

- Damasio AR. Emotion in the perspective of an integrated nervous system. Brain Research Reviews. 1998;26(2–3):83–6. doi: 10.1016/s0165-0173(97)00064-7.

- Dawson G, Webb SJ, Carver L, Panagiotides H, McPartland J. Young children with autism show atypical brain responses to fearful versus neutral facial expressions of emotion. Developmental Science. 2004;7(3):340–59. doi: 10.1111/j.1467-7687.2004.00352.x.

- De Jong MC, Van Engeland MD, Kemner C. Attentional effects of gaze shifts are influenced by emotion and spatial frequency, but not in autism. Journal of the American Academy of Child and Adolescent Psychiatry. 2008;47(4):443–54. doi: 10.1097/CHI.0b013e31816429a6.

- De Sonneville LM, Verschoor CA, Njiokiktjien C, Op het Veld V, Toorenaar N, Vranken M. Facial identity and facial emotions: speed, accuracy, and processing strategies in children and adults. Journal of Clinical and Experimental Neuropsychology. 2002;24(2):200–13. doi: 10.1076/jcen.24.2.200.989.

- Degabriele R, Lagopoulos J, Malhi G. Neural correlates of emotional face processing in bipolar disorder: an event-related potential study. Journal of Affective Disorders. 2011;133(1–2):212–20. doi: 10.1016/j.jad.2011.03.033.

- Dennis TA, Malone MM, Chen CC. Emotional face processing and emotion regulation in children: an ERP study. Developmental Neuropsychology. 2009;34(1):85–102. doi: 10.1080/87565640802564887.

- Deruelle C, Rondan C, Gepner B, Tardif C. Spatial frequency and face processing in children with autism and Asperger syndrome. Journal of Autism and Developmental Disorders. 2004;34(2):199–210. doi: 10.1023/b:jadd.0000022610.09668.4c.

- Dubal S, Foucher A, Jouvent R, Nadel J. Human brain spots emotion in non humanoid robots. Social Cognitive and Affective Neuroscience. 2011;6:90–7. doi: 10.1093/scan/nsq019.

- Eger E, Jedynak A, Iwaki T, Skrandies W. Rapid extraction of emotional expression: evidence from evoked potential fields during brief presentation of face stimuli. Neuropsychologia. 2003;41(7):808–17. doi: 10.1016/s0028-3932(02)00287-7.

- Eimer M. Does the face-specific N170 component reflect the activity of a specialized eye processor? Neuroreport. 1998;9(13):2945–8. doi: 10.1097/00001756-199809140-00005.

- Eimer M, Gosling A, Nicholas S, Kiss M. The N170 component and its links to configural face processing: a rapid neural adaptation study. Brain Research. 2011;1376:76–87. doi: 10.1016/j.brainres.2010.12.046.

- Eimer M, Holmes A. Event-related brain potential correlates of emotional face processing. Neuropsychologia. 2007;45(1):15–31. doi: 10.1016/j.neuropsychologia.2006.04.022.

- Ekman P. Emotions Revealed. New York, NY: Owl Books; 2003.

- Feinstein JS, Goldin PR, Stein MB, Brown GG, Paulus MP. Habituation of attentional networks during emotion processing. Neuroreport. 2002;13(10):1255–8. doi: 10.1097/00001756-200207190-00007.

- Ganis G, Smith D, Schendan HE. The N170, not the P1, indexes the earliest time for categorical perception of faces, regardless of interstimulus variance. Neuroimage. 2012;62(3):1563–74. doi: 10.1016/j.neuroimage.2012.05.043.

- George N, Evans J, Fiori N, Davidoff J, Renault B. Brain events related to normal and moderately scrambled faces. Brain Research. Cognitive Brain Research. 1996;4(2):65–76. doi: 10.1016/0926-6410(95)00045-3.

- Gohm CL, Corser GC, Dalsky DJ. Emotional intelligence under stress: useful, unnecessary, or irrelevant? Personality and Individual Differences. 2005;39:1017–28.

- Grispan D, Hemphill A, Nowicki SJ. Improving the ability of elementary school-age children to identify emotion in facial expression. Journal of Genetic Psychology. 2003;164:88–100. doi: 10.1080/00221320309597505.

- Habel U, Koch K, Kellermann T, et al. Training of affect recognition in schizophrenia: Neurobiological correlates. Social Neuroscience. 2010;5(1):92–104. doi: 10.1080/17470910903170269.

- Hajcak G, MacNamara A, Olvet DM. Event-related potentials, emotion, and emotion regulation: an integrative review. Developmental Neuropsychology. 2010;35(2):129–55. doi: 10.1080/87565640903526504.

- Hall JA. Gender effect in decoding nonverbal cues. Psychological Bulletin. 1978;85:845–57.

- Hall JA, Murphy NA, Schmid-Mast M. Recall of nonverbal cues: exploring a new definition of interpersonal sensitivity. Journal of Nonverbal Behavior. 2006;30:141–55.

- Hall JA, Schmid Mast M. Are women always more interpersonally sensitive than men? Impact of goals and content domain. Personality and Social Psychology Bulletin. 2008;34(1):144–55. doi: 10.1177/0146167207309192.

- Harris A, Nakayama K. Rapid adaptation of the m170 response: importance of face parts. Cerebral Cortex. 2008;18(2):467–76. doi: 10.1093/cercor/bhm078.

- Hileman CM, Henderson H, Mundy P, Newell L, Jaime M. Developmental and individual differences on the P1 and N170 ERP components in children with and without autism. Developmental Neuropsychology. 2011;36(2):214–36. doi: 10.1080/87565641.2010.549870.

- Hobson RP. The autistic child's appraisal of expressions of emotion. Journal of Child Psychology and Psychiatry. 1986;27(3):321–42. doi: 10.1111/j.1469-7610.1986.tb01836.x.

- Holmes A, Vuilleumier P, Eimer M. The processing of emotional facial expression is gated by spatial attention: evidence from event-related brain potentials. Brain Research. Cognitive Brain Research. 2003;16(2):174–84. doi: 10.1016/s0926-6410(02)00268-9.

- Itier RJ, Latinus M, Taylor MJ. Face, eye and object early processing: what is the face specificity? Neuroimage. 2006;29(2):667–76. doi: 10.1016/j.neuroimage.2005.07.041.

- Itier RJ, Taylor MJ. Inversion and contrast polarity reversal affect both encoding and recognition processes of unfamiliar faces: a repetition study using ERPs. Neuroimage. 2002;15:353–72. doi: 10.1006/nimg.2001.0982.

- Itier RJ, Taylor MJ. N170 or N1? Spatiotemporal differences between object and face processing using ERPs. Cerebral Cortex. 2004;14(2):132–42. doi: 10.1093/cercor/bhg111.

- Jacques C, Rossion B. Misaligning face halves increases and delays the N170 specifically for upright faces: implications for the nature of early face representations. Brain Research. 2010;1318:96–109. doi: 10.1016/j.brainres.2009.12.070.

- Jeffreys DA. The influence of stimulus orientation on the vertex positive scalp potential evoked by faces. Experimental Brain Research. 1993;96(1):163–72. doi: 10.1007/BF00230449.

- Jemel B, George N, Olivares E, Fiori N, Renault B. Event-related potentials to structural familiar face incongruity processing. Psychophysiology. 1999;36(4):437–52.

- Joyce C, Rossion B. The face-sensitive N170 and VPP components manifest the same brain processes: the effect of reference electrode site. Clinical Neurophysiology. 2005;116(11):2613–31. doi: 10.1016/j.clinph.2005.07.005.

- Junghofer M, Bradley MM, Elbert TR, Lang PJ. Fleeting images: a new look at early emotion discrimination. Psychophysiology. 2001;38(2):175–8.

- Krolak-Salmon P, Fischer C, Vighetto A, Mauguiere F. Processing of facial emotional expression: spatio-temporal data as assessed by scalp event-related potentials. European Journal of Neuroscience. 2001;13(5):987–94. doi: 10.1046/j.0953-816x.2001.01454.x.

- Lang SF, Nelson CA, Collins PF. Event-related potentials to emotional and neutral stimuli. Journal of Clinical and Experimental Neuropsychology. 1990;12(6):946–58. doi: 10.1080/01688639008401033.

- Latinus M, Taylor MJ. Holistic processing of faces: learning effects with Mooney faces. Journal of Cognitive Neuroscience. 2005;17(8):1316–27. doi: 10.1162/0898929055002490.

- Latinus M, Taylor MJ. Face processing stages: impact of difficulty and the separation of effects. Brain Research. 2006;1123(1):179–87. doi: 10.1016/j.brainres.2006.09.031.

- Lee SH, Kim EY, Kim S, Bae SM. Event-related potential patterns and gender effects underlying facial affect processing in schizophrenia patients. Neuroscience Research. 2010;67(2):172–80. doi: 10.1016/j.neures.2010.03.001.

- Leppanen JM, Hietanen JK. Emotion recognition and social adjustment in school-aged girls and boys. Scandinavian Journal of Psychology. 2001;42(5):429–35. doi: 10.1111/1467-9450.00255.

- Lewin C, Herlitz A. Sex differences in face recognition—women's faces make the difference. Brain and Cognition. 2002;50(1):121–8. doi: 10.1016/s0278-2626(02)00016-7.

- Lewin C, Wolgers G, Herlitz A. Sex differences favoring women in verbal but not in visuospatial episodic memory. Neuropsychology. 2001;15(2):165–73. doi: 10.1037//0894-4105.15.2.165.

- Lewis MD, Lamm C, Segalowitz SJ, Stieben J, Zelazo PD. Neurophysiological correlates of emotion regulation in children and adolescents. Journal of Cognitive Neuroscience. 2006;18(3):430–43. doi: 10.1162/089892906775990633.

- Li H, Yuan J, Lin C. The neural mechanism underlying the female advantage in identifying negative emotions: an event-related potential study. Neuroimage. 2008;40(4):1921–9. doi: 10.1016/j.neuroimage.2008.01.033.

- Linkenkaer-Hansen K, Palva JM, Sams M, Hietanen JK, Aronen HJ, Ilmoniemi RJ. Face-selective processing in human extrastriate cortex around 120 ms after stimulus onset revealed by magneto- and electroencephalography. Neuroscience Letters. 1998;253(3):147–50. doi: 10.1016/s0304-3940(98)00586-2.

- Lithari C, Frantzidis CA, Papadelis C, et al. Are females more responsive to emotional stimuli? A neurophysiological study across arousal and valence dimensions. Brain Topography. 2010;23(1):27–40. doi: 10.1007/s10548-009-0130-5.

- Liu L, Ioannides AA, Streit M. Single trial analysis of neurophysiological correlates of the recognition of complex objects and facial expressions of emotion. Brain Topography. 1999;11(4):291–303. doi: 10.1023/a:1022258620435.

- Lopes PN, Brackett MA, Nezlek JB, Schutz A, Sellin I, Salovey P. Emotional intelligence and social interaction. Personality and Social Psychology Bulletin. 2004;30(8):1018–34. doi: 10.1177/0146167204264762.

- Lopes PN, Salovey P, Cote S, Beers M. Emotion regulation abilities and the quality of social interaction. Emotion. 2005;5(1):113–8. doi: 10.1037/1528-3542.5.1.113.

- Lyubomirsky S, King L, Diener E. The benefits of frequent positive affect: does happiness lead to success? Psychological Bulletin. 2005;131(6):803–55. doi: 10.1037/0033-2909.131.6.803.

- Malhi GS, Lagopoulos J, Sachdev PS, Ivanovski B, Shnier R, Ketter T. Is a lack of disgust something to fear? A functional magnetic resonance imaging facial emotion recognition study in euthymic bipolar disorder patients. Bipolar Disorders. 2007;9(4):345–57. doi: 10.1111/j.1399-5618.2007.00485.x.

- Marinkovic K, Halgren E. Human brain potentials related to the emotional expression, repetition, and gender of faces. Psychobiology. 1998;26:248–356.

- Matthews G, Zeidner M, Roberts RD. Emotional Intelligence: Science and Myth. Cambridge, MA: MIT Press; 2002.

- Mayer JD, Roberts RD, Barsade SG. Human abilities: emotional intelligence. Annual Review of Psychology. 2008;59:507–36. doi: 10.1146/annurev.psych.59.103006.093646.

- McClure EB. A meta-analytic review of sex differences in facial expression processing and their development in infants, children, and adolescents. Psychological Bulletin. 2000;126(3):424–53. doi: 10.1037/0033-2909.126.3.424.

- McPartland J, Dawson G, Webb SJ, Panagiotides H, Carver LJ. Event-related brain potentials reveal anomalies in temporal processing of faces in autism spectrum disorder. Journal of Child Psychology and Psychiatry. 2004;45(7):1235–45. doi: 10.1111/j.1469-7610.2004.00318.x.

- Morel S, Ponz A, Mercier M, Vuilleumier P, George N. EEG-MEG evidence for early differential repetition effects for fearful, happy and neutral faces. Brain Research. 2009;1254:84–98. doi: 10.1016/j.brainres.2008.11.079.

- Moser JS, Hajcak G, Bukay E, Simons RF. Intentional modulation of emotional responding to unpleasant pictures: an ERP study. Psychophysiology. 2006;43(3):292–6. doi: 10.1111/j.1469-8986.2006.00402.x. [DOI] [PubMed] [Google Scholar]

- Munte TF, Brack M, Grootheer O, Wieringa B, Matzke M, Johannes S. Brain potentials reveal the timing of face identity and expression judgments. Neuroscience Research. 1998;30(1):25–34. doi: 10.1016/s0168-0102(97)00118-1.

- Nolen-Hoeksema S. Emotion regulation and psychopathology: the role of gender. Annual Review of Clinical Psychology. 2012;8:161–87. doi: 10.1146/annurev-clinpsy-032511-143109.

- O'Connor K, Hamm JP, Kirk IJ. The neurophysiological correlates of face processing in adults and children with Asperger's syndrome. Brain and Cognition. 2005;59(1):82–95. doi: 10.1016/j.bandc.2005.05.004.

- Oostenveld R, Praamstra P. The five percent electrode system for high-resolution EEG and ERP measurements. Clinical Neurophysiology. 2001;112(4):713–9. doi: 10.1016/s1388-2457(00)00527-7.

- Pallanti S, Quercioli L, Pazzagli A. Effects of clozapine on awareness of illness and cognition in schizophrenia. Psychiatry Research. 1999;86(3):239–49. doi: 10.1016/s0165-1781(99)00033-5.

- Picton TW, Bentin S, Berg P, et al. Guidelines for using human event-related potentials to study cognition: recording standards and publication criteria. Psychophysiology. 2000;37(2):127–52.

- Pizzagalli DA, Lehmann D, Hendrick AM, Regard M, Pascual-Marqui RD, Davidson RJ. Affective judgments of faces modulate early activity (approximately 160 ms) within the fusiform gyri. Neuroimage. 2002;16(3 Pt 1):663–77. doi: 10.1006/nimg.2002.1126.

- Pourtois G, Grandjean D, Sander D, Vuilleumier P. Electrophysiological correlates of rapid spatial orienting towards fearful faces. Cerebral Cortex. 2004;14(6):619–33. doi: 10.1093/cercor/bhh023.

- Pourtois G, Thut G, Grave de Peralta R, Michel C, Vuilleumier P. Two electrophysiological stages of spatial orienting towards fearful faces: early temporo-parietal activation preceding gain control in extrastriate visual cortex. Neuroimage. 2005;26(1):149–63. doi: 10.1016/j.neuroimage.2005.01.015.

- Proverbio A, Riva F, Martin E, Zani A. Face coding is bilateral in the female brain. PLoS One. 2010;5(6):e11242. doi: 10.1371/journal.pone.0011242.

- Riggio RE. Manual for the Social Skills Inventory: Research edition. Palo Alto, CA: Consulting Psychologists Press; 1989.

- Riggio RE. Social Skills Manual. 2nd ed. Menlo Park, CA: Mind Garden Inc; 2003.

- Rosen HR, Rich BA. Neurocognitive correlates of emotional stimulus processing in pediatric bipolar disorder: a review. Postgraduate Medicine. 2010;122(4):94–104. doi: 10.3810/pgm.2010.07.2177.

- Rossignol M, Campanella S, Maurage P, Heeren A, Falbo L, Philippot P. Enhanced perceptual responses during visual processing of facial stimuli in young socially anxious individuals. Neuroscience Letters. 2012;526(1):68–73. doi: 10.1016/j.neulet.2012.07.045.

- Rossion B, Curran T, Gauthier I. A defense of the subordinate-level expertise account for the N170 component. Cognition. 2002;85(2):189–96. doi: 10.1016/s0010-0277(02)00101-4.

- Rossion B, Delvenne JF, Debatisse D, et al. Spatio-temporal localization of the face inversion effect: an event-related potentials study. Biological Psychology. 1999;50(3):173–89. doi: 10.1016/s0301-0511(99)00013-7.

- Rotshtein P, Richardson MP, Winston JS, et al. Amygdala damage affects event-related potentials for fearful faces at specific time windows. Human Brain Mapping. 2010;31(7):1089–105. doi: 10.1002/hbm.20921.

- Sagiv N, Bentin S. Structural encoding of human and schematic faces: holistic and part-based processes. Journal of Cognitive Neuroscience. 2001;13(7):937–51. doi: 10.1162/089892901753165854.

- Sato W, Kochiyama T, Yoshikawa S, Matsumura M. Emotional expression boosts early visual processing of the face: ERP recording and its decomposition by independent component analysis. Neuroreport. 2001;12(4):709–14. doi: 10.1097/00001756-200103260-00019.

- Schupp HT, Junghofer M, Weike AI, Hamm AO. The selective processing of briefly presented affective pictures: an ERP analysis. Psychophysiology. 2004;41(3):441–9. doi: 10.1111/j.1469-8986.2004.00174.x.

- Sprengelmeyer R, Jentzsch I. Event related potentials and the perception of intensity in facial expressions. Neuropsychologia. 2006;44(14):2899–906. doi: 10.1016/j.neuropsychologia.2006.06.020.

- Sterzer P, Hilgenfeldt T, Freudenberg P, Bermpohl F, Adli M. Access of emotional information to visual awareness in patients with major depressive disorder. Psychological Medicine. 2011;41(8):1615–24. doi: 10.1017/S0033291710002540.

- Streit M, Dammers J, Simsek-Kraues S, Brinkmeyer J, Wolwer W, Ioannides A. Time course of regional brain activations during facial emotion recognition in humans. Neuroscience Letters. 2003;342(1–2):101–4. doi: 10.1016/s0304-3940(03)00274-x.

- Surguladze SA, Young AW, Senior C, Brebion G, Travis MJ, Phillips ML. Recognition accuracy and response bias to happy and sad facial expressions in patients with major depression. Neuropsychology. 2004;18(2):212–8. doi: 10.1037/0894-4105.18.2.212.

- Swithenby SJ, Bailey AJ, Brautigam S, Josephs OE, Jousmaki V, Tesche CD. Neural processing of human faces: a magnetoencephalographic study. Experimental Brain Research. 1998;118(4):501–10. doi: 10.1007/s002210050306.

- Taylor MJ, Edmonds GE, McCarthy G, Allison T. Eyes first! Eye processing develops before face processing in children. Neuroreport. 2001;12(8):1671–6. doi: 10.1097/00001756-200106130-00031.

- Taylor MJ, Pang EW. Developmental changes in early cognitive processes. Electroencephalography and Clinical Neurophysiology. Supplement. 1999;49:145–53.

- Utama NP, Takemoto A, Koike Y, Nakamura K. Phased processing of facial emotion: an ERP study. Neuroscience Research. 2009;64(1):30–40. doi: 10.1016/j.neures.2009.01.009.

- Vuilleumier P, Pourtois G. Distributed and interactive brain mechanisms during emotion face perception: evidence from functional neuroimaging. Neuropsychologia. 2007;45(1):174–94. doi: 10.1016/j.neuropsychologia.2006.06.003.

- Williams LM, Liddell BJ, Rathjen J, et al. Mapping the time course of nonconscious and conscious perception of fear: an integration of central and peripheral measures. Human Brain Mapping. 2004;21(2):64–74. doi: 10.1002/hbm.10154.

- Wong TK, Fung PC, Chua SE, McAlonan GM. Abnormal spatiotemporal processing of emotional facial expressions in childhood autism: dipole source analysis of event-related potentials. European Journal of Neuroscience. 2008;28(2):407–16. doi: 10.1111/j.1460-9568.2008.06328.x.

- Wright B, Clarke N, Jordan J, et al. Emotion recognition in faces and the use of visual context in young people with high-functioning autism spectrum disorders. Autism. 2008;12(6):607–26. doi: 10.1177/1362361308097118.

- Yang J, Yuan J, Li H. Emotional expectations influence neural sensitivity to fearful faces in humans: an event-related potential study. Science China Life Sciences. 2010;53(11):1361–8. doi: 10.1007/s11427-010-4083-4.

- Yuan J, Zhang J, Zhou X, et al. Neural mechanisms underlying the higher levels of subjective well-being in extraverts: pleasant bias and unpleasant resistance. Cognitive, Affective and Behavioral Neuroscience. 2012;12:175–92. doi: 10.3758/s13415-011-0064-8.

- Zion-Golumbic E, Bentin S. Dissociated neural mechanisms for face detection and configural encoding: evidence from N170 and induced gamma-band oscillation effects. Cerebral Cortex. 2007;17(8):1741–9. doi: 10.1093/cercor/bhl100.