Abstract

Orthopaedic surgical skill is traditionally acquired during training in an apprenticeship model that has been largely unchanged for nearly 100 years. However, increased pressure for operating room efficiency, a focus on patient safety, work hour restrictions, and a movement towards competency-based education are changing the traditional paradigm. Surgical simulation has the potential to help address these changes. This manuscript reviews the scientific background on skill acquisition and surgical simulation as it applies to orthopaedic surgery. It argues that simulation in orthopaedics lags behind other disciplines and focuses too little on simulator validation. The case is made that orthopaedic training is more efficient with simulators that facilitate deliberate practice throughout resident training, and that more research should focus on simulator validation and the refinement of skill definition.


The Need for Deliberate Practice in Surgical Skill Acquisition

It is widely accepted that surgeons benefit from practice. The more often a surgical procedure is performed, the lower its morbidity1-6 and the better the outcome7. However, practice should not be confused with repetition; performance does not improve simply because a task is repeated. The key to consistent improvement is “deliberate practice” combined with structured training. Deliberate practice involves engaging learners in focused, effortful skill repetition in progressive exercises that provide informative feedback. Trainees must receive immediate, informative feedback while trying to improve, with the particular feedback matching the characteristics of the task. Trainees should also perform the same or a very similar task repeatedly to increase performance8.

Motor skills are essential to surgical precision. Deliberate practice improves motor skills9-10. Learners advance quickly by repeating well-defined, level-appropriate tasks and receiving immediate feedback that allows for error correction. Even so, many repetitions may be needed. For example, the learning curve for a radical prostatectomy, a mildly complex surgery, does not plateau until 250 operations have been performed11-12. For hip fracture surgery, residents require an average of 20-30 trials before attaining expert speed, but learning curves vary widely13.

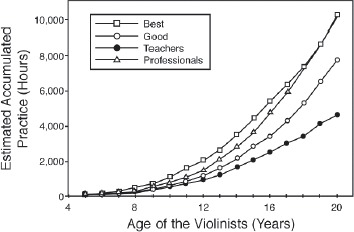

Ericsson, Krampe, and Tesch-Römer proposed a theoretical framework for the acquisition of expert performance through deliberate practice10. Using the framework of deliberate practice, Ericsson et al. found that the time to achieve expertise depends on cumulative time spent deliberately practicing, rather than the time since the activity was initiated. The famous standard of 10,000 hours of deliberate practice to become an expert is based on a study of the number of hours of deliberate practice by violinists (Fig. 1). By the age of 20, the most advanced group of expert violinists had accumulated 10,000 hours, while the next most accomplished group had 2,500 fewer hours. The least accomplished group of experts had accumulated 5,000 hours of deliberate practice10.

Figure 1. The relationship between level of expertise in violinists and the accumulated hours of deliberate practice. From Ericsson, et al.10, used with permission.

The concept of deliberate practice has also been explored in the medical domain. Residents who distributed practice on a drilling task over four weeks outperformed residents who logged all of their practice in one day14. The benefit of periodic practice over lumped practice is consistent with research in many other domains15. This finding argues for repeated skills practice distributed throughout a curriculum, rather than a single all-day session focusing on a specific skill.

A meta-analysis of fourteen studies found that deliberate practice using simulation-based techniques was, overall, a superior method of training compared to the apprenticeship model in the medical field16. The current apprenticeship model in orthopaedics does not facilitate deliberate practice, because it is organized around ad hoc experiential opportunities driven by patient needs rather than providing repetition of key skills based on learner needs. The key to facilitating deliberate practice is effective simulation.

The Role of Dedicated Simulation in Surgical Skills Training

Over the past decade, the role of surgical simulation in the acquisition of cognitive and technical skills has grown, particularly for minimally invasive and limited-exposure surgery. Many disciplines have shifted away from the apprenticeship model to teach surgical skills. The field of orthopaedics has not kept pace with these developments. Medical students and residents trained on simulators demonstrate improved performance in actual surgeries17. For example, experts performed reliably better on a bronchoscopy simulator than doctors with less or no experience18. Residents who had been trained to a certified level of competency on a laparoscopic simulator performed their first actual surgery with fewer errors, and caused fewer injuries, than a control group of residents without simulator training19.

A primary factor motivating programs to search for new, more efficient surgical training methods is a 2003 ACGME-mandated reduction in residents' work hours to a maximum of 80 hours per week. Although these changes threaten the amount of time residents have to practice surgical skills, it does not necessarily follow that their competency will decrease. Some forms of practice are more effective at increasing skills than others, so the number of hours of practice may not be the most reliable measure of skill acquisition. To borrow a sports analogy, a baseball player comes up to bat three to four times a game. During each at-bat, the player sees an average of four pitches. This means that in a game that averages nearly three hours, a batter sees roughly 12-16 pitches. There are simply not enough in-game hours to reach the expert batting level. In a batting cage, a player can hit 50 balls in five minutes, the equivalent of playing roughly four consecutive games. Simulation could supplement apprenticeship training by enabling residents to effectively practice more in less time.
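The arithmetic behind the batting analogy can be made explicit. The sketch below uses only the figures quoted above (three to four at-bats, four pitches per at-bat, a three-hour game, 50 balls in five minutes); the function and variable names are illustrative and do not come from any cited source.

```python
def reps_per_hour(reps: float, minutes: float) -> float:
    """Return repetitions per hour for a given count and duration in minutes."""
    return reps / minutes * 60.0

# In-game exposure: 3-4 at-bats of about 4 pitches each, over a roughly 3-hour game.
game_low = reps_per_hour(3 * 4, 180)
game_high = reps_per_hour(4 * 4, 180)

# Batting cage: 50 balls in 5 minutes.
cage = reps_per_hour(50, 5)

# Number of games needed to see 50 pitches at in-game exposure.
games_equiv_low = 50 / (4 * 4)
games_equiv_high = 50 / (3 * 4)

print(f"game: {game_low:.1f}-{game_high:.1f} pitches/hour; cage: {cage:.0f} pitches/hour")
print(f"50 cage pitches correspond to {games_equiv_low:.1f}-{games_equiv_high:.1f} games")
```

The point of the comparison is the repetition rate per unit of time, which is the quantity a simulator is meant to raise.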

The High Cost of Resident Training in an Apprenticeship Model

The apprenticeship model is the epitome of “hands-on, real world” training, but it comes at a cost. Any increased time in the operating room is expensive, a cost which is passed to the patient and the health care system. Bridges and Diamond compared operating times in general, pediatric, vascular, plastic, urologic, and trauma surgery procedures where residents were present to those with no residents in attendance20. In their four-year study, operations with residents took an average of 12.64 minutes longer, measured from the first incision to leaving the room. Over four years, the lost time was approximately 11,184 minutes per resident. In orthopaedics, a study of ACL reconstruction noted an average increased cost of $661.85 due to longer operative time when a resident completed the surgery compared to a faculty surgeon21.
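As a rough check on these figures, the two numbers reported by Bridges and Diamond can be related to each other. The sketch below is illustrative only: the case count it prints is implied by dividing the two reported figures and is not a number stated in the source, and the constant names are assumptions made for this example.

```python
# Figures quoted in the text above.
EXTRA_MINUTES_PER_CASE = 12.64        # mean added duration when a resident is present
LOST_MINUTES_PER_RESIDENT = 11_184    # approximate total over four years of training

# Implied number of cases per resident (an inference, not a reported value).
implied_cases = LOST_MINUTES_PER_RESIDENT / EXTRA_MINUTES_PER_CASE

# The same lost time expressed in hours.
lost_hours = LOST_MINUTES_PER_RESIDENT / 60

print(f"implied cases per resident: {implied_cases:.0f}")
print(f"lost operating room time: {lost_hours:.1f} hours")
```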

The extra cost of resident training in the operating room helps justify the expense of simulation training. Simulation training before entering the operating room significantly decreases operation time19, 22-25, so simulation has the potential to decrease the cost of training. To realize these potential cost savings, the simulation must represent the skills to be trained well enough that training on simulators will reliably improve performance.

Developing and Validating Surgical Simulators

Building an effective simulator requires the designer to understand both the simulated task and the underlying skills required for effective task execution. This requires an assessment of the procedure and its constituent tasks. Some surgical tasks are common among different procedures, while others are unique to a particular surgery or situation. Likewise, some skills are applicable to many tasks, while others are task-specific. There may always be skills that need to be learned in the operating room, but many skills can and should be acquired elsewhere. Often, the initial acquisition of a surgical skill depends on developing the necessary psychomotor skills. Psychomotor skill is the coordination between the physical movement of the trainee and their cognitive processes. Psychomotor skills develop effectively through deliberate practice and structured training.

Kneebone26 proposed the following criteria for critically evaluating a new or existing simulation:

Simulations should allow for sustained, deliberate practice within a safe environment, ensuring that newly acquired skills are consolidated within a defined curriculum that assures regular reinforcement.

Simulations should provide access to expert tutors when appropriate, ensuring that such support fades when it is no longer needed.

Simulations should map onto real clinical experience, so that learning supports the experience gained within communities of practice.

Simulation-based learning environments should provide a supportive, motivational, and learner-centered milieu that is conducive to learning.

Physical and virtual reality surgical simulators, alone or in tandem, have proven themselves as viable training platforms. Physical simulators range in sophistication from low-fidelity representations of a prescribed task, such as laparoscopic suturing, to very realistic, instrumented mannequins. Some simulators are task trainers. These are designed to teach a solitary task in a procedure through repetition. Other simulators are full procedural trainers. These are designed to replicate the entire scope of a complex scenario, such as a surgical procedure.

Some virtual reality surgical simulations involve haptic force-feedback (Fig. 2)27-29. This is useful when the targeted technical skill includes both tactile force-feedback and eye-hand coordination, such as fluoroscopic wire navigation. When forces do not play an important role, basic eye-hand coordination may be trained without force feedback.

Figure 2. Haptic force-feedback shown in orthopaedic drilling simulation. (Left) Tsai and Tsai27, (middle) Froelich, et al.28, and (right) Vankipuram, et al.29, each used with permission.

The determination of a simulator's psychometric properties (i.e., its reliability and validity) is one of the most important facets in the development of a simulator. Reliability refers to the degree of consistency or reproducibility with which an instrument measures what it is intended to measure. If something cannot be measured reliably, then the question of validity is rendered largely moot. The concept of validity addresses the question of whether the measurements obtained from the simulator vary with the educational construct the simulator is intended to measure. The most common categories of validity found in the medical simulation realm include face, content, construct, concurrent, and predictive validity. Each type of validity should ideally be defined within the context of the particular assessment30.

Ultimately, a new simulator should pass multiple validity tests in order to be considered for skills training and competency assessment31. Face validity addresses the question, “To what extent does the platform simulate what it is supposed to simulate, e.g., the surgical procedure?” It refers to the subjective opinion about a test - its appropriateness for the intended use within the target population. Face validity is important for a test's practical utility and success of implementation32, particularly with respect to whether or not trainees will accept the simulation as a valid educational tool. Face validity is usually assessed with expert responses to questionnaires or surveys.

Content validity addresses the question, “Does the simulation measure the relevant dimensions of the task under study?” Content validity is often assessed with a thorough search of the literature and by interviewing expert surgeons. Face and content validity are subjective and arguably not as rigorous as some other assessments of a simulator's validity.

Construct validity defines the extent to which the simulator measures the specific trait or traits that it was designed to measure. Many construct validity studies demonstrate that different learner skill levels are associated with variations in the measurements made by the simulator. A significant difference between expert and novice scores indicates that the simulator captures quantifiable aspects of surgical skill.
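An expert-versus-novice comparison of this kind can be sketched in a few lines. The example below is a minimal illustration, not the method of any cited study: the scores are hypothetical, and the choice of Cohen's d and Welch's t statistic as summary measures is an assumption made for this example.

```python
from math import sqrt
from statistics import mean, variance

def cohens_d(a, b):
    """Standardized mean difference using the pooled sample standard deviation."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    return (mean(a) - mean(b)) / sqrt(pooled_var)

def welch_t(a, b):
    """Welch's t statistic for two samples with possibly unequal variances."""
    return (mean(a) - mean(b)) / sqrt(variance(a) / len(a) + variance(b) / len(b))

# Hypothetical simulator scores (higher is better); not data from any study.
expert_scores = [88, 92, 85, 90, 95]
novice_scores = [60, 72, 65, 58, 70]

d = cohens_d(expert_scores, novice_scores)
t = welch_t(expert_scores, novice_scores)
print(f"Cohen's d = {d:.2f}, Welch t = {t:.2f}")
```

A large, statistically reliable separation between the groups supports construct validity; it does not by itself show that simulator training transfers to the operating room.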

Concurrent validity measures the extent to which the simulator agrees with an existing performance measure of a surgical task or procedure. The concurrent validity of surgical simulators is often assessed by comparing simulator scores with the Objective Structured Assessment of Technical Skill (OSATS) or against another, previously validated, simulator. It is frequently difficult and often impossible to assess transfer of learning when attempting to validate educational simulation technology16. The most convincing evidence is provided when researchers can correlate performance on the simulator with real world performance. With appropriate estimates of the reliability of both the simulation measure and the measure obtained from actual practice, a corrected correlation can provide a ‘true score’ correlation between the constructs measured by the two assessments.
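A ‘true score’ correlation of this kind is commonly estimated with Spearman's correction for attenuation, which divides the observed correlation by the square root of the product of the two reliabilities. The sketch below assumes that this is the correction intended here; the function name and the numerical values are hypothetical.

```python
from math import sqrt

def disattenuated_correlation(r_xy: float, rel_x: float, rel_y: float) -> float:
    """Spearman's correction for attenuation: r_xy / sqrt(rel_x * rel_y)."""
    if not (0 < rel_x <= 1 and 0 < rel_y <= 1):
        raise ValueError("reliabilities must lie in the interval (0, 1]")
    return r_xy / sqrt(rel_x * rel_y)

# Hypothetical values, chosen only to illustrate the calculation.
observed_r = 0.45             # simulator score vs. operating room rating
simulator_reliability = 0.80  # e.g., test-retest reliability of the simulator score
clinical_reliability = 0.70   # e.g., inter-rater reliability of the clinical rating

corrected_r = disattenuated_correlation(
    observed_r, simulator_reliability, clinical_reliability
)
print(f"observed r = {observed_r:.2f}, corrected r = {corrected_r:.2f}")
```

Because unreliable measurement can only weaken an observed correlation, the corrected value is never smaller in magnitude than the observed one.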

Validity is not a binary determination, but reflects a gradual judgment, depending on the purpose of the measurement and the proper interpretation of the results. A single instrument may be used for many different purposes, and resulting scores may be more valid for one purpose than for another33. This means that validity statements based on the evaluation of one task can be, and probably will be, different than those based on another task. Indeed, it is fundamental for creating a useful simulator-based teaching environment to recognize that a surgical procedure has to be divided into a series of steps that can be trained and measured separately34.

A validated simulator can address the lack of opportunities for deliberate practice in surgical training. It will also provide residents with immediate skill feedback, an indispensable component of deliberate practice and the development of expertise. Residents need carefully devised educational variations providing incremental challenges to improve their surgical skills35. The apprenticeship model struggles to control the real world challenges faced by the residents, and feedback requires time and attention from busy staff surgeons. Simulators can provide real-time feedback and instruction outside the operating room36.

Simulator Fidelity, Complexity, and Transfer of Skill to the Operating Room

Training simulators recreate aspects of reality. The degree of verisimilitude affects the cost, time, complexity, and technology required in development. The current trend in simulation is to strive towards building the highest fidelity simulator possible. Unfortunately, in trying to mimic minute details, these ultra-realistic simulators occasionally include irrelevant tasks.

The complement to physical fidelity is psychological fidelity. Caird defines psychological fidelity as “the degree that a simulation produces the sensory and cognitive processes within the trainee as they might occur in operational theaters”37. It is important to note that physical fidelity and psychological fidelity are not mutually exclusive. In some cases, they have synergistic effects. In many other cases, higher physical fidelity simulators produce little to no quantifiable benefit in training over lower physical fidelity simulators38-39.

Skill transfer from the simulator to real scenarios should be the pinnacle of all training simulator development goals. Skill transfer is much more closely tied to psychological fidelity than to physical fidelity. A simulator's psychological fidelity with a task is more difficult to assess than its physical fidelity. The designer must distill the essential task elements that must be supported in the simulated environment. If the correct elements are distilled, training on a simulator will transfer to the real task environment.

Each simulator presumes that some perceptual cues are important and others may be ignored. The designer's insightful selection of the correct cues is tightly coupled to the success of the training transfer to the real environment and to the cost and complexity of the simulator. A simulator in orthopaedics must make simple, explicit assumptions about what is important in the task it represents: the relative position of the hand, tool and bone; the obfuscation of the direct view of the bone; the accurate haptic sensation of the task; and the presentation of the basic bone and tool geometry in the simulated environment.

Simulation in the Training and Assessment of Orthopaedic Surgeons

Orthopaedic surgery has lagged behind other surgical disciplines in developing and incorporating simulation of surgical skills into education and assessment paradigms, particularly in comparison with laparoscopic surgery, a leader in the simulation field40-47. Previous simulation in orthopaedics has mostly emphasized learning anatomy and surgical approaches on cadavers, and placing products supplied by the medical device industry on naked surrogate bone specimens. Models for surgical skills training outside the operating room have been implemented in a few orthopaedic sub-specialties, primarily arthroscopy48 and hand surgery49. Thus, it is difficult to build on previously established simulators that demonstrate which perceptual cues are essential to orthopaedic surgery.

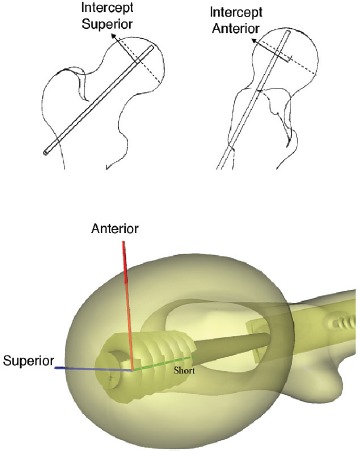

A 2010 review of virtual reality simulators for orthopaedic surgery50 found only 23 articles that dealt with specific simulators, compared with 246 citations for laparoscopic simulators. Most of the other recent contributions to orthopaedic simulation technology have emphasized haptic feedback. Several such systems have been built to simulate drilling27, 29, and to simulate the forces in amputation surgery51. Other simulators emphasizing 3D graphics over haptics have enjoyed some success. Blyth and co-workers have developed a highly idealized virtual reality training system (Fig. 3) for basic hip fracture fixation, including a surgical simulator and an assessment component52-53.

Figure 3. (top) Schematic of images seen by trainee during procedure showing intercept with center of femoral head. (bottom) The implant as viewed after the procedure highlighting 3D placement of screw. From Blyth, Stott and Anderson52, used with permission.

Unfortunately, few of these orthopaedic simulators have yet been rigorously evaluated. In a recent literature review54, Schaefer et al. identified three critical evaluative aspects of simulation in healthcare education: (1) the validity and reliability of the simulator; (2) the quality of the performance measures used to assess learning outcomes; and (3) the level of inquiry or strength of educational study design to support the evolution of theoretical perspectives. Of 4189 simulation articles reviewed, only 221 qualified as having adequate study design, only 51 meaningfully evaluated their simulators, and only 39 documented significant translational outcomes. Less than 30% examined their simulator's validity, typically assessing only face and content validity, the two most subjective validation methods.
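Expressed as shares of the 4189 articles reviewed, these counts are small. The sketch below simply converts the counts quoted above into percentages; the dictionary labels are shorthand introduced for this example.

```python
TOTAL_REVIEWED = 4189

# Counts quoted in the text above.
counts = {
    "adequate study design": 221,
    "meaningfully evaluated simulator": 51,
    "significant translational outcomes": 39,
}

# Share of all reviewed articles, in percent.
shares = {label: n / TOTAL_REVIEWED * 100 for label, n in counts.items()}

for label, pct in shares.items():
    print(f"{label}: {pct:.1f}% of reviewed articles")
```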

Because simulation is increasingly being contemplated for use in high-stakes evaluation-related licensure and certification, and for identifying mechanisms to mitigate error and decrease mortality and morbidity, it is essential that data supporting the validity and reliability of simulators be vigorously pursued. When a performance assessment is conducted with a simulator, the validity and reliability of the assessment can be evaluated independently from that of the training. This allows the scoring procedure to be separated from the simulator designs, and therefore increases the generality of the work. Performance assessment validity evaluations are made with respect to the performance characteristics of the trainee, rather than with respect to the simulator. For example, simulator researchers typically assess the construct validity of a performance assessment by determining whether or not the assessment measures a performance difference between experts and novices.

Program directors and orthopaedic faculty assess orthopaedic residents using methods mandated by the Accreditation Council for Graduate Medical Education (ACGME). For procedural skills the ACGME requires residents to document surgical experience using a web-based case log system. The Residency Review Committee in orthopaedic surgery uses these case logs to assess the overall experience of residents in terms of the numbers of procedures performed. However, case logs consist only of resident self-reported participation in cases and do not specifically assess the demonstration of actual skill or competency.

Orthopaedic residents are but one group whose competency in this area needs to be assessed. The most important and widely accepted method to assess competence of orthopaedic surgeons in practice is through Board Certification. The American Board of Orthopaedic Surgery (ABOS) mandates recertification every ten years, and it has recently adopted an evolving program of more continuous maintenance of certification. This ongoing process of board certification assesses orthopaedists' competency in medical knowledge, professionalism, communication, and judgment. However, the assessment of a candidate's surgical skill is indirect, relying on radiographic review and case discussion rather than a direct measure of procedural skill.

High-fidelity simulations may be too expensive for implementation within orthopaedic surgery certification, but many of the foundational skills could be economically implemented as part of an objective structured clinical examination55. For example, once the relationship between foundational skills and performance in high-fidelity environments is established, it becomes possible to test such skills as part of competency-based assessment. Moreover, to the degree that there is an aptitude aspect to skills, skills-testing may also be an important tool for resident applicant selection.

Education in general surgery has incorporated surgical simulation into a robust curriculum that has been widely adopted by general surgery Graduate Medical Education programs55-64. Residents progress through three phases that are divided into a series of modules. The Residency Review Committee in general surgery highly recommends that this curriculum be adopted by general surgery training programs. The American Board of Surgery requires passing the Fundamentals of Laparoscopic Surgery program65-66 (Fig. 4) as a basic skills competency for its certification and recertification programs in general surgery. There is no fundamental barrier preventing orthopaedics from adopting similar practices, except for the lack of validated surgical simulation training and assessment approaches.

Figure 4. An example of a commercial product featuring a Fundamentals of Laparoscopic Surgery training simulator. Figure courtesy of VTI Medical™.

Recommendations for Future Research

Simulation has a great potential to facilitate faster and more effective learning in orthopaedic surgery. In order to capture the benefits of this training, it is important that researchers and practitioners organize their efforts to gain the maximum advantage in the shortest time with the least cost. This summary of research reveals a number of opportunities for growth in the future of orthopaedic surgery simulation.

Residents need opportunities for regular, deliberate practice. Simulators should be designed so that residents can exercise their surgical skills at regular intervals, with clear feedback. Some skills may require dozens of repetitions while others require hundreds, but one or two repetitions with cadaver parts or plastic models are likely insufficient for complex skills requiring the development of specific psychomotor behaviors.

Training opportunities for many surgical skills should be repeated in simulation until many of the underlying skills are at a level of automaticity for the residents. In fact, if an effective and valid simulator can be developed for a surgical skill at a reasonable cost, that skill should be simulated.

Orthopaedic surgery simulation should extend beyond the needs of first-year residents to later years of training. The performance of general practitioners in the area of orthopaedic surgery is likely to plateau or even diminish over time without opportunities for deliberate practice67-68. Simulators should be developed to facilitate the assessment and improvement of their skills.

Research should provide a greater emphasis on validity studies that go beyond establishing simple face validity. They should specify and, ideally, benchmark the performance of simulator elements designed to replicate critical tasks and they should design performance measures that can be tied back to the task model.

Research is also needed to further understand what specific skills are needed for orthopaedic surgery, how they are currently trained, and how effective the current approaches are. Work on new simulators should specifically describe the training and assessment procedure that is used with the simulator.

Finally, we would caution researchers to focus their simulator development on key task elements and psychological fidelity rather than focusing on the realism of the simulation.

References

- 1.Flood AB, Scott WR, Ewy W. Does practice make perfect? Part I: The relation between hospital volume and outcomes for selected diagnostic categories. Med Care. 1984;22(2):98–114. [PubMed] [Google Scholar]

- 2.Flood AB, Scott WR, Ewy W. Does practice make perfect? Part II: The relation between volume and and outcomes and other hospital characteristics. Med Care. 1984;22(2):115–125. [PubMed] [Google Scholar]

- 3.Luft HS. The relation between surgical volume and mortality: An exploration of causal factors and alternative models. Med Care. 1980;18(9):940–959. doi: 10.1097/00005650-198009000-00006. [DOI] [PubMed] [Google Scholar]

- 4.Luft HS, Bunker JP, Enthoven AC. Should operations be regionalized? The empirical relation between surgical volume and mortality. New Eng J Med. 1979;301(25):1364–1369. doi: 10.1056/NEJM197912203012503. [DOI] [PubMed] [Google Scholar]

- 5.Luft HS, Hunt SS, Maerki SC. The volume- outcome relationship: practice-makes-perfect or selective-referral patterns? Health Serv Res. 1987;22(2):157–182. [PMC free article] [PubMed] [Google Scholar]

- 6.Taylor HD, Dennis DA, Crane HS. Relationship between mortality rates and hospital patient volume for Medicare patients undergoing major orthopaedic surgery of the hip, knee, spine, and femur. J Arthroplasty. 1997;12(3):235–242. doi: 10.1016/s0883-5403(97)90018-8. [DOI] [PubMed] [Google Scholar]

- 7.Birkmeyer JD, et al. Hospital volume and surgical mortality in the United States. New Eng J Med. 2002;346(15):1128–1137. doi: 10.1056/NEJMsa012337. [DOI] [PubMed] [Google Scholar]

- 8.Trowbridge MH, Cason H. An experimental study of Thorndike's theory of learning. J Gen Psychol. 1932;7(2):245–260. [Google Scholar]

- 9.Ericsson KA. Deliberate practice and the acquisition and maintenance of expert performance in medicine and related domains. Acad Med. 2004;79(10 Suppl):S70–81. doi: 10.1097/00001888-200410001-00022. [DOI] [PubMed] [Google Scholar]

- 10.Ericsson KA, Krampe RT, Tesch-Römer C. The role of deliberate practice in the acquisition of expert performance. Psychol Rev. 1993;100(3):363–406. [Google Scholar]

- 11.Begg CB, et al. Variations in morbidity after radical prostatectomy. New Eng J Med. 2002;346(15):1138–1144. doi: 10.1056/NEJMsa011788. [DOI] [PubMed] [Google Scholar]

- 12.Vickers AJ, et al. The surgical learning curve for prostate cancer control after radical prostatectomy. J Natl Cancer I. 2007;99(15):1171–1177. doi: 10.1093/jnci/djm060. [DOI] [PubMed] [Google Scholar]

- 13.Bjorgul K, Novicoff WM, Saleh KJ. Learning curves in hip fracture surgery. Int Orthop. 2011;35(1):113–119. doi: 10.1007/s00264-010-0950-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Moulton CAE, et al. Teaching surgical skills: What kind of practice makes perfect?: A randomized, controlled trial. Ann Surg. 2006;244(3):400–409. doi: 10.1097/01.sla.0000234808.85789.6a. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Dempster FN. Spacing effects and their implications for theory and practice. Educ Psychol Rev. 1989;1(4):309–330. [Google Scholar]

- 16.McGaghie WC, et al. Does simulation-based medical education with deliberate practice yield better results than traditional clinical education? A meta-analytic comparative review of the evidence. Acad Med. 2011;86(6):706–711. doi: 10.1097/ACM.0b013e318217e119. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Palter VN, et al. Ex vivo technical skills training transfers to the operating room and enhances cognitive learning: A randomized controlled trial. Ann Surg. 2011;253(5):886–889. doi: 10.1097/SLA.0b013e31821263ec. [DOI] [PubMed] [Google Scholar]

- 18.Ost D, et al. Assessment of a bronchoscopy simulator. Am J Respir Crit Care Med. 2001;164(12):2248–2255. doi: 10.1164/ajrccm.164.12.2102087. [DOI] [PubMed] [Google Scholar]

- 19.Seymour NE, et al. Virtual reality training improves operating room performance: Results of a randomized, double-blinded study. Ann Surg. 2002;236(4):458–463. doi: 10.1097/00000658-200210000-00008. discussion 463-464. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Bridges M, Diamond DL. “The financial impact of teaching surgical residents in the operating room,”. The American Journal of Surgery. 1999;177:28–32. doi: 10.1016/s0002-9610(98)00289-x. vol. pp. [DOI] [PubMed] [Google Scholar]

- 21.Farnworth LR, et al. A comparison of operative times in arthroscopic ACL reconstruction between orthopaedic faculty and residents: the financial impact of orthopaedic surgical training in the operating room. Iowa Orthop J. 2001;21:31–35.

- 22.Andreatta PB, et al. Laparoscopic skills are improved with LapMentor™ training: Results of a randomized, double-blinded study. Ann Surg. 2006;243(6):854–863. doi: 10.1097/01.sla.0000219641.79092.e5.

- 23.Fried G, et al. Comparison of laparoscopic performance in vivo with performance measured in a laparoscopic simulator. Surg Endosc. 1999;13(11):1077–1081. doi: 10.1007/s004649901176.

- 24.Rambani R, et al. Computer-assisted orthopedic training system for fracture fixation. J Surg Educ. 2013;70(3):304–308. doi: 10.1016/j.jsurg.2012.11.009.

- 25.Torkington J, et al. Skill transfer from virtual reality to a real laparoscopic task. Surg Endosc. 2001;15(10):1076–1079. doi: 10.1007/s004640000233.

- 26.Kneebone R. Evaluating clinical simulations for learning procedural skills: A theory-based approach. Acad Med. 2005;80(6):549–553. doi: 10.1097/00001888-200506000-00006.

- 27.Tsai MD, Hsieh MS, Tsai CH. Bone drilling haptic interaction for orthopedic surgical simulator. Comput Biol Med. 2007;37(12):1709–1718. doi: 10.1016/j.compbiomed.2007.04.006.

- 28.Froelich JM, et al. Surgical simulators and hip fractures: A role in residency training? J Surg Educ. 2011;68(4):298–302. doi: 10.1016/j.jsurg.2011.02.011.

- 29.Vankipuram M, et al. A virtual reality simulator for orthopedic basic skills: A design and validation study. J Biomed Inform. 2010;43(5):661–668. doi: 10.1016/j.jbi.2010.05.016.

- 30.Schijven M, Jakimowicz J. Face-, expert, and referent validity of the Xitact LS500 laparoscopy simulator. Surg Endosc. 2002;16(12):1764–1770. doi: 10.1007/s00464-001-9229-9.

- 31.Standards for educational and psychological testing. American Educational Research Association, American Psychological Association, National Council on Measurement in Education, Joint Committee on Standards for Educational Psychological Testing. 1999.

- 32.Karras DJ. Statistical methodology: II. Reliability and validity assessment in study design, Part B. Acad Emerg Med. 1997;4(2):144–147. doi: 10.1111/j.1553-2712.1997.tb03723.x.

- 33.Gaberson KB. Measurement reliability and validity. AORN J. 1997;66(6):1092–1094. doi: 10.1016/s0001-2092(06)62551-9.

- 34.Grantcharov TP, et al. Assessment of technical surgical skills. Eur J Surg. 2002;168(3):139–144. doi: 10.1080/110241502320127739.

- 35.Ericsson KA. An expert performance perspective of research on medical expertise: The study of clinical performance. Med Educ. 2007;41(12):1124–1130. doi: 10.1111/j.1365-2923.2007.02946.x.

- 36.Karam MD, Westerlind B, Anderson DD, Marsh JL. Development of an orthopaedic surgical skills curriculum for post-graduate year one resident learners - The University of Iowa experience. Iowa Orthop J. 2013;33:178–184.

- 37.Caird J. Persistent issues in the application of virtual environment systems to training. Proceedings to the Third Annual Symposium on Human Interaction with Complex Systems. 1996 IEEE.

- 38.Lintern G, et al. Transfer of landing skills in beginning flight training. Hum Factors. 1990;32(3):319–327.

- 39.Lintern G, Roscoe SN, Sivier JE. Display principles, control dynamics, and environmental factors in pilot training and transfer. Hum Factors. 1990;32(3):299–317.

- 40.Wilson M, Middlebrook A, Sutton C, Stone R, McCloy R. MIST VR: a virtual reality trainer for laparoscopic surgery assesses performance. Annals of the Royal College of Surgeons of England. 1997;79:403.

- 41.Seymour NE, Gallagher AG, Roman SA, O'Brien MK, Bansal VK, Andersen DK, et al. Virtual reality training improves operating room performance: results of a randomized, double-blinded study. Annals of Surgery. 2002;236:458. doi: 10.1097/00000658-200210000-00008.

- 42.Kothari SN, Kaplan BJ, DeMaria EJ, Broderick TJ, Merrell RC. Training in laparoscopic suturing skills using a new computer-based virtual reality simulator (MIST-VR) provides results comparable to those with an established pelvic trainer system. Journal of Laparoendoscopic & Advanced Surgical Techniques. 2002;12:167–173. doi: 10.1089/10926420260188056.

- 43.Gallagher AG, Richie K, McClure N, McGuigan J. Objective psychomotor skills assessment of experienced, junior, and novice laparoscopists with virtual reality. World Journal of Surgery. 2001;25:1478–1483. doi: 10.1007/s00268-001-0133-1.

- 44.Torkington J, Smith S, Rees B, Darzi A. Skill transfer from virtual reality to a real laparoscopic task. Surgical Endoscopy. 2001;15:1076–1079. doi: 10.1007/s004640000233.

- 45.Chaudhry A, Sutton C, Wood J, Stone R, McCloy R. Learning rate for laparoscopic surgical skills on MIST VR, a virtual reality simulator: quality of human-computer interface. Annals of the Royal College of Surgeons of England. 1999;81:281.

- 46.Derossis AM, Fried GM, Abrahamowicz M, Sigman HH, Barkun JS, Meakins JL. Development of a model for training and evaluation of laparoscopic skills. The American Journal of Surgery. 1998;175:482–487. doi: 10.1016/s0002-9610(98)00080-4.

- 47.Vassiliou M, Ghitulescu G, Feldman L, Stanbridge D, Leffondre K, Sigman H, et al. The MISTELS program to measure technical skill in laparoscopic surgery. Surgical Endoscopy and Other Interventional Techniques. 2006;20:744–747. doi: 10.1007/s00464-005-3008-y.

- 48.Insel A, et al. The development of an objective model to assess arthroscopic performance. J Bone Joint Surg Am. 2009;91(9):2287–2295. doi: 10.2106/JBJS.H.01762.

- 49.Van Heest A, et al. Assessment of technical skills of orthopaedic surgery residents performing open carpal tunnel release surgery. J Bone Joint Surg Am. 2009;91(12):2811–2817. doi: 10.2106/JBJS.I.00024.

- 50.Mabrey JD, Reinig KD, Cannon WD. Virtual reality in orthopaedics: Is it a reality? Clin Orthop Relat Res. 2010;468(10):2586–2591. doi: 10.1007/s11999-010-1426-1.

- 51.Hsieh MS, Tsai MD, Yeh YD. An amputation simulator with bone sawing haptic interaction. Biomed Eng-App Bas C. 2006;18(5):229–236.

- 52.Blyth P, Stott N, Anderson I. A simulation-based training system for hip fracture fixation for use within the hospital environment. Injury. 2007;38(10):1197–1203. doi: 10.1016/j.injury.2007.03.031.

- 53.Blyth P, Stott N, Anderson I. Virtual reality assessment of technical skill using the Bonedoc DHS simulator. Injury. 2008;39(10):1127–1133. doi: 10.1016/j.injury.2008.02.006.

- 54.Schaefer III JJ, et al. Literature review: Instructional design and pedagogy science in healthcare simulation. Simul Healthc. 2011;6(7):S30–S41. doi: 10.1097/SIH.0b013e31822237b4.

- 55.Phillips D, Zuckerman JD, Strauss EJ, Egol KA. Objective structured clinical examinations: A guide to development and implementation in orthopaedic residency. J Am Acad Orthop Surg. 2013;21(10):592–600. doi: 10.5435/JAAOS-21-10-592.

- 56.Boehler ML, et al. A theory-based curriculum for enhancing surgical skillfulness. J Am Coll Surg. 2007;205(3):492–497. doi: 10.1016/j.jamcollsurg.2007.04.018.

- 57.DaRosa D, et al. Impact of a structured skills laboratory curriculum on surgery residents' intraoperative decision-making and technical skills. Acad Med. 2008;83(10 Suppl):S68–S71. doi: 10.1097/ACM.0b013e318183cdb1.

- 58.Dunnington GL, Williams RG. Addressing the new competencies for residents' surgical training. Acad Med. 2003;78(1):14–21. doi: 10.1097/00001888-200301000-00005.

- 59.Kapadia MR, et al. Current assessment and future directions of surgical skills laboratories. J Surg Educ. 2007;64(5):260–265. doi: 10.1016/j.jsurg.2007.04.009.

- 60.Kim MJ, et al. Refining the evaluation of operating room performance. J Surg Educ. 2009;66(6):352–356. doi: 10.1016/j.jsurg.2009.09.005.

- 61.Sanfey H, Dunnington G. Verification of proficiency: A prerequisite for clinical experience. Surg Clin N Am. 2010;90(3):559–567. doi: 10.1016/j.suc.2010.02.008.

- 62.Sanfey H, et al. Verification of proficiency in basic skills for postgraduate year 1 residents. Surgery. 2010;148(4):759–766; discussion 766–767. doi: 10.1016/j.surg.2010.07.018.

- 63.Sanfey HA, Dunnington GL. Basic surgical skills testing for junior residents: Current views of general surgery program directors. J Am Coll Surg. 2011;212(3):406–412. doi: 10.1016/j.jamcollsurg.2010.12.012.

- 64.Scott DJ, et al. The changing face of surgical education: Simulation as the new paradigm. J Surg Res. 2008;147(2):189–193. doi: 10.1016/j.jss.2008.02.014.

- 65.Vassiliou MC, et al. FLS and FES: Comprehensive models of training and assessment. Surg Clin N Am. 2010;90(3):535–558. doi: 10.1016/j.suc.2010.02.012.

- 66.Xeroulis G, Dubrowski A, Leslie K. Simulation in laparoscopic surgery: A concurrent validity study for FLS. Surg Endosc. 2009;23(1):161–165. doi: 10.1007/s00464-008-0120-9.

- 67.Ericsson KA. The influence of experience and deliberate practice on the development of superior expert performance. In: The Cambridge Handbook of Expertise and Expert Performance. 2006:683–703.

- 68.Ericsson KA, Krampe RT, Tesch-Römer C. The role of deliberate practice in the acquisition of expert performance. Psychological Review. 1993;100:363.