Abstract

Objective

To evaluate whether accredited hospitals maintain quality and patient safety standards over the accreditation cycle by testing a life cycle explanation of accreditation on quality measures. Four distinct phases of the accreditation life cycle were defined based on the Joint Commission International process. Predictions concerning the time series trend of compliance during each phase were specified and tested.

Design

Interrupted time series (ITS) regression analysis of 23 quality and accreditation compliance measures.

Setting

A 150-bed multispecialty hospital in Abu Dhabi, UAE.

Participants

Each month (over 48 months) a simple random sample of 24% of patient records was audited, resulting in 276 000 observations collected from 12 000 patient records, drawn from a population of 50 000.

Intervention(s)

The impact of hospital accreditation on the 23 quality measures was observed for 48 months: 1 year preaccreditation (2009) and 3 years postaccreditation (2010–2012).

Main outcome measure(s)

The Life Cycle Model was evaluated by aggregating the data for 23 quality measures to produce a composite score (YC) and fitting an ITS regression equation to the unweighted monthly mean of the series.

Results

The four phases of the life cycle are as follows: the initiation phase, the presurvey phase, the postaccreditation slump phase and the stagnation phase. The Life Cycle Model explains 87% of the variation in quality compliance measures (R²=0.87). The ITS model contains three significant variables (β1, β2 and β3; p≤0.001), and the size of the coefficients indicates that the effects of these variables are substantial (β1=2.19; β2=−3.95, 95% CI −6.39 to −1.51; β3=−2.16, 95% CI −2.52 to −1.80).

Conclusions

Although there was a reduction in compliance immediately after the accreditation survey, the lack of subsequent fading in quality performance should be a reassurance to researchers, managers, clinicians and accreditors.

Keywords: AUDIT, HEALTH SERVICES ADMINISTRATION & MANAGEMENT

Strengths and limitations of this study.

The study uses interrupted time series analysis as an alternative to the randomised controlled trial, which is recognised as the gold standard by which effectiveness is measured in clinical disciplines.

This is the first interrupted time series analysis of hospital accreditation.

This is also the first study on hospital accreditation in the UAE.

This is the first study to develop and test the Life Cycle Model on hospital accreditation.

The study is limited to one hospital; more studies are needed to test the validity of this life cycle framework in different national and cultural settings.

The selection and validity of the quality measures were dependent on the accuracy of documentation in the patient record.

Introduction

Hospital accreditation is frequently selected by healthcare leaders as a method to improve quality and is an integral part of healthcare systems in more than 70 countries.1 The growth of hospital accreditation can be attributed in part to the growing public awareness of medical errors and patient safety gaps in healthcare.2 Highlighting the costs of accreditation, Øvretveit and Gustafson3 state that evaluations should be used effectively because they consume time and money that can be utilised in other activities of organisations. As cost containment continues to be a concern in many hospitals, organisations need to evaluate the value of accreditation as a long-term investment.4 However, the literature shows mixed and inconsistent results over the impact and effectiveness of hospital accreditation.5–10 Studies using a perceived benefits approach have argued that hospital accreditation sustains improvements in quality and organisational performance.11–14 Although accreditation is a framework for achieving and sustaining quality, empirical studies that evaluate whether accredited organisations sustain compliance with quality and patient safety standards over the accreditation cycle are lacking. Most studies have used cross-sectional designs and/or comparative static analysis of data at two points in time.8 13 15 16 To draw causal inferences about the direct influence of accreditation on patients’ health outcomes and clinical quality, a dynamic analysis that focuses on the effects of accreditation over time is needed.3 This research directly addresses this issue by adopting a time series framework. In addition, this is the first study to answer the important question of whether accredited organisations maintain quality and patient safety standards over the accreditation cycle by developing and testing a life cycle explanation.

The accreditation life cycle defines the complex stages and dynamics of accreditation as a quality intervention. We shall test the validity of the Life Cycle Model against monthly data, for a series of quality measures recorded by a single hospital over 4 years (between January 2009 and December 2012). This period incorporates an accreditation survey in December 2009.

Joint Commission International (JCI) has published an accreditation preparation strategy that suggests most hospitals will pass through various phases during the process of accreditation.17 Based on the JCI process, we hypothesise four distinct phases of the accreditation cycle and derive predictions concerning the time series trend of compliance during each phase. The predictions are the building blocks of the life cycle framework. We then test the validity of the Life Cycle Model by calibrating interrupted time series regression equations for 23 key quality compliance measures.

The life cycle of accreditation

The initiation phase

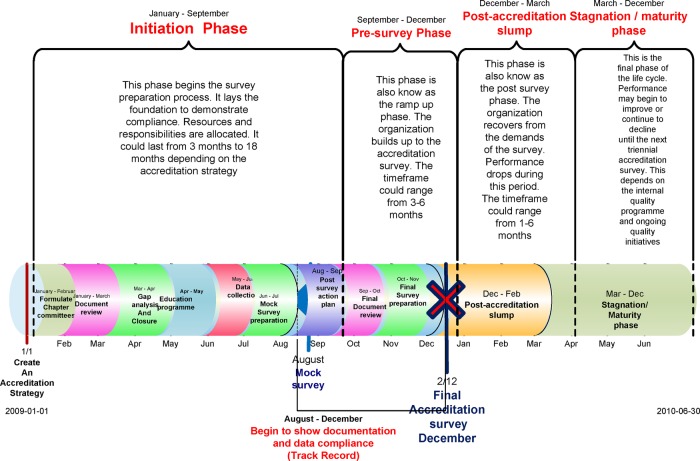

This involves laying the foundation for achieving compliance with the JCI quality standards. We describe two subphases: adoption and revitalisation (figure 1). The adoption subphase is characterised by the implementation of new standards. JCI recommends developing an internal structure, composed of teams and leaders, to facilitate coordination of all the activities needed to prepare for accreditation.17 A steering committee of team leaders coordinates the preparation. As JCI requires a number of mandatory policies and procedures, a document review is initiated. The revitalisation subphase is characterised by further improvement in compliance stimulated by a gap analysis. JCI recommends that a Baseline Assessment/Gap analysis is carried out in order to compare current processes and compliance with the expectations of the standards.17 This identifies the actions necessary to eliminate the gaps between an organisation's current performance and that necessary to achieve accreditation. Additionally, the collection and analysis of baseline quality data are initiated and compared with the requirements of the quality monitoring standards.17 The process includes: (1) analysing compliance with the JCI standards; (2) developing an action plan to address deficiencies; (3) implementation of new processes and data collection targeting compliance to standards; (4) conducting an organisation-wide training programme and (5) allocation of required resources. We predict that the initiation phase as a whole will be characterised by a gradual improvement in the degree of compliance to standards, that is, a positive change in slope. Since it is also a period of change, sporadic improvements in performance may be expected as organisations pilot documents and alter practices.

Figure 1.

The accreditation life cycle: phases and timeline.

The presurvey phase

The presurvey phase occurs within 3–6 months of the accreditation survey (figure 1). It follows a mock survey, recommended by JCI, where the findings lead to a review of existing gaps and the staff work on closing these within the short time frame.17 A marked improvement (ramp up) in compliance is expected to occur during the presurvey phase because the staff are aware of the proximity of the survey and because the organisation invests resources in preparation. Furthermore, JCI accreditation requires submission of a 4-month record of compliance measures prior to the accreditation survey, thus providing a further stimulus to improvement. It is hypothesised, therefore, that the peak level of compliance performance will occur during this phase.

The postaccreditation slump

The quality performance of most hospitals tends to fall back towards preaccreditation levels immediately on receiving accredited status (figure 1). The staff no longer feel the pressure to perform optimally and may focus on activities that were neglected or shelved during the presurvey phase. This phase may be prolonged if there is a lack of leadership, no incentive to improve, competing demands, organisational changes or lack of continuous monitoring of performance. The loss of the quality manager, who is responsible for maintaining quality by measures such as periodic self-audit and continuous education, is potentially serious. If the goal was survey compliance rather than quality improvement, standards may not be embedded in practice and performance will not be sustained. We hypothesise that a sharp drop in levels of compliance will occur immediately following the accreditation survey followed by a negative change in slope over time.

The stagnation/maturation phase

This phase follows the postaccreditation slump and occurs a few months after the accreditation survey. Since the hospital is in compliance with the JCI standards, as validated by the survey, there are no new initiatives to drive further improvements, and this is predicted to lead to stagnation in compliance performance. If there is no ongoing performance management system, a decline may set in, which may last until the next initiation phase in preparation for reaccreditation. Generally, the accreditation process involves periodic (snapshot) rather than continuous assessment, which leads to a reactive rather than forward-looking focus and can be a factor in persistent quality deficiencies.18 During this stagnation phase, we hypothesise that there will be an undulating plateau of compliance characterised by sporadic changes but at an overall level above the preaccreditation values.

Methods

Study population

The study was conducted in a private 150-bed, multispecialty, acute-care hospital in Abu Dhabi, UAE. The annual inpatient census is approximately 15 000. The hospital treats approximately half a million ambulatory care patients per year. Healthcare services are provided to patients of all age groups, nationalities and payment types.

Data

To test the Life Cycle Model, a total of 23 quality measures were recorded each month at the hospital over a 4-year period, including a JCI accreditation survey (table 1). The quality measures were selected by a panel of experts consisting of clinical auditors, doctors, quality and patient safety leaders based on: (1) interpretability, enabling clear conclusions to be drawn on the level of compliance with JCI standards and thus accreditation impact; (2) consistency in terms of high values indicating better quality; (3) direct correlation with a specific JCI standard and relation to an important dimension of quality and (4) applicability, as all measures should apply to all patients in the hospital irrespective of disease condition or specialty. These measures were observed for 48 months: 1 year prior to accreditation (2009) to capture the preparation period, and 3 years postaccreditation (2010, 2011 and 2012) to capture the 3-year accreditation cycle. The monthly time interval was based on the most disaggregated level of the data collection and the organisation's reporting frequency for the quality measures. The principal data source for the measures was a random sample of 12 000 patient records drawn from a population of 50 000 during the study period (January 2009 to December 2012). Slovin's formula was used to calculate the sample size per month based on a 95% CI from an average monthly inpatient census of 1500 patients. Each month during the entire study period, a simple random sample of 24% of patient records was selected from the population of discharged patients and manually audited by physicians. The auditors were unaware of the objectives of the study. The data were abstracted from the patient record 1 month postdischarge, resulting in a total of 276 000 observations.

Measures for which higher values indicate poorer quality were transformed so that higher values consistently indicate better quality; for example, ‘percentage of surgical site infections’ was converted to ‘percentage of infection-free surgeries’. The sources and methods of data collection were the same before and after the intervention, thus eliminating any detection bias. The measures reflect important dimensions of quality, including patient assessment, surgical procedures, anaesthesia and sedation use, medication errors, infection control and patient safety. Furthermore, the measures represent 10 (71.4%) of the 14 chapters in the JCI 3rd Edition Standards manual. Since the 10 chapters have a direct impact on clinical quality, the measures serve as survey tracer indicators of standards compliance (table 1).
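Slovin's formula, as commonly stated, gives a sample size of n = N / (1 + N·e²) for a population of size N and a margin of error e. The sketch below is illustrative only. The 5% margin of error is an assumption chosen to match the 95% level mentioned above, and the function name is hypothetical. It is not claimed to reproduce the monthly sample sizes actually used in the study.

```python
import math


def slovin_sample_size(population: int, margin_of_error: float) -> int:
    """Slovin's formula n = N / (1 + N * e^2), rounded up to a whole record."""
    if population <= 0 or not (0 < margin_of_error < 1):
        raise ValueError("population must be positive and 0 < margin_of_error < 1")
    return math.ceil(population / (1 + population * margin_of_error ** 2))


# 1500 is the average monthly inpatient census reported in the text;
# the 0.05 margin of error is an assumption made for this illustration.
monthly_sample = slovin_sample_size(1500, 0.05)
print(monthly_sample)
```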

Table 1.

Quality measure descriptions for the Al Noor Hospital time series analysis

| Dimension of measurement | Measures | Value |
|---|---|---|
| Patient assessment (JCI chapters: assessment of patients and care of patient) | | |
| Y1 | Initial medical assessment done within 24 h of admission | Percentage |
| Y2 | Initial nursing assessment within 24 h of admission | Percentage |
| Y3 | Percentage of pain assessments completed per month | Percentage |
| Y4 | Percentage of completed pain reassessments per month | Percentage |
| Surgical procedures (JCI chapters: patient and family rights, anaesthesia and surgical care, quality and patient safety) | | |
| Y5 | Completion of the surgical invasive procedure consent | Percentage |
| Y6 | Percentage of operating room (OR) cancellation of elective surgery (transformed) | Percentage |
| Y7 | Unplanned return to OR within 48 h (transformed) | Percentage |
| Medication error use and near-misses (JCI chapter: medication management and use) | | |
| Y8 | Reported medication errors (transformed) | Per 1000 prescriptions |
| Anaesthesia and sedation use (JCI chapter: anaesthesia and surgical care) | | |
| Y9 | Percentage of completed anaesthesia, moderate and deep sedation consents | Percentage |
| Y10 | Percentage of completed modified Aldrete scores (pre, post, discharge) | Percentage |
| Y11 | Percentage of completed pre-anaesthesia assessments | Percentage |
| Y12 | Percentage of completed anaesthesia care plans | Percentage |
| Y13 | Percentage of completed assessments of patients receiving anaesthesia | Percentage |
| Y14 | Effective communication of risks, benefits and alternatives of anaesthesia to patients | Percentage |
| Availability, content and use of patient records (JCI chapter: management of communication and information) | | |
| Y15 | Percentage of typed post-operative report completed within 48 h | Percentage |
| Infection control, surveillance and reporting (JCI chapter: prevention and control of infections) | | |
| Y16 | Hospital acquired methicillin-resistant Staphylococcus aureus rate (transformed) | Per 1000 admissions |
| Y17 | Surgical site infection rate (transformed) | Percentage |
| Reporting of activities as required by law and regulation (JCI chapter: governance, leadership and direction) | | |
| Y18 | Mortality rate (transformed) | Percentage |
| International patient safety goals (JCI chapter: international patient safety goals) | | |
| Y19 | Compliance with surgical site marking | Percentage |
| Y20 | Compliance with the time-out procedure | Percentage |
| Y21 | Screening for patient fall risk | Percentage |
| Y22 | Overall hospital hand hygiene compliance rate | Percentage |
| Y23 | Fall risk assessment and reassessment | Percentage |

Research design

Measuring the effects of policy interventions is difficult since there is no unexposed control group available as policies are normally targeted towards the whole population simultaneously. Interrupted time series analysis is a statistical method for analysing temporally ordered measurements to determine if an intervention (eg, accreditation), or even an experimental manipulation, has produced a significant change in the measurements.19–21 Linear segmented regression analysis is a partly controlled design where the trend before the accreditation intervention is used as a control period. The superiority of this method over a simple before-and-after study is due to the repeated monthly measures of variables while controlling for seasonality, secular trends and changes in the environment.22 Interrupted time series analysis distinguishes the effects of time from those of intervention and is the most powerful, quasi-experimental design to evaluate longitudinal effects of time-limited interventions. The interruption splits the time series into preintervention and postintervention (accreditation) segments so that segmented regression analysis of interrupted time series data permits the researcher to statistically evaluate the impact of accreditation on the 23 quality measures, both immediately and in the long term, and the extent to which factors other than accreditation explain the change.

The set of observations of hospital performance making up the time-series data is conceptualised as the realisation of a process. Each segment of the time series exhibits a level and a trend. A change in level, for example, an increase or decrease in a quality measure after accreditation, constitutes an abrupt intervention effect. Conversely, a change in trend is an increase or decrease in the slope of the segment after accreditation compared with the segment preceding the accreditation. Shifts in level (intercept) or slope, with p<0.01, were defined as statistically significant. Segmented regression models fit a least squares regression line to each segment of the independent variable, time, and thus assume a linear relationship between time and the outcome within each segment.21

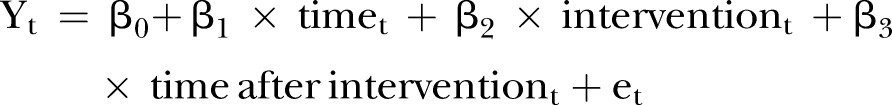

The following linear regression equation is specified to estimate the level and trend in the dependent variable before accreditation and the changes in the level and trend after accreditation:

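$$Y_t = \beta_0 + \beta_1 \times \text{time}_t + \beta_2 \times \text{intervention}_t + \beta_3 \times \text{time after intervention}_t + e_t \qquad (1)$$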

where $Y_t$ is the monthly mean of the outcome variable, for example, the mean number of physicians complying with site marking per month; $\text{time}_t$ indicates time in months at time t, from the start of the observation period to the last time point in the series; intervention is a dummy variable for time t taking the value 0 before the intervention and 1 after the intervention, which was implemented at month 12 in the series. Time after intervention is a continuous variable recording the number of months after the intervention at time t, coded 0 before the intervention (accreditation) and (time −12) after the intervention. In this model β0 is the baseline level of the outcome at the beginning of the series; β1 the slope prior to accreditation, that is, the baseline trend; β2 the change in level immediately after accreditation; β3 the change in the slope from preaccreditation to postaccreditation; and $e_t$ the random error term.

When equation 1 is used to estimate level and trend changes associated with an intervention, such as accreditation, the baseline level and trend are controlled for, which is a major strength of segmented regression analysis.
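As a minimal sketch of how equation 1 can be set up and fitted by ordinary least squares, the example below builds the four regressors and recovers the coefficients from a synthetic series. It assumes that months are numbered 1 to 48, that the survey falls in month 12, and that the intervention dummy switches to 1 from month 13 onward. The function names, the synthetic coefficients and the noise level are all hypothetical and are not taken from the study data or from the software used by the authors.

```python
import numpy as np


def its_design_matrix(n_months: int, intervention_month: int) -> np.ndarray:
    """Columns: intercept, time, intervention dummy, time after intervention."""
    time = np.arange(1, n_months + 1)
    after = time > intervention_month
    intervention = after.astype(float)                           # 0 before, 1 after
    time_after = np.where(after, time - intervention_month, 0.0)  # months since survey
    return np.column_stack([np.ones(n_months), time, intervention, time_after])


def fit_its(y, intervention_month: int) -> np.ndarray:
    """Ordinary least squares estimates of (beta0, beta1, beta2, beta3)."""
    y = np.asarray(y, dtype=float)
    X = its_design_matrix(len(y), intervention_month)
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta


# Synthetic illustration only: these coefficients are made up, not study results.
rng = np.random.default_rng(0)
X = its_design_matrix(48, 12)
true_beta = np.array([75.0, 2.0, -4.0, -2.0])
y = X @ true_beta + rng.normal(0.0, 0.5, size=48)

beta_hat = fit_its(y, intervention_month=12)
print(dict(zip(["beta0", "beta1", "beta2", "beta3"], beta_hat.round(2))))
```

This plain least squares fit does not correct for autocorrelation or non-stationarity, which the statistical analysis handles separately.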

Statistical analysis

First, a plot of the observations against time was constructed to reveal important features of the data such as trend, seasonality, outliers, turning points and/or sudden discontinuities, which are vital in analysing the data and calibrating a model. Second, segmented regression models were fitted using ordinary least squares regression analysis. The results of segmented regression modelling were reported as level and trend changes. Third, the Durbin-Watson statistic was used to test for the presence of autocorrelation. Two distinct types of autocorrelation were considered: (1) an autoregressive process and (2) a moving average process. If the statistic was significant, the model was adjusted by estimating the autocorrelation parameter and including it in the segmented regression model. If no autocorrelation was present, the intervention model was deemed appropriate for the analysis. Fourth, the Dickey-Fuller statistic was used to test for stationarity and seasonality. If the series displayed seasonality or some other non-stationary pattern, the series was differenced from one period to the next and the differenced series was analysed. Sometimes, a series may need to be differenced more than once or at lags greater than one period. Since seasonality induces autoregressive and moving average processes, a seasonal component was detected and included in the time series analysis using ARIMA, ARMA and dynamic regression. Fifth, the impact of external or internal events was reviewed. When assessing the impact of an intervention on a time series, it is imperative that any observed changes in a series can be attributed to the effect of that intervention only and not to other interventions or events that affected the series at the same time. There were no major organisational changes in the hospital structure or management during the period of study. Furthermore, there was no monitoring by JCI during the 3 years after the first survey, including self-reporting of quality measures or additional organisation-wide site visits. Therefore, the data were not influenced by secular changes and were affected only by the intervention during the study period. Finally, goodness-of-fit tests were undertaken using F-statistics to test for significance of the overall model. Parameter estimation was computed to identify significant individual regressors in the model. Analysis was conducted using EViews 7 and SAS V.9.3.

Next, in order to test whether the accreditation process exhibits the life cycle effect, the following statistical predictions were specified for the 23 measures, which are consistent with the hypotheses previously formulated concerning levels of compliance during the four phases of the Life Cycle Model:

The Initiation Phase is the baseline level of the outcome at the beginning of the series.

The measures should exhibit a positive change in slope in the preaccreditation period, the baseline trend. The peak level of compliance should occur during the 3 months prior to the accreditation survey (the Pre-Survey Phase).

The measures should record a negative change in level post the accreditation survey (the Post-Accreditation Slump).

The measures should exhibit a negative change of slope post the accreditation survey (the Stagnation Phase).

The ultimate confirmatory test of the proposed Life Cycle model is to aggregate the data for all 23 quality compliance measures to produce a composite score (YC) and to fit an interrupted time series regression equation to the unweighted mean monthly value of the series.
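The composite score described above is an unweighted mean across the measures for each month. A minimal sketch of that aggregation follows, assuming the data are arranged with one row per month and one column per measure. The function name and the small example array are hypothetical and are not study data.

```python
import numpy as np


def composite_score(measures) -> np.ndarray:
    """Unweighted mean across measures for each month (rows = months)."""
    measures = np.asarray(measures, dtype=float)
    if measures.ndim != 2:
        raise ValueError("expected a 2-D array: months x measures")
    return measures.mean(axis=1)


# Hypothetical values for 3 months and 2 measures, for illustration only.
example = np.array([
    [90.0, 100.0],
    [80.0, 90.0],
    [85.0, 95.0],
])
print(composite_score(example))
```

In the study the array would have 48 rows and 23 columns, and the resulting monthly series would be the outcome in equation 1.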

Results

Table 2 outlines the interrupted time series equations for the 23 quality compliance measures, together with the diagnostic test results for autocorrelation and seasonality/stationarity. First, in the case of 19 of the 23 measures, the β1 coefficient (the slope prior to accreditation) is positive, as hypothesised, and in 10 measures the coefficient is significant. Second, for 14 of the 23 equations, the β2 coefficient (the change in level following accreditation) is negative, as postulated, and for seven measures the parameter is significant (table 2). Third, for 20 of the 23 time series models, the β3 coefficient (the change in slope postaccreditation) is negative, as predicted, and 11 of the coefficients are significant. Several of the interrupted time series equations, as indicated in table 2, display autocorrelation, in which cases an autoregressive (AR) or moving average (MA) variable was included to correct for it, while Y10 and Y20 displayed seasonality and were adjusted for non-stationarity using differencing.
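The Durbin-Watson values reported in table 2 summarise first-order autocorrelation in the regression residuals. The statistic lies between 0 and 4, with values near 2 indicating no first-order autocorrelation. The sketch below computes it from its standard definition and shows first differencing, the adjustment applied to non-stationary series. The function names and toy inputs are hypothetical, and this is not the routine used in EViews or SAS.

```python
import numpy as np


def durbin_watson(residuals) -> float:
    """Sum of squared successive differences divided by the sum of squared residuals."""
    e = np.asarray(residuals, dtype=float)
    denom = np.sum(e ** 2)
    if denom == 0:
        raise ValueError("residuals are all zero; the statistic is undefined")
    return float(np.sum(np.diff(e) ** 2) / denom)


def first_difference(series) -> np.ndarray:
    """Difference of the series from one period to the next (one element shorter)."""
    return np.diff(np.asarray(series, dtype=float))


# Toy residuals: strict alternation gives a value well above 2
# (negative autocorrelation); identical residuals give 0.
print(durbin_watson([1.0, -1.0, 1.0, -1.0]))
print(durbin_watson([1.0, 1.0, 1.0, 1.0]))
print(first_difference([1.0, 3.0, 6.0]))
```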

Table 2.

Interrupted time series models for the 23 quality measures

| Model | Intercept: value | Intercept: p value | Time (β1): value | Time (β1): p value | Intervention (β2), change in level: coefficient (95% CI) | Intervention (β2): p value | Time after intervention (β3), change in slope: coefficient (95% CI) | Time after intervention (β3): p value | R² | Autocorrelation check, Durbin-Watson: D value before (D value after) | Test for seasonality/stationarity (Dickey-Fuller unit root test): p value |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Model 2. Y1 with AR term | 78.60 | 0.00** | 1.19 | 0.35 | −4.54 (−16.33 to 7.25) | 0.44 | −0.99 (−3.63 to 1.65) | 0.45 | 0.38 | 1.00 (1.92) | 0.03‡ |
| Model 1. Y2 | 96.17 | 0.00** | 0.13 | 0.53 | 1.24 (−1.63 to 4.11) | 0.38 | −0.18 (−0.60 to 0.24) | 0.39 | 0.09 | 1.46† | 0.00‡ |
| Model 2. Y3 with AR (1) | 94.56 | 0.00** | 0.16 | 0.85 | −4.00 (−12.10 to 4.10) | 0.33 | −0.02 (−1.82 to 1.77) | 0.98 | 0.34 | 1.05 (2.22) | 0.04‡ |
| Model 1. Y4 | 32.56 | 0.00** | 7.02 | 0.00* | −13.91 (−32.37 to 4.56) | 0.14 | −7.28 (−10.00 to −4.56) | 0.00** | 0.48 | 1.72† | 0.00‡ |
| Model 2. Y5 with MA (1) | 87.91 | 0.00** | 1.21 | 0.00* | −2.70 (−4.76 to −0.63) | 0.01* | −1.18 (−1.72 to −0.64) | 0.01* | 0.96 | 1.30 (2.53) | 0.00‡ |
| Model 1. Y6 transformed | 14.89 | 0.00** | −0.28 | 0.38 | −0.36 (−4.66 to 3.95) | 0.87 | 0.32 (−0.31 to 0.95) | 0.31 | 0.49 | 2.10† | 0.00‡ |
| Model 1. Y7 transformed | 99.92 | 0.5 | 0.003 | 0.88 | −0.05 (−0.30 to 0.20) | 0.69 | 0.01 (−0.03 to 0.04) | 0.63 | 0.34 | 1.86† | 0.00‡ |
| Transformed medication errors Y8 | 99.97 | 0.00** | −0.002 | 0.21 | 0.04 (0.01 to 0.06) | 0.00** | 0.00 (−0.00 to 0.01) | 0.18 | 0.35 | 1.56† | 0.003‡ |
| Model 1. Y9 | 55.19 | 0.00** | 5.02 | 0.00* | −15.42 (−23.38 to −7.45) | 0.00** | −4.95 (−6.12 to −3.78) | 0.00** | 0.71 | 1.84† | 0.00‡ |
| Model 3. Y10 (first differencing) with MA (1) | 28.87 | 0.00** | 7.2 | 0.00* | −7.17 (−12.11 to −2.23) | 0.01* | −7.30 (−8.49 to −6.11) | 0.00** | 0.81 | 2.84 (1.91) | 1.00 (seasonality; data are not stationary) |
| Model 2. Y11 with AR (1) | 92.15 | 0.00** | 0.7 | 0.22 | 0.97 (−4.86 to 6.80) | 0.74 | −0.84 (−1.98 to 0.30) | 0.14 | 0.33 | 1.27 (1.91) | 0.02‡ |
| Model 3. Y12 with MA (1) | 77.43 | 0.00** | 2.61 | 0.00* | −11.68 (−20.04 to −3.31) | 0.01* | −2.48 (−4.07 to −0.88) | 0.00** | 0.8 | 0.78 (2.13) | 0.00‡ |
| Model 2. Y13 with AR (1) | 97.01 | 0.00** | 0.22 | 0.81 | −6.17 (−14.37 to 2.03) | 0.14 | −0.02 (−1.90 to 1.87) | 0.98 | 0.45 | 0.92 (1.75) | 0.00‡ |
| Model 1. Y14 | 67.2 | 0.00** | 3.75 | 0.00* | −12.83 (−21.63 to −4.03) | 0.01* | −3.64 (−4.94 to −2.35) | 0.00** | 0.53 | 1.76† | 0.00‡ |
| Model 1. Y15 | 57.33 | 0.00** | 1.95 | 0.0058* | 4.33 (−4.98 to 13.64) | 0.35 | −1.85 (−3.22 to −0.48) | 0.01* | 0.54 | 1.75† | 0.01‡ |
| Transformed MRSA rate Y16 | 98.65 | 0.00** | 0.10 | 0.26 | −0.16 (−1.33 to 1.00) | 0.78 | −0.08 (−0.26 to 0.09) | 0.33 | 0.10 | 1.87† | 0.00‡ |
| Transformed surgical site infection rate Y17 | 99.92 | 0.00** | −2.58 | 1.00 | 0.05 (−0.18 to 0.29) | 0.644 | −0.004 (−0.040 to 0.031) | 0.8137 | 0.05 | 2.31† | 0.00‡ |
| Transformed mortality rate Y18 | 100.00 | 0.00** | −0.02 | 0.145 | 0.01 (−0.14 to 0.16) | 0.886 | −0.01 (−0.01 to 0.04) | 0.814 | 0.10 | 2.04 | 0.00‡ |
| Model 3. Y19 with AR (1) and AR (2) | 40.56 | 0.00** | 5.20 | 0.00* | 0.79 (−4.37 to 5.94) | 0.76 | −5.269 (−6.19 to −4.34) | 0.00** | 0.94 | 1.05 (2.07) | 0.00‡ |
| Model 6. Y20 (first differencing) with AR (1) and AR (2) | 25.70 | 0.00** | 7.51 | 0.00* | −14.89 (−21.30 to −8.49) | 0.00** | −7.36 (−8.64 to −6.08) | 0.00** | 0.90 | 1.1 (2.43) | 0.14 (seasonality; data are not stationary) |
| Model 1. Y21 | 91.94 | 0.00** | 0.65 | 0.00* | 0.21 (−2.46 to 2.89) | 0.87 | −0.67 (−1.07 to −0.28) | 0.00** | 0.42 | 1.96† | 0.00‡ |
| Model 1. Y22 | −0.02 | 0.96 | 0.02 | 0.71 | 0.14 (−0.43 to 0.71) | 0.62 | −0.02 (−0.11 to 0.06) | 0.62 | 0.03 | 1.72† | 0.00‡ |
| Model 4. Y23 (first differencing) with AR (1) and AR (2) | 55.51 | 0.00** | 55.51 | 0.00* | −1.67 (−6.29 to 2.96) | 0.47 | −4.26 (−5.30 to −3.22) | 0.00** | 0.90 | 0.89 (2.6) | 0.26 (seasonality; data are not stationary) |

*p≤0.05; **p≤0.001.

†No autocorrelation.

‡No seasonality; data are stationary.

AR, autoregressive variable; MA, moving average variable; MRSA, methicillin-resistant Staphylococcus aureus.

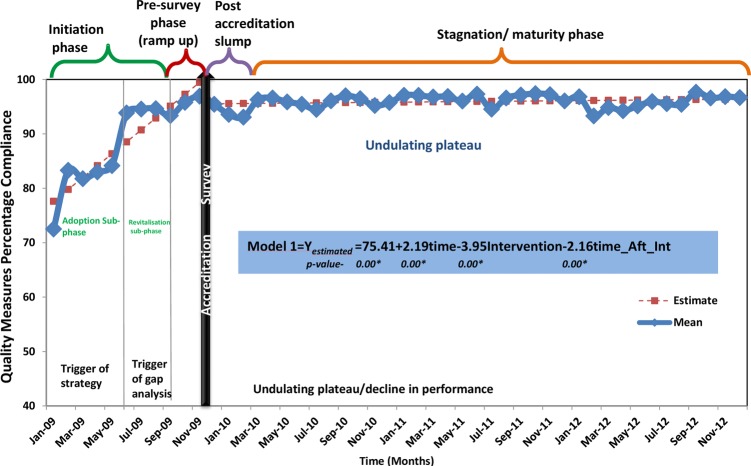

The results of the confirmatory test, using a composite score (YC) of the 23 quality measures, support the Life Cycle Model (figure 2). The slope prior to accreditation (β1) is positive and highly significant, as hypothesised. The change in level following the accreditation survey (β2) signals a significant decline in compliance, as predicted; and, as postulated, the change in slope postaccreditation (β3) is also negative and statistically significant (table 3). Furthermore, the R² indicates that 87% of the variation in quality compliance outcomes is explained by the three variables in the Life Cycle Model (table 3). The best fit interrupted time series model not only contains three significant variables, but the size of the coefficients also indicates that the effects of these variables are substantial. The preintervention slope (β1) implies an increase in compliance of 2.19 percentage points per month prior to the accreditation survey. This Initiation Phase is characterised by a period of steep increases in compliance followed by sporadic declines. The β2 coefficient suggests that the mean level of compliance for the 23 quality measures decreased by 3.95 percentage points immediately following the accreditation survey. The β3 coefficient indicates that the monthly trend in compliance decreased by 2.16 percentage points postaccreditation relative to the preaccreditation slope. The postaccreditation slump is followed by a long period of stagnation characterised by an undulating plateau of compliance but, importantly, at a level 20 percentage points higher than the preaccreditation survey levels (figure 2).
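Because β3 is defined in equation 1 as the change in slope, the postaccreditation trend implied for the composite score is the sum of the two slope coefficients in table 3. A worked version of this arithmetic, assuming the equation 1 parameterisation, is:

$$\text{slope}_{\text{post}} = \beta_1 + \beta_3 = 2.19 + (-2.16) = 0.03 \text{ percentage points per month}$$

A trend this close to zero is consistent with the plateau described for the stagnation phase.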

Figure 2.

Phases of the accreditation life cycle: empirical evidence.

Table 3.

Interrupted Time Series for Composite Quality Measures (YC)

| Dimension | Model | Intercept: value | Intercept: p value | Time (β1): value | Time (β1): p value | Intervention (β2), change in level: coefficient (95% CI) | Intervention (β2): p value | Time after intervention (β3), change in slope: coefficient (95% CI) | Time after intervention (β3): p value | R² | Autocorrelation check, Durbin-Watson: D value (before) | Durbin-Watson: D value (after) | Test for seasonality/stationarity (Dickey-Fuller unit root test): p value | Result |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Mean composite† | YC | 75.41 | 0.00** | 2.19 | 0.00** | −3.95 (−6.39 to −1.51) | 0.00** | −2.16 (−2.52 to −1.80) | 0.00** | 0.87 | 1.56 | – | 0.00** | No seasonality; data are stationary |

†The composite quality measure (YC) is the mean of the 23 quality measures; **p≤0.001.

Discussion

While there are many questions about the benefits of hospital accreditation, empirical evidence to support its effectiveness is still lacking. According to Greenfield and Braithwaite,1 this creates a serious legitimacy problem for policymakers and hospital management. Is achieving and maintaining accreditation worth the time, effort and cost if there is uncertainty about whether it results in quantifiable improvements in healthcare delivery and outcomes?23 Shaw5 has argued that many countries are embarking on accreditation programmes without any evidence that they are the best use of resources for improving quality. While proof of the value of accreditation is so far indeterminate, there is also no conclusive evidence that no benefits arise or that resources are being wasted.9 The literature prompts a rethink of how accreditation contributes to clinical and organisational performance.24 Therefore, without an empirically grounded evidence base for accreditation, the debate about the effects of accreditation, positive and negative, will remain anecdotal, influenced by political ideology and driven by such biases.25

This is the first study of accreditation to use interrupted time series regression analysis, applied here over a 4-year period, to test for the impact of accreditation on quality compliance measures in healthcare. Furthermore, this paper has outlined a new conceptual framework of hospital accreditation, the Life Cycle Model, and presented statistical evidence to support it.

The study results have answered the key question: do hospitals maintain quality and patient safety standards over the accreditation cycle? The results demonstrate that, although performance falls after the accreditation survey, the tangible impact of accreditation should be appreciated for its capacity to sustain improvements over the accreditation cycle. This finding is supported by other researchers who state that those institutions which invest in the accreditation surveys reap the most benefits from accreditors’ diagnosis, sharing of leading practices and the ensuing changes.26 27 Organisational efforts (eg, creating a functional committee structure) to meet the accreditation programme’s requirements could orchestrate the circumstances for prolonged improvements in hospitals.28 At a microlevel, the findings of this research demonstrate that a private hospital can use accreditation to improve quality. At a macrolevel, regulatory bodies can ascertain that investment in accreditation is appropriate as a quality improvement strategy. Acceptance of the accreditation life cycle framework offers a blueprint for improving healthcare quality strategy. A major benefit of the concept is that stagnation and declining outcomes can be avoided by monitoring the life cycle and taking proactive initiatives at appropriate times in order to sustain performance. The Life Cycle Model also justifies the need for a continuous survey readiness programme throughout the organisation.

Continuous readiness has been described as being ‘ready for the next patient, not just the next survey’.29 Continuous survey readiness strategies may create a heightened awareness of the level of compliance and standards. The literature supports regular self-assessments, intracycle mock surveys and benchmarking of quality measures to a data library30 31 for sustaining quality improvement, which may also be used to mitigate the stagnation phase. A paradigm shift, from the scheduled accreditation survey to an unannounced survey, is recommended to prompt a change from a survey preparation mindset to that of continual readiness. A triennial snapshot is no substitute for ongoing monitoring and continual improvement. Continuous survey readiness may ameliorate the life cycle effect of accreditation, provided the accreditation body requires the organisation to implement such a resource-intensive programme.

Although this study is novel in its evaluation methodology and the Life Cycle Model, the following limitations should be recognised. First, the study evaluates accreditation in a single hospital. Second, the accuracy of measures is dependent on the quality of documentation in the patient record. Third, the choice of quality measures was also defined by the availability of evidence in patient records; thus, a proportion of standards for operational systems and facilities management was excluded. More studies are needed to test the validity of this life cycle framework in different national and cultural settings. Further use of interrupted time series analysis is encouraged when evaluating quality interventions such as accreditation.

Acknowledgments

The authors are grateful to Randy Arcangel for his assistance with running the statistical analysis. This paper is based upon the research from Dr. Devkaran's PhD thesis completed under the supervision of Professor O'Farrell at the Edinburgh Business School, Heriot-Watt University.

Footnotes

Contributors: SD conceived and designed the experiment and analysed the data. SD and PNO'F interpreted the data and wrote the manuscript; they jointly developed the model and arguments for the paper and also revised the article for important intellectual content. Both authors reviewed and approved the final manuscript.

Funding: This research received no funding from any agency in the public, commercial or not-for-profit sectors.

Competing interests: None.

Provenance and peer review: Not commissioned; externally peer reviewed.

Data sharing statement: No additional data are available.

References

- 1. Greenfield D, Braithwaite J. Developing the evidence base for accreditation of health care organisations: a call for transparency and innovation. Qual Saf Health Care 2009;18:162–3

- 2. Kohn L, Corrigan J, Donaldson M. To err is human: building a safer health system. Washington DC: Institute of Medicine, National Academies Press, 1999

- 3. Øvretveit J, Gustafson D. Evaluation of quality improvement programmes. Qual Health Care 2002;11:270–5

- 4. Øvretveit J. Which interventions are effective for improving patient safety? A review of research evidence. Stockholm, Sweden: Karolinska Institutet, Medical Management Centre, 2005

- 5. Shaw CD. Evaluating accreditation. Int J Qual Health Care 2003;15:455–6

- 6. Greenfield D, Travaglia J, Braithwaite J, et al. An analysis of the health sector accreditation literature. A report for the Australian accreditation research network: examining future health care accreditation research. Sydney: Centre for Clinical Governance Research, The University of New South Wales, 2007

- 7. Griffith JR, Knutzen ST, Alexander JA. Structural versus outcomes measures in hospitals: a comparison of joint commission and medicare outcomes scores in hospitals. Qual Manag Health Care 2002;10:29–38

- 8. Salmon JW, Heavens J, Lombard C, et al. The Impact of Accreditation on the Quality of Hospital Care: KwaZulu-Natal Province, Republic of South Africa. Operations Research Results 2:17. Bethesda MD. Published for the US Agency for International Development (USAID) by the Quality Assurance Project, University Research Co., LLC. 2003

- 9. Øvretveit J, Gustafson D. Improving the quality of health care: using research to inform quality programmes. BMJ 2003;326:759–61

- 10. Miller MR, Pronovost P, Donithan M, et al. Relationship between performance measurement and accreditation: implications for quality of care and patient safety. Am J Med Qual 2005;20:239–52

- 11. Chen J, Rathore SS, Radford MJ, et al. JCAHO accreditation and quality of care for acute myocardial infarction. Health Aff 2003;22:243–54

- 12. Leatherman S, Berwick D, Iles D, et al. The business case for quality: Case studies and an analysis. Health Aff 2003;22:17–30

- 13. El-Jardali F, Jamal D, Dimassi H, et al. The impact of hospital accreditation on quality of care: perception of Lebanese Nurses. Int J Qual Health Care 2008;20:363–71

- 14. Lanteigne G. Case studies on the integration of Accreditation Canada's program in relation to organizational change and learning: The Health Authority of Anguilla and the Ca'Focella Ospetale di Treviso [Doctorat en administration des services de santé]. Montréal: Faculté de médicine, Université de Montréal, 2009

- 15. Chandra A, Glickman SW, Ou FS, et al. An analysis of the association of society of chest pain centres accreditation to American college of cardiology/American heart association non-ST-segment elevation myocardial infarction guideline adherence. Ann Emerg Med 2009;54:17–25

- 16. Sack C, Lütkes P, Günther W, et al. Challenging the Holy Grail of hospital accreditation: a cross-sectional study of inpatient satisfaction in the field of cardiology. BMC Health Serv Res 2009;10:120–7

- 17. Joint Commission International. Joint Commission international accreditation: getting started. 2nd edn Oakbrook Terrace, IL: Joint Commission Resources, 2010

- 18. Lewis S. Accreditation in Health Care and Education: The Promise, The Performance, and Lessons Learned. Raising the Bar on Performance and Sector Revitalization. Access Consulting Ltd. 2007. https://www.hscorp.ca/wp-content/uploads/2011/12/Accreditation_in_Health_Care_and_Education1.pdf

- 19. Gillings D, Makuc D, Siegel E. Analysis of interrupted time series mortality trends: an example to evaluate regionalized perinatal care. Am J Public Health 1981;71:38–46

- 20. Bowling A. Research methods in health: investigating health and health services. 2nd edn Buckingham: Open University Press, 2002

- 21. Wagner AK, Soumerai SB, Zhang F, et al. Segmented regression analysis of interrupted time series studies in medication use research. J Clin Pharm Ther 2002;27:299–309

- 22. Cook TD, Campbell DT. Quasi-experimentation. Design and analysis issues for field settings. Boston, MA: Houghton Mifflin Company, 1979

- 23. Nicklin W, Dickson S. The value and impact of accreditation in health care: a review of the literature. Accreditation Canada, 2009

- 24. Braithwaite J, Greenfield D, Westbrook J, et al. Health service accreditation as a predictor of clinical and organisational performance: a blinded, random, stratified study. Qual Saf Health Care 2010;19:14–21

- 25. Greenfield D, Braithwaite J. Developing the evidence base for accreditation of healthcare organizations: a call for transparency and innovation. Qual Saf Health Care 2009;18:162–3

- 26. Pomey MP, Lemieux-Charles L, Champagne F, et al. Does accreditation stimulate change? A study of the impact of the accreditation process on Canadian healthcare organizations. Implement Sci 2010;5:31

- 27. Nicklin W, Barton M. CCHSA accreditation: a change catalyst toward healthier work environments. Healthc Pap 2006;7:58–63

- 28. Jaafaripooyan E, Agrizzi D, Akbari-Haghighi F. Healthcare accreditation systems: further perspectives on performance measures. Int J Qual Health Care 2011;23:645–56

- 29. Valentine N, McKay M, Glassford B. Getting ready for your next patient: embedding quality into nursing practice. Nurse Leader 2009;7:39–43

- 30. Chuang S, Inder K. An effectiveness analysis of healthcare systems using a systems theoretic approach. BMC Health Serv Res 2009;9:195–205

- 31. Shaw CD, Braithwaite J, Moldovan M, et al. Profiling health-care accreditation organizations: an international survey. Int J Qual Health Care 2013;25:222–31
