Abstract

Background

Medical progress depends on the evaluation of new diagnostic and therapeutic interventions within clinical trials. Clinical trial recruitment support systems (CTRSS) aim to improve the recruitment process in terms of effectiveness and efficiency.

Objective

The goals were to (1) create an overview of all CTRSS reported until the end of 2013, (2) find and describe similarities in design, (3) theorize on the reasons for different approaches, and (4) examine whether projects were able to illustrate the impact of CTRSS.

Methods

We searched PubMed titles, abstracts, and keywords for terms related to CTRSS research. Query results were classified according to clinical context, workflow integration, knowledge and data sources, reasoning algorithm, and outcome.

Results

A total of 101 papers on 79 different systems were found. Most lacked details in one or more categories. Three types of CTRSS dominated: (1) systems for the retrospective identification of trial participants based on existing clinical data, typically through Structured Query Language (SQL) queries on relational databases, (2) systems that monitored an existing health information technology component for a key event, the occurrence of which either triggered a comprehensive eligibility test for a patient or was communicated directly to the researcher, and (3) independent systems that required a user to enter patient data into an interface to trigger an eligibility assessment. Although the treating physician was required to act for the patient in older systems, it is now becoming increasingly popular to offer this possibility directly to the patient.

Conclusions

Many CTRSS are designed to fit the existing infrastructure of a clinical care provider or the particularities of a trial. We conclude that the success of a CTRSS depends more on its successful workflow integration than on sophisticated reasoning and data processing algorithms. Furthermore, some of the most recent literature suggests that an increase in recruited patients and improvements in recruitment efficiency can be expected, although the former will depend on the error rate of the recruitment process being replaced. Finally, to increase the quality of future CTRSS reports, we propose a checklist of items that should be included.

Keywords: automation; clinical trials as topic; decision support systems, clinical; patient selection; research subject recruitment

Introduction

Medical progress depends on the evaluation of new diagnostic and therapeutic interventions within clinical trials. The value of each clinical trial depends on the successful recruitment of patients within a limited time frame. The number of participants must be sufficiently large to allow for scientifically and statistically valid analysis. Unfortunately, many trials experience gaps between initially planned and finally achieved participant numbers or they need to prolong their recruitment period. Slow recruitment delays medical progress and leads to unnecessarily high study costs [1-3].

The main stakeholders in the recruitment process are the patient, the treating physician, the study nurse, and the principal investigator. But when it comes to the details of how responsibilities and tasks are distributed and how stakeholders interact with one another, recruitment processes start to show large variability. These specifics are influenced by a multitude of factors, including whether the trial is prospective or retrospective, the number of patients to be screened, the fraction of potential participants among the screened patients, the number of participating clinics, the urgency of recruiting a patient after discovering eligibility, the local data protection laws, the available funds, and the organization and infrastructure of the clinical institutions that pursue the trial.

Because of this variability in the recruitment processes, numerous reasons for failure to include sufficient participants into a trial were found [4-6]. On the most abstract level, these are overoptimistic feasibility estimations of future eligible patient numbers [7,8], the inability to motivate physicians to approach their patients [9-12], and the inability to motivate patients to participate [13,14].

Following increased levels of patient data capture in digital systems and the advent of clinical decision support systems, the early 1990s also saw the use of computers for matching patients and trial protocols. These clinical trial recruitment support systems (CTRSS) aim to solve the issue of false feasibility estimations, to generate a positive impact on the treating physicians’ enrollment efforts, and to reduce the resources required to set up a successful recruitment process. Although many CTRSS have been proposed, the problems in recruitment persist [15,16].

In this context, Cuggia et al [17] raised the question “What significant work has been carried out toward automating patient recruitment?” and reviewed the literature published between 1998 and October 2009. They found a comparatively small number of papers related to 28 distinct CTRSS. Most of these projects had focused on the technological feasibility of the search algorithm and neglected assessments of the system’s impact on recruitment in real-life scenarios. Cuggia et al concluded “that the automatic recruitment issue is still open” and that in 2009 it was still “difficult to make any strong statements about how effective automatic recruitment is, or about what makes a good decision support system for clinical trial recruitment.”

Since then, CTRSS have become even more popular. Many independent institutions have tackled the challenge of improving their local recruitment processes. Large European collaborations, such as Electronic Health Records for Clinical Research (EHR4CR) [18], and national collaborations, for example in Germany [19], have been initiated to create information technology (IT)-supported patient recruitment architectures and platforms. For the related but broader challenge of extracting meaningful patient information from electronic health record (EHR) data, a plethora of papers has been published in recent years and the term patient phenotyping has been coined [20]. Recently, Shivade et al [21] presented a review on phenotyping techniques. They observed “a rise in the number of studies associated with cohort identification using electronic medical records.”

The rapidly growing knowledge about and the importance of electronic patient recruitment systems warrant a new review of the existing literature. Our objectives were to (1) create an overview of all papers published until the end of 2013, (2) find and describe similarities in CTRSS design, (3) discuss the reasons for different approaches, and (4) examine whether new projects were able to illustrate the impact of CTRSS.

Methods

Search Strategy

One of the authors (FK) searched the PubMed database with 2 queries. The first query contained keywords for publication titles and Medical Subject Headings (MeSH) terms. Because the most recent articles were not yet completely indexed with MeSH terms, a second query performed a broader keyword search in all fields. Neither query was limited to a specific time period:

PubMed query 1: (“clinical trial”[Title] OR “clinical trials”[Title] OR “Clinical Trials as Topic”[MESH]) AND (“eligibility”[Title] OR “identification”[Title] OR “recruitment”[Title] OR “Patient Selection”[MESH] OR “cohort”[Title] OR “accrual”[Title] OR “enrollment”[Title] OR “enrolment” [Title] OR “screening”[Title]) AND (“electronic”[Title] OR “computer”[Title] OR “software”[Title] OR “Decision Making, Computer-Assisted”[MESH] OR “Decision Support Systems, Clinical”[Mesh] OR “Medical Records Systems, Computerized”[Mesh])

PubMed query 2: (“clinical trial”[All Fields] OR “clinical trials”[All Fields]) AND (“eligibility”[All Fields] OR “identification”[All Fields] OR “recruitment”[All Fields] OR “accrual”[All Fields] OR “enrollment”[All Fields] OR “enrolment”[All Fields] OR “screening”[All fields]) AND (“participants”[All Fields] OR “cohort”[All fields] OR “patients”[All Fields]) AND (“electronic”[All fields] OR “computer”[All fields] OR “software”[All fields] OR “automatic”[All Fields])

Both queries were executed on January 15, 2014. After removing all duplicates from the combined result sets of both queries, FK screened titles and abstracts for the inclusion criteria. We then tried to obtain the full text of all included articles for a second screening. Finally, FK reviewed all references of the included manuscripts for additional articles. In case of uncertainty about the inclusion of an article, it was discussed with HUP for a final decision.
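As an illustration only, the following Python sketch shows how query 2 can be assembled from its 4 term groups and how 2 result lists can be merged without duplicates. The helper names `or_group` and `merge_results` and the identifiers in the example lists are hypothetical; no PubMed interface is called, and this is not the procedure the authors used.

```python
def or_group(terms, field="All Fields"):
    """Join the terms of one group with OR, each tagged with a search field."""
    return "(" + " OR ".join(f'"{t}"[{field}]' for t in terms) + ")"


# Term groups of query 2 as given in the text above.
QUERY_2_GROUPS = [
    ["clinical trial", "clinical trials"],
    ["eligibility", "identification", "recruitment", "accrual",
     "enrollment", "enrolment", "screening"],
    ["participants", "cohort", "patients"],
    ["electronic", "computer", "software", "automatic"],
]

# Groups are combined with AND.
query_2 = " AND ".join(or_group(group) for group in QUERY_2_GROUPS)


def merge_results(*result_lists):
    """Combine result lists, keeping the first occurrence of each identifier."""
    seen, merged = set(), []
    for ids in result_lists:
        for article_id in ids:
            if article_id not in seen:
                seen.add(article_id)
                merged.append(article_id)
    return merged


# Made-up identifiers for illustration, not real PubMed IDs.
hits_query_1 = ["A1", "A2", "A3"]
hits_query_2 = ["A3", "A4", "A1", "A5"]
combined = merge_results(hits_query_1, hits_query_2)
print(query_2)
print(combined)
```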

Inclusion Criteria

Our review covers primary research articles and conference proceedings on computer systems that compared patient data and eligibility criteria of a clinical trial to identify either potential participants for a given trial or suitable trials for a given patient. The system must have employed a computer to determine patient eligibility; that is, the utilization of electronically captured data was insufficient if the matchmaking process itself was done manually (eg, [22,23]). Manual processes before and after eligibility determination were otherwise accepted. Articles on the construction and processing of eligibility criteria, although closely tied to the construction and usage of CTRSS, were not part of this review (eg, [24,25]). We also excluded decision support systems that, although technically the same, identified patients for purposes other than clinical trial recruitment (eg, for diagnosing [26] or phenotyping [27]).

Classification

The classification of CTRSS was loosely based on that of a previous review by Cuggia et al [17] to render results comparable with one another. The classification categories were (1) the clinical context or setting to which the system was deployed, (2) the manner of integration into the existing clinical or recruitment workflow, (3) the source and format of patient data and eligibility criteria, (4) the reasoning method employed to derive eligible patients, and (5) the outcome obtained by the system’s application to one or more clinical trials.

Results

Included Studies

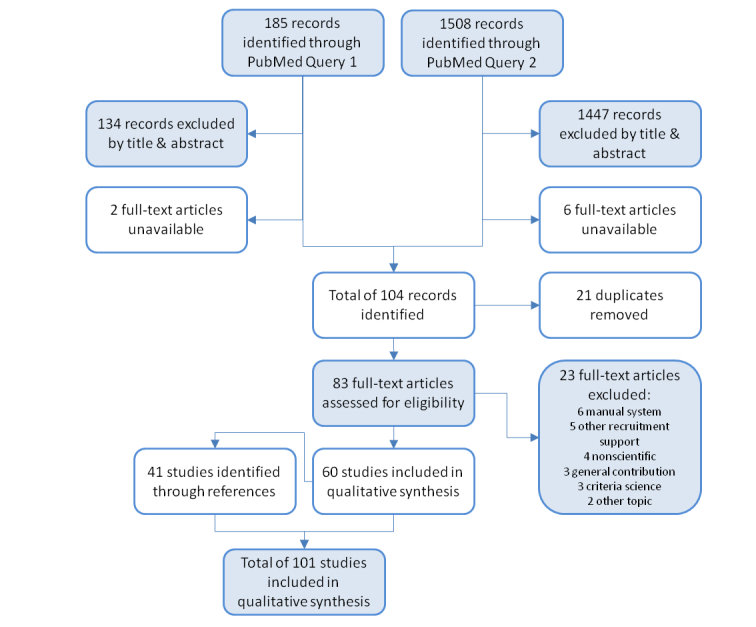

The 2 PubMed queries together yielded 1693 articles. A total of 1581 articles were removed from the literature pool based on their titles and abstracts. After removal of 8 articles that could not be obtained as full text and 21 duplicates, we arrived at 83 distinct articles, of which 60 were included in the qualitative analysis after review. In all, 5 of the excluded articles described other supportive measures for trial recruitment, 4 were deemed nonscientific (eg, commentaries), 6 described manual systems or the mode of eligibility determination was not clearly stated, 3 constituted general contributions without a relation to a specific CTRSS, 3 focused on the representation of eligibility criteria in a computable format, and 2 articles dealt with other topics (eg, phenotyping, personalized medicine). We obtained 41 additional articles through references and arrived at a final pool of 101 articles [3,28-127] on 79 different systems. The Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) flow diagram [128] in Figure 1 shows the different phases of the article selection process.

Figure 1.

Flow diagram on the process of literature selection.
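The article counts reported for the selection process can be checked with simple arithmetic. The short Python sketch below only restates the numbers given in the text; the variable names are ours.

```python
# Counts as reported in the text.
retrieved = 1693
removed_on_title_abstract = 1581
no_full_text = 8
duplicates = 21

distinct_full_texts = (
    retrieved - removed_on_title_abstract - no_full_text - duplicates
)

# Reasons for exclusion after full-text review, as listed in the text.
excluded = {
    "other supportive measures": 5,
    "nonscientific": 4,
    "manual or unclear eligibility determination": 6,
    "general contribution without specific CTRSS": 3,
    "representation of eligibility criteria": 3,
    "other topics": 2,
}

included_from_search = distinct_full_texts - sum(excluded.values())
added_from_references = 41
final_pool = included_from_search + added_from_references

print(distinct_full_texts, included_from_search, final_pool)
```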

Results Structure

Multimedia Appendix 1 shows a list of all articles grouped by system and ordered by first publication date (objective 1). It also summarizes the CTRSS characteristics according to the categories described subsequently. In the following sections on CTRSS characteristics, we identify and describe CTRSS groups with similar features (objective 2). We also speculate on environmental characteristics that led the developers to favor a group or reject another (objective 3). All evidence for the impact of CTRSS on patient recruitment is presented in Outcomes (objective 4).

Characteristics of Included Articles

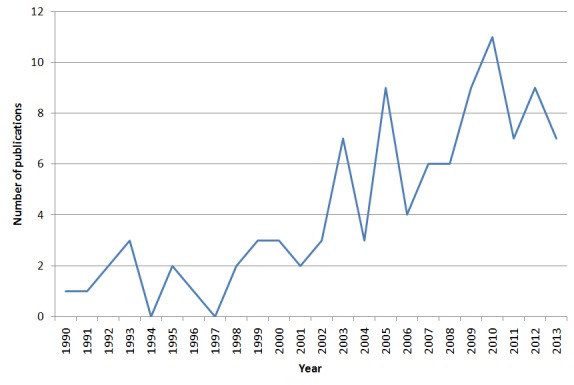

Regarding system maturity, 12 articles reported on a CTRSS concept that was not implemented yet. A total of 42 articles described a prototypical implementation, often including performance tests, but no application to a running clinical trial. Another 47 articles described fully matured systems that were used to recruit patients into at least 1 trial. First publications on CTRSS dated back to 1990. However, there were no more than 3 publications per year until 2003. Since then, 7 articles per year were published on average, so that nearly 80% of all articles were from the past 10 years (Figure 2).

Figure 2.

Number of publications on CTRSS per year.

Clinical Context and Scope of Application

CTRSS have been implemented and used in trials in a wide variety of clinical domains. Still, many systems were evaluated for only 1 trial or for trials from the same domain. Oncology (especially breast cancer) was particularly common, with 17 CTRSS in this domain. This domain may be favorable because it is research intensive with many open trials and exceptionally large available volumes of patient data and funding. The functionalities and algorithms of the CTRSS seemed largely independent of the clinical domain. Thus, no author precluded the use of their system for clinical trials from other domains and many actually suggested it.

The accuracy of a CTRSS depends on the available patient data and its effect depends on the organizational environment in which it operates. Therefore, each CTRSS should be evaluated for a large number of trials and at multiple sites to increase the reliability of reported results if possible. Many authors observed this: 43 articles reported on using their system for more than 1 trial (11 did not name an exact figure) and 14 CTRSS were intended for use at multiple sites. In comparison, 37 reports evaluated a CTRSS for a single trial and 62 CTRSS were used at a single institution. In all, 11 papers failed to give the number of trials their CTRSS had been evaluated or used for.

Workflow Integration

Overview

Every CTRSS has 2 points of contact with the recruitment workflow of a clinical trial. The first is the trigger that causes the system to assess the eligibility of one or more patients. The second is the communication of the assessment’s results (eg, a list of potential trial participants) to the system’s user.

Trigger

One way to trigger the eligibility assessment is to have the user or an administrator execute a manual process. Manual triggers are both the easiest to implement and the most commonly found. They are sufficient for cases in which patient data are entered into the CTRSS by the user who can subsequently view patient eligibility in an interactive fashion. The user can be a physician [38,88] or the patient [59,79,112]. Manual triggers are also sufficient for cases in which an eligibility assessment is required only once to generate a patient list, which is not expected to change during the trial’s recruitment phase. The latter is generally the case for retrospective trials and feasibility studies. Typical examples include Payne et al [97], Thadani et al [115], and Köpcke et al [69] who required an administrator to develop a Structured Query Language (SQL)-based query. Based on 16 years of COSTAR research queries, Murphy [85] created the graphical interface Informatics for Integrating Biology and the Bedside (i2b2) to allow investigators to parameterize query templates themselves.

For trials that require regular re-evaluation of patient eligibility because of changing patient data over time, manual triggers are generally inefficient and are replaced by automatic triggers. Automatic triggers can start eligibility assessments periodically at given time intervals [40,122] or in reaction to particular events in the hospital information system (HIS) [28,44,48,60]. Time-based triggers are generally easier to implement than event-based triggers. The interval length between assessments depends on the requirements of each trial and the computing time required for an assessment. It is usually set to a value between several minutes and 1 day. For trials that require an immediate reaction to new patient data by trial staff and for trials with comparatively rare potential participants, trigger events are preferred. Such triggers include the availability of new data or the admission of a patient.
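The difference between the 2 kinds of automatic trigger can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not a description of any reviewed system: the `Patient` fields, the pre-screening rule `is_candidate`, the event names, and both trigger classes are hypothetical. A real scheduler would call `run()` at the chosen interval, and a real HIS interface would deliver the events.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Patient:
    patient_id: str
    age: int
    lactate_mmol_l: Optional[float] = None  # latest lab value, if any


def is_candidate(p: Patient) -> bool:
    """Hypothetical pre-screening rule: adult with an elevated lactate value."""
    return p.age >= 18 and p.lactate_mmol_l is not None and p.lactate_mmol_l > 4.0


class TimeBasedTrigger:
    """Re-assesses every known patient each time the scheduler calls run()."""

    def __init__(self, patients: Dict[str, Patient], notify: Callable[[str], None]):
        self.patients = patients
        self.notify = notify
        self.already_notified = set()

    def run(self) -> None:
        for p in self.patients.values():
            if p.patient_id not in self.already_notified and is_candidate(p):
                self.already_notified.add(p.patient_id)
                self.notify(p.patient_id)


class EventBasedTrigger:
    """Assesses a single patient at the moment a relevant event arrives."""

    RELEVANT_EVENTS = {"admission", "lab_result"}

    def __init__(self, patients: Dict[str, Patient], notify: Callable[[str], None]):
        self.patients = patients
        self.notify = notify

    def on_event(self, event_type: str, patient_id: str) -> None:
        if event_type not in self.RELEVANT_EVENTS:
            return
        p = self.patients.get(patient_id)
        if p is not None and is_candidate(p):
            self.notify(patient_id)


patients = {
    "p1": Patient("p1", 54, 5.2),
    "p2": Patient("p2", 16, 6.0),
    "p3": Patient("p3", 70),
}
timed_alerts: List[str] = []
event_alerts: List[str] = []

timed = TimeBasedTrigger(patients, timed_alerts.append)
timed.run()
timed.run()  # a second run does not repeat the alert for p1

events = EventBasedTrigger(patients, event_alerts.append)
events.on_event("lab_result", "p1")  # relevant event, candidate
events.on_event("billing", "p1")     # irrelevant event, ignored
events.on_event("admission", "p3")   # relevant event, no lab value yet
```

The time-based variant scans all patients on every run, which is simple but scales with the number of patients and delays alerts by up to one interval. The event-based variant reacts immediately but needs access to the event stream.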

Communication

The results of an eligibility assessment must be communicated somehow to the CTRSS user. The primary factor of influence when choosing a mode of communication is the target user group. If patients are supposed to use the CTRSS, it is most common to offer a separate user interface that interactively displays potentially fitting trials and/or a score indicating the patient’s fit with a certain trial [32,59,72,79,109,112,125]. Exceptions are found if the patient is interested in future trials instead of ones that are currently recruiting. In these cases, patients enter their health data into a registry or a personal health record and they are notified by email as soon as a fitting trial is detected [61,125]. If the CTRSS has no clinical/research user (ie, the direct user is IT staff), it usually transforms the raw result of the reasoning algorithm into a patient list which is subsequently handed out to the researcher [60,80,103,105,115,119]. This is the preferred mode of communication if eligibility assessments are only required once [69,97]. However, when the target users are either treating physicians or clinical investigators, the mode of communication also needs to accommodate data security regulations and the trial’s temporal requirements. Pagers seem to be the only option if the user needs to react immediately to new patient data, such as critical laboratory values [41,63]. When time is of less importance, emails are chosen to deliver both proposals for single patients and patient sets alike [34,45,106,124]. A recurring scenario is that the physician or nurse is reminded of a trial during their first consultation. To achieve this, alerts or flags are placed in the EHR which appear at a convenient moment and often allow direct evaluation of the patient’s eligibility [104,121].

When coupled with simultaneous messages, automatic triggers have the disadvantage of easily initiating alerts or prompts at a time when the user is not prepared to answer them. Receivers tend to ignore such untimely messages. The same effect occurs for systems with a large share of false positive alerts. This alert fatigue is regularly mentioned as a problem for CTRSS efficiency and acceptance. Reported fractions of alerts that are actually reviewed by the receiver range from 25% [60], through more than 30% to 40% [52] and 56% [105], up to less than 70% [62]. For Ruffin [105], even “numerous prompts and reminders and customized requests” could not solve the problem. Additionally, Embi and Leonard [52] found that response rates declined at a rate of 2.7% per 2-week time period.

Knowledge Representation and Data Sources

Overview

The core technical functionality of a CTRSS is the comparison of eligibility criteria with the electronically available patient data. According to Weng et al [124], the process is characterized by 3 aspects: “the expression language for representing eligibility rules, the encoding of eligibility concepts, and the modeling of patient data.” The underlying problem is that eligibility criteria are almost always given in narrative form and need to be translated into a structure that can be interpreted by the CTRSS. The same is true for the patient data, which needs to be analyzed to identify concepts that match the eligibility concepts before developing the eligibility rules themselves.

Source of Patient Data

Most authors choose the data source for their CTRSS according to availability and accessibility. Few CTRSS designs are based on a comparison of different potential data sources (eg, for timeliness or comprehensiveness). Nevertheless, the reuse of existing patient data for the purpose of recruitment is common practice: 64 CTRSS relied on data that were originally collected for other purposes. Of these, 5 monitored the Health Level 7 (HL7) messages of a clinical information system, 46 read patient data directly from the EHR of the hospital or general practitioner, 12 used a data warehouse, and 1 used a clinical registry. In this order, these data sources increasingly collect and integrate patient data across time, software applications, and institutions, which makes access to the data of large patient sets comparatively easy. However, more integrated data often means the data source becomes increasingly detached from its origin as well (ie, some information is lost during processing and delays between the documented event and availability of the corresponding data grow). For some trials, such delays are unacceptable because trial staff need to be notified as soon as possible for specific events. Specialty subsystems, such as an electronic tracking board [3,29] or the messages exchanged between these systems [54,76,98,101,121], need to be monitored directly in these cases. A total of 3 CTRSS preloaded patient characteristics from the EHR and prompted the physician to complete missing data [90,94,95]. Wilcox et al [125] conceptualized a CTRSS that integrated EHR data and the personal health record of a patient. Only 16 CTRSS made exclusive use of data that were entered directly into the system itself by the physician (n=8), the patient (n=7), or an investigator (n=1).

Terminologies

The CTRSS developer can choose the terminology for clinical concept names arbitrarily if patient data are entered only for the purpose of eligibility assessment. However, if patient data are taken from an already existing data source, most developers chose to reuse the terminologies found there. A total of 66 articles did not mention the use of any terminology. Of these, 5 performed pure free-text analysis and did not necessarily require terminologies. Of those papers that did mention the use of a specific terminology, 16 named the International Classification of Diseases (ICD). This makes sense because it is also the terminology most commonly used within EHRs. There were no other widespread terminologies used for CTRSS. The Unified Medical Language System (UMLS) appeared in 6 publications and the Medical Entities Dictionary (MED), Systematized Nomenclature of Medicine Clinical Terms (SNOMED CT), and Logical Observation Identifiers Names and Codes (LOINC) in only 3, respectively. In all, 10 terminologies were each used in only 1 CTRSS, such as Cerner Multum, National Drug Code, Hospital International Classification of Diseases Adapted, Read, NCI-Thesaurus (NCI-T), and 837 billing data, or in 2 CTRSS, such as MeSH, NCI Common Data Elements (CDE), and Current Procedural Terminology.

Intermediary Criteria Format

Terminologies are usually chosen to suit the available patient data, whereas the intermediary criteria format is strongly associated with the reasoning method of the CTRSS. SQL is the most frequently found representation of criteria logic. Unfortunately, the CTRSS literature lacks details on the representation of criteria expressions. A comparison of the eligibility criteria as given in the study protocol and their representation in the CTRSS is rare; 49 papers gave no information on the chosen format of eligibility expressions.

Translation Process

With a few exceptions, the translation process to make eligibility criteria processable for the computer seemed to be a manual one. For 51 CTRSS, the administrator was responsible for reading the trial protocol, mapping clinical concepts to the target terminology, and creating eligibility expressions. This is the most efficient process in clinical settings that generate few trials per researcher because teaching costs are minimized and experience is concentrated in 1 person. Yet, a notable fraction of the CTRSS offered the user an interface to select eligibility criteria autonomously from a small [38] or large [43] set of predefined criteria. Having the user translate the eligibility criteria of a trial is primarily meaningful for feasibility studies, giving a researcher the means to dynamically modify the criteria for a new trial and to instantly receive feedback for the change’s influence on the expected number of participants.

Lonsdale et al [75] proposed natural language processing (NLP) to support the translation process. They read eligibility sentences from the trial registry ClinicalTrials.gov, parsed them to retrieve logical forms and mapped concepts to standard terminologies to generate executable Arden syntax Medical Logic Modules (MLMs). The process succeeded for 16% of all criteria from 85 randomly chosen trials [74,75]. Zhang et al [127] and Köpcke et al [70] proposed case-based reasoning algorithms for free-text and structured patient data, respectively. These algorithms did not require the translation of eligibility criteria into rules, but tried to determine the unknown eligibility of new patients by comparing them with a set of patients with known eligibility status.
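The general idea of case-based reasoning, namely inferring the eligibility of a new patient from similar patients with known status, can be illustrated with a generic nearest-neighbor vote. The Python sketch below is not the algorithm of Zhang et al [127] or Köpcke et al [70]; the Jaccard similarity, the choice of k=3, the majority vote, and all patient features are assumptions made for illustration.

```python
from typing import FrozenSet, List, Tuple

Case = Tuple[FrozenSet[str], bool]  # (coded patient features, known eligibility)


def jaccard(a: FrozenSet[str], b: FrozenSet[str]) -> float:
    """Share of features that two patients have in common."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def predict_eligible(new_patient: FrozenSet[str], known: List[Case], k: int = 3) -> bool:
    """Majority vote among the k most similar patients with known status."""
    ranked = sorted(known, key=lambda case: jaccard(new_patient, case[0]), reverse=True)
    nearest = ranked[:k]
    votes = sum(1 for _, eligible in nearest if eligible)
    return votes * 2 > len(nearest)


# Made-up cases; no eligibility rule is ever written down.
known_cases: List[Case] = [
    (frozenset({"C50.9", "female", "ER+"}), True),
    (frozenset({"C50.9", "female", "HER2+"}), True),
    (frozenset({"I10", "male"}), False),
    (frozenset({"E11", "male", "I10"}), False),
    (frozenset({"C50.9", "female", "metastasis"}), False),
]

likely = predict_eligible(frozenset({"C50.9", "female", "ER+"}), known_cases)
unlikely = predict_eligible(frozenset({"I10", "male", "E11"}), known_cases)
print(likely, unlikely)
```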

Reasoning

Overview

Closely tied to the previously described CTRSS characteristics is the reasoning process itself (ie, the method to assess whether the available data for a patient suffices for the conditions set by the trial’s eligibility criteria). Almost all CTRSS “perform ‘pre-screening’ for clinical research staff” [115] instead of trying to determine the actual eligibility of a patient. They do not replace manual chart review, but act as a filter that limits the number of patients who require such review by selecting the most likely candidates. The presentation of reasoning details, such as a probability of eligibility or missing patient characteristics together with the screening list, can facilitate the manual screening process even further.

The dominance of relational databases for the storage of patient data entails that most CTRSS employ database queries somewhere in the reasoning process. Consequently, most CTRSS are based on an elaborate query or a set of subsequently executed queries per trial [3,45,64,126]. If the result set of potentially eligible patients is sufficiently accurate, no further processing is required.
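A query of this kind might look like the following minimal sketch, which uses Python's built-in `sqlite3` module and an in-memory database. The schema, the sample rows, and the criteria (a breast cancer diagnosis code, adult age in a reference year, no creatinine value above 2.0) are all invented for illustration and do not come from any of the reviewed systems.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE patient (patient_id INTEGER PRIMARY KEY, birth_year INTEGER);
CREATE TABLE diagnosis (patient_id INTEGER, icd_code TEXT);
CREATE TABLE lab (patient_id INTEGER, test TEXT, value REAL);

INSERT INTO patient VALUES (1, 1950), (2, 1990), (3, 1960), (4, 2005);
INSERT INTO diagnosis VALUES (1, 'C50.9'), (2, 'C50.9'), (3, 'I10'), (4, 'C50.9');
INSERT INTO lab VALUES (1, 'creatinine', 0.9), (2, 'creatinine', 2.4),
                       (4, 'creatinine', 0.8);
""")

# Inclusion criteria go into the WHERE clause; the exclusion criterion
# is expressed as NOT EXISTS over the lab table.
QUERY = """
SELECT DISTINCT p.patient_id
FROM patient AS p
JOIN diagnosis AS d ON d.patient_id = p.patient_id
WHERE d.icd_code LIKE 'C50%'
  AND (:ref_year - p.birth_year) >= 18
  AND NOT EXISTS (
        SELECT 1
        FROM lab AS l
        WHERE l.patient_id = p.patient_id
          AND l.test = 'creatinine'
          AND l.value > 2.0)
ORDER BY p.patient_id
"""

candidates = [row[0] for row in conn.execute(QUERY, {"ref_year": 2014})]
print(candidates)
conn.close()
```

Note that a patient without any creatinine value passes the exclusion check in this sketch, which is one way missing data can silently influence the result list.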

Some authors demonstrated the feasibility of more exotic reasoning methods. A total of 4 CTRSS used Arden syntax to control the reasoning process [64,75,91,95]; 3 CTRSS employed an ontologic reasoner after transforming eligibility criteria and, in 2 cases, patient data into separate ontologies [35,72,96]. However, although technically interesting, the authors failed to convey the advantages of these algorithms when compared with the aforementioned simpler ones.

Dealing With Incomplete Data

Some CTRSS designers paid particular attention to missing patient data. Tu et al [119] developed 2 methods for dealing with this problem. In their qualitative method, each criterion was attributed 1 of 5 qualities according to a patient’s concrete data: patient meets the criterion, patient probably meets the criterion, no assertion possible, patient probably fails the criterion, and patient fails the criterion. Specific rules for each criterion derived one of these qualities from the patient’s data or assigned default values. In their probabilistic method, a Bayesian belief network was manually constructed for each trial. The network represented variables as nodes and dependencies as links between nodes. All nodes and links were given probabilities based on legacy data or experts. If data for a variable were found, the variable was given a probability of 1 or 0; otherwise, the default probabilities were used. When all available data for a patient were retrieved, a probability for the patient’s eligibility could be calculated. This probabilistic approach was applied again later by Papaconstantinou et al [94] and Ash et al [31]. Bhanja et al [36] suggested that scalability as well as time and design complexities discouraged the use of probabilistic approaches.
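The qualitative method can be pictured as a set of per-criterion rules that each return 1 of the 5 qualities. The Python sketch below is our own illustration, not the implementation of Tu et al [119]: the 2 criteria, their default values, and the way the qualities are combined into an overall result (the weakest criterion decides) are assumptions.

```python
from enum import IntEnum
from typing import Dict, Optional


class Quality(IntEnum):
    """The five qualities named in the text, ordered from worst to best."""
    FAILS = 0
    PROBABLY_FAILS = 1
    NO_ASSERTION = 2
    PROBABLY_MEETS = 3
    MEETS = 4


def adult(age: Optional[float]) -> Quality:
    # Hypothetical default: a missing age is rated "probably meets",
    # assuming the data come from an adult clinic.
    if age is None:
        return Quality.PROBABLY_MEETS
    return Quality.MEETS if age >= 18 else Quality.FAILS


def creatinine_below_limit(value_mg_dl: Optional[float]) -> Quality:
    # Hypothetical default: without a lab value, no assertion is possible.
    if value_mg_dl is None:
        return Quality.NO_ASSERTION
    return Quality.MEETS if value_mg_dl < 2.0 else Quality.FAILS


def assess(patient: Dict[str, Optional[float]]) -> Dict[str, Quality]:
    """Apply every criterion rule to the available patient data."""
    return {
        "adult": adult(patient.get("age")),
        "creatinine_below_limit": creatinine_below_limit(patient.get("creatinine")),
    }


def overall(qualities: Dict[str, Quality]) -> Quality:
    # Assumption: the weakest criterion determines the overall quality.
    return min(qualities.values())


complete = assess({"age": 54, "creatinine": 0.9})
incomplete = assess({"age": None, "creatinine": None})
print(overall(complete).name, overall(incomplete).name)
```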

Natural Language Processing

The wish to include unstructured (ie, free-text) data could also warrant the utilization of complex reasoning algorithms. Keyword searches were often employed when no structured data elements were available [29,41,73,93,101,106,110]. They could easily be added to complement queries of structured patient data [3,66,98,124]. Pakhomov [93] compared a keyword search with 2 other NLP methods: naive Bayes and perceptron. Naive Bayes yielded the best sensitivity (95% vs 86% and 71% for perceptron and keyword search, respectively) and perceptron offered the best specificity (65% vs 57% and 54% for naive Bayes and keyword search, respectively). Although the simple keyword search performed worst of all methods, its advantage lies in its easy implementation (no need for training data) and transparency. In a similar comparison, Zhang et al [127] found regular expressions outperformed a vector space method and latent semantic indexing to achieve accuracy similar to a specifically developed method called subtree match. However, they also proposed algorithms for automatic keyword and subtree generation, which could offer distinct potential for automation.
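A keyword search over free-text notes needs very little code, which illustrates both its appeal and its limits. The following Python sketch is a generic example, not the method evaluated by Pakhomov [93]; the notes, keywords, and function names are invented.

```python
import re
from typing import Dict, Iterable, List


def compile_keywords(keywords: Iterable[str]):
    """Build one case-insensitive pattern that matches any keyword as a whole word."""
    escaped = sorted((re.escape(k) for k in keywords), key=len, reverse=True)
    return re.compile(r"\b(?:" + "|".join(escaped) + r")\b", re.IGNORECASE)


def screen_notes(notes: Dict[str, str], keywords: Iterable[str]) -> List[str]:
    """Return the IDs of patients whose note mentions at least one keyword."""
    pattern = compile_keywords(keywords)
    return sorted(pid for pid, text in notes.items() if pattern.search(text))


# Made-up clinical notes.
notes = {
    "p1": "Pt admitted with congestive heart failure, EF 30%.",
    "p2": "No evidence of heart failure.",
    "p3": "Follow-up for hypertension.",
    "p4": "History of CHF.",
}

hits = screen_notes(notes, ["heart failure", "CHF"])
print(hits)
```

Patient p2 is proposed although the note negates the finding. Such false positives are one plausible reason for the comparatively low specificity of plain keyword searches.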

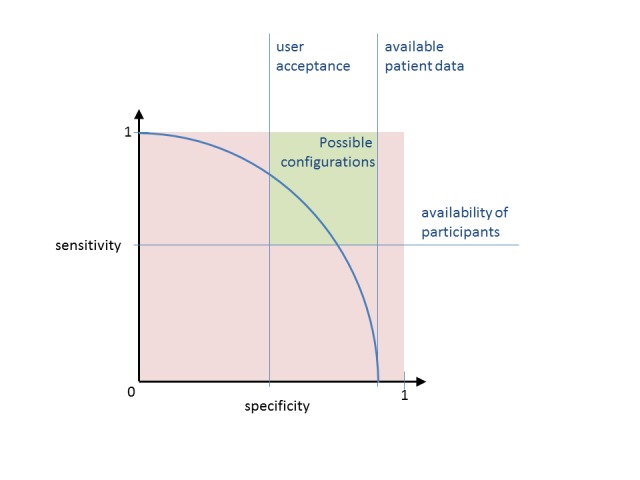

Sensitivity-Versus-Specificity Tradeoff

Independent of the chosen reasoning method, the inclusiveness of each CTRSS is subject to the desires of its user. Ultimately, the setup of a CTRSS “requires sensitivity-versus-specificity tradeoffs” for each trial [119]. The upper limit to specificity might be determined by the fit between available patient data and eligibility criteria, whereas its lower limit is simply determined by what the user is willing to accept (Figure 3). The required level of sensitivity is limited by the availability of trial participants. Sensitivity should be chosen as low as possible to increase specificity and, thus, reduce recruitment workload. In practice, however, when the CTRSS is motivated by a lack of participants for a specific trial, maximum sensitivity is imperative and low specificity must be accepted [49].

Figure 3.

The sensitivity and specificity of patient proposals from most CTRSS depend on the configuration of the reasoning algorithm. The developer is free to favor specificity over sensitivity or vice versa, depending on conditions that are likely to be different for each trial. Frequently relevant conditions are user acceptance, the availability of patient data, and the availability of participants compared to the required number.
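The tradeoff can be made concrete for a CTRSS that assigns each patient a score and proposes everyone above a configurable threshold. The Python sketch below uses invented scores and eligibility labels; the scoring scheme itself is an assumption and is not taken from a specific system.

```python
from typing import Dict, Set, Tuple


def sensitivity_specificity(
    scores: Dict[str, float], eligible: Set[str], threshold: float
) -> Tuple[float, float]:
    """Compare proposals (score >= threshold) with the true eligibility status."""
    tp = fp = tn = fn = 0
    for patient_id, score in scores.items():
        proposed = score >= threshold
        if proposed and patient_id in eligible:
            tp += 1
        elif proposed:
            fp += 1
        elif patient_id in eligible:
            fn += 1
        else:
            tn += 1
    # Assumes at least one eligible and one ineligible patient in the data.
    return tp / (tp + fn), tn / (tn + fp)


# Made-up eligibility scores and manually confirmed eligibility.
scores = {"a": 0.9, "b": 0.7, "c": 0.6, "d": 0.5, "e": 0.3, "f": 0.1}
eligible = {"a", "b", "d"}

strict = sensitivity_specificity(scores, eligible, threshold=0.65)
lenient = sensitivity_specificity(scores, eligible, threshold=0.4)
print(strict, lenient)
```

With the strict threshold, no ineligible patient is proposed but one eligible patient is missed. The lenient threshold finds all eligible patients at the cost of one additional manual review.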

Outcome

Overview

All studies in this review shared the common goal to improve the recruitment process of clinical trials. However, calculating the performance of the CTRSS in terms of specificity and sensitivity alone is, at best, a secondary indicator for its effect. Direct comparison with the manual recruitment process with regard to its effects on one or more of the following 3 variables should be favored: (1) the pure number of trial participants (ie, the effectiveness of the recruitment process), (2) the cost to recruit a given number of patients in terms of money and/or time (ie, the efficiency of the recruitment process), and (3) the quality of the collective of trial participants (eg, measures for selection bias and dropouts). All reported system effects were weighted according to the scientific quality of the evaluation as (1) reliable quantitative measurement, (2) quantitative measurement with insufficient description of or flawed method, or (3) survey or estimation (corresponding to A-C in Multimedia Appendix 1, respectively).

Impact on Recruitment Effectiveness

We found 5 papers that reliably quantified differences in recruitment effectiveness between manual and CTRSS-supported recruitment. Embi et al [49] reported a doubling of the physicians’ enrollment rate from 3 to 6 per month, which was attributed to a concurrent significant increase in the number of referring physicians from 5 to 42. The CTRSS presented by Cardozo et al [41] increased identification of eligible patients from 1 in 2 months to 6 in 2 months after physicians failed to generate pager notifications in time. Herasevich et al [63] nearly doubled enrollment from 37 patients in approximately 8.5 months to 68 patients in approximately 9 months in a time-critical setting. They attributed the effect to the change from imprecise clinical notes (manual process) to specific physiologic criteria (automated process) as the basis for eligibility evaluation. Beauharnais et al [34] also nearly doubled recruitment, in this case from 11 patients in 63 days to 20 patients in 62 days. The effect seemed to correlate with an increase in screening efficiency that similarly doubled the number of screened patients. A comparatively minor increase in recruited patients of 14% from 306 to 348 in the same week was reported by Köpcke et al [69] who addressed pure oversight of otherwise well-organized manual recruiters. They also found 7% of the manually included patients did not fulfill the trial’s eligibility criteria.
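Because the observation periods differ slightly, the reported counts are easier to compare as rates. The following Python sketch recomputes the change in enrollment rate from the figures quoted above; the function name is ours and the durations are the approximate values given in the text.

```python
def rate_ratio(before_n: float, before_time: float,
               after_n: float, after_time: float) -> float:
    """Ratio of enrollment rates (patients per unit of time), after vs before."""
    return (after_n / after_time) / (before_n / before_time)


# Herasevich et al [63]: 37 patients in about 8.5 months vs 68 in about 9 months.
herasevich = rate_ratio(37, 8.5, 68, 9.0)

# Beauharnais et al [34]: 11 patients in 63 days vs 20 patients in 62 days.
beauharnais = rate_ratio(11, 63, 20, 62)

# Köpcke et al [69]: relative increase from 306 to 348 recruited patients.
koepcke_increase = (348 - 306) / 306

print(round(herasevich, 2), round(beauharnais, 2), round(koepcke_increase, 3))
```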

Lane et al [71], Tu et al [119], and a research group from the University of South Florida [55,56,68,88] ran their respective CTRSS on legacy patient data and evaluated how many of those patients found potentially eligible by their system were actually enrolled in the past. These works only showed an upper limit of CTRSS effectiveness because it was unclear whether “physicians actually missed the matches, rather than having undocumented reasons for omitting them” [56]. Similarly, Weiner et al [122] described an increase in the number of eligibility alerts sent to the trial investigator. Again, these can only be an upper limit for the effect of the CTRSS on enrollment because the physician’s reasons for not alerting the investigator were unclear. It is possible that the physicians judged patients unfit for the trial for reasons beyond the criteria that were considered by the CTRSS or that the patients were unwilling to participate. Séroussi and Bouaud [108], Weng et al [124], and Treweek et al [118] compared the effectiveness of their CTRSS with conventional methods of recruitment by running them in parallel over the whole study period. However, the lack of enrollment numbers for a preceding phase without the CTRSS made it impossible to quantify the effect of the CTRSS. Finally, Ferranti et al [54] reported an increase in recruitment numbers by 53%. Although we found their methodology suitable, the authors failed to discuss reasons for a sharp increase in recruitment numbers 2 months before introduction of the CTRSS.

Impact on Recruitment Efficiency

We judged 4 papers to reliably quantify differences in the efficiency of a CTRSS and the manual recruitment process. Thompson et al [117] reduced the screening time required per eligible patient from 18 to 6 minutes (66%) in a 2-week evaluation of their CTRSS prototype. This reduction was achieved solely through a higher fraction of eligible patients among screened patients, whereas the individual screening time was actually higher for patients proposed by the CTRSS. Penberthy et al [98] confirmed this finding for 5 additional trials, achieving screening time reductions of 95%, 34%, 86%, and 34% in 4 trials and an increase of 31% in 1 trial. Again, time savings resulted from screening fewer noneligible patients, whereas individual screening time remained unchanged. Therefore, the benefit in efficiency was found to depend on the specificity of the CTRSS. Nkoy et al [3] decreased screening time from 2 to 0 hours daily with no manual control of the patient list generated by their CTRSS. They translated these time savings into cost savings of US $1200 per month. Beauharnais et al [34] halved screening time from 4 to 2 hours daily, measuring manual and CTRSS-aided recruitment over 60 consecutive days each. They concluded that “the use of an algorithm is most beneficial for studies with low enrollment rates because of the long duration of the accrual period.”
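The relationship between screening time per eligible patient and the yield of the screening list can be made explicit with a small calculation. The following Python sketch is illustrative only: the function name and all input numbers are assumptions chosen so that the results match the 18 and 6 minutes quoted above; they are not taken from the cited studies.

```python
def minutes_per_eligible(minutes_per_screened: float,
                         n_screened: int,
                         n_eligible: int) -> float:
    """Screening time spent for each eligible patient that is found."""
    if n_screened <= 0 or n_eligible <= 0 or n_eligible > n_screened:
        raise ValueError("need 0 < n_eligible <= n_screened")
    return minutes_per_screened * n_screened / n_eligible


# Hypothetical manual list: 60 patients screened, 10 eligible, 3 minutes each.
manual = minutes_per_eligible(3.0, n_screened=60, n_eligible=10)

# Hypothetical CTRSS list: 15 patients screened, 10 eligible, 4 minutes each.
# Each individual screening takes longer, but far fewer charts are opened.
assisted = minutes_per_eligible(4.0, n_screened=15, n_eligible=10)

relative_reduction = 1 - assisted / manual
print(manual, assisted, round(relative_reduction, 2))
```

In this sketch the saving comes entirely from the higher fraction of eligible patients on the list, which is why a CTRSS with low specificity could even increase the total screening time.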

Following a proposition by Ohno-Machado et al [90], the aforementioned research group from the University of South Florida [55,56,68,88] presented a unique approach to increase screening efficiency. By ordering the clinical tests necessary for eligibility determination in such a way that cheap but decisive tests were done first, they expected a reduction of costs by 50%. The cost of each test and the number of clinical trials and eligibility criteria that required a test’s results were included in the calculation. Unfortunately, the evaluation of the methodology was based on retrospective data and it remained unclear how the cost for tests without reordering was calculated. Seyfried et al [110] reported decreased screening time, but used the same dataset with the same test physicians for both manual and CTRSS-aided screenings (50 patients, 1-week interval). Furthermore, the CTRSS appeared to be trained with the same dataset on which it was tested later. Thadani et al [115] and Schmickl et al [106] did not directly measure screening time decreases, but stated that they could imagine screening only patients proposed by their respective CTRSS to be sufficient, reducing the patient pool by 81% and 76%, respectively. Obviously, such a strategy would require the CTRSS to feature a sufficiently high sensitivity.
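The idea of performing cheap but decisive tests first can be illustrated with a short Python sketch. It does not reproduce the method of the cited group; it uses a textbook greedy rule (sort by cost divided by exclusion probability) that is known to minimize expected cost only under the assumption that test results are independent and that testing stops at the first excluding result. All test names, costs, and probabilities are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class ClinicalTest:
    name: str
    cost: float       # cost of performing the test (hypothetical units)
    p_exclude: float  # assumed probability that the result excludes the patient


def expected_cost(order: Sequence[ClinicalTest]) -> float:
    """Expected cost per patient when testing stops at the first exclusion."""
    total = 0.0
    p_still_candidate = 1.0
    for test in order:
        total += p_still_candidate * test.cost
        p_still_candidate *= 1.0 - test.p_exclude
    return total


def cheap_and_decisive_first(tests: Sequence[ClinicalTest]) -> List[ClinicalTest]:
    """Sort ascending by cost per unit of exclusion probability."""
    def ratio(test: ClinicalTest) -> float:
        # Tests that never exclude anyone are pushed to the end.
        return test.cost / test.p_exclude if test.p_exclude > 0 else float("inf")
    return sorted(tests, key=ratio)


# Hypothetical tests in an arbitrary initial order.
TESTS = [
    ClinicalTest("mri", cost=500.0, p_exclude=0.2),
    ClinicalTest("ecg", cost=50.0, p_exclude=0.1),
    ClinicalTest("blood_count", cost=10.0, p_exclude=0.5),
]

ordered = cheap_and_decisive_first(TESTS)
print([t.name for t in ordered])
print(expected_cost(TESTS), expected_cost(ordered))
```

A real evaluation would additionally have to state how the baseline cost without reordering is obtained, which is the point left open in the reports discussed above.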

Impact on Recruitment Quality

Only Rollman et al [104] compared the characteristics of patient sets after manual and CTRSS-aided recruitment. To this end, they observed 2 subsequent trials with similar eligibility criteria, the same recruitment period of 22 months and the same 4 recruiting primary care physicians. They found that usage of the CTRSS significantly increased the proportion of male nonwhite patients, as well as the fraction of patients with more severe disease grades.

Discussion

Principal Findings

There are some CTRSS setups that reappear on a regular basis. Firstly, for the retrospective identification of trial participants based on existing clinical data, database queries are designed and executed once or on a regular basis. They create a list of potentially eligible patients that is printed on paper or otherwise delivered to the researcher. Secondly, for trials with short windows of opportunity for recruitment, a key event in the EHR or another health IT component is constantly monitored. Its occurrence causes a more comprehensive eligibility test for the concerned patient and is communicated to the researcher via pager. Thirdly, if no patient data exist yet, they are entered directly into the CTRSS, which assesses and communicates the patient’s eligibility directly after completion of data entry. Although the treating physician was required to act for the patient in older systems, it is now becoming increasingly popular to offer this possibility directly to the patient via dedicated websites. Our review confirms the findings of Weng et al [129], who also gave names to these CTRSS types: (1) mass screening decision support, (2) EHR-based recruitment alerts, and (3) computerized research protocol systems and Web-based patient-enabling systems (depending on the user).

The setup of a specific CTRSS is rarely chosen on a theoretical background (ie, after an evaluation of different options for triggering the system and communicating the results). Instead, the setup is dictated mostly by the existing clinical environment, available IT tools, and the needs of a specific trial or group of researchers. Because CTRSS are a subset of clinical decision support systems (CDSS), it will generally be possible to configure existing CDSS such that they assume CTRSS functionalities (eg, [50,64,86]).

Limitations of the Review

Our review is limited in that the collection of publications and the extraction of information from these publications were done by only 1 author. We reduced the impact of this approach by refraining from any interpretation of the given information in this step. Nevertheless, we cannot preclude mistakes, especially when stating that no or unclear information on a certain CTRSS characteristic was found in an article. Furthermore, all unreferenced statements made in this review reflect only the opinion of the 2 authors and are subject to discussion by the research community.

Comparison With Previous Review

Our review of 101 CTRSS publications offers the most comprehensive and up-to-date overview of CTRSS. Compared to the previous review paper by Cuggia et al from 2011 [17], which analyzed 28 CTRSS from articles published before October 2009, we identified an increase in publications in the subsequent years. These more recent publications present more data on the impact of CTRSS on the recruitment process, which we discuss subsequently. Of the 7 tendencies in CTRSS research formulated by Cuggia et al, all but the exclusive reliance on structured data appear to continue. We found many CTRSS that include unstructured data as a data source, although many of them are limited to keyword searches. There are 3 additional lessons we believe can be learned from the existing research, which are described subsequently.

Lack of standards is not limited to the terminologies of the patient data source, but also applies to the computational representation of eligibility criteria. Although researchers have proposed independent languages to encode the free-text criteria of a trial’s protocol (eg, ERGO [130], EliXR [131]), most CTRSS bind the representation of eligibility criteria in 2 ways to the specifics of their environment: (1) to the terminology of the patient data source and (2) to the chosen reasoning method. We believe independent and exchangeable eligibility criteria to be desirable because multisite trials have become the norm. However, judging from the experience so far, readily encoded criteria will need to be the norm in trial protocols before they will be adopted by CTRSS designers. Tools to help translate the criteria into SQL statements could speed up the adoption process.
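To illustrate what a tool for translating encoded criteria into SQL statements might look like, the following Python sketch turns a small list of structured criteria into a parameterized query. It is a minimal example under stated assumptions: the `Criterion` structure, the `patients` table, and its columns are hypothetical and do not correspond to ERGO, EliXR, or any system in this review. Exclusion criteria are written so that a missing value does not remove a patient from the list.

```python
import sqlite3
from dataclasses import dataclass
from typing import List, Sequence, Tuple

# Whitelists guard against building SQL from arbitrary strings.
ALLOWED_COLUMNS = {"age", "diagnosis_code", "creatinine"}
ALLOWED_OPS = {"=", "<", "<=", ">", ">="}


@dataclass(frozen=True)
class Criterion:
    column: str
    op: str
    value: object
    exclusion: bool = False  # True: patients matching the condition are excluded


def to_sql(criteria: Sequence[Criterion]) -> Tuple[str, list]:
    """Translate criteria into a parameterized SELECT on a hypothetical table."""
    clauses, params = [], []
    for c in criteria:
        if c.column not in ALLOWED_COLUMNS or c.op not in ALLOWED_OPS:
            raise ValueError(f"unsupported criterion: {c}")
        condition = f"{c.column} {c.op} ?"
        if c.exclusion:
            # Undocumented (NULL) values keep the patient on the list.
            clauses.append(f"({c.column} IS NULL OR NOT ({condition}))")
        else:
            clauses.append(condition)
        params.append(c.value)
    where = " AND ".join(clauses) if clauses else "1 = 1"
    sql = f"SELECT patient_id FROM patients WHERE {where} ORDER BY patient_id"
    return sql, params


def find_candidates(conn: sqlite3.Connection,
                    criteria: Sequence[Criterion]) -> List[int]:
    sql, params = to_sql(criteria)
    return [row[0] for row in conn.execute(sql, params)]


def demo_connection() -> sqlite3.Connection:
    """In-memory database with made-up patients for demonstration."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE patients (patient_id INTEGER PRIMARY KEY, "
                 "age INTEGER, diagnosis_code TEXT, creatinine REAL)")
    conn.executemany("INSERT INTO patients VALUES (?, ?, ?, ?)", [
        (1, 45, "E11", 1.0),
        (2, 70, "E11", 1.1),   # too old
        (3, 50, "E11", 2.5),   # documented exclusion
        (4, 55, "I10", 0.9),   # other diagnosis
        (5, 60, "E11", None),  # creatinine not documented
    ])
    return conn


EXAMPLE_CRITERIA = [
    Criterion("age", ">=", 18),
    Criterion("age", "<=", 65),
    Criterion("diagnosis_code", "=", "E11"),
    Criterion("creatinine", ">", 2.0, exclusion=True),
]

print(find_candidates(demo_connection(), EXAMPLE_CRITERIA))
```

Because the criteria are kept separate from the generated query, the same list could in principle be translated for a different data source by exchanging only the translation function, although mapping terminologies between sites remains the harder problem.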

The choice of the reasoning method should consider its pervasiveness (ie, how easily third parties interested in its deployment can learn to install and administer it). Considering this, no other method seems to be as suitable for CTRSS as SQL queries on relational databases. Queries can make use of existing data from the EHR, a data warehouse (DWH), or a registry, and their administrators are likely to be experienced creators and users of such queries. Resistance to adopting and maintaining an additional query-based system is likely to be small compared to CTRSS that require additional training in one of the less widespread technologies, such as probabilistic methods or Arden syntax. Although complex reasoning methods have been shown to achieve high accuracy, it is unclear whether they lead to an increased CTRSS impact compared to queries.

Using patient care data promises efficiency and effectiveness gains for a CTRSS. But, because it is collected for other purposes, it also introduces new challenges [131]. It is imperfect from the viewpoint of eligibility assessments because it lacks uniformity (the same information can be documented differently for 2 patients), timeliness (information might be documented too late), and completeness (information might be missing for some or all patients). Uniformity and completeness problems can lead to severe selection bias and increase the cost of eligibility rule creation. For example, low uniformity necessitates an analysis of documentation habits; low completeness might enforce the use of proxy data [78] or estimates [90]. Timeliness must be ensured by the documentation process, which might resist change. Untimely data will severely limit the possibility to support a trial, especially in outpatient settings [46]. Thus, unfit data can constitute a major limitation to CTRSS impact.

First Conclusions on Clinical Trial Recruitment Support Systems Impact

We suggested that the introduction of a CTRSS can be motivated by 3 expectations: (1) an increase in the number of participants for a given clinical trial or a set of trials, (2) a reduction of trial costs through decreased screening costs, and (3) the guarantee to select a representative set of patients (ie, the reduction of selection bias). Many authors do not elaborate on the shortcomings of the manual recruitment process that led to the development of their CTRSS.

Whether a CTRSS is able to increase the number of participants for a trial depends little on its setup, but rather on the deficits of the manual recruitment process it is set to replace. To begin with, an untapped group of potential participants (ie, a gap between those patients who are eligible and those who are asked to participate) needs to exist. This gap originates from some patients not being screened at all or from communication problems between the different actors of the recruitment process. Thus, a CTRSS can close this gap if it can ensure that every patient is screened and that the necessary information on the patient and the trial is available in time.

Often, a CTRSS is expected to close the gap between estimated and realized participant numbers, the gap between eligible and recruited patients, or even the gap between needed and available patients. These expectations are likely to be disappointed. They disregard that many causes for insufficient recruitment are out of the scope of a CTRSS or simply cannot be addressed by an IT intervention. The most important are the willingness of patients to participate and the motivation of physicians to take part in recruitment. The analysis of the existing recruitment process and its weaknesses should, therefore, be part of every CTRSS design process. Weng et al [123,124] give examples of how to do this. They characterize patient eligibility status in different categories, such as potentially eligible, approachable, consentable, eligible, and ultimately enrolled. By comparing the ratio of patients in each category, such a taxonomy can be used to identify the weak spots in recruitment that need to be addressed by the CTRSS.
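Such a comparison of category ratios can be sketched in a few lines of Python. The example assumes that the categories form a nested sequence in the order listed above, which is a simplification on our part, and all patient counts are invented for illustration.

```python
from typing import Dict, List, Tuple

# Assumed nested order of the eligibility status categories.
STAGES = ["potentially eligible", "approachable", "consentable",
          "eligible", "enrolled"]


def stage_retention(counts: Dict[str, int]) -> List[Tuple[str, str, float]]:
    """Fraction of patients retained between each pair of consecutive stages."""
    result = []
    for previous, current in zip(STAGES, STAGES[1:]):
        if counts[previous] <= 0:
            raise ValueError(f"no patients in stage '{previous}'")
        result.append((previous, current, counts[current] / counts[previous]))
    return result


def weakest_transition(counts: Dict[str, int]) -> Tuple[str, str, float]:
    """Transition that loses the largest share of patients."""
    return min(stage_retention(counts), key=lambda item: item[2])


# Hypothetical counts for one trial.
EXAMPLE_COUNTS = {
    "potentially eligible": 200,
    "approachable": 150,
    "consentable": 60,
    "eligible": 48,
    "enrolled": 36,
}

for previous, current, ratio in stage_retention(EXAMPLE_COUNTS):
    print(f"{previous} -> {current}: {ratio:.2f}")
print("weakest:", weakest_transition(EXAMPLE_COUNTS)[:2])
```

In this invented example the largest loss occurs between approachable and consentable patients, a step that depends on patient willingness and would not be improved by better eligibility reasoning.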

Although the effectiveness of a CTRSS is determined by its setting, improvements in screening efficiency might be more generally achievable. Many successes in reducing screening time are based on using existing data to reliably exclude patients from the screening list (ie, the CTRSS generates no or few false negatives). In this way, the CTRSS can be used to reduce the number of patients that must be screened manually. Under the reasonable assumption that documented data are correct, but not all patient characteristics are documented, we believe CTRSS should focus on the exclusion criteria of a clinical trial to maximize efficiency gains. No final eligibility decision should be based on the trial’s inclusion criteria because this can reduce the sensitivity of the CTRSS and motivate the screeners to use other screening methods in parallel. To realize efficiency gains, the CTRSS must completely replace the former screening process. This also means that the aim to increase recruitment efficiency is opposed to the other 2 potential aims of a CTRSS, which profit from running multiple screening methods in parallel.
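The following Python sketch illustrates exclusion-based prescreening under the assumption stated above: documented values are treated as correct, and a missing value never removes a patient from the manual screening list. The record layout, field names, thresholds, and patients are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional, Sequence, Tuple

# An exclusion rule: field name plus a predicate that is True if the
# documented value excludes the patient.
Exclusion = Tuple[str, Callable[[float], bool]]


@dataclass(frozen=True)
class Patient:
    data: Dict[str, Optional[float]]  # documented values; None means missing
    eligible: bool                    # outcome of full manual screening


def keep_for_manual_screening(patient: Patient,
                              exclusions: Sequence[Exclusion]) -> bool:
    """Drop a patient only on a documented, positively met exclusion."""
    for field_name, is_excluding in exclusions:
        value = patient.data.get(field_name)
        if value is not None and is_excluding(value):
            return False
    return True


def summarize(patients: Sequence[Patient],
              exclusions: Sequence[Exclusion]) -> Dict[str, float]:
    """Workload reduction and sensitivity of the prescreening step."""
    kept = [p for p in patients if keep_for_manual_screening(p, exclusions)]
    eligible_total = sum(1 for p in patients if p.eligible)
    if not patients or eligible_total == 0:
        raise ValueError("need at least one patient and one eligible patient")
    eligible_kept = sum(1 for p in kept if p.eligible)
    return {
        "workload_reduction": 1 - len(kept) / len(patients),
        "sensitivity": eligible_kept / eligible_total,
    }


EXCLUSIONS = [
    ("egfr", lambda v: v < 30),  # hypothetical renal exclusion
    ("age", lambda v: v > 80),   # hypothetical age exclusion
]

PATIENTS = [
    Patient({"egfr": 25.0, "age": 55.0}, eligible=False),  # dropped
    Patient({"egfr": None, "age": 62.0}, eligible=True),   # kept, egfr missing
    Patient({"egfr": 80.0, "age": 85.0}, eligible=False),  # dropped
    Patient({"egfr": 75.0, "age": 60.0}, eligible=True),   # kept
    Patient({"egfr": 90.0, "age": None}, eligible=False),  # kept, age missing
]

print(summarize(PATIENTS, EXCLUSIONS))
```

In this invented example the list shrinks by 40% while every eligible patient remains on it; replacing the exclusion rules with inclusion rules that require documented values would drop the second patient and thereby lower sensitivity.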

The potential benefit of a CTRSS on the composition of a trial’s participants has been insufficiently explored so far. Because patient demographics should be easily obtainable for all experiments comparing manual and CTRSS-aided recruitment, we suggest including them in future publications.

Future Directions

We found that most articles describe the characteristics and operating principles of their CTRSS reasonably well, but all lacked detail in some regard. Intermediary criteria representation, terminologies of the patient data, and an evaluation of the system’s effects were often missing. Many authors present prototypes of their CTRSS directly after finishing its design and fail to report on its outcome and usage. We encourage more follow-up publications on the experiences with existing CTRSS such as those by Embi et al [51], Embi and Leonard [52], and Dugas et al [47]. To strengthen the comprehensibility and usefulness of future reports, we propose a list of essential elements that should be included (Textbox 1).

Textbox 1. Essential elements to be included in future CTRSS studies.

Clinical Context

- Number of trials that the CTRSS has been evaluated for
- Length of time the CTRSS has been in use
- Brief description and number of sites that use the CTRSS

Input

- Representation format of eligibility criteria in the CTRSS
- Comparison of original and computable eligibility criteria for an exemplary trial
- Summary of how well the eligibility criteria could be translated for all other trials (if any)
- Details on the translation process
- Representation of patient data in the CTRSS
- Details on the patient data source (eg, purpose, terminologies)

Working Principle

- What triggers an eligibility assessment?
- How are eligibility criteria and patient data compared?
- How is the result of the assessment communicated, when is it communicated, and to whom?

Outcome

- Recruitment process before introduction of CTRSS including perceived problems
- Recruitment process following introduction of CTRSS
- Patient numbers and time spent for each step

In their review of patient cohort identification systems in general, Shivade et al [21] found a “growing trend in the areas of machine learning and data mining” and believe these necessary to develop generalizable solutions. For CTRSS in particular, this trend has not yet manifested in the literature. Only Zhang et al [127] and Köpcke et al [70] report on experiments to exploit these techniques for recruitment purposes, but both are still in a prototype stage. Machine learning promises more independence from the individual representation of patient data in a hospital and better portability. Still more data are needed to assess advantages and disadvantages and to explore hybrid solutions.

Current publications in the area of CTRSS are still too focused on, and sometimes limited to, technical aspects of system setup and the accuracy of its eligibility assessment. After review of most of the existing literature, we believe that the impact of a CTRSS on a given recruitment process is determined more by the context of the CTRSS (ie, the available patient data, its integration in trial and clinical workflows, and its attraction to users) than by these technical aspects. Therefore, what is needed are research projects to evaluate how a CTRSS can be embedded in different recruitment workflows, the characteristics of trials that profit from CTRSS, different designs for user interaction, and the outcomes of CTRSS in relation to these parameters.

Conclusions

We found that differences in the setup of CTRSS are due to existing infrastructure and particularities of the recruitment process, particularly the target user of the CTRSS (eg, treating physician, study nurse) and the prior recruitment problem (eg, failure to identify, failure to communicate). Yet, many questions remain open in defining when and how CTRSS can best improve recruitment processes in clinical trials. Based on the questions that remained open in our analysis of many of the 101 articles, we propose an item list that should be considered for future publications on CTRSS design, implementation, and evaluation. This should ensure that CTRSS setup and background, their integration in research processes, and their outcome results are sufficiently described to allow researchers to better learn from others' experiences.

Acknowledgments

We acknowledge support by Deutsche Forschungsgemeinschaft and Friedrich-Alexander-Universität Erlangen-Nürnberg (FAU) within the funding program Open Access Publishing.

Abbreviations

- CDE: common data elements
- CTRSS: clinical trial recruitment support systems
- EHR: electronic health record
- ERGO: Eligibility Rule Grammar and Ontology
- HIS: hospital information system
- ICD: International Classification of Diseases
- LOINC: Logical Observation Identifiers Names and Codes
- MED: Medical Entities Dictionary
- MLM: Medical Logic Module
- NCI: National Cancer Institute
- NLP: natural language processing
- SNOMED CT: Systematized Nomenclature of Medicine Clinical Terms
- SQL: Structured Query Language
- UMLS: Unified Medical Language System

Multimedia Appendix 1

Table of publications included in qualitative analysis together with major CTRSS characteristics.

Footnotes

Authors' Contributions: FK conducted the review, wrote, and reviewed the manuscript. HUP wrote and reviewed the manuscript.

Conflicts of Interest: None declared.

References

- 1. Tassignon JP, Sinackevich N. Speeding the Critical Path. Appl Clin Trials. 2004 Jan;13(1):42. http://www.appliedclinicaltrialsonline.com/appliedclinicaltrials/article/articleDetail.jsp?id=82018.

- 2. Sullivan J. Subject recruitment and retention: Barriers to success. Appl Clin Trials. 2004 Apr;:50–54. http://www.appliedclinicaltrialsonline.com/appliedclinicaltrials/article/articleDetail.jsp?id=82018.

- 3. Nkoy FL, Wolfe D, Hales JW, Lattin G, Rackham M, Maloney CG. Enhancing an existing clinical information system to improve study recruitment and census gathering efficiency. AMIA Annu Symp Proc. 2009;2009:476–80. http://europepmc.org/abstract/MED/20351902.

- 4. Hunninghake DB, Darby CA, Probstfield JL. Recruitment experience in clinical trials: literature summary and annotated bibliography. Control Clin Trials. 1987 Dec;8(4 Suppl):6S–30S. doi: 10.1016/0197-2456(87)90004-3.

- 5. Gotay CC. Accrual to cancer clinical trials: directions from the research literature. Soc Sci Med. 1991;33(5):569–77. doi: 10.1016/0277-9536(91)90214-w.

- 6. Ellis PM. Attitudes towards and participation in randomised clinical trials in oncology: a review of the literature. Ann Oncol. 2000 Aug;11(8):939–45. doi: 10.1023/a:1008342222205. http://annonc.oxfordjournals.org/cgi/pmidlookup?view=long&pmid=11038029.

- 7. Collins JF, Williford WO, Weiss DG, Bingham SF, Klett CJ. Planning patient recruitment: fantasy and reality. Stat Med. 1984 Dec;3(4):435–43. doi: 10.1002/sim.4780030425.

- 8. Campbell MK, Snowdon C, Francis D, Elbourne D, McDonald AM, Knight R, Entwistle V, Garcia J, Roberts I, Grant A, Grant A, STEPS group. Recruitment to randomised trials: strategies for trial enrollment and participation study. The STEPS study. Health Technol Assess. 2007 Nov;11(48):iii, ix–105. doi: 10.3310/hta11480. http://www.journalslibrary.nihr.ac.uk/hta/volume-11/issue-48.

- 9. Taylor KM, Kelner M. Interpreting physician participation in randomized clinical trials: the Physician Orientation Profile. J Health Soc Behav. 1987 Dec;28(4):389–400.

- 10. Siminoff LA, Zhang A, Colabianchi N, Sturm CM, Shen Q. Factors that predict the referral of breast cancer patients onto clinical trials by their surgeons and medical oncologists. J Clin Oncol. 2000 Mar;18(6):1203–11. doi: 10.1200/JCO.2000.18.6.1203.

- 11. Mannel RS, Walker JL, Gould N, Scribner DR, Kamelle S, Tillmanns T, McMeekin DS, Gold MA. Impact of individual physicians on enrollment of patients into clinical trials. Am J Clin Oncol. 2003 Apr;26(2):171–3. doi: 10.1097/01.COC.0000017798.43288.7C.

- 12. Rahman M, Morita S, Fukui T, Sakamoto J. Physicians' reasons for not entering their patients in a randomized controlled trial in Japan. Tohoku J Exp Med. 2004 Jun;203(2):105–9. doi: 10.1620/tjem.203.105. http://japanlinkcenter.org/JST.JSTAGE/tjem/203.105?lang=en&from=PubMed.

- 13. Comis RL, Miller JD, Aldigé CR, Krebs L, Stoval E. Public attitudes toward participation in cancer clinical trials. J Clin Oncol. 2003 Mar 1;21(5):830–5. doi: 10.1200/JCO.2003.02.105.

- 14. Grunfeld E, Zitzelsberger L, Coristine M, Aspelund F. Barriers and facilitators to enrollment in cancer clinical trials: qualitative study of the perspectives of clinical research associates. Cancer. 2002 Oct 1;95(7):1577–83. doi: 10.1002/cncr.10862. http://dx.doi.org/10.1002/cncr.10862.

- 15. McDonald AM, Knight RC, Campbell MK, Entwistle VA, Grant AM, Cook JA, Elbourne DR, Francis D, Garcia J, Roberts I, Snowdon C. What influences recruitment to randomised controlled trials? A review of trials funded by two UK funding agencies. Trials. 2006;7:9. doi: 10.1186/1745-6215-7-9. http://www.trialsjournal.com/content/7//9.

- 16. Toerien M, Brookes ST, Metcalfe C, de Salis I, Tomlin Z, Peters TJ, Sterne J, Donovan JL. A review of reporting of participant recruitment and retention in RCTs in six major journals. Trials. 2009;10:52. doi: 10.1186/1745-6215-10-52. http://www.trialsjournal.com/content/10//52.

- 17. Cuggia M, Besana P, Glasspool D. Comparing semi-automatic systems for recruitment of patients to clinical trials. Int J Med Inform. 2011 Jun;80(6):371–88. doi: 10.1016/j.ijmedinf.2011.02.003.

- 18. Coorevits P, Sundgren M, Klein GO, Bahr A, Claerhout B, Daniel C, Dugas M, Dupont D, Schmidt A, Singleton P, De Moor G, Kalra D. Electronic health records: new opportunities for clinical research. J Intern Med. 2013 Dec;274(6):547–60. doi: 10.1111/joim.12119.

- 19. Trinczek B, Köpcke F, Leusch T, Majeed RW, Schreiweis B, Wenk J, Bergh B, Ohmann C, Röhrig R, Prokosch HU, Dugas M. Design and multicentric implementation of a generic software architecture for patient recruitment systems re-using existing HIS tools and routine patient data. Appl Clin Inform. 2014;5(1):264–83. doi: 10.4338/ACI-2013-07-RA-0047. http://europepmc.org/abstract/MED/24734138.

- 20. Hripcsak G, Knirsch C, Zhou L, Wilcox A, Melton G. Bias associated with mining electronic health records. J Biomed Discov Collab. 2011;6:48–52. doi: 10.5210/disco.v6i0.3581. http://www.uic.edu/htbin/cgiwrap/bin/ojs/index.php/jbdc/article/view/3581/2997.

- 21. Shivade C, Raghavan P, Fosler-Lussier E, Embi PJ, Elhadad N, Johnson SB, Lai AM. A review of approaches to identifying patient phenotype cohorts using electronic health records. J Am Med Inform Assoc. 2014;21(2):221–30. doi: 10.1136/amiajnl-2013-001935. http://jamia.bmj.com/cgi/pmidlookup?view=long&pmid=24201027.

- 22. Andersen MR, Schroeder T, Gaul M, Moinpour C, Urban N. Using a population-based cancer registry for recruitment of newly diagnosed patients with ovarian cancer. Am J Clin Oncol. 2005 Feb;28(1):17–20. doi: 10.1097/01.coc.0000138967.62532.2e.

- 23. Ashburn A, Pickering RM, Fazakarley L, Ballinger C, McLellan DL, Fitton C. Recruitment to a clinical trial from the databases of specialists in Parkinson's disease. Parkinsonism Relat Disord. 2007 Feb;13(1):35–9. doi: 10.1016/j.parkreldis.2006.06.004.

- 24. Tu SW, Peleg M, Carini S, Bobak M, Ross J, Rubin D, Sim I. A practical method for transforming free-text eligibility criteria into computable criteria. J Biomed Inform. 2011 Apr;44(2):239–50. doi: 10.1016/j.jbi.2010.09.007. http://linkinghub.elsevier.com/retrieve/pii/S1532-0464(10)00139-5.

- 25. Patel CO, Weng C. ECRL: an eligibility criteria representation language based on the UMLS Semantic Network. AMIA Annu Symp Proc. 2008:1084.

- 26. Friedlin J, Overhage M, Al-Haddad MA, Waters JA, Aguilar-Saavedra JJ, Kesterson J, Schmidt M. Comparing methods for identifying pancreatic cancer patients using electronic data sources. AMIA Annu Symp Proc. 2010;2010:237–41. http://europepmc.org/abstract/MED/21346976.

- 27. Carroll RJ, Eyler AE, Denny JC. Naïve Electronic Health Record phenotype identification for Rheumatoid arthritis. AMIA Annu Symp Proc. 2011;2011:189–96. http://europepmc.org/abstract/MED/22195070.

- 28. Afrin LB, Oates JC, Boyd CK, Daniels MS. Leveraging of open EMR architecture for clinical trial accrual. AMIA Annu Symp Proc. 2003:16–20. http://europepmc.org/abstract/MED/14728125.

- 29. Ahmad F, Gupta R, Kurz M. Real time electronic patient study enrollment system in emergency room. AMIA Annu Symp Proc. 2005:881. http://europepmc.org/abstract/MED/16779168.

- 30. Ainsworth J, Buchan I. Preserving consent-for-consent with feasibility-assessment and recruitment in clinical studies: FARSITE architecture. Stud Health Technol Inform. 2009;147:137–48.

- 31. Ash N, Ogunyemi O, Zeng Q, Ohno-Machado L. Finding appropriate clinical trials: evaluating encoded eligibility criteria with incomplete data. Proc AMIA Symp. 2001:27–31. http://europepmc.org/abstract/MED/11825151.

- 32. Atkinson NL, Massett HA, Mylks C, Hanna B, Deering MJ, Hesse BW. User-centered research on breast cancer patient needs and preferences of an Internet-based clinical trial matching system. J Med Internet Res. 2007;9(2):e13. doi: 10.2196/jmir.9.2.e13. http://www.jmir.org/2007/2/e13/

- 33. Bache R, Miles S, Taweel A. An adaptable architecture for patient cohort identification from diverse data sources. J Am Med Inform Assoc. 2013 Dec;20(e2):e327–33. doi: 10.1136/amiajnl-2013-001858.

- 34. Beauharnais CC, Larkin ME, Zai AH, Boykin EC, Luttrell J, Wexler DJ. Efficacy and cost-effectiveness of an automated screening algorithm in an inpatient clinical trial. Clin Trials. 2012 Apr;9(2):198–203. doi: 10.1177/1740774511434844.

- 35. Besana P, Cuggia M, Zekri O, Bourde A, Burgun A. Using semantic web technologies for clinical trial recruitment. The Semantic Web-ISWC 2010: 9th International Semantic Web Conference, ISWC 2010; November 7-11, 2010; Shanghai, China. Springer; 2010. p. 7748.

- 36. Bhanja S, Fletcher-Heath LM, Hall LO, Goldgof DB, Krischer JP. A qualitative expert system for clinical trial assignment. FLAIRS-98: Proceedings of the Eleventh International Florida Artificial Intelligence Research Symposium Conference; Eleventh International Florida Artificial Intelligence Research Symposium Conference; May 18-20, 1998; Sanibel Island, Florida. AAAI Press; 1998. p. 84.

- 37. Boland MR, Miotto R, Gao J, Weng C. Feasibility of feature-based indexing, clustering, and search of clinical trials. A case study of breast cancer trials from ClinicalTrials.gov. Methods Inf Med. 2013;52(5):382–94. doi: 10.3414/ME12-01-0092. http://europepmc.org/abstract/MED/23666475.

- 38. Breitfeld PP, Weisburd M, Overhage JM, Sledge G, Tierney WM. Pilot study of a point-of-use decision support tool for cancer clinical trials eligibility. J Am Med Inform Assoc. 1999 Dec;6(6):466–77. doi: 10.1136/jamia.1999.0060466. http://jamia.bmj.com/cgi/pmidlookup?view=long&pmid=10579605.

- 39. Breitfeld PP, Ullrich F, Anderson J, Crist WM. Web-based decision support for clinical trial eligibility determination in an international clinical trials network. Control Clin Trials. 2003 Dec;24(6):702–10. doi: 10.1016/s0197-2456(03)00069-2.

- 40. Butte AJ, Weinstein DA, Kohane IS. Enrolling patients into clinical trials faster using RealTime Recuiting. Proc AMIA Symp. 2000:111–5. http://europepmc.org/abstract/MED/11079855.

- 41.Cardozo E, Meurer WJ, Smith BL, Holschen JC. Utility of an automated notification system for recruitment of research subjects. Emerg Med J. 2010 Oct;27(10):786–7. doi: 10.1136/emj.2009.081299. [DOI] [PubMed] [Google Scholar]

- 42.Carlson RW, Tu SW, Lane NM, Lai TL, Kemper CA, Musen MA, Shortliffe EH. Computer-based screening of patients with HIV/AIDS for clinical-trial eligibility. Online J Curr Clin Trials. 1995 Mar 28;Doc No 179:[3347 words; 32 paragraphs]. [PubMed] [Google Scholar]

- 43.Deshmukh VG, Meystre SM, Mitchell JA. Evaluating the informatics for integrating biology and the bedside system for clinical research. BMC Med Res Methodol. 2009;9:70. doi: 10.1186/1471-2288-9-70. http://www.biomedcentral.com/1471-2288/9/70. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 44.Duftschmid G, Gall W, Eigenbauer E, Dorda W. Management of data from clinical trials using the ArchiMed system. Med Inform Internet Med. 2002 Jun;27(2):85–98. doi: 10.1080/1463923021000014158. [DOI] [PubMed] [Google Scholar]

- 45. Dugas M, Lange M, Berdel WE, Müller-Tidow C. Workflow to improve patient recruitment for clinical trials within hospital information systems - a case-study. Trials. 2008;9:2. doi: 10.1186/1745-6215-9-2. http://www.trialsjournal.com/content/9//2.

- 46. Dugas M, Amler S, Lange M, Gerss J, Breil B, Köpcke W. Estimation of patient accrual rates in clinical trials based on routine data from hospital information systems. Methods Inf Med. 2009;48(3):263–6. doi: 10.3414/ME0582.

- 47. Dugas M, Lange M, Müller-Tidow C, Kirchhof P, Prokosch HU. Routine data from hospital information systems can support patient recruitment for clinical studies. Clin Trials. 2010 Apr;7(2):183–9. doi: 10.1177/1740774510363013.

- 48. Embi PJ, Jain A, Harris CM. Physician perceptions of an Electronic Health Record-based Clinical Trial Alert system: a survey of study participants. AMIA Annu Symp Proc. 2005:949. http://europepmc.org/abstract/MED/16779236.

- 49. Embi PJ, Jain A, Clark J, Bizjack S, Hornung R, Harris CM. Effect of a clinical trial alert system on physician participation in trial recruitment. Arch Intern Med. 2005 Oct 24;165(19):2272–7. doi: 10.1001/archinte.165.19.2272. http://europepmc.org/abstract/MED/16246994.

- 50. Embi PJ, Jain A, Clark J, Harris CM. Development of an electronic health record-based Clinical Trial Alert system to enhance recruitment at the point of care. AMIA Annu Symp Proc. 2005:231–5. http://europepmc.org/abstract/MED/16779036.

- 51. Embi PJ, Jain A, Harris CM. Physicians' perceptions of an electronic health record-based clinical trial alert approach to subject recruitment: a survey. BMC Med Inform Decis Mak. 2008;8:13. doi: 10.1186/1472-6947-8-13. http://www.biomedcentral.com/1472-6947/8/13.

- 52. Embi PJ, Leonard AC. Evaluating alert fatigue over time to EHR-based clinical trial alerts: findings from a randomized controlled study. J Am Med Inform Assoc. 2012 Jun;19(e1):e145–8. doi: 10.1136/amiajnl-2011-000743. http://jamia.bmj.com/cgi/pmidlookup?view=long&pmid=22534081.

- 53. Fernández-Breis JT, Maldonado JA, Marcos M, Legaz-García Mdel C, Moner D, Torres-Sospedra J, Esteban-Gil A, Martínez-Salvador B, Robles M. Leveraging electronic healthcare record standards and semantic web technologies for the identification of patient cohorts. J Am Med Inform Assoc. 2013 Dec;20(e2):e288–96. doi: 10.1136/amiajnl-2013-001923.

- 54. Ferranti JM, Gilbert W, McCall J, Shang H, Barros T, Horvath MM. The design and implementation of an open-source, data-driven cohort recruitment system: the Duke Integrated Subject Cohort and Enrollment Research Network (DISCERN). J Am Med Inform Assoc. 2012 Jun;19(e1):e68–75. doi: 10.1136/amiajnl-2011-000115. http://jamia.bmj.com/cgi/pmidlookup?view=long&pmid=21946237.

- 55. Fink E, Hall LO, Goldgof DB, Goswami BD, Boonstra M, Krischer JP. Experiments on the automated selection of patients for clinical trials. Proceedings of the IEEE International Conference on Systems, Man, and Cybernetics; IEEE International Conference on Systems, Man, and Cybernetics; October 5-8, 2003; Washington, DC. 2003. pp. 4541–4545.

- 56. Fink E, Kokku PK, Nikiforou S, Hall LO, Goldgof DB, Krischer JP. Selection of patients for clinical trials: an interactive web-based system. Artif Intell Med. 2004 Jul;31(3):241–54. doi: 10.1016/j.artmed.2004.01.017.

- 57. Gennari JH, Reddy M. Participatory design and an eligibility screening tool. Proc AMIA Symp. 2000:290–4. http://europepmc.org/abstract/MED/11079891.

- 58. Gennari JH, Sklar D, Silva J. Cross-tool communication: from protocol authoring to eligibility determination. Proc AMIA Symp. 2001:199–203. http://europepmc.org/abstract/MED/11825180.

- 59. Graham AL, Cha S, Cobb NK, Fang Y, Niaura RS, Mushro A. Impact of seasonality on recruitment, retention, adherence, and outcomes in a web-based smoking cessation intervention: randomized controlled trial. J Med Internet Res. 2013;15(11):e249. doi: 10.2196/jmir.2880. http://www.jmir.org/2013/11/e249/

- 60. Grundmeier RW, Swietlik M, Bell LM. Research subject enrollment by primary care pediatricians using an electronic health record. AMIA Annu Symp Proc. 2007:289–93. http://europepmc.org/abstract/MED/18693844.

- 61. Harris PA, Lane L, Biaggioni I. Clinical research subject recruitment: the Volunteer for Vanderbilt Research Program www.volunteer.mc.vanderbilt.edu. J Am Med Inform Assoc. 2005 Dec;12(6):608–13. doi: 10.1197/jamia.M1722. http://jamia.bmj.com/cgi/pmidlookup?view=long&pmid=16049231.

- 62. Heinemann S, Thüring S, Wedeken S, Schäfer T, Scheidt-Nave C, Ketterer M, Himmel W. A clinical trial alert tool to recruit large patient samples and assess selection bias in general practice research. BMC Med Res Methodol. 2011;11:16. doi: 10.1186/1471-2288-11-16. http://www.biomedcentral.com/1471-2288/11/16.

- 63. Herasevich V, Pieper MS, Pulido J, Gajic O. Enrollment into a time sensitive clinical study in the critical care setting: results from computerized septic shock sniffer implementation. J Am Med Inform Assoc. 2011 Oct;18(5):639–44. doi: 10.1136/amiajnl-2011-000228. http://jamia.bmj.com/cgi/pmidlookup?view=long&pmid=21508415.

- 64. Jenders RA, Hripcsak G, Sideli RV, DuMouchel W, Zhang H, Cimino JJ, Johnson SB, Sherman EH, Clayton PD. Medical decision support: experience with implementing the Arden Syntax at the Columbia-Presbyterian Medical Center. Proc Annu Symp Comput Appl Med Care. 1995:169–73. http://europepmc.org/abstract/MED/8563259.

- 65. Kamal J, Pasuparthi K, Rogers P, Buskirk J, Mekhjian H. Using an information warehouse to screen patients for clinical trials: a prototype. AMIA Annu Symp Proc. 2005:1004. http://europepmc.org/abstract/MED/16779291.

- 66. Kho A, Zafar A, Tierney W. Information technology in PBRNs: the Indiana University Medical Group Research Network (IUMG ResNet) experience. J Am Board Fam Med. 2007 Apr;20(2):196–203. doi: 10.3122/jabfm.2007.02.060114. http://www.jabfm.org/cgi/pmidlookup?view=long&pmid=17341757.

- 67. Koca M, Husmann G, Jesgarz J, Overath M, Brandts C, Serve H. A special query tool in the hospital information system to recognize patients and to increase patient numbers for clinical trials. Stud Health Technol Inform. 2012;180:1180–1.

- 68. Kokku PK, Hall LO, Goldgof DB, Fink E, Krischer JP. A cost-effective agent for clinical trial assignment. Proceedings of the 2002 IEEE International Conference on Systems, Man & Cybernetics: bridging the digital divide: cyber-development, human progress, peace and prosperity; 2002 IEEE International Conference on Systems, Man & Cybernetics; October 6-9, 2002; Yasmine Hammamet, Tunisia. 2002.

- 69. Köpcke F, Kraus S, Scholler A, Nau C, Schüttler J, Prokosch HU, Ganslandt T. Secondary use of routinely collected patient data in a clinical trial: an evaluation of the effects on patient recruitment and data acquisition. Int J Med Inform. 2013 Mar;82(3):185–92. doi: 10.1016/j.ijmedinf.2012.11.008.

- 70. Köpcke F, Lubgan D, Fietkau R, Scholler A, Nau C, Stürzl M, Croner R, Prokosch HU, Toddenroth D. Evaluating predictive modeling algorithms to assess patient eligibility for clinical trials from routine data. BMC Med Inform Decis Mak. 2013;13:134. doi: 10.1186/1472-6947-13-134. http://www.biomedcentral.com/1472-6947/13/134.

- 71. Lane NM, Kemper CA, Fodor M, Tu SW, Lai T, Yvon M, Carlson RW, Deresinski SC, Musen MA. Opportunity to enhance accrual to clinical trials using a microcomputer. International Conference On AIDS: Science Challenging AIDS; June 16-21, 1991; Florence, Italy. 1991. pp. 16–21.

- 72. Lee Y, Dinakarpandian D, Katakam N, Owens D. MindTrial: An Intelligent System for Clinical Trials. AMIA Annu Symp Proc. 2010;2010:442–6. http://europepmc.org/abstract/MED/21347017.

- 73. Li L, Chase HS, Patel CO, Friedman C, Weng C. Comparing ICD9-encoded diagnoses and NLP-processed discharge summaries for clinical trials pre-screening: a case study. AMIA Annu Symp Proc. 2008:404–8. http://europepmc.org/abstract/MED/18999285.

- 74. Lonsdale D, Tustison C, Parker C, Embley DW. Formulating queries for assessing clinical trial eligibility. Proceedings of the 11th international conference on Applications of Natural Language to Information Systems; NLDB’06: 11th international conference on Applications of Natural Language to Information Systems; May 31-June 2, 2006; Klagenfurt, Austria. Berlin: Springer; 2006. pp. 82–93.

- 75. Lonsdale DW, Tustison C, Parker CG, Embley DW. Assessing clinical trial eligibility with logic expression queries. Data & Knowledge Engineering. 2008 Jul;66(1). doi: 10.1016/j.datak.2007.07.005.

- 76. Majeed RW, Röhrig R. Identifying patients for clinical trials using fuzzy ternary logic expressions on HL7 messages. Stud Health Technol Inform. 2011;169:170–4.

- 77. Marcos M, Maldonado JA, Martínez-Salvador B, Boscá D, Robles M. Interoperability of clinical decision-support systems and electronic health records using archetypes: a case study in clinical trial eligibility. J Biomed Inform. 2013 Aug;46(4):676–89. doi: 10.1016/j.jbi.2013.05.004. http://linkinghub.elsevier.com/retrieve/pii/S1532-0464(13)00070-1.

- 78. McGregor J, Brooks C, Chalasani P, Chukwuma J, Hutchings H, Lyons RA, Lloyd K. The Health Informatics Trial Enhancement Project (HITE): Using routinely collected primary care data to identify potential participants for a depression trial. Trials. 2010;11:39. doi: 10.1186/1745-6215-11-39. http://www.trialsjournal.com/content/11//39.

- 79. Metz JM, Coyle C, Hudson C, Hampshire M. An Internet-based cancer clinical trials matching resource. J Med Internet Res. 2005 Jul 1;7(3):e24. doi: 10.2196/jmir.7.3.e24. http://www.jmir.org/2005/3/e24/

- 80. Miller JL. The EHR solution to clinical trial recruitment in physician groups. Health Manag Technol. 2006 Dec;27(12):22–5.

- 81. Miller DM, Fox R, Atreja A, Moore S, Lee JC, Fu AZ, Jain A, Saupe W, Chakraborty S, Stadtler M, Rudick RA. Using an automated recruitment process to generate an unbiased study sample of multiple sclerosis patients. Telemed J E Health. 2010 Feb;16(1):63–8. doi: 10.1089/tmj.2009.0078. http://europepmc.org/abstract/MED/20064056.

- 82. Mosis G, Koes B, Dieleman J, Stricker BCh, van der Lei J, Sturkenboom MC. Randomised studies in general practice: how to integrate the electronic patient record. Inform Prim Care. 2005;13(3):209–13. doi: 10.14236/jhi.v13i3.599.

- 83. Mosis G, Dieleman JP, Stricker BCh, van der Lei J, Sturkenboom MC. A randomized database study in general practice yielded quality data but patient recruitment in routine consultation was not practical. J Clin Epidemiol. 2006 May;59(5):497–502. doi: 10.1016/j.jclinepi.2005.11.007.

- 84. Murphy SN, Morgan MM, Barnett GO, Chueh HC. Optimizing healthcare research data warehouse design through past COSTAR query analysis. Proc AMIA Symp. 1999:892–6. http://europepmc.org/abstract/MED/10566489.

- 85. Murphy SN, Barnett GO, Chueh HC. Visual query tool for finding patient cohorts from a clinical data warehouse of the partners HealthCare system. Proc AMIA Symp. 2000:1174. http://europepmc.org/abstract/MED/11080028.

- 86. Musen MA, Carlson RW, Fagan LM, Deresinski SC, Shortliffe EH. T-HELPER: automated support for community-based clinical research. Proc Annu Symp Comput Appl Med Care. 1992:719–23. http://europepmc.org/abstract/MED/1482965.

- 87. Nalichowski R, Keogh D, Chueh HC, Murphy SN. Calculating the benefits of a Research Patient Data Repository. AMIA Annu Symp Proc. 2006:1044. http://europepmc.org/abstract/MED/17238663.