Abstract

Background

The attentional blink (AB) phenomenon was used to assess the effect of emotional information on early visual attention in typically developing (TD) children and children with autism spectrum disorders (ASD). The AB effect is the momentary perceptual unawareness that follows target identification in a rapid serial visual presentation stream. It is abolished or reduced for emotional stimuli, indicating that emotional information has privileged access to early visual attention processes.

Method

We examined the AB effect for faces with neutral and angry facial expressions in 8–14-year-old children with and without an ASD diagnosis.

Results

Children with ASD exhibited an AB effect of the same magnitude as typically developing children for both neutral and angry faces.

Conclusions

Early visual attention to emotional facial expressions was preserved in children with ASD.

Attention capacity is limited, but emotionally significant information can overcome this limitation to capture attention. In the auditory domain this is demonstrated by the well-known “cocktail party” effect, in which we notice our own name, but not much else, in another group’s conversation. In the visual domain, the attentional blink (AB) phenomenon is abolished or reduced for emotional stimuli. AB refers to unawareness of a second target (Target2) presented immediately (e.g., 200–400 ms) but not later (e.g., 600 ms) after identification of the first target (Target1). This lapse in perceptual awareness immediately following target identification is akin to a “blink” and is thought to reflect limited attentional resources: resources are momentarily depleted by their engagement in the identification of Target1 and recover over time (Fox, Russo, & Georgiou, 2005). However, emotionally significant Target2 stimuli (e.g., emotionally arousing words such as “NAKED”) reduce or abolish the AB effect; emotional Target2 stimuli are perceived with greater accuracy than neutral Target2 stimuli immediately after identification of Target1 (Anderson, 2005). The AB paradigm, therefore, examines the influence of emotional information that is encoded implicitly, that is, without requiring explicit evaluation of emotional valence. Reduction of the AB effect with emotional information indicates that emotional valence influences early visual attention.

Prior brain imaging and neuropsychological studies suggest that testing emotional information processing in early visual attention is an assay of amygdala function. Structural imaging studies of human and non-human primates showed that the amygdala has direct connections with cortical (prefrontal cortex) and subcortical (pulvinar) areas that are involved in visual attention (Adolphs, 2003; Amaral, Price, Pitkanen, & Carmichael, 1992; Pessoa & Adolphs, 2010). Bilateral lesions to the amygdala led to impaired recognition of fearful facial expressions (Adolphs, Tranel, Damasio, & Damasio, 1994). Adults with post-traumatic stress disorder, a disorder characterized by atypical amygdala function, showed an enhanced amygdala response when disengaging attention from threatening faces (El Khoury-Malhame et al., 2011). The AB effect for emotional information depends upon the amygdala, as adults with bilateral amygdala damage failed to show a reduced AB effect for emotional words (Anderson & Phelps, 2001). Thus, bilateral amygdala damage eliminated privileged access to emotional information in early visual attention. Functional neuroimaging studies of the AB effect showed that increased amygdala activation correlated with a reduced AB effect for emotional stimuli, providing direct evidence that the amygdala mediates emotional modulation of the AB effect (Lim, Padmala, & Pessoa, 2009). The sensitivity of the emotional AB effect to amygdala damage in neuropsychological patients, together with amygdala engagement in healthy individuals, supports its use as an assay of the amygdala's contributions to early visual attention.

Histologic and neuroimaging evidence documents altered amygdala development and function in ASD (Amaral, Schumann, & Nordahl, 2008); this alteration is posited to underlie core social impairments in ASD (Dawson, Webb, & McPartland, 2005; Schultz, 2005). Tasks assessing core social impairments can be parsed into two categories: explicit evaluation of emotional information (‘explicit’) vs. implicit encoding of emotional information (‘implicit’). Most behavioral studies in the ASD literature have used explicit tasks that require direct comparison of facial expressions or pairing of expressions with emotional words. Adults and children with ASD have difficulty with facial expression recognition (Boraston, Blakemore, Chilvers, & Skuse, 2007; Davies, Bishop, Manstead, & Tantam, 1994; Gross, 2008; Hobson, 1986; Macdonald et al., 1989; Tardif, Lainé, Rodriguez, & Gepner, 2007), but this is not a universal finding (see Harms, Martin, & Wallace, 2010, for a comprehensive review). In comparison, few ASD studies outside of the neuroimaging environment have used implicit tasks. Adults with ASD demonstrated an intact AB effect with neutral words but failed to show a reduced AB effect for identifying emotional words (Corden, Chilvers, & Skuse, 2008; Gaigg & Bowler, 2009), indicating that early visual attention is selectively disrupted for emotional information. In contrast, adults with ASD showed an intact spatial attention bias to faces with emotional expressions (Monk et al., 2010). Based on these findings, it is not possible to discern whether the impairment of implicit emotional processing in ASD is selective to a stimulus type (words rather than pictures) or to an attentional process (visual perception rather than spatial attention).

The present study examined the emotional modulation of the AB effect in 8–14-year-old children with and without ASD. It is important to investigate this effect in children with ASD for two reasons. First, there are no published studies of implicit emotional processing in early visual attention in children with ASD. In typical development, electrophysiological evidence indicates that visual processing of emotional faces becomes more efficient with age (Batty & Taylor, 2006), and that voluntary attention (indexed by the P300) is greater for angry than happy faces in children but greater for happy than angry faces in adults (Kestenbaum & Nelson, 1992). If such developmental differences are paralleled in ASD, the emotional AB effect may differ between adults and children with ASD. Second, explicit identification of emotion in faces was worse in adults with ASD than in controls, but this finding did not extend to school-age children and adolescents (Rump, Giovannelli, Minshew, & Strauss, 2009). If this developmental difference is paralleled in implicit emotional processing, AB findings from adults with ASD may not extend to children with ASD. In the 8–14-year age range, children are sensitive to emotional faces, such as angry faces (Barnes, Kaplan, & Vaidya, 2007); thus, this is an important period for examining implicit emotional processing. Our AB paradigm used picture stimuli: headshots of dogs as Target1 and faces with neutral or angry expressions as Target2. We used pictures rather than words as targets because language impairments are common in ASD and we wanted to limit their effect on performance. Further, the emotional targets were faces with angry expressions because past findings show that the AB associated with angry faces is more robust than that associated with happy faces (Maratos, 2011).

Methods

Participants

Twenty-five children with an ASD diagnosis and 25 typically developing (TD) control children were recruited for this study. Sixteen additional children were enrolled but excluded because they 1) failed to meet performance criteria (ASD: N=4; TD: N=7) or 2) were removed to create similar gender ratios in the two groups (TD: N=5 females). Children were recruited from the local community via advertisements and from a hospital outpatient clinic specializing in ASD and neuropsychological assessment. All children were between the ages of 8 and 14 years and were required to have a Full Scale IQ ≥ 70, as measured by a Wechsler intelligence scale (Wechsler Intelligence Scale for Children–4th Edition, or Wechsler Abbreviated Scale of Intelligence; Wechsler, 1999, 2003). Written informed consent was obtained from parents, and assent from children, according to Institutional Review Board guidelines.

Children with ASD received a clinical diagnosis (Autism n=13, Asperger’s Syndrome n=9, Pervasive Developmental Disorder-Not Otherwise Specified n=3) based on Diagnostic and Statistical Manual of Mental Disorders, Fourth Edition, Text Revision criteria (American Psychiatric Association, 2000). They also met the criteria for ‘broad ASD’ based on scores from the Autism Diagnostic Interview-Revised (Lord, Rutter, & Le Couteur, 1994) and/or the Autism Diagnostic Observation Schedule (Lord et al., 2000), following criteria established by the Collaborative Programs for Excellence in Autism (Lainhart et al., 2006). Children with ASD were screened through a parent phone interview and excluded if they had any history of known genetic, psychiatric, or neurological disorders (e.g., Fragile X syndrome or Tourette’s syndrome). Stimulant medications were withheld for at least 24 hours prior to testing (n=2). One child was prescribed an anti-psychotic, an anti-depressant, and an anti-cholinergic, and two other children were prescribed melatonin. TD children were screened and excluded if they or a first-degree relative had a developmental, language, learning, neurological, or psychiatric disorder or used psychiatric medication, or if the child met the clinical criteria for a childhood disorder on the Child Symptom Inventory–Fourth Edition or the Child and Adolescent Symptom Inventory (Gadow & Sprafkin, 2000, 2010).

Stimulus materials

All stimuli were displayed on a white background on a 17-inch monitor at a viewing distance of 60 cm using E-Prime version 1.1 (Psychology Software Tools Inc., Pittsburgh, PA). Twenty color photographs of adult faces (10 with neutral and 10 with angry facial expressions) were obtained from the NimStim set (Tottenham et al., 2009); faces did not overlap across tasks. The Emotional Identification Task used six color photographs of adults with angry and neutral facial expressions (three of each gender). The Attentional Blink Task used three classes of stimuli: 1) two color photographs of dogs (from www.dogbreedinfo.com) served as the first target stimuli (Target1); 2) twenty-eight color photographs of scrambled faces served as distractor stimuli, created from the faces of seven females and seven males with neutral expressions using a previously devised method that preserved the face outline and scrambled its interior (Conway, Jones, DeBruine, Little, & Sahraie, 2008); and 3) fourteen color photographs of faces with angry and neutral expressions (seven of each gender; seven with the mouth open and seven with it closed) served as the second target stimuli (Target2). Neutral, angry, and scrambled faces did not differ in lower-level visual characteristics such as luminance and spatial frequency. All photographs were matched for luminance (N. Tottenham, personal communication, February 15, 2012), and spatial frequency, computed using a two-dimensional discrete Fourier transform, did not differ across the three classes of stimuli.
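The Methods state only that spatial frequency was compared using a two-dimensional discrete Fourier transform; the summary statistic is not specified. One plausible summary, assumed here, is the amplitude-weighted mean radial frequency of an image's spectrum, in cycles per image. The NumPy sketch below computes it for a single grayscale image; the function name is hypothetical and this is not the authors' analysis code.

```python
import numpy as np


def mean_spatial_frequency(image: np.ndarray) -> float:
    """Amplitude-weighted mean radial spatial frequency, in cycles per image.

    Assumption: this summary statistic is illustrative; the study does not
    say which statistic was derived from the 2-D DFT.
    """
    img = np.asarray(image, dtype=float)
    if img.ndim != 2:
        raise ValueError("expected a 2-D grayscale image")
    # Subtract the mean so overall luminance (the DC term) does not dominate.
    img = img - img.mean()
    # 2-D discrete Fourier transform, shifted so zero frequency is centred.
    amplitude = np.abs(np.fft.fftshift(np.fft.fft2(img)))
    rows, cols = img.shape
    # Frequency of each coefficient in cycles per image along each axis.
    fy = np.fft.fftshift(np.fft.fftfreq(rows)) * rows
    fx = np.fft.fftshift(np.fft.fftfreq(cols)) * cols
    radius = np.hypot(*np.meshgrid(fy, fx, indexing="ij"))
    mask = radius > 0  # exclude the DC coefficient
    total = amplitude[mask].sum()
    if total == 0:
        raise ValueError("image has no contrast")
    return float((radius[mask] * amplitude[mask]).sum() / total)
```

Applied to every photograph in a class (neutral, angry, scrambled), this yields one value per image, and the three distributions could then be compared statistically; that comparison step is not shown.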

Emotional Identification Task

This task assessed participants’ ability to recognize the emotional valence of faces accurately. It consisted of 6 trials. Participants began the task by pressing the space bar in response to the words “Get Ready!” on the screen. On each trial, an angry or a neutral face was presented centrally for 2000 ms, after which the participant answered the question “Is this person:” by pressing the “1” key for “angry” or the “2” key for “no emotion”. Responses were self-paced. Recognition accuracy of 70% or higher was considered successful emotion discrimination.
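With only six trials, the 70% criterion works out in practice to at least five correct responses, because 4/6 ≈ 67% falls just short. A small Python sketch of that check (both function names are hypothetical) uses integer arithmetic so the boundary is not affected by floating-point rounding:

```python
def min_correct_for_criterion(n_trials: int = 6, criterion_pct: int = 70) -> int:
    """Smallest number of correct trials whose accuracy is >= criterion_pct."""
    # Ceiling division: ceil(criterion_pct * n_trials / 100) using integers only.
    return -(-criterion_pct * n_trials // 100)


def passes_identification(n_correct: int, n_trials: int = 6, criterion_pct: int = 70) -> bool:
    """True if n_correct / n_trials meets the accuracy criterion."""
    return 100 * n_correct >= criterion_pct * n_trials


print(min_correct_for_criterion())  # 5
```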

Attentional Blink Task

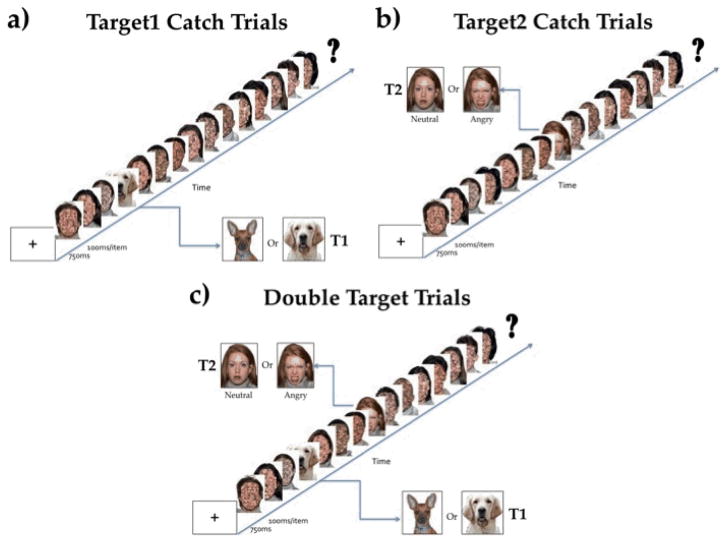

The AB task consisted of 188 trials divided into four blocks of 47 trials each. Participants pressed the space bar in response to the words “Get Ready!” on the screen to start each block and could take a self-paced break between blocks. Each trial began with a fixation cross presented for 750 ms, followed by a rapid serial visual presentation (RSVP) stream of 15 stimuli presented for 100 ms each (see Figure 1). Participants were instructed to look for a dog and were told that a face would sometimes also appear. They were asked to pay close attention because the pictures would appear on the screen very quickly. Each trial ended with the question “Did you see a dog?” on the screen. Participants pressed “1” for “yes” or “2” for “no” on the number pad of the keyboard. Following their response, a second question, “Did you see a face?”, appeared on the screen, and participants again responded using the number pad. Participants had 5000 ms to respond to each question.

Figure 1.

Rapid Serial Visual Presentation (RSVP) stream for the Attentional Blink Task. a) Target1-only catch trials, in which only the dog face was presented; b) Target2-only catch trials, in which only an angry or neutral face was presented; c) double-target trials, in which both Target1 (the dog face) and Target2 (the human face) were presented. Each scrambled-face distractor was presented for 100 ms. At the end of the RSVP stream, participants indicated by key press whether they had seen a dog and a face. Faces with open and closed mouths are counterbalanced across emotion conditions.
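To make the timing concrete, the following is a minimal Python sketch of one double-target RSVP stream under the parameters stated in the Methods: 15 items at 100 ms each, Target1 at serial position 4, 5, or 6, and Target2 two, four, or eight items later, so that Target2 onset follows Target1 onset by 200, 400, or 800 ms. The `Item` class and `build_double_target_stream` function are hypothetical illustrations, not the authors' E-Prime script; positions are counted from 1 and onsets are measured from the start of the stream (after fixation).

```python
from __future__ import annotations

from dataclasses import dataclass

ITEM_MS = 100            # each RSVP item is shown for 100 ms
STREAM_LENGTH = 15       # items per stream
T1_POSITIONS = (4, 5, 6) # allowed serial positions for Target1 (1-indexed)
LAGS = (2, 4, 8)         # Target2 is this many items after Target1


@dataclass
class Item:
    position: int  # 1-indexed serial position in the stream
    kind: str      # "distractor", "T1", or "T2"
    onset_ms: int  # onset relative to the first item of the stream


def build_double_target_stream(t1_position: int, lag: int) -> list[Item]:
    """Lay out one double-target trial; all non-target slots are distractors."""
    if t1_position not in T1_POSITIONS:
        raise ValueError(f"Target1 position must be one of {T1_POSITIONS}")
    if lag not in LAGS:
        raise ValueError(f"lag must be one of {LAGS}")
    t2_position = t1_position + lag
    stream = []
    for pos in range(1, STREAM_LENGTH + 1):
        if pos == t1_position:
            kind = "T1"
        elif pos == t2_position:
            kind = "T2"
        else:
            kind = "distractor"  # scrambled face
        stream.append(Item(pos, kind, (pos - 1) * ITEM_MS))
    return stream
```

With Target1 at position 6 and Lag8, Target2 lands at position 14, so every combination of Target1 position and lag fits within the 15-item stream.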

The AB task consisted of eight types of trials: two types of catch trials, and six types of AB trials that crossed the facial expression of the Target2 stimulus (neutral, angry) with three time lags. Specifically, catch trials included: 1) 30 Target1-only trials, in which only Target1, a dog, appeared in the RSVP stream and no Target2 appeared; and 2) 32 Target2-only trials, in which a Target2 (either an angry or a neutral face) appeared in the RSVP stream without a preceding Target1. These trials were included to ‘catch’ children with a bias to respond “yes” to both questions at the end of the RSVP stream.

On the remaining AB trials, Target2 was a face with either a neutral or an angry expression that followed Target1 at one of three lags: 1) 21 trials with a neutral Target2 appearing 200 ms after Target1 (termed Neutral-Lag2); 2) 21 trials with an angry Target2 appearing 200 ms after Target1 (termed Angry-Lag2); 3) 21 trials with a neutral Target2 appearing 400 ms after Target1 (termed Neutral-Lag4); 4) 21 trials with an angry Target2 appearing 400 ms after Target1 (termed Angry-Lag4); 5) 21 trials with a neutral Target2 appearing 800 ms after Target1 (termed Neutral-Lag8); and 6) 21 trials with an angry Target2 appearing 800 ms after Target1 (termed Angry-Lag8). Within the RSVP stream, the serial position of Target1 was varied among positions 4, 5, and 6. Placing Target1 at these positions reduced the chance of missing it because it occurred too early in the sequence, before children had time to adapt to the RSVP presentation, and also limited the working memory demand of remembering Target1 until the end of the trial. Target2 followed each of these positions at the three lags described above, so that it was the second (Lag2), fourth (Lag4), or eighth (Lag8) stimulus after Target1. All trials were pseudorandomized into blocks so that no more than two successive trials within a block were of the same type. Blocks allowed a break after every 47 trials and were balanced to include the same number of each trial type. Two versions of the task were created to counterbalance block presentation order.
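A hedged Python sketch of the block construction described above: it builds the 188 trials from the stated counts (30 Target1-only, 32 Target2-only, and 21 of each AB type), splits them into four blocks of 47, and reshuffles each block until no trial type occurs more than twice in a row. Because 30 and 21 are not multiples of four, exactly equal counts per block are not possible, so the sketch assumes per-block counts that differ by at most one. Rejection sampling is a simple stand-in for whatever randomization the E-Prime script used, all names are hypothetical, and counterbalancing of block order across the two task versions is not modeled.

```python
from __future__ import annotations

import random
from collections import Counter

# Trial counts per type, as given in the Methods (total 188).
TRIAL_COUNTS = {
    "T1-only": 30, "T2-only": 32,
    "Neutral-Lag2": 21, "Angry-Lag2": 21,
    "Neutral-Lag4": 21, "Angry-Lag4": 21,
    "Neutral-Lag8": 21, "Angry-Lag8": 21,
}
N_BLOCKS = 4
MAX_RUN = 2  # no more than two successive trials of the same type


def split_counts(counts: dict[str, int], n_blocks: int) -> list[Counter]:
    """Spread each trial type across blocks as evenly as possible."""
    blocks = [Counter() for _ in range(n_blocks)]
    cursor = 0  # round-robin pointer so leftover trials go to different blocks
    for trial_type, n in counts.items():
        base, extra = divmod(n, n_blocks)
        for block in blocks:
            block[trial_type] += base
        for _ in range(extra):
            blocks[cursor % n_blocks][trial_type] += 1
            cursor += 1
    return blocks


def longest_run(seq: list[str]) -> int:
    """Length of the longest run of identical consecutive items."""
    best = run = 1
    for prev, cur in zip(seq, seq[1:]):
        run = run + 1 if cur == prev else 1
        best = max(best, run)
    return best


def shuffle_block(block: Counter, rng: random.Random, max_tries: int = 10_000) -> list[str]:
    """Rejection-sample an order with no run longer than MAX_RUN."""
    trials = list(block.elements())
    for _ in range(max_tries):
        rng.shuffle(trials)
        if longest_run(trials) <= MAX_RUN:
            return list(trials)
    raise RuntimeError("no valid order found; increase max_tries")


def make_session(seed: int = 0) -> list[list[str]]:
    """Return four shuffled blocks of trial-type labels."""
    rng = random.Random(seed)
    return [shuffle_block(b, rng) for b in split_counts(TRIAL_COUNTS, N_BLOCKS)]
```

With these counts, the round-robin split happens to give every block exactly 47 trials, since the eight leftover trials divide evenly across four blocks.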

Procedure

Participants completed 20 practice trials and 188 test trials of the AB task as part of a larger study examining cognitive control in ASD, in the same session as cognitive testing. In addition, 13 children in the TD group, who had been recruited for a study on typical development, completed the explicit Emotional Identification Task.

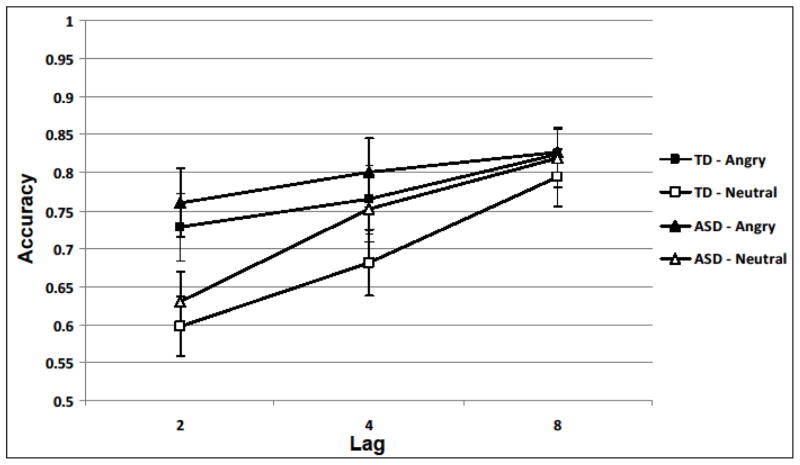

Results

Performance on the Emotional Identification Task was 100% accurate for all 13 participants who completed it. On the AB task, children who made errors on more than 50% of the Target2 catch trials were excluded from further analysis (ASD: n=4; TD: n=7). Accuracy on Target1 catch trials was similar between the two groups across lags and emotion conditions (all Fs<1.35, all ps>0.25; see Supporting Information online for detailed information on catch trials). A Target2 response was scored as correct only if the participant had also correctly identified Target1, because on trials in which Target1 was missed the source of Target2 errors is unknown (Chun & Potter, 1995). For each participant, the percentage of accurate Target2 trials was computed for each of the six types of AB trials. Percent accuracy was analyzed in a mixed analysis of variance (ANOVA) with Group (ASD, TD) as the between-subjects factor and Emotion (Target2: Angry, Neutral) and Lag (2, 4, 8) as within-subjects factors. Measures of effect size (partial eta-squared for ANOVAs, Cohen’s d for means, r² for correlations) are presented for the AB effects. Figure 2 summarizes the results by trial type and group.
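The scoring rule above, counting Target2 only on trials where Target1 was also reported, amounts to computing P(Target2 | Target1) for each AB trial type. A minimal Python sketch, assuming one record per trial holding the two yes/no answers; the `Trial` fields and the function name are hypothetical, and the denominator is assumed to be the trials on which Target1 was correctly reported, following the conditional definition in the Figure 2 caption. On AB trials both targets are present, so “yes” is the correct answer to both questions.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass

# The six AB trial types; catch trials are scored separately.
AB_TYPES = [f"{emotion}-Lag{lag}" for lag in (2, 4, 8) for emotion in ("Neutral", "Angry")]


@dataclass
class Trial:
    trial_type: str  # e.g. "Angry-Lag2" or "T1-only"
    said_dog: bool   # answer to "Did you see a dog?"
    said_face: bool  # answer to "Did you see a face?"


def t2_given_t1_accuracy(trials: list[Trial]) -> dict[str, float]:
    """Percent Target2 detection among AB trials on which Target1 was reported."""
    t1_correct = defaultdict(int)
    t2_hits = defaultdict(int)
    for t in trials:
        if t.trial_type not in AB_TYPES:
            continue  # catch trials are not part of this measure
        if not t.said_dog:
            continue  # Target1 missed: the source of any Target2 error is unknown
        t1_correct[t.trial_type] += 1
        if t.said_face:
            t2_hits[t.trial_type] += 1
    return {k: 100 * t2_hits[k] / t1_correct[k] for k in AB_TYPES if t1_correct[k] > 0}
```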

Figure 2.

Mean proportion accuracy for Target2 (conditional upon correct identification of Target1) as a function of Group (ASD vs. TD), Emotional face of Target2 (angry vs. neutral), and Lag (2, 4, 8). Error bars represent standard error of the mean.

We found two significant main effects. Both groups were more accurate in the Angry Target2 condition than in the Neutral Target2 condition (main effect of Emotion), F(1,48)=28.08, p<0.01, η²=.37. Target2 accuracy differed by Lag in both groups (main effect of Lag), F(2,47)=19.57, p<.01, η²=.45. Post-hoc paired t-tests showed that Target2 detection at Lag2 was worse than at Lag4, t(49)=3.95, p<0.01, d=0.38, and at Lag8, t(49)=6.36, p<0.01, d=0.83. Target2 detection at Lag4 was also worse than at Lag8, t(49)=3.93, p<0.01, d=0.38. These findings indicate that our paradigm produced the expected AB phenomenon: decreased accuracy at shorter lags relative to longer lags. The main effect of Group was not significant (p=0.74), indicating that overall accuracy did not differ between groups.
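Partial eta-squared can be recovered from an F ratio and its degrees of freedom as ηp² = F·df_effect / (F·df_effect + df_error). A short Python check shows that the F values reported above reproduce the stated effect sizes; this is a standard identity offered as a consistency check, not a description of the software the authors used.

```python
def partial_eta_squared(f: float, df_effect: int, df_error: int) -> float:
    """Partial eta-squared from an F ratio: F*df1 / (F*df1 + df2)."""
    return f * df_effect / (f * df_effect + df_error)


print(round(partial_eta_squared(28.08, 1, 48), 2))  # 0.37 (main effect of Emotion)
print(round(partial_eta_squared(19.57, 2, 47), 2))  # 0.45 (main effect of Lag)
```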

There was a significant Lag × Emotion interaction, F(2,47)=10.97, p<.001, η²=.32, indicating that the magnitude of the AB differed by the nature of Target2. Paired t-tests showed that Target2 accuracy was higher for Angry than Neutral trials at Lag2 (t(49)=7.08, p<.01, d=0.73) and Lag4 (t(49)=3.10, p<0.01, d=0.33), but not at Lag8 (p=0.34, d=0.11). Among the Angry trials, Target2 accuracy improved from Lag2 to Lag8 (t(49)=2.86, p<0.01, d=0.43) and marginally from Lag4 to Lag8 (t(49)=1.96, p=0.056, d=0.22); accuracy at Lag2 did not differ from Lag4 (p=0.11, d=0.17). Among the Neutral trials, Target2 accuracy improved with increasing lag, from Lag2 to Lag4 (t(49)=5.00, p<0.01, d=0.54), from Lag4 to Lag8 (t(49)=4.25, p<.01, d=0.48), and from Lag2 to Lag8 (t(49)=8.28, p<0.01, d=1.10). Higher Target2 accuracy for angry than for neutral faces at the shorter lags indicates that emotional stimuli reduced the AB effect. Most importantly, there were no significant 2-way or 3-way interactions with Group (all Fs<1.1, all ps>0.300), indicating that the magnitude of the effect of emotional stimuli on the AB did not differ between groups. None of the reported effects changed when age or Full Scale IQ was used as a covariate, or after removing the 3 children with ASD whose Full Scale IQ was lower than 85. Further, the ANOVA was repeated using d′ as the dependent variable. This analysis also revealed that the AB was modulated by emotional stimuli and that the groups did not differ in the magnitude of the AB for neutral and emotional faces (see Supporting Information online for details).
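The d′ analysis is defined in the Supporting Information and is not reproduced here. As a hedged illustration only, the standard signal-detection index is d′ = z(hit rate) − z(false-alarm rate). The sketch below assumes that hits are “yes, I saw a face” responses on trials containing a face and false alarms are “yes” responses on Target1-only trials, which contain no face, and it applies a log-linear correction so that rates of 0 or 1 do not produce infinite z scores. Both choices are assumptions, not the authors' documented procedure.

```python
from statistics import NormalDist


def d_prime(hits: int, n_signal: int, false_alarms: int, n_noise: int) -> float:
    """d' = z(H) - z(FA), with a log-linear correction (assumed, not from the study)."""
    # Adding 0.5 to counts and 1 to totals keeps both rates strictly inside (0, 1).
    hit_rate = (hits + 0.5) / (n_signal + 1)
    fa_rate = (false_alarms + 0.5) / (n_noise + 1)
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    return z(hit_rate) - z(fa_rate)


# Hypothetical counts: 15 "face" responses on 21 Angry-Lag2 trials,
# 4 "face" responses on 30 Target1-only trials.
example = d_prime(hits=15, n_signal=21, false_alarms=4, n_noise=30)
```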

Discussion

We assessed whether emotional faces reduced the AB effect in children with ASD and matched TD controls. The magnitude of the AB effect for faces with neutral expression was similar in the two groups. Further, it was reduced to the same extent for faces with angry expression, indicating that early visual attention to emotional information is preserved in higher-functioning children with ASD. Supplementary analyses indicated that lack of group difference in the AB effect for neutral and emotional faces was not explained by age, intelligence, or response bias.

The present findings extend our knowledge of early visual attention and emotional processing in ASD in three ways. First, our study is the first to examine the AB effect for social pictorial stimuli such as photographs of faces. Second, our finding of an intact AB effect for stimuli with neutral emotional valence in ASD extends past findings using stimuli such as single letters in children and adults (Amirault et al., 2009; Rinehart, Tonge, Brereton, & Bradshaw, 2010) and words in adults (Corden et al., 2008; Gaigg & Bowler, 2009). Thus, early visual attention to stimuli without emotional valence, regardless of stimulus type, appears to be similar in ASD and TD groups. The present findings conflict with one study showing that adults with ASD had reduced Target2 accuracy at longer lags relative to controls (Amirault et al., 2009); however, this finding was not observed in other studies (Corden et al., 2008; Gaigg & Bowler, 2009; Rinehart et al., 2010). One critical difference between Amirault et al. and the other studies reporting no group differences is that in their study Target2 (a black “X”) and the distractors (black letters) did not differ in color. Perhaps this similarity in color made it difficult for adults with ASD to identify Target2 among distractors until long after identification of Target1 (a red letter). Third, our finding of a reduced AB effect with emotional stimuli in children with ASD is consistent with findings in adults with ASD using a different implicit task, bias in spatial attention (Monk et al., 2010). Together, these findings from different implicit tasks and different age groups provide convergent evidence for preserved sensitivity of early attention to emotional nonverbal stimuli, specifically angry faces, in ASD.

Our findings of an intact emotional modulation of the AB effect for faces conflict with the two adult studies in ASD using emotional words (Corden et al., 2008; Gaigg & Bowler, 2009). We offer four possible accounts for these discrepant findings. First, we posit that the perceptual salience of the emotional stimuli influences whether the emotional AB effect is intact in ASD. Our paradigm required detection of emotional faces with gaze directed toward the observer in the context of non-meaningful visual stimuli (i.e., scrambled faces). The high contrast between target and non-target stimuli may have highlighted the emotional valence of the faces, thereby increasing their likelihood of detection over neutral faces in a rapid serial visual presentation stream. Prior studies required identification of taboo words in the context of meaningful neutral words (Corden et al., 2008; Gaigg & Bowler, 2009). Because both targets and distractors required semantic processing, the taboo words may not have been as salient in that context. Thus, our conditions are not parallel to those of the adult studies that reported insensitivity of the AB effect to emotional information in ASD. This perceptual salience account predicts that adults with ASD would show greater accuracy for Target2 taboo words relative to neutral words if the words were presented in an RSVP stream of non-words. Furthermore, this account also predicts that adults with ASD would show an intact emotional modulation of the AB effect in our paradigm with emotional facial expressions.

Second, we posit that the emotional words used in past studies may not have been arousing enough for early visual attention processes to be sensitive to them. The emotional words included abstract concepts that attain their emotional arousal only when placed in a social context (e.g., “BASTARD”). Perhaps this is one reason why the emotional words were rated as only moderately arousing (Corden et al., 2008). Thus, the AB task used with adults placed greater demands on semantic interpretation. Indeed, accuracy of identifying emotional Target2 words correlated positively with verbal abilities in the ASD group (Gaigg & Bowler, 2009). In contrast, our face stimuli did not require semantic interpretation, and this lesser burden in our AB paradigm might have made the angry Target2 stimuli highly detectable. This emotional arousal account predicts that our finding of an intact emotional modulation of the AB effect for faces will not extend to words.

Third, we posit that the response demand of identifying (and remembering) a word may also increase verbal short-term memory demands in the AB task. This may be another contributing factor to the aforementioned relationship between Target2 accuracy and verbal abilities (Gaigg & Bowler, 2009). In contrast, the pictorial AB task required only face detection, and this lower-level response demand limited these non-visual-attention components of the task. Thus, this response demand account predicts that our finding of an intact emotional modulation of the AB effect for faces will not extend to an identification version of the pictorial AB task (e.g., gender identification).

Lastly, the discrepant findings may reflect a developmental difference. Intact emotional modulation of the AB effect in children but not in adults suggests that the sensitivity of early visual attention to emotional information may weaken with maturation. Indeed, findings from typical development indicate that pre-adolescent children of the same ages as those in our study exhibit a heightened response to angry faces that is not sustained into adulthood (Barnes et al., 2007). Our findings suggest that visual attention in ASD follows a trajectory of sensitivity to emotional information similar to that observed in typical development. Interestingly, the typical trajectory is nonlinear, as younger children (6–9 years) and adults did not show the same heightened sensitivity to angry faces. Therefore, this account predicts that both ASD and TD children younger than the age range included here should not show the reduced AB effect for angry faces.

Neuropsychological and neuroimaging studies suggest that the emotional AB effect assays amygdala function (Anderson & Phelps, 2001; Lim et al., 2009). Intact emotional modulation of the AB effect in our study suggests that the amygdala is not globally disrupted in ASD. Our finding converges with behavioral evidence of intact rapid, early attention to non-social emotional stimuli in ASD, such as identifying a picture of a snake in a visual array containing pictures of mushrooms (South et al., 2008). Functional neuroimaging studies of rapid implicit processing of emotional faces show an increased amygdala response in adults with ASD, suggesting a compensatory amygdala response that allows them to perform similarly to matched controls (Monk et al., 2010). This evidence contrasts with reports of generally reduced amygdala function during explicit social perception and cognition tasks involving faces (Harms et al., 2010; Pelphrey & Carter, 2008; Pelphrey, Shultz, Hudac, & Vander Wyk, 2011; Schultz, 2005). Taken together, these findings indicate that only some aspects of amygdala function may be affected in higher-functioning ASD, and future investigations should examine the full range of tasks that require input from the amygdala in order to delineate the behavioral profile of amygdala function in ASD. Furthermore, should future research support the hypothesis of intact amygdala function for implicit early visual attention in ASD, this knowledge could inform new behavioral interventions that take advantage of intact implicit emotional processing to support the teaching of more complex, explicit social skills that lag or fail to develop.

It is important to note the limitations of the present study. First, our lack of group differences may be attributed to inadequate power. However, our sample sizes meet or exceed those of the two previous emotional AB studies that detected group differences (Corden et al., 2008; Gaigg & Bowler, 2009). Further, the effect size in the present study for the predicted 3-way interaction that was observed previously in adults was small, indicating that very large samples (e.g., N=151 per group, based on G*Power 3; Faul, Erdfelder, Lang, & Buchner, 2007) would be needed to detect a difference. Second, our ASD sample is limited to those with higher cognitive functioning (Full Scale IQ range: 77–149). While this reduces potential confounds of a general cognitive disability on early visual attention, our findings may not generalize to lower-functioning individuals with ASD. Third, we do not know whether all children were able to explicitly recognize angry and neutral expressions, as we were able to obtain these data only for a subset of the TD children. The magnitude of the emotional AB effect (% accuracy for angry Target2 minus % accuracy for neutral Target2 at Lag2) for those children did not differ significantly from that of the remaining TD children (p = 0.82).

In summary, early visual attention processes were sensitive to emotional information in children with ASD using an implicit emotional processing task. These processes are known to rely upon amygdala function, a brain structure with a noted history of atypical development and function in ASD. Our findings suggest that some early attention functions subserved by the amygdala are preserved in the preadolescent period of development in higher-functioning children with ASD.

Table 1.

Participant demographics

| Measure | TD | ASD | p-value |
|---|---|---|---|
| Total N | 25 | 25 | |
| Chronological age (years), M (SD) | 10.61 (1.68) | 11.17 (1.66) | 0.24 |
| Chronological age (years), range | 6.92–14 | 8.42–13.92 | |
| Full Scale IQ², M (SD) | 117.80 (15.49) | 110.68 (18.10) | 0.14 |
| Full Scale IQ, range | 91–144 | 77–149 | |
| Gender (male/female) | 17/8 | 20/5 | 0.33 |
| ADI/ADI-R¹ Social, M (SD) | -- | 19.63 (4.92) | -- |
| ADI/ADI-R Social, range | -- | 11–27 | |
| ADI/ADI-R Communication, M (SD) | -- | 15.88 (3.99) | -- |
| ADI/ADI-R Communication, range | -- | 9–22 | |
| ADI/ADI-R Repetitive Behaviors, M (SD) | -- | 5.13 (1.80) | -- |
| ADI/ADI-R Repetitive Behaviors, range | -- | 2–9 | |
| ADOS Social + Communication, M (SD) | -- | 11.36 (3.56) | -- |
| ADOS Social + Communication, range | -- | 5–19 | |

¹ One child was missing the ADI-R.

² Wechsler Intelligence Scale for Children–4th Edition, or Wechsler Abbreviated Scale of Intelligence.
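The table does not say which test produced the gender p-value. As a hedged consistency check, an uncorrected Pearson chi-square on the 2 × 2 counts (TD 17 male / 8 female; ASD 20 male / 5 female) gives p ≈ .33, matching the table; whether the authors used this exact test is an assumption. The sketch uses only the Python standard library: for one degree of freedom, the upper-tail probability of a chi-square value x equals erfc(√(x/2)).

```python
from __future__ import annotations

import math


def pearson_chi2_2x2(a: int, b: int, c: int, d: int) -> tuple[float, float]:
    """Pearson chi-square without continuity correction for [[a, b], [c, d]] (df = 1)."""
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    p = math.erfc(math.sqrt(chi2 / 2))  # upper tail of chi-square with 1 df
    return chi2, p


chi2, p = pearson_chi2_2x2(17, 8, 20, 5)  # rows: TD, ASD; columns: male, female
```

Applying Yates' continuity correction to the same counts would give a noticeably larger p (roughly .52), so the uncorrected test appears to be the one consistent with the reported value.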

Key Points.

Social impairments in children with ASD have been documented in explicit social emotion tasks; however, less is known about implicit processing of social emotional information in children with ASD.

This study compared the emotional modulation of the AB effect in higher-functioning children with ASD and typically developing controls, because this implicit task has revealed a lack of emotional modulation in adults with ASD.

The current results showed similar patterns of emotional modulation of the AB effect in ASD and TD groups.

Thus, some early social-emotional visual attention functions are preserved during development in higher-functioning children with ASD.

Acknowledgments

We thank all the families for volunteering their time and effort to participate in our studies. This research was supported by the Isadore and Bertha Gudelsky Foundation, the Elizabeth and Frederick Singer Foundation, and the National Institute of Mental Health (Award Numbers K23MH086111 [PI: BEY], 5F31MH84526 [PI: ER], and 5R01MH084961 [PI: CJV]). We thank Vanessa Troiani and Xiaozhen You for their assistance in conducting the spatial frequency analysis of our stimuli. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institute of Mental Health or the National Institutes of Health.

References

- Adolphs R. Cognitive neuroscience of human social behavior. Nature Reviews Neuroscience. 2003;4:165–178. doi: 10.1038/nrn1056.

- Adolphs R, Tranel D, Damasio H, Damasio A. Impaired recognition of emotion in facial expressions following bilateral damage to the human amygdala. Nature. 1994;372(6507):669–672. doi: 10.1038/372669a0.

- Amaral DG, Price JL, Pitkanen A, Carmichael ST. Anatomical organization of the primate amygdaloid complex. In: Aggleton JP, editor. The Amygdala: Neurobiological Aspects of Emotion, Memory, and Mental Dysfunction. New York: Wiley-Liss; 1992. pp. 1–66.

- Amaral DG, Schumann CM, Nordahl CW. Neuroanatomy of autism. Trends in Neurosciences. 2008;31(3):137–145. doi: 10.1016/j.tins.2007.12.005.

- American Psychiatric Association. Diagnostic and Statistical Manual of Mental Disorders, Fourth Edition, Text Revision (DSM-IV-TR). American Psychiatric Publishing, Inc; 2000.

- Amirault M, Etchegoyhen K, Delord S, Mendizabal S, Kraushaar C, Hesling I, Allard M, et al. Alteration of attentional blink in high functioning autism: a pilot study. Journal of Autism and Developmental Disorders. 2009;39(11):1522–1528. doi: 10.1007/s10803-009-0821-5.

- Anderson AK, Phelps EA. Lesions of the human amygdala impair enhanced perception of emotionally salient events. Nature. 2001;411(6835):305–309. doi: 10.1038/35077083.

- Barnes KA, Kaplan LA, Vaidya CJ. Developmental differences in cognitive control of socio-affective processing. Developmental Neuropsychology. 2007;32(3):787–807. doi: 10.1080/87565640701539576.

- Batty M, Taylor MJ. The development of emotional face processing during childhood. Developmental Science. 2006;9(2):207–220. doi: 10.1111/j.1467-7687.2006.00480.x.

- Boraston Z, Blakemore SJ, Chilvers R, Skuse D. Impaired sadness recognition is linked to social interaction deficit in autism. Neuropsychologia. 2007;45(7):1501–1510. doi: 10.1016/j.neuropsychologia.2006.11.010.

- Chun MM, Potter MC. A two-stage model for multiple target detection in rapid serial visual presentation. Journal of Experimental Psychology: Human Perception and Performance. 1995;21(1):109–127. doi: 10.1037//0096-1523.21.1.109.

- Conway CA, Jones BC, DeBruine LM, Little AC, Sahraie A. Transient pupil constrictions to faces are sensitive to orientation and species. Journal of Vision. 2008;8(3):17.1–11. doi: 10.1167/8.3.17.

- Corden B, Chilvers R, Skuse D. Emotional modulation of perception in Asperger’s syndrome. Journal of Autism and Developmental Disorders. 2008;38(6):1072–1080. doi: 10.1007/s10803-007-0485-y.

- Davies S, Bishop D, Manstead AS, Tantam D. Face perception in children with autism and Asperger’s syndrome. Journal of Child Psychology and Psychiatry. 1994;35(6):1033–1057. doi: 10.1111/j.1469-7610.1994.tb01808.x.

- Dawson G, Webb SJ, McPartland J. Understanding the nature of face processing impairment in autism: insights from behavioral and electrophysiological studies. Developmental Neuropsychology. 2005;27(3):403–424. doi: 10.1207/s15326942dn2703_6.

- El Khoury-Malhame M, Reynaud E, Soriano A, Michael K, Salgado-Pineda P, Zendjidjian X, Gellato C, et al. Amygdala activity correlates with attentional bias in PTSD. Neuropsychologia. 2011;49(7):1969–1973. doi: 10.1016/j.neuropsychologia.2011.03.025.

- Faul F, Erdfelder E, Lang AG, Buchner A. G*Power 3: A flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behavior Research Methods. 2007;39:175–191. doi: 10.3758/bf03193146.

- Fox E, Russo R, Georgiou GA. Anxiety modulates the degree of attentive resources required to process emotional faces. Cognitive, Affective & Behavioral Neuroscience. 2005;5(4):396–404. doi: 10.3758/cabn.5.4.396.

- Gadow KD, Sprafkin J. Childhood Symptom Inventory. 4th ed. Stony Brook, NY: Checkmate Plus; 2000.

- Gadow KD, Sprafkin J. Child & Adolescent Symptom Inventory. 4th ed., revised. Stony Brook, NY: Checkmate Plus; 2010.

- Gaigg S, Bowler D. Brief Report: Attenuated Emotional Suppression of the Attentional Blink in Autism Spectrum Disorder: Another Non-Social Abnormality? Journal of Autism and Developmental Disorders. 2009. doi: 10.1007/s10803-009-0719-2.

- Gross TF. Recognition of immaturity and emotional expressions in blended faces by children with autism and other developmental disabilities. Journal of Autism and Developmental Disorders. 2008;38(2):297–311. doi: 10.1007/s10803-007-0391-3.

- Harms MB, Martin A, Wallace GL. Facial Emotion Recognition in Autism Spectrum Disorders: A Review of Behavioral and Neuroimaging Studies. Neuropsychology Review. 2010;20:290–322. doi: 10.1007/s11065-010-9138-6.

- Hobson RP. The autistic child’s appraisal of expressions of emotion. Journal of Child Psychology and Psychiatry. 1986;27(3):321–342. doi: 10.1111/j.1469-7610.1986.tb01836.x.

- Kestenbaum R, Nelson CA. Neural and behavioral correlates of emotion recognition in children and adults. Journal of Experimental Child Psychology. 1992;54(1):1–18. doi: 10.1016/0022-0965(92)90014-w.

- Lainhart JE, Bigler ED, Bocian M, Coon H, Dinh E, Dawson G, Deutsch CK, et al. Head circumference and height in autism: a study by the Collaborative Program of Excellence in Autism. American Journal of Medical Genetics Part A. 2006;140(21):2257–2274. doi: 10.1002/ajmg.a.31465.

- Lim SL, Padmala S, Pessoa L. Segregating the significant from the mundane on a moment-to-moment basis via direct and indirect amygdala contributions. Proceedings of the National Academy of Sciences of the United States of America. 2009;106(39):16841–16846. doi: 10.1073/pnas.0904551106.

- Lord C, Risi S, Lambrecht L, Cook EH, Leventhal BL, DiLavore PC, Pickles A, et al. The autism diagnostic observation schedule-generic: a standard measure of social and communication deficits associated with the spectrum of autism. Journal of Autism and Developmental Disorders. 2000;30(3):205–223. PMID: 11055457.

- Lord C, Rutter M, Le Couteur A. Autism Diagnostic Interview-Revised: a revised version of a diagnostic interview for caregivers of individuals with possible pervasive developmental disorders. Journal of Autism and Developmental Disorders. 1994;24(5):659–685. doi: 10.1007/BF02172145. PMID: 7814313.

- Macdonald H, Rutter M, Howlin P, Rios P, Le Conteur A, Evered C, Folstein S. Recognition and expression of emotional cues by autistic and normal adults. Journal of Child Psychology and Psychiatry. 1989;30(6):865–877. doi: 10.1111/j.1469-7610.1989.tb00288.x.

- Maratos FA. Temporal processing of emotional stimuli: the capture and release of attention by angry faces. Emotion. 2011;11(5):1242–1247. doi: 10.1037/a0024279.

- Monk CS, Weng SJ, Wiggins J, Kurapati N, Louro H, Carrasco M, Maslowsky J, et al. Neural circuitry of emotional face processing in autism spectrum disorders. Journal of Psychiatry & Neuroscience. 2010;35(2):105–114. doi: 10.1503/jpn.090085.

- Pelphrey KA, Carter EJ. Brain mechanisms for social perception: lessons from autism and typical development. Annals of the New York Academy of Sciences. 2008;1145:283–299. doi: 10.1196/annals.1416.007.

- Pelphrey KA, Shultz S, Hudac CM, Vander Wyk BC. Research review: Constraining heterogeneity: the social brain and its development in autism spectrum disorder. Journal of Child Psychology and Psychiatry. 2011;52(6):631–644. doi: 10.1111/j.1469-7610.2010.02349.x.

- Pessoa L, Adolphs R. Emotion processing and the amygdala: from a “low road” to “many roads” of evaluating biological significance. Nature Reviews Neuroscience. 2010;11(11):773–783. doi: 10.1038/nrn2920.

- Rinehart N, Tonge B, Brereton A, Bradshaw J. Attentional blink in young people with high-functioning autism and Asperger’s disorder. Autism. 2010;14(1):47–66. doi: 10.1177/1362361309335718.

- Rump KM, Giovannelli JL, Minshew NJ, Strauss MS. The development of emotion recognition in individuals with autism. Child Development. 2009;80(5):1434–1447. doi: 10.1111/j.1467-8624.2009.01343.x.

- Schultz RT. Developmental deficits in social perception in autism: the role of the amygdala and fusiform face area. International Journal of Developmental Neuroscience. 2005;23(2–3):125–141. doi: 10.1016/j.ijdevneu.2004.12.012.

- South M, Ozonoff S, Suchy Y, Kesner RP, McMahon WM, Lainhart JE. Intact emotion facilitation for nonsocial stimuli in autism: is amygdala impairment in autism specific for social information? Journal of the International Neuropsychological Society. 2008;14(1):42–54. doi: 10.1017/S1355617708080107.

- Tardif C, Lainé F, Rodriguez M, Gepner B. Slowing down presentation of facial movements and vocal sounds enhances facial expression recognition and induces facial-vocal imitation in children with autism. Journal of Autism and Developmental Disorders. 2007;37(8):1469–1484. doi: 10.1007/s10803-006-0223-x.

- Tottenham N, Tanaka JW, Leon AC, McCarry T, Nurse M, Hare TA, Marcus DJ, et al. The NimStim set of facial expressions: judgments from untrained research participants. Psychiatry Research. 2009;168(3):242–249. doi: 10.1016/j.psychres.2008.05.006.

- Wechsler D. Wechsler Abbreviated Scale of Intelligence. San Antonio, TX: Psychological Corporation; 1999.

- Wechsler D. Wechsler Intelligence Scale for Children. 4th ed. San Antonio, TX: Psychological Corporation; 2003.