Abstract

Healthcare providers have increased the use of quality improvement (QI) techniques, but organizational variables that affect QI uptake and implementation warrant further exploration. This study investigates organizational characteristics associated with clinics that enroll and participate over time in QI. The Network for the Improvement of Addiction Treatment (NIATx) conducted a large cluster-randomized trial of outpatient addiction treatment clinics, called NIATx 200, which randomized clinics to one of four QI implementation strategies: (1) interest circle calls, (2) coaching, (3) learning sessions, and (4) the combination of all three components. Data on organizational culture and structure were collected before, after randomization, and during the 18-month intervention. Using univariate descriptive analyses and regression techniques, the study identified two significant differences between clinics that enrolled in the QI study (n = 201) versus those that did not (n = 447). Larger programs were more likely to enroll and clinics serving more African Americans were less likely to enroll. Once enrolled, higher rates of QI participation were associated with clinics’ not having a hospital affiliation, being privately owned, and having staff who perceived management support for QI. The study discusses lessons for the field and future research needs.

Keywords: Process improvement, QI, organizational characteristics, randomized trial, organizational culture

Introduction

Patients throughout the healthcare system are unlikely to receive evidence-based care, and the problem is particularly acute for patients with alcohol and drug use disorders. A review of hospital charts reported that patient diagnosis affected the quality of care received; receipt of recommended care varied from a high of 79% of patients with senile cataracts to 11% among patients diagnosed with alcohol dependence (McGlynn et al., 2003). Despite a critical need to improve access to quality care for alcohol and drug use disorders, providers of addiction treatment tend to be small, independent nonprofit corporations with varying experience using quality improvement (QI) tools (McLellan, Carise & Kleber, 2003).

This paper asks two research questions: “What organizational characteristics distinguish addiction treatment clinics that chose to enroll in a QI study from those that did not?” and “What organizational characteristics are associated with increased clinic participation in QI?”

Quality Improvement in Healthcare

Berwick (2003) draws on the groundbreaking work of Rogers (2003) to identify three clusters of influence over the rate at which innovations diffuse in healthcare: perceptions of the innovation itself, characteristics of individuals who may adopt change, and context. Shortell et al. (1995) found that “a participative, flexible, risk-taking organizational culture” in U.S. hospitals supported implementation of QI, while hospitals with more centralized controls had more limited implementation. Berwick (2003) and Kaplan et al. (2010) refer to organizational context. Across these perspectives, engagement by leadership and a culture that supports change are thought to promote the uptake of QI. A systematic review of contextual variables associated with implementing QI in healthcare noted that organizational leadership, organizational culture, a focus on data-driven systems of change (Wisdom et al., 2006), and experience with QI all supported successful QI initiatives (Kaplan et al., 2010).

Damschroder et al.’s (2009) Consolidated Framework for Implementation Research (CFIR) describes five domains of implementation theory in healthcare settings: intervention characteristics, outer setting (e.g., patient needs and resources), inner setting (e.g., culture, leadership engagement), characteristics of the individuals involved, and the process of implementation (e.g., planning, evaluation). The CFIR has been extended to assess implementation research in settings that treat alcohol and drug use disorders (Damschroder & Hagedorn, 2011). We drew upon the CFIR in selecting survey instruments to assess aspects of QI implementation in this study.

QI in Addiction Treatment

Publicly funded specialty clinics provide most addiction treatment in the U.S. (Horgan & Merrick, 2001). They serve an average of 47 patients/day (Substance Abuse and Mental Health Services Administration [SAMHSA], 2012b). The system consists of roughly 14,000 clinics, with 81% providing outpatient treatment (SAMHSA, 2012b). Of the estimated 23 million Americans who need addiction treatment, fewer than 10% receive treatment in any given year (SAMHSA, 2012a). People seeking treatment often face obstacles such as burdensome paperwork, financial screening, and long waits (Ford II et al., 2007) that discourage many from getting treatment. Licensure and accreditation generally require that QI plans be in place, and 93% of 749 addiction treatment clinics surveyed from 2002 to 2004 reported having a QI plan, but the plans had little influence on clinical practice (Fields & Roman, 2010). Clinics, moreover, close at high rates and have high staff turnover (Johnson & Roman, 2002; McLellan et al., 2003).

Studies of organizational influences on implementing QI in addiction treatment facilities show mixed results. Some studies reported that larger addiction treatment centers were more likely to implement QI (Knudsen, Ducharme, & Roman, 2007; Knudsen & Roman, 2004), while an analysis of programs participating in a national research network found a negative relationship between size and QI (Ducharme, Knudsen, Roman, & Johnson, 2007). Corporate structure (for-profit versus not-for-profit) also has inconsistent relationships with the use of QI (Ducharme et al., 2007; Knudsen et al., 2007; Knudsen & Roman, 2004). Roman and colleagues observed a strong need for leadership on implementation to counteract the tendency of clinics to discontinue evidence-based practices after adopting them (Roman et al., 2010). An analysis of the NIATx 200 data may clarify relationships because of the large pool of eligible clinics (n = 648) and the number of participating clinics (n = 201).

The NIATx 200 Study

Since 2003, NIATx (formerly the Network for the Improvement of Addiction Treatment) has used systems engineering techniques to study problems of access to and retention in addiction treatment. NIATx 200 (Gustafson et al., 2013) is the largest study of QI conducted in addiction treatment. A cluster-randomized trial tested four methods of disseminating QI in 201 addiction treatment clinics in five states. Although NIATx 200 was not specifically designed to address the research questions raised in this study, the data gathered permit an analysis of characteristics associated with clinics that opt to undertake QI.

METHODS

The research team worked with the state agencies that coordinate services and manage federal funds for addiction treatment in Massachusetts, Michigan, New York, Oregon, and Washington to recruit sites to participate in the NIATx 200 trial. Within the five states, 648 clinics met eligibility criteria (they provided outpatient or intensive outpatient care, reported 60 or more admissions per year, and did not have prior experience with NIATx). New York State excluded clinics in metropolitan New York City because they were participating in a related QI initiative. State agencies invited eligible providers to recruitment meetings to learn about the trial. Nine half-day recruitment meetings were held in the five states. A standard agenda was used at each recruitment meeting. At the end of each recruitment meeting, clinics had the opportunity to sign up for the study. During the 6-month recruitment period, 201 clinics enrolled in the study and were randomly assigned to the four study interventions (Quanbeck et al., 2011).

The four study conditions incorporated strategies frequently used in QI initiatives and learning collaboratives: (1) interest circle calls, (2) coaching, (3) learning sessions, and (4) the combination of the three conditions (Gustafson et al., 2013). The goals of NIATx 200 were to reduce waiting time to treatment, increase retention in care, and increase the number of new patients treated annually (Gustafson et al., 2013). All organizations had the same goals during each 6-month period of the 18-month intervention. For example, each clinic, regardless of intervention arm, concentrated on reducing waiting time during months 1–6 of the 18 months (Gustafson et al., 2013).

Design and Sample

For the first research question, “What organizational characteristics distinguish addiction treatment clinics that chose to enroll in the NIATx 200 quality improvement study from those that did not?”, we analyzed each of the 648 eligible clinics that made a decision about enrolling in the NIATx 200 trial. This outcome is considered a binary variable (enroll/not enroll). See Figure 1. For the second research question, “What organizational characteristics are associated with increased clinic participation in the NIATx 200 initiative?”, we examined each clinic’s level of participation in the study’s QI activities during the 18-month intervention period. Each intervention consisted of a pre-defined set of activities. We assessed participation at the clinic level, without considering the number of staff at the clinic who took part. For example, a clinic that participated in a coach site visit and all 18 monthly calls would have 23 total participation hours in this intervention (See Table 1).

Figure 1.

Definitions

Table 1.

Clinic Participation Hours by Intervention

| Participation Hours¹ | Interest Circle Calls² | Coaching³ | Learning Sessions⁴ | Combination⁵ |
|---|---|---|---|---|
| Average | 6.0 | 13.5 | 25.7 | 43.1 |
| Standard deviation | 3.8 | 5.1 | 9.7 | 16.2 |
| Maximum possible | 18 | 23 | 34.5 | 75.5 |
| % of maximum participation⁶ | 33% | 59% | 74% | 57% |
| # of clinics allocated | 49 | 50 | 54 | 48 |

¹ Hours of participation per clinic, unweighted by the number of clinic staff members participating from each clinic. The interest circle calls group, with 18 maximum possible hours of participation, was conceived as a type of control group for comparison.

² The interest circle call group received content via 18 one-hour calls.

³ Participation in the coaching intervention consisted of one half-day site visit lasting 5 hours, followed by 18 one-hour monthly calls.

⁴ Learning session participation occurred in three sessions. The first provided 8.5 hours of content in one day. The second and third provided 13.5 hours of content delivered over two days.

⁵ Participation in the combination arm included opportunities to participate in interest circle calls, coaching, and learning sessions. The total available participation hours equals the sum of hours for each of the other three study interventions.

⁶ The percent of maximum participation is determined by the count of the number of clinics that participated in all intervention opportunities divided by the total number of clinics in the intervention arm.
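The hour totals in Table 1 can be cross-checked with simple arithmetic. The sketch below is illustrative only: the variable names are not from the study, and it rests on the observation that the reported "% of maximum participation" values coincide with average hours divided by maximum possible hours, which may or may not be how the authors derived them.

```python
# Values copied from Table 1; dictionary keys are illustrative names, not from the study.
average_hours = {"interest_circle": 6.0, "coaching": 13.5, "learning": 25.7, "combination": 43.1}
max_hours = {"interest_circle": 18.0, "coaching": 23.0, "learning": 34.5, "combination": 75.5}

# Coaching: one 5-hour site visit plus 18 one-hour monthly calls.
coaching_max = 5 + 18 * 1

# Combination arm: sum of the maxima of the other three arms.
combination_max = sum(max_hours[k] for k in ("interest_circle", "coaching", "learning"))

# Average hours expressed as a rounded percentage of the maximum possible hours.
percent_of_max = {
    arm: round(100 * average_hours[arm] / max_hours[arm]) for arm in average_hours
}

print(coaching_max, combination_max, percent_of_max)
```

Under that assumption, the ratios round to the percentages shown in the table for all four arms.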

Data and Measures

Data used in the analysis were collected by the larger NIATx 200 study before recruitment, before randomization, and during the 18-month intervention period.

Before recruitment started, states provided a common set of data on eligible clinics, including the percentage of patients served who were male, African American, and referred from the criminal justice system. These data were collected in part because they are part of the Treatment Episode Data Set (TEDS), which is the standard, minimal dataset required by the federal government for all clinics that receive public funding. Public funding was an eligibility criterion of NIATx 200. Clinic locations were classified as rural, semi-urban, or urban using the United States Department of Agriculture Rural Urban Continuum Code (RUCC) website. Private-versus-public ownership was validated from websites for all eligible clinics. Accreditation was self-reported by the providers and verified using information from the websites of six accreditation organizations. Clinics that enrolled in the study also completed an application with questions on ownership, affiliation (hospital based, freestanding, or other), for-profit status, and Internet availability for staff.

Management survey

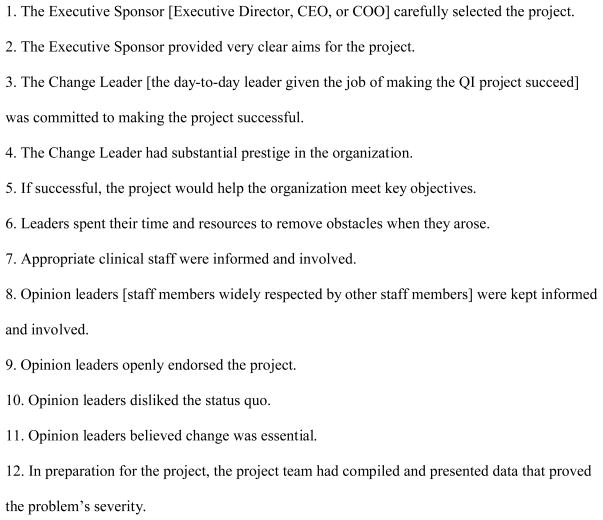

Bloom and Van Reenen (2007) developed a management survey to determine if differences in manufacturing firms’ management practices could help explain significant and persistent differences in levels of productivity observed between manufacturing firms in the same industry. McConnell et al. (2009) adapted this survey for use by addiction treatment clinics and tested it in more than 200 outpatient clinics during the NIATx 200 study. Each clinic is rated on a scale from 1 (worst) to 5 (best) on each of 14 management practices. (See Figure 2.) Averaging scores from each practice resulted in an overall management score. The management score was used as a stratification variable in the NIATx 200 randomization process.

Figure 2.

Management Practices Evaluated in the Management Survey

The survey was collected during a telephone interview administered during baseline data collection. To test for measurement error in the scoring of management practices, a subset of 14 interviews was double scored. One researcher conducted the interview and scored the clinic’s management practices using the survey template while a second researcher listened remotely and scored independently. The correlation coefficient between the two scores was 0.80 (p<0.01), indicating a strong degree of agreement between the two ratings (McConnell et al., 2009). Ninety-six percent of participating clinics completed the management survey.
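As an illustration of the double-scoring check, the sketch below computes a correlation coefficient between two raters' scores. It assumes a Pearson correlation, which the source does not specify, and the scores are hypothetical values invented for the example, not study data.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length score lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)

# Hypothetical overall management scores (1-5 scale) from two raters; not study data.
interviewer = [2.1, 3.4, 2.8, 4.0, 3.1, 2.5]
listener = [2.3, 3.1, 2.9, 3.8, 3.4, 2.2]

r = pearson_r(interviewer, listener)
print(round(r, 2))
```

A value near 1 would indicate that the two raters ordered and spaced the clinics similarly.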

Organizational Change Manager

Before randomization, clinic staff completed a survey that included the 15-factor Organizational Change Manager (OCM) (Gustafson et al., 2003), which uses a Bayesian model to estimate the probability of successful organizational change (see Figure 3). Each factor consists of four questions rated on a 5-point scale (strongly agree, agree, disagree, strongly disagree, don’t know/not sure). The OCM alpha ranges from 0.775 to 0.937 across the 15 factors. Analysis indicates strong inter-rater reliability (R² = 0.72) and that the OCM predicts successful improvement initiatives 80% of the time (Olsson et al., 2003). Questions related to implementation, representing three of the OCM’s 15 factors, were excluded because the focus of the analysis is baseline readiness to change rather than implementation. The OCM was part of a staff survey that we asked 10 staff members at each clinic to complete; respondents were clinical, managerial, and administrative staff members familiar with the NIATx 200 project. Each survey response included information that identified the clinic and provided information about the respondent, including job function, race, gender, and ethnicity.

Figure 3.

Organizational Change Manager (OCM) Survey Constructs

Each clinic’s designated change leader was responsible for distributing the survey to staff members, who had the option of filling out the survey online or on paper. Responses were anonymous. Ninety-five percent of participating clinics returned one or more surveys at baseline. The mean number of responses was 8.2 with a standard deviation of 3.8 per clinic.

Analysis

Univariate descriptive analyses (chi-square and t-tests) assessed differences between enrolled and not-enrolled sites. Power calculations for the larger NIATx 200 trial were based on anticipated changes in waiting time (one of the primary outcomes of the original study), and these power calculations set the recruitment goal of 200 clinics. The p-values reported in Tables 3 and 4 reflect the volume of data available. The analysis was exploratory; i.e., not intended to confirm the significance of factors hypothesized before the study.

Table 3.

Descriptive Characteristics of Enrolled versus Not Enrolled Clinics

| | Enrolled (n=201) Mean (SD) | Non-enrolled (n=447) Mean (SD) | p-Value |
|---|---|---|---|
| Structure | | | |
| Size (number of publicly-funded patients at baseline) | 391 (381) | 297 (246) | 0.001 |
| Clinic accreditation | 39.5% | 39.1% | 0.936 |
| Private ownership | 81.5% | 84.3% | 0.379 |
| Patient population | | | |
| Percent of male patients | 65 (11) | 67 (13) | 0.094 |
| Percent of African American patients | 13 (16) | 18 (23) | 0.001 |
| Percent of patients referred by the criminal justice department | 44 (21) | 46 (23) | 0.135 |
| Geographic Location | | | |
| Rural/urban | | | 0.157 |
| 1 Urban | 82.6% | 81.9% | |
| 2 Suburban | 8.2% | 5.1% | |
| 3 Rural | 9.2% | 13% | |
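For readers who want to see how a two-group comparison can be computed from summary statistics alone, here is a minimal sketch for the two rows of Table 3 reported as significant. It assumes Welch's unequal-variance t statistic and a normal approximation for the p-value; the source does not say which t-test variant was used, and the inputs are the rounded values printed in the table, so the output should be read as approximate.

```python
from math import sqrt, erfc

def welch_t(mean1, sd1, n1, mean2, sd2, n2):
    """Welch's t statistic computed from group means, SDs, and sample sizes."""
    standard_error = sqrt(sd1 ** 2 / n1 + sd2 ** 2 / n2)
    return (mean1 - mean2) / standard_error

def two_sided_p_normal(t):
    """Two-sided p-value using a normal approximation (reasonable for large samples)."""
    return erfc(abs(t) / sqrt(2))

# Rounded summary values from Table 3 (enrolled n=201, non-enrolled n=447).
t_size = welch_t(391, 381, 201, 297, 246, 447)
t_african_american = welch_t(13, 16, 201, 18, 23, 447)

print(round(t_size, 2), round(two_sided_p_normal(t_size), 3))
print(round(t_african_american, 2), round(two_sided_p_normal(t_african_american), 3))
```

Both statistics exceed 3 in absolute value under these assumptions, which is in line with the small p-values reported for those rows.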

Table 4.

Logistic Regression Results of Participation

| | Intercept | Hospital | Private | Mgmt. support | Clinics with high participation |
|---|---|---|---|---|---|
| Overall time period (18 months) | | | | | 58% |
| Estimate | −4.319 | −.870 | .700 | 1.263 | |
| Standard error | 1.636 | .342 | .324 | .483 | |
| p-value | .008 | .011 | .031 | .009 | |
| Exp (coeff) | .013 | .419 | 2.014 | 3.536 | |

The Exp (coeff) values are odds ratios. For example, the Hospital odds ratio of 0.419 means that the odds of a hospital-based site participating at a high level are 0.419 times the odds of a non-hospital-based site participating at a high level. On average, the odds that a site participates at a high level increase by a factor of 3.536 for each unit increase in the OCM management support score.
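The relationship between the rows of Table 4 can be sketched in a few lines: exponentiating each estimate gives the odds ratio, and dividing the estimate by its standard error gives a z statistic. The p-value step assumes a two-sided Wald test against the normal distribution, which the source does not state explicitly.

```python
from math import exp, erfc, sqrt

# Estimates and standard errors copied from Table 4.
coefficients = {
    "Intercept": (-4.319, 1.636),
    "Hospital": (-0.870, 0.342),
    "Private": (0.700, 0.324),
    "Mgmt. support": (1.263, 0.483),
}

results = {}
for name, (estimate, std_error) in coefficients.items():
    odds_ratio = exp(estimate)          # corresponds to the Exp (coeff) row
    z = estimate / std_error            # Wald z statistic (assumed test)
    p_value = erfc(abs(z) / sqrt(2))    # two-sided p-value from the normal distribution
    results[name] = (round(odds_ratio, 3), round(p_value, 3))
    print(name, results[name])
```

Read this way, an odds ratio below 1 (Hospital) corresponds to a negative estimate and lower odds of high participation, while odds ratios above 1 (Private, Mgmt. support) correspond to positive estimates.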

The participation measure counted each clinic’s encounters with the QI activities in its assigned intervention (see Table 1). Participation in each study intervention (interest circle calls, coaching, and learning sessions) was measured separately and assigned a value of “high” or “low” based on the number of events in which the clinic participated. Activities in the combination intervention were separated by type (interest circle calls, coaching, and learning sessions) and added to the participation total for each of the three interventions. The threshold for high participation varied by intervention type and was set to produce roughly the same percentage of high participants within each type.

To understand the extent to which individual clinics participated in the QI efforts to which they were randomized, the study team used regression techniques to analyze the number of encounters with the intervention in 18 months. We fit a stepwise logistic regression model of clinic participation. In the first step, we modeled overall participation as a function of our independent variables, deleting characteristics with p-values greater than 0.05. In the second step, we retained the significant characteristics from the first step and examined the impact of each of the management survey and OCM survey items included in the analysis. We again used stepwise elimination of nonsignificant items.

By design, there was no significant additive effect in the model associated with the type of intervention, because we sorted clinics into “high” and “low” groups within each intervention (interest circle calls, coaching, learning sessions, and combination). Nevertheless, we included the intervention type as a candidate variable at the beginning of the stepwise regression process and confirmed that it was not statistically significant. To offset, in part, the increased likelihood of false positives associated with repeated testing, we retained only items with p-values less than 0.01.
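The within-intervention "high"/"low" split described above might be sketched as follows. This is one possible reading, not the study's procedure: the function name, the `target_share` parameter, and the clinic data are all hypothetical, and the source does not give the exact thresholds or rule used.

```python
from collections import defaultdict

def label_high_low(clinics, target_share=0.5):
    """Label each clinic 'high' or 'low' using a per-intervention threshold.

    clinics: list of (clinic_id, intervention, events_attended) tuples.
    target_share: hypothetical share of clinics to label 'high' in each intervention.
    """
    by_intervention = defaultdict(list)
    for _, intervention, events in clinics:
        by_intervention[intervention].append(events)

    thresholds = {}
    for intervention, counts in by_intervention.items():
        ranked = sorted(counts, reverse=True)
        n_high = max(1, round(target_share * len(ranked)))
        thresholds[intervention] = ranked[n_high - 1]  # lowest count still labeled 'high'

    labels = {
        clinic_id: "high" if events >= thresholds[intervention] else "low"
        for clinic_id, intervention, events in clinics
    }
    return labels, thresholds

# Hypothetical data, for illustration only.
clinics = [
    ("A", "interest circle calls", 3), ("B", "interest circle calls", 9),
    ("C", "interest circle calls", 6), ("D", "interest circle calls", 12),
    ("E", "coaching", 10), ("F", "coaching", 17),
    ("G", "coaching", 14), ("H", "coaching", 19),
]
labels, thresholds = label_high_low(clinics)
print(thresholds)
print(labels)
```

Because each intervention gets its own cutoff, the share of "high" clinics is roughly equal across interventions, which is why intervention type would not be expected to carry an additive effect in the regression. Ties at the cutoff can push the share slightly above the target.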

RESULTS

Table 2 provides an overview of the recruitment efforts within each state. Based on geography and travel distance, the number of meetings varied by state. Overall, 37% of eligible agencies filed an application, and 31% took part in the study. The percentage of eligible agencies in the study varied by state, ranging from 52% in State 1 to 19% in State 3. We also tracked the number of days between the recruitment meeting and each clinic’s submitting an application. The results indicate that 95 of the 201 clinics participating in the study enrolled at the recruitment meeting. Another 40 clinics enrolled within 30 days of the recruitment meeting. To recruit the remaining agencies, we worked closely with our state partners, who used personal contacts, phone calls, and e-mails to assist with recruitment.

Table 2.

Overview of Recruitment Efforts

| | State 1 | State 2 | State 3 | State 4 | State 5 | Total |
|---|---|---|---|---|---|---|
| Number of Recruitment Meetings | 2 | 1 | 3 | 1 | 2 | 9 |
| Number of Eligible Agencies | 82 | 140 | 221 | 90 | 115 | 648 |
| Number of Agencies Recruited and Filed Application | 43 | 52 | 55 | 37 | 52 | 239 |
| % Recruited of Eligible Sites | 52.4% | 37.1% | 24.9% | 41.1% | 45.2% | 36.9% |
| Number of Agencies in the Study | 43 | 42 | 41 | 37 | 38 | 201 |
| % In Study of Eligible Sites | 52.4% | 30.0% | 18.6% | 41.1% | 33.0% | 31.0% |
| Days from Recruitment Meeting to Application Submitted | | | | | | |
| 0 Days | 15 | 28 | 23 | 18 | 11 | 95 |
| 1 to 14 Days | 4 | 2 | 7 | 3 | 3 | 19 |
| 15 to 30 Days | 6 | 1 | 1 | 7 | 6 | 21 |
| 30 days or more | 18 | 11 | 10 | 9 | 18 | 66 |
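The percentage rows of Table 2 follow directly from the counts. A short sketch, with illustrative variable names that are not from the study, recomputes them from the eligible, applied, and in-study counts:

```python
# Counts copied from Table 2, in order State 1 through State 5.
eligible = [82, 140, 221, 90, 115]
filed_application = [43, 52, 55, 37, 52]
in_study = [43, 42, 41, 37, 38]

def percent(part, whole):
    """Percentage rounded to one decimal place, as displayed in Table 2."""
    return round(100 * part / whole, 1)

pct_recruited = [percent(a, e) for a, e in zip(filed_application, eligible)]
pct_in_study = [percent(s, e) for s, e in zip(in_study, eligible)]
total_recruited = percent(sum(filed_application), sum(eligible))
total_in_study = percent(sum(in_study), sum(eligible))

print(pct_recruited, total_recruited)
print(pct_in_study, total_in_study)
```

Keeping the two percentages separate makes the distinction visible between agencies that filed an application and agencies that ended up in the study.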

Table 3 summarizes the characteristics of clinics that enrolled versus those that were eligible but did not enroll, and identifies characteristics that differed significantly between the groups. Compared with eligible clinics that did not enroll, those enrolled in NIATx 200 were larger (by approximately 100 additional annual admissions) and served a smaller proportion of African Americans (by approximately 5 percentage points). Enrolled and not-enrolled clinics were similar in the percentage of patients referred by the criminal justice system, the percent of males served, ownership, accreditation, and type of location.

To assess whether there was an interaction between the intervention type and the other model factors (hospital-based, privately owned, and management support), we refit the model to each group of clinics by intervention type. While there was some variation in the estimated model factor effects, the pattern of the effects was similar for each intervention group. Hospital-based clinics consistently exhibited a negative correlation with participation. Private ownership exhibited a positive correlation with participation in interest circles and learning sessions, and a lack of correlation with participation in coaching. High scores on the OCM item related to management support were consistently correlated with higher participation in all of the intervention activities. In fact, the estimated effect of management support from the OCM was greater when estimated for each of the three intervention types separately than when estimated for all clinics combined. Thus, there was no indication that intervention type significantly confounded the estimates of the effects of the model factors.

Table 4 presents the logistic regression results for participation across the entire study period (18 months). Significant predictors of participation are hospital affiliation and private ownership, and one OCM item under the heading of management support. Overall, clinics without a hospital affiliation were more likely to participate, as were privately owned clinics. Clinics whose staff indicated that the project would help meet organizational goals had greater participation.

DISCUSSION

The study found associations between participation in QI and several organizational characteristics. Two characteristics were associated with enrollment: larger size and serving fewer African American patients. Three characteristics were associated with participating in QI: hospital affiliation was negatively associated with participation, whereas private ownership and staff members’ perception that management supports the goals of the QI project were positively associated with participation.

Questions designed to assess management quality did not provide information related to participation. However, staff members’ perception of management support for change was positively associated with participation. This may suggest that managers need to communicate, effectively and continuously, their support for QI. Implementing a staff-wide survey such as the Organizational Change Manager before embarking on a QI project might help project planners identify organizations that would benefit from more visible support from management for the QI initiative.

Addiction treatment centers that specialize in working with specific subgroups (e.g., African Americans, American Indians and Alaskan Natives, Hispanic/Latinos, and women) tend to be small, independent clinics with limited or no relationships with research institutions (Corredoira & Kimberly, 2006; Kimberly & McLellan, 2006). As a result, few evidence-based treatments for alcohol and drug use disorders have sufficient data on racial/ethnic minorities to generalize to those subgroups (Santisteban, Vega & Suarez-Morales, 2006). Increasing the diversity among participants in addiction treatment studies expands the generalizability of research, enhances external validity, and increases the applicability of the findings to clinical practice.

The underrepresentation of African Americans, women, and minorities in treatment protocols and randomized clinical trials continues to be an issue for researchers, but also for providers, because research on evidence-based practices may be less useful to clinics serving these subgroups (Fiscella, Franks, Gold, & Clancy, 2000; Hanson, Leshner, & Tai, 2002; Hasnain-Wynia et al., 2007; Murthy, Krumholz, & Gross, 2004; Nerenz, 2005). Even though these variables may be surrogates for other attributes of the populations served or the organizations serving them, the results underscore the need for researchers to make concerted efforts to recruit clinics that primarily serve racial and ethnic minorities.

Given the commitment of time and resources necessary to improve quality of care, it is perhaps not surprising that larger organizations were more likely to enroll in the study. Larger hospitals have been found to be more likely to have implemented QI principles, tools, or techniques (Alexander et al., 2006; Young, Charns, & Shortell, 2001). In this study, privately owned clinics participated more than governmental organizations. Public sector and governmental treatment organizations may have been less likely to commit resources to QI because of constrained budgets during the study period (2007–2009).

The results indicate that relating change activities to an organization’s goal improves participation. The association strengthened when the change activities focused on increasing treatment capacity through either increased patient retention or admissions. This result provides support for a key QI principle: Focus change efforts on fixing a key organizational problem (Capoccia et al., 2007; Gustafson & Hundt, 1995).

LIMITATIONS AND CHALLENGES

Two constructs posed analytical challenges: participation itself and measuring how participation changes over time. We tested four measures of participation, seeking to identify the measure that most closely mimicked the actual behavior of the clinics. The measure we used assessed participation by clinic, without considering the number of staff at the clinic who took part, which allowed us to sort participation rates within each intervention. More research is needed on how best to measure participation in QI—specifically, which aspects of participation are most closely linked to improvement in outcomes?

The other challenge is measuring how participation changes over time. We focused on organizational readiness for change before the start of the study and how organizations prepared for change. It is possible that the OCM values after 9, 18, and 27 months would better explain participation, but we focused on baseline scores because response rates declined over time.

The absence of a standardized protocol to track a clinic from eligibility to enrollment is a limitation of the study. Without such a protocol, we were unable in all five states to track whether an eligible clinic attended a recruitment meeting and, if so, actually enrolled in the study. Future studies of organizational recruitment should include such a protocol.

Although NIATx 200 was a large study, it only represents 200 of the more than 14,000 outpatient treatment clinics in the U.S. Hence the lessons of this analysis may or may not translate to other payers and policymakers seeking to do QI research in addiction treatment, and among other types of outpatient clinics in healthcare.

IMPLICATIONS FOR PRACTICE

Recruiting participants for QI studies and maintaining a commitment to project activities are ongoing challenges for organizational research and for managers in healthcare. Recruiting clinics that serve higher proportions of African Americans may take extra effort. This study shows that management support is important for QI success, and as such, reinforces the literature on organizational change. Building management support is a necessary part of quality improvement in outpatient addiction treatment clinics. But how management support is measured also matters. This study measures management support quantitatively, in the context of the QI literature, and with an eye toward informing recruitment to and quality improvement practices in addiction treatment settings.

Acknowledgments

The NIATx 200 study was funded by the National Institute on Drug Abuse (R01 DA020832).

Footnotes

Earlier versions of portions of the study were presented at the Addiction Health Services Research Conference in October 2011 and the Annual Meeting of the American Public Health Association in November 2012.

INSTITUTIONAL REVIEW BOARDS

The NIATx 200 study protocol and all data collection instruments used in the analysis were reviewed and approved by Institutional Review Boards at the University of Wisconsin-Madison and Oregon Health & Science University (Protocol #: R01 DA020832).

Contributor Information

Kyle L. Grazier, Email: kgrazier@umich.edu, Richard Carl Jelinek Professor and Chair, Health Management and Policy, Professor of Psychiatry, University of Michigan, 1415 Washington Heights, Ann Arbor, MI 48109-2029, Office: (734) 936-1222, Fax: (734) 764-4338

Andrew R. Quanbeck, Email: andrew.quanbeck@chess.wisc.edu, University of Wisconsin–Madison, Center for Health Enhancement Systems Studies

John Oruongo, Email: oruongo@stat.wisc.edu, University of Wisconsin–Madison, Department of Statistics.

James Robinson, Email: jim@chsra.wisc.edu, University of Wisconsin–Madison, Center for Health Systems Research and Analysis.

James H. Ford, II, Email: jay.ford@chess.wisc.edu, University of Wisconsin–Madison, Center for Health Enhancement Systems Studies.

Dennis McCarty, Email: mccartyd@ohsu.edu, Oregon Health & Science University, Department of Public Health and Preventive Medicine.

Alice Pulvermacher, Email: alice.pulvermacher@chess.wisc.edu, University of Wisconsin–Madison, Center for Health Enhancement Systems Studies.

Roberta A. Johnson, Email: bobbie.johnson@chess.wisc.edu, University of Wisconsin–Madison, Center for Health Enhancement Systems Studies

David H. Gustafson, Email: dhgustaf@wisc.edu, University of Wisconsin–Madison, Center for Health Enhancement Systems Studies

References

- Alexander JA, Griffith J, Weiner B. Quality improvement and hospital financial performance. Journal of Organizational Behavior. 2006;27(7):1003–1102. [Google Scholar]

- Berwick DM. Disseminating innovations in health care. Journal of the American Medical Association. 2003;289:1969–1975. doi: 10.1001/jama.289.15.1969. [DOI] [PubMed] [Google Scholar]

- Bloom N, Van Reenen J. Measuring and explaining management practices across firms and countries. The Quarterly Journal of Economics. 2007;122(4):1351–1408. [Google Scholar]

- Capoccia VA, Cotter F, Gustafson DH, Cassidy EF, Ford JH, II, Madden L, Owens BH, Farnum SO, McCarty D, Molfenter T. Making ‘stone soup’: Improvements in clinic access and retention in addiction treatment. Joint Commission Journal on Quality and Patient Safety. 2007;33(2):95–103. doi: 10.1016/s1553-7250(07)33011-0.

- Corredoira RA, Kimberly JR. Industry evolution through consolidation: Implications for addiction treatment. Journal of Substance Abuse Treatment. 2006;31(3):255–265. doi: 10.1016/j.jsat.2006.06.020.

- Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: A consolidated framework for advancing implementation science. Implementation Science. 2009;4(1):50–64. doi: 10.1186/1748-5908-4-50.

- Ducharme LJ, Knudsen HK, Roman PM, Johnson JA. Innovation adoption in substance abuse treatment: Exposure, trialability, and the clinical trials network. Journal of Substance Abuse Treatment. 2007;32:321–329. doi: 10.1016/j.jsat.2006.05.021.

- Fields D, Roman PM. Total quality management and performance in substance abuse treatment centers. Health Services Research. 2010;45(6):1630–1650. doi: 10.1111/j.1475-6773.2010.01152.x.

- Fiscella K, Franks P, Gold MR, Clancy CM. Inequality in quality: Addressing socioeconomic, racial, and ethnic disparities in health care. The Journal of the American Medical Association. 2000;283(19):2579–2584. doi: 10.1001/jama.283.19.2579.

- Ford JH, II, Green CA, Hoffman KA, Wisdom JP, Riley KJ, Bergmann L, et al. Process improvement needs in substance abuse treatment: Admissions walk-through results. Journal of Substance Abuse Treatment. 2007;33(4):379–389. doi: 10.1016/j.jsat.2007.02.003.

- Gustafson DH, Quanbeck AR, Robinson JM, Ford JH, II, Pulvermacher A, French MT, McConnell KJ, Batalden PB, Hoffman KA, McCarty D. Which elements of improvement collaboratives are most effective? A cluster-randomized trial. Addiction. 2013;108:1145–1157. doi: 10.1111/add.12117.

- Gustafson DH, Hundt AS. Findings of innovation research applied to quality management principles for health care. Health Care Management Review. 1995;20(2):16–33.

- Hanson GR, Leshner AI, Tai B. Putting drug abuse research to use in real-life settings. Journal of Substance Abuse Treatment. 2002;23(2):69–70. doi: 10.1016/s0740-5472(02)00269-6.

- Hasnain-Wynia R, Baker DW, Nerenz D, Feinglass J, Beal AC, Landrum MB, Behal R, Weissman JS. Disparities in health care are driven by where minority patients seek care. Archives of Internal Medicine. 2007;167(12):1233–1239. doi: 10.1001/archinte.167.12.1233.

- Horgan C, Merrick E. Services research in the era of managed care. In: Galanter M, editor. Recent developments in alcoholism. Vol. 15. New York: Kluwer Academic/Plenum Publishers; 2001. pp. 241–263.

- Johnson A, Roman P. Predicting closure of private substance abuse treatment facilities. Journal of Mental Health Administration. 2002;29(2):115. doi: 10.1007/BF02287698.

- Kaplan HC, Brady PW, Dritz MC, Hooper DK, Linam WM, Froehle CM, Margolis P. The influence of context on quality improvement success in health care: A systematic review of the literature. The Milbank Quarterly. 2010;88(4):500–559. doi: 10.1111/j.1468-0009.2010.00611.x.

- Kimberly JR, McLellan AT. The business of addiction treatment: A research agenda. Journal of Substance Abuse Treatment. 2006;31(3):213–219. doi: 10.1016/j.jsat.2006.06.018.

- Knudsen HK, Roman PM. Modeling the use of innovations in private treatment organizations: The role of absorptive capacity. Journal of Substance Abuse Treatment. 2004;26(1):51–59. doi: 10.1016/s0740-5472(03)00158-2.

- Knudsen HK, Ducharme LJ, Roman PM. The adoption of medications in substance abuse treatment: Associations with organizational characteristics and technology clusters. Drug and Alcohol Dependence. 2007;87:164–174. doi: 10.1016/j.drugalcdep.2006.08.013.

- McConnell KJ, Hoffman KA, Quanbeck A, McCarty D. Management practices in substance abuse treatment programs. Journal of Substance Abuse Treatment. 2009;37(1):79–89. doi: 10.1016/j.jsat.2008.11.002.

- McGlynn EA, Asch SM, Adams J, Keesey J, Hicks J, DeCristofaro A, Kerr EA. The quality of health care delivered to adults in the United States. New England Journal of Medicine. 2003;348:2635–2645. doi: 10.1056/NEJMsa022615.

- McLellan AT, Carise D, Kleber HD. Can the national addiction treatment infrastructure support the public’s demand for quality care? Journal of Substance Abuse Treatment. 2003;25(2):117–121.

- Murthy VH, Krumholz HM, Gross CP. Participation in cancer clinical trials: Race-, sex-, and age-based disparities. The Journal of the American Medical Association. 2004;291(22):2720–2726. doi: 10.1001/jama.291.22.2720.

- Nerenz DR. Health care organizations’ use of race/ethnicity data to address quality disparities. Health Affairs. 2005;24(2):409–416. doi: 10.1377/hlthaff.24.2.409.

- Olsson JA, Lic T, Ovretveit J, Kammerlind P. Developing and testing a model to predict outcomes of organizational change. Quality Management in Health Care. 2003;12(4):240–249. doi: 10.1097/00019514-200310000-00009.

- Quanbeck AR, Gustafson DH, Ford JH, II, Pulvermacher A, French MT, McConnell KJ, McCarty D. Disseminating quality improvement: Study protocol for a large cluster randomized trial. Implementation Science. 2011;6(1):44. doi: 10.1186/1748-5908-6-44.

- Rogers EM. Diffusion of Innovations. 5th ed. New York: The Free Press, a division of Simon & Schuster, Inc; 2003.

- Roman PM, Abraham AJ, Rothrauff TC, Knudsen HK. A longitudinal study of organizational formation, innovation adoption, and dissemination activities within the National Drug Abuse Treatment Clinical Trials Network. Journal of Substance Abuse Treatment. 2010;38(Suppl 1):S44–S52. doi: 10.1016/j.jsat.2009.12.008.

- Santisteban D, Vega RR, Suarez-Morales L. Utilizing dissemination findings to help understand and bridge the research and practice gap in the treatment of substance abuse disorders in Hispanic populations. Drug and Alcohol Dependence. 2006;84S:S94–S101. doi: 10.1016/j.drugalcdep.2006.05.011.

- Shortell SM, O’Brien JL, Carman JM, Foster RW, Hughes EF, Boerstler H, O’Connor EJ. Assessing the impact of continuous quality improvement/total quality management: Concept versus implementation. Health Services Research. 1995;30(2):377–401.

- Substance Abuse and Mental Health Services Administration. Results from the 2011 National Survey on Drug Use and Health: Summary of National Findings. Rockville, MD: 2012a. NSDUH Series H-44, HHS Publication No. (SMA) 12-4713.

- Substance Abuse and Mental Health Services Administration. Data on Substance Abuse Treatment Facilities. Rockville, MD: Substance Abuse and Mental Health Services Administration; 2012b. National Survey of Substance Abuse Treatment Services (N-SSATS): 2011. BHSIS Series: S-64, HHS Publication No. (SMA) 12-4730. Table 4.4.

- Wisdom JP, Ford JH, Hayes RA, Hoffman K, Edmundson E, McCarty D. Addiction treatment agencies’ use of data: A qualitative assessment. Journal of Behavioral Health Services and Research. 2006;33(4):394–407. doi: 10.1007/s11414-006-9039-x.

- Young GJ, Charns MP, Shortell SM. Top manager and network effects on the adoption of innovative management practices: A study of TQM in a public hospital system. Strategic Management Journal. 2001;22(10):935–951.