Abstract

Cochlear implants (CIs) partially restore hearing to the deaf by directly stimulating the inner ear. In individuals fitted with CIs, lack of auditory experience due to loss of hearing before language acquisition can adversely impact outcomes. For example, adults with early-onset hearing loss generally do not integrate inputs from both ears effectively when fitted with bilateral CIs (BiCIs). Here, we used an animal model to investigate the effects of long-term deafness on auditory localization with BiCIs and approaches for promoting the use of binaural spatial cues. Ferrets were deafened either at the age of hearing onset or as adults. All animals were implanted in adulthood, either unilaterally or bilaterally, and were subsequently assessed for their ability to localize sound in the horizontal plane. The unilaterally implanted animals were unable to perform this task, regardless of the duration of deafness. Among animals with BiCIs, early-onset hearing loss was associated with poor auditory localization performance, compared with late-onset hearing loss. However, performance in the early-deafened group with BiCIs improved significantly after multisensory training with interleaved auditory and visual stimuli. We demonstrate a possible neural substrate for this by showing a training-induced improvement in the responsiveness of auditory cortical neurons and in their sensitivity to interaural level differences, the principal localization cue available to BiCI users. Importantly, our behavioral and physiological evidence demonstrates a facilitative role for vision in restoring auditory spatial processing following potential cross-modal reorganization. These findings support investigation of a similar training paradigm in human CI users.

Keywords: auditory cortex, cochlear implant, cross-modal plasticity, hearing loss, multisensory, sound localization

Introduction

Cochlear implants (CIs) can partially restore hearing to people with severe to profound sensorineural hearing loss. Implanting both ears rather than just one is receiving growing support, encouraged by improvements in sound localization and speech recognition over those provided with a single CI (Müller et al., 2002; van Hoesel and Tyler, 2003; van Hoesel, 2004, 2012; Brown and Balkany, 2007).

Age at onset of deafness appears to limit the capacity of individuals implanted in adulthood to realize the full benefits of CIs (Moore and Shannon, 2009). Although this is consistent with a critical role for auditory experience in shaping the developing brain (Kral and Eggermont, 2007; Hartley and King, 2010), it is also possible that sounds become less effective in activating auditory brain regions in deaf patients with CIs because of cross-modal takeover (Lee et al., 2001; Sharma et al., 2005; Sandmann et al., 2012). Thus, although cross-modal reorganization of auditory cortex may contribute to the development of superior visual discrimination abilities in the deaf (Bavelier et al., 2006; Lomber et al., 2010; Merabet and Pascual-Leone, 2010), it may reduce the capacity of such individuals to benefit subsequently from CI input (Sharma et al., 2002; Doucet et al., 2006).

Studies in humans and other species with normal hearing (NH) have revealed widespread multisensory influences on auditory cortical processing (Schroeder and Foxe, 2005; Bizley and King, 2012; Kayser et al., 2012). In particular, spatial selectivity in the auditory cortex is enhanced by the presence of spatially coincident visual stimuli (Bizley and King, 2008). This suggests that audiovisual training might provide a useful strategy for assisting adult bilateral CI (BiCI) users to learn to localize sound after early hearing loss.

The aim of this study was therefore to determine, using a ferret model of cochlear implantation, whether multisensory training can enhance auditory spatial abilities in early-deafened animals that received BiCIs in adulthood. However, because perception by human CI users tends to be dominated by vision when conflicting visual and auditory speech cues are available (Schorr et al., 2005), we wanted to avoid a situation in which the animals ignored their auditory inputs and made their localization responses principally or exclusively on the basis of concurrent and more reliable visual cues. Consequently, we adopted a multisensory training paradigm in which visual and auditory stimuli were presented separately in a randomly interleaved fashion.

Our results show that multisensory training can significantly enhance the auditory spatial abilities of early-deafened ferrets that received BiCIs in adulthood and that a corresponding improvement takes place in the cortical encoding of interaural level differences (ILDs), the principal cue to sound source location available to CI users (Verschuur et al., 2005). Current implantation programs in most countries worldwide do not recommend BiCIs in adults with prelingual hearing loss (Bond et al., 2009; Berrettini et al., 2011). Our results suggest, however, that this novel audiovisual training paradigm has the potential to provide many more individuals with the opportunity for hearing rehabilitation that affords binaural hearing.

Materials and Methods

Induction of hearing loss and cochlear implantation.

Sixteen ferrets, born in the University's animal care facility, were used in this study. All procedures were approved by a local ethical review committee and performed under license by the United Kingdom Home Office. Six ferrets were deafened around the onset of hearing (on postnatal day (P)30, “early-onset hearing loss”), 6 were deafened as adults (“late-onset hearing loss”), and the remaining 4 animals had NH. All deafened animals were implanted as adults in one ear (unilateral CI [UniCI]) or both ears (BiCI) (for details of individual animals, see Table 1; male to female distribution: 10 and 6, respectively).

Table 1.

Details of animals used in this studyᵃ

| ID | Postnatal age (days) at deafening | Postnatal age (days) at CI | Type of training | Duration (days) of CI use | Group | Cortical electrophysiology and age at recording |
|---|---|---|---|---|---|---|
| F0824 (NH) | — | — | Auditory | — | NH | NA |
| F0834 (NH) | — | — | Auditory | — | NH | NA |
| F0730 (NH) | — | — | Auditory | — | NH | NA |
| F0830 (NH) | — | — | Auditory | — | NH | NA |
| F0913 (BiCI) | 30 | 402 | Untrained | 118 | Early-onset hearing loss, without any training | Yes, 532 |
| F0914 (BiCI) | 30 | 412 | Untrained | 142 | Early-onset hearing loss, without any training | Yes, 571 |
| F0915 (BiCI) | 30 | 427 | Auditory and multisensory | 138 | Early-onset hearing loss + multisensory training | Yes, 583 |
| F0827 (BiCI) | 30 | 415 | Auditory and multisensory | 171 | Early-onset hearing loss + multisensory training | Yes, 603 |
| F0756 (UniCI) | 30 | 222 | Auditory and multisensory | 164 | Early-onset hearing loss + multisensory training | No |
| F0870 (UniCI) | 30 | 368 | Auditory and multisensory | 172 | Early-onset hearing loss + multisensory training | No |
| F0747 (BiCI) | 183 | 209 | Untrained | 0 | Late-onset hearing loss, without any training | Yes, 209 |
| F0748 (BiCI) | 190 | 212 | Untrained | 0 | Late-onset hearing loss, without any training | Yes, 212 |
| F0808 (BiCI) | 215 | 501 | Auditory alone | 150 | Late-onset hearing loss + auditory training | Yes, 663 |
| F0810 (BiCI) | 208 | 497 | Auditory alone | 132 | Late-onset hearing loss + auditory training | Yes, 642 |
| F0868 (UniCI) | 256 | 312 | Auditory alone | 141 | Late-onset hearing loss + auditory training | No |
| F0864 (UniCI) | 209 | 278 | Auditory alone | 122 | Late-onset hearing loss + auditory training | No |

ᵃAll durations are in days. A CI use duration of “0” indicates that electrophysiological recordings immediately followed the cochlear implantation without recovery.

All 4 NH animals were used for assessment of sound localization performance without any surgical interventions. Of the 6 ferrets with late-onset hearing loss, 4 were trained on the free-field sound localization task after induction of hearing loss and fitting of unilateral (n = 2) or bilateral (n = 2) CIs as adults (“late-onset hearing loss, with auditory training”), whereas the remaining 2 animals were used only for electrophysiological recording after bilateral implantation (“late-onset hearing loss, without any training”). Similarly, of the 6 animals deafened early in development (P30), 4 were implanted either unilaterally (n = 2) or bilaterally (n = 2) in adulthood and were then trained on both the sound localization task and a multisensory paradigm that included auditory and visual stimuli (“early-onset hearing loss, with multisensory training”). The other 2 early-deafened animals received BiCIs in adulthood and did not undergo any training before recording (“early-onset hearing loss, without any training”).

The techniques of deafening with aminoglycoside administration, surgical implantation of an intracochlear array, and chronic intracochlear stimulation with monitoring for electrode integrity and efficacy of stimulation were performed in accordance with protocols described previously (Hartley et al., 2010). Deafness was induced by daily subcutaneous injections of neomycin sulfate (50 mg/kg/d, Sigma-Aldrich) and confirmed by lack of auditory brainstem responses to trains of broadband noise bursts presented at ≥95 dB SPL. Animals were implanted with multichannel electrode arrays comprising 7 active intracochlear electrodes and 1 extracochlear electrode. Briefly, an incision was made behind the ear followed by exposure of the mastoid cavity. After removing the bone overlying the bulla, the basal turn of the cochlea was identified and the electrode array inserted into the scala tympani via the round window. The lead wire from the array was secured to the pericranium and tunneled under the skin to exit in the interscapular region.

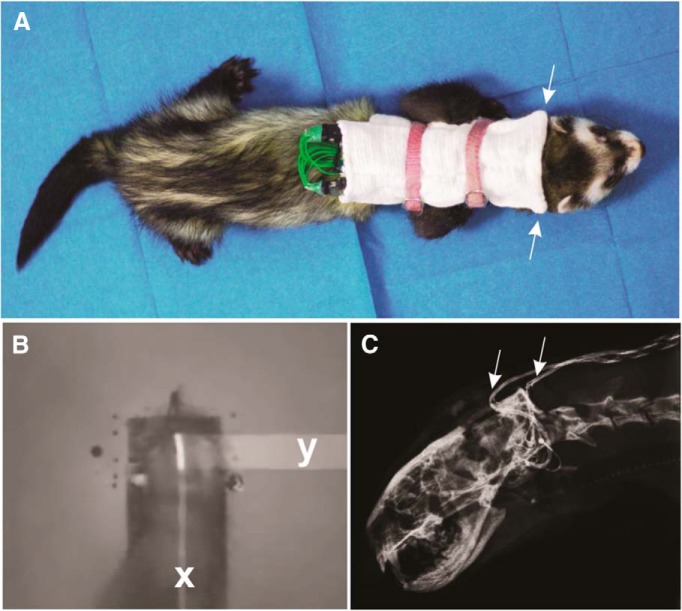

After postoperative recovery, the electrode arrays were connected via the lead wires to clinical speech processors (ESPrit 3G; Cochlear UK) carried within modified backpacks (Fig. 1). Where provided, chronic electrical stimulation was then commenced, using a continuous interleaved sampling strategy at 500 pulses/s/channel in the monopolar mode, at preset threshold and comfort levels for each animal. Using CustomSound software (Cochlear UK), comfort and threshold levels were initially set 6 dB above and 3 dB below the electrically evoked auditory brainstem response (EABR) threshold, respectively. These levels were readjusted every 3–4 weeks using electrically evoked compound action potentials measured from each channel and ear. All biphasic stimuli were scaled and presented within this range. Stimulation was provided for 8–10 h/d, every day, and impedances were measured daily via CustomSound software to assess the integrity of the electrodes.

Figure 1.

Behavioral training methods and cochlear implantation in ferrets. A, A ferret fitted with BiCIs and speech processors carried within a backpack. Arrows point to the location of microphones. Backpacks were secured by harnesses that did not hinder the mobility of the animal. B, Measurement of head-orienting accuracy. A strip of adhesive reflective tape was placed on the animal's head (white strip), and changes in the x-y coordinates of the tape were captured at a rate of 50 Hz by an infrared camera mounted directly overhead. C, x-ray illustrating the position of the implant lead wires (arrows) that were tunneled subcutaneously to reach the bulla, behind the ear.

Animal behavior.

Ferrets used in the behavioral testing paradigm were housed in standard laboratory cages. During the testing process, water access was regulated by allowing the animals to receive their daily water needs from the testing chamber through water rewards for correct trials. At the end of the day, they were provided with supplemental water to ensure that their daily needs were met according to their body weight (60–70 ml/kg). The testing chamber consisted of a circular arena enclosed within a sound-attenuated chamber lined internally with sheets of acoustic foam (MelaTech; Hodgson & Hodgson). Twelve loudspeakers (FRS 8; Visaton) and their associated water spouts were positioned at 30° intervals around the arena and hidden from the animal's view by a muslin curtain. The animal initiated a trial by standing on a centrally located platform and licking a water spout attached at its anterior end. This triggered presentation, via Tucker-Davis Technologies System 2 hardware, of a single burst of broadband noise randomly from one of the loudspeakers. An inverse transfer function was applied to ensure a flat spectrum in the output from each loudspeaker, and the noise bursts were low-pass filtered at 30 kHz. Full details are provided by Nodal et al. (2008).

For each trial, correct responses were rewarded by a predetermined amount of water from a spout positioned in front of the loudspeaker. The animals were initially trained by presenting continuous noise until one of the peripheral reward spouts was licked. This was continued until the implanted animals were able to consistently localize the source of the stimulus to an accuracy of >70%, after which the task was switched to 2000 ms stimuli. Shorter stimulus durations were also used, but because some of the ferrets performed so poorly, all the data presented here were obtained using a duration of 2000 ms to ensure that sufficient numbers of trials were performed for statistical analysis. Stimulus level was roved between trials (from 56 to 84 dB SPL in 7 dB steps for animals with NH, and from 62 to 66 dB SPL in 1 dB steps for implanted animals) to minimize the possibility of the ferrets responding on the basis of absolute level cues. For implanted animals, these levels were deliberately kept below the threshold for activation of the automatic gain control mechanisms of the speech processors, as this could have altered the binaural cue values provided. The ferrets were allowed up to 15 s to respond after the onset of the stimulus, after which a new trial had to be initiated by the animal returning to the central platform. Our software registered the reward spout licked by the animal and converted this to a percentage correct score and error magnitude and direction for each trial. The overall percentage correct score and mean unsigned error magnitude (averaged across all loudspeaker locations tested) were then calculated.
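The scoring described above can be illustrated with a minimal sketch. It assumes that each trial is stored as a pair of target and response azimuths in degrees and that the unsigned error is the shortest angular distance around the circular array. The function names and the example session are hypothetical and are not taken from the software used in the study.

```python
from statistics import mean


def unsigned_error_deg(target_deg: float, response_deg: float) -> float:
    """Shortest angular distance between target and response (0 to 180 degrees)."""
    diff = abs(target_deg - response_deg) % 360
    return min(diff, 360 - diff)


def score_session(trials: list[tuple[float, float]]) -> dict[str, float]:
    """Summarise (target_deg, response_deg) pairs from one session.

    Assumption: the mean unsigned error is taken over all trials here.
    Restricting it to incorrect trials only would be a one-line change.
    """
    errors = [unsigned_error_deg(t, r) for t, r in trials]
    n_correct = sum(1 for e in errors if e == 0)
    return {
        "percent_correct": 100.0 * n_correct / len(errors),
        "mean_unsigned_error_deg": mean(errors),
    }


# Hypothetical session with speakers at 30 degree intervals.
example_trials = [(0, 0), (30, 60), (-150, 180), (90, 90)]
summary = score_session(example_trials)
```

The wrap-around step matters for a full 360° array: a response at 180° to a target at −150° is a 30° error, not a 330° error.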

We also monitored the initial head-orienting responses made after sound onset, using an overhead infrared camera to track, at a frame rate of 50 Hz, the Cartesian coordinates of a reflective strip placed on the animal's head (Fig. 1B). Movements were recorded once a trial was initiated, and these were converted to angular distances traversed by the head in the first second after stimulus onset. The initial head turn made by the animal was considered over when a change in the direction of movement was recorded. The final head bearing was calculated as the mean angle over the last three frames of this initial movement or, if a change in direction was not observed, over the last three frames recorded during the sampling period.
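A minimal sketch of the final head bearing rule is given below. It assumes that the tracked x-y coordinates have already been converted to one head angle per frame, starting at stimulus onset and free of 360° wrap-around. The function name and the example traces are hypothetical.

```python
from statistics import mean

FRAME_RATE_HZ = 50  # camera frame rate given in the text


def final_head_bearing(angles_deg: list[float], window_s: float = 1.0) -> float:
    """Mean head angle over the last three frames of the initial head turn.

    The initial turn ends at the first reversal in the direction of movement.
    If no reversal occurs, the last three frames of the sampled window are used.
    """
    frames = angles_deg[: int(window_s * FRAME_RATE_HZ)]
    end = len(frames)   # default: no reversal found, use the whole window
    direction = 0       # sign of the ongoing movement (0 means not moving yet)
    for i in range(1, len(frames)):
        step = frames[i] - frames[i - 1]
        sign = (step > 0) - (step < 0)
        if sign == 0:
            continue    # no movement between these two frames
        if direction == 0:
            direction = sign
        elif sign != direction:
            end = i     # frames[i] is the first frame after the reversal
            break
    return mean(frames[max(0, end - 3):end])


# Hypothetical traces: one with a reversal after 30 degrees, one without.
with_reversal = final_head_bearing([0, 5, 12, 20, 26, 30, 28, 25])
without_reversal = final_head_bearing([0, 10, 20, 30, 40])
```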

After completing the auditory sessions, the ferrets in the “early-onset hearing loss with multisensory training” group were trained to localize both auditory and visual stimuli by randomly interleaving noise bursts from the seven loudspeakers in the frontal hemifield with 40 ms light flashes from light-emitting diodes mounted above each one. The visual stimuli were then withdrawn and the animals retested with sound alone using the same set of loudspeakers.

Cortical electrophysiology.

Animals were prepared for electrophysiological recordings after induction and maintenance of general anesthesia with medetomidine hydrochloride (Domitor; 0.022 mg/kg; Pfizer) and ketamine (Ketaset; 5 mg/kg; Fort Dodge Animal Health). The trachea was intubated, fluid (saline 5 ml/h) was provided intravenously, and the animal's core temperature, ECG, and end-tidal CO2 were monitored throughout.

Stimuli were delivered during the recordings via the existing intracochlear electrode arrays, unless high electrode impedance measurements indicated an open circuit, suggesting an electrode breakage, in which case we reimplanted new arrays. A craniotomy was then performed over the middle ectosylvian gyrus and the dura removed to expose the primary auditory cortex (A1) (Nelken et al., 2004; Bizley et al., 2005). A digitally controlled micromanipulator (Leitz-Wetzlar, SUI) was used to insert 16-channel single-shank silicon probes (Neuronexus), which were positioned approximately orthogonal to the cortical surface.

Stimuli delivered to the intracochlear arrays in a wide bipolar configuration (∼1.5 mm between the apical, active electrode and the basal, return electrode) comprised 1 ms biphasic, square-wave electrical pulses presented at average binaural levels (ABLs) of 0–9 dB relative to the electrical threshold defined by EABR waveforms in 3 dB steps, at a rate of 30 pulses/min. The EABR threshold was defined as the minimum stimulus intensity producing a response amplitude of at least 0.4 μV for wave IV (3–3.5 ms after stimulus onset) of the EABR. As in previous studies of binaural sensitivity in ferret auditory cortex (Campbell et al., 2006), the ILD was varied without changing the ABL by increasing the stimulus level in one ear and reducing it by the same amount in the other ear. A total of 21 stimuli with ILDs covering a range of ±4 dB in 0.4 dB steps were presented at each ABL and repeated 15 times in a randomly interleaved fashion. Recordings were then digitized and amplified using Tucker-Davis Technologies System 3 hardware.
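The stimulus set described above can be sketched as follows, under the assumption that the level in each ear is the ABL plus or minus half the ILD, which changes the ILD while leaving the ABL fixed. The sign convention (positive ILD meaning a higher level in the left ear), the function names, and the seeding are illustrative assumptions rather than details from the study.

```python
import random

ILDS_DB = [round(-4.0 + 0.4 * i, 1) for i in range(21)]  # -4.0 to +4.0 dB
ABLS_DB = [0, 3, 6, 9]                                   # dB re EABR threshold
N_REPEATS = 15


def ear_levels(abl_db: float, ild_db: float) -> tuple[float, float]:
    """Return (left, right) levels whose mean is the ABL and difference the ILD.

    Assumption: a positive ILD means the left ear receives the higher level.
    """
    return abl_db + ild_db / 2, abl_db - ild_db / 2


def build_trial_list(seed=None) -> list[tuple[float, float]]:
    """All (ABL, ILD) combinations, each repeated 15 times, in random order."""
    trials = [
        (abl, ild)
        for abl in ABLS_DB
        for ild in ILDS_DB
        for _ in range(N_REPEATS)
    ]
    random.Random(seed).shuffle(trials)
    return trials


trials = build_trial_list(seed=0)
left, right = ear_levels(6, 2.0)
```

With these values the full set contains 4 × 21 × 15 = 1260 presentations.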

Data analysis.

Extracellular recordings were analyzed offline using automated k-means clustering of the spike waveforms. Clusters that displayed a clear refractory period within the autocorrelogram were classified as single units, and all others were deemed to be multiunit, although no differences in ILD sensitivity were found between them.

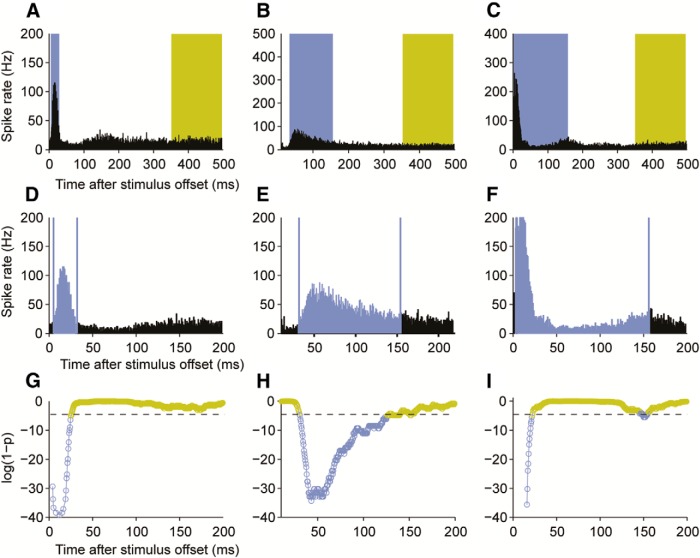

Neural signals were exported to MATLAB (MathWorks) software for further analysis. The response window was assigned a delay of 5 ms from stimulus onset to ensure that it was not contaminated by the electrical stimulus artifact. Significant responses were identified within the first 105 ms after stimulus onset, using Victor's binless method for estimating the stimulus-related information in the spike trains (Victor, 2002) (Fig. 2). Rate-ILD functions were then obtained. Although some units exhibited well-defined, monotonic rate-ILD functions, the complexity of the functions observed in other units precluded automated fitting of curves. Once the response window duration had been optimized for each stimulus within the first 105 ms after stimulus onset by the binless algorithm, peak and mean evoked rates were calculated as a function of ILD and statistically analyzed. If significant differences were observed in the initial ANOVA, a pairwise multiple-comparisons test (Tukey's Honestly Significant Difference test) was used to examine differences between experimental groups.

Figure 2.

Representative response patterns observed in three different A1 units after intracochlear stimulation via a CI. A–C, Peristimulus time histograms, over which response periods (blue) and periods of spontaneous activity (yellow) have been superimposed. The start and end times of the response window were determined for each unit using a binless algorithm in which a sliding window was moved forward in time in 1 ms steps, comparing the firing rate within that window with that in a preset spontaneous window. The algorithm automatically resizes the response window based on a trail of activity, and this is terminated when the firing rate is no longer significantly different from that in the spontaneous window. D–F, To demonstrate algorithm performance, higher-resolution peristimulus time histograms are plotted for these same units, with the estimated response window indicated by the blue vertical lines. Significant responses were most commonly restricted to the first 50 ms (D), although longer latency (E) and more prolonged (F) responses were also observed. G–I, Log(1-probability) of responses for these units as a function of time, where the threshold of significance is indicated by the horizontal broken line. Blue circles represent significant evoked activity. Yellow circles represent activity that is no different from spontaneous levels. Because evoked activity was rarely observed beyond 100 ms, initial bounds of 5–105 ms were set for all units within which the response window was optimized using this procedure.
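The sliding-window idea can be illustrated with a simplified sketch. It is not Victor's binless method: as a stand-in, it compares the spike count in each window against a Poisson expectation derived from the spontaneous rate. The window width (5 ms), the significance level (0.01), and all function names are assumptions made for illustration only.

```python
import math


def poisson_sf(k: int, lam: float) -> float:
    """P(X >= k) for X ~ Poisson(lam)."""
    if k <= 0:
        return 1.0
    cdf = sum(math.exp(-lam) * lam**i / math.factorial(i) for i in range(k))
    return max(0.0, 1.0 - cdf)


def response_window(spikes_ms, n_trials, spont_rate_hz,
                    start_ms=5, stop_ms=105, width_ms=5, alpha=0.01):
    """Return (onset_ms, offset_ms) of the first run of significant windows.

    spikes_ms holds spike times pooled over n_trials, relative to stimulus onset.
    Returns None when no window exceeds the spontaneous expectation.
    """
    expected = spont_rate_hz * (width_ms / 1000.0) * n_trials
    onset = offset = None
    for t in range(start_ms, stop_ms - width_ms + 1):  # 1 ms steps
        count = sum(1 for s in spikes_ms if t <= s < t + width_ms)
        if poisson_sf(count, expected) < alpha:
            if onset is None:
                onset = t
            offset = t + width_ms
        elif onset is not None:
            break  # firing has returned to spontaneous levels
    return None if onset is None else (onset, offset)


# Hypothetical unit: a burst of pooled spikes between 20 and 30 ms.
burst = [20 + 0.5 * i for i in range(20)]
window = response_window(burst, n_trials=15, spont_rate_hz=2.0)
```

Because each window spans several milliseconds, the estimated onset and offset in this sketch are smeared by up to one window width around the true burst.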

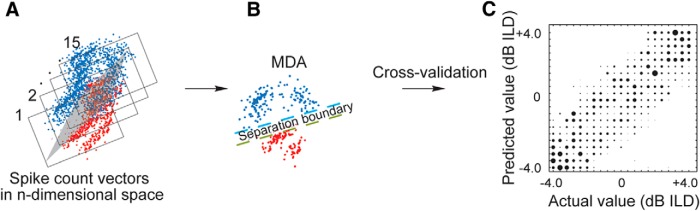

To assess how well the cortical responses represent the spatial information available to the animals, we performed a multiple discriminant analysis (MDA) to determine whether we could predict either the ILD or the ABL from the neural responses (Fig. 3). The optimal response window was first determined using the binless algorithm as detailed above. We subsequently used a linear cross-validation model (Manel et al., 1999; Petrus et al., 2014), which was fitted to a matrix of responses to predict the identity of “missing stimuli” that had been removed in turn (Campbell et al., 2010), yielding confusion matrices (e.g., Fig. 3C). Each confusion matrix contains the proportion of classifications made for the predicted stimulus identity (y-axis) plotted against the true identity of the stimulus (x-axis). This was done for both ILD (ignoring ABL) and ABL (ignoring ILD). We then quantified the similarity between the known identity of the stimulus (x) and its predicted identity (y), as determined by the cross-validation model, by calculating the mutual information (MI) between the two according to the following equation:

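$$\mathrm{MI}(x; y) = \sum_{x,y} p(x, y)\,\log_2 \frac{p(x, y)}{p(x)\,p(y)}$$

where p(x, y) is the joint probability of true stimulus x and predicted stimulus y, and p(x) and p(y) are the corresponding marginal probabilities.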
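As a worked illustration, the mutual information between true and predicted stimulus identity can be computed directly from a confusion matrix of classification counts. The sketch below assumes the standard definition of mutual information with a base-2 logarithm (giving values in bits). The function name and the example matrices are hypothetical.

```python
import math


def mutual_information_bits(confusion: list[list[int]]) -> float:
    """MI between true and predicted stimulus from a matrix of counts.

    confusion[y][x] is the number of trials on which true stimulus x
    was classified as stimulus y.
    """
    total = sum(sum(row) for row in confusion)
    n_true = len(confusion[0])
    p_y = [sum(row) / total for row in confusion]
    p_x = [sum(row[x] for row in confusion) / total for x in range(n_true)]
    mi = 0.0
    for y, row in enumerate(confusion):
        for x, count in enumerate(row):
            if count:  # empty cells contribute nothing to the sum
                p_xy = count / total
                mi += p_xy * math.log2(p_xy / (p_x[x] * p_y[y]))
    return mi


perfect = mutual_information_bits([[10, 0], [0, 10]])  # all on the diagonal
chance = mutual_information_bits([[5, 5], [5, 5]])     # predictions unrelated
```

A perfectly diagonal two-stimulus matrix gives 1 bit, whereas a uniform matrix gives 0 bits.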

We reduced the positive bias associated with MI estimates via a bootstrapping approach (i.e., by resampling the datasets with randomly reassigned stimulus labels and subtracting the mean “chance” MI from the original estimates to obtain the bias-corrected MI) (Panzeri et al., 2007). A permutation test was then used to determine whether the observed difference in the bias-corrected MI between groups of animals was significant or not, by comparing this difference with that obtained when we pooled the data from the two distributions after random reassignment of stimulus labels. For both ILD and ABL, we calculated the mean unsigned classification error in dB from the classifications made away from the diagonal in the confusion matrices.
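The bias correction and group comparison can be sketched as follows. Two assumptions are made here: the chance MI is estimated by shuffling the true stimulus labels against the predictions, and the permutation test reassigns pooled per-unit MI values to the two groups at random. The function names, the number of shuffles, and the example values are hypothetical.

```python
import math
import random
from collections import Counter
from statistics import mean


def mi_bits(true_labels, predicted_labels) -> float:
    """Plug-in mutual information (bits) between two label sequences."""
    n = len(true_labels)
    joint = Counter(zip(true_labels, predicted_labels))
    px, py = Counter(true_labels), Counter(predicted_labels)
    return sum(
        (c / n) * math.log2(c * n / (px[x] * py[y]))
        for (x, y), c in joint.items()
    )


def bias_corrected_mi(true_labels, predicted_labels, n_shuffles=200, seed=0):
    """Raw MI minus the mean MI obtained with shuffled stimulus labels."""
    rng = random.Random(seed)
    shuffled = list(true_labels)
    chance = []
    for _ in range(n_shuffles):
        rng.shuffle(shuffled)
        chance.append(mi_bits(shuffled, predicted_labels))
    return mi_bits(true_labels, predicted_labels) - mean(chance)


def permutation_test(group_a, group_b, n_permutations=2000, seed=0) -> float:
    """Two-sided p value for the difference in group means."""
    rng = random.Random(seed)
    observed = abs(mean(group_a) - mean(group_b))
    pooled = list(group_a) + list(group_b)
    hits = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        a, b = pooled[:len(group_a)], pooled[len(group_a):]
        if abs(mean(a) - mean(b)) >= observed:
            hits += 1
    return (hits + 1) / (n_permutations + 1)


# Hypothetical data: perfect classification of two stimuli over 20 trials.
labels = [0, 1] * 10
corrected = bias_corrected_mi(labels, labels)
p_value = permutation_test([1.0, 1.1, 0.9, 1.05, 0.95],
                           [0.1, 0.2, 0.0, 0.15, 0.05])
```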

Figure 3.

Schematic showing the steps taken in the MDA used in this study. A, After subtracting the spontaneous rates and z-scoring, a principal components analysis was performed on the spike count vectors (represented by the dots) for each unit at each ILD-ABL combination (21 ILDs × 4 ABLs) for each of 15 stimulus repetitions (indicated by incremental counts from 1 to 15). In this case, the data are shown projected along the first (PC1, blue) and second (PC2, red) principal components. A feature space was then constructed composed of the 10 principal components that account for a substantial portion of the variance within the dataset. The MDA estimates the probability with which each response vector was assigned to the correct ILD/ABL value according to the similarity between the responses to each of the 15 stimulus repetitions. The centroid of each vector was calculated; and for every subsequent repeat, this was compared with the centroid of a previous (random) repeat of the stimulus. The data were then projected on to a reduced-dimension space (B), and the spike count vectors were separated into discrete and dissimilar clusters (dashed lines, also represented by the gray region in A). Once the classifier was “trained” in this fashion, a random spike count vector was removed from the dataset and the classifier run to estimate the value of this missing stimulus. The probability of assigning a spike count vector to its unique stimulus was then estimated by this cross-validation process. C, The performance of this classifier was evaluated by comparing the actual ILD or ABL value (x-axis) with the predicted value (y-axis), where perfect performance is indicated by the 45° diagonal, and quantified by estimating the mutual information between the actual and predicted values for both ILD and ABL.
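The cross-validation step can be illustrated with a simplified sketch. It substitutes a leave-one-out nearest-centroid classifier for the principal components analysis and MDA used in the study, so it shows only how a held-out response is assigned to a stimulus and tallied in a confusion matrix. The function names and the example responses are hypothetical.

```python
def centroid(vectors):
    """Element-wise mean of a list of equal-length response vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def sq_dist(a, b):
    """Squared Euclidean distance between two vectors."""
    return sum((p - q) ** 2 for p, q in zip(a, b))


def leave_one_out_confusion(responses):
    """Classify each held-out response by its nearest stimulus centroid.

    responses maps a stimulus label (for example an ILD in dB) to a list of
    response vectors, one per repetition.
    Returns confusion[predicted][true] as counts.
    """
    labels = sorted(responses)
    confusion = {p: {t: 0 for t in labels} for p in labels}
    for true_label in labels:
        for i, held_out in enumerate(responses[true_label]):
            best_label, best_dist = None, float("inf")
            for label in labels:
                train = responses[label]
                if label == true_label:
                    train = train[:i] + train[i + 1:]  # drop held-out trial
                if not train:
                    continue
                d = sq_dist(held_out, centroid(train))
                if d < best_dist:
                    best_label, best_dist = label, d
            confusion[best_label][true_label] += 1
    return confusion


# Hypothetical two-dimensional responses to two ILDs, three repeats each.
example = {
    -4.0: [[1.0, 0.0], [1.2, 0.1], [0.9, -0.1]],
    4.0: [[5.0, 5.0], [5.1, 4.9], [4.8, 5.2]],
}
confusion = leave_one_out_confusion(example)
```

Counts on the diagonal (predicted equal to true) correspond to the 45° line of perfect performance in Figure 3C.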

Results

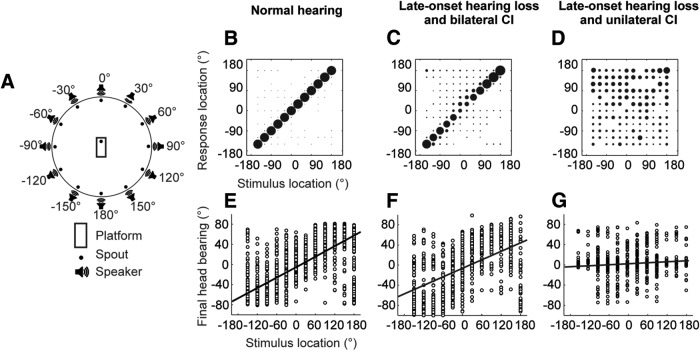

Sound localization following bilateral cochlear implantation in animals with late-onset hearing loss

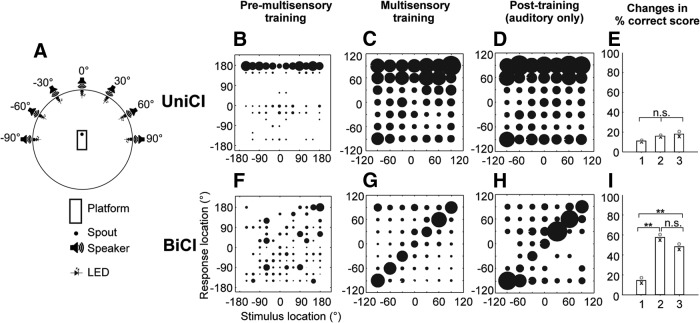

Ferrets were trained by positive conditioning to localize broadband noise bursts (2000 ms) from one of 12 speakers mounted in a circular array (Fig. 4A). Localization accuracy was greater in animals with NH (Fig. 4B) than in those with late-onset hearing loss and BiCIs (Fig. 4C). Nevertheless, both groups of animals performed significantly above chance, whereas this was not the case for those with a UniCI (Fig. 4D). A similar relationship was seen between the head-orienting response (“final head bearing”) and the target location (Fig. 4E–G), with a clear correlation between the two for ferrets with NH (Fig. 4E) and those with BiCIs (Fig. 4F), but not for ferrets with a UniCI (Fig. 4G).

Figure 4.

Sound localization after unilateral or bilateral cochlear implantation in ferrets that were deafened in adulthood. A, Testing chamber with 12 loudspeakers arranged circumferentially. A trial was initiated when the animal mounted the central platform and licked the start spout; a fluid reward was provided if the animal then approached and licked the spout adjacent to the loudspeaker from which the stimulus had been presented. B–D, Stimulus–response plots for ferrets with NH (B, n = 4), and in animals with late-onset hearing loss and either BiCIs (C, n = 2) or a UniCI (D, n = 2). The stimuli were broadband noise bursts with a duration of 2000 ms. The size of the dots indicates the proportion of responses made to each loudspeaker location. Although a clear correlation between the stimulus and response locations was observed for the control and bilaterally implanted ferrets, this was not the case for the animals with a CI in one ear only (F(2,72) = 47.24, p < 0.001, ANOVA; post hoc comparisons revealed significant differences between each group, p < 0.001). E–G, Stimulus–response plots showing the distribution of final head bearings as a function of stimulus location, for NH animals (E) and those with late-onset hearing loss and BiCIs (F) or a UniCI (G). Significant group differences in the slopes of the best-fitting linear regressions were found for these head-orienting data (F(2,5693) = 8.03, p < 0.001; ANOVA). Post hoc comparisons showed that all slopes were significantly different from each other (p < 0.001) and, importantly, that the slope of the regression line fitted to the head-orienting data from the UniCI animals was not significantly different from zero (p = 0.16), indicating that these animals were unable to localize these sounds.

These data therefore indicate that BiCIs can restore the ability of ferrets with late-onset hearing loss to localize long-duration noise bursts. Importantly, this was observed both for the approach-to-target responses, where the animals had to select which of the 12 loudspeaker/reward–spout combinations most closely matched the perceived sound direction, and the initial head-orienting responses. Localization of such long-duration sounds is potentially a closed-loop process if the animals reach the peripheral reward spout while the stimulus is still present. Measurements of response time (i.e., the time from stimulus onset to the animal licking a peripheral reward spout) indicated that this was the case for the NH ferrets (NH, mean ± SD: 1.30 ± 0.18 s), whereas the implanted ferrets typically reached the reward spout just after the stimulus had terminated (mean ± SD: BiCI = 2.17 ± 0.10 s; UniCI = 2.2 ± 0.02 s). The pronounced difference in performance between the unilateral and BiCI groups cannot therefore be attributed to the relative speed with which they make their responses. Moreover, the similarity in the latency of the saccade-like head-orienting movements (mean ± SD: NH = 138.54 ± 19.68 ms; BiCI = 172.26 ± 84.99 ms; UniCI = 186.41 ± 11.01 ms) indicates that the greater orientation accuracy observed in the NH and bilaterally implanted ferrets does not depend on the later part of the sound.

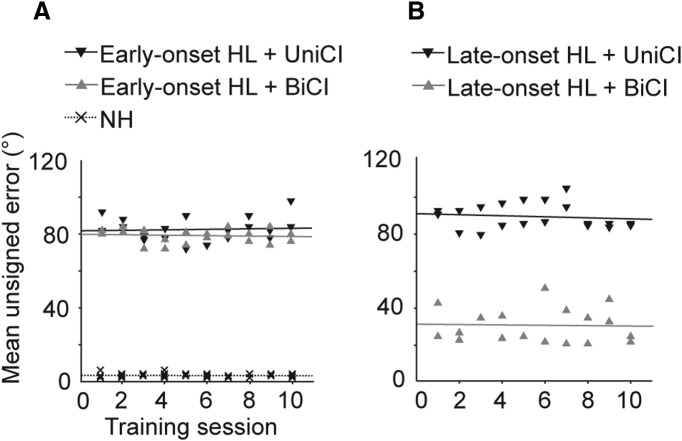

Effect of age at onset of hearing loss on sound localization

The ability of ferrets with BiCIs to localize sound varied with the age at onset of deafness. Thus, ferrets with BiCIs localized sound significantly more accurately following late-onset hearing loss (Fig. 5B) than when hearing loss was induced at P30 (Fig. 5A; ANOVA, F(2,5) = 325.13, p = 0.003), as illustrated by the magnitude of the mean unsigned errors across all 12 loudspeaker positions. In contrast, no difference in performance was found in the ferrets with UniCIs according to the age at onset of hearing loss because sound localization accuracy was no better than chance after implantation in animals with both early- and late-onset hearing loss (Fig. 5A,B). This finding is consistent with a critical role for auditory experience in shaping the development of the brain circuits responsible for processing binaural spatial cues.

Figure 5.

Effect of training on auditory localization accuracy. A, Magnitude of the unsigned errors averaged for all incorrect trials and speaker locations over 10 consecutive training sessions for NH ferrets, and for ferrets with early-onset deafness that were fitted with a UniCI (n = 2) or BiCIs (n = 2) as adults. Each symbol represents a different animal. HL, Hearing loss. B, Equivalent data for ferrets with late-onset deafness and a UniCI (n = 2) or BiCIs (n = 2). In each case, the slopes of the fitted regression lines did not differ either between the groups (ANCOVA, p = 0.6) or from zero (t tests, p = 0.59).

The performance of the ferrets on this sound localization task was very stable. This is illustrated in Figure 5 by plotting the mean unsigned error for different animals as a function of testing session. Even after 10 consecutive testing sessions (554–619 trials in each group), no improvement in performance was seen in any group, regardless of age at onset of hearing loss or whether the animals had been implanted in one or both ears.

Effects of multisensory training on sound localization in animals with early-onset hearing loss

We next investigated whether repeated training using a localization task that interleaves auditory and visual stimuli (Fig. 6A) might facilitate auditory learning in the bilaterally implanted ferrets with early-onset hearing loss. In contrast to the previous sound-alone task, both visual and auditory stimuli were restricted to the frontal hemifield (±90°) during multisensory training to ensure that the LEDs were positioned within the animals' visual field.

Figure 6.

Effect of multisensory training on sound localization accuracy in ferrets with early-onset hearing loss. A, Testing chamber with seven loudspeakers and light-emitting diodes arranged at 30° intervals in the frontal hemifield. Auditory performance of the ferrets with a UniCI (B–E) or BiCIs (F–I) is grouped by training experience. B, F, Stimulus-response plots using all 12 loudspeakers covering the full 360° of azimuth (as in Fig. 4A) before the start of training with the multisensory setup. At this stage, no difference was found between the performance of animals with a UniCI and those with BiCIs. C, G, Stimulus-response plots for the final session of multisensory training. Subsequently, the visual stimuli were discontinued and animals were trained with auditory stimuli only for another 10 sessions. D, H, Stimulus-response plots for the last of these sound-only sessions. E, I, Mean percentage correct scores before, during, and after multisensory training. No change in auditory localization performance (proportion of correct trials) was found in the ferrets with a single CI, whereas multisensory training resulted in a significant improvement in the bilaterally implanted animals, which persisted after removal of the visual cues. Statistical comparisons are provided in text.

As shown by the magnitude of the localization errors in Figure 5A, ferrets with early-onset hearing loss and either UniCI or BiCIs performed poorly on the auditory localization task. The distribution of their approach-to-target responses across the 12 loudspeaker positions is illustrated in Figure 6B, F. The animals with a UniCI showed a marked bias in their responses toward a single location (directly behind; Fig. 6B). This bias was not seen in the bilaterally implanted animals, but their percentage correct scores were nonetheless close to the level expected by chance, and not significantly different from UniCI animals (Fig. 6B, F; χ2 test, χ2 = 0.41, p = 0.52). Although the distribution of their responses changed somewhat, no improvement in auditory localization accuracy was seen in the ferrets with a UniCI either during the multisensory training trials or when the animals were subsequently tested with sound alone (Fig. 6C–E; χ2 = 5.36, df = 2, p = 0.07). In contrast, the ferrets with early-onset hearing loss and BiCIs exhibited a significant improvement during multisensory training in their ability to localize sound, which, importantly, persisted when they were subsequently reassessed in the absence of visual cues (Fig. 6G–I). This improvement was maintained in all subsequent sessions (≥20) performed in the absence of visual cues (χ2 = 303.3, df = 2, p < 0.001; post hoc pairwise comparisons with Bonferroni correction). Because sound localization performance in these animals was assessed during and after multisensory training using stimuli presented from the frontal hemifield only (Fig. 6A), we compared their performance to that of the ferrets with late-onset hearing loss and BiCIs over corresponding stimulus locations.
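The χ2 comparisons above can be sketched as a Pearson test on a table of correct and incorrect trial counts. The sketch below is illustrative only: the trial counts are hypothetical, the function names are not from the study, and the p-value helper covers only one and two degrees of freedom, for which simple closed forms exist. The text does not state the degrees of freedom for the first comparison; one degree of freedom (a 2 × 2 table of group by outcome) is assumed here.

```python
import math


def chi_square_independence(table):
    """Pearson chi-square statistic and degrees of freedom for an r x c count table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / total
            stat += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return stat, df


def chi_square_p_value(stat, df):
    """Upper-tail p-value; this sketch handles df = 1 and df = 2 only."""
    if df == 1:
        return math.erfc(math.sqrt(stat / 2.0))
    if df == 2:
        return math.exp(-stat / 2.0)
    raise ValueError("this sketch only handles df = 1 or df = 2")


# Hypothetical counts: rows are groups, columns are (correct, incorrect) trials.
stat, df = chi_square_independence([[30, 70], [30, 70]])
print(stat, df, chi_square_p_value(stat, df))
```

Under these assumptions, the reported statistics are consistent with the reported p-values: χ2 = 0.41 with one degree of freedom gives p ≈ 0.52, and χ2 = 5.36 with df = 2 gives p ≈ 0.07.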

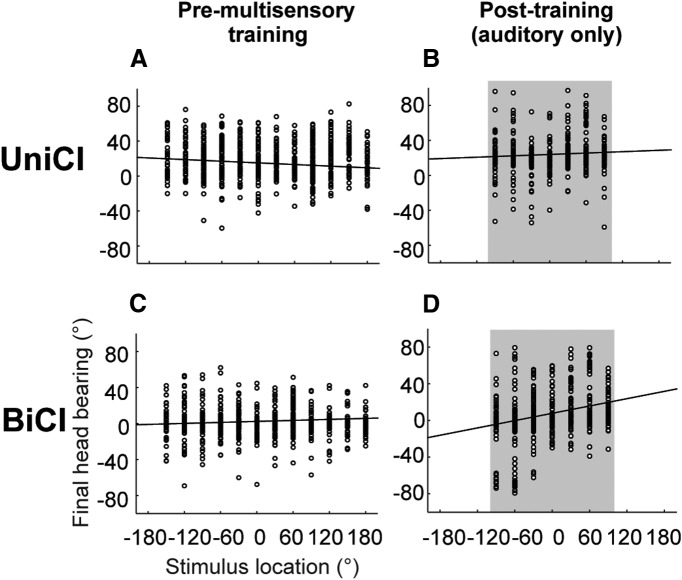

Similar changes were induced by multisensory training in the accuracy of the sound-evoked head-orienting movements made by early-deafened ferrets with BiCIs. This is shown in Figure 7 by comparing the relationship between stimulus location and final head bearing before (Fig. 7A,C) and after the period of multisensory training (Fig. 7B,D). As with the approach-to-target behavior, an improvement in orienting accuracy was observed in the bilaterally implanted animals but not in those with a single CI (ANCOVA, F(3,2476) = 161.16; p < 0.001, post hoc multiple comparisons).

Figure 7.

Effect of multisensory training on the relationship between final head bearing and auditory target location in implanted animals with early-onset hearing loss. A–D, Stimulus-response plots showing the distribution of final head bearings as a function of stimulus location for animals fitted with a UniCI (A, B) or BiCIs (C, D). Data are shown before (A, C) and after multisensory training (B, D), and linear regression lines have been fitted in each case. The slopes of these lines increased for both groups of early-deafened ferrets, but post hoc comparisons showed that the slope was significantly higher after multisensory training (D) in the bilaterally implanted animals than before training (C) and at either stage in the UniCI group, whereas no significant differences were found for any of the other pairwise comparisons. B, D, Gray shaded area represents the frontal hemifield within which the multisensory training was provided.

Together, these behavioral data indicate that interleaved visual-auditory training can significantly enhance the auditory localization ability of ferrets that were deafened in infancy and fitted with BiCIs as adults, raising it to the same level as that observed in ferrets that were both deafened and implanted bilaterally in adulthood.

Multisensory training increases the responsiveness of auditory cortical neurons in ferrets with cochlear implants

At the conclusion of the behavioral testing, we measured the ILD sensitivity of neurons in the auditory cortex of these animals. We focused on this binaural cue as the continuous interleaved sampling strategy used in this study should have provided the ferrets wearing BiCIs with useful ILDs. We have previously shown that the location of the microphones in bilaterally implanted ferrets provides physiologically relevant ILDs and interaural timing differences (ITDs) (Hartley et al., 2010). We did not consider ITDs in the present study because human CI users show greatly superior ILD versus ITD sensitivity, particularly when a continuous interleaved sampling speech processing strategy is used (Lawson et al., 1998; van Hoesel and Tyler, 2003). These studies show that ILD detection thresholds can be as low as the minimum possible current step in the speech processor outputs. Consequently, our recording electrodes were targeted to more dorsal regions of A1, where neurons tuned to high frequencies in NH ferrets (Bizley et al., 2005) would be expected to show ILD sensitivity (Campbell et al., 2006). Recordings were made from four groups of animals with the following: (1) late-onset hearing loss without any training (n = 2); (2) late-onset hearing loss with auditory training (n = 2); (3) early-onset hearing loss without any training (n = 2); and (4) early-onset hearing loss with multisensory training (n = 2). Because of differences in both age at onset of deafness and implantation, as well as differences in training history, it is inevitable that there were group differences in the ages at which the electrophysiological recordings were made (Table 1). However, the animals with early-onset hearing loss that received BiCIs, and which contributed both behavioral and electrophysiological data, were quite closely matched in terms of age at deafening (P30), age at implantation (P402–P427), and age at recording (P532–P603), regardless of whether they received training or not. 
The age of deafening (P208–P215) and implantation (P497–P501) was much later in the bilaterally implanted ferrets with late-onset hearing loss, but the recordings were also performed when the animals were ∼20 months old (P642–P663).

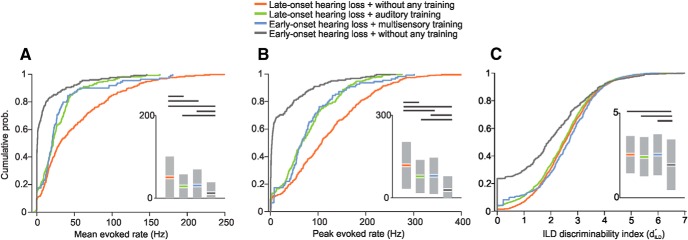

After spike sorting and identification of significant neuronal responses (Fig. 2), we first examined how well A1 units in each group responded to the brief current pulses that were delivered to the intracochlear electrodes. Within the response windows determined for each unit, we calculated mean and peak evoked spike rates by subtracting the respective mean and peak rates within a corresponding spontaneous window. Figure 8A, B summarizes these results as a function of age at onset of hearing loss and training history. We found significant group differences in both mean (ANOVA, F(3,960) = 48.97, p < 0.0001; Fig. 8A) and peak evoked firing rates (F(3,960) = 84.9, p < 0.0001; Fig. 8B), with the strongest responses observed in the animals that were deafened acutely as adults. Although these were the youngest animals from which cortical recordings were made, their relatively high firing rates most likely reflect the absence of any prolonged period of deafness or changes over time in the integrity of the CIs. The weakest responses were found in the ferrets with early-onset hearing loss that did not receive any training, further highlighting the importance of auditory input in sustaining cortical activity. Compared with the latter, cortical neurons recorded in ferrets with early-onset hearing loss that received multisensory training were significantly more responsive.
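The baseline subtraction described above can be sketched as follows. This is a minimal illustration under stated assumptions: spike times are given in integer milliseconds relative to stimulus onset, the peak rate is taken as the largest bin of a trial-averaged histogram, and the window limits, bin width, and function names are all hypothetical rather than the values used in the study.

```python
def window_rates(trials_ms, start_ms, end_ms, bin_ms):
    """Mean and peak firing rate (spikes/s) in [start_ms, end_ms), averaged over trials."""
    if (end_ms - start_ms) % bin_ms != 0:
        raise ValueError("window length must be a whole number of bins")
    n_bins = int((end_ms - start_ms) // bin_ms)
    counts = [0] * n_bins
    for spikes in trials_ms:
        for t in spikes:
            if start_ms <= t < end_ms:
                counts[int((t - start_ms) // bin_ms)] += 1
    # Convert per-bin counts to rates in spikes per second.
    rates = [c * 1000.0 / (len(trials_ms) * bin_ms) for c in counts]
    return sum(rates) / n_bins, max(rates)


def evoked_rates(trials_ms, response_window, spontaneous_window, bin_ms=10):
    """Mean and peak evoked rates: response-window rates minus spontaneous-window rates."""
    resp_mean, resp_peak = window_rates(
        trials_ms, response_window[0], response_window[1], bin_ms)
    spon_mean, spon_peak = window_rates(
        trials_ms, spontaneous_window[0], spontaneous_window[1], bin_ms)
    return resp_mean - spon_mean, resp_peak - spon_peak


# Two hypothetical trials; negative times precede stimulus onset.
trials = [[-15, 2, 4, 12], [3, 5, 15]]
print(evoked_rates(trials, (0, 20), (-20, 0)))  # (125.0, 150.0)
```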

Figure 8.

Cumulative probability functions showing the relative magnitude of the stimulus-evoked responses of A1 neurons, grouped by age of onset of hearing loss and training history in animals with BiCIs. A, Mean sound-evoked firing rates. B, Peak sound-evoked firing rates. C, ILD discriminability index computed from rate-level functions. Insets, Modified box-plots showing the means and 95% confidence intervals of each spike rate measure, grouped in the same fashion as the probability functions. The probability functions and the bars indicating the means have been color-coded to identify the different groups. The horizontal lines indicate significant intergroup differences, as revealed by Tukey HSD tests for post-ANOVA pairwise comparisons.

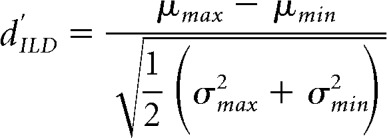

To determine whether there were also group differences in spatial resolution that might account for the behavioral results, we obtained a discriminability index (dILD′) of neuronal populations based on the range of firing rates in response to repeat presentations of each unique stimulus (Hancock et al., 2010). This was calculated as follows:

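$$d'_{\mathrm{ILD}} = \frac{\mu_{\max} - \mu_{\min}}{\sqrt{\left(\sigma_{\max}^{2} + \sigma_{\min}^{2}\right)/2}}$$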

where μmax and μmin represent the maximum and minimum mean firing rates in response to a given stimulus across all presentations of the same stimulus, respectively, and σmax and σmin represent the corresponding SDs. This index was significantly different across the four groups of animals (ANOVA, F(3,80975) = 1176.77, p < 0.0001; Fig. 8C), and post hoc multiple comparisons confirmed that neuronal populations from the group of animals with early-onset hearing loss without any training showed the lowest discriminability. These results suggest that multisensory training can sharpen the auditory spatial sensitivity of cortical neurons in ferrets with BiCIs that were deafened in infancy. Importantly, no differences in either cortical responsiveness or ILD discriminability were found between the ferrets with early-onset hearing loss that received multisensory training after bilateral cochlear implantation and the animals with late-onset hearing loss and BiCIs that received sound localization training only. These findings therefore resemble the behavioral results from these animals.
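A minimal sketch of such a discriminability index is given below. It assumes the conventional d′ form: the difference between the largest and smallest mean firing rates, divided by the root mean of the two corresponding variances. The function name, the use of sample SDs, and the example firing rates are hypothetical.

```python
import math
import statistics


def discriminability_index(rates_by_stimulus):
    """d' between the stimuli that evoke the largest and smallest mean rates.

    rates_by_stimulus maps each stimulus value (e.g., an ILD in dB) to a list
    of single-presentation firing rates (at least two per stimulus).
    """
    means = {s: statistics.mean(r) for s, r in rates_by_stimulus.items()}
    s_max = max(means, key=means.get)
    s_min = min(means, key=means.get)
    sd_max = statistics.stdev(rates_by_stimulus[s_max])
    sd_min = statistics.stdev(rates_by_stimulus[s_min])
    pooled_sd = math.sqrt((sd_max ** 2 + sd_min ** 2) / 2.0)
    if pooled_sd == 0:
        raise ValueError("zero variance: index is undefined")
    return (means[s_max] - means[s_min]) / pooled_sd


# Hypothetical rate-level data: ILD (dB) -> firing rates across presentations.
unit = {-10: [2, 4, 6], 0: [8, 10, 12], 10: [18, 20, 22]}
print(discriminability_index(unit))  # 8.0
```

A unit whose firing rate barely changes across ILDs, or whose responses are highly variable from one presentation to the next, yields a low index.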

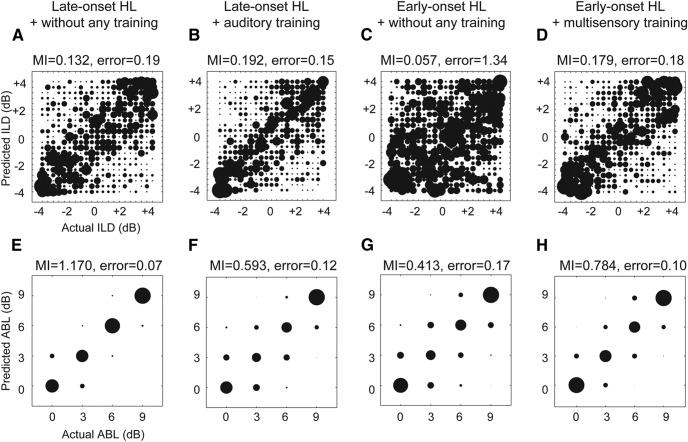

Changes in neural ILD coding following cochlear implantation and training

A number of studies have adopted the use of classifiers to quantify neuronal coding of auditory spatial cues (Middlebrooks et al., 1994; Miller and Recanzone, 2009; Lesica et al., 2010; Day and Delgutte, 2013). To assess how well their cortical responses represent the spatial information available to the animals, we used MDA (see Materials and Methods; Fig. 3) to determine whether we could predict either the ILD or the overall stimulus pulse intensity (average binaural level [ABL]) from the responses of cortical neurons. This approach has previously been used successfully to quantify the coding of communication signals (Machens et al., 2003; Schnupp et al., 2006), ILDs (Campbell et al., 2010), and pure tones (Petrus et al., 2014) by neuronal spike trains. We performed this analysis separately for ILD (ignoring ABL; Fig. 9A–D) and ABL (ignoring ILD; Fig. 9E–H). To estimate the similarity between the true and model-predicted assignments for each stimulus value, we calculated the bias-corrected MI for the confusion matrices shown in Figure 9 (see Materials and Methods). This analysis also determines the extent to which neuronal responses are modulated by changes in the stimulus parameter values and thus provided a measure of the binaural cue and sound level sensitivity of A1 neurons. In addition to the bias-corrected MI, we also calculated the mean absolute classification error associated with each confusion matrix.
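The two summary measures derived from each confusion matrix can be sketched as follows. This illustration computes the plug-in (uncorrected) mutual information and the mean absolute classification error. The bias correction itself is not reproduced, because the procedure is specified in Materials and Methods rather than in this section. The matrix layout (rows as true stimulus values, columns as classifier-assigned values), the function names, and the example counts are assumptions.

```python
import math


def mutual_information_bits(confusion):
    """Plug-in mutual information (bits) between true and assigned classes."""
    total = sum(sum(row) for row in confusion)
    row_p = [sum(row) / total for row in confusion]
    col_p = [sum(col) / total for col in zip(*confusion)]
    mi = 0.0
    for i, row in enumerate(confusion):
        for j, count in enumerate(row):
            if count > 0:  # empty cells contribute nothing
                p = count / total
                mi += p * math.log2(p / (row_p[i] * col_p[j]))
    return mi


def mean_absolute_error(confusion, values):
    """Average |true value - assigned value| over all classifications."""
    total = sum(sum(row) for row in confusion)
    err = sum(count * abs(values[i] - values[j])
              for i, row in enumerate(confusion)
              for j, count in enumerate(row))
    return err / total


# Hypothetical ILD values (dB) and a perfect classifier: 5 trials per stimulus.
ilds = [-10, -5, 5, 10]
perfect = [[5, 0, 0, 0], [0, 5, 0, 0], [0, 0, 5, 0], [0, 0, 0, 5]]
print(mutual_information_bits(perfect), mean_absolute_error(perfect, ilds))  # 2.0 0.0
```

With four equally likely stimulus values, perfect classification gives the maximum of 2 bits, whereas a classifier that assigns responses at random gives 0 bits before any bias correction.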

Figure 9.

Results of the MDA of ILD and ABL coding by auditory cortical neurons in ferrets with BiCIs, grouped by age at onset of hearing loss and training history. The size of each circle in the bubble plots is proportional to the number of classifications made for a given stimulus value. The x- and y-axes in each plot represent the true and classifier-assigned identities, respectively. A–D, ILD coding in acutely deafened and implanted adult animals that did not receive behavioral training (A), in animals with late-onset hearing loss followed by cochlear implantation and auditory training (B), in animals with early-onset hearing loss followed by cochlear implantation in adulthood without any training (C), and in animals with early-onset hearing loss followed by cochlear implantation in adulthood with auditory-alone and then multisensory training (D). In the untrained animals with early-onset hearing loss (C), the ILD sensitivity of cortical units was poor, as indicated by the relatively large number and magnitude of classification errors, whereas training produced a significant increase in classification accuracy (D). The MI between model-predicted and actual ILD is shown above each confusion matrix. The mean absolute error associated with each classification is also indicated above each plot. E–H, ABL coding by auditory cortical neurons for the same four groups. MI for ABL coding is also indicated above each panel.

Multisensory training resulted in a clear improvement in both the ILD and ABL sensitivity of A1 neurons in ferrets with early-onset hearing loss (Fig. 9). ILD-related MI was significantly higher in the animals that received auditory and then multisensory training (Fig. 9D) than in those that did not receive any training (Fig. 9C) (one-tailed permutation test, p < 0.001). The ABL-related MI was also higher in the trained group (p < 0.001; Fig. 9G,H). In keeping with these results, the classification-related errors for both ILD (p < 0.001) and ABL (p < 0.001) were significantly lower in the bilaterally implanted ferrets with early-onset deafness that were trained than in those animals that did not receive any training.
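For readers unfamiliar with one-tailed permutation tests, the following is a generic sketch. It uses a difference in group means as a stand-in test statistic; how the MI values were permuted in the study is not described in this section, so the statistic, the number of permutations, the function name, and the example values are all assumptions.

```python
import random


def one_tailed_permutation_test(group_a, group_b, n_permutations=2000, seed=0):
    """Estimate P(mean(a) - mean(b) >= observed) under random relabeling of groups."""
    rng = random.Random(seed)

    def mean(xs):
        return sum(xs) / len(xs)

    observed = mean(group_a) - mean(group_b)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    count = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        if mean(pooled[:n_a]) - mean(pooled[n_a:]) >= observed:
            count += 1
    # Adding 1 to numerator and denominator counts the observed labeling itself,
    # so the estimate can never be exactly zero.
    return (count + 1) / (n_permutations + 1)


# Hypothetical per-unit values for a trained and an untrained group.
trained = [10, 11, 12, 13, 14, 15]
untrained = [0, 1, 2, 3, 4, 5]
print(one_tailed_permutation_test(trained, untrained))
```

The test is one-tailed because only differences in the predicted direction (trained greater than untrained) count as evidence against the null hypothesis.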

Comparison of the two groups of ferrets with late-onset hearing loss revealed no difference in either the ILD-related MI values (permutation tests, p = 0.16) or the size of the classification errors (p = 0.23). Thus, despite the prolonged period of deafness experienced in adulthood by the ferrets that subsequently received BiCIs and auditory training, the neural representation of ILDs in these animals (Fig. 9B) was no different from that seen in acutely deafened and implanted ferrets (Fig. 9A). The ABL-related MI was, however, lower in the trained animals (Fig. 9F) (p < 0.001), whereas the ABL classification errors were not significantly different between these groups (p = 0.42).

The results of this analysis confirm that multisensory training can improve stimulus coding by cortical neurons of ferrets that were deafened in infancy and implanted bilaterally in adulthood. Moreover, as in the behavioral data, cortical ILD sensitivity closely resembled that found in bilaterally implanted ferrets with late-onset hearing loss.

Discussion

We examined the effect of age of deafening on the sound localization ability of adult ferrets that received several months of acoustic stimulation via CIs fitted in either one or both ears. Regardless of the duration of deafness before implantation, animals with a UniCI performed very poorly. This was expected because they lack either the binaural or spectral localization cues that allow sound-source direction to be determined (Blauert, 1997; Majdak et al., 2011; Keating et al., 2013), and would have instead only had access to the ambiguous information provided by the acoustic head-shadow effect (Van Wanrooij and Van Opstal, 2004). In contrast, ferrets with BiCIs were able to localize sound reasonably accurately if deafness occurred after the auditory system had matured. But even animals deafened in infancy can learn to localize sound using BiCIs fitted in adulthood, so long as they were provided with appropriate training that interleaves auditory and visual stimuli. These findings provide new insights into the adaptive capabilities of the auditory system and have important clinical implications for the rehabilitation of patients fitted with BiCIs.

Effects of hearing loss on the developing auditory brain

Hearing loss can have far-reaching consequences on the development of auditory brain circuits, potentially limiting the extent to which normal functions can be restored by CIs (Kral and Eggermont, 2007; Hartley and King, 2010). For example, ultrastructural studies in congenitally deaf cats have shown that neurons in the medial superior olive, a brainstem nucleus involved in processing interaural time differences, exhibit abnormalities in the number and size of their synaptic inputs, which can be partially reversed after prolonged intracochlear electrical stimulation (Tirko and Ryugo, 2012). Recordings from neurons in the inferior colliculus of bilaterally implanted adult animals have demonstrated that ITD sensitivity in congenitally deaf cats is degraded compared with that seen in acutely deafened animals (Hancock et al., 2010, 2013). Because neural ITD coding was less severely altered in cats that were deafened as adults 6 months before recording (Hancock et al., 2013), it appears that early auditory experience is critical for the development of neural sensitivity to this localization cue.

Comparisons of the local field potentials evoked by intracochlear stimulation between acutely deafened and congenitally deaf cats have shown that the development of A1 responses is also disrupted by a lack of auditory input during development (Kral et al., 2000, 2005), leading to an abnormal representation of ITDs (Tillein et al., 2010). These findings are therefore consistent with our observations that ferrets deprived of auditory experience until they received BiCIs in adulthood exhibit degraded cortical ILD sensitivity and an inability to localize sound unless they receive appropriate training. In contrast, ILD coding and localization behavior were more accurate in animals that were deafened as adults.

The ferrets were deafened at two time points, P30, around the age of hearing onset (Moore and Hine, 1992), and at >6 months of age, when their cortical responses are mature (Mrsic-Flogel et al., 2003). Developing neural circuits are particularly susceptible to hearing loss or exposure to abnormal acoustic environments within a critical period (Knudsen, 2004; de Villers-Sidani et al., 2007; Takesian et al., 2012). This is supported by differences in cortical auditory evoked potentials (Sharma et al., 2002, 2005) and speech perception outcomes (McConkey Robbins et al., 2004; Svirsky et al., 2004) in congenitally deaf children fitted with CIs at different ages. Moreover, the benefits of BiCIs are greatest if the interval between implantation of each ear, as well as that between onset of deafness and implantation, is kept to a minimum (Gordon and Papsin, 2009; Gordon et al., 2011). In view of this, it is perhaps unsurprising that adults with early-onset hearing loss are not normally considered as candidates for BiCIs, potentially excluding many individuals who lost hearing early and continue to rely on speech reading or other less than optimal measures for their rehabilitation.

Training and cochlear implantation outcomes

If binaural spatial cues are altered by occluding one ear, adult humans (Kumpik et al., 2010) and ferrets (Kacelnik et al., 2006) can rapidly achieve near-normal localization accuracy so long as they are provided with appropriate training. Similarly, auditory training paradigms have been successful in enhancing the speech perception abilities of adult CI patients (Fu et al., 2004; Stacey et al., 2010). In contrast, we observed no improvement in the performance of the implanted ferrets after repeated testing on the auditory localization task. This may be because they did not receive sufficiently intense training, particularly as Litovsky et al. (2010) have reported that some human CI users with prelingual onset of deafness can use ILDs after bilateral cochlear implantation in adulthood.

Given this lack of improvement following practice with auditory stimuli alone, we investigated whether providing more reliable visual spatial cues might facilitate an improvement in auditory localization by bilaterally implanted ferrets that were deafened in infancy. Several studies have shown that audiovisual training can facilitate auditory (Strelnikov et al., 2011; Bernstein et al., 2013) or visual learning (Seitz et al., 2006; Shams et al., 2011). Application of multisensory training paradigms to CI users is complicated, however, by the fact that early deafness results in an expanded representation of other sensory modalities in the deprived auditory cortex (Lee et al., 2001; Bavelier and Neville, 2002; Lomber et al., 2010; Meredith and Allman, 2012). Indeed, there is evidence that the extent of this cross-modal reorganization may limit the capacity of CI users to benefit from their auditory inputs (Doucet et al., 2006; Sandmann et al., 2012). Nevertheless, comparable cross-modal plasticity has been reported in ferret A1 after early-onset (Meredith and Allman, 2012) and late-onset hearing loss (Allman et al., 2009), suggesting that this may not explain the differences in localization performance of the bilaterally implanted ferrets in which hearing was lost at different ages.

In our study, multisensory training always followed an initial period of training with sound alone in ferrets with early-onset deafness, so we were unable to compare the ILD sensitivity of A1 neurons after each stage. However, multisensory training enabled these animals to localize sound just as accurately as ferrets with late-onset hearing loss that received sound localization training only. Because the cortical response properties were also very similar in these groups, and very different from those recorded in untrained ferrets with early-onset hearing loss, it is likely that multisensory training directly influenced auditory processing in A1.

One of the consequences of the cross-modal plasticity arising from hearing loss is that audiovisual integration by human CI users is often abnormal. Thus, CI users tend to rely more heavily on visual cues when presented with incongruent auditory and visual speech (Schorr et al., 2005), and this seems to be particularly the case with individuals who are less proficient in using their implants for auditory tasks, such as speech recognition (Tremblay et al., 2010). We therefore expected that bilaterally implanted ferrets with early-onset hearing loss, which initially performed very poorly on the sound localization task, would likely ignore auditory cues if they were trained on a multisensory task in which visual and auditory stimuli are presented together on the same trials. Furthermore, although auditory localization (Shelton and Searle, 1980; Stein et al., 1988) and cortical processing (Bizley and King, 2008; Salminen et al., 2013) are normally enhanced by congruent visual cues, it has been reported that visual stimuli can impair auditory perception in CI users (Champoux et al., 2009).

Transfer of discrimination training has been demonstrated between different sensory modalities (Frieman and Goyette, 1973; Goyette and Frieman, 1973). Consequently, we trained the ferrets with early-onset hearing loss by delivering auditory and visual stimuli on separate, randomly interleaved trials. This produced a significant improvement in auditory localization accuracy, which persisted after the visual stimuli were removed. Moreover, cortical neurons in these animals transmitted significantly more information about ILDs than those recorded in untrained ferrets that were deafened at the same age, suggesting that multisensory training in adulthood can improve the neural representation of this spatial cue, thereby offsetting the effects of developmental hearing loss.

Rather than interacting at an early processing stage, such as auditory cortex, Bernstein et al. (2013) proposed that visual speech stimuli may guide auditory perceptual learning by directing top-down attention to the corresponding auditory features. Interestingly, Kral and Eggermont (2007) have argued that the absence of auditory experience in late-implanted children results in a deficit in top-down modulation of processing in A1 and a decrease in adaptive plasticity. Using visual training to engage higher-order multisensory areas that project back to auditory cortex may help to overcome these deficits and facilitate learning of an equivalent goal-directed task driven primarily by sound. The prefrontal cortex may be involved in this, given that neurons there can encode behaviorally relevant associations between visual and auditory stimuli presented at different times (Fuster et al., 2000).

Our multisensory training paradigm was based on the principle that presentation of auditory and visual information on separate, randomly interleaved trials may circumvent the bias toward visual stimuli that results from deafness-induced cross-modal reorganization of the auditory cortex. By training bilaterally implanted adult ferrets with early-onset hearing loss in this fashion, we have shown that they can learn to process ILDs to a level commensurate with that seen in normally raised animals that were deafened in adulthood. Our results therefore suggest that multisensory training could provide an important strategy for helping BiCI recipients to benefit from electrical stimulation of both ears, particularly in individuals who may not fit traditional criteria for bilateral implantation, such as adults with early-onset hearing loss. If comparable improvements are shown in human subjects, multisensory training has the potential to extend candidacy for CIs, as well as to augment current training methods for improving auditory perception in these individuals.

Footnotes

This work was supported by a Rhodes Scholarship to A.I., a Wellcome Principal Research Fellowship to A.J.K. (WT076508AIA), and a Wellcome Clinician Scientist Fellowship to D.E.H.H. We thank Peter Keating for technical assistance, Caroline Bergmann for veterinary consultations, Stacey Barclay for animal care, and Martyn Preston for modification of cochlear implant equipment.

The authors declare no competing financial interests.

This is an Open Access article distributed under the terms of the Creative Commons Attribution License (http://creativecommons.org/licenses/by/3.0), which permits unrestricted use, distribution and reproduction in any medium provided that the original work is properly attributed.

References

- Allman BL, Keniston LP, Meredith MA. Adult deafness induces somatosensory conversion of ferret auditory cortex. Proc Natl Acad Sci U S A. 2009;106:5925–5930. doi: 10.1073/pnas.0809483106.

- Bavelier D, Neville HJ. Cross-modal plasticity: where and how? Nat Rev Neurosci. 2002;3:443–452. doi: 10.1038/nrn848.

- Bavelier D, Dye MW, Hauser PC. Do deaf individuals see better? Trends Cogn Sci. 2006;10:512–518. doi: 10.1016/j.tics.2006.09.006.

- Bernstein LE, Auer ET, Jr, Eberhardt SP, Jiang J. Auditory perceptual learning for speech perception can be enhanced by audiovisual training. Front Neurosci. 2013;7:34. doi: 10.3389/fnins.2013.00034.

- Berrettini S, Baggiani A, Bruschini L, Cassandro E, Cuda D, Filipo R, Palla I, Quaranta N, Forli F. Systematic review of the literature on the clinical effectiveness of the cochlear implant procedure in adult patients. Acta Otorhinolaryngol Ital. 2011;31:299–310.

- Bizley JK, King AJ. Visual-auditory spatial processing in auditory cortical neurons. Brain Res. 2008;1242:24–36. doi: 10.1016/j.brainres.2008.02.087.

- Bizley JK, King AJ. What can multisensory processing tell us about the functional organization of auditory cortex? In: Murray MM, Wallace MT, editors. The neural bases of multisensory processes. Boca Raton, FL: CRC; 2012. Frontiers in neuroscience.

- Bizley JK, Nodal FR, Nelken I, King AJ. Functional organization of ferret auditory cortex. Cereb Cortex. 2005;15:1637–1653. doi: 10.1093/cercor/bhi042.

- Blauert J. Spatial hearing: the psychophysics of human sound localization. Cambridge, MA: Massachusetts Institute of Technology; 1997.

- Bond M, Mealing S, Anderson R, Elston J, Weiner G, Taylor RS, Hoyle M, Liu Z, Price A, Stein K. The effectiveness and cost-effectiveness of cochlear implants for severe to profound deafness in children and adults: a systematic review and economic model. Health Technol Assess. 2009;13:1–330. doi: 10.3310/hta13440.

- Brown KD, Balkany TJ. Benefits of bilateral cochlear implantation: a review. Curr Opin Otolaryngol Head Neck Surg. 2007;15:315–318. doi: 10.1097/MOO.0b013e3282ef3d3e. [DOI] [PubMed] [Google Scholar]

- Campbell RA, Schnupp JW, Shial A, King AJ. Binaural-level functions in ferret auditory cortex: evidence for a continuous distribution of response properties. J Neurophysiol. 2006;95:3742–3755. doi: 10.1152/jn.01155.2005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Campbell RA, Schulz AL, King AJ, Schnupp JW. Brief sounds evoke prolonged responses in anesthetized ferret auditory cortex. J Neurophysiol. 2010;103:2783–2793. doi: 10.1152/jn.00730.2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Champoux F, Lepore F, Gagné JP, Théoret H. Visual stimuli can impair auditory processing in cochlear implant users. Neuropsychologia. 2009;47:17–22. doi: 10.1016/j.neuropsychologia.2008.08.028. [DOI] [PubMed] [Google Scholar]

- Day ML, Delgutte B. Decoding sound source location and separation using neural population activity patterns. J Neurosci. 2013;33:15837–15847. doi: 10.1523/JNEUROSCI.2034-13.2013. [DOI] [PMC free article] [PubMed] [Google Scholar]

- de Villers-Sidani E, Chang EF, Bao S, Merzenich MM. Critical period window for spectral tuning defined in the primary auditory cortex (A1) in the rat. J Neurosci. 2007;27:180–189. doi: 10.1523/JNEUROSCI.3227-06.2007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Doucet ME, Bergeron F, Lassonde M, Ferron P, Lepore F. Cross-modal reorganization and speech perception in cochlear implant users. Brain. 2006;129:3376–3383. doi: 10.1093/brain/awl264. [DOI] [PubMed] [Google Scholar]

- Frieman J, Goyette CH. Transfer of training across stimulus modality and response class. J Exp Psychol. 1973;97:235–241. doi: 10.1037/h0033931. [DOI] [PubMed] [Google Scholar]

- Fu QJ, Galvin J, Wang X, Nogaki G. Effects of auditory training on adult cochlear implant patients: a preliminary report. Cochlear Implants Int. 2004;5(Suppl 1):84–90. doi: 10.1179/cim.2004.5.Supplement-1.84. [DOI] [PubMed] [Google Scholar]

- Fuster JM, Bodner M, Kroger JK. Cross-modal and cross-temporal association in neurons of frontal cortex. Nature. 2000;405:347–351. doi: 10.1038/35012613. [DOI] [PubMed] [Google Scholar]

- Gordon KA, Papsin BC. Benefits of short interimplant delays in children receiving bilateral cochlear implants. Otol Neurotol. 2009;30:319–331. doi: 10.1097/MAO.0b013e31819a8f4c. [DOI] [PubMed] [Google Scholar]

- Gordon KA, Jiwani S, Papsin BC. What is the optimal timing for bilateral cochlear implantation in children? Cochlear Implants Int. 2011;12(Suppl 2):S8–S14. doi: 10.1179/146701011X13074645127199. [DOI] [PubMed] [Google Scholar]

- Goyette CH, Frieman J. Transfer of training following discrimination learning with two reinforced responses. Learn Motiv. 1973;4:432–444. doi: 10.1016/0023-9690(73)90008-8. [DOI] [Google Scholar]

- Hancock KE, Noel V, Ryugo DK, Delgutte B. Neural coding of interaural time differences with bilateral cochlear implants: effects of congenital deafness. J Neurosci. 2010;30:14068–14079. doi: 10.1523/JNEUROSCI.3213-10.2010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hancock KE, Chung Y, Delgutte B. Congenital and prolonged adult-onset deafness cause distinct degradations in neural ITD coding with bilateral cochlear implants. J Assoc Res Otolaryngol. 2013;14:393–411. doi: 10.1007/s10162-013-0380-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hartley DEH, King AJ. Development of the auditory pathway. In: Moore DR, Fuchs PA, Rees A, Palmer AR, Plack CJ, editors. The Oxford handbook of auditory science: the auditory brain. Oxford: Oxford UP; 2010. [Google Scholar]

- Hartley DE, Vongpaisal T, Xu J, Shepherd RK, King AJ, Isaiah A. Bilateral cochlear implantation in the ferret: a novel animal model for behavioral studies. J Neurosci Methods. 2010;190:214–228. doi: 10.1016/j.jneumeth.2010.05.014.

- Kacelnik O, Nodal FR, Parsons CH, King AJ. Training-induced plasticity of auditory localization in adult mammals. PLoS Biol. 2006;4:e71. doi: 10.1371/journal.pbio.0040071.

- Kayser C, Petkov CI, Remedios R, Logothetis NK. Multisensory influences on auditory processing: perspectives from fMRI and electrophysiology. In: Murray MM, Wallace MT, editors. The neural bases of multisensory processes. Boca Raton, FL: CRC; 2012. Frontiers in neuroscience.

- Keating P, Dahmen JC, King AJ. Context-specific reweighting of auditory spatial cues following altered experience during development. Curr Biol. 2013;23:1291–1299. doi: 10.1016/j.cub.2013.05.045.

- Knudsen EI. Sensitive periods in the development of the brain and behavior. J Cogn Neurosci. 2004;16:1412–1425. doi: 10.1162/0898929042304796.

- Kral A, Eggermont JJ. What's to lose and what's to learn: development under auditory deprivation, cochlear implants and limits of cortical plasticity. Brain Res Rev. 2007;56:259–269. doi: 10.1016/j.brainresrev.2007.07.021.

- Kral A, Hartmann R, Tillein J, Heid S, Klinke R. Congenital auditory deprivation reduces synaptic activity within the auditory cortex in a layer-specific manner. Cereb Cortex. 2000;10:714–726. doi: 10.1093/cercor/10.7.714.

- Kral A, Tillein J, Heid S, Hartmann R, Klinke R. Postnatal cortical development in congenital auditory deprivation. Cereb Cortex. 2005;15:552–562. doi: 10.1093/cercor/bhh156.

- Kumpik DP, Kacelnik O, King AJ. Adaptive reweighting of auditory localization cues in response to chronic unilateral earplugging in humans. J Neurosci. 2010;30:4883–4894. doi: 10.1523/JNEUROSCI.5488-09.2010.

- Lawson DT, Wilson BS, Zerbi M, van den Honert C, Finley CC, Farmer JC, Jr, McElveen JT, Jr, Roush PA. Bilateral cochlear implants controlled by a single speech processor. Am J Otol. 1998;19:758–761.

- Lee DS, Lee JS, Oh SH, Kim SK, Kim JW, Chung JK, Lee MC, Kim CS. Cross-modal plasticity and cochlear implants. Nature. 2001;409:149–150. doi: 10.1038/35051653.

- Lesica NA, Lingner A, Grothe B. Population coding of interaural time differences in gerbils and barn owls. J Neurosci. 2010;30:11696–11702. doi: 10.1523/JNEUROSCI.0846-10.2010.

- Litovsky RY, Jones GL, Agrawal S, van Hoesel R. Effect of age at onset of deafness on binaural sensitivity in electric hearing in humans. J Acoust Soc Am. 2010;127:400–414. doi: 10.1121/1.3257546.

- Lomber SG, Meredith MA, Kral A. Cross-modal plasticity in specific auditory cortices underlies visual compensations in the deaf. Nat Neurosci. 2010;13:1421–1427. doi: 10.1038/nn.2653.

- Machens CK, Schütze H, Franz A, Kolesnikova O, Stemmler MB, Ronacher B, Herz AV. Single auditory neurons rapidly discriminate conspecific communication signals. Nat Neurosci. 2003;6:341–342. doi: 10.1038/nn1036.

- Majdak P, Goupell MJ, Laback B. Two-dimensional localization of virtual sound sources in cochlear-implant listeners. Ear Hear. 2011;32:198–208. doi: 10.1097/AUD.0b013e3181f4dfe9.

- Manel S, Dias JM, Ormerod SJ. Comparing discriminant analysis, neural networks and logistic regression for predicting species distributions: a case study with a Himalayan river bird. Ecol Model. 1999;120:337–347. doi: 10.1016/S0304-3800(99)00113-1.

- McConkey Robbins A, Koch DB, Osberger MJ, Zimmerman-Phillips S, Kishon-Rabin L. Effect of age at cochlear implantation on auditory skill development in infants and toddlers. Arch Otolaryngol Head Neck Surg. 2004;130:570–574. doi: 10.1001/archotol.130.5.570.

- Merabet LB, Pascual-Leone A. Neural reorganization following sensory loss: the opportunity of change. Nat Rev Neurosci. 2010;11:44–52. doi: 10.1038/nrn2758.

- Meredith MA, Allman BL. Early hearing-impairment results in crossmodal reorganization of ferret core auditory cortex. Neural Plast. 2012;2012:601591. doi: 10.1155/2012/601591.

- Middlebrooks JC, Clock AE, Xu L, Green DM. A panoramic code for sound location by cortical neurons. Science. 1994;264:842–844. doi: 10.1126/science.8171339.

- Miller LM, Recanzone GH. Populations of auditory cortical neurons can accurately encode acoustic space across stimulus intensity. Proc Natl Acad Sci U S A. 2009;106:5931–5935. doi: 10.1073/pnas.0901023106.

- Moore DR, Hine JE. Rapid development of the auditory brainstem response threshold in individual ferrets. Brain Res Dev Brain Res. 1992;66:229–235. doi: 10.1016/0165-3806(92)90084-A.

- Moore DR, Shannon RV. Beyond cochlear implants: awakening the deafened brain. Nat Neurosci. 2009;12:686–691. doi: 10.1038/nn.2326.

- Mrsic-Flogel TD, Schnupp JW, King AJ. Acoustic factors govern developmental sharpening of spatial tuning in the auditory cortex. Nat Neurosci. 2003;6:981–988. doi: 10.1038/nn1108.

- Müller J, Schön F, Helms J. Speech understanding in quiet and noise in bilateral users of the MED-EL COMBI 40/40+ cochlear implant system. Ear Hear. 2002;23:198–206. doi: 10.1097/00003446-200206000-00004.

- Nelken I, Bizley JK, Nodal FR, Ahmed B, Schnupp JW, King AJ. Large-scale organization of ferret auditory cortex revealed using continuous acquisition of intrinsic optical signals. J Neurophysiol. 2004;92:2574–2588. doi: 10.1152/jn.00276.2004.

- Nodal FR, Bajo VM, Parsons CH, Schnupp JW, King AJ. Sound localization behavior in ferrets: comparison of acoustic orientation and approach-to-target responses. Neuroscience. 2008;154:397–408. doi: 10.1016/j.neuroscience.2007.12.022.

- Panzeri S, Senatore R, Montemurro MA, Petersen RS. Correcting for the sampling bias problem in spike train information measures. J Neurophysiol. 2007;98:1064–1072. doi: 10.1152/jn.00559.2007.

- Petrus E, Isaiah A, Jones AP, Li D, Wang H, Lee HK, Kanold PO. Cross-modal induction of thalamocortical potentiation leads to enhanced information processing in the auditory cortex. Neuron. 2014;81:664–673. doi: 10.1016/j.neuron.2013.11.023.

- Salminen NH, Aho J, Sams M. Visual task enhances spatial selectivity in the human auditory cortex. Front Neurosci. 2013;7:44. doi: 10.3389/fnins.2013.00044.

- Sandmann P, Dillier N, Eichele T, Meyer M, Kegel A, Pascual-Marqui RD, Marcar VL, Jäncke L, Debener S. Visual activation of auditory cortex reflects maladaptive plasticity in cochlear implant users. Brain J Neurol. 2012;135:555–568. doi: 10.1093/brain/awr329.

- Schnupp JW, Hall TM, Kokelaar RF, Ahmed B. Plasticity of temporal pattern codes for vocalization stimuli in primary auditory cortex. J Neurosci. 2006;26:4785–4795. doi: 10.1523/JNEUROSCI.4330-05.2006.

- Schorr EA, Fox NA, van Wassenhove V, Knudsen EI. Auditory-visual fusion in speech perception in children with cochlear implants. Proc Natl Acad Sci U S A. 2005;102:18748–18750. doi: 10.1073/pnas.0508862102.

- Schroeder CE, Foxe J. Multisensory contributions to low-level, “unisensory” processing. Curr Opin Neurobiol. 2005;15:454–458. doi: 10.1016/j.conb.2005.06.008.

- Seitz AR, Kim R, Shams L. Sound facilitates visual learning. Curr Biol. 2006;16:1422–1427. doi: 10.1016/j.cub.2006.05.048.

- Shams L, Wozny DR, Kim R, Seitz A. Influences of multisensory experience on subsequent unisensory processing. Front Psychol. 2011;2:264. doi: 10.3389/fpsyg.2011.00264.

- Sharma A, Dorman MF, Spahr AJ. A sensitive period for the development of the central auditory system in children with cochlear implants: implications for age of implantation. Ear Hear. 2002;23:532–539. doi: 10.1097/00003446-200212000-00004.

- Sharma A, Dorman MF, Kral A. The influence of a sensitive period on central auditory development in children with unilateral and bilateral cochlear implants. Hear Res. 2005;203:134–143. doi: 10.1016/j.heares.2004.12.010.

- Shelton BR, Searle CL. The influence of vision on the absolute identification of sound-source position. Percept Psychophys. 1980;28:589–596. doi: 10.3758/BF03198830.

- Stacey PC, Raine CH, O'Donoghue GM, Tapper L, Twomey T, Summerfield AQ. Effectiveness of computer-based auditory training for adult users of cochlear implants. Int J Audiol. 2010;49:347–356. doi: 10.3109/14992020903397838.

- Stein BE, Huneycutt WS, Meredith MA. Neurons and behavior: the same rules of multisensory integration apply. Brain Res. 1988;448:355–358. doi: 10.1016/0006-8993(88)91276-0.

- Strelnikov K, Rosito M, Barone P. Effect of audiovisual training on monaural spatial hearing in horizontal plane. PLoS One. 2011;6:e18344. doi: 10.1371/journal.pone.0018344.

- Svirsky MA, Teoh SW, Neuburger H. Development of language and speech perception in congenitally, profoundly deaf children as a function of age at cochlear implantation. Audiol Neurootol. 2004;9:224–233. doi: 10.1159/000078392.

- Takesian AE, Kotak VC, Sanes DH. Age-dependent effect of hearing loss on cortical inhibitory synapse function. J Neurophysiol. 2012;107:937–947. doi: 10.1152/jn.00515.2011.

- Tillein J, Hubka P, Syed E, Hartmann R, Engel AK, Kral A. Cortical representation of interaural time difference in congenital deafness. Cereb Cortex. 2010;20:492–506. doi: 10.1093/cercor/bhp222.

- Tirko NN, Ryugo DK. Synaptic plasticity in the medial superior olive of hearing, deaf, and cochlear-implanted cats. J Comp Neurol. 2012;520:2202–2217. doi: 10.1002/cne.23038.

- Tremblay C, Champoux F, Lepore F, Théoret H. Audiovisual fusion and cochlear implant proficiency. Restor Neurol Neurosci. 2010;28:283–291. doi: 10.3233/RNN-2010-0498.

- van Hoesel RJ. Exploring the benefits of bilateral cochlear implants. Audiol Neurootol. 2004;9:234–246. doi: 10.1159/000078393.

- van Hoesel RJM. Bilateral cochlear implants. In: Zeng FG, Popper A, Fay RR, editors. Auditory prostheses: new horizons. New York: Springer; 2012. pp. 13–58.

- van Hoesel RJ, Tyler RS. Speech perception, localization, and lateralization with bilateral cochlear implants. J Acoust Soc Am. 2003;113:1617–1630. doi: 10.1121/1.1539520.

- Van Wanrooij MM, Van Opstal AJ. Contribution of head shadow and pinna cues to chronic monaural sound localization. J Neurosci. 2004;24:4163–4171. doi: 10.1523/JNEUROSCI.0048-04.2004.

- Verschuur CA, Lutman ME, Ramsden R, Greenham P, O'Driscoll M. Auditory localization abilities in bilateral cochlear implant recipients. Otol Neurotol. 2005;26:965–971. doi: 10.1097/01.mao.0000185073.81070.07.

- Victor JD. Binless strategies for estimation of information from neural data. Phys Rev E Stat Nonlin Soft Matter Phys. 2002;66:051903. doi: 10.1103/PhysRevE.66.051903.