Abstract

Big brown bats emit biosonar sounds and perceive their surroundings from the delays of echoes received by the ears. Broadcasts are frequency modulated (FM) and contain two prominent harmonics sweeping from 50 to 25 kHz (FM1) and from 100 to 50 kHz (FM2). Individual frequencies in each broadcast and each echo evoke single-spike auditory responses. Echo delay is encoded by the time elapsed between volleys of responses to broadcasts and volleys of responses to echoes. If echoes have the same spectrum as broadcasts, the volley of neural responses to FM1 and FM2 is internally synchronized for each sound, which leads to sharply focused delay images. Because of amplitude–latency trading, disruption of response synchrony within the volleys occurs if the echoes are lowpass filtered, leading to blurred, defocused delay images. This effect is consistent with the temporal binding hypothesis for perceptual image formation. Bats perform inexplicably well in cluttered surroundings where echoes from off-side objects ought to cause masking. Off-side echoes are lowpass filtered because of the shape of the broadcast beam, and they evoke desynchronized auditory responses. The resulting defocused images of clutter do not mask perception of focused images for targets. Neural response synchronization may select a target to be the focus of attention, while desynchronization may impose inattention on the surroundings by defocusing perception of clutter. The formation of focused biosonar images from synchronized neural responses, and the defocusing that occurs with disruption of synchrony, quantitatively demonstrates how temporal binding may control attention and bring a perceptual object into existence.

Key words: Echolocating bats, Biosonar, Temporal binding, Attention, Perceived images, Echo delay, Neural response latency, Amplitude–latency trading

Introduction: perceived objects, attention and inattention

The environment delivers multifaceted stimuli representing individual objects in the surrounding scene that have to be isolated from other objects for localization and identification. Parallel registration of multiple stimulus features occurs because different groups of sensory neurons respond selectively to different features, thus segregating the features into subpopulations of neurons. The ensuing feature ‘inventory’ is not sufficient to reconstruct the actual objects for perception, however, because many features represent information distributed across more of the scene than just one object. Thus, although features are decomposed out of the stimuli, they have to be grouped to reconstitute individual objects for perception. The temporal binding hypothesis (von der Malsburg, 1995; von der Malsburg, 1999; Engel and Singer, 2001) proposes that a unitary object emerges from its surroundings to be perceived because of the synchronization of responses distributed across neurons that are selective for its constituent features. Features of other objects produce responses that are not synchronized to this same instant and would not be incorporated into the perceived image. In effect, the object occupies the perceptual center because its features are bound together by the common timing of their responses (Roelfsema et al., 1996; Uhlhaas et al., 2009). Lack of response synchrony relegates the features of the other objects to the perceptual background. The temporal binding hypothesis implies that attention brings a specific object into perception by registering synchronized responses. Selective attention usually is conceived of as an active process – a ‘searchlight’ that moves across the neuronal representation of the scene to select one object for momentary perception (Crick, 1984; Crick and Koch, 2003). Shifting of attention involves waxing and waning of the salience of the feature representations for individual objects to guide the searchlight (Itti and Koch, 2001). 
The assignment of other objects to the unattended background of the scene is assumed to be a passive process – they just are not selected by the searchlight. The bat's sonar illustrates how this searchlight may operate in the context of temporal binding. Unexpectedly, it provides evidence that inattention may involve an active process that exploits the desynchronization of responses to magnify the perceptual separation of the inattended background outside the searchlight's ‘spot’ from the attended object in the spot.

The temporal binding hypothesis was invoked to explain the formation of visual images, but unambiguously addressing the hypothesis has proven difficult, with no clear consensus on the outcome (e.g. Shadlen and Movshon, 1999; Wallis, 2005; see Treisman, 1999). Visual stimuli contain many distinguishable features, and the visual system has a corresponding proliferation of feature representations at multiple cortical stages (Felleman and van Essen, 1991). Responses to various visual features are spread over both space and time in the brain, which provides multiple opportunities for linking of responses occurring at the same time across different levels, but which also obscures observation of any specific occurrence of synchronization. Moreover, visual neurons mostly respond to stimuli with multiple spikes. It is difficult to determine which spikes evoked in one neuron are supposed to be synchronized to which spikes in other neurons to define binding. To overcome this difficulty, low-frequency oscillatory signals widely present in neural tissue have been proposed as the synchronizing reference, but supporting evidence is correlational in nature.

What would be the characteristics of an ideal perceptual system for directly testing the binding hypothesis? First, stimuli must be quantitatively distinguishable along one or more feature dimensions, with each such dimension having its own feature-selective neuronal subpopulation. Different numerical values of an identifiable feature should activate different neurons within that subpopulation. Second, changes in numerical values for the feature should affect the content of the percept, which makes psychophysical experiments essential. Third, to avoid ambiguity about which responses are supposed to be synchronized, each feature-selective neuron should respond to an individual presentation of the stimulus with just one spike, not with multiple spikes. Fourth, the timing, or latency, of each spike should have a recognizable contribution to the content of the percept. If responses of some cells are induced to drop out of synchrony by moving to a different latency, a clearly defined, quantifiable change should be manifested in the percept. A perceptual system that satisfies these conditions is the biological sonar of bats (Neuweiler, 2000).

List of abbreviations

- FM: frequency modulated
- FM1: first harmonic FM sweep
- FM2: second harmonic FM sweep

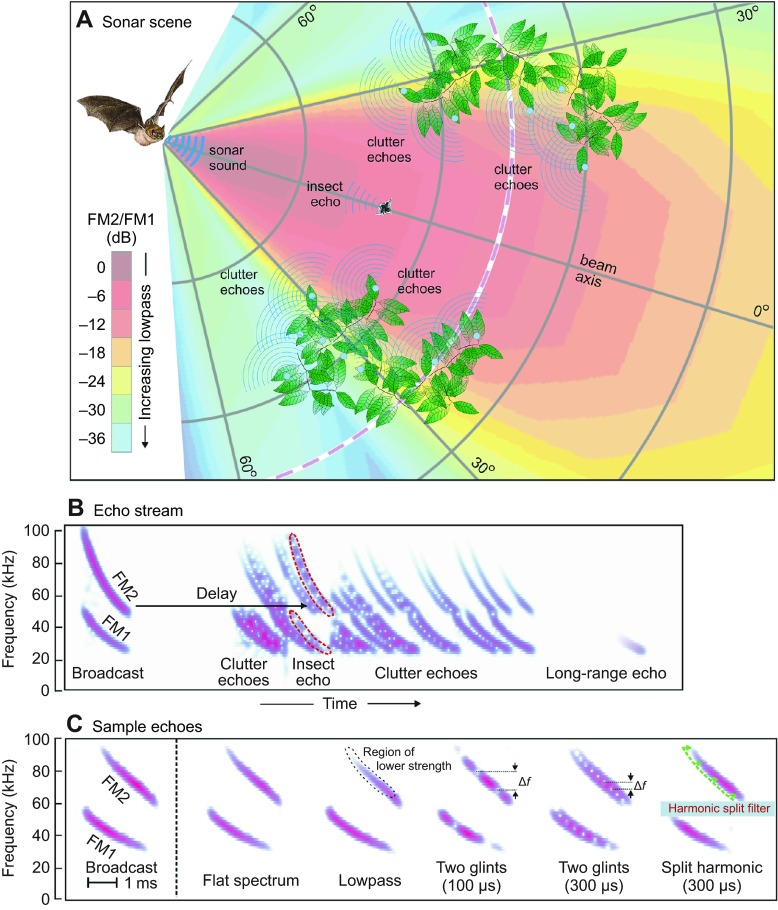

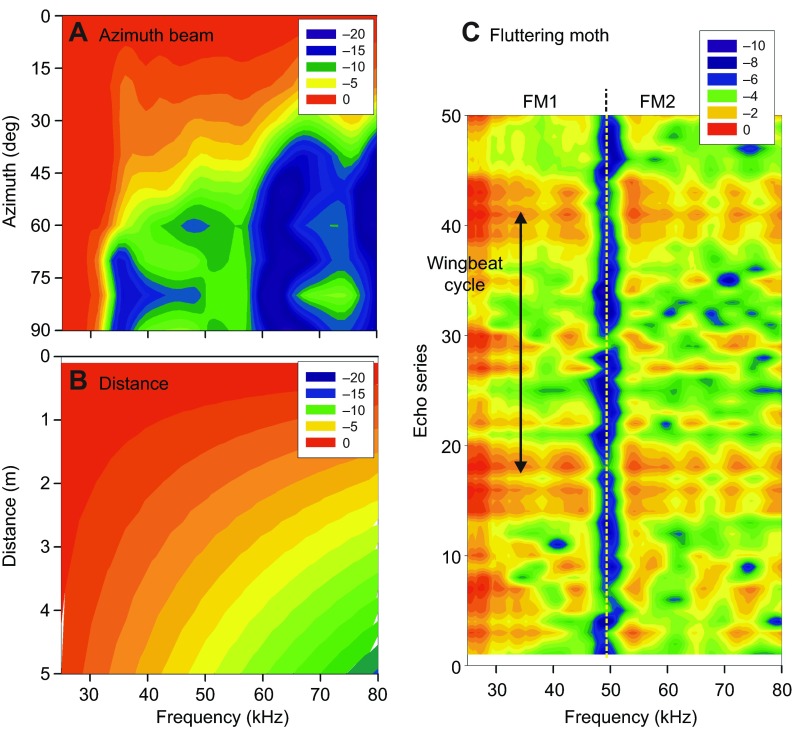

Biosonar scenes

Echolocating big brown bats, Eptesicus fuscus (Palisot de Beauvois 1796), emit trains of frequency-modulated (FM) biosonar sounds and orient in the near environment by listening for echoes that return to their ears (Moss and Surlykke, 2010; Neuweiler, 2000). The bat senses the highly dynamic surrounding sonar scene (Fig. 1A) from the stream of returning echoes that follows each broadcast (Fig. 1B) (Moss and Surlykke, 2010; Baker et al., 2014). Successive sounds are produced at intervals from over 100 ms down to approximately 10 ms, depending on the proximity of objects, the depth of the scene (e.g. Aytekin et al., 2010; Hiryu et al., 2010) and whether the bat is maneuvering to catch an insect or avoid a collision (Barchi et al., 2013; Hiryu et al., 2010; Petrites et al., 2009). The big brown bat's hearing covers frequencies of 10 to 100 kHz (Koay et al., 1997; Macías et al., 2006). Neural tuning in the bat's auditory system spans these same frequencies (Covey and Casseday, 1995; Simmons, 2012). The bat's broadcasts are short (0.5 to 20 ms) and contain frequencies from approximately 20 to 100 kHz in two prominent downward-sweeping harmonics (FM1 from 50 to 25 kHz, and FM2 from 100 to 50 kHz). The use of FM harmonics is technologically novel for sonar, and it is important to understand what bats might gain from their presence. Fig. 1B,C depicts broadcasts and echoes as spectrograms, which have a linear vertical frequency axis. Overall, echoes are similar to broadcasts except for being delayed by travel out to the object and back (5.8 ms m−1). This delay is the bat's cue to perceive target distance, or range. Each echo also is weakened by losses incurred during travel, and is further weakened by the reflective strength of individual objects (Houston et al., 2004; Simmons and Chen, 1989; Stilz and Schnitzler, 2012). Sample echoes (Fig. 1C) illustrate specific modifications caused by different acoustic conditions imposed by the scene. 
Only for nearby objects located on the axis of the beam does the impinging sound contain the full broadcast spectrum (i.e. ‘flat’ spectrum; Fig. 1B,C, Fig. 2A,B). Objects located off to the sides or farther away are illuminated by the sound (‘ensonified’) less strongly at higher frequencies (lowpass filtered; Fig. 2A,B), an effect that is carried back in the echoes. Thus, off-axis or distant echoes always are lowpass filtered. Detectability of targets at distances of 10–20 m depends on reception of the lowest frequencies that survive over long distances through the air (Holderied et al., 2005; Stilz and Schnitzler, 2012; Surlykke and Kalko, 2008). The object's shape also contributes to the echo spectrum due to reinforcement and cancellation at specific frequencies caused by overlapping reflections from different parts of the object, or ‘glints’ (Simon et al., 2014; Moss and Zagaeski, 1994; Simmons and Chen, 1989). Interference between glint reflections creates multiple spectral nulls in insect echoes (Fig. 2C) spaced at frequency intervals (Δf) that are reciprocally related to the delay separation of the reflections (e.g. 100 or 300 μs in Fig. 1C). The high spatial frequency of spectral ripples (Shamma, 2001) – ‘speckle’ in spectrogram images of fluttering insect echoes (Fig. 2C) – unambiguously distinguishes target characteristics from the much smoother lowpass effects of spatial location within the scene (Fig. 2A,B). Individual neurons in the big brown bat's auditory system respond selectively to multiple ripple nulls at different frequencies (Sanderson and Simmons, 2002), but they are not selectively tuned for the broader decline in strength of lowpass echoes (see Simmons, 2012). The bat perceives the target's shape from the ripples in the spectrum created by these nulls (Simon et al., 2014; Falk et al., 2011; Simmons, 2012).
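The conversion between echo delay and target range quoted above (5.8 ms of two-way delay per metre) follows from the speed of sound. The following is a minimal sketch, assuming a sound speed of 343 m s−1 in air (a value not stated in the text); the function names are illustrative only.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # assumed value for air at about 20 °C; not given in the text


def echo_delay_ms(range_m: float) -> float:
    """Two-way travel time, in ms, for an echo from a target at range_m metres."""
    return 2.0 * range_m / SPEED_OF_SOUND_M_PER_S * 1e3


def target_range_m(delay_ms: float) -> float:
    """Target range, in metres, implied by a two-way echo delay given in ms."""
    return delay_ms * 1e-3 * SPEED_OF_SOUND_M_PER_S / 2.0


if __name__ == "__main__":
    # Ranges chosen for illustration: two near targets, 1 m, and a distant target.
    for r in (0.3, 0.6, 1.0, 10.0):
        print(f"range {r:5.1f} m -> echo delay {echo_delay_ms(r):6.2f} ms")
```

Under this assumption, a target at 1 m returns its echo after roughly 5.8 ms, in line with the figure given in the text.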

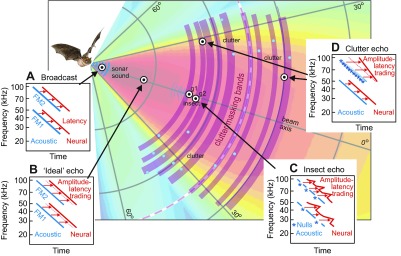

Fig. 1.

Representation of sonar scene. (A) Sonar scene showing bat, broadcast beam, insect and surrounding vegetation (clutter). FM broadcast contains full emitted frequency content (FM1, FM2) only immediately in front of the bat's mouth (dark red sector of beam; beam color depicts the amplitude ratio of FM2/FM1). Higher frequencies (FM2) are progressively weakened off to the sides and farther away (see color scale). The insect is a compact object, while screens of vegetation are extended surfaces containing numerous reflecting points (many blue circles). The spectrum of echoes is determined mainly by the spectrum of the incident sound, which depends on the object's location in the emitted beam. (B) Spectrograms illustrating the stream of echoes from the scene show FM sweeps in broadcast sound (left) and the sequence of echoes at progressively longer delays. Target and clutter echoes are mixed together. The target at long range is detected from the lowest frequencies of 25–30 kHz in FM1, because only these travel far through the air. (C) Spectrograms of sample echoes used in experiments on delay perception and clutter. Echo can have a flat spectrum or be modified by lowpass filtering, by overlapping glint reflections (nulls spaced at Δf intervals reciprocal to glint spacing) or by deliberate splitting of harmonics at different harmonic delays (e.g. FM2 300 μs later than FM1).

Fig. 2.

Separability of target and location acoustics in biosonar scenes. (A) Spectra of big brown bat FM echolocation sounds radiated in different horizontal directions (Hartley and Suthers, 1989). Spectra become progressively more lowpass filtered as the azimuth shifts farther off to the side. (B) Spectra of broadcasts impinging on the beam's axis at different distances because of atmospheric absorption (Stilz and Schnitzler, 2012). Only lowpass filtering occurs. (C) Spectra for a series of echoes of 50 rapidly produced FM incident sounds reflected by a fluttering moth (Moss and Zagaeski, 1994) (one wingbeat period is shown by the vertical arrow). The speckled character of moth spectra caused by local spectral nulls and lack of lowpass filtering distinguishes insect echoes from echoes arriving from off-side or far away. Color scales show dB spectral level relative to strongest value.

When hunting for prey, big brown bats often fly in proximity to vegetation, and they even fly up to the vegetation to pick insects off leaves (Simmons, 2005). They regularly fly through screens of branches and leaves to emerge on the other side. To follow an insect or to determine whether the path forward is unobstructed and it is safe to proceed, they have to pay attention to the space in front (Barchi et al., 2013; Hiryu et al., 2010; Petrites et al., 2009). Big brown bats not only emit a wide beam that impinges on objects off to the sides as well as to the front (Fig. 1A, Fig. 2A) (Hartley and Suthers, 1989; Ghose and Moss, 2003), but they also receive echoes through their external ears from all objects over a wide region extending from the front and to the left for the left ear, and from the front and to the right for the right ear (Aytekin et al., 2004; Müller, 2004). These bats accurately track nearby moving targets with the aim of the head (Masters et al., 1985; Ghose and Moss, 2006), which centers the axis of the broadcast beam on the selected target, thus minimizing its motion within the beam to deliver the full broadcast spectrum onto the object. Tracking also aligns the axes of the left-ear and right-ear receiving beams so they overlap on the target. As a result, both the sounds impinging on the target and the echoes stimulating the auditory system have relatively flat spectra, with no lowpass filtering due to location. This isolates the spectral ripple caused by target shape to facilitate object classification in flight (Simon et al., 2014; Falk et al., 2011). Bats also receive much stronger reflections from clutter located off to the sides (Fig. 1A) (Müller and Kuc, 2000; Yovel et al., 2009; Stilz and Schnitzler, 2012). Tracking guarantees that, while the object of interest always is ensonified by the full broadcast spectrum, off-side clutter is ensonified by lowpass versions of the broadcast (Fig. 2A). 
Targets are segregated from clutter using this distinction (Bates et al., 2011).

Clutter interference occurs when echoes from a target of interest in one direction arrive at the same delay as echoes from other objects in different directions. Their simultaneity impairs, or masks, the bat's ability to perceive the desired echoes. Fig. 3 illustrates the acoustic structure of the scene shown in Fig. 1A in relation to the potential for clutter interference based on the locations of echo sources. Objects in the broadcast beam are replaced with acoustic reflecting points (blue dots) in Fig. 3. The insect contains two prominent glints (g1 for head, g2 for wing), so the echo from the insect consists of two closely spaced reflections that overlap and mix together when they are received by the bat's ears. The surrounding vegetation is depicted as clusters of reflecting points distributed at different distances around the bat. The curved purple bands in Fig. 3 delineate the zones of potential masking that result from each of the clutter sources. Because the vegetation is widely distributed in depth, the entire space to the bat's front is filled by bands of potential interference. Two of the clutter arcs are in positions to mask the glints (g1, g2) in the insect. The extended nature of the clutter also can prevent the bat from determining that there are no echoes present at most distances, which would signify that the upcoming path is safe, that is, free of collision hazards.

Fig. 3.

Acoustics of the sonar scene from Fig. 1A showing only the reflecting points (blue circles). Echoes from vegetation create clutter bands (curved purple arcs) that can obscure (i.e. mask) the presence of target echoes if they arrive at the same delays. Insets (A–D) illustrate the effects of object location on inner-ear acoustic spectrograms (blue) and auditory-system neural spectrograms (red) of echoes from representative reflecting points (bullseyes). Auditory spectrograms have a logarithmic vertical frequency axis, which straightens lines tracing FM1 and FM2 compared with the curved sweeps shown in Fig. 1B. (A) Emitted sound contains equal-strength FM1 and FM2 with an overall flat spectrum. The neural spectrogram (red) is uniformly later than the acoustic spectrogram (blue) because of the latency of neural on-responses. (B) The flat-spectrum ‘ideal’ echo from a nearby one-glint target is a weakened, delayed replica of broadcast. Its neural spectrogram (red) is slightly retarded relative to the broadcast neural spectrogram (dashed red sloping lines) because of amplitude–latency trading. (C) Ripple-spectrum echo from a two-glint insect (g1, g2) located straight ahead, which is kept on the beam's axis by the bat's tracking response. Regularly spaced weak points in the acoustic spectrogram show interference nulls (*). In the neural spectrogram, the weak nulls are transposed into locally longer latencies by amplitude–latency trading, giving the sloping red lines a zig-zag shape. (D) Echoes from off-side or distant clutter have lowpass spectra because of frequency-dependent beam width and atmospheric absorption. The neural spectrogram for progressively weaker higher frequencies in FM2 is both retarded in time and skewed in slope because of amplitude–latency trading.

Auditory representation of biosonar scenes

Both the outgoing biosonar sounds and the returning echoes are received by the bat's ears and registered by neural responses in the auditory system. First, successive frequencies in the FM sweeps are registered mechanically at different locations along the high-frequency to low-frequency tonotopic axis of the organ of Corti in the bat's cochlea. Second, separate subpopulations of neurons tuned to different frequencies by their inner-ear receptors respond in a corresponding temporal sequence to these frequencies in an FM sweep. One important difference is that, unlike the linear frequency scale of conventional spectrograms (Fig. 1B,C), the frequency scale developed by the inner ear is approximately logarithmic. Neural frequency tuning preserves the inner ear's logarithmic frequency representation in corresponding tonotopic maps at all levels of the bat's auditory nervous system (Covey and Casseday, 1995; Pollak and Casseday, 1989; Simmons, 2012). Third, and most crucial, each neuron at successive stages of auditory processing produces an average of only one spike in response to its tuned frequency in an FM sound that mimics biosonar stimulation [phasic on-response (Covey and Casseday, 1995; Neuweiler, 2000; Pollak and Casseday, 1989)]. When stimulated by ‘ordinary’ sounds such as tone bursts, noise bursts or complex communication sounds, single neurons throughout the bat's auditory system mostly respond with multiple spikes. But, for the restricted category of rapid wideband FM sweeps used for echolocation, this complexity is discarded (Sanderson and Simmons, 2002; Simmons, 2012).

In Fig. 3, the illustrated auditory representations are time–frequency plots – spectrograms – but with a vertical logarithmic frequency scale as used by the bat, not the linear frequency scale of conventional spectrograms as in Fig. 1B,C. Two types of spectrogram are shown: acoustic spectrograms (blue), representing the frequency scale of the bat's inner ear, and neural spectrograms (red), representing the volley of single-spike responses evoked by each FM sound in neurons within the bat's nervous system. The acoustic spectrograms are acoustico-mechanical in nature. They register vibrations on the basilar membrane induced by individual frequencies in the FM sweeps of the broadcast or the echo (Neuweiler, 2000). The FM sweeps in Fig. 3 appear linear, not curved as in Fig. 1B, because of the logarithmic frequency axis of the auditory system. The neural spectrograms (red) trace the time-of-occurrence of successive frequencies in FM1 or FM2 by the timing, or latency, of single spikes evoked from one frequency to the next. Behavioral tests of the big brown bat's ability to distinguish continuous from stepwise FM sweeps suggest that there are approximately 80 parallel frequency channels to cover frequencies of 25 to 75 kHz, which span all of FM1 and part of FM2 (Roverud and Rabitoy, 1994). Thus, there probably are approximately 100 parallel frequency channels for the entire biosonar band up to 100 kHz. At any given instant, the corresponding frequencies in the harmonic sweeps have a 2:1 frequency ratio, and the spikes evoked by those corresponding frequencies will occur simultaneously. In other words, the auditory representation of the harmonics will be coherent, with the FM1 and FM2 traces in the acoustic (blue) and neural (red) spectrograms correctly aligned in time. As described below, echoes that have coherently aligned harmonics are perceived very acutely (Bates and Simmons, 2011). 
However, the auditory system's mode for representing changes in strength at different frequencies can cause the harmonic traces to become misaligned at some frequencies. The acoustic spectrograms for the inner ear (blue) are similar to the conventional spectrograms in Fig. 1B in that changes in the strength of the sounds at different frequencies are represented by changes in the brightness or thickness of the blue lines. At each frequency, the numerical value of the sound's amplitude is retained as the numerical value on the intensity axis – the third dimension of the spectrogram. Unlike the acoustic spectrograms, changes in the strength of the sounds at different frequencies in the auditory spectrograms (red) are represented instead by shifts in the latency of the responses at those frequencies (Fig. 3). This effect is ‘amplitude–latency trading’ (Pollak and Casseday, 1989). As the sound becomes weaker at any given frequency, the single-spike responses traced by the red lines occur later (i.e. shift to the right) by approximately 15 μs dB−1 (Simmons, 2012). Different points on the red lines in Fig. 3 shift to the left or right according to the strength of the echo from one frequency to the next. This transformation from stimulus amplitude to response timing is unique to auditory representation.
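The amplitude-to-latency transformation described above can be written as a one-line rule. The sketch below assumes that trading is strictly linear at the quoted slope of approximately 15 μs dB−1; the function names and the example echo levels are hypothetical and are used only for illustration.

```python
TRADING_SLOPE_US_PER_DB = 15.0  # approximate slope quoted in the text


def latency_shift_us(attenuation_db: float,
                     slope_us_per_db: float = TRADING_SLOPE_US_PER_DB) -> float:
    """Extra latency (μs) of a single-spike response to a sound that is
    attenuation_db weaker than the reference sound (linear trading assumed)."""
    return slope_us_per_db * attenuation_db


def latency_profile_us(attenuation_by_freq_db: dict) -> dict:
    """Map {frequency in kHz: attenuation in dB} to {frequency in kHz: latency shift in μs}."""
    return {f: latency_shift_us(a) for f, a in attenuation_by_freq_db.items()}


if __name__ == "__main__":
    # Hypothetical echo: 20 dB weaker overall, with an extra 10 dB dip at 40 kHz.
    echo = {30.0: 20.0, 40.0: 30.0, 50.0: 20.0}
    for f, shift in latency_profile_us(echo).items():
        print(f"{f:5.1f} kHz: response {shift:6.1f} μs later")
```

A uniform attenuation shifts every frequency by the same amount and leaves the shape of the neural spectrogram unchanged, whereas a frequency-dependent attenuation changes its shape.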

The inner ear's acoustic spectrogram of the broadcast (blue, Fig. 3A) faithfully traces the FM1 and FM2 harmonic sweeps. These are traced again by neural responses making up the corresponding auditory spectrogram (red, Fig. 3A). A slight rightward shift of the neural spectrogram as a whole (rightward-pointing red arrows) is due to the minimum activation latency (~1 ms) between initial mechanical stimulation of auditory receptors and the actual production of spikes in response to that activation. For each broadcast, the neural spectrogram serves as the bat's internal reference with which to recognize subsequent echoes (Simmons, 2012). The next broadcast also is picked up by the bat's inner ears, and a new internal reference is created for the next stream of echoes. Retention of the broadcast template for comparison with later-arriving echoes takes place in the inferior colliculus, where there is a huge proliferation of neurons that have different latencies for their single spikes, from approximately 5 to 30 ms or more at each frequency (Covey and Casseday, 1995; Simmons, 2012). The delay of the echo – the bat's cue for target range – is extracted from the time that elapses between the volley of single-spike responses to the broadcast and the volley of single-spike responses to the echo. At a higher level, neurons in the big brown bat's auditory cortex respond only to pairs of sounds, the broadcast and the echo, and then only if the sounds' time separation fits the relative latencies of the cells that ultimately drive them from the inferior colliculus. These neurons are ‘delay-tuned’ to represent target range (Neuweiler, 2000; Simmons, 2012). With regard to spectral nulls, different degrees of sharpness for frequency tuning in the inferior colliculus match the different degrees of narrowness for the nulls in the spectra of echoes from insects (Fig. 2C), but this frequency tuning is too narrow to match the much broader spectral shape associated with lowpass filtering (Fig. 2A,B). Frequency tuning eventually affects responses in the bat's auditory cortex, where most delay-tuned neurons manifest additional selectivity for spectral nulls (see Simmons, 2012). Cortical responses emerge much more readily when the echo spectrum contains local regions of low amplitude, or speckled nulls (Sanderson and Simmons, 2002).

Types of echo

The first example of an echo in Fig. 3B is from an ‘ideal’ target, which consists of a single reflecting point that returns all of the frequencies in the incident sound equally (flat-spectrum echo, Fig. 1C). Its echo is just a delayed, weakened replica of the broadcast. Thus, the ideal echo has both an acoustic and an auditory spectrogram that resemble those of the broadcast itself. Note that the neural spectrogram (red) of the ideal echo in Fig. 3B is slightly later in time than the auditory spectrogram of the broadcast in Fig. 3A (rightward-pointing red arrows are longer). The uniformly lower strength of the ideal echo relative to the broadcast engages amplitude–latency trading uniformly, and the shape of the auditory spectrogram is unchanged (same slopes for the red lines in Fig. 3A,B).

For real objects, such as insects, typical target strengths (the reduction in echo strength attributable to the process of reflection alone) range from approximately −10 to −50 dB (Houston et al., 2004; Simmons and Chen, 1989). The insects hunted by big brown bats [e.g. beetles, moths and orthopterans (Clare et al., 2013)] are large enough (10–60 mm) relative to the bat's broadcast wavelengths (3.4 to 17 mm) that echoes are formed with approximately equal strength across all frequencies based on the insect's cross-sectional size and resulting gross reflectivity (Houston et al., 2004). However, the spectrum of insect echoes is dominated by rapid fluctuations of amplitude across frequencies, i.e. ripple (Fig. 2C), not the smooth, monotonic decline in strength as frequencies increase (Fig. 2A,B). In Fig. 3, the insect is depicted as consisting of two glints, one (g1, the head) slightly closer than the other (g2, a wing). For sonar purposes, the spacing of the reflecting parts at slightly different distances is the target's ‘shape’. For the insect sizes preyed upon by big brown bats (linear dimensions of 0.5–6 cm), the time separation of the glint reflections ranges from approximately 30 to 360 μs. These glint reflections overlap at the bat's ears to interfere with each other, creating cancellation nulls (* in Fig. 3C) at specific frequencies and spaced at specific frequency intervals according to the time separation of the glint reflections (Δf in Fig. 1C) (Neuweiler, 2000; Simmons, 2012). For a two-glint delay separation of 100 μs (17 mm glint spacing in depth), the frequency spacing of the nulls (Δf) is 10 kHz. For a two-glint separation of 300 μs (51 mm glint spacing), the null spacing is 3.3 kHz. Because the nulls are local reductions in amplitude at particular frequencies, they translate into local retardations in the latency of neural responses (rightward-pointing segments in two-glint red neural spectrogram in Fig. 3C). 
Neural responses at nulls are retarded by up to 1–2 ms compared with responses at neighboring frequencies on the shoulders of the null (Simmons, 2012). These give the neural spectrograms of insect echoes their characteristic ‘zig-zag’ trace (red arrows in Fig. 3C).
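The relation between glint spacing, glint delay separation and null spacing described above can be checked numerically. The following is a rough sketch, assuming a sound speed of 343 m s−1 and two reflections of equal strength and equal polarity, so that nulls fall at odd multiples of 1/(2Δt); neither assumption is stated in the text, and the function names are illustrative.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # assumed value for air; not given in the text


def glint_delay_us(glint_spacing_mm: float) -> float:
    """Two-way delay separation (μs) for two glints spaced glint_spacing_mm apart in depth."""
    return 2.0 * glint_spacing_mm * 1e-3 / SPEED_OF_SOUND_M_PER_S * 1e6


def null_spacing_khz(delay_us: float) -> float:
    """Frequency interval between nulls: the reciprocal of the glint delay separation."""
    return 1e3 / delay_us


def null_frequencies_khz(delay_us: float,
                         f_lo_khz: float = 25.0,
                         f_hi_khz: float = 100.0) -> list:
    """Null frequencies inside the band, assuming two equal, same-polarity reflections."""
    nulls = []
    k = 0
    while True:
        f = (k + 0.5) * 1e3 / delay_us  # nulls at (k + 1/2) / delay
        if f > f_hi_khz:
            break
        if f >= f_lo_khz:
            nulls.append(f)
        k += 1
    return nulls


if __name__ == "__main__":
    for spacing_mm in (17.0, 51.0):
        dt = glint_delay_us(spacing_mm)
        print(f"{spacing_mm:4.0f} mm -> {dt:5.0f} μs -> null spacing {null_spacing_khz(dt):4.1f} kHz")
```

With these assumptions, a 17 mm glint spacing gives a delay separation close to 100 μs and a null spacing close to 10 kHz, as in the text.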

Off the axis of the broadcast beam (Fig. 1A), the target's location imposes a broad reduction in the strength of the incident sound, and therefore of the echoes, that is biased towards all of the higher frequencies (Fig. 2A). This lowpass filtering is manifested in echo spectrograms by fading of the curved ridge representing FM2 (Fig. 1C). FM2 is progressively weaker not only at greater off-side locations but also at greater distances because of atmospheric absorption at higher frequencies. The most distant targets return only the lowest frequencies in FM1 (Fig. 1B) (Stilz and Schnitzler, 2012). In Fig. 3D, the off-side or distant clutter echo has an acoustic spectrogram (blue) that indicates progressive weakening of higher frequencies by its progressively weaker blue hue. In the neural spectrogram (red), the longer latencies at higher frequencies skew the slope of the line that traces the sweep in FM2 relative to the sweep in FM1. This distortion of the timing of neural responses breaks the normal coherence of harmonically related frequencies. Any given frequency in FM2 no longer is registered simultaneously with its corresponding frequency in FM1. Loss of synchrony between FM1 and FM2 dramatically affects the bat's perception of target distance and shape.
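The loss of harmonic synchrony described above can be illustrated by combining a lowpass attenuation profile with amplitude–latency trading. The sketch below is only a toy model: the linear rolloff above a 50 kHz corner and its 0.1 dB kHz−1 slope are hypothetical values chosen for illustration, and trading is assumed to be linear at the approximately 15 μs dB−1 quoted earlier.

```python
from typing import Callable

TRADING_SLOPE_US_PER_DB = 15.0  # approximate slope quoted earlier in the text


def flat_spectrum(f_khz: float) -> float:
    """Attenuation (dB) of an on-axis echo: the same at every frequency."""
    return 0.0


def hypothetical_lowpass(f_khz: float,
                         corner_khz: float = 50.0,
                         rolloff_db_per_khz: float = 0.1) -> float:
    """Attenuation (dB) rising linearly above the corner; values are illustrative only."""
    return rolloff_db_per_khz * max(0.0, f_khz - corner_khz)


def harmonic_misalignment_us(attenuation_db: Callable[[float], float],
                             fm1_khz: float) -> float:
    """Latency of the FM2 response at 2f minus the FM1 response at f, in μs."""
    extra_db = attenuation_db(2.0 * fm1_khz) - attenuation_db(fm1_khz)
    return TRADING_SLOPE_US_PER_DB * extra_db


if __name__ == "__main__":
    for f1 in (25.0, 30.0, 40.0, 50.0):
        on_axis = harmonic_misalignment_us(flat_spectrum, f1)
        off_axis = harmonic_misalignment_us(hypothetical_lowpass, f1)
        print(f"FM1 {f1:4.0f} kHz / FM2 {2 * f1:5.0f} kHz: "
              f"flat {on_axis:5.1f} μs, lowpass {off_axis:5.1f} μs")
```

In this toy model the flat-spectrum echo keeps FM1 and FM2 aligned at every frequency, while the lowpass echo shows a misalignment that grows toward the high end of FM2, which corresponds to the skewed slope of the FM2 trace in Fig. 3D.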

Perception of echo delay

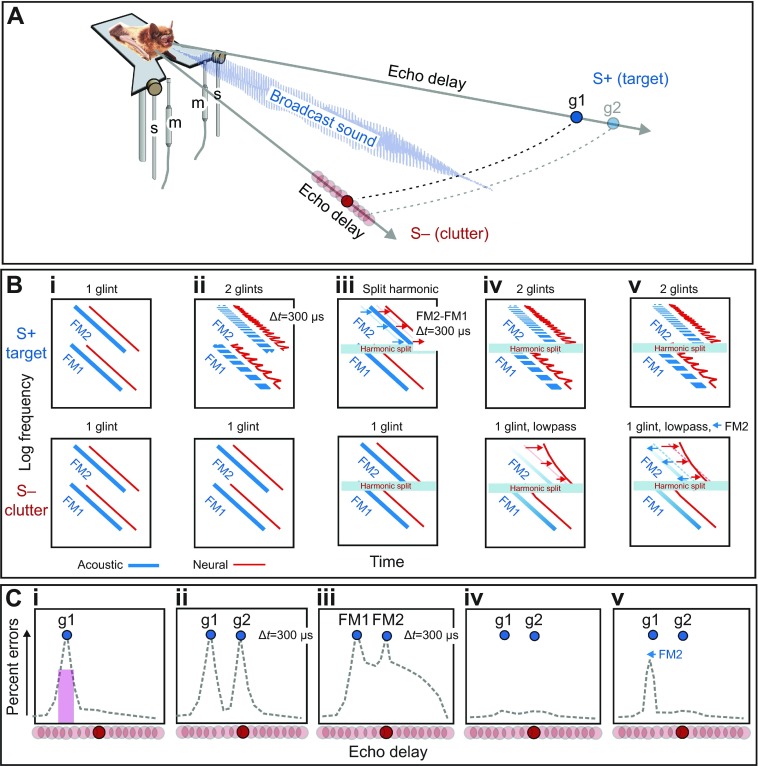

Fig. 4A shows the two-choice psychophysical procedure used to measure the bat's perception of delay and evaluate the strength of clutter masking caused by echoes that arrive at similar delays. In the simplest case, a big brown bat is trained to sit on an elevated, Y-shaped platform and broadcast a series of its sonar sounds to find a nearer target (S+) at a fixed distance (e.g. 30 or 60 cm) on one side and respond by crawling forward toward it, for food reward (Simmons, 1973). The target on the other side (S−) initially is located farther away (e.g. 40 or 70 cm), but it gradually is moved closer in small steps until both targets are at the same distance. From trial to trial, the targets are alternated randomly on the left and right. The horizontal angle separating S− from S+ is 40 deg, which keeps both targets well inside the beam of the broadcasts. Both targets are strongly ensonified, and the bat has no way to avoid receiving echoes from both sides simultaneously. Fig. 4B shows various acoustic (blue) and auditory spectrograms (red) for stimuli used in five representative two-choice tests of delay perception and clutter masking. Fig. 4C shows idealized plots for the mean performance of several bats in these five tasks (numerical data in Figs 5, 6). The dashed lines trace the errors that bats make in the two-choice task. As the difference in distance between S− and S+ decreases, the bats begin to make more errors in choosing the correct target (Fig. 4Ci) (Simmons, 1973). The error rate reaches a peak when the delay of S− matches the delay of S+. The location of the error peak indicates the delay where the bat perceives S− to have the same delay as S+. This increase in errors constitutes clutter masking of S+ by S−. Fig. 5A shows numerical data for two representative experiments with S+ at a distance of either 30 or 60 cm (pink curves). The width of the error peak is approximately 2 cm, which suggests the scale of individual curved clutter bands in Fig. 3.

Fig. 4.

Experimental methods and explanation of stimuli. (A) Two-choice behavioral procedure used to measure perceived distance to rewarded S+ target echoes by tracing the masking effect of unrewarded S− clutter echoes. The perceived distance of the S+ target is traced by plotting the bat's errors when S− is presented at different delays that bracket the fixed delay of S+. The bat sits on a Y-shaped platform and emits a series of FM sounds while it makes its choice. For real targets, two objects are placed at specified distances on the left and right of the bat. For electronic echoes, the bat's broadcasts are picked up with microphones (m) on the bat's left and right, and returned as electronically delayed echoes from loudspeakers (s), also on the left and right. (Bi–v) Schematic acoustic (blue) and neural (red) spectrograms of S+ target and S− clutter stimuli for five crucial experiments. (Ci–v) Schematic plots of percentage errors from the bat's choices for stimuli in Bi–v (numerical data in Figs 5, 6). (Bi,Ci) One-glint S− echoes used to locate the perceived position of one-glint S+ by error peak signifying masking of perceived delay. Vertical purple bar in Ci relates error peak to curved clutter arcs in Fig. 3. (Bii,Cii) One-glint S− echoes used to locate the perceived position of two-glint S+, which yields two error peaks (g1, g2). (Biii,Ciii) One-glint S− echoes used to locate the perceived position of split-harmonic S+ echoes (FM2 300 μs later than FM1), which yields two error peaks surrounded by a broad error region. (Biv,Civ) Masking of two-glint S+ echoes is prevented (no error peaks) by lowpass filtering of one-glint S− echoes, which induces amplitude–latency trading and misalignment of FM2 relative to FM1 in neural spectrograms. (Bv,Cv) Masking caused by lowpass clutter is restored through electronically shifting FM2 earlier to offset amplitude–latency trading.

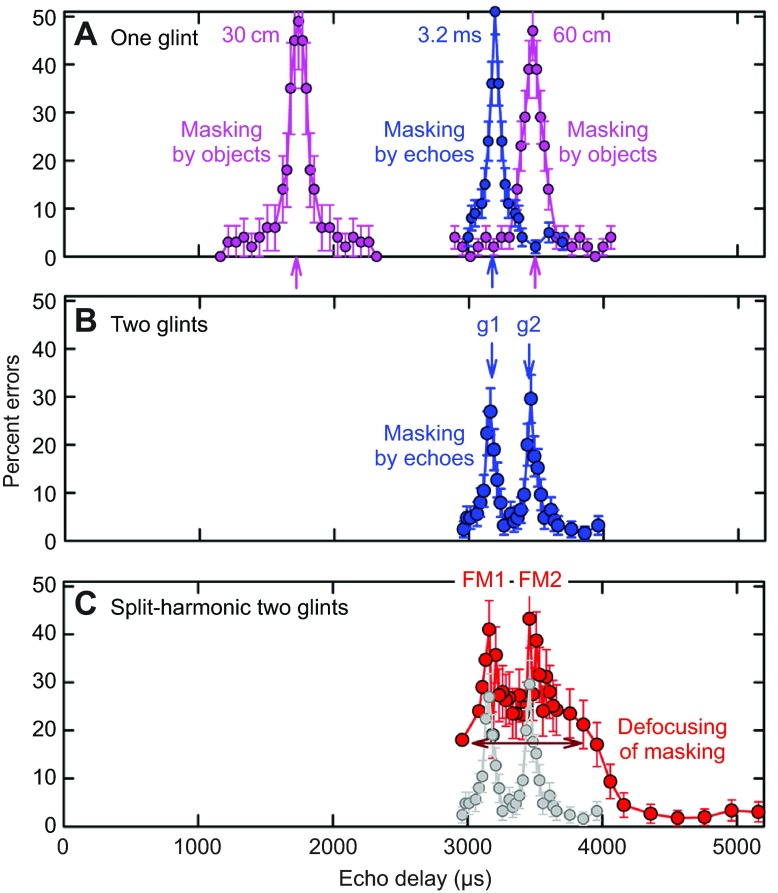

Fig. 5.

Two-choice behavioral results measure clutter masking by an increase in error rate when clutter echoes and target echoes coincide. Horizontal axis plots echo delay for target distance (58 μs cm−1); vertical axis plots mean percentage errors (error bars indicate ±1 s.d.). (A) Numerical data for Fig. 4Ci. Pink curves show masking of echoes from fixed one-glint target (S+) by same-strength one-glint moveable clutter (S−) (S+ at 30 cm, mean of eight bats; S+ at 60 cm, mean of four bats). Blue curve shows masking of electronic echoes when one-glint clutter echoes are at the same delay as one-glint target echoes (S+ at 3.2 ms, corresponding to 55 cm). (B) Numerical data for Fig. 4Cii. Blue curve shows the mean performance of four bats for two-glint S+ electronic test echoes (g1, g2; delivered at fixed delays of ~3200 and 3500 μs for 300 μs glint separation) when one-glint electronic probe echoes are delivered at various delays. Two peaks in error curves show that the bats perceive both glints. (C) Numerical data for Fig. 4Ciii. Red curve shows the four bats' mean performance for split-harmonic test echoes with a 300 μs deliberate lag of FM2 relative to FM1. Two error peaks show that the bats register the delay of each harmonic (FM1, FM2), but these peaks ride on top of a broad pedestal of errors that surround each peak (horizontal red arrow). Widening of the curve shows defocusing of the bat's image for the two-glint target when the harmonics are misaligned. Grey curve repeats blue curve from B.

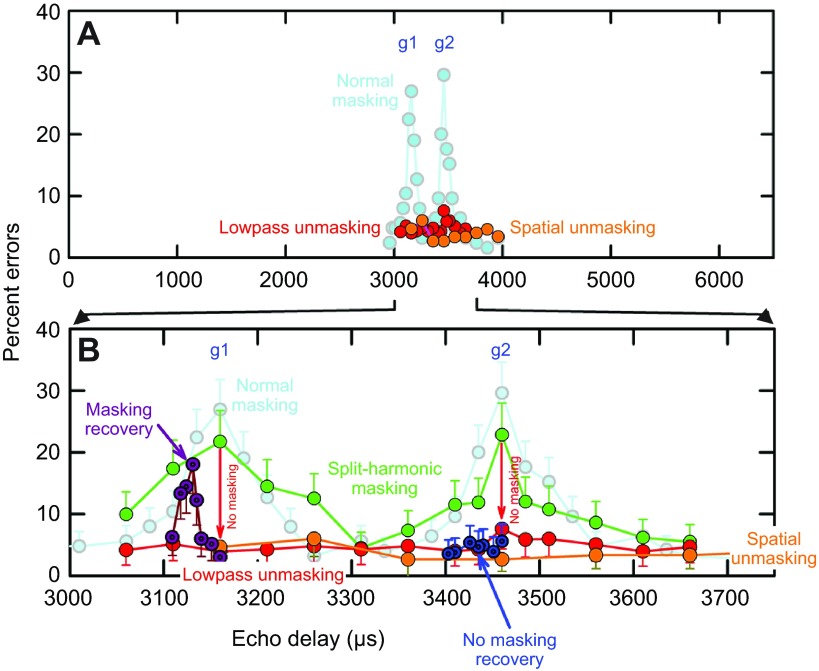

Fig. 6.

Manipulation of echoes that causes defocusing also prevents clutter masking. (A; expanded plot in B) Light blue curve shows normal masking of two-glint test echoes by one-glint probe echoes (from Fig. 5B). Green curve shows same masking when split-harmonic filters clip out 45–55 kHz from two-glint S+ and one-glint S− echoes at the FM1–FM2 boundary. FM1 and FM2 harmonics have the same delay, however, so two error peaks still appear in the curve. Red curve (lowpass unmasking) shows loss of masking (no error peaks; red ‘no masking’ downward-pointing arrows) when only one-glint probe echoes (clutter) are mildly lowpass filtered, which retards and skews auditory responses to FM2 (numerical data for Fig. 4Civ). Orange curve (spatial unmasking) shows loss of masking (no error peaks) when probe echoes (clutter) are presented from 90 deg overhead and 30 deg off to the side, with no electronic lowpass filtering. Overhead clutter echoes are lowpass filtered upon reception by the bat's ears. Dark purple curve shows masking recovery for g1. Masking of g1 is restored when FM2 is moved earlier to compensate for amplitude–latency trading (numerical data for Fig. 4Cv). Peak of masking recovery occurs for FM2 31 μs earlier than FM1. This refocuses the bat's image for the clutter (see Fig. 5A) but also restores the capacity for masking. Dark blue curve shows that FM2 latency compensation does not restore masking for g2.

Several different experiments have used an electronic version of the two-choice masking experiment (e.g. Bates and Simmons, 2010; Bates and Simmons, 2011; Simmons, 1973; Simmons et al., 1990a; Simmons et al., 1990b; Stamper et al., 2009). Fig. 5A also shows results from one such experiment performed with the S+ target simulated by echoes arriving at a delay of 3.2 ms (blue curve for S+ at 3.2 ms). This delay corresponds to a target range of 55 cm. S− clutter echoes vary in delay from 3.7 ms down to 3.0 ms in small steps. In this electronic example, the width of the resulting error peak (the clutter zone) is approximately 100 μs. For both the real objects' echoes (pink curves) and the electronic echoes (blue curve), S+ and S− had the same strength (~70–75 dB SPL peak to peak). In a related clutter masking experiment, also with big brown bats, when the amplitude of the clutter echoes was 22 dB stronger than the target echoes, the total depth of the clutter zone expanded to at least 20 cm (Sümer et al., 2009). Natural clutter – vegetation – consists of multiple reflectors such as clusters of leaves that are larger than insects and densely distributed in range, so the potential for clutter masking can be expected to fill much of the space in front of the bat in Fig. 1A.
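The correspondence between echo delay and target range used above (3.2 ms for a range of 55 cm, and the 58 μs cm−1 scale given for Fig. 5) can be checked with a few lines of arithmetic. The following is a minimal sketch; the function names are hypothetical and the only input taken from the text is the 58 μs cm−1 conversion factor.

```python
# Conversion factor quoted for Fig. 5: 58 microseconds of two-way echo delay
# per centimeter of target range.
US_PER_CM = 58.0


def delay_us_to_range_cm(delay_us: float) -> float:
    """Convert an echo delay in microseconds to target range in centimeters."""
    return delay_us / US_PER_CM


def range_cm_to_delay_us(range_cm: float) -> float:
    """Convert a target range in centimeters to echo delay in microseconds."""
    return range_cm * US_PER_CM


# The electronic S+ delay of 3.2 ms corresponds to roughly 55 cm.
print(round(delay_us_to_range_cm(3200.0), 1))  # 55.2

# The real-target S+ distances of 30 and 60 cm expressed as delays.
print(range_cm_to_delay_us(30.0), range_cm_to_delay_us(60.0))  # 1740.0 3480.0

# A 2 cm wide error peak spans about 116 us, the same order as the
# ~100 us clutter zone measured with electronic echoes.
print(range_cm_to_delay_us(2.0))  # 116.0
```

On this scale, the approximately 2 cm error peak for real targets and the approximately 100 μs error peak for electronic echoes describe clutter zones of similar size.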

Big brown bats discriminate between objects based on their glint configurations (DeLong et al., 2008; Simmons and Chen, 1989; Simmons et al., 1990a; Simmons et al., 1990b). If the glints are closer together than approximately 6 cm, so that the glint reflections arrive within approximately 360 μs of each other, the reflections combine to form a single echo that has a single delay plus a rippling pattern of spectral nulls. The frequency spacing of these nulls (Δf in Fig. 1C) is the reciprocal of the time separation of the reflections (100 or 300 μs in Fig. 1C). Big brown bats sense the pattern of the nulls to perceive the separation in delay between the reflections (Simmons, 2012). For example, when S+ in Fig. 4Bii consists of two overlapping reflections (g1, g2) at delays of 3.2 and 3.5 ms (Δt=300 μs), the bat's error rate (Fig. 4Cii) increases when the one-glint S− clutter echoes coincide in delay with either of the S+ glint reflections (Fig. 5B) (Bates and Simmons, 2011).
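The reciprocal relationship between glint separation and null spacing can be illustrated numerically. The sketch below assumes two reflections of equal amplitude, for which the nulls fall at odd multiples of 1/(2Δt); that idealization, the function names, and the choice of the 25–100 kHz band (the span of FM1 and FM2) are assumptions made for illustration, not details of the experiments.

```python
def null_spacing_khz(dt_us: float) -> float:
    """Spacing between adjacent spectral nulls (kHz) for a glint separation in us.

    The spacing is the reciprocal of the time separation:
    1 / (dt_us * 1e-6 s) in Hz, which equals 1e3 / dt_us in kHz.
    """
    return 1e3 / dt_us


def null_frequencies_khz(dt_us: float, f_lo_khz: float = 25.0,
                         f_hi_khz: float = 100.0) -> list:
    """Null frequencies inside a band, assuming two equal-amplitude reflections.

    Under that assumption the summed spectrum is proportional to
    |cos(pi * f * dt)|, so nulls sit at odd multiples of 1 / (2 * dt).
    """
    half_spacing = null_spacing_khz(dt_us) / 2.0
    nulls = []
    k = 0
    while (2 * k + 1) * half_spacing <= f_hi_khz:
        f = (2 * k + 1) * half_spacing
        if f >= f_lo_khz:
            nulls.append(f)
        k += 1
    return nulls


print(null_spacing_khz(100.0))      # 10.0 (kHz between nulls for 100 us)
print(null_spacing_khz(300.0))      # about 3.33 kHz between nulls for 300 us
print(null_frequencies_khz(100.0))  # nulls at 25, 35, ..., 95 kHz
```

A wider glint separation therefore produces more closely spaced nulls across the broadcast band, which is the ripple pattern the bat is proposed to read.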

The two harmonics (FM1, FM2) always are aligned in the bat's broadcasts and also in flat-spectrum echoes from nearby objects located on the axis (Fig. 1C). In fact, they are aligned in all echoes, just weaker at higher frequencies for lowpass echoes. They also are aligned in the inner ear acoustic spectrograms of these echoes (blue lines, Fig. 3). However, in the corresponding neural spectrograms for lowpass-filtered echoes (red, Fig. 3D), registration of the harmonics becomes misaligned because of amplitude–latency trading. Temporal misalignment of the responses to the harmonics affects the bat's perception of echo delay. In Fig. 4Biii, S+ and S− echoes each consist of a single glint reflection, as in Fig. 4Bi, and both have been electronically filtered to separate the harmonics (indicated by ‘harmonic split’ horizontal blue bar segregating FM2 from FM1). This allows FM2 to be delivered at a different delay than FM1. In the illustrated experiment, FM2 is delayed by 300 μs more than FM1 (FM1 at 3.2 ms, FM2 at 3.5 ms). The one-glint S− echoes (Fig. 4Biii) have no such harmonic misalignment, but, as a control, they do have the harmonic-split electronic separation in place (same horizontal blue bar). The performance of bats is shown schematically in Fig. 4Ciii, with numerical results in Fig. 5C (red curve) (Bates and Simmons, 2011). There still are two narrow error peaks separated by 300 μs. These correspond to the delays of FM1 at 3.2 ms and FM2 at 3.5 ms. Their presence indicates that the misaligned timing of the harmonics leads the bat to perceive a corresponding echo delay for FM1 and FM2. What is peculiar is the presence of a broad region of errors – in essence, a much wider error peak – superimposed on the two narrow peaks associated directly with the harmonics (Fig. 4Ciii, Fig. 5C). This broad peak is fully 1 ms across (horizontal red arrow, Fig. 5C). It represents a serious degradation of overall delay acuity – a defocusing of the bat's image. 
The defocusing effect has an abrupt onset that is revealed by misaligning FM2 in smaller time steps from 0 to 300 μs. A harmonic misalignment as little as 3 μs produces significant defocusing, and FM2 offsets of 5–25 μs lead to fully defocused images (Bates and Simmons, 2011). Split-harmonic echoes are a useful experimental tool, but they are not naturally occurring sounds. However, lowpass filtering of echoes (Fig. 1C) does occur naturally as a result of the location of the object in the broadcast beam, and it also causes the neural registration of FM2 to become misaligned with FM1 because of amplitude–latency trading. Broad defocusing of the bat's delay image occurs for lowpass S+ echoes as well as split-harmonic S+ echoes, thus demonstrating the interchangeability of natural lowpass neural misalignment and artificial electronic misalignment of FM2 (Bates and Simmons, 2010). The broad peak of errors illustrated in Fig. 5C that signifies defocusing is ubiquitous for perception of S+ echoes with misaligned neural responses to the harmonics. What consequences does defocusing have for the capacity of the S− echoes to cause masking of normal S+ echoes?

Mitigation of clutter interference

The experiments that yielded the results illustrated in Fig. 5 were carried out by shifting the presentation of clutter (S−) echoes to different delays around the delay of target echoes (S+) (Fig. 4A). The manipulations illustrated in Fig. 4Ci–iii were applied only to the S+ target echoes. Fig. 4Biv,v and 4Civ,v illustrate two different experiments in which the spectrum of S− echoes was manipulated instead of S+ echoes, which kept the same 300 μs glint separation shown in Fig. 4Biii and 4Ciii. When the S− clutter echoes had a flat spectrum, the results showed two narrow error peaks, one for each glint (g1, g2; Fig. 4Cii, Fig. 5C). In contrast, when the S− clutter echoes are lowpass-filtered, the error peaks for S+ (g1, g2) disappear entirely (Fig. 4Civ), even though the S+ echoes are unchanged. Fig. 6A shows numerical results for this experiment. Compared with the normal two-glint experiment (faded blue curve in Fig. 6, or green curve in Fig. 6B for split-harmonic filters in place but no lowpass filtering of S−), lowpass-filtered S− echoes do not produce error peaks when they arrive at the same delays as the two-glint reflections in S+ (g1, g2) (red curve in Fig. 6). The masking effect of the clutter is gone (Bates et al., 2011). The same loss of masking also occurs if the S− echoes have electronically flat spectra when emitted by the loudspeakers but are delivered from loudspeakers located overhead and slightly to one side (orange curve, Fig. 6). This off-side location engages lowpass filtering by the receiving beams of the external ears (Warnecke et al., 2014; see Aytekin et al., 2004). Thus, the same manipulations of echoes that cause harmonic misalignment and delay-image defocusing (e.g. Fig. 5C) also cause a release from clutter masking (Fig. 6). Defocusing is a dispersal of the originally sharp perception that is expected from the temporal binding hypothesis, which requires synchronization of responses to the harmonics for a sharp image to be formed. 
However, defocusing applied to the clutter echoes reduces their capacity to interfere with perception of target echoes. The origin of defocusing is lowpass filtering, which is associated with an object's off-side or distant location in the scene (Fig. 2A,B, Fig. 3D). By exploiting the chain of events from lowpass filtering to misalignment of neural responses evoked by echo harmonics to defocusing of the resulting images, bats may acquire the ability to operate effectively in cluttered surroundings. Echoes from nearby, on-axis objects have, on average, flat spectra except for spectral ripple (Fig. 2C). By tracking the target, the bat can form focused images of targets for purposes of classification while also preventing clutter interference from impairing perception of the target. This finding could have a significant technological bonus if the bat's auditory defocusing mechanism can be incorporated into a biomimetic sonar design to suppress clutter interference, which is the bane of man-made sonar and radar systems (Denny, 2007; Stimson, 1998).

Can the mitigation of clutter interference caused by lowpass filtering be reversed by directly shifting the timing of FM2 earlier than FM1 to compensate for the amplitude–latency trading that retards the neural responses to FM2? This manipulation does restore focus to images that have been defocused. For example, a 3 dB reduction in FM2 relative to FM1 causes ~48 μs of additional neural delay from amplitude–latency trading, which leads to defocusing of delay perception (Bates and Simmons, 2011). If FM2 is reduced in amplitude by 3 dB but also moved earlier by 48 μs, to compensate for the amplitude–latency trading, the sharpness of delay perception is restored (Bates and Simmons, 2010). This finding unambiguously identifies neural synchronization as the crucial factor for sharp imaging. It also satisfies the requirement for validating the temporal binding hypothesis that the perception be disrupted by desynchronizing the neural responses, and restored by resynchronizing the responses. The same methodological reciprocity applies to clutter masking: if lowpass filtering of S− retards the neural responses to FM2 (Fig. 4Biv), and leads to unmasking (Fig. 4Civ, Fig. 6), then moving FM2 to a shorter delay than FM1 while keeping the lowpass filtering in place ought to restore the alignment of the neural responses to the harmonics in S− and lead to recovery of masking (Fig. 4Bv, 4Cv). When the delay of FM2 is electronically shortened by 0 to 50 μs relative to the delay of FM1 in lowpass echoes, the error peak signifying clutter masking of the first glint (g1) reappears at approximately −30 μs of compensation (purple ‘masking recovery’ curve, Fig. 6B) (Bates et al., 2011). Curiously, the same amplitude–latency compensation does not restore clutter masking for the second glint (g2) (dark blue ‘no masking recovery’ curve, Fig. 
6B), perhaps because this second glint is extracted from the ripple pattern of interference nulls in S+ echoes, not from the timing of neural responses evoked by the S− echoes. Realignment of responses to FM2 with responses to FM1 is only approximate: it corrects the average timing of the neural responses, as reflected in the restoration of masking for the first glint, but it does not remove the skewing of the sloping line for responses to FM2 in lowpass echoes (Fig. 4Bv). The global lowpass effect may prevent registration of the local ripple necessary to reconstruct the second glint in the image.
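The compensation logic described above can be written out as simple arithmetic. The sketch below takes the one figure quoted in the text (a 3 dB reduction of FM2 producing ~48 μs of added neural delay) and assumes, purely for illustration, that the trade is linear at about 16 μs per dB; the linearity and the function names are assumptions, not measured properties.

```python
# Slope implied by the quoted example: 3 dB of attenuation -> ~48 us of extra
# neural latency. Treating this as a constant slope is an assumption.
TRADING_US_PER_DB = 48.0 / 3.0  # about 16 us per dB


def extra_latency_us(attenuation_db: float) -> float:
    """Added neural latency (us) for a given attenuation (dB), assumed linear."""
    return TRADING_US_PER_DB * attenuation_db


def residual_misalignment_us(fm2_attenuation_db: float,
                             fm2_advance_us: float) -> float:
    """Neural lag of FM2 behind FM1 after FM2 is delivered earlier.

    A positive result means responses to FM2 still trail responses to FM1;
    zero means the harmonics are realigned on average.
    """
    return extra_latency_us(fm2_attenuation_db) - fm2_advance_us


# A 3 dB reduction of FM2 with no compensation leaves a 48 us lag.
print(residual_misalignment_us(3.0, 0.0))   # 48.0

# Delivering FM2 48 us earlier cancels the lag on average.
print(residual_misalignment_us(3.0, 48.0))  # 0.0
```

This captures only the mean timing of responses to FM2. It does not model the frequency-dependent skew of responses within FM2 that lowpass filtering also introduces, which is the part of the misalignment that a single delay shift cannot undo.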

Conclusions

Experiments that manipulate the synchronization of neural responses to the harmonics in FM biosonar echoes support the temporal binding hypothesis for formation of perceived images. Sharp, focused images are perceived for echoes that evoke synchronized responses to harmonics. Diffuse, defocused images are perceived for echoes that evoke desynchronized responses to harmonics. These results suggest that the bat's sonar can serve as a vehicle for validating the temporal binding hypothesis. However, they go beyond the initial concern to address the question of whether the focusing or defocusing of images might be related to attention and its opposite, inattention. Defocused images derived from lowpass or off-side echoes appear not to interfere with perception of focused images derived from on-axis echoes. The bat's target-tracking response keeps the target in the zone of full-spectrum ensonification to yield echoes that have no lowpass filtering and that evoke synchronized responses to echo harmonics. The ripple spectrum crucial for perceiving the target's shape (Fig. 2C) is distinct from the spectral distortion of lowpass filtering (Fig. 2A,B). In addition, the acoustic separability of smooth spectral modulation caused by lowpass filtering and rapid spectral modulation caused by ripple is backed up by specific neural mechanisms that are selective for echoes that contain only the spectral ripple. These mechanisms are manifested by null-selective neurons in the bat's auditory cortex (Sanderson and Simmons, 2002; Simmons, 2012). By perceiving focused images of on-axis targets, bats are able to classify objects by their shape – an indication that they are paying attention to the target, not to the surrounding clutter. The very definition of inattention is the disappearance of objects from perception. 
By perceiving defocused images of the clutter, with the attendant reduction in masking, are bats exploiting the desynchronization of neural responses to relegate the clutter to the unattended surround? The boundary between focused and defocused images is very categorical – only a few microseconds of misalignment between responses to FM2 and responses to FM1 is sufficient to cause defocusing (Bates and Simmons, 2011). The abrupt, discontinuous transition to defocusing suggests that desynchronization of responses is the means by which inattention is imposed on objects in the surround.

The various effects of echo spectral and harmonic manipulations on the bat's perception of echo delay expose what might be perceptual principles involved in focused attention and defocused inattention. They highlight the central role of neural response timing for creating sharply focused images of echo delay for the object in the perceptual center (Fig. 4A,B), and by implication, the role of response timing for preventing masking by defocusing of images for objects in the perceptual surround. Experimental demonstration of the reversibility of masking and masking release by shifting the timing of neural responses to harmonics in biosonar echoes (Bates et al., 2011) ties the mechanisms of focusing and defocusing in perception to the mechanisms of attention and inattention. Big brown bats act as though they solve the clutter problem by paying attention to the target, which is tracked by the aim of the head and the axes of the emission and reception beams to transfer the full spectrum of the broadcasts into the echoes. Echoes from objects off to the sides or farther away necessarily are lowpass filtered, with weaker FM2 than FM1, and these echoes lead to blurred images of the clutter that do not cause clutter interference. The abrupt onset of defocusing suggests that it originates in an active process that detects even a small desynchronization of neural responses to FM2 relative to FM1 and imposes an abrupt collapse of image sharpness (Bates and Simmons, 2011). By keeping its sonar beam aligned on the target, or pointed down the immediately upcoming flight path, the bat may automatically ensure focused imaging of the desired space. The hard computational work may be in disabling the images derived from the clutter by detecting desynchrony of responses so that clutter masking does not occur.

Acknowledgements

This work was presented as the Heiligenberg Lecture to the International Society for Neuroethology (ISN) at the University of Maryland on 6 August 2012. The author thanks Prof. A. N. Popper, University of Maryland, and the ISN Program Committee for the invitation to give the lecture.

Footnotes

Competing interests

The author declares no competing financial interests.

Funding

Funding was provided by the Office of Naval Research [N00014-04-1-0415, N00014-09-1-0691]; the National Science Foundation [IOS-0843522]; the National Institutes of Health [R01-MH069633]; the NASA/RI Space Grant; and the Brown Institute for Brain Science. Deposited in PMC for release after 12 months.

References

- Aytekin M., Grassi E., Sahota M., Moss C. F. (2004). The bat head-related transfer function reveals binaural cues for sound localization in azimuth and elevation. J. Acoust. Soc. Am. 116, 3594-3605

- Aytekin M., Mao B., Moss C. F. (2010). Spatial perception and adaptive sonar behavior. J. Acoust. Soc. Am. 128, 3788-3798

- Baker C. J., Smith G. E., Balleri A., Holderied M., Griffiths H. D. (2014). Biomimetic echolocation with application to radar and sonar sensing. Proc IEEE Inst. Electr. Electron Eng. 102, 447-458

- Barchi J. R., Knowles J. M., Simmons J. A. (2013). Spatial memory and stereotypy of flight paths by big brown bats in cluttered surroundings. J. Exp. Biol. 216, 1053-1063

- Bates M. E., Simmons J. A. (2010). Effects of filtering of harmonics from biosonar echoes on delay acuity by big brown bats (Eptesicus fuscus). J. Acoust. Soc. Am. 128, 936-946

- Bates M. E., Simmons J. A. (2011). Perception of echo delay is disrupted by small temporal misalignment of echo harmonics in bat sonar. J. Exp. Biol. 214, 394-401

- Bates M. E., Simmons J. A., Zorikov T. V. (2011). Bats use echo harmonic structure to distinguish targets from clutter and suppress interference. Science 333, 627-630

- Clare E. L., Symondson W. O. C., Fenton M. B. (2013). An inordinate fondness for beetles? Variation in seasonal dietary preferences of night-roosting big brown bats (Eptesicus fuscus). Mol. Ecol. [Epub ahead of print] doi:10.1111/mec.12519

- Covey E., Casseday J. H. (1995). The lower brainstem auditory pathways. In Hearing by Bats (ed. Popper A. N., Fay R. R.), pp. 235-295. New York, NY: Springer

- Crick F. (1984). Function of the thalamic reticular complex: the searchlight hypothesis. Proc. Natl. Acad. Sci. USA 81, 4586-4590

- Crick F., Koch C. (2003). A framework for consciousness. Nat. Neurosci. 6, 119-126

- DeLong C. M., Bragg R., Simmons J. A. (2008). Evidence for spatial representation of object shape by echolocating bats (Eptesicus fuscus). J. Acoust. Soc. Am. 123, 4582-4598

- Denny M. (2007). Blip, Ping, and Buzz: Making Sense of Radar and Sonar. Baltimore, MD: Johns Hopkins University Press

- Engel A. K., Singer W. (2001). Temporal binding and the neural correlates of sensory awareness. Trends Cogn. Sci. 5, 16-25

- Falk B., Williams T., Aytekin M., Moss C. F. (2011). Adaptive behavior for texture discrimination by the free-flying big brown bat, Eptesicus fuscus. J. Comp. Physiol. A 197, 491-503

- Felleman D. J., Van Essen D. C. (1991). Distributed hierarchical processing in the primate cerebral cortex. Cereb. Cortex 1, 1-47

- Ghose K., Moss C. F. (2003). The sonar beam pattern of a flying bat as it tracks tethered insects. J. Acoust. Soc. Am. 114, 1120-1131

- Ghose K., Moss C. F. (2006). Steering by hearing: a bat's acoustic gaze is linked to its flight motor output by a delayed, adaptive linear law. J. Neurosci. 26, 1704-1710

- Hartley D. J., Suthers R. A. (1989). The sound emission pattern of the echolocating bat, Eptesicus fuscus. J. Acoust. Soc. Am. 85, 1348-1351

- Hiryu S., Bates M. E., Simmons J. A., Riquimaroux H. (2010). FM echolocating bats shift frequencies to avoid broadcast-echo ambiguity in clutter. Proc. Natl. Acad. Sci. USA 107, 7048-7053

- Holderied M. W., Korine C., Fenton M. B., Parsons S., Robson S., Jones G. (2005). Echolocation call intensity in the aerial hawking bat Eptesicus bottae (Vespertilionidae) studied using stereo videogrammetry. J. Exp. Biol. 208, 1321-1327

- Houston R. D., Boonman A. M., Jones G. (2004). Do echolocation signal parameters restrict bats' choice of prey? In Echolocation in Bats and Dolphins (ed. Thomas J. A., Moss C. F., Vater M.), pp. 339-345. Chicago, IL: University of Chicago Press

- Itti L., Koch C. (2001). Computational modelling of visual attention. Nat. Rev. Neurosci. 2, 194-203

- Koay G., Heffner H. E., Heffner R. S. (1997). Audiogram of the big brown bat (Eptesicus fuscus). Hear. Res. 105, 202-210

- Macías S., Mora E. C., Coro F., Kössl M. (2006). Threshold minima and maxima in the behavioral audiograms of the bats Artibeus jamaicensis and Eptesicus fuscus are not produced by cochlear mechanics. Hear. Res. 212, 245-250

- Masters W. M., Moffat A. J. M., Simmons J. A. (1985). Sonar tracking of horizontally moving targets by the big brown bat Eptesicus fuscus. Science 228, 1331-1333

- Moss C. F., Surlykke A. (2010). Probing the natural scene by echolocation in bats. Front. Behav. Neurosci. 4, 33

- Moss C. F., Zagaeski M. (1994). Acoustic information available to bats using frequency-modulated sounds for the perception of insect prey. J. Acoust. Soc. Am. 95, 2745-2756

- Müller R. (2004). A numerical study of the role of the tragus in the big brown bat. J. Acoust. Soc. Am. 116, 3701-3712

- Müller R., Kuc R. (2000). Foliage echoes: a probe into the ecological acoustics of bat echolocation. J. Acoust. Soc. Am. 108, 836-845

- Neuweiler G. (2000). The Biology of Bats. New York, NY: Oxford University Press

- Petrites A. E., Eng O. S., Mowlds D. S., Simmons J. A., DeLong C. M. (2009). Interpulse interval modulation by echolocating big brown bats (Eptesicus fuscus) in different densities of obstacle clutter. J. Comp. Physiol. A 195, 603-617

- Pollak G. D., Casseday J. H. (1989). The Neural Basis of Echolocation in Bats. New York, NY: Springer

- Roelfsema P. R., Engel A. K., König P., Singer W. (1996). The role of neuronal synchronization in response selection: a biologically plausible theory of structured representations in the visual cortex. J. Cogn. Neurosci. 8, 603-625

- Roverud R. C., Rabitoy E. R. (1994). Complex sound analysis in the FM bat Eptesicus fuscus, correlated with structural parameters of frequency modulated signals. J. Comp. Physiol. A 174, 567-573

- Sanderson M. I., Simmons J. A. (2002). Selectivity for echo spectral interference and delay in the auditory cortex of the big brown bat Eptesicus fuscus. J. Neurophysiol. 87, 2823-2834

- Shadlen M. N., Movshon J. A. (1999). Synchrony unbound: a critical evaluation of the temporal binding hypothesis. Neuron 24, 67-77, 111-125

- Shamma S. (2001). On the role of space and time in auditory processing. Trends Cogn. Sci. 5, 340-348

- Simmons J. A. (1973). The resolution of target range by echolocating bats. J. Acoust. Soc. Am. 54, 157-173

- Simmons J. A. (2005). Big brown bats and June beetles: multiple pursuit strategies in a seasonal acoustic predator-prey system. Acoust. Res. Lett. Online 6, 238-242

- Simmons J. A. (2012). Bats use a neuronally implemented computational acoustic model to form sonar images. Curr. Opin. Neurobiol. 22, 311-319

- Simmons J. A., Chen L. (1989). The acoustic basis for target discrimination by FM echolocating bats. J. Acoust. Soc. Am. 86, 1333-1350

- Simmons J. A., Ferragamo M., Moss C. F., Stevenson S. B., Altes R. A. (1990a). Discrimination of jittered sonar echoes by the echolocating bat, Eptesicus fuscus: the shape of target images in echolocation. J. Comp. Physiol. A 167, 589-616

- Simmons J. A., Moss C. F., Ferragamo M. (1990b). Convergence of temporal and spectral information in target images perceived by the echolocating bat, Eptesicus fuscus. J. Comp. Physiol. A 166, 449-470

- Simon R., Knörnschild M., Tschapka M., Schneider A., Passauer N., Kalko E. K. V., von Helversen O. (2014). Biosonar resolving power: echo-acoustic perception of surface structures in the submillimeter range. Front. Physiol. 5, 64

- Stamper S. A., Bates M. E., Benedicto D., Simmons J. A. (2009). Role of broadcast harmonics in echo delay perception by big brown bats. J. Comp. Physiol. A 195, 79-89

- Stilz W.-P., Schnitzler H.-U. (2012). Estimation of the acoustic range of bat echolocation for extended targets. J. Acoust. Soc. Am. 132, 1765-1775

- Stimson G. W. (1998). Introduction to Airborne Radar. Mendham, NJ: Scitech

- Sümer S., Denzinger A., Schnitzler H.-U. (2009). Spatial unmasking in the echolocating big brown bat, Eptesicus fuscus. J. Comp. Physiol. A 195, 463-472

- Surlykke A., Kalko E. K. V. (2008). Echolocating bats cry out loud to detect their prey. PLoS ONE 3, e2036

- Treisman A. (1999). Solutions to the binding problem: progress through controversy and convergence. Neuron 24, 105-125

- Uhlhaas P. J., Pipa G., Lima B., Melloni L., Neuenschwander S., Nikolić D., Singer W. (2009). Neural synchrony in cortical networks: history, concept and current status. Front. Integr. Neurosci. 3, 17

- von der Malsburg C. (1995). Binding in models of perception and brain function. Curr. Opin. Neurobiol. 5, 520-526

- von der Malsburg C. (1999). The what and why of binding: the modeler's perspective. Neuron 24, 95-104, 111-125

- Wallis G. (2005). A spatial explanation for synchrony biases in perceptual grouping: consequences for the temporal-binding hypothesis. Percept. Psychophys. 67, 345-353

- Warnecke M., Bates M. E., Flores V., Simmons J. A. (2014). Spatial release from simultaneous echo masking in bat sonar. J. Acoust. Soc. Am. 135, 3077-3085

- Yovel Y., Stilz P., Franz M. O., Boonman A., Schnitzler H.-U. (2009). What a plant sounds like: the statistics of vegetation echoes as received by echolocating bats. PLOS Comput. Biol. 5, e1000429