Abstract

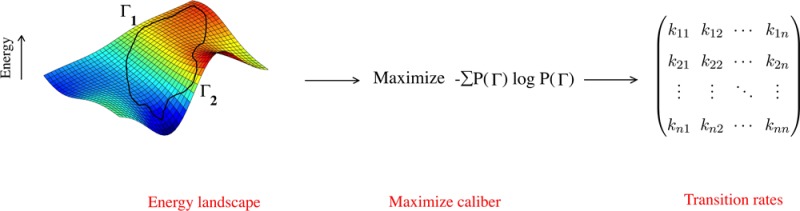

We present a principled approach for estimating the matrix of microscopic transition probabilities among states of a Markov process, given only its stationary state population distribution and a single average global kinetic observable. We adapt Maximum Caliber, a variational principle in which the path entropy is maximized over the distribution of all possible trajectories, subject to basic kinetic constraints and some average dynamical observables. We illustrate the method by computing the solvation dynamics of water molecules from molecular dynamics trajectories.

Introduction

We propose a method for inferring the kinetic rate matrix for stochastic systems for which the steady state popuations are known. The types of systems of interest include protein folding,1−3 ion channels,4 molecular motors,5 the evolutionary dynamics of protein sequences,6 the collective firing patterns of neurons,7 or noisy gene expression.8 In such studies, stationary probabilities {pi} of N stable states {i} are often known or estimated from experimental data,6,7 detailed molecular mechanics calculations,3 coarse grained theories,1,4 or from Maximum Entropy.6,7,9−12 Moreover, suppose further that we know some average global dynamical quantity, such as the average mutation rate (number of amino acid changes per unit time), the average current through an ion channel, or the average number of neurons that change their state from spiking to resting (and vice versa) per unit time step. How can we infer the full distribution of microscopic state-to-state rates, given just the stationary-state populations and one or more average overall rate quantities?

More specifically, we are interested in a principled way to solve the following under-determined “inverse” kinetics problem. Consider a stationary and irreducible Markov process among i = 1,2,3,...,N states. Suppose you know the following: (a) the stationary state probability distribution, {pi} of the occupancies of those states, and (b) the value ⟨w⟩ of some dynamical observable w averaged over the ensemble of stationary state trajectories. From these N + 1 quantities, we want to infer the N × N microscopic transition probabilities,

| 1 |

between those states.

While the stationary distribution indeed constrains the transition probability matrix, it does not uniquely determine it. Here, we develop a procedure based on the principle of Maximum Caliber, a variant of the principle of Maximum Entropy, that is applicable to dynamical processes.13,14 This variational principle allows us to uniquely infer ∼N2 transition probabilities that are consistent with ∼N imposed constraints.

First, we define the path entropy, S, over a given ensemble {Γ} of trajectories Γ ≡ ... → i → j → k → l... as

| 2 |

Maximum Caliber is a variational principle that chooses a unique probability distribution {P(Γ)} over trajectories from all possible candidate distributions as the one that maximizes the path entropy while otherwise satisfying the relevant stationary and dynamical constraints.13−16

Consider an ensemble of stationary state trajectories {Γ} (see above) having a total time duration T. Restricting our attention to first-order Markov trajectories allows us to greatly simplify the path entropy and carry out our analysis in terms of trajectories of single steps, i → j.2,17−19 The Markov property implies that the probability of any particular trajectory Γ can be expressed in terms of the transition probabilities {kij},

| 3 |

The path entropy of the above ensemble is directly proportional to the total duration T of the trajectory. The path entropy per unit time is given by20,21

| 4 |

The microscopic transition probabilities of any Markov process are subject to two types of constraints. First, from state i at time t, the system must land somewhere at time t + dt. Second, a system in state j at time t + dt must arrive from somewhere, so

| 5 |

Third, we require one additional constraint that is global, that is, averaged over the entire ensemble of trajectories. We fix the path ensemble average of some dynamical quantity w. The average ⟨w⟩Γ over any given stationary trajectory Γ is given by

| 6 |

The path ensemble average ⟨w⟩ is

| 7 |

Since Γ is a stationary state trajectory, the path ensemble average ⟨w⟩ of eq 7 simplifies to

| 8 |

Maximization of the path entropy subject to these three constraints

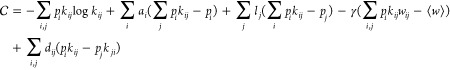

can be expressed equivalently in terms of maximization of a quantity

called the Caliber  :14

:14

| 9 |

where γ is the Lagrange multiplier associated with the constraint ⟨w⟩ and {ai} and {li} enforce the to-somewhere constraint and the from-somewhere constraint, respectively.

To solve for the matrix kij of transition probabilities, we take the derivative of the

Caliber  with respect

to kij and equate it

to zero. This gives

with respect

to kij and equate it

to zero. This gives

| 10 |

where we have made the substitutions: eai–1 = βi/pi and elj = λj. The values kij* are the transition probabilities that satisfy the constraints and otherwise maximize the caliber. For simplicity of notation, we drop the superscript * in the remainder of this paper, that is, kij ≡ kij.

In this problem, the values of pi are given. To compute the kij values, we first must determine the values of the Lagrange multipliers βi, λj, and γ. We do so by substituting the constraint relations mentioned above.

Determining the Lagrange Multipliers

For a given value of γ, the modified Lagrange multipliers βi and λj are determined by satisfying the to-somewhere and from-somewhere conditions indicated above. From eqs 5

| 11 |

where e–γwij = Wij. Equation 11 can be simplified if we define a nonlinear operator D over column vectors x̅ = [x1,x2,...]T as D[x̅]i = pi/xi. We have

| 12 |

where λ̅ = [λ1,λ2,...]T and β̅ = [β1,β2,...]T are the column vectors of Lagrange multipliers.

For a particular value of the Lagrange multiplier γ, eqs 12 can be numerically and self-consistently solved for {βi} and {λi}. In practice, we choose an appropriate γ by first constructing transition probabilities {kij} for multiple values of γ (see eq 10) and choosing the value of γ that satisfies

| 13 |

where ⟨w⟩ is the prescribed value of the ensemble average of the dynamical quantity w.

Detailed Balance

So far, the treatment above is generally applicable to nonequilibrium systems. However, if we are interested in restricting our attention to systems in thermodynamic equilbrium, we can impose an additional constraint that the system must satisfy detailed balance, pikij = pjkji. In this case, the Caliber can be expressed as

|

14 |

Here, dij are Lagrange multipliers that impose the detailed balance condition. Differentiating with respect to kij and setting the derivative to zero, we find (see eq 10)

| 15 |

where δij = edij–dji = 1/δji.

Now we have to determine the modified Lagrange multipliers βi, λj, δij, and γ from the imposed constraints. Let us first impose detailed balance to determine δij. We have

| 16 |

Since δij = 1/δji, we have

| 17 |

In eq 17, identifying ρi = (βiλi)1/2,

| 18 |

where e–γ/2(wij+wji) = Wijsym is the symmetrized form of Wij = e–γwij (see above). It is easy to see that kij in eq 18 satisfies detailed balance. The Lagrange multipliers ρ̅ = [ρ1,ρ2,...,ρN] and γ are determined by the same procedure as described above. We have

| 19 |

In other words, ρ̅ is the solution of the nonlinear equation

| 20 |

and γ is determined by adjusting the path ensemble average

| 21 |

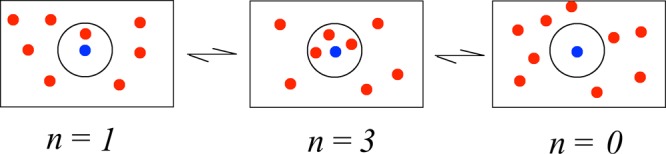

An Illustration: Computing the Dynamics of a Solvation Shell from Simulated Populations

We now illustrate how the present MaxCal method can be used to take a stationary-state distribution and a global dynamical constraint and to infer microscopic kinetics. Consider a shell of solvating water molecules surrounding a single water molecule. The number, n(t), of water molecules in the hydration shell is a quantity that fluctuates with time t (see Figure Figure 1). We want to compute how fast the water molecules enter or exit the solvation shell. If the time interval dt is small, n(t) and n(t + dt) will be statistically correlated. Here, we construct a Markov process to model the time series {n(t)}. We will require the Markov process to reproduce (a) the stationary distribution p(n) that is observed in molecular dynamics simulations, and (b) the average change in occupancy Δ per time step of duration dt, a path ensemble average (see Appendix for details of molecular simulation). While the choice of dynamical constraint(s) remains somewhat arbitrary, it is validated only a posteriori. In other words, the constrain(s) is a modeling aspect of any maximum entropy method.22 We have

| 22 |

where n(t) = i and n(t + dt) = j.

Figure 1.

Hydration shell (black circle) around a central water molecule (blue disc) is dynamically populated by other water molecules in the bulk solvent medium (red discs). The probability, p(n) that the hydration shell has exactly n water molecules is a key quantity in determining the solvation free energy of liquid water.23,24

Since the system should satisfy detailed balance, the transition probability kij for a transition n(t) = i → n(t + dt) = j is given by (see eq 18),

| 23 |

For a given value of γ, we determine the Lagrange multipliers

ρi from eqs 20 above. In order to determine the Lagrange multiplier γ which

dictates the rate of transition between states, we first construct

Markov processes for different values of γ. Panel B of Figure

Figure 2 shows that the path ensemble average

of the change in occupation number per unit time step Δ is exponentially

decreasing with γ. From trajectories sampled at every 5 fs from

the MD simulation, we find that experimental trajectory average Δexpt =  ≈ 0.0629 which corresponds

to γ

≈ 3.29. From here onward, we use γ = 3.29 and construct

the transition probabilities {kij} (see eq 23). Note that the path ensemble

average Δ and consequently the Lagrange multiplier γ,

depend on the time interval dt between two observation

(dt = 5 fs here).

≈ 0.0629 which corresponds

to γ

≈ 3.29. From here onward, we use γ = 3.29 and construct

the transition probabilities {kij} (see eq 23). Note that the path ensemble

average Δ and consequently the Lagrange multiplier γ,

depend on the time interval dt between two observation

(dt = 5 fs here).

Figure 2.

Panel A: The stationary distribution p(n) of the number of water molecules in the hydration shell of radius r = 3.2 Å of a reference water molecule. Panel B: The dependence of the ensemble average of change in water occupancy number Δ = ⟨|n(t + dt) – n(t)|⟩ on the Lagrange multiplier γ. We see that Δ depends exponentially on γ. A higher γ implies slower dynamics and vice versa. We choose γ = 3.29 to match the observed Δ ≈ 0.0629 in the molecular dynamics simulation.

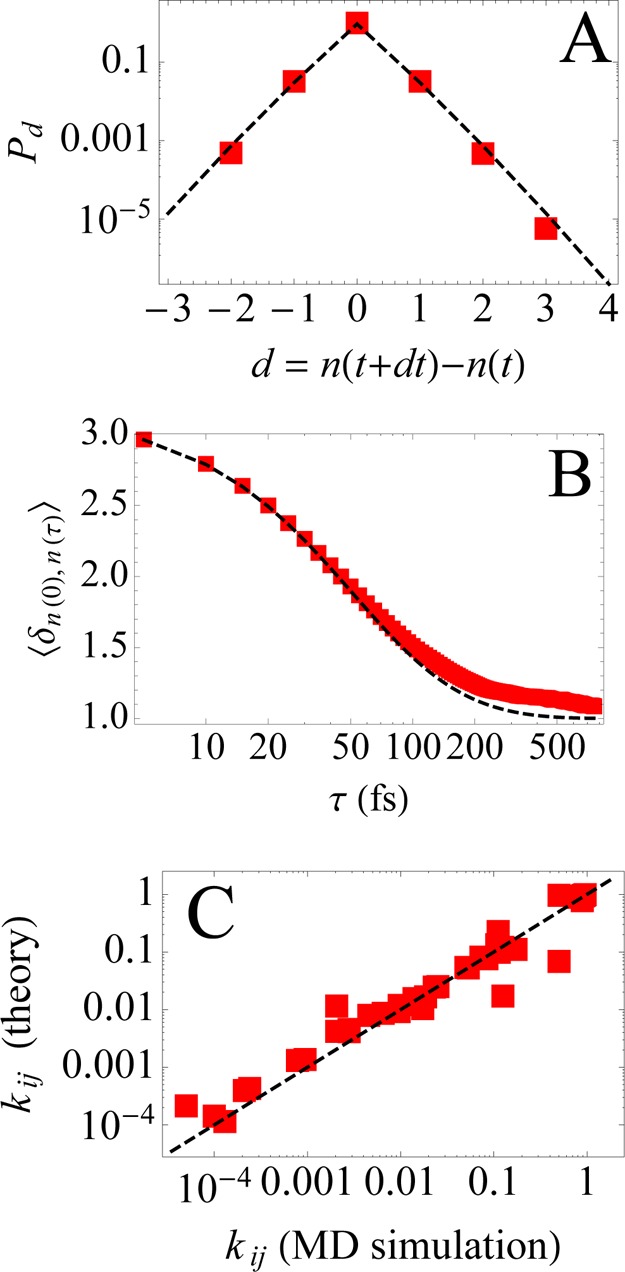

From the Markov process constructed with γ = 3.29 (see above), we now compute various dynamical quantities: (a) the probability Pd of jump size d, (b) the occupancy autocorrelation ⟨δn(0),n(τ)⟩, and (c) the transition probabilities kij, and we compare to those obtained directly from the MD simulation trajectory. In general, the MaxCal method will be of value when transitions are hard to simulate, such as for large kinetic barriers. Here, we are just illustrating with a toy problem for which we can determine the transition probabilities independently from the simulations.

From the long simulation trajectory, the probability Pd of jump size is estimated as the histogram of d = n(t + dt) – n(t). Here d could be both positive and negative. Pd is given by

| 24 |

The normalized occupancy autocorrelation is simply the joint probability that n(t) and n(t + τ) are equal. It is given by

| 25 |

where K(τ) = kτ is the τth power of the matrix of transition probabilities {kij}.

In Figure 3 we plot Pd, ⟨δn(0),n(τ)⟩, and {kij} estimated from the molecular dynamics trajectory and compare them to our predictions from Markov modeling. Even though we constrained only the mean value Δ = ⟨|d|⟩ of Pd, the modeled Markov process captures the entire distribution Pd with high accuracy. Similarly the occupancy correlation ⟨δn(0),n(τ)⟩ is also reproduced with high accuracy even though we did not utilize any information about it when inferring the transition probabilities of the Markov process. Moreover, our modeling also accurately captures the individual transition probabilities {kij} over 4 orders of magnitude.

Figure 3.

Panel A: The probability Pd of jump size estimated from the trajectory derived from MD simulation (red squares) is compared to the one predicted using the transition probabilities of the Markov process (dashed black line). Panel B: The normalized occupancy autocorrelation ⟨δn(0),n(τ)⟩ as estimated from the MD trajectory and as predicted from the transition probabilities of the Markov process. Panel C: We directly compare the transition probabilities kij for the probability of transition i → j empirically obtained from the MD trajectory to the ones predicted by using eq 23.

Discussion and Summary

We have presented here a variational approach that computes N × N microscopic transition probabilities of a Markov process, given only knowledge of a stationary state population distribution and one trajectory-averaged dynamical property. In this approach, we maximize the path entropy subject to constraints; that is, we maximize the Caliber. We show that this method gives correct values of dynamical quantities in an example of molecular dynamics simulations of a water solvation shell around a single water molecule. This method may be useful for analyzing single-molecule experiments such as on ion channels,4 dynamics of neuron firing,7 and the dynamics of protein-sequence evolution,6 for example.

Acknowledgments

K.D. thanks grant 5R01GM090205-02 from the National Institute of General Medical Sciences. P.D. thanks Mr. Manas Rachh and Mr. Karthik Shekhar for numerous discussions about the topic and a critical reading of the manuscript. We would like to thank Kingshuk Ghosh and Steve Presse for helpful discussions on the manuscript.

Appendix I: MD Simulation

We performed a molecular dynamics simulation on 233 water molecules25,26 at 300 K and at a constant volume using NAMD27 with help of the Langevin thermostat. The oxygen atom of one of the water molecules was fixed at the origin. The time step of integration was 1 fs and the trajectory was stored every 5 fs. Sampling the trajectory every 5 fs ensures that correlations in n(t) have not vanished.

The authors declare no competing financial interest.

Funding Statement

National Institutes of Health, United States

References

- Weikl T. R.; Palassini M.; Dill K. A. Protein Sci. 2004, 13, 822–829. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lane T. J.; Schwantes C. R.; Beauchamp K. A.; Pande V. S. J. Chem. Phys. 2013, 139, 145104. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lane T. J.; Bowman G. R.; Beauchamp K.; Voelz V. A.; Pande V. S. J. Am. Chem. Soc. 2011, 133, 18413–18419. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hille B.Ion Channels of Excitable Membranes; Sinauer: Sunderland, MA, 2001; Vol. 507. [Google Scholar]

- Kolomeisky A. B.; Fisher M. E. Annu. Rev. Phys. Chem. 2007, 58, 675–695. [DOI] [PubMed] [Google Scholar]

- Shekhar K.; Ruberman C. F.; Ferguson A. L.; Barton J. P.; Kardar M.; Chakraborty A. K. Phys. Rev. E 2013, 88, 062705. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Schneidman E.; Berry M. J.; Segev R.; Bialek W. Nature 2006, 440, 1007–1012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Paulsson J. Phys. Life Rev. 2005, 2, 157–175. [Google Scholar]

- Dixit P. D. Biophys. J. 2013, 104, 2743–2750. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Pressé S.; Ghosh K.; Lee J.; Dill K. A. Rev. Mod. Phys. 2013, 85, 1115. [Google Scholar]

- Dixit P. D. J. Chem. Phys. 2013, 138, 184111. [DOI] [PubMed] [Google Scholar]

- Crooks G. E. Phys. Rev. E 2008, 75, 041119. [DOI] [PubMed] [Google Scholar]

- Jaynes E. T. Annu. Rev. Phys. Chem. 1980, 31, 579–601. [Google Scholar]

- Pressé S.; Ghosh K.; Lee J.; Dill K. A. Rev. Mod. Phys. 2013, 85, 1115–1141. [Google Scholar]

- Stock G.; Ghosh K.; Dill K. A. J. Chem. Phys. 2008, 128, 194102. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Wang Q. A. Chaos, Solitons, & Fractals 2005, 26, 1045–1052. [Google Scholar]

- Seitaridou E.; Inamdar M. M.; Phillips R.; Ghosh K.; Dill K. J. Phys. Chem. B 2007, 111, 2288–2292. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Otten M.; Stock G. J. Chem. Phys. 2010, 133, 034119. [DOI] [PubMed] [Google Scholar]

- Ghosh K.; Dill K. A.; Inamdar M. M.; Seitaridou E.; Phillips R. Am. J. Phys. 2006, 74, 123–133. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Filyukov A.; Karpov V. Y. J. Eng. Phys. Thermophys. 1967, 13, 416–419. [Google Scholar]

- Cover T. M.; Thomas J. A.. Elements of Information Theory; John Wiley & Sons: New York, 2012. [Google Scholar]

- Caticha A.arXiv preprint arXiv:1212.6967, 2012. [Google Scholar]

- Asthagiri D.; Dixit P. D.; Merchant S.; Paulaitis M. E.; Pratt L. R.; Rempe S. B.; Varma S. Chem. Phys. Lett. 2010, 485, 1–7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Merchant S.; Asthagiri D. J. Chem. Phys. 2009, 130, 195102. [DOI] [PubMed] [Google Scholar]

- Jorgensen W.; Chandrasekhar J.; Madura J. D.; Impey R. W.; Klein M. L. J. Chem. Phys. 1983, 79, 926–935. [Google Scholar]

- Neria E.; Fischer S.; Karplus M. J. Chem. Phys. 1996, 105, 1902–1921. [Google Scholar]

- Phillips J. C.; Braun R.; Wang W.; Gumbart J.; Tajkhorshid E.; Villa E.; Chipot C.; Skeel R. D.; Kale L.; Schulten K. J. Comput. Chem. 2005, 26, 1781–1802. [DOI] [PMC free article] [PubMed] [Google Scholar]