Abstract

Exposure is often cited as an explanation for campaign success or failure. A lack of validation evidence for typical exposure measures, however, suggests the possibility of either misdirected measurement or incomplete conceptualization of the idea. If whether people engage campaign content in a basic, rudimentary manner is what matters when we talk about exposure, a recognition-based task should provide a useful measure of exposure, or what we might call encoded exposure, that we can validate. Data from two independent sources, the National Survey of Parents and Youth (NSPY) and purchase data from a national antidrug campaign, offer such validation. Both youth and their parents were much more likely to recognize actual campaign advertisements than to claim recognition of bogus advertisements. Also, gross rating points (GRPs) for a campaign advertisement correlated strikingly with average encoded exposure for an advertisement among both youth (r = 0.82) and their parents (r = 0.53).

Beyond considerations of message design, an important explanatory variable for public health campaign success or failure is exposure (Hornik, 1997). While researchers and practitioners may be increasingly cognizant of this notion, however, at least two related questions are often overlooked. First, what exactly do we mean when we talk about exposure? Second, are measures of that construct valid? Do typical measures indicate meaningful variation?

Upon initial consideration of these questions, it might seem reasonable to focus on the physical presence of information in a person's immediate environment as a useful dimension for study. Yet communication scholars have noted in various contexts that the physical proximity of a person to electronic media appliances (or time spent with appliances) does not guarantee any meaningful engagement with information presented in such media (Clarke & Kline, 1974; Kline, 1977; Salmon, 1986). A classic example occurs whenever a television blares away in a living room as various family members walk in and out, paying no substantive attention to the content.

Instead, often what is of more interest to campaign planners and evaluators is whether presentation of campaign content generates at least a minimal memory trace in individuals. Only at that point might we begin to suggest that a potential audience member has engaged the campaign's presentation in any meaningful sense. To avoid confusion, we can call this variable encoded exposure.

How can we measure encoded exposure? Given the notion of a minimal memory trace, at least two options are relevant: a recognition task or a recall task. While the two types of memory measures are related, recognition can be differentiated from unaided recall of information. We can think about unaided recall as the ability to offer detail about particular content when asked an open-ended question at some point after the initial opportunity to engage the content. Recognition, in contrast, is a more basic ability to respond to a closed-ended question about past engagement with specific content when presented with that content once again. The former suggests a relatively high degree of current information salience and accessibility, whereas the latter involves a somewhat lower standard of past cognitive engagement (Shoemaker, Schooler, & Danielson, 1989; Singh, Rothschild, & Churchill, 1988).

In light of this distinction, recognition-based tasks theoretically should offer appropriate indicators of encoded exposure. As Lang (1995) has argued, recognition measures likely indicate if the information in question ever has been encoded, which is a basic outcome that resides at a different conceptual level than the retrieval ability likely tapped by recall tasks. While unaided questions may provide a keener sense of what is most salient to a respondent at the time of interview, measuring recognition should more precisely and efficiently tap basic encoded exposure (du Plessis, 1994; Stapel, 1998).

In response to criticism regarding the possible tendency of recognition measures to provide too much aid or to encourage false reporting, researchers have offered some evidence that recognition measures can discriminate between valid and bogus reports and can produce variance comparable to recall measures (Singh & Rothschild, 1983; Singh et al., 1988; Zinkhan, Locander, & Leigh, 1986). Moreover, recall measures have faults of their own. Unaided recall tasks, for example, tend to lead to substantial underreporting and place a heavy burden on the respondent (Sudman & Bradburn, 1982).

Beyond such small-scale investigations of recognition measure performance, however, available literature currently yields few, if any, reported attempts to validate a recognition task as a measure of encoded exposure by comparing the sheer availability of national campaign content with encoded exposure among a national population. A recognition-based task administered to a representative sample of an intended audience for such a mass media campaign should provide a meaningful encoded exposure measure that we can validate. In light of that argument, evidence from an evaluation of the U.S. Office of National Drug Control Policy's (ONDCP) antidrug mass media campaign offers an opportunity to explore the empirical validity of these measurement claims.

Methods

Procedure

From November 1999 through December 2000, a multistage cluster sample¹ representing all U.S. youth ages 9 to 18 and their parents or caregivers participated in two waves of the NSPY. In a first wave, from November 1999 through May 2000, interviewers administered surveys with 3,312 youth ages 9 to 18 in 2,373 households and with 2,293 parents in 2,282 households. From July 2000 through December 2000, interviews also were conducted with 2,362 youth ages 9 to 18 in 1,726 households and 1,632 parents in 1,623 households. Respondents used touch-screen laptop computers and headphones brought into their homes by an interviewer to view each question (or listen to a prerecorded reading of the question) and to respond. These data were generated as part of a multiyear evaluation of the National Youth Anti-Drug Media Campaign (Hornik et al., 2000; Hornik et al., 2001).

Data detailing advertisement purchases also were obtained from campaign staff. From September 1999 through December 2000, campaign organizations placed advertisements in national network, cable, and in-school television programming, as well as in local television programming in more than 100 U.S. metropolitan areas. Those organizations, in turn, reported gross rating points estimates for each advertisement during the specific weeks that an advertisement aired.

GRPs are a conventional unit used by advertising researchers for measuring a population's opportunities for exposure to a particular unit of media content (Farris & Parry, 1991). GRPs are the product of underlying estimates of reach and frequency. In theory, for example, 100 GRPs could be the result of 100% of the population in question having the opportunity to see or hear an advertisement one time, 1% of that population having the opportunity to see or hear an advertisement 100 times, or some other combination of reach and frequency. In this case, such estimates provided the basis for a total GRP density score for each advertisement from the campaign.
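The reach-and-frequency arithmetic described above can be sketched in a few lines of Python. This is only an illustration: the function name `gross_rating_points` and its parameters are hypothetical, and reach is assumed to be expressed as a percentage of the population in question.

```python
def gross_rating_points(reach_percent: float, average_frequency: float) -> float:
    """Return GRPs as reach (percent of population) times average frequency."""
    if not 0 <= reach_percent <= 100:
        raise ValueError("reach_percent must be between 0 and 100")
    if average_frequency < 0:
        raise ValueError("average_frequency must be non-negative")
    return reach_percent * average_frequency


# The two scenarios from the text, plus one mixed combination.
everyone_once = gross_rating_points(100, 1)      # 100% of the population, one time
one_percent_often = gross_rating_points(1, 100)  # 1% of the population, 100 times
half_twice = gross_rating_points(50, 2)          # 50% of the population, two times
```

All three combinations yield the same 100 GRPs, which is why a GRP total alone cannot distinguish broad, shallow availability from narrow, repeated availability.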

Measures

In order to gauge encoded exposure, television advertisements that had aired in the two months prior to a particular NSPY interview were shown to respondents on the laptop computer used for the interview. Generally, the interview program played up to four advertisements for respondents, depending on the number of eligible advertisements.² In addition to the actual campaign advertisements, each respondent also was shown a bogus antidrug advertisement. Each bogus advertisement was one of a series of advertisements that had been produced professionally (for one of the partner organizations of the campaign) but had yet to air.

After seeing each advertisement, each respondent was asked, “Have you ever seen or heard this ad?” If they responded in the affirmative, they then were asked, “In recent months, how many times have you seen or heard this ad?” Response categories were “not at all,” “once,” “2 to 4 times,” “5 to 10 times,” and “more than 10 times.” (In order to represent the numeric distance between these categories in interval fashion, categories were recoded into scores of 0, 1, 3, 7.5, and 12.5 for analysis. “Don't know” responses to the initial question were recoded as 0.5.)
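The recoding scheme just described might be expressed as follows. This is a minimal sketch rather than the NSPY processing code: the names `FREQUENCY_SCORES` and `recode_recognition` are hypothetical, and scoring a "no" to the initial question as 0 is an assumption, since the text specifies only the follow-up categories and the "Don't know" case.

```python
from typing import Optional

# Interval-style scores for the follow-up frequency categories, as listed in the text.
FREQUENCY_SCORES = {
    "not at all": 0.0,
    "once": 1.0,
    "2 to 4 times": 3.0,
    "5 to 10 times": 7.5,
    "more than 10 times": 12.5,
}


def recode_recognition(ever_seen: str, times_seen: Optional[str] = None) -> float:
    """Convert one respondent's answers about one advertisement into a numeric score."""
    answer = ever_seen.strip().lower()
    if answer == "don't know":
        return 0.5  # "Don't know" on the initial question, per the text
    if answer == "no":
        return 0.0  # assumption: never having seen the ad is scored as zero
    if answer == "yes":
        if times_seen is None:
            raise ValueError("a 'yes' answer requires a follow-up frequency category")
        return FREQUENCY_SCORES[times_seen.strip().lower()]
    raise ValueError(f"unexpected answer: {ever_seen!r}")


example_score = recode_recognition("Yes", "5 to 10 times")
```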

GRPs, as provided by campaign staff in the manner noted earlier, provided the basis for a measure of the sheer environmental prevalence of campaign advertisements. Specifically, the total gross ratings points for each advertisement were divided by the total number of weeks in which that advertisement aired from September 1999 through December 2000 to produce a total GRP density score for each advertisement. This measure indicates the level of total physical availability for each advertisement throughout the time period in question.
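As a worked illustration of the density calculation, the short sketch below divides total GRPs by weeks on air, using two rows from Table 1 as inputs. The function name `grp_density` is hypothetical.

```python
def grp_density(total_grps: float, weeks_on_air: int) -> float:
    """Return average GRPs per week on air for one advertisement."""
    if weeks_on_air <= 0:
        raise ValueError("weeks_on_air must be positive")
    return total_grps / weeks_on_air


# Inputs taken from Table 1: youth Ad A and parent Ad II.
ad_a_density = round(grp_density(592.41, 9), 2)
ad_ii_density = round(grp_density(431.14, 8), 2)
```

Rounded to two decimals, these reproduce the density values reported for those two advertisements in Table 1 (65.82 and 53.89).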

Analysis

In order to validate the NSPY measure of encoded exposure, at least two types of evidence are useful. First, as an initial diagnostic test, the simple, yes-or-no recognition portion of the NSPY measure should discriminate between recognition of media content that actually was available to a respondent in recent months and the tendency to falsely report recognition of content that was not available. To explore this idea, we compared average recognition levels for advertisements that actually did air prior to interview with average recognition levels for bogus advertisements included in the NSPY that did not actually air.

Second, a useful measure of encoded exposure for a particular campaign advertisement should correlate, at an aggregate level, with the sheer environmental prevalence of that advertisement. While encoded exposure is theoretically different from environmental availability, the two should be related insofar as the exposure encoding process is fundamentally contingent on the environmental presence of information. In the context of this study, that meant that the total GRP density achieved for a campaign advertisement should correlate with the average weekly encoded exposure score reported by respondents for that advertisement.
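The aggregate-level comparison amounts to a Pearson correlation computed across advertisements, with one pair of values per advertisement. The sketch below shows that calculation in plain Python. The function name `pearson_r` is hypothetical, and the input lists are invented placeholder numbers used only to exercise the function; they are not NSPY or campaign data.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Return the Pearson correlation between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length, at least 2")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cross = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("correlation is undefined when a variable has no variance")
    return cross / math.sqrt(ss_x * ss_y)


# Placeholder values only (not study data): one entry per advertisement.
hypothetical_grp_density = [5.0, 20.0, 45.0, 70.0]
hypothetical_weekly_exposure = [0.1, 0.3, 0.5, 0.9]
r = pearson_r(hypothetical_grp_density, hypothetical_weekly_exposure)
```

Because the unit of analysis is the advertisement rather than the respondent, the number of observations in such a correlation equals the number of advertisements studied.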

In order to estimate weekly encoded exposures from the original NSPY recognition questions, the total number of times an advertisement reportedly was seen in recent months was divided by the average number of days the advertisement was on the air in the 60 days prior to an interview. This step offered an estimate of average encoded exposures per day for that advertisement across respondents. Multiplying this number by seven then offered an estimate of average encoded exposures per week for each advertisement.
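One reading of this two-step conversion is sketched below. It is an unweighted simplification, whereas the published estimates relied on survey weights, and it assumes that the numerator is the mean recoded frequency score across respondents. The function name `weekly_encoded_exposure` and the sample inputs are hypothetical.

```python
from statistics import mean
from typing import Sequence


def weekly_encoded_exposure(times_seen_scores: Sequence[float],
                            days_on_air: Sequence[float]) -> float:
    """Estimate average encoded exposures per week for one advertisement.

    times_seen_scores: recoded "times seen in recent months" score per respondent.
    days_on_air: days the ad aired in the 60 days before each respondent's interview.
    """
    average_days_on_air = mean(days_on_air)
    if average_days_on_air <= 0:
        raise ValueError("the advertisement must have aired on at least one day")
    exposures_per_day = mean(times_seen_scores) / average_days_on_air
    return exposures_per_day * 7  # convert a per-day figure to a per-week figure


# Placeholder inputs (not study data): two respondents, ad on air 30 of the prior 60 days.
estimate = weekly_encoded_exposure([3.0, 3.0], [30, 30])
```

With these placeholder inputs the estimate is about 0.7 encoded exposures per week, that is, three reported viewings spread over roughly 30 days on air.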

Because a multistage cluster design was used to generate the original sample, it is most appropriate to use analysis software that affords the use of replicate weight factors to avoid underestimating standard errors. Accordingly, we used version 4.0.73 of WesVar Complex Samples Software, developed by Westat, for all final analyses involving probability levels.³

Results

Initially noteworthy is the fact that the NSPY recognition measure produced variance. Across all of the advertisements shown, approximately 84% of youth recognized at least one of the campaign advertisements as having aired in the time period in question, but only about a third (35%) of youth reported seeing at least one of the campaign advertisements every week. Among parents, approximately 62% reported recognition of at least one of the advertisements and about a quarter (24%) of parents reported seeing at least one of the campaign advertisements every week.⁴ Such variance suggests clear differences between the campaign advertisements with reference to encoded exposure, as seeing an advertisement more than once a week is likely quite different from not even engaging it once a month. Beyond simply demonstrating variance, however, the encoded exposure measure had two additional tests to pass. We turn to those results next.

Recognition for Actual and Bogus Advertisements

If the encoded exposure measure operates as intended, respondents should report relatively higher recognition of advertisements that actually were available in the U.S. information environment prior to the time of interview compared with reported recognition of bogus advertisements. This pattern did emerge upon analysis. The average actual campaign television advertisement intended for youth was recognized by approximately 45% of 9- to 18-year-old youth. Over a third (9 of 23) of the actual youth advertisements were recognized by more than half of all youth. In contrast, the average bogus advertisement reportedly was recognized by less than 12% of youth. In other words, youth respondents were more likely to report recognition when presented with an actual campaign advertisement than when presented with a bogus advertisement, t = 50.05, p < 0.01.

A similar story emerged among parents. Approximately 30% of parents recognized the average actual campaign television advertisement intended for parents, whereas 16% recognized the average bogus advertisement. Again, this pattern signaled a significant difference in the tendency to report recognition according to whether an actual or a bogus advertisement was presented, t = 16.62, p < 0.01.

Nomological Validation

While knowing that respondents tended to report recognition more often when recognition actually was possible is useful, the measure in question theoretically also should bear a relationship to the environmental availability of advertisements if it is an indicator of encoded exposure. For any particular advertisement, in other words, average reported encoded exposure should correlate with the total GRP density obtained for that advertisement. Table 1 outlines the GRP levels purchased for each relevant ONDCP campaign advertisement. This variation should predict variation in the encoded exposure measure.

TABLE 1.

Gross Rating Point Data For Television Campaign Advertisements (Fall 1999 Through 2000)

| Advertisement | Total TV GRPs | Total weeks on air | Total GRP density (GRPs per week on air) |
|---|---|---|---|
| Youth ads | | | |
| Ad A | 592.41 | 9 | 65.82 |
| Ad B | 540.90 | 20 | 27.05 |
| Ad C | 412.10 | 11 | 37.46 |
| Ad D | 181.67 | 8 | 22.71 |
| Ad E | 725.64 | 17 | 42.68 |
| Ad F | 41.35 | 10 | 4.14 |
| Ad G | 886.17 | 18 | 49.23 |
| Ad H | 483.59 | 7 | 69.08 |
| Ad I | 1013.59 | 13 | 77.97 |
| Ad J | 30.52 | 6 | 5.09 |
| Ad K | 736.83 | 16 | 46.05 |
| Ad L | 398.16 | 16 | 24.89 |
| Ad M | 145.08 | 7 | 20.73 |
| Ad N | 7.30 | 2 | 3.65 |
| Ad O | 334.62 | 12 | 27.89 |
| Ad P | 386.12 | 18 | 21.45 |
| Ad Q | 229.85 | 18 | 12.77 |
| Ad R | 233.45 | 18 | 12.97 |
| Ad S | 98.10 | 13 | 7.55 |
| Ad T | 125.11 | 7 | 17.87 |
| Ad U | 420.91 | 13 | 32.38 |
| Ad V | 58.96 | 5 | 11.79 |
| Ad W | 168.74 | 8 | 21.09 |
| Parent ads | | | |
| Ad AA | 287.31 | 16 | 17.96 |
| Ad BB | 118.63 | 10 | 11.86 |
| Ad CC | 193.23 | 11 | 17.57 |
| Ad DD | 64.15 | 3 | 21.38 |
| Ad EE | 100.40 | 5 | 20.08 |
| Ad FF | 201.53 | 18 | 11.20 |
| Ad GG | 488.53 | 22 | 22.21 |
| Ad HH | 103.60 | 5 | 20.72 |
| Ad II | 431.14 | 8 | 53.89 |
| Ad JJ | 269.85 | 8 | 33.73 |

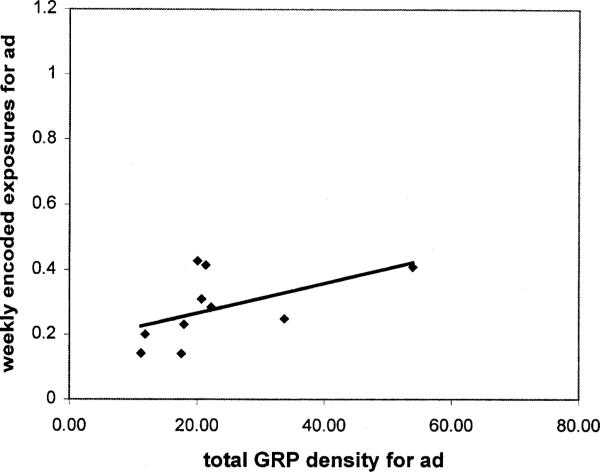

Figure 1 presents strong evidence that this relationship exists among U.S. adolescents. The correlation between the NSPY estimate and weekly GRPs is substantial (r = 0.82) for the advertisements studied (n = 23). Figure 2 suggests that a relationship also exists among their parents, although the correlation for the parent advertisements studied (n = 10) is somewhat smaller (r = 0.53) than that demonstrated for youth.

FIGURE 1.

Relationship of encoded exposure measure and gross rating points among youth.

FIGURE 2.

Relationship of encoded exposure measure and gross rating points among parents.

Discussion

The encoded exposure measure employed in this study does not produce uniformly high scores, in contrast with some previous speculation about recognition tasks. Moreover, it does seem to tap memory of past engagement with actual media content rather than simply measuring phenomena such as social desirability. Furthermore, the measure bears a strong relationship to the environmental prevalence of a national TV campaign among a sample of U.S. adolescents and their parents. This last finding is particularly important. Not only does the result provide nomological validation evidence, but it also begins to suggest that the sheer environmental prevalence of a health promotion advertisement accounts for a substantial proportion of encoded exposure to that advertisement. This pattern underscores the importance of generating widespread availability for campaign messages as a simple but crucial step in campaign planning.

It is worth asking why the relationship between encoded exposure and GRPs was apparently weaker among parents. At least two answers seem appropriate. First, the GRP reports for parent advertisements are less precise as an indicator of the environmental prevalence for the group in question than are the GRP reports for youth advertisements because the parent advertisement GRPs are estimates for the U.S. population of all adults between 25 and 54 years old. Specific estimates for parents were not available. Second, parent television advertisements enjoyed fewer GRPs in general than youth television advertisements during the time period in question. None of the parent television advertisements included in this study garnered more than 55 GRPs per week on air, whereas some youth television advertisements garnered almost 80. As a result, the greater variation, and generally higher level, of youth television advertisement GRPs may have afforded a better opportunity to witness a relationship between external estimates and NSPY measures than was the case for parents.

In sum, we have good reason to believe that the aforementioned recognition task indicated encoded exposure. Certainly, the units of content in question in this study may not be representative of relatively longer units or other media; each advertisement appeared on television and was 60 seconds in length at most. Also, it is not clear whether the antidrug advertisements from this campaign are generally representative of other types of health promotion content. Nonetheless, public health program evaluators and health communication researchers should gain at least some confidence in the notion that encoded exposure is measurable using relatively simple, albeit relatively resource-demanding, techniques.

Conclusion

If we are willing to accept that the notion of encoded exposure, which involves the generation of at least a minimal memory trace, is a useful conceptualization of a variable that matters for campaign evaluators and practitioners, then the present findings suggest that a recognition-based task can provide a useful measurement among a national sample. Analysis of data from two independent data sources, the recent NSPY and independent advertisement purchase data, offered two pieces of evidence in this regard. Not surprising (but worth noting) is the fact that respondents reported recognition of advertisements that had actually aired much more often than they reported recognition of bogus advertisements that had not yet aired. More importantly, GRP data correlated strikingly with average encoded exposure for each advertisement, particularly among adolescents. These results, then, both confirm speculation about the role of information availability in determining what an intended audience ultimately encodes and suggest an efficient measure to include in evaluating electronic media efforts in this arena.

Acknowledgments

Data reported in this paper are the result of work funded by the National Institute on Drug Abuse (contract number N01DA-8-5063) through a primary contract with Westat, Inc., Rockville, Maryland, and a subcontract with the Annenberg School for Communication, University of Pennsylvania. We are grateful to Ogilvy & Mather of New York for providing the media time purchase data used in the analyses. David Maklan and Robert Hornik are coprincipal investigators for the project, Diane Cadell is project director, and David Judkins is director of statistics. The NIDA project officer is Susan David.

Footnotes

1. Youth and their parents were found by door-to-door screening of a scientifically selected sample of about 34,700 dwelling units for Wave 1 and a sample of 23,000 dwelling units for Wave 2. These dwelling units were spread across about 1,300 neighborhoods in Wave 1 and 800 neighborhoods in Wave 2 in 90 primary sampling units. The sample provided an efficient and nearly unbiased cross section of America's youth and their parents. Youth living in institutions, group homes, and dormitories were excluded. Parents were defined to include natural parents, adoptive parents, and foster parents who lived in the same household as the sample youth. Stepparents also were treated the same as parents unless they had lived with the child for less than 6 months. When no parents were present, an adult caregiver usually was identified and interviewed in the same manner as actual parents. Interviewers achieved a response rate of approximately 65% for youth and approximately 63% for parents across waves.

2. If the number of eligible advertisements for an interview exceeded the maximum number of slots, a sample of the advertisements was shown and remaining eligible advertisements were assigned an imputed response using either hot deck methods or other procedures developed by Westat. Also, African Americans and bilingual Spanish/English speakers were shown additional campaign advertisements specifically intended for those audiences. Results reported here focus on general population advertisements.

3. All analyses also were conducted using version 9.0 of the SPSS package, which does not accommodate replicate weights, for comparison purposes. The same substantive story emerged in both WesVar and SPSS results.

4. Final estimates are adjusted for nonresponse and for differences with known population characteristics. Estimates also are reported in the second semiannual evaluation report for the National Youth Anti-Drug Media Campaign (Hornik et al., 2001), where the procedures for estimating total frequencies of encoded exposure are described fully.

Contributor Information

BRIAN G. SOUTHWELL, School of Journalism and Mass Communication, University of Minnesota, Minneapolis, Minnesota, USA

CARLIN HENRY BARMADA, Annenberg School for Communication, University of Pennsylvania, Philadelphia, Pennsylvania, USA

ROBERT C. HORNIK, Annenberg School for Communication, University of Pennsylvania, Philadelphia, Pennsylvania, USA

DAVID M. MAKLAN, Substance Abuse Research Group, Westat, Inc., Rockville, Maryland, USA

References

- Clarke P, Kline FG. Media effects reconsidered: Some new strategies for communication research. Communication Research. 1974;1:224–240.

- du Plessis E. Recognition versus recall. Journal of Advertising Research. 1994;34(3):75–91.

- Farris PW, Parry ME. Clarifying some ambiguities regarding GRP and average frequency. Journal of Advertising Research. 1991;31(6):75–77.

- Hornik R. Public health education and communication as policy instruments for bringing about changes in behavior. In: Goldberg ME, Fishbein M, Middlestadt SE, editors. Social marketing: Theoretical and practical perspectives. Lawrence Erlbaum Associates; Mahwah, NJ: 1997. pp. 45–58.

- Hornik R, Maklan D, Cadell D, Judkins D, Sayeed S, Zador P, Southwell B, Appleyard J, Hennessy M, Morin C, Steele D. Evaluation of the National Youth Anti-Drug Media Campaign: Campaign exposure and baseline measurement of correlates of illicit drug use from November 1999 through May 2000. National Institute on Drug Abuse; Bethesda, MD: 2000.

- Hornik R, Maklan D, Judkins D, Cadell D, Yanovitzky I, Zador P, Southwell B, Mak K, Das B, Prado A, Barmada C, Jacobsohn L, Morin C, Steele D, Baskin R, Zanutto E. Evaluation of the National Youth Anti-Drug Media Campaign: Second semi-annual report of findings. National Institute on Drug Abuse; Bethesda, MD: 2001.

- Kline FG. Time in communication research. In: Hirsch PM, et al., editors. Strategies for communication research. Sage; Beverly Hills, CA: 1977.

- Lang A. Defining audio/video redundancy from a limited-capacity information processing perspective. Communication Research. 1995;22(1):86–115.

- Salmon CT. Message discrimination and the information environment. Communication Research. 1986;13(3):363–372.

- Shoemaker PJ, Schooler C, Danielson WA. Involvement with the media: Recall versus recognition of election information. Communication Research. 1989;16(1):78–103.

- Singh SN, Rothschild ML. Recognition as a measure of learning from television commercials. Journal of Marketing Research. 1983;20:235–248.

- Singh SN, Rothschild ML, Churchill GA. Recognition versus recall as measures of television commercial forgetting. Journal of Marketing Research. 1988;25:72–80.

- Stapel J. Recall and recognition: A very close relationship. Journal of Advertising Research. 1998;38(4):41–45.

- Sudman S, Bradburn NM. Asking questions: A practical guide to questionnaire design. Jossey-Bass Publishers; San Francisco: 1982.

- Zinkhan GM, Locander WB, Leigh JH. Dimensional relationships of aided recall and recognition. Journal of Advertising. 1986;15(1):38–46.