Abstract

Objective

To determine if unaided, non-linguistic psychoacoustic measures can be effective in evaluating cochlear implant (CI) candidacy.

Study Design

Prospective split-cohort study including a predictor development subgroup and an independent predictor validation subgroup.

Setting

Tertiary referral center.

Subjects

Fifteen subjects (28 ears) with hearing loss were recruited from patients visiting the University of Washington Medical Center for CI evaluation.

Methods

Spectral-ripple discrimination (using a 13-dB modulation depth) and temporal modulation detection using 10- and 100-Hz modulation frequencies were assessed with stimuli presented through insert earphones. Correlations between performance for psychoacoustic tasks and speech perception tasks were assessed. Receiver operating characteristic (ROC) curve analysis was performed to estimate the optimal psychoacoustic score for CI candidacy evaluation in the development subgroup and then tested in an independent sample.

Results

Strong correlations were observed between spectral-ripple thresholds and both aided sentence recognition and unaided word recognition. Weaker relationships were found between temporal modulation detection and speech tests. ROC curve analysis demonstrated that unaided spectral-ripple discrimination showed good sensitivity, specificity, positive predictive value, and negative predictive value compared with the current gold standard, aided sentence recognition.

Conclusions

Results demonstrated that the unaided spectral-ripple discrimination test could be a promising tool for evaluating CI candidacy.

Keywords: cochlear implant candidacy, unaided non-linguistic measures

Introduction

The preoperative evaluation is the first step towards receiving a cochlear implant (CI) for individuals with significant hearing loss. To determine CI candidacy, postlingually deafened adults typically receive medical evaluation, cochlear imaging, audiological evaluation, hearing aid (HA) evaluation, and sometimes psychological evaluation (1). In the past, patients with a pure tone average (PTA) greater than 90 dB HL for both ears were considered to be candidates for CIs. This criterion has changed substantially with advances in CI technology, but the PTA is not an optimal criterion for CI candidacy because pure tone thresholds are not necessarily linked to speech discrimination abilities using a HA. Therefore, aided speech perception testing is currently the gold standard to determine the limits of HA benefit. The criterion level for speech perception tests for CI candidacy evaluation varies across countries, as well as across individual patients’ medical insurance plans. For example, in Korea, cochlear implantation can be covered for patients with a PTA (500, 1000, and 2000 Hz) greater than 70 dB HL as well as a sentence recognition score less than 50% in the best aided condition. In the United States, Medicare covers cochlear implantation for individuals with sentence recognition scores of less than 40% in the best aided condition (2). Other insurers typically follow the FDA guidelines for the Nucleus devices, which approve cochlear implantation for patients who show limited benefit from appropriately fit HAs, defined as a sentence recognition score less than 50% in the ear to be implanted and less than 60% on the contralateral side. AzBio sentence materials are now frequently used as an alternative to HINT to evaluate patients’ aided sentence recognition abilities (3,4).

Aided speech perception testing, however, has several limitations. First, it requires a significant resource investment to ensure a “best-fit” aided condition. Second, many clinics do not have speech perception materials in languages other than the local native language. Consequently, there is a significant bias towards underestimating speech recognition ability for patients whose native language differs from that of the speech materials available in the clinic. Third, it is not possible to create an international or cross-language standard for CI candidacy with speech perception tests, because cross-language variability makes global comparisons of speech discrimination suspect. Fourth, because the number of sentence lists is limited, a learning effect could occur for patients who return to the clinic for repeated CI candidacy evaluations.

The goal of the present study was to explore the possibility of using surrogate non-speech psychoacoustic measures without a HA. Two psychoacoustic measures were chosen to evaluate each subject’s spectral and temporal modulation processing: spectral ripple discrimination (SRD) and temporal modulation detection (TMD), respectively. Both tests were shown to correlate significantly with speech perception abilities in CI users (5–7). For example, significant correlations were found between SRD and vowel/consonant identification in quiet (5) and spondee word recognition in babble or steady background noise (6). A significant correlation was also found between SRD and consonant/vowel identification for patients with mild to profound hearing loss (5). Moreover, more than 50% of variance in speech perception in noise by CI users can be accounted for by SRD and TMD (7).

To determine if an unaided, non-linguistic, psychoacoustic test is a viable option for CI candidacy evaluation, a battery of auditory tasks was administered to 15 candidates for CIs (28 ears in total, each tested individually). Performance on the psychoacoustic tests was compared with performance on the standard clinical tests using correlation and signal detection analyses.

Materials and Methods

Subjects

Fifteen patients (10 females) participated. They were recruited from patients who visited the University of Washington Medical Center for CI evaluation. All subjects were native speakers of American English. Subject demographic information is shown in Table 1. Audiometric thresholds are shown in Table 2. This study was approved by the University of Washington IRB.

Table 1.

Subject demographic information.

| Subject | Sex | Age (yrs) | Duration of hearing loss (yrs) | Etiology | Hearing aid experience (yrs) | Cochlear implantation |
|---|---|---|---|---|---|---|
| S111 | F | 43 | 29 | Autoimmune-mediated hearing loss | 0 | Both ears |
| S112 | M | 77 | 26 | Noise-induced progressive hearing loss | 26 | Left ear only |
| S113 | F | 84 | 24 | Unknown | 22 | None |
| S114 | M | 50 | 46 | Neonatal mumps | 45 | Left ear only |
| S115(a) | F | 46 | 1.5 | Idiopathic sudden sensorineural hearing loss (single-sided deafness) | 0 | Left ear only |
| S116 | F | 55 | 43 | Unknown | 31 | None |
| S118 | F | 66 | Left: 4; Right: 2 months | Left: Meniere’s disease; Right: Idiopathic sudden sensorineural hearing loss | 0 | None |
| S119 | M | 71 | 28 | Unknown | 24 | Right ear only |
| S120 | F | 70 | 66 | Childhood measles | 52 | Right ear only |
| S121(b) | F | 54 | 50 | Encephalopathy | 0 | None |
| S122(c) | F | 19 | 17 | Encephalopathy | 7 | None |
| S123 | M | 57 | 29 | Otosclerosis | 29 | None |
| S124 | M | 73 | 30 | Unknown | 12 | Left ear only |
| S125 | F | 40 | 40 | Unknown | 39 | To be implanted |
| S126 | F | 65 | 3 | Idiopathic sudden sensorineural hearing loss | 0.3 | None |

(a) S115 has single-sided deafness and received a CI on the left ear. Her right ear shows normal audiometric function. See Table 2 for details.

(b) S121 has been unsuccessful in wearing hearing aids. She last tried them about 7 years ago, but she felt that wearing hearing aids made her understanding of speech poorer.

(c) S122 has auditory neuropathy spectrum disorder. She was initially fit with binaural hearing aids at age 9, but she eventually stopped wearing the right hearing aid because "it didn't make the speech clearer."

Table 2.

Air conduction audiometric thresholds for the test ears are shown in dB HL.

| Subject | Ear | 125 Hz | 250 Hz | 500 Hz | 750 Hz | 1000 Hz | 1500 Hz | 2000 Hz | 3000 Hz | 4000 Hz | 6000 Hz | 8000 Hz |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| S111 | Left | 85 | 100 | 100 | 105 | 100 | 95 | 90 | 80 | 85 | 115 | 105 |
| S111 | Right | 75 | 80 | 90 | 80 | 80 | 80 | 70 | 70 | 90 | 95 | 90 |
| S112 | Right | 35 | 45 | 65 | 70 | 70 | 95 | 115 | 100 | 115 | 115 | 105 |
| S113 | Left | 60 | 60 | 60 | 60 | 65 | 75 | 75 | 60 | 75 | 70 | 90 |
| S113 | Right | 70 | 65 | 65 | 70 | 75 | 75 | 65 | 65 | 95 | 90 | |
| S114 | Left | 40 | 65 | 100 | 100 | 110 | 115 | 115 | 120 | 120 | 115 | 105 |
| S114 | Right | 40 | 60 | 95 | 100 | 110 | 120 | 120 | 120 | 120 | 115 | 105 |
| S115 | Right | 60 | 65 | 85 | 105 | 110 | 110 | 105 | 110 | 90 | | |
| S116 | Left | 20 | 35 | 50 | 55 | 55 | 70 | 70 | 65 | 80 | 115 | 95 |
| S116 | Right | 15 | 25 | 45 | 55 | 55 | 70 | 70 | 70 | 85 | 115 | 105 |
| S118 | Left | 75 | 65 | 70 | 60 | 50 | 55 | 55 | 60 | | | |
| S118 | Right | 40 | 60 | 60 | 70 | 90 | 115 | 90 | 95 | 100 | | |
| S119 | Left | 20 | 35 | 50 | 55 | 65 | 80 | 70 | 80 | 85 | 90 | 100 |
| S119 | Right | 30 | 35 | 65 | 80 | 100 | 120 | 120 | 120 | 120 | 115 | 105 |
| S120 | Left | 65 | 70 | 95 | 100 | 100 | 90 | 90 | 85 | 85 | 80 | 80 |
| S120 | Right | 60 | 75 | 105 | 110 | 115 | 115 | 115 | 115 | 115 | 105 | 90 |
| S121 | Left | 85 | 95 | 90 | 95 | 90 | 85 | 70 | 55 | 60 | 45 | 60 |
| S121 | Right | 80 | 80 | 80 | 80 | 75 | 65 | 35 | 40 | 40 | 35 | 40 |
| S122 | Left | 50 | 55 | 30 | 35 | 25 | 30 | 25 | 25 | 10 | 20 | |
| S122 | Right | 70 | 75 | 70 | 65 | 40 | 30 | 35 | 75 | 70 | 30 | |
| S123 | Left | 85 | 80 | 80 | 90 | 100 | 105 | 105 | 90 | | | |
| S123 | Right | 40 | 50 | 55 | 70 | 80 | 80 | 85 | | | | |
| S124 | Left | 80 | 75 | 65 | 70 | 70 | 75 | 70 | 90 | | | |
| S124 | Right | 80 | 90 | 80 | 70 | 75 | 75 | 75 | 80 | 85 | | |
| S125 | Left | 80 | 80 | 95 | 95 | 95 | 105 | 105 | 115 | 120 | 105 | |
| S125 | Right | 90 | 90 | 95 | 95 | 95 | 100 | 105 | 105 | 120 | 110 | |
| S126 | Left | 70 | 75 | 75 | 75 | 70 | 75 | 95 | 90 | | | |
| S126 | Right | 70 | 70 | 65 | 55 | 45 | 80 | 95 | 90 | | | |

Procedure

Five tasks were administered for each subject and for each ear: (1) pure tone audiometry, (2) unaided word recognition using the W-22 monosyllabic lists, (3) aided sentence recognition using the AzBio sentences, (4) assessment of unaided spectral modulation sensitivity using SRD, and (5) assessment of unaided temporal modulation sensitivity using TMD. The first three tests were conducted in the audiology clinic at the University of Washington Medical Center, as part of the standard clinical CI evaluation. The two psychoacoustic tasks were done in the research laboratory at the Virginia Merrill Bloedel Hearing Research Center. Subjects were instructed on the procedures for the two psychoacoustic tasks either verbally or using written notes (see Appendix 1).

Pure-tone audiometry

Standard pure-tone audiometry was performed by clinically certified audiologists across the frequency range of 125 to 8,000 Hz using an audiometer (Grason-Stadler, Model 61) with an insert earphone (Etymotic, ER-3A). A modified Hughson-Westlake technique was used to measure pure-tone thresholds.

Unaided W-22 monosyllabic word recognition in quiet

Stimuli for the W-22 test (8) were played on a compact disc (CD) player, and the output was routed to the audiometer. The output of the audiometer was directed to an ER-3A earphone. The stimuli were presented at a supra-threshold level, generally 40 dB SL or at most comfortable level (MCL) if loudness recruitment issues precluded a higher level. Twenty-five words were presented from a word list randomly chosen for each subject. Subjects were instructed to repeat the word that they heard. A total percent-correct score was calculated based on words correctly repeated.

Aided AzBio sentence recognition in quiet

To evaluate the functional use of subjects’ current HAs, sentence recognition was measured using AzBio sentences (3,4). Sentences were played from a CD routed through the audiometer and presented in the sound field. During testing, subjects used their own HAs or loaner HAs if they did not have their own. No background noise was used. One list of 20 sentences was used for each subject. Sentences were presented at 60 dBA. Subjects were instructed to repeat the sentence that they heard. Each sentence was scored as the total number of words correctly repeated. A mean percent correct score across 20 sentences was calculated.

To ensure patients were properly fit with amplification for the CI evaluation process, patients’ own HAs were evaluated using an AudioScan Verifit HA analyzer. The Verifit was used to analyze electroacoustic characteristics such as frequency response, gain, and output of the HA. If HAs did not meet anticipated targets such as those defined by the NAL-NL2 prescriptive method, or were obviously under-amplifying as demonstrated by functional gain testing in the sound field, clinic-owned HAs were used. The clinic used Phonak Naida S V UP BTE HAs programmed via Noah-3 using Phonak Target 3.1 software with the NAL-NL2 prescriptive formula. The programmed HAs were then analyzed using the AudioScan Verifit test box to verify appropriate responses to speech at three different input levels (55, 65, and 75 dB SPL) and maximum power output. Once programmed and verified, HAs were coupled either to the patient’s own earmolds or to Comply canal tips and tubes for the evaluation process.

Spectral-ripple discrimination (SRD)

Stimuli were generated using MATLAB with a sampling frequency of 44,100 Hz. The following equation was used (6,7):

| (Eq. 1) |

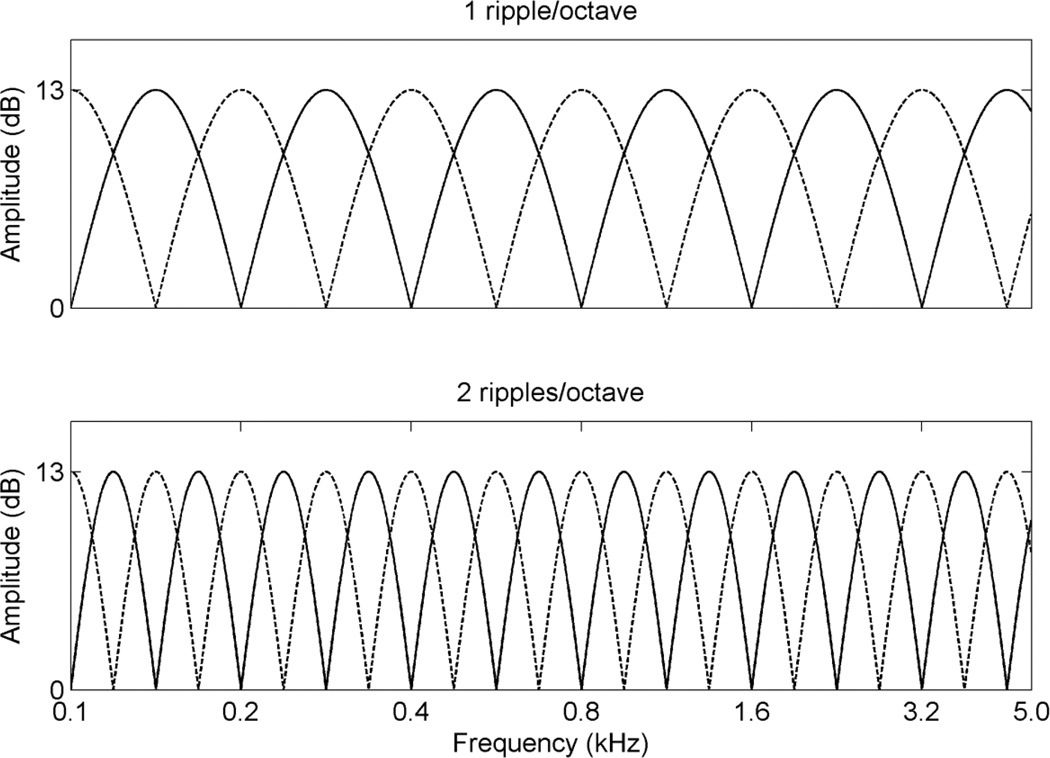

in which D is ripple depth in dB, R is ripples/octave (rpo), Fi is the number of octaves at the i-th component frequency, ∅ is the spectral modulation starting phase in radians, t is time in seconds, and the φi are the randomized temporal phases in radians for pure tones. The ripple depth (D) of 13 dB was chosen because it was desirable to present the full spectral modulation depth within subjects’ dynamic range (i.e., between threshold and MCL). The tones were spaced equally on a logarithmic frequency scale with a bandwidth of 100–4,991 Hz. For the reference stimulus, the spectral modulation starting phase of the full-wave rectified sinusoidal spectral envelope was set to zero radians, and for the “oddball” stimulus, the phase was set to π/2 radians. Summing 2,555 tones ensured a clear representation of spectral peaks and valleys for stimuli with higher ripple densities. The stimuli had a total duration of 500 ms and were ramped with 150 ms rise/fall times. Figure 1 shows the amplitude spectra for rippled noise with 1 and 2 rpo.
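As a rough illustration of the stimulus construction described above, the following Python sketch computes the component frequencies and amplitudes of one rippled-noise token and sums the tones. It is a minimal sketch under stated assumptions, not the MATLAB code used in the study: the function names, the exact amplitude term (taken here as 10^(D·|sin(πRFi + ∅)|/20)), the use of 100 Hz as the base frequency for Fi, the linear ramp shape, and the uniformly distributed random phases are all assumptions made for illustration.

```python
import math
import random


def ripple_components(ripples_per_octave, phase, depth_db=13.0,
                      f_low=100.0, f_high=4991.0, n_tones=2555):
    """Return (frequency_hz, linear_amplitude) pairs for one rippled-noise token.

    Assumed envelope: full-wave rectified sinusoid on a log-frequency axis,
    scaled so that the peak-to-valley depth is depth_db.
    """
    n_octaves = math.log2(f_high / f_low)
    components = []
    for i in range(n_tones):
        octave = n_octaves * i / (n_tones - 1)      # F_i: octaves above f_low
        freq = f_low * 2.0 ** octave                # log-spaced tone frequency
        envelope = abs(math.sin(math.pi * ripples_per_octave * octave + phase))
        amplitude = 10.0 ** (depth_db * envelope / 20.0)
        components.append((freq, amplitude))
    return components


def synthesize(components, duration_s=0.5, fs=44100, ramp_s=0.15, seed=None):
    """Sum the tones with random starting phases and apply linear on/off ramps.

    The linear ramp shape is an assumption; the text gives only the ramp time.
    Pure Python is slow for the full 2,555-tone stimulus.
    """
    rng = random.Random(seed)
    phases = [rng.uniform(0.0, 2.0 * math.pi) for _ in components]
    n_samples = int(round(duration_s * fs))
    n_ramp = int(round(ramp_s * fs))
    samples = []
    for n in range(n_samples):
        t = n / fs
        value = sum(a * math.sin(2.0 * math.pi * f * t + p)
                    for (f, a), p in zip(components, phases))
        gate = min(1.0, n / n_ramp, (n_samples - 1 - n) / n_ramp) if n_ramp else 1.0
        samples.append(gate * value)
    return samples


# Reference token (starting phase 0) and "oddball" token (starting phase pi/2) at 1 rpo.
reference = ripple_components(1.0, 0.0)
oddball = ripple_components(1.0, math.pi / 2.0)
```

Under these assumptions the reference and oddball tokens share the same tone frequencies and differ only in where the spectral peaks fall, which is the cue the listener must detect.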

Figure 1.

Amplitude spectra of stimuli for spectral ripple discrimination. The solid lines show the spectra for the reference stimuli and the dotted lines show the spectra for the test stimuli.

Threshold and MCL for each ear were measured using rippled noise. The rippled noise, generated by a computer, was routed through an audiometer and presented monaurally to the test ear via an insert earphone. The experimenter used standard audiometric techniques (American Speech-Language-Hearing Association, 2005) with the audiometer to determine threshold and MCL for the rippled noise. Thresholds and MCLs for all tested ears are listed in Table 3.

Table 3.

Thresholds and MCLs measured for spectral ripple discrimination and temporal modulation detection tests are shown in dB HL.

| Subject ID (ear) | Spectral ripple discrimination: threshold | Spectral ripple discrimination: MCL | Temporal modulation detection: threshold | Temporal modulation detection: MCL |
|---|---|---|---|---|
| S111(L) | 85 | 115 | 85 | 115 |
| S111(R) | 70 | 85 | 75 | 85 |
| S112(R) | 60 | 105 | 70 | 110 |
| S113(L) | 65 | 100 | 75 | 95 |
| S113(R) | 60 | 90 | 65 | 100 |
| S114(L) | 75 | 115 | 85 | 110 |
| S114(R) | 70 | 110 | 85 | 110 |
| S115(R)* | 75 | 83 | 80 | 95 |
| S116(L) | 45 | 60 | 55 | 70 |
| S116(R) | 40 | 65 | 55 | 70 |
| S118(L) | 55 | 70 | 55 | 75 |
| S118(R) | 65 | 85 | 70 | 90 |
| S119(L) | 50 | 85 | 65 | 90 |
| S119(R) | 50 | 80 | 65 | 95 |
| S120(L) | 75 | 110 | 85 | 115 |
| S120(R) | 80 | 110 | 95 | 115 |
| S121(L) | 40 | 85 | 40 | 95 |
| S121(R) | 50 | 95 | 65 | 85 |
| S122(L) | 25 | 45 | 25 | 45 |
| S122(R) | 45 | 70 | 35 | 55 |
| S123(L) | 80 | 105 | 85 | 110 |
| S123(R) | 55 | 90 | 65 | 95 |
| S124(L) | 60 | 80 | 65 | 80 |
| S124(R) | 65 | 80 | 70 | 85 |
| S125(L) | 80 | 105 | 85 | 115 |
| S125(R) | 80 | 105 | 90 | 115 |
| S126(L) | 80 | 105 | 85 | 115 |
| S126(R) | 80 | 105 | 90 | 115 |

* Due to the normal hearing on the left ear (single-sided deafness), masking noise was presented when measuring thresholds and MCLs for the right ear.

Before actual testing, subjects listened to the stimuli several times with the experimenter to ensure that they were familiar with the task. During testing, stimuli were presented at the measured MCL. A three-interval, three-alternative paradigm was used to determine the threshold. Three rippled noise tokens, two reference stimuli and one “oddball” stimulus, were presented for each trial. Ripple density was varied adaptively in equal-ratio steps of 1.414 in an adaptive 2-up, 1-down procedure. The subject’s task was to identify the interval that sounded different. Feedback was not provided. A level rove of 8 dB was used with a 1 dB step size to limit listeners’ ability to use level cues. The threshold for a single adaptive track was estimated by averaging the ripple density for the final 8 of 13 reversals. Higher spectral-ripple thresholds indicate better discrimination performance. For each ear, three adaptive tracks were completed and the final threshold was the mean of these three adaptive tracks. The entire procedure (including the MCL measurement and actual testing) took about 20 minutes to complete.
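The adaptive rule described above can be sketched as follows. This is a minimal illustration, assuming that "2-up, 1-down" means ripple density is multiplied by 1.414 after two consecutive correct responses and divided by 1.414 after any incorrect response. The starting density, the `max_trials` guard, the function name, and the deterministic simulated listener are hypothetical and are not taken from the study.

```python
def run_ripple_track(respond, start_density=0.125, step=1.414,
                     n_reversals=13, n_average=8, max_trials=1000):
    """Run one adaptive track and return the threshold in ripples/octave.

    respond(density) must return True for a correct response.
    start_density and max_trials are hypothetical values for illustration.
    """
    density = start_density
    correct_in_row = 0
    last_direction = 0          # +1 = density went up, -1 = density went down
    reversals = []
    for _ in range(max_trials):
        if respond(density):
            correct_in_row += 1
            if correct_in_row < 2:
                continue        # need two correct in a row before moving up
            direction = +1
        else:
            direction = -1
        correct_in_row = 0
        if last_direction and direction != last_direction:
            reversals.append(density)       # track changed direction here
            if len(reversals) == n_reversals:
                tail = reversals[-n_average:]
                return sum(tail) / len(tail)    # mean of the final reversals
        last_direction = direction
        density = density * step if direction > 0 else density / step
    raise RuntimeError("track did not reach the requested number of reversals")


# Hypothetical listener who is always correct up to 1.0 rpo and wrong above it.
threshold = run_ripple_track(lambda density: density <= 1.0)
```

With this idealized listener the track oscillates between the last density answered correctly and the next step above it, so the estimate falls between those two values. In the study, three such tracks were averaged for each ear.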

Temporal modulation detection

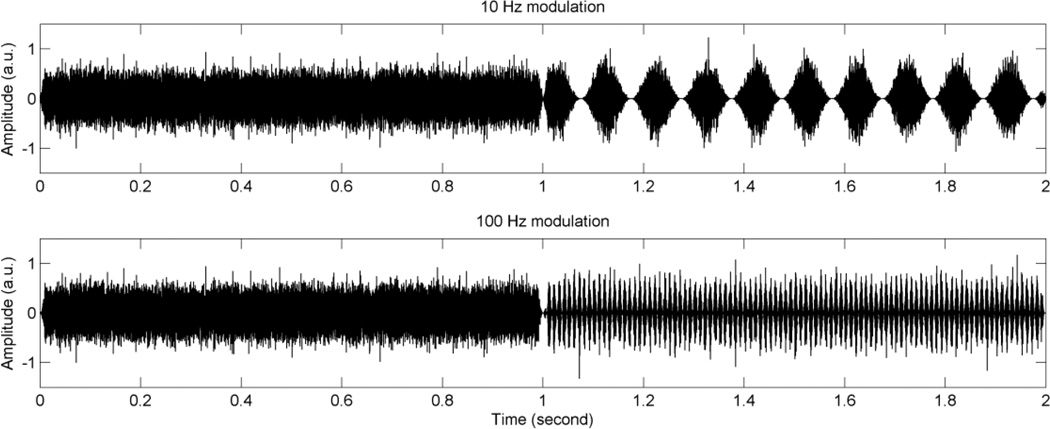

This test was administered as previously described by Won et al. (7). Stimuli were generated using MATLAB software with a sampling frequency of 44,100 Hz. For the modulated stimuli, sinusoidal amplitude modulation was applied to the wideband noise carrier. The stimulus duration for both modulated and unmodulated signals was 1 second. Modulated and unmodulated signals were gated on and off with 10 ms linear ramps, and they were concatenated with no gap between the two signals.

Before testing, threshold and MCL were measured using an unmodulated wideband noise. During actual testing, stimuli were presented at the measured MCL. Modulation detection thresholds (MDTs) were measured using a 2-interval, 2-alternative adaptive forced-choice paradigm. One of the intervals consisted of modulated noise, and the other interval consisted of steady noise. Subjects were instructed to identify the interval that contained the modulated noise. Modulation frequencies of 10 and 100 Hz were tested: the former represents a fairly slow rate of modulation, while the latter is a relatively fast rate. A 2-down, 1-up adaptive procedure was used to measure the modulation depth threshold, starting with a modulation depth of 100% and decreasing in steps of 4 dB from the first to the fourth reversal, and 2 dB for the next 10 reversals. Visual feedback with the correct answer was given after each presentation. For each tracking history, the final 10 reversals were averaged to obtain the MDT. MDTs in dB relative to 100% modulation (20log10(mi)) were obtained, where mi indicates the modulation index. Subjects completed the two modulation frequencies in random order, and then repeated the two modulation frequencies in a newly created random order. Three tracking histories were obtained to determine the average threshold for each modulation frequency. It took about 30 to 40 minutes to complete the test. Figure 2 shows the waveforms for the temporal modulation detection test.
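The dB convention for MDTs and the modulated stimulus can be illustrated with a short Python sketch. It is a sketch under assumptions rather than the study's MATLAB implementation: the function names are hypothetical, Gaussian noise stands in for the wideband carrier, the 10 ms gating ramps are omitted, and the step-size rule is one reading of the reversal schedule given in the text.

```python
import math
import random


def modulation_index_to_db(m):
    """MDT in dB relative to 100% modulation: 20*log10(m), so m = 1.0 gives 0 dB."""
    return 20.0 * math.log10(m)


def db_to_modulation_index(db):
    """Inverse of modulation_index_to_db."""
    return 10.0 ** (db / 20.0)


def step_size_db(reversals_so_far):
    """Assumed reading of the schedule: 4 dB until the fourth reversal, then 2 dB."""
    return 4.0 if reversals_so_far < 4 else 2.0


def sam_noise(m, fm_hz, duration_s=1.0, fs=44100, seed=None):
    """Sinusoidally amplitude-modulated noise: (1 + m*sin(2*pi*fm*t)) * carrier(t).

    Gaussian noise is used as the wideband carrier (an assumption).
    """
    rng = random.Random(seed)
    n_samples = int(round(duration_s * fs))
    return [(1.0 + m * math.sin(2.0 * math.pi * fm_hz * i / fs)) * rng.gauss(0.0, 1.0)
            for i in range(n_samples)]


# A 50% modulation depth corresponds to about -6 dB re 100% modulation.
half_depth_db = modulation_index_to_db(0.5)
```

Because MDTs are expressed relative to 100% modulation, more negative values indicate that smaller modulation depths were detected, that is, better temporal modulation sensitivity.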

Figure 2.

Waveforms for the temporal modulation detection test. In this example, modulated signals are presented in the second interval (between 1 and 2 seconds). Unmodulated wideband noise is presented in the first interval (between 0 and 1 second). The upper panel shows an example of 10 Hz modulation frequency, and the lower panel shows an example of 100 Hz modulation frequency. Modulation depth of 100% is used for this illustration.

Analysis

To estimate the optimal psychoacoustic criterion value for CI candidacy, an exploratory analysis (predictor development) was performed on the initial 20 ears using Pearson correlation analysis, linear regression modeling, and receiver operating characteristic (ROC) curve analysis (12). The predictive performance of the psychoacoustic tests was optimized by varying the test criteria systematically to maximize the area under the ROC curve for predicting CI candidacy. The derived optimal psychoacoustic criterion value was then used to make a prediction for an independent sample of 8 additional ears, and the kappa statistic of agreement was calculated. The initial 20 ears and the subsequent 8 ears were distinguished by the order in which subjects participated in the study.
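The ROC quantities used in this analysis can be illustrated with a small Python sketch. Lower SRD thresholds are treated as indicating candidacy, so an ear is flagged when its score falls below the cutoff. The function names and the example scores are hypothetical, and the cutoff is chosen here by maximizing Youden's index (sensitivity + specificity − 1), which is a common convention assumed for illustration and not necessarily the criterion applied in the study.

```python
def sensitivity_specificity(scores, is_candidate, cutoff):
    """Flag an ear as a candidate when its score is below the cutoff."""
    tp = fn = tn = fp = 0
    for score, candidate in zip(scores, is_candidate):
        flagged = score < cutoff
        if candidate and flagged:
            tp += 1
        elif candidate:
            fn += 1
        elif flagged:
            fp += 1
        else:
            tn += 1
    return tp / (tp + fn), tn / (tn + fp)


def area_under_roc(scores, is_candidate):
    """AUC as the probability that a candidate scores lower than a non-candidate."""
    pos = [s for s, c in zip(scores, is_candidate) if c]
    neg = [s for s, c in zip(scores, is_candidate) if not c]
    wins = sum((p < n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def best_cutoff(scores, is_candidate):
    """Pick the midpoint between adjacent scores that maximizes Youden's index."""
    values = sorted(set(scores))
    midpoints = [(a + b) / 2.0 for a, b in zip(values, values[1:])]

    def youden(cutoff):
        sens, spec = sensitivity_specificity(scores, is_candidate, cutoff)
        return sens + spec - 1.0

    return max(midpoints, key=youden)


# Hypothetical scores (rpo) and candidacy labels, for illustration only.
example_scores = [0.2, 0.3, 0.4, 0.6, 0.7, 0.9, 1.1, 1.5]
example_labels = [True, True, True, True, False, True, False, False]
example_auc = area_under_roc(example_scores, example_labels)
example_cutoff = best_cutoff(example_scores, example_labels)
```

The example data are invented to exercise the functions and do not correspond to any subject in this study.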

Results

1. Data analysis with the initial 20 ears

(a) Correlation and regression analyses

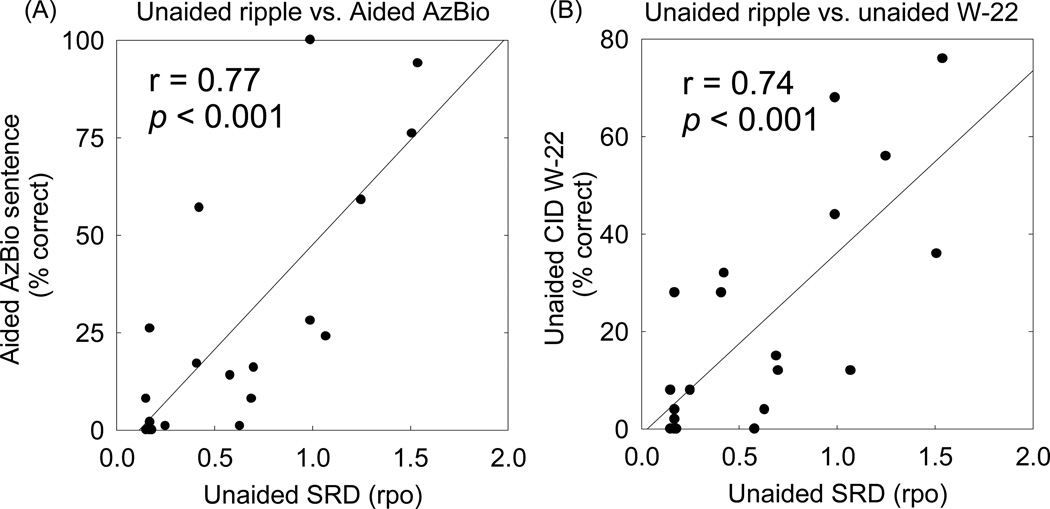

Significant correlations were found between unaided SRD and aided AzBio sentence recognition in quiet. Figure 3(A) shows the scattergram of unaided SRD thresholds and aided AzBio scores for the initial 20 ears (r = 0.77, p < 0.001). Figure 3(B) shows the scattergram of unaided SRD thresholds and unaided W-22 word scores (r = 0.74, p < 0.001).

Figure 3.

(A) Relationship between unaided spectral ripple discrimination (SRD) and aided AzBio sentence recognition in quiet. (B) Relationship between unaided SRD and unaided CID-W22 word recognition in quiet. Linear regressions are represented by the solid lines.

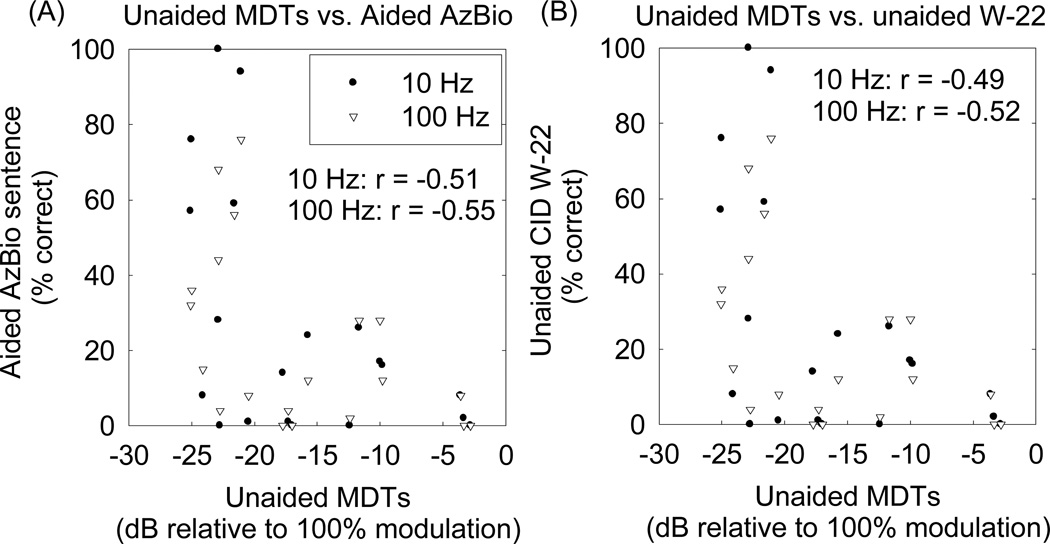

Relatively weaker correlations were found between unaided MDTs and performance on speech recognition tests. Figure 4 shows the scattergram of MDTs and aided AzBio sentence scores and unaided W-22 word scores.

Figure 4.

(A) Relationship between unaided modulation detection thresholds (MDTs) and aided AzBio sentence recognition in quiet. (B) Relationship between unaided MDTs and unaided CID-W22 word recognition in quiet. Pearson correlation coefficients are indicated in the figures. The p-values for these correlations were less than 0.05.

The correlation analyses above demonstrate that the SRD scores show the best predictive power for the aided AzBio sentence recognition scores. The following regression equation best modeled the relationship between the SRD and AzBio scores and was used to make predictions for the subsequent 8 ears.

| (Eq. 3) |

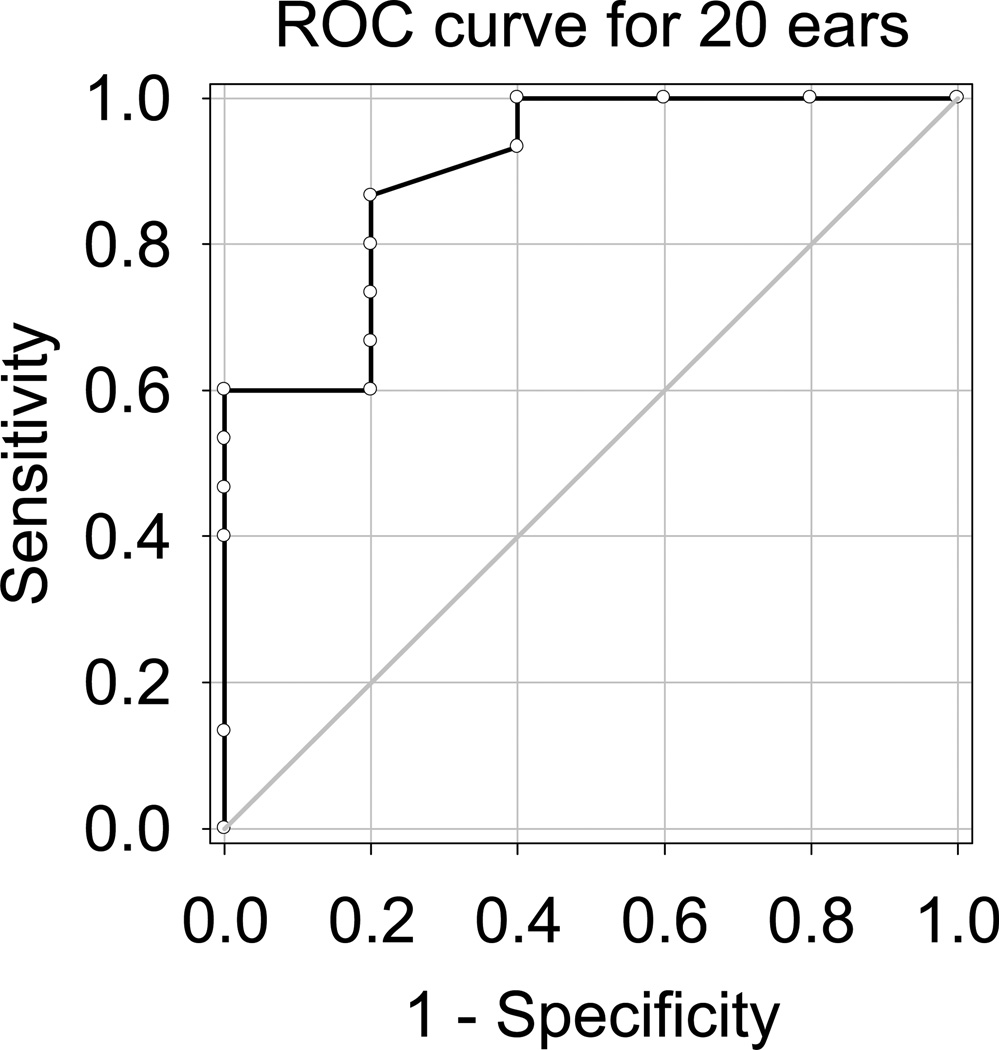

(b) ROC curve analysis

To further investigate the clinical potential of SRD, an ROC curve analysis was used to assess the quality of CI candidacy evaluation by the SRD test. Figure 5 shows the ROC curve for the initial 20 ears, plotting sensitivity as a function of (1 – specificity). The area under the ROC curve in Figure 5 was 0.90, with a 95% confidence interval of 0.16. The optimal cutoff value for SRD performance was 0.845 rpo, estimated following the method presented by Zweig et al. (12). Thus, subjects with SRD thresholds less than this cutoff value would be designated CI candidates; those with thresholds above this value would not be. At this SRD threshold, sensitivity was 0.87, specificity was 0.80, positive predictive value was 0.93, negative predictive value was 0.67, and the corresponding d′ value was 1.97.
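The reported d′ can be reproduced from the rounded sensitivity and specificity using the standard signal detection relation d′ = z(hit rate) − z(false-alarm rate), as sketched below in Python. The confusion-table counts in the example are hypothetical: they are not reported in the text and were chosen only because, for 20 ears, they reproduce the rounded sensitivity, specificity, and predictive values given above.

```python
from statistics import NormalDist


def d_prime(sensitivity, specificity):
    """d' = z(hit rate) - z(false-alarm rate), with false-alarm rate = 1 - specificity."""
    z = NormalDist().inv_cdf
    return z(sensitivity) - z(1.0 - specificity)


def predictive_values(tp, fn, fp, tn):
    """Sensitivity, specificity, PPV, and NPV from confusion-table counts."""
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
    }


# d' from the rounded values reported for the initial 20 ears.
d_prime_20_ears = d_prime(0.87, 0.80)

# Hypothetical counts (assumption, not reported in the source) for 20 ears.
example_table = predictive_values(tp=13, fn=2, fp=1, tn=4)
```

Rounded to two decimals, `d_prime_20_ears` is 1.97, matching the value reported above.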

Figure 5.

The ROC curve for the initial 20 ears is plotted for CI candidacy evaluation using spectral-ripple discrimination thresholds. Area under the curve was 0.90. An optimal cutoff value was 0.845 rpo, and at this cutoff value, the corresponding d′ was 1.97.

2. Candidacy prediction for 8 additional ears

Using the regression analysis (Eq. 3) and the ROC curve analysis, a CI candidacy prediction was made for 8 additional ears. Table 4 shows the prediction outcomes. For all 8 ears, the CI candidacy prediction made by SRD scores was identical to the clinical evaluation (kappa 1.0), suggesting that the unaided SRD test is a viable method for CI candidacy evaluation. The sensitivity, specificity, positive predictive value, and negative predictive value were each 1.0 (due to perfect agreement) in this small independent sample.

Table 4.

Candidacy prediction for 8 separate ears based on the data collected for the initial 20 ears

| Subject (ear) | SRD (rpo) | SRD relative to cutoff | Predicted aided AzBio† | Candidacy prediction‡ | Clinical aided AzBio | Clinical candidacy |
|---|---|---|---|---|---|---|
| S123 (L) | 1.2 | > 0.845* | 58% | No | N/Aǂ | No |
| S123 (R) | 1.58 | > 0.845* | 79% | No | 92% | No |
| S124 (L) | 0.76 | < 0.845* | 35% | Yes | 1% | Yes |
| S124 (R) | 0.43 | < 0.845* | 17% | Yes | 16% | Yes |
| S125 (L) | 0.62 | < 0.845* | 27% | Yes | 36% | Yes |
| S125 (R) | 0.28 | < 0.845* | 9% | Yes | 7% | Yes |
| S126 (L) | 0.29 | < 0.845* | 10% | Yes | 11% | Yes |
| S126 (R) | 0.33 | < 0.845* | 12% | Yes | 9% | Yes |

* The optimal cutoff estimated using the ROC curve.

† AzBio scores were predicted using Eq. 3.

‡ When the predicted AzBio scores or the optimal SRD cutoff value was used, the same prediction was shown.

ǂ S123 did not complete the AzBio sentence test for his right ear due to participation schedule constraints.
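The kappa statistic for the validation ears can be checked with a short Python sketch. The SRD thresholds are those listed in Table 4 and the cutoff is the 0.845 rpo value derived from the initial 20 ears. The function name is hypothetical, and applying each subject's clinical candidacy decision to both of that subject's ears is an assumption made for illustration.

```python
def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two equal-length lists of categorical decisions."""
    n = len(rater_a)
    labels = set(rater_a) | set(rater_b)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    expected = sum((rater_a.count(label) / n) * (rater_b.count(label) / n)
                   for label in labels)
    # Undefined (division by zero) if both raters use a single identical label.
    return (observed - expected) / (1.0 - expected)


# SRD thresholds (rpo) for the 8 validation ears, in the order listed in Table 4.
srd_rpo = [1.2, 1.58, 0.76, 0.43, 0.62, 0.28, 0.29, 0.33]
cutoff_rpo = 0.845

predicted_candidate = [srd < cutoff_rpo for srd in srd_rpo]
# Clinical decision per subject, applied to both ears (assumption).
clinical_candidate = [False, False, True, True, True, True, True, True]

kappa = cohens_kappa(predicted_candidate, clinical_candidate)
```

With complete agreement between the two lists, kappa evaluates to 1.0, consistent with the value reported for this sample.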

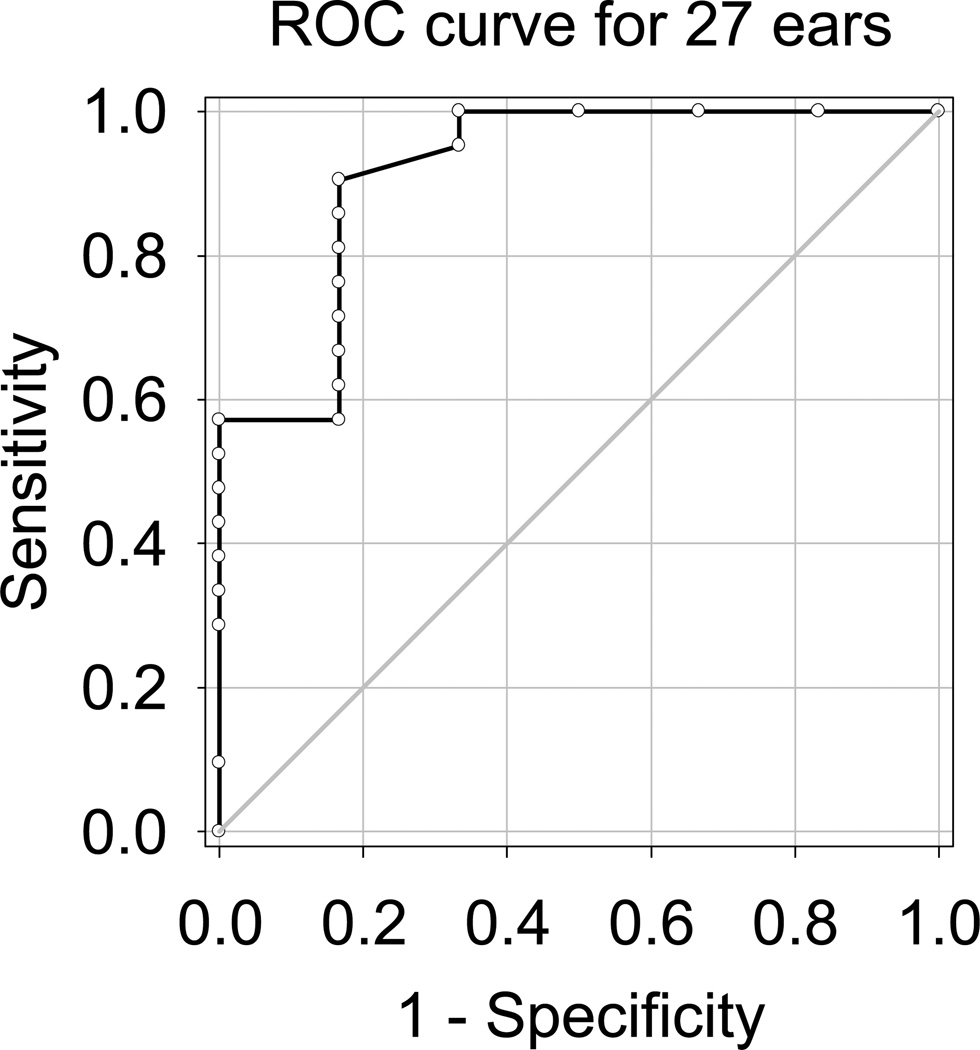

3. Re-analysis with 27 ears

Using all 27 ears with AzBio scores, the correlation and ROC analyses were repeated to examine the results in a larger sample (see Footnotes). An AzBio score for one ear was missing because the subject did not bring his HA for clinical testing. Figure 6 shows the ROC curve for 27 ears. The area under the curve is 0.92, with a 95% confidence interval of 0.14. The optimal cutoff value using this larger data set was 0.875 rpo. At this SRD threshold, the corresponding sensitivity value was 0.90 and specificity was 0.83, and the corresponding d′ value was 2.24. The correlation coefficients of SRD with aided AzBio sentence recognition and unaided W-22 word recognition were 0.80 and 0.76, respectively (p < 0.001 for both correlations).

Figure 6.

The ROC curve for all 27 ears is plotted for CI candidacy evaluation using spectral-ripple discrimination thresholds. Area under the curve was 0.92. An optimal cutoff value was 0.875 rpo, and at this cutoff value, the corresponding d′ was 2.24.

Discussion

The primary goal of the present study was to determine if unaided non-linguistic tests could serve as surrogate measures for CI candidacy evaluation. SRD and TMD were evaluated in potential CI candidates. These psychoacoustic tests were administered without the use of HAs. The same group of patients was evaluated for CI candidacy using standard clinical procedures. Unaided SRD showed a strong correlation with aided AzBio scores. The ROC curve analysis demonstrated that unaided SRD performance mirrors the gold standard best-aided sentence recognition score, with a derived area under the ROC curve of 0.90 and corresponding d′ value of 1.97. Our application of this derived SRD criterion in a small independent sample suggests that this candidacy predictor may be a promising tool for determining CI candidacy; however, this SRD criterion should be validated in a larger independent sample in order to measure accurately the sensitivity, specificity, positive predictive value, and negative predictive value.

The sound stimuli were presented via an insert earphone for the SRD and TMD tests, while the aided AzBio sentences were presented at 60 dBA in the sound field. An insert earphone was used for the SRD and TMD tests to avoid the need to mask the contralateral ear, since insert phones afford substantial interaural attenuation. Prior to the SRD test, each subject’s threshold and MCL were measured to find an appropriate presentation level, audible but not uncomfortably loud, to perform the task. In addition, the dynamic range for each ear was measured to attempt to verify that the spectral modulation depth of 13 dB could be fully represented within the subject’s dynamic range. All subjects showed a dynamic range greater than 13 dB. However, it is possible that some patients could potentially present with a dynamic range less than 13 dB. To avoid this issue, an alternative method of assessing spectral modulation sensitivity, spectral ripple detection (13,14), could be employed. In this test, the ripple modulation rate is held constant and the depth of spectral modulation is varied adaptively. The minimum modulation depth of a rippled noise that can be discriminated from an unmodulated noise at a given ripple modulation frequency is termed the spectral ripple detection threshold. Using this paradigm, previous studies demonstrated a strong predictive power for speech recognition in CI users (13,14).

A potential confounding cue for the TMD test is vibrotactile sensation for the amplitude-modulated signal. Weisenberger et al. (15) evaluated temporal modulation detection sensitivity for vibrotactile sensations produced by broadband noise carriers. They demonstrated that MDTs were −6 and −2 dB for 10 and 100 Hz modulation frequencies, respectively. These performance levels are far worse than the results found from the present study, where subjects showed mean MDTs of −16.9 and −8.5 dB for 10 and 100 Hz modulation frequencies, respectively. Moreover, the magnitude of the vibrotactile sensation produced by the sponge earphones used in this study is less than the vibrotactile sensation produced by the vibration exciter used by Weisenberger et al. (15). Therefore, the net effect of vibrotactile sensation on the MDTs measured in this study is likely minimal. All subjects also confirmed that an acoustic cue was used to perform the TMD test.

Unaided SRD could be a cost-effective measure to evaluate CI candidacy, because SRD can be implemented without having to fit HAs. The total amount of time to fit HAs depends on various factors. For example, if earmolds are necessary, it might take one or two weeks to make them and deliver them to the clinic. If patients are using HAs for the first time, a fitting and adaptation period of about 3 months is needed. Even if patients have previously used HAs, it can take audiologists significant time to assess, program, and verify the best HA fit with real-ear measures. In contrast, unaided SRD can be administered much more efficiently because it does not involve HA fitting. The test itself can be completed in approximately 20 minutes, including the measurements of threshold and MCL, which is only approximately 10 to 30% of the average time required for the current protocol of verifying optimal HA fit and administering aided sentence recognition tests.

There are several potential advantages of using unaided non-linguistic psychoacoustic measures as a surrogate preoperative test for CI candidacy evaluation. First, from a cost and time perspective, the tasks can be administered without the costly and time-consuming best HA fit, instead using insert earphones in a smaller audio booth. This is particularly appealing for clinical settings where clinicians and resources for HA trials and speech recognition testing are limited. Second, the tasks use non-speech stimuli; therefore, they can readily work within any language system. Third, these tests show minimal acclimatization, addressing a potential learning effect with speech measures (6). Lastly, modified procedures could be used with prelingually deafened children preoperatively to obtain behavioral data using a method presented by Horn et al. (16), or with electrophysiological data using a method presented by Won et al. (17).

Conclusions

The current study suggests that it is potentially viable to use unaided SRD as a surrogate measure for CI candidacy evaluation. Such an approach could significantly reduce the cost and time for clinicians as well as patients and potentially allow testing of populations that are difficult or unreliable to assess with typical aided speech recognition tests. Future studies will investigate the predictive power of pre-operative unaided psychoacoustic performance for post-operative clinical outcomes. Given the significant but weak correlations between pre-operative and post-operative speech discrimination scores (18), such a prediction seems plausible.

Acknowledgements

This work was supported by NIH grants R01-DC010148 and P30-DC004661, and by an educational gift from Advanced Bionics. HJ Shim was supported by the Eulji Medical Center. IJ Moon was supported by the Samsung Medical Center.

Appendix 1. Written notes for subjects about experiment procedures

Spectral ripple discrimination: You will be listening to the same kind of sounds. This time, you’ll hear three bursts of noise. Two of them will sound the same in quality or pitch, and one will sound different. You choose the one that sounds different, and select it by clicking on the appropriate box on the computer screen. (Try not to pay attention to any slight differences in loudness between the sounds.)

Temporal modulation detection: You’ll be listening to the same kind of sounds. This time, there will be one burst of noise followed immediately by another. Your task is to pick which of the two intervals is wavering, or vibrating, and click the corresponding box. The color green above a box will signal the correct answer. We’ll start by listening to each of the sounds.

Footnotes

One subject (S123) did not complete the AzBio sentence test for his right ear, so 27 ears were used for the ROC analysis.

Financial Disclosures: Jay Rubinstein is a paid consultant for Cochlear Ltd and receives research funding from Advanced Bionics Corporation, two manufacturers of cochlear implants. Neither company played any role in data acquisition, analysis, or composition of this paper.

References

- 1. Zwolan TA. Selection of cochlear implant candidates. In: Waltzman SB, Roland JT Jr, editors. Cochlear Implants. 2nd edition. New York: Thieme Medical Publishers; 2006. pp. 57–68.

- 2. Centers for Medicare and Medicaid Services. Cochlear implantation. Available at: http://www.cms.gov/Medicare/Coverage/Coverage-with-Evidence-Development/Cochlear-Implantation-.html. Accessed April 18, 2013.

- 3. Gifford RH, Shallop JK, Peterson A. Speech recognition materials and ceiling effects: considerations for cochlear implant programs. Audiology and Neurotology. 2008;13:193–205. doi: 10.1159/000113510.

- 4. Spahr AJ, Dorman MF, Litvak LM, Van Wie S, et al. Development and validation of the AzBio sentence lists. Ear Hear. 2012;33:112–117. doi: 10.1097/AUD.0b013e31822c2549.

- 5. Henry BA, Turner CW, Behrens A. Spectral peak resolution and speech recognition in quiet: Normal hearing, hearing impaired, and cochlear implant listeners. J Acoust Soc Am. 2005;118:1111–1121. doi: 10.1121/1.1944567.

- 6. Won JH, Drennan WR, Rubinstein JT. Spectral-ripple resolution correlates with speech reception in noise in cochlear implant users. J Assoc Res Otolaryngol. 2007;8:384–392. doi: 10.1007/s10162-007-0085-8.

- 7. Won JH, Drennan WR, Nie K, Jameyson EM, Rubinstein JT. Acoustic temporal modulation detection and speech perception in cochlear implant listeners. J Acoust Soc Am. 2011;130:376–388. doi: 10.1121/1.3592521.

- 8. Hirsh IJ, Davis H, Silverman SR, Reynolds EG, Eldert E, Benson RW. Development of materials for speech audiometry. J Speech Hear Disord. 1952;17:321–337. doi: 10.1044/jshd.1703.321.

- 9. Viemeister NF. Temporal modulation transfer functions based upon modulation thresholds. J Acoust Soc Am. 1979;66:1364–1380. doi: 10.1121/1.383531.

- 10. Bacon SP, Viemeister NF. Temporal modulation transfer functions in normal-hearing and hearing-impaired listeners. Audiology. 1985;24:117–134. doi: 10.3109/00206098509081545.

- 11. Levitt H. Transformed up-down methods in psychoacoustics. J Acoust Soc Am. 1971;49:467–477.

- 12. Zweig MH, Campbell G. Receiver-operating characteristic (ROC) plots: A fundamental evaluation tool in clinical medicine. Clin Chem. 1993;39:561–577.

- 13. Saoji AA, Litvak L, Spahr AJ, Eddins DA. Spectral modulation detection and vowel and consonant identifications in cochlear implant listeners. J Acoust Soc Am. 2009;126:955–958. doi: 10.1121/1.3179670.

- 14. Anderson ES, Oxenham AJ, Nelson PB, Nelson DA. Assessing the role of spectral and intensity cues in spectral ripple detection and discrimination in cochlear-implant users. J Acoust Soc Am. 2012;132:3925–3934. doi: 10.1121/1.4763999.

- 15. Weisenberger JM. Sensitivity to amplitude-modulated vibrotactile signals. J Acoust Soc Am. 1986;80:1707–1715. doi: 10.1121/1.394283.

- 16. Horn DL, Won JH, Lovett ES, Dasika VK, Rubinstein JT, Werner LA. Spectral ripple discrimination in listeners with immature or atypical spectral resolution. Presented at the Conference on Implantable Auditory Prostheses; 2013. Abstract # T2.

- 17. Won JH, Clinard CG, Kwon SY, Dasika VK, Nie K, Drennan W, Tremblay KL, Rubinstein JT. Relationship between behavioral and physiological spectral-ripple discrimination. J Assoc Res Otolaryngol. 2011;12:375–393. doi: 10.1007/s10162-011-0257-4.

- 18. Rubinstein JT, Parkinson WS, Tyler RS, Gantz BJ. Residual speech recognition and cochlear implant performance: effects of implantation criteria. Am J Otol. 1999;20(4):445–452.