Abstract

Natural sound environments are dynamic, with overlapping acoustic input originating from simultaneously active sources. A key function of the auditory system is to integrate sensory inputs that belong together and segregate those that come from different sources. We hypothesized that this skill is impaired in individuals with phonological processing difficulties. There is considerable disagreement about whether phonological impairments observed in children with developmental language disorders can be attributed to specific linguistic deficits or to more general acoustic processing deficits. However, most tests of general auditory abilities have been conducted with a single set of sounds. We assessed the ability of school-aged children (7–15 years) to parse complex auditory non-speech input, and determined whether the presence of phonological processing impairments was associated with stream perception performance. A key finding was that children with language impairments did not show the same developmental trajectory for stream perception as typically developing children. In addition, children with language impairments required larger frequency separations between sounds to hear distinct streams compared to age-matched peers. Furthermore, phonological processing ability was a significant predictor of stream perception measures, but only in the older age groups. No such association was found in the youngest children. These results indicate that children with language impairments have difficulty parsing speech streams, or identifying individual sound events when there are competing sound sources. We conclude that language group differences may in part reflect fundamental maturational disparities in the analysis of complex auditory scenes.

Keywords: development, mismatch negativity (MMN), auditory scene analysis, language impairments, phonological awareness

1. Introduction

There is considerable controversy over the relationship between general auditory processing skills and language ability (Ramus et al., 2013). Developmental language disorders (DLDs), which include specific language impairment (SLI) and developmental dyslexia (DD), are defined by the absence of a clearly defined pathology (e.g., hearing loss or neurological disorders) in the face of an inability to use language with the same facility as age-matched peers. Many children with DLDs have persistent deficits in phonological processing (Briscoe et al., 2001). They have impaired ability to discriminate between speech sounds (Reed, 1989; Werker & Tees, 1987) and require larger spectral differences to differentiate phonemes than children with typical language development (TLD) (Elliot, Hammer, & Scholl, 1989; Elliot & Hammer, 1988). Although ongoing research has failed to identify the etiologies of DLDs, it has spawned diverse hypotheses regarding the underlying causal factors. On one side of the continuing debate, DLDs are hypothesized to be due to a specific linguistic deficit of phonological processing, not generalizable to the acoustic elements of the speech sounds themselves (Bishop, Carlyon, Deeks, & Bishop, 1999; Gathercole & Baddeley, 1993; Helzer, Chaplin, & Gillam, 1996; Mody, Studdert-Kennedy, & Brady, 1997; Nittrouer, 1999; Nittrouer et al., 2011; Ramus, Rosen, Dakin, Day, Castellote, White, & Firth, 2003; Remez, Ruben, Burns, Pardo, & Lang, 1994; Rosen, 2003; Rosen & Maganari, 2001; Schulte-Korne, Diemel, Bartling, & Remschmidt, 1999; Sharma et al., 2009; Snowling, 1998; Studdert-Kennedy, 2002).

On the other side, phonological processing deficits have been hypothesized to originate from a more general difficulty in perceiving acoustic information (Ahissar, Protopapas, Reid, & Merzenich, 2000; Beattie & Mannis, 2013; Benasich & Tallal, 2002; Benasich, Tomas, Choudhury, & Leppanen, 2002; Choudhury, Leppanen, Leevers, & Benasich, 2007; Efron, 1963; Farmer & Klein, 1995; Hari & Renvall, 2001; Lubert, 1981; McAnally & Stein, 1996; Nagarajan, Mahncke, Salz, Tallal, Roberts, & Merzenich, 1999; Reed, 1989; Richardson, Thomson, Scott, & Goswami, 2004; Tallal & Piercy, 1973a; Tallal & Piercy, 1973b; Tallal, 1980; Tallal, Merzenich, Miller, & Jenkins, 1998; Tallal, Miller, & Finch, 1993; Tallal, Stark, Kallman, & Mellits, 1980; Wright, Bowen, & Zecker, 2000; Wright, Lombardin, King, Puranik, Leonard, & Merzenich, 1997). For example, it has been suggested that an inability to distinguish rapidly changing acoustic features at a normal rate impairs the ability to accurately represent the phonemic elements of the language, which, in children with DLDs, may impede the normal development of spoken language (Stein & McAnally, 1995; Tallal & Piercy, 1973b).
Further, individuals with language impairments often have 1) impaired ability to discriminate sound frequencies (Baldeweg et al., 1999; Hill et al., 2005; Mengler et al., 2005; McAnally & Stein, 1996; Nickisch & Massinger, 2009); 2) different auditory masking thresholds than controls (Wright & Reid, 2002; Hill et al., 2005); 3) poorer discrimination of sounds presented in rapid succession or briefly presented information (Ahissar et al., 2000; Benasich et al., 2002; Fazio, 1999; Tallal, 1976; Tallal & Piercy, 1973a; Tallal & Stark, 1981; Tallal et al., 1993; Tallal et al., 1985; Wright et al., 1997); 4) difficulty with rise-time perception (Goswami et al., 2011); 5) difficulty with rhythm perception (Huss et al., 2011); 6) impaired ability to discriminate sequential sounds (Tallal & Piercy, 1973a); and, 7) poorer ability at reporting the order of sequential sounds (Tallal & Piercy, 1973b).

There has been considerable debate about this latter etiological hypothesis because general auditory processing deficits are not always found in children diagnosed with DLDs (Bishop, Carlyon et al., 1999; Helzer, Champlin, & Gillam, 1996; Nittrouer et al., 2011). However, research continues to highlight difficulties of auditory perception in both SLI and DD (Catts, 1993; Catts, Adlof, Hogan, & Weisner, 2005; Stackhouse & Wells, 1997; Tallal, 2004; Bishop, 2007; Bishop & Snowling, 2004; Corriveau, Pasquini, & Goswami, 2007; Goulandris, Snowling, & Walker, 2000). The inability to empirically resolve the issue has hampered progress in understanding the role of auditory processing in observed phonological processing deficits of children with DLDs (Bailey & Snowling, 2002).

The current study approached the issue from a different perspective by exploring the relationship between phonological processing ability and auditory scene analysis in 7–15-year-old children with and without DLDs. Auditory scene analysis is a fundamental skill of the auditory system that facilitates the ability to perceive and identify sound events in the environment. It is the skill that allows us to select a voice in a crowded room or to listen to the melody of the flute in the orchestra. Auditory scene analysis is remarkable in that sound enters the ears as a mixture of all the sounds in the environment, from which mechanisms of the brain disentangle the mixture to integrate and segregate the input and provide neural representations that maintain the integrity of the distinct sources. If we were at a garden party, for example, we might hear the wind blowing, music playing, glasses clinking, and people talking. The different sources can be distinguished by multiple acoustic cues, such as the spatial location, the pitch, and the timbre (e.g., male vs. female voices). The multiple cues in the signal contribute to the identification of the individual streams (e.g., wind blowing), and strengthen the perception of stream segregation within the whole scene. What makes this skill relevant to language impairments is the perceptual organization of sounds when there are competing sound sources, a situation that is common in everyday environments. The notion is that the ability to identify the order of within-stream events in complex environments is predicated on the sounds first being segregated (Sussman, 2005).

Additionally, there is evidence that speech perception requires fundamental sound processing mechanisms intrinsic to auditory scene analysis. Darwin (1981; 1984) demonstrated that only after partitioning sounds to streams were phonetic patterns heard. This indicates that phonological perception is dependent on the even more basic process of discriminating and segregating the acoustic signal into its constituent sound sources (Darwin, 2008). Thus, the ability to process the correct order of phonemes, a skill necessary to understand the speech stream, may be impaired by an inability to accurately segregate the acoustic signal into its constituent parts. Sussman (2005) found that auditory stream segregation processes precede within-stream event formation, which links or segregates successive within-stream elements. This basic auditory processing mechanism is likely related to speech processing, providing additional support to this schema. Moreover, multiple reports have documented difficulties with stream segregation in adults with language impairments (Helenius, Uutela, & Hari, 1999; Petkov, O’Connor, Benmoshe, Baynes, & Sutter, 2005; Sutter, Petkov, Baynes, & O’Connor, 2000). Although there is no clear evidence that nonlinguistic auditory processing deficits cause language impairments, the presence of low-level processing deficits in those with language impairments lends credibility to the hypothesis that accurate nonlinguistic auditory processing abilities are vital to typical speech development.

To assess auditory scene processing abilities, the frequency distance between two sets of sounds was used to either promote segregation (when the sounds were far apart in frequency) or promote integration (when near in frequency). Music provides an ‘everyday’ example in which frequency separation of tones serves as a cue for segregation. Composers have long known about this remarkable ability of the auditory system. The alternation of tones along the frequency dimension can promote the perception of one or more distinct streams or melodies, such that from one sound source with one timbre (e.g., a guitar), notes played sequentially across a range of frequencies result in the experience of multiple sound streams occurring simultaneously and converging harmonically (e.g., listen to Francisco Tárrega’s guitar piece Recuerdos de la Alhambra).

There were two main goals of the study. The first goal was to assess the ability of children to parse auditory input and perceive sound streams. This involved reporting whether a mixture of sounds was perceived as one integrated or two segregated streams in one experiment, and selectively attending to one of the frequency streams to perform a simple sound discrimination task in the other experiment. Thus, Experiment 1 examined the global perception of the sounds, whereas Experiment 2 assessed the ability to detect a tone feature change occurring within a single stream. Here we aimed to gain a better understanding of whether the acuity for processing complex scenes in typical language development would be similar in children who have been identified with DLDs. We hypothesized that children with phonological processing deficits would require larger frequency separations to hear two streams or to detect deviant stimuli compared to children with TLD. The second goal was to determine whether the presence of phonological processing impairments would be predictive of stream segregation performance. Here, we evaluated the relationship of this fundamental but complex auditory scene processing skill to phonological processing ability, as measured on standardized tests of phonological processing (e.g., CTOPP). We hypothesized that phonological processing ability would predict stream segregation performance.

2. Methods

2.1 Participants

Seventy-eight children (39 females) ranging in age from 7–15 years (M=11/SD=2) were paid for their participation in the study. Participants were recruited by flyers posted in the immediate medical/research community and in local area schools. Children gave written assent and their accompanying parent gave written consent after the protocol was explained to them. The protocol was approved by the Internal Review Board at the Albert Einstein College of Medicine where the study was conducted. All procedures were carried out according to the Declaration of Helsinki. Table 1 denotes subject characteristics divided by group distribution for children with language impairments and for age-matched non-affected control participants with typical language development (TLD). All participants passed a hearing screen (at 20 dB HL for 500, 1000, 2000, and 4000 Hz).

Table 1.

Participant Information

| Group | Typical Language Development | | | Developmental Language Disorder | | |
|---|---|---|---|---|---|---|
| Age group | 7–9 yrs | 10–12 yrs | 13–15 yrs | 7–9 yrs | 10–12 yrs | 13–15 yrs |
| n=78 | 9 | 16 | 14 | 9 | 15 | 15 |
| M/F | 2/7 | 5/11 | 6/8 | 4/5 | 12/3 | 10/5 |

An initial telephone screen was used to ascertain TLD or DLD status, and to assess preliminary inclusion criteria (no history of audiologic, neurologic, or significant emotional or behavioral disorders). TLD participants had no history of language or reading diagnoses, ADHD, treatment or placement in special educational programs, no grade retention and no Individualized Education Plan. DLD participants had a prior diagnosis of a Mixed Receptive-Expressive Language Disorder and/or Reading Disorder based on professional evaluations. Recruits who met the pre-screen criteria underwent a two-hour psychometric testing session with a licensed psychologist (K.L.) to meet criteria for the study. Screening measures were conducted on all potential participants, which included 1) four subtests of the Wechsler Abbreviated Scale of Intelligence (WASI); 2) the core subtests of the Comprehensive Test of Phonological Processing (CTOPP); 3) WJ-III Tests of Achievement for reading; and 4) Vanderbilt DSM-IV-based checklist for attention deficit hyperactivity disorder (ADHD) symptoms. For the TLD group, recruits were eligible to participate in the study if they scored in at least the average range in all areas: standard scores no more than one standard deviation below the mean, had no prior diagnosis of ADHD and parental endorsement of <6 of the 9 symptoms of inattention and of hyperactivity on a DSM-IV based checklist for diagnosis of ADHD. For the DLD group, recruits were eligible if, in addition to previous diagnosis of reading or language impairment, they scored 1 SD or more below the mean on at least one subtest of the CTOPP, and a nonverbal IQ score on the WASI at or above 1 SD below the mean (Table 2). All participants included in this report met criteria for the study, summarized in Table 1.

Table 2.

Psychometric Test Results

| Age Group | Wechsler Abbreviated Scale of Intelligence (WASI): Performance IQ | Comprehensive Test of Phonological Processing (CTOPP): Phonological Awareness | CTOPP: Phonological Memory | CTOPP: Rapid Naming | Woodcock Johnson III Tests of Achievement: Word Attack | DSM-IV Checklist: Inattention | DSM-IV Checklist: Hyperactivity |
|---|---|---|---|---|---|---|---|
| Typical Language Development | | | | | | | |
| 7–9 y | 114 (16) | 110 (16) | 108 (14) | 104 (14) | 119 (10) | 0 (0) | 0 (0) |
| 10–12 y | 108 (12) | 105 (14) | 101 (12) | 111 (12) | 109 (10) | 1 (3) | 1 (1) |
| 13–15 y | 107 (11) | 105 (13) | 101 (12) | 114 (11) | 106 (10) | 0 (0) | 0 (0) |
| Developmental Language Disorders | | | | | | | |
| 7–9 y | 93 (9) | 86 (8) | 88 (12) | 99 (7) | 85 (8) | 2 (2) | 2 (2) |
| 10–12 y | 97 (8) | 83 (11) | 87 (9) | 89 (8) | 84 (11) | 3 (3) | 1 (2) |
| 13–15 y | 94 (7) | 80 (9) | 84 (10) | 87 (13) | 84 (8) | 4 (4) | 2 (3) |

Standard deviation in parentheses

2.2 Stimuli and procedures

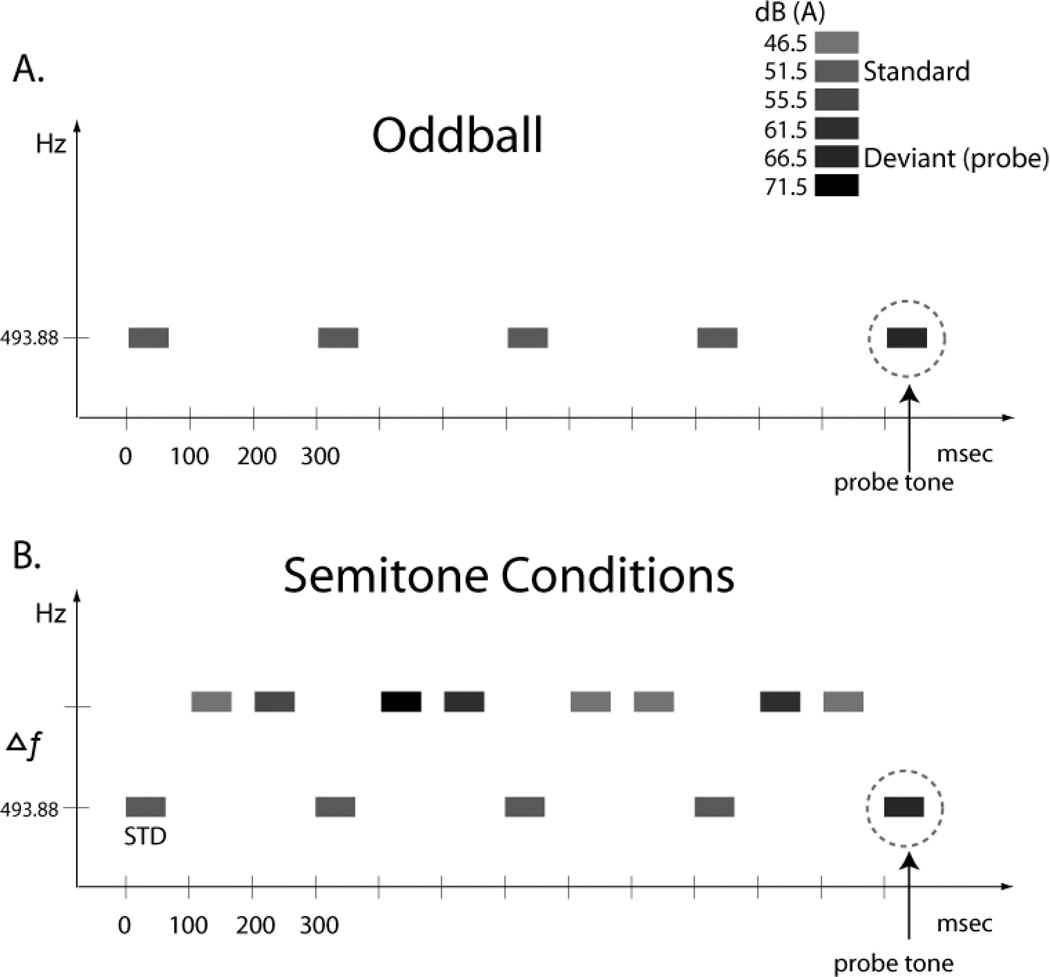

Complex tones (50 ms in duration with 5 ms rise/fall times) were created using Adobe Audition 3.0 software and Neuroscan STIM hardware (Compumedics Corp), presented binaurally via insert earphones (E-A-RTONES® 3A). The harmonic tone complexes (herein called “tones”) were composed of the first five harmonics with equal amplitudes. All tones were calibrated using a sound-level meter with an artificial ear (Brüel & Kjær 2209). The tones are labeled by their fundamental frequencies (f0). In all conditions, one tone was presented every 300 ms with a fixed f0 of 493.88 Hz (H2: 987.76 Hz; H3: 1481.64 Hz; H4: 1975.52 Hz; and H5: 2469.4 Hz). In the ‘oddball’ condition, this tone was presented alone (Fig 1A). In the ‘semitone’ conditions, another tone, which varied in its spectral distance from the fixed tone, was presented between the fixed tones to create different conditions of frequency separation (Fig 1B). When these higher-frequency tones intervened between the fixed frequency tones, the stimulus presentation rate was one tone every 100 ms (onset-to-onset pace).
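The tone description above can be illustrated with a minimal synthesis sketch. It is not the authors' stimulus-generation procedure (the tones were created in Adobe Audition); the sample rate and the linear shape of the rise/fall ramps are assumptions, since neither is stated in the text, and the function names are hypothetical.

```python
import math

F0_HZ = 493.88        # fundamental of the fixed tone (from the text)
N_HARMONICS = 5       # first five harmonics, equal amplitudes (from the text)
DURATION_MS = 50      # tone duration (from the text)
RAMP_MS = 5           # rise/fall time (from the text)
SAMPLE_RATE = 44100   # ASSUMPTION: sample rate is not given in the text


def harmonic_frequencies(f0=F0_HZ):
    """Return the frequencies of the first five harmonics, rounded to 0.01 Hz."""
    return [round(f0 * h, 2) for h in range(1, N_HARMONICS + 1)]


def harmonic_complex(f0=F0_HZ, sample_rate=SAMPLE_RATE):
    """Return one 50 ms equal-amplitude harmonic complex as a list of samples."""
    n_samples = round(sample_rate * DURATION_MS / 1000)
    n_ramp = round(sample_rate * RAMP_MS / 1000)
    samples = []
    for i in range(n_samples):
        t = i / sample_rate
        # Sum of equal-amplitude sinusoids, scaled so the peak cannot exceed 1.
        value = sum(
            math.sin(2 * math.pi * f0 * h * t) for h in range(1, N_HARMONICS + 1)
        ) / N_HARMONICS
        # ASSUMPTION: linear onset/offset ramps; the text gives only their duration.
        gain = min(1.0, i / n_ramp, (n_samples - 1 - i) / n_ramp)
        samples.append(gain * value)
    return samples


print(harmonic_frequencies())
```

Calling `harmonic_complex(f0)` with one of the intervening-tone fundamentals would give the corresponding tone for a semitone condition under the same assumptions.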

Figure 1.

Stimulus Paradigm. Rectangles represent tones. The abscissa shows the passage of time and the ordinate indicates frequency scale. The shading of the rectangles represents the intensity value of the tones. A. Oddball sequence. Tones are presented at the pace they occur in the semitone (ST) conditions without the intervening tones. The louder intensity (deviant) probe tone is indicated with an arrow. B. Semitone Conditions. Tones presented between the ‘oddball’ tones to create the semitone conditions. These tones occur at 3, 7, 11, 23, and 31 ST distance from the oddball tones in separate conditions. The STD oddball tone intensity was 51.5 dB(A) and the louder probe tone was 66.5 dB(A). Only when the tones are segregated by frequency separation can the louder probe tones be distinguished from the intensity of the other oddball tones (see Methods for details).

In the Oddball condition (Fig 1A), the 493.88 Hz tone was presented with 87% probability at 51.5 dB(A) and with 13% probability at 66.5 dB(A), randomly dispersed. The louder 66.5 dB(A) tone was the “oddball” or deviant tone. In each of the five ST conditions, the frequency distance was chosen according to the logarithmic scale, in musical semitone (ST) steps. The ST conditions, denoting the Δf, and the f0 value of the second tone (in parentheses) were 3 ST (587.33 Hz); 7 ST (739.99 Hz); 11 ST (932.33 Hz); 23 ST (1864.7 Hz); and 31 ST (2960.0 Hz). These intervening tones randomly had any of four different intensity values (46.5, 55.5, 61.5, 71.5 dB(A)), distributed equi-probably. The intensity values spanned above and below the intensity values of the standard (51.5 dB(A)) and deviant (66.5 dB(A)) intensity values, such that standard and deviant tones of the oddball stream (493.88 Hz) tones had neither the highest nor the lowest intensity value of the six different intensity values presented (Fig 1). This was done to minimize the possibility that participants could anchor their responses to the loudest or softest tones in the overall sequence.
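The semitone spacing described above follows the equal-tempered relation f = f0 · 2^(n/12). The short sketch below recomputes the f0 of the intervening tone for each condition from the fixed 493.88 Hz tone; the function name is illustrative only.

```python
BASE_F0_HZ = 493.88                 # fixed tone (from the text)
SEMITONE_STEPS = (3, 7, 11, 23, 31)  # ST conditions (from the text)


def semitone_shift(f0_hz, n_semitones):
    """Frequency n_semitones above f0_hz on the equal-tempered scale."""
    return f0_hz * 2 ** (n_semitones / 12)


for st in SEMITONE_STEPS:
    print(f"{st:>2} ST -> {semitone_shift(BASE_F0_HZ, st):.2f} Hz")
```

The computed values agree with the reported f0 values to within about 0.1 Hz; the small differences are consistent with rounding of standard note frequencies.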

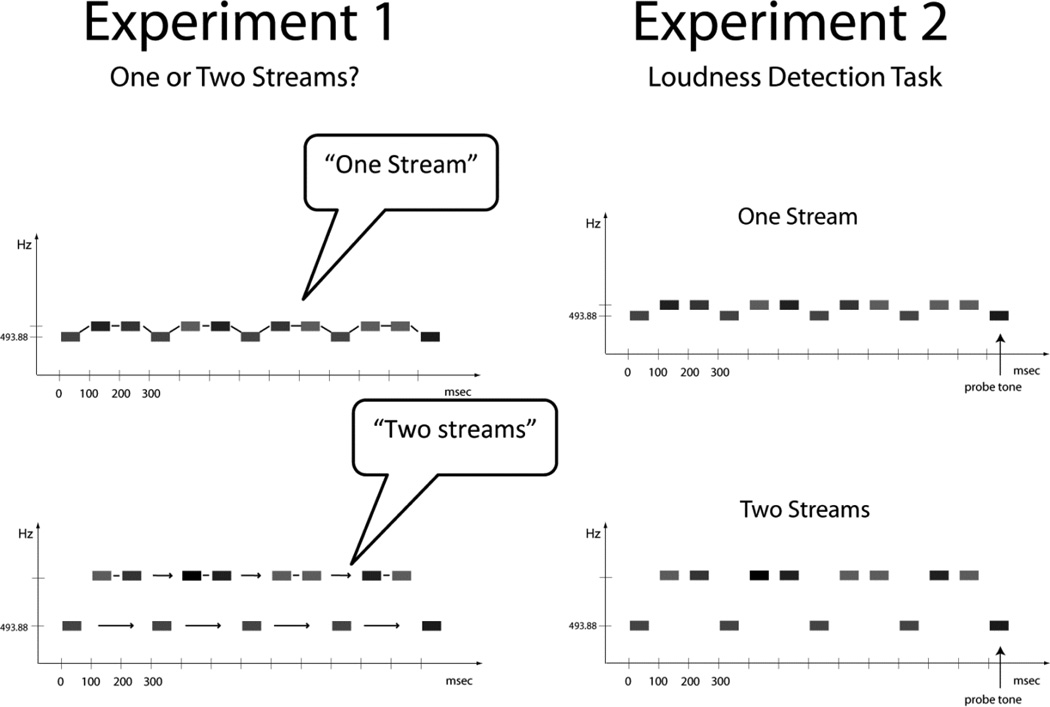

The two experiments used the same basic design but with different task goals (Fig 2). Experiments were conducted in a sound-treated booth (IAC, Bronx, NY). In Experiment 1 (Fig 2, left), participants were randomly presented with short sequences of each of the ST conditions (3, 7, 11, 23, and 31 ST). Each condition was presented in five stimulus blocks; each block contained 201 stimuli (20 s duration). The 25 stimulus blocks were randomized, and presented in different orders to each participant (to minimize any potential order effects). Practice was given prior to the main test, using tone sequence samples with ‘extremes’ to illustrate the difference: 1 ST for ‘one stream’ and 35 ST for ‘two streams’. Once the instructions were understood, participants reported after each trial whether the tone sequences sounded more like one stream or more like two streams by raising one hand for reporting ‘one stream’ and two hands for reporting ‘two streams’. Thus, there was no ‘correct’ response for how participants perceived the tone sequences. The experimenter recorded participants’ responses in writing after each trial, and then presented the next stimulus block.

Figure 2.

Behavioral Tasks. Experiment 1 (left panel) assessed global perception of the sounds with participant report of whether they heard the tones more as integrated into ‘one stream’ (top) or segregated into ‘two streams’ (bottom). Experiment 2 (right panel) assessed the ability to detect the probe tones amongst the 493.88 Hz tones. This task was predicated on the ability to segregate the tones, which varied as a function of the frequency distance of the intervening tones, to make the comparison of the frequent intensity value (51.5 dB) from the infrequent probe (66.5 dB) in that frequency stream.

In Experiment 2 (Fig 2, right), the experiment started with practice on the loudness detection task using the oddball condition (500 stimuli, 65 probe tones, presented in two blocks of 250 stimuli, 300 ms onset-to-onset pace). Subjects were instructed to press the response key every time they heard the louder intensity tone that occurred randomly in the sequence (‘probe tone’, Fig. 2, right). The ST conditions were presented in a randomized order across participants. For each ST condition, 1500 stimuli were presented in two stimulus blocks (each containing 750 stimuli, 100 ms onset-to-onset pace), with 65 probe tones in each condition (13% of the 493.88 Hz tones). Each stimulus block was 1.25 minutes in duration. The logic for Experiment 2 was that the louder intensity tones within the oddball stream could be detected with accuracy only when they segregated from the intervening tones, forming their own stream. Perceiving the details within a stream first necessitated that the streams be segregated. When the louder intensity tones could not be detected in the oddball sequence, it indicated that the intervening tones were integrated into the same sound stream, obscuring any intensity regularity (i.e., the repeating 51.5 dB(A) tone) that existed in the oddball stream.
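The stimulus counts above can be checked with a small sequence sketch. It assumes that the fixed 493.88 Hz tone occupies every third position, which is an inference from the 300 ms and 100 ms onset-to-onset paces rather than something stated explicitly, and that probes are placed uniformly at random among the fixed tones with no spacing constraints. The function and constant names are hypothetical.

```python
import random

N_STIMULI = 1500                          # per ST condition (from the text)
N_PROBES = 65                             # probe tones per condition (from the text)
SOA_MS = 100                              # onset-to-onset pace (from the text)
STANDARD_DB, PROBE_DB = 51.5, 66.5        # dB(A) values (from the text)
INTERVENING_DB = (46.5, 55.5, 61.5, 71.5)  # equiprobable levels (from the text)


def build_condition(seed=0):
    """Return a list of (stream, level_dB) tuples for one ST condition."""
    rng = random.Random(seed)
    # ASSUMPTION: the fixed tone falls on every third position (300 ms apart).
    fixed_positions = list(range(0, N_STIMULI, 3))
    # ASSUMPTION: probes are drawn uniformly, with no minimum spacing.
    probe_positions = set(rng.sample(fixed_positions, N_PROBES))
    sequence = []
    for i in range(N_STIMULI):
        if i % 3 == 0:
            level = PROBE_DB if i in probe_positions else STANDARD_DB
            sequence.append(("fixed", level))
        else:
            sequence.append(("intervening", rng.choice(INTERVENING_DB)))
    return sequence


seq = build_condition()
n_fixed = sum(1 for stream, _ in seq if stream == "fixed")
n_probe = sum(1 for stream, level in seq if stream == "fixed" and level == PROBE_DB)
print(n_fixed, n_probe, n_probe / n_fixed, len(seq) * SOA_MS / 60000)
```

Under these assumptions the probes make up 13% of the fixed-frequency tones and roughly 4% of all stimuli, and the 1500 stimuli span 2.5 minutes, matching two 1.25-minute blocks.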

Two experiments (Experiment 1- One vs. two streams; and Experiment 2- loudness detection task) were used with the same ST conditions but with different instructions in order to assess convergent evidence on the same process. If the stream segregation process were being adequately assessed, then the two measures should be consistent with each other (Sussman & Steinschneider, 2009). When a participant says they hear two streams (in Experiment 1), it should be indicative of their ability to detect louder sounds in Experiment 2.

The Oddball condition provided a measure of comparison for the loudness detection task. Thus, five ST conditions were presented in Experiment 1 (One vs. two streams). Six conditions were presented in Experiment 2 (Loudness detection task). The total session time, including practice, breaks, hearing screen, and reading/signing consent forms took approximately 80 minutes.

2.3 Data Analysis

For Experiment 1, there were no ‘correct’ answers. Thus, the proportion of trials on which two streams were perceived was calculated separately for each ST condition in each group. For Experiment 2, the dependent variables were hit rate (HR), false alarm rate (FAR), d’, and reaction time (RT), calculated for the detection of the within-stream intensity deviants (‘probe tones’). Button presses were only required on a small percentage of the overall sequence of tones (4% of the total number of stimuli in the Semitone conditions). A response was considered correct when it was recorded 100–900 ms from stimulus onset. ‘Hits’ were correct button presses to the infrequent louder intensity probe tones; ‘misses’ were the absence of a button press to the probe tones. ‘Correct rejections’ were correct ‘no-go’ responses to any of the other tones. ‘False alarms’ were button presses to any tone other than the louder intensity probe tones. However, due to the rapid pace of the stimuli, calculating FAR based on the total number of non-target stimuli may underestimate the FAR because a response cannot be expected to every tone (Bendixen & Andersen, 2013). To provide a more conservative estimate, the FAR was calculated according to Bendixen and Andersen (2013), using the number of possible response windows, rather than the total number of non-target stimuli, as the denominator. This number will be referred to as the ‘adjusted false alarm rate’. The adjusted FAR was used to calculate d’, also under the assumption that it provides a more conservative estimate of the sensitivity to the target. HR, adjusted FAR, d’, and RT were calculated for each individual, separately in each condition.
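The scoring measures described above can be sketched as follows. This is an illustration under stated assumptions, not the authors' analysis code: the way non-target response windows are counted is one plausible reading of the window-based denominator and may differ from the exact procedure of Bendixen and Andersen (2013), and the clipping of extreme rates before the z-transform is an added assumption needed to keep d’ finite.

```python
from statistics import NormalDist


def is_hit(probe_onset_ms, response_ms, window=(100, 900)):
    """True if a button press falls 100-900 ms after probe onset (from the text)."""
    latency = response_ms - probe_onset_ms
    return window[0] <= latency <= window[1]


def adjusted_far(false_alarms, n_stimuli, soa_ms, n_targets, window_ms):
    """False alarms divided by an estimate of the non-target response windows.

    ASSUMPTION: windows = (sequence duration - time covered by target windows)
    divided by the window length.
    """
    total_ms = n_stimuli * soa_ms
    non_target_windows = (total_ms - n_targets * window_ms) / window_ms
    return false_alarms / non_target_windows


def clip(p, floor=0.01):
    """ASSUMPTION: keep rates away from 0 and 1 so the z-transform is finite."""
    return min(max(p, floor), 1 - floor)


def d_prime(hit_rate, fa_rate):
    """Sensitivity index d' = z(HR) - z(FAR)."""
    z = NormalDist().inv_cdf
    return z(clip(hit_rate)) - z(clip(fa_rate))


# Hypothetical participant: 5 false alarms in one ST condition, hit rate 0.83.
far = adjusted_far(false_alarms=5, n_stimuli=1500, soa_ms=100,
                   n_targets=65, window_ms=800)
print(round(far, 3), round(d_prime(0.83, far), 2))
```

Because the window-based denominator is much smaller than the count of non-target tones, the same number of false alarms yields a higher FAR and therefore a lower, more conservative d’.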

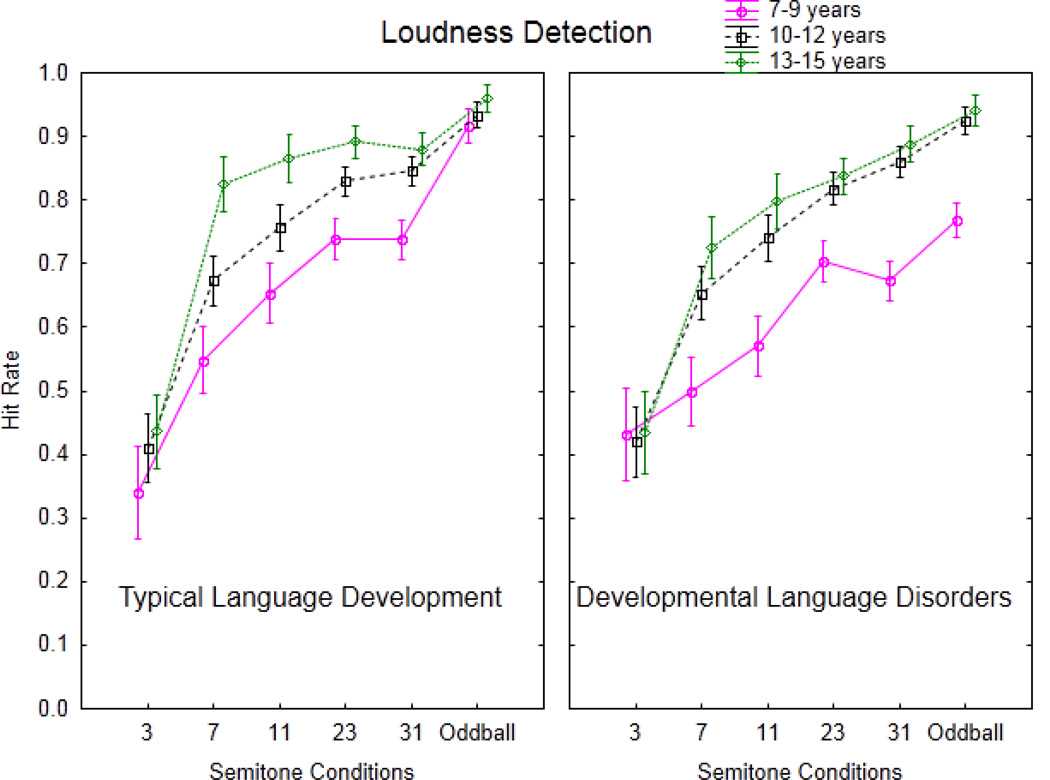

For the loudness detection task (Experiment 2), separate two-way mixed model analyses of variance (ANOVAs), with factors of age group (between) and experimental condition (within), were used to assess within-group maturation effects (Fig 4). These results were not used to draw comparative conclusions between groups. For group comparison, to test the hypothesis that children with DLDs require larger frequency separations to detect two sound streams than children with TLD, a mixed model ANOVA was calculated on HR, using factors of group status (TLD/DLD), age group (7–9, 10–12, and 13–15 years), and experimental condition (3, 7, 11, 23, 31 ST, and Oddball) in both experiments. There was no difference in the ANOVA results whether using d’ (with the adjusted FAR) or HR. HR was chosen for display so that comparison units across conditions would be compatible, and reflect the statistical analyses used. Mixed model ANOVAs were also calculated separately for RT and for adjusted FAR with factors of group (between subjects), age group (between subjects), and condition (within subjects). Post hoc analysis (Tukey HSD) was performed when the ANOVA showed any significant main effects or interactions (p<0.05), and corrections were applied for violations of sphericity (Greenhouse–Geisser). Post hoc analyses were considered significant at p<0.05 (experimentwise). The within-subject (repeated measures) factor was Δf (ST conditions).

Figure 4.

Experiment 2. Hit rate on the loudness detection task is displayed (y-axis), comparing ages 7–9 (pink, solid line), 10–12 (black, dashed line), and 13–15 (green, dotted line) years within language status groups to illustrate maturation effects in children with typical language development (left panel) compared to children with developmental language disorders (right panel). Semitone and Oddball conditions are displayed along the x-axis.

Multiple regression analysis was used to determine if performance on Experiment 1 (report of one or two streams) predicted performance on Experiment 2 (loudness detection task) in each group. To test the hypothesis that phonological processing ability predicts stream segregation performance, a stepwise multiple regression analysis was performed with the average of the results from Experiments 1 and 2 (combined score) as the dependent (criterion) variable and phonological processing ability (scores on three subtests of the CTOPP: phonological awareness, phonological memory, and rapid naming) and non-verbal IQ (PIQ) as predictor variables. Pearson’s r was used to assess correlations.

3. Results

3.1 Results and Discussion for Experiment 1: One vs. two streams

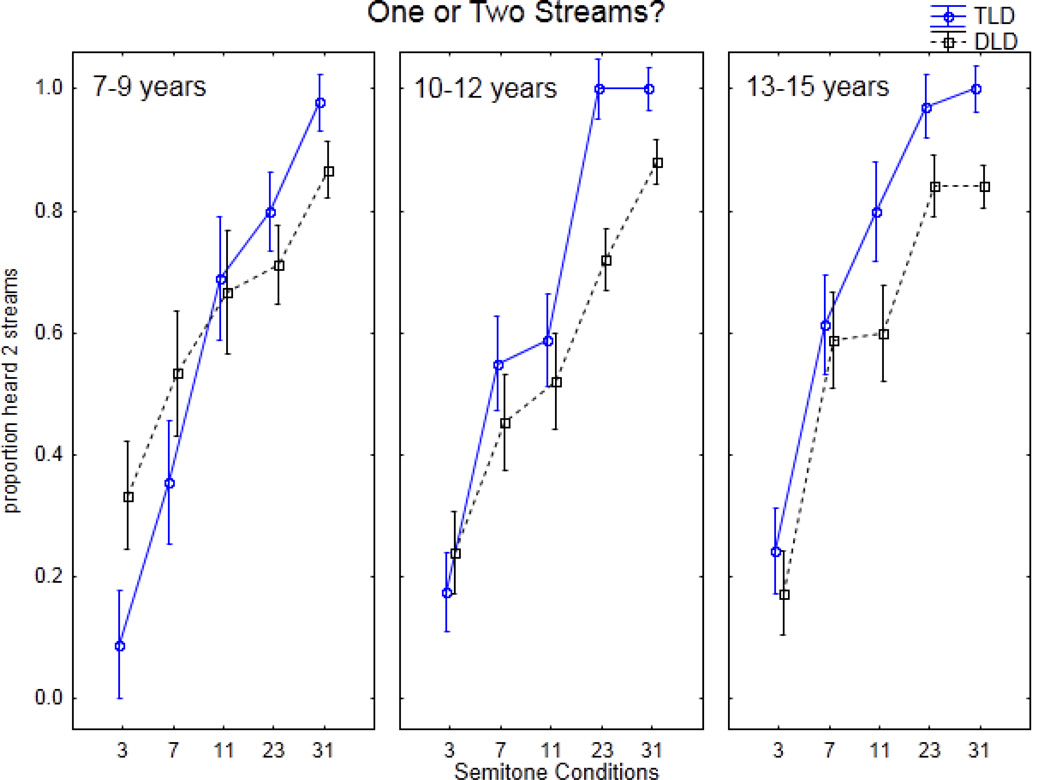

Table 3 summarizes the results, showing the proportion of trials on which participants reported hearing ‘two streams’ in each ST condition. Figure 3 displays the results and illustrates the comparison between TLD children and those diagnosed with a DLD. Overall, the proportion of times ‘two streams’ was reported increased with larger frequency separations (3 to 31 ST) in both groups. Consistent with previous studies (Bregman, 1990; Carlyon et al., 2001; Micheyl et al., 2007; Sussman & Steinschneider, 2009; Sussman, Wong et al., 2007), there are three patterns of sound organization: integrated, ambiguous, and segregated. In children, the 3 ST Δf is associated with an integrated perception in which ‘two streams’ is rarely reported. The 31 ST Δf is associated with a segregated perception in which ‘two streams’ is almost always reported. The 7 ST Δf is associated with an ambiguous region in which ‘two streams’ is reported roughly half of the time.

Table 3.

Experiment 1: One vs. two streams

| Group | 7–9 years | | | | | 10–12 years | | | | | 13–15 years | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Semitone condition | 3 ST | 7 ST | 11 ST | 23 ST | 31 ST | 3 ST | 7 ST | 11 ST | 23 ST | 31 ST | 3 ST | 7 ST | 11 ST | 23 ST | 31 ST |
| TLD | .09 | .36 | .69 | .80 | .98 | .18 | .55 | .59 | 1.00 | 1.00 | .24 | .61 | .80 | .97 | 1.00 |
| | (.11) | (.22) | (.25) | (.26) | (.07) | (.30) | (.38) | (.29) | (.00) | (.00) | (.34) | (.35) | (.26) | (.07) | (.00) |
| DLD | .33 | .53 | .67 | .71 | .87 | .24 | .45 | .52 | .72 | .88 | .17 | .59 | .60 | .84 | .84 |
| | (.26) | (.24) | (.28) | (.25) | (.14) | (.24) | (.19) | (.35) | (.26) | (.22) | (.21) | (.32) | (.35) | (.22) | (.19) |

Proportion reported hearing two streams (standard deviation in parentheses). TLD=typical language development; DLD=developmental language disorder

Figure 3.

Experiment 1. Global perception of the sequences is displayed by comparing children with typical language development (TLD, blue, solid line) and children with developmental language disorders (DLD, black, dashed line) for 7–9-year-olds (left panel), 10–12-year-olds (middle panel) and 13–15-year-olds (right panel). Proportion of times participants reported hearing ‘two streams’ is indicated on the y-axis. Semitone conditions are displayed along the x-axis.

There was a main effect of Δf (F(4, 288)=132.79, p<0.0001). Post hoc calculations showed a significant overall increase in the proportion of time that ‘two streams’ was reported from 3 to 31 ST (3 ST=0.21 < 7 ST=0.52 < 11 ST=0.64 < 23 ST=0.84 < 31 ST=0.93). There was no main effect of age (F(2, 72)=1.18, p=0.31), suggesting that these percepts were consistent across all age groups. There was no main effect of group (F(1, 72)=2.42, p=0.12), but group status interacted with ST condition (F(4, 288)=4.45, p=0.0017). This interaction showed that, overall, the DLD group reported hearing ‘two streams’ less often than the TLD group at the 23 and 31 ST conditions in all ages. A post hoc analysis conducted within each age group separately showed that the TLD group reported a significantly higher proportion of hearing ‘two streams’ than the DLD group at the 31 ST condition in the youngest group (7–9 years), at 23 ST and 31 ST in the middle group (10–12 years), and at 11 ST, 23 ST, and 31 ST in the oldest group (13–15 years). There was no interaction of group with age (F(8, 288)=1.69, p=0.11) and no three-way interaction of group × age × condition (F<1, p=0.44).

Overall, the children with DLDs never reached the same level as the TLD children in reporting ‘two streams’, even at 31 ST. Distinctions of note are: 1) in the 13–15-year-olds, the DLD children were still in the ambiguous range at 11 ST (Fig. 3, right panel), where the TLD group reported hearing ‘two streams’ (segregation) most of the time; 2) in the 10–12-year-olds, the ambiguous range was at the 7 and 11 ST conditions for both groups; at 23 ST the TLD group switched percept to segregation, whereas the DLD group reported hearing ‘two streams’ significantly more often from 23 to 31 ST but significantly less often than the TLD group; and 3) in the 7–9-year-olds, significant group differences in reporting one or two streams occurred only at the extremes (3 and 31 ST conditions). This suggests that the youngest group of DLD children made less distinction across the ST conditions: they reported hearing ‘two streams’ significantly more often than the TLD group when the sounds were integrated (3 ST condition) and significantly less often when the sounds were segregated (31 ST condition). Thus, it is not clear from the results of Experiment 1 alone what this reflects regarding sound organization in the youngest group of DLD children, or what other factors influence their report of the global perception of the sounds. In the older two age groups, in contrast, the conditions with a significant group difference distinguished the Δf at which the sound sequence was perceived as having two streams.

3.2 Results and Discussion for Experiment 2: Loudness detection task

Table 4 summarizes the mean RT, HR, FAR, and d’ for groups and conditions. Figure 4 illustrates the age group differences within and across the groups. First, there are notable maturational changes in auditory stream segregation ability in the typically developing children. This is observed by a main effect of age (F2, 36=13.61, p<.0001). Overall, the 13–15-year-olds had a significantly higher HR (0.81) than the 10–12-year-olds (0.74) and these were both significantly higher than the 7–9-year-olds (0.66). Thus, deviance detection as measured by HR improved with age. Because deviance detection is predicated on segregating the sounds and then perceiving the within-stream loudness distinction, we conclude that stream segregation ability improves with age. This is substantiated by the post hoc calculations showing that there was no significant difference in the ability to detect the louder sound as a function of age in the Oddball condition when there were no intervening tones (Fig 4, left panel, Oddball condition).

Table 4.

Experiment 2: Loudness Detection Task

| Measure | Group | 7–9 y: 3 ST | 7–9 y: 7 ST | 7–9 y: 11 ST | 7–9 y: 23 ST | 7–9 y: 31 ST | 7–9 y: Odd | 10–12 y: 3 ST | 10–12 y: 7 ST | 10–12 y: 11 ST | 10–12 y: 23 ST | 10–12 y: 31 ST | 10–12 y: Odd | 13–15 y: 3 ST | 13–15 y: 7 ST | 13–15 y: 11 ST | 13–15 y: 23 ST | 13–15 y: 31 ST | 13–15 y: Odd |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Reaction time (msec) | TLD | 460 (64) | 472 (61) | 447 (81) | 422 (74) | 457 (83) | 373 (49) | 414 (74) | 366 (57) | 362 (64) | 322 (53) | 324 (41) | 285 (46) | 413 (71) | 333 (45) | 319 (43) | 295 (52) | 298 (55) | 253 (50) |
| Reaction time (msec) | DLD | 434 (100) | 433 (80) | 417 (88) | 385 (86) | 394 (76) | 363 (67) | 415 (59) | 396 (53) | 392 (60) | 341 (53) | 332 (55) | 289 (55) | 422 (78) | 362 (60) | 345 (41) | 320 (57) | 313 (47) | 268 (50) |
| Hit rate (proportion) | TLD | .34 (.19) | .55 (.14) | .65 (.13) | .74 (.07) | .74 (.11) | .92 (.04) | .41 (.19) | .67 (.18) | .76 (.12) | .83 (.07) | .85 (.06) | .93 (.05) | .44 (.26) | .83 (.08) | .86 (.04) | .89 (.05) | .88 (.05) | .96 (.03) |
| Hit rate (proportion) | DLD | .43 (.24) | .50 (.23) | .57 (.23) | .70 (.18) | .67 (.18) | .77 (.21) | .42 (.15) | .65 (.16) | .74 (.19) | .82 (.09) | .86 (.10) | .92 (.05) | .43 (.23) | .70 (.17) | .77 (.12) | .82 (.13) | .85 (.09) | .92 (.08) |
| Adj. false alarm rate (proportion) | TLD | .33 (.37) | .14 (.12) | .08 (.06) | .06 (.03) | .06 (.03) | .06 (.04) | .26 (.39) | .09 (.07) | .05 (.06) | .04 (.04) | .03 (.02) | .04 (.05) | .24 (.34) | .08 (.12) | .02 (.02) | .02 (.02) | .02 (.02) | .02 (.01) |
| Adj. false alarm rate (proportion) | DLD | .53 (.51) | .32 (.35) | .14 (.10) | .13 (.12) | .10 (.04) | .10 (.09) | .32 (.26) | .08 (.06) | .08 (.08) | .03 (.03) | .03 (.03) | .03 (.04) | .28 (.41) | .07 (.11) | .06 (.09) | .05 (.09) | .03 (.07) | .03 (.06) |
| d’ | TLD | .00 (1.32) | 1.27 (.56) | 1.86 (.62) | 2.25 (.39) | 2.21 (.52) | 3.05 (.59) | .56 (1.23) | 1.91 (.75) | 2.53 (.83) | 2.99 (.74) | 3.12 (.70) | 3.76 (.96) | .35 (1.84) | 2.63 (.75) | 3.46 (.70) | 3.66 (.90) | 3.66 (.80) | 4.29 (.78) |
| d’ | DLD | −.40 (1.17) | .37 (1.50) | 1.34 (.89) | 1.82 (.93) | 1.79 (.63) | 2.31 (1.27) | .36 (.78) | 1.88 (.78) | 2.28 (.96) | 3.20 (.95) | 3.16 (.82) | 3.65 (.91) | .38 (1.51) | 2.38 (1.09) | 2.68 (.89) | 2.98 (1.06) | 3.56 (1.13) | 3.91 (1.08) |

Odd=oddball condition; (standard deviation in parentheses); TLD=typical language development; DLD= developmental language disorder
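
As a reading aid for the d’ rows of Table 4, the following is a minimal sketch of the standard sensitivity index, d’ = z(HR) − z(FAR), where z is the inverse of the standard normal cumulative distribution. The function name `d_prime` and the clipping value `eps` are assumptions made for illustration; the exact adjustment applied to the false alarm rate in the study is not described in this section. Because the tabled d’ values are presumably averages of per-child scores, applying the formula to group-mean HR and FAR will approximate but not reproduce them.

```python
from statistics import NormalDist

# Inverse of the standard normal CDF (the z-transform used in signal detection theory)
_z = NormalDist().inv_cdf


def d_prime(hit_rate, false_alarm_rate, eps=0.01):
    """Return d' = z(HR) - z(FAR).

    Rates are clipped to [eps, 1 - eps] so that rates of exactly 0 or 1
    do not produce infinite z-scores. The clipping value is an assumption
    for this sketch, not a value taken from the study.
    """
    hr = min(max(hit_rate, eps), 1 - eps)
    far = min(max(false_alarm_rate, eps), 1 - eps)
    return _z(hr) - _z(far)


# Group-mean HR and adjusted FAR for the TLD 13-15-year-olds, 7 ST condition (Table 4)
print(round(d_prime(0.83, 0.08), 2))

# Equal hit and false alarm rates mean no sensitivity to the louder tone
print(d_prime(0.50, 0.50))
```

A d’ near zero, as in the 3 ST condition, indicates that responses to louder tones could not be distinguished from false alarms, which is what would be expected when the tones are heard as a single integrated stream.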

In all of the TLD age groups, the ability to detect the louder sound (the probe tone) increased with increasing Δf (main effect of condition, F5, 180=107.32, ε=0.48, p<0.0001). Post hoc calculations showed that HR in the 3 ST condition was lowest (0.39), and less than the 7 ST condition (0.68), the 11 ST condition (0.76), and the 23 ST condition (0.82). There was no significant difference between the 23 and 31 ST conditions (both 0.82), but HR in the 31 ST condition was significantly lower than the HR in the Oddball condition (0.94). The interaction between age and condition did not reach significance after correction (F10, 180=1.82, p=0.12).

Assessing maturation effects in the DLD group, overall, as Δf increased, so did HR (main effect of condition, F5, 180=76.83, ε=0.61, p<0.0001). There was a significant increase in HR with increase in Δf: 3 ST condition (0.43) < 7 ST (0.62) < 11 ST (0.69) < 23 ST (0.78). There was no significant difference between the 23 and 31 ST (0.79) conditions. HR in the 31 ST condition was significantly lower than in the Oddball (0.87) condition. A main effect of age group was observed (F2, 36=4.18, p<0.023), with post hoc calculations showing that the 7–9-year-olds had a lower HR (0.61) than the 10–12-year-old group. In contrast, no significant difference between the 10–12- and 13–15-year-olds occurred in the group with DLD (0.74 vs. 0.75, respectively). That is, there was no significant improvement with age from 10–12 years to 13–15 years in the DLD group. There was no interaction between condition and age (F10, 180=1.72, p=0.12).

Assessing effects of group status (TLD vs. DLD) on loudness detection performance, the ANOVA revealed a significant age by condition interaction (F10, 360=2.57, p=0.024), showing that overall, the 7–9-year-old group had a lower HR in the Oddball (0.84), 31 ST (0.71), 23 ST (0.72), 11 ST (0.61), and 7 ST (0.52) conditions than both the middle and older groups. The middle group was significantly lower than the older group only in the 7 ST (0.66 vs. 0.76, respectively) and 11 ST (0.75 vs. 0.82, respectively) conditions. This was not dependent upon language status and suggests that the ambiguous range occurs at larger Δf in younger children. HR did not differ for the 3 ST condition across age groups (young: 0.39; middle: 0.42; and older: 0.43), indicating the integrated percept for all at this Δf. There was no main effect of group status (TLD vs. DLD) (F1, 72=2.74, p=.10) on loudness detection performance, and no interactions of group status and age (F<1, p=0.53), or group status, age, and condition (F<1, p=.45). The interaction between group status and condition was not significant (F5, 360=2.13, p=0.06), but the trend was toward DLD having lower HR than TLD in the 11, 23, and 31 ST conditions. (This interaction was statistically significant when comparing performance across experiments (F4, 288=5.41, p=.0003), showing that larger frequency separations were needed to report two streams or detect louder sounds in DLD than TLD at 11, 23, and 31 ST.) There was a significant main effect of age group (F2, 72=12.34, p<0.0001). Post hoc calculations revealed that HR in the younger children (7–9 years old) was lower (0.63) than in the middle (0.74) and older (0.78) aged children, with no significant difference between the middle and older groups. There was also a significant main effect of condition (F5, 360=181.66, p<0.0001). Post hoc analysis revealed that only the 23 and 31 ST conditions did not differ significantly from each other. Otherwise, there was a significant increase in loudness detection performance with increase in Δf (3 ST: 0.41; 7 ST: 0.65; 11 ST: 0.73; 23 ST: 0.80; 31 ST: 0.80), and HR was significantly lower in all ST conditions compared to the Oddball (0.90) condition. These age and condition effects on loudness detection performance did not depend on language ability.

False alarm rate (FAR) did not significantly differ as a function of group status (DLD vs. TLD) (F1,72=3.89, p=0.052), although the trend was toward the DLD group having an overall higher FAR. There was a significant main effect of age group (F2,72=5.94, p<0.004). Post hoc calculations showed that the 7–9-year-old age group had significantly higher false alarms (0.17) than either of the other two groups (0.09 and 0.08), which did not differ from each other. There was also a significant main effect of condition (F5,360=36.23, p<0.001). Post hoc calculations showed that FAR was highest for the 3 ST condition (0.37), consistent with an inability to segregate out the oddball tones. FAR was also higher in the 7 ST condition (0.13) than the Oddball (0.04) or 31 ST (0.04) conditions, but did not differ from the 23 (0.05) or 11 ST (0.07) conditions. There were no interactions of condition with group (F5,360 <1, p=0.43), or with age group (F10,360 <1, p=0.52), and no interaction among all factors (F10,360 <1, p=0.76).

Reaction time to the louder sounds did not significantly differ as a function of group status (DLD vs. TLD) (F1,72<1, p=0.97). There was a significant age effect (F2,72=19.35, p<0.0001), with post hoc calculations showing that the youngest age group (7–9 years) had significantly slower RT (421 ms) than both older groups (353 and 358 ms, respectively). The difference in RT between the two older age groups was not significant. There was also a main effect of condition on RT (F5,360=89.96, ε=.56, p<0.0001). Reaction time was shortest in the Oddball condition (305 ms). Mean RT increased with decreasing Δf (Oddball: 305 ms < 31 ST: 353 ms = 23 ST: 348 ms < 11 ST: 380 ms < 7 ST: 394 ms < 3 ST: 427 ms), with no difference between the 31 and 23 ST conditions. A condition by age group interaction (F10, 360=4.66, p<0.0001) occurred because RT was significantly longer in the youngest group than the two older age groups in all conditions except for the 3 ST condition, where there was no difference among the groups. This was likely due to the difficulty or inability in the 3 ST condition to detect the louder sound when the sequence of tones was perceived as an integrated stream.

3.3 Comparison between Experiments 1 and 2

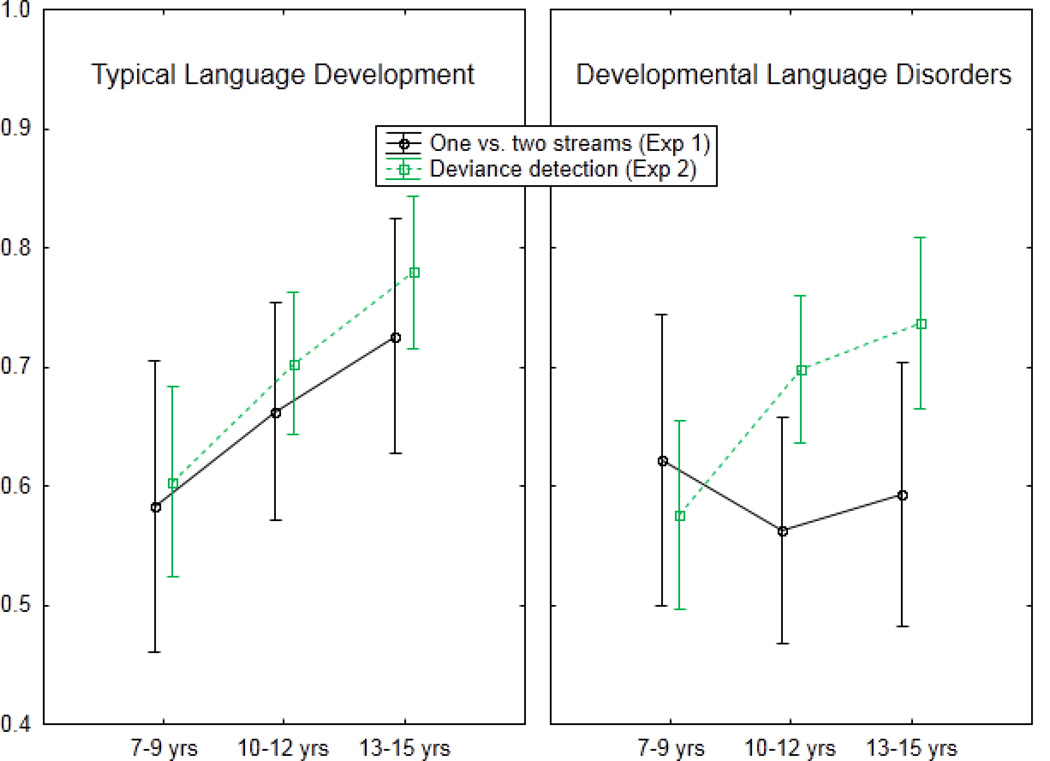

The purpose of conducting two experiments was to assess stream segregation using different performance tasks. Experiment 1 relied on reports of whether the set of sounds was perceived as “one” (integrated) or “two” (segregated) streams. In Experiment 2, the ability to perform the loudness detection task was predicated on the ability to first segregate out one set of tones from the other. Thus, it would be expected that the results of both experiments would be consistent with each other. That is, when a participant says they hear two streams, performance on the loudness detection task should be similarly strong, consistent with previous studies in TLD children (Sussman, Wong, et al., 2003; Sussman & Steinschneider, 2009). Figure 5 shows a comparison of the two groups, illustrating in the TLD group the consistency of the increase in performance with the increase in age across experiments (Fig 5, left). This pattern is not seen in the DLD group. In the TLD group, the results of the regression indicated that reporting ‘two streams’ significantly predicted loudness detection performance (β=.40, p=.01), and report of hearing ‘two streams’ also explained a significant proportion of variance in loudness detection (R2=.16, F1,37=7.07, p<.01). That is, in the TLD group (Fig 5, left), when a child said they heard two streams (Exp 1), performance on the loudness detection task (Exp 2) was consistent with the amount of perceived segregation. In contrast, in the DLD group, the amount of perceived segregation did not predict loudness detection performance (β=−.20, p=.21). When a child said they heard two streams, it did not correspond with their ability to detect the louder sound (Fig 5, right). This inconsistency in performance across the two tasks in the DLD group could be due to the demand of language use and conceptualization of what ‘two streams’ means in Experiment 1, which may not be related to stream segregation perception per se. 
However, other factors, such as attentional abilities, may have distinguished performance between the two experiments. Nonetheless, task performance of reporting whether one or two streams were heard may not be fully reflective of auditory stream segregation ability in children with language impairments.
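
The regression statistics reported for the TLD group can be checked for internal consistency. With a single predictor, the standardized coefficient β equals the Pearson correlation, so R2 = β², and the F statistic follows as F = (R2/df1) / ((1 − R2)/df2). The sketch below assumes an ordinary least-squares regression with one predictor, which the degrees of freedom (1, 37) suggest; the function names and the per-child values are hypothetical and are not data from the study.

```python
from statistics import mean


def simple_regression(x, y):
    """One-predictor least-squares fit.

    Returns (B, beta, r2): the unstandardized slope, the standardized
    coefficient, and the proportion of variance explained.
    """
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    slope = sxy / sxx                    # B, in units of y per unit of x
    beta = slope * (sxx / syy) ** 0.5    # B rescaled by SD(x) / SD(y)
    r2 = sxy ** 2 / (sxx * syy)          # squared Pearson correlation
    return slope, beta, r2


def f_from_r2(r2, df_model, df_error):
    """F statistic implied by R2 and the model and error degrees of freedom."""
    return (r2 / df_model) / ((1 - r2) / df_error)


# Hypothetical per-child values, for illustration only (not data from the study)
two_stream_report = [0.20, 0.45, 0.60, 0.75, 0.85, 0.95]
hit_rate = [0.55, 0.50, 0.80, 0.70, 0.90, 0.85]
b, beta, r2 = simple_regression(two_stream_report, hit_rate)
print(f"B={b:.2f}, beta={beta:.2f}, R2={r2:.2f}")

# Reported TLD values: beta = .40, so beta squared = .16, matching the reported R2
print(round(f_from_r2(0.16, 1, 37), 2))
```

Using the rounded R2 of .16 gives an F of about 7.05, close to the reported 7.07; the small gap is what rounding of R2 to two decimals would produce.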

Figure 5.

A comparison between the results of Experiment 1 (black, solid line) and Experiment 2 (green, dashed line) is displayed for children with typical language development (TLD) and those with developmental language disorders (DLD), illustrating consistency in performance measures of stream perception in the TLD children (left panel) that was not observed in the children with DLD (right panel). Age group is presented along the x-axis, thus showing the overall maturation effect in the TLD group.

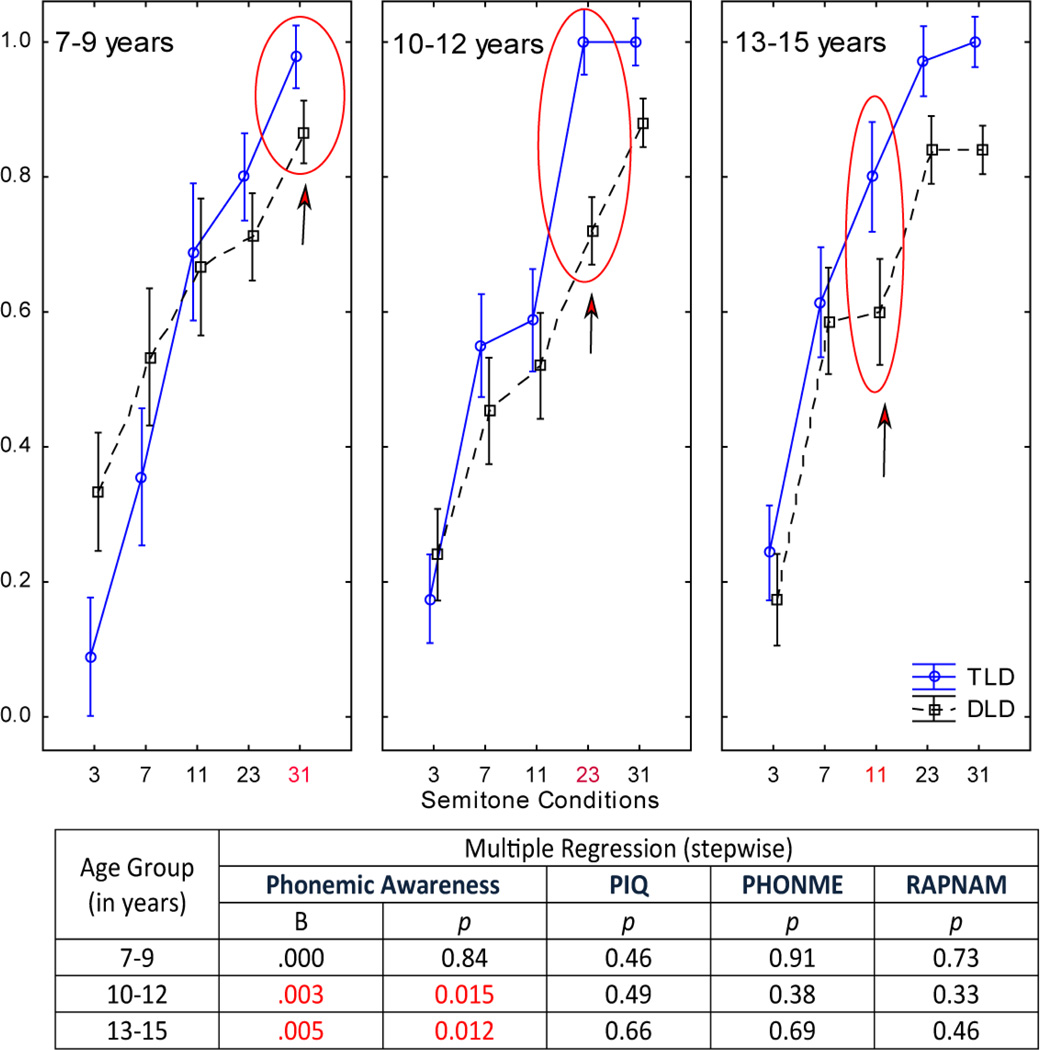

3.4 Relationship between phonological processing and auditory scene analysis

The relationship between phonological processing (phonemic awareness, phonemic memory, and rapid naming subtests of the CTOPP) and stream segregation performance was assessed with a stepwise multiple regression analysis in each age group separately using the ST condition where there was a significant difference in performance between the TLD and DLD groups (Fig 6, labeled in red). The overall phonological processing skill level significantly predicted stream perception in the 10–12- and 13–15-year-olds (R2=.19, F1,30=6.74, p=0.012; R2=.21, F1,28=7.35, p=.012, respectively). Phonemic awareness uniquely and significantly explained 19% and 21% (respectively) of the variance in the stream perception measures. However, the strength of the relationship between phonemic awareness and stream perception was low (unstandardized coefficient (B) = .003 and .005, respectively), suggesting that the relationship is not linear (an increase in one measure does not predict a linear increase on the other measure). In contrast, phonological processing skills did not predict segregation performance in the youngest group (F4,17<1, p=0.66), and phonological processing scores explained none of the variance in stream perception in the 7–9-year-olds (B=0).

Figure 6.

Relationship between stream segregation perception and phonological processing ability. Multiple regression results are displayed using the first ST condition where significant differences between groups were found: at 31 ST in the 7–9-year-olds, at 23 ST in the 10–12-year-olds, and at 11 ST in the 13–15-year-olds as the criterion variable and subtest scores of the CTOPP (phonological awareness, phonemic memory, and rapid naming) and non-verbal IQ (PIQ) as predictor variables (see Results for detailed description). There was no relationship between language ability and stream perception in the 7–9-year-old group.

There were also significant correlations of stream segregation performance measures with scores on Word attack (r=.53), Phonological Awareness (r=.46), and Rapid Naming (r=.42) but not Phonemic memory skills in the 13–15-year-olds (11 ST condition); and with Word attack (r=.46), Phonological Awareness (r=.43), Rapid Naming (r=.40), and Phonemic memory (r=.36) in the 10–12-year-olds (23 ST condition). No significant correlations were found between phonological processing and word attack measures with stream segregation performance in the 7–9-year-old group.

4. General Discussion

These data demonstrate notable maturational effects on stream segregation ability in children with typical language development through adolescence (from 7–15 years of age). Older children reported hearing two streams (Experiment 1) and detected within-stream louder tones (Experiment 2) at smaller frequency separations than younger children. That is, acuity for stream perception improves with age. Moreover, the results of both experiments were consistent with each other in the TLD group. Ability to perform the loudness detection task (Experiment 2) was congruent with reporting hearing ‘two streams’ (Experiment 1).

There was a maturation effect on reaction time and on false alarm rate in the loudness detection task (Experiment 2), in which RT was slower and FAR higher in the youngest group compared to the two older age groups overall. These effects were not altered by language group status. Faster responses to loudness deviants were reflective of maturational characteristics not associated with language ability. Thus, this finding is incompatible with previous studies supporting a ‘general slowing hypothesis’ in children with DLDs (e.g., Miller et al., 2006). Finally, there was an inverse relationship of RT and FAR with ST condition in that RT was longer and FAR higher with decreasing Δf. This would be expected as the difficulty of the loudness detection task would be greater with smaller Δf.

Perception of stream segregation in both language groups showed that 31 ST was segregated, 3 ST was integrated, and 7–11 ST was ambiguous, depending on the age of the child. This result, the three regions of segregated, integrated, and ambiguous percepts, is consistent with our previous studies and with reports in adults (Sussman & Steinschneider, 2009; Sussman, Wong et al., 2007; Bregman, 1990; Carlyon et al., 2001). We have found these three perceptual organizations to be consistent across ages, and the current data now suggest that this complex organizational factor is not dependent upon basic language ability, or due to differences in attention capacities of older children when compared to their younger counterparts.

Group status (TLD vs. DLD) did not distinguish the ability to detect loudness deviants across ages. The condition main effect showed that HR was significantly higher in the Oddball condition, when there were no competing sounds, than all of the ST conditions in children between 7–15 years (regardless of group status). This result indicates that loudness detection performance was altered by selectively attending (or ignoring) one set of sounds, or by the presence of competing irrelevant sounds. There also was a significant increase in HR with increasing Δf, suggesting an overall influence of segregation on the ability to perform the loudness detection task.

In contrast, group status did distinguish how children reported hearing the sounds as one or two streams. This was demonstrated by the significant difference in stream segregation performance between the two experiments in the DLD group, but consistency of the results across age groups in the TLD group. Thus, another major finding of the current study was that the perception of two streams (Experiment 1) paralleled the ability to detect loudness changes (Experiment 2) in the TLD group but not in the DLD group. When TLD children reported hearing two streams, they performed well on the loudness detection task (i.e., they could segregate the sound streams to perform the task). However, for the DLD children, reporting hearing two streams was not an indication of how well they did on the loudness detection task. Stream segregation performance in Experiment 1 predicted performance in Experiment 2 only in the TLD group. This discordance between the two tasks may be due to language use and conceptualization of what ‘two streams’ are, despite the children receiving the same instructions and practice (implying an understanding of the tasks). That is, task performance of reporting whether one or two streams were heard may not be fully reflective of stream segregation ability in children with language impairments. On the other hand, the group differences may better reflect a fundamental maturational difficulty in the analysis of complex auditory scenes.

Alternatively, the group difference between experiments may be explained by attentional factors. The role of attention and other higher-level factors may need better specification for explaining such group differences (Petkov et al., 2005; Johnson, 2012; Nittrouer, 2012; Nittrouer et al., 2011). Recent hypotheses have indicated dysfunctional attentional mechanisms as causal for deficits in phonological processing (Banai & Ahissar, 2006; Facoetti et al., 2003; Hari & Renvall, 2001; Petkov et al., 2005; Shafer, Ponton, Datta, Morr, & Schwartz, 2007; Stevens, Sanders, & Neville, 2006). Hari & Renvall (2001) proposed that an inability to process rapidly changing acoustic information may be the result of weak automatic attentional mechanisms. Hartley & Moore (2002) suggested that deficits in attention, cognition, and motivational factors can better account for the range of experimental evidence in DLD than can temporal processing deficit theory. Facoetti et al. (2003) suggested that deficits in directing attention impact the lower-level visual and auditory processes used in phonological processing, whereas Petkov et al. (2005) suggested that deficits were due to attentional processes rather than either low-level acoustic or language-related mechanisms. At the least, these studies emphasize that attention mechanisms may be an integral factor in language processing.

Overall, phonological processing predicted stream segregation performance, and was correlated with stream perception in the two older groups, but phonological skills were neither associated with nor predicted stream segregation performance in the youngest age group. One possible explanation is that the association between the two is reflective of a finer acuity in stream segregation skills that occurs with experience. In the youngest group, performance may be confounded by immaturities in other systems or processes that mediate performance, and therefore measures may not be strictly reflective of stream segregation ability. This is consistent with previous studies that found musical processing ability to be predictive of linguistic processing (Goswami et al., 2013; Huss et al., 2011).

In conclusion, children with language impairments performed significantly lower in both experiments. They needed larger frequency separations to hear two streams (Experiment 1), though when analyzed separately, group status did not indicate poorer performance on the loudness detection task (Experiment 2). Overall, the results suggest that children with language impairments have difficulty parsing speech streams, or identifying individual sound events, when there are multiple competing sound sources. However, poorer performance in DLD children may not be solely attributed to stream segregation abilities, as simple sound frequency discrimination has been shown to be impaired in dyslexic individuals (Baldeweg, Richardson, Watkins, Foale & Gruzelier, 1999; McAnally & Stein, 1996). Further, the current results suggest that the level of language involvement or cognitive ability for understanding the task scheme may influence performance ability that is not strictly reflective of an auditory skill.

Highlights.

Maturation effects on stream segregation perception through adolescence

Performance measures differed in children with language impairments

Relationship with phonological processing skills was weak or absent

Acknowledgements

This research was supported by the NIH (R01DC004623, R01DC006003).


References

- Ahissar M, Protopapas A, Reid M, Merzenich MM. Auditory processing parallels reading abilities in adults. Proc. Natl. Acad. Sci. U. S. A. 2000;97:6832–6837. doi: 10.1073/pnas.97.12.6832. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bailey PJ, Snowling MJ. Auditory processing and the development of language and literacy. Br. Med. Bull. 2002;63:135–146. doi: 10.1093/bmb/63.1.135. [DOI] [PubMed] [Google Scholar]

- Baldeweg T, Richardson A, Watkins S, Foale C, Gruzelier J. Impaired auditory frequency discrimination in dyslexia detected with mismatch evoked potentials. Annal. Neurol. 1999;45(4):495–503. doi: 10.1002/1531-8249(199904)45:4<495::aid-ana11>3.0.co;2-m. [DOI] [PubMed] [Google Scholar]

- Banai K, Ahissar M. Auditory processing deficits in dyslexia: task or stimulus related? Cereb. Cortex. 2006;16(12):1718–1728. doi: 10.1093/cercor/bhj107. [DOI] [PubMed] [Google Scholar]

- Beattie RL, Manis FR. Rise time perception in children with reading and combined reading and language difficulties. J. Learn. Disabil. 2013;46(3):200–209. doi: 10.1177/0022219412449421. [DOI] [PubMed] [Google Scholar]

- Benasich AA, Tallal P. Infant discrimination of rapid auditory cues predicts later language impairment. Behav. Brain Res. 2002;136(1):31–49. doi: 10.1016/s0166-4328(02)00098-0. [DOI] [PubMed] [Google Scholar]

- Benasich AA, Tomas JJ, Choudhury N, Leppanen PH. The importance of rapid auditory processing ability to early language development: Evidence from converging methodologies. Dev. Psychobiol. 2002;40:278–292. doi: 10.1002/dev.10032. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bendixen A, Andersen SK. Measuring target detection performance in paradigms with high event rates. Clin. Neurophys. 2013;124:928–940. doi: 10.1016/j.clinph.2012.11.012. [DOI] [PubMed] [Google Scholar]

- Bishop DVM. Using mismatch negativity to study central auditory processing in developmental language and literacy impairments: where are we, and where should we be going? Psychol. Bull. 2007;133(4):651–672. doi: 10.1037/0033-2909.133.4.651. [DOI] [PubMed] [Google Scholar]

- Bishop DV, Carlyon RP, Deeks JM, Bishop SJ. Auditory temporal processing impairment: neither necessary nor sufficient for causing language impairment in children. Psychol. Bull. 1999;42:1295–1310. doi: 10.1044/jslhr.4206.1295. [DOI] [PubMed] [Google Scholar]

- Bishop DVM, Snowling MJ. Developmental dyslexia and specific language impairment: Same or different? Psychol. Bull. 2004;130(6):858–886. doi: 10.1037/0033-2909.130.6.858. [DOI] [PubMed] [Google Scholar]

- Bregman AS. Auditory Scene Analysis, MIT, Press, MA. 1990. [Google Scholar]

- Briscoe J, Bishop DV, Norbury CF. Phonological processing, language, and literacy: A comparison of children with mild-to-moderate sensorineural hearing loss and those with specific language impairment. J. Child. Psychol. Psychiatry. 2001;42:329–340. [PubMed] [Google Scholar]

- Carlyon RP, Cusack R, Foxton JM, Robertson IH. Effects of attention and unilateral neglect on auditory stream segregation. J. Exp. Psychol. Hum. Percept. Perform. 2001;27(1):115–127. doi: 10.1037//0096-1523.27.1.115. [DOI] [PubMed] [Google Scholar]

- Catts HW. The relationship between speech-language impairments and reading disabilities. J. Speech Lang. Hear. Res. 1993;36:948–958. doi: 10.1044/jshr.3605.948. [DOI] [PubMed] [Google Scholar]

- Catts HW, Adlof SM, Hogan TP, Weismer ES. Are specific language impairment and dyslexia destinct disorder? J. Speech Lang. Hear. Res. 2005;48:1378–1396. doi: 10.1044/1092-4388(2005/096). [DOI] [PMC free article] [PubMed] [Google Scholar]

- Choudhury N, Leppanen PH, Leevers HJ, Benasich AA. Infant information processing and family history of specific language impairment: converging evidence for RAP deficits from two paradigms. Dev. Sci. 2007;10(2):213–236. doi: 10.1111/j.1467-7687.2007.00546.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Corriveau K, Pasquini E, Goswami U. Basic auditory processing skills and specific language impairment: a new look at an old hypothesis. J. Speech Lang. Hear. Res. 2007;60:647–666. doi: 10.1044/1092-4388(2007/046). [DOI] [PubMed] [Google Scholar]

- Darwin C. Perceptual grouping of speech components. Q. J. Exp. Psychol. 1981;33a:185–207. [Google Scholar]

- Darwin C. Perceiving vowels in the presence of another sound. J. Acoust. Soc. Am. 1984;76:1636–1647. doi: 10.1121/1.391610. [DOI] [PubMed] [Google Scholar]

- Darwin CJ. Listening to speech in the presence of other sounds. Philos. Trans. R. Soc. Lond. B, Biol. Sci. 2008;363(1493):1011–1021. doi: 10.1098/rstb.2007.2156. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Efron R. Temporal perception, aphasia and d'ej'a vu. Brain. 1963;86:403–424. doi: 10.1093/brain/86.3.403. [DOI] [PubMed] [Google Scholar]

- Elliott L, Hammer M. Longitudinal changes in auditory discrimination in normal children and children with language-learning problems. J. Speech Hear. Disord. 1988;53:467–474. doi: 10.1044/jshd.5304.467. [DOI] [PubMed] [Google Scholar]

- Elliot L, Hammer M, Scholl M. Fine-grained auditory discrimination in normal children and children with language-learning problems. J. Speech Hear. Res. 1989;32:112–119. doi: 10.1044/jshr.3201.112. [DOI] [PubMed] [Google Scholar]

- Facoetti A, Lorusso ML, Paganoni P, Cattaneo C, Galli R, Umilta C, Mascetti GG. Auditory and visual automatic attention deficits in developmental dyslexia. Brain Res. Cogn. Brain Res. 2003;16:185–191. doi: 10.1016/s0926-6410(02)00270-7. [DOI] [PubMed] [Google Scholar]

- Farmer ME, Klein RM. The evidence for a temporal processing deficit linked to dyslexia: A review. Psychon. Bull. Rev. 1995;2:460–493. doi: 10.3758/BF03210983. [DOI] [PubMed] [Google Scholar]

- Fazio BB. Arithmetic calculation, short-term memory, and language performance in children with specific language impairment: a 5-year follow-up. J. Speech Lang. Hear. Res. 1999;42(2):420–431. doi: 10.1044/jslhr.4202.420. [DOI] [PubMed] [Google Scholar]

- Gatherole SE, Baddeley AD. Short-term memory may yet be deficient in children with language impairments: a comment on van der Ley & Howard. J. Speech Lang. Hear. Res. 1993;36(6):1193–1207. doi: 10.1044/jshr.3802.463. [DOI] [PubMed] [Google Scholar]

- Goulandris NK, Snowling MJ, Walker I. Is dyslexia a form of specific language impairment? A comparison of dyslexic and language impaired children as adolescents. Ann. Dyslexia. 2000;50:103–120. doi: 10.1007/s11881-000-0019-1. [DOI] [PubMed] [Google Scholar]

- Goswami U, Huss M, Mead N, Fosker T, Verney JP. Perception of patterns of musical beat distribution in phonological developmental dyslexia: Significant longitudinal relations with work reading and reading comprehension. Cortex. 2013;49(5):1363–1376. doi: 10.1016/j.cortex.2012.05.005. [DOI] [PubMed] [Google Scholar]

- Hari R, Renvall H. Impaired processing of rapid stimulus sequences in dyslexia. Trends Cogn. Sci. 2001;5(2):525–532. doi: 10.1016/s1364-6613(00)01801-5. [DOI] [PubMed] [Google Scholar]

- Hartley DE, Moore DR. Auditory processing efficiency deficits in children with developmental language impairments. J. Acoust. Soc. Am. 2002;112(6):2962–2966. doi: 10.1121/1.1512701. [DOI] [PubMed] [Google Scholar]

- Helenius P, Uutela K, Hari R. Auditory stream segregation in dyslexic adults. Brain. 1999;122:907–913. doi: 10.1093/brain/122.5.907. [DOI] [PubMed] [Google Scholar]

- Helzer JR, Champlin CA, Gillam RB. Auditory temporal resolution in specifically language-impaired and age-matched children. Percept. Mot. Skills. 1996;83:1171–1181. doi: 10.2466/pms.1996.83.3f.1171. [DOI] [PubMed] [Google Scholar]

- Huss M, Verney JP, Fosker T, Mead N, Goswami U. Music, rhythm, rise time perception and developmental dyslexia: Perception of musical meter predicts reading and phonology. Cortex. 2011;47(6):674–689. doi: 10.1016/j.cortex.2010.07.010. [DOI] [PubMed] [Google Scholar]

- Johnson MH. Executive function and developmental disorders: The flip side of the coin. Trends Cogn. Sci. 2012;16(9):454–457. doi: 10.1016/j.tics.2012.07.001. [DOI] [PubMed] [Google Scholar]

- Lubert N. Auditory perceptual impairments in children with specific language disorders: a review of the literature. J. Speech Hear. Disord. 1981;46(1):1–9. [PubMed] [Google Scholar]

- McAnally KI, Stein JF. Auditory temporal coding in dyslexia. Proc. Biol. Sci. 1996;63(1373):961–965. doi: 10.1098/rspb.1996.0142. [DOI] [PubMed] [Google Scholar]

- Mengler ED, Hogben JH, Michie P, Bishop DV. Poor frequency discrimination is related to oral language disorder in children: A psychoacoustic study. Dyslexia. 2005;11(3):155–173. doi: 10.1002/dys.302.

- Micheyl C, Carlyon RP, Gutschalk A, Melcher JR, Oxenham AJ, Rauschecker JP, Tian B, Courteney Wilson E. The role of auditory cortex in the formation of auditory streams. Hear. Res. 2007;229(1–2):116–131. doi: 10.1016/j.heares.2007.01.007.

- Miller CA, Leonard LB, Kail RV, Zhang X, Tomblin JB, Francis DJ. Response time in 14-year-olds with language impairment. J Speech Lang Hear Res. 2006;49(4):712–728. doi: 10.1044/1092-4388(2006/052).

- Mody M, Studdert-Kennedy M, Brady S. Speech perception deficits in poor readers: Auditory processing or phonological coding? J. Exp. Child Psychol. 1997;64(2):199–231. doi: 10.1006/jecp.1996.2343.

- Nagarajan S, Mahncke H, Salz T, Tallal P, Roberts T, Merzenich MM. Cortical auditory signal processing in poor readers. Proc. Natl. Acad. Sci. U. S. A. 1999;96(11):6483–6488. doi: 10.1073/pnas.96.11.6483.

- Nittrouer S. Do temporal processing deficits cause phonological processing problems? J. Speech Lang. Hear. Res. 1999;42(4):925–942. doi: 10.1044/jslhr.4204.925.

- Nittrouer S. A new perspective on developmental language problems: Perceptual organization deficits. Perspect. Lang. Learn. Educ. 2012;19(3):87–97. doi: 10.1044/lle19.3.87.

- Nittrouer S, Shune S, Lowenstein JH. What is the deficit in phonological processing deficits: Auditory sensitivity, masking, or category formation? J. Exp. Child Psychol. 2011;108(4):762–785. doi: 10.1016/j.jecp.2010.10.012.

- Petkov CI, O’Connor KN, Benmoshe G, Baynes K, Sutter ML. Auditory perceptual grouping and attention in dyslexia. Brain Res. Cogn. Brain Res. 2005;24(2):343–354. doi: 10.1016/j.cogbrainres.2005.02.021.

- Ramus F, Marshall CR, Rosen S, van der Lely HK. Phonological deficits in specific language impairment and developmental dyslexia: Towards a multidimensional model. Brain. 2013;136(Pt 2):630–645. doi: 10.1093/brain/aws356.

- Ramus F, Rosen S, Dakin SC, Day BL, Castellote JM, White S, Frith U. Theories of developmental dyslexia: Insights from a multiple case study of dyslexic adults. Brain. 2003;126:841–865. doi: 10.1093/brain/awg076.

- Reed MA. Speech perception and the discrimination of brief auditory cues in reading disabled children. J. Exp. Child Psychol. 1989;48(2):270–292. doi: 10.1016/0022-0965(89)90006-4.

- Remez RE, Rubin PE, Berns SM, Pardo JS, Lang JM. On the perceptual organization of speech. Psychol. Rev. 1994;101(1):129–156. doi: 10.1037/0033-295X.101.1.129.

- Richardson U, Thomson JM, Scott SK, Goswami U. Auditory processing skills and phonological representation in dyslexic children. Dyslexia. 2004;10(3):215–233. doi: 10.1002/dys.276.

- Rosen S. Auditory processing in dyslexia and specific language impairment: is there a deficit? What is its nature? Does it explain anything? J. Phon. 2003;31:509–527.

- Rosen S, Manganari E. Is there a relationship between speech and nonspeech auditory processing in children with dyslexia? J. Speech Lang. Hear. Res. 2001;44(4):720–736. doi: 10.1044/1092-4388(2001/057).

- Schulte-Korne G, Deimel W, Bartling J, Remschmidt H. The role of phonological awareness, speech perception, and auditory-temporal processing for dyslexics. Eur. Child Adolesc. Psychiatry. 1999;8(3):28–34. doi: 10.1007/pl00010690.

- Shafer VL, Ponton C, Datta H, Morr ML, Schwartz RG. Neurophysiological indices of attention to speech in children with specific language impairment. Clin. Neurophysiol. 2007;118(6):1230–1243. doi: 10.1016/j.clinph.2007.02.023.

- Sharma M, Purdy SC, Kelly AS. Comorbidity of auditory processing, language, and reading disorders. J. Speech Lang. Hear. Res. 2009;52(3):706–722. doi: 10.1044/1092-4388(2008/07-0226).

- Snowling M. Dyslexia as a phonological deficit: Evidence and implications. Child Psychol. Psychiatry Review. 1998;3:4–11.

- Stackhouse J, Wells B. Children’s Speech and Literacy Difficulties. London: Whurr Publishers; 1997.

- Stein JF, McAnally K. Auditory temporal processing in developmental dyslexics. Irish J. Psychol. 1995;16(3):220–228.

- Stevens C, Sanders L, Neville H. Neurophysiological evidence for selective auditory attention deficits in children with specific language impairment. Brain Res. 2006;1111(1):143–152. doi: 10.1016/j.brainres.2006.06.114.

- Studdert-Kennedy M. Deficits in phoneme awareness do not arise from failures in rapid auditory processing. Read. Writ.: An Interdiscipl J. 2002;15:5–14.

- Sussman E. Integration and segregation in auditory scene analysis. J. Acoust. Soc. Am. 2005;117(3):1285–1298. doi: 10.1121/1.1854312.

- Sussman E, Steinschneider M. Attention effects on auditory scene analysis in children. Neuropsychologia. 2009;47(3):771–785. doi: 10.1016/j.neuropsychologia.2008.12.007.

- Sussman E, Wong R, Horváth J, Winkler I, Wang W. The development of the perceptual organization of sound by frequency separation in 5–11 year-old children. Hear. Res. 2007;225:117–127. doi: 10.1016/j.heares.2006.12.013.

- Sutter ML, Petkov C, Baynes K, O’Connor KN. Auditory scene analysis in dyslexics. Neuroreport. 2000;11:1967–1971. doi: 10.1097/00001756-200006260-00032.

- Tallal P. Rapid auditory processing in normal and disordered language development. J. Speech Hear. Res. 1976;19(3):561–571. doi: 10.1044/jshr.1903.561.

- Tallal P. Language disabilities in children: a perceptual or linguistic deficit? J. Pediatr. Psychol. 1980;5(2):127–140. doi: 10.1093/jpepsy/5.2.127.

- Tallal P. Improving language and literacy is a matter of time. Nat. Rev. Neurosci. 2004;5(9):721–728. doi: 10.1038/nrn1499.

- Tallal P, Merzenich MM, Miller S, Jenkins W. Language learning impairments: integrating basic science, technology, and remediation. Exp. Brain Res. 1998;123:210–219. doi: 10.1007/s002210050563.

- Tallal P, Miller S, Fitch RH. Neurobiological basis of speech: a case for the preeminence of temporal processing. Ann. N. Y. Acad. Sci. 1993;682:27–47. doi: 10.1111/j.1749-6632.1993.tb22957.x.

- Tallal P, Piercy M. Defects of non-verbal auditory perception in children with developmental aphasia. Nature. 1973a;241:468–469. doi: 10.1038/241468a0.

- Tallal P, Piercy M. Developmental aphasia: impaired rate of non-verbal processing as a function of sensory modality. Neuropsychologia. 1973b;11:389–398. doi: 10.1016/0028-3932(73)90025-0.

- Tallal P, Stark RE. Speech acoustic-cue discrimination abilities of normally developing and language-impaired children. J. Acoust. Soc. Am. 1981;69(2):568–574. doi: 10.1121/1.385431.

- Tallal P, Stark RE, Kallman C, Mellits D. Developmental dysphasia: Relation between acoustic processing deficits and verbal processing. Neuropsychologia. 1980;18(3):273–284. doi: 10.1016/0028-3932(80)90123-2.

- Tallal P, Stark RE, Mellits ED. Identification of language-impaired children on the basis of rapid perception and production skills. Brain Lang. 1985;25(2):314–322. doi: 10.1016/0093-934x(85)90087-2.

- Werker JF, Tees RC. Speech perception in severely disabled and average reading children. Can. J. Psychol. 1987;41(1):48–61. doi: 10.1037/h0084150.

- Wright BA, Bowen RW, Zecker SG. Nonlinguistic perceptual deficits associated with reading and language disorders. Curr. Opin. Neurobiol. 2000;10(4):482–486. doi: 10.1016/s0959-4388(00)00119-7.

- Wright BA, Lombardino LJ, King WM, Puranik CS, Leonard CM, Merzenich MM. Deficits in auditory temporal and spectral resolution in language-impaired children. Nature. 1997;387:176–178. doi: 10.1038/387176a0.