Abstract

This review is concerned primarily with psychophysical and physiological evidence relevant to the question of the existence of spatial features or spatial primitives in human vision. The review will be almost exclusively confined to features defined in the luminance domain. The emphasis will be on the experimental and computational methods that have been used for revealing features, rather than on a detailed comparison between different models of feature extraction. Color and texture fall largely outside the scope of the review, though the principles may be similar. Stereo matching and motion matching are also largely excluded because they are covered in other contributions to this volume, although both have addressed the question of the spatial primitives involved in matching. Similarities between different psychophysically-based models will be emphasized rather than minor differences. All the models considered in the review are based on the extraction of directional spatial derivatives of the luminance profile, typically the first and second, but in one case the third order, and all have some form of non-linearity, be it rectification or thresholding.

Keywords: Features, Psychophysics

1. Introduction

Twenty-five years ago, Vision Research was still largely dominated by the simple, multiple-analyzer model of near-threshold vision (see Graham, this volume). Successful as this model was in relating human psychophysics and physiology, it nevertheless seemed to have rather little to say about how the visual image was represented above threshold, where multiple analyzers were combined to produce features such as bars and edges. In the 1980s several schemes emerged for non-linear combination of analyzers into spatially-localized features. The purpose of this review is to survey progress in testing these models, up to the start of 2010.

2. The intrinsic geometry of features

2.1. Features imply discontinuity in representation of the retinal image

Low-pass filtering by the relatively feeble optics of the eye (Helmholtz, 1896) allows us to localize objects with an accuracy an order of magnitude finer than the photoreceptor mosaic (Westheimer, 1979). However, despite the fact that the image is mathematically continuous, we perceive it as more-or-less sharply divided into different regions by features. In this review we shall take features to refer to those physical aspects of the image that are discretely represented and which have a measurable position. Our definition is similar to that by ter Haar Romeny (2003) who calls features “ …special, semantically circumscribed, local meaningful structures (or properties) in the image. Examples are edges, corners, T-junctions, monkey saddles and many more.” In his lines “Composed upon Westminster Bridge, September 3, 1802” Wordsworth adds a few more examples, and emphasizes the role of lighting: “Never did sun more beautifully steep/In his first splendor valley, rock or hill.” Actually, we shall later take some issue with the idea that all features must be ‘semantically circumscribed’.

2.2. General evidence for the importance of features

There was still some doubt in the 1980s about the importance of spatial localization of Fourier components in the image, and thus of features. One type of model used banks of linear filters at different size and orientations centered at each point in the image, and used their relative outputs as the primitive visual code (Wilson & Bergen, 1979).1 Spatial feature models such as MIRAGE (Watt & Morgan, 1983a, 1985) used the same set of filters but used localized features of their output, such as zero-crossings and extrema. Experimental tests concentrated on ‘hyperacuity’2 tasks such as vernier and spatial interval (Westheimer, 1977, 1979, 1981, 1984; Westheimer & McKee, 1975) which involve relative spatial localization of features like bars and blobs. The filter-bank theory could point to the effects of adaptation on orientation (Regan & Beverley, 1985) and spatial frequency (Blakemore & Sutton, 1969) discrimination (see however, Maudarbocus and Ruddock (1973) for a reinterpretation of the latter), and the ability of a four-mechanism local filter bank to account for spatial interval discrimination (Wilson & Bergen, 1979; Wilson & Gelb, 1984b). Perturbation experiments, on the other hand (Morgan, Hole, & Ward, 1990) showed that observers could carry out relative position tasks between pairs of features while ignoring the presence of spatially-jittered bars which were designed to corrupt the output of the putative filter banks. Later studies (Levi, Jiang, & Klein, 1990; Levi & Waugh, 1996; Levi & Westheimer, 1987; Morgan & Regan, 1987) showed that relative contrast perturbations between the two bars in a vernier or spatial interval task did not reduce acuity, and that the two bars could even be of opposite contrast. (For a review of these findings see Morgan (1990) and various Chapters in Bock and Goode (1994).) A widely-accepted resolution of the ‘place token’ vs. ‘filter bank’ dichotomy follows the same lines as the ‘short vs. long-range’ process distinction in motion perception (Anstis, 1980; Braddick, 1974, 1980). The computation of the relative positions of widely-separated features is insensitive to contrast polarity and to irrelevant features and is thus likely to use place-tokens, while ‘short range’ computations such as vernier acuity for abutting bars (Westheimer & Hauske, 1975) are sensitive to flanking features and do not need to be explained by relative positional information (see also Klein & Levi, 1985).

One of the diagnostic attributes of the short-range process in motion has been the ‘missing fundamental’ (MF) effect (Georgeson & Harris, 1990). A square-wave with its fundamental removed has a perceived edge where its remaining Fourier components are in phase but this perceived edge moves backwards if the waveform is moved through one-quarter cycle of its fundamental period, provided that the inter-frame interval of the motion sequence is sufficiently brief to support short-range motion (otherwise, the edge moves forwards). This has been taken to show (Georgeson & Harris, 1990; Lu & Sperling, 1995) that motion direction is computed directly from a motion energy detection dominated by the highest amplitude Fourier component (the 3rd) rather than by a positional token (the edge). There is no equivalent evidence to reveal a short-range process for static spatial hyperacuity. No doubt observers could align the perceived edges of two MF gratings, but they could be doing this from the third harmonic. A critical test would be to compare the relative position threshold for MF gratings with that for the 3rd harmonic alone. Vernier alignment thresholds are highly sensitive to blur (Krauskopf & Farell, 1991; Stigmar, 1970; Watt & Morgan, 1983b). Therefore, if the perceived sharp edge of the MF grating is being used for alignment, thresholds should rise when the >3f components are removed, by a factor greater than predicted by probability summation.

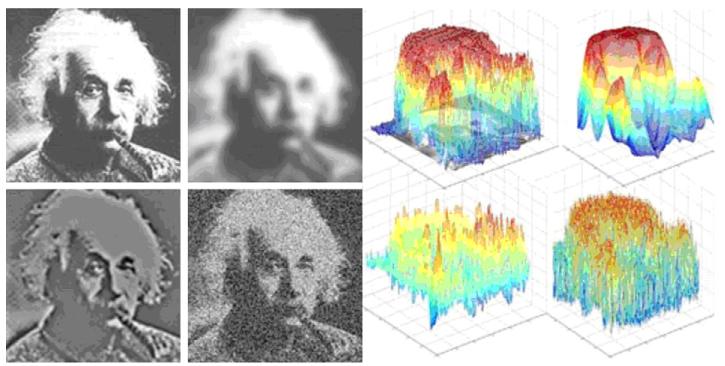

The role of positional information, interpreted as the phase of Fourier components, also received attention in the 1980s. Piotrowski and Campbell (1982) performed global Fourier transforms on various natural images and then swapped either their phases or amplitude spectra. Overwhelmingly, the appearance of the composite images followed that of the phase structure, indicating the importance of spatially localized information such as edges. Phase congruence at edges between different spatial frequency components was proposed by Marr and Hildreth (1980) as an important computational principle, and was later made the central theme of the local-energy model (Morrone & Burr, 1988; Ross, Morrone, & Burr, 1989). In retrospect, the finding that the global amplitude spectrum of a natural image has little influence on its appearance is predictable from the fact that natural images all have the same 1/f form (Field & Brady, 1997), changing the slope of which alters only the perceived amount of blur. Equally obviously, there must be a spatial scale at which amplitude information is important, even if it is in the degenerate case where the Fourier transform is done in patches 1 pixel in size. Morgan, Ross, and Hayes (1991) varied the size of Gaussian-windowed patches in which the amplitudes and phases of the patchwise Fourier transform were swapped between faces, and found a smooth transition between amplitude and phase domination as the patch size increased (Fig. 1). It remains to be determined whether the crossover between amplitude and phase spatial scale is a physical (cyc/image) or physiological (cyc/deg) limit, but informal experiments suggest that the crossover is not much changed by viewing distance, indicating a physical limit.

Fig. 1.

Images (128 × 128 pixels) of two political philosophers (right), one of whom has been discredited. The images on the right contribute their phase spectra of their Fourier transforms to the remaining images in the same row and their amplitude spectra to the other row. The Fourier transform was performed patchwise in overlapping, Gaussian-windowed patches of 62, 32, 16, 8 and 4 pixel width and height (large patches on the left). Note that phase information dominates perceived appearance in large patches, and amplitude in small with hybrids in between. Some viewers may see rivalry in the intermediate cases and other illusory figures such as Tony Blair (from Morgan et al., 1991).
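The logic of swapping amplitude and phase spectra can be illustrated in one dimension. The following is only a toy sketch, not the patchwise 2-D procedure of Morgan et al. (1991): the stimuli (`blob`, `line`), the signal length and the helper names are all assumptions made for illustration. It combines the amplitude spectrum of a blurred blob with the phase spectrum of a sharp line, and the resulting hybrid is a blob located where the line was, so that position follows the phase donor while blur follows the amplitude donor.

```python
import cmath
import math

def dft(signal):
    """Discrete Fourier transform by direct summation (fine for short signals)."""
    n = len(signal)
    return [sum(signal[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]

def idft(spectrum):
    """Inverse DFT, keeping the real part."""
    n = len(spectrum)
    return [sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)).real / n
            for t in range(n)]

def hybrid(amplitude_source, phase_source):
    """Amplitude spectrum of one signal combined with the phase spectrum of the other."""
    amplitudes = [abs(c) for c in dft(amplitude_source)]
    phases = [cmath.phase(c) for c in dft(phase_source)]
    return idft([a * cmath.exp(1j * p) for a, p in zip(amplitudes, phases)])

n = 32  # hypothetical signal length; the DFT treats the signal as periodic

def circular_distance(t, centre):
    d = abs(t - centre)
    return min(d, n - d)

# Hypothetical stimuli: a blurred blob (sigma = 2) at sample 8, a sharp line at sample 20.
blob = [math.exp(-circular_distance(t, 8) ** 2 / (2.0 * 2.0 ** 2)) for t in range(n)]
line = [1.0 if t == 20 else 0.0 for t in range(n)]

mixed = hybrid(amplitude_source=blob, phase_source=line)
peak = max(range(n), key=lambda t: mixed[t])  # location of the hybrid's maximum
```

Here `peak` falls on the position of the line, while the profile around it keeps the width of the blob.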

Field and Brady (1997) reported that natural scenes show considerable variability in their amplitude spectra, with individual scenes showing falloffs which are often steeper or shallower than 1/f. Using a new measure of image structure (the “rectified contrast spectrum” or “RCS”) on a set of calibrated natural images, Field and Brady (1997) demonstrated that a large part of the variability in the spectra is due to differences in the sparseness of local structure at different scales. Well focused images have structures (e.g., edges) which have roughly the same magnitude across scale. Elder (1999) reported a novel method for inverting the edge code to reconstruct the perceptually accurate estimate of the original image, and showed that the proposed representation embodies virtually all of the perceptually relevant information contained in a natural image. There is thus general agreement in the computational literature that natural images are characterized by localized structures like edges but this does not tell us directly what is represented by the human visual system (Section 5.2 below).

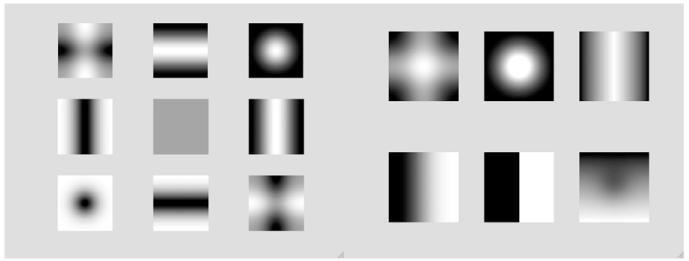

2.3. The retinal image as a landscape

The short list of features above suggests an analogy with the features of a physical landscape. The physical features of landscape used by geographers and in ordinary speech are also discrete, semantically circumscribed and localized in space, but are present in actually continuous surfaces. The analogy can be taken further by representing the image as a landscape L(x, y) with two dimensions (x, y) of space and a third dimension of luminance (Koenderink & van Doorn, 1987b; ter Haar Romeny, 2003; Watt, 1991). Examples of image landscapes are shown in Fig. 2. Landscapes neatly illustrate the important concept of spatial scale in images. Blurring an image is like eroding a landscape, or like pouring water over a sandcastle; the small-scale features like battlements are removed and only the smooth general outline remains. Conversely, adding pixel noise to the image adds a large set of small, localized features and makes the landscape more jagged. If we take an image, blur it, and subtract the blurred image from the original (the photographic technique called unsharp masking) we have an image that has only fine detail and no large-scale structure. Note that the picture of the famous person is recognizable in the blurred version, the noisy version and the unsharp-masked version, despite the huge differences between the landscapes of these images. Most people, if asked, would say that this is because they contain the same ‘features’ like eyes and a moustache. This illustrates the considerable difficulties with which a theory of features is going to have to grapple.

Fig. 2.

The images in the left-hand panels show: (a, top left) the image of a famous face, (b, top right) the image blurred, (c, bottom left) the image with the blurred image subtracted and (d, bottom right) the image with pixel noise added. The images on the right show landscapes of the corresponding images on the left. Pixel intensity is represented by height of the landscape, with color added (red is high and blue is low). From Morgan (2003) with permission.

The overwhelming majority of psychophysical work on features has been done with just three basic types: cliffs (‘edges’), ridges (‘bars’) and summits (‘blobs’), and with reversed contrast versions (valleys and basins). The ubiquitous ‘grating’ is just a set of ridges and valleys. The ‘Gabor’ is a set of ridges/valleys with local summits and basins. It is an interesting fact in its own right that such a semantically-impoverished list has received so much attention, when ordinary language is much richer. In the past it was richer still:

“At Wivenhoe, in Essex, the low line of the hills has the shape of the heels of a person lying face-down. The name contains the shape: a hoh is a ridge that rises to a point and has a concave end. At Wooller in Northumberland, however, the hilltop is level, with a convex sloping shoulder. The hidden word here is ofer, ‘flat topped ridge.’ Early Anglo-Saxon settlers in England, observing, walking and working the landscape, defined its ups and downs with a subtlety largely missing from modern, motorized English.” (The Economist, May 16th 2009, emphasis added; the origin of ‘Wooller’ is in fact disputed.)

Other languages have similar variety. The Celts gave us the distinction between (i) a Corrie (Cwm), (ii) a Glen and (iii) a Strath. A Corrie has one outlet and is bounded on three sides by ridges whereas the other two are bounded on two sides, have two outlets and a watershed between. A Glen is typically narrow sided and deep (and usually glacial), whereas a Strath is broad, shallow and (usually) fluvial.3 The hard-to-translate Welsh adjective ‘Bach’ refers to a nook, corner or bend in a river. It would be interesting to list the definitive Bedouin dictionary of sand dunes, but it is too long to include here.

2.4. Differential geometry to describe features

Since the image landscape is continuous, and since features invariably involve some change across space, the natural mathematical language for describing features is that of differential geometry (Koenderink & van Doorn, 1987b). A landscape can be described by the set of derivatives at each of its points. Since we have to distinguish edges from summits we know immediately that the derivatives must be directional (partial) describing the changes in a particular direction. The first-order derivatives give us the slope, as a mountaineer would understand it, the second order derivatives give us the change of slope or curvature (‘the slope gets steeper as we approach the summit’) and so on. The set of all directional derivatives defines the intrinsic geometry of a surface, that is, the properties that can be determined by measurements carried out on the surface itself, without appeal to anything outside. The idea of intrinsic geometry can be generalized to any number of dimensions, and it explains how we know we live on a curved surface and why physicists can determine that the four-dimensional manifold of space–time is curved, without the need of any 5th dimension.

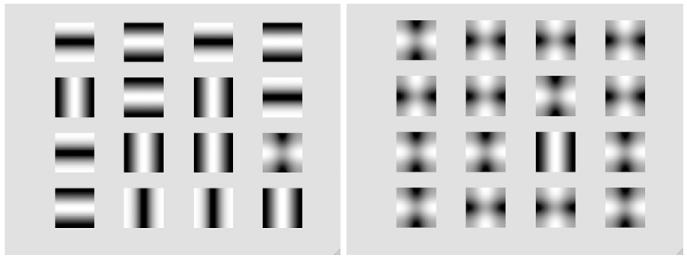

In differential geometry, every point has an associated set of curvatures, one for every possible direction. For example, on a ridge the curvature is zero going along the spine of the ridge and positive at right angles to the spine. The maximal curvature, taken over all directions, is one of the two principal curvatures. The other principal curvature is the minimum and is always at right angles to the maximum, a result proved by Euler in 1760. The directions of the principal curvatures are the principal directions. If we call the two orthogonal principal curvatures kx and ky and allow each of them to be −1, 0 or 1, then we obtain the classification of shapes shown in Table 1, and their pictorial representations in Fig. 3 (left hand side).

Table 1.

A classification of shapes according to their principal curvatures, kx and ky. The rightmost column shows the product of the two principal curvatures, known as the Gaussian curvature. Note that the Gaussian curvatures of a plain and a ridge are the same, as are those of a summit and a basin. The saddle is unique in having a negative Gaussian curvature.

| Shapes | kx | ky | kx · ky |
|---|---|---|---|
| Plain | 0 | 0 | 0 |
| Ridge | 1 | 0 | 0 |
| Summit | 1 | 1 | 1 |
| Basin | −1 | −1 | 1 |
| Saddle | 1 | −1 | −1 |

Fig. 3.

The left-hand figure shows the results of all combinations of principal curvatures {−1, 0, 1} in the x direction (horizontal axis) and {−1, 0, 1} in the vertical direction (vertical axis). The plane with principal curvatures {0, 0} lies in the middle and can be used to inspect afterimages, which are similar to the image at 180° from the adapting image. The right-hand figure shows, from left to right and top to bottom: (a) two intersecting ridges, (b) a plateau with a conspicuous white Mach band, (c) a tent ridge, (d) a Gaussian-blurred edge, (e) a sharp edge and (f) an approximation to a Cwm.

Koenderink called the angle of the vector pointing from the centre of Fig. 3a the shape index. Thus, in the above diagram the vector at 0° points to the ridge, while the vector of 180° points to the valley. It can be seen that a large variety of interesting combinations of basic shapes can be generated in this way, and economically described.
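The classification of Table 1 and the angle described above can be written down in a few lines. This is a minimal sketch under stated assumptions: the function names are hypothetical, the sign convention is that of Table 1 (ridge positive, valley negative), and the angle is simply the direction of the vector (kx, ky) as described in the text, which may differ in scaling from the shape index as formally defined in Koenderink's own publications.

```python
import math

def classify(kx, ky):
    """Name the local shape from the signs of the two principal curvatures,
    using the sign convention of Table 1 (ridge = +1, valley = -1)."""
    sx = (kx > 0) - (kx < 0)
    sy = (ky > 0) - (ky < 0)
    if sx == 0 and sy == 0:
        return "plain"
    if sx * sy < 0:
        return "saddle"
    if sx * sy > 0:
        return "summit" if sx > 0 else "basin"
    # Exactly one of the two curvatures is zero.
    return "ridge" if sx + sy > 0 else "valley"

def gaussian_curvature(kx, ky):
    """Product of the principal curvatures (rightmost column of Table 1)."""
    return kx * ky

def shape_angle_deg(kx, ky):
    """Direction of the vector (kx, ky) in degrees, in [0, 360).
    The angle is not meaningful for the plain, where kx = ky = 0."""
    return math.degrees(math.atan2(ky, kx)) % 360.0

shapes = {pair: classify(*pair) for pair in [(0, 0), (1, 0), (1, 1), (-1, -1), (1, -1), (-1, 0)]}
```

With these definitions the vector at 0° is the ridge (1, 0) and the vector at 180° is the valley (−1, 0), as stated in the text.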

Other landscape features can be generated by combining different elementary shapes or by using different blurring functions. Fig. 3 (right hand side) (a) shows two linear intersecting ridges (NASA/JPL http://hirise.lpl.arizona.edu/PSP_005392_1885) and is known to psychophysicists as a plaid (more correctly it should be a tartan, since a plaid is a piece of cloth, not a pattern). (b) a plateau (a truncated summit), which psychophysicists use to show a very nice white Mach band. (c) a tent ridge, which does not properly belong here as it has a discontinuity, but which would be blurred in the retinal image to a well-behaved ridge. (d) a cliff, which in psychophysics would be called a ‘blurred edge’. It is actually just one side of a ridge. (e) is its sharp equivalent. (f) an approximation to a Cwm, made by subtracting a small summit from a slope.

2.4.1. Gaussian curvature

The product of the principal curvatures K = kx · ky is known as the Gaussian curvature. It will be seen from Table 1 that the Gaussian curvature of a ridge and a plain are the same, as are those of summits and basins. The reason that Gaussian curvature is mathematically useful is that it defines the basic topology of the surface, which cannot be changed without tearing it apart. A piece of paper can be folded to produce a ridge, and then unfolded again into a plane, but it cannot be bent into a saddle. A local summit on a balloon can be pressed into a basin, and then pushed out into a summit again, but it cannot be abolished to make a local plain. The fact that the Gaussian curvature of a ridge and plain are identical would at first sight seem to limit its usefulness to the psychophysicist, but Barth, Zetzsche, and Rentschler (1998) proposed that the visual system contains mechanisms sensitive to local Gaussian curvature. Nam, Solomon, Morgan, Wright, and Chubb (2009) reasoned that if this were true, then an image patch with non-zero curvatures (a plaid) should ‘pop-out’ from a set of patches with zero curvature (gratings). Nam et al. thereby introduced the criterion for a feature that it should be pre-attentive. We shall return later to discuss whether this restriction is justified. What Nam et al. actually found was that plaids do ‘pop out’ from gratings, but only if their components are similar in spatial frequency. They concluded that mechanisms sensitive to Gaussian curvature exist, but that they are band-limited.

Nam et al.’s experiment does not distinguish the different signs of curvature, both of which are present in a plaid. To see whether regions of negative curvature ‘pop out’ from uncurved regions, stimuli such as those in Fig. 4 could be employed. To do the experiment properly the energy of the patches would have to be carefully equated or jittered. Preliminary impressions are that the search is not as easy as one might have supposed, but whether there is a significant set-size effect or not has yet to be determined.
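The difference between a grating and a plaid in terms of curvature can be checked numerically. The sketch below is illustrative only: the stimuli are idealized unit-amplitude sinusoids, the helper names are hypothetical, and the determinant of the Hessian of the luminance function is used as a stand-in for Gaussian curvature (it has the same sign, but differs from it by a positive factor that depends on the local gradient).

```python
import math

def hessian_det(f, x, y, h=1e-3):
    """Determinant of the Hessian of f at (x, y), by central finite differences."""
    fxx = (f(x + h, y) - 2 * f(x, y) + f(x - h, y)) / h**2
    fyy = (f(x, y + h) - 2 * f(x, y) + f(x, y - h)) / h**2
    fxy = (f(x + h, y + h) - f(x + h, y - h)
           - f(x - h, y + h) + f(x - h, y - h)) / (4 * h**2)
    return fxx * fyy - fxy**2

def grating(x, y):
    """Vertical sinusoidal grating: luminance varies along x only."""
    return math.sin(x)

def plaid(x, y):
    """Sum of two orthogonal gratings of the same spatial frequency."""
    return math.sin(x) + math.sin(y)

half_pi = math.pi / 2
on_grating = hessian_det(grating, 0.3, 0.7)          # zero everywhere on a grating
on_plaid_summit = hessian_det(plaid, half_pi, half_pi)   # positive: summit
on_plaid_saddle = hessian_det(plaid, half_pi, -half_pi)  # negative: saddle
```

The grating has zero curvature product at every point, whereas the plaid contains regions of both signs, which is why a plaid among gratings cannot by itself separate the contributions of positive and negative curvature.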

Fig. 4.

Does a patch with negative Gaussian curvature pop out from distracters of zero Gaussian curvature (left) and is the reverse true (right)?

2.4.2. Gradients, derivatives and zero-crossings

We next define these terms because they are necessary for understanding the measurements that have been made of natural image landscapes.

3. Spatial derivatives

3.1. Gaussian scale-space

In a seminal paper, Koenderink and van Doorn (1987b) pointed out that local geometry of the image depends on the partial derivatives of luminance (see above), and that these derivatives could be calculated by local filters with properties similar to those of the receptive fields of single cells in the visual pathway. With rather little error (Hawken & Parker, 1987; Parker & Hawken, 1985) and with enormous computational advantages, these receptive field profiles can be treated as the point-spread-functions of filters that are various orders of derivatives of the Gaussian. A filter with a Gaussian profile in the frequency domain also has a Gaussian point-spread function (Bracewell, 1978). If we place a filter at a particular point in the image, multiply its point-spread function point-for-point with the area of the image that it covers, and sum over all points, we have a new pixel value at that point. Repeating this at every point in the image is referred to as convolution, and it produces a new image, dubbed by Robson (1980) the ‘neural image’.
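The multiply-and-sum operation just described can be sketched for a 1-D luminance profile. This is a minimal illustration, not a model of any particular receptive field: the function names, the 4-sigma kernel radius and the edge-replication rule at the borders of the signal are all assumptions.

```python
import math

def gaussian_kernel(sigma, radius=None):
    """Sampled, unit-sum Gaussian point-spread function."""
    if radius is None:
        radius = int(math.ceil(4 * sigma))
    weights = [math.exp(-(k * k) / (2.0 * sigma * sigma))
               for k in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def filter_image(image, kernel):
    """Centre the kernel on each sample, multiply point-for-point and sum.
    Samples beyond the ends are taken to equal the nearest end value."""
    radius = len(kernel) // 2
    last = len(image) - 1
    out = []
    for x in range(len(image)):
        acc = 0.0
        for k in range(-radius, radius + 1):
            idx = min(max(x - k, 0), last)
            acc += kernel[k + radius] * image[idx]
        out.append(acc)
    return out

# A sharp luminance edge between samples 19 and 20, blurred with sigma = 2 samples.
edge = [0.0] * 20 + [1.0] * 20
neural_image = filter_image(edge, gaussian_kernel(2.0))
```

The output rises smoothly from 0 to 1 across the edge, so the sharp step in the input has become a graded slope in the filtered signal.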

One great advantage of the Gaussian filter for landscape analysis, as pointed out by Koenderink and van Doorn, is that it guarantees a representation that is specific to a particular spatial scale. Convolving an image with a Gaussian or one of its derivatives is equivalent to multiplying the spatial frequency spectrum of the image with a Gaussian and thus band-limiting it. The larger the Gaussian filter, as measured by its standard deviation, the coarser the scale of the analysis (see Fig. 2 for the transformation of a landscape into a blurry version by convolution with a Gaussian). Thus, a multi-scale representation of the image can be achieved by using a battery of Gaussian filters of different size. A scale-space stack (Lindeberg, 1998; ter Haar Romeny, 2003; Witkin, 1983, 1984) is a stack of 2-D images where spatial scale is the third dimension. The tracking of features along the third dimension is a potentially powerful method for encoding the type of features and their attributes such as blur (Georgeson, May, Freeman, & Hesse, 2007, see Appendix A). For example, a wide bar will appear in scale-space as two spatially-separated edges at fine scale, changing into a single peak at a coarse scale. Note, however, that the order of the derivative also affects the spatial scale, since differentiation is equivalent in the Fourier domain to multiplying the Fourier transform by i2πf (that is, scaling its amplitude by 2πf), where f is frequency.
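The behaviour of a wide bar across scale can be reproduced with a two-level scale-space stack. The sketch below is an assumption-laden illustration rather than any published model: it uses second-derivative-of-Gaussian filters at two arbitrarily chosen scales (sigma = 2 and 12 samples), a bar 21 samples wide, and a hypothetical helper `count_troughs` that counts the separate negative lobes of the response.

```python
import math

def d2_gaussian_kernel(sigma):
    """Sampled second derivative of a Gaussian with standard deviation sigma."""
    radius = int(math.ceil(5 * sigma))
    norm = 1.0 / (sigma * math.sqrt(2.0 * math.pi))
    return [norm * (k * k / sigma**4 - 1.0 / sigma**2)
            * math.exp(-(k * k) / (2.0 * sigma**2))
            for k in range(-radius, radius + 1)]

def convolve(signal, kernel):
    """1-D convolution; samples beyond the ends repeat the nearest end value."""
    radius = len(kernel) // 2
    last = len(signal) - 1
    return [sum(kernel[k + radius] * signal[min(max(x - k, 0), last)]
                for k in range(-radius, radius + 1))
            for x in range(len(signal))]

def count_troughs(response, fraction=0.5):
    """Count local minima deeper than `fraction` of the deepest response."""
    floor = fraction * min(response)
    return sum(1 for i in range(1, len(response) - 1)
               if response[i] < floor
               and response[i] <= response[i - 1]
               and response[i] < response[i + 1])

# A bright bar 21 samples wide on a dark background.
bar = [0.0] * 90 + [1.0] * 21 + [0.0] * 90
scale_space = {sigma: convolve(bar, d2_gaussian_kernel(sigma)) for sigma in (2.0, 12.0)}
troughs = {sigma: count_troughs(r) for sigma, r in scale_space.items()}
```

At the fine scale the response contains two separate negative lobes, one just inside each edge of the bar; at the coarse scale they have merged into a single lobe centred on the bar.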

Another useful result (Bracewell, 1978) is that convolving an image with the nth derivative of a filter is equivalent to convolving the nth derivative of the image with the filter. Thus, to find the derivatives that are needed to characterize the local geometry of the image at a particular spatial scale, and thereby to find ‘features’, we need only convolve the image with derivatives of a Gaussian.
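This equivalence can be checked numerically for the first derivative. The following sketch makes several assumptions: a sharp edge as the image, a Gaussian of sigma = 3 samples, an analytically differentiated kernel on one route, and a central-difference derivative of the image on the other. Because both routes are sampled approximations, they are expected to agree only to within a small discretization error, not exactly.

```python
import math

def gaussian(x, sigma):
    return math.exp(-(x * x) / (2.0 * sigma * sigma)) / (sigma * math.sqrt(2.0 * math.pi))

def d1_gaussian(x, sigma):
    """First derivative of the Gaussian with respect to x."""
    return -x / (sigma * sigma) * gaussian(x, sigma)

def convolve(signal, kernel):
    """1-D convolution; samples beyond the ends repeat the nearest end value."""
    radius = len(kernel) // 2
    last = len(signal) - 1
    return [sum(kernel[k + radius] * signal[min(max(x - k, 0), last)]
                for k in range(-radius, radius + 1))
            for x in range(len(signal))]

sigma, radius = 3.0, 15
g = [gaussian(k, sigma) for k in range(-radius, radius + 1)]
dg = [d1_gaussian(k, sigma) for k in range(-radius, radius + 1)]

# Image: a sharp edge. Its central-difference derivative is a narrow pulse at the edge.
edge = [0.0] * 40 + [1.0] * 40
n = len(edge)
d_edge = [0.0] + [(edge[i + 1] - edge[i - 1]) / 2.0 for i in range(1, n - 1)] + [0.0]

route_a = convolve(edge, dg)     # derivative-of-Gaussian filter applied to the image
route_b = convolve(d_edge, g)    # Gaussian filter applied to the differentiated image
worst = max(abs(a - b) for a, b in zip(route_a, route_b))
```

Both routes yield a Gaussian-shaped peak at the location of the edge, and `worst`, the largest disagreement between them, is a small fraction of the peak height.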

3.2. Receptive fields

3.2.1. Receptive fields as gradient filters

Turning to physiology, we find that the receptive field profiles of retinal ganglion and lateral geniculate cells, and those of the input layer IVc of V1, are mostly circular and can be approximated by the difference between two 2-D Gaussian functions with different standard deviations (Enroth-Cugell, Robson, Schweitzer-Tong, & Watson, 1983; Robson, 1975). The resulting ‘Mexican Hat’ profile is similar to the second-derivative of a Gaussian (Marr & Hildreth, 1980). The closest approximation to the Laplacian of a Gaussian is a difference of Gaussians with a ratio of 1:1.6 of the centre to surround standard deviations. Thus, these cells can be considered to be computing the non-directional second-derivative of the image landscape. Simple cells in layers of cortical area V1 other than IVc are elongated and can be modeled by elongated Gaussian envelopes differentiated at right angles to their spine. If the order of differentiation is 1, they have an asymmetrical profile with a ridge on one side and a parallel valley on the other. The ridge and valley are necessarily separated by a zero point, referred to as a zero-crossing. Unsurprisingly these cells respond vigorously to ridges and valleys, but this does not mean that they are ridge or valley detectors, any more than they are detectors of edges, to which they also respond, a point emphasized by Koenderink and van Doorn (1987a).
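The centre-surround profile can be sketched as a function of radius. This is an illustration under assumptions: the centre standard deviation is set to 1 in arbitrary units, both Gaussians have unit volume, and the comparison ‘Mexican hat’ is matched to the difference of Gaussians simply by equating their zero-crossing radii, which is a choice made here for convenience rather than a procedure taken from the cited papers.

```python
import math

def gaussian2d(r, sigma):
    """Circularly symmetric, unit-volume 2-D Gaussian evaluated at radius r."""
    return math.exp(-(r * r) / (2.0 * sigma * sigma)) / (2.0 * math.pi * sigma * sigma)

def dog(r, sigma_centre, ratio=1.6):
    """Difference of Gaussians: narrow centre minus broader surround."""
    return gaussian2d(r, sigma_centre) - gaussian2d(r, ratio * sigma_centre)

def mexican_hat(r, sigma):
    """Sign-inverted Laplacian of a 2-D Gaussian (positive at the centre)."""
    return (2.0 / sigma**2 - (r * r) / sigma**4) * gaussian2d(r, sigma)

def zero_radius(profile, lo=0.5, hi=3.0, steps=60):
    """Bisection for the radius at which a centre-surround profile changes sign."""
    for _ in range(steps):
        mid = 0.5 * (lo + hi)
        if profile(mid) > 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

r0 = zero_radius(lambda r: dog(r, 1.0))
sigma_match = r0 / math.sqrt(2.0)  # the Mexican hat crosses zero at sqrt(2) * sigma
```

Both profiles are positive at the centre and negative in the surround, and with `sigma_match` they change sign at the same radius `r0`.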

If the order of differentiation is 2, the filter profile is a ridge with a valley on either side, or the reverse. They have ‘even’ symmetry and, again unsurprisingly, respond best to ridges, but are not ‘ridge detectors’ since they also respond to edges and even to summits and hollows of the right scale.

3.2.2. Linearity of receptive fields

An important question about the early filters from the point of view of feature extraction is whether they are linear. Do they respond to one polarity of a feature alone, or to both? A central aspect of the MIRAGE model is that positive and negative responses of the filters are fed into different channels by half-wave rectification before they are combined across spatial scale. It is this that preserves, in some but not all circumstances, the features in the high spatial frequency channels (zero-bounded regions) even after they are added to low frequency channels (see Figs. 5 and 3 from Watt & Morgan, 1985). Watt and Morgan justified their choice of half-wave rectification physiologically by the separation of on- and off channels in different classes of retinal ganglion cell and in the LGN, where they occur in different layers. More recently, Georgeson et al. (2007) have proposed another half-wave rectification (HWR) scheme in which the outputs of linear first-derivative Gaussian filters are half-wave rectified before being sent in a second stage to second-derivative Gaussians. Several attempts have been made to explain how apparently linear cortical cells can avoid inheriting the nonlinearities of LGN cells (Tolhurst & Dean, 1990; Wielaard, Shelley, McLaughlin, & Shapley, 2001). These linear cells can be modeled with a biphasic impulse response, causing them to respond at the onset of a stimulus of their preferred phase, or at the offset of a stimulus of the anti-preferred phase (Williams & Shapley, 2007). This makes them unsuitable for edge-polarity encoding, or for the purposes of MIRAGE. Recently, however, Williams and Shapley (2007) have reported that although many cells in layers 4B and C are of this kind, cells in Layers 2/3 show very little response at all to anti-preferred stimuli. Williams and Shapley (2007) note that this makes them suitable for performing the MIRAGE computation.
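Why rectification before combination matters can be seen with a toy example. The numbers below are invented for illustration and do not come from any filter or dataset, and the function names are hypothetical; the sketch only shows the generic operation of splitting signed responses into positive and negative channels and summing each channel separately across scale.

```python
def half_wave_rectify(response):
    """Split a signed filter response into separate positive and negative channels."""
    on = [max(v, 0.0) for v in response]
    off = [min(v, 0.0) for v in response]
    return on, off

def combine_across_scales(responses):
    """Rectify each scale first, then add the positive and negative parts separately."""
    n = len(responses[0])
    on_sum, off_sum = [0.0] * n, [0.0] * n
    for response in responses:
        on, off = half_wave_rectify(response)
        on_sum = [a + b for a, b in zip(on_sum, on)]
        off_sum = [a + b for a, b in zip(off_sum, off)]
    return on_sum, off_sum

# Invented responses at two scales that cancel at sample 1 if added linearly.
fine = [0.0, 2.0, -1.0, 0.0]
coarse = [0.0, -2.0, -1.0, 0.0]
linear_sum = [a + b for a, b in zip(fine, coarse)]
on_sum, off_sum = combine_across_scales([fine, coarse])
```

In the linear sum the positive fine-scale response at sample 1 is cancelled by the coarse-scale response, whereas it survives intact in the positive channel when rectification precedes summation.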

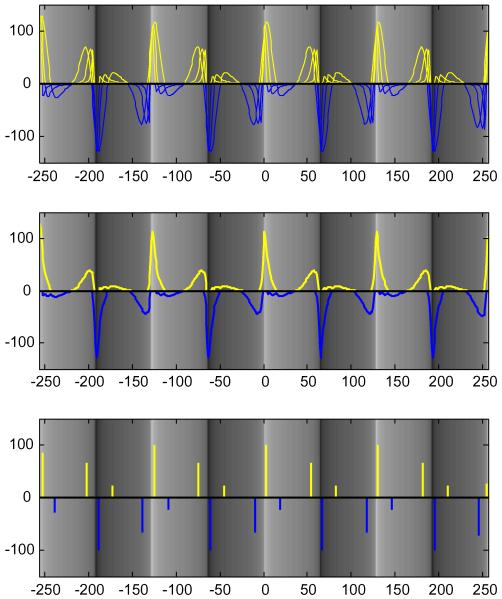

Fig. 5.

The figure, supplied by Roger Watt, illustrates the appearance of a class of 1-D stimuli introduced by Morrone and Burr (1988) in which the amplitude spectrum is that of a (partially blurred) square-wave and all Fourier components have a common phase at the origin. The arrival phase of all components at the origin (x = 0) is π/4 (45°), giving rise to an asymmetrical luminance profile. Most observers see a bright bar at or near the origin. The top panel in addition shows the responses of a bank of four Laplacian-of-Gaussian filters at four spatial scales (Watt & Morgan, 1985) with the half-wave rectified outputs shown as yellow and blue for positive and negative respectively. In the second panel the positive responses are added over all the filters, as (separately) are the negative responses. The final (bottom) panel shows the centroids of zero-bounded regions. In agreement with the local-energy model, a feature is located close to the phase-congruent origin. In agreement with Hesse and Georgeson (2005), the position is shifted slightly to one side. Also in agreement with Hesse and Georgeson (2005) there are features to either side of the bar, asymmetrically placed.
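The last step in the caption, locating the centroids of zero-bounded regions, can be sketched as follows. This is a minimal illustration with hypothetical function names and an invented summed positive channel; it is not the MIRAGE implementation, and the tolerance `eps` used to decide what counts as zero is an assumption.

```python
def zero_bounded_regions(signal, eps=1e-12):
    """Return (start, stop) index pairs of maximal runs where |signal| > eps."""
    regions, start = [], None
    for i, v in enumerate(signal):
        if abs(v) > eps and start is None:
            start = i
        elif abs(v) <= eps and start is not None:
            regions.append((start, i))
            start = None
    if start is not None:
        regions.append((start, len(signal)))
    return regions

def centroid(signal, start, stop):
    """Response-weighted mean position of one zero-bounded region."""
    mass = sum(signal[i] for i in range(start, stop))
    return sum(i * signal[i] for i in range(start, stop)) / mass

# Invented summed positive channel containing two zero-bounded regions.
on_sum = [0.0, 0.0, 1.0, 3.0, 1.0, 0.0, 0.0, 2.0, 2.0, 0.0]
features = [centroid(on_sum, a, b) for a, b in zero_bounded_regions(on_sum)]
```

Each region is reduced to a single position, so the detailed shape of the response within a region is discarded at this stage.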

4. The problem of representation

The ‘neural image’ is itself a landscape, consisting of features like ridges and summits. If we are to avoid an infinite regress, we must not ask how we detect ridges and summits in the neural image. It is pointless to look for a feature in a representation of an image if the identical feature is present in the original image. (The keyword here is ‘identical’.) There are two very different approaches that can be taken to this problem of regress. One is to say that we should not look for further features in the image at all. The image tells us the derivatives at each point and we know that this is sufficient to reconstruct the local geometry. The pointwise derivatives form a huge dataset which must be sent to a higher neural network to be interpreted. As Koenderink and van Doorn (1987a, 1987b) say: ‘The local geometry is represented in the jet as a whole and features are best defined as functions on the jet.’ (A ‘jet’ can be thought of as a particular order of filter in this context.) This position is entirely logical, if a bit disappointing. Its main drawback from the point of view of the psychophysicist trying to test it is that it makes few testable predictions about what image landscape features are and are not represented at the putative later stage. All it tells us is that we know at every point in the image whether we are on a ridge, in a valley, on a summit or in a deep depression. It tells us what direction the ridge is pointing to locally. It does not tell us how long the ridge is, or whether it has a summit on it, or whether there are other ridges nearby. All this is left to a later stage, where such information may or may not be represented. In other words, all we want to know is implicit in the pointwise filter outputs, but most things have yet to be made explicit.
Again quoting Koenderink and van Doorn: ‘The sensorium4 does not compute perceptions at all but merely embodies the structure of the environment in a convenient form.’ The key word here is ‘convenient’. If the pointwise derivative representation is an advantage over the original image, it must be because it is easier for a higher neural network to deal with than raw pixel values, because a lot of the heavy lifting has been done. The most obvious thing that has been done is to compare pixel values over the regions of the receptive field, to compute a meaningful property of the image such as curvature.

A radically different approach to the problem of representation is to grasp the nettle of regress and to look for easily-computable spatial features in the neural image/sensorium. Obviously, these should not be the same as the features in the original landscape, but they could be other kinds of feature, found in the neural image alone. A key aspect of feature models is that they assert an irreversible loss of information at the stage where the neural image is converted to features. For example, Watt & Morgan (1983a, 1983b) looked at blur discrimination for blurred edges of different luminance waveform types (sinusoidal, gaussian blurred, etc.) and found that the discrimination data could all be summarized adequately by one dipper function expressed on the primitives (zero-bounded regions) not on the various waveforms. The implication was that the difference between the luminance profiles was being lost by the process of conversion to a spatial primitive code. Amongst many other examples of potentially disadvantageous loss of information may be cited the curious finding by Watt (1986) that if a vertical vernier target is crossed by a couple of horizontal lines, that are irrelevant to the task, then performance gets worse by exactly the amount one would expect if the line crossings were segmenting the target into independent parts.

The main candidate for features in this sense has been the zero-crossing in the first or second spatial derivative of the image, already mentioned as forming a boundary between ridges and valleys in the neural image. Marr (1982) and Marr and Hildreth (1980) proposed zero-crossings as convenient markers for the outlines of objects in filtered images. They asserted, and demonstrated for some cases, that zero-crossings were often coincident across spatial scale if they came from the boundary of a real object. When they are not, as in coarsely-sampled images of the Harmon-Julesz type, perception of the sampled structure fails but can be recovered by removing the middle spatial frequency band to make a transparent image (Harmon & Julesz, 1973) or by using pixel noise to mask the interfering high spatial frequencies (Morrone, Burr, & Ross, 1983). Around sufficiently uncluttered objects, zero-crossings in the first or second derivative form closed curves, or ‘isophots’ of value zero (ter Haar Romeny, 2003). The closed curves are a tempting target for extracting further properties of an object, such as its general orientation and size (Watt, 1991).

Mach bands are seen at the beginning and end of linear luminance ramps. An example is seen at the edge of the Plateau in Fig. 3. Mach (1906) was the first to suggest that the visual system differentiates the image on the basis of the appearance of the eponymous bands. His historical account (1926) in The Principles of Physical Optics (Mach, 2003) is fascinating, because he describes a long history in which the bands had been mistakenly presented as evidence for the wave theory of light! Mach pointed out that the second-derivative of a linear ramp is zero, except at the inflexion points at the bright and dark ends. He therefore concluded that these inflexion points were being represented in vision by some second-derivative process. His observations and theory were independently echoed by McDougall (1926) in his neural ‘drainage’ theory of inhibition, which obtained favorable comment from Sherrington: see http://www.jstor.org/stable/1416120. Mach bands have often been interpreted as maxima and minima in the output of an array of retinal ganglion cells (Cornsweet, 1970). However, these same extrema are present in the response to a sharp edge, in which no Mach bands are seen. Watt and Morgan (1983–1985) argued that in the response to a sharp edge, the extrema in the filter response are separated by a zero-crossing, and that this is interpreted as an ‘edge’. The extrema to either side are therefore not independently represented. However, if a linear ramp is introduced, the zero-crossing is replaced by a plain between the summit and the hollow, the width of which is proportional to the length of the ramp, after blurring. Experiments showed that Mach bands were detected at a critical ramp width of 3 arcmin, which is just the point at which a plain emerges in the filtered response. The plain is interpreted as such, and the isolated extrema at either side are represented as separate features – the Mach bands. 
The fact that Mach bands are not seen in sharp edges or in any Gaussian-blurred edge is also explained by the local-energy model, since these edges have only single peaks of local energy at their zero-crossings, in contrast to ramps, which have peaks and troughs at the inflexion points. The predictions of MIRAGE and the local-energy model are identical in this respect, and have not been experimentally distinguished (Morgan & Watt, 1997).
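The contrast between a zero-crossing and a plain can be illustrated numerically. The following is a minimal sketch, not the MIRAGE implementation: it assumes a single second-derivative-of-Gaussian filter (the scale `sigma`, the ramp width of 40 samples and the 1% criterion for a ‘plain’ are all illustrative choices, and the helper names are hypothetical). It compares the filtered response to a sharp edge with the response to a linear ramp.

```python
import numpy as np

def second_derivative_kernel(sigma):
    """Sampled second derivative of a Gaussian, forced to sum to zero."""
    half = int(np.ceil(4 * sigma))
    x = np.arange(-half, half + 1, dtype=float)
    g = np.exp(-x**2 / (2 * sigma**2))
    kernel = (x**2 / sigma**4 - 1 / sigma**2) * g
    return kernel - kernel.mean()

def filtered_profile(luminance, sigma):
    """Filter a 1-D luminance profile, replicating end values to avoid border artefacts."""
    kernel = second_derivative_kernel(sigma)
    half = len(kernel) // 2
    padded = np.pad(luminance, half, mode="edge")
    return np.convolve(padded, kernel, mode="valid")

def describe(response):
    """Return the separation of the two extrema and the number of near-zero samples between them."""
    lo, hi = sorted((int(np.argmax(response)), int(np.argmin(response))))
    between = np.abs(response[lo + 1:hi])
    plain = int(np.sum(between < 0.01 * np.abs(response).max()))
    return hi - lo, plain

n, centre, sigma = 401, 200, 3.0          # illustrative values only
pos = np.arange(n)
step = (pos >= centre).astype(float)                      # sharp edge
ramp = np.clip((pos - centre) / 40.0 + 0.5, 0.0, 1.0)     # linear ramp, 40 samples wide

step_sep, step_plain = describe(filtered_profile(step, sigma))
ramp_sep, ramp_plain = describe(filtered_profile(ramp, sigma))
print("edge: extrema separation", step_sep, "plain width", step_plain)
print("ramp: extrema separation", ramp_sep, "plain width", ramp_plain)
```

In this sketch the extrema for the sharp edge lie about two filter standard deviations apart with no flat region between them, whereas for the ramp they sit at the two ends of the ramp and are separated by a stretch of essentially zero response.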

The fact that the predictions of MIRAGE and the local-energy model for Mach bands are difficult to distinguish is not surprising. Essentially, the local-energy model computes points in the image where the arrival phases of the Fourier components are the same. At the edges of the square-wave, for example, the arrival phases of the Fourier components are all zero. But this is exactly the point where the first derivatives of the components (which transform sine to cosine and vice versa) are usually maximal. Therefore, the model is similar to a gradient detector, like other derivative models. The local-energy model is implemented by convolving the image with pairs of band-pass filters, one member of the pair being odd-symmetric and the other even. These correspond to first- and second-derivatives of Gaussians respectively. MIRAGE uses only second-derivative filters, but a very similar set of parsing rules could be used for the outputs of first-derivative filters. The local-energy model squares the outputs of the odd-and-even filters and then adds them. The squaring non-linearity plays the same role as the half-wave rectifying non-linearity in MIRAGE, which is applied before filter outputs are added over scale, and which is used to parse the neural image into positive and negative zero-bounded regions.
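The square-and-add computation described above can be sketched in a few lines. This is an illustration under stated assumptions rather than the published model: it uses one scale only, takes first- and second-derivative-of-Gaussian kernels as the odd and even filters, and normalizes both to unit L2 norm so that their outputs are comparable (the normalization and the function names are assumptions of the sketch). It also applies the odd-versus-even comparison at the energy peak to label the feature.

```python
import numpy as np

def gaussian_derivative_pair(sigma):
    """Odd (first-derivative) and even (second-derivative) kernels with unit L2 norm."""
    half = int(np.ceil(4 * sigma))
    x = np.arange(-half, half + 1, dtype=float)
    g = np.exp(-x**2 / (2 * sigma**2))
    odd = -x / sigma**2 * g
    even = (x**2 / sigma**4 - 1 / sigma**2) * g
    even -= even.mean()                      # remove residual DC from truncation
    return odd / np.linalg.norm(odd), even / np.linalg.norm(even)

def filt(signal, kernel):
    """Convolve with end-value replication so that the array borders create no features."""
    half = len(kernel) // 2
    padded = np.pad(signal, half, mode="edge")
    return np.convolve(padded, kernel, mode="valid")

def local_energy(signal, sigma):
    odd_k, even_k = gaussian_derivative_pair(sigma)
    odd, even = filt(signal, odd_k), filt(signal, even_k)
    return odd, even, odd**2 + even**2       # square, then add

n, mid = 256, 128
edge = (np.arange(n) >= mid).astype(float)   # step edge between samples mid-1 and mid
bar = np.zeros(n)
bar[mid - 1:mid + 2] = 1.0                   # thin bar centred on sample mid

odd_e, even_e, energy_e = local_energy(edge, 4.0)
odd_b, even_b, energy_b = local_energy(bar, 4.0)
edge_peak = int(np.argmax(energy_e))
bar_peak = int(np.argmax(energy_b))
print("edge: energy peak at", edge_peak, "odd dominates:", abs(odd_e[edge_peak]) > abs(even_e[edge_peak]))
print("bar:  energy peak at", bar_peak, "even dominates:", abs(even_b[bar_peak]) > abs(odd_b[bar_peak]))
```

Both stimuli produce a single energy peak at the feature; only the relative size of the odd and even responses at that peak distinguishes the edge from the bar.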

In both MIRAGE and the local-energy model (LEM), an attempt is made to state the rules by which the visual system interprets the output of the filter bank, rather than postponing this interpretation to a later unknown stage. In LEM an interpretation stage is necessary because peaks of local energy do not distinguish edges from bars, and do not explain why Mach bands are seen as bars rather than edges. In LEM the interpretation stage is performed by appealing to the outputs of the component filters before they are squared and added. At an edge, the response of odd filters is greater than even; for a bar the reverse is true. In MIRAGE, similarly, an edge is defined as two abutting regions, one produced by the activity of ‘on centre’ cells and the other by ‘off centre’. A bar is defined by a positive region flanked by two negative regions, or alternatively as an isolated positive region, as in a Mach band. The rules in MIRAGE and LEM are, arguably, just different ways of describing the same underlying structure in images after they have been band-pass filtered. Both are fundamentally non-linear.

The MIRAGE model was developed by Watt (1991) into a 2-D version, where zero-crossings played a crucial role in segmenting the image into closed-curved ‘regions’. The regions are represented as having measurable properties such as overall mass, centre of gravity, length of major and minor axis, and so on. Another example where spatial primitives are made explicit is the ‘bar-code’ representation of faces by Dakin and Watt (2009), where horizontal filtering was used to produce elongated zero-bounded regions that have an unusual tendency to fall into vertically co-aligned clusters compared with images of other natural scenes.

We have strongly contrasted the pointwise-derivative approach with the ‘spatial primitive’ alternative, but the antithesis is arguably more a matter of research strategy than of basic philosophy. Both approaches start with essentially the same localized derivative structure. Both would like to produce an account of our perception of features. The ‘spatial primitive’ approach attempts to derive simple rules for interpreting the sensorium on the basis of psychophysical observation. The alternative states that this is premature, and that we would be better off regarding the information about features as implicit in the sensorium/neural image.

Up to now we have been concerned primarily with features of the image landscape and the way they may relate to features in the world. These may or may not be related to the features we perceive, although Darwinian theory would strongly suggest that a relationship should exist. How do we find out what phenomenal ‘features’ characterize our visual perception? Only psychophysics can answer this question. Imagine that we meet a Martian who has ‘eyes’ (optical sensors) and seems to use the information from them to move about the world. How would we know whether they saw ‘edges’, ‘bars’ or even Mach bands, without knowing their language? The word ‘edge’ to the Martian would be like Quine’s (1960) imaginary word ‘Gavagai’, which might refer to a rabbit, or alternatively, to an edible object moving at high velocity along an unpredictable path, on a weekday. What Quine called the ‘indeterminacy of translation’ is a problem for ‘features’ just as much as for objects.

5. Criteria for features and their measurement

5.1. The primal sketch

David Marr proposed not only that images can be described by a set of primitive ‘features’ but also that the visual system uses these features in a symbolic description that he dubbed the ‘primal sketch’. Watt (1991) elaborated the idea into a detailed primal sketch, in which primitive features such as blobs have detailed symbolic attributes such as mass, centre of mass, elongation and orientation. This is a very big leap. How do we know whether anything like this actually occurs? To begin with, how would we demonstrate that spatial primitives exist?

5.2. Evidence for features in natural images

For a review of the statistical content of natural images see Simoncelli and Olshausen (2001). Many theorists (e.g. Geisler, Perry, Super, & Gallogly, 2001) make the Darwinian bet that the visual system has evolved to detect the features of natural images, and devotes resources to this detection in proportion to the probability of feature occurrence. Geisler and Diehl (2003, p. 381) provide a useful list of the image statistics that have been measured, which are principally concerned with the distributions of pixel intensity values and spectral information. The most relevant for our purposes are concerned with the distribution of edges in natural images. Switkes, Mayer, and Sloan (1978) examined the global Fourier transform of natural images and found evidence for a bias towards horizontal and vertical orientations. This tells us that the landscape is not entirely composed of summits and hollows but little more. In particular, it does not distinguish between edges and ridges, or tell us anything about the elongation of the contours. The same restriction applies to Coppola, Purves, McCoy, and Purves (1998) who measured orthogonal directional first derivatives for 3 × 3 pixel neighborhoods in natural images and again found evidence for a bias towards cardinal axes. Geisler et al. (2001) took the interesting approach of first defining potential edges at every pixel location by locating zero-crossings in the output of circularly-symmetric second-derivative filters, and then determining the predominant orientations at each of these locations by directional filters. They were then able to use the information from every possible pixel pair to calculate a histogram giving the probability of observing an edge element at every possible distance, orientation difference and direction from a given edge element. 
Results showed that for all distances and directions the most likely edge element is parallel to the reference element, with greater probabilities for elements that are nearer and collinear. Collinearity is exactly what we would expect from a landscape that contains elongated ridges and edges. However, the probability of finding parallel vs. non-parallel elements is also higher at non-collinear locations. This is consistent with the presence of ridges in the images, since ridges will have parallel elements on either side of the spine. Ridges could arise, as Geisler et al. note, from objects like the branch of a tree. Equally, they can result from oriented textures like the bark of a tree.

Pederson and Lee (2002) studied the distribution of edges in 3 × 3 patches of natural texture. Their aim was to obtain a full probability distribution of joint pixel values. Their work was explicitly inspired by Marr’s primal sketch idea that the structure of images can be described by ‘primitives such as edges, bars, blobs and terminations’. They confirmed a concentration of data along edges. They state their aim in the future of extending the analysis to other image primitives such as bars, blobs and T-junctions, but this does not yet seem to have appeared. In general, the statistical analysis of image structures has been highly biased towards edges, and it is difficult to find answers to the following questions:

What are the probabilities of summits vs. depressions of varying size in different kinds of natural image?

How common is it for ridges to have a summit?

What are probabilities of ridges vs. valleys? Do they have different sizes/depths/heights?

What are the probabilities of ridges as a function of the separation of their edges? (There are some indications of this in Geisler et al. (2001).)

How common are saddles? They occur between two partially resolved summits and around the lower skirt of 2-D Gaussians.

How conspicuous is a partially-resolved dot pair amongst unresolved distracters?

Watt (1991), as previously mentioned, took a different approach to the problem of finding the distribution of oriented features, bypassing the need for directional derivatives. Similarly to Geisler et al.’s first stage, Watt located zero-crossings by convolving natural images with an isotropic Laplacian-of-Gaussian filter and then retaining only the high energy regions. Closed curves of zero-crossings were then used to identify ‘blobs’, the orientation of which was then computed from the principal axis of the best-fitting ellipse. In this way, it could be shown that textures like tree bark had a characteristic orientation histogram, defined by its peak and variance. The ability of observers to identify the mean orientation of a set of ‘blobs’ (Gabor functions) was measured by Dakin and Watt (1997), and this has remained an active area of research (Dakin, 2001; Dakin, Bex, Cass, & Watt, 2009; Mansouri, Allen, Hess, Dakin, & Ehrt, 2004). The ability of observers to detect differences in orientation variance was studied by Motoyoshi and Nishida (2001) and Morgan, Chubb, and Solomon (2008). As in the case of luminance blur discrimination (see below), there is an optimum baseline (pedestal) standard deviation of the orientation blurring function for the best discrimination, consistent with an intrinsic blurring function for orientation with a standard deviation of about 3°.
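The step from a closed zero-bounded region to symbolic attributes such as mass, centre of gravity and orientation can be sketched with image moments. The example below is an illustration only: it assumes the region has already been segmented into a binary mask, and it takes the principal axis of the second central moments as a stand-in for the axis of the best-fitting ellipse (the function name and the synthetic test blob are hypothetical).

```python
import numpy as np

def blob_attributes(mask):
    """Mass, centroid and principal-axis orientation (degrees) of a binary region.

    Orientation is measured in array coordinates (x = column, y = row).
    """
    ys, xs = np.nonzero(mask)
    x0, y0 = xs.mean(), ys.mean()
    dx, dy = xs - x0, ys - y0
    mu20, mu02, mu11 = (dx**2).mean(), (dy**2).mean(), (dx * dy).mean()
    theta = 0.5 * np.arctan2(2 * mu11, mu20 - mu02)   # principal axis of the moment ellipse
    return xs.size, (x0, y0), np.degrees(theta)

# Synthetic elongated blob: an ellipse with semi-axes 20 and 5, tilted by 30 degrees.
true_deg = 30.0
t = np.radians(true_deg)
yy, xx = np.mgrid[0:101, 0:101]
u = (xx - 50) * np.cos(t) + (yy - 50) * np.sin(t)
v = -(xx - 50) * np.sin(t) + (yy - 50) * np.cos(t)
mask = (u / 20.0) ** 2 + (v / 5.0) ** 2 <= 1.0

mass, centroid, est_deg = blob_attributes(mask)
print("mass", mass, "centroid", centroid, "orientation", round(float(est_deg), 2))
```

Applied to every blob in a filtered texture, the returned orientations could be pooled into a histogram of the kind described above, summarized by its peak and variance.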

5.3. Psychophysical evidence for features

5.3.1. Features are conspicuous and interesting

With apologies to readers from the Mid-West, one might say that plains are rather uninteresting. They become more interesting if they contain features like summits and valleys. Can this be used as a criterion for features?

5.3.1.1. Eye movements

If our hypothetical Martian moved its eyes, we could show it a grey-level image divided into regions with different features and see which ones were inspected. Dobson, Teller, and Belgum (1978) introduced this ‘preferential looking’ technique to interrogate a sub-class of Martian called the human infant. We know from their findings that infants prefer parallel ridges (gratings) to plains. Apart from this, there appears to be little systematic investigation of other features of the primal sketch in human infants, though it seems highly likely that blobs would also be preferred to plains. Yarbus (1967) introduced the method of measuring gaze at complex scenes and was able to show systematic similarities between observers, but this was at the level of objects rather than of spatial primitives. Reinagel and Zador (1999) found that observers tended to look at high-contrast image patches, but also at patches where the neighborhood correlation between pixels was comparatively low. The latter finding is unexpected. Presumably the low-correlation patches contained fewer features like edges and were more like noise. This illustrates a problem with preferential looking in adults. We might prefer to look at regions that are harder to interpret because there is survival value in exploring the unexpected. A good case can be made out that interesting photographs are the ones that challenge our routine preconceptions. Despite this, it would be useful to have more information along the lines of Reinagel and Zador (1999) using patches with carefully controlled statistics/features.

Rajashekar, Bovik, and Cormack (2006) and Rajashekar, van der Linde, Bovik, and Cormack (2007) examined the eye movements of subjects searching for simple geometrical shapes, which included a sharp edge, embedded in 1/f noise. Because the task was hard, subjects made many fixations to incorrect (target absent) positions, and the noise samples in these positions were analyzed by the method of psychophysical reverse correlation (Section 5.3.5) to determine the search templates.
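The logic of reverse correlation can be shown with a simulated observer. This is a toy sketch, not a reconstruction of the cited experiments: it assumes 1-D white noise rather than 1/f noise, a linear template-matching observer with additive internal noise, and an arbitrary criterion; all names and parameter values are hypothetical. Averaging the noise samples that attracted a (false-alarm) response recovers the shape of the hidden template.

```python
import numpy as np

rng = np.random.default_rng(0)
n_pix, n_trials = 32, 20000

# Hidden search template of the simulated observer: an odd-symmetric, edge-like profile.
x = np.arange(n_pix) - n_pix / 2 + 0.5
template = -x * np.exp(-x**2 / (2 * 4.0**2))
template /= np.linalg.norm(template)

# Target-absent noise samples, one row per candidate fixation position.
noise = rng.standard_normal((n_trials, n_pix))

# The observer responds when the template match plus internal noise exceeds a criterion.
decision = noise @ template + 0.5 * rng.standard_normal(n_trials)
false_alarm = decision > 1.0

# Classification image: the mean of the noise samples that attracted a response.
classification_image = noise[false_alarm].mean(axis=0)
r = np.corrcoef(classification_image, template)[0, 1]
print("false alarms:", int(false_alarm.sum()), "correlation with template:", round(float(r), 3))
```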

For a recent review of the literature on the image features that attract saccades see Kienzle, Franz, Scholkopf, and Wichmann (2009) who interestingly argue for a simple centre-surround filter consistent with control by neurons in the superior colliculus (Humphrey, 1968).

5.3.1.2. Visual search

A feature like a blob would be noticeable on a plain. It would, literally, be salient. If asked to say whether one of an array of patches contained a blob when all the others were plains, the observer would probably be just as fast to say ‘yes or no’ if there were 10 plains as if there were two. This is the technique of ‘parallel search’, which has been extensively used to find what cognitive psychologists call ‘features’ (Treisman & Gelade, 1980). This is not the same sense in which we have used ‘feature’ so far. In the visual search literature, orientation is a ‘feature’ because a 90° bar ‘pops out’ from a set of 0° distracters. Other features, in this sense, include color, contrast polarity and size. In the sense that we have been using the term, these are properties of a feature, in this case a bar. Nevertheless, the paradigm is potentially adaptable for seeing which features, in the spatial primitive sense, ‘pop out’ from others.

Fahle (1991) examined ‘pop out’ of a target containing a vernier offset amongst distracters and found parallel search. The critical feature seems to have been a corner (Section 5.3.4.3) not an orientational difference, since a vernier target with one direction of offset did not ‘pop out’ from distracters with the opposite offset. A further indication that corners are features is that a target without a corner did not pop out from distracters with corners, an example of the ‘feature positive’ effect first described in pigeons (Lindenblatt & Delius, 1988).

The example of Nam et al.’s (2009) experiment on Gaussian curvature has already been described, as well as a suggested experiment on the pop out of saddles. Many similar experiments could be devised. However, there are some problems with the visual search technique as a method of identifying spatial primitives:

The technique is usually thought to identify pre-attentional processes, the theory being that attention takes time (of the order of 10⁻¹ s; Duncan, Ward, & Shapiro, 1994) to move from one item to another in the visual field, so that a search time that is independent of the number of items (parallel) must be pre-attentional. Even if this were true, a parallel search for a putative feature would show only that it is pre-attentional, not that it is a spatial primitive. For example, a local horizontal edge with black on the top is quite hard to find amongst distracters with black on the bottom, so edge polarity is not a primitive by this criterion, although it is clearly represented (Tolhurst & Dealy, 1975). Kleffner and Ramachandran (1992) found that merely blurring the edges to make them look like summits and hollows lit from above caused the odd-polarity one to ‘pop out’. Clearly, pre-attentional and post-attentional are not the same thing as pre- and post-object interpretation.

The distinction between ‘serial’ and ‘parallel’ search is not absolute (Duncan, 1989; Duncan & Humphreys, 1989). Even the search for an oriented bar amongst differently-oriented distracters becomes harder and more dependent on distracter number as the orientation difference between target and distracters decreases. An alternative conception to the pre- vs. post-attention dichotomy is that search is limited by early noise and that additional distracters bring more noise to the situation (Morgan, Castet, & Ward, 1998; Palmer, 1994; Palmer, Ames, & Lindsey, 1993; Palmer, Verghese, & Pavel, 2000; Solomon, Lavie, & Morgan, 1997). ‘Parallel’ search happens when the difference between target and distracters (‘the cue’) is so large that the noise is negligible. Finding an isolated ‘feature’ amongst distracters is usually taken to involve a second-stage of filtering, as in the standard models of texture segmentation (Bergen & Adelson, 1988; Chubb, 1999; Landy & Bergen, 1991; Malik & Perona, 1990). The outputs of the first-stage filters are rectified and fed into a second-stage differential operator that signals edges (the filter-rectify-filter model). Evidence for a second-stage gradient detector is the presence of Mach bands, the Chevreul illusion and the Cornsweet effect in texture ramps (Lu & Sperling, 1996). The presence of Mach bands in random-dot stereograms was also reported by Lunn and Morgan (1995), using forced-choice with 46 naive observers. The FRF model explains one of the key generalizations about visual search (Duncan & Humphreys, 1989): the ‘heterogeneous distracter’ effect, according to which search is impeded to the extent that the distracters differ amongst themselves. Local differences between distracters will produce spurious edges in the second-stage landscape. If the FRF model is generally correct, as it seems to be, then rapid, parallel visual search tells us which second-stage filters exist, not what filters are present in the first stage. 
It cannot reasonably be maintained that the second-stage filters exist for all first-stage filters, because of the example of contrast polarity. In other words, it is quite possible that saddles are represented as spatial primitives, but that there are no second-stage filters for making them ‘pop out’.
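The filter-rectify-filter scheme can be sketched for a 1-D texture boundary. The example is a minimal illustration under stated assumptions, not any of the cited models: the texture is a fine grating whose contrast steps from 0.2 to 1.0 at the midpoint, the first stage is a fine second-derivative-of-Gaussian filter, rectification is by squaring, and the second stage is a coarse first-derivative-of-Gaussian filter (all scales and names are illustrative).

```python
import numpy as np

def gaussian_kernels(sigma):
    """Sampled first and second derivatives of a Gaussian (second derivative made zero-sum)."""
    half = int(np.ceil(4 * sigma))
    x = np.arange(-half, half + 1, dtype=float)
    g = np.exp(-x**2 / (2 * sigma**2))
    d1 = -x / sigma**2 * g
    d2 = (x**2 / sigma**4 - 1 / sigma**2) * g
    return d1, d2 - d2.mean()

n, mid = 512, 256
pos = np.arange(n)
contrast = np.where(pos < mid, 0.2, 1.0)          # texture boundary at the midpoint
texture = contrast * np.sin(2 * np.pi * pos / 8)  # mean luminance is the same on both sides

_, fine_d2 = gaussian_kernels(2.0)      # first-stage filter, tuned to the carrier
coarse_d1, _ = gaussian_kernels(16.0)   # second-stage gradient detector

stage1 = np.convolve(texture, fine_d2, mode="same")     # filter
rectified = stage1**2                                   # rectify (full-wave, by squaring)
stage2 = np.convolve(rectified, coarse_d1, mode="same") # filter again

# Ignore the array borders, where zero padding creates spurious second-stage edges.
margin = 80
boundary_estimate = margin + int(np.argmax(np.abs(stage2[margin:n - margin])))
print("second-stage peak at", boundary_estimate, "true boundary at", mid)
```

The second-stage output is a landscape in its own right, with an edge at the texture boundary; heterogeneous distracters would add further spurious edges of the same kind.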

Despite these reservations, the Nam et al. (2009) study establishes the point that search is faster for intersecting ridges (plaids) when the two components have the same passband. If there are mechanisms for computing Gaussian curvature they appear to compare directional gradients within filters tuned to the same spatial scale.

5.3.2. Features are semantically circumscribed

One common argument runs that if landscape features like cliffs and summits are primitive tokens for images they might reasonably be expected to have names like ‘edges’ and ‘bars’. Several experimenters (Hesse & Georgeson, 2005; Morrone & Burr, 1988) have therefore taken the approach of asking observers to look for edges and bars in images and to mark where they saw them. The technique has been used in particular to test the predictions of the local-energy model against gradient-based models.

The validity of the underlying assumptions here is not self-evident. Even setting aside the ‘Gavagai’ problem (Quine, 1960) of the impossibility of radical translation, there is no reason why naive observers should have names for primitive features – the example of phonemes in speech makes this clear. There seems to be no name in English for a luminance saddle, but this does not mean that saddles are invisible. More generally, we cannot be sure that subjects will use terms like ‘bars’ and ‘edges’ in the same way. A thought experiment is to ask whether a bar has two edges – probably ‘yes’ if it is a thick bar, but perhaps ‘no’ if it is very thin. Using exactly the same stimuli (see below) Hesse and Georgeson (2005) found bars to be flanked by two distant edges, while Morrone and Burr (1988) saw only an isolated bar.

These objections may seem to be overly abstract. In practice, if observers are asked to mark features, even in one-dimensional luminance profiles, there is close agreement between observers (Hesse & Georgeson, 2005). They mark summits (luminance ‘peaks’) and gradient maxima (‘edges’). Whether they place these marks exactly in these places will be considered in the following section (localization). The exception, as previously noted, is whether peaks are always flanked by edges. To test the local-energy model, Morrone and Burr (1988) invented an ingenious class of stimuli, in which the amplitude spectrum is that of a (partially blurred) square-wave and all Fourier components have a common phase at the origin. This ‘arrival phase’ could, for example, be π/2 approximating a square-wave, or 0, approximating a triangle wave. The interesting cases are the intermediate phases. By design, the local energy peak is always at the origin, where the Fourier components have the same phase, but features like the luminance peak and gradient maximum do not always occur at the origin. The LEM, however, asserts only a single bar at the origin, and this is what Morrone and Burr’s subjects in fact reported. On the other hand, with exactly the same stimulus, the subjects of Hesse and Georgeson (2005) saw a bar at the same place as the luminance peak, with two edges, one on either side. It is tempting to think that this discrepancy is purely semantic, and that the local-energy observers did not consider edges on either side of a bar to be true edges. In fact the problem is deeper, because LEM asserts a bar at the local energy peak, and there really are no local energy maxima or minima corresponding to the edges described by Georgeson’s subjects. Part of the problem here is that LEM does not have a mechanism for making bar width explicit. It is subjectively quite obvious that the central bar in the π/4 case has a finite width, but this is not represented in LEM. 
If it were, the edges seen by Hesse and Georgeson (2005) might emerge.
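The dissociation between the energy peak and the luminance peak in this class of stimuli can be checked numerically. The sketch below is an illustration with assumed details: it sums the first 16 odd harmonics with 1/k amplitudes (an unblurred square-wave spectrum), writes each component as cos(kx + φ) so that φ is the common arrival phase at the origin, and computes local energy from the waveform and its quadrature partner. The number of harmonics and the function name are hypothetical choices.

```python
import numpy as np

def common_phase_wave(x, phase, n_harmonics=16):
    """Luminance profile and local energy for odd harmonics sharing one arrival phase at x = 0."""
    ks = np.arange(1, 2 * n_harmonics, 2)        # harmonics 1, 3, 5, ...
    amps = (1.0 / ks)[:, None]                   # square-wave amplitude spectrum
    args = np.outer(ks, x) + phase
    luminance = (amps * np.cos(args)).sum(axis=0)
    quadrature = (amps * np.sin(args)).sum(axis=0)   # each component shifted by 90 degrees
    return luminance, luminance**2 + quadrature**2

x = np.linspace(-np.pi / 2, np.pi / 2, 2001)     # one half-period around the origin
lum, energy = common_phase_wave(x, np.pi / 4)    # intermediate arrival phase

x_energy = x[np.argmax(energy)]
x_lum = x[np.argmax(lum)]
print("energy peak at", round(float(x_energy), 4), "luminance peak at", round(float(x_lum), 4))
```

With the intermediate phase the energy peak stays at the origin while the luminance peak is displaced to one side, which is the property that allows the two classes of model to be set against each other.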

The possibility should at least be considered that the method of semantic marking is too subjective and not sufficiently robust to decide between different representational schemes. A possibly more robust method is that of relative feature localization, to which we turn next.

5.3.3. Relative feature localization

Not all properties of an image are precisely localized. For example, in ‘crowded’ stimuli, observers can report the mean orientation of a set of Gabor patches, without being able to say which of the patches has the greatest tilt from vertical (Parkes, Lund, Angelluci, Solomon, & Morgan, 2001). However, primitive spatial features of the landscape like edges are almost by definition localizable. One method of testing for the presence of features has therefore been to see if they can be located by the observer, and with what accuracy. This method finesses the problem of giving names to the features, which is problematic (see Section 5.3.2). There are in fact two methods. One is to probe the position of a feature with a reference, the location of which is assumed to be known. The other is to ask observers to align two or more features, both of which have unknown locations, and to measure the distance between them. Within each of these methods, there is a distinction to be made between measurements of sensitivity and bias.

5.3.3.1. Probing with a reference

This was the method used by Morrone and Burr (1988) and Hesse and Georgeson (2005). Observers could position a fine marker bar or dot so as to point (in one dimension) at the perceived position of the feature or to coincide with it. The limitations of this technique can be immediately seen by considering what would happen if we asked observers to indicate the position of a blurred edge by positioning a marker over it. The problem is that this is a Type 2 psychophysical measurement with no ‘right answer’. The observer can clearly see that the edge has extension and would be puzzled to know where to put the marker. Any attempt to tell the observer to point to the ‘centre’ of the edge, or some such, would be immediately influencing them in a particular direction. Determining a psychometric function by forced-choice does not solve the problem, if what one extracts from the function is a Type 2 measure (bias) rather than a Type 1 (slope). A true Type 1 version of the experiment would be to tell the observer nothing, but to give him/her binary-choice trials with feedback according to the true position of a physical feature such as the zero-crossing. If the zero-crossing is actually a primitive feature, then observers should be accurate in deciding whether the marker bar is to the left or right of the ZC. If, on the other hand, the local energy peak is a primitive, and if it could be arranged that the ZC and energy peak have different physical locations, observers should be more accurate when given feedback according to the position of the energy peak. We could thus decide between different feature theories on the basis of the slopes of psychometric functions, a bias-free, Type 1 measurement. Examples of this approach will be given in Section 5.3.4. It is mentioned here to make it clear that the usual way of using markers has been Type 2, where there is no right answer.

Again, this objection may be thought to be theoretical. There are clear cases where subjects can accurately and without feedback make a bar point to another feature, the obvious one being when the other feature is also a bar (vernier acuity, measured by the method of repeated settings). But the case where one kind of feature is used to point to another is more problematic. Morgan, Mather, Moulden, and Watt (1984) reported that thresholds were higher for aligning edges of opposite polarity than for aligning edges of the same polarity, a clear indication that there is not a single, unambiguous matching primitive such as the zero-crossing.

Morrone and Burr (1988) and Hesse and Georgeson (2005) used a pointer and spot respectively to find the relative location of bars and edges in the class of stimulus illustrated in Fig. 5, where the different Fourier components have the same arrival phase at the origin in the centre of the figure. The case is of interest because the local energy peak is in the exact centre, but other features such as the luminance peak are slightly displaced. Morrone and Burr reported that observers aligned the bar with the peak of local energy. Hesse and Georgeson, on the other hand, found that their subjects aligned the pointer with the luminance peak. Both investigators provide demonstrations to illustrate their findings but it is unclear what influence photographic nonlinearities might have on these demonstrations. An important methodological difference is that Morrone and Burr used prolonged inspection of their stimuli to encourage ‘monocular rivalry’ (Atkinson & Campbell, 1974; Georgeson & Phillips, 1980). Observers were encouraged to change their settings according to whether they saw a bar or an edge in the centre. Hesse and Georgeson on the contrary, used brief, repetitive exposures and refer to the analysis by Georgeson and Freeman (1997) of monocular rivalry for the phase 45° stimulus used by Morrone and Burr. Their stimulus was low contrast and low frequency; these conditions are especially favorable to getting the afterimages that underlie monocular rivalry (Georgeson, 1984; Georgeson & Turner, 1985).

As noted by Hesse and Georgeson (2005), and illustrated in Fig. 5, MIRAGE correctly predicts the same number of features as their observers, and the central feature is displaced from the energy peak in the same direction, but the magnitude of the displacement is not as great as predicted by MIRAGE. No Type 1 experiment with feedback, of the kind outlined in Section 5.3.4.2 seems yet to have been carried out. Hesse and Georgeson conclude that observers located the peak of luminance profiles, but this cannot be generally true because of Mach bands, and because of other experiments showing localization of centroids, not peaks of skewed Gaussian profiles (Section 5.3.4.2). In a later paper (Georgeson et al., 2007) the Hesse and Georgeson data were found to be well predicted by their (N3+, N3−) model, which we shall describe in the Appendix on blur.

Wallis and Georgeson (2009) used a marker to point to features in triangle-wave profiles with blurred peaks. The blurring function (a box) was designed to prevent peaks appearing in the output of a G″ filter, as they would in a flat-topped plateau (cf. Fig. 3Rb). Wallis and Georgeson report the appearance of opposite-polarity edges to either side of the centre of the stimulus, corresponding in position to the third derivative of the luminance profile. The subjects were three psychophysically-experienced observers and confirmation from a larger sample of naïve observers would be useful.

5.3.3.2. Probing relative position with two unknowns

The idea here is to align stimuli that are as similar as possible but putatively differ in relative location. An example would be aligning two stimuli that differ slightly in blur. There is still no ‘right answer’, but at least the stimuli are designed to have the same number and type of features, unlike the bar vs. edge comparison. Morgan et al. (1984) examined the critical conditions for the alignment of two differently-blurred edges using an apparent motion test. In agreement with the phenomenon of ‘irradiation’ (Helmholtz, 1896; Moulden & Renshaw, 1979) they found that edges were not located at their zero-crossings but at a point shifted into the dark phase, a shift that could be modeled by a Naka–Rushton non-linearity. Building blurred edges with the inverse of the non-linearity cancelled the effect, so that edges were subjectively aligned at their actual zero-crossings. Mather and Morgan (1986) investigated alignment of edges placed simultaneously one above the other and found similar results. They also found, however, that thresholds (1/slopes of psychometric functions) were higher when comparing opposite-polarity edges, the increase being larger at high blurring standard deviation. This finding is a blow to zero-crossings as spatial primitives, since there is only one ZC per edge, independent of its polarity. MIRAGE explains the threshold elevation by there being two features to an edge, the zero-bounded regions on either side of the ZC. In physically aligning opposite-polarity edges, neither the positive nor the negative regions are aligned, and worse still they have opposite directions of displacement. If an attempt is made to align positive with negative centroids this fails because of the compressive non-linearity; the tilt is opposite on either side of the ZC. This is an example of how a Type 1 measure (sensitivity) can be used to probe feature location.
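The irradiation shift and its cancellation can be illustrated numerically. The following is a minimal sketch, not the procedure of Morgan et al. (1984). It assumes a Naka–Rushton function with exponent 1 and a semi-saturation constant equal to the mean luminance, arbitrary luminance and position units, and it takes the edge location to be the zero-crossing of the second derivative of the transformed profile, with no further filtering. All function names and parameter values are hypothetical.

```python
import math

SEMI_SAT = 50.0  # assumed semi-saturation constant (arbitrary luminance units)

def blurred_edge(x, sigma=1.0, mean=50.0, contrast=0.5):
    """Gaussian-blurred luminance edge: dark on the left, light on the right."""
    return mean * (1.0 + contrast * math.erf(x / (sigma * math.sqrt(2.0))))

def naka_rushton(lum):
    """Compressive response R = L / (L + s); exponent fixed at 1 (assumption)."""
    return lum / (lum + SEMI_SAT)

def inverse_naka_rushton(resp):
    """Luminance that produces the response `resp` (0 < resp < 1)."""
    return SEMI_SAT * resp / (1.0 - resp)

def compensated_edge(x, sigma=1.0):
    """Edge built with the inverse non-linearity, so that the *response*
    profile, not the luminance profile, is a Gaussian-blurred edge."""
    target = 0.5 * (1.0 + 0.5 * math.erf(x / (sigma * math.sqrt(2.0))))
    return inverse_naka_rushton(target)

def second_derivative(f, x, h=1e-3):
    """Central finite-difference estimate of f''(x)."""
    return (f(x + h) - 2.0 * f(x) + f(x - h)) / (h * h)

def zero_crossing(f, lo=-2.0, hi=2.0, steps=60):
    """Bisection on f''; assumes f'' changes sign once between lo and hi."""
    for _ in range(steps):
        mid = 0.5 * (lo + hi)
        if second_derivative(f, lo) * second_derivative(f, mid) <= 0.0:
            hi = mid
        else:
            lo = mid
    return 0.5 * (lo + hi)

# Zero-crossing of the compressed response to an ordinary blurred edge
shift = zero_crossing(lambda x: naka_rushton(blurred_edge(x)))
# Same measure for the edge built with the inverse non-linearity
compensated = zero_crossing(lambda x: naka_rushton(compensated_edge(x)))
```

Under these assumptions `shift` is negative, that is, displaced from the physical zero-crossing at x = 0 toward the dark side of the edge, whereas `compensated` lies close to zero.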

Hesse and Georgeson (2005) used relative location to probe the position of features in the Morrone and Burr (1988) filtered square-wave stimuli mentioned above (Section 5.3.2). In their Experiment 2 (Fig. 5) they used an array of three horizontal ribbons each of which contained a 1-D luminance profile. The top and bottom ribbon contained identical stimuli with arrival phase at the origin of 0°, and the middle had arrival phase −45°. Over a series of trials with the physical position of the middle ribbon varied, observers had to indicate with a key press ‘whether a salient feature roughly in the middle was to the left or right of the outer pair’. The nature of the feature was left undefined and no feedback was given. The resulting psychometric functions showed a PSE shifted from the centre by ~1–4 arcmin (depending on sharpness), which was a factor of ~3 times greater than the JND, taken from the slope of the psychometric function.

Although the relative location task has no right answer, and is therefore Type 2, it appears from the evidence to be quite robust, and has the advantage of allowing Type 1 threshold measurement as well if a psychometric function is measured.
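To make concrete how a single psychometric function yields both a bias (the PSE) and a sensitivity measure (the JND), here is a minimal sketch. It assumes a cumulative Gaussian psychometric function fitted by grid-search maximum likelihood, and it takes the JND to be the standard deviation of that Gaussian; other conventions exist. The response counts are synthetic, generated from the function itself purely for illustration, and are not data from any of the studies cited.

```python
import math

def cum_gauss(x, pse, sigma):
    """Probability of a 'right' response at physical offset x."""
    return 0.5 * (1.0 + math.erf((x - pse) / (sigma * math.sqrt(2.0))))

def neg_log_likelihood(offsets, n_right, n_trials, pse, sigma):
    """Binomial negative log-likelihood of the counts under the model."""
    total = 0.0
    for x, k, n in zip(offsets, n_right, n_trials):
        p = min(max(cum_gauss(x, pse, sigma), 1e-9), 1.0 - 1e-9)
        total -= k * math.log(p) + (n - k) * math.log(1.0 - p)
    return total

def fit_psychometric(offsets, n_right, n_trials, pse_grid, sigma_grid):
    """Return the (pse, sigma) pair on the grid with the highest likelihood."""
    best = None
    for pse in pse_grid:
        for sigma in sigma_grid:
            nll = neg_log_likelihood(offsets, n_right, n_trials, pse, sigma)
            if best is None or nll < best[0]:
                best = (nll, pse, sigma)
    return best[1], best[2]

# Synthetic counts (NOT real data): generating PSE 3 arcmin, sigma 1 arcmin
offsets = [0, 1, 2, 3, 4, 5, 6]                 # offset of middle ribbon, arcmin
n_trials = [100] * len(offsets)
n_right = [round(n * cum_gauss(x, 3.0, 1.0)) for x, n in zip(offsets, n_trials)]

pse_grid = [i * 0.05 for i in range(121)]        # 0 to 6 arcmin
sigma_grid = [0.2 + i * 0.05 for i in range(57)] # 0.2 to 3 arcmin
pse_hat, sigma_hat = fit_psychometric(offsets, n_right, n_trials,
                                      pse_grid, sigma_grid)
```

The fitted `pse_hat` is the Type 2 quantity (where the feature is seen) and `sigma_hat` the Type 1 quantity (how precisely it is located); their ratio expresses the bias in JND units.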

5.3.4. Discrimination methods

In view of the difficulties we have encountered in interpreting subjective marking of features it is tempting to look for Type 1 measures of sensitivity rather than bias. An example that has already been mentioned is the finding that thresholds for edge alignment are raised for opposite vs. same polarity edges, a fact that rules out simple ZC’s as spatial primitives for alignment. Tolhurst and Dealy (1975) measured contrast detection thresholds for bars and edges, and for discriminating their polarity and found that detection and discrimination thresholds were similar. Assuming that only one detector was active at threshold, and thus ruling out a MIRAGE-type of spatial distribution argument, they deduced that bars and edges were detected by different mechanisms.

5.3.4.1. Mach bands

Watt and Morgan (1983b) and Watt, Morgan, and Ward (1983) attempted to measure the conditions for the appearance of Mach bands by measuring thresholds for distinguishing a Gaussian-blurred edge from a composite Gaussian-rectangular blurred edge. Threshold was reached when the rectangular blurring component reached a critical width of ~3 arcmin. Watt et al. conjecture that this was just the point at which Mach bands appeared in the blurred edge, and that it was the presence of these bands that allowed the observer to discriminate between the stimuli. It is at this width that a region of zero-activity appears between a peak and a trough in the second-derivative of the retinally-blurred luminance profile. Of course, the assertion that Mach bands were present is based on purely subjective reports. No direct method has yet been found for measuring the presence of the bands. The strength of discrimination methods is that they do not depend on semantics; the limitation is they can only infer the existence of features indirectly.
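The emergence of a quiet region between the peak and the trough of the second derivative can be sketched analytically. For a step edge blurred by a box of width w and a Gaussian of standard deviation σ, the second derivative is proportional to φ((x + w/2)/σ) − φ((x − w/2)/σ), where φ is the standard normal density. The code below is an illustration under assumptions: σ stands for the combined Gaussian blur (stimulus plus optics), units are arbitrary, and the function names are hypothetical. It does not derive the ~3 arcmin critical width.

```python
import math

def phi(z):
    """Standard normal density."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)

def second_derivative(x, box_width, sigma, step=1.0):
    """L''(x) for a dark-to-light step blurred by a box and a Gaussian."""
    half = 0.5 * box_width
    scale = step / (box_width * sigma)
    return scale * (phi((x + half) / sigma) - phi((x - half) / sigma))

def midway_ratio(box_width, sigma):
    """L'' midway between the centre and x = -w/2, relative to L'' at x = -w/2.
    Values near 0.5 mean a steady ramp through the zero-crossing;
    values near 0 mean a flat, near-zero region between peak and trough."""
    at_lobe = second_derivative(-0.5 * box_width, box_width, sigma)
    midway = second_derivative(-0.25 * box_width, box_width, sigma)
    return midway / at_lobe

narrow = midway_ratio(box_width=0.5, sigma=1.0)   # box much narrower than sigma
wide = midway_ratio(box_width=12.0, sigma=1.0)    # box much wider than sigma
```

With a narrow box the second derivative passes steadily through zero at the centre of the edge (`narrow` is about one half); with a wide box the peak and trough separate and the response between them is close to zero (`wide` is a small fraction).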

5.3.4.2. Centroids

Discrimination thresholds have been used to identify the centroids of unresolved light distributions as a property predicting their localization. Westheimer and McKee (1977) composed each of two targets for a vernier alignment out of nine parallel unresolved bars 18 arcsec apart. Subjects had to report the direction of the vernier misalignment produced either (a) by displacing the whole set of nine bars by the same amount or (b) by displacing only one of the bars in the centre. Threshold displacements were lower in the former case, but if the data were recalculated as the shift in the centroid (first moment) of the light distribution as a whole, the two thresholds were identical. Watt et al. (1983) and Watt and Morgan (1984) confirmed this result for pairs of bars differing in relative luminance and separation, and further showed that observers could not discriminate which of two unresolved bars, left or right, was lower in luminance. Although they used shift in the PSE rather than a sensitivity method, this is the appropriate place to describe the finding (Whitaker & McGraw, 1998) that observers locate the relative positions (Section 5.3.3.2) of large-scale asymmetrical Gaussian blobs at their centroids. This would be expected from MIRAGE, which calculates the centroid of zero-bounded regions in the filtered luminance profile. Local energy peaks will also occur at centroid positions.
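The centroid recalculation for the nine-bar target is simple arithmetic and can be sketched as follows. The sketch assumes that all nine bars have equal luminance, and the displacement value is a hypothetical one chosen only to make the numbers easy to read.

```python
def centroid(positions, intensities):
    """First moment of a set of bars: intensity-weighted mean position."""
    total = sum(intensities)
    return sum(p * w for p, w in zip(positions, intensities)) / total

spacing = 18.0                                   # arcsec between adjacent bars
base = [spacing * (i - 4) for i in range(9)]     # nine bars centred on zero
weights = [1.0] * 9                              # equal luminance (assumption)
d = 9.0                                          # hypothetical displacement, arcsec

whole = [p + d for p in base]                    # (a) every bar displaced by d
single = list(base)
single[4] += d                                   # (b) only the central bar displaced

shift_whole = centroid(whole, weights) - centroid(base, weights)
shift_single = centroid(single, weights) - centroid(base, weights)
```

Here `shift_single` is one ninth of `shift_whole`. If the centroid is the effective cue, a threshold expressed as the displacement of the single bar should therefore be about nine times the whole-set threshold, while the two agree when both are expressed as centroid shift.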

5.3.4.3. Curvature and corners

Curvature has been considered both theoretically and experimentally (Dobbins, Zucker, & Cynader, 1987; Fahle & Braitenberg, 1983; Koenderink & Richards, 1988; Watt & Andrews, 1982; Wilson, 1985) as have corners (Link & Zucker, 1988). Curvature is the first spatial derivative of local orientation (the tangent), and a corner is thus defined as a peak in the second spatial derivative. In the image landscape, a corner is a place where the luminance gradient is flat in a 90° sector bounded by two edges and negative elsewhere. It is thus a place of high gradient variance, unlike a summit where the variance is low. The numerous filters for ‘corner finding’ all exploit these facts in various ways. In the 2-D image, corners are places where orientation curvature changes rapidly. Thresholds for the second-derivative as such seem not to have been reported, but Link and Zucker (1988) measured the accuracy with which a sharp bend in a line could be distinguished from a more gradual bend, when both lines were sampled by dots at various sampling phases with respect to the position of the corner. They found best performance when the sampling coincided with the corner. For Fahle’s experiment on ‘pop out’ of corners see Section 5.3.1.2.
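The definition of curvature as the rate of change of orientation along a contour can be sketched for a dot-sampled line. The code below is an illustration only: the 45° bend, the unit dot spacing and the function names are assumptions, and it is not a model of the Link and Zucker (1988) task or of how observers perform it.

```python
import math

def discrete_curvature(points):
    """Turning angle per unit arc length at each interior dot of a polyline."""
    curv = []
    for (x0, y0), (x1, y1), (x2, y2) in zip(points, points[1:], points[2:]):
        a_in = math.atan2(y1 - y0, x1 - x0)      # orientation entering the dot
        a_out = math.atan2(y2 - y1, x2 - x1)     # orientation leaving the dot
        # wrap the orientation change into (-pi, pi]
        turn = math.atan2(math.sin(a_out - a_in), math.cos(a_out - a_in))
        arc = 0.5 * (math.hypot(x1 - x0, y1 - y0) + math.hypot(x2 - x1, y2 - y1))
        curv.append(turn / arc)
    return curv

COS45 = math.cos(math.radians(45.0))
SIN45 = math.sin(math.radians(45.0))

def bent_line(phase, n=4):
    """Dots at unit spacing along a line that bends by 45 deg at the origin.
    `phase` shifts the whole dot pattern along the contour."""
    pts = []
    for k in range(-n, n + 1):
        t = k + phase                            # signed distance from the corner
        pts.append((t, 0.0) if t <= 0 else (t * COS45, t * SIN45))
    return pts

on_corner = discrete_curvature(bent_line(0.0))   # one dot falls on the corner
off_corner = discrete_curvature(bent_line(0.5))  # corner falls between two dots
```

When a dot coincides with the corner, the whole 45° change of orientation is concentrated at that dot and the curvature estimate has a single sharp peak. When the corner falls between dots, the same total turn is split across two neighbouring dots and the peak estimate is lower, so the sampled sharp bend looks more like a gradual one.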

5.3.5. Reverse correlation methods

Psychophysical reverse correlation would be a potentially useful way of identifying the critical spatial regions involved in elementary feature detection. If subjects were discriminating between edges and bars, for example, one could ask what noise samples made edges look more like bars, and vice versa. Little work of this kind appears to have been done. An exception is Neri and Heeger (2002), whose observers detected a briefly flashed target bar that was embedded in ‘noise’ bars that randomly changed in intensity over space and time. Subjects behaved sub-optimally, in the sense that they were more likely to report a bar when the stimulus had high contrast energy, irrespective of its location in space and time. Kurki, Peromaa, Hyvarinen, and Saarinen (2009) used reverse correlation to assess the spatiotemporal characteristics of brightness perception, using a contrast polarity discrimination both for a luminance-defined bar and its high-pass filtered Craik-Cornsweet-O’Brien version, but their full results have not been published. For a combination of eye movement fixation recording with reverse correlation see Rajashekar, Bovik, and Cormack (2006) (Section 5.3.3.1).

5.3.6. Functional brain imaging

There is a very large literature on the fMRI correlates of shape perception, little of which is relevant to the ‘primal sketch’. The psychophysical linking hypotheses appear to be: (a) that images containing features will elicit more neuronal activity than those that contain fewer features or none and (b) that the increased neuronal activity will be reflected in an elevated BOLD response. Perna, Tosetti, Montanaro, and Morrone (2008) measured the BOLD response to structured periodic band-pass images that all had the same amplitude spectra but different phases, arranged to produce edges, lines and random noise (random phase spectra). Alternation of lines or edges against noise produced strong activation of V1, but alternation of lines against edges produced activity only in two higher visual areas, located along the lateral occipital sulcus and the caudal part of the intraparietal sulcus. The former has been implicated in many shape analysis tasks (Kourtzi & Kanwisher, 2000) including the alignment of spatially separated features (Tibber, Anderson, Melmoth, Rees, & Morgan, 2009) and the latter in segregation of surfaces in depth (Spang & Morgan, 2008; Tsao et al., 2003).

Dumoulin, Dakin, and Hess (2008) showed observers a range of binary images derived from natural scenes, the same images with only the pixels at edges, and a textured image with the edge information largely removed and the rest remaining. Area V1 responded best to the full images, and least to textures. V2 (on average over subjects) responded equally to the full image and edge version, and least to texture. A variety of extra-striate visual areas, V3, VP, V3A, hV4 and a region in ventral occipital cortex (VO) responded best to the ‘edge’ images. No area responded most to texture.

5.3.7. Prediction of strange features

‘Prediction is very difficult’, said Niels Bohr, ‘especially of the future’. It would be nice if the powerful computational schemes of local energy, MIRAGE and the recent non-linear 3rd derivative model (Georgeson et al., 2007; May & Georgeson, 2007a, 2007b) could predict new features, rather than describing old ones. Wallis and Georgeson (2009) attempt this with their “Mach Edges” (Section 5.3.3.1) but further work is needed to determine whether these features are seen by other than experienced psychophysical observers. The next best thing to new predictions are phenomena that are known, but which are non-obvious. Mach bands have been discussed already. Chevreul edges, which are not the same as Mach bands although often confused with them, have been explained by MIRAGE (Watt & Morgan, 1985, Fig. 6) as spurious edges, and by LEM. An interesting case is the dark spots seen at the intersections of the Hermann grid (in the case where the grid is white on a gray background). These spots are especially puzzling because they would correspond to summits in the blurred image, not hollows. The classical model of this by peaks in the response of circularly-symmetrical DOG filters is almost certainly wrong because (a) it works only at a carefully chosen spatial scale and (b) the spots disappear if the grid intersections are not in alignment (Geier, Bernath, Hudak, & Sera, 2008). The latter fact makes one think of the ‘spider web’ illusion of diagonal ridges in a regular lattice of horizontal and vertical lines, which also depends on exact alignment of the intersections (Morgan & Hotopf, 1989). An accidental example of a spider web in an architectural drawing is illustrated in Fig. 6. As in the usual version, the faint lines run between a number of accidentally aligning corners and edges in the image.

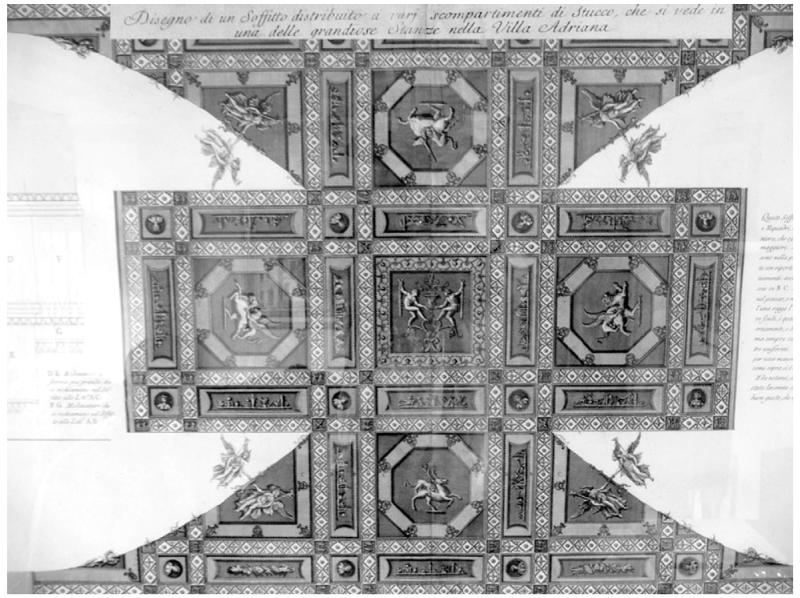

Fig. 6.

‘Spider Webs’ in a drawing by Piranesi of a ceiling from the Villa Adriana. Two black lines are seen going through the centre of the figure, continuing the high contrast curved edges on the outside. The lines are much reduced if the curved edges are masked out, so they are not entirely attributable to low-pass filtering (Morgan & Hotopf, 1989).

The intrinsic geometry of the Hermann grid is interesting. At each intersection there are two orthogonal directions where the gradient is zero (along the ridges) and two orthogonal directions at 45° to the ridges where the gradient is negative. Equivalently, there are four valleys corresponding to the corners of the grid. Morgan and Hotopf (1989) argued that these valleys provide local support for an assertion of long-range valleys, which is further supported by the alignment of the valleys across intersections. The ‘spider web’, according to this analysis, is a second-order feature arising from the accidental alignment of primitives. The spots of the Hermann grid are the assertion of the valley continuing across the intersection, and this could explain why the spots disappear when the corners are no longer in alignment (Geier et al., 2008).

Appendix A. Blur discrimination