Abstract

Public reporting of performance on quality measures is increasingly common, but little is known about its impact, especially among physician groups. The Wisconsin Collaborative for Healthcare Quality (Collaborative) is a voluntary consortium of physician groups that has publicly reported quality measures since 2004, providing an opportunity to study the effect of this effort on participating groups. Analyses included member performance on 14 ambulatory measures from 2004–2009, a survey regarding reporting and its relationship to improvement efforts, and use of Medicare billing data to independently compare Collaborative members to the rest of Wisconsin, neighboring states and the rest of the United States. Faced with limited resources, groups prioritized their efforts based on the nature of the measure and their performance compared to others. The outcomes demonstrated that public reporting was associated with improvement in health quality and that large physician group practices will engage in improvement efforts in response.

Public reporting of performance on quality measures is increasingly common in health care.1 However, the enthusiasm for public reporting is ahead of the science supporting it, especially in the context of physicians and physician groups.2, 3 Constance Fung, in a systematic review of public reporting, emphasized that rigorous evaluation of many public reporting systems was lacking.3 In the review, only 11 studies were identified that addressed the impact on quality improvement. All of these were essentially hospital based and focused primarily on mortality or cardiac procedures.3–6 The authors were unable to identify any published studies of the effect of publicly reporting performance data on quality improvement activity among physicians or physician groups.3 They went on to state that “more existing reporting systems should be evaluated in studies using rigorous designs with a plausible comparison strategy, so that secular trends and bias from the intervention effect can be distinguished.”3 The Wisconsin Collaborative for Healthcare Quality provides an opportunity to study such a reporting system.

The Collaborative is a voluntary, statewide consortium of physician groups, hospitals, health plans and employers working together to improve the quality and cost of health care in Wisconsin.7 Member physician groups care for half of the patients in the state of Wisconsin. Since 2004, the Collaborative has been posting the member physician groups’ performance on ambulatory quality measures on a publicly accessible website. This project was designed to study the impact of the first five years of that public reporting on the Collaborative as a whole and on the individual participating groups. As such, this is one of the first studies to provide insights into the impact of public reporting on large physician groups.

Methods

This project was designed as a retrospective cohort study focusing on the Collaborative reporting of ambulatory measures, 2004 through 2009. In the absence of a randomized controlled trial, the analysis was structured using a three-pronged approach. The first was to determine whether there was measurable improvement among Collaborative participants with respect to the outcomes being reported. The second was to survey each clinic site to assess how participants responded to the information reported. The third was to utilize the resources of the Dartmouth Atlas Project through the Dartmouth Institute for Health Policy and Clinical Practice to obtain an independent, external measurement of Collaborative performance over time and compare it to areas not participating in the Collaborative.

At the time this study was initiated in mid-2009, there were 20 physician practice groups participating in the Collaborative. Each group represented multiple affiliated clinics, both primary care and multispecialty, ranging from 8 to 100 clinics per group. Each member group commits to reporting outcomes for a number of quality measures on a yearly basis. (See Exhibit 1 for measures evaluated.) The groups are responsible for collecting their own data using methods strictly defined by a committee representing each of the groups. Results are independently audited and validated by the Collaborative after submission, with oversight from a multi-stakeholder audit committee, which includes leaders from healthcare provider organizations, health plans, and purchasing partners. Results for each physician group are posted by name on the Collaborative website, which is accessible for public viewing.8

Exhibit 1.

Improvement in aggregate performance on ambulatory measures by Wisconsin Collaborative for Healthcare Quality (WCHQ) members 2003 – 2009

| WCHQ Measure | Initial year reported | Significant Improvement | Number of Years to Improve | Percentage Improvement Since First Year | Mean number of projects initiated |
|---|---|---|---|---|---|
| Diabetes | | | | | 8.7 |
| HbA1c Control (<7.0%) | 2003–2004 | Yes | 4 | 8.9 | |
| HbA1c Testing | 2005–2006 | No | | 2.0 | |
| Kidney Function Monitored | 2003–2004 | Yes | 2 | 17.3 | |
| LDL Control (<100 mg/dL) | 2003–2004 | Yes | 2 | 14.9 | |
| LDL Testing | 2003–2004 | Yes | 2 | 11.0 | |
| Blood Pressure Control (<130/80 mmHg) | 2006–2007 | No | | 2.0 | |
| Coronary Artery Disease | | | | | ** |
| LDL Control (<100 mg/dL) | 2007 | No | | 1.2 | |
| LDL Testing | 2007 | No | | 1.9 | |
| Uncomplicated Hypertension | | | | | 3.9 |
| Blood Pressure Control (<140/90 mmHg) | 2004–2005 | Yes | 2 | 9.1 | |
| Screening/Preventive Measures | | | | | 6.9 |
| Pneumococcal Vaccinations | 2007 | No | | 4.3 | ** |
| Breast Cancer* | 2004–2005 | Yes | 4 | 4.0 | 2.6 |
| Cervical Cancer*∆ | 2003–2005 | No | | 4.3 | 2.5 |
| Colorectal Cancer* | 2005 | Yes | 3 | 6.7 | 1.8 |

Source: Data reported to the Wisconsin Collaborative. Initial analysis by Matt Gigot, MS

Notes:

\* In 2007–2008, patients with a history of cancer were no longer excluded.

∆ Through 2007–2009 measurement period.

\*\* Not assessed in survey.

Performance of Collaborative participants over time

In the initial analysis, each measure was assessed to determine whether there was an improvement in the mean performance of the Collaborative as a whole. Using the group-level results reported to the Collaborative each year, performance on each measure was compared year to year. Analyses were performed to determine how many years were required to achieve statistically significant improvement for each measure, defined as a difference from baseline at greater than 95% confidence. Statistical testing included pairwise t-tests and Tukey’s range test (a method for analyzing multiple comparisons over time). Next, the trend within each group was analyzed. The documented performance for each annual report was compared with the baseline performance, controlling for year and for correlation among consecutive years within the same group. In addition, performance was compared among consecutive intervention years (year 1 vs year 2, year 2 vs year 3, year 3 vs year 4). The percent improvement by year and the rate of improvement for each group over the time period of participation were then estimated as a linear trend (slope), adjusting for group size and year. Finally, the groups were ordered by their rank during the year they first reported, and this rank was compared to the subsequent rate of improvement (slope).9,10 Groups with only 1 year of participation in a measure were excluded.
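As an illustration of the rank-versus-slope comparison described above, the following Python sketch fits an unadjusted least-squares trend to each group's yearly results and then computes R² between baseline rank and slope. It is a simplified stand-in, not the analysis used in this study: the study adjusted for group size, year, and correlation among years within a group, and this sketch does none of that. The group names and performance values are hypothetical.

```python
from statistics import mean


def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys regressed on xs (no adjustment)."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    return sxy / sxx


def r_squared(xs, ys):
    """Squared Pearson correlation between xs and ys."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    syy = sum((y - y_bar) ** 2 for y in ys)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    return (sxy * sxy) / (sxx * syy)


# Hypothetical yearly performance (percent of patients meeting a measure).
performance = {
    "group_a": [80.0, 81.0, 82.0, 83.0],
    "group_b": [70.0, 72.0, 74.0, 76.0],
    "group_c": [60.0, 63.0, 66.0, 69.0],
}

# Slope per group; groups with only one reported year are excluded.
slopes = {
    group: ols_slope(list(range(len(values))), values)
    for group, values in performance.items()
    if len(values) > 1
}

# Rank 1 is the group with the best performance in its first reported year.
baseline_order = sorted(slopes, key=lambda g: performance[g][0], reverse=True)
ranks = {group: position + 1 for position, group in enumerate(baseline_order)}

groups = sorted(slopes)
rank_vs_slope_r2 = r_squared(
    [ranks[g] for g in groups],
    [slopes[g] for g in groups],
)
```

In this toy data the lowest-ranked group has the steepest slope, so rank and slope are perfectly related; real group data would give a lower R².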

Survey

The University of Wisconsin Survey Center was contracted to conduct a mail survey of the physician groups and the related clinics. The survey addressed clinic characteristics, knowledge of Collaborative measures, whether projects had been undertaken specifically in response to Collaborative reporting, and specific types of quality improvement initiatives.11–15 Measures addressed included only those reported prior to 2007, including diabetes mellitus, hypertension, and cancer screening.

The strategy included a full mailing to the group leadership, a postcard reminder, a repeat mailing to those respondents who had not returned their surveys and reminder telephone calls before the first and last mailings to boost response rates.

Wisconsin Collaborative vs Non-Collaborative comparison: The Dartmouth Institute analysis

The Dartmouth Institute for Health Policy and Clinical Practice maintains a Medicare administrative dataset derived from a 20% sample of all fee-for-service, age-entitled Medicare beneficiaries in the United States with full Part A and Part B coverage. The Institute has developed a number of estimates of quality measures using these administrative data. At the time of this study, data were available from 2004 through 2007. The Institute worked with a subsidiary of IMS Health, an international company that supplies the pharmaceutical industry with sales data and consulting services, to identify physicians who work at specific sites. Using the site addresses for Collaborative member clinics, they were able to link a list of clinic sites to the physicians working at those sites. Within the Medicare dataset, patients were assigned to physicians annually based on a plurality of their outpatient visits, giving priority to primary care physicians. Through this linkage, patients were assigned to clinic sites and identified as “Collaborative” or “non-Collaborative”. Three control populations were created for comparison purposes: Wisconsin residents not assigned to Collaborative physicians; residents of Iowa and South Dakota combined; and the remainder of the United States. Iowa and South Dakota were selected because they had no active public reporting effort and because of an existing relationship between state physician leadership and Collaborative leadership. Four annual cohorts were created, one for each year of the study. Thus, assignment and location of study participants could change each year.
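The plurality-based assignment described above can be sketched in Python as follows. This is only an illustration under stated assumptions: the text does not say how priority to primary care physicians was implemented or how ties were resolved, so the sketch assumes that primary care visits are counted first when any exist and that ties go to the smallest physician ID. All identifiers, function names, and mappings are hypothetical.

```python
from collections import Counter


def assign_physician(visits, primary_care_ids):
    """Return the physician with a plurality of a patient's outpatient visits.

    visits: list of physician IDs, one entry per outpatient visit in a year.
    primary_care_ids: set of physician IDs treated as primary care.
    Assumptions (not specified in the study): if any primary care visits
    exist, only those are counted; ties go to the smallest ID.
    """
    if not visits:
        return None
    primary_visits = [p for p in visits if p in primary_care_ids]
    pool = primary_visits if primary_visits else visits
    counts = Counter(pool)
    top = max(counts.values())
    return min(p for p, n in counts.items() if n == top)


def label_patient(visits, primary_care_ids, physician_site, collaborative_sites):
    """Label a patient by the clinic site of the assigned physician."""
    physician = assign_physician(visits, primary_care_ids)
    site = physician_site.get(physician)
    return "Collaborative" if site in collaborative_sites else "non-Collaborative"


# Hypothetical example data for one patient-year.
primary_care = {"pcp1", "pcp2"}
physician_site = {"pcp1": "clinic_x", "pcp2": "clinic_y", "spec1": "clinic_z"}
collaborative_sites = {"clinic_x"}

visits = ["spec1", "spec1", "spec1", "pcp1", "pcp2", "pcp1"]
assigned = assign_physician(visits, primary_care)
label = label_patient(visits, primary_care, physician_site, collaborative_sites)
```

Because assignment is repeated for each annual cohort, the same patient could receive a different label in different years.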

Existing Dartmouth quality measures derived from the Medicare billing data that were similar to Collaborative measures included lipid profiles and glycohemoglobin tests for diabetics aged 65–75 and the rate of mammography for women aged 67–69. Dartmouth also captured the performance of diabetic eye exams, which is derived from the same cohort of patients and providers as the other diabetes process measures but is not a measure reported by the Collaborative. Performance in all 4 cohorts was analyzed in the same manner using the 4 quality measures derived from the administrative datasets. Multivariate analyses were performed to compare the Wisconsin Collaborative groups in aggregate to the three comparison groups, adjusting for differences in age and gender (diabetics only), race, education and income. Values for education level and income were based on the average for the patient’s ZIP code obtained from 2000 US Census data. Both the overall rate of test receipt and the rate of change were compared simultaneously. Statistical adjustment was performed to account for multiple comparisons.
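The reference list identifies the multiple-comparison adjustment as a Bonferroni adjustment, and the notes to Exhibit 4 give a required threshold of p < 0.017, which is consistent with dividing 0.05 across the three comparison groups. A minimal Python sketch of that arithmetic follows; the p-values are hypothetical and are not results from this study.

```python
def bonferroni_threshold(alpha, n_comparisons):
    """Per-comparison significance threshold under a Bonferroni adjustment."""
    return alpha / n_comparisons


def significant_after_bonferroni(p_values, alpha=0.05):
    """Flag each comparison whose p-value falls below the adjusted threshold."""
    threshold = bonferroni_threshold(alpha, len(p_values))
    return {name: p < threshold for name, p in p_values.items()}


# Three comparison groups, so the threshold is 0.05 / 3 (about 0.017).
threshold = bonferroni_threshold(0.05, 3)

# Hypothetical p-values for one measure, for illustration only.
hypothetical_p = {
    "rest_of_wisconsin": 0.030,
    "iowa_south_dakota": 0.020,
    "rest_of_us": 0.010,
}
flags = significant_after_bonferroni(hypothetical_p)
```

Under this rule a p-value of 0.03 would be below the conventional 0.05 level but would not count as significant after adjustment.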

Limitations

Because a randomized controlled study was not possible, there are several potential areas for bias or weakness. The decision to join the Wisconsin Collaborative is voluntary, and as such the members are highly motivated. Patients of members also tend to be somewhat more affluent and less likely to be on Medicaid than the comparison groups. Patients of lower socioeconomic status may be less likely to seek care and obtain recommended screening tests. Despite attempts to account for these differences using regression techniques in the analysis, this may create a bias in favor of better performance among WCHQ members. The group practice and clinic surveys were dependent on respondent recall. Any attribution of improvement efforts to Collaborative influence was potentially subjective. Finally, the Medicare analysis performed by the Dartmouth Institute was limited by the availability of the data to a 4-year time span. This was somewhat short relative to the time frames needed to observe improvement and may not allow for adequate comparisons.

Results

Twenty physician groups representing 582 affiliated clinics were members of the Wisconsin Collaborative and eligible to participate in the study. Two groups elected not to participate due to competing responsibilities. Two groups withdrew from the Collaborative during the study period. One group had recently merged with another member group and chose to report their historic data reflecting the two separate entities. Accordingly, analyses addressing performance of individual physician groups incorporate these 17 entities, representing 409 clinics. For analyses of Collaborative performance in aggregate, the data include the performance of all 20 physician groups.

Did performance on measures improve among WCHQ participants?

For the Collaborative as a whole, each measure showed an increase in the overall mean, ranging from a low of 1.2% for lipid (LDL cholesterol) control in coronary artery disease patients to a 17.3% improvement in monitoring for kidney disease in diabetics (Exhibit 1). A statistically significant improvement was seen in all measures that were implemented before the 2005–2006 reporting period and therefore had at least 3 years of reporting.

At the group level, substantially more groups improved significantly during the years that they reported to the Collaborative than stayed the same or worsened, with the exception of lipid (LDL cholesterol) testing in coronary artery disease (Exhibit 2). There was a strong correlation between the initial numerical rank of a group compared to its peers and the subsequent rate of improvement. In general, programs that were initially ranked the lowest compared to their counterparts improved at a greater rate, while the higher performing groups generally remained the same regardless of the overall compliance rate (Exhibit 2).

Exhibit 2.

Physician group level performance. Rate of improvement versus initial rank in Wisconsin Collaborative for Healthcare Quality (WCHQ).

| WCHQ Measure | Years Reported | # of groups improved (worsened) | Rate of Improvement (slope) | Rank vs slope (R²) |
|---|---|---|---|---|
| Diabetes | | | | |
| HbA1c Control (<7.0%) | 4 | 11(4) | 0.08* | 0.17 |
| HbA1c Testing | 5 | 13(2) | 0.04 | 0.71 |
| Microalbumin | 5 | 15(0) | 0.24* | 0.51 |
| LDL Control (<100 mg/dL) | 5 | 15(0) | 0.12* | 0.47 |
| LDL Testing | 5 | 15(0) | 0.17* | 0.30 |
| Blood Pressure Control (<130/80 mmHg) | 2 | 11(4) | 0.09 | 0.001 |
| Coronary Artery Disease | | | | |
| LDL Control (<100 mg/dL) | 2 | 8(8) | 0.06 | 0.34 |
| LDL Testing | 2 | 10(6) | 0.12* | 0.36 |
| Screening/Preventive Measures | | | | |
| Pneumococcal Vaccinations | 2 | 13(3) | 0.12* | 0.14 |
| Breast Cancer | 5 | 15(1) | 0.07* | 0.36 |
| Cervical Cancer | 4 | 12(4) | 0.07* | 0.35 |
| Colorectal Cancer | 4 | 14(2) | 0.11* | 0.27 |

Source: Data reported to the Wisconsin Collaborative

Notes:

\* Slope statistically > 0.0 (p < 0.05)

Did Collaborative members initiate improvement efforts in response to public reporting?

Of the 17 groups that responded to our survey, 6 reported that quality improvement was either centrally or regionally managed, and so returned either a single survey for all clinics or reported on subgroups of clinics which had the same quality improvement experience. The other 11 groups returned schedules filled out for each clinic. Combining the 2 approaches, we have clinic-level information on quality improvement activities for 409 or 72% of the original 582 clinics identified.

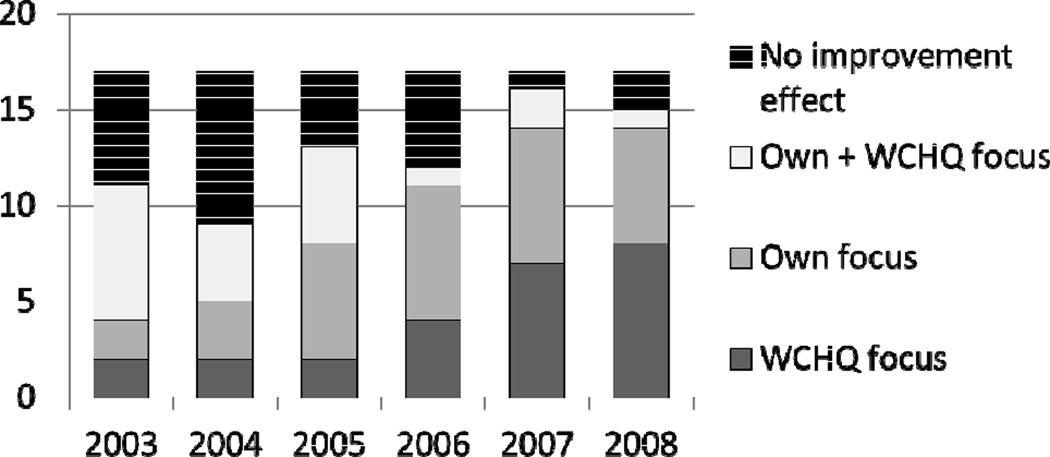

Sixteen of the groups responded to a direct question asking whether they formally chose to give priority to any of the Collaborative quality improvement measures and whether the decision was in response to reporting. It was common for member organizations to focus on Collaborative measures during the study period, and 15 groups reported formally giving priority to at least one quality improvement measure in response to Collaborative reporting. Nine groups indicated that their priorities were always or nearly always adopted in response to Collaborative reporting, while 6 sometimes did so (Exhibit 3).

Exhibit 3.

Self reported trigger for initiation of improvement intervention, Wisconsin Collaborative vs other source.

Source: University of Wisconsin Survey Center, group surveys

Notes: None

Groups reported a significant amount of activity in implementing systems and procedures to improve care quality and outcomes.17 As reported previously by our group, over time the mean number of quality improvement interventions for each condition increased, particularly for diabetes where the mean number of interventions rose from 5.0 (s.d.=3.9) to 8.7 (s.d.=4.5) between 2003 and 2008.17 For hypertension, there was a noticeable rise beginning in 2006 with the mean number of interventions adopted across clinics rising from 1.7 (s.d.=2.4) in 2006 to 3.9 (s.d.=2.7) in 2008. The most common initiatives implemented by Collaborative members at care sites were adopting guidelines (87%), and patient reminders (82%).

How did the rate of improvement compare between Collaborative participants and “non-Collaborative” participants?

Based on the Dartmouth analysis of Medicare billing data, Collaborative participants outperformed the comparator groups, including the rest of Wisconsin, the nearby states of Iowa and South Dakota, and the rest of the United States, in measures of glycohemoglobin testing and lipid testing in diabetics and breast cancer screening, all three of which are publicly reported through the Collaborative (Exhibit 4). In each of these measures, there was a trend toward the rate of improvement during the study years being higher for Wisconsin Collaborative participants, but this did not reach statistical significance. In contrast, patients in Iowa and South Dakota were more likely to have received a diabetes-related eye examination, which is not a measure publicly reported by the Collaborative (Exhibit 4).

Exhibit 4.

Comparisons between Wisconsin Collaborative for Healthcare Quality (WCHQ), the rest of Wisconsin, Iowa/South Dakota and the rest of the United States: demographics, performance in 2004 and 2007, and rate of annual change. Note that all 4 years 2004–2007 were included in analysis of change.

| | WCHQ | Non WCHQ, WI | IA/SD | The rest of the US |
|---|---|---|---|---|
| Demographics | | | | |
| Number (N) | 42,620 | 56,680 | 78,568 | 4,582,626 |
| Age (mean) | 76.6 | 76.8 | 76.9 | 76.3 |
| Female (%) | 59.9 | 60.0 | 60.2 | 60.2 |
| Black (%) | 1.6 | 1.7 | 0.7 | 7.5 |
| Medicaid during period (%) | 5.9 | 6.4 | 8.7 | 13.2 |
| 1999 Median Household Income ($) | 46,292 | 45,058 | 38,622 | 43,945 |
| Measures | | | | |
| Hgb A1c testing | | | | |
| 2004 % | 88.3** | 87.0 | 84.6 | 80.0 |
| 2007 % | 90.7** | 88.4 | 86.5 | 82.6 |
| Odds ratio annual change WCHQ vs | - | 1.06* | 1.06* | 1.05* |
| Eye testing | | | | |
| 2004 % | 73.2 | 70.3 | 75.5** | 67.4 |
| 2007 % | 73.8 | 71.3 | 76.6** | 68.6 |
| Odds ratio annual change WCHQ vs | - | 1.01 | 0.99 | 0.99 |
| Lipid testing | | | | |
| 2004 % | 79.4** | 77.9 | 72.9 | 76.1 |
| 2007 % | 85.2** | 82.4 | 78.3 | 80.2 |
| Odds ratio annual change WCHQ vs | - | 1.05 | 1.05* | 1.07** |
| Mammography | | | | |
| 2004 % | 74.9** | 73.5 | 70.2 | 67.4 |
| 2007 % | 76.8** | 73.8 | 71.0 | 67.4 |
| Odds ratio annual change WCHQ vs | - | 1.03 | 1.02 | 1.04 |

Source: The Dartmouth Institute analysis. Medicare administrative data.

Notes:

\* P < 0.05 (Note that due to multiple comparisons a p value < 0.017 was required for significance.)

\*\* P < 0.017

The patients in the comparison groups were comparable to Collaborative patients in terms of age and sex. The rest of Wisconsin was similar to the WCHQ participants in racial makeup, income and percentage of Medicaid patients. However, Iowa and South Dakota patients had lower incomes, were less likely to be black and were more likely to be on Medicaid. Patients in the rest of the United States also were more likely to have lower incomes, more likely to be on Medicaid and more likely to be black (Exhibit 4).

Conclusions

The three components of this study provide useful insight into the impact of voluntary public reporting of ambulatory measures on large independent physician provider groups. Although much of this evidence is circumstantial, this study takes advantage of "realist evaluation" methods, reflecting how concepts and improvement efforts are taken up in actual practice.18

Over the time frame of this study, which reflects the first 5 years of the Collaborative’s public reporting effort, the overall performance of Collaborative members as a whole improved significantly. All of the groups saw some improvement in a majority of the measures. In particular the groups whose baseline performance ranked the lowest among their peers tended to improve at the greatest rate. This occurred independently of the actual compliance rate or the spread between the top and bottom performers suggesting that it was more than just a regression to the mean or a ceiling effect among the top performers.

The survey component reinforced the concept that the annual public reports influenced improvement. Most participants, when surveyed, stated that they focused at least some improvement efforts in response to their performance on reported measures. More than half (9 of the 16 groups that answered the question) reported that their priorities were always or nearly always adopted in response to reported measures. Nevertheless, it was clear that none of the physician groups were able to address all of the Collaborative measures at the same time, suggesting that groups chose to focus their improvement efforts.

The Dartmouth Institute was able to provide an independent measurement of provider performance based solely on Medicare billing data, thus allowing a common platform to compare physician performance separate from the Collaborative’s internal data. It was reassuring to note that participants in the Collaborative tended to perform at a higher level than comparison groups in places where such public reporting is not available. Although performance on these measures improved elsewhere in the country as well, the members of the Collaborative consistently performed at a higher level and tended toward improving at a faster rate than the national comparison groups. Because the Collaborative members performed highly to begin with, it is difficult to attribute the rate of improvement solely to the public reporting effort. However, the role of public reporting of the measures is reinforced by the observation that on diabetic eye exams Collaborative members performed no better than the comparison groups. This measure involves the same diabetes patient population as the glycohemoglobin and lipid testing. All three are recommended as best practice nationally, yet only the eye exam was not publicly reported by the Collaborative. It is hard to identify a plausible explanation other than the influence of public reporting to explain why Collaborative members should perform so much better on glycohemoglobin and lipid testing and not eye examination. The findings of this study are consistent with those of Lawrence Casalino,11 who demonstrated that large practice groups are more likely to incorporate care management processes in response to incentives incorporating external recognition. It is not clear whether these findings can be extrapolated to small or medium size groups. In essence, public reporting creates a milieu in which practices compete for that recognition and strive to avoid the negative aspect of publicly being identified at the bottom of the list.

Those measures on which provider groups ranked the lowest compared to their peers were the measures most likely to demonstrate the most rapid improvement within that group. As such, comparative public reporting of quality measures was associated with overall improvement in performance on those measures among members of the groups participating. In our cohort, provider groups focused at least some of their improvement efforts on measures that were reported publicly, although most groups limited the number of measures on which they chose to work, suggesting that the nature and number of quality measures had to be chosen carefully. John M. Colmers has pointed out that the most successful approaches to public reporting and transparency have resulted from partnerships involving the public and private sectors as well as purchasers and providers.1 Certainly, this characterizes the nature of the Wisconsin Collaborative.

This study supports the concept that voluntary reporting of rigorously defined quality measures as done by the Collaborative helps to drive improvement for all participants. However, the significance of this project goes beyond the impact on the Wisconsin Collaborative. This is one of the first studies of the impact of public reporting on providers and provider groups. As such it addresses one of the key gaps in our knowledge of public reporting as emphasized by Fung3, and provides useful insights into how independent provider groups might respond to public reporting of ambulatory measures. Unfortunately, this study was not structured to determine whether specific improvement efforts correlated with observed outcomes. This remains an opportunity for further study.

From a public policy perspective, this study suggests that large group practices will engage in quality improvement efforts in response to public reporting, especially when comparative performance is displayed. The selection of measures being reported and the organization's relative performance on those measures will contribute to how the group prioritizes its efforts. No group was able to respond to all measures reported, suggesting that it is important to carefully select the measures that are chosen for public reporting efforts. Nevertheless, participation in a public reporting effort is associated with overall improvement for all the participants.

Acknowledgments

Financial Support: This study was supported through a grant from the Commonwealth Fund (No. 20080467). These results were presented at the annual meeting of the Society of General Internal Medicine, Phoenix, Arizona, May 2011, and at an invited presentation to the AQA alliance, Washington, D.C., October 2011. The article would not have been possible without the contributions of the study coordinator, Lucy Stewart; thoughtful editing by Alexandra Wright; statistical analyses contributed by Daniel Gottlieb, Daniel Eastwood, and Matt Gigot (in particular, Exhibit 1); and the unselfish efforts of the many site coordinators and members of the Wisconsin Collaborative for Healthcare Quality.

Contributor Information

Geoffrey C. Lamb, Email: glamb@mcw.edu, Office of Joint Clinical Quality, Department of Internal Medicine, Medical College of Wisconsin.

Maureen Smith, Departments of Population Health Sciences, Family Medicine, and Surgery Health Innovation Program, School of Medicine and Public Health, University of Wisconsin-Madison.

William B. Weeks, The Dartmouth Institute for Health Policy and Clinical Practice.

Christopher Queram, Wisconsin Collaborative for Healthcare Quality.

REFERENCES

- 1. Colmers JM. Public Reporting and Transparency. Commonwealth Fund Publication no. 988; Prepared for The Commonwealth Fund/Alliance for Health Reform 2007 Bipartisan Congressional Health Policy Conference; 2007 Jan. Downloaded December 16, 2009.

- 2. Marshall MN, Romano PS, Davies HTO. How do we maximize the impact of the public reporting of quality of care? Int J Qual Health Care. 2004;16(Suppl 1):i57–i63. doi: 10.1093/intqhc/mzh013.

- 3. Fung CH, Lim YW, Mattke S, Damberg C, Shekelle PG. Systematic Review: the Evidence that Publishing Patient Care Performance Data Improves Quality of Care. Ann Intern Med. 2008;148(2):111–123. doi: 10.7326/0003-4819-148-2-200801150-00006.

- 4. Hibbard JH, Stockard J, Tusler M. Does Publicizing hospital performance stimulate quality improvement efforts? Health Aff (Millwood). 2003;22(2):84–94. doi: 10.1377/hlthaff.22.2.84.

- 5. Hibbard J. Hospital Performance reports: impact on quality, market share and reputation. Health Aff (Millwood). 2005;24(4):1150–1160. doi: 10.1377/hlthaff.24.4.1150.

- 6. Romano PS. Improving the quality of hospital care in America. New Engl J Med. 2005;353(3):302–304. doi: 10.1056/NEJMe058150.

- 7. Greer AL. Embracing Accountability: Physician Leadership, Public Reporting, and Teamwork in the Wisconsin Collaborative for Healthcare Quality. The Commonwealth Fund. 2008 Jun.

- 8. Wisconsin Collaborative for HealthCare Quality website: http://www.wchq.org

- 9. Liang K, Zeger SL. Longitudinal Data Analysis Using Generalized Linear Models. Biometrika. 1986;73(1):13–22.

- 10. Robins JM, Rotnitzky A, Zhao LP. Analysis of semiparametric regression models for repeated outcomes in the presence of missing data. Journal of the American Statistical Association. 1995;90(429):106–121.

- 11. Casalino L, Gillies RR, Shortell SM, Schmittdiel JA, Bodenheimer T, Robinson JC, Rundall T, Oswald N, Schauffler H, Wang MC. External incentives, information technology, and organized processes to improve health care quality for patients with chronic diseases. JAMA. 2003;289(4):434–441. doi: 10.1001/jama.289.4.434.

- 12. Wagner EH, Austin BT, Davis C, Hindmarsh M, Schaefer J, Bonomi A. Improving chronic illness care: translating evidence into action. Health Aff (Millwood). 2001;20(6):64–78. doi: 10.1377/hlthaff.20.6.64.

- 13. Wagner EH. Chronic disease management: what will it take to improve care for chronic illness? Eff Clin Pract. 1998;1(1):2–4.

- 14. Bonomi AE, Wagner EH, Glasgow RE, VonKorff M. Assessment of chronic illness care (ACIC): a practical tool to measure quality improvement. Health Serv Res. 2002;37(3):791–820. doi: 10.1111/1475-6773.00049.

- 15. Alemi F, Safaie FK, Neuhauser D. A survey of 92 quality improvement projects. Jt Comm J Qual Improv. 2001;27(11):619–632. doi: 10.1016/s1070-3241(01)27053-9.

- 16. Bonferroni adjustment was performed due to multiple comparisons.

- 17. Smith MA, Wright A, Queram C, Lamb GC. Public Reporting helped drive quality improvement in outpatient diabetes care among Wisconsin physician groups. Health Aff (Millwood). 2012;31(2):570–577. doi: 10.1377/hlthaff.2011.0853.

- 18. Pawson R, Tilley N. Realistic Evaluation. London: Sage; 1997.