Abstract

How do people represent object meaning? It is now uncontentious that thinking about manipulable objects (e.g., pencils) activates brain regions underlying action. But is this activation part of the meaning of these objects, or is it merely incidental? The research we report here shows that when the hands are engaged in a task involving motions that are incompatible with those used to interact with frequently manipulated objects, it is more difficult to think about those objects—but not harder to think about infrequently manipulated objects (e.g., bookcases). Critically, the amount of manual experience with the object determines the amount of interference. These findings show that brain activity underlying manual action is part of, not peripheral to, the representation of frequently manipulated objects. Further, they suggest that people’s ability to think about an object changes dynamically on the basis of the match between their (experience-based) mental representation of its meaning and whatever they are doing at that moment.

Keywords: embodied cognition, semantic representations, individual experience, simulation, action concepts, cognition, semantic memory, cognitive neuroscience, language, meaning

“Have you seen my pencils?” asks Jakob’s sister. Jakob is in the kitchen, kneading dough. “Uhh . . .” is his only reply. Later, when his sister finds the pencils next to him, Jakob denies knowing that she was looking for them. Clearly, Jakob had difficulty attending to his sister because he was distracted by his task. But perhaps Jakob’s ability to understand “pencils” was also influenced by the fact that his hands (i.e., his pencil-using effectors) were otherwise engaged, and if his sister had asked about something less frequently manipulated (e.g., her eyeglasses), she would have had better luck. More generally, perhaps the ability to understand (and produce) language fluctuates moment by moment according to the overlap between the requirements of whatever task a person happens to be performing and his or her (experience-based) mental representation of the meaning of the words.

A central problem in cognition is how people’s mental representations are connected to the things they represent. Sensorimotor-based theories (e.g., Allport, 1985) circumvent this “grounding problem” by positing that the brain regions activated when people perceive and interact with an object are the same regions that store its meaning. This means that the kinds of experiences one has with an object should determine its representation. For instance, a pencil might be represented primarily in regions involved in vision, somatosensory perception, and manual action. In contrast, a tiger would (for most people) be represented primarily in regions involved in vision and perhaps auditory perception.

Consistent with this prediction, results from numerous studies have shown that thinking about manipulable objects activates motor information and/or brain regions underlying action. For instance, reading (or hearing) names of objects primes how those objects are grasped (e.g., Tucker & Ellis, 2004) and manipulated (Bub & Masson, 2012), and naming pictures of things that are interacted with manually (e.g., pencils) produces activation in premotor cortex (e.g., Chao & Martin, 2000).

However, this motor priming and motor-cortex activation may be incidental to the activation of the concept, rather than part of it (for discussion, see Mahon & Caramazza, 2008). This distinction is critical to theories of conceptual representation, yet little progress has been made toward resolving it because most evidence has come from the kind of neuroimaging or priming-of-action studies described earlier. Such findings, though suggestive, cannot directly address whether action information is part of conceptual representation: Even if thinking about pencils reliably and rapidly activates motor information, it remains logically possible that this activation is incidental to, rather than part of, the representation of pencils. Functional MRI (fMRI) studies cannot adjudicate between these possibilities; fMRI allows one to infer an influence of cognition on brain activity, but not an influence of brain activity on cognition (e.g., Weber & Thompson-Schill, 2010). Priming actions has an analogous limitation: One can infer that the cognitive process in question influenced the action, but not that the action influenced the cognitive process.

How might one directly address whether objects with which people have had significant motor experience are represented, in part, in motor areas? If sensorimotor-based theories are correct, then not only should hearing “pencil” activate the motor pattern associated with pencils (as demonstrated in the studies described earlier), but activating the motor pattern associated with pencils should partially activate the concept pencil (cf. studies of object recognition by Helbig, Steinwender, Graf, & Kiefer, 2010, and Walenski, Mostofsky, & Ullman, 2007). Further, making it difficult to activate the motor pattern associated with pencils should make it difficult to think about pencils.

Determining whether there is such an influence of motor activity on thought is the critical test case for whether motor knowledge is truly part of, rather than peripheral to, the representation of manually experienced objects (see Anderson & Spivey, 2009). Yet few studies have explored the influence of motor-area activity on object concepts. In one, transcranial magnetic stimulation (TMS) of the inferior parietal lobule (assumed by the authors to alter object-related action) delayed participants’ naming of pictures of manipulable objects but not of nonmanipulable objects (Pobric, Jefferies, & Lambon Ralph, 2010). In another study, squeezing a ball in one hand made it more difficult for participants to name tools whose handles faced the squeezing hand (Witt, Kemmerer, Linkenauger, & Culham, 2010). By showing that engaging motor regions has an impact on object naming, these findings suggest that motor information is indeed part of the representation of manipulated objects.

However, the evidence is indirect. For instance, Pobric et al. (2010) did not report whether stimulating the inferior-parietal-lobe site found to affect participants’ naming of pictures of manipulable objects also affected participants’ ability to perform manual actions. Further, in Witt et al. (2010), the effect was produced not by the object per se but by the orientation of its handle—which could indicate that motor affordance information about objects is calculated for specific instances on the fly, rather than being part of the long-term conceptual representation of objects. In fact, results from studies on brain-damaged participants with apraxia, who have problems performing object-related actions but can nevertheless recognize the same objects they have difficulty acting on, have been taken as indicating that motor activity may not be a constituent part of object knowledge (Negri et al., 2007; but see Myung et al., 2010). Hence, additional work is needed to determine whether motor information is part of object representations.

Moreover, the underlying cause of the influence of motor activity on thought has yet to be explored. Establishing the cause is essential, given that sensorimotor-based models require that experience with objects determines their representations (see Pulvermüller, 2001). This means that the extent to which motor activity is part of an object’s representation should not simply reflect some categorical property of the concept (e.g., grammatical class or object attribute—for instance, “manipulable” or “kickable”). Instead, it should reflect the amount of motoric experience with the object. Thus, past motoric (but not nonmotoric) experience with an object should determine the impact of motor activity on the ability to think about that object. This is what we explored in the current study.

In Experiment 1, participants made verbal judgments about whether heard words were concrete or abstract while performing a concurrent task and while performing no concurrent task (in alternate blocks). The concurrent task was either a manual patty-cake-like task (involving motions incompatible with the motions associated with the presented words) or a control task (mental rotation). Hence, all participants completed both a concurrent-task block and a no-concurrent-task block, but the type of concurrent task (manual or rotation) differed between participants. The logic behind this design was analogous to that employed in TMS studies, in which brain areas are temporarily disabled and effects on behavior are measured. Unlike using TMS, however, engaging the motor system with a concurrent task does not rely on assumptions about the precise neuroanatomical regions that support the primary and secondary tasks: If the secondary task selectively interferes with the primary one, we can infer that the brain areas engaged by the secondary task are causally involved in executing the primary task. We predicted that interference from the patty-cake task would be greatest when participants judged objects with which they had the greatest amount of manual experience. In Experiment 2, we tested whether the predicted pattern of interference was also observed during object naming.

Experiment 1

Method

Participants

Seventy-two participants (45 female, 27 male) were recruited from the University of Pennsylvania community and received $10 per hour or course credit in return for participating. All participants were native English speakers and had normal or corrected-to-normal vision and normal hearing. Thirty-six participants were assigned to the manual-task group and 36 to the mental rotation (control) group.

Materials and procedure

We recorded sound files for 208 concrete nouns and 70 abstract nouns (e.g., justice; see Table S1 in the Supplemental Material available online for a full list of stimuli). We used six closed-contour shapes (Fig. 1) as stimuli for the rotation task.

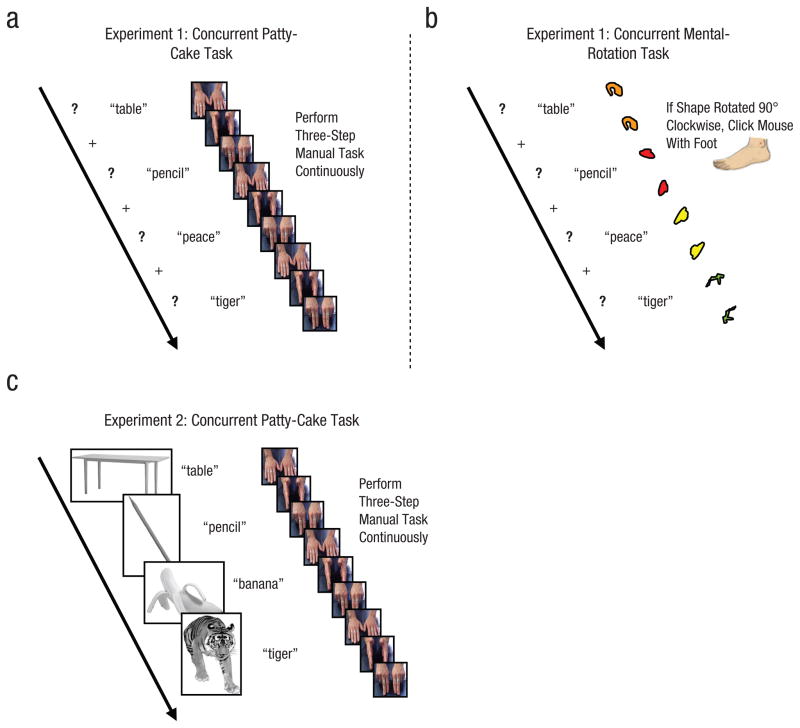

Fig. 1.

Structure of concurrent-task trials in Experiment 1 (semantic categorization task) and Experiment 2 (picture-naming task). In Experiment 1, participants performed a semantic categorization task (a, b) in which they heard spoken words (middle timelines). At the time the sound file for each word was played, a question mark appeared on the screen (left-most timelines), and participants made verbal judgments about whether heard words were concrete or abstract. On concurrent-task trials, one group of participants performed a concurrent patty-cake task, as illustrated by the images in the right-most timeline in (a), and another group performed a concurrent mental rotation task on visual shapes, as illustrated in the right-most timeline in (b). For this latter group, a shape appeared for 1 s before the presentation of each word in the semantic categorization task; after making a response, participants saw the same shape and clicked a computer mouse with their foot if it had rotated 90°. In Experiment 2, participants viewed a sequence of pictures, as illustrated by the left-most timeline in (c), and their task was to name the pictures (correct responses are indicated by the middle timeline). Concurrently, they performed the same patty-cake task as did the manual-task group in Experiment 1.

Stimuli were divided into two counterbalanced lists, such that each word occurred in concurrent-task (manual or rotation) and no-concurrent-task blocks (between subjects). Stimuli were presented in a fixed pseudorandom order (avoiding sequential semantically related words). There were 139 experimental trials (105 with concrete words and 34 with abstract words) per block. A voice key was used to record response times (RTs) for concrete/abstract judgments. Accuracy was coded off-line. Before completing the experimental trials, participants completed 20 practice trials, during which the experimenter gave feedback on performance of the concurrent tasks and on responding loudly enough to trigger the microphone.

In the no-concurrent-task block, participants simply made concrete/abstract judgments. In the patty-cake block, participants continuously performed a three-step manual task with both hands while making concrete/abstract judgments. The patty-cake task was carefully designed to involve only “nonsense” actions that were unlikely to be associated with the objects referred to by the words or, indeed, with any objects, particularly when performed as a continuous sequence. In the mental rotation block, a closed-contour shape appeared for 1 s before the presentation of the word for the concrete/abstract judgment. After making a judgment, participants saw the same shape and clicked a computer mouse (with their foot) if it had rotated 90° clockwise (true on 25% of trials; on the remaining trials, it was rotated 0°, 180°, or 270°). Figure 1 shows the trial structure and example stimuli used for the manual and rotation tasks. Block order was counterbalanced.
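The counterbalancing described above can be sketched as follows. This is a minimal illustration only: the text does not state that list assignment and block order were fully crossed, so the four-cell scheme, the cell labels, and the function name `assign_cells` are all assumptions.

```python
from itertools import product


def assign_cells(participant_ids):
    """Rotate participants through every list x block-order combination.

    Hypothetical scheme: "list_A"/"list_B" names the stimulus list heard in
    the concurrent-task block; the second label names which block comes first.
    """
    cells = list(product(("list_A", "list_B"),
                         ("concurrent_first", "baseline_first")))
    return {pid: cells[i % len(cells)] for i, pid in enumerate(participant_ids)}


# One task group of 36 participants, as in Experiment 1.
assignment = assign_cells(range(36))

# Count how many participants fall into each of the four cells.
counts = {}
for cell in assignment.values():
    counts[cell] = counts.get(cell, 0) + 1
```

Under this assumed scheme, a group of 36 divides evenly into the four cells, so each list and each block order is used equally often within a task group.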

After completing the two blocks of concrete/abstract judgments, participants heard each concrete word again and indicated whether they had more experience touching the object with their hands or looking at it, using a scale from 1 (more experience touching) to 7 (more experience looking).

Results

On the basis of the postexperimental ratings, for each subject, we divided the items into two conditions: Items whose scores were in the bottom tercile were assigned to the more-experience-touching condition (M = 1.9, SD = 0.6), and those whose scores were in the top tercile were assigned to the less-experience-touching condition (M = 6.8, SD = 0.3). For each condition, we computed the manual-interference effect (separately for error rates and RTs) as the difference between semantic categorization responses in the manual-task and no-concurrent-task blocks. The rotation-interference effect for each condition was calculated analogously. Table 1 shows the raw RTs and error rates used to calculate these interference effects. As the table indicates, responses were slower in the concurrent-task blocks than in the no-concurrent-task blocks, F(1, 70) = 30.4, p < .001, and participants whose concurrent task was mental rotation responded slightly more slowly overall than did those whose concurrent task was patty-cake, F(1, 70) = 4.5, p = .04. However, RTs revealed no differences between conditions on the interference-effect measure and are not described further. To satisfy the homogeneity-of-variance and normality assumptions of analysis of variance (ANOVA), the analysis of errors was performed on the interference-effect measure.
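The per-participant tercile split and the interference-effect measure described above can be sketched in a few lines. The function names, the trial record layout, and the toy data are hypothetical, and the handling of tied ratings is an assumption, because the text does not say how ties at the tercile boundaries were resolved.

```python
def tercile_split(ratings):
    """Return (more_touching, less_touching) item lists for one participant.

    Ratings run from 1 (more experience touching) to 7 (more experience
    looking), so the bottom third of the sorted ratings is "more touching".
    Ties are broken by sort order, which is an assumption of this sketch.
    """
    ordered = sorted(ratings, key=ratings.get)
    k = len(ordered) // 3
    return ordered[:k], ordered[-k:]


def interference_effect(trials, items):
    """Error rate (%) in the concurrent-task block minus the no-task block."""
    def error_rate(block):
        errors = [t["error"] for t in trials
                  if t["block"] == block and t["item"] in items]
        return 100.0 * sum(errors) / len(errors)
    return error_rate("concurrent") - error_rate("none")


# Toy data for one hypothetical participant (not taken from the study).
ratings = {"pencil": 1, "spoon": 2, "chair": 4,
           "lamp": 4, "tiger": 7, "moon": 7}
trials = [
    {"item": "pencil", "block": "concurrent", "error": 1},
    {"item": "spoon", "block": "none", "error": 0},
    {"item": "chair", "block": "concurrent", "error": 0},
    {"item": "lamp", "block": "none", "error": 0},
    {"item": "tiger", "block": "concurrent", "error": 0},
    {"item": "moon", "block": "none", "error": 0},
]

more, less = tercile_split(ratings)
effect_more = interference_effect(trials, more)
effect_less = interference_effect(trials, less)
```

Because each word was heard in only one block by a given participant, the two error rates that enter each difference are computed over different items from the same tercile.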

Table 1.

Raw Reaction Times and Error Rates Used to Calculate Interference Effects in Experiments 1 and 2

| Participant group and block | More experience touching: response time | More experience touching: error rate | Less experience touching: response time | Less experience touching: error rate |
|---|---|---|---|---|
| Manual-task participants (Experiment 1) | | | | |
| No concurrent task | 1,094 ms (22 ms) | 0.2% (0.1%) | 1,103 ms (21 ms) | 0.7% (0.2%) |
| Concurrent task | 1,187 ms (28 ms) | 1.4% (0.5%) | 1,183 ms (28 ms) | 0.6% (0.2%) |
| Rotation-task participants (Experiment 1) | | | | |
| No concurrent task | 1,176 ms (19 ms) | 0.3% (0.2%) | 1,175 ms (22 ms) | 0.4% (0.2%) |
| Concurrent task | 1,233 ms (23 ms) | 1.5% (0.5%) | 1,237 ms (26 ms) | 1.9% (0.5%) |
| Manual-task participants (Experiment 2) | | | | |
| No concurrent task | 961 ms (26 ms) | 11.2% (1.4%) | 1,031 ms (33 ms) | 16.4% (1.8%) |
| Concurrent task | 1,063 ms (35 ms) | 19.6% (1.8%) | 1,116 ms (41 ms) | 20.2% (1.7%) |

Note: Standard errors are shown in parentheses.
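As a worked example, the group-level interference effects for errors can be recovered from the mean error rates in Table 1, because a mean of differences equals the difference of the means. The values below are copied from the table; because the table entries are rounded, the resulting effects are approximate. The variable names are illustrative.

```python
# Mean error rates (%) from Table 1: (no concurrent task, concurrent task).
error_rates = {
    ("Exp. 1 manual", "more touching"): (0.2, 1.4),
    ("Exp. 1 manual", "less touching"): (0.7, 0.6),
    ("Exp. 1 rotation", "more touching"): (0.3, 1.5),
    ("Exp. 1 rotation", "less touching"): (0.4, 1.9),
    ("Exp. 2 manual", "more touching"): (11.2, 19.6),
    ("Exp. 2 manual", "less touching"): (16.4, 20.2),
}

# Interference effect = concurrent-task rate minus no-concurrent-task rate.
interference = {
    key: round(concurrent - baseline, 1)
    for key, (baseline, concurrent) in error_rates.items()
}

for (group, condition), effect in interference.items():
    print(f"{group:16s} {condition:14s} {effect:+.1f} percentage points")
```

In the manual-task rows, the effect is larger for the more-experience-touching items (about 1.2 vs. -0.1 percentage points in Experiment 1 and 8.4 vs. 3.8 in Experiment 2), whereas the rotation-task rows show no such advantage (about 1.2 vs. 1.5).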

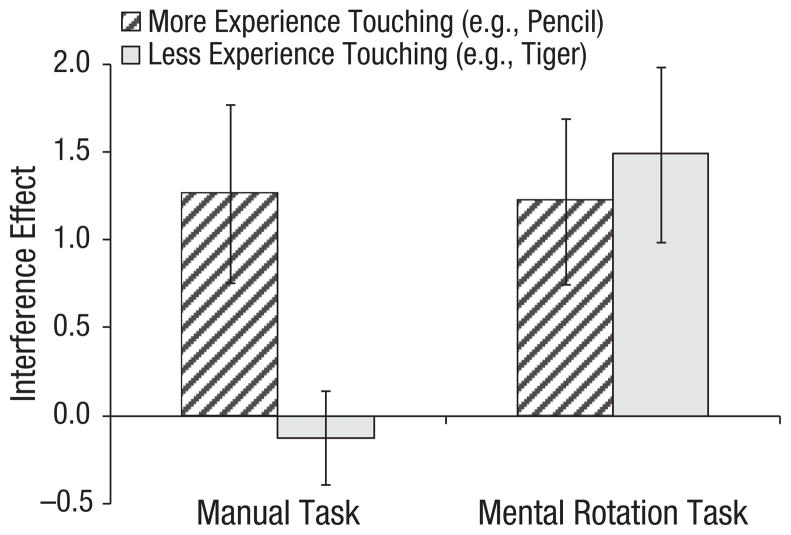

Our primary hypothesis was that the extent to which conceptual judgments about objects are affected by a secondary task depends both on the nature of the secondary task (manual vs. visuospatial) and on the individual’s motor history with the objects (amount of experience touching them). This hypothesis was supported by a reliable interaction between task type and manual experience on the magnitude of the interference effect, F(1, 70) = 4.5, p = .04. There was no main effect of task type, F(1, 70) = 2.5, p = .12. (However, there was a trend toward greater interference from the rotation task than from the manual task, which suggests that rotation was slightly more difficult.) There was no main effect of manual experience, F(1, 70) = 2.0, p = .16. Planned comparisons revealed an effect of manual experience on the magnitude of the manual-interference effect, t(35) = 2.1, p = .04, but not on the rotation-interference effect, t(35) = −0.6, p = .54 (Fig. 2).¹

Fig. 2.

Results from Experiment 1: mean interference-effect scores as a function of concurrent task and object condition. For both the manual task and the mental rotation task, interference scores were calculated by subtracting semantic categorization error rates in the no-concurrent-task block from semantic categorization error rates in the concurrent-task block. Error bars show standard errors of the mean.

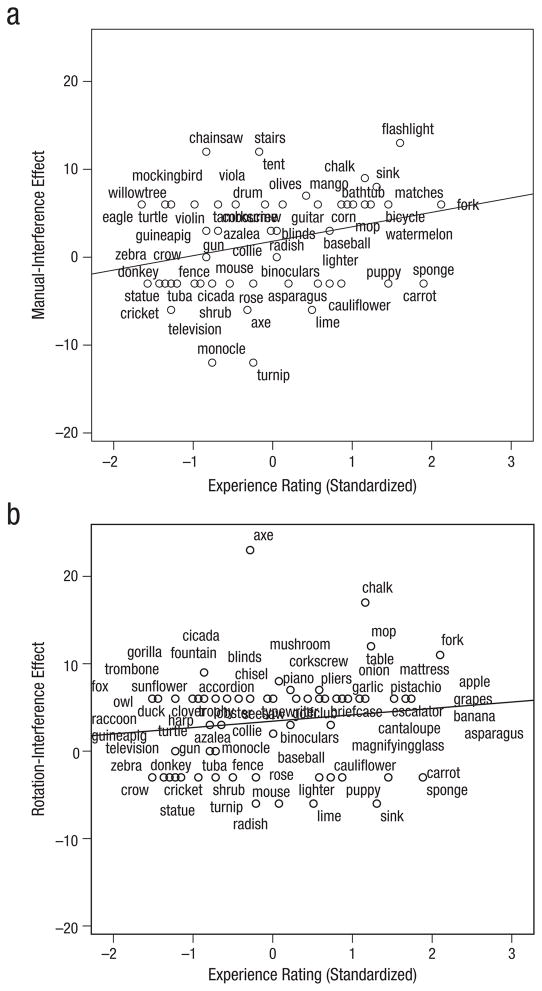

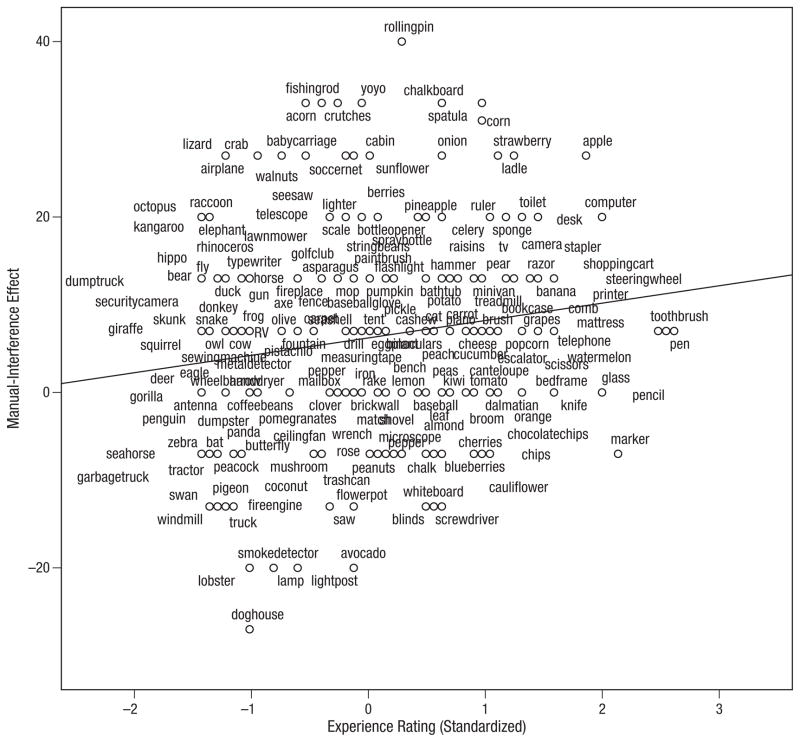
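In a design with one two-level between-participants factor (task type) and one two-level within-participants factor (manual experience), the interaction F is equal to the square of a pooled-variance t statistic computed on each participant's difference score (interference for more-touching items minus interference for less-touching items). The sketch below illustrates that equivalence on hypothetical scores; it is not a reanalysis of the reported data, and the function name `pooled_t` is illustrative.

```python
from math import sqrt
from statistics import mean, variance


def pooled_t(a, b):
    """Independent-samples t statistic with pooled variance."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    return (mean(a) - mean(b)) / sqrt(pooled_var * (1 / na + 1 / nb))


# Hypothetical per-participant difference scores (percentage points):
# interference for more-touching items minus interference for less-touching items.
manual_diff = [2.0, 0.0, 3.0, 1.0, 2.0, 1.0]
rotation_diff = [0.0, -1.0, 1.0, 0.0, -1.0, 1.0]

t_stat = pooled_t(manual_diff, rotation_diff)
f_interaction = t_stat ** 2          # equals the interaction F in this design
df_error = len(manual_diff) + len(rotation_diff) - 2
```

With 36 participants per group, the same formula gives 36 + 36 - 2 = 70 error degrees of freedom, matching the F(1, 70) reported for the interaction.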

We also tested whether, on an object-by-object basis, amount of past manual experience with an object determined the impact of motor activity on conceptual access. That is, we used experience ratings as a continuous rather than a binary variable to predict the magnitude of the manual-interference effect across all items that elicited errors (N = 55 for the manual task and N = 70 for mental rotation). We found that more experience touching an object led to a larger manual-interference effect for that object, r = .28, p = .04 (Fig. 3a). There was no relationship between past experience touching objects and the rotation-interference effect, r = .13, p = .27 (Fig. 3b).

Fig. 3.

Results from Experiment 1: scatter plot (with best-fitting regression line) showing the relationship between interference-effect scores and ratings of experience touching objects for the manual task (a) and the mental rotation task (b). For both the manual task and the mental rotation task, interference scores were calculated by subtracting semantic categorization error rates in the no-concurrent-task block from semantic categorization error rates in the concurrent-task block.
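The item-level analysis can be illustrated with a plain Pearson correlation and the standard conversion from r to a t statistic with N - 2 degrees of freedom. The helper names are hypothetical, and the conversion is applied only to the r and N values reported in the text, as a consistency check rather than a reanalysis.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def t_from_r(r, n):
    """t statistic (df = n - 2) for testing a correlation against zero."""
    return r * sqrt(n - 2) / sqrt(1 - r * r)


# Sanity check on toy data that are perfectly linear.
r_toy = pearson_r([1, 2, 3], [2, 4, 6])

# Values reported in the text for Experiment 1.
t_manual = t_from_r(0.28, 55)    # manual task, 55 items
t_rotation = t_from_r(0.13, 70)  # mental rotation, 70 items
```

The manual-task correlation corresponds to a t of roughly 2.1 on 53 degrees of freedom, and the rotation-task correlation to a t of roughly 1.1 on 68, which is in line with the reported significant and nonsignificant p values, respectively.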

Hence, in Experiment 1, we found that one’s amount of past experience touching an object influences one’s ability to access its representation when performing a manual task involving actions that are incompatible with those associated with the object. Critically, because each item appeared in each task, differences between items were controlled for—thus, for the same items, manual experience modulated interference in the manual task but not in the rotation task. Before discussing the implications of this finding, we describe Experiment 2, in which we tested whether past experience touching objects also modulated the influence of concurrent manual behavior during performance of a different task: picture naming. Whereas one could argue that concrete/abstract judgments elicit imagined touching (and that the manual task affected this incidental imagery process), it is less plausible that picture naming does. Therefore, an experience-dependent manual-interference effect on picture naming would provide even stronger evidence that motoric information is part of the representation of frequently handled objects. Moreover, the overall error rate in Experiment 1 was low, and several participants made no errors. We therefore asked whether the findings would generalize to a different task that might elicit more errors.

Experiment 2

Method

Participants

Thirty participants (24 female, 6 male) were recruited from the University of Pennsylvania community and received $10 per hour or course credit in return for participating. All participants were native English speakers and had normal or corrected-to-normal vision.

Materials and procedure

Stimuli were 218 gray-scale photographs of concrete objects. Only images determined by a pretest (N = 20) to have high name agreement were used. Most photographs depicted objects whose names were used in Experiment 1. (See Table S1 in the Supplemental Material.) In a second pretest, for each image, participants (N = 20) were asked, “How much experience do you have touching this object with your hands?”; responses were made using a scale from 1 (low manual experience) to 7 (high manual experience). Pretest participants did not participate in the picture-naming task or in Experiment 1.

Images were presented one at a time and remained on the screen until a voice key registered the participant’s response. The experimenter coded responses for accuracy on-line. Responses were coded as correct only if they matched an expected name (i.e., a normed name, as determined by the pretest) and the voice key was triggered accurately (e.g., not by a disfluent false start such as “uhh” or “um”). Errors were primarily disfluent false starts, omissions (e.g., “don’t know”), and semantic substitutions. Each participant performed both a patty-cake block and a no-concurrent-task block. In the patty-cake block, participants performed the three-step manual task used in Experiment 1 continuously while naming the pictures. In the no-concurrent-task block, participants simply named the pictures. (Because Experiment 1 revealed no relationship between rotation interference and experience with objects, we did not include a rotation block in Experiment 2.) As in Experiment 1, stimuli were divided into two counterbalanced lists, such that each picture occurred in both the no-concurrent-task block and the concurrent-task block (between subjects). Block order was counterbalanced. Participants completed 30 practice trials before beginning the experimental trials.

Results

As in Experiment 1, we used manual-experience ratings to divide items into terciles, creating two conditions: more experience touching (top tercile; M = 4.7, SD = 0.7) and less experience touching (bottom tercile; M = 1.4, SD = 0.4). Table 1 shows the raw RTs and error rates used to calculate the manual-interference effects (computed as in Experiment 1). As in Experiment 1, responses were slower in the concurrent-task block than in the no-concurrent-task block, F(1, 29) = 10.8, p = .003. Also, pictures of objects that participants had less experience touching were named more slowly overall than were pictures of objects that participants had more experience touching, F(1, 29) = 8.9, p = .006. As in Experiment 1, RTs revealed no differences between conditions on the interference-effect measure, so we again focus on errors. Unlike in Experiment 1, all participants made errors in Experiment 2.

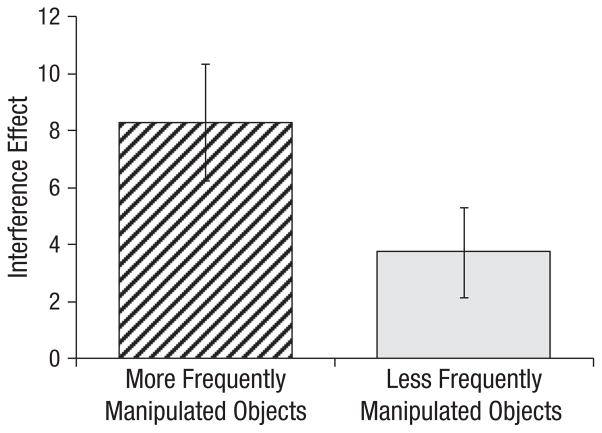

Once again, the analysis of errors was performed on the interference-effect measure. Consistent with our experience-based hypothesis, results again revealed a reliable effect of manual experience on the magnitude of the interference effect, t(29) = 2.1, p = .04 (Fig. 4). As in Experiment 1, we also found that across all objects that elicited errors (N = 211), there was a relationship between amount of experience touching an object and manual interference when naming it, r = .16, p = .02 (Fig. 5).

Fig. 4.

Results from Experiment 2: mean interference-effect scores as a function of object condition. Interference scores were calculated by subtracting picture-naming error rates in the no-concurrent-task block from picture-naming error rates in the concurrent-task (patty-cake) block. Error bars show standard errors of the mean.

Fig. 5.

Results from Experiment 2: scatter plot (with best-fitting regression line) showing the relationship between interference-effect scores and ratings of experience touching objects. Interference scores were calculated by subtracting picture-naming error rates in the no-concurrent-task block from picture-naming error rates in the concurrent-task (patty-cake) block.

Hence, in Experiment 2 we again showed that an incompatible manual task can interfere with the ability to think about frequently touched objects—specifically, it can interfere with the ability to produce their names.

Discussion

Our results showed that when participants made concrete/abstract judgments about an object, the degree to which performing a concurrent manual task increased response difficulty was related to how much experience they had touching the object. The same pattern was observed for object naming. The absence of an experience-modulated interference effect of mental rotation in Experiment 1 demonstrates that the observed effect was not a general effect of task difficulty (if anything, the rotation task was slightly more difficult overall than the manual task was). Moreover, the fact that there was a significant correlation between patty-cake interference and manual experience across items, and that this correlation appeared in both experiments, attests to the generalizability of the results.

Thus, the amount of manual experience with an object predicts the degree to which thinking about that object is disrupted by performing an incompatible manual task. This suggests that the motor system’s role in conceptual representations is determined by experience. Further, by demonstrating a direct link between the engagement of the motor system and the ability to access and produce the names of frequently manipulated objects, our work establishes a causal role for the motor system in conceptual representations.

Our findings are consistent with a large body of work that has focused on the role of the motor system in thinking about action (e.g., Bak et al., 2006; Boulenger et al., 2008; Cotelli et al., 2007; Damasio & Tranel, 1993; Neininger & Pulvermüller, 2003), particularly action-language comprehension (Glenberg, Sato, & Cattaneo, 2008; Pulvermüller, Hauk, Nikulin, & Ilmoniemi, 2005; Shebani & Pulvermüller, 2013). However, our work breaks new ground by demonstrating that the motor system’s role generalizes beyond action understanding; it can also be a part of object representation. This generalization is crucial: Sensorimotor-based theories concern all concepts, but until now, the notion that motor information is a constituent part of object representations has not been put to a strong test. Moreover, whereas prior work has focused on categorical distinctions between stimuli (e.g., action verbs vs. nouns), we have shown directly that the relevant feature, manual experience, predicts disruption by performance of a manual task. Although it has been assumed that prior effects had to do with manual experience, this assumption has never before been tested.²

If frequently manipulated objects are partially represented in motor areas, why did we predict (and observe) interference from the manual task, rather than facilitation by it? The manual task was constructed to involve actions unlikely to be performed on the presented objects (or, indeed, on any objects). If participants had instead performed actions that were congruent with each object’s use, we would have predicted facilitation (see Bub, Masson, & Bukach, 2003, for a study exploring the influence of task performance on congruency effects).³ This prediction is supported by evidence that seeing (or hearing the name of) a manipulable object partially activates similarly manipulated objects (Campanella & Shallice, 2011; Helbig, Graf, & Kiefer, 2006; Kiefer, Sim, Helbig, & Graf, 2011; Myung, Blumstein, & Sedivy, 2006). Such evidence might suggest a different explanation of the findings: that performing the patty-cake task activated the representations of objects manipulated with similar motions, and it was these active representations (rather than the actions per se) that interfered with the representations of objects with which participants had more manual experience.

Given that the sequence of motions involved in the patty-cake task was expressly selected to be dissimilar to actions associated with any objects, we consider this possibility unlikely. Nevertheless, such an account would be compatible with the interpretation we have proposed: Because the interference is specific to objects with which people have more manual experience (and is correlated with such experience), any representations activated by the patty-cake task would have to be interfering with manipulation-related knowledge that is part of the concepts for to-be-categorized or to-be-named objects. In either case, the underlying cause of the interference effect is object-based manipulation-related knowledge.

Findings from neuroimaging studies demonstrating that motor areas become active when people think about manipulable objects (e.g., Chao & Martin, 2000) are often interpreted as evidence that the representation of manipulated objects involves motor areas. However, it has been argued that such results are also consistent with motor-area activation being incidental to, rather than a functional part of, the representations (e.g., Mahon & Caramazza, 2008). By showing that engaging motor areas interferes with the ability to think about frequently manipulated objects, our results demonstrate that motor-area activity is indeed a constitutive part of the representation of manipulated objects. Notably, the study reported here is a case in which a comparatively simple manipulation (asking participants to make hand motions) addresses the question of interest more directly than could any functional-neuroimaging method currently in vogue.

Critically, we also tested a fundamental but rarely explicitly examined premise of sensorimotor-based theories: that the underlying factor driving motor activity’s influence on conceptual representation is experience. In both of our experiments, we found that on an item-by-item basis, amount of experience touching an object predicted the amount of interference produced by the manual task. This pattern suggests that past experience touching objects indeed shapes these representations. Given that such experience varies across individuals (sometimes significantly), these results also suggest that the influence of motor activity on conceptual access should vary across individuals, just as the influence of conceptual access on motor-region activity varies with experience (e.g., Kan, Kable, Van Scoyoc, Chatterjee, & Thompson-Schill, 2006; Kiefer, Sim, Liebich, Hauk, & Tanaka, 2007; Weisberg, van Turennout, & Martin, 2007; Willems, Hagoort, & Casasanto, 2010; see also Beilock, Lyons, Mattarella-Micke, Nusbaum, & Small, 2008, and Casasanto & Chrysikou, 2011, for related findings). To return to our opening example, perhaps if Jakob’s younger brother, Stephen (a technophile who never uses pencils), had been the one kneading dough, he would have had no difficulty understanding “pencil” because his representation of pencil would not contain motor knowledge.

Future work should explore the extent to which personal experience, observation (e.g., of others manipulating pencils), and cultural knowledge (e.g., knowing that most people do manipulate pencils) each contribute to an object’s representation. It would also be fruitful to explore whether experience can similarly predict other sensorimotor-based aspects of conceptual knowledge. For instance, if Stephen’s representation of pencil were primarily visual, perhaps an appropriate interfering visual task would make it more difficult for him to think about pencils.

It is important to emphasize that showing that motor information is part of the representation of manually experienced objects is quite different from claiming that it is necessary in order to have any representation of them. Conceptual representations, even for frequently handled objects, can include many different components—for instance, visual, auditory, olfactory, and action-oriented information, as well as nonsensorimotor information, such as encyclopedic knowledge (e.g., that pencils contain graphite). Thus, being unable to access part of a representation does not entail losing the entire concept—just as losing one finger from a hand does not entail loss of the entire hand. The findings reported here highlight this point: Even for objects with which participants had a high degree of manual experience, the patty-cake task did not dramatically increase errors. In fact, this may also explain why brain damage leading to problems performing an action with a particular object does not entail difficulty recognizing that object (Negri et al., 2007)—the object may be recognizable on the basis of other aspects of its representation (and the extent to which there are other aspects to rely upon may vary across individuals).

In sum, our work shows that not only does thinking about manipulable objects influence activity in motor areas, but activity in motor areas influences people’s ability to think about manipulable objects. Hence, motor area activity is not “peripheral” to object concepts—it is part of them: The sensorimotor activity evoked when something is interacted with (i.e., experienced) appears to become part of its meaning. Further, our findings suggest that the ability to use language can change moment by moment according to the match between one’s (experience-based) mental representations of words’ meanings and the requirements of whatever one happens to be doing.

Acknowledgments

We thank Miriam Akbar, Cindy Navarro, and Alexa Levesque for help creating stimuli and running participants and Katherine White, Michael Spivey, Gerry Altmann, and members of the Thompson-Schill Lab for helpful comments. Portions of this research were presented at the 2010 and 2011 annual meetings of the Cognitive Neuroscience Society.

Funding

The work reported here was supported by National Institutes of Health Grant R01MH70850 to S. L. T.-S.

Footnotes

Given that the rotation task placed demands on visual memory and that less experience touching objects corresponded to more experience looking at them, one might wonder why the less-experience-touching condition did not elicit more errors during the mental-rotation task. We suspect that this was because the visual systems that support mental rotation are not those that (primarily) support visual object recognition (Farah & Hammond, 1988; Gauthier et al., 2002).

To further determine whether the observed effects should be attributed to experience per se, we tested the effect of manual experience when object kind (i.e., artifact or natural) was partialed out. Because object kind was correlated with manual experience (Experiment 1: r = .29; Experiment 2: r = .28), the effects were weaker when this variable’s influence was removed. Nevertheless, for both experiments, the pattern remained the same: Manual experience was positively correlated with errors in naming (r = .15, p = .03) and semantic categorization (although this effect was not significant; r = .21, p = .12).
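For readers unfamiliar with partialing out a covariate, the computation can be sketched as follows. This is a minimal illustration of a first-order partial correlation, not the analysis code used for the experiments; the function names and the per-item values are hypothetical and were invented solely for the example.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def partial_r(x, y, z):
    """First-order partial correlation of x and y with z partialed out."""
    r_xy = pearson_r(x, y)
    r_xz = pearson_r(x, z)
    r_yz = pearson_r(y, z)
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


# Hypothetical per-item values (NOT data from the experiments):
# rated manual experience, object kind (1 = artifact, 0 = natural),
# and interference (extra errors under the manual task).
manual_experience = [6.1, 5.4, 2.0, 4.8, 1.5, 3.2, 6.5, 2.7]
object_kind = [1, 1, 0, 1, 0, 0, 1, 0]
interference = [0.08, 0.05, 0.01, 0.06, 0.02, 0.00, 0.07, 0.03]

zero_order = pearson_r(manual_experience, interference)
controlled = partial_r(manual_experience, interference, object_kind)
print(f"zero-order r = {zero_order:.2f}; "
      f"partial r (object kind removed) = {controlled:.2f}")
```

When the covariate is correlated with both variables, as object kind was with manual experience, the partial correlation will typically differ from the zero-order correlation; when the covariate is uncorrelated with both, the two values coincide.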

We elected to use an interfering task because of the difficulty of inducing such a facilitation effect without participants becoming aware of the nature of the manipulation.

Declaration of Conflicting Interests

The authors declared that they had no conflicts of interest with respect to their authorship or the publication of this article.

References

- Allport DA. Distributed memory, modular subsystems and dysphasia. In: Newman SK, Epstein R, editors. Current perspectives in dysphasia. Edinburgh, Scotland: Churchill Livingstone; 1985. pp. 207–244.

- Anderson SE, Spivey MJ. The enactment of language: Decades of interactions between linguistic and motor processes. Language and Cognition. 2009;1:87–111.

- Bak TH, Yancopoulou D, Nestor PJ, Xuereb JH, Spillantini MG, Pulvermüller F, Hodges JR. Clinical, imaging and pathological correlates of a hereditary deficit in verb and action processing. Brain. 2006;129:321–332. doi: 10.1093/brain/awh701.

- Beilock SL, Lyons IM, Mattarella-Micke A, Nusbaum HC, Small SL. Sports experience changes the neural processing of action language. Proceedings of the National Academy of Sciences, USA. 2008;105:13269–13273. doi: 10.1073/pnas.0803424105.

- Boulenger V, Mechtouff L, Thobois S, Broussolle E, Jeannerod M, Nazir TA. Word processing in Parkinson’s disease is impaired for action verbs but not for concrete nouns. Neuropsychologia. 2008;46:743–756. doi: 10.1016/j.neuropsychologia.2007.10.007.

- Bub DN, Masson MEJ. On the dynamics of action representations evoked by names of manipulable objects. Journal of Experimental Psychology: General. 2012;141:502–517. doi: 10.1037/a0026748.

- Bub DN, Masson MEJ, Bukach CM. Gesturing and naming: The use of functional knowledge in object identification. Psychological Science. 2003;14:467–472. doi: 10.1111/1467-9280.02455.

- Campanella F, Shallice T. Manipulability and object recognition: Is manipulability a semantic feature? Experimental Brain Research. 2011;208:369–383. doi: 10.1007/s00221-010-2489-7.

- Casasanto D, Chrysikou EG. When left is “right”: Motor fluency shapes abstract concepts. Psychological Science. 2011;22:419–422. doi: 10.1177/0956797611401755.

- Chao LL, Martin A. Representation of manipulable man-made objects in the dorsal stream. NeuroImage. 2000;12:478–484. doi: 10.1006/nimg.2000.0635.

- Cotelli M, Borroni B, Manenti R, Zanetti M, Arévalo A, Cappa SF, Padovani A. Action and object naming in Parkinson’s disease without dementia. European Journal of Neurology. 2007;14:632–637. doi: 10.1111/j.1468-1331.2007.01797.x.

- Damasio AR, Tranel D. Nouns and verbs are retrieved with differently distributed neural systems. Proceedings of the National Academy of Sciences, USA. 1993;90:4957–4960. doi: 10.1073/pnas.90.11.4957.

- Farah MJ, Hammond KH. Mental rotation and orientation-invariant object recognition: Dissociable processes. Cognition. 1988;29:29–46. doi: 10.1016/0010-0277(88)90007-8.

- Gauthier I, Hayward WG, Tarr MJ, Anderson AW, Skudlarski P, Gore JC. BOLD activity during mental rotation and viewpoint-dependent object recognition. Neuron. 2002;34:161–171. doi: 10.1016/s0896-6273(02)00622-0.

- Glenberg AM, Sato M, Cattaneo L. Use-induced motor plasticity affects the processing of abstract and concrete language. Current Biology. 2008;18:R290–R291. doi: 10.1016/j.cub.2008.02.036.

- Helbig HB, Graf M, Kiefer M. The role of action representations in visual object recognition. Experimental Brain Research. 2006;174:221–228. doi: 10.1007/s00221-006-0443-5.

- Helbig HB, Steinwender J, Graf M, Kiefer M. Action observation can prime visual object recognition. Experimental Brain Research. 2010;200(3–4):251–258. doi: 10.1007/s00221-009-1953-8.

- Kan IP, Kable JW, Van Scoyoc A, Chatterjee A, Thompson-Schill SL. Fractionating the left frontal response to tools: Dissociable effects of motor experience and lexical competition. Journal of Cognitive Neuroscience. 2006;18:267–277. doi: 10.1162/089892906775783723.

- Kiefer M, Sim EJ, Helbig HB, Graf M. Tracking the time course of action priming on object recognition: Evidence for fast and slow influences of action on perception. Journal of Cognitive Neuroscience. 2011;23:1864–1874. doi: 10.1162/jocn.2010.21543.

- Kiefer M, Sim EJ, Liebich S, Hauk O, Tanaka J. Experience-dependent plasticity of conceptual representations in human sensory-motor areas. Journal of Cognitive Neuroscience. 2007;19:525–542. doi: 10.1162/jocn.2007.19.3.525.

- Mahon BZ, Caramazza A. A critical look at the embodied cognition hypothesis and a new proposal for grounding conceptual content. Journal of Physiology-Paris. 2008;102:59–70. doi: 10.1016/j.jphysparis.2008.03.004.

- Myung J, Blumstein SE, Sedivy JC. Playing on the typewriter and typing on the piano: Manipulation knowledge of objects. Cognition. 2006;98:223–243. doi: 10.1016/j.cognition.2004.11.010.

- Myung J, Blumstein SE, Yee E, Sedivy JC, Thompson-Schill SL, Buxbaum LJ. Impaired access to manipulation features in apraxia: Evidence from eyetracking and semantic judgment tasks. Brain & Language. 2010;112:101–112. doi: 10.1016/j.bandl.2009.12.003.

- Negri GAL, Rumiati RI, Zadini A, Ukmar M, Mahon BZ, Caramazza A. What is the role of motor simulation in action and object recognition? Evidence from apraxia. Cognitive Neuropsychology. 2007;24:795–816. doi: 10.1080/02643290701707412.

- Neininger B, Pulvermüller F. Word-category specific deficits after lesions in the right hemisphere. Neuropsychologia. 2003;41:53–70. doi: 10.1016/s0028-3932(02)00126-4.

- Pobric G, Jefferies E, Lambon Ralph MA. Category-specific versus category-general semantic impairment induced by transcranial magnetic stimulation. Current Biology. 2010;20:964–968. doi: 10.1016/j.cub.2010.03.070.

- Pulvermüller F. Brain reflections of words and their meaning. Trends in Cognitive Sciences. 2001;5:517–524. doi: 10.1016/s1364-6613(00)01803-9.

- Pulvermüller F, Hauk O, Nikulin VV, Ilmoniemi RJ. Functional links between motor and language systems. European Journal of Neuroscience. 2005;21:793–797. doi: 10.1111/j.1460-9568.2005.03900.x.

- Shebani Z, Pulvermüller F. Moving the hands and feet specifically impairs working memory for arm- and leg-related action words. Cortex. 2013;49:222–231. doi: 10.1016/j.cortex.2011.10.005.

- Tucker M, Ellis R. Action priming by briefly presented objects. Acta Psychologica. 2004;116:185–203. doi: 10.1016/j.actpsy.2004.01.004.

- Walenski M, Mostofsky SH, Ullman MT. Speeded processing of grammar and tool knowledge in Tourette’s syndrome. Neuropsychologia. 2007;45:2447–2460. doi: 10.1016/j.neuropsychologia.2007.04.001.

- Weber MJ, Thompson-Schill SL. Functional neuroimaging can support causal claims about brain function. Journal of Cognitive Neuroscience. 2010;22:2415–2416. doi: 10.1162/jocn.2010.21461.

- Weisberg J, Turennout M, Martin A. A neural system for learning about object function. Cerebral Cortex. 2007;17:513–521. doi: 10.1093/cercor/bhj176.

- Willems RM, Hagoort P, Casasanto D. Body-specific representations of action verbs: Neural evidence from right- and left-handers. Psychological Science. 2010;21:67–74. doi: 10.1177/0956797609354072.

- Witt JK, Kemmerer D, Linkenauger SA, Culham J. A functional role for motor simulation in naming tools. Psychological Science. 2010;21:1215–1219. doi: 10.1177/0956797610378307.
