Abstract

Intraclass correlation and Cronbach’s alpha are widely used to describe reliability of tests and measurements. Even with Gaussian data, exact distributions are known only for compound symmetric covariance (equal variances and equal correlations). Recently, large sample Gaussian approximations were derived for the distribution functions.

New exact results allow calculating the exact distribution function and other properties of intraclass correlation and Cronbach’s alpha, for Gaussian data with any covariance pattern, not just compound symmetry. Probabilities are computed in terms of the distribution function of a weighted sum of independent chi-square random variables.

New F approximations for the distribution functions of intraclass correlation and Cronbach’s alpha are much simpler and faster to compute than the exact forms. Assuming the covariance matrix is known, the approximations typically provide sufficient accuracy, even with as few as ten observations.

Either the exact or approximate distributions may be used to create confidence intervals around an estimate of reliability. Monte Carlo simulations led to a number of conclusions. Correctly assuming that the covariance matrix is compound symmetric leads to accurate confidence intervals, as was expected from previously known results. However, assuming and estimating a general covariance matrix produces somewhat optimistically narrow confidence intervals with 10 observations. Increasing sample size to 100 gives essentially unbiased coverage. Incorrectly assuming compound symmetry leads to pessimistically large confidence intervals, with pessimism increasing with sample size. In contrast, incorrectly assuming general covariance introduces only a modest optimistic bias in small samples. Hence the new methods seem preferable for creating confidence intervals, except when compound symmetry definitely holds.

Keywords: interrater reliability, confidence interval, compound symmetry, quadratic forms

1. Introduction

1.1. Motivation

Estimates of interrater reliability are often of interest in behavioral and medical research. As an example, consider a study in which each of five physicians used X-ray images (from CT scans) to measure the volume of the hippocampus (a region of the brain). Each physician measured the volume for each of twenty subjects. The scientist sought to evaluate inter-physician reliability. This led to a wish to compute estimates of reliability and confidence intervals for the estimates. The intraclass correlation coefficient and Cronbach’s alpha, which are one-to-one functions of each other, provide appropriate indexes of reliability. The disparities in training and experience among physicians make it seem unlikely that equal variances and equal correlations (compound symmetry) occur in this setting.

The exact, small sample, distributions of the estimates of intraclass correlation and Cronbach’s alpha are known only for Gaussian data with compound symmetric covariance. However, as for the example just described, it may be invalid to assume equal variances and equal covariances across raters and time. Only a large sample approximation is available for covariance matrices that are not compound symmetric.

1.2. Literature Review

Cronbach (1951) defined coefficient alpha, ρα, a lower bound on the reliability of a test. Subsequently, Kristof (1963) and Feldt (1965) independently derived the exact distribution of a sample estimate, ρ̂α, assuming Gaussian data and compound symmetric covariance. Exact calculations require specifying the common variance and correlation. Recently, van Zyl, Neudecker, and Nel (2000) derived a large sample approximation of the distribution of ρ̂α for Gaussian data with a general covariance matrix. Approximate calculations require specifying the population covariance matrix. The results all extend to estimates of the intraclass correlation, due to the one-to-one relationship between the two measures of reliability.

1.3. Overview of New Results

Section 2 contains exact and approximate expressions for the distribution functions of estimates of both intraclass correlation and Cronbach’s alpha. A theorem in Section 2.3 provides the key result. It allows computing each distribution function in terms of the probability that a weighted sum of independent chi-square random variables is less than zero. An F approximation is also derived; it is much simpler to compute than the exact probabilities.

Section 3 contains three kinds of numerical evaluations of the new results. The first involves verifying the accuracy of the exact forms. The second centers on comparing the existing Gaussian approximation to the new exact result and the new F approximation. The third contains an evaluation of the coverage accuracy of confidence intervals based on an estimated covariance matrix.

2. Analytic Results

2.1. Notation

A scalar variable will be indicated by lower case, such as x; a vector (dimension n × 1, always a column) by bold lower case, such as x; an n × p matrix by bold upper case, X; and its transpose by X’. For example, 1n indicates an n × 1 vector of 1’s. If X = [x1 x2 … xp], then the stacking of the columns into an n · p × 1 vector will be indicated by vec(X), with the first column at the top, followed by the second, etc. The Kronecker product is defined as X ⊗ Y = {xij · Y}. For an n × 1 vector x, the n × n matrix with all zero elements off the diagonal and xi as the (i, i) element will be written Dg(x). As needed, a scalar, vector or matrix is described as constant, random, or a realization of a random scalar, vector or matrix.

Properties of Gaussian variables will be used throughout (Arnold, 1981, Chapter 3). All parameters are assumed finite. A scalar variable, y, will be indicated to follow a Gaussian distribution, with expected value (the mean) ε(y) = μ and variance ν(y) = ε(y²) − [ε(y)]² = σ², by writing y ~ N(μ, σ²). An n × 1 vector, y, will be indicated to follow a Gaussian distribution, with n × 1 mean vector ε(y) = μ and n × n covariance matrix ν(y) = ε(yy’) − ε(y)[ε(y)]’ = Σ, by writing y ~ Nn(μ, Σ).

Interest in this paper centers on an n × p matrix of data, Y, for which the rows form a set of independent and identically distributed Gaussian vectors. This may be indicated symbolically as [rowi(Y)]’ ~ Np(μ, Σ). Throughout this paper, Σ represents a p × p, symmetric, and positive definite population covariance matrix. The spectral decomposition allows writing Σ = VΣDg(λΣ)V’Σ, with positive eigenvalues {λΣ,j}. Corresponding eigenvectors are the columns of VΣ, which is p × p, of full rank, and orthonormal (V’ΣVΣ = Ip). Furthermore, if FΣ = VΣDg(λΣ)^{1/2}, then Σ = FΣF’Σ.

Johnson, Kotz, and Balakrishnan (1994, 1995) gave detailed treatments of the chi-square and F distributions. Writing X ~ χ²(ν) indicates that X follows a central chi-square distribution with ν degrees of freedom. If X1 ~ χ²(ν1) and X2 ~ χ²(ν2) are independent, then writing (X1/ν1)/(X2/ν2) ~ F(ν1, ν2) indicates that the ratio follows a central F distribution, with numerator degrees of freedom ν1 and denominator degrees of freedom ν2. The distribution function of a central F will be indicated as F_F(f; ν1, ν2), with corresponding qth quantile F_F^{−1}(q; ν1, ν2). The identity F_F^{−1}(q; ν1, ν2) = 1/F_F^{−1}(1 − q; ν2, ν1) is useful.
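As a quick numerical check of the quantile identity, the sketch below assumes SciPy is installed and uses `scipy.stats.f.ppf` for central F quantiles. The values q = 0.025, ν1 = 9 and ν2 = 27 are illustrative only.

```python
from scipy.stats import f as f_dist

# q-th quantile of F(nu1, nu2) versus the reciprocal of the
# (1 - q)-th quantile of F(nu2, nu1); the identity says they agree.
q, nu1, nu2 = 0.025, 9, 27
lhs = f_dist.ppf(q, nu1, nu2)
rhs = 1.0 / f_dist.ppf(1.0 - q, nu2, nu1)
print(f"{lhs:.6f} {rhs:.6f}")
```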

2.2. Known Results

A covariance matrix may be described as compound symmetric if all variances are equal and all correlations are equal. If so, and σ² is the common variance while ρI is the common correlation, Σ = σ²[ρI1p1’p + (1 − ρI)Ip]. The matrix has two distinct eigenvalues. The first is τ1 = σ²[1 + (p − 1)ρI], with corresponding eigenvector v0 = 1p/√p. The second is τ2 = σ²(1 − ρI), which has multiplicity p − 1 and corresponding eigenvectors given by any matrix V⊥, of dimension p × (p − 1), such that V’⊥V⊥ = Ip−1 and V’⊥1p = 0p−1. Without loss of generality, V⊥ may be taken to be the orthogonal polynomial trend coefficients (normalized to unit length). Requiring 0 < σ² < ∞ and −1/(p − 1) < ρI < 1 ensures finite and positive definite Σ. See Morrison (1990, p. 289) for additional detail.
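A minimal NumPy sketch of these eigenvalue facts follows. It assumes illustrative values p = 4, σ² = 2 and ρI = 0.3 (not taken from the study); the helper name `compound_symmetric` is hypothetical.

```python
import numpy as np

def compound_symmetric(p, sigma2, rho):
    """Sigma = sigma2 * [rho * 1p 1p' + (1 - rho) * Ip]."""
    return sigma2 * (rho * np.ones((p, p)) + (1.0 - rho) * np.eye(p))

p, sigma2, rho = 4, 2.0, 0.3
Sigma = compound_symmetric(p, sigma2, rho)
tau1 = sigma2 * (1 + (p - 1) * rho)   # eigenvalue paired with v0 = 1p / sqrt(p)
tau2 = sigma2 * (1 - rho)             # eigenvalue with multiplicity p - 1
eig = np.linalg.eigvalsh(Sigma)       # returned in ascending order
v0 = np.ones(p) / np.sqrt(p)
print(eig, tau1, tau2)
```

With ρI > 0 the largest eigenvalue is τ1; for negative ρI the ordering of τ1 and τ2 reverses.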

In practice, calculations are based on the sample covariance matrix, Σ̂, with νΣ̂ ~ Wp(ν, Σ) and ν = n − 1 > p assumed throughout. In turn, this leads to the estimated intraclass correlation coefficient,

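$$\hat\rho_I = \frac{\mathbf{1}_p'\hat\Sigma\mathbf{1}_p - \operatorname{tr}(\hat\Sigma)}{(p-1)\operatorname{tr}(\hat\Sigma)}. \tag{1}$$

Similarly, the estimate of Cronbach’s α is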

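$$\hat\rho_\alpha = \frac{p}{p-1}\left[1 - \frac{\operatorname{tr}(\hat\Sigma)}{\mathbf{1}_p'\hat\Sigma\mathbf{1}_p}\right]. \tag{2}$$

Estimated Cronbach’s α is a one-to-one function of estimated intraclass correlation: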

$$\hat\rho_\alpha = \frac{p\,\hat\rho_I}{1 + (p-1)\hat\rho_I}. \tag{3}$$

Replacing Σ̂ with Σ gives the population values. If Σ is compound symmetric, then ρI is the common correlation, with ρ̂I its maximum likelihood estimate (with Gaussian data).
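The estimates in equations 1–3 need only 1’pΣ̂1p (the sum of all elements of Σ̂) and tr(Σ̂). The sketch below assumes NumPy and takes Σ̂ from `np.cov(..., rowvar=False)`, whose divisor n − 1 = ν matches the definition above. The simulated data (a shared subject effect plus noise) are purely illustrative, and `reliability_estimates` is a hypothetical helper name.

```python
import numpy as np

def reliability_estimates(Y):
    """Return (rho_I_hat, rho_alpha_hat) from an n x p data matrix Y."""
    n, p = Y.shape
    S = np.cov(Y, rowvar=False)      # Sigma_hat with divisor nu = n - 1
    total = S.sum()                  # 1p' Sigma_hat 1p
    tr = np.trace(S)                 # tr(Sigma_hat)
    rho_I = (total - tr) / ((p - 1) * tr)          # equation 1
    rho_alpha = p / (p - 1) * (1 - tr / total)     # equation 2
    return rho_I, rho_alpha

rng = np.random.default_rng(2024)
Y = rng.standard_normal((20, 5)) + rng.standard_normal((20, 1))  # 20 subjects, 5 raters
rI, ra = reliability_estimates(Y)
p = Y.shape[1]
print(rI, ra, p * rI / (1 + (p - 1) * rI))   # last two values agree (equation 3)
```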

To avoid confusion, it may help to describe ρI as the intraclass correlation if Σ is compound symmetric, and as the generalized intraclass correlation if Σ is not compound symmetric. The two situations have different analytic properties, including different maximum likelihood estimates of Σ. Under compound symmetry, ρI equals the average population correlation, and ρ̂I is the corresponding maximum likelihood estimate. In contrast, without compound symmetry, typically ρI will not equal the average correlation, and ρ̂I will not be the maximum likelihood estimate of the average correlation. See Morrison (1990, p. 250) for some additional detail.

2.3. New Exact Distributions and Related Properties

Theorem 1. With multivariate Gaussian data, the cumulative distribution function of the estimate of intraclass correlation exactly equals the probability that a particular weighted sum of independent central chi-square random variables is less than zero. A parallel statement holds for Cronbach’s α.

In particular, consider observing the n × p random matrix Y with independent and identically distributed rows such that [rowi(Y)]’ ~ Np(μ, Σ), with Σ = FΣF’Σ, a p × p, symmetric, and positive definite matrix. Recall that the corresponding estimate is Σ̂, with νΣ̂ ~ Wp(ν, Σ) and ν = n − 1 > p degrees of freedom. Let rI indicate a particular quantile of ρ̂I, with −1/(p − 1) < rI < 1, and let rα indicate a particular quantile of ρ̂α, with −∞ < rα < 1. In addition, define the following one-to-one functions of the quantiles:

$$x_I = (p-1)r_I + 1, \tag{4}$$

$$x_\alpha = \left[1 - \frac{r_\alpha(p-1)}{p}\right]^{-1}. \tag{5}$$

For c ∈ {α, I}, also define

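$$A_{1c} = \mathbf{1}_p\mathbf{1}_p' - x_cI_p \quad\text{and}\quad A_{2c} = F_\Sigma'A_{1c}F_\Sigma. \tag{6}$$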

The assumptions just stated suffice to allow proving the following results.

The matrix A2c = F’Σ(1p1’p − xcIp)FΣ is p × p, symmetric, and full rank, with one strictly positive eigenvalue, λ2c,1, and p − 1 strictly negative eigenvalues, {λ2c,2, …, λ2c,p}. Most importantly,

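$$\Pr\{\hat\rho_c \le r_c\} = \Pr\{D(x_c) \le 0\}, \qquad D(x_c) = \lambda_{2c,1}X_{c1} + \sum_{j=2}^{p}\lambda_{2c,j}X_{cj}, \tag{7}$$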

with Xcj ~ χ²(ν), independently of Xcj’ if j ≠ j’.

Proof. See Propositions 1–4 and their proofs in the appendix.
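Two parts of Theorem 1 are easy to check numerically: the inertia of A2c (one positive and p − 1 negative eigenvalues) and the event equivalence behind equation 7, namely that ρ̂α ≤ rα exactly when tr(A1cνΣ̂) ≤ 0. The sketch assumes NumPy and uses an AR(1) correlation matrix with ρ = 0.5, p = 4, ν = 9 and rα = 0.70 as illustrative inputs. It uses a Cholesky factor rather than the spectral factor FΣ; any F with Σ = FF’ gives the same eigenvalues, because F’A1cF and A1cFF’ share their eigenvalues.

```python
import numpy as np

def x_alpha(r_alpha, p):
    """Equation 5: x_alpha = [1 - r_alpha (p - 1) / p]^(-1)."""
    return 1.0 / (1.0 - r_alpha * (p - 1) / p)

p, nu, r_alpha = 4, 9, 0.70
idx = np.arange(p)
Sigma = 0.5 ** np.abs(idx[:, None] - idx[None, :])   # AR(1) correlation, rho = 0.5
x = x_alpha(r_alpha, p)
A1 = np.ones((p, p)) - x * np.eye(p)                 # A1c of equation 6
F = np.linalg.cholesky(Sigma)                        # any F with Sigma = F F' works
lam = np.linalg.eigvalsh(F.T @ A1 @ F)               # eigenvalues of A2c
n_pos, n_neg = int(np.sum(lam > 0)), int(np.sum(lam < 0))

# Event equivalence: rho_alpha_hat <= r_alpha  iff  tr(A1c * nu * S) <= 0
rng = np.random.default_rng(1)
agree = True
for _ in range(2000):
    Y = rng.multivariate_normal(np.zeros(p), Sigma, size=nu + 1)
    S = np.cov(Y, rowvar=False)
    rho_alpha_hat = p / (p - 1) * (1 - np.trace(S) / S.sum())
    event_alpha = rho_alpha_hat <= r_alpha
    event_trace = np.trace(A1 @ (nu * S)) <= 0
    agree = agree and bool(event_alpha == event_trace)
print(n_pos, n_neg, agree)
```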

Corollary 1

The previously known exact results for compound symmetry are special cases of the theorem results.

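1. If Σ has compound symmetry then the proofs of Propositions 1–4 lead to simple forms of the required eigenvalues: λ2c,1 = (p − xc)τ1 and λ2c,j = −xcτ2 for j = 2, …, p. In turn, Xc+ = Xc2 + … + Xcp ~ χ²[ν(p − 1)] and

$$\Pr\{\hat\rho_c \le r_c\} = \Pr\left\{\frac{X_{c1}/\nu}{X_{c+}/[\nu(p-1)]} \le \frac{(p-1)x_c\tau_2}{(p-x_c)\tau_1}\right\} = F_F\left[\frac{(p-1)x_c\tau_2}{(p-x_c)\tau_1};\ \nu,\ \nu(p-1)\right]. \tag{8}$$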

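2. If Σ has compound symmetry and q ∈ (0, 1), then the qth quantile of ρ̂c satisfies

$$q = F_F\left[\frac{(p-1)x_c\tau_2}{(p-x_c)\tau_1};\ \nu,\ \nu(p-1)\right].$$

Solving for xc gives

$$x_c = \frac{p}{1 + (p-1)(\tau_2/\tau_1)/F_F^{-1}[q;\ \nu,\ \nu(p-1)]},$$

with corresponding qth quantiles

$$r_{\alpha,q} = 1 - \frac{\tau_2/\tau_1}{F_F^{-1}[q;\ \nu,\ \nu(p-1)]} \tag{9}$$

and

$$r_{I,q} = \frac{F_F^{-1}[q;\ \nu,\ \nu(p-1)] - \tau_2/\tau_1}{F_F^{-1}[q;\ \nu,\ \nu(p-1)] + (p-1)\tau_2/\tau_1}. \tag{10}$$

Under compound symmetry, τ2/τ1 = (1 − ρI)/[1 + (p − 1)ρI] = 1 − ρα.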

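3. If Σ has compound symmetry, an exact confidence interval can be computed as follows. Let τ̂2/τ̂1 = 1 − ρ̂α, the estimate of τ2/τ1 = 1 − ρα, and let fq = F_F^{−1}[q; ν, ν(p − 1)]. For ρα, a confidence interval of size 1 − αL − αU < 1, with 0 ≤ αL < 1, 0 ≤ αU < 1 and αL + αU < 1, is given by

$$\Pr\left\{1 - (1-\hat\rho_\alpha)f_{1-\alpha_L} \le \rho_\alpha \le 1 - (1-\hat\rho_\alpha)f_{\alpha_U}\right\} = 1 - \alpha_L - \alpha_U. \tag{11}$$

Similarly, if τ̂2/τ̂1 = (1 − ρ̂I)/[1 + (p − 1)ρ̂I], then for ρI it follows that

$$\Pr\left\{\frac{1 - (\hat\tau_2/\hat\tau_1)f_{1-\alpha_L}}{1 + (p-1)(\hat\tau_2/\hat\tau_1)f_{1-\alpha_L}} \le \rho_I \le \frac{1 - (\hat\tau_2/\hat\tau_1)f_{\alpha_U}}{1 + (p-1)(\hat\tau_2/\hat\tau_1)f_{\alpha_U}}\right\} = 1 - \alpha_L - \alpha_U. \tag{12}$$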

Part 1 is proven in the appendix. The result coincides with the previously known form, as do Parts 2 and 3, which follow easily from Part 1.
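The compound symmetry results in equations 9–12 can be evaluated directly. The sketch below assumes SciPy, with `scipy.stats.f.ppf` supplying F_F^{−1}; all function names are hypothetical, and p = 4, ν = 9, ρI = 0.5, q = 0.05 and ρ̂α = 0.80 are illustrative inputs. It checks that plugging the α quantile back into equation 8 recovers q, that equations 9 and 10 are linked by equation 3, and that the ρI interval of equation 12 equals the ρα interval of equation 11 mapped through the inverse of equation 3.

```python
from scipy.stats import f as f_dist

def cs_quantiles(q, p, nu, rho_I):
    """Equations 9 and 10: q-th quantiles of rho_alpha_hat and rho_I_hat."""
    t = (1 - rho_I) / (1 + (p - 1) * rho_I)       # tau2 / tau1 = 1 - rho_alpha
    fq = f_dist.ppf(q, nu, nu * (p - 1))
    return 1 - t / fq, (fq - t) / (fq + (p - 1) * t)

def cs_interval_alpha(rho_alpha_hat, p, nu, alpha_L=0.025, alpha_U=0.025):
    """Equation 11: exact interval for rho_alpha under compound symmetry."""
    t_hat = 1.0 - rho_alpha_hat
    f_lo = f_dist.ppf(1.0 - alpha_L, nu, nu * (p - 1))
    f_up = f_dist.ppf(alpha_U, nu, nu * (p - 1))
    return 1.0 - t_hat * f_lo, 1.0 - t_hat * f_up

def cs_interval_icc(rho_I_hat, p, nu, alpha_L=0.025, alpha_U=0.025):
    """Equation 12: exact interval for rho_I under compound symmetry."""
    t_hat = (1.0 - rho_I_hat) / (1.0 + (p - 1) * rho_I_hat)

    def limit(fq):
        return (1.0 - t_hat * fq) / (1.0 + (p - 1) * t_hat * fq)

    return (limit(f_dist.ppf(1.0 - alpha_L, nu, nu * (p - 1))),
            limit(f_dist.ppf(alpha_U, nu, nu * (p - 1))))

def alpha_to_icc(r, p):
    """Inverse of equation 3."""
    return r / (p - (p - 1) * r)

p, nu, rho_I = 4, 9, 0.5
ra, rI = cs_quantiles(0.05, p, nu, rho_I)

# Plugging the alpha quantile into equation 8 should return q = 0.05.
x = 1 / (1 - ra * (p - 1) / p)
t = (1 - rho_I) / (1 + (p - 1) * rho_I)
cdf_at_quantile = f_dist.cdf((p - 1) * x * t / (p - x), nu, nu * (p - 1))

rho_alpha_hat = 0.80
lo_a, hi_a = cs_interval_alpha(rho_alpha_hat, p, nu)
lo_i, hi_i = cs_interval_icc(alpha_to_icc(rho_alpha_hat, p), p, nu)
print(ra, rI, cdf_at_quantile, (lo_a, hi_a), (lo_i, hi_i))
```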

Corollary 2

The moment, characteristic, and cumulant generating functions, as well as all cumulants and the first two moments of D(xc), have simple closed forms. In particular, with i = √−1, the characteristic function is (Johnson & Kotz, 1970, equation 15, p. 152)

$$\phi_{D(x_c)}(t) = \prod_{j=1}^{p}\left(1 - 2it\lambda_{2c,j}\right)^{-\nu/2}. \tag{13}$$

The moment generating function is the same function with t replacing it and the cumulant generating function is the logarithm of the moment generating function. The mth cumulant is (Johnson & Kotz, 1970, equation 20, p. 153)

$$\kappa_m = 2^{m-1}(m-1)!\,\nu\sum_{j=1}^{p}\lambda_{2c,j}^{m}. \tag{14}$$

The first cumulant is the mean,

$$\kappa_1 = \nu\sum_{j=1}^{p}\lambda_{2c,j}, \tag{15}$$

and the second cumulant is the variance,

$$\kappa_2 = 2\nu\sum_{j=1}^{p}\lambda_{2c,j}^{2}. \tag{16}$$
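Equations 14–16 translate directly into code. The sketch below assumes NumPy and an illustrative AR(1) setting (p = 4, ν = 9, ρ = 0.5, rα = 0.70); `cumulant` is a hypothetical helper. It also checks the mean against ν·tr(A1cΣ) = ν(1’pΣ1p − xc tr Σ), which follows from tr(A2c) = tr(F’ΣA1cFΣ) = tr(A1cΣ), and compares the variance with a seeded Monte Carlo draw of D(xc).

```python
import math

import numpy as np

def cumulant(m, lam, nu):
    """Equation 14: m-th cumulant of D = sum_j lam_j X_j, X_j ~ chi2(nu) independent."""
    lam = np.asarray(lam, dtype=float)
    return 2 ** (m - 1) * math.factorial(m - 1) * nu * np.sum(lam ** m)

p, nu, r_alpha = 4, 9, 0.70
idx = np.arange(p)
Sigma = 0.5 ** np.abs(idx[:, None] - idx[None, :])      # AR(1), rho = 0.5
x = 1.0 / (1.0 - r_alpha * (p - 1) / p)
F = np.linalg.cholesky(Sigma)
lam = np.linalg.eigvalsh(F.T @ (np.ones((p, p)) - x * np.eye(p)) @ F)

mean, var = cumulant(1, lam, nu), cumulant(2, lam, nu)   # equations 15 and 16
mean_direct = nu * (Sigma.sum() - x * np.trace(Sigma))   # nu * tr(A1c Sigma)

rng = np.random.default_rng(7)
draws = rng.chisquare(nu, size=(200_000, p)) @ lam       # Monte Carlo draws of D
print(mean, mean_direct, draws.mean(), var, draws.var())
```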

Corollary 3

Davies’ algorithm (1980) allows computing the exact distribution function of estimates of intraclass correlation and Cronbach’s alpha.

The algorithm computes the distribution function of a weighted sum of independent chi-squares with any combination of positive and negative weights, by numerical inversion of the characteristic function.
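Davies’ (1980) algorithm itself is not reproduced here. As a stand-in, the sketch below uses a different numerical inversion of the characteristic function, Imhof’s (1961) formula for central quadratic forms, integrated with `scipy.integrate.quad`. It only illustrates how Pr{D(xc) ≤ 0} can be computed from the eigenvalues of A2c, and it is checked against the compound symmetry result of equation 8. SciPy is assumed, and `prob_nonpositive` is a hypothetical name.

```python
import numpy as np
from scipy.integrate import quad
from scipy.stats import f as f_dist

def prob_nonpositive(lam, nu):
    """Pr{sum_j lam_j X_j <= 0}, X_j ~ chi2(nu) independent, via Imhof (1961)."""
    lam = np.asarray(lam, dtype=float)

    def integrand(u):
        if u == 0.0:
            return 0.5 * nu * lam.sum()   # limit of sin(theta(u)) / u as u -> 0
        theta = 0.5 * nu * np.sum(np.arctan(lam * u))
        # log of prod_j (1 + lam_j^2 u^2)^(nu / 4), computed without overflow
        log_rho = 0.25 * nu * np.sum(np.logaddexp(0.0, 2.0 * np.log(np.abs(lam) * u)))
        return np.sin(theta) * np.exp(-log_rho - np.log(u))

    integral, _ = quad(integrand, 0.0, np.inf, limit=200)
    return 0.5 - integral / np.pi        # Pr{Q > 0} = 1/2 + integral / pi

# Check against the exact compound symmetry result (equation 8).
p, nu, rho, r_alpha = 4, 9, 0.5, 0.70
tau1, tau2 = 1 + (p - 1) * rho, 1 - rho
x = 1.0 / (1.0 - r_alpha * (p - 1) / p)
lam_cs = [(p - x) * tau1] + [-x * tau2] * (p - 1)
p_imhof = prob_nonpositive(lam_cs, nu)
p_exact = f_dist.cdf((p - 1) * x * tau2 / ((p - x) * tau1), nu, nu * (p - 1))
print(p_imhof, p_exact)
```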

2.4. An F Approximation for the Distribution Function

Davies’ algorithm can be computationally intensive. Therefore an approximation was derived for the distribution function of D(xc), based on Satterthwaite’s method (Mathai & Provost, 1992). The approximation matches the first two moments of

$$Q = -\sum_{j=2}^{p}\lambda_{2c,j}X_{cj} = \sum_{j=2}^{p}|\lambda_{2c,j}|X_{cj} \tag{17}$$

to Q* = λ*X*, with X* ~ χ²(ν*) independent of Xc1. The constants that define the approximation are

$$\lambda_* = \frac{\sum_{j=2}^{p}\lambda_{2c,j}^{2}}{\sum_{j=2}^{p}|\lambda_{2c,j}|} \tag{18}$$

and

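$$\nu_* = \frac{\nu\left(\sum_{j=2}^{p}|\lambda_{2c,j}|\right)^{2}}{\sum_{j=2}^{p}\lambda_{2c,j}^{2}}. \tag{19}$$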

Assuming Q ≈ Q* allows writing

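$$\Pr\{\hat\rho_c \le r_c\} = \Pr\{\lambda_{2c,1}X_{c1} \le Q\} \approx \Pr\{\lambda_{2c,1}X_{c1} \le \lambda_*X_*\} = F_F\left(\frac{\sum_{j=2}^{p}|\lambda_{2c,j}|}{\lambda_{2c,1}};\ \nu,\ \nu_*\right). \tag{20}$$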

Note that both the exact distribution and the approximate F distribution depend only on the eigenvalues of A2c, which are functions of Σ and rc. The approximation reduces to the exact result in the special case of compound symmetry.
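A minimal sketch of equations 17–20 follows, assuming SciPy; `f_approx_cdf` is a hypothetical name and the eigenvalues of A2c are passed in directly. The example uses compound symmetry with p = 4, ν = 9, ρ = 0.5, unit variances and rα = 0.70, which (assuming the critical value of Section 3.1) is the setting of the first row of Table 1. There ν* equals ν(p − 1), so the approximation should match the exact F probability.

```python
import numpy as np
from scipy.stats import f as f_dist

def f_approx_cdf(lam, nu):
    """Equations 17-20: Satterthwaite F approximation to Pr{D(x_c) <= 0}.

    lam holds the eigenvalues of A2c (exactly one positive, p - 1 negative).
    """
    lam = np.sort(np.asarray(lam, dtype=float))[::-1]        # positive eigenvalue first
    lam1, abs_neg = lam[0], -lam[1:]                          # lambda_{2c,1}, |lambda_{2c,j}|
    nu_star = nu * abs_neg.sum() ** 2 / np.sum(abs_neg ** 2)  # equation 19
    return f_dist.cdf(abs_neg.sum() / lam1, nu, nu_star)      # equation 20

p, nu, rho, r_alpha = 4, 9, 0.5, 0.70
tau1, tau2 = 1 + (p - 1) * rho, 1 - rho
x = 1.0 / (1.0 - r_alpha * (p - 1) / p)
lam_cs = [(p - x) * tau1] + [-x * tau2] * (p - 1)
approx = f_approx_cdf(lam_cs, nu)
exact = f_dist.cdf((p - 1) * x * tau2 / ((p - x) * tau1), nu, nu * (p - 1))
print(round(approx, 4), round(exact, 4))
```

Only ν* from equation 19 is needed for the final probability, since λ*ν* = ν(|λ2c,2| + … + |λ2c,p|) cancels out of the F argument.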

2.5. Proposals for Approximating Confidence Limits with General Covariance

Two different methods for approximating confidence limits with general covariance have some appeal. For a confidence level of 1 − αL − αU, the simplest method is merely to replace Σ by Σ̂ and find the values of rcL and rcU at which the approximate distribution function of equation 20 equals αL and 1 − αU, respectively. This first approach is based on approximate quantiles. Alternatively, the relationship between the forms of the exact quantiles and confidence bounds under compound symmetry suggests a simple modification in the context of the F approximation. The confidence limit differs from the quantile by replacing an F quantile with the reciprocal of the opposite tail quantile. In the context of numerically inverting the approximate distribution function, with f = (|λ2c,2| + … + |λ2c,p|)/λ2c,1 computed from Σ̂, this corresponds to replacing F_F(f; ν, ν*) with F_F(f; ν*, ν) = 1 − F_F(1/f; ν, ν*).

The second approach may be described as being based on approximate confidence limits.

3. Numerical Evaluations

3.1. Check Exact Results with Simulations

Given a critical value, rc, and Σ, calculation of the exact distribution function required the following steps.

1. Use Cholesky decomposition to compute FΣ such that Σ = FΣF’Σ.
2. Compute xc as either xI = (p − 1)rI + 1 or xα = 1/[1 − rα(p − 1)/p].
3. Compute A1c = 1p1’p − xcIp.
4. Compute the eigenvalues of A2c = F’ΣA1cFΣ.
5. Apply Davies’ algorithm to compute Pr{D(xc) ≤ 0}, based on equation 7.
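Steps 1–4 can be sketched in NumPy as follows; step 5 (Davies’ algorithm) is not reproduced. The function name and the option to switch between a Cholesky and a spectral factor are illustrative assumptions, as is the AR(1) matrix with variance pattern [4 3 2 1]’. The check shows that the choice of factor does not change the eigenvalues, and that the ICC and alpha versions give identical eigenvalues when rI and rα correspond through equation 3 (because then xI = xα).

```python
import numpy as np

def a2c_eigenvalues(Sigma, r, kind="alpha", factor="cholesky"):
    """Steps 1-4 of Section 3.1: eigenvalues of A2c = F' A1c F."""
    p = Sigma.shape[0]
    if factor == "cholesky":                       # step 1: Sigma = F F'
        F = np.linalg.cholesky(Sigma)
    else:                                          # spectral factor V Dg(lambda)^(1/2)
        w, V = np.linalg.eigh(Sigma)
        F = V * np.sqrt(w)
    if kind == "alpha":                            # step 2
        x = 1.0 / (1.0 - r * (p - 1) / p)
    else:
        x = (p - 1) * r + 1.0
    A1 = np.ones((p, p)) - x * np.eye(p)           # step 3
    return np.linalg.eigvalsh(F.T @ A1 @ F)        # step 4 (ascending order)

p = 4
idx = np.arange(p)
corr = 0.5 ** np.abs(idx[:, None] - idx[None, :])  # AR(1), rho = 0.5
sd = np.sqrt(np.array([4.0, 3.0, 2.0, 1.0]))       # variance pattern [4 3 2 1]'
Sigma = corr * np.outer(sd, sd)

lam_chol = a2c_eigenvalues(Sigma, 0.70)
lam_spec = a2c_eigenvalues(Sigma, 0.70, factor="spectral")
r_I = 0.70 / (p - (p - 1) * 0.70)                  # same quantile on the ICC scale
lam_icc = a2c_eigenvalues(Sigma, r_I, kind="icc")
print(lam_chol)
```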

Computer simulations allowed checking the accuracy of the calculations of exact probabilities. All such simulations used ν = 9 and p = 4. Using SAS/IML® (SAS Institute, 1999), 100,000 random samples were generated from a multivariate normal distribution. Twelve distinct choices for Σ, assumed known, were created by combining one of four correlation matrices with one of three variance patterns. One correlation matrix had all correlations equal to ρ = 0.5 (all off-diagonal elements were 0.5). The other three correlation matrices were autoregressive of order 1, indicated AR(1), which implies that the (j, j’) element was ρ^|j−j’|, with ρ ∈ {0.2, 0.5, 0.8}. The variance pattern (the vector of diagonal elements of Σ) was [1 1 1 1]’, [1 2 3 4]’, or [4 3 2 1]’. The observed probability that the estimate of Cronbach’s alpha was less than the critical value of rα = 0.70 was tabulated from the 100,000 replications. Each observed probability was compared to the corresponding exact probability computed with Davies’ algorithm. In all cases the exact probability was contained within the 95% confidence interval around the observed probability. The confidence intervals were based on a Gaussian approximation.
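A scaled-down version of this simulation check can be written in NumPy and SciPy. The sketch below is an illustration under stated assumptions, not the SAS/IML program used for the study: it uses 20,000 replications and a single compound symmetric Σ (ρ = 0.5, unit variances), and it compares the observed proportion of ρ̂α ≤ 0.70 with the exact probability from equation 8, using a Gaussian approximation for the binomial confidence interval.

```python
import numpy as np
from scipy.stats import f as f_dist

p, nu, rho, r_alpha, reps = 4, 9, 0.5, 0.70, 20_000
Sigma = rho * np.ones((p, p)) + (1 - rho) * np.eye(p)   # compound symmetry
rng = np.random.default_rng(12345)

Y = rng.multivariate_normal(np.zeros(p), Sigma, size=(reps, nu + 1))  # reps x n x p
Yc = Y - Y.mean(axis=1, keepdims=True)                  # center each sample
S = np.einsum("rni,rnj->rij", Yc, Yc) / nu               # sample covariances, divisor nu
tot = S.sum(axis=(1, 2))                                 # 1p' S 1p per sample
tr = np.trace(S, axis1=1, axis2=2)
alpha_hat = p / (p - 1) * (1 - tr / tot)
observed = np.mean(alpha_hat <= r_alpha)
se = np.sqrt(observed * (1 - observed) / reps)
ci = (observed - 1.96 * se, observed + 1.96 * se)        # Gaussian approximation

# Exact probability under compound symmetry (equation 8)
x = 1 / (1 - r_alpha * (p - 1) / p)
t = (1 - rho) / (1 + (p - 1) * rho)
exact = f_dist.cdf((p - 1) * x * t / (p - x), nu, nu * (p - 1))
print(observed, ci, exact)
```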

3.2. Evaluation of the F Approximation

Calculation of the F approximation for the distribution function began with computing the eigenvalues of A2c, as described in steps 1–4 of Section 3.1. Then equations 19 and 20 were used to complete the calculation.

The F approximation was computed for all cases considered in Section 3.1, again assuming Σ known (see Table 1). As noted in Section 2.4, the approximation gives the exact probability with compound symmetry. More notably, at least for the cases considered in Table 1, the approximation achieves three to four digits of accuracy.

TABLE 1.

Pr{ρ̂α ≤ 0.70} with Σ Known and ν = 9

| Correlation | ρ | Variances | Exact | F Approx. |
|---|---|---|---|---|
| equal | 0.5 | [1 1 1 1]’ | 0.2689 | 0.2689 |
| AR(1) | 0.5 | [1 1 1 1]’ | 0.5628 | 0.5631 |
| AR(1) | 0.2 | [1 1 1 1]’ | 0.9442 | 0.9440 |
| AR(1) | 0.8 | [1 1 1 1]’ | 0.0430 | 0.0429 |
| equal | 0.5 | [1 2 3 4]’ | 0.4697 | 0.4705 |
| AR(1) | 0.5 | [4 3 2 1]’ | 0.7139 | 0.7135 |

Table 2 illustrates the behavior of the approximation for a range of quantiles with ν = 9, p = 3, and AR(1) correlation with ρ = 0.5. For the case considered, the approximation is accurate even at the extremes of the distribution.

TABLE 2.

Pr{ρ̂α ≤ rα} with Σ Known, ν = 9, and AR(1) Correlation with ρ = 0.5

| rα | Exact | F Approx. |
|---|---|---|
| 0.10 | 0.0614 | 0.0614 |
| 0.20 | 0.0899 | 0.0900 |
| 0.30 | 0.1349 | 0.1353 |
| 0.40 | 0.2072 | 0.2079 |
| 0.50 | 0.3231 | 0.3242 |
| 0.60 | 0.5010 | 0.5020 |
| 0.70 | 0.7367 | 0.7361 |
| 0.80 | 0.9418 | 0.9391 |
| 0.90 | 0.9992 | 0.9989 |

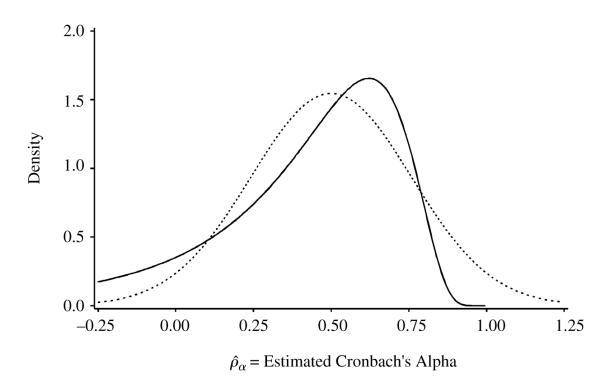

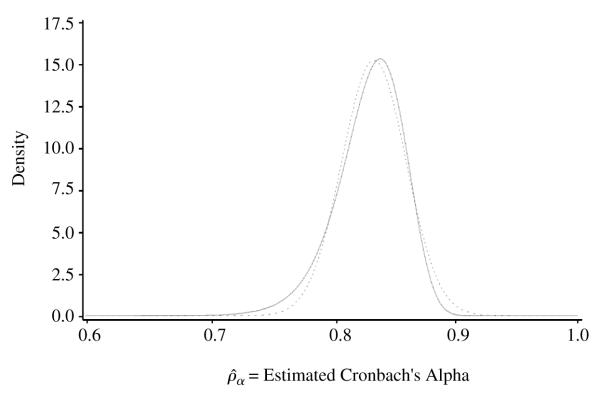

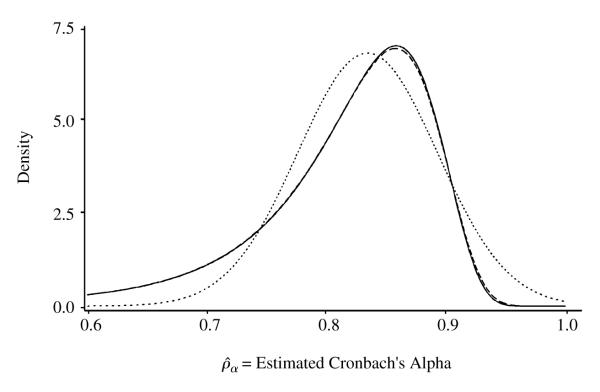

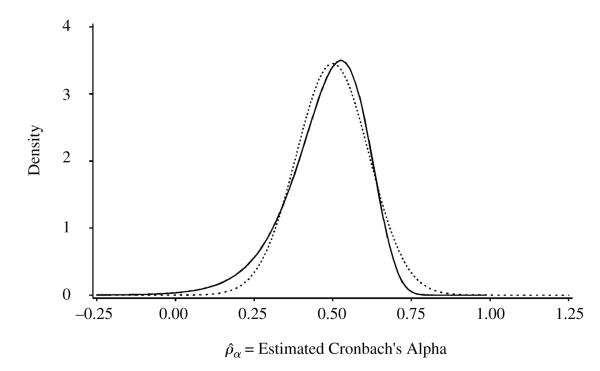

Figures 1–4 display the exact density function of Cronbach’s alpha, the F approximate density, and the Gaussian approximate density due to van Zyl et al. (2000). The exact and F approximate densities were computed as numerical derivatives of the corresponding distribution functions. In Figures 1 (ν = 9) and 2 (ν = 49), which differ only in sample size, the exact density and the F approximate density coincide exactly because the F approximation is exact under compound symmetry. Figures 3 (ν = 9) and 4 (ν = 49), which also differ only in sample size, are based on an AR(1) correlation pattern with heterogeneity of variance. In Figure 3, the exact and F approximate densities differ only slightly (by less than 10% in ordinate values), and only over a subset of the domain. In Figure 4, the exact and F approximate densities are indistinguishable at the resolution of the plot.

Figure 1.

Density of ρ̂α for ν = 9. Solid line for exact and F approximate, dotted line for Gaussian approximate, for p = 4 and compound symmetry with ρ = 0.20, σ² = 1.

Figure 3.

Density of ρ̂α for ν = 9. Solid line for exact, dashed line for F approximate, and dotted line for Gaussian approximate, for p = 4, AR(1) with ρ = 0.80 and heterogeneous variances.

Figure 4.

Density of ρ̂α for ν = 49. Solid line for exact and F approximate, dotted line for Gaussian approximate, for p = 4, AR(1) with ρ = 0.80 and heterogeneous variances.

Two overall conclusions seem apparent. First, the Gaussian approximation may deviate substantially from the exact result, including nonzero probabilities for values of Cronbach’s α greater than 1.0 (which are impossible). Second, the F approximation is nearly always indistinguishable from the exact result, except for some small differences in the right half of Figure 3. These results agree with those in Tables 1 and 2.

3.3. Confidence Intervals from Estimated Σ

All results to this point have assumed that the true covariance matrix is known. In practice, the covariance matrix can only be estimated, which leads to the desire to compute confidence intervals based on an estimated Σ. Four distinct methods for computing confidence intervals are defined by making two choices. The first choice is to assume either compound symmetry or general covariance. The second choice is to compute estimated quantiles or estimated confidence limits.

Assuming general covariance led to difficulties with finding lower quantiles of the unbounded random variable ρ̂α. Hence all calculations were conducted in terms of the intraclass correlation coefficient, which has a finite range, (−1/(p − 1), 1), while the range of ρ̂α is unbounded, (−∞, 1). The change ensured stable convergence.

When assuming compound symmetry, equation 1 was used to compute ρ̂I. In turn, τ̂2/τ̂1 = (1 − ρ̂I)/[1 + (p − 1)ρ̂I] was computed. The quantile approach values were computed by replacing τ2/τ1 with τ̂2/τ̂1 in equation 10. The confidence limits approach values were computed from equation 12, using τ̂2/τ̂1.

Assuming general covariance requires an iterative process to compute quantiles or approximate confidence limits. The corresponding method assuming compound symmetry provided the starting value. The associated approximate distribution function value was computed next, by computing the eigenvalues of A2c as described in steps 1–4 of Section 3.1, but with Σ̂ replacing Σ, and then using equations 19 and 20. A simple bisection algorithm was applied to numerically invert the process and find a particular quantile. As described in Section 2.5, the confidence interval approach differs only by replacing the calculation of F_F(f; ν, ν*) with the calculation of F_F(f; ν*, ν) = 1 − F_F(1/f; ν, ν*), where f = (|λ2c,2| + … + |λ2c,p|)/λ2c,1.
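The bisection step can be sketched as follows, assuming NumPy and SciPy; the function names are hypothetical. The search runs on the bounded ICC scale (−1/(p − 1), 1), and the `limits` flag swaps the degrees of freedom, using F_F(f; ν*, ν) in place of F_F(f; ν, ν*), following the rule in Section 2.5 that a confidence limit replaces an F quantile with the reciprocal of the opposite tail quantile. With a compound symmetric plug-in matrix, the quantile approach should reproduce equation 10 and the confidence-limit approach should reproduce equation 12, which gives a convenient correctness check.

```python
import numpy as np
from scipy.stats import f as f_dist

def approx_cdf_icc(r_I, Sigma, nu, limits=False):
    """F approximation (equations 19-20) to Pr{rho_I_hat <= r_I}.

    With limits=True the degrees of freedom are swapped (Section 2.5).
    """
    p = Sigma.shape[0]
    x = (p - 1) * r_I + 1.0
    F = np.linalg.cholesky(Sigma)
    A2 = F.T @ (np.ones((p, p)) - x * np.eye(p)) @ F
    lam = np.sort(np.linalg.eigvalsh(A2))[::-1]          # positive eigenvalue first
    abs_neg = -lam[1:]
    nu_star = nu * abs_neg.sum() ** 2 / np.sum(abs_neg ** 2)
    ratio = abs_neg.sum() / lam[0]
    return f_dist.cdf(ratio, nu_star, nu) if limits else f_dist.cdf(ratio, nu, nu_star)

def invert(target, Sigma, nu, limits=False, tol=1e-10):
    """Simple bisection for r_I with approximate CDF equal to target."""
    p = Sigma.shape[0]
    lo, hi = -1.0 / (p - 1), 1.0      # endpoints are never evaluated
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if approx_cdf_icc(mid, Sigma, nu, limits) < target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

p, nu, rho, alpha_L = 4, 9, 0.5, 0.025
Sigma_hat = rho * np.ones((p, p)) + (1 - rho) * np.eye(p)   # plug-in estimate (CS here)
r_quantile = invert(alpha_L, Sigma_hat, nu)                 # quantile approach
r_limit = invert(alpha_L, Sigma_hat, nu, limits=True)       # confidence-limit approach

# Closed forms under compound symmetry (equations 10 and 12)
t = (1 - rho) / (1 + (p - 1) * rho)
fq = f_dist.ppf(alpha_L, nu, nu * (p - 1))
f_hi = f_dist.ppf(1 - alpha_L, nu, nu * (p - 1))
print(r_quantile, (fq - t) / (fq + (p - 1) * t))
print(r_limit, (1 - t * f_hi) / (1 + (p - 1) * t * f_hi))
```

For an interval from data, Σ̂ would replace the compound symmetric matrix, with targets αL and 1 − αU giving the lower and upper limits.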

Simulations were used to estimate the coverage probability, which is the proportion of times a confidence interval contains the true value of the parameter, ρα, when estimating Σ. In all cases p = 4, with 500,000 samples from a multivariate normal distribution. One of four covariance matrices was used. Two were compound symmetric (see Table 3), with constant variance and ρ ∈ {0.2, 0.8}. Two (see Table 4) used an AR(1) correlation matrix with ρ ∈ {0.2, 0.8}.

Table 3.

Estimated Coverage Probability of Confidence Interval for ρα with Compound Symmetry and All

Each cell gives a 95% CI for the coverage of ρα.

| ρ | ν + 1 | Assume Compound Symmetry: Quantiles | Assume Compound Symmetry: Conf. Limits | Assume General Σ: Quantiles | Assume General Σ: Conf. Limits |
|---|---|---|---|---|---|
| 0.2 | 10 | (0.929, 0.930) | (0.949, 0.950) | (0.919, 0.921) | (0.936, 0.937) |
| 0.2 | 50 | (0.946, 0.947) | (0.949, 0.950) | (0.944, 0.945) | (0.948, 0.949) |
| 0.2 | 100 | (0.948, 0.949) | (0.949, 0.950) | (0.946, 0.948) | (0.949, 0.950) |
| 0.2 | 200 | (0.948, 0.949) | (0.949, 0.951) | (0.948, 0.950) | (0.949, 0.950) |
| 0.8 | 10 | (0.929, 0.930) | (0.950, 0.951) | (0.919, 0.920) | (0.936, 0.937) |
| 0.8 | 50 | (0.946, 0.947) | (0.949, 0.951) | (0.943, 0.945) | (0.948, 0.949) |
| 0.8 | 100 | (0.948, 0.949) | (0.949, 0.950) | (0.947, 0.949) | (0.948, 0.950) |
| 0.8 | 200 | (0.948, 0.949) | (0.949, 0.950) | (0.948, 0.949) | (0.949, 0.950) |

Table 4.

Estimated Coverage Probability of Confidence Interval for ρα with AR(1) Correlation and

Each cell gives a 95% CI for the coverage of ρα.

| ρ | ν + 1 | Assume Compound Symmetry: Quantiles | Assume Compound Symmetry: Conf. Limits | Assume General Σ: Quantiles | Assume General Σ: Conf. Limits |
|---|---|---|---|---|---|
| 0.2 | 10 | (0.952, 0.953) | (0.973, 0.974) | (0.929, 0.930) | (0.934, 0.935) |
| 0.2 | 50 | (0.970, 0.971) | (0.974, 0.975) | (0.946, 0.947) | (0.946, 0.948) |
| 0.2 | 100 | (0.972, 0.973) | (0.974, 0.975) | (0.948, 0.950) | (0.948, 0.949) |
| 0.2 | 200 | (0.973, 0.974) | (0.974, 0.975) | (0.949, 0.950) | (0.948, 0.949) |
| 0.8 | 10 | (0.978, 0.979) | (0.992, 0.993) | (0.932, 0.934) | (0.932, 0.934) |
| 0.8 | 50 | (0.993, 0.993) | (0.995, 0.996) | (0.946, 0.948) | (0.946, 0.947) |
| 0.8 | 100 | (0.994, 0.995) | (0.995, 0.996) | (0.948, 0.949) | (0.948, 0.949) |
| 0.8 | 200 | (0.995, 0.995) | (0.995, 0.996) | (0.949, 0.950) | (0.949, 0.950) |

The coverage probability was estimated by tabulating the fraction of times the estimated confidence interval contained the true value of ρα. Results in Tables 3 and 4 followed consistent patterns. Overall, estimating the covariance matrix introduced bias in confidence interval coverage, with the nature of the bias varying with the underlying assumption.

Naturally, if the assumption of compound symmetry holds in the population, confidence intervals based on the exact method worked perfectly. In contrast, the quantile-based approximation gave accurate confidence intervals in large samples and optimistically narrow confidence intervals in small samples. Of course, the quantile approach would never be used in practice if compound symmetry were known to hold. However, the results give some guidance as to the sources of inaccuracy in the general covariance setting.

When general covariance is assumed regardless of the underlying covariance matrix, both methods generated optimistically narrow intervals in small samples, with the approximate confidence limit approach generating slightly less bias. In larger samples, assuming general covariance works very well with either method.

A very different picture arose from assuming compound symmetry when the assumption was false. Violation of the assumption led to consistently wide confidence intervals, independent of sample size for the approximate confidence limit approach. The quantile approach also always gave pessimistically wide intervals, although with somewhat less bias in small samples.

4. Conclusions

The new results provide a wide range of properties of estimates of intraclass correlation and Cronbach’s alpha with Gaussian data and general covariance. In addition, Davies’ algorithm provides precise numerical calculation of probabilities and densities.

The new F approximation, based on assuming general covariance, provides the best combination of accuracy and convenience, for both known and estimated Σ. In comparison to the Gaussian approximation, the new F approximation provides substantially greater accuracy, especially in small samples.

The one-to-one relationship between estimates of intraclass correlation and Cronbach’s alpha has two practical implications. First, all of the conclusions from the simulations about estimates of Cronbach’s alpha also apply to estimates of intraclass correlation. Second, the bounded nature of the intraclass correlation leads to preferring calculations based on the intraclass correlation, even when interest centers on Cronbach’s alpha.

Not surprisingly, assuming general covariance provides much greater accuracy than that obtained when wrongly assuming that compound symmetry holds. Equally important, assuming general covariance in the presence of compound symmetry has only a small effect on confidence interval accuracy, and then only in small samples. Hence any substantial doubt about the validity of the compound symmetry assumption should lead to assuming general covariance and using the new results.

A number of avenues have appeal for future research. An improved approximation for confidence intervals based on estimated Σ in very small samples merits attention. Methods for comparing two or more estimates of reliability, and associated sample size formulas, would be valuable. It would be useful to know the impact of allowing missing data. Finally, the robustness of the probability calculations to the violation of the assumption of Gaussian variables holds great interest.

Free software which implements the new methods may be found at http://www.bios.unc.edu/~muller. Distribution function and confidence interval algorithms are implemented as collections of SAS/IML modules (SAS Institute, 1999).

Figure 2.

Density of ρ̂α for ν = 49. Solid line for exact and F approximate, dotted line for Gaussian approximate, for p = 4 and compound symmetry with ρ = 0.20, σ² = 1.

Acknowledgments

Kistner’s work was supported in part by NIEHS training grant ES07018-24 and NCI program project grant P01 CA47 982-04. She gratefully acknowledges the inspiration of A. Calandra’s “Scoring formulas and probability considerations” (Psychometrika, 6, 1–9). Muller’s work was supported in part by NCI program project grant P01 CA47 982-04.

Appendix. Definitions and Properties of Matrix Normal and Wishart Matrices

Arnold (1981, pp. 310–311) provided a general notation for a Gaussian data matrix. It is used because it helps simplify the proof of Theorem 1. In particular, an n × p matrix, Y, will be indicated to follow a matrix normal distribution, with n × p mean matrix, M, n × n row structure, Ξ, p × p column structure, Σ, by writing Y ~ Nn,p(M, Ξ, Σ). Equivalently, vec(Y) ~ Nn·p[vec(M), Σ ⊗ Ξ], or vec(Y’) ~ Nn·p[vec(M’), Ξ ⊗ Σ]. Both Ξ and Σ are required to be symmetric and positive semidefinite.

A reproductive property of a matrix normal will be used repeatedly. For constants A (n* × n), B (p × p*), and C (n* × p*), it follows that AYB + C ~ Nn*,p*(AMB + C, AΞA’, B’ΣB). Either the original or transformed distribution may be singular, depending on the combination of ranks of A, B, Ξ and Σ.

Following Arnold (1981, pp. 314–323), if Y ~ Nn,p(M, I, Σ), then Y’Y is described as following a Wishart distribution, written Y’Y ~ Wp(n, Σ, M’M). Many variations occur, depending on the relative sizes of p and n, as well as the ranks of Σ and M. If M = 0 then write Wp(n, Σ) to indicate a central Wishart.

Proof of Theorem 1

The following assumptions suffice to prove Theorem 1. The n × p random matrix Y has independent and identically distributed rows such that [rowi(Y)]’ ~ Np(μ, Σ), with Σ = FΣF’Σ, a p × p, symmetric, and positive definite matrix. The corresponding estimate is Σ̂, with νΣ̂ ~ Wp(ν, Σ). The functions xI = (p − 1)rI + 1 and xα = 1/[1 − rα(p − 1)/p] are defined for −1/(p − 1) < rI < 1 and −∞ < rα < 1, while A1c = 1p1’p − xcIp and A2c = F’ΣA1cFΣ for c ∈ {α, I} (see equations 4–6).

The assumptions have the following immediate implications. With ν = n − 1 > p, the estimated covariance matrix, Σ̂, is such that νΣ̂ ~ Wp(ν, Σ). A lemma in Glueck and Muller (1998) ensures that for any such Σ̂ there exists a ν × p matrix Y0 ~ Nν,p(0, Iν, Σ) with νΣ̂ = Y’0Y0. The spectral decomposition allows writing Σ = VΣDg(λΣ)V’Σ, with VΣV’Σ = V’ΣVΣ = Ip. Also FΣ = VΣDg(λΣ)^{1/2} and Σ = FΣF’Σ. The matrices VΣ, Dg(λΣ) and FΣ are p × p and full rank.

Proposition 1

Let V1 = [v0 V⊥], with v0 = 1p/√p. Also let V⊥ be a p × (p − 1) matrix such that V’⊥V⊥ = Ip−1 and V’⊥1p = 0p−1. For c ∈ {α, I}, A1c = (1p1’p − xcIp) is p × p, symmetric and full rank, with one positive eigenvalue (p − xc) and p − 1 negative eigenvalues of −xc. Corresponding orthonormal eigenvectors are v0 and V⊥. Also A1c = V1Dg[(p − xc), −xc1p−1]V’1, with V’1V1 = Ip.

Proof of Proposition 1

A1c is a difference of p × p matrices and A’1c = (1p1’p − xcIp)’ = A1c. The fact that V’1V1 = Ip follows from the definitions of v0 and V⊥. It is easy to directly verify that A1cv0 = (p − xc)v0 and that A1cV⊥ = −xcV⊥, which proves the eigenvalue and eigenvector properties. The restriction −1/(p − 1) < rI < 1 ensures (p − xI) > 0 and xI > 0. Similarly, the restriction −∞ < rα < 1 ensures (p − xα) > 0 and xα > 0.

Proposition 2

For c ∈ {α, I}, A2c = F’ΣA1cFΣ is p × p, symmetric full rank, with one strictly positive eigenvalue, λ2c,1, and p − 1 strictly negative eigenvalues, {λ2c,2, … , λ2c,p}.

Proof of Proposition 2

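As a product of full rank p × p matrices, A2c is p × p and full rank. Also A’2c = (F’ΣA1cFΣ)’ = A2c. Hence the spectral decomposition may be written A2c = V2cDg(λ2c)V’2c. If B = V’1FΣ, which is p × p and full rank, then

$$A_{2c} = F_\Sigma'A_{1c}F_\Sigma = F_\Sigma'V_1\operatorname{Dg}[(p-x_c), -x_c\mathbf{1}_{p-1}]V_1'F_\Sigma = B'\operatorname{Dg}[(p-x_c), -x_c\mathbf{1}_{p-1}]B. \tag{A21}$$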

This demonstrates that A2c and Dg[(p−xc), −xc1p−1] are congruent. Consequently Sylvester’s Law of Inertia (Lancaster, 1969, p. 90) allows concluding that A2c has one positive and p − 1 negative eigenvalues.

Proposition 3

For c ∈ {α, I}, Pr{ρ̂c ≤ rc} = Pr{tr(A1cY’0Y0) ≤ 0}.

Proof of Proposition 3

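$$\hat\rho_I \le r_I \iff \mathbf{1}_p'\hat\Sigma\mathbf{1}_p - \operatorname{tr}(\hat\Sigma) \le (p-1)r_I\operatorname{tr}(\hat\Sigma) \iff \operatorname{tr}[(\mathbf{1}_p\mathbf{1}_p' - x_II_p)\hat\Sigma] \le 0 \iff \operatorname{tr}(A_{1I}Y_0'Y_0) \le 0 \tag{A22}$$

and

$$\hat\rho_\alpha \le r_\alpha \iff \frac{\operatorname{tr}(\hat\Sigma)}{\mathbf{1}_p'\hat\Sigma\mathbf{1}_p} \ge 1 - \frac{r_\alpha(p-1)}{p} = x_\alpha^{-1} \iff \operatorname{tr}[(\mathbf{1}_p\mathbf{1}_p' - x_\alpha I_p)\hat\Sigma] \le 0 \iff \operatorname{tr}(A_{1\alpha}Y_0'Y_0) \le 0. \tag{A23}$$

Both chains use tr(Σ̂) > 0 and 1’pΣ̂1p > 0, which hold with probability one because Σ̂ is positive definite with probability one when ν > p, together with 1’pΣ̂1p = tr(1p1’pΣ̂) and νΣ̂ = Y’0Y0.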

Proposition 4

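$$\operatorname{tr}(A_{1c}Y_0'Y_0) = \lambda_{2c,1}X_{c1} + \sum_{j=2}^{p}\lambda_{2c,j}X_{cj} = D(x_c),$$

with Xcj ~ χ²(ν), independently of Xcj’ if j ≠ j’.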

Proof of Proposition 4

The fact that tr(AB) =tr(BA), when the matrices conform, allows writing

$$\operatorname{tr}(A_{1c}Y_0'Y_0) = \operatorname{tr}(Y_0A_{1c}Y_0'). \tag{A24}$$

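If Z1 ~ Nν,p(0, Iν, Ip), then Z1F’Σ ~ Nν,p(0, Iν, Σ), so Y0 may be taken to be Z1F’Σ. Note that Z1 = {z1,ij} is a collection of independent standard Gaussian variables. With V2c the orthonormal eigenvectors of A2c, define Z2c = Z1V2c, with Z2c ~ Nν,p(0, Iν, Ip). Therefore

$$\operatorname{tr}(Y_0A_{1c}Y_0') = \operatorname{tr}(Z_1F_\Sigma'A_{1c}F_\Sigma Z_1') = \operatorname{tr}(Z_1A_{2c}Z_1') = \operatorname{tr}[Z_1V_{2c}\operatorname{Dg}(\lambda_{2c})V_{2c}'Z_1'] = \operatorname{tr}[Z_{2c}\operatorname{Dg}(\lambda_{2c})Z_{2c}']. \tag{A25}$$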

A diagonal element of Z’2cZ2c is z’2c,jz2c,j, with z2c,j the (ν × 1) column j of Z2c. Using the fact that postmultiplying by a diagonal matrix scales the columns allows writing

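$$\operatorname{tr}[Z_{2c}\operatorname{Dg}(\lambda_{2c})Z_{2c}'] = \sum_{j=1}^{p}\lambda_{2c,j}\,\mathbf{z}_{2c,j}'\mathbf{z}_{2c,j}. \tag{A26}$$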

The independent standard Gaussian nature of the elements of Z2c allows concluding that

$$X_{cj} = \mathbf{z}_{2c,j}'\mathbf{z}_{2c,j} \sim \chi^2(\nu), \tag{A27}$$

with Xcj independent of Xcj’ if j ≠ j’. Without loss of generality, assume that λ2c,1 is the single positive eigenvalue of A2c. The proof is completed by splitting the summation:

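$$\operatorname{tr}(A_{1c}Y_0'Y_0) = \sum_{j=1}^{p}\lambda_{2c,j}X_{cj} = \lambda_{2c,1}X_{c1} + \sum_{j=2}^{p}\lambda_{2c,j}X_{cj} = D(x_c). \tag{A28}$$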

Proof of Corollary 1

If Σ is compound symmetric then the eigenvectors of A1c and Σ coincide, which implies V’1VΣ = V’ΣV1 = Ip. In turn,

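$$A_{2c} = \operatorname{Dg}(\lambda_\Sigma)^{1/2}V_\Sigma'V_1\operatorname{Dg}[(p-x_c), -x_c\mathbf{1}_{p-1}]V_1'V_\Sigma\operatorname{Dg}(\lambda_\Sigma)^{1/2} = \operatorname{Dg}[(p-x_c)\tau_1, -x_c\tau_2\mathbf{1}_{p-1}], \tag{A29}$$

since λΣ = [τ1 τ21’p−1]’ under compound symmetry.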

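If Xc+ = Xc2 + … + Xcp, then Xc+ ~ χ²[ν(p − 1)]. Furthermore

$$\Pr\{\hat\rho_c \le r_c\} = \Pr\{(p-x_c)\tau_1X_{c1} - x_c\tau_2X_{c+} \le 0\} = \Pr\left\{\frac{X_{c1}/\nu}{X_{c+}/[\nu(p-1)]} \le \frac{(p-1)x_c\tau_2}{(p-x_c)\tau_1}\right\} = F_F\left[\frac{(p-1)x_c\tau_2}{(p-x_c)\tau_1};\ \nu,\ \nu(p-1)\right]. \tag{A30}$$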

Note the identity F_F(f; ν1, ν2) = 1 − F_F(1/f; ν2, ν1).

Contributor Information

Emily O. Kistner, Department of Biostatistics, University of North Carolina, McGavran-Greenberg Building CB#7420, Chapel Hill, North Carolina 27599-7420

Keith E. Muller, Department of Biostatistics, University of North Carolina, 3105C McGavran-Greenberg Building CB#7420, Chapel Hill, North Carolina 27599-7420 (Keith Muller@UNC.EDU).

References

- Arnold SF. The theory of linear models and multivariate analysis. Wiley; New York: 1981.

- Cronbach LJ. Coefficient alpha and the internal structure of tests. Psychometrika. 1951;16:297–334.

- Davies RB. The distribution of a linear combination of χ2 random variables. Applied Statistics. 1980;29:323–333.

- Feldt LS. The approximate sampling distribution of Kuder-Richardson reliability coefficient twenty. Psychometrika. 1965;30:357–370. doi: 10.1007/BF02289499.

- Glueck DH, Muller KE. On the trace of a Wishart. Communications in Statistics: Theory and Methods. 1998;27:2137–2141. doi: 10.1080/03610929808832218.

- Johnson NL, Kotz S. Continuous univariate distributions—2. Houghton Mifflin; Boston: 1970.

- Johnson NL, Kotz S, Balakrishnan N. Continuous univariate distributions—1. 2nd ed. Wiley; New York: 1994.

- Johnson NL, Kotz S, Balakrishnan N. Continuous univariate distributions—2. 2nd ed. Wiley; New York: 1995.

- Kristof W. The statistical theory of stepped-up reliability when a test has been divided into several equivalent parts. Psychometrika. 1963;28:221–228.

- Lancaster PL. Theory of matrices. Academic Press; New York: 1969.

- Mathai AM, Provost SB. Quadratic forms in random variables. Marcel Dekker; New York: 1992.

- Morrison DF. Multivariate statistical methods. 3rd ed. McGraw-Hill; New York: 1990.

- SAS Institute. SAS/IML user’s guide, version 8. SAS Institute, Inc.; Cary, NC: 1999.

- van Zyl JM, Neudecker H, Nel DG. On the distribution of the maximum likelihood estimator of Cronbach’s alpha. Psychometrika. 2000;65:271–280.