Abstract

Cooperation is fundamental to the evolution of human society. We regularly observe cooperative behaviour in everyday life and in controlled experiments with anonymous people, even though standard economic models predict that they should deviate from the collective interest and act so as to maximise their own individual payoff. However, there is typically heterogeneity across subjects: some may cooperate, while others may not. Since individual factors promoting cooperation could be used by institutions to indirectly prime cooperation, this heterogeneity raises the important question of who these cooperators are. We have conducted a series of experiments to study whether benevolence, defined as a unilateral act of paying a cost to increase the welfare of someone else beyond one's own, is related to cooperation in a subsequent one-shot anonymous Prisoner's dilemma. Contrary to the predictions of the widely used inequity aversion models, we find that benevolence does exist and a large majority of people behave this way. We also find benevolence to be correlated with cooperative behaviour. Finally, we show a causal link between benevolence and cooperation: priming people to think positively about benevolent behaviour makes them significantly more cooperative than priming them to think malevolently. Thus benevolent people exist and cooperate more.

Introduction

Two or more people cooperate if they pay an individual cost in order to increase the welfare of the group. The canonical economic model, assuming people care only about their own welfare, predicts that they should not cooperate: the incentive to minimise individual cost causes people to act selfishly. In reality the opposite behaviour is often observed. In personal relationships, workplace collaborations, political participation, and concerning global issues such as climate change, examples of cooperation are manifold, and fostering cooperation has been show to have a number of important applications [1]–[10].

Classical studies have been focussed on punishing of defectors [11]–[14], increasing the reputation of cooperators [3], [15], [16], and the interplay between these two mechanisms [17]–[20]. While these approaches have been successfully shown to enforce cooperation, and punishment has been adopted by most countries to sanction defectors, their weakness is their cost to not only the punisher and the punished, but to the third party tasked with rewarding those with increased reputation. The principle is: if we want to increase cooperation, someone must pay a cost.

In this light, it becomes important to find less expensive ways to sustain cooperation and it is here that individual factors may play a crucial role. Assume individual factor  is known to promote cooperation, then creating an environment which favours factor

is known to promote cooperation, then creating an environment which favours factor  will also promote cooperation. Existence of one or more such factors is suggested by the numerous experimental studies showing that humans do tend to behave cooperatively, even in anonymous, isolated environments where communications or long-term strategies are not allowed [21]–[25]. These studies have shown that humans are heterogeneous: some may cooperate, while others may not. If so, who are the cooperators?

will also promote cooperation. Existence of one or more such factors is suggested by the numerous experimental studies showing that humans do tend to behave cooperatively, even in anonymous, isolated environments where communications or long-term strategies are not allowed [21]–[25]. These studies have shown that humans are heterogeneous: some may cooperate, while others may not. If so, who are the cooperators?

A growing body of literature is trying to provide answers to this question, by investigating what factors promote cooperation in one-shot social dilemma games, such as the Public Goods game and the Prisoner's dilemma. In the Public Goods game,  agents are endowed with

agents are endowed with  monetary units and have to decide how much, if any, to contribute to a public pool. The total amount in the pot is multiplied by a constant and evenly distributed among all players. So, player

monetary units and have to decide how much, if any, to contribute to a public pool. The total amount in the pot is multiplied by a constant and evenly distributed among all players. So, player  's payoff is

's payoff is  , where

, where  denotes

denotes  's contribution and the ‘marginal return’

's contribution and the ‘marginal return’  is assumed to belong to the open interval

is assumed to belong to the open interval  . In the Prisoner's dilemma, two agents can either cooperate or defect. To cooperate means paying a cost to give a greater benefit to the other player; to defect means doing nothing.

. In the Prisoner's dilemma, two agents can either cooperate or defect. To cooperate means paying a cost to give a greater benefit to the other player; to defect means doing nothing.

While theoretical studies have shown that heterogeneity among subjects can promote the evolution of cooperation in the spatial Prisoner's dilemma and the spatial Public Goods game in a variety of different settings [26]–[29], experimental studies have investigated the role of specific factors, such as intuition and altruism (see below for definitions), on cooperation in one-shot anonymous Public Goods games and Prisoner's dilemma games [24], [30]–[36]. Intuitive actions are induced by either exerting time pressure on subjects or priming them towards intuition versus deliberate reflection [30]–[33]. While it is generally accepted that intuition favours cooperation through the Social Heuristics Hypothesis [30], the correlation between altruism and cooperation is still unclear: one study did not find any correlation between altruism and cooperation in a subsequent one-shot Public Goods game [36], while another study found a positive correlation between altruism and cooperation in a precedent one-shot Prisoner's dilemma [24].

Altruism is formally defined as unilaterally paying a cost  to give a benefit

to give a benefit  to another and is traditionally measured using a Dictator game [24], [32], [34]–[37]. Here a dictator is given an endowment

to another and is traditionally measured using a Dictator game [24], [32], [34]–[37]. Here a dictator is given an endowment  and must then decide how much, if any, to donate to a recipient who was given nothing. The recipient has no input in the process and simply accepts the donation. Givings in the Dictator game are usually considered as an appropriate measure of altruism [38]–[41] and recent experiments have shown that indeed they positively correlate to altruistic acts in real-life situations [42].

and must then decide how much, if any, to donate to a recipient who was given nothing. The recipient has no input in the process and simply accepts the donation. Givings in the Dictator game are usually considered as an appropriate measure of altruism [38]–[41] and recent experiments have shown that indeed they positively correlate to altruistic acts in real-life situations [42].

Experiments on the Dictator game typically present a bimodal distribution. Participants tend to either act selfishly or act so as to decrease inequity between players. Consider the scenario where Player 1 is given $10 dollars and must then decide how much if any to donate to a second anonymous player. In most cases Player 1 decides to selfishly keep all of the money, or to donate half to Player 2, and so reduce the inequity between the two players. There is a third scenario that occurs, although rarely. Here Player 1 decides to donate more to Player 2 than to keep for herself. In some cases players have been known to donate the entire sum. The act of increasing the other payoff beyond your own will be called ‘benevolence’. It is likely that this behaviour is not observed more often in the Dictator game as its design effectively penalises altruism. If cost were less of a factor perhaps benevolence would be more prevalent.

In sum, the main difference between cooperation and altruism is that altruism is unilateral: there is no way to get rewarded. Another difference is that we allow altruist action at negligible cost. In other words, the important part is to create a benefit to someone else without getting anything back. Benevolence is an extreme form of altruism, where the final result of the act is that the recipient has a larger payoff of the actor.

Cooperation: Two or more people cooperate if they pay an individual cost to give a greater benefit to the group.

Altruism: A person acts altruistically if he unilaterally pays a cost  to increase the benefit of someone else. More formally, Player 1 is altruist towards Player 2 if he prefers the allocation

to increase the benefit of someone else. More formally, Player 1 is altruist towards Player 2 if he prefers the allocation  to the allocation

to the allocation  , where

, where  and

and  .

.

Benevolence: A person acts benevolently if he unilaterally pays a cost  to increase the benefit of someone else beyond one's own. More formally, Player 1 is benevolent towards Player 2 if he prefers the allocation

to increase the benefit of someone else beyond one's own. More formally, Player 1 is benevolent towards Player 2 if he prefers the allocation  to the allocation

to the allocation  , where

, where  ,

,  , and

, and  .

.

Examples of benevolence in everyday life abound. The sharing of one's food causing the sharer to go hungry, campaigning on behalf of a VIP in order to promote their agenda, or something as trivial as ‘liking’ or sharing a status on social networks so as to increase the reputation of another.

In this paper, we have designed a game that allows players to choose actions that are malevolent, inequity averse or benevolent, all at minimal cost. More specifically, we give an endowment  to Player 1 that she keeps regardless of any subsequent choice. She has to then decide how much, between

to Player 1 that she keeps regardless of any subsequent choice. She has to then decide how much, between  and

and  to donate to Player 2. To donate

to donate to Player 2. To donate  will be referred as a malevolent act; to donate

will be referred as a malevolent act; to donate  will be referred as inequity aversion; to donate more than

will be referred as inequity aversion; to donate more than  will be referred as benevolence.

will be referred as benevolence.

This form of benevolence, though costless, increases the inequity among people and so it is predicted not to exist by the widely used inequity aversion models [47], [48]. Thus, as a first step of our program, we have conducted an experiment, using this new economic game, to show that most people act in a benevolent way even when it is made clear that there is no possibility of an indirect reward. We next move to investigate our main research question: Is benevolence one of those individual factors favouring cooperative behaviour? With this is mind, as a second step, we have asked whether benevolence is correlated to cooperative behaviour. We have found that benevolence positively correlates with cooperation in a number of different settings, and with different payoffs. Finally, in our third study, we have showed the causal link between benevolence and cooperation: priming people towards benevolence versus malevolence results in a significant increase of cooperative behaviour.

These results allow us to conclude that benevolence is an individual factor possessed by many people and that it is among those factors promoting cooperative behaviour. Although this observation contradicts inequity aversion models, other theories could be used to explain it. For instance, the tendency to maximise the total welfare and adherence to social norms can explain the existence of benevolence and its correlation with cooperative behaviour. We refer the reader to the Discussion section for more details.

Study 1. Benevolence Exists

Inequity aversion models [47], [48] are based on the assumption that humans have a tendency to mitigate payoff differences. Since benevolence, measured using the game described below, increases payoff difference between the actor and the recipient, these models predict that it does not exist. Thus, as a first step of our program, we make us sure that benevolence does actually exist. Moreover, we test whether people trust in the benevolence of others and, to this end, we have introduced a second player who has to gamble on the first player's level of benevolence. Among the several different ways one can formalise this strategic situation through an economic game, we have adopted a particularly simple one, formally described below.

BT

: Player 1 is given an amount

: Player 1 is given an amount  of dollars which she keeps regardless her choice. She then must choose an amount of dollars between

of dollars which she keeps regardless her choice. She then must choose an amount of dollars between  and

and  . Player 2 has to decide a number between 0 and

. Player 2 has to decide a number between 0 and  , as well. If Player 2's choice, say

, as well. If Player 2's choice, say  (as in trust), is smaller than or equal to Player 1's choice, say

(as in trust), is smaller than or equal to Player 1's choice, say  (as in benefit or benevolence), then Player 2 gets

(as in benefit or benevolence), then Player 2 gets  dollars, otherwise he gets nothing. So player 1's decision corresponds to the maximum amount of dollars she allows player 2 to make, while player 2's choice is a measure of his trust in Player 1's benevolence.

dollars, otherwise he gets nothing. So player 1's decision corresponds to the maximum amount of dollars she allows player 2 to make, while player 2's choice is a measure of his trust in Player 1's benevolence.

The BT game is similar to the Ultimatum Game with multiplier  [49]. Here Player 2 decides his minimal acceptable offer (MAO) and Player 1 decides an offer

[49]. Here Player 2 decides his minimal acceptable offer (MAO) and Player 1 decides an offer  to make to Player 2. If MAO

to make to Player 2. If MAO  , then Player 2 earns

, then Player 2 earns  and Player 1 earns

and Player 1 earns  , otherwise both players earn nothing. However, in the BT game the payoff of Player 1 is fixed and independent of any profile of strategies played.

, otherwise both players earn nothing. However, in the BT game the payoff of Player 1 is fixed and independent of any profile of strategies played.

We recruited US subjects to play BT using the online labour market Amazon Mechanical Turk [43]–[46]. After explaining the rules, we asked a series of comprehension questions to make sure they understood the game. These questions were formulated to make very clear the duality between harming and favouring the other player at zero cost for themselves. Players failing any of the comprehension questions were automatically screened out. We refer the reader to the File S1 for full experimental instructions.

using the online labour market Amazon Mechanical Turk [43]–[46]. After explaining the rules, we asked a series of comprehension questions to make sure they understood the game. These questions were formulated to make very clear the duality between harming and favouring the other player at zero cost for themselves. Players failing any of the comprehension questions were automatically screened out. We refer the reader to the File S1 for full experimental instructions.

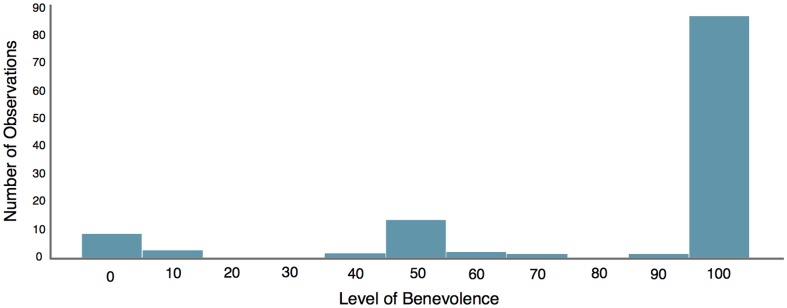

A total of 247 subjects passed all comprehension questions. Among the 123 subjects who played as Player 1, we find that only 12 participants chose a strategy  (9 malevolent and 3 inequity averse people). All others chose

(9 malevolent and 3 inequity averse people). All others chose  and about

and about  of the subjects acted in a perfectly benevolent way, choosing

of the subjects acted in a perfectly benevolent way, choosing  and so maximising the inequity between themselves and the others (see Figure 1).

and so maximising the inequity between themselves and the others (see Figure 1).

Figure 1. Distribution of choices in BT  of those people acting as Player 1.

of those people acting as Player 1.

Only 12 out of 123 participants acted in a malevolent or inequity averse way; all others acted benevolently with a large majority of participants acting in a perfectly benevolent way.

By looking at the 124 subjects who played as Player 2, we find that subjects tended to trust in the benevolence of others, although we find a general tendency to underestimate it: while the average ‘benevolence’ was  , the average ‘trust’ was only

, the average ‘trust’ was only  . The Mann-Whitney test confirms that these samples most likely come from different distributions (

. The Mann-Whitney test confirms that these samples most likely come from different distributions ( ). We have also conducted a similar experiment with BT

). We have also conducted a similar experiment with BT with 133 US subjects acting as Player 1 and 142 as Player 2. By comparing the results in BT

with 133 US subjects acting as Player 1 and 142 as Player 2. By comparing the results in BT with those in BT

with those in BT we find that, after the obvious rescaling, benevolence and trust do not seem to depend on the maximum payout

we find that, after the obvious rescaling, benevolence and trust do not seem to depend on the maximum payout  . (Mann-Whitney test:

. (Mann-Whitney test:  in case of benevolence;

in case of benevolence;  in case of trust).

in case of trust).

Study 2. Benevolence Is Positively Correlated with Cooperation

To study correlation between cooperation and benevolence, and cooperation and trust, we designed a battery of four two-stage games. Participants first played a BT game and then a standard Prisoner's dilemma PD with cost

with cost  and benefit

and benefit  . In our PD, two players must choose to either either cooperate or defect: to defect means keeping

. In our PD, two players must choose to either either cooperate or defect: to defect means keeping  , while to cooperate means giving

, while to cooperate means giving  to the other player. The strategic situation faced by the participants is summarised below.

to the other player. The strategic situation faced by the participants is summarised below.

T1. Subjects first play BT as Player 1 and then play PD

as Player 1 and then play PD .

.

T2. Subjects first play BT as Player 1 and then play PD

as Player 1 and then play PD .

.

T3. Subjects first play BT as Player 2 and then play PD

as Player 2 and then play PD .

.

T4. Subjects first play BT as Player 2 and then play PD

as Player 2 and then play PD .

.

Again we recruited US subjects using AMT and asked qualitative comprehension questions to make sure they understood the game.

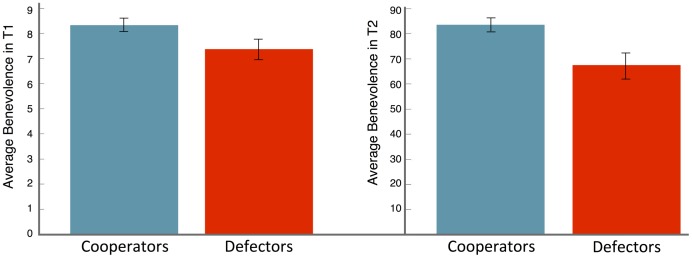

A total of 385 subjects, nearly evenly distributed among the four treatments, passed all comprehension questions. Figure 2 shows the average benevolence of cooperators and defectors in T1 and T2. Benevolence seems positively correlated with cooperation in both treatments. To confirm this, we use logistic regression to predict defection or cooperation as the dependent variable. We find that the correlation between benevolence and cooperation is borderline significant in T1 (coeff ,

,  ) and significant in T2 (coeff

) and significant in T2 (coeff ,

,  ). On the other hand, we find that trust affects cooperation only for

). On the other hand, we find that trust affects cooperation only for  (coeff

(coeff ,

,  ) and does not for

) and does not for  (coeff

(coeff ,

,  ).

).

Figure 2. Average level of benevolence of cooperators and defectors in T1 and T2, with error bars representing the standard error of the mean.

In both treatments benevolence is positively correlated with cooperation.

Study 3. Priming Benevolence Promotes Cooperation

In the previous studies we have shown that benevolence exists and is positively correlated to cooperation. Here we show the causal link between benevolence and cooperation. To do this we use an experimental design similar to that used in [30] to show the causal link between intuition and cooperation: we prime participants towards benevolence or malevolence before playing a Prisoner's dilemma. Specifically, we have conducted three more treatments, as described below.

T5. After entering the game, participants see a screen where we define benevolence as giving a benefit to someone else at negligible cost to themselves. Subjects are then asked to write a paragraph describing a time when acting benevolently led them in the right direction and resulted in a positive outcome for them. Alternatively, they could write a paragraph describing a time when acting malevolently led them in the wrong direction and resulted in a negative outcome for them. After this, they are asked to play PD .

.

T6. This treatment is very similar to T5, with the only difference that subjects are primed towards malevolence. We first define malevolence as an unkind act towards someone else with no immediate benefit for themselves and then we ask participants to write a paragraph describing a time when acting benevolently led them in the wrong direction and resulted in a negative outcome for them. Alternatively, they could write a paragraph describing a time when acting malevolently led them in the right direction and resulted in a positive outcome for them.

T7. This is a baseline treatment, where participants enter the game and are immediately asked to play PD , using literally the same instructions as in T5 and T6, in order to avoid framing effects.

, using literally the same instructions as in T5 and T6, in order to avoid framing effects.

Also for this study, we recruited US subjects using AMT. In order not to destroy the priming effect we decided not to ask for comprehension questions before the Prisoner's dilemma in T5 and T6. Further, we asked no comprehension questions in T7 so as not to bias any baseline measurement. To control for good quality results we used other techniques (see File S1 for full experimental details). In particular, at the end of the experiment we asked the players to describe the reason of their choice. This, together with the descriptions of benevolent or malevolent actions, allowed us to manually exclude from the analysis those subjects who did not take the game seriously or showed a clear misunderstanding of the rules of the game, as sometimes happens in AMT experiments. We excluded from our analysis 11 subjects.

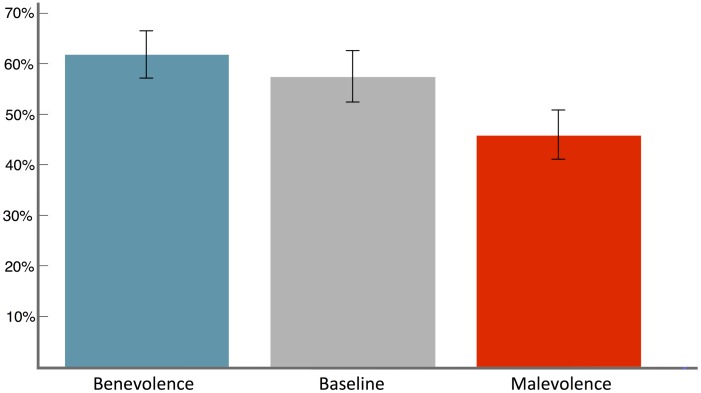

300 US subjects, nearly evenly distributed among the three treatments, participated to our third study and passed our manual screening. As Figure 3 shows, the trend is in the expected direction.  of the participants cooperated in T5, far more than that in T6 (

of the participants cooperated in T5, far more than that in T6 ( ). Pearson's

). Pearson's  test confirms that the difference is statistically significant (

test confirms that the difference is statistically significant ( ). The baseline treatment lies just in between, with a percentage of cooperation of

). The baseline treatment lies just in between, with a percentage of cooperation of  . However, the difference is not statistically significant with neither of the other two treatments (T5 vs T7,

. However, the difference is not statistically significant with neither of the other two treatments (T5 vs T7,  ; T6 vs T7,

; T6 vs T7,  ).

).

Figure 3. Average cooperation in each of the three treatments.

Participants primed to act benevolently were significantly more likely to cooperate than than those primed to act malevolently. The level of cooperation of unprimed participants lies between those of the primed groups and cannot be statistically separated from either.

Discussion

Benevolence, that is paying a (potentially zero) cost to increase someone else's welfare beyond that of your own, is predicted not to exist by the widely used inequity aversion models [47], [48], which are indeed founded on the idea that humans have a tendency to moderate payoff differences. Contrary to this prediction, our Study 1 shows that most people act in a benevolent way, at least when the cost of the action is zero, and that most people trust in the benevolence of others. Existence of benevolence might be seen as surprising also in light of other experimental results showing that people are often willing to pay a cost to decrease the benefit of a richer partner [50]. The explanation of these apparently contradictory results most likely relies in social norms: most people think that to be benevolent and to restore equity when a situation of inequity is artificially presented as status quo without any reason as in [50] are both the ‘right things to do’.

Looking for individuals factors promoting cooperative behaviour, we have then asked whether benevolence is positively correlated with cooperative behaviour. Our Study 2 shows that: benevolent people not only exist, but they are more likely to cooperate in a subsequent Prisoner's dilemma. This provides evidence that benevolence is one of those individual factors favouring cooperation.

Our Study 3 strengthens this conclusion by directly showing a causal link between benevolence and cooperation. Priming people to think about benevolence in a positive way or about malevolence in a negative way makes them significantly more cooperative than priming them to think about benevolence in a negative way or about malevolence in a positive way. The fact that the level of cooperation can experimentally be manipulated in such a way connects to the important question of whether priming people towards benevolence can be used for instance by companies as a way to increase cooperation among employees or by countries to increase cooperation among citizens, and to what extent.

As we have mentioned, our results are not consistent with inequity aversion models. However, a number of other theories could explain both the existence of benevolence and its positive correlation with cooperative behaviour. Several experimental studies have shown that many people act so as to maximise the total welfare [24], [51], [52] and some of the most recent mathematical models of human behaviour are indeed based on postulating this tendency [9], [25], [51], [53]. See [54], [55] for a review of other models of cooperation. This predisposition might explain why benevolent people exist and are more cooperative: our results might be due to a number of people attempting to maximise the total welfare. Other scholars suggest that social norms shape most of cooperative behaviour [56], [57]. Though social norms varies across cultures, it is possible that to be benevolent and to be cooperative are both seen as the ‘right things to do’ by part of the US population. From this perspective, our results could be driven by a number of people attempting to act according to the social norm they adhere to.

We conclude by saying that our results do not imply that defectors are never benevolent. As Figure 2 shows, defectors were substantially more benevolent than predicted by inequity aversion models, but significantly worse than cooperators. Benevolence thus seems far more transversal than cooperation and suggests the following question. What evolutionary pressures select for benevolence? Together with the aforementioned theories, several others, such as warm-glow giving [58] and the Social Heuristics Hypothesis [30], offer qualitative explanations. Andreoni's warm-glow giving theory states that (some) humans receive utility from the fact itself of giving; the SHH instead builds on the idea that everyday life interactions are often repeated and a benevolent act today may be rewarded tomorrow. It is then possible that people internalise benevolence in their everyday life and use it as a default strategy in the lab.

In conclusion, we have found that benevolence exists and it is positively correlated to cooperation. However, the ultimate reason why benevolence exists and why it is correlated with cooperation is far from being clear. It is therefore an important question for further research and is likely to be challenging because it clearly connects to some of the most basic open problems of human social behaviour.

Materials and Methods

We recruited US subjects using Amazon Mechanical Turk and randomly assigned them to one of seven experiments using economic games. Treatments are described in the Main Text and full instructions are given in the File S1. In four treatments, participants were informed that comprehension questions would be asked after the instructions of each game and that they would be automatically eliminated if they failed to correctly answer them. Comprehension questions were formulated in such a way to make very clear the duality between harming and favouring the other player (in case of the BT game), and between maximising one's own payoff and maximising the total welfare (in case of the PD). A total of 385 subjects passed all comprehension questions. Participants were also informed that computation and payment of the bonuses would be made at the end of the experiment. So, importantly, in each treatment, participants played the second game without knowing the outcome of the first. The structure of the remaining three treatments was such that we could not ask for comprehension questions. However, to control for good quality result we used other techniques, such as asking participants to describe the strategy used. This allowed us to manually eliminate from the analysis those people who showed a clear misunderstanding of the game rules. No deception was used. Written consent was obtained by all participants, and the experiments were approved by the Southampton University Ethics Committee on the Use of Human Subjects in Research. For further details of the experimental methods, see File S1.

Supporting Information

Experimental setup.

(PDF)

Acknowledgments

We thank Elena Simperl for discussions about the experimental design and Christian Hilbe and David G. Rand for helpful comments on early drafts.

Data Availability

The authors confirm that all data underlying the findings are fully available without restriction. The data is available from http://datadryad.org/review?wfID=28568&token=1dd64ab8-ab0e-429e-99d0-b7855018ff34.

Funding Statement

The author CS has been supported by the EPSRC (Engineering and Physical Sciences Research Council), grant reference EP/I016945/1. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1.Trivers R (1971) The evolution of reciprocal altruism. Q Rev Biol 46: : 35–57. [Google Scholar]

- 2.Axelrod R, Hamilton WD (1981) The evolution of cooperation. Science 211: : 1390–1396. [DOI] [PubMed] [Google Scholar]

- 3.Milinski M, Semmann D, Krambeck HJ (2002) Reputation helps solve the ‘tragedy of the commons’. Nature 415: : 424–426. [DOI] [PubMed] [Google Scholar]

- 4.Doebeli M, Hauert C (2005) Models of cooperation based on the Prisoners Dilemma and the Snowdrift game. Ecol Lett 8: : 748–766. [Google Scholar]

- 5.Lehmann L, Keller L (2006) The evolution of cooperation and altruism. A general framework and a classification of models. J Evol Biol 19: : 1365–1376. [DOI] [PubMed] [Google Scholar]

- 6.Nowak MA (2006) Five rules for the evolution of cooperation. Science 314: : 1560–1563. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Crockett MJ (2009) The neurochemistry of fairness. Ann NY Acad Sci 1167: : 76–86. [DOI] [PubMed] [Google Scholar]

- 8.Rand DG, Nowak MA (2013) Human cooperation. Trends Cogn Sci 17: : 413–425. [DOI] [PubMed] [Google Scholar]

- 9. Capraro V (2013) A Model of Human Cooperation in Social Dilemmas. PLoS ONE 8(8): e72427. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Zaki J, Mitchell JP (2013) Intuitive Prosociality. Curr Dir Psychol Sci 22: : 466–470. [Google Scholar]

- 11.Boyd R, Richerson PJ (1992) Punishment allows the evolution of cooperation (or anything else) in sizable groups. Ethol Sociobiol 13: : 171–195. [Google Scholar]

- 12.Fehr E, Gächter S (2000) Cooperation and punishment in public goods experiments. Am Econ Rev 90: : 980–994. [Google Scholar]

- 13.Fehr E, Gächter S (2002) Altruistic punishment in humans. Nature 415: : 137–140. [DOI] [PubMed] [Google Scholar]

- 14.Gürerk Ö, Irlenbusch B, Rockenbach B (2006) The competitive advantage of sanctioning institutions. Science 312: : 108–111. [DOI] [PubMed] [Google Scholar]

- 15.Panchanathan K, Boyd R (2004) Indirect reciprocity can stabilize cooperation without the second-order free rider problem. Nature 432: : 499–502. [DOI] [PubMed] [Google Scholar]

- 16.Milinski M, Semmann D, Krambeck HJ, Marotzke J (2006) Stabilizing the Earth's climate is not a losing game: supporting evidence from public goods experiments. Proc Natl Acad Sci USA 103: : 3994–3998. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Andreoni J, Harbaugh W, Vesterlund L (2003) The carrot or the stick: rewards, punishments, and cooperation. Am Econ Rev 93: : 893–902. [Google Scholar]

- 18.Rockenbach B, Milinski M (2006) The efficient interaction of indirect reciprocity and costly punishment. Nature 444: : 718–723. [DOI] [PubMed] [Google Scholar]

- 19.Sefton M, Shupp R, Walker JM (2007) The effects of rewards and sanctions in provision of public goods. Econ Inq 45: : 671–690. [Google Scholar]

- 20.Hilbe C, Sigmund K (2010) Incentives and opportunism: from the carrot to the stick. Proc R Soc B 277 (1693): : 2427–2433. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Cooper R, DeJong DV, Forsythe R, Ross TW (1996) Cooperation without Reputation: Experimental Evidence from Prisoner's Dilemma Games. Games Econ Behav 12: : 187–218. [Google Scholar]

- 22.Goeree J, Holt C (2001) Ten Little Treasures of Game Theory and Ten Intuitive Contradictions. Am Econ Rev 91: : 1402–1422. [Google Scholar]

- 23.Zelmer J (2003) Linear public goods experiments: A meta-analysis. Exper Econ 6: : 299–310. [Google Scholar]

- 24.Capraro V, Jordan JJ, Rand DG (2014) Cooperation Increases with the Benefit-to-Cost Ratio in One-Shot Prisoner's Dilemma Experiments. Available at SSRN: http://ssrn.com/abstract=2429862.

- 25.Barcelo H, Capraro V (2014) Group size effect on cooperation in social dilemmas. Available at SSRN: http://ssrn.com/abstract=2425030 [DOI] [PMC free article] [PubMed]

- 26.Wang Z, Perc M (2010) Aspiring to the fittest and promotion of cooperation in the prisoner's dilemma game. Phys Rev E 82: : 021115 [DOI] [PubMed] [Google Scholar]

- 27. Perc M, Wang Z (2010) Heterogeneous Aspirations Promote Cooperation in the Prisoner's Dilemma Game. PLoS ONE 5(12): e15117. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28. Wang Z, Murks A, Du WB, Rong ZH, Perc M (2011) Coveting thy neighbors fitness as a means to resolve social dilemmas. J Theor Biol 277: 19–26. [DOI] [PubMed] [Google Scholar]

- 29.Szolnoki A, Wang Z, Perc M (2012) Wisdom of groups promotes cooperation in evolutionary social dilemmas. Sci Rep 2: : 576. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Rand DG, Green JD, Nowak MA (2012) Spontaneous giving and calculated greed. Nature 489: : 427–430. [DOI] [PubMed] [Google Scholar]

- 31.Rand DG, Peysakhovich A, Kraft-Todd GT, Newman GE, Wurzbacher O, et al. (In press) Social Heuristics Shape Intuitive Cooperation. Nature Commun 5: 3677. [DOI] [PubMed] [Google Scholar]

- 32.Peysakhovich A, Rand DG (2013) Habits of Virtue: Creating Norms of Cooperation and Defection in the Laboratory. Available at SSRN: http://ssrn.com/abstract=2294242.

- 33.Rand DG, Kraft-Todd GT (2013) Reflection Does Not Undermine Self-Interested Prosociality: Support for the Social Heuristics Hypothesis. Available at SSRN: http://ssrn.com/abstract=2297828. [DOI] [PMC free article] [PubMed]

- 34.Dreber A, Fudenberg D, Rand DG (2014) Who cooperates in repeated games: The role of altruism, inequity aversion, and demographics. J Econ Behav Organ 98: : 41–55. [Google Scholar]

- 35.Harbaugh WT, Krause K (2000) Children's contributions in public goods experiments: the development of altruistic and free-riding behaviours. Econ Inq 38: : 95–109. [Google Scholar]

- 36.Blanco M, Engelmann D, Normann HT (2011) A within-subject analysis of other regarding preferences. Games Econ Behav 72: : 321–338. [Google Scholar]

- 37.Capraro V, Marcelletti A (2014) Do good actions inspire good actions in others? Available at SSRN: http://ssrn.com/abstract=2454667. [DOI] [PMC free article] [PubMed]

- 38.Brañas-Garza P (2006) Poverty in dictator games: Awakening solidarity. J Econ Behav Organ 60: : 306–320. [Google Scholar]

- 39. Brañas-Garza P (2007) Promoting helping behavior with framing in dictator games. J Econ Psychol 28(4): 477–486. [Google Scholar]

- 40.Charness G, Gneezy U (2008) What's in a name?: anonymity and social distance in dictator and ultimatum games. J Econ Behav Organ 68: : 29–35. [Google Scholar]

- 41.Engel C (2011) Dictator games: A meta study. Exper Econ 14: : 583–610. [Google Scholar]

- 42.Franzen A, Pointner S (2013) The external validity of giving in the dictator game. Exper Econ 16: : 155–169. [Google Scholar]

- 43.Paolacci G, Chandler J, Ipeirotis PG (2010) Running Experiments on Amazon Mechanical Turk. Judgm Decis Mak 5: : 411–419. [Google Scholar]

- 44.Horton JJ, Rand DG, Zeckhauser RJ (2011) The online laboratory: conducting experiments in a real labor market. Exper Econ 14: : 399–425. [Google Scholar]

- 45.Rand DG (2012) The promise of Mechanical Turk: How online labor markets can help theorists run behavioral experiments. J Theor Biol 299: : 172–179. [DOI] [PubMed] [Google Scholar]

- 46. Thaler S, Simperl E, Wölger S (2012) An experiment in comparing human-computation techniques. IEEE Internet Computing 16 (5): 52–58. [Google Scholar]

- 47. Fehr E, Schmidt K (1999) A theory of fairness, competition, and cooperation. Q J Econ 114 (3): 817–868. [Google Scholar]

- 48. Bolton GE, Ockenfels A (2000) ERC: A Theory of Equity, Reciprocity, and Competition. Am Econ Rev 90 (1): 166–193. [Google Scholar]

- 49. Miyaji K, Wang Z, Tanimoto J, Hagishima A, Kokubo S (2013) The evolution of fairness in the coevolutionary ultimatum games. Chaos Solitons Fract 56: 13–18. [Google Scholar]

- 50.Dawes CT, Fowler JH, Johnson T, McElreath R, Smirnov O (2007) Egalitarian motives in humans. Nature 446: : 794–796. [DOI] [PubMed] [Google Scholar]

- 51. Charness G, Rabin M (2002) Understanding social preferences with simple tests. Q J Econ 117 (3): 817–869. [Google Scholar]

- 52. Engelmann D, Strobel M (2004) Inequality aversion, efficiency, and maximin preferences in simple distribution experiments. Am Econ Rev 94 (4): 857–869. [Google Scholar]

- 53.Capraro V, Venanzi M, Polukarov M, Jennings NR (2013) Cooperative equilibria in iterated social dilemmas. In: Proc 6th of the International Symposium on Algorithmic Game Theory, Lecture Notes in Computer Science 8146 : pp. 146–158. [Google Scholar]

- 54.Perc M, Gómez-Gardeñnes J, Szolnoki A, Floría LM, Moreno Y (2013) Evolutionary dynamics of group interactions on structured populations: A review. J R Soc Interface 10: : 20120997. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 55. Perc M, Szolnoki A (2010) Coevolutionary games - A mini review. BioSystems 99: 109–125. [DOI] [PubMed] [Google Scholar]

- 56. Fehr E, Fischbacher U (2004) Social norms and human cooperation. Trends Cogn Sci 8(4): 185–190. [DOI] [PubMed] [Google Scholar]

- 57. Tomasello M, Vaish A (2013) Origins of Human Cooperation and Morality. Annu Rev Psychol 64: 231–255. [DOI] [PubMed] [Google Scholar]

- 58. Andreoni J (1989) Giving with Impure Altruism: Applications to Charity and Ricardian Equivalence. J Polit Econ 97 (6): 1447–1458. [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

Experimental setup.

(PDF)

Data Availability Statement

The authors confirm that all data underlying the findings are fully available without restriction. The data is available from http://datadryad.org/review?wfID=28568&token=1dd64ab8-ab0e-429e-99d0-b7855018ff34.