Abstract

Background and aims

This study investigated the effectiveness of two different levels of e-learning when teaching clinical skills to medical students.

Materials and methods

Sixty medical students were included and randomized into two comparable groups. The groups were given either a video- or text/picture-based e-learning module and subsequently underwent both theoretical and practical examination. A follow-up test was performed 1 month later.

Results

The students in the video group performed better than the illustrated text-based group in the practical examination, both in the primary test (P<0.001) and in the follow-up test (P<0.01). Regarding theoretical knowledge, no differences were found between the groups on the primary test, though the video group performed better on the follow-up test (P=0.04).

Conclusion

Video-based e-learning is superior to illustrated text-based e-learning when teaching certain practical clinical skills.

Keywords: e-learning, video versus text, medicine, clinical skills

Background

E-learning and web-based learning are rapidly becoming an integrated part of the teaching methods used in medical schools and continuing medical education throughout the world.1,2 Of the many reasons, the primary one is availability. With an increasing number of smartphones, tablets, and laptops, e-learning is easily accessible whenever and wherever needed.

Further e-learning benefits include increased student satisfaction, especially when e-learning is used as an augmentation to traditional teaching methods.3–6 Cost is both an advantage and a disadvantage. Upfront development costs can be prohibitively high and require considerable technical skills. Once developed, however, e-learning offers the advantages of scale: it can be used countless times at minimal marginal cost.2

Previously published studies have shown little or no difference in outcome when comparing web-based e-learning modules to paper-based modules of the same curriculum.3,7,8 Similarly, studies comparing case-based e-learning to traditional case-based classroom teaching show no significant differences between groups.3,7,8

As for acquisition of practical clinical skills mediated by e-learning, the existing literature is scarce. Most available sources deal with e-learning in addition to traditional class-based learning. Results in these studies vary though, with both better and poorer results from e-learning-augmented teaching, compared to classroom teaching alone, having been described.4–6,9,10 This lack of evidence-based knowledge raises the question of whether or not a clinical skill can be mediated by e-learning alone.

E-learning in general is a rapidly growing market, reaching US$35.6 billion worldwide in 2011 and, with a 5-year compound annual growth rate of 7.6%, expected to reach $51.5 billion by 2016.11 With this rapidly developing market, there is an urgent need for evidence-based guidance on how to develop and implement the most effective e-learning in the future.12 Therefore, it is relevant to examine whether some e-learning methods are more effective than others on a stand-alone basis, in particular for the acquisition of practical clinical skills.

This study investigates the effectiveness of two different e-learning methods on a population of fourth- and fifth-year Danish medical students. While previous studies have focused primarily on the use of video or illustrated text incorporated into the traditional method of teaching as augmentation to certain topics, our aim was to examine which of the two modalities was best suited for acquiring both theoretical and practical knowledge of a new subject.

A previous study, dealing with assessment of patients with head trauma according to the Glasgow Coma Scale, showed improved outcomes associated with higher levels of e-learning.13 Using this “level division”, the two groups of the current study would have been divided into multimedia level 1 (illustrated text) and level 2 (video). However, this prior study was focused solely on the acquisition of theoretical knowledge. We thus designed our study to determine whether the results would also apply for the acquisition of practical skills.

For this study, we chose the Dix–Hallpike test as a teaching subject. This test is used by clinicians to examine patients with symptoms of dizziness for benign paroxysmal positional vertigo. Practical skill as well as considerable theoretical knowledge is required, in order for both the test to be performed and the results interpreted correctly.

Aim of the study

This study aimed to test if video-based e-learning was better than illustrated text-based e-learning when teaching clinical procedures. Subsequently, the study also aimed to test if there was a difference between theoretical knowledge and practical skills.

Materials and methods

Setup

This was a randomized controlled study of two groups learning the Dix–Hallpike test using either video-based or illustrated text-based e-learning. Test subjects were medical students from the University of Copenhagen. Students were recruited from participants at a voluntary course in emergency room surgery where the Dix–Hallpike test was not part of the expected curriculum (Danish Medical Association, Copenhagen). All interested students were invited to join. Students who had previous clinical experience with or knowledge of the Dix–Hallpike test were excluded from this study.

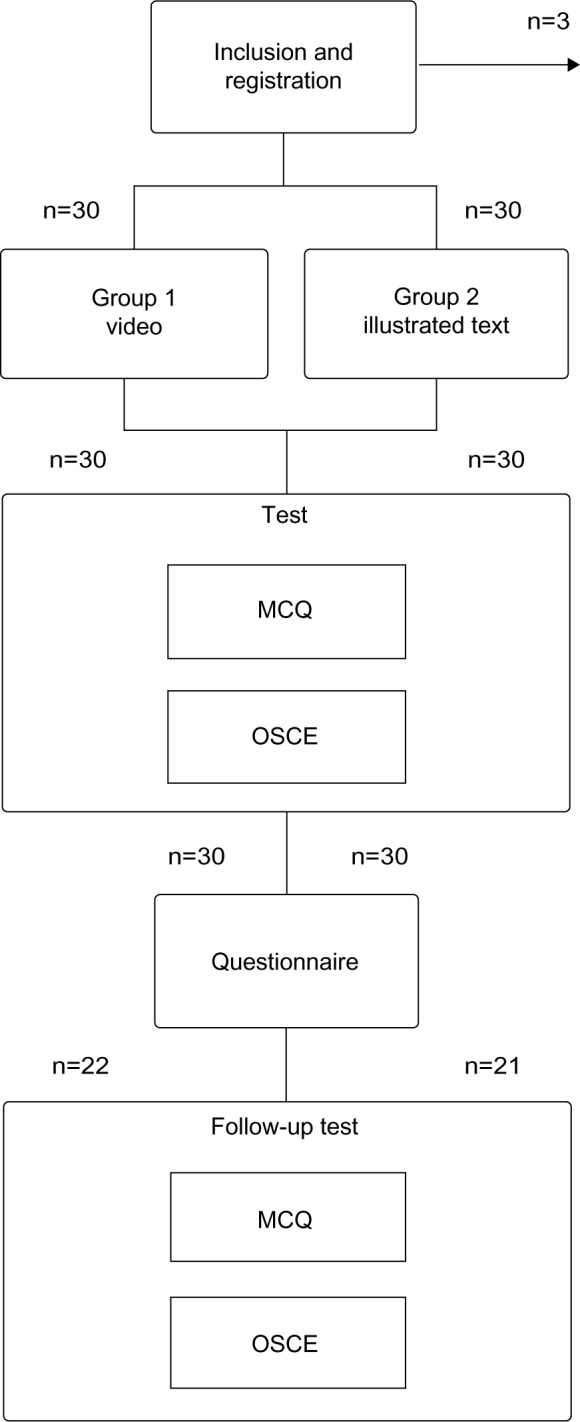

Before students could access the e-learning modules, they were required to register and specify their sex and age. At the same time, they were assigned to one of two groups using simple computerized randomization. Allocation concealment was maintained throughout the study, and the students were kept unaware of the presence of other groups and the teaching methods applied. After using the e-learning module for a maximum of 15 minutes, the students were asked to take a test consisting of a multiple-choice questionnaire (MCQ) and an observed structured clinical examination (OSCE), followed by a follow-up test (MCQ + OSCE) 1 month later. Group 1 was the video-based group, while group 2 was the illustrated text-based one (as illustrated in Figure 1).

Figure 1.

Flowchart describing the sequence of this study.

Abbreviations: MCQ, multiple-choice questionnaire; OSCE, observed structured clinical examination.
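The registration-time allocation described above can be sketched as follows. This is a minimal illustration of simple randomization in general, not the study's actual implementation: the function name, the student identifiers, and the seed are all hypothetical.

```python
import random


def assign_group(rng):
    """Simple randomization: each student is independently assigned
    to group 1 (video) or group 2 (illustrated text) with equal probability."""
    return rng.choice([1, 2])


# Hypothetical example: the seed is arbitrary and fixed only for reproducibility.
rng = random.Random(2014)
students = [f"student_{i:02d}" for i in range(1, 7)]  # made-up identifiers
allocation = {student: assign_group(rng) for student in students}

for student, group in allocation.items():
    print(student, "-> group", group)
```

Because each assignment is independent, simple randomization does not by itself guarantee equally sized groups.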

Illustrated text-based e-learning

This simple e-learning module was purely an illustrated textbook with a series of six pictures showing the steps of the procedure. It also described evaluation of the test as well as basics about vertigo (Figure 2).

Figure 2.

Example of pictures taken from the illustrated text material.

Note: Reproduced with permission from Medviden.dk.

Video-based e-learning

This module was almost the same as the illustrated text one. The only difference was a video sequence of about 2.2 minutes showing the procedure itself. We chose a maximum time of 15 minutes within the modules based on pilot studies indicating that about 10–15 minutes were needed.

Development of the test instruments

MCQ

In order to avoid questions being seen before the test and follow-up test, both consisted of 50 true/false questions, randomly chosen from a pool of 100 questions. These were validated using two groups of 15 students in pilot studies. An index of reliability was computed as the difference between the proportions of high and low scorers who answered correctly. All questions had a reliability index above 0.10, so no changes were made. The questions were divided into procedural questions (25 points) and questions regarding theory (25 points).
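The index described above can be sketched in a few lines. This is a minimal illustration only: the source does not state how the high- and low-scoring groups were formed, so the sketch assumes the top and bottom halves of the pilot students ranked by total score, and the function name and data are hypothetical.

```python
def reliability_index(item_correct, total_scores, fraction=0.5):
    """Difference between the proportions of high and low scorers
    who answered one item correctly.

    item_correct: 1/0 per student for the item in question.
    total_scores: total test score per student (same order).
    fraction: share of students in each group (assumed 0.5 here).
    """
    # Rank student indices from highest to lowest total score.
    ranked = sorted(range(len(total_scores)),
                    key=lambda i: total_scores[i], reverse=True)
    k = max(1, int(len(ranked) * fraction))
    high, low = ranked[:k], ranked[-k:]
    p_high = sum(item_correct[i] for i in high) / k
    p_low = sum(item_correct[i] for i in low) / k
    return p_high - p_low


# Made-up pilot data for six students and a single question.
total_scores = [48, 45, 44, 40, 38, 35]
item_correct = [1, 1, 1, 1, 0, 0]
index = reliability_index(item_correct, total_scores)
print(round(index, 2), "keep" if index > 0.10 else "revise")
```

In this made-up example all three high scorers and one of the three low scorers answer correctly, so the item clears the 0.10 threshold used in the study.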

OSCE

The OSCE was evaluated using a mark sheet divided into procedural skills (a total of 25 points regarding ten predefined actions), and the students were scored in order to evaluate if the actions were performed safely and accurately. The students were also verbally asked ten questions regarding theory, and could gain a total of 25 points (all or none for each question). An index of reliability was computed as the difference between the proportions of high and low scorers who answered correctly. All questions and actions had a reliability index above 0.10, and no changes were made. The examiner in charge of supervising the OSCE was blinded, and thus had no knowledge of whether the student had been in the illustrated text group or video group.

Module development

The test, follow-up test, questionnaire, and e-learning training and test-module were all designed using Moodle (Moodle Pty Ltd, Perth, WA, Australia), a freeware (GPLv3-licensed) PHP web application for producing modular Internet-based courses integrated into a free Danish website for medical education. All parts of the study were closed and required a password for admittance. The program was carried out in Danish. The video and a rewritten combination of the two modules were available online.

Outcomes

The primary outcome was the difference between the two groups’ scores obtained on the validated knowledge test. Secondary outcomes were the difference in self-evaluated skills and satisfaction with the teaching material.

Statistical tests and study size

The Mann–Whitney U test was used to compare the two groups, and Cohen’s d was calculated as a standardized measure of effect. Based on experience from a pilot study, the difference between the groups was anticipated to be 5%. The anticipated range for this difference was 10%, which gave a standard deviation of 5% applied to all groups. As we anticipated that the two groups would deviate only slightly from each other, a 6% difference was chosen as the minimum relevant difference. The significance level was set at 5%, and statistical power at 80%. This yielded a total requirement of 22 subjects in each group.
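For readers who want to see how such inputs translate into a sample size, the following is a minimal sketch using the common normal-approximation formula for comparing two means, n = 2((z₁₋α/₂ + z₁₋β)·σ/δ)² per group. The source does not state which formula or software the authors used, so the function name and the choice of formula are assumptions.

```python
import math
from statistics import NormalDist


def n_per_group(delta, sd, alpha=0.05, power=0.80):
    """Per-group sample size for a two-sided comparison of two means
    (normal approximation; assumed formula, not taken from the source)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for power = 0.80
    n = 2 * ((z_alpha + z_beta) * sd / delta) ** 2
    return math.ceil(n)


# Inputs from the text: minimum relevant difference 6%, standard deviation 5%.
print(n_per_group(delta=6, sd=5))
```

With these inputs the approximation returns 11 per group. Since the authors' exact procedure is not described (for example, any adjustment for the rank-based test or for dropout), this sketch should not be expected to reproduce the reported requirement.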

Ethics

The study was purely educational, and the Danish National Committee on Health Research Ethics (DNVK), Denmark was consulted. Their conclusion was that the study did not require ethical approval (h-4-2013-fsp 41). All recruited students were asked to give electronic consent before entering the system.

Results

Table 1 illustrates the characteristics of the 60 students who completed the test and the 43 students who also completed the follow-up test. There were no statistically significant differences between the groups’ demographic parameters, and no students were excluded due to prior knowledge of the test.

Table 1.

Demographic characteristics of participating students

| | Video | % | Text/pictures | % | P-value |
|---|---|---|---|---|---|
| Primary test and questionnaire | | | | | |
| n | 30 | | 30 | | |
| Mean age (SD) | 24.7 (1.3) | | 24.6 (1.2) | | 0.75 |
| Sex | | | | | |
| Male | 13 | 43 | 14 | 47 | 0.83 |
| Female | 17 | 57 | 16 | 53 | |
| Follow-up test | | | | | |
| n | 22 | | 21 | | |
| Mean age (SD) | 24.7 (1.4) | | 24.6 (1.6) | | 0.94 |
| Sex | | | | | |
| Male | 8 | 36 | 10 | 48 | 0.54 |
| Female | 14 | 64 | 11 | 52 | |

Note: P-values are for differences between the two randomized groups.

Abbreviation: SD, standard deviation.

Table 2 illustrates the differences in test scores (knowledge tests) between the two groups. For the MCQ, there were no statistically significant differences between the two groups in the primary test, but the video group performed statistically better in procedural questions (P=0.04) for the follow-up test. The OSCE showed significant differences between the two groups both in the primary test (P=0.001) and follow-up test (P=0.01).

Table 2.

Differences in mean numbers of correct responses, time, and efficiency

| | Video | Text/picture | Difference | P-value | Cohen’s d |
|---|---|---|---|---|---|
| Primary test | | | | | |
| n | 30 | 30 | | | |
| MCQ (SD) | 44.5 (2.7) | 44.6 (3.5) | −0.1 | 0.7 | 0.04 |
| MCQ: procedure (SD) | 20.9 (2.0) | 20.7 (2.5) | 0.2 | 0.5 | 0.10 |
| MCQ: theory (SD) | 23.5 (1.8) | 23.8 (1.6) | −0.3 | 0.5 | 0.18 |
| OSCE (SD) | 44.2 (1.3) | 40.2 (3.8) | 4.0 | 0.001 | 1.39 |
| OSCE: procedure (SD) | 23.1 (1.0) | 19.2 (3.5) | 3.9 | <0.001 | 1.50 |
| OSCE: theory (SD) | 21.0 (1.0) | 20.9 (1.1) | 0.1 | 0.9 | 0.10 |
| Follow-up test | | | | | |
| n | 22 | 21 | | | |
| MCQ (SD) | 40.8 (1.6) | 39.5 (3.0) | 1.3 | 0.15 | 0.55 |
| MCQ: procedure (SD) | 19.8 (1.0) | 18.6 (1.9) | 1.2 | 0.04 | 0.79 |
| MCQ: theory (SD) | 21.0 (1.4) | 20.9 (1.5) | 0.1 | 1.0 | 0.07 |
| OSCE (SD) | 40.5 (1.7) | 38.0 (3.9) | 2.5 | 0.01 | 0.80 |
| OSCE: procedure (SD) | 21.1 (1.4) | 19.0 (2.9) | 2.1 | 0.005 | 0.96 |
| OSCE: theory (SD) | 19.3 (1.8) | 19.0 (2.0) | 0.3 | 0.6 | 0.14 |

Notes: Significance calculated using Mann–Whitney U test. Cohen’s effect-size value (d): high (>0.8), moderate (0.5–0.8), or small (0.2–0.5) practical significance.

Abbreviations: SD, standard deviation; MCQ, multiple-choice questionnaire; OSCE, observed structured clinical examination.
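The effect sizes in Table 2 can be approximated from the published means and SDs. The following is a minimal sketch, assuming the pooled-standard-deviation form of Cohen’s d (the source does not state which variant was used). The function names are illustrative, and the "negligible" label for values below 0.2 is an assumption, since the table notes do not name that range.

```python
import math


def cohens_d(mean1, sd1, n1, mean2, sd2, n2):
    """Cohen's d from summary statistics using the pooled standard deviation."""
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2)
    return (mean1 - mean2) / math.sqrt(pooled_var)


def classify(d):
    """Labels from the table notes; boundary handling is an assumption."""
    d = abs(d)
    if d > 0.8:
        return "high"
    if d >= 0.5:
        return "moderate"
    if d >= 0.2:
        return "small"
    return "negligible"  # label not used in the source


# OSCE procedural score, primary test (values from Table 2).
d_osce = cohens_d(23.1, 1.0, 30, 19.2, 3.5, 30)
# MCQ total score, follow-up test (values from Table 2).
d_mcq = cohens_d(40.8, 1.6, 22, 39.5, 3.0, 21)

print(round(d_osce, 2), classify(d_osce))
print(round(d_mcq, 2), classify(d_mcq))
```

Values recomputed this way land close to, but not always exactly on, the published figures, which is to be expected when starting from rounded means and SDs.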

Further analysis of the OSCE results revealed that the difference was primarily due to the procedural scores (P<0.001 in primary test; P=0.005 in follow-up test), while there were no significant differences in scores for the theoretical part (P=0.9; P=0.6). The follow-up questionnaire (Table 3) revealed that the video group was more satisfied with the teaching material than the illustrated text-based group (P<0.001). However, the two groups evaluated their clinical skills as being equally good.

Table 3.

Questionnaire

| | Video | Text/picture | Difference | P-value | Cohen’s d |
|---|---|---|---|---|---|
| n | 30 | 30 | | | |
| Satisfaction (SD) | 7.3 (1.1) | 5.9 (1.0) | 1.4 | <0.001 | 1.37 |
| Self-evaluated clinical skill (SD) | 6.1 (1.3) | 6.3 (1.2) | −0.2 | 0.45 | 0.20 |

Notes: Questionnaire rated 0–10, where 10 was highest. Significance calculated using Mann–Whitney U test. Cohen’s effect-size value (d): high (>0.8), moderate (0.5–0.8), or small (0.2–0.5) practical significance.

Abbreviation: SD, standard deviation.

Discussion

In this study, we found that video-based e-learning was a more effective method than illustrated text-based e-learning for the procedural part of the OSCE in teaching the Dix–Hallpike test. This was in line with our expectations, as video can present a smoother and more exact sequence of steps in a given clinical procedure compared to an image sequence. In contrast, a less practically oriented teaching session with more factual points might be better suited for illustrated text-based learning, but this is beyond the scope of this study. In line with previous research,3 our results show that higher levels of e-learning yield both better results and higher student satisfaction.

A potential weakness in this type of study is whether or not the two sets of teaching materials were equivalent with regard to quality. As we found that both groups of students performed equally well in both the MCQ and in the theoretical questions of the OSCE, we concluded that the factual parts of our materials were comparable.

In their study of video sequences, in addition to regular tuition of medical students, Hibbert et al6 found no effect with regard to the procedure of thyroid examination. They argued that the expected “gain” of the intervention group might have been undermined due to the fact that a number of instructional videos on the topic are already available online, and are thus available to both the control and intervention groups.

To eliminate this effect, the primary test was administered immediately after the teaching session. However, as the subsequent follow-up test was conducted 1 month after the primary test, the performance of both groups could potentially have been biased by other instructional videos or acquisition of knowledge elsewhere. Even so, the video group still performed better than the illustrated text-based group in both MCQ and OSCE procedural questions on the follow-up test. This could be due to two factors: either our instructional video was of a higher quality than the ones available elsewhere online, or the students in the illustrated text-based group had not sought additional knowledge online.

The strengths of this study are comparable groups, a simple setup, and relevant end points. The sample size was sufficient to detect a statistically significant difference with regard to the procedural part of our end points, but a larger study might have shown a difference in the theoretical part as well. This study supports the need for differentiating and individualizing e-learning into different modalities depending on the subject, and further research is warranted in order to continue developing e-learning on an evidence-based footing.

Conclusion

Based on our findings, we conclude that video-based e-learning is superior to illustrated text-based e-learning when teaching certain practical clinical skills, such as the Dix–Hallpike test. We suggest further studies in order to obtain a more general conclusion.

Footnotes

Disclosure

The authors report no conflicts of interest in this work.

References

- 1. Cook DA, Levinson AJ, Garside S, Dupras DM, Erwin PJ, Montori VM. Internet-based learning in the health professions: a meta-analysis. JAMA. 2008;300(10):1181–1196. doi: 10.1001/jama.300.10.1181.

- 2. Cook DA. Web-based learning: pros, cons and controversies. Clin Med. 2007;7(1):37–42. doi: 10.7861/clinmedicine.7-1-37.

- 3. Cook DA, Dupras DM, Thompson WG, Pankratz VS. Web-based learning in residents’ continuity clinics: a randomized, controlled trial. Acad Med. 2005;80(1):90–97. doi: 10.1097/00001888-200501000-00022.

- 4. Bloomfield JG, Jones A. Using e-learning to support clinical skills acquisition: exploring the experiences and perceptions of graduate first-year pre-registration nursing students – a mixed method study. Nurse Educ Today. 2013;33(12):1605–1611. doi: 10.1016/j.nedt.2013.01.024.

- 5. Preston E, Ada L, Dean CM, Stanton R, Waddington G, Canning C. The Physiotherapy eSkills Training Online resource improves performance of practical skills: a controlled trial. BMC Med Educ. 2012;12:119. doi: 10.1186/1472-6920-12-119.

- 6. Hibbert EJ, Lambert T, Carter JN, Learoyd DL, Twigg S, Clarke S. A randomized controlled pilot trial comparing the impact of access to clinical endocrinology video demonstrations with access to usual revision resources on medical student performance of clinical endocrinology skills. BMC Med Educ. 2013;13:135. doi: 10.1186/1472-6920-13-135.

- 7. Worm BS. Learning from simple ebooks, online cases or classroom teaching when acquiring complex knowledge. A randomized controlled trial in respiratory physiology and pulmonology. PLoS One. 2013;8(9):e73336. doi: 10.1371/journal.pone.0073336.

- 8. Maloney S, Storr M, Paynter S, Morgan P, Ilic D. Investigating the efficacy of practical skill teaching: a pilot-study comparing three educational methods. Adv Health Sci Educ Theory Pract. 2013;18(1):71–80. doi: 10.1007/s10459-012-9355-2.

- 9. Perkins GD, Kimani PK, Bullock I, et al. Improving the efficiency of advanced life support training: a randomized, controlled trial. Ann Intern Med. 2012;157(1):19–28. doi: 10.7326/0003-4819-157-1-201207030-00005.

- 10. Worm BS, Buch SV. Does competition work as a motivating factor in e-learning? A randomized controlled trial. PLoS One. 2014;9(1):e85434. doi: 10.1371/journal.pone.0085434.

- 11. Adkins SS. The Worldwide Market for Self-Paced eLearning Products and Services: 2011–2016 Forecast and Analysis. Monroe (WA): Ambient Insight; 2013.

- 12. Cook DA, Levinson AJ, Garside S, Dupras DM, Erwin PJ, Montori VM. Instructional design variations in internet-based learning for health professions education: a systematic review and meta-analysis. Acad Med. 2010;85(5):909–922. doi: 10.1097/ACM.0b013e3181d6c319.

- 13. Worm BS, Jensen K. Does peer learning or higher levels of e-learning improve learning abilities? A randomized controlled trial. Med Educ Online. 2013;18:21877. doi: 10.3402/meo.v18i0.21877.