Significance

Science is rooted in the concept that a model can be tested against observations and rejected when necessary. However, the problem of model testing becomes formidable when we consider the probabilistic forecasting of natural systems. We show that testability is facilitated by the definition of an experimental concept, external to the model, that identifies collections of data, observed and not yet observed, that are judged to be exchangeable and can thus be associated with well-defined frequencies. We clarify several conceptual issues regarding the testing of probabilistic forecasting models, including the ambiguity of the aleatory/epistemic dichotomy, the quantification of uncertainties as degrees of belief, the interplay between Bayesian and frequentist methods, and the scientific pathway for capturing predictability.

Keywords: system science, Bayesian statistics, significance testing, subjective probability, expert opinion

Abstract

Probabilistic forecasting models describe the aleatory variability of natural systems as well as our epistemic uncertainty about how the systems work. Testing a model against observations exposes ontological errors in the representation of a system and its uncertainties. We clarify several conceptual issues regarding the testing of probabilistic forecasting models for ontological errors: the ambiguity of the aleatory/epistemic dichotomy, the quantification of uncertainties as degrees of belief, the interplay between Bayesian and frequentist methods, and the scientific pathway for capturing predictability. We show that testability of the ontological null hypothesis derives from an experimental concept, external to the model, that identifies collections of data, observed and not yet observed, that are judged to be exchangeable when conditioned on a set of explanatory variables. These conditional exchangeability judgments specify observations with well-defined frequencies. Any model predicting these behaviors can thus be tested for ontological error by frequentist methods; e.g., using P values. In the forecasting problem, prior predictive model checking, rather than posterior predictive checking, is desirable because it provides more severe tests. We illustrate experimental concepts using examples from probabilistic seismic hazard analysis. Severe testing of a model under an appropriate set of experimental concepts is the key to model validation, in which we seek to know whether a model replicates the data-generating process well enough to be sufficiently reliable for some useful purpose, such as long-term seismic forecasting. Pessimistic views of system predictability fail to recognize the power of this methodology in separating predictable behaviors from those that are not.

Science is rooted in the concept that a model can be tested against observations and rejected when necessary (1). However, the problem of model testing becomes formidable when we consider natural systems. Owing to their scale, complexity, and openness to interactions within a larger environment, most natural systems cannot be replicated in the laboratory, and direct observations of their inner workings are always inadequate. These difficulties raise serious questions about the meaning and feasibility of “model verification” and “model validation” (2), and have led to the pessimistic view that “the outcome of natural processes in general cannot be accurately predicted by mathematical models” (3).

Uncertainties in the formal representation of natural systems imply that the forecasting of emergent phenomena such as natural hazards must be based on probabilistic rather than deterministic modeling. The ontological framework for most probabilistic forecasting models comprises two types of uncertainty: an aleatory variability that describes the randomness of the system, and an epistemic uncertainty that characterizes our lack of knowledge about the system. According to this distinction, which stems from the classical dichotomy of objective/subjective probability (4), epistemic uncertainty can be reduced by increasing relevant knowledge, whereas the aleatory variability is intrinsic to the system representation and is therefore irreducible within that representation (5, 6).

The testing of a forecasting model is itself a statistical enterprise that evaluates how well a model agrees with some collection of observations (e.g., 7, 8). One can compare competing forecasts within a Bayesian framework and use new data to reduce the epistemic uncertainty. However, the scientific method requires the possibility of rejecting a model without recourse to specific alternatives (9, 10). The statistical gauntlet of model evaluation should therefore include pure significance testing (11). Model rejection exposes “unknown unknowns”; i.e., ontological errors in the representation of the system and its uncertainties. Here we use “ontological” to label errors in a model’s quantification of aleatory variability and epistemic uncertainty (see SI Text, Glossary). [Other authors have phrased the problem in different terms; e.g., Musson’s (12) “unmanaged uncertainties.” In the social sciences, “ontological” is sometimes used interchangeably with “aleatory” (13).]

The purpose of this paper is to clarify the conceptual issues associated with the testing of probabilistic forecasting models for ontological errors in the presence of aleatory variability and epistemic uncertainty. Some relate to long-standing debates in statistical philosophy, in which Bayesians (14) spar with frequentists (15), and others propose methodological accommodations that draw from the strengths of both schools (10, 16). Statistical “unificationists” of the latter stripe advocate the importance of model checking using Bayesian (calibrated) P values (16, 17, 18, 19) as well as graphical summaries and other tools of exploratory data analysis (20). Bayesian model checking has been criticized by purists on both sides (21, 22), but one version, prior predictive checking, provides us with an appropriate framework for the testing of forecasting models for ontological errors.

Among the concerns to be addressed is the use of expert opinion to characterize epistemic uncertainty, a common practice when dealing with extreme events, such as large earthquakes, volcanic eruptions, and climate change. Frequentists discount the quantification of uncertainties in terms of degrees of belief as “fatally subjective—unscientific” (23), and they oppose “letting scientists’ subjective beliefs overshadow the information provided by data” (21). Bayesians, on the other hand, argue that probability is intrinsically subjective: all probabilities are degrees of belief that cannot be measured. Jaynes’s (24) view is representative: “any probability assignment is necessarily ‘subjective’ in the sense it describes only a state of knowledge, and not anything that could be measured in a physical experiment.” Immeasurability suggests untestability: How can models of probabilities that are not measurable be rejected?

Our plan is to expose the conceptual issues associated with the uncertainty hierarchy in the mathematical framework of a particular forecasting problem—probabilistic seismic hazard analysis (PSHA)—and resolve them in a way that generalizes the testing for ontological errors to other types of probabilistic forecasting models. A glossary of uncommon terms used in this paper is given in SI Text, Glossary.

Probabilistic Seismic Hazard Analysis

Earthquakes proceed as cascades in which the primary effects of faulting and ground shaking may induce secondary effects, such as landslides, liquefaction, and tsunamis. Seismic hazard is a probabilistic forecast of how intense these natural effects will be at a specified site on Earth’s surface during a future interval of time. Because earthquake damage is primarily caused by shaking, quantifying the hazard due to ground motions is the main goal of PSHA. Various intensity measures can be used to describe the shaking experienced during an earthquake; common choices are peak ground acceleration and peak ground velocity. PSHA estimates the exceedance probability of an intensity measure X; i.e., the probability that the shaking will be larger than some intensity value x at a particular geographic site over the time interval of interest, usually beginning now and stretching over several decades or more (5, 25, 26). It is often assumed that earthquake occurrence is a Poisson process with rates constant in time, in which case the hazard model is said to be time independent.
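The time-independent assumption can be made concrete with a minimal sketch. It assumes that exceedances of the intensity value x themselves follow a Poisson process with a constant annual rate, so that the probability of at least one exceedance in T years is 1 − exp(−rate × T). The function name and the rate used here are hypothetical and chosen only for illustration.

```python
import math


def exceedance_probability(annual_rate: float, years: float) -> float:
    """Probability of at least one exceedance in `years`, assuming a
    Poisson process with a constant annual rate (time-independent model)."""
    if annual_rate < 0 or years < 0:
        raise ValueError("rate and duration must be non-negative")
    return 1.0 - math.exp(-annual_rate * years)


# Hypothetical rate: the intensity value x is exceeded on average
# once every 475 years at the site of interest.
rate = 1.0 / 475.0
p_50yr = exceedance_probability(rate, 50.0)
print(f"50-year exceedance probability: {p_50yr:.3f}")
```

Repeating the calculation over a range of intensity values, each with its own rate, would trace out one point per value on a hazard curve.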

A plot of the exceedance probability F(x) = P(X > x) for a particular site is called the hazard curve. Using hazard curves, engineers can estimate the likelihood that buildings and other structures will be damaged by earthquakes during their expected lifetimes, and they can apply performance-based design and seismic retrofitting to reduce structural fragility to levels appropriate for life safety and operational requirements. A seismic hazard map is a plot of the exceedance probability F at a fixed intensity x as a function of site position (27, 28) or, somewhat more commonly, x at fixed F (29–31). Official seismic hazard maps are now produced by many countries, and are used to specify seismic performance criteria in the design and retrofitting of buildings, lifelines, and other infrastructure, as well as to guide disaster preparedness measures and set earthquake insurance rates. These applications often fold PSHA into probabilistic risk analysis based on further specifications of loss models and utility measures (32, 33). Here we focus on assessing the reliability of PSHA as a forecasting tool rather than its role in risk analysis and decision theory.

The reliability of PSHA has been repeatedly questioned. In the last few years, disastrous earthquakes in Sumatra, Italy, Haiti, Japan, and New Zealand have reinvigorated this debate (34–39). Many practical deficiencies have been noted, not the least of which is the paucity of data for retrospective calibration and prospective testing of PSHA models, owing to the short span of observations relative to the forecasting time scale (40, 41). However, some authors have raised the more fundamental question of whether PSHA is misguided because it cannot capture the aleatory variability of large-magnitude earthquakes produced by complex fault systems (35, 38, 42). Moreover, the pervasive role of subjective probabilities in specifying the epistemic uncertainty in PSHA has made this methodology a target for criticism by scientists who adhere to the frequentist view of probabilities. A particular objection is that degrees of belief cannot be empirically tested and, therefore, that PSHA models are not scientific (43–45).

We explore these issues in the PSHA framework developed by earthquake engineers in the 1970s and 1980s and refined in the 1990s by the Senior Seismic Hazard Analysis Committee (SSHAC) of the US Nuclear Regulatory Commission (5). For a particular PSHA model Hm, the intrinsic or aleatory variability of the ground motion X is described by the hazard curve Fm(x) = P(X > x | Hm). The epistemic uncertainty is characterized by an ensemble {Hm: m ∈ M} of alternative hazard models consistent with our present knowledge. A probability πm is assigned to Fm(x) that measures its plausibility, based on present knowledge, relative to other hazard curves drawn from the ensemble.

Ideally, the model ensemble {Hm} could be constructed and the model probabilities assigned according to how well the candidates explain prior data. Various objective methods have been developed for this purpose: resampling, adaptive boosting, Bayesian information criteria, etc. (46, 47). However, the high-intensity, low-probability region of the hazard space F ⊗ x most relevant to many risk decisions is dominated by large, infrequent earthquakes. The data for these extreme events are usually too limited to discriminate among alternative assumptions and fix key parameters. Therefore, in common practice, the model ensemble {Hm} is organized as branches of a logic tree, and the branch weights {πm}, which may depend on the hazard level x, are assigned according to expert opinion (5, 48, 49).

If the logic tree spans a hypothetically mutually exclusive and completely exhaustive (MECE) set of possibilities (50), πm can be interpreted as the probability that Fm(x) is the “true” hazard value, and operations involving the plausibility measure {πm} must obey Kolmogorov’s axioms of probability; e.g.,

$$\sum_{m \in M} \pi_m = 1 \tag{1}$$

In PSHA practice, logic trees are usually constructed to sample the possibilities, rather than exhaust them, in which case the MECE assumption is inappropriate. One can then reinterpret πm as the probability that Fm(x) is the “best” among a set of available models (12, 47, 51) or “the one that should be used” (52). This utilitarian approach increases the subjective content of {Fm(x), πm}, as well as the possibilities for ontological error. We will call {Fm(x), πm} the experts’ distribution to recognize this subjectivity, regardless of whether {Hm} comes from a logic tree or is constructed in a different way.

For conceptual simplicity, we will assume the discrete experts’ distribution {Fm(x), πm} samples a continuous probability distribution with density function p(ϕ), where ϕ = F(x0) at a fixed hazard value x0. We denote this relationship by {ϕm} ∼ p(ϕ) and call p the extended experts’ distribution. Various data-analysis methods have been established to move from a discrete sample to a continuous distribution, although the process also compounds the potential for ontological error. Given the extended experts’ distribution, the expected hazard at fixed x0 is

$$\bar{\phi} = \int_0^1 \phi \, p(\phi) \, d\phi \tag{2}$$

This central value measures the aleatory variability of the hazard, conditional on the model, and the dispersion of p about this central value describes the epistemic uncertainty in its estimation.
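For a discrete experts’ distribution, the same two quantities can be sketched directly from the branch values and weights. The sketch below is illustrative only: the four branch values and weights are hypothetical, the function names are ours, and the weighted standard deviation is just one possible summary of the dispersion.

```python
import math


def mean_hazard(phis, weights):
    """Weighted mean of the branch exceedance probabilities phi_m = F_m(x0)."""
    if len(phis) != len(weights):
        raise ValueError("one weight is required per branch")
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("branch weights must sum to 1")
    return sum(w * p for p, w in zip(phis, weights))


def epistemic_spread(phis, weights):
    """Weighted standard deviation of phi_m about the mean hazard."""
    mu = mean_hazard(phis, weights)
    variance = sum(w * (p - mu) ** 2 for p, w in zip(phis, weights))
    return math.sqrt(variance)


# Hypothetical four-branch logic tree evaluated at a fixed x0.
branch_phis = [0.02, 0.05, 0.10, 0.20]
branch_weights = [0.1, 0.4, 0.4, 0.1]

phi_bar = mean_hazard(branch_phis, branch_weights)
spread = epistemic_spread(branch_phis, branch_weights)
print(f"mean hazard = {phi_bar:.3f}, epistemic spread = {spread:.3f}")
```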

Epistemic uncertainty is thus described by imposing a subjective probability on the target behavior of Hm, which is an objective exceedance probability, ϕm. This procedure is well established in PSHA practice (5, 53), but it encounters frequentist discomfort with degrees of belief and Bayesian resistance to attaching measures of precision to probabilities. [In Bayesian semantics, the frequency parameters of aleatory variability are usually labeled as “chances” rather than as probabilities. According to Lindley (14), “the distinction becomes useful when you wish to consider your probability of a chance, whereas probability on a probability is, in the philosophy, unsound.” Jaynes (24) also rejects the identification of frequencies with probabilities.] Although these concerns do not matter much in the routine application of risk analysis, where only the mean hazard is considered (32, 54, 55), they must be addressed within the conceptual framework of model testing.

In our framework, testability derives from a null hypothesis, here called the “ontological hypothesis,” which states that the data-generating hazard curve (the “true” hazard) is a sample from the extended experts’ distribution p(ϕ). If an observational test rejects this null hypothesis, then we can claim to have found an ontological error.

Testing PSHA Models

One straightforward test of a PSHA model is to collect data on the exceedance frequency of a specified shaking intensity, x0, during N equivalent trials, each lasting 1 y. If ϕm = Fm(x0) is the 1-y exceedance probability for a particular hazard model Hm, and the data are judged to be unbiased and exchangeable under the experimental conditions—i.e., to have a joint probability distribution invariant with respect to permutations in the data ordering (56–58)—then the likelihood of observing k or more exceedances for each member of the experts’ ensemble is given by the tail of a binomial distribution:

$$P(k \mid \phi_m) = \sum_{j=k}^{N} \binom{N}{j} \, \phi_m^{\,j} \, (1 - \phi_m)^{N-j} \tag{3}$$

Under the extended experts’ distribution, the unconditional probability is the expectation,

$$P(k) = \int_0^1 P(k \mid \phi) \, p(\phi) \, d\phi \tag{4}$$

For notational simplicity, we suppress the dependence of P on N.

There have been only a few published attempts to test PSHA models against ground motion observations (59–62). To our knowledge, all have assumed a test distribution that has been computed from [3] using the mean exceedance probability rather than from the unconditional distribution [4]. This reflects the view shared by many hazard practitioners that the mean hazard is the only hazard needed for decision making (54, 55, 63, 64).

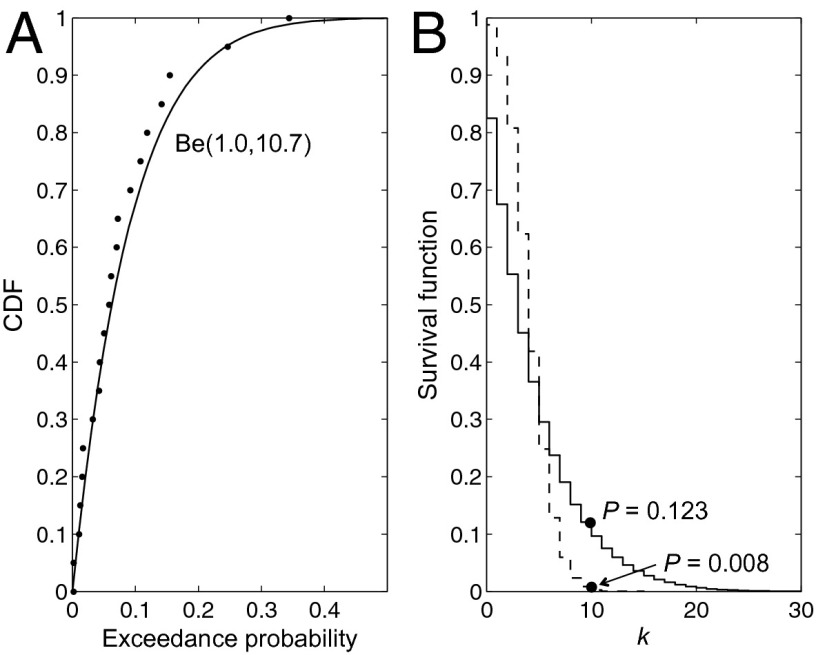

However, a test based on the mean hazard is often overly stringent, as can be seen from a simple example. We consider a PSHA model that comprises an experts’ ensemble of 20 equally weighted hazard curves, each an exponential function of x with its own parameter λ (Fig. 1). The values sampled at x0 (arbitrarily chosen to be 0.29) can be represented by a beta distribution Be(α, β) with parameters α = 1.0 and β = 10.7 (Fig. 2A); the mean of this beta distribution is α/(α + β) ≈ 0.085. Suppose this ground motion threshold is exceeded k = 10 times in n = 50 y; then the P value conditioned on the mean hazard is 0.008, whereas the unconditional value is P(k) = 0.123 (Fig. 2B). Thus, although this observational test rejects the mean hazard at a fairly high (99%) confidence level, it cannot reject the ontological hypothesis, even at a low (90%) level.
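The two P values in this example can be approximately reproduced with a short sketch. It relies on a standard result rather than on anything specific to this paper: when p(ϕ) is a beta density, the integral in Eq. 4 has a closed form, the beta-binomial distribution. The function names are ours, and because the sketch uses the rounded parameters Be(1.0, 10.7) and takes the mean hazard to be the mean of that beta distribution, its output agrees with the quoted values only to within rounding.

```python
import math


def binomial_tail(k, n, phi):
    """P(k or more exceedances in n trials) for a fixed chance phi (Eq. 3)."""
    return sum(math.comb(n, j) * phi**j * (1.0 - phi) ** (n - j)
               for j in range(k, n + 1))


def log_beta(a, b):
    """Logarithm of the beta function B(a, b)."""
    return math.lgamma(a) + math.lgamma(b) - math.lgamma(a + b)


def beta_binomial_tail(k, n, a, b):
    """P(k or more exceedances in n trials) when phi ~ Be(a, b) (Eq. 4)."""
    return sum(math.comb(n, j) * math.exp(log_beta(j + a, n - j + b) - log_beta(a, b))
               for j in range(k, n + 1))


alpha, beta = 1.0, 10.7       # rounded parameters of the extended experts' distribution
n_years, k_observed = 50, 10  # 10 exceedances observed in 50 yearly trials

phi_mean = alpha / (alpha + beta)  # assumption: mean of the fitted beta distribution
p_mean_hazard = binomial_tail(k_observed, n_years, phi_mean)
p_unconditional = beta_binomial_tail(k_observed, n_years, alpha, beta)

print(f"P value conditioned on the mean hazard: {p_mean_hazard:.4f}")
print(f"unconditional (prior predictive) P value: {p_unconditional:.4f}")
```

The conditional value falls below 0.01 and the unconditional value stays above 0.10, which is the contrast drawn in the text.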

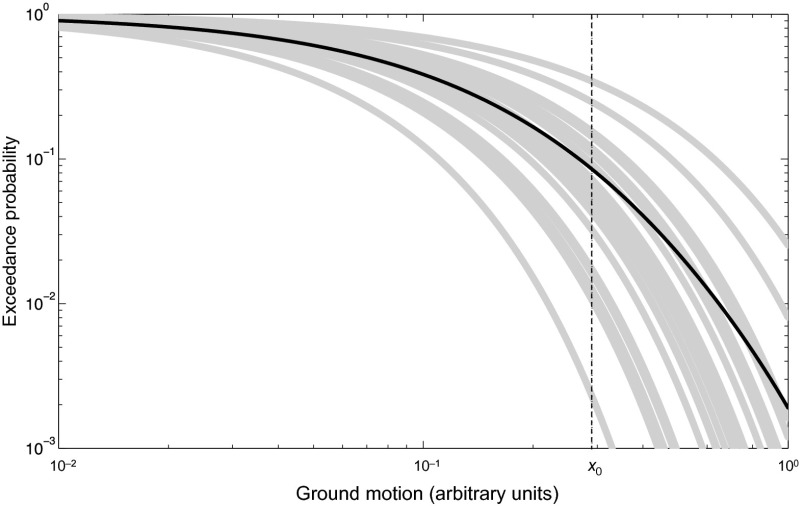

Fig. 1.

A simple PSHA model used in our testing examples. Gray lines show the experts’ ensemble of 20 exponential hazard curves {Fm(x): m = 1, …, 20}. Black line is the corresponding mean hazard curve. The distribution of values sampled at x0 (dashed line) is given in Fig. 2.

Fig. 2.

Results from test 1. (A) Discrete points are the cumulative distribution function (CDF) of the experts’ ensemble {Fm(x0): x0 = 0.29, m = 1, …, 20} from Fig. 1. When assigned equal weights (πm = 1/20), this ensemble can be represented by an extended experts’ distribution Be(1.0, 10.7), shown by the solid line. (B) Dashed line is the survival function (1 – CDF) of k or more exceedances in n = 50 trials conditioned on the mean hazard; solid line is the survival function of the unconditional exceedances, computed from Eq. 4. For the observation k = 10, the P value of the former is 0.008 and that of the latter is 0.123.

Finding an ontological error may not directly indicate what is wrong with the model. The ontological error might indicate that the parameters of the model were badly estimated, or that the basic structure of the model is far from reality. In our example test, a small P value could imply that either the beta distribution of ϕ, which characterizes the epistemic uncertainty, or the exponential distribution of Fm(x), which characterizes the aleatory variability, is wrong (or that both are). A small P value might also indicate that the data-generating process is evolving with time, so the data used for testing do not have the same distribution as the data used to calibrate the model.

Bayesian updating under the ontological hypothesis can improve the parameter estimates and thereby sharpen the extended experts’ distribution, but it cannot discover ontological errors, such as the inadequacy of the exponential distribution or a time dependence of the parameter λ not included in the time-independent model. To do that we must subject our “model of the world,” given here by p(ϕ), to a testing regime guided by an experimental concept that appropriately conditions nature’s aleatory variability.

Primacy of the Experimental Concept in Ontological Testing

The experimental concept in our PSHA example is very simple. We collect a set of yearly data {xn: n = 1, 2, …, N} and construct a binary sequence {en: n = 1, 2, …, N} by assigning en = 1 if xn exceeds a threshold value x0 and en = 0 if it does not. We observe that the sequence sums to k. We judge that the joint probability distribution of {en} is unchanged by any permutation of the indices; the yearly data are thus exchangeable and the sequence is Bernoulli. In the earthquake forecasting problem, we further assert, through a leap of faith, that future years are exchangeable with past years; i.e., the data sequence is exchangeable in Draper et al.’s (57) second sense. The predictive power of the time-independent PSHA model hangs on this assertion.
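As a minimal sketch of this construction, the yearly data can be reduced to the binary sequence and its sum in a few lines. The five yearly values below are hypothetical, and treating each xn as the largest intensity recorded in year n is our assumption.

```python
def exceedance_sequence(yearly_values, x0):
    """Binary sequence e_n: 1 if the yearly value exceeds the threshold x0, else 0."""
    return [1 if x > x0 else 0 for x in yearly_values]


# Hypothetical yearly intensity values (assumed to be yearly maxima).
yearly_values = [0.05, 0.31, 0.12, 0.40, 0.08]
threshold = 0.29

e = exceedance_sequence(yearly_values, threshold)
k = sum(e)  # number of exceedance years
print(e, k)
```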

In general terms, the experimental concept specifies collections of data, observed and not yet observed, that are judged to be exchangeable when conditioned on a set of explanatory variables. Exchangeable events can be modeled as independent and identically distributed random variables with a well-defined frequency of occurrence (65, 66). This event frequency or chance (the limiting value of k/N in our example) represents the aleatory variability of the data-generating process (5, 6). Theoretical considerations and a finite amount of data can only constrain this probability within some epistemic uncertainty, quantified by the extended experts’ distribution.

Exchangeability thus distinguishes the aleatory variability, given by P(k | ϕ), from the epistemic uncertainty, given by p(ϕ). In our PSHA example, the exchangeability judgment links Eqs. 3 and 4 to de Finetti’s (65) representation of a Bernoulli process conditioned on p(ϕ) and thus connects the testing experiment to the frequentist concept of repeatability.

The primacy of the experimental concept can be illustrated by extending our PSHA example. The test in Fig. 2 (test 1) derives from an experimental concept based on a single exchangeability judgment. Now consider a second experimental concept (test 2) that distinguishes exceedance events in years when some observable index A is zero from those in years when A is unity. The data-generating process provides two sequences, {en(0): n = 1, 2, …, N0} when A = 0 and {en(1): n = 1, 2, …, N1} when A = 1. Both are judged to be Bernoulli, and they are observed to sum to k0 and k1, respectively. If A correlates with the frequency and/or magnitude variations in the earthquake rupture process, e.g., if the occurrence of large earthquakes and consequent ground shaking stronger than x0 are more likely when A = 1, then the expected frequency of k1/N1 might be greater than that of k0/N0.

As this example makes clear, it is not the aleatory variability intrinsic to the model that matters in testing, but rather the aleatory variability defined by the exchangeability judgments of the experimental concept. In other words, aleatory variability is an observable behavior of the data-generating process—nature itself—conditioned by the experimental concept to have well-defined frequencies. A model predicting this behavior can thus be tested for ontological error by frequentist (error statistical) methods.

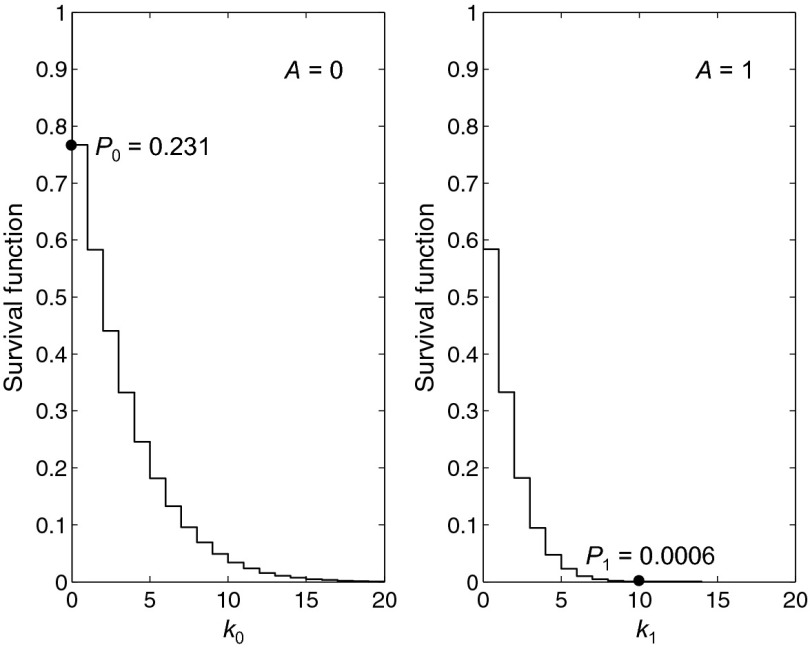

Suppose that, in a 50-y PSHA experiment, there are N0 = 35 y when A = 0 and N1 = 15 y with A = 1. Further suppose that no exceedances of the ground motion threshold are observed in the former set (k0 = 0), and 10 are recorded in the latter (k1 = 10). Test 1 applied to these datasets returns an identical result (k = 10), so the model passes with a prior predictive P value of 0.123, as shown in Fig. 2. However, in test 2, the A = 1 observation is much less likely under the ontological hypothesis (P1 = 0.0006) than the A = 0 observation (P0 = 0.231), and the P value for the combined result is quite small, 0.0012 (Fig. 3). Therefore, the model can be rejected by test 2 with high confidence.

Fig. 3.

Results from test 2. In a 50-y PSHA experiment, no ground motion exceedances are observed in the 35 y when A = 0 (Left), which gives P0 = 0.231, and 10 exceedances are observed in the 15 y with A = 1 (Right), which gives P1 = 0.0006. The P value for the combined result is 0.0012.
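The two group-wise P values of test 2 can be approximated with the following sketch, which splits a yearly record by the index A and evaluates each subsequence against the rounded extended experts’ distribution Be(1.0, 10.7). Two points are assumptions on our part: the synthetic record is simply one arrangement consistent with the stated counts, and P0 is computed as the lower-tail probability of observing no exceedances, which is the reading that reproduces the reported value. The rule used to combine P0 and P1 is not stated in the text, so the sketch does not attempt it.

```python
import math


def log_beta(a, b):
    """Logarithm of the beta function B(a, b)."""
    return math.lgamma(a) + math.lgamma(b) - math.lgamma(a + b)


def beta_binomial_pmf(j, n, a, b):
    """P(exactly j exceedances in n trials) when phi ~ Be(a, b)."""
    return math.comb(n, j) * math.exp(log_beta(j + a, n - j + b) - log_beta(a, b))


alpha, beta = 1.0, 10.7  # rounded parameters of the extended experts' distribution

# Synthetic 50-year record consistent with the counts in the text.
index_a = [0] * 35 + [1] * 15
exceeded = [0] * 35 + [1] * 10 + [0] * 5

# Split the exceedance sequence by the observable index A.
groups = {0: [], 1: []}
for a_value, e_value in zip(index_a, exceeded):
    groups[a_value].append(e_value)

n0, k0 = len(groups[0]), sum(groups[0])
n1, k1 = len(groups[1]), sum(groups[1])

# Assumption: lower tail for the A = 0 years (k0 or fewer exceedances),
# upper tail for the A = 1 years (k1 or more exceedances).
p0 = sum(beta_binomial_pmf(j, n0, alpha, beta) for j in range(0, k0 + 1))
p1 = sum(beta_binomial_pmf(j, n1, alpha, beta) for j in range(k1, n1 + 1))

print(f"A = 0: N0 = {n0}, k0 = {k0}, P0 = {p0:.3f}")
print(f"A = 1: N1 = {n1}, k1 = {k1}, P1 = {p1:.5f}")
```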

From this experiment, we infer that the data-generating process is probably A dependent and therefore time dependent (all years are not exchangeable). Hence, we might seek an alternative model that captures this type of time dependence in its aleatory variability, and we might reelicit expert opinion to characterize the epistemic uncertainty in its (two or more) aleatory frequencies.

In ontological testing, Box’s famous generalization that “all models are wrong, but some are useful” (67) can be restated as “all models are wrong, but some are acceptable under particular experimental concepts.” Qualifying a model under an appropriate set of experimental concepts is the key to model validation, in which we decide if a model replicates the data-generating process well enough to be sufficiently reliable for some useful purpose, such as long-term seismic forecasting. In our example, a model that passes test 1 may be adequate for time-independent forecasting, but, by failing test 2, it should be rejected as a viable time-dependent forecast.

Discussion

For frequentists, a probability is the limiting frequency of a random event or the long-term propensity to produce such a limiting frequency (9, 68); for Bayesians, it is a subjective degree of belief that a random event will occur (14). Advocates on both sides have argued that degrees of belief cannot be measured and, by implication, cannot be rejected (9, 24, 69). In the words of one author (70), “the degree of belief probability is not a property of the event (experiment) but rather of the observer. There exists no uniquely true probability, only one’s true belief.” Within the subjectivist Bayesian framework, one’s true belief can be informed, but not rejected, by experiment.

For us, the use of subjective probability such as expert opinion poses no problems for ontological testability as long as the experimental concept defines sets of exchangeable data with long-run frequencies determined by the data-generating process. These frequencies, which characterize the aleatory variability, have epistemic uncertainty described by the experts’ distribution. Expert opinion is thus regarded as a measurement system that produces a model that can be tested.

To illustrate this point with an example far from PSHA, we recall an experiment reported by Sir Francis Galton in 1907. During a tour of the English countryside, he recorded 787 guesses of the weight of an ox made by a farming crowd and found that the average was correct to a single pound (71). The experts’ distribution he tabulated passes the appropriate Student’s t test (retrospectively, of course, because this test was not invented until 1908).

We note one difference between farmers and PSHA experts. The experts’ distribution measures the epistemic uncertainty at a particular epoch, which may be larger or smaller depending on how the experts are able to sample the appropriate information space. In Galton’s experiment, a farmer looks individually at the ox, reaching his estimate more or less independently of his colleagues. As more farmers participate, they add new data, and the epistemic uncertainty is reduced by averaging their guesses. At a particular epoch, adding more PSHA experts will better determine, but usually not reduce, the epistemic uncertainty, because they rarely make independent observations but work instead from a common body of knowledge, which may be very limited. This and other issues related to the elicitation of expert opinion, such as how individuals should be calibrated and how a consensus should be drawn from groups, have been extensively studied (5, 72, 73).

The ontological tests considered here are conditional on the experimental concept, which may be weak or even wrong. An experimental concept will be incorrect when one or more of its exchangeability judgments violate reality. The constituent assumptions can often be tested independently of the model; e.g., through correlation analysis and other types of data checks (57, 74, 75). In our example, if the frequency estimator k/N increases in the long run (e.g., if the proportion N1/N0 increases), then the event set is not likely to be exchangeable, and the experimental concept of test 1 needs to be rethought to allow for this secular time dependence.
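One simple data check of this kind can be sketched as a permutation test. It is an illustration, not a procedure taken from the cited references. If the yearly indicators are exchangeable, every ordering of the sequence is equally likely, so the number of adjacent exceedance years in the observed sequence should look typical of randomly shuffled copies. The choice of statistic, the function names, the number of shuffles, and the fixed seed are all assumptions made for the example.

```python
import random


def adjacent_exceedances(seq):
    """Number of consecutive-year pairs in which both years are exceedances."""
    return sum(1 for a, b in zip(seq, seq[1:]) if a == 1 and b == 1)


def clustering_p_value(seq, n_shuffles=2000, seed=0):
    """Permutation P value for temporal clustering of exceedances.

    Under exchangeability all orderings are equally likely, so the observed
    statistic is compared with its distribution over random shuffles.
    """
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    observed = adjacent_exceedances(seq)
    shuffled = list(seq)
    at_least_as_extreme = 0
    for _ in range(n_shuffles):
        rng.shuffle(shuffled)
        if adjacent_exceedances(shuffled) >= observed:
            at_least_as_extreme += 1
    return (at_least_as_extreme + 1) / (n_shuffles + 1)


# Hypothetical records: exceedances bunched in time vs. spread evenly.
clustered = [0] * 35 + [1] * 10 + [0] * 5
spread_out = [1, 0, 0, 0, 0] * 10

p_clustered = clustering_p_value(clustered)
p_spread = clustering_p_value(spread_out)
print(f"clustered record: P = {p_clustered:.4f}")
print(f"evenly spread record: P = {p_spread:.4f}")
```

A small P value for the clustered record would suggest that the single exchangeability judgment of test 1 deserves to be revisited, independently of any hazard model.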

Through exchangeability judgments, the experimental concept ensures that aleatory variables have well-defined frequencies, which is just what we need to set up a regime for testing the ontological hypothesis. Bayesian model checking provides us with several options for pure significance testing. The ontological hypothesis can be evaluated using

i) the prior predictive P value, computed directly from Eq. 4 (17);

ii) the posterior predictive P value, computed after the experts’ distribution has been updated according to Bayes; e.g., (18, 76); or

iii) a partial posterior predictive P value, computed for a data subset after the experts’ distribution has been updated using a complementary (calibration) data subset (77).

An ontological error is discovered when the new data are shown to be inconsistent with p(ϕ) or its updated version p′(ϕ); e.g., when a small P value is obtained from the experiment.

Among these options, the posterior predictive checks (ii) and (iii) are most often used by Bayesian objectivists because their priors are often improper and uninformative (20, 77). Moreover, in many types of statistical modeling, the goal is to assess the model’s ability to fit the data a posteriori, not its ability to predict the data a priori. According to Gelman (78), for example, “All models are wrong, and the purpose of model checking (as we see it) is not to reject a model but rather to understand the ways in which it does not fit the data. From a Bayesian point of view, the posterior distribution is what is being used to summarize inferences, so this is what we want to check.” In particular, the power of the test, or more generally its severity (9), is not very important. “If a certain posterior predictive check has zero or low power, this is not a problem for us: it simply represents a dimension of the data that is automatically or virtually automatically fit by the model” (75).

In forecasting, however, we are most interested in a model’s predictive capability; therefore, severe tests based on the prior predictive check (i) are always desirable. This testing regime is entirely prospective; the models are independent of the data used in the test (the testing is blind), and there are no nuisance parameters. The extended experts’ distribution p(ϕ) is subjective, informative, and always proper. “Using the data twice” is not an issue, as it is with the posterior predictive check (ii) (16). If based on the same data, prior predictive tests are always more severe than posterior predictive tests. Continual prospective testing now guides the validation of forecasting models in civil protection and other operational applications (79–82).
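The loss of severity in a posterior predictive check can be illustrated with the test 1 numbers. The sketch assumes the rounded extended experts’ distribution Be(1.0, 10.7), uses the standard conjugate update of a beta distribution by binomial data (the posterior is Be(α + k, β + N − k)), and takes the exceedance count in a replicate 50-y experiment as the test statistic. These are our choices for the illustration, and a single example does not establish the general claim.

```python
import math


def log_beta(a, b):
    """Logarithm of the beta function B(a, b)."""
    return math.lgamma(a) + math.lgamma(b) - math.lgamma(a + b)


def beta_binomial_tail(k, n, a, b):
    """P(k or more exceedances in n trials) when phi ~ Be(a, b)."""
    return sum(math.comb(n, j) * math.exp(log_beta(j + a, n - j + b) - log_beta(a, b))
               for j in range(k, n + 1))


alpha, beta = 1.0, 10.7       # rounded extended experts' distribution
n_years, k_observed = 50, 10  # test 1 observation

# (i) Prior predictive check: the model has not seen the test data.
prior_p = beta_binomial_tail(k_observed, n_years, alpha, beta)

# (ii) Posterior predictive check: the same data first update the
# distribution (conjugate beta-binomial update), then serve as the test.
post_alpha = alpha + k_observed
post_beta = beta + (n_years - k_observed)
posterior_p = beta_binomial_tail(k_observed, n_years, post_alpha, post_beta)

print(f"prior predictive P value:     {prior_p:.3f}")
print(f"posterior predictive P value: {posterior_p:.3f}")
```

Because the update pulls the distribution toward the observed frequency, the same observation looks unremarkable a posteriori, which is the sense in which the data are used twice.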

When the experimental concept is weak, lots of models, even poor predictors, can pass the test. An experimental concept provides a severe test, in Mayo’s (9) sense, if it has a high probability of detecting an ontological error of the type that matters to the model’s forecasting application. Severe tests require informative ensembles of exchangeable observations (although we must admit that these are often lacking in long-term PSHA). In practice, batteries of experimental concepts must be used to specify the aleatory variability of the data-generating process and organize severe testing gauntlets relevant to the problem at hand.

When the problem is forecasting, the most crucial features of an experimental concept are the assertions that past and future events are exchangeable. Scientists make these leaps of faith not blindly, but through careful consideration of the physical principles that govern the system behaviors they seek to predict. Therefore, the experimental concepts used to test models, as much as the models themselves, are the mechanism for encoding our physical understanding into the iterative process of system modeling. Validating our predictions through ontological testing is the primary means by which we establish our understanding of how the world works, and thus an essential aspect of the scientific method (1). It seems to us that the more pessimistic views of system predictability (2, 3) fail to recognize the power of this methodology in separating behaviors that are predictable from those that are not.

This power can be appreciated by considering cases where the exchangeability of past and future events is dubious. A notorious example is the prediction of financial markets. Exchangeability judgments are problematic in these experiments, because the markets learn so rapidly from past experience (83, 84). Without exchangeability, no experimental concept is available to discipline the system variability. Processes governed by physical laws are less contingent and more predictable than these agent-based systems; for example, exchangeability judgments can be guided by the characteristic scales of physical processes, leading to well-configured experimental concepts.

The points made in this paper are basic and without mathematical novelty. In terms of the interplay between Bayesian and frequentist methods, our view aligns well with the statistical unificationists (10, 85). However, it differs in the importance we place on the experimental concept in structuring the uncertainty hierarchy—aleatory, epistemic, ontological—and our emphasis on testing for ontological errors as a key step in the iterative process of forecast validation.

As with many conceptual discussions of statistical methodology, the proof is in the pudding. Does the particular methodology advocated here help to clarify any persistent misunderstandings that have hampered practitioners? We think so, particularly in regard to the widespread confusion about how to separate aleatory variability from epistemic uncertainty. Consider two examples:

- In a review of the SSHAC methodology requested by the US Nuclear Regulatory Commission, a panel of the National Research Council asserted that “the value of an epistemic/aleatory separation to the ultimate user of a PSHA is doubtful… The panel concludes that, unless one accepts that all uncertainty is fundamentally epistemic, the classification of PSHA uncertainty as aleatory or epistemic is ambiguous.” (54). Here the confusion stems from SSHAC’s (5) epistemic/aleatory classification scheme, which is entirely model-based and thus ambiguous.

- In his discussion of “how to cheat at coin and die tossing,” Jaynes (24) describes the ambiguity of randomness in terms of how the tossing is done; e.g., how high a coin is tossed. “The writer has never thought of a biased coin ‘as if it had a physical probability’ because, being a professional physicist, I know that it does not have a physical probability.” His confusion arises because he associates the aleatory frequency with the physical process, which is ambiguous unless we fix the experimental concept.

Both the model-based and physics-based ambiguity in setting up the aleatory/epistemic dichotomy can be removed by specifying an experimental concept. By testing the ontological hypothesis under appropriate experimental concepts, we can answer the important question of whether a model’s predictions conform to our conditional view of nature’s true variability.

Acknowledgments

We thank Donald Gillies for discussions on the philosophy of probability. W.M. was supported by Centro per la Pericolosità Sismica dell'Istituto Nazionale di Geofisica e Vulcanologia and the Real Time Earthquake Risk Reduction (REAKT) Project (Grant 282862) of the European Union’s Seventh Programme for Research, Technological Development and Demonstration. T.H.J. was supported by the Southern California Earthquake Center (SCEC) under National Science Foundation Cooperative Agreement EAR-1033462 and US Geological Survey Cooperative Agreement G108C20038. The SCEC contribution number for this paper is 1953.

Footnotes

The authors declare no conflict of interest.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1410183111/-/DCSupplemental.

References

- 1. American Association for the Advancement of Science. Science for All Americans: A Project 2061 Report on Literacy Goals in Science, Mathematics and Technology. Washington, DC: American Association for the Advancement of Science; 1989.

- 2. Oreskes N, Shrader-Frechette K, Belitz K. Verification, validation, and confirmation of numerical models in the Earth sciences. Science. 1994;263(5147):641–646. doi: 10.1126/science.263.5147.641.

- 3. Pilkey OH, Pilkey-Jarvis L. Useless Arithmetic: Why Environmental Scientists Can’t Predict the Future. New York: Columbia Univ Press; 2007.

- 4. Hacking I. The Emergence of Probability. Cambridge, UK: Cambridge Univ Press; 1975.

- 5. Senior Seismic Hazard Analysis Committee (1997) Recommendations for Probabilistic Seismic Hazard Analysis: Guidance on Uncertainty and Use of Experts (U.S. Nuclear Regulatory Commission, U.S. Dept. of Energy, Electric Power Research Institute), NUREG/CR-6372, UCRL-ID-122160.

- 6. Goldstein M. Observables and models: Exchangeability and the inductive argument. In: Damien P, Dellaportas P, Olson NG, Stephens DA, editors. Bayesian Theory and Its Applications. Oxford, UK: Oxford Univ Press; 2013. pp. 3–18.

- 7. Murphy AH, Winkler RL. A general framework for forecast verification. Mon Weather Rev. 1987;115:1330–1338.

- 8. Schorlemmer D, Gerstenberger MC, Wiemer S, Jackson DD, Rhoades DA. Earthquake likelihood model testing. Seismol Res Lett. 2007;78:17–29.

- 9. Mayo DG. Error and the Growth of Experimental Knowledge. Chicago: Univ of Chicago Press; 1996.

- 10. Gelman A, Shalizi CR. Philosophy and the practice of Bayesian statistics. Br J Math Stat Psychol. 2013;66(1):8–38. doi: 10.1111/j.2044-8317.2011.02037.x.

- 11. Cox DR, Hinkley DV. Theoretical Statistics. London: Chapman & Hall; 1974.

- 12. Musson R. On the nature of logic trees in probabilistic seismic hazard assessment. Earthquake Spectra. 2012;28(3):1291–1296.

- 13. Dequech D. Uncertainty: Individuals, institutions and technology. Camb J Econ. 2004;28(3):365–378.

- 14. Lindley DV. The philosophy of statistics. Statistician. 2000;49(3):293–337.

- 15. Mayo DG, Spanos A. Severe testing as a basic concept in a Neyman-Pearson philosophy of induction. Br J Philos Sci. 2006;57:323–357.

- 16. Bayarri MJ, Berger J. P-values for composite null models. J Am Stat Assoc. 2000;95(452):1127–1142.

- 17. Box GEP. Sampling and Bayes inference in scientific modelling and robustness. J R Stat Soc Ser A. 1980;143(4):383–430.

- 18. Gelman A, Meng XL, Stern HS. Posterior predictive assessment of model fitness via realized discrepancies (with discussion). Stat Sin. 1996;6:733–807.

- 19. Sellke T, Bayarri MJ, Berger JO. Calibration of p values for testing precise null hypotheses. Am Stat. 2001;55(1):62–71.

- 20. Gelman A, Carlin JB, Stern HS, Rubin DB. Bayesian Data Analysis. 2nd Ed. London: Chapman & Hall/CRC; 2003.

- 21. Mayo D. Statistical science and philosophy of science: Where do/should they meet in 2011 (and beyond)? Rationality, Markets and Morals. 2011;2:79–102.

- 22. Morey RD, Romeijn J-W, Rouder JN. The humble Bayesian: Model checking from a fully Bayesian perspective. Br J Math Stat Psychol. 2013;66(1):68–75. doi: 10.1111/j.2044-8317.2012.02067.x.

- 23. Jaffe AH. A polemic on probability. Science. 2003;301(5638):1329–1330.

- 24. Jaynes ET. Probability Theory: The Logic of Science. New York: Cambridge Univ Press; 2003.

- 25. Cornell CA. Engineering seismic risk analysis. Bull Seismol Soc Am. 1968;58(5):1583–1606.

- 26. McGuire RK. Seismic Hazard and Risk Analysis. Oakland, CA: Earthquake Engineering Research Institute; 2004.

- 27. Working Group on California Earthquake Probabilities. Seismic hazards in southern California: Probable earthquakes, 1994-2024. Bull Seismol Soc Am. 1995;85(2):379–439.

- 28. Headquarters for Earthquake Research Promotion (2005) National Seismic Hazard Maps for Japan (Report issued on 23 March 2005, available in English translation at http://www.jishin.go.jp/main/index-e.html)

- 29. Frankel AD, et al. 1996. National Seismic Hazard Maps; Documentation June 1996. (US Geological Survey Open-file Report 1996-532)

- 30. MPS Working Group (2004) Redazione della mappa di pericolosita sismica prevista dall’Ordinanza PCM del 20 marzo 2003, rapporto conclusivo per il Dipartimento della Protezione Civile (Istituto Nazionale di Geofisica e Vulcanologia, Milano-Roma, http://zonesismiche.mi.ingv.it)

- 31. Petersen MD, et al. 2008. Documentation for the 2008 Update of the United States National Seismic Hazard Maps. (US Geological Survey Open-file Report 2008-1128)

- 32. Bedford T, Cooke R. Probabilistic Risk Analysis: Foundations and Methods. Cambridge: Cambridge Univ Press; 2001.

- 33. Baker JW, Cornell CA. Uncertainty propagation in probabilistic seismic loss estimation. Struct Saf. 2008;30:236–252.

- 34. Stein S, Geller R, Liu M. Bad assumptions or bad luck: Why earthquake hazard maps need objective testing. Seismol Res Lett. 2011;82(5):623–626.

- 35. Stein S, Geller R, Liu M. Why earthquake hazard maps often fail and what to do about it. Tectonophysics. 2012;562-563:1–25.

- 36. Stirling M. Earthquake hazard maps and objective testing: The hazard mapper’s point of view. Seismol Res Lett. 2012;83(2):231–232.

- 37. Hanks TC, Beroza GC, Toda S. Have recent earthquakes exposed flaws in or misunderstandings of probabilistic seismic hazard analysis? Seismol Res Lett. 2012;83(5):759–764.

- 38. Wyss MA, Nekrasova A, Kossobokov V. Errors in expected human losses due to incorrect seismic hazard estimates. Nat Hazards. 2012;62(3):927–935.

- 39. Frankel A. Comment on “Why earthquake hazard maps often fail and what to do about it” by S. Stein, R. Geller, and M. Liu. Tectonophysics. 2013;592:200–206.

- 40. Savage JC. Criticism of some forecasts of the National Earthquake Prediction Evaluation Council. Bull Seismol Soc Am. 1991;81(3):862–881.

- 41. Field EH, et al. Uniform California Earthquake Rupture Forecast, Version 2 (UCERF 2). Bull Seismol Soc Am. 2009;99(4):2053–2107.

- 42. Geller RJ. Shake-up time for Japanese seismology. Nature. 2011;472(7344):407–409. doi: 10.1038/nature10105.

- 43. Krinitzsky EL. Problems with logic trees in earthquake hazard evaluation. Eng Geol. 1995;39:1–3.

- 44. Castaños H, Lomnitz C. PSHA: Is it science? Eng Geol. 2002;66(3-4):315–317.

- 45. Klügel J-U. Probabilistic seismic hazard analysis for nuclear power plants – Current practice from a European perspective. Nucl Eng Technol. 2009;41(10):1243–1254.

- 46. Cox LA Jr. Confronting deep uncertainties in risk analysis. Risk Anal. 2012;32(10):1607–1629. doi: 10.1111/j.1539-6924.2012.01792.x.

- 47. Marzocchi W, Zechar JD, Jordan TH. Bayesian forecast evaluation and ensemble earthquake forecasting. Bull Seismol Soc Am. 2012;102(6):2574–2584.

- 48. Kulkarni RB, Youngs RR, Coppersmith KJ. 1984. Assessment of confidence intervals for results of seismic hazard analysis. Proceedings of the Eighth World Conference on Earthquake Engineering, vol 1, 263-270, International Association for Earthquake Engineering, San Francisco.

- 49. Runge AK, Scherbaum F, Curtis A, Riggelsen C. An interactive tool for the elicitation of subjective probabilities in probabilistic seismic-hazard analysis. Bull Seismol Soc Am. 2013;103(5):2862–2874.

- 50. Bommer JJ, Scherbaum F. The use and misuse of logic trees in probabilistic seismic hazard analysis. Earthquake Spectra. 2008;24(4):997–1009.

- 51. Hoeting JA, Madigan D, Raftery AE, Volinsky CT. Bayesian model averaging: A tutorial. Stat Sci. 1999;14(4):382–417.

- 52. Scherbaum F, Kuehn NM. Logic tree branch weights and probabilities: Summing up to one is not enough. Earthquake Spectra. 2011;27(4):1237–1251.

- 53. Working Group on California Earthquake Probabilities (2003) Earthquake Probabilities in the San Francisco Bay Region: 2002–2031 (USGS Open-File Report 2003-214)

- 54. National Research Council Panel on Seismic Hazard Evaluation. Review of Recommendations for Probabilistic Seismic Hazard Analysis: Guidance on Uncertainty and Use of Experts. Washington, DC: National Academy of Sciences; 1997.

- 55. Musson RMW. Against fractiles. Earthquake Spectra. 2005;21(3):887–891.

- 56. Lindley DV, Novick MR. The role of exchangeability in inference. Ann Stat. 1981;9(1):45–58.

- 57. Draper D, Hodges JS, Mallows CL, Pregibon D. Exchangeability and data analysis. J R Stat Soc Ser A Stat Soc. 1993;156(1):9–37.

- 58. Bernardo JM. The concept of exchangeability and its applications. Far East J Math Sci. 1996;4:111–122.

- 59. McGuire RK, Barnhard TP. Effects of temporal variations in seismicity on seismic hazard. Bull Seismol Soc Am. 1981;71(1):321–334.

- 60. Stirling M, Petersen M. Comparison of the historical record of earthquake hazard with seismic hazard models for New Zealand and the continental United States. Bull Seismol Soc Am. 2006;96(6):1978–1994.

- 61. Albarello D, D'Amico V. Testing probabilistic seismic hazard estimates by comparison with observations: An example in Italy. Geophys J Int. 2008;175:1088–1094.

- 62. Stirling M, Gerstenberger M. Ground motion-based testing of seismic hazard models in New Zealand. Bull Seismol Soc Am. 2010;100(4):1407–1414.

- 63. McGuire RK, Cornell CA, Toro GR. The case for using mean seismic hazard. Earthquake Spectra. 2005;21(3):879–886.

- 64. Cox LA, Brown GC, Pollock SM. When is uncertainty about uncertainty worth characterizing? Interfaces. 2008;38(6):465–468.

- 65. de Finetti B. Theory of Probability: A Critical Introductory Treatment. London: John Wiley and Sons; 1974.

- 66. Feller W. An Introduction to Probability Theory and its Applications. 3rd Ed. Vol II. New York: John Wiley and Sons; 1966.

- 67. Box GEP. Science and statistics. J Am Stat Assoc. 1976;71(356):791–799.

- 68. Popper KR. Realism and the Aim of Science. London: Hutchinson; 1983.

- 69. de Finetti B. Probabilism. Erkenntnis. 1989;31:169–223.

- 70. Vick SG. Degrees of Belief: Subjective Probability and Engineering Judgment. Reston, VA: ASCE Press; 2002.

- 71. Galton F. Vox populi. Nature. 1907;75(1949):450–451.

- 72. Cooke RM. Experts in Uncertainty: Opinion and Subjective Probability in Science. New York: Oxford Univ Press; 1991.

- 73. U.S. Environmental Protection Agency (2011) Expert Elicitation Task Force White Paper (Science and Technology Policy Council, U.S. Environmental Protection Agency, Washington, DC)

- 74. O’Neill B. Exchangeability, correlation, and Bayes’ effect. Int Stat Rev. 2009;77(2):241–250.

- 75. Gelman A. Induction and deduction in Bayesian data analysis. Rationality, Markets and Morals. 2011;2:67–78.

- 76. Rubin DB. Bayesian justifiable and relevant frequency calculations for the applied statistician. Ann Stat. 1984;12(4):1151–1172.

- 77. Bayarri MJ, Castellanos ME. Bayesian checking of the second levels of hierarchical models. Stat Sci. 2007;22(3):322–343.

- 78. Gelman A. Comment: Bayesian checking of the second levels of hierarchical models. Stat Sci. 2007;22(3):349–352.

- 79. Jordan TH, et al. Operational earthquake forecasting: State of knowledge and guidelines for implementation. Ann Geophys. 2011;54(4):315–391.

- 80. Inness P, Dorling S. Operational Weather Forecasting. New York: John Wiley and Sons; 2013.

- 81. Marzocchi W, Lombardi AM, Casarotti E. 2014. The establishment of an operational earthquake forecasting system in Italy. Seismol Res Lett, in press.

- 82. Zechar JD, et al. The Collaboratory for the Study of Earthquake Predictability perspectives on computational earthquake science. Concurr Comput. 2010;22:1836–1847.

- 83. Davidson P. Reality and economic theory. J Post Keynes Econ. 1996;18(4):479–508.

- 84. Silver N. The Signal and the Noise: Why So Many Predictions Fail But Some Don’t. New York: Penguin Press; 2012.

- 85. Bayarri MJ, Berger JO (2013) Hypothesis testing and model uncertainty. Bayesian Theory and Its Applications, ed Damien P, Dellaportas P, Olson NG, and Stephens DA (Oxford Univ Press, Oxford, UK), pp. 361–194.
