Abstract

Purpose

To assess the relationship between healthcare system performance on nationally endorsed prostate cancer quality of care measures and prostate cancer treatment outcomes.

Methods

This is a retrospective cohort study including 48,050 men from Surveillance Epidemiology and End Results – Medicare linked data who were diagnosed with localized prostate cancer between 2004 and 2009 and followed through 2010. Based on a composite quality measure, we categorized the healthcare systems in which these men were treated into 1-star (bottom 20%), 2-star (middle 60%), and 3-star (top 20%) systems. We then examined the association of healthcare system-level quality of care with outcomes using multivariable logistic and Cox regression.

Results

Patients who underwent prostatectomy in 3-star versus 1-star healthcare systems had a lower risk of perioperative complications (odds ratio 0.80, 95% confidence interval [CI] 0.64–1.00). However, these patients were more likely to undergo a procedure addressing treatment-related morbidity (e.g., 11.3% vs. 7.8% treated for sexual morbidity, p=0.043). Among patients undergoing radiotherapy, star-ranking was not associated with treatment-related morbidity. Among all patients, star-ranking was not significantly associated with all-cause mortality (Hazard Ratio [HR] 0.99, 95% CI 0.84–1.15) or secondary cancer therapy (HR 1.04, 95% CI 0.91–1.20).

Conclusion

We found no consistent associations between healthcare system quality and outcomes, which calls into question how meaningful these measures ultimately are for patients. Thus, future studies should focus on the development of more discriminative quality measures.

Keywords: prostate cancer, quality of care, outcomes

Introduction

The majority of the more than 240,000 men newly diagnosed with prostate cancer each year undergo treatment with either prostatectomy or radiotherapy.1,2 However, outcomes of these treatments vary widely, depending on where the patient is treated. For instance, the likelihood of complications and morbidity is higher for men treated at low-volume healthcare systems,3 implying that some healthcare systems have better outcomes than others.

To better assess the quality of prostate cancer care, a group at RAND codified a comprehensive list of performance measures in 2000.4,5 The Physician Consortium for Performance Improvement built upon this work and developed several clinically relevant quality measures that are grounded in a robust evidence base.6 Subsequently, several of these measures have been endorsed by the National Quality Forum and have been incorporated into the Centers for Medicare and Medicaid Services’ Physician Quality Reporting System.7,8 Their implementation notwithstanding, the extent to which performance on these measures is associated with better outcomes of treatment has remained unclear. Because they are generally thought to be reflective of quality prostate cancer care, it is possible that healthcare systems performing well on these measures will have better outcomes. However, due to their narrow scope and disconnect from what directly affects the sequelae of treatment, these measures may not be tightly linked with outcomes.

For these reasons, we assessed the extent to which adherence to these established performance measures is associated with outcomes of treatment, including perioperative complications and length of stay after surgery, treatment-related morbidity, the use of secondary cancer therapy and all-cause mortality.

Methods

Study population

We used Surveillance, Epidemiology, and End Results (SEER) – Medicare data for the years 2004 through 2009 to identify patients with newly diagnosed localized prostate cancer as their only cancer. We included subjects 66 years of age and older in the fee-for-service program eligible for Parts A and B of Medicare for at least 12 months before and after prostate cancer diagnosis. We limited the study to patients treated with radical prostatectomy or radiotherapy because the endorsed quality measures only apply to these patients.6,7 Finally, we excluded patients who were assigned to a healthcare system that was not within the SEER 18 regions. Using these criteria, our study population consisted of 48,050 patients who were followed through December 31, 2010.

Identifying healthcare systems

Previous empirical work has demonstrated that physicians and beneficiaries form naturally occurring groups centered around hospitals.9,10 These groups are well-suited units for quality measurement, because they represent definable targets for quality improvement.10 They also form the basis for the assignment of Medicare beneficiaries to Accountable Care Organizations.9 Thus, based on the methodology used to assign Medicare beneficiaries to Accountable Care Organizations,10 we assigned prostate cancer patients to the healthcare system in which they received most or all of their prostate cancer care.

Measuring quality of care

As previously described,11 we used five nationally endorsed measures to ensure a broad view of quality of care:5,6 (1) the proportion of patients seen by both a urologist and a radiation oncologist between diagnosis and treatment, (2) the proportion of patients with low-risk12 cancer avoiding receipt of a non-indicated bone scan, (3) the proportion of patients with high-risk12 cancer receiving adjuvant androgen deprivation therapy (ADT) while undergoing radiotherapy, (4) the proportion of patients treated by a high volume (upper tertile) provider (surgeon or radiation oncologist), and (5) the proportion of patients having at least two follow-up visits within one year after treatment with a treating surgeon or radiation oncologist.

To obtain a global assessment of the quality of prostate cancer care provided for each patient, we developed a composite patient-average measure of quality. For this measure, the denominator for each patient was the number of measures for which the patient was eligible, and the numerator was the number of measures that were successfully met.13 Because different approaches have been described for developing composite measures, we performed sensitivity analyses using an all-or-none approach in addition to the patient-average.13 The results of these sensitivity analyses were not materially different from the main analyses, so only those related to the patient-average are presented.
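The two composite definitions described above can be illustrated with a minimal sketch. The function name, the measure keys, and the example patient are hypothetical and chosen only for illustration; they are not taken from the study's code.

```python
from typing import Mapping, Optional


def composite_scores(measures: Mapping[str, Optional[bool]]) -> dict:
    """Return patient-average and all-or-none composites for one patient.

    `measures` maps a measure name to True (met), False (not met),
    or None (patient not eligible for that measure).
    """
    # Only measures the patient is eligible for enter the denominator.
    eligible = [met for met in measures.values() if met is not None]
    if not eligible:
        # No eligible measures: the composite is undefined for this patient.
        return {"patient_average": None, "all_or_none": None}
    met_count = sum(eligible)
    return {
        # Numerator: measures met; denominator: measures eligible.
        "patient_average": met_count / len(eligible),
        # All-or-none: credit only if every eligible measure was met.
        "all_or_none": 1.0 if met_count == len(eligible) else 0.0,
    }


# Hypothetical patient: eligible for four measures, three of them met.
example = composite_scores({
    "seen_by_both_specialists": True,
    "avoided_bone_scan": None,      # not eligible (not low-risk)
    "adjuvant_adt": True,
    "high_volume_provider": False,
    "two_followup_visits": True,
})
```

For this hypothetical patient the patient-average is 3/4, while the all-or-none composite is 0 because one eligible measure was missed.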

We fit multilevel models to examine performance on the composite quality measure across healthcare systems. Our outcome was the patient-average composite quality measure described above. These models allowed us to account for the nested structure of our data (i.e., patients nested within healthcare systems) by introducing a healthcare system-level random effect. We used empirical Bayes prediction to calculate the mean performance on the composite measure for each healthcare system, adjusting for patient (age in years, race, year of diagnosis, D’Amico risk group,12 comorbidity,14 socioeconomic status,15 and urban vs. rural residence) and regional characteristics (number of hospital beds, number of urologists, and number of radiation oncologists per 100,000 men aged 65 and older; Medicare managed care penetration; all obtained from the “Area Resource File”). This approach also accounted for differences in reliability of individual healthcare system performance estimates resulting from differences in sample size.16
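The way empirical Bayes prediction accounts for reliability can be sketched for the simplest case, a random-intercept model without covariates. This is a simplification of the adjusted models described above, and the variance components and system values below are made-up illustrative numbers, not estimates from the study.

```python
def shrunken_mean(system_mean, n_patients, overall_mean,
                  between_var, within_var):
    """Empirical Bayes (shrinkage) estimate for one healthcare system.

    Simplified random-intercept case without covariate adjustment.
    """
    # Reliability: share of the observed variation in a system's mean
    # that reflects true between-system differences rather than noise.
    reliability = between_var / (between_var + within_var / n_patients)
    # Small systems (low reliability) are pulled toward the overall mean.
    return overall_mean + reliability * (system_mean - overall_mean)


# Two hypothetical systems with the same observed mean but different sizes.
small = shrunken_mean(0.80, n_patients=5, overall_mean=0.60,
                      between_var=0.01, within_var=0.05)
large = shrunken_mean(0.80, n_patients=500, overall_mean=0.60,
                      between_var=0.01, within_var=0.05)
```

Under these assumed inputs, the estimate for the 5-patient system moves halfway toward the overall mean, whereas the estimate for the 500-patient system stays close to its observed value.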

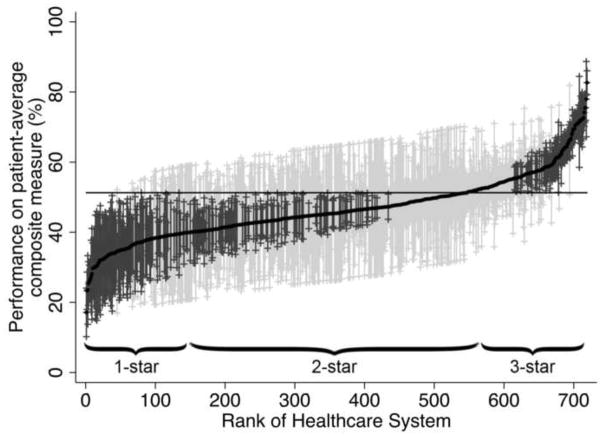

Next, we ranked healthcare systems from lowest to highest based on their adjusted mean performance on the composite measure (Figure 1). Across these systems, we observed significant variation in performance ranging from 19% to 84% (intraclass correlation 0.16). Building on previous work, we then categorized healthcare systems into 1-star, 2-star, and 3-star groups, encompassing the “worst” (bottom 20% of systems), the middle 60%, and the “best” (top 20% of systems),17 which served as our exposure.
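The categorization step can be sketched as follows. The handling of ties and of system counts that are not divisible by five is an assumption made for this illustration, and the system identifiers and adjusted means are hypothetical.

```python
def assign_stars(adjusted_means):
    """Map each system id to 1, 2, or 3 stars by rank of its adjusted mean.

    Bottom 20% of systems -> 1 star, middle 60% -> 2 stars,
    top 20% -> 3 stars. Group sizes are rounded down (an assumption).
    """
    ranked = sorted(adjusted_means, key=adjusted_means.get)  # lowest first
    n = len(ranked)
    group_size = n // 5  # number of systems in each 20% tail
    stars = {}
    for position, system_id in enumerate(ranked):
        if position < group_size:
            stars[system_id] = 1
        elif position >= n - group_size:
            stars[system_id] = 3
        else:
            stars[system_id] = 2
    return stars


# Ten hypothetical systems with increasing adjusted performance.
systems = {f"system_{i}": 0.20 + 0.05 * i for i in range(10)}
stars = assign_stars(systems)
```

With ten hypothetical systems, the two lowest-ranked receive 1 star, the two highest-ranked receive 3 stars, and the remaining six receive 2 stars.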

Figure 1.

Variation in performance on the patient-average composite measure across the 719 healthcare systems. The solid black line represents the adjusted performance rate for each healthcare system. The horizontal line represents the adjusted overall mean rate of performance. Error bars represent 95% confidence intervals for the rates of the individual healthcare systems. Black error bars represent rates that are statistically significantly different from the overall mean. Grey error bars represent rates that are not significantly different from the overall mean.

Prostate cancer outcomes

Using well-defined algorithms, we evaluated outcomes for all patients undergoing radical prostatectomy or radiotherapy (Appendix Table),18,19 including perioperative complications and length of stay after surgery, treatment-related morbidity (urinary, sexual, and gastrointestinal), the use of secondary cancer therapy, and all-cause mortality. We defined secondary cancer therapy as (1) use of radiotherapy or hormonal therapy after radical prostatectomy,18 or (2) use of ADT more than 6 months after completion of radiotherapy for patients who did not receive concurrent ADT while undergoing radiation, or (3) use of ADT more than 36 months after completion of radiotherapy for patients who did receive concurrent ADT.19 To measure all-cause mortality, we used the Medicare date of death.
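The three-part definition of secondary cancer therapy can be written as a small decision rule. This is a sketch only: the function and parameter names are hypothetical, the claims-based identification of each treatment is not shown, and treating the 6- and 36-month thresholds as strict inequalities is an assumption.

```python
def had_secondary_therapy(primary_treatment, concurrent_adt=False,
                          later_rt_or_hormonal=False,
                          months_from_rt_end_to_adt=None):
    """Apply the secondary cancer therapy definition to one patient.

    primary_treatment: "prostatectomy" or "radiotherapy".
    later_rt_or_hormonal: radiotherapy or hormonal therapy after surgery.
    months_from_rt_end_to_adt: months between completion of radiotherapy
        and the first later ADT claim, or None if there was none.
    """
    if primary_treatment == "prostatectomy":
        # (1) Any radiotherapy or hormonal therapy after prostatectomy.
        return later_rt_or_hormonal
    if primary_treatment == "radiotherapy":
        if months_from_rt_end_to_adt is None:
            return False  # no ADT after completion of radiotherapy
        # (2) 6-month window without concurrent ADT,
        # (3) 36-month window with concurrent ADT.
        threshold = 36 if concurrent_adt else 6
        return months_from_rt_end_to_adt > threshold
    raise ValueError(f"unknown primary treatment: {primary_treatment!r}")
```

For example, ADT started 12 months after radiotherapy counts as secondary therapy for a patient without concurrent ADT, but not for a patient who received concurrent ADT.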

Statistical analyses

We first assessed for differences in patient and regional characteristics across our healthcare system quality exposure using chi-squared tests. Next, we measured associations between healthcare system star-ranking and the risk of perioperative complications using logistic regression models. With the patient serving as our unit of analysis, the star-ranking of each healthcare system was entered as a categorical variable and Huber/White sandwich estimators of variance were used to account for the correlation of observed outcomes within healthcare systems. Length of stay was log-transformed and then modeled with a linear regression model. All models were adjusted for the patient and regional characteristics described in Tables 1 and 2. From these models, we then calculated the adjusted risk for perioperative complications and adjusted length of stay.
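How model output on the log scale maps back to the reported quantities can be sketched as follows. The coefficient and standard error inputs are hypothetical, and the exact back-transformation used for length of stay is not described in the text; simple exponentiation, shown here, yields a geometric rather than an arithmetic mean.

```python
import math


def odds_ratio_with_ci(beta, robust_se, z=1.96):
    """Convert a logistic regression coefficient and its (robust)
    standard error into an odds ratio with a 95% confidence interval."""
    return (math.exp(beta),
            math.exp(beta - z * robust_se),
            math.exp(beta + z * robust_se))


def back_transform_log_days(mean_log_days):
    """Return days from a predicted mean of log(length of stay).

    Assumption: plain exponentiation, i.e. a geometric mean in days.
    """
    return math.exp(mean_log_days)


# Hypothetical inputs, for illustration only.
odds_ratio, lower, upper = odds_ratio_with_ci(beta=0.0, robust_se=0.1)
days = back_transform_log_days(math.log(2.0))
```

The sandwich variance estimator affects only the standard error passed in; the point estimate of the odds ratio is unchanged by the clustering adjustment.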

Table 1.

Patient characteristics, overall and according to quality ranking of healthcare system. IMRT: intensity-modulated radiotherapy; EBRT: external beam radiotherapy

| Characteristic | N | 1-star (column %) | 2-star (column %) | 3-star (column %) | p |
|---|---|---|---|---|---|
| Overall number of patients | 48,050 | 5,125 | 23,657 | 19,268 | |
| Age | | | | | <0.001* |
| 66–69 | 16,278 | 33.2 | 35.0 | 32.7 | |
| 70–74 | 17,540 | 37.5 | 36.5 | 36.3 | |
| 75–79 | 10,361 | 21.4 | 21.0 | 22.3 | |
| 80–84 | 3,315 | 6.8 | 6.5 | 7.4 | |
| 85+ | 556 | 1.0 | 1.1 | 1.3 | |
| Race | | | | | <0.001** |
| White | 39,915 | 78.7 | 83.1 | 84.2 | |
| Black | 4,687 | 13.9 | 9.2 | 9.3 | |
| Hispanic | 841 | 2.8 | 1.9 | 1.3 | |
| Asian | 1,211 | 2.2 | 2.8 | 2.3 | |
| Other/Unknown | 1,396 | 2.4 | 2.9 | 3.0 | |
| Comorbidity | | | | | 0.015* |
| 0 | 31,703 | 64.1 | 66.1 | 66.4 | |
| 1 | 10,856 | 23.5 | 22.7 | 22.2 | |
| 2 | 3,433 | 7.5 | 7.1 | 7.2 | |
| 3+ | 2,058 | 4.9 | 4.2 | 4.2 | |
| Clinical stage | | | | | <0.001* |
| T1 | 27,559 | 57.8 | 56.3 | 58.8 | |
| T2 | 19,135 | 39.1 | 40.8 | 39.0 | |
| T3 | 1,164 | 2.8 | 2.6 | 2.1 | |
| T4 | 123 | 0.3 | 0.3 | 0.2 | |
| Gleason Grade | | | | | <0.001* |
| ≤6 | 19,668 | 41.1 | 40.3 | 43.0 | |
| 7 | 20,104 | 41.7 | 43.1 | 41.6 | |
| ≥8 | 7,685 | 17.2 | 16.6 | 15.4 | |
| Prostate Specific Antigen | | | | | <0.001* |
| low (≤10 ng/ml) | 32,897 | 70.7 | 76.3 | 77.3 | |
| intermediate | 6,917 | 18.5 | 16.0 | 15.3 | |
| high (>20 ng/ml) | 3,419 | 10.8 | 7.7 | 7.4 | |
| D’Amico risk12 | | | | | <0.001* |
| Low | 13,217 | 28.8 | 30.1 | 32.2 | |
| Intermediate | 16,969 | 38.6 | 39.8 | 39.5 | |
| High | 12,715 | 32.6 | 30.1 | 28.3 | |
| Year of diagnosis | | | | | 0.004* |
| 2004 | 8,223 | 18.2 | 17.6 | 16.2 | |
| 2005 | 7,850 | 15.9 | 16.4 | 16.4 | |
| 2006 | 8,441 | 17.1 | 17.7 | 17.6 | |
| 2007 | 8,717 | 18.2 | 17.9 | 18.5 | |
| 2008 | 7,613 | 16.0 | 15.5 | 16.2 | |
| 2009 | 7,206 | 14.6 | 15.0 | 15.1 | |
| 25% or more of adults in census tract with a college education | 23,022 | 38.7 | 47.5 | 51.1 | <0.001* |
| Median annual household income of census tract | | | | | <0.001* |
| Low (≤$40,874) | 15,976 | 45.5 | 33.9 | 29.4 | |
| Intermediate | 15,985 | 30.4 | 34.3 | 32.9 | |
| High (≥$60,105) | 15,975 | 24.1 | 31.8 | 37.7 | |
| Residing in urban area | 43,594 | 85.2 | 90.7 | 92.2 | <0.001* |
| Treatment received | | | | | <0.001** |
| Open prostatectomy | 7,151 | 20.7 | 18.7 | 9.1 | |
| Robotic prostatectomy | 5,512 | 6.6 | 12.9 | 11.4 | |
| Brachytherapy | 16,375 | 20.7 | 26.2 | 48.2 | |
| IMRT | 15,869 | 41.3 | 36.4 | 27.7 | |
| EBRT | 2,578 | 10.7 | 5.8 | 3.5 | |

*Mantel-Haenszel chi-squared test; **Chi-squared test

Table 2.

Regional characteristics, overall and according to quality ranking of healthcare system.

| Characteristic | N | 1-star (column %) | 2-star (column %) | 3-star (column %) | p |
|---|---|---|---|---|---|
| Number of urologists per 100,000 men 65+ | | | | | <0.001* |
| Low (≤53) | 15,707 | 32.7 | 33.3 | 32.0 | |
| Intermediate | 14,338 | 27.4 | 31.9 | 28.0 | |
| High (≥87) | 17,992 | 39.9 | 34.9 | 40.0 | |
| Number of radiation oncologists per 100,000 men 65+ | | | | | 0.786* |
| Low (≤22) | 15,796 | 32.9 | 33.0 | 32.8 | |
| Intermediate | 15,361 | 28.9 | 33.2 | 31.3 | |
| High (≥37) | 16,880 | 38.2 | 33.8 | 36.0 | |
| Number of hospital beds per 100,000 men 65+ | | | | | 0.004* |
| Low (≤4,750) | 14,996 | 32.4 | 33.0 | 28.7 | |
| Intermediate | 16,192 | 25.8 | 31.1 | 38.9 | |
| High (≥6,854) | 16,849 | 41.8 | 35.9 | 32.3 | |
| Medicare managed care penetration | | | | | <0.001* |
| Low (≤4.9%) | 15,981 | 42.3 | 29.8 | 35.2 | |
| Intermediate | 15,948 | 25.7 | 33.7 | 34.6 | |
| High (≥16.2%) | 16,108 | 32.0 | 36.6 | 30.2 | |

*Mantel-Haenszel chi-squared test

We then measured associations between healthcare system star-ranking and the time-dependent outcomes (treatment-related morbidity, secondary cancer therapy, all-cause mortality) using similar Cox proportional hazards models. These models were then back-transformed to derive the 5-year adjusted probability of each outcome. Because the time to treatment-related morbidity, secondary cancer therapy, and all-cause mortality may differ by treatment type, separate models were fitted according to primary treatment received (prostatectomy or radiotherapy without or with concurrent ADT). Each of these models was further stratified by type of prostatectomy (open or minimally invasive) or type of radiotherapy (brachytherapy, external beam, or intensity-modulated).20 Because the risk for secondary cancer therapy differs by prostate cancer risk classification, we additionally fitted separate models including patients with D’Amico low-risk or high-risk cancer only.12
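The back-transformation from a Cox model to an adjusted probability follows from the standard relationship between the baseline survival function and the linear predictor. The sketch below shows that relationship for a single covariate pattern; the numeric inputs are hypothetical, and how covariates were set or averaged to obtain the adjusted 5-year probabilities is not specified in the text.

```python
import math


def adjusted_probability(baseline_survival, linear_predictor):
    """Probability of the event by time t under a Cox model.

    Uses S(t | x) = S0(t) ** exp(x'beta), so the event probability
    is 1 - S(t | x). `baseline_survival` is S0(t) at the chosen
    time point (e.g. 5 years).
    """
    return 1.0 - baseline_survival ** math.exp(linear_predictor)


# Hypothetical values: baseline 5-year survival of 0.90, and a
# covariate pattern with hazard ratio 2 relative to baseline.
p_reference = adjusted_probability(0.90, 0.0)
p_doubled_hazard = adjusted_probability(0.90, math.log(2.0))
```

With these assumed inputs, doubling the hazard raises the 5-year event probability from 10% to 19%, which illustrates that hazard ratios and adjusted probabilities are not on the same scale.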

We performed all analyses using Stata (Version 12MP) and SAS (Version 9.3) and considered p≤0.05 as statistically significant. The University of Michigan Medical School Institutional Review Board exempted this study from review.

Results

Most patients were less than 75 years old, white, and had tumors that were not clinically apparent and diagnosed at a prostate-specific antigen level of 10 ng/ml or less. While we observed numerous statistically significant differences across healthcare systems with respect to patient (Table 1) and regional (Table 2) characteristics, only a few were likely of clinical significance. Notably, patients who were treated in 3-star healthcare systems were older, had lower risk cancers, higher levels of education, greater incomes and were more likely to be treated with brachytherapy.

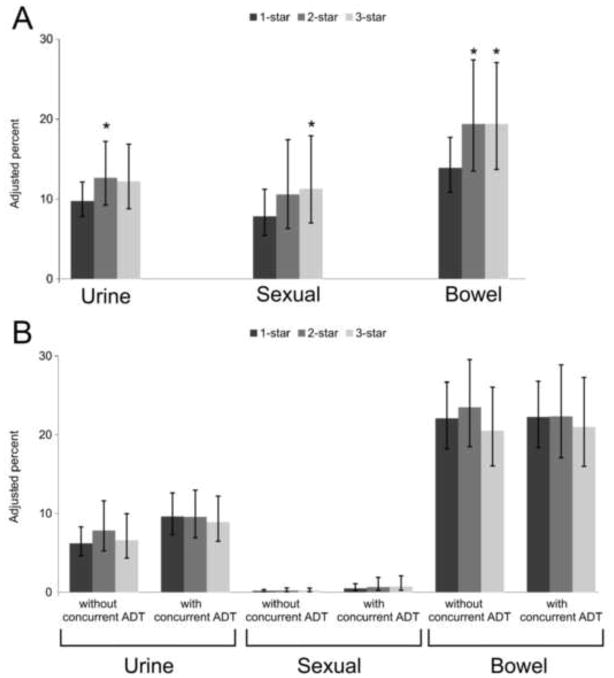

When examining associations between star-ranking and outcomes of prostate cancer treatment, our findings were mixed. Among prostatectomy patients, those treated in 3-star healthcare systems had a lower risk of perioperative complications (odds ratio 0.80, 95% confidence interval [CI] 0.64–1.00, p=0.050) and a slightly shorter adjusted length of stay (2.0 [95% CI 1.9–2.0] vs. 2.1 [95% CI 2.0–2.3] days, p=0.020) than those treated in 1-star systems. However, those treated in 3-star healthcare systems were more likely to undergo procedures for treatment-related morbidity (Figure 2A). For example, there was a trend towards more procedures for urinary morbidity after prostatectomy among patients treated in 3-star versus 1-star healthcare systems (12.2% [95% CI 8.8%–16.9%] vs. 9.7% [95% CI 7.8%–12.1%] within five years, p=0.086). Likewise, prostatectomy patients treated in 3-star healthcare systems were more likely to undergo procedures for sexual (11.3% [95% CI 7.0%–17.9%] vs. 7.8% [95% CI 5.4%–11.2%], p=0.043) and gastrointestinal treatment-related morbidity (19.3% [95% CI 13.7%–27.0%] vs. 13.9% [95% CI 10.8%–17.7%], p=0.010, Figure 2A). Among patients undergoing radiotherapy, star-ranking was not associated with treatment-related morbidity (Figure 2B).

Figure 2.

Adjusted risk of treatment-related morbidity at 5 years by quality of care of healthcare system among patients who underwent prostatectomy (panel A) and radiotherapy without or with concurrent androgen-deprivation therapy (ADT, panel B). * denotes p<0.05 compared to 1-star healthcare system.

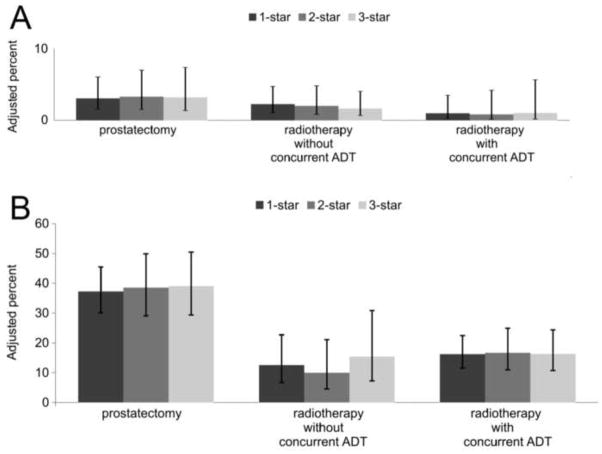

With respect to secondary cancer therapy, we found no associations between healthcare system star-ranking and outcome among either low-risk (Figure 3A) or high-risk patients (Figure 3B). There were also no significant associations between star-ranking and all-cause mortality (Hazard Ratio 0.99, 95% CI 0.84–1.15, comparing 3-star to 1-star healthcare systems).

Figure 3.

Adjusted risk of secondary cancer therapy at 5 years by quality of care of healthcare system for patients with low-risk (panel A) and high-risk prostate cancer (panel B). Patients were stratified by type of primary treatment received (prostatectomy, radiotherapy without or with concurrent androgen-deprivation therapy [ADT]).

Discussion

Across more than 700 healthcare systems, we found substantial differences in the quality of prostate cancer care as estimated by adherence to nationally endorsed performance measures. However, associations between healthcare system quality and outcomes were mixed. For example, patients who underwent prostatectomy in 3-star healthcare systems had a significantly lower risk of perioperative complications. However, these patients were significantly more likely to undergo a procedure addressing treatment-related morbidity. Star-ranking was not significantly associated with secondary cancer therapy or all-cause mortality.

While previous studies have addressed regional variation in the quality of prostate cancer care at the census division or Hospital Service Area level,11,21 our study is among the first to link adherence to endorsed measures and the outcomes of treatment. While we did not find consistent associations between process measures of quality of care and outcomes among prostate cancer patients, such associations have previously been described in other diseases and settings. For example, better performance on claims-based process measures of care was associated with less decline in function and better survival among vulnerable elders.22 Among patients undergoing surgery for colorectal cancer, hospitals performing better on established measures of quality had significantly fewer perioperative complications,23 which is similar to our findings among prostate cancer patients. However, in our study, the associations between better performance and improved outcomes did not persist for longer-term outcomes (i.e., treatment-related morbidity, secondary cancer therapy, and all-cause mortality), which were not part of the prior study.23

There are several potential reasons for our mixed findings. First, it is entirely possible that the process measures examined in the current study are not relevant for outcomes such as cancer control and treatment-related morbidity. Indeed, the initial development of these measures by RAND was not guided by the desire to identify measures that are directly linked to outcomes, but rather by what experts felt to be reflective of high-quality prostate cancer care.5 However, patients who were treated in the best performing healthcare systems did not have consistently better outcomes, which implies that the current quality measures may not be reflective of the overall care received and may not be relevant for important outcomes. To identify measures that are more directly linked to outcomes, one would likely have to assess other processes of care, such as the details of the care provided in the operating room or the processes monitored by radiation quality assurance programs.24,25

Second, it is possible that the endorsed quality measures relate to other outcomes that could not be measured with the available data, including health-related quality of life, patient satisfaction and treatment-related regret.26,27 As part of the National Strategy for Quality Improvement,28 these patient-reported outcomes are increasingly the focus of quality assessment and should be incorporated into the systematic evaluation of prostate cancer care in the future. In summary, our mixed findings on associations between star-ranking and outcomes may be explained by limitations of the process measures themselves or by the lack of patient-reported outcomes.

In interpreting our findings, it is important to consider several limitations. First, our composite measure of prostate cancer quality rewards close cooperation between urologists and radiation oncologists. Therefore, the kind of treatment patients received differed by the star-ranking of the healthcare system (e.g., a higher prevalence of brachytherapy in 3-star healthcare systems). However, the kind of treatment received is also associated with outcomes such as secondary cancer therapy and treatment-related morbidity. To address this issue, all models were stratified by treatment type. Thus, the impact of star-ranking on outcomes was estimated only among the patients who got the same kind of treatment, but not across treatments.20 Second, claims data provide billing information but lack detailed clinical data,26 so we could not ascertain the patients’ actual urinary and sexual morbidity nor their specific preferences regarding treatment of these morbidities. Undergoing more procedures addressing urinary or sexual morbidity could reflect a higher incidence of morbidity in high-quality healthcare systems, differing patient preferences, or more aggressive management of these morbidities by the physicians practicing in these healthcare systems. Third, our findings related to overall survival were limited by length of follow-up and the protracted course of prostate cancer. With longer follow-up, significant associations between prostate cancer quality of care and survival could emerge. Finally, as with all SEER-Medicare studies, generalizability is limited to men older than 65 enrolled in fee-for-service Medicare.

These limitations notwithstanding, our study has important implications. We found substantial variation in the quality of prostate cancer care across more than 700 healthcare systems in the United States. For expeditious quality improvement, it would be most straightforward to take an approach similar to ours, measuring processes of care in claims data, and then use the results to direct interventions. While we could successfully measure processes of care and rank healthcare systems accordingly, our findings also show significant limitations to this approach. We were unable to consistently link performance on these process of care measures to outcomes, which questions how meaningful these measures ultimately are for patients. Thus, future studies should focus on the development of more discriminative quality measures. Particularly for surgical patients, measures addressing the quality of care provided within the operating room are currently mostly lacking, although the skill set of the surgical team is likely one of the most important factors contributing to postoperative outcomes.24,29 In addition, our findings underscore the importance of incorporating patient-reported outcomes into the quality assessment of prostate cancer care in the future.

Acknowledgments

Grant support: FRS is supported in part by grant T32 DK07782 from the NIH/NIDDK and by Postdoctoral Fellowship PF-12-118-01-CPPB from the American Cancer Society. BLJ is supported in part by grant KL2 TR000146 from the NIH. BKH is supported in part by Research Scholar Grant RSGI-13-323-01-CPHPS from the American Cancer Society.

Abbreviations

- ADT: androgen deprivation therapy

- CI: confidence interval

- HCPCS: Healthcare Common Procedure Coding System

- ICD-9: International Classification of Diseases, Ninth Revision

- SEER: Surveillance Epidemiology and End Results

Footnotes

Disclaimer: The views expressed in this article do not reflect the views of the federal government.

References

- 1. American Cancer Society. [accessed August 24, 2011]; Cancer Facts & Figures. 2011. Available at: http://www.cancer.org/Research/CancerFactsFigures/CancerFactsFigures/cancer-factsfigures-2011.

- 2. Cooperberg MR, Broering JM, Carroll PR. Time Trends and Local Variation in Primary Treatment of Localized Prostate Cancer. J Clin Oncol. 2010;28:1117–1123. doi: 10.1200/JCO.2009.26.0133.

- 3. Trinh Q-D, Bjartell A, Freedland SJ, et al. A systematic review of the volume-outcome relationship for radical prostatectomy. Eur Urol. 2013;64:786–798. doi: 10.1016/j.eururo.2013.04.012.

- 4. Litwin M, Steinberg M, Malin J, et al. [accessed October 7, 2013]; Prostate Cancer Patient Outcomes and Choice of Providers: Development of an Infrastructure for Quality Assessment. 2000. Available at: http://www.rand.org/pubs/monograph_reports/MR1227.html.

- 5. Spencer BA, Steinberg M, Malin J, et al. Quality-of-care indicators for early-stage prostate cancer. J Clin Oncol. 2003;21:1928–1936. doi: 10.1200/JCO.2003.05.157.

- 6. Thompson IM, Clauser S. [accessed September 13, 2011]; Prostate Cancer Physician Performance Measurement Set. 2007. Available at: http://www.ama-assn.org/apps/listserv/x-check/qmeasure.cgi?submit=PCPI.

- 7. National Quality Forum (NQF). [accessed January 26, 2013]; NQF-endorsed standards. 2012. Available at: www.qualityforum.org/QPS/QPSTool.aspx?Exact=false&Keyword=prostatecancer.

- 8. Centers for Medicare & Medicaid Services. [accessed July 5, 2012]; Physician Quality Reporting System: 2010 Reporting Experience Including Trends (2007–2011). 2012. Available at: http://www.facs.org/ahp/pqri/2013/2010experience-report.pdf.

- 9. Fisher ES, Staiger DO, Bynum JPW, et al. Creating accountable care organizations: the extended hospital medical staff. Health Aff (Millwood). 2007;26:w44–57. doi: 10.1377/hlthaff.26.1.w44.

- 10. Bynum JPW, Bernal-Delgado E, Gottlieb D, et al. Assigning ambulatory patients and their physicians to hospitals: a method for obtaining population-based provider performance measurements. Health Serv Res. 2007;42:45–62. doi: 10.1111/j.1475-6773.2006.00633.x.

- 11. Schroeck FR, Kaufman SR, Jacobs BL, et al. Regional Variation in Quality of Prostate Cancer Care. J Urol. 2013. doi: 10.1016/j.juro.2013.10.066. Epub ahead of print.

- 12. D’Amico AV, Whittington R, Malkowicz SB, et al. Biochemical outcome after radical prostatectomy, external beam radiation therapy, or interstitial radiation therapy for clinically localized prostate cancer. JAMA. 1998;280:969–974. doi: 10.1001/jama.280.11.969.

- 13. Reeves D, Campbell SM, Adams J, et al. Combining multiple indicators of clinical quality: an evaluation of different analytic approaches. Med Care. 2007;45:489–496. doi: 10.1097/MLR.0b013e31803bb479.

- 14. Klabunde CN, Potosky AL, Legler JM, et al. Development of a comorbidity index using physician claims data. J Clin Epidemiol. 2000;53:1258–1267. doi: 10.1016/s0895-4356(00)00256-0.

- 15. Diez Roux AV, Merkin SS, Arnett D, et al. Neighborhood of residence and incidence of coronary heart disease. N Engl J Med. 2001;345:99–106. doi: 10.1056/NEJM200107123450205.

- 16. Merlo J, Chaix B, Yang M, et al. A brief conceptual tutorial of multilevel analysis in social epidemiology: linking the statistical concept of clustering to the idea of contextual phenomenon. J Epidemiol Community Health. 2005;59:443–449. doi: 10.1136/jech.2004.023473.

- 17. Dimick JB, Staiger DO, Hall BL, et al. Composite measures for profiling hospitals on surgical morbidity. Ann Surg. 2013;257:67–72. doi: 10.1097/SLA.0b013e31827b6be6.

- 18. Hu JC, Gu X, Lipsitz SR, et al. Comparative Effectiveness of Minimally Invasive vs Open Radical Prostatectomy. JAMA. 2009;302:1557–1564. doi: 10.1001/jama.2009.1451.

- 19. Jacobs BL, Zhang Y, Skolarus TA, et al. Comparative Effectiveness of External-Beam Radiation Approaches for Prostate Cancer. Eur Urol. 2014;65:162–168. doi: 10.1016/j.eururo.2012.06.055.

- 20. Cleves M, Gutierrez R, Gould W, et al. An introduction to survival analysis using Stata. 2. Stata Press; 2008.

- 21. Spencer BA, Miller DC, Litwin MS, et al. Variations in Quality of Care for Men With Early-Stage Prostate Cancer. J Clin Oncol. 2008;26:3735–3742. doi: 10.1200/JCO.2007.13.2555.

- 22. Zingmond DS, Ettner SL, Wilber KH, et al. Association of claims-based quality of care measures with outcomes among community-dwelling vulnerable elders. Med Care. 2011;49:553–559. doi: 10.1097/MLR.0b013e31820e5aab.

- 23. Kolfschoten NE, Gooiker GA, Bastiaannet E, et al. Combining process indicators to evaluate quality of care for surgical patients with colorectal cancer: are scores consistent with short-term outcome? BMJ Quality & Safety. 2012;21:481–489. doi: 10.1136/bmjqs-2011-000439.

- 24. Schroeck FR, Jacobs BL, Hollenbeck BK. Understanding Variation in the Quality of the Surgical Treatment of Prostate Cancer. Am Soc Clin Oncol Educ Book. 2013;2013:278–283. doi: 10.1200/EdBook_AM.2013.33.278.

- 25. Moran JM, Dempsey M, Eisbruch A, et al. Safety considerations for IMRT: Executive summary. Med Phys. 2011;38:5067–5072. doi: 10.1118/1.3600524.

- 26. Finlayson E, Birkmeyer JD. Research based on administrative data. Surgery. 2009;145:610–616. doi: 10.1016/j.surg.2009.03.005.

- 27. Berry DL, Wang Q, Halpenny B, et al. Decision preparation, satisfaction and regret in a multi-center sample of men with newly diagnosed localized prostate cancer. Patient Educ Couns. 2012;88:262–267. doi: 10.1016/j.pec.2012.04.002.

- 28. US Department of Health and Human Services. [accessed June 27, 2013]; Report to Congress: National Strategy for Quality Improvement in Health Care. 2012. Available at: http://www.ahrq.gov/workingforquality/nqs/nqs2012annlrpt.pdf.

- 29. Birkmeyer JD, Finks JF, O’Reilly A, et al. Surgical Skill and Complication Rates after Bariatric Surgery. N Engl J Med. 2013;369:1434–1442. doi: 10.1056/NEJMsa1300625.

- 30. McCarthy EP, Iezzoni LI, Davis RB, et al. Does clinical evidence support ICD-9-CM diagnosis coding of complications? Med Care. 2000;38:868–876. doi: 10.1097/00005650-200008000-00010.
