Abstract

Many informed consent studies demonstrate that research subjects poorly retain and understand information in written consent documents. Previous research in multimedia consent is mixed in terms of success for improving participants’ understanding, satisfaction, and retention. This failure may be due to a lack of a community-centered design approach to building the interventions. The goal of this study was to gather information from the community to determine the best way to undertake the consent process. Community perceptions regarding different computer-based consenting approaches were evaluated, and a computer-based consent was developed and tested. A second goal was to evaluate whether participants make truly informed decisions to participate in research. Simulations of an informed consent process were videotaped to document the process. Focus groups were conducted to determine community attitudes towards a computer-based informed consent process. Hybrid focus groups were conducted to determine the most acceptable hardware device. Usability testing was conducted on a computer-based consent prototype using a touch-screen kiosk. Based on feedback, a computer-based consent was developed. Representative study participants were able to easily complete the consent, and all were able to correctly answer the comprehension check questions. Community involvement in developing a computer-based consent proved valuable for a population-based genetic study. These findings may translate to other types of informed consents, such as genetic clinical trials consents. A computer-based consent may serve to better communicate consistent, clear, accurate, and complete information regarding the risks and benefits of study participation. Additional analysis is necessary to measure the level of comprehension of the check-question answers by larger numbers of participants. 
The next step will involve contacting participants to measure whether understanding of what they consented to is retained over time.

Keywords: Decision making, focus groups, genetic research, computer-based informed consent, usability

INTRODUCTION

The number of research studies involving genetic information is increasing rapidly. It is important when obtaining consent to participate in a research study that the participant correctly understand what they are consenting to. In the case of longitudinal studies it is also important that they retain this knowledge over time. In a previous study, McCarty et al. [2005; 2007; 2008a; 2008b; 2011] investigated the comprehension of the elements of an informed consent process and identified the extent to which money was an inducement to participate in the Personalized Medicine Research Project (PMRP), a population-based DNA biorepository. Generally, they found that almost all study participants understood the overall goals of the project. However, many participants were unsure or incorrect about such key issues as the duration of their participation in the study, the fact that their DNA would be stored, and the non-disclosure of personal study results [McCarty et al., 2007]. Data from study questionnaires suggest that the incorrect responses were due to a lack of comprehension rather than a loss of memory of specific details, since the percentage of correct responses was not related to the time since providing consent [McCarty et al., 2007]. Surveys of participants in a number of research trials indicate that although respondents were satisfied with the informed consent process and considered themselves well-informed, they had poor understanding and often were unable to correctly recall important details of the trial [McCarty et al., 2007; Jefford and Moore, 2008; Jimison et al., 1998]. The goal of this study was to involve the community when determining the best way to undertake the consent process, and to evaluate whether people are making truly informed decisions to participate in research. Could participant recall and comprehension be improved by involving community members’ feedback during the design of a computer-based consent? 
Computer-based consents may be a vehicle to improve an individual's understanding of a study to assist in making truly informed decisions for participation in personalized medicine studies. They may also serve to better communicate consistent, clear, accurate, and complete information regarding risks and benefits of study participation for genetic clinical trials.

METHODS

A number of methods were used to evaluate the best way to design a computer-based consent process. Methods included simulated consents with a readability analysis and participant questionnaire, focus groups, hybrid focus groups, and formal usability testing. The methods are presented in the order they were conducted. The research study protocol for the PMRP and for the current sub-study were reviewed and approved by Marshfield Clinic's Institutional Review Board. All methods were conducted at the Interactive Clinical Design Institute's (ICDI) usability lab, located in the Marshfield Clinic Research Foundation's Biomedical Informatics Research Center. All methods were videotaped and digitally recorded.

Simulated Consents

In order to build a computer-based consent, we first needed to examine the existing consent process in detail. This would identify areas in the process in which the computer could substitute for a human, as well as identify certain aspects of the process that would still need to be completed by a human. It was important to document what the research coordinator did during the verbal consent process that differed from what was on the paper consent document. The videotapes of simulated consenting processes would be used to determine at what point participants would typically ask questions of the researcher in order to obtain more information, as well as to discern which sections of the consent participants proceeded through quickly. The questions asked by the participants would form the basis of a Frequently Asked Questions (FAQ) section of the computer-based prototype [Plasek et al., 2011]. Another important measure to capture was the average length of time the research coordinator spent conducting the consent process. Simulations of six consents were conducted with employees in June 2009. Simulations of another six consents were conducted with participants from the ICDI usability participant database in July 2009. These participants were not employees. Because the PMRP is a population-based biobank that recruits from the community and includes Marshfield Clinic employees, the participants in these two simulations were typical of PMRP subjects. The nonemployee group received a check for $25 for their time and travel. Members of the project team observed the consent process from the control room or adjoining usability lab. A usability analyst met with participants before the simulation, reviewed what would take place during the session, and answered any questions from the participants.
The participants were asked to play the role of a research participant, John or Jane Doe, and were instructed not to sign their real name when it came time to sign the consent document. It was stressed that they would not actually be consenting to a research study, but that the consenting process itself was being studied. The research coordinator, who would normally be conducting the actual PMRP consent process, conducted the simulations. This coordinator currently conducts the vast majority of PMRP informed consent sessions. A new version of the consent document was used during the simulations, so the research coordinator was not yet well-versed with it. The research coordinator conducting the consent process also reiterated to the participants that the consent was not for a real research study. Participants were encouraged to ask any and all questions that came to mind during the simulation. After the consent process, five of the six employees completed a questionnaire that assessed the current consent process and gathered feedback on the idea of using a computer to administer the consent. The nonemployee group did not complete this questionnaire. The employee simulated consent recordings were professionally transcribed. It was determined that the six nonemployee consents did not yield any new information regarding questions asked by participants, so those were not transcribed for this study. Review of both the transcripts and videos helped to create the FAQ section and the annotated consent document (Appendix).

Community Advisory Group Focus Groups

Two one-time, one-hour focus groups were conducted in June 2009 with eleven members of the PMRP Community Advisory Group (CAG). The CAG comprises approximately 20 local community representatives who regularly meet with the PMRP team and provide feedback that may be representative of the public at large. CAG members are not enrolled in the PMRP, nor are they directly involved in participant enrollment efforts. One group had five participants and the other had six. All participants reviewed the print version of the consent document as well as a videotape clip of a simulated consent process. They also viewed examples of Web sites featuring multimedia presentations of information, although not information regarding informed consent. Participants were paid $75 for their time and travel. The goal of the focus groups was to discuss the community's willingness to adopt a computer-based consent process. We also solicited feedback on several specific aspects of the computer-based consent, including study personality/representative, electronic signature, enhanced content (graphics, audio, videos, FAQ, glossary, animation), assessment/quiz questions during the consent, preferred computer-based consent content, and handling of participant compensation.

Hybrid Focus Groups

Two hybrid focus groups, with five participants each, were conducted in July 2009. Participants were recruited from the ICDI usability participant database. Participants were not employees or members of the CAG. The focus groups were structured to first allow participants to test sample computer-based consents and training modules on three different types of hardware: touch screen kiosks, ruggedized touch screens, and tablet computers. These participants were instructed to test the computer-based consents and training modules on each of the different hardware devices. They were also instructed to review them for functionality and ease of use, but not for content. A moderator and three staff members were available to assist if participants had any questions or experienced technical difficulties. Participants were provided with worksheets that outlined the different hardware devices, computer-based consents, and training modules, with sections where they could record comments as they progressed through the testing circuit. Immediately after the participants completed the testing circuit, a focus group was conducted. In the focus group, participants were allowed to provide their feedback about interacting with the computer-based consents and training modules on the various hardware devices. A moderator facilitated the conversation and solicited feedback on some specific topics including hardware preference, interaction elements, quiz questions, how to get help, electronic signatures, reference material, headphones, and study compensation.

Usability Testing of Computer-based Prototype

This test implemented a formal, task-based, usability evaluation method [Kahn and Prail, 1994]. This involved having participants take part in a simulated consent process. Participants were recruited through the ICDI usability volunteer database, with a total of nine participants taking part in the usability evaluation. None of the participants were employees or members of the CAG. The participant first met with a usability analyst, the study information sheet was reviewed, and any questions were answered. The usability analyst clarified to the participant that they would not actually be consenting to participation in PMRP, but would be playing the role of Jane Doe or John Doe. The participant was then greeted by the research coordinator who briefly introduced the participant to the computer-based prototype. The research coordinator stayed in the room during the instruction section of the computer-based consent in order to address any initial questions and get an idea of how comfortable the participant was with the technology.

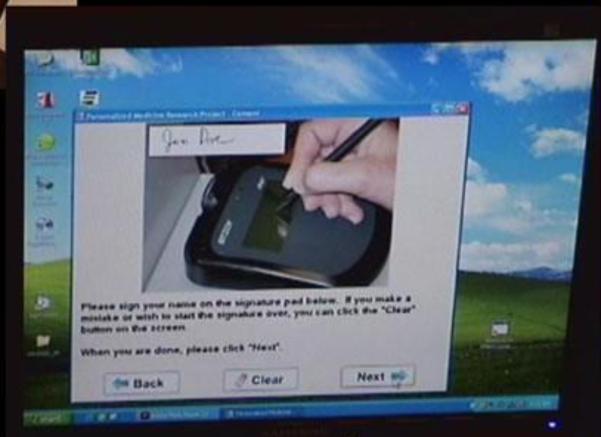

The coordinator then left the room while the participant completed the remainder of the computer-based consent, but was available at any time via a call button on the kiosk. After the participant completed the computer-based consent, the research coordinator had the participant sign the consent form (as Jane/John Doe), and the coordinator also signed the consent form using the electronic signature pad (Figure 1).

Figure 1. Usability testing of computer-based consent prototype. Screen shot illustrating the electronic signature pad.

A paper copy of the signed consent form was then printed for the participant. After the consent process was complete, the usability analyst asked the participant to complete a ten-question System Usability Scale (SUS) survey. The SUS is a simple, ten-item attitude (Likert) scale giving a global view of subjective assessments of usability [Brooke, 1996]. The usability of a system can be measured only by taking into account the context of use of the system, i.e., who is using the system, what they are using it for, and the environment in which they are using it. Furthermore, measurements of usability have several different aspects:

effectiveness (can users successfully achieve their objectives)

efficiency (how much effort and resource is expended in achieving those objectives)

satisfaction (was the experience satisfactory)

SUS has generally been seen as providing this type of high-level subjective view of usability, and is thus often used in running comparisons of usability between systems. It has been widely used in the evaluation of a range of systems [Brooke, 1996].

At the end of the testing, the usability analyst completed a brief exit interview, eliciting any final comments from the participant. The entire session lasted approximately 45 to 60 minutes, and participants received a $25 check for their time and travel.

Consent Readability

A readability analysis was done to compare the grade levels of the various consent forms: paper consent document, annotated consent form, and computer-based consent script. This provided information about the reading level of the current document and whether that level is appropriate for participants. The goal was to keep the reading level at approximately the eighth grade. The reading grade level was calculated using the Flesch-Kincaid Grade Level Readability Formula that is built into the Microsoft Word application. The Flesch-Kincaid grade level is typically used on educational texts, is scaled to grade-school levels, and takes into account the total number of words, word length, syllables, and sentence length within a document [http://www.readabilityformulas.com].
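The grade-level calculation described above can be sketched as follows. The formula is the standard published Flesch-Kincaid Grade Level formula, 0.39 × (words per sentence) + 11.8 × (syllables per word) − 15.59. The syllable counter is a crude vowel-group heuristic assumed here purely for illustration; it is not the algorithm used by Microsoft Word, so scores from this sketch will not exactly match those reported in this study. The function names are hypothetical.

```python
import re


def count_syllables(word: str) -> int:
    """Estimate syllables by counting vowel groups (illustrative heuristic only)."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    # Treat a trailing 'e' as silent (e.g. "here"), but keep "-le" endings ("simple").
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def flesch_kincaid_grade(text: str) -> float:
    """Return the Flesch-Kincaid grade level of a plain-text passage."""
    # Assumption: sentences end at '.', '!' or '?'; abbreviations are not handled.
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    words_per_sentence = len(words) / len(sentences)
    syllables_per_word = syllables / len(words)
    return 0.39 * words_per_sentence + 11.8 * syllables_per_word - 15.59
```

Because the score rises with both sentence length and syllables per word, shortening sentences and preferring shorter words are the two direct ways to lower a consent document's grade level.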

RESULTS

Simulated Consents: Overall Time and Questionnaire

The following results are based on the simulated consent processes with the employee group and the feedback from questionnaires of five of the six participants who completed the process. The average time of a consent process was 20.83 minutes, with the shortest process being approximately 13 minutes and the longest being approximately 28.5 minutes. Four out of five of the employee participants felt that they were well informed after completing the simulated consent process. They felt their questions had been answered adequately, that the presenter was unbiased, and that they had been given enough information to make an informed decision. All survey participants felt comfortable during the consent process. The sections that they thought were covered too quickly in the process were: what will happen if you agree to take part in the study, laws protecting genetic information, study length, and emergency care. Participants felt that benefits of the study, de-identified information, and little chance for breach of privacy were persuasive. Discussion of the National Institutes of Health's database of Genotypes and Phenotypes (dbGaP), lack of obtaining study results or any direct benefit, ethical concerns about the length of the study, and potential medical costs if illness occurs during blood draw were seen as being dissuasive elements. Participants suggested that the process could be improved by periodically pausing to ask whether they had questions; they felt as though they had to interrupt the research coordinator in order to ask questions. Another suggestion was to shorten the consent form and make it very easy and clear to understand. The final suggestion was to ensure that the research coordinator was better informed about the information in the consent document and was comfortable presenting it.
The research coordinator was using the next generation consent form that had not been used in any actual consent processes yet, but was the officially approved consent form that the study would use moving forward. Although the timing of implementing the new consent form was suboptimal regarding the simulated consents, the study team had no control over this change. The simulated consents did allow the research coordinator to become familiar with the new version of the consent form. By the second set of simulated consents, the research coordinator was more comfortable working with the new consent form. When asked about moving to a computerized version of the informed consent process, participants thought that the personal connection could get lost and that not all questions would get answered with this change in format. Participants were also worried about the security and confidentiality of using a computerized system.

Community Advisory Group Focus Groups

A number of common themes were seen across both focus groups. In general, both groups were open to the computer-based consent replacing the text-based consent. Although the two groups may have provided different examples, the categories below summarize the most common themes. Although CAG members are encouraged to provide feedback that may be representative of the larger community, best and ethical practices determined which of the CAG's suggestions were implemented. Also, CAG members are not directly involved in PMRP enrollment efforts.

a. Keep it simple

Both groups stressed the importance of keeping the computer-based consent as simple as possible, without any extra “fluff.” There were comments about cutting out anything that was unnecessary.

b. Consistent summary points

Both groups described that one value of having a computer-based consent was the ability to have a simple, clear, to-the-point, standardized message that covers only the summary points rather than reading the entire text verbatim. There were comments regarding how people “trust the messenger” in typical contracts and thus do not need to go through the document word for word. Other comments pointed out that going through the consent form verbatim was way too much information, as well as that it would be demeaning if someone simply read what was on the screen to them.

c. Keep it concise and let people choose what they want to hear

The groups thought that people do not want to spend a lot of time going through the consent form at a computer; rather, they should be able to do so as quickly as possible. They thought the computer-based consent process should not take any longer than when the process is conducted by a research coordinator.

d. e-Signatures are just like signing a piece of paper

Both groups viewed e-Signatures as something that people already commonly do in other settings, such as in retail. They felt that signing an electronic signature pad was not a problem. One comment was that there would be a disconnect if a participant went through a computer-based consent process and then had to sign a paper form, rather than signing on the computer.

e. Reference material

One area in which opinions differed between the groups was related to having a laminated copy of the consent form available at the beginning of the computer-based consent session. One group thought it was a good idea to have both the printed consent form and the FAQs available as they were going through the computer-based consent. This group thought that it would not hurt to have more information, since the participant could choose whether or not to use the printed forms. The other group, however, did not think it would be useful or necessary to have printed forms available, feeling that they would be redundant with what is on the screen, where the participant just follows along. Positives expressed about having the printed/hard copies available were that it is comforting to have something tactile to hold, and also that people trust printed/hard copies. This group thought a large-print version might be useful for the visually-impaired and the elderly. The project team decided against providing a paper version of the consent during the computer-based consent process in order to avoid overwhelming participants.

f. Cartoons are for kids

Both groups thought that unless the computer-based consent was being designed for consenting minors, cartoons and/or animations would be unnecessary and would make it appear unprofessional and not serious. Both groups thought that having animation would be expensive compared to having a real person providing narration. Some comments indicated that if the computer-based consent was animated, people might think of the consent process as a joke. Other comments were that pictures and having people “walking” onto the screen in front of the text were distracting. Both groups thought the narrator should be a real person or at least look like a real person. Some commented that a cartoon-based version might stimulate the thought processes of younger audiences.

g. Full body or talking head in a box

Another area where opinions differed between the groups was how to feature the narrator: full body or talking head. The first group did not like the full body next to the text and would instead prefer a talking head in a box, similar to a news broadcast on television or an embedded video. The second group preferred the full body over the talking head. They felt that it gave a bit more life to the person, but also thought that a combination of full body and talking head shots could work if they were varied from screen to screen appropriately, with respect to the text and graphics. The end product features a full shot of the principal investigator (CAM) combined with a variety of complementary videos and graphics.

h. Primary investigator should be the “personality” in the computer-based consent

Both groups thought that the principal investigator (CAM) would be best in the role of primary investigator in the computer-based consent as someone in the field who is passionate about the research. Both groups thought that having someone well-known in the community would be preferable because there could be a synergy between the information campaigns for PMRP and the computer-based consent. The groups’ opinions were that if a medical doctor was presenting the information, then he/she should wear the white medical coat because it is viewed as a promise that he/she is practicing or doing research. Others felt that doctors perform medicine and actors perform in film productions, but that featuring the primary investigator builds connectedness to the project that is not attainable by featuring an actor. Another view was that having the “personality” in a white lab coat would really depend on what you want to convey within the computer-based training. The project team decided the end product would feature the principal investigator (CAM) wearing regular clothing.

i. Music is just an added distraction

Music and sound effects were seen as a distraction, making it hard to concentrate on what was being read. One opposing participant's view was that music and sound effects enhanced the experience and got their attention. The project team decided against featuring any background music that could distract participants.

j. Don't make it feel like a test, but reinforce the material

Both groups initially had a negative impression of the proposed seven comprehension check questions. They did not like the idea of being tested during the consent process, but later warmed up to the idea of using questions as a reinforcement tool. One suggestion was that having a good FAQ page would accomplish the same thing as the comprehension check questions. There were comments that the questions would help people “sit up a little straighter,” read a little more, and pay closer attention. Some participants thought that the true/false questions would help seal the information into their memory. The correct answer could prompt them to think, “Where did I miss that?” Both groups thought that the questions should be presented at the end of each section, with the correct answer immediately following the question. The final computer-based consent featured seven comprehension check questions presented at the end of selected sections (Table 1). Questions consisted of a mix of true/false and multiple choice. The structure of the questions was based in part on a previous PMRP research study that found participants had poor understanding and recall of important study details [McCarty et al., 2007].

k. Make participating in the study easy and pay them with a Marshfield Area Chamber of Commerce and Industry (MACCI) gift certificate or check

Both groups thought that there should be an option to make participating in the study easy. There were comments that the MACCI gift certificate promotes the city (of Marshfield), supports local initiatives, and should be the first choice unless there is a good reason to have another option. Negative opinions of the MACCI gift certificates were that recipients cannot choose where to use/cash them but have to buy merchandise in Marshfield. Some pointed out that not everyone who participates will live in Marshfield. One group proposed the idea of giving participants a check immediately after consenting, if it was easy to set up. Both groups discussed the advantages and disadvantages of having a Visa gift card or other gift card. They were informed that this option had been tried in the past, but setup fees made it too expensive. Both groups thought that computerized payments (such as Amazon or iTunes) would be a bad idea since they promote outside commerce. They also commented that not everyone has a computer, and that many people do not yet trust making financial transactions on the computer. Participants currently receive either a MACCI gift certificate or a mailed check.

Hybrid Focus Groups

These groups were very lively and helped us explore a computer-based informed consent process for the PMRP. A number of common themes were seen across both hybrid focus groups. Although different groups had different examples, the categories below summarize the recurring themes.

a. Kiosks are the way to go as long as you can sit down

Most people in both groups preferred the kiosks over the tablet PC or the ruggedized touch screen because the kiosks had a bigger, easy-to-read screen, and participants could use their fingers to navigate.

b. Keep it simple

Participants wanted the computer-based consent to be straightforward and easy to use.

c. Headphones are annoying

Neither group liked being required to use headphones at the stations. They stated concerns about headphones not being sanitary. However, they did acknowledge that, in order to minimize distractions, headphones would be necessary if there were multiple people in the area at one time.

d. e-Signatures are what people want if it is part of a computerized process

Both groups viewed e-signatures as something that people are familiar with from other settings, like retail. They thought that signing on a computer would not be a problem as long as they can see their signature on the screen when they submit the consent document.

e. Printed reference materials are unnecessary and redundant

Both groups thought that having a printed/hard copy of the consent available during the computer-based consent would be unnecessary and redundant. However, one counter opinion was that having the hard copy of the consent available would be helpful for the subset of people who are unable to read/proofread items on a computer screen. Another opinion was that people would be lost if they had the paper in front of them, and then they may as well skip the computer-based consent if they have a hard copy consent.

f. People want to choose their compensation within the computer-based consent

Both groups thought it was a great idea to be able to choose the type of compensation that they want to receive within the computer-based consent and to have that choice documented electronically.

Usability Testing of Computer-based Prototype

There were nine participants in the usability testing. The participants consisted of six females between the ages of 48 and 78 and three males between the ages of 48 and 71. These participants were from the community and were representative of eligible PMRP participants.

a. Survey results

All nine participants completed the System Usability Scale survey (Table 2). An SUS score was calculated for each survey on a scale from 0 to 100, with 100 representing a perfect score [Tullis and Albert, 2008]. The average score of the SUS for the computer-based prototype was 91.11%. In general a score under 60% is considered relatively poor, and a score over 80% is considered good [Tullis and Albert, 2008].
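The SUS scoring referred to above can be sketched as follows. This uses the standard scoring rule described by Brooke [1996]: each odd-numbered (positively worded) item contributes its response minus 1, each even-numbered (negatively worded) item contributes 5 minus its response, and the sum is multiplied by 2.5 to give a score from 0 to 100. The function name is hypothetical and the example responses below are invented for illustration; they are not data from this study.

```python
def sus_score(responses):
    """Compute one participant's SUS score from ten responses, each 1 to 5,
    given in questionnaire order (item 1 first)."""
    if len(responses) != 10 or any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("expected ten responses, each an integer from 1 to 5")
    total = 0
    for item_number, response in enumerate(responses, start=1):
        if item_number % 2 == 1:
            # Odd items are positively worded: higher agreement is better.
            total += response - 1
        else:
            # Even items are negatively worded: lower agreement is better.
            total += 5 - response
    # The summed contributions range from 0 to 40; scale to 0-100.
    return total * 2.5


# Hypothetical participant who strongly agrees with every positive item
# and strongly disagrees with every negative item.
best_case = sus_score([5, 1, 5, 1, 5, 1, 5, 1, 5, 1])
```

A study-level figure such as the average reported here would then be the mean of the per-participant scores.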

b. Observations and recommendations

Although some obvious usability issues were observed during usability testing of the computer-based consent, all of the participants successfully completed it with relative ease. Most of the participants stated that it was very easy to use, even though many had never used a touch screen system before. A number of participants also stated that they were not good with computers or disliked computers, but they found the computer-based consent very easy to use. They also stated that the questions were clear and easily understood.

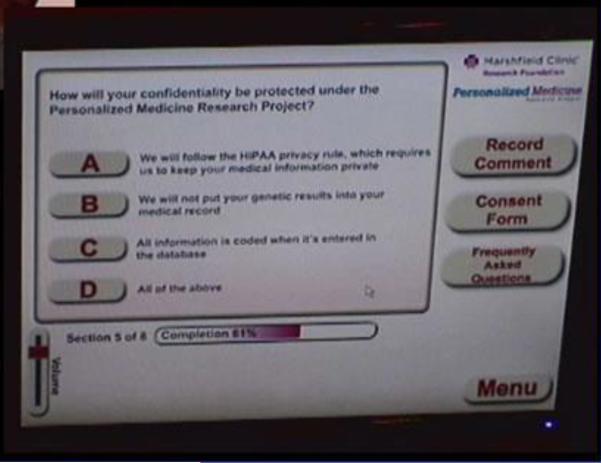

All seven comprehension check questions in the computer-based consent were answered correctly by all of the participants (Figure 2). Participants felt that the length was appropriate and the level of detail in the instructions was just right, and they would not make the instructions any longer. None of the participants had issues entering their signature via the electronic signature pad. Issues observed by the participants and the project team during usability testing were compiled, together with recommendations, and are presented in Table 3. All of these issues were addressed in order to develop a more usable interface.

Figure 2. Usability testing of computer-based consent prototype. Screen shot of example of comprehension check questions.

Consent Readability

A readability analysis of the consent document suggested that it was written at an eighth grade level, more specifically 8.4 using Microsoft Word's (2003) Flesch-Kincaid grading formula [http://www.readabilityformulas.com]. The document was six pages long using 11-point Times New Roman font with heading and subheadings in 14-point Times New Roman font. An annotated consent document was derived by observing which parts of the printed consent the research coordinator verbally described across all of the simulated consents. A readability analysis of the annotated consent document suggested that it was written at a 7.8 grade level using Microsoft Word's (2003) Flesch-Kincaid grading formula [http://www.readabilityformulas.com]. The document consisted of 1926 words, with 94 sentences and 20.4 words per sentence. Finally, a second annotated consent document was created to serve as the script for the computer-based prototype. The spoken audio in the prototype represented the consent document, while certain points were textually reiterated on the kiosk screen. Analysis of this consent suggested that the document was written at a 7.6 grade level using Microsoft Word's (2003) Flesch-Kincaid grading formula [http://www.readabilityformulas.com]. The document consisted of 2162 words, 166 paragraphs and 12.2 words per sentence (See Table 4).

DISCUSSION

This study examined several components of the design and format of informed consent documents for genetic bio-banking studies: community involvement, readability levels, computer-based delivery, and understanding. Many studies have focused on one or more of these components, but the majority of consent studies are based on clinical research, not genetic research. Studies in Kenya [Marsh et al., 2010; Molyneux et al., 2005] and other developing countries have examined community involvement and informed consent in light of cultural and environmental differences. Other studies have compared different delivery models, including electronic, video-based, or computer-based consents [Bickmore et al., 2009; Issa et al., 2006; Campbell et al., 2004; Beskow et al., 2010]. Frazier et al. [2006] studied the understanding of genetic consents by older adults. The approach in that study was novel in that it did not simply replace the human component of delivering the consent, but rather complemented it.

In another study, Campbell et al. [2004] found that clinicians still play an important role in verifying the participant's understanding during the consent process. In our study, the combined computer/human delivery of the consent provided the best of both worlds. The computer-based consent was standardized and consistently delivered. Participants could complete it at their own pace and could access additional detailed information at the click of a button when desired. Delivery of the consent by the research coordinator alone could not provide this standardization and flexibility. However, the research coordinator could answer questions or address any weak areas of understanding before, during, and after the consent process. The coordinator was also very important in introducing participants to the touch screen interface and quickly acclimating them to it. The computer-based consent process was simple and user-friendly for participants, even those with very low computer literacy. Even though the usability test participants were working on a relatively rough prototype, they were all able to easily complete the computer-based consent and answer all of the comprehension check questions correctly. This may have been due to the interactivity of the touch screen consent process. Campbell et al. [2004] also found that consents employing straight video did not work as well, especially with lower-literacy participants. They speculated that this may have been due to the passive nature of the participant's role in the consent process. Another reason for the success of the touch screen in our study may have been the up-front involvement of the community on several levels. The community advisory board, through focus groups, had an initial role in steering the early format and design of the consent. Potential representative participants from the community then completed the touch screen consent, and their feedback was incorporated into the design as well.

Limitations of our study include the limited generalizability of findings based on a small sample; however, the sample size is large enough for a proof-of-concept study. The study also did not control for reading skill or medical literacy. Some of the participants in the usability testing may have previously completed the paper-based consent for the PMRP study, which could have biased the results. If so, the participant most likely completed the paper-based consent far in advance of taking part in this study, and the consent form used in this study was a newer version. In addition, two of the participants were involved in both the simulated consent process and the usability testing, which may have familiarized them with the consent, although the two methods were spaced over a year apart. Using the Flesch-Kincaid formula to compare the reading difficulty of a printed consent form with that of a verbal consent script may also be a limitation. However, the goal was to obtain only a very basic estimate of the grade level across consent processes.

CONCLUSIONS

This study demonstrates the benefits of combining multiple methods of community involvement when designing effective consents for genetic-based research. This is important because the number of studies involving genetic data is growing rapidly, and little research has been done on the informed consent process in this area. These study findings could also translate to the informed consent process for other types of research. The computer-based consent is currently being used to consent PMRP participants on a touch screen device (Figure 3).

Figure 3. Computer-based consent on touch screen kiosk.

User demos one of the computer-based consent devices currently being used in the field.

Information collected through the computer-based consent, particularly answers to the comprehension questions, will allow future analysis of the quality of answers given by larger numbers of actual participants. Future work will involve contacting participants at a later date to measure whether they have retained their understanding of what they consented to.

ACKNOWLEDGEMENTS

This study was funded by a grant from the NIH National Human Genome Research Institute as a part of the eMERGE project, grant number 5 U01 U01HG04608. The authors would also like to thank the PMRP Community Advisory Board (CAB), the Ethics and Security Advisory Board (ESAB), and the community members who participated in the usability testing of the PMRP computer-based consent prototype.

Footnotes

Conflict of Interest: None of the authors have competing interests.

AUTHORS' CONTRIBUTIONS

CAM was the principal investigator for the study, conceived of the study, participated in the study design, and helped to draft and complete the manuscript. ANM participated in the study design, literature review, data collection, and analysis and wrote the manuscript. JMP participated in the study design, literature review, data collection, and analysis and helped to draft the manuscript. DGH participated in the design and programming of the computer-based consent. NSP participated in the design and programming of the computer-based consent. WSF conducted the simulated consents, contributed to the interface design, and helped to draft the manuscript. CJW participated in the simulated consents, contributed to the interface design, and helped to comment on and complete the manuscript. LVR participated in the design and programming of the computer-based consent and helped to draft the manuscript. VDM was the project manager. All authors have read and given final approval of the manuscript as submitted.

REFERENCES

- Beskow LM, Friedman JY, Hardy NC, Lin L, Weinfurt KP. Developing a simplified consent form for biobanking. PLoS One. 2010;5:e13302. doi: 10.1371/journal.pone.0013302.

- Bickmore TW, Pfeifer LM, Paasche-Orlow MK. Using computer agents to explain medical documents to patients with low health literacy. Patient Educ Couns. 2009;75:315–320. doi: 10.1016/j.pec.2009.02.007.

- Brooke J. SUS: A “quick and dirty” usability scale. In: Jordan PW, Thomas B, Weerdmeester BA, McClelland IL, editors. Usability Evaluation in Industry. Taylor & Francis; London: 1996. pp. 189–194.

- Campbell FA, Goldman BD, Boccia ML, Skinner M. The effect of format modifications and reading comprehension on recall of informed consent information by low-income parents: a comparison of print, video, and computer-based presentations. Patient Educ Couns. 2004;53:205–216. doi: 10.1016/S0738-3991(03)00162-9.

- Frazier L, Calvin AO, Mudd GT, Cohen MZ. Understanding of genetics among older adults. J Nurs Scholarsh. 2006;38:126–132. doi: 10.1111/j.1547-5069.2006.00089.x.

- Issa MM, Setzer E, Charaf C, Webb AL, Derico R, Kimberl IJ, Fink AS. Informed versus uninformed consent for prostate surgery: the value of electronic consents. J Urol. 2006;176:694–699. doi: 10.1016/j.juro.2006.03.037.

- Jefford M, Moore R. Improvement of informed consent and the quality of consent documents. Lancet Oncol. 2008;9:485–493. doi: 10.1016/S1470-2045(08)70128-1.

- Jimison HB, Sher PP, Appleyard R, LeVernois Y. The use of multimedia in the informed consent process. J Am Med Inform Assoc. 1998;5:245–256. doi: 10.1136/jamia.1998.0050245.

- Kahn MJ, Prail A. Formal usability inspections. In: Nielsen J, Mack RL, editors. Usability Inspection Methods. John Wiley & Sons, Inc.; New York: 1994. pp. 141–171.

- Marsh VM, Kamuya DM, Mlamba AM, Williams TN, Molyneux SS. Experiences with community engagement and informed consent in a genetic cohort study of severe childhood diseases in Kenya. BMC Med Ethics. 2010;11:13. doi: 10.1186/1472-6939-11-13.

- McCarty C, Chisholm R, Chute C, Kullo I, Jarvik G, Larson E, Li R, Masys D, Ritchie M, Roden D, Struewing JP, Wolf WA, eMERGE Team. The eMERGE Network: A consortium of biorepositories linked to electronic medical records data for conducting genomic studies. BMC Med Genomics. 2011;4:13. doi: 10.1186/1755-8794-4-13.

- McCarty C, Nair A, Austin DM, Giampietro PF. Informed consent and subject motivation to participate in a large, population-based genomics study; the Marshfield Clinic Personalized Medicine Research Project. Community Genet. 2007;10:2–9. doi: 10.1159/000096274.

- McCarty CA, Chapman-Stone D, Derfus T, Giampietro PF, Fost N, Marshfield Clinic PMRP Community Advisory Group. Community consultation and communication for a population-based DNA biobank: the Marshfield clinic personalized medicine research project. Am J Med Genet A. 2008a;146A:3026–3033. doi: 10.1002/ajmg.a.32559.

- McCarty CA, Peissig P, Caldwell MD, Wilke RA. The Marshfield Clinic Personalized Medicine Research Project (PMRP): 2008 scientific update and lessons learned in the first 6 years. Personalized Medicine. 2008b;5:529–541. doi: 10.2217/17410541.5.5.529.

- McCarty CA, Wilke RA, Giampietro PF, Wesbrook SD, Caldwell MD. Marshfield Clinic Personalized Medicine Research Project (PMRP): design, methods and recruitment for a large population-based biobank. Personalized Medicine. 2005;2:49–79. doi: 10.1517/17410541.2.1.49.

- Molyneux CS, Wassenaar DR, Peshu N, Marsh K. 'Even if they ask you to stand by a tree all day, you will have to do it (laughter)...!': Community voices on the notion and practice of informed consent for biomedical research in developing countries. Soc Sci Med. 2005;61:443–454. doi: 10.1016/j.socscimed.2004.12.003.

- Plasek JM, Pieczkiewicz DS, Mahnke AN, McCarty CA, Starren JB, Westra BL. The role of nonverbal and verbal communication in a multimedia informed consent process. Applied Clinical Informatics. 2011;2:240–249. doi: 10.4338/ACI-2011-02-RA-0016.

- The Flesch Grade Level Readability Formula. Available at: http://www.readabilityformulas.com/flesch-grade-level-readability-formula.php. Accessed February 3, 2012.

- Tullis T, Albert B. Measuring the User Experience: Collecting, Analyzing, and Presenting Usability Metrics. Elsevier/Morgan Kaufmann; Amsterdam: 2008. Self-Reported Metrics. pp. 123–166.
