Abstract

Background

Counterfactual thinking involves mentally simulating alternatives to reality. The current article reviews literature pertaining to the relevance counterfactual thinking has for the quality of medical decision making. Although earlier counterfactual thought research concluded that counterfactuals have important benefits for the individual, there are reasons to believe that counterfactual thinking is also associated with dysfunctional consequences. Of particular focus is whether or not medical experience, and its influence on counterfactual thinking, actually informs or improves medical practice. It is hypothesized that relatively more probable decision alternatives, followed by undesirable outcomes and counterfactual thought responses, can be abandoned for relatively less probable decision alternatives.

Design and Methods

Building on earlier research demonstrating that counterfactual thinking can impede memory and learning in a decision paradigm with undergraduate students, the current study examines the extent to which earlier findings can be generalized to practicing physicians (N=10). Participants were asked to complete 60 trials of a computerized Monty Hall Problem simulation. Learning by experience was operationalized as the frequency of switch-decisions.

Results

Although some learning was evidenced by a general increase in switch-decision frequency across blocks of trials, the extent of learning was neither ideal nor practically meaningful.

Conclusions

A simple, multiple-trial, decision paradigm demonstrated that doctors fail to learn basic decision-outcome associations through experience. An agenda for future research, which tests the functionality of reference points (other than counterfactual alternatives) for the purposes of medical decision making, is proposed.

Significance for public health.

The quality of healthcare depends heavily on the judgments and decisions made by doctors and other medical professionals. Findings from this research indicate that doctors fail to learn basic decision-outcome associations through experience, as evidenced by the sample’s tendency to select the optimal decision strategy in only 50% of 60 trials (each of which was followed by veridical feedback). These findings suggest that professional experience is unlikely to enhance the quality of medical decision making. Thus, this research has implications for understanding how doctors’ reactions to medical outcomes shape their judgments and affect the degree to which their future treatment intentions are consistent with clinical practice guidelines. The current research is integrated with earlier research on counterfactual thinking, which appears to be a primary factor inhibiting the learning of decision-outcome associations. An agenda for future research is proposed.

Key words: counterfactual thinking, medical errors, medical reasoning

Introduction

A doctor must decide upon one of two treatments (A or B) for a patient with a serious disease. The doctor decides on Treatment A and, unfortunately, the patient dies. To the extent that Treatment B was previously considered and feasible, the doctor will mentally simulate what might have been had he/she selected Treatment B. In fact, the more likely the doctor was to actually select Treatment B, the more extreme the affective reaction he/she will experience in response to the unfortunate outcome.1 This type of mental simulation is known in philosophy and psychology as counterfactual thinking,2,3 because it involves mentally simulating alternatives to reality. Thus, a doctor may mentally change the perceived antecedents of an outcome and mentally play out the consequences once the facts are known.

Counterfactual thoughts tend to take one of two directions.4 Because people often accept desirable decisions and outcomes at face value,5 they rarely engage in downward counterfactual thinking, that is, mental simulation of alternatives that are worse than reality. Thus, the large majority of counterfactual thoughts are upward: people mentally simulate alternatives that are better than reality in response to undesirable outcomes,6 unexpected outcomes,3 and perceptually abnormal events.7 Importantly, downward and upward counterfactuals tend to be triggered by different types of events and serve different purposes. Downward counterfactuals tend to follow close calls or relatively satisfying outcomes, lead to relatively positive affect, and theoretically provide behavioural prescriptions for how one might prevent unsatisfying outcomes in the future. Upward counterfactuals tend to follow relatively unsatisfying outcomes, lead to relatively negative affect, and theoretically provide behavioural prescriptions for how one might promote satisfying outcomes in the future. Although other important distinctions have been made in the counterfactual thinking literature,8-11 they are beyond the scope of the current analysis.

A critical aspect of counterfactual thoughts is their tendency to serve as important reference points and standards of comparison for social perception and judgment. It has long been known that mental comparison cases directly shape affective, cognitive, and behavioural reactions to events.12-14 As suggested by general judgment and decision making research on mentally simulated and forgone alternatives,15,16 the use of counterfactuals as reference points in judging the quality and direction of medical practice is ubiquitous.

Consequences of counterfactual thinking

The influence and consequences of upward counterfactual thoughts are well documented, and include affective reactions,4,17 judgments of blame and responsibility,18 victim compensation,19 experienced regret/perceived regret,2,12,20 and judgments of causality.7 Many empirical approaches to counterfactual thinking advocate for the position that counterfactuals are functional.4,9,10,21,22 The crux of the argument is that counterfactuals are essential to subsequent decision making and planning because they offer important behavioural prescriptions for learning, behaviour modification, and future decisions. With regard to counterfactual thinking’s place in medicine, Höfler argued that counterfactual thinking is important to causal reasoning,23 as it serves as the basis of causal thinking in epidemiology. Furthermore, Höfler contended that counterfactuals provide a framework for many statistical procedures, enabling medical professionals to estimate causal effects, and that the counterfactual approach is useful for teaching purposes.

However, here it is argued that counterfactual thinking can have undesirable (dysfunctional) as well as desirable (functional) consequences. In fact, recent research links counterfactual thinking to distortions of reality in memory,24 increased risk-taking/gambling,6 and hindsight bias.6,25 Counterfactual thinking also appears to be a precursor to outcome bias,16 whereby people judge the quality of a decision on the basis of its associated outcome rather than on its prior probability.

More important to the current analysis is the possibility that counterfactual thinking negatively influences causal reasoning and learning. Earlier research on counterfactual thinking showed that counterfactuals greatly influence causal ascriptions.7 However, the work of Mandel and Lehman conclusively demonstrates that when people generate counterfactuals they tend to think of ways that an undesirable outcome may have been prevented.26 Only when people engage in direct causal reasoning (i.e., considering the causes of such outcomes rather than focusing on what might have been) do they appear to generate antecedents that actually covary with the outcomes in question. Thus, counterfactuals may covary with the perceived causes of outcomes, but they do not necessarily lead the social perceiver to generate the correct causes of outcomes. Similarly, statisticians, such as Dawid,27 have argued that the counterfactual concept is unable to solve the fundamental problems of causal inference in the first place because any particular individual, at a fixed time, can observe an outcome only under a single condition.

In any case, we know that counterfactual thinking and highly salient and cognitively available representations of undesirable outcomes influence both causal reasoning and decision making in medicine. A doctor’s last undesirable experience with a patient can be the most influential factor affecting his/her actions and decisions in the next similar case that he/she faces.28,29

Perhaps the most devastating consequence of counterfactual thinking in medical practice is when it serves as the catalyst for changing otherwise optimal decision strategies to suboptimal ones. It is clear that good decisions, as defined by their associations with highly probable, desirable outcomes, sometimes result in undesirable outcomes. It is also clear that bad decisions, as defined by their associations with highly probable, undesirable outcomes, sometimes result in desirable outcomes. However, people possess an outcome bias when they judge the quality of decisions. That is, even when they know that a decision alternative is the most favourable, given its associated prior probability of success, people cannot help but allow the outcome to influence their perceived quality of the decision; and there appears to be nothing special about the medical profession that immunizes medical professionals from this apparent pitfall in judgment. A critical component of this bias is the consideration that a more desirable outcome could have or would have occurred if another decision alternative had been selected. The easier it is for people to imagine those alternatives, the greater their affective reaction to reality, and the more likely they are to follow the prescriptions of their counterfactuals in the future.

Research conducted by Ratner and Herbst demonstrates that good decisions can also be overwhelmed by the negative emotional reactions associated with undesirable outcomes that people can easily mentally undo.30 Even when people recall that a particular decision alternative was more successful than other decision alternatives in the past, they will often abandon such decision alternatives if they focus on their affective reactions to similar previous events.

In medicine, as with many other scientifically-based practices, a priori probabilities are rarely certain. This is why one of the biggest influences on medical decision making is one’s professional experience (despite the existence of empirically-based clinical practice guidelines).31,32 The focus of the current analysis is to examine the role of counterfactual thinking in medical decision making and experiential learning. Of particular interest is whether counterfactual thinking aids or impedes learning in medical practice. Earlier research on the impact of counterfactual thinking suggests that counterfactuals enhance learning.21,33 However, such demonstrations failed to consider the impact of counterfactuals on memory and perceived skill level. On the basis of research distinguishing counterfactual content for the self and others,34 metacognitive findings suggesting that people are inaccurate in their self-appraisals,35 and the link between hindsight bias and counterfactual thinking,6,25 Petrocelli et al.36 hypothesized that counterfactuals can inhibit improvements in academic performance by providing a false sense of competence. Their studies showed that studying behaviour and improvement on standardized exam items were inhibited by spontaneous counterfactual thought responses. When Petrocelli et al. manipulated the salience of counterfactual thinking, they found that the negative relationship between counterfactual thought frequency and exam improvement was mediated by studying behaviour. Furthermore, perceived skill mediated the link between counterfactual thinking and studying behaviour. 
Thus, consistent with earlier notions that people discontinue practice when they believe they have mastered a body of knowledge,35 Petrocelli et al.’s participants failed to practice academic material when they overestimated their abilities, and their perceived abilities were partially a function of their tendency to explain away undesirable outcomes with counterfactual thoughts.

The research conducted by Petrocelli et al.36 involved conscious and deliberate decision making. However, the link between counterfactual thinking and decision making can also be mediated by learning inhibition. Another set of studies, conducted by Petrocelli et al.,37 supports this claim. In their paradigm, participants made decisions to buy one of two stocks across multiple trials (i.e., sequential years) after observing value-by-month graphs. As participants completed subsequent trials (i.e., year to year), the better of the two stocks simply alternated, creating a simple concept rule to be learned (i.e., A, B, A, B, A, B...). Interestingly, the majority of Petrocelli et al.’s participants failed to learn this concept rule after 30 consecutive trials. Consistent with their hypothesis, learning was less likely to occur as the frequency of counterfactualized trials increased. Furthermore, the relationship between counterfactual thinking and learning was mediated by the degree to which participants had overestimated their recent performance. The experimenters concluded that upward counterfactuals can inhibit learning in one or both of two ways. First, focusing on alternative decisions, outcomes, or both can distort the feedback process. Rather than encoding and retrieving reality (i.e., the actual decisions and outcomes), participants may have recalled an alternative decision-outcome event to the extent that they made losing decisions. For instance, if a participant lost the third trial but counterfactualized it away, he/she may be more likely to recall the following outcomes: A, B, B, B, A, B...; and less likely to learn the actual pattern. A second possibility also implicates memory distortions via counterfactuals: participants who counterfactualized losing trials were more likely to overestimate their performance and less likely to learn the more distal pattern that was emerging.
Feeling that one is performing better than one actually is might reduce one’s motivation, or perceived need, to improve outcomes by testing other strategies.
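The first mechanism can be made concrete with the sequences from the stock example. The short Python sketch below is only an illustration, not the analysis used by Petrocelli et al.; it assumes, hypothetically, that a learner evaluates the alternation rule by checking how often the better stock changed between consecutive remembered trials. A single counterfactualized trial is enough to make the remembered sequence violate the rule.

```python
def alternation_rate(seq):
    """Proportion of consecutive trials on which the better stock changed."""
    changes = [a != b for a, b in zip(seq, seq[1:])]
    return sum(changes) / len(changes)


def follows_alternation(seq):
    """True only if the better stock changed on every consecutive trial."""
    return alternation_rate(seq) == 1.0


actual = ["A", "B", "A", "B", "A", "B"]    # true pattern of the better stock
recalled = ["A", "B", "B", "B", "A", "B"]  # trial 3 misremembered after a loss

print(follows_alternation(actual), alternation_rate(actual))      # True 1.0
print(follows_alternation(recalled), alternation_rate(recalled))  # False 0.6
```

In the remembered sequence, only three of five transitions alternate, so the learner has weaker evidence for the rule than the actual outcomes provide.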

Counterfactual thinking and the Monty Hall problem

Because the demonstrations of dysfunctional counterfactual thinking cited above were derived within relatively complex systems, they do not rule out alternative explanations. Petrocelli and Harris sought to examine the possibility that counterfactuals inhibit experiential learning within a very simple decision paradigm (i.e., the Monty Hall three doors paradigm).38 This research asked two basic questions: i) do people learn optimal decision strategies from their experiences? and ii) what role does counterfactual thinking play in learning or not learning these strategies?

The current research and that of Petrocelli and Harris employed the Monty Hall Problem (MHP) to investigate these questions. The MHP is a two-stage decision problem popularized by the game show Let’s Make a Deal. In the classic version, an MHP contestant is presented with three doors, one of which conceals a prize and two of which conceal something relatively undesirable, such as a goat. Importantly, the prize and goats do not change positions once they have been assigned to a door, and Monty (the host) knows which door conceals the prize. The contestant selects a door, and Monty always opens an unselected door that does not reveal the prize. The contestant is then faced with the decision to stick with his/her initial door or to switch to the other remaining door. Intuitively, the decision to stick or switch appears to be arbitrary, as the probability of winning the prize appears to be 0.50 for both options. However, this assumption is mathematically incorrect. The probability of winning an MHP trial with the stick-decision is the same as when the contestant first begins (i.e., 0.33), and the probability of winning with the switch-decision is 0.67. Many people fail to see the advantage of uniform switching in the MHP and resist most explanations supporting it.39 In fact, the probability of winning the prize through switching increases with the number of doors: it equals 1 minus the probability of selecting the winning door with the first guess (i.e., 1 − 1/n for n doors, provided Monty opens all but one of the unselected doors). For example, if Monty presented 10 doors (one prize and nine goats), and revealed eight goats after the initial door was selected, the respective probabilities of a win with the stick- and switch-decisions would be 0.10 and 0.90.
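These probabilities can be checked by exhaustive enumeration. The Python sketch below is an illustration rather than code from the study; it assumes the classic rules described above (Monty knows the prize location, opens only goat doors the contestant did not pick, and leaves exactly one other door closed) and that the prize location and first pick are independent and uniformly random. Because Monty’s behaviour is then forced, each strategy’s outcome depends only on whether the first pick was correct.

```python
from fractions import Fraction


def mhp_win_probabilities(n_doors=3):
    """Exact win probabilities (stick, switch) for an n-door Monty Hall game.

    Assumes the host knows where the prize is, opens n_doors - 2 goat doors the
    contestant did not pick, and leaves one other door closed.
    """
    stick_wins = switch_wins = 0
    # Every (prize, first pick) pair is equally likely.
    for prize in range(n_doors):
        for pick in range(n_doors):
            if pick == prize:
                stick_wins += 1   # the other closed door must hide a goat
            else:
                switch_wins += 1  # the host must leave the prize door closed
    total = n_doors * n_doors
    return Fraction(stick_wins, total), Fraction(switch_wins, total)


for n in (3, 10):
    stick, switch = mhp_win_probabilities(n)
    print(n, stick, switch)  # prints "3 1/3 2/3", then "10 1/10 9/10"
```

Exact fractions are used instead of floats so that the results match 1/n and 1 − 1/n without rounding.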

The MHP is an ideal task for studying the link between counterfactual thinking and learning because learning can be easily operationalized as a decision maker’s switch-decision frequency.40-42 Is it possible for people to learn the MHP solution by repeatedly playing the game? After repeated trials, one might expect people to learn (at least implicitly) the associations between switching and winning and between sticking and losing. After all, there are only three doors, two possible strategies, and two possible outcomes, and on average players will win twice as many trials with the switch-decision as with the stick-decision.
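The cost of not switching can be expressed as expected wins. The following minimal Python sketch assumes the three-door probabilities (1/3 for sticking, 2/3 for switching) and a hypothetical player who switches on a fixed proportion p of trials; over 60 trials the expected number of wins is then 60(1/3 + p/3), or 20 + 20p.

```python
from fractions import Fraction

P_WIN_STICK = Fraction(1, 3)   # three-door MHP, stick-decision
P_WIN_SWITCH = Fraction(2, 3)  # three-door MHP, switch-decision


def expected_wins(n_trials, switch_proportion):
    """Expected wins for a player who switches on a fixed share of trials."""
    p = Fraction(switch_proportion)
    return n_trials * (p * P_WIN_SWITCH + (1 - p) * P_WIN_STICK)


for p in (0, 0.5, 1):
    print(p, expected_wins(60, p))  # prints "0 20", "0.5 30", "1 40"
```

Under these assumptions, a player who switches on half of 60 trials can expect about 10 fewer wins than one who always switches.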

Granberg and Brown’s participants played 50 trials of a computer-simulated MHP.40 Switch-decision frequency reached approximately 50% in the final block of 10 trials. In two variations, the incentive to switch was increased (i.e., one point awarded for stick-wins and two points for switch-wins, or one point for stick-wins and four points for switch-wins); nonetheless, participants switched in only 63% and 85% of the final 10 trials, respectively. Their participants also tended to believe that success in the MHP was a matter of luck rather than control when no extra incentive to switch was offered, and rated it only slightly above the mid-point of a luck-control response item when the incentive was offered. Furthermore, Gilovich, Medvec, and Chen demonstrated that people are typically more motivated to reduce cognitive dissonance following a switch-loss than a stick-loss.43
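The size of these incentives can be quantified in expectation. The Python sketch below assumes the standard three-door probabilities and compares a player who always sticks with one who always switches under each point scheme. The function name is hypothetical; the point values are those of the Granberg and Brown variations described above.

```python
from fractions import Fraction

P_WIN_STICK = Fraction(1, 3)   # three-door MHP, stick-decision
P_WIN_SWITCH = Fraction(2, 3)  # three-door MHP, switch-decision


def expected_points_per_trial(stick_points, switch_points):
    """Expected points per trial for always sticking vs always switching."""
    return P_WIN_STICK * stick_points, P_WIN_SWITCH * switch_points


for switch_points in (1, 2, 4):
    stick, switch = expected_points_per_trial(1, switch_points)
    print(switch_points, stick, switch, switch / stick)
# prints "1 1/3 2/3 2", "2 1/3 4/3 4", "4 1/3 8/3 8"
```

Under the two-point and four-point schemes, always switching earns four and eight times as many expected points as always sticking.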

Petrocelli and Harris proposed that mentally simulating alternatives to reality (i.e., counterfactual thoughts),38 particularly in response to switch-losses, inhibits learning and increases the likelihood of decision makers irrationally committing to a losing strategy. They also proposed that a biased memory process mediates this relationship. In Study 1, participants were asked to complete 60 computerized trials of the MHP and to list their thought-response after each trial. Counterfactuals were clearly involved as thought responses to MHP trials. Similar to previous research, the average switch-decision percentage per 10-trial block peaked at about 38%. In Study 2, Petrocelli and Harris directly manipulated the salience of counterfactual alternatives by supplying half of their participants with counterfactual statements; the other half of their sample received no such statements. The average switch-decision percentage per 10-trial block peaked at about 40% and 55%, respectively. More importantly, Petrocelli and Harris found the counterfactual-learning inhibition link to be mediated by a memory distortion biased against the switch-decision.

The findings of the Petrocelli and Harris studies suggest that upward counterfactual thinking inhibits associative learning in the MHP by creating a false association between switching and losing. That is, Monty Hall contestants focus on an alternative strategy (i.e., sticking) following switch-losses (e.g., If only I hadn’t switched) to a greater extent than they do following stick-losses. Furthermore, people are more likely to overestimate their switch-losses than they are their stick-losses. In other words, the thought-processing that transpires once the outcome in the MHP is known may lead to associative illusions in memory. Particularly, rather than associating the switch-decision with winning and the stick-decision with losing, the incorrect assumption of equal win-probabilities may be maintained.

Is there anything unique about medical professionals that guards them against the dysfunction of counterfactual thinking as it pertains to learning from their professional experiences? If doctors are well-positioned to learn from experience in something as simple as the MHP, surely they would be well-positioned to learn from their experiences in their own specialty. This was the focus of the current study.

Current study

Practicing physicians were recruited to complete 60 computerized trials of the MHP. Similar to procedures of the Petrocelli and Harris studies, learning was operationalized as a decision maker’s switch-decision frequency. If experience is sufficient for learning the association between switch-decisions and winning, as well as stick-decisions and losing, switch-decision frequency should naturally increase as participants progress through the trials.

Design and Methods

Participants and procedure

Practicing physicians (N=10, seven males) in the state of North Carolina, USA, were recruited to participate in the current study. Participants reported an average age of 43.50 years and an average of 15.00 (SD=5.44) years of post-medical school practice. Two doctors specialized in internal medicine, six in family and general practice, and two in paediatrics.

Participants were met individually in their places of work, received a brief oral introduction to the study and were provided with a laptop computer. All study materials were presented using MediaLab v2012 research software.44 The study was described as an examination of how people make decisions. The instructions of the study were self-paced, and participants advanced the instructions by pressing the space bar or a response key. Participants were asked to complete 60 computerized trials of the MHP, and instructed to win as many trials as possible.

Monty Hall problem

Participants were introduced to the MHP, and were informed that they were to play 60 trials of a computerized version of the game-show popularly known as Let’s Make a Deal. They then read the following description: in this game, Monty Hall, a thoroughly honest game-show host, has placed money behind one of three doors. There is a goat behind each of the other doors. Monty will ask you to pick a door. Then he will open one of the other doors (one you did not pick). Then, Monty will ask you to make a final choice between the remaining doors, and you will win whatever is behind the door you select. Monty always knows where the money is and does not change its location once you begin a trial. Try to win as much money as possible.

During game-play, the trial number was displayed at the top of the screen-frame. For each trial, the location of the money was randomized by the computer software. At the beginning of each trial, three closed doors were presented, as well as three response buttons labelled 1, 2, and 3. After participants selected their initial door, the following screen-frame read: You picked Door X. Let’s see what is behind one of the doors you did not pick. Importantly, when Monty could open either of two doors (i.e., when the money was behind the initially selected door), one of the two goat-concealing doors was selected at random and opened. The next screen-frame read: Behind Door Z, a door you did not pick, is a goat. You picked Door X. You now have the option to stay with your original door, Door X, or you may switch to the other remaining door. It’s up to you. What would you like to do? Participants made their decision by clicking one of two response buttons labelled Stick and Switch. Each trial concluded with the following message: Your final choice is Door X [Y]. The money is behind Door X [Y]. You Win [Lose] this trial.
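The trial procedure can be sketched as a small simulation. The Python code below is not the MediaLab implementation used in the study. The function names are hypothetical, and the initial pick is drawn at random purely for illustration. It follows the rules described above, including the random choice between two goat doors when the initial pick conceals the money, and estimates win rates for a participant who always sticks or always switches. These should fall close to 1/3 and 2/3.

```python
import random


def play_trial(strategy, rng):
    """Play one three-door trial; return True if the final door hides the money.

    strategy: "stick" or "switch" (a fixed strategy, for illustration only).
    """
    if strategy not in ("stick", "switch"):
        raise ValueError("strategy must be 'stick' or 'switch'")
    doors = [1, 2, 3]
    prize = rng.choice(doors)  # money location randomized for each trial
    pick = rng.choice(doors)   # hypothetical participant picks at random
    # Monty opens a goat door the participant did not pick. When the pick hides
    # the money, two goat doors qualify and one is chosen at random.
    goat_doors = [d for d in doors if d != pick and d != prize]
    opened = rng.choice(goat_doors)
    if strategy == "switch":
        final = next(d for d in doors if d not in (pick, opened))
    else:
        final = pick
    return final == prize


def win_rate(strategy, n_trials=100_000, seed=1):
    """Estimate the win probability of a fixed strategy by simulation."""
    rng = random.Random(seed)
    wins = sum(play_trial(strategy, rng) for _ in range(n_trials))
    return wins / n_trials


print(win_rate("stick"), win_rate("switch"))
```

A seeded `random.Random` instance is used so that repeated runs give identical estimates.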

On average, the entire session was completed in approximately 15 minutes. At the conclusion of each session, participants were debriefed and thanked for their time.

Results

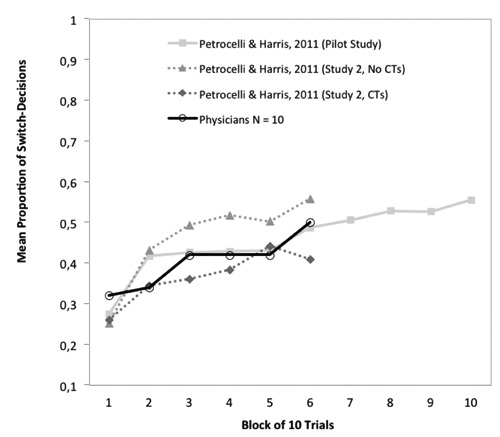

For each participant, the proportion of switch-decisions for each block of 10 trials was calculated. These data are displayed in Figure 1 along with data reported by Petrocelli and Harris.38 A repeated measures analysis of variance was employed to analyse differences in proportions across the six blocks of 10 trials. This analysis produced a statistically significant main effect of block, F(5,45)=3.00, P<0.05, η²=0.25, suggesting that some learning did occur during the task. However, a statistically significant difference in switch-decisions appears only when block 2 is compared with block 6, t(45)=2.99, P<0.01. As displayed in Figure 1, the rate of switch-decisions clearly did not exceed that of earlier samples. Furthermore, a one-sample t-test revealed that even the switch-decision proportion for block 6 was not significantly different from the chance-level switch proportion of 0.50, t(9)=-0.22, ns. Thus, although some statistically significant results are suggestive of learning, the degree of learning appears to be practically insignificant.
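The reported effect size is consistent with the F ratio. In a repeated measures design, partial eta squared equals F·df_effect/(F·df_effect + df_error), and F(5,45)=3.00 gives 15/60 = 0.25. The Python sketch below is not the authors’ analysis script. It assumes a one-way repeated measures ANOVA with block as the only within-subjects factor and trial-level decisions coded 1 = switch, 0 = stick, which is a hypothetical coding. It shows how block proportions, the F ratio, partial eta squared, and a one-sample t statistic against 0.50 could be computed.

```python
import math
import statistics


def block_proportions(decisions, block_size=10):
    """Proportion of switch-decisions (coded 1) in each consecutive block.

    decisions: one participant's trial-by-trial choices, 1 = switch, 0 = stick.
    Assumes len(decisions) is a multiple of block_size (60 trials -> 6 blocks).
    """
    return [sum(decisions[i:i + block_size]) / block_size
            for i in range(0, len(decisions), block_size)]


def rm_anova(data):
    """One-way repeated measures ANOVA.

    data[s][c] is the score of subject s in condition (block) c.
    Returns (F, df_effect, df_error, partial_eta_squared).
    """
    n = len(data)     # number of subjects
    k = len(data[0])  # number of conditions (blocks)
    grand = sum(sum(row) for row in data) / (n * k)
    cond_means = [sum(row[c] for row in data) / n for c in range(k)]
    subj_means = [sum(row) / k for row in data]
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_error = ss_total - ss_cond - ss_subj  # condition x subject residual
    df_effect, df_error = k - 1, (k - 1) * (n - 1)
    f = (ss_cond / df_effect) / (ss_error / df_error)
    return f, df_effect, df_error, ss_cond / (ss_cond + ss_error)


def partial_eta_sq_from_f(f, df_effect, df_error):
    """Partial eta squared recovered from a reported F ratio and its df."""
    return f * df_effect / (f * df_effect + df_error)


def one_sample_t(values, mu):
    """One-sample t statistic and df for H0: mean == mu."""
    n = len(values)
    se = statistics.stdev(values) / math.sqrt(n)
    return (statistics.mean(values) - mu) / se, n - 1


# Reported F(5,45)=3.00: 15 / (15 + 45)
print(partial_eta_sq_from_f(3.00, 5, 45))  # 0.25
```

With ten participants and six blocks, `rm_anova` returns df_effect = 5 and df_error = 45, which matches the degrees of freedom reported above. The sketch omits the block 2 versus block 6 contrast and any sphericity correction.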

Figure 1.

Mean proportion of switch-decisions per block of 10 trials. CT = Counterfactual Thoughts

Discussion and Conclusions

The current findings are consistent with earlier research demonstrating that learning is inhibited in a multiple-trial MHP paradigm. Like undergraduate student samples, physicians appear to make the same decision error repeatedly. Although switching wins twice as often as sticking, on average the sample made sub-optimal stick-decisions (a 0.33 probability of winning any particular trial) as often as optimal switch-decisions (a 0.67 probability of winning any particular trial). This tendency was evident even after 60 MHP trials. Although the sample size of the current study was relatively small, the within-subjects nature of the design adds to the statistical power of the analysis. Furthermore, the striking similarity between the current data and those from larger samples suggests that there is little reason to expect larger samples to lead to different conclusions.38

The MHP is mirrored by medical practice when doctors and their patients are faced with the choice between three treatment options, all of which have a low, but equal, probability of success (e.g., 0.33). As in medical practice, where people are motivated to mentally undo undesirable outcomes, every loss is almost a win in the multiple-trial MHP paradigm. This makes the MHP ideal for eliciting counterfactual thoughts. Although counterfactuals clearly complicate learning in the MHP, their dysfunction lies not only in their generation but also in the haste to follow their prescriptions in the future. The multiple-trial MHP paradigm is also ideal as an experimental learning paradigm in that it presents little external information to distract the individual from learning the solution. How quickly the solution is learned depends greatly on accurately encoding reality rather than its alternatives. If suboptimal decision making runs rampant with something as simple as the MHP, how can we expect doctors to make optimal decisions in more complex situations? The data suggest that there is nothing special about professional medical training or experiences that will enhance the learning of doctors in such situations. This is not to suggest that doctors never learn from their experiences; rather, learning via experience appears to be considerably slower than one might hope in contexts that are especially likely to enhance counterfactual thought generation. The current data further suggest that medical decision making should be based on something more than experience alone.

The current data suggest that doctors do not maximize their learning from their experiences the way they should, or at the rate that we might hope that they would. One speculation is that people do learn something from experience, but their learning appears to be inhibited by their endorsement of a priori strategies, followed by confirmation bias45 and other cognitive illusions that justify their continued use. Such speculation is supported by three findings. First, the decision/outcome that occurred the least (and was least likely to occur to begin with) tends to be overrepresented in memory (see Study 2 of Petrocelli and Harris38). This is because switch-losses are counterfactualized away much more frequently than stick-losses. It is more painful to experience failure when that failure follows a change to one’s decision than when it follows no change, and people appear to over-remember these instances. Such findings are in line with people’s tendency to over-recall changes, in comparison to non-changes, made to multiple-choice exam items that are marked incorrect.46

Second, it has been demonstrated that the counterfactual-learning inhibition link is mediated by a memory distortion biased against the switch-decision (see Study 1 and Study 2 of Petrocelli and Harris).

Finally, the multiple-trial MHP paradigm demonstrates that distorted memory for switch-losses encourages a significantly greater preference for the stick-decision, as expressed in both verbal and behavioural measures. Thus, counterfactuals can be correct with respect to proximal events, yet prescribe dysfunctional courses of action for the future.

Counterfactual thinking in medical practice and training

When and where does counterfactual thinking emerge for doctors in their medical practice and training? Consistent with previous research, counterfactual thinking is likely to emerge when doctors experience unexpected or undesirable outcomes, encounter repeatable situations, come close to reaching more desirable outcomes, and feel some degree of subjective control over the situations they encounter. Counterfactuals are likely to emerge in the minds of medical professionals and to be expressed through direct communication among attending and resident physicians, nurses, and all other medical professionals involved in the care of a patient.

Medical training is highly experiential, and a physician’s unique experiences are often the most cognitively available. Consistent with the availability heuristic (i.e., estimating the frequency or likelihood of an event based on the ease with which cases come to mind), some clinical researchers have concluded that what influences clinical choices the most is the physician’s last bad experience.28,29 Thus, it is not hard to imagine how counterfactuals, in response to undesirable medical outcomes, can affect subsequent decision making in similar cases.

An experiential modality of medical training, the morbidity and mortality conference (MMC), also happens to be a breeding ground for counterfactual thinking. MMCs are widespread and serve as an important medium for communication among physicians and for change in medical practice within an institution. They are held in every teaching hospital in the United States. Meetings are held weekly and are often mandatory for resident physicians and full-time faculty. The primary goal of the MMC session is to serve as the main didactic learning session dedicated to developing competency in practice-based learning and improvement and in systems-based practice. It also serves as a key element in promoting quality improvement and patient safety. The objectives of the MMC often include: i) identifying and presenting patient cases involving adverse outcomes; ii) analysing the pertinent facts of a case in a systematic and nonpunitive manner (in order to identify possible contributing or causative factors for the adverse outcome, or possible system-based issues that contributed to the outcome or were sub-optimal in preventing or responding to it); and iii) identifying areas for improvement in care. The typical MMC session includes a case presentation in timeline format, a brief literature review relevant to the case in question, the identification of key issues leading to the undesired outcome, and the identification of workgroups to address the key issues.47,48 Not only are counterfactual thoughts often expressed during the presentation of these cases,6 but attendees of the conference often voice their own counterfactuals, and other attendees often concur. Because consensus often breeds certainty,49 case presenters and attendees may feel fully justified in altering decision strategies for future similar cases based on the counterfactual prescriptions generated during the conference.
The MMC is one way in which medical practice improves in quality and safety. However, it is unlikely that optimal decision strategies are uniformly developed through such a modality.

Medical decision making is greatly shaped by a doctor’s direct and vicarious experiences.23,31,32,50,51 Over years of practice, doctors accumulate an abundance of experiential data, and their unique experiences are what make them specialists. A doctor’s explanations (or causal ascriptions) for medical outcomes inevitably affect his or her decisions about diagnoses and treatments in future cases; each case can serve as an important learning experience.

To what degree do doctors monitor their data accurately and shape their judgments and decisions accordingly? To what degree do preexisting causal hypotheses, confirmation biases, emotions, and the motivation to be correct cloud the data? The current findings, and those of Petrocelli and Harris,38 call the accuracy of such data collection processes into question. Counterfactual thinking appears to be one cognitive activity that can distort both the data collection process and its perceived implications for subsequent decisions. Just as a participant can lose an MHP trial despite switching, doctors may make decisions associated with the highest probability of success (e.g., adhering strictly to clinical practice guidelines) and still see undesirable outcomes for their patients. In such cases, doctors may mentally simulate alternative actions that may or may not prescribe optimal actions for the future.
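The point that a sound strategy can still lose on any single trial can be made concrete with a small simulation. The sketch below is a hypothetical Python illustration, not the MediaLab task used in the study; the names `play_monty_hall` and `win_rate` are invented for this example. It assumes the standard rules, in which the host always opens an unchosen door that hides no prize. Under those rules, always switching wins about two-thirds of the time, which still implies roughly 20 losses in a 60-trial session.

```python
import random


def play_monty_hall(switch: bool, rng: random.Random) -> bool:
    """Play one Monty Hall trial and return True if the final pick wins."""
    doors = [0, 1, 2]
    prize = rng.choice(doors)
    first_pick = rng.choice(doors)
    # The host opens a door that hides no prize and was not picked.
    host_opens = rng.choice([d for d in doors if d not in (first_pick, prize)])
    if switch:
        # Switch to the only door that is neither the first pick nor opened.
        final_pick = next(d for d in doors if d not in (first_pick, host_opens))
    else:
        final_pick = first_pick
    return final_pick == prize


def win_rate(switch: bool, trials: int = 60_000, seed: int = 0) -> float:
    """Estimate the long-run win rate of a fixed stay or switch strategy."""
    rng = random.Random(seed)
    wins = sum(play_monty_hall(switch, rng) for _ in range(trials))
    return wins / trials


if __name__ == "__main__":
    print(f"always switch: {win_rate(switch=True):.3f}")   # close to 2/3
    print(f"always stay:   {win_rate(switch=False):.3f}")  # close to 1/3
    # Expected losses for an always-switch player over 60 trials.
    print(f"expected losses in 60 trials: {60 * (1 - 2 / 3):.0f}")
```

Because each loss is vivid and each win unremarkable, a player (or a physician) who judges the strategy trial by trial rather than by its long-run rate may abandon the better option.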

Research agenda for alternative reference points for medical decision making

The problem with counterfactual thoughts is that they often serve as faulty reference points for judging reality and decisions. Thus, they sometimes lead people to adopt simply incorrect beliefs about the actual causes of events and about ways to prevent undesirable outcomes in the future. Certainly, counterfactuals may be correct and lead to new insights and advances. However, several basic elements appear to be required for any particular counterfactual thought to contribute to improvements in medical decision making: i) a correct causal antecedent, ii) accurate memory for actual occurrences, iii) the ability to change behaviour in the direction of the counterfactual prescription, iv) the motivation to follow the prescription, v) a similar situation encountered in the future, and vi) successfully making the necessary behavioural change.

Because many links in the chain between counterfactual thinking and functional medical decision making can be broken, perhaps medical professionals should use more functional reference points than those afforded by counterfactuals. Each of the alternative reference points proposed below warrants future research attention.
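The cumulative fragility of that chain can be illustrated with simple arithmetic. Purely as a hypothetical, suppose each of the six elements listed above holds independently with probability p_i; both the independence and the value 0.8 are assumptions for illustration, not estimates from any study. Even with each link fairly reliable, all six hold only about a quarter of the time.

```latex
P(\text{all six elements hold}) = \prod_{i=1}^{6} p_i,
\qquad p_i = 0.8 \;\Rightarrow\; 0.8^{6} = 0.262144 \approx 0.26
```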

Consider-alternatives strategy

Doctors are unlikely to stop generating counterfactual thoughts in response to undesirable outcomes, nor should they attempt to. A more functional approach to judging a medical course of action, and to determining whether it should change in the future, begins with considering the possibility that the counterfactual might be wrong. Studies on debiasing suggest that simply considering the opposite, or alternative ways in which an event might have unfolded, can help prevent the tunnel vision that leads to hindsight bias.52-55 Thus, when doctors begin to engage in counterfactual thought experiments, considering multiple upward and downward counterfactuals may help to put the default counterfactual in clearer perspective.

Causal reasoning

Counterfactual thinking is often effortless and occurs automatically when undesirable outcomes are encountered.18 Counterfactualizing an event may clarify ways in which a particular outcome might have been prevented, but it often fails to produce causal ascriptions that actually covary with the outcome in question. Mandel and Lehman showed that when people consider a scenario and are asked to list counterfactuals, they tend to list things that might have prevented the outcome, not necessarily things that covary with it.26 However, when people are asked to list things that might have caused the outcome, they do list things that covary with it. Asking about causes, rather than about how the outcome might have been undone, would therefore seem to lead to more accurate causal ascriptions and more functional prescriptions for future similar cases.
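One simple way to make “covary with the outcome” concrete is the contingency index ΔP, a standard measure from the causal-learning literature rather than a method used by Mandel and Lehman: the probability of the outcome when a candidate factor is present minus the probability when it is absent. The 2x2 counts below are hypothetical, and the function name `delta_p` is invented for this sketch. A factor that features in an “if only” thought can have a ΔP near zero, whereas a factor that genuinely covaries with the outcome does not.

```python
def delta_p(a: int, b: int, c: int, d: int) -> float:
    """Contingency between a candidate factor and an outcome (Delta-P).

    a: factor present, outcome occurred
    b: factor present, outcome did not occur
    c: factor absent, outcome occurred
    d: factor absent, outcome did not occur
    """
    p_outcome_given_factor = a / (a + b)
    p_outcome_given_no_factor = c / (c + d)
    return p_outcome_given_factor - p_outcome_given_no_factor


# Hypothetical case counts, for illustration only.
salient_if_only_factor = delta_p(30, 70, 30, 70)  # outcome rate 30% either way
covarying_factor = delta_p(45, 55, 15, 85)        # 45% vs 15%

print(f"salient 'if only' factor: {salient_if_only_factor:+.2f}")
print(f"covarying factor:         {covarying_factor:+.2f}")
```

The design choice here is deliberately minimal: ΔP ignores base rates of the factor itself and any confounding, so it is a teaching aid for the distinction drawn above, not a causal-inference method.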

Advocacy and communication of the actuarial reasoning approach

The relative merits of clinical versus actuarial reasoning in medical practice remain the subject of ongoing debate.56,57 Although medicine is a science that often entails varying degrees of uncertainty, doctors rarely communicate their work as such to their patients. Changing the way that medical professionals, and the public they serve, think about medicine is perhaps the only way for both to accept that bad outcomes sometimes follow good decision-making strategies. If doctors can change how they and their patients think about medicine (e.g., by thwarting the super-person scheme), they are much more likely to accept some degree of the inevitable fallibility of medical science, and many errors can be reframed as an unfortunate part of the process. Doctors are trained to behave like clinicians, but what might errors look like if they were also trained to behave like statisticians and to communicate this role to their patients? As Groopman argued,29 a healthy perspective on medicine embraces uncertainty: “Paradoxically, taking uncertainty into account can enhance a physician’s therapeutic effectiveness, because it demonstrates his honesty, his willingness to be more engaged with his patients, his commitment to the reality of the situation rather than resorting to evasion, half-truth, and even lies” (p. 155).

Education in statistics and basic judgment and decision making

Education in statistics and in basic judgment and decision making is simply inadequate in medical training. Awareness and recognition of cognitive biases and possible errors in one’s judgments can lead to more functional decision making. For instance, studies reported by Gigerenzer and his colleagues suggest that doctors often apply a substantial degree of guesswork to the diagnostic process rather than calculating Bayesian conditional probabilities.58,59 In one example, Gigerenzer and his colleagues showed that 60% of 160 gynaecologists estimated the chance that a woman has breast cancer, after receiving a positive mammogram, to be 81% or greater. However, the actual chance is only about 10%; doctors appear to neglect the frequency of false-positive results that mammograms produce. To make better estimates of conditional probabilities, Gigerenzer advocates that doctors think as frequentists rather than probabilists. Doctors and patients would seem to make better treatment decisions and fewer errors if they knew that the probability of a serious condition is actually only 0.10 rather than 0.85. This type of knowledge should also help to reduce the likelihood of common errors that come through over-testing, over-medicating, and over-treating in general. Not only do patients deserve to know what a positive or negative test result actually means, but it is critical that physicians know what test results mean as well and are able to communicate this to their patients.
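Gigerenzer’s point about natural frequencies can be shown with a short calculation. The sketch assumes the inputs commonly quoted for his mammography problem (1% prevalence, 90% sensitivity, 9% false-positive rate); these figures are not stated in the text above and should be checked against reference 58. The helper names `ppv_bayes` and `natural_frequencies` are hypothetical. Both routes give a positive predictive value of about 9%, consistent with the roughly 10% figure above; the frequency version simply states it as about 9 of every 98 women who test positive.

```python
# Inputs as commonly quoted for Gigerenzer's mammography problem (assumed).
PREVALENCE = 0.01           # P(cancer)
SENSITIVITY = 0.90          # P(positive | cancer)
FALSE_POSITIVE_RATE = 0.09  # P(positive | no cancer)


def ppv_bayes(prevalence: float, sensitivity: float, fpr: float) -> float:
    """P(cancer | positive) from Bayes' theorem with conditional probabilities."""
    true_pos = prevalence * sensitivity
    false_pos = (1 - prevalence) * fpr
    return true_pos / (true_pos + false_pos)


def natural_frequencies(n: int, prevalence: float, sensitivity: float,
                        fpr: float) -> tuple[int, int]:
    """Counts of true and false positives among n women, rounded to whole people."""
    with_cancer = round(n * prevalence)
    true_pos = round(with_cancer * sensitivity)
    false_pos = round((n - with_cancer) * fpr)
    return true_pos, false_pos


if __name__ == "__main__":
    ppv = ppv_bayes(PREVALENCE, SENSITIVITY, FALSE_POSITIVE_RATE)
    tp, fp = natural_frequencies(1000, PREVALENCE, SENSITIVITY, FALSE_POSITIVE_RATE)
    print(f"Bayes: P(cancer | positive) = {ppv:.1%}")
    print(f"Natural frequencies: {tp} of {tp + fp} positives have cancer")
```

The frequency version avoids multiplying and dividing conditional probabilities; the reader only has to compare two counts, which is the format Gigerenzer recommends for communicating test results.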

Evidence-based medicine and clinical practice guidelines

Because medical decision making is a complex task, physicians also rely on clinical practice guidelines (CPGs), which aim to enhance medical decisions and eliminate unnecessary and unjustified variation in treatment.60 However, conscious non-adherence to CPGs is common among physicians.61-65 Ambivalence toward evidence-based medicine (EBM) and CPGs is widespread in the medical field.66 According to Pelletier,67 20% to 50% of conventional medical care, and almost all of surgery, is not adequately supported by EBM. A systematic review suggests that physicians’ quality of care decreases as they gain experience.68 Some of this variation is explained by the degree to which doctors adhere to CPGs. When CPG-consistent care is followed by poor outcomes, doctors often fail to recognize that good decision strategies, such as adhering to appropriate CPGs, are not always followed by good outcomes, leaving them at risk of switching from an optimal to a suboptimal decision strategy. Determining how physicians process treatment outcomes, as a possible source of non-adherence to CPGs, is crucial, because research suggests that the most serious medical errors in diagnosis and treatment selection result from faulty reasoning.69,70

The current theoretical framework is based on the efficacy of actuarial decision making and the assumption that CPG adherence enhances healthcare. Healthcare service delivery can be improved by elucidating how doctors process medical outcomes and how that processing affects CPG adherence. The obvious motivation to perform well, together with aspects of training and continuing education such as morbidity and mortality conferences, can prompt doctors to engage in counterfactual thinking often, develop confidence in their counterfactual thoughts, and become subject to outcome bias. However, rather than using counterfactual alternatives as reference points to judge the quality of medical decisions and to form prescriptions for the future, doctors may perform better by comparing their decisions to available CPGs.

References

1. Petrocelli JV, Percy EJ, Sherman SJ, Tormala ZL. Counterfactual potency. J Personality Soc Psychol 2011;100:30-46

2. Kahneman D, Tversky A. The simulation heuristic. In: Kahneman D, Slovic P, Tversky A, editors. Judgment under uncertainty: heuristics and biases. New York: Cambridge University Press; 1982. p. 201-8

3. Roese NJ, Olson JM. Counterfactual thinking: a critical overview. In: Roese NJ, Olson JM, editors. What might have been: the social psychology of counterfactual thinking. Hillsdale: Lawrence Erlbaum Associates; 1995. p. 1-55

4. Markman KD, Gavanski I, Sherman SJ, McMullen MN. The mental simulation of better and worse possible worlds. J Exp Soc Psychol 1993;29:87-109

5. Gilovich T. Biased evaluation and persistence in gambling. J Personality Soc Psychol 1983;44:1110-26

6. Petrocelli JV, Sherman SJ. Event detail and confidence in gambling: the role of counterfactual thought reactions. J Exp Soc Psychol 2010;46:61-72

7. Wells GL, Gavanski I. Mental simulation of causality. J Personality Soc Psychol 1989;56:161-9

8. Roese NJ, Olson JM. The structure of counterfactual thought. Personality Soc Psychol Bull 1993;19:312-9

9. Epstude K, Roese NJ. The functional theory of counterfactual thinking. Personality Soc Psychol Rev 2008;12:168-92

10. Markman KD, McMullen MN. A reflection and evaluation model of comparative thinking. Personality Soc Psychol Rev 2003;7:244-67

11. McMullen MN. Affective contrast and assimilation in counterfactual thinking. J Exp Soc Psychol 1997;33:77-100

12. Kahneman D, Miller DT. Norm theory: comparing reality to its alternatives. Psychol Rev 1986;93:136-53

13. Biernat M, Manis M, Nelson TE. Stereotypes and standards of judgment. J Personality Soc Psychol 1991;60:485-99

14. Schwarz N, Bless H. Scandals and the public’s trust in politicians: assimilation and contrast effects. Personality Soc Psychol Bull 1992;18:574-9

15. Roese N. Counterfactual thinking and decision making. Psychonom Bull Rev 1999;6:570-8

16. Baron J, Hershey JC. Outcome bias in decision evaluation. J Personality Soc Psychol 1988;54:569-79

17. Gleicher F, Kost KA, Baker SM, et al. The role of counterfactual thinking in judgments of affect. Personality Soc Psychol Bull 1990;16:284-95

18. Goldinger SD, Kleider HM, Azuma T, Beike DR. Blaming the victim under memory load. Psychologic Sci 2003;14:81-5

19. Miller DT, McFarland C. Counterfactual thinking and victim compensation: a test of norm theory. Personality Soc Psychol Bull 1986;12:513-9

20. Miller DT, Taylor BR. Counterfactual thought, regret, and superstition: how to avoid kicking yourself. In: Roese NJ, Olson JM, editors. What might have been: the social psychology of counterfactual thinking. Hillsdale: Lawrence Erlbaum Associates; 1995. p. 305-31

21. Roese NJ. The functional basis of counterfactual thinking. J Personality Soc Psychol 1994;66:805-18

22. Roese NJ. Counterfactual thinking. Psychologic Bull 1997;121:133-48

23. Höfler M. Causal inference based on counterfactuals. BMC Med Res Methodol 2005;5:1-12

24. Petrocelli JV, Crysel LC. Counterfactual thinking and confidence in blackjack: a test of the counterfactual inflation hypothesis. J Exp Soc Psychol 2009;45:1312-5

25. Roese NJ, Olson JM. Counterfactuals, causal attributions, and the hindsight bias: a conceptual integration. J Exp Soc Psychol 1996;32:197-227

26. Mandel DR, Lehman DR. Counterfactual thinking and ascriptions of cause and preventability. J Personality Soc Psychol 1996;71:450-63

27. Dawid A. Causal thinking without counterfactuals. J Am Statistic Assoc 2000;95:407-24

28. Potchen EJ. Measuring observer performance in chest radiology: some experiences. J Am Coll Radiol 2006;3:423-32

29. Groopman J. How doctors think. New York: Houghton Mifflin Company; 2007

30. Ratner RK, Herbst KC. When good decisions have bad outcomes: the impact of affect on switching behavior. Organ Behav Hum Decis Process 2005;96:23-37

31. Greenwood J. Theoretical approaches to the study of nurses’ clinical reasoning: getting things clear. Contemporary Nurse 1998;7:110-6

32. Round A. Introduction to clinical reasoning. J Evaluat Clin Pract 2001;7:109-17

33. Nasco SA, Marsh KL. Gaining control through counterfactual thinking. Personality Soc Psychol Bull 1999;25:556-68

34. Girotto V, Ferrante D, Pighin S, Gonzalez M. Postdecisional counterfactual thinking by actors and readers. Psychologic Sci 2007;18:510-5

35. Metcalfe J. Cognitive optimism: self-deception or memory-based processing heuristics? Personality Soc Psychol Rev 1998;2:100-10

36. Petrocelli JV, Seta CE, Seta JJ, Prince LB. If only I could stop generating counterfactual thoughts: when counterfactual thinking interferes with academic performance. J Exp Soc Psychol 2012;48:1117-23

37. Petrocelli JV, Seta CE, Seta JJ. Dysfunctional counterfactual thinking: when simulating alternatives to reality impedes experiential learning. Thinking Reasoning 2013;19:205-30

38. Petrocelli JV, Harris AK. Learning inhibition in the Monty Hall Problem: the role of dysfunctional counterfactual prescriptions. Personality Soc Psychol Bull 2011;37:1297-311

39. Krauss S, Wang XT. The psychology of the Monty Hall problem: discovering psychological mechanisms for solving a tenacious brain teaser. J Exp Psychol Gen 2003;132:3-22

40. Granberg D, Brown TA. The Monty Hall dilemma. Personality Soc Psychol Bull 1995;21:711-23

41. Granberg D, Dorr N. Further exploration of two-stage decision making in the Monty Hall dilemma. Am J Psychol 1998;111:561-79

42. Franco-Watkins AM, Derks PL, Dougherty MRP. Reasoning in the Monty Hall problem: examining choice behaviour and probability judgements. Thinking Reasoning 2003;9:67-90

43. Gilovich T, Medvec VH, Chen S. Commission, omission, and dissonance reduction: coping with regret in the Monty Hall problem. Personality Soc Psychol Bull 1995;21:182-90

44. Jarvis WBG. MediaLab v2012 ed. New York: Empirisoft; 2012

45. Klayman J, Ha YW. Confirmation, disconfirmation, and information in hypothesis testing. Psychologic Rev 1987;94:211-28

46. Kruger J, Wirtz D, Miller DT. Counterfactual thinking and the first instinct fallacy. J Personality Soc Psychol 2005;88:725-35

47. Orlander J, Fincke B. Morbidity and mortality conference: a survey of academic internal medicine departments. J Gen Intern Med 2003;18:656-8

48. Orlander J, Barber T, Fincke B. The morbidity and mortality conference: the delicate nature of learning from error. Acad Med 2002;77:1001-6

49. Petrocelli JV, Tormala ZL, Rucker DD. Unpacking attitude certainty: attitude clarity and attitude correctness. J Personality Soc Psychol 2007;92:30-41

50. Montgomery K. How doctors think: clinical judgment and the practice of medicine. New York: Oxford University Press; 2006

51. Del Mar C, Doust J, Glasziou P. Clinical thinking: evidence, communication and decision making. Oxford: Blackwell; 2006

52. Hirt ER, Markman KD. Multiple explanation: a consider-an-alternative strategy for debiasing judgments. J Personality Soc Psychol 1995;69:1069-86

53. Arkes HR. Costs and benefits of judgment errors: implications for debiasing. Psychologic Bull 1991;110:486-98

54. Lord CG, Lepper MR, Preston E. Considering the opposite: a corrective strategy for social judgment. J Personality Soc Psychol 1984;47:1231-43

55. Croskerry P. Cognitive forcing strategies in clinical decision making. Ann Emerg Med 2003;41:110-20

56. Dawes RM, Faust D, Meehl PE. Clinical versus actuarial judgment. Science 1989;243:1668-74

57. Swets JA, Dawes RM, Monahan J. Psychological science can improve diagnostic decisions. Psychologic Sci Publ Interest 2000;1:1-26

58. Gigerenzer G, Gaissmaier W, Kurz-Milcke E, et al. Helping doctors and patients make sense of health statistics. Psychologic Sci Publ Interest 2007;8:53-96

59. Hoffrage U, Gigerenzer G. Using natural frequencies to improve diagnostic inferences. Acad Med 1998;73:538-40

60. Gundersen L. The effect of clinical practice guidelines on variations in care. Ann Intern Med 2000;133:317-8

61. Farr BM. Reasons for noncompliance with infection control guidelines. Infect Control Hosp Epidemiol 2000;21:411-6

62. Mottur-Pilson C, Snow V, Bartlett K. Physician explanations for failing to comply with best practices. Effect Clin Pract 2001;4:207-13

63. Randolph AG. A practical approach to evidence-based medicine: lessons learned from developing ventilator management protocols. Crit Care Clin 2003;19:515-27

64. Rello J, Lorente C, Bodí M, et al. Why do physicians not follow evidence-based guidelines for preventing ventilator-associated pneumonia? A survey based on the opinions of an international panel of intensivists. Chest 2002;122:656-61

65. Webster BS, Courtney TK, Huang YH, et al. Physicians’ initial management of acute low back pain versus evidence-based guidelines. J Gen Intern Med 2005;20:1132-5

66. Tunis S, Hayward R, Wilson M, et al. Internists’ attitudes about clinical practice guidelines. Ann Intern Med 1994;120:956-63

67. Pelletier KR. Conventional and integrative medicine: evidence-based? Sorting fact from fiction. Focus Altern Complement Therap 2003;8:3-6

68. Choudhry NK, Fletcher RH, Soumerai SB. Systematic review: the relationship between clinical experience and quality of health care. Ann Intern Med 2005;142:260-73

69. Bogner MS. Human error in medicine. Florence: Lawrence Erlbaum Associates; 1994

70. Graber M, Franklin N, Gordon R. Diagnostic error in internal medicine. Arch Intern Med 2005;165:1493-9