Abstract

The paper summarises previous theories of accident causation, human error, foresight, resilience and system migration. Five lessons from these theories are used as the foundation for a new model which describes how patient safety emerges in complex systems such as healthcare: the System Evolution, Erosion and Enhancement (SEEE) model. It is concluded that to improve patient safety, healthcare organisations need to understand how system evolution both enhances and erodes patient safety.

Significance for public health

The article identifies lessons from previous theories of human error and accident causation, foresight, resilience engineering and system migration, and introduces a new framework for understanding patient safety in healthcare: the System Evolution, Erosion and Enhancement (SEEE) model. The article is significant for public health because healthcare organisations around the world need to understand how safety evolves and erodes in order to develop and implement interventions that reduce patient harm.

Key words: safety, human error

Background

All too often, the response when a doctor, nurse or allied healthcare professional makes a mistake is to blame and punish. Healthcare professionals who make errors are often viewed as careless and culpable, and are subject to disciplinary action.1

This approach, known as the person-centred approach to human error,1 commonly prevails in healthcare systems around the world. It does not improve patient safety. The person-centred model of human error is linked to two myths that we must eradicate if we want to make healthcare systems safer: the punishment myth and the perfection myth.1 The punishment myth is the belief that if we punish healthcare professionals who make mistakes, they will be more careful in the future and make fewer errors.1 The perfection myth is the belief that if people try hard enough, they will not make errors.1 It is based on the assumption that error-free performance is attainable and that doctors, nurses and allied healthcare professionals can achieve it if they are more vigilant. Evidence from cognitive psychology and human factors research shows that the expectation of error-free performance is unrealistic.2,3 Human performance is shaped by the context in which it occurs; factors such as task and process design, culture, teamwork and environmental conditions all influence it.2,4

Research on accident causation and landmark publications on medical error,2,3 including To Err is Human in the United States and An Organisation with a Memory in the UK,5,6 have advocated that it is important to understand how healthcare systems fail, rather than simply focusing on the individual healthcare professionals who make errors. This article discusses previous models of accident causation,2,3 human error,2,3,7,8 foresight,9,10 resilience engineering and system migration.11-13 Five lessons from these theories are identified and a new framework for understanding safety is presented: the System Evolution, Erosion and Enhancement (SEEE) model.

The systems approach to accident causation

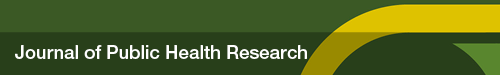

The systems approach is encapsulated in Reason’s Swiss Cheese model of accident causation.2,3 The model states that in any system there are many levels of defence but these defences are imperfect both because of inherent human fallibility and weaknesses in how systems are designed and operated.

Reason’s model distinguishes between active failures and latent conditions.2,3 Active failures are errors and violations committed by people at the service delivery end of the healthcare system (e.g. the ward nurse, the pharmacist, the general practitioner, the operating room team or the psychiatric nurse). Active failures by these people may have an immediate impact on safety.

Latent conditions result from poor decisions made by higher management within an organisation and by those outside it, e.g. regulators, governments, designers and manufacturers. Latent conditions weaken the organisation’s defences, increasing the likelihood that, when active failures occur, they will combine with existing preconditions, breach the system’s defences and result in an organisational accident. Latent conditions and active failures create windows of opportunity in a system’s defences. When these windows of opportunity are aligned across several levels of a system, an accident trajectory is created (Figure 1). The accident trajectory is represented by an arrow penetrating the levels of defence. The holes represent the latent conditions and active failures that have breached successive levels of defence. When the arrow penetrates all the levels of defence, an adverse event (a death or patient harm) occurs.2,3

Figure 1.

Swiss Cheese model of organizational accidents.

Swiss cheese and medical incident investigation

Incident investigations based on the Swiss Cheese model identify how latent conditions and active failures combine to cause patient harm. The latent conditions and active failures identified in investigations of intrathecal vincristine medication incidents in healthcare are summarised below.6,9,14

Latent conditions: i) unclear or inaccessible chemotherapy policies and procedures, for example policies that did not make clear that intravenous vincristine and intrathecal methotrexate should not be given in the same clinical location on the same day; ii) a hierarchical organisational culture in which nurses and pharmacists felt unable to challenge the unsafe practice of consultants; iii) the design of spinal connectors, which allowed a syringe containing vincristine to be attached to them; iv) poor induction practices for junior doctors, whereby doctors were allowed to bypass intrathecal medicine induction training because of production pressures on the ward; v) a culture characterised by convergence of benevolence, in which staff routinely broke rules to meet patient needs and to ensure that treatment was administered as closely to chemotherapy protocols as possible.9

Active failures: i) checking errors by hospital pharmacists, which allowed vincristine to be sent to a clinical area at the same time as methotrexate was scheduled to be administered to a patient; ii) checking omissions or failures by junior doctors, which meant that they did not identify that they were about to administer vincristine intrathecally (when it should only be administered intravenously).

Beyond Swiss Cheese

The Swiss Cheese model has been used as the theoretical basis for other models of incident causation (for example, Helmreich’s threat and error management model in aviation) and for incident investigation tools in healthcare (for example, the London Protocol and the Prevention Recovery Information System for Monitoring and Analysis-Medical, PRISMA-Medical).15-17 The distinction between active and latent failures has strongly influenced incident investigation and efforts to understand the causes of error for the last two decades, both in healthcare and in other industries. Its dominance has persisted even though Reason himself has since developed newer models aimed at understanding human error in complex systems, for example the three buckets model and the harm absorbers model.9,10 Both of these recognise that healthcare professionals often use intuition, expertise and foresight to anticipate, intervene and prevent patient harm.9,10 In this sense, doctors, nurses and allied healthcare professionals are the last line of defence in the healthcare system.9,10

The old versus the new view of human error

Some critics have argued that the Swiss Cheese model, although well-intentioned, leads in practice to a linear approach to incident investigation: in what has been termed the old view of human error, investigators trace back from active errors to identify organisational failures without recognising the complexity of systems like healthcare and aviation.7,8

Dekker distinguishes between the old view and the new view of human error.7,8 He argues that the old view of human error, where there is a search for organisational deficiencies or latent failures, simply causes us to relocate the blame for incidents upstream to senior managers and regulators. This was recently evidenced in the United Kingdom National Health Service in the Francis Inquiry report into the deaths of patients at Mid Staffordshire hospital.18 There was significant focus in both the inquiry report and in subsequent media coverage on the lapses by healthcare regulators that led to delays in intervening to prevent patients being harmed. As a result of the findings of the Francis Inquiry and other high profile national incident reports, the NHS’s key regulator, the Care Quality Commission, has come under intense media scrutiny. Dekker’s argument that blame is simply attributed further upstream seems, to some extent, to have been borne out by Mid Staffordshire.

Dekker advocates a new view of human error, which sees safety as an emergent property of a system in which there are numerous trade-offs between safety and other goals (for example, production pressures).7,8 Other theorists, including proponents of resilience engineering, have also recognised that safety is an emergent property of complex systems.11,12

Resilience engineering

Resilience is the ability of individuals, teams and organisations to identify, adapt to and absorb variations and surprises on a moment-by-moment basis.11 Resilience engineering recognises that complex systems are dynamic and that it is the ability of individuals, teams and organisations to adapt to system changes that creates safety. Resilience engineering moves the focus of learning about safety away from ‘What went wrong?’ to ‘Why does it go right?’.12

Case studies of how healthcare teams create resilience in emergency departments and operating theatres have been published.19-21 For example, Patterson et al. described how teams use collaborative cross-checking as a resilience strategy to maintain safety.20

One key concept from resilience engineering is the distinction between Safety I and Safety II.12 In healthcare, safety has traditionally been defined by the absence of harm: we learn how to improve safety by investigating past events such as incidents and complaints. This is known as Safety I.12 In contrast, Safety II focuses on the need to learn from what goes right. It involves exploring the ability to succeed when working conditions are dynamic.12 Safety II involves looking at good outcomes, including how healthcare organisations adapt to drifts and disturbances from a safe state and correct them before an incident occurs. In healthcare, we rarely learn from what goes right because resources are invested almost exclusively in learning from what goes wrong. However, serious incidents occur far less frequently than instances of Safety II, which are numerous.12 Focusing on what goes right would therefore provide an opportunity to learn about events that occur frequently, as opposed to rarely.

Migration to system safety boundaries

Amalberti’s system migration model is also relevant to understanding medical errors in healthcare.13 Amalberti postulates that humans are naturally adaptable and explore their safety boundaries. A combination of life pressures, perceived vulnerability, belief systems and the trade-off between these factors versus perceived individual benefits leads people to navigate through the safety space:

Amalberti differentiates between:13 i) the legal space, i.e. prescribed behaviour; ii) the illegal-normal space, into which people naturally drift depending on situational factors and personal beliefs; and iii) the illegal-illegal space, which takes people into an area that is unsafe and where the probability of an accident occurring is greatly increased.

In healthcare, the legal space is defined by the policies, procedures and guidelines that describe standards of safe practice. When serious incidents occur, non-compliance with policies and procedures is frequently identified as a root cause. All too often, hindsight bias comes into play in the investigation process and too little consideration is given to the situational factors that led to non-compliance. Hindsight bias occurs when an investigator looking back after an incident judges the behaviour of those involved unfairly because, with the benefit of hindsight, it is easy to see alternative courses of action that would have prevented the incident.22

In one study exploring procedural non-compliance in healthcare, researchers interviewed a ward sister who had been disciplined following a drug error that led to serious harm to a patient.23 The hospital had recently amended its medication policy for the drug involved in the incident, reverting from single checking by one nurse to a double-checking procedure before medication administration. The ward sister was unaware that the policy had changed and did not involve another nurse in checking the drug before administering it to the patient. She was disciplined, partly because hindsight and outcome bias in the incident investigation process meant that the lead investigator did not examine how the changes to the medication policy had been communicated to nursing staff, or whether other nurses were also unaware of the changes to the checking procedure.23

Five lessons about safety and accident causation

Table 1 summarises five lessons from the theories reviewed in this article. It is postulated that future theories of safety need to take account of these five lessons in order to develop models and frameworks that capture the complexity of safety in healthcare. Without an underpinning theoretical framework that captures how safety is a complex, dynamic phenomenon, healthcare organisations around the world will not understand the different facets of safety that emerge as healthcare systems evolve over time.

Table 1.

Five key lessons from previous theories of accident causation, human error, foresight, resilience and system migration.

| What is the lesson to learn? | Source |
|---|---|
| Lesson 1: a combination of systems and human factors can enhance or erode safety. | Swiss Cheese, three buckets and harm absorber models |
| Lesson 2: systems are dynamic: they evolve over time and spring nasty surprises. Healthcare professionals, teams and organisations sometimes successfully anticipate and manage these nasty surprises, and sometimes they do not. | Three buckets and harm absorber models; resilience engineering; Safety I versus Safety II |
| Lesson 3: safety is an emergent property of the system which needs to be understood in the context of trade-offs with other competing goals (for example, in healthcare, meeting efficiency targets, making financial savings and ensuring continuity of the service). | The old and new view of human error; resilience engineering |
| Lesson 4: hindsight bias, the human tendency to attribute blame and the fact that serious incidents occur less frequently than successful outcomes together limit what we can learn from taking human error as our starting point and tracing backwards to identify the causes of what went wrong. We therefore need to balance our focus and learn from what goes right, rather than being preoccupied with learning from what goes wrong. | The old and new view of human error; Safety I versus Safety II |
| Lesson 5: humans migrate and explore the system’s safety boundaries. The extent to which they do this depends upon a combination of factors including life pressures, situational factors and personal belief systems. | System migration |

The System Evolution, Erosion and Enhancement model

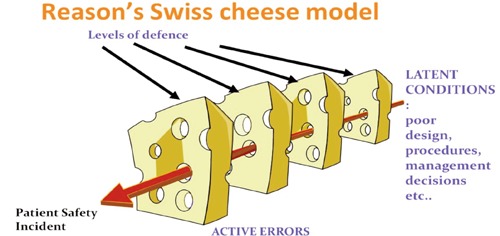

The five lessons summarised in Table 1 are illustrated in Figure 2, which shows the underlying processes that healthcare organisations need to appreciate in order to understand safety as a complex, dynamic process. It shows how system evolution affects safety both positively and negatively, by causing enhancement and erosion. Figure 2 shows that systems-level, team and individual human factors can all enhance or erode safety in healthcare (i.e. Lesson 1). It also aims to show that any system, whether healthcare, aviation, offshore oil and gas, banking or another complex sociotechnical system, naturally evolves over time (i.e. Lesson 2). System evolution is caused by many different types of factor, including the introduction of new technology, innovations in healthcare procedures, organisational restructuring or mergers, staff retention and recruitment, patient pathway and process redesign, the context and focus of external regulators, cultural change, equipment maintenance and IT upgrades, production, efficiency and safety performance targets, and the economic climate. System evolution also occurs as a result of the natural human tendency to explore safety boundaries (i.e. Lesson 5) (Figure 2).

Figure 2.

The processes of safety evolution, erosion and enhancement.

Enhancement and erosion

System evolution can have both positive and negative effects on safety. Its positive effect is safety enhancement, which occurs when system evolution strengthens in-built defences, barriers and safeguards, or improves the ability of individuals and teams to anticipate and respond when nasty surprises occur. By recognising that safety enhancement results from system evolution, Figure 2 illustrates the importance for healthcare organisations of learning from what goes right (i.e. Safety II;12 Lesson 4), rather than only learning from what goes wrong.

System evolution can also lead to erosion, where defences, barriers and safeguards are weakened or where the ability of teams and individuals to identify, intervene and thwart emerging safety threats is negatively affected (Figure 2).

The central tenet of the SEEE model is that, to manage safety effectively, healthcare organisations need to understand the relationship between system evolution, enhancement and erosion. Hence we need to recognise that safety is an emergent property of the system, and that it needs to be understood in the context of how it is balanced against other competing goals such as production and efficiency targets (i.e. Lesson 3).

All too often, as other authors have noted, healthcare organisations carry out a forensic analysis of what went wrong after an incident has occurred.7,8,11,12 As shown in Figure 2, this is equivalent to trying to understand and improve safety by looking through a microscope whose lens is focused on safety erosions in isolation. This type of approach means that we miss opportunities to learn from what goes right.12 Another way of understanding this problem is with reference to the recent measurement and monitoring of safety framework developed by Vincent, Burnett and Carthey.24 The framework (Figure 3) comprises five dimensions, all of which are important for the effective monitoring and management of safety: i) Past harm: has patient care been safe in the past? This involves assessing rates of past harm to patients, both physical and psychological. ii) Reliability: are our clinical systems, processes and behaviour reliable? This covers the reliability of processes and systems in organisations, and also the capacity of staff to follow safety-critical procedures. iii) Sensitivity to operations: is care safe today? This is the information and capacity to monitor safety on an hourly or daily basis. iv) Anticipation and preparedness: will care be safe in the future? This refers to the ability to anticipate, and be prepared for, threats to safety. v) Integration and learning: are we responding and improving? This is the capacity of an organisation to respond to, assimilate, learn from and improve on the basis of safety information.

Figure 3.

Measurement and monitoring of safety framework.

Healthcare organisations that focus only on learning about system erosion invest time and resources in learning from past harm and some elements of reliability, without paying sufficient attention to the other dimensions of the measurement and monitoring framework (i.e. sensitivity to operations, anticipation and preparedness, and integration and learning).

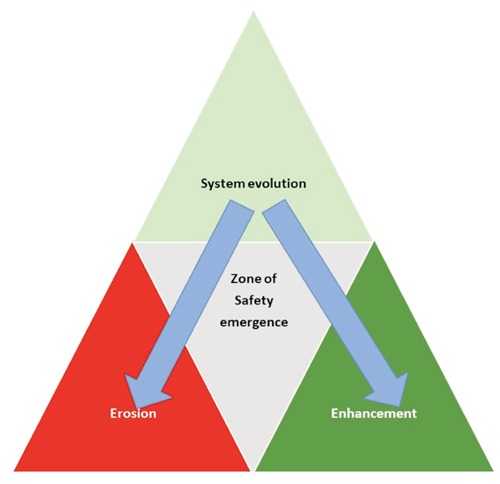

Understanding safety emergence

The SEEE model is shown in Figure 4, which shows how system evolution leads to both erosion and enhancement. Importantly, it also shows the zone of safety emergence: the area where the positive and negative effects of system evolution need to be anticipated and understood in order to manage safety effectively. The SEEE model postulates that how well healthcare organisations manage safety depends critically on their ability to anticipate and understand the erosions and enhancements to safety that emerge as the healthcare system evolves. Rather than using the old view of error to learn safety lessons, we need to develop methods that provide insights into the relationship between system evolution, erosion and enhancement. Most importantly, future methods to learn about and improve safety need to focus on what is happening in the zone of safety emergence, rather than retrospectively trying to learn after incidents have occurred, an approach that all too often leads to an exclusive focus on the process of erosion.

Figure 4.

The System Evolution, Erosion and Enhancement model of safety.

Conclusions

To improve patient safety, there is a need to learn lessons from past theories of accident causation, human error, foresight, resilience and system migration, and to develop theoretical models which describe safety as a complex, multi-faceted and dynamic phenomenon. An underpinning theoretical framework that captures the complexity of safety evolution, erosion and enhancement can help educate and support healthcare organisations around the world to understand the true complexity of monitoring and measuring patient safety.

References

1. Leape LL. Striving for perfection. Clin Chem 2002;48:1871-2.

2. Reason JT. Human error. Cambridge: Cambridge University Press; 1990.

3. Reason JT. Managing the risks of organisational accidents. Farnham: Ashgate Publishing; 1997.

4. Carthey J. Taking further steps. The how to guide to implementing human factors in healthcare. Available from: http://www.chfg.org/wp-content/uploads/2013/05/Implementing-human-factors-in-healthcare-How-to-guide-volume-2-FINAL-2013_05_16.pdf

5. Kohn LT, Corrigan JM, Donaldson MS. To err is human: building a safer health system. Washington, DC: National Academy Press; 2000.

6. Department of Health. An organisation with a memory: report of an expert group on learning from adverse events in the NHS chaired by the Chief Medical Officer. London, UK: Crown Copyright; 2000. Available from: http://webarchive.nationalarchives.gov.uk/20130107105354/http:/www.dh.gov.uk/prod_consum_dh/groups/dh_digitalassets/@dh/@en/documents/digitalasset/dh_4065086.pdf

7. Dekker S. The field guide to understanding human error. Farnham: Ashgate Publishing; 2006.

8. Dekker S. Drift into failure. Farnham: Ashgate Publishing; 2011.

9. Reason J. Beyond the organisational accident: the need for error wisdom on the frontline. Qual Saf Health Care 2004;13 Suppl 2:ii28-33.

10. Reason JT. The human contribution: unsafe acts, accidents and heroic recoveries. Farnham: Ashgate Publishing; 2008.

11. Hollnagel E, Woods DD, Leveson N. Resilience engineering. Concepts and precepts. Farnham: Ashgate Publishing; 2006.

12. Hollnagel E. Proactive approaches to patient safety. London, UK: The Health Foundation; 2012. Available from: http://www.health.org.uk/public/cms/75/76/313/3425/Proactive%20approaches%20to%20safety%20management%20thought%20paper.pdf?realName=PPqBkh.pdf

13. Amalberti R, Vincent C, Auroy Y, de Saint Maurice G. Violations and migrations in health care: a framework for understanding and management. Qual Saf Health Care 2006;15 Suppl 1:i66-71.

14. Toft B. External inquiry into the adverse incident that occurred at Queen’s Medical Centre, Nottingham, 4th January 2001. Department of Health England. London, UK: Crown Copyright; 2001. Available from: http://www.who.int/patientsafety/news/Queens%20Medical%20Centre%20report%20(Toft).pdf

15. Helmreich RL. On error management: lessons from aviation. BMJ 2000;320:781-5.

16. Vincent C, Taylor-Adams S, Chapman EJ, et al. How to investigate and analyse clinical incidents: clinical risk unit and association of litigation and risk management protocol. BMJ 2000;320:777-81.

17. Snijders C, van der Schaaf TW, Klip H, et al. Feasibility and reliability of PRISMA-medical for specialty-based incident analysis. Qual Saf Health Care 2009;18:486-91.

18. Francis R. Report of the Mid Staffordshire NHS Foundation Trust Public Inquiry. Norwich, UK: The Stationery Office; 2013. Available from: http://www.midstaffspublicinquiry.com/report

19. Cook RI, Nemeth C. Taking things in one’s stride: cognitive features of two resilient performances. In: Hollnagel E, Woods DD, Leveson N, editors. Resilience engineering. Concepts and precepts. Farnham: Ashgate Publishing; 2006. p. 205-20.

20. Patterson ES, Woods DD, Cook RI, Render M. Collaborative crosschecking to enhance resilience. Cogn Technol Work 2007;9:155-62.

21. Hu YY, Arriaga AF, Roth EM, et al. Protecting patients from an unsafe system: the etiology and recovery of intraoperative deviations in care. Ann Surg 2012;256:203-10.

22. Fischhoff B. Hindsight ≠ foresight: the effect of outcome knowledge on judgement under uncertainty. Qual Saf Health Care 2003;12:304-12.

23. Carthey J, Walker S, Deelchand V, et al. Breaking the rules: understanding non-compliance with policies and guidelines. BMJ 2011;343:d5283.

24. Vincent C, Burnett S, Carthey J. The measurement and monitoring of safety. London, UK: The Health Foundation; 2013. Available from: http://www.health.org.uk/public/cms/75/76/313/4209/The%20measurement%20and%20monitoring%20of%20safety.pdf?realName=haK11Q.pdf