Abstract

Incident Reporting Systems (IRS) are and will continue to be an important influence on improving patient safety. They can provide valuable insights into how and why patients can be harmed at the organizational level. However, they are not the panacea that many believe them to be. They have several limitations that should be considered. Most of these limitations stem from inherent biases of voluntary reporting systems. These limitations include: i) IRS can’t be used to measure safety (error rates); ii) IRS can’t be used to compare organizations; iii) IRS can’t be used to measure changes over time; iv) IRS generate too many reports; v) IRS often don’t generate in-depth analyses or result in strong interventions to reduce risk; vi) IRS are associated with costs. IRS do offer significant value; their value is found in the following: i) IRS can be used to identify local system hazards; ii) IRS can be used to aggregate experiences for uncommon conditions; iii) IRS can be used to share lessons within and across organizations; iv) IRS can be used to increase patient safety culture. Moving forward, several strategies are suggested to maximize their value: i) make reporting easier; ii) make reporting meaningful to the reporter; iii) make the measure of success system changes, rather than events reported; iv) prioritize which events to report and investigate, report and investigate them well; v) convene with diverse stakeholders to enhance the value of IRS.

Significance for public health.

Incident Reporting Systems (IRS) are and will continue to be an important influence on improving patient safety. However, they are not the panacea that many believe them to be. They have several limitations that should be considered when utilizing them or interpreting their output: i) IRS can’t be used to measure safety (error rates); ii) IRS can’t be used to compare organizations; iii) IRS can’t be used to measure changes over time; iv) IRS generate too many reports; v) IRS often don’t generate in-depth analyses or result in strong interventions to reduce risk; vi) IRS are associated with costs. Moving forward, several strategies are suggested to maximize their value: i) make reporting easier; ii) make reporting meaningful to the reporter; iii) make the measure of success system changes, rather than events reported; iv) prioritize which events to report and investigate, do it well; v) convene with diverse stakeholders to enhance their value.

Key words: Incident Reporting Systems, healthcare

The promise

Incident Reporting Systems (IRS) are a cornerstone for improving patient safety.1 All high-risk industries have them. While IRS are relatively new in healthcare, similar systems in the nuclear, railway, fire, and aviation industries have had tremendous success.2 The concept behind IRS is simple: they provide a mechanism to identify risks so that organizations can implement interventions to reduce these risks. IRS provide valuable information to identify hazards and surface learning opportunities. In healthcare, IRS give frontline caregivers a mechanism to raise concerns, providing a voice to these clinicians so that management can work to mitigate the risks they identify.

The Institute of Medicine (IOM) advocates for the development and use of IRS. The IOM recommended the following. Recommendation 5.1: a nationwide mandatory reporting system should be established that provides for the collection of standardized information by governments about adverse events that result in death or serious harm. Recommendation 5.2: the development of voluntary reporting efforts should be encouraged.1 The Joint Commission now requires that all hospitals have and use IRS.

Because of this, there has been an explosion of adverse event reporting systems in healthcare. Some reporting systems are national (Australian Incident Management System, National Reporting and Learning System, etc.), some are local (Patient Safety Network, Pennsylvania Safety Reporting System, etc.), some focus on a specific area (Intensive Care Unit Reporting System), while others focus on a specific type of event (Medmarx, for medication events); some are public while others are private. The recent Patient Safety Act has tasked the government with creating a national database for sharing adverse events, known as the National Patient Safety Database (NPSD).

Yet to date, there is limited evidence that IRS in healthcare have lived up to expectations for making care safer. Progress in reducing preventable harm has been slow,3,4 and IRS have not provided the insight or led to the improvements that many had hoped for. In this article, we review some challenges that IRS have encountered, focusing on what IRS cannot do. We then discuss what they can do and how they provide the most value. Finally, we offer some practical suggestions on moving forward.

The challenge

Incident Reporting Systems can’t be used to measure safety (error rates)

IRS cannot be used to measure safety. There is growing interest among policy makers, payers, hospital leaders and patients in measuring how safe hospitals and health systems are. This includes: i) measuring the rate of specific adverse events (for example, how often do medication errors occur in our institution?); ii) holding individuals/organizations accountable for safety problems (for example, why are never events occurring at this institution?); iii) measuring the overall safety profile of a hospital to make purchasing decisions (for example, is this a safe hospital? Should I get my care here?).

In the absence of better sources of information, some turn to IRS to answer these questions. However, IRS data are a non-random sample from the total universe of safety hazards. They are more suited for identifying risks. For many reasons, IRS cannot be used to measure safety. First, events are under-reported. Approximately 7% of all adverse events are reported to IRS,5 and different methods of detecting errors identify different types of events and result in different conclusions about safety.6 Reasons for under-reporting include fear of retribution, shame, lack of time, complexity of the reporting system, lack of perceived value, reporting fatigue, etc.7 Moreover, there is variation (bias) in what types of events are reported. For example, is a tear during vaginal delivery of a baby an adverse event? While some say yes, others may argue that it is a known and unavoidable consequence of vaginal delivery of large babies. Even among reported events, the threshold for reporting varies. While some individuals may report near-misses, others only report those events that result in patient harm. Some types of adverse events are reported with high frequency (e.g. falls) while others are often under-reported (e.g. medication adverse events). Finally, some provider types report adverse events with regularity (e.g. nurses) while others report events infrequently (e.g. physicians).8

To be valid, a measure of the rate of adverse events requires three things. There should be a clear definition of the event (numerator); few adverse events in healthcare are well defined. There should be a clear definition of the population at risk (denominator); the population at risk in healthcare is usually not defined. Finally, there should be a consistent surveillance system for detecting both the event and the population at risk. IRS have a problem with all three of these. For example, medication adverse events might be measured in terms of patients, patient days, or doses of medications administered; IRS don’t measure any of these. Moreover, IRS rely on the vigilance, honesty, and whim of healthcare providers to detect and report adverse events; this is a poor substitute for a surveillance system. The most valid measures of patient safety are related to hospital-acquired infections, such as central-line associated blood stream infections. For many of these conditions, there are national definitions for numerators and denominators, and hospitals have invested in significant infrastructure for active surveillance. Incidentally, this is the area with the most demonstrated progress.9

Incident Reporting Systems can’t be used to compare organizations

IRS cannot be used to compare organizations. Healthcare leaders and patients want a measure to compare patient safety among health systems, hospitals, and healthcare providers. The underreporting and bias in the system make comparisons nearly impossible. This is underscored by a statement from the National Coordinating Council for Medication Error Reporting and Prevention (NCC-MERP) explicitly recommending that data from these systems not be used to compare healthcare providers or their organizations.10 For example, Table 1 shows a hypothetical example of the number of medication errors reported to a hospital IRS. On initial inspection, it may seem that the oncology unit has poor medication safety practices. But what if the oncology unit sees four times more patients or administers four times more medications per patient than the other units? In this example, an investigation into these numbers revealed that the oncology unit was more vigilant in its medication safety practices because of its use of chemotherapeutic agents. It performed twice as many double checks as the other units (better safety culture). Near-misses uncovered by these double checks were routinely reported to the IRS (better reporting culture). Blindly using the IRS to compare these units would have misled us about their safety practices.

Table 1.

Hypothetical example of number of adverse events reported to a hospital Incident Reporting System.

| Clinical area | Events/month |
|---|---|
| Emergency Department | 25 |
| General Internal Medicine | 19 |
| Oncology | 112 |
| Surgery | 15 |
| Intensive Care Unit | 30 |
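To make the denominator problem concrete, the short Python sketch below divides the Table 1 counts by a number of doses administered per month. The dose figures are not in the table; they are invented assumptions, with oncology simply given four times the doses of every other unit to mirror the what-if posed in the text.

```python
# Reported events per month, taken from Table 1.
reports_per_month = {
    "Emergency Department": 25,
    "General Internal Medicine": 19,
    "Oncology": 112,
    "Surgery": 15,
    "Intensive Care Unit": 30,
}

# HYPOTHETICAL denominators (doses administered per month).
# These numbers are assumptions for illustration only.
doses_per_month = {
    "Emergency Department": 10_000,
    "General Internal Medicine": 10_000,
    "Oncology": 40_000,
    "Surgery": 10_000,
    "Intensive Care Unit": 10_000,
}


def reports_per_1000_doses(reports, doses):
    """Return reported events per 1,000 doses for each clinical area."""
    return {unit: 1000 * reports[unit] / doses[unit] for unit in reports}


rates = reports_per_1000_doses(reports_per_month, doses_per_month)

# Print units from highest to lowest reporting rate.
for unit, rate in sorted(rates.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{unit}: {rate:.1f} reports per 1,000 doses")
```

Under these assumed denominators, oncology no longer stands out. Even then, the result is a rate of reports rather than a rate of errors, because reporting behaviour still differs between units.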

Incident Reporting Systems can’t be used to measure changes over time

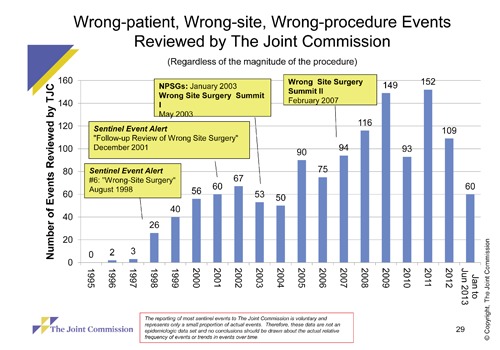

Valid error rates are required to make inferences about changes in safety over time. For all of the reasons discussed above, IRS do not provide such information. Take the example of wrong-site surgeries. The Veterans Affairs (VA) system has developed a tool to reduce their risk, and in 2004 The Joint Commission required that hospitals implement a time out to prevent these events. Yet despite implementation of these interventions, the apparent rate of wrong-site surgeries from IRS continues to climb (Figure 1). This increase is much more likely to reflect increased reporting (reporting bias) driven by increased awareness than a true increase in wrong-site surgeries following these interventions. These data urge caution in interpreting changes over time in reported events, even for highly visible events that are well defined.
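The reporting-bias argument can be illustrated with a small calculation. The sketch below assumes that the expected number of reports equals the true number of events multiplied by the fraction that gets reported. Every number in it is hypothetical and chosen only to show the direction of the effect; none describes actual wrong-site surgery data.

```python
# All values are HYPOTHETICAL and for illustration only.
# True events fall over four periods while the share that is reported rises.
true_events = [100, 95, 90, 85]
reporting_fraction = [0.05, 0.10, 0.15, 0.20]


def expected_reports(events, fraction):
    """Expected report count if each event is reported with probability `fraction`."""
    return events * fraction


reports = [expected_reports(e, f) for e, f in zip(true_events, reporting_fraction)]

for period, (e, r) in enumerate(zip(true_events, reports), start=1):
    print(f"period {period}: true events = {e}, expected reports = {r:.1f}")
```

In this toy scenario the reported count more than triples even though the underlying events decline, which is why a rising trend in IRS reports cannot by itself be read as worsening safety.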

Figure 1.

Wrong-patient, wrong-site, wrong-procedure events reviewed by The Joint Commission. Reproduced with permission.

Incident Reporting Systems generate too many reports

Even for a medium-sized organization, an active error reporting culture can lead to many reports. For example, Johns Hopkins Hospital (~1000 beds) generates approximately 500 reports per month. Many organizations do not have the resources to read, much less analyse, all of these reports. This can lead to dissatisfaction among users when their reports are left unresolved. In addition, reporting is sometimes used to complain about a colleague’s performance or behaviour. It is not surprising that many users feel dissatisfied with these systems.11

Moreover, some reports add little incremental insight into safety systems. For example, patient fall reports tend to contain the following similar narrative: nurse found patient on the ground after the patient tried to get out of bed. Physician was notified. After evaluation, no permanent harm was identified. Without further details and/or action by the analysing team, these reports tend to have limited incremental value, which calls into question the benefit of having the user report them in the first place.

Incident Reporting Systems often don’t generate in-depth analyses or result in strong interventions to reduce risk

Because of the relatively limited resources, error investigations and analyses in healthcare are often superficial. In many hospitals, the legal department performs these analyses. Their staff often have little or no training in adverse event investigation or human factors. This contrasts with other high-risk industries, where accidents are rare and investigations are in-depth. For example, the US Airways flight 1549 Hudson River crash resulted in a 16-month investigation, removal of the plane from the bottom of the river, and DNA analysis of the Canada geese that caused the engine failure. Examples such as this are rare in healthcare. Moreover, meaningful change occurs infrequently, with the majority of changes consisting of informing the staff involved and education/training.12 This leads to recurrence of adverse events across the healthcare industry, sometimes even within the same institution.13 The lack of meaningful change can be due to a variety of reasons: production pressures, small financial margins, limited regulatory authority, lack of standardization, professional culture, lack of institutional will, etc.3,14 The lack of meaningful change, for whatever reason, diminishes the potential value of an adverse event reporting system. Some challenges are national, leaving individual hospitals with only a limited ability to make changes. For example, a medication error will likely occur somewhere in the United States today involving an inadvertent substitution of hydromorphone for morphine. Yet the individual hospital has a limited ability to fix the problem of two look-alike, sound-alike drugs that have an 8-fold difference in potency. Instead, many hospitals resort to education and training of their staff, an intervention known to have limited ability to permanently reduce risk.

Incident Reporting Systems are associated with costs

IRS come with costs. The costs of building and implementing these systems are the most visible. However, there are also costs for training staff in their use, for the reporting itself, and for collecting and analysing the data from these systems. These costs are often ignored, but can be significant. In the fall example above, the costs of collecting additional information about fall events might be better spent on implementing best practices (fall risk assessment, fall risk communication/signs, hourly rounds for comfort, safety, pain, toileting, chair/bed/posey alarms, medication modification) to reduce patient falls.15 Moreover, when budgets are tight, it is easy to cut funds for the analysis and change implementation arising from these systems. Ironically, these are the steps that improve patient safety. On the other side, the assumption is that IRS will lead to a reduction in medical errors. This, in turn, would lead to a reduction in patient harm, which would lead to a reduction in costs. While true in principle, this has been extremely difficult to quantify and demonstrate. As with most preventative efforts, benefits are theoretical while costs are real. For example, hospitals are compensated for the complexity and number of patients they treat, not the number of errors they prevent. In some cases, having an adverse event might lead to a higher profit margin.16

What can Incident Reporting Systems do

Given these challenges, what value can an adverse event reporting system offer? Should a hospital/healthcare system invest in one at all?

Incident Reporting Systems can be used to identify local system hazards

Reports submitted to IRS are nuggets of gold from which we may better identify hazards, understand the complex inner workings of our healthcare system, and design interventions to reduce risks to future patients. For example, several years ago, a nurse at Johns Hopkins Hospital reported that her patient was sent the incorrect medication from pharmacy. Although the label was correct, she noticed that the pill looked different from the metoprolol that she normally gave. As it turned out, the pharmacy label-maker had received a software update and had defaulted back to incorrect settings. Such a report, of a near-miss, likely prevented hundreds of medication errors. Currently, the most valuable lessons from IRS come from single cases. A variety of tools that provide a systematic analytic framework can help clinicians learn from these events.17

Incident Reporting Systems can be used to aggregate experiences for uncommon conditions

While the most valuable lessons from IRS come from single cases, the aggregation of uncommon cases can provide insight into failure modes and risks. In the wrong-site surgery example above, aggregation of 132 wrong-site surgical events in the state of Colorado revealed that 100% of the cases involved a communication failure and 72% did not have a time-out performed.18 This required 6 years and data from 5937 physicians. A single institution would not have enough experience/events to identify such patterns and potential vulnerabilities. Aggregating and analysing uncommon cases from IRS across many organizations can therefore be useful.

Incident Reporting Systems can be used to share lessons within and across organizations

The lessons learned from IRS can be used to educate, inform, and prevent other organizations from experiencing the same adverse events. Such a system for sharing can occur at a local, regional, national, or international level. For example, the Canadian Global Patient Safety Alerts (GPSA) system is a repository of case details and lessons learned from adverse events. Healthcare organizations can search the system to identify adverse events, their known failure mechanisms, and interventions that may be implemented to prevent their occurrence. This allows healthcare organizations to share the most valuable aspect of IRS (lessons learned) without the burdens of incident investigation and confidentiality concerns. Given that the same adverse events are occurring around the world, such sharing is sorely needed.

Incident Reporting Systems can be used to increase patient safety culture

The actual implementation and use of a reporting system (the full cycle of reporting, analysis, and implementation of system changes, analogous to the Plan-Do-Study-Act cycle of quality management) within a healthcare institution communicates a lot about how the organization views patient safety. The implementation and communication of such a system to staff members can be a method of changing patient safety culture. This effect is separate from the advantages of the IRS itself.

Moving forward

To enhance their value, we offer some practical suggestions in the design and use of IRS.

Make reporting easier

Rather than making adverse event reporting systems more complex, we should make reporting exceedingly easy and less burdensome. Healthcare providers should have quick and ready access (electronic, web-based) to these systems.19 These systems should be so simple that staff can use them with minimal or no training.20 Given how infrequently most staff members report adverse events, any training will likely have been forgotten by the time of reporting.

These systems should probably ask for a minimal amount of information. Instead of asking the healthcare provider to categorize the event, rate the event, and attribute causes, a free text description and some identifying information might be all that is required. The deluge of adverse event reports by healthcare providers has led to many reports that are incomplete,21 with some being inaccurate.11 A patient safety officer familiar with adverse event reporting should further investigate events that have merit. Such a system has been developed at the University of Basel, Switzerland and has been adopted as the standard in Germany.22
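As a rough illustration of how small such a report could be, the Python sketch below models a report that needs only free text and a location at submission, with classification left to a patient safety officer afterwards. The class name, field names, and `submit` helper are hypothetical and are not taken from the Basel system or any other real IRS.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class IncidentReport:
    """Hypothetical minimal incident report; all names are illustrative."""

    # Supplied by the reporter: free text plus basic identifying information.
    description: str
    location: str
    reporter_contact: Optional[str] = None  # optional, so feedback can be sent
    submitted_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )

    # Filled in later by a patient safety officer, never by the reporter.
    category: Optional[str] = None
    harm_level: Optional[str] = None
    needs_investigation: bool = False


def submit(description: str, location: str,
           reporter_contact: Optional[str] = None) -> IncidentReport:
    """Create a report; the only hard requirement is a non-empty description."""
    if not description.strip():
        raise ValueError("A free-text description is required")
    return IncidentReport(description.strip(), location, reporter_contact)


report = submit("Pill looked different from the usual metoprolol", "Ward 5")
print(report.description, "|", report.location, "|", report.category)
```

The design choice shown is that categorization, harm rating, and the decision to investigate are deferred fields, so the reporter is never asked for them.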

Make reporting meaningful to the reporter

Healthcare providers often do not report adverse events because, much of the time, identified problems are not remediated.23 Moreover, the reporter rarely receives feedback about whether the event was read or deemed important. From a communication science perspective, this is problematic because communication has not taken place until the receiver of a message has conveyed feedback to the sender to confirm receipt and validate the intended meaning of the message. Thus, interpersonal communication is a simultaneous sense-making process, and the extent to which participants share a common interpretation of a given message determines the effectiveness of any given interaction.24 In light of this literature, it is no surprise that, when surveyed, fewer than 39% of respondents felt that reporting adverse events represented a good use of staff time and resources.11 Instead, clinicians often develop apathy towards reporting, especially in the setting of high production pressures.

The following communication concepts can help clarify how to make incident reporting more meaningful to the reporter.

Empathy (i.e., one’s ability to understand another’s thoughts and feelings) is a critical skill that contributes to competent communication.25 This particularly makes sense in the IRS context: IRS managers should take the perspective of clinicians, understand why they are currently not reporting, and then adapt their communication strategies to these reasons to facilitate increased reporting.

Communication relies on multiple channels. Written communication is channel-lean and thus subject to a high likelihood of miscommunication (i.e., the message being decoded inaccurately).25 In the context of IRS, this risk goes both ways: the report of an adverse event may be interpreted inaccurately by risk management, and the response by risk management may be interpreted inaccurately by the reporting medical professional. All behaviour (and non-behaviour) is communication; thus, the absence of feedback in response to a report also communicates something (for example, that nobody cares enough to respond).

Communication has literal meanings as well as relational implications.26 For example, communication in response to submitted incident reports contains informational content (e.g., your report was received), but it also contains relational content that, if done well, can convey trust, investment, commitment, etc.; these relational contents define the type of relationship we want to have with others. So conceptually, relational message contents define the type of communication that takes place in a given organization. Thus, relational communication chronologically precedes the development of a safety culture, so it could even be argued that it is communication culture that leads to safer practice.

Based upon these communication concepts, the following strategies are recommended:

The person who reports an event should receive timely feedback,8,20 even if this feedback only thanks the reporter and lets them know that the event is being investigated. This feedback validates understanding of the report and communicates that somebody is listening and cares about the event. Furthermore, a competent communication response can lead reporters to feel that reporting is worth their time and may increase future reporting.

Managers should share reports with staff.27 This will not only educate staff about risks in their environment, but will also provide a forum to solicit their ideas on how to further reduce risk and inform staff that action is occurring as a result of their reporting efforts. Moreover, these lessons should be shared at the local, regional, national, and international levels. This may reduce the likelihood that events recur across the healthcare industry.

Leaders should devote institutional resources not just to collecting the data, but to analysing the events and mitigating risk. In the end, this is the ultimate value of the system. When staff observe that the institution is willing to change based upon their feedback, real changes in safety culture start to occur.

Make the measure of success system changes, rather than events reported

It is easy to become focused on gathering reports from an adverse event reporting system. In the early stages of implementation, it is important to educate, inform, and get staff to report events. This is an important component of safety culture. However, the ultimate measure of success of an IRS is not the number of reports received, but rather the amount of harm prevented as a result of the system. In many cases, this might be difficult to measure. A reasonable alternative is to measure the number of system changes made as a result of the IRS.

Prioritize which events to report and investigate; report and investigate them well

Whereas the airline industry has fatal accidents as a solitary focus, healthcare has no such focus. This lack of focus has caused the healthcare industry to fragment its efforts. As mentioned above, this has led to a deluge of adverse event reports with few in-depth analyses and even fewer strong solutions.

One alternative is to focus on reporting a finite set of high-yield events. Such a set of events might be based upon level of harm, preventability, or regional/national priority. An organization might focus on events that occur most frequently (e.g., medication errors), lead to the most harm (e.g., falls), or are of greatest concern to patients or policy makers (e.g., wrong-site surgery). The National Quality Forum (NQF) has such a set of events, termed Serious Reportable Events (SRE).28 Mandatory reporting of some form of SRE has been adopted by over 11 states.29 Such a focus would serve to prioritize healthcare’s efforts and might lead to a more meaningful IRS.
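One simple way to picture this kind of prioritization is a triage step that routes reports by event type. The sketch below is only an assumption about how that might look: the priority set, the `event_type` field, and the example reports are all hypothetical and do not reproduce the NQF list or any real reporting system.

```python
# HYPOTHETICAL priority set chosen by an organization; not the NQF SRE list.
PRIORITY_EVENT_TYPES = {"wrong-site surgery", "medication error", "fall"}


def triage(reports, priority_types=PRIORITY_EVENT_TYPES):
    """Split reports into those to investigate in depth and those only logged."""
    investigate, log_only = [], []
    for report in reports:
        if report["event_type"] in priority_types:
            investigate.append(report)
        else:
            log_only.append(report)
    return investigate, log_only


# Illustrative reports with made-up identifiers and event types.
example_reports = [
    {"id": 1, "event_type": "fall"},
    {"id": 2, "event_type": "delayed meal tray"},
    {"id": 3, "event_type": "wrong-site surgery"},
]

to_investigate, logged = triage(example_reports)
print([r["id"] for r in to_investigate])
print([r["id"] for r in logged])
```

Keeping the priority set as an explicit parameter reflects the point in the text that the choice of high-yield events is a local, regional, or national decision rather than a fixed rule.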

Convene with diverse stakeholders to enhance the value of Incident Reporting Systems

Several countries have a national IRS (e.g., the United Kingdom and Australia; the United States is developing one). These recommendations for local organizations may also apply to national systems. Moreover, those leading national IRS should meet regularly with healthcare provider organizations and other stakeholders to improve the use of IRS data to reduce preventable harm. For example, a national reporting system may partner with device manufacturers or professional specialties to share common events.

Conclusions

IRS are and will continue to be an important influence on improving patient safety. They can provide valuable insights into how and why patients can be harmed at the organizational level. However, they are not the panacea that many believe them to be. They have several limitations that should be considered when utilizing them or interpreting their output. Moving forward, the suggested strategies may maximize their value.

References

- 1. Institute of Medicine. To err is human: building a safer health system. Washington DC: National Academy Press; 1999.

- 2. Aviation Safety Reporting System. ASRS program briefing. 2012. Available from: http://asrs.arc.nasa.gov/docs/ASRS_ProgramBriefing2012.pdf

- 3. Sevdalis N, Hull L, Birnbach DJ. Improving patient safety in the operating theatre and perioperative care: obstacles, interventions, and priorities for accelerating progress. Br J Anaesth 2012;109 Suppl 1:i3-16.

- 4. Landrigan CP, Parry GJ, Bones CB, et al. Temporal trends in rates of patient harm resulting from medical care. N Engl J Med 2010;363:2124-34.

- 5. Sari AB, Sheldon TA, Cracknell A, Turnbull A. Sensitivity of routine system for reporting patient safety incidents in an NHS hospital: retrospective patient case note review. BMJ 2007;334:79.

- 6. Flynn EA, Barker KN, Pepper GA, et al. Comparison of methods for detecting medication errors in 36 hospitals and skilled-nursing facilities. Am J Health Syst Pharm 2002;59:436-46.

- 7. Pham JC, Story JL, Hicks RW, et al. National study on the frequency, types, causes, and consequences of voluntarily reported emergency department medication errors. J Emerg Med 2011;40:485-92.

- 8. Kingston MJ, Evans SM, Smith BJ, Berry JG. Attitudes of doctors and nurses towards incident reporting: a qualitative analysis. Med J Aust 2004;181:36-9.

- 9. Pronovost P, Needham D, Berenholtz S, et al. An intervention to decrease catheter-related bloodstream infections in the ICU. N Engl J Med 2006;355:2725-32.

- 10. National Coordinating Council for Medication Error Reporting and Prevention. Use of medication error rates to compare health care organizations is of no value. 2002. Available from: http://www.nccmerp.org/council/council2002-06-11.html Accessed on: October 2013.

- 11. Braithwaite J, Westbrook M, Travaglia J. Attitudes toward the large-scale implementation of an incident reporting system. Int J Qual Health Care 2008;20:184-91.

- 12. Latif A, Rawat N, Pustavoitau A, et al. National study on the distribution, causes, and consequences of voluntarily reported medication errors between the ICU and non-ICU settings. Crit Care Med 2013;41:389-98.

- 13. Johnson PE, Chambers CR, Vaida AJ. Oncology medication safety: a 3D status report 2008. J Oncol Pharm Pract 2008;14:169-80.

- 14. Bosk CL, Dixon-Woods M, Goeschel CA, Pronovost PJ. Reality check for checklists. Lancet 2009;374:444-5.

- 15. Pham JC, Aswani MS, Rosen M, et al. Reducing medical errors and adverse events. Ann Rev Med 2012;63:447-63.

- 16. Hsu E, Lin D, Evans SJ, et al. Doing well by doing good: assessing the cost savings of an intervention to reduce central line-associated bloodstream infections in a Hawaii hospital. Am J Med Qual 2013 May 7. [Epub ahead of print]

- 17. Pham JC, Kim GR, Natterman JP, et al. ReCASTing the RCA: an improved model for performing root cause analyses. Am J Med Qual 2010;25:186-91.

- 18. Stahel PF, Sabel AL, Victoroff MS, et al. Wrong-site and wrong-patient procedures in the universal protocol era: analysis of a prospective database of physician self-reported occurrences. Arch Surg 2010;145:978-84.

- 19. Garbutt J, Waterman AD, Kapp JM, et al. Lost opportunities: how physicians communicate about medical errors. Health Aff (Millwood) 2008;27:246-55.

- 20. Karsh BT, Escoto KH, Beasley JW, Holden RJ. Toward a theoretical approach to medical error reporting system research and design. Appl Ergon 2006;37:283-95.

- 21. Getz KA, Stergiopoulos S, Kaitin KI. Evaluating the completeness and accuracy of MedWatch data. Am J Ther 2012 Sep 24. [Epub ahead of print]

- 22. ProtectData. Critical incident reporting systems. Available from: https://www.cirsmedical.ch/cug/ Accessed on: October 2013.

- 23. Pfeiffer Y, Manser T, Wehner T. Conceptualising barriers to incident reporting: a psychological framework. Qual Saf Health Care 2010;19:e60.

- 24. Barnlund DC. A transactional model of communication. In: Mortensen CD, ed. Communication theory. 2nd ed. New Brunswick: Transaction Publishers; 2008. pp 47-57.

- 25. Floyd K. Interpersonal communication. 2nd ed. New York: McGraw-Hill; 2011.

- 26. Burgoon JK, Hale JL. The fundamental topoi of relational communication. Commun Monographs 1984;51:193-214.

- 27. Beasley JW, Escoto KH, Karsh BT. Design elements for a primary care medical error reporting system. WMJ 2004;103:56-9.

- 28. National Quality Forum. Serious reportable events in healthcare - 2011 update: a consensus report. Available from: http://www.qualityforum.org/Publications/2011/12/Serious_Reportable_Events_in_Healthcare_2011.aspx Accessed on: April 2013.

- 29. National Quality Forum. Variability of state reporting of adverse events. 2011. Available from: http://www.qualityforum.org/Topics/SREs/Serious_Reportable_Events.aspx Accessed on: June 2013.