Abstract

Objective

1) To examine clinician adherence to a standardized assessment battery across settings (acute hospital, IRF, outpatient facility), professional disciplines (PT, OT, SLP), and time of assessment (admission, discharge/monthly), and 2) to evaluate how specific implementation events affected adherence.

Design

Retrospective cohort study

Setting

Acute hospital, IRF, outpatient facility with approximately 118 clinicians (PT, OT, SLP).

Participants

2194 participants with stroke who were admitted to at least one of the above settings. All persons with stroke undergo standardized clinical assessments.

Interventions

N/A

Main Outcome Measure

Adherence to Brain Recovery Core assessment battery across settings, professional disciplines and time. Visual inspections of 17 months of time-series data were conducted to see if the events (e.g. staff meetings) increased adherence ≥ 5% and if so, how long the increase lasted.

Results

Median adherence ranged from 0.52 to 0.88 across all settings and professional disciplines. Both the acute hospital and IRF had higher adherence than the outpatient setting (p ≤ .001) with PT having the highest adherence across all three disciplines (p < .004). Of the 25 events conducted across the 17 month period to improve adherence, 10 (40%) resulted in a ≥ 5% increase in adherence the following month, with 6 services (60%) maintaining their increased level of adherence for at least one additional month.

Conclusion

Actual adherence to a standardized assessment battery in clinical practice varied across settings, disciplines and time. Specific events increased adherence 40% of the time with gains maintained for greater than a month in 60%.

Keywords: assessment, adherence, rehabilitation

Measurement of patient outcomes and health status continues to be recognized as an essential component of rehabilitation clinical practice.1–5 Although measurement itself has not been identified as improving patient outcomes, the implication is that standardized assessment can facilitate continuity of care, assist in provider decision making, and determine a patient’s prognosis and function over time.1, 6–9 Despite these benefits, actual use of standardized assessment in clinical practice remains a challenge.1, 10

In a survey of 1,000 physical therapists (PT), use of standardized measures across different patient conditions and practice settings was found not to be part of routine clinical practice.1 In a separate study, the majority of surveyed speech language pathologists (SLP) described using their own or non-standardized/informal assessments to assess communication deficits in patients post-stroke.9 Despite mandated standardized measures, some groups report that 92% have never used the scores in their clinical practice (e.g. diagnostic evaluation, treatment planning or monitoring).6 Rehabilitation professionals (occupational therapists (OT), PT, nursing) have identified many barriers to the implementation of standardized assessments into everyday clinical practice, such as organizational policy and procedures, clinician competence and beliefs, and the measurement itself (pieces of equipment, time to administer).7, 11–14 Literature examining how to implement change within the healthcare system has shown that targeted, prospective efforts are more likely to improve professional practice15 and that specific strategies such as audit and feedback or educational meetings can be useful as well.16–19

In 2008, the Brain Recovery Core (BRC) was developed as a partnership between Washington University School of Medicine, Barnes Jewish Hospital, and The Rehabilitation Institute of St. Louis.20 The BRC is a system of organized stroke rehabilitation across the continuum of care, from the acute stroke service to return to home and community life. As part of the system, clinicians (PT, OT, SLP) administer a standardized battery of assessments that cover stroke-induced impairment, function and activities of daily living. Lack of clinician adherence was a chief concern during development of the BRC, as it is arguably the most common reason for failure of clinical databases that manage these assessments.8 Strategies including audit, feedback and educational meetings were utilized to promote adherence.

With the continuous demand for standardized assessments in everyday clinical practice it is critical to report on efforts of implementation and to examine actual adherence. Adherence was operationally defined as the percentage of time all standardized measures were completed at each required time point. The purpose of this study is to report on-going clinician adherence to standardized assessments in patients post-stroke across settings (acute hospital, inpatient rehabilitation facility (IRF), outpatient facility) and professional rehabilitation disciplines (PT, OT, SLP).
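The operational definition above can be made concrete with a short sketch. It is illustrative only: the record structure, function names, and measure labels are hypothetical and are not taken from the BRC database. It assumes that each required assessment lists the measures required at that time point and the measures actually completed, and that an assessment counts as adherent only when every required measure was completed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RequiredAssessment:
    """One required assessment at one time point (hypothetical structure)."""
    required: frozenset   # measures required at this time point
    completed: frozenset  # measures actually completed


def is_adherent(assessment):
    # Adherent only if every required measure was completed.
    return assessment.required <= assessment.completed


def adherence_rate(assessments):
    """Proportion of required assessments with all required measures completed."""
    if not assessments:
        raise ValueError("at least one required assessment is needed")
    return sum(is_adherent(a) for a in assessments) / len(assessments)


# Toy records with made-up measure labels (not study data).
example = [
    RequiredAssessment(frozenset({"m1", "m2"}), frozenset({"m1", "m2"})),
    RequiredAssessment(frozenset({"m1", "m2"}), frozenset({"m1"})),
    RequiredAssessment(frozenset({"m1"}), frozenset({"m1", "m3"})),
    RequiredAssessment(frozenset({"m1", "m2"}), frozenset({"m1", "m2"})),
]
example_rate = adherence_rate(example)
```

Under this all-or-nothing reading, a single missing measure makes the whole assessment non-adherent, so the rate can be lower than the share of individual measures completed.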

Methods

This retrospective cohort study utilized 2194 participant records stored in the Brain Recovery Core database from August 2010 through December 2011.20, 21 All participants admitted to Barnes-Jewish Hospital and The Rehabilitation Institute of St. Louis undergo standardized assessments by professional discipline (PT, OT, and SLP). This battery was developed with both therapist and administrative input to meet the needs of participants and clinicians at each facility across the rehabilitation continuum, and it is considered the minimum assessment requirement for all persons with stroke. The battery encompasses the domains of motor (PT, OT), cognition (OT, SLP) and language (SLP) (see Appendix 1 for a brief summary), with approximately nine measures in the PT battery, 14 in the OT battery and 16 in the SLP battery.20 Not every measure is required at every setting. Reflecting clinical needs, the measurements are more impairment-based in the acute setting, while the outpatient assessments include participation measures. Only a portion of the measures required at the admission assessment are required for the discharge (IRF) or monthly (outpatient) assessments. In addition, some assessments are conditional based on participant results on brief screening measures. For example, the 15-item Boston Naming Test22 is used as a screen for possible aphasia. If a participant fails the Boston Naming Test, they are then given the Western Aphasia Battery23 to determine if aphasia is present, and if so subsequent aphasia measures are administered. However, if the participant passes the Boston Naming Test screen, then none of the subsequent aphasia measures are administered, and the evaluation takes less time. The time to complete assessments mirrors the time given at each setting for an evaluation and is presented in Table 1.

Rehabilitation data (assessment scores) are stored from participants across all three settings (acute hospital, IRF, outpatient facility) and professional disciplines (PT, OT, SLP). All participants entered into the database have a primary stroke diagnosis, have received standard rehabilitation services as prescribed by licensed clinicians at each facility and have provided informed consent to have their stroke rehabilitation data stored and used for research. Washington University Human Research Protection Office has approved the database and studies using de-identified data.
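The conditional screening described in the Methods (the Boston Naming Test example) determines which measures are actually required for a given participant, and so which measures count toward adherence. A minimal sketch of that branching follows. The function name, argument names, and the placeholder label for the subsequent aphasia measures are hypothetical; the specific follow-up measures are not named in the text and are not filled in here.

```python
def required_language_measures(passed_bnt_screen, aphasia_on_wab=None):
    """Return the language measures required under the screening logic.

    passed_bnt_screen: True if the participant passed the 15-item
        Boston Naming Test screen.
    aphasia_on_wab: result of the Western Aphasia Battery (True if aphasia
        is present); only needed when the screen was failed.
    """
    measures = ["Boston Naming Test (15-item)"]
    if passed_bnt_screen:
        # Screen passed: no further aphasia measures are administered.
        return measures
    measures.append("Western Aphasia Battery")
    if aphasia_on_wab is None:
        raise ValueError("a Western Aphasia Battery result is needed after a failed screen")
    if aphasia_on_wab:
        # Placeholder label; the specific measures are not listed in the text.
        measures.append("subsequent aphasia measures")
    return measures
```

Because the required set differs by participant, any adherence calculation would need to evaluate completion against the set returned for that participant rather than against the full battery.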

Table 1.

Clinician characteristics and average time to complete standardized assessment battery

| Setting | Years Experience | Mean Annual Turnover |
|---|---|---|
| Acute Hospital | <1–35 | 10% |
| IRF | <1–35 | 5% |
| Outpatient Facility | 2–31 | 5% |

| Time (mean minutes) | PT | OT | SLP |
|---|---|---|---|
| Acute Hospital | 20 | 39 | 40 |
| IRF | 50 | 90 | 52 |
| Outpatient Facility | 52 | 46 | 45 |

IRF = Inpatient Rehabilitation Facility

PT: Physical Therapy

OT: Occupational Therapy

SLP: Speech-Language Pathology

Average time is based on random observation of assessments conducted each month at each facility.

The acute hospital setting assessments are completed once a patient is stabilized and rehabilitation services are ordered, usually within 24 hours of admission. The IRF setting assessments are completed within 48–72 hours of admission and discharge. The outpatient setting assessments are completed at admission (first 1–2 visits) and then on a monthly basis. All assessments are administered by licensed clinicians who have been trained on these assessments, complete annual competencies on them, and are observed for consistency. Each month, clinician-specific and measure-specific feedback on adherence (defined as all measures completed at their specific time point) was extracted from the database and provided to the clinician managers. Managers used policies and procedures already in place at each setting to disseminate feedback to staff clinicians. Various events (e.g. staff meetings at each setting and within each professional discipline) were held periodically throughout the 17 month time period. Events typically included: presentation of previous monthly adherence, discussion and feedback of individual assessments, interpretation and application of assessment results, and identification and solution of barriers affecting adherence.

Statistical Analysis

SPSS version 19a was used for all statistical analyses and the criterion for statistical significance was set at p < 0.05. In this analysis, the unit of analysis is the assessment by a clinician of an individual participant at a specific time point along the rehabilitation continuum. Each assessment is required to be completed 100% of the time, regardless of whether or not it was completed at other settings or in other disciplines. The same participants were evaluated at more than one facility and by more than one discipline at each facility, with less than 5% of participants seen for only one evaluation at only one facility. Likewise, each clinician performed assessments on multiple participants over the 17 month time period. Data were aggregated across individual assessments and not across individual participants or individual clinicians. Non-parametric analyses were selected because of violations of the normality assumption. A Friedman two-way analysis of variance by ranks was used to determine if adherence to the Brain Recovery Core assessment battery differed across settings and professional disciplines. Settings (acute hospital, IRF, outpatient facility) and professional disciplines (PT, OT, SLP) were considered within group factors for this analysis. For significant results, a Wilcoxon Signed-Ranks test with Bonferroni’s Correction (p < 0.008) was performed post-hoc. A similar analysis was repeated to test whether adherence to the assessment battery varied based on when the assessment was administered at the IRF (admission, discharge) and outpatient (admission, monthly) settings (Bonferroni’s Correction (p < 0.013)). Percent adherence was then plotted, and visual inspection of the time-series data was conducted to see if events increased adherence ≥ 5% and if so, how long the increase in adherence lasted.

An improvement equal to or greater than 5% was selected to determine if any association could be found between events and improvement in adherence. Although somewhat arbitrary, 5% was considered sufficient to indicate a real improvement in adherence without being too high an expectation.
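For readers less familiar with the rank-based test named above, the following is a minimal sketch of the Friedman statistic and of a Bonferroni-adjusted threshold. It is not the SPSS procedure used in the study. It assumes that the blocks (rows) are months and the conditions (columns) are setting-by-discipline combinations, which is our reading and is consistent with the reported 8 degrees of freedom for nine combinations. It applies no tie correction to the statistic, and it does not compute a p-value. The comparison counts of six and four used for the Bonferroni example are likewise assumptions chosen because they reproduce the reported thresholds of 0.008 and 0.013; the text does not state them.

```python
def average_ranks(values):
    """Rank values from 1..k, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for pos in range(i, j + 1):
            ranks[order[pos]] = mean_rank
        i = j + 1
    return ranks


def friedman_statistic(blocks):
    """Friedman chi-square (no tie correction) and its degrees of freedom.

    blocks: list of n rows, each holding k adherence values
    (one per condition) observed in the same block.
    """
    n = len(blocks)
    k = len(blocks[0])
    rank_sums = [0.0] * k
    for row in blocks:
        for j, rank in enumerate(average_ranks(row)):
            rank_sums[j] += rank
    q = 12.0 / (n * k * (k + 1)) * sum(r * r for r in rank_sums) - 3.0 * n * (k + 1)
    return q, k - 1


def bonferroni_alpha(alpha, comparisons):
    """Per-comparison significance threshold under Bonferroni's correction."""
    return alpha / comparisons


# Toy data (not study data): 3 blocks, 3 conditions, identical ordering in every block.
toy_q, toy_df = friedman_statistic([[0.5, 0.6, 0.7]] * 3)

# Assumed comparison counts; see the note above.
alpha_settings_disciplines = bonferroni_alpha(0.05, 6)
alpha_assessment_time = bonferroni_alpha(0.05, 4)
```

The statistic grows as the conditions are ranked more consistently across blocks; a significant result is then followed by pairwise tests evaluated against the adjusted threshold.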

Results

The majority of clinicians treating participants post-stroke across all three settings were female and professional experience ranged from 0 to 35 years. Average yearly turnover rate is 5–10% across disciplines (Table 1). The demographics and distribution of stroke participants across services are shown in Table 2.

Table 2.

Sample characteristics, Mean (SD) or %

| | Acute (n = 2083) | IRF (n = 397) | Outpatient (n = 155) |
|---|---|---|---|
| Age at stroke, years | 63 (15) | 62 (13) | 57 (12) |
| Gender | | | |
| Women | 52% | 50% | 46% |
| Men | 48% | 50% | 54% |
| Race | | | |
| African American | 39% | 61% | 55% |
| Caucasian | 58% | 37% | 25% |
| Asian | 1% | 1% | 1% |
| Other/missing | 2% | 1% | 19% |
| First Stroke | 70% | 66% | 58% |
| Days, Median (IQ) | | | |
| Stroke to Acute Assessment | 0 (0) | | |
| Stroke to IRF Assessment | | 5 (7) | |
| *Stroke to Outpatient Assessment | | | 57 (91) |

IRF = Inpatient Rehabilitation Facility

*Stroke to Outpatient Assessment includes the combination of participants with new strokes as well as participants with more chronic stroke-related disabilities.

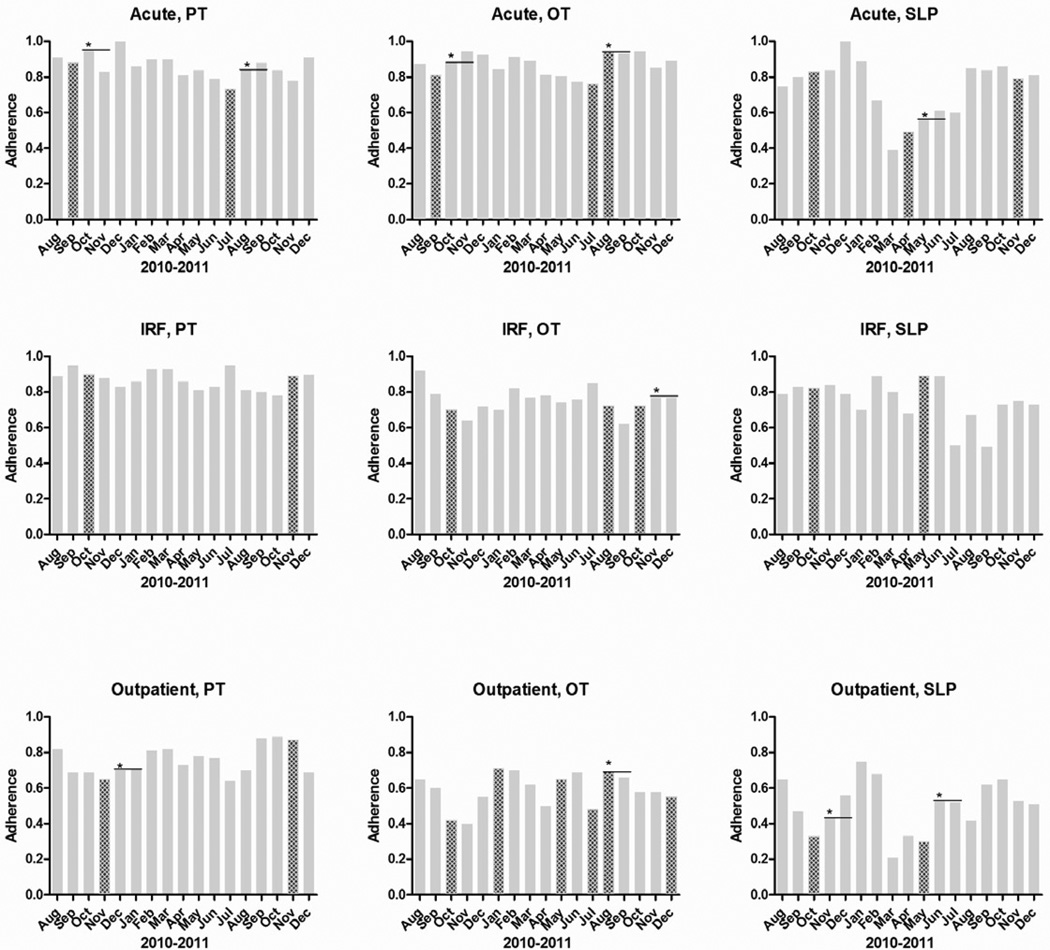

Figure 1 shows adherence rates by setting (rows) and discipline (columns) on a monthly basis. Median adherence ranged from 0.52 to 0.88 across all settings and professional disciplines (Table 3). Friedman’s test statistic χ2 (8) = 81.454 was significant (p < .001). Post hoc testing was conducted to examine differences across settings and disciplines. Of the three settings, the acute and IRF settings were not significantly different (p = .256); however, both had significantly higher adherence than the outpatient setting (p ≤ .001). Of the three disciplines, PT had the highest adherence, followed by OT and then SLP (p ≤ .004). At the IRF and outpatient facility, adherence with the admission assessment was greater than with the discharge or monthly assessment, respectively, and more IRF discharge assessments were completed than outpatient monthly assessments (Table 3; p ≤ .002).

Figure 1.

Percent adherence to measures across setting (horizontal) and discipline (vertical). The patterned columns denote months when events (e.g. staff meetings at each institution and within each professional discipline) targeted to improve adherence occurred. The * denotes ≥ 5% increase in adherence during the month following the event. The – denotes the level of the ≥ 5% increase in adherence to see if the improvement in adherence was maintained up to 2 months after the event.

Table 3.

Percent adherence across setting and discipline (top) and time (bottom). Values are median (IQ).

| Setting | PT | OT | SLP | Median Across Setting (IQ) |
|---|---|---|---|---|
| Acute facility | 0.86 (0.82–0.91) | 0.88 (0.81–0.93) | 0.80 (0.61–0.85) | 0.84 (0.73–0.88) |
| IRF | 0.88 (0.82–0.92) | 0.76 (0.71–0.79) | 0.79 (0.69–0.84) | 0.80 (0.76–0.83) |
| Outpatient facility | 0.73 (0.69–0.82) | 0.60 (0.53–0.68) | 0.52 (0.38–0.64) | 0.60 (0.55–0.71) † |
| Median Across Discipline (IQ) | 0.84 (0.80–0.85)* | 0.74 (0.71–0.76)* | 0.68 (0.62–0.74)* | |

| Assessment Time | PT | OT | SLP | Median Across Time (IQ) |
|---|---|---|---|---|
| IRF Admission | 0.94 (0.89–0.97) | 0.79 (0.74–0.85) | 0.79 (0.69–0.86) | 0.85 (0.79–0.88) |
| IRF Discharge | 0.82 (0.71–0.88) | 0.65 (0.58–0.68) | 0.76 (0.71–0.87) | 0.74 (0.67–0.82) ‡ |
| Outpatient Admission | 0.92 (0.88–0.96) | 0.78 (0.62–0.81) | 0.71 (0.63–0.81) | 0.78 (0.65–0.84) |
| Outpatient Monthly | 0.65 (0.56–0.78) | 0.38 (0.23–0.49) | 0.29 (0.20–0.41) | 0.46 (0.35–0.54) ‡ |

IRF = Inpatient Rehabilitation Facility

*Significance across discipline: PT and OT (p < .001), PT and SLP (p < .001), and OT and SLP (p = .004).

†Significance across setting: Acute facility and Outpatient facility (p < .001) and IRF and Outpatient facility (p = .001).

‡Significance across assessment time: IRF Admission and IRF Discharge (p = .002), Outpatient Admission and Outpatient Monthly (p < .001), and IRF Discharge and Outpatient Monthly (p < .001).

For the duration of the 17 month time period, feedback was provided on a monthly basis to managers at each setting showing actual clinician- and measure-specific adherence to the required assessment battery. In addition, 25 events were conducted across settings and disciplines throughout the 17 month period to improve adherence. Of these 25 events, 10 (40%) were followed by a ≥ 5% increase in adherence the following month (Figure 1). Of the 10 events that were followed by increased adherence, 6 services (60%) maintained their increased level of adherence for at least one additional month. For example, in April the SLPs at the acute setting had an event. In the following month, May, there was a greater than 5% increase in adherence, and that gain was maintained for an additional month, June.
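The rule applied by visual inspection can be written out as a short sketch. It rests on assumptions that the text does not settle: that the ≥ 5% increase is measured in percentage points relative to the month of the event, and that a gain counts as maintained when adherence two months after the event is still at or above the event-month value plus 5 points. The function name and the example series are hypothetical.

```python
def classify_event(monthly_adherence, event_index, min_gain=0.05, tol=1e-9):
    """Classify one event against a monthly adherence series.

    monthly_adherence: adherence proportions (0-1), one per month.
    event_index: position of the month in which the event was held.
    Returns (increased, maintained); maintained is None when the series
    ends before the second month after the event.
    """
    if not 0 <= event_index < len(monthly_adherence) - 1:
        raise ValueError("need at least one month of data after the event")
    # Assumed target level: event-month adherence plus the minimum gain.
    target = monthly_adherence[event_index] + min_gain
    increased = monthly_adherence[event_index + 1] >= target - tol
    if not increased:
        return False, False
    if event_index + 2 >= len(monthly_adherence):
        return True, None
    maintained = monthly_adherence[event_index + 2] >= target - tol
    return True, maintained


# Made-up series for illustration (not study data).
series = [0.60, 0.62, 0.70, 0.71, 0.58]
after_second_month_event = classify_event(series, 1)
after_fourth_month_event = classify_event(series, 3)
```

Under a relative rather than percentage-point reading of "5%", the target line would instead be the event-month value multiplied by 1.05, which would classify some borderline events differently.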

Discussion

This report offers new information examining clinician adherence across both professional rehabilitation discipline and setting among clinicians treating the post-stroke population. Median clinician adherence to the standardized assessments of the BRC varied from 0.52 to 0.88 across a 17 month period. The acute hospital and IRF settings and professional discipline of PT were found to have the highest adherence. Admission assessments were more often completed than the discharge or monthly assessments. Throughout this time period, monthly clinician- and measure-specific feedback was provided and staff events were held. In the month following an event, there was an increase in adherence 40% of the time and this was maintained for an additional month in 60% of those cases.

Data on clinician adherence to standardized assessments have most commonly been collected via self-report/survey.1, 4, 10, 24 Although this method can reach a wider distribution of clinicians and is quicker, it is potentially biased by the clinician’s perception of adherence to standardized assessments versus actual adherence. The current study is an important addition to the literature on clinician adherence, as it reports actual adherence to standardized assessments across settings and disciplines. Despite the difference in methodology, clinician adherence presented in this report is generally comparable to that in other published studies (48–70% adherence).1, 4, 25, 26 In a similar clinical database targeted at acute physical therapy clinical practice, full adherence to the computerized system was found.8 In that project, an electronic medical record system was built utilizing the defined measures that clinicians were expected to complete. Here in the BRC, the acute hospital is the only setting with an electronic medical record. This may, in part, explain why a higher level of adherence was seen in the acute setting when compared to the outpatient facility. In addition, it is noted that the clinician turnover rate is twice as high in the acute setting, yet acute clinicians have significantly higher adherence than those at the outpatient facility. These findings, in addition to those of a third survey,10 are in sharp contrast with self-report data indicating that outpatient therapists are four times more likely than acute therapists and 10 times more likely than inpatient rehabilitation therapists to use standardized outcome measures.1

Audit and feedback techniques have been shown to improve clinical practice through increasing adherence to clinical guidelines.16–18 Monthly audits of missing data were conducted throughout the 17 month period with the information disseminated back to the clinicians. Despite this process, no trend or steady improvement over time was detected across setting or professional discipline. Structured events, which have been shown to improve clinical practice19, were held, but were followed by improved adherence the following month only 40% of the time. Since the increased adherence was sustained for an additional month 60% of the time, we were only effective in increasing longer-term adherence 24% (6/25) of the time an event was held. Collectively, these numbers indicate that sustainability of uniform standardized assessment use in clinical practice is complex. Using implementation and sustainability methods suggested by the healthcare implementation literature had only small influences on adherence. New methods for promoting adherence and sustaining adherence clearly need to be developed.
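The chain of proportions in this paragraph can be checked directly from the reported counts. The sketch below uses only the three counts given in the text; the variable and function names are ours.

```python
def percent(part, whole):
    """Percentage of `whole` represented by `part`."""
    return 100 * part / whole


events_held = 25              # events conducted over the 17 months
events_followed_by_gain = 10  # followed by a >= 5% increase the next month
gains_maintained = 6          # gains kept for at least one additional month

pct_followed_by_gain = percent(events_followed_by_gain, events_held)      # 40% of events
pct_gains_maintained = percent(gains_maintained, events_followed_by_gain)  # 60% of gains
pct_longer_term = percent(gains_maintained, events_held)                   # 24% of events
```

The last figure is simply the product of the first two proportions (0.40 × 0.60 = 0.24), which is why a 60% maintenance rate still corresponds to a longer-term effect for fewer than one in four events.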

It is unclear if the higher adherence seen in PT is a reflection of discipline versus time to complete the required assessments. Broad agreement and discussion about common use of standardized assessment tools has been a focus of the discipline of PT1, 4, 5, 7, 8, 10, 12, 27 for a greater duration of time compared to OT28, 29 and SLP.30, 31 As a result, completion of the BRC standardized assessment battery may have been a more natural transition, leading to higher adherence in PT compared to the other disciplines. Another factor that may explain different adherence rates across disciplines is the time to complete assessments. Both OT and SLP assessed two domains, whereas PT assessed only one. OT assessed motor and cognition as well as screened for language deficits, and SLP assessed both cognition and language. PT, however, assessed only the motor domain. As a result, the standardized assessments were more encompassing for OT and SLP, yet each battery was designed to be completed in the time allotted for an evaluation at each setting. As expected, exceptions requiring longer assessment times were seen across clinicians and in patients with greater deficits. Nonetheless, clinicians were generally able to complete the evaluations within the required timeframes. It is therefore difficult to ascertain whether the higher adherence seen in PT is an artifact of discipline history with standardized assessments or if it is due to the length of the assessments.

Study Limitations

Two limitations are important to consider when interpreting these results. First, the majority of the patients were evaluated by multiple disciplines and at more than one setting. Less than 5% of patients were evaluated at only one setting and by only one discipline. From a statistical perspective, our data violate the assumption of independent observations. There is no way to avoid this violation because post-stroke rehabilitation across the continuum of care is an interdisciplinary endeavor. The acute hospital requires evaluation of all participants post-stroke by both physical and occupational therapy, with results on several measures triggering speech-language pathology evaluation. The IRF requires an admission evaluation by all three disciplines. It is common that participants will require services after discharge from the acute care hospital and will then receive services from the IRF and/or outpatient facility. Likewise, each therapist evaluated numerous patients in the data set. It is possible that particular patients might be more likely to have completed all the assessments (e.g. a person with very mild stroke) or be less likely to have completed all the assessments (e.g. a person with severe stroke). Despite this potential bias, we were still able to detect differences in adherence across disciplines and settings.

Second, although feedback was provided monthly to hospital administrators at each facility, how this information was disseminated to individual clinicians was left to the manager and current facility policies. Different supervisors and facilities may have more or less effective strategies to encourage adherence to the standardized assessments and this may have been reflected in the results of this report.

Conclusions

Our results indicate that actual adherence to a standardized assessment battery differs across settings and across disciplines. Continuous audits of the medical record, clinician-specific feedback, and events specifically focused on increased adherence were not as effective as desired. Substantial, ongoing effort is therefore needed to maintain and/or increase sustainability of using standardized assessment batteries in stroke rehabilitation. Future work is needed to develop new processes to promote adherence and then to test the effectiveness of those processes.

Acknowledgments

We thank the staff, administrators, and data entry team at Barnes Jewish Hospital, The Rehabilitation Institute of Saint Louis, and Washington University for their enthusiasm, support, and efforts on this project. Funding was provided by HealthSouth Corporation, the Barnes Jewish Hospital Foundation, and the Washington University McDonnell Center for Systems Neuroscience.

Financial Disclosure We certify that no party having a direct interest in the results of the research supporting this article has or will confer a benefit on us or on any organization with which we are associated AND, if applicable, we certify that all financial and material support for this research (eg, NIH or NHS grants) and work are clearly identified in the title page of the manuscript.

Abbreviations

- IRF: Inpatient Rehabilitation Facility

- PT: Physical Therapy

- OT: Occupational Therapy

- SLP: Speech-Language Pathology

Footnotes

These data were presented at the 2013 American Physical Therapy Association Meeting in San Diego, California, January 21–24, 2013.

Supplier

IBM Corporation; Armonk, New York

References

- 1. Jette DU, Halbert J, Iverson C, Miceli E, Shah P. Use of standardized outcome measures in physical therapist practice: Perceptions and applications. Phys Ther. 2009;89:125–135. doi: 10.2522/ptj.20080234.

- 2. Kramer A, Holthaus D. Uniform patient assessment for post-acute care. 2006.

- 3. Lansky D, Butler JB, Waller FT. Using health status measures in the hospital setting: From acute care to 'outcomes management'. Med Care. 1992;30:MS57–MS73. doi: 10.1097/00005650-199205001-00006.

- 4. Russek L, Wooden M, Ekedahl S, Bush A. Attitudes toward standardized data collection. Phys Ther. 1997;77:714–729. doi: 10.1093/ptj/77.7.714.

- 5. Swinkels RA, van Peppen RP, Wittink H, Custers JW, Beurskens AJ. Current use and barriers and facilitators for implementation of standardised measures in physical therapy in the Netherlands. BMC Musculoskelet Disord. 2011;12:106. doi: 10.1186/1471-2474-12-106.

- 6. Garland AF, Kruse M, Aarons GA. Clinicians and outcome measurement: What's the use? J Behav Health Serv Res. 2003;30:393–405. doi: 10.1007/BF02287427.

- 7. Potter K, Fulk GD, Salem Y, Sullivan J. Outcome measures in neurological physical therapy practice: Part I. Making sound decisions. J Neurol Phys Ther. 2011;35:57–64. doi: 10.1097/NPT.0b013e318219a51a.

- 8. Shields RK, Leo KC, Miller B, Dostal WF, Barr R. An acute care physical therapy clinical practice database for outcomes research. Phys Ther. 1994;74:463–470. doi: 10.1093/ptj/74.5.463.

- 9. Vogel AP, Maruff P, Morgan AT. Evaluation of communication assessment practices during the acute stages post stroke. J Eval Clin Pract. 2010;16:1183–1188. doi: 10.1111/j.1365-2753.2009.01291.x.

- 10. Van Peppen RP, Maissan FJ, Van Genderen FR, Van Dolder R, Van Meeteren NL. Outcome measures in physiotherapy management of patients with stroke: A survey into self-reported use, and barriers to and facilitators for use. Physiother Res Int. 2008;13:255–270. doi: 10.1002/pri.417.

- 11. Deyo RA, Patrick DL. Barriers to the use of health status measures in clinical investigation, patient care, and policy research. Med Care. 1989;27:S254–S268. doi: 10.1097/00005650-198903001-00020.

- 12. Stevens JG, Beurskens AJ. Implementation of measurement instruments in physical therapist practice: Development of a tailored strategy. Phys Ther. 2010;90:953–961. doi: 10.2522/ptj.20090105.

- 13. Bayley MT, Hurdowar A, Richards CL, Korner-Bitensky N, Wood-Dauphinee S, Eng JJ, McKay-Lyons M, Harrison E, Teasell R, Harrison M, Graham ID. Barriers to implementation of stroke rehabilitation evidence: Findings from a multi-site pilot project. Disabil Rehabil. 2012;34:1633–1638. doi: 10.3109/09638288.2012.656790.

- 14. Wedge FM, Braswell-Christy J, Brown CJ, Foley KT, Graham C, Shaw S. Factors influencing the use of outcome measures in physical therapy practice. Physiother Theory Pract. 2012;28:119–133. doi: 10.3109/09593985.2011.578706.

- 15. Baker R, Camosso-Stefinovic J, Gillies C, Shaw EJ, Cheater F, Flottorp S, Robertson N. Tailored interventions to overcome identified barriers to change: Effects on professional practice and health care outcomes. Cochrane Database Syst Rev. 2010:CD005470. doi: 10.1002/14651858.CD005470.pub2.

- 16. Grimshaw JM, Thomas RE, MacLennan G, Fraser C, Ramsay CR, Vale L, Whitty P, Eccles MP, Matowe L, Shirran L, Wensing M, Dijkstra R, Donaldson C. Effectiveness and efficiency of guideline dissemination and implementation strategies. Health Technol Assess. 2004;8:iii–iv, 1–72. doi: 10.3310/hta8060.

- 17. Jamtvedt G, Young JM, Kristoffersen DT, O'Brien MA, Oxman AD. Does telling people what they have been doing change what they do? A systematic review of the effects of audit and feedback. Qual Saf Health Care. 2006;15:433–436. doi: 10.1136/qshc.2006.018549.

- 18. Jamtvedt G, Young JM, Kristoffersen DT, O'Brien MA, Oxman AD. Audit and feedback: Effects on professional practice and health care outcomes. Cochrane Database Syst Rev. 2006:CD000259. doi: 10.1002/14651858.CD000259.pub2.

- 19. van der Wees PJ, Jamtvedt G, Rebbeck T, de Bie RA, Dekker J, Hendriks EJ. Multifaceted strategies may increase implementation of physiotherapy clinical guidelines: A systematic review. Aust J Physiother. 2008;54:233–241. doi: 10.1016/s0004-9514(08)70002-3.

- 20. Lang CE, Bland MD, Connor LT, Fucetola R, Whitson M, Edmiaston J, Karr C, Sturmoski A, Baty J, Corbetta M. The Brain Recovery Core: Building a system of organized stroke rehabilitation and outcomes assessment across the continuum of care. J Neurol Phys Ther. 2011;35:194–201. doi: 10.1097/NPT.0b013e318235dc07.

- 21. Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research electronic data capture (REDCap)--a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009;42:377–381. doi: 10.1016/j.jbi.2008.08.010.

- 22. Calero MD, Arnedo ML, Navarro E, Ruiz-Pedrosa M, Carnero C. Usefulness of a 15-item version of the Boston Naming Test in neuropsychological assessment of low-educational elders with dementia. J Gerontol B Psychol Sci Soc Sci. 2002;57:P187–P191. doi: 10.1093/geronb/57.2.p187.

- 23. Kirk A. Target symptoms and outcome measures: Cognition. Can J Neurol Sci. 2007;34(Suppl 1):S42–S46. doi: 10.1017/s0317167100005552.

- 24. Otterman NM, van der Wees PJ, Bernhardt J, Kwakkel G. Physical therapists' guideline adherence on early mobilization and intensity of practice at Dutch acute stroke units: A countrywide survey. Stroke. 2012. doi: 10.1161/STROKEAHA.112.660092.

- 25. Duncan PW, Horner RD, Reker DM, Samsa GP, Hoenig H, Hamilton B, LaClair BJ, Dudley TK. Adherence to postacute rehabilitation guidelines is associated with functional recovery in stroke. Stroke. 2002;33:167–177. doi: 10.1161/hs0102.101014.

- 26. Grol R. Successes and failures in the implementation of evidence-based guidelines for clinical practice. Med Care. 2001;39:II46–II54. doi: 10.1097/00005650-200108002-00003.

- 27. Sullivan JE, Andrews AW, Lanzino D, Perron AE, Potter KA. Outcome measures in neurological physical therapy practice: Part II. A patient-centered process. J Neurol Phys Ther. 2011;35:65–74. doi: 10.1097/NPT.0b013e31821a24eb.

- 28. AOTA's centennial vision and executive summary. The American Journal of Occupational Therapy. 2007;61:613–614.

- 29. Wolf TJ, Baum C, Connor LT. Changing face of stroke: Implications for occupational therapy practice. Am J Occup Ther. 2009;63:621–625. doi: 10.5014/ajot.63.5.621.

- 30. Yorkston KMSK, Duffy J, Beukelman D, Golper LA, Miller R, Strand E, Sullivan M. Evidence-based medicine and practice guidelines: Application to the field of speech-language pathology. Journal of Medical Speech-Language Pathology. 2001;9:243–256.

- 31. Ratner NB. Evidence-based practice: An examination of its ramifications for the practice of speech-language pathology. Lang Speech Hear Serv Sch. 2006;37:257–267. doi: 10.1044/0161-1461(2006/029).
