Abstract

A new iterative scheme is constructed for finding the minimal solution of the rational matrix equation $X + A^{*}X^{-1}A = I$. The new method is inversion-free at each computing step. The convergence of the method is studied and tested via numerical experiments.

1. Introduction

In this paper, we will discuss the following nonlinear matrix equation:

$$X + A^{*}X^{-1}A = I, \tag{1}$$

where $A$ is an $n \times n$ nonsingular complex matrix, $I$ is the identity matrix of the appropriate size, and $X \in \mathbb{C}^{n \times n}$ is the unknown Hermitian positive definite (HPD) matrix to be found. It was proved in [1] that if (1) has an HPD solution, then all its Hermitian solutions are positive definite and, moreover, it has a maximal solution $X_L$ and a minimal solution $X_S$ in the sense that $X_S \le X \le X_L$ for any HPD solution $X$.
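As a quick illustration of the extreme solutions, consider the scalar case $n = 1$ with a real number $a \neq 0$ in place of $A$. This special case is an illustrative assumption and is not taken from the paper.

```latex
x + \frac{a^{2}}{x} = 1
\;\Longleftrightarrow\;
x^{2} - x + a^{2} = 0
\;\Longrightarrow\;
x_{S} = \frac{1 - \sqrt{1 - 4a^{2}}}{2},
\qquad
x_{L} = \frac{1 + \sqrt{1 - 4a^{2}}}{2}.
```

Positive real solutions exist exactly when $|a| \le 1/2$, and $x_S + x_L = 1$. For instance, $a = 0.3$ gives $x_S = 0.1$ and $x_L = 0.9$.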

Many papers have been published on iterative methods for the HPD solutions of such nonlinear rational matrix equations, owing to their importance in practical problems arising in control theory, dynamical problems, and so forth (see [2, 3]).

The most common iterative method for finding the maximal solution of (1) is the following fixed-point iteration [4]:

$$X_{k+1} = I - A^{*}X_k^{-1}A, \qquad X_0 = I. \tag{2}$$

The maximal solution of (1) can be obtained through $X_L = I - Y_S$, where $Y_S$ is the minimal solution of the dual equation $Y + AY^{-1}A^{*} = I$.
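To make the fixed-point idea concrete, the following is a minimal pure-Python sketch. It assumes that (2) has the standard form $X_{k+1} = I - A^{*}X_k^{-1}A$ with $X_0 = I$. The helper names (`matmul`, `inverse`, `fixed_point_max`, `residual`), the tolerance, and the 2×2 test matrix are hypothetical choices made for illustration and do not come from the paper.

```python
# Sketch of a fixed-point iteration for the maximal solution of X + A* X^{-1} A = I.
# Assumed form (not confirmed by the source): X_{k+1} = I - A* X_k^{-1} A, X_0 = I.

def identity(n):
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]

def matmul(P, Q):
    return [[sum(P[i][k] * Q[k][j] for k in range(len(Q)))
             for j in range(len(Q[0]))] for i in range(len(P))]

def ctranspose(M):
    # Conjugate transpose; Python floats and complex numbers both have .conjugate().
    return [[M[j][i].conjugate() for j in range(len(M))] for i in range(len(M[0]))]

def lincomb(a, P, b, Q):
    # Entrywise a*P + b*Q.
    return [[a * p + b * q for p, q in zip(rp, rq)] for rp, rq in zip(P, Q)]

def inf_norm(M):
    # Maximum absolute row sum.
    return max(sum(abs(v) for v in row) for row in M)

def inverse(M):
    # Gauss-Jordan elimination with partial pivoting.
    n = len(M)
    aug = [list(M[i]) + identity(n)[i] for i in range(n)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(aug[r][c]))
        if aug[p][c] == 0:
            raise ValueError("matrix is singular")
        aug[c], aug[p] = aug[p], aug[c]
        piv = aug[c][c]
        aug[c] = [v / piv for v in aug[c]]
        for r in range(n):
            if r != c:
                f = aug[r][c]
                aug[r] = [vr - f * vc for vr, vc in zip(aug[r], aug[c])]
    return [row[n:] for row in aug]

def fixed_point_max(A, tol=1e-12, max_iter=500):
    n = len(A)
    I, Ah = identity(n), ctranspose(A)
    X = I
    for k in range(1, max_iter + 1):
        # An inverse of the current iterate is needed at every step.
        X_new = lincomb(1, I, -1, matmul(matmul(Ah, inverse(X)), A))
        if inf_norm(lincomb(1, X_new, -1, X)) <= tol:
            return X_new, k
        X = X_new
    raise RuntimeError("no convergence within max_iter iterations")

def residual(A, X):
    # Infinity norm of X + A* X^{-1} A - I.
    T = matmul(matmul(ctranspose(A), inverse(X)), A)
    return inf_norm(lincomb(1, lincomb(1, X, 1, T), -1, identity(len(A))))

A = [[0.2, 0.1], [0.05, 0.3]]   # hypothetical test matrix with small norm
X_L, iters = fixed_point_max(A)
print(iters, residual(A, X_L))
```

The call to `inverse` inside the loop is the cost that inversion-free schemes aim to avoid.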

In 2010, Monsalve and Raydan [5] proposed the following iteration (also known as Newton's method) for finding the minimal solution:

$$X_{k+1} = 2X_k - X_k A^{-*}(I - X_k)A^{-1}X_k, \qquad X_0 = AA^{*}, \tag{3}$$

which is an inversion-free scheme, since $A^{-1}$ needs to be computed only once, in contrast to the matrix iteration (2). Note that $A^{-*} = (A^{-1})^{*}$, and similar notation is used throughout.
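The inversion-free idea can be sketched in pure Python as follows. The sketch assumes that (3) is the Newton (Schulz-type) step $X_{k+1} = 2X_k - X_k H_k X_k$ with $H_k = A^{-*}(I - X_k)A^{-1}$ and $X_0 = AA^{*}$. The function names, the tolerance, and the test matrix are hypothetical and serve only as an illustration.

```python
# Sketch of a Newton-type inversion-free iteration for the minimal solution.
# Assumed form (not confirmed by the source):
#   X_{k+1} = 2 X_k - X_k H_k X_k,  H_k = A^{-*} (I - X_k) A^{-1},  X_0 = A A*.

def identity(n):
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]

def matmul(P, Q):
    return [[sum(P[i][k] * Q[k][j] for k in range(len(Q)))
             for j in range(len(Q[0]))] for i in range(len(P))]

def ctranspose(M):
    # Conjugate transpose; works for float and complex entries.
    return [[M[j][i].conjugate() for j in range(len(M))] for i in range(len(M[0]))]

def lincomb(a, P, b, Q):
    # Entrywise a*P + b*Q.
    return [[a * p + b * q for p, q in zip(rp, rq)] for rp, rq in zip(P, Q)]

def inf_norm(M):
    return max(sum(abs(v) for v in row) for row in M)

def inverse(M):
    # Gauss-Jordan elimination with partial pivoting.
    n = len(M)
    aug = [list(M[i]) + identity(n)[i] for i in range(n)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(aug[r][c]))
        if aug[p][c] == 0:
            raise ValueError("matrix is singular")
        aug[c], aug[p] = aug[p], aug[c]
        piv = aug[c][c]
        aug[c] = [v / piv for v in aug[c]]
        for r in range(n):
            if r != c:
                f = aug[r][c]
                aug[r] = [vr - f * vc for vr, vc in zip(aug[r], aug[c])]
    return [row[n:] for row in aug]

def newton_min(A, tol=1e-12, max_iter=500):
    n = len(A)
    I = identity(n)
    A_inv = inverse(A)             # the only inversion used by the iteration
    A_inv_h = ctranspose(A_inv)    # A^{-*}
    X = matmul(A, ctranspose(A))   # X_0 = A A*
    for k in range(1, max_iter + 1):
        H = matmul(matmul(A_inv_h, lincomb(1, I, -1, X)), A_inv)
        X_new = lincomb(2, X, -1, matmul(matmul(X, H), X))
        if inf_norm(lincomb(1, X_new, -1, X)) <= tol:
            return X_new, k
        X = X_new
    raise RuntimeError("no convergence within max_iter iterations")

def residual(A, X):
    # Check only: infinity norm of X + A* X^{-1} A - I (uses an inverse of X).
    T = matmul(matmul(ctranspose(A), inverse(X)), A)
    return inf_norm(lincomb(1, lincomb(1, X, 1, T), -1, identity(len(A))))

A = [[0.2, 0.1], [0.05, 0.3]]   # hypothetical test matrix with small norm
X_S, iters = newton_min(A)
print(iters, residual(A, X_S))
```

Inside the loop only matrix products appear; `inverse` is called once on `A`, plus once in the optional residual check.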

Remark 1 . —

We remark that there are several well-known iterative methods for solving (1) other than Newton's method (3). To the best of our knowledge, extending higher-order iterative methods to the solution of (1) has not yet been explored. Hence, we hope that this link between root-finding and solving (1) may lead to novel techniques.

The rest of this paper is organized as follows. In Section 2, we develop and analyze a new inversion-free method for finding roots of a special map $F$. In Section 3, we provide numerical comparisons through experiments in machine precision. Concluding remarks are drawn in Section 4.

2. A New Iterative Method

An equivalent formulation of (1) is to find an HPD matrix $X$ such that $F(X) = 0$. Toward this goal, we write $F(X) := X + A^{*}X^{-1}A - I = 0$. Furthermore, we have

| (4) |

Now, using the change of variable $Z = A^{-1}XA^{-*}$, we can simplify (4) as follows:

| (5) |
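The effect of this change of variable can be checked directly from (1). The following short derivation is a sketch that uses only the substitution $Z = A^{-1}XA^{-*}$; it is not a reproduction of the displayed equations (4) and (5).

```latex
\begin{aligned}
X &= AZA^{*}, \qquad X^{-1} = A^{-*}Z^{-1}A^{-1}
  \;\Longrightarrow\; A^{*}X^{-1}A = Z^{-1}, \\
I - X &= I - AZA^{*} = A\left(A^{-1}A^{-*} - Z\right)A^{*}, \\
X + A^{*}X^{-1}A = I
  &\;\Longleftrightarrow\;
  Z^{-1} - A\left(A^{-1}A^{-*} - Z\right)A^{*} = 0 .
\end{aligned}
```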

In order to obtain an iterative method for finding the minimal HPD solution of (1), it is now enough to solve the well-known matrix equation $Z^{-1} - B = 0$, in which $B = A(A^{-1}A^{-*} - Z)A^{*}$. One way to tackle this matrix inversion problem is to apply Schulz-type iteration methods (see, e.g., [6–10]). Applying Chebyshev's method [11] yields

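$$X_{k+1} = X_k\left[3I - H_k X_k\left(3I - H_k X_k\right)\right], \qquad H_k = A^{-*}(I - X_k)A^{-1}. \tag{6}$$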

We remark that there is a tight relationship between iterative methods for nonlinear systems and the construction of higher-order methods for matrix equations ([12, 13]).

The matrix iteration (6) requires $A^{-1}$ to be computed only once, at the beginning of the iteration, which places the method in the category of inversion-free algorithms for solving (1).
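A minimal pure-Python sketch of such a Chebyshev-type iteration is given below. It assumes that (6) is Chebyshev's third-order scheme for matrix inversion applied with a matrix $H_k = A^{-*}(I - X_k)A^{-1}$ that is refreshed at every step, started from $X_0 = AA^{*}$. The function names, the tolerance, and the test matrix are hypothetical.

```python
# Sketch of a Chebyshev-type inversion-free iteration for the minimal solution.
# Assumed form (not confirmed by the source):
#   X_{k+1} = X_k [3I - H_k X_k (3I - H_k X_k)],  H_k = A^{-*} (I - X_k) A^{-1}.

def identity(n):
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]

def matmul(P, Q):
    return [[sum(P[i][k] * Q[k][j] for k in range(len(Q)))
             for j in range(len(Q[0]))] for i in range(len(P))]

def ctranspose(M):
    # Conjugate transpose; works for float and complex entries.
    return [[M[j][i].conjugate() for j in range(len(M))] for i in range(len(M[0]))]

def lincomb(a, P, b, Q):
    # Entrywise a*P + b*Q.
    return [[a * p + b * q for p, q in zip(rp, rq)] for rp, rq in zip(P, Q)]

def inf_norm(M):
    return max(sum(abs(v) for v in row) for row in M)

def inverse(M):
    # Gauss-Jordan elimination with partial pivoting.
    n = len(M)
    aug = [list(M[i]) + identity(n)[i] for i in range(n)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(aug[r][c]))
        if aug[p][c] == 0:
            raise ValueError("matrix is singular")
        aug[c], aug[p] = aug[p], aug[c]
        piv = aug[c][c]
        aug[c] = [v / piv for v in aug[c]]
        for r in range(n):
            if r != c:
                f = aug[r][c]
                aug[r] = [vr - f * vc for vr, vc in zip(aug[r], aug[c])]
    return [row[n:] for row in aug]

def chebyshev_min(A, tol=1e-12, max_iter=500):
    n = len(A)
    I = identity(n)
    A_inv = inverse(A)             # computed once, before the loop
    A_inv_h = ctranspose(A_inv)    # A^{-*}
    X = matmul(A, ctranspose(A))   # X_0 = A A*
    for k in range(1, max_iter + 1):
        H = matmul(matmul(A_inv_h, lincomb(1, I, -1, X)), A_inv)
        HX = matmul(H, X)
        inner = lincomb(3, I, -1, HX)                        # 3I - H_k X_k
        X_new = matmul(X, lincomb(3, I, -1, matmul(HX, inner)))
        if inf_norm(lincomb(1, X_new, -1, X)) <= tol:
            return X_new, k
        X = X_new
    raise RuntimeError("no convergence within max_iter iterations")

def residual(A, X):
    # Check only: infinity norm of X + A* X^{-1} A - I.
    T = matmul(matmul(ctranspose(A), inverse(X)), A)
    return inf_norm(lincomb(1, lincomb(1, X, 1, T), -1, identity(len(A))))

A = [[0.2, 0.1], [0.05, 0.3]]   # hypothetical test matrix with small norm
X_S, iters = chebyshev_min(A)
print(iters, residual(A, X_S))
```

Each step costs a handful of matrix products; the residual helper is the only place where an iterate is inverted, and it is used purely as a check.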

In the meantime, it is easy to show that the zeros of the map $G(Z) = Z^{-1} - B$ correspond to the zeros of the map $F(X) = X^{-1} - H$, wherein $H = A^{-*}(I - X)A^{-1}$. To be more precise, we are finding the inverse of the matrix $H$, which matches the minimal HPD solution of (1).

Remark 2 . —

Following Remark 1, we applied Chebyshev's method for (1) in this work and will study its theoretical behavior. The extension of the other well-known root-finding schemes for finding the minimal solution of (1) will remain for future studies.

Lemma 3 . —

The proposed method (6) produces a sequence of Hermitian matrices when started from the Hermitian initial matrix $X_0 = AA^{*}$.

Proof —

The initial matrix $AA^{*}$ is Hermitian, and $H_k = A^{-*}(I - X_k)A^{-1}$. Thus, $H_0 = A^{-*}A^{-1} - A^{-*}AA^{*}A^{-1}$ is also Hermitian; that is, $H_0^{*} = H_0$. Now, using an inductive argument, we have

| (7) |

By considering $(X_l)^{*} = X_l$ $(l \ge k)$, we now show that

| (8) |

Note that $H_l = (H_l)^{*}$ has been used in (8). Now the conclusion holds for any $l + 1$. Thus, the proof is complete.
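The induction step can be spelled out as follows, under the assumption that (6) is Chebyshev's scheme $X_{k+1} = X_k[3I - H_k X_k(3I - H_k X_k)]$. This explicit form is an assumption of the sketch. If $X_k$ and $H_k$ are Hermitian, then

```latex
\begin{aligned}
X_{k+1} &= 3X_k - 3X_k H_k X_k + X_k H_k X_k H_k X_k, \\
X_{k+1}^{*} &= 3X_k^{*} - 3X_k^{*} H_k^{*} X_k^{*} + X_k^{*} H_k^{*} X_k^{*} H_k^{*} X_k^{*} = X_{k+1}, \\
H_{k+1}^{*} &= \left(A^{-*}(I - X_{k+1})A^{-1}\right)^{*} = A^{-*}\left(I - X_{k+1}^{*}\right)A^{-1} = H_{k+1}.
\end{aligned}
```

Each product in the expansion is palindromic in $X_k$ and $H_k$, so taking the conjugate transpose leaves it unchanged.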

Theorem 4 . —

Assume that $A$ and $X_k$ are nonsingular matrices. Then the sequence $\{X_k\}$ generated by (6) with the initial matrix $X_0 = AA^{*}$ converges to the minimal solution.

Proof —

Let us consider $H_k = A^{-*}(I - X_k)A^{-1}$. We therefore have

| (9) |

Taking a generic matrix operator norm of both sides of (9), we obtain

| (10) |

On the other hand, Chebyshev's method for the matrix inversion problem is convergent if the initial approximation satisfies $\|I - HX_0\| < 1$, that is, $\|I - [A^{-*}(I - X)A^{-1}]X_0\| < 1$. This together with the initial matrix $X_0 = AA^{*}$ gives

| (11) |

which is true when $X$ is the minimal HPD solution of (1).

Note that since

| (12) |

we obtain $X_0^{-1} > X_S^{-1}$; thus $X_0 < X_S$. Subsequently, by mathematical induction, it follows that $\{X_k\}$ tends to $X_S$.

The only drawback of this process is that the convergence is only q-linear. In fact, although Chebyshev's method for matrix inversion has local convergence order three, this rate is not preserved when finding the minimal HPD solution of (1).

The reason is that the matrix $H$, whose inverse is computed by Chebyshev's method, depends on $X$ itself; that is, the unknown appears inside the matrix $H = A^{-*}(I - X)A^{-1}$.
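The loss of the third-order rate can be observed in the scalar case. The sketch below assumes the Chebyshev-type step $x_{k+1} = x_k[3 - u_k(3 - u_k)]$ with $u_k = h_k x_k$ and $h_k = (1 - x_k)/a^2$, the scalar analogue of the assumed form of (6). The value $a = 0.3$ is a hypothetical choice. Differentiating this scalar map at $x_S$ gives the factor $(x_S/a)^2$, which the observed error ratios approach.

```python
import math

# Scalar analogue of X + A* X^{-1} A = I: x + a^2 / x = 1 (illustrative assumption).
a = 0.3
x_s = (1 - math.sqrt(1 - 4 * a * a)) / 2    # minimal root

x = a * a                                    # scalar analogue of X_0 = A A*
errors = [abs(x - x_s)]
for _ in range(7):
    u = (1 - x) / (a * a) * x                # scalar analogue of H_k X_k
    x = x * (3 - u * (3 - u))                # Chebyshev-type step with refreshed h_k
    errors.append(abs(x - x_s))

# Ratios of successive errors settle at a nonzero constant (q-linear convergence).
ratios = [e1 / e0 for e0, e1 in zip(errors, errors[1:])]
limit = (x_s / a) ** 2                       # derivative of the scalar map at x_s
print(ratios[-1], limit)
```

If $h$ were held fixed at its limiting value, the same step would converge cubically; refreshing $h_k$ from the current iterate is what reduces the rate to linear.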

Theorem 5 . —

The sequence of matrices produced by (6) satisfies the following error inequality:

| (13) |

where $\lambda_k = -X_S^{-1}\delta_k + X_k X_S^{2}\delta_k + 3Y_k - X_k X_S^{-1}Y_k - Y_k X_S^{-1}X_k + Y_k A^{-*}A^{-1}X_k$ and $Y_k = X_k A^{-*}A^{-1}X_k$.

Proof —

First, since $\lim_{k\to\infty} X_k = X_S$ and by using (6), we have

| (14) |

Note that we have used the fact that $I - X_S = A^{*}X_S^{-1}A$. Relation (14) yields

| (15) |

wherein $\delta_k = X_k - X_S$ and $\lambda_k = -X_S^{-1}\delta_k + X_k X_S^{2}\delta_k + 3X_k A^{-*}A^{-1}X_k - X_k X_S^{-1}X_k A^{-*}A^{-1}X_k - X_k A^{-*}A^{-1}X_k X_S^{-1}X_k + X_k A^{-*}A^{-1}X_k A^{-*}A^{-1}X_k$. We remark that $\delta_k A^{-1}X_k = A^{-1}X_k\delta_k$.

Consequently, one has the error inequality (13). This shows the q-linear order of convergence for finding the minimal HPD solution of (1). We thus have

| (16) |

which is guaranteed since

| (17) |

3. Numerical Comparisons

In this section, we investigate the performance of the new method (6) for the matrix equation (1). All experiments were run on a Pentium IV computer, using Mathematica 8 [14]. We report the number of iterations (Iter) required for convergence. In our implementations, we stop all considered methods when the infinity norm of the difference between two successive iterates is less than a given tolerance.
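The stopping rule can be sketched in a few lines of pure Python. The function names `inf_norm` and `has_converged` and the default tolerance are hypothetical; the experiments themselves were run in Mathematica, so this is only an illustration of the criterion.

```python
def inf_norm(M):
    # Matrix infinity norm: the maximum absolute row sum.
    return max(sum(abs(v) for v in row) for row in M)

def has_converged(X_new, X_old, tol=1e-8):
    # True when ||X_new - X_old||_inf <= tol.
    diff = [[p - q for p, q in zip(r_new, r_old)]
            for r_new, r_old in zip(X_new, X_old)]
    return inf_norm(diff) <= tol

X_old = [[1.0, 2.0], [3.0, 4.0]]
X_new = [[1.0, 2.0], [3.0, 4.0 + 1e-9]]
print(has_converged(X_new, X_old))
```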

Note that recently Zhang in [15] studied a way to accelerate the beginning of such iterative methods for finding the minimal solution of (1) via applying multiple Newton's method for matrix inversion. This technique could be given by

| (18) |

for any 1 ≤ t ≤ 2. Subsequently, we could improve the behavior of the new method (6) using (18) as provided in Algorithm 1.

Algorithm 1.

A hybrid method for computing the minimal HPD solution of (1).

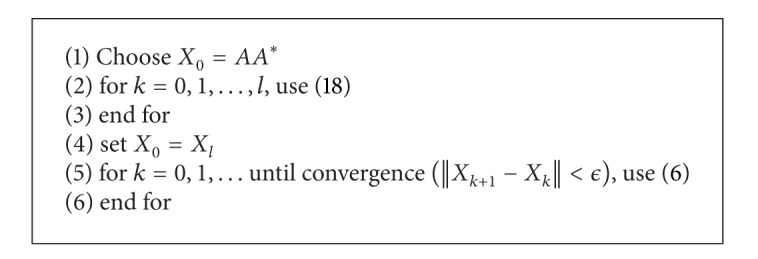

We compare Algorithm 1, denoted by PM, with (2) denoted by M1, (3) denoted by M2, and the method proposed by El-Sayed and Al-Dbiban [16] denoted by M3, which is a modification of the method presented by Zhan in [17], as follows:

| (19) |

Example 1 (see [18]). —

In this experiment, we compare the results of different methods for finding the minimal solution of (1) when the matrix $A$ is defined by

| (20) |

and the solution is

| (21) |

The results are given in Figure 1(a) in terms of the number of iterations when the stopping criterion is $\|X_{k+1} - X_k\|_{\infty} \le 10^{-8}$.

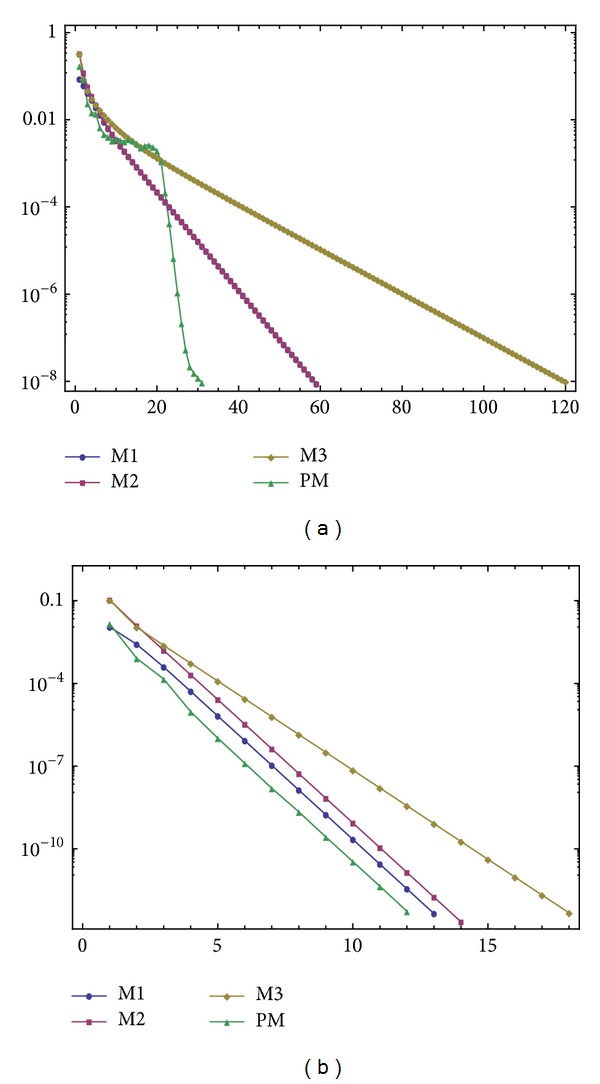

Figure 1.

Number of iterations against accuracies for experiment 1 (a) and experiment 2 (b).

Note that PM and M2 converge to $X_S$, whereas all the other schemes converge to $X_L$; thus, for a fair comparison, our codes apply the other schemes to find the maximal solution of the dual equation $D(X) = X + AX^{-1}A^{*} - I = 0$. Here $t = 2$ has been chosen for PM, with $l = 19$ iterations in the inner finite loop of Algorithm 1.

We have chosen this number empirically; varying $l$ can give better or worse results than the reported ones, depending on the example.

In Example 1, we have used $t = 2$. We have chosen this value because we are, in essence, solving an operator equation, and we treat its solution as having multiplicity 2. This makes the algorithm converge faster in the initial phase of the process; once the iterates are close enough to the solution, we switch back to the ordinary method, that is, we treat the solution as a simple zero of the operator equation.

Example 2 (see [19]). —

Applying the stopping criterion $\|X_{k+1} - X_k\|_{\infty} \le 10^{-12}$, we compare the behavior of various methods for the following test matrix:

| (22) |

with the solution

| (23) |

The results are illustrated in Figure 1(b), wherein $t = 1.2$ has been chosen for PM (with $l = 1$).

4. Conclusions

We have shown that finding the minimal HPD solution of (1) is equivalent to finding a root of a nonlinear map. This map has been treated as a matrix inversion problem and solved by the well-known Chebyshev method.

The developed method requires the computation of only one matrix inverse, at the beginning of the process, and is hence an inversion-free method. The convergence and the rate of convergence have been studied for this scheme. Furthermore, using a suitable acceleration technique from the literature, we have further sped up the process of finding the HPD solution of (1).

Acknowledgments

The authors express their sincere thanks to the referees for the careful and detailed reading of the manuscript and for very helpful suggestions that improved the manuscript substantially. The authors also gratefully acknowledge that this research was partially supported by the University Putra Malaysia under the GP-IBT Grant Scheme having project number GP-IBT/2013/9420100.

Conflict of Interests

The authors declare that there is no conflict of interests regarding the publication of this paper.

References

- 1. Engwerda JC, Ran ACM, Rijkeboer AL. Necessary and sufficient conditions for the existence of a positive definite solution of the matrix equation $X + A^{*}X^{-1}A = Q$. Linear Algebra and its Applications. 1993;186:255–275.

- 2. El-Sayed SM, Ran ACM. On an iteration method for solving a class of nonlinear matrix equations. SIAM Journal on Matrix Analysis and Applications. 2001/02;23(3):632–645.

- 3. Li J. Solutions and improved perturbation analysis for the matrix equation $X - A^{*}X^{-p}A = Q$ $(p > 0)$. Abstract and Applied Analysis. 2013;2013:12 pages. 575964.

- 4. Engwerda JC. On the existence of a positive definite solution of the matrix equation $X + A^{*}X^{-1}A = I$. Linear Algebra and its Applications. 1993;194:91–108.

- 5. Monsalve M, Raydan M. A new inversion-free method for a rational matrix equation. Linear Algebra and its Applications. 2010;433(1):64–71.

- 6. Ullah MZ, Soleymani F, Al-Fhaid AS. An efficient matrix iteration for computing weighted Moore-Penrose inverse. Applied Mathematics and Computation. 2014;226:441–454.

- 7. Soleymani F, Tohidi E, Shateyi S, Haghani FK. Some matrix iterations for computing matrix sign function. Journal of Applied Mathematics. 2014;2014:9 pages. 425654.

- 8. Soleymani F, Sharifi M, Shateyi S, Haghani F. A class of Steffensen-type iterative methods for nonlinear systems. Journal of Applied Mathematics. 2014;2014:9 pages. 705375.

- 9. Soleymani F, Stanimirović PS, Shateyi S, Haghani FK. Approximating the matrix sign function using a novel iterative method. Abstract and Applied Analysis. 2014;2014:9 pages. 105301.

- 10. Soleymani F, Sharifi M, Shateyi S, Khaksar Haghani F. An algorithm for computing geometric mean of two Hermitian positive definite matrices via matrix sign. Abstract and Applied Analysis. 2014;2014:6 pages. 978629.

- 11. Traub JF. Iterative Methods for the Solution of Equations. Englewood Cliffs, NJ, USA: Prentice Hall; 1964.

- 12. Iliev A, Kyurkchiev N. Nontrivial Methods in Numerical Analysis: Selected Topics in Numerical Analysis. LAP LAMBERT Academic Publishing; 2010.

- 13. Soleymani F, Lotfi T, Bakhtiari P. A multi-step class of iterative methods for nonlinear systems. Optimization Letters. 2014;8(3):1001–1015.

- 14. Trott M. The Mathematica Guide-Book for Numerics. New York, NY, USA: Springer; 2006.

- 15. Zhang L. An improved inversion-free method for solving the matrix equation $X + A^{*}X^{-\alpha}A = Q$. Journal of Computational and Applied Mathematics. 2013;253:200–203.

- 16. El-Sayed SM, Al-Dbiban AM. A new inversion free iteration for solving the equation $X + A^{*}X^{-1}A = Q$. Journal of Computational and Applied Mathematics. 2005;181(1):148–156.

- 17. Zhan X. Computing the extremal positive definite solutions of a matrix equation. SIAM Journal on Scientific Computing. 1996;17(5):1167–1174.

- 18. Guo CH, Lancaster P. Iterative solution of two matrix equations. Mathematics of Computation. 1999;68(228):1589–1603.

- 19. El-Sayed SM. An algorithm for computing positive definite solutions of the nonlinear matrix equation $X + A^{*}X^{-1}A = I$. International Journal of Computer Mathematics. 2003;80(12):1527–1534.