SUMMARY

A biomarker (S) measured after randomization in a clinical trial can often provide information about the true endpoint (T) and hence about the effect of treatment (Z). It can usually be measured earlier and more easily than T and may therefore be useful for shortening the trial. One potential use of S is to replace T completely, as a surrogate endpoint, in evaluating whether the treatment is effective. Another is to serve as an auxiliary variable that provides additional information and improves inference on the treatment effect when T is not completely observed. This report focuses on the role of S as an auxiliary variable and identifies situations in which S can increase the efficiency of predicting the treatment effect in a new trial in a multiple-trial setting, where both S and T are continuous. When only S, and not T, is measured in the new trial, a larger efficiency gain is associated with a higher trial-level correlation but not with a higher individual-level correlation; however, the amount of information recovered from S is usually negligible. In contrast, when T is partially observed in the new trial and the individual-level correlation is relatively high, using S yields a substantial efficiency gain. For design purposes, our results suggest that it is often important to collect markers that have a high adjusted individual-level correlation with T, together with at least a small amount of data on T. The results are illustrated using simulations and an example from a glaucoma clinical trial.

Keywords: auxiliary variables, biomarker, clinical trials, meta analysis, mixed model, surrogate

1. INTRODUCTION

A biomarker (S) in a clinical trial is a type of variable intended to provide information about the true endpoint (T) and the effect of treatment (Z). It is often an intermediate physical or laboratory indicator in a disease progression process; it can be measured earlier and is often easier to collect than T. Examples of biomarkers include CD4 counts in AIDS, blood pressure and serum cholesterol level in cardiovascular disease, and prostate-specific antigen in prostate cancer studies. Early measurements are also used as biomarkers for later measurements, such as an earlier vision test result serving as a biomarker for a later result in a study of patients with age-related macular degeneration [1]. Different investigators use different terminology for the roles of biomarkers [2]. In this paper, we call S a surrogate endpoint when S is used to replace T completely in evaluating whether the treatment is effective [3]. When, instead, S is used to provide information or enhance the efficiency of the estimator of the treatment effect on T when T is not completely observed, we call S an auxiliary variable [4]. When the true endpoints are rare, occur late, or are costly to obtain, the proper use of good biomarkers can substantially reduce trial size and duration, and hence lower costs and lead to earlier decision making.

Previous research on biomarkers has often focused on the potential role of S as a surrogate endpoint for T. In a landmark article, Prentice [3] proposed a formal definition of perfect surrogacy and provided validation criteria for a single-trial setting. The criteria require that changes in S fully capture the effect of treatment on T. This paper inspired much research in the field, but the criteria are considered too restrictive for practical use. To relax them, a surrogacy measure based on the proportion of the treatment effect explained (PTE) by S was proposed [5] and further studied and extended by several other authors (e.g., [7, 8, 9]). Freedman [5] also suggested that the lower bound of the PTE confidence interval exceed 0.75 for a marker to be acceptable as a surrogate endpoint. However, this requires a very strong treatment effect on T, which is rarely observed in practice [8, 10]. The PTE estimator is also highly variable and can fall outside the [0, 1] range [7, 11]; hence, its practical use is limited.

From a biological perspective, there are often multiple causal pathways leading to disease and complex mechanisms by which the treatment acts; hence, a biomarker may or may not mediate the effect of the treatment on T, and surrogacy measures are often not directly transferable from one study to another. Another problem is that S may not capture harmful side effects of the treatment. These uncertainties in using S in place of T to test a new treatment can lead to incorrect, even harmful, conclusions [11, 12]. As a result, very few biomarkers have been accepted as valid surrogate endpoints for T, and their potential use as substitutes has been less than promising.

With new biomarkers being discovered and developed at a phenomenal rate, the clinical research community continues to be extremely interested in biomarkers in clinical trials. In this paper, we focus on the use of S as an auxiliary variable to help predict the treatment effect on T. As we shall see, this role of a biomarker proves to be more promising. One of the most common scenarios in which S is useful as an auxiliary outcome is when more information is available on S than on T for a study population. This often occurs in practice, because patients are usually recruited into a trial sequentially in calendar time and S is observed more often and earlier than T, particularly for those enrolled early. Previous surrogacy measures are often based on summary statistics and are intended to identify a replacement for T; they are not usually proposed explicitly for prediction. When individual-level data are available, a biomarker may be effective as an auxiliary outcome in enhancing inference even though existing surrogacy measures would not identify it as such. A strong association between S and T does not suffice for S to be a substitute for T; as Baker et al [13] stated, “a correlate does not make a surrogate”. However, when individual data on T exist, a strong association can inform and increase the efficiency of treatment effect prediction, as we shall demonstrate.

A number of authors have explored the role of biomarkers as auxiliary variables, but opinions on their value have been mixed, as noted by [14]. In much of the previous work, the information recovered from S appears to be very small [18, 4, 19] except in rare situations in which S and T are very highly correlated; however, when there is a stronger structural relationship between S and T, a significant efficiency gain from S is more likely [14]. Most of this work has focused on the situation in which T is the time to an event. When S and T are continuous, Venkatraman and Begg [21] proposed fully nonparametric tests that incorporate the information from S and found that the efficiency gain through S for these tests is small except in rare cases in which the correlation between S and T is extremely high. Previous work has often considered a homogeneous sample, such as a single-trial setting. When we can identify a group of trials with similar treatment groups and patient populations, it is natural to use a meta-analytic approach to predict the treatment effect in a new trial. This approach allows one to account for heterogeneity among trials and to borrow information from previous trials to improve efficiency.

In this paper, we examine the extent of information gain from S in a multiple-trial setting. We consider the situations in which T is either completely or partially missing in a new trial while S, T and Z are available in the previous trials. The objective is to predict the treatment effect on T in the new trial when S and T are continuous and Z is binary. To identify the situations in which S is beneficial, we examine the factors, particularly the correlation between S and T and the fraction of missing T, that determine how much the precision of the treatment effect estimate increases when S is used. The results are intended to be of practical value and directly applicable to clinical trials.

In Section 2, we introduce a commonly used bivariate mixed model. In Section 3, we summarize several related methods used to predict the effect of Z on T in a new trial when T is either completely missing or partially missing in the new trial. The methods include those proposed by Buyse et al [10], Gail et al [22] and Henderson [23]. In Section 4, we examine the extent of information recovery from S and its relation to the correlation between S and T. In Section 5, we evaluate the methods and efficiency gain through simulations. In Section 6, we give a data example. In Section 7, we present conclusions.

2. The Model

Suppose we have n randomized trials, i = 1, …, n, where the nth trial is labeled as new and there are mi patients in the ith trial. Let Z = 0, 1 denote the placebo and treatment groups, respectively and (Sij, Tij, Zij) represent S, T, and Z for individual j in trial i. We are interested in predicting the actual treatment effect on T in the new trial (δTn) based on previous (n − 1) existing trials and whatever data is available in the nth trial. A commonly used bivariate mixed model used to describe the joint distribution of Sij and Tij [10] is:

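$$S_{ij} = \alpha_0 + a_{0i} + (\alpha_1 + a_{1i})Z_{ij} + \varepsilon_{Sij}, \qquad T_{ij} = \gamma_0 + r_{0i} + (\gamma_1 + r_{1i})Z_{ij} + \varepsilon_{Tij}, \qquad (1)$$

where

$$(a_{0i}, r_{0i}, a_{1i}, r_{1i})^T \sim N(0, D), \qquad D = \begin{pmatrix} d_{ss} & d_{st} & d_{sa} & d_{sr} \\ d_{st} & d_{tt} & d_{ta} & d_{tr} \\ d_{sa} & d_{ta} & d_{aa} & d_{ar} \\ d_{sr} & d_{tr} & d_{ar} & d_{rr} \end{pmatrix}, \qquad (2)$$

and

$$(\varepsilon_{Sij}, \varepsilon_{Tij})^T \sim N(0, \sigma), \qquad \sigma = \begin{pmatrix} \sigma_{ss} & \sigma_{st} \\ \sigma_{st} & \sigma_{tt} \end{pmatrix}, \qquad (3)$$

with the random effects independent of the errors.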

The treatment effect in the nth trial is δTn = γ1 + r1n. Let $Y_i = (S_{i1}, T_{i1}, \ldots, S_{im_i}, T_{im_i})^T$, $\beta^T = (\alpha_0, \gamma_0, \alpha_1, \gamma_1)$ and $\eta_i^T = (a_{0i}, r_{0i}, a_{1i}, r_{1i})$. Model (1) can be written in general mixed-model notation as $Y_i = X_i\beta + U_i\eta_i + \varepsilon_i$, where β denotes the fixed effects, ηi denotes the random effects, and Xi and Ui are the corresponding design matrices. The vector Yi follows a multivariate normal distribution with mean Xiβ and variance $V_i = U_i D U_i^T + \Sigma_i$, where Σi is a 2mi × 2mi block-diagonal matrix with mi copies of σ on the main diagonal and zeros elsewhere.
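As an illustration of model (1), the following minimal NumPy sketch draws one data set trial by trial. It is not the authors' simulation code: the function name `simulate_trials`, the 1:1 allocation within each trial, and the column layout (trial, Z, S, T) are assumptions made for illustration. D must be supplied in the (a0, r0, a1, r1) ordering and σ in the (S, T) ordering used above.

```python
import numpy as np


def simulate_trials(n_trials, m, beta, D, sigma, rng=None):
    """Draw patient-level (trial, Z, S, T) rows from the bivariate mixed model (1).

    beta  : (alpha0, gamma0, alpha1, gamma1), the fixed effects
    D     : 4x4 covariance of the random effects (a0i, r0i, a1i, r1i)
    sigma : 2x2 covariance of the errors (eps_S, eps_T)
    Returns the stacked data and the true trial-specific effects gamma1 + r1i.
    """
    rng = np.random.default_rng(rng)
    alpha0, gamma0, alpha1, gamma1 = beta
    z = (np.arange(m) >= m // 2).astype(float)   # first half Z = 0, rest Z = 1
    rows, delta_T = [], np.empty(n_trials)
    for i in range(n_trials):
        a0, r0, a1, r1 = rng.multivariate_normal(np.zeros(4), D)
        delta_T[i] = gamma1 + r1                 # true effect on T in trial i
        eps = rng.multivariate_normal(np.zeros(2), sigma, size=m)
        s = alpha0 + a0 + (alpha1 + a1) * z + eps[:, 0]
        t = gamma0 + r0 + (gamma1 + r1) * z + eps[:, 1]
        rows.append(np.column_stack([np.full(m, i), z, s, t]))
    return np.vstack(rows), delta_T
```

For example, with the diagonal of D and σ taken from the simulation settings in Section 5.1 and a hypothetical σst = 0.3, `simulate_trials(40, 100, (1, 2, 1, 1), np.diag([0.5, 0.2, 3.5, 1.6]), np.array([[1.0, 0.3], [0.3, 0.3]]), rng=1)` returns 4000 patient rows together with the 40 true trial-specific treatment effects.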

3. Methods for Predicting the Treatment Effect δTn in the New Trial

In this section, we introduce several related methods used to predict the effect of Z on T in a new trial when T is either completely missing or partially missing in the new trial.

3.1. Buyse et al Method

Buyse et al (BMBRG) [10] assumed the same model and proposed a method to estimate δTn when T is completely unobserved in the nth trial. First, they fit a bivariate mixed model to the data from trials 1 through (n − 1) to obtain the estimates of D, α0, γ0, α1 and γ1, denoted by $\hat D$, $\hat\alpha_0$, $\hat\gamma_0$, $\hat\alpha_1$ and $\hat\gamma_1$, respectively. Second, they fit a linear regression Snj = μ0Sn + δSnZnj + εSnj in the nth trial. One then obtains $\hat a_{0n} = \hat\mu_{0Sn} - \hat\alpha_0$ and $\hat a_{1n} = \hat\delta_{Sn} - \hat\alpha_1$, where $\hat\mu_{0Sn}$ and $\hat\delta_{Sn}$ are the estimates of μ0Sn and δSn based on data from the nth trial. Given that β, D, σ, a0n and a1n are known, BMBRG showed that δTn follows a normal distribution with conditional mean

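$$E(\delta_{Tn} \mid a_{0n}, a_{1n}) = \gamma_1 + (d_{sr}, d_{ar}) \begin{pmatrix} d_{ss} & d_{sa} \\ d_{sa} & d_{aa} \end{pmatrix}^{-1} \begin{pmatrix} a_{0n} \\ a_{1n} \end{pmatrix} \qquad (4)$$

and conditional variance

$$\mathrm{Var}(\delta_{Tn} \mid a_{0n}, a_{1n}) = d_{rr} - (d_{sr}, d_{ar}) \begin{pmatrix} d_{ss} & d_{sa} \\ d_{sa} & d_{aa} \end{pmatrix}^{-1} \begin{pmatrix} d_{sr} \\ d_{ar} \end{pmatrix}. \qquad (5)$$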

Various methods can be used to obtain the estimate of δTn, denoted by $\hat\delta_{Tn}$. In our simulations, as is often done in practice, we replace β, D, σ, a0n and a1n with their estimates in equations (4) and (5). Specifically, we estimate β, D and σ by restricted maximum likelihood using PROC MIXED in SAS; we estimate μ0Sn and δSn using PROC GLM in SAS and then obtain the estimates of a0n and a1n. Because this plug-in approach ignores the uncertainty in the estimated parameters, it often leads to underestimation of the variance of $\hat\delta_{Tn}$.
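The BMBRG conditional mean (4) and variance (5) require only a 2 × 2 linear solve. The sketch below is a hypothetical helper (`bmbrg_predict` is our name, not part of any package) that evaluates them for given values of γ1, D, a0n and a1n; in the plug-in approach just described, one would pass $\hat\gamma_1$, $\hat D$, $\hat a_{0n} = \hat\mu_{0Sn} - \hat\alpha_0$ and $\hat a_{1n} = \hat\delta_{Sn} - \hat\alpha_1$. D is assumed to be ordered as (a0, r0, a1, r1).

```python
import numpy as np


def bmbrg_predict(gamma1, D, a0n, a1n):
    """Conditional mean (4) and variance (5) of delta_Tn given a0n and a1n.

    D is the 4x4 random-effects covariance ordered as (a0, r0, a1, r1).
    mean = gamma1 + (d_sr, d_ar) D_sa^{-1} (a0n, a1n)^T
    var  = d_rr  - (d_sr, d_ar) D_sa^{-1} (d_sr, d_ar)^T
    """
    D = np.asarray(D, dtype=float)
    idx = [0, 2]                          # positions of a0 and a1
    D_sa = D[np.ix_(idx, idx)]            # [[d_ss, d_sa], [d_sa, d_aa]]
    d_r = D[idx, 3]                       # (d_sr, d_ar)
    w = np.linalg.solve(D_sa, d_r)        # D_sa^{-1} (d_sr, d_ar)^T
    mean = gamma1 + w @ np.array([a0n, a1n], dtype=float)
    var = D[3, 3] - d_r @ w
    return mean, var
```

When r1n is uncorrelated with a0n and a1n, the sketch returns mean γ1 and variance drr, that is, S carries no information about δTn.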

3.2. Gail et al Method

Gail et al (GPHC) [22] proposed to estimate δTn without modeling the joint distribution of (Sij, Tij) at the individual level. The method applies to the situation in which T is completely unobserved in the nth trial. Let μ0Ti and μ1Ti denote the marginal means of T in the Z = 0 and Z = 1 groups of the ith trial, let μ0Si and μ1Si be defined similarly for S, and write $\mu_{Ti} = (\mu_{1Ti}, \mu_{0Ti})^T$, $\mu_{Si} = (\mu_{1Si}, \mu_{0Si})^T$ and $\mu_i = (\mu_{1Ti}, \mu_{1Si}, \mu_{0Ti}, \mu_{0Si})^T$. GPHC assume that μi follows a multivariate normal distribution with covariance φ, where φ is a 4 × 4 matrix representing the between-trial variance; hence, its estimate $\hat\mu_i$ follows a multivariate normal distribution with covariance φ + ωi, where ωi is a 4 × 4 block-diagonal matrix whose two 2 × 2 blocks are the within-trial variances for each treatment group. The elements of μTi, μSi and φ are connected with the parameters in model (1) as follows: μ0Ti = γ0 + r0i, μ1Ti = γ0 + r0i + γ1 + r1i, μ0Si = α0 + a0i, μ1Si = α0 + a0i + α1 + a1i, φ11 = dtt + drr + 2dtr, φ12 = dst + dar + dta + dsr, φ13 = dtt + dtr, φ14 = dst + dsr, φ22 = dss + daa + 2dsa, φ23 = dst + dta, φ24 = dss + dsa, φ33 = dtt, φ34 = dst and φ44 = dss.

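GPHC show that, given $\hat\mu_{Sn} = (\hat\mu_{1Sn}, \hat\mu_{0Sn})^T$ (and β, φ and ω), $\mu_{Tn} = (\mu_{1Tn}, \mu_{0Tn})^T$ follows a normal distribution with mean

$$E(\mu_{Tn} \mid \hat\mu_{Sn}) = \begin{pmatrix} \gamma_0 + \gamma_1 \\ \gamma_0 \end{pmatrix} + \Phi_{TS}(\Phi_{SS} + \Omega_n)^{-1} \begin{pmatrix} \hat\mu_{1Sn} - \alpha_0 - \alpha_1 \\ \hat\mu_{0Sn} - \alpha_0 \end{pmatrix}$$

and variance

$$\mathrm{Var}(\mu_{Tn} \mid \hat\mu_{Sn}) = \Phi_{TT} - \Phi_{TS}(\Phi_{SS} + \Omega_n)^{-1}\Phi_{TS}^T,$$

where $\Phi_{TT} = \begin{pmatrix} \phi_{11} & \phi_{13} \\ \phi_{13} & \phi_{33} \end{pmatrix}$, $\Phi_{TS} = \begin{pmatrix} \phi_{12} & \phi_{14} \\ \phi_{23} & \phi_{34} \end{pmatrix}$, $\Phi_{SS} = \begin{pmatrix} \phi_{22} & \phi_{24} \\ \phi_{24} & \phi_{44} \end{pmatrix}$ and $\Omega_n = \mathrm{diag}(\omega_{22n}, \omega_{44n})$; ω22n denotes the variance of $\hat\mu_{1Sn}$ and ω44n that of $\hat\mu_{0Sn}$.

The treatment effect on T in the new trial, δTn = μ1Tn − μ0Tn, therefore has mean

$$E(\delta_{Tn} \mid \hat\mu_{Sn}) = \gamma_1 + c^T\Phi_{TS}(\Phi_{SS} + \Omega_n)^{-1} \begin{pmatrix} \hat\mu_{1Sn} - \alpha_0 - \alpha_1 \\ \hat\mu_{0Sn} - \alpha_0 \end{pmatrix}, \qquad c = (1, -1)^T, \qquad (6)$$

and variance

$$\mathrm{Var}(\delta_{Tn} \mid \hat\mu_{Sn}) = c^T\left[\Phi_{TT} - \Phi_{TS}(\Phi_{SS} + \Omega_n)^{-1}\Phi_{TS}^T\right]c. \qquad (7)$$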

If we drop the terms ω22n and ω44n from the above expressions, we obtain expressions identical to the BMBRG mean and variance. The GPHC formula thus accounts for the uncertainty associated with estimating a0n and a1n, whereas BMBRG does not. Like BMBRG, GPHC assume that β, D and σ are known in deriving equations (6) and (7); because the uncertainty in these parameters is not accounted for, the variance of $\hat\delta_{Tn}$ is often underestimated. Gail et al (2000) noted that this method is analogous to the generalized estimating equations (GEE) approach [6]. Because the GEE approach can handle the situation in which T is partially observed in the new trial, the GPHC method could be generalized to that case, which would be worth further investigation.

To estimate δTn and its variance in our simulations, we first estimate φ + ω from the covariances of the treatment- and trial-specific sample means $\hat\mu_i$ over the previous trials, where $\hat\phi$ and $\hat\omega_i$ denote the estimates of φ and ωi, respectively. We then calculate the treatment-specific covariances of S and T within each trial and average them over trials to obtain $\hat\omega$. From these, we calculate $\hat\phi$ as the difference between the two. We calculate the overall treatment-specific means across trials (from which we obtain the estimates of γ0 and γ1) and, for the new trial, the variances of $\hat\mu_{1Sn}$ and $\hat\mu_{0Sn}$ (i.e., the estimates of ω22n and ω44n). We estimate μ0Sn and μ1Sn and then calculate a0n and a1n. Finally, we plug these estimates into (6) and (7) to obtain the mean and variance of $\hat\delta_{Tn}$.
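Once plug-in values are available, formulas (6) and (7) can be sketched in a few lines of NumPy. This is a minimal illustration, not GPHC's code: it assumes β, φ and the new-trial sampling variances ω22n and ω44n are given, with the means ordered (μ1T, μ1S, μ0T, μ0S) as in φ. The helper `phi_from_D` (a hypothetical name) builds φ from D through the loadings μ0Ti = γ0 + r0i, μ1Ti = γ0 + r0i + γ1 + r1i, μ0Si = α0 + a0i and μ1Si = α0 + a0i + α1 + a1i listed above, which reproduces the stated φ entries; setting ω22n = ω44n = 0 recovers the BMBRG quantities.

```python
import numpy as np

# Loadings of (mu1T, mu1S, mu0T, mu0S) on the random effects (a0, r0, a1, r1):
# mu1T = ... + r0 + r1, mu1S = ... + a0 + a1, mu0T = ... + r0, mu0S = ... + a0.
LOADINGS = np.array([[0, 1, 0, 1],
                     [1, 0, 1, 0],
                     [0, 1, 0, 0],
                     [1, 0, 0, 0]], dtype=float)


def phi_from_D(D):
    """Between-trial covariance phi of (mu1T, mu1S, mu0T, mu0S) implied by D."""
    return LOADINGS @ np.asarray(D, dtype=float) @ LOADINGS.T


def gphc_predict(beta, phi, mu_hat_S, omega_S):
    """Conditional mean (6) and variance (7) of delta_Tn = mu1Tn - mu0Tn.

    beta     : (alpha0, gamma0, alpha1, gamma1)
    mu_hat_S : (mu1Sn_hat, mu0Sn_hat), observed S means in the new trial
    omega_S  : (omega22n, omega44n), sampling variances of those means
    """
    alpha0, gamma0, alpha1, gamma1 = beta
    phi = np.asarray(phi, dtype=float)
    T, S = [0, 2], [1, 3]
    Phi_TT = phi[np.ix_(T, T)]
    Phi_TS = phi[np.ix_(T, S)]
    Phi_SS = phi[np.ix_(S, S)] + np.diag(omega_S)   # phi + omega for the S means
    resid = np.asarray(mu_hat_S, dtype=float) - np.array([alpha0 + alpha1, alpha0])
    K = Phi_TS @ np.linalg.inv(Phi_SS)              # regression of T means on S means
    c = np.array([1.0, -1.0])                       # contrast mu1T - mu0T
    mean = gamma1 + c @ K @ resid
    var = c @ (Phi_TT - K @ Phi_TS.T) @ c
    return mean, var
```

Adding the sampling variances ω22n and ω44n can only increase the predicted variance, which is the sense in which GPHC accounts for the uncertainty in a0n and a1n.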

3.3. Henderson Method (HD)

While the BMBRG and GPHC methods apply only to the situation in which T is completely missing in the new trial, the HD method applies whether T is completely missing, partially missing or completely observed in the new trial. Using the general mixed model notation, we obtain the estimates of β and ηn (denoted by $\hat\beta$ and $\hat\eta_n$) by solving the mixed model equations described by Henderson [23] (details in Appendix A); their sum follows a normal distribution with mean

| (8) |

and variance

The treatment effect for the nth trial has mean

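$$\hat\delta_{Tn} = \hat\gamma_1 + \hat r_{1n}, \qquad \hat\eta_n = D U_n^T V_n^{-1}(Y_n - X_n\hat\beta), \qquad V_n = U_n D U_n^T + \Sigma_n, \qquad (9)$$

where $\hat r_{1n}$ is the last element of $\hat\eta_n$ and Yn contains the measurements observed in the new trial,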

and variance

| (10) |

Note that $\hat\delta_{Tn}$ is the best linear unbiased predictor (BLUP) of δTn and can be derived as an empirical Bayes estimator [25, 26]. When T is completely missing in the nth trial, the expression for $\hat\delta_{Tn}$ in (9) is exactly the same as the GPHC estimate in (6). Unlike GPHC and BMBRG, the variance formula in (10) accounts for the uncertainty associated with estimating β, but it still treats D and σ as known. In our implementation, we obtain these estimates using PROC MIXED in SAS.
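The BLUP idea behind the HD predictor can be sketched for a single new trial. To stay short, this sketch treats β, D and σ as known, so it computes $\gamma_1 + E(r_{1n} \mid Y_n)$ with $E(\eta_n \mid Y_n) = D U_n^T V_n^{-1}(Y_n - X_n\beta)$ rather than solving Henderson's full mixed model equations, which would also estimate β from all trials. The function name `blup_delta_T` and the NaN coding of missing T are assumptions for illustration.

```python
import numpy as np


def blup_delta_T(z, s, t, beta, D, sigma):
    """Predict delta_Tn = gamma1 + r1n in one trial, treating beta, D, sigma as known.

    z, s, t : per-patient arrays; missing T values are coded as np.nan
    beta    : (alpha0, gamma0, alpha1, gamma1); D ordered (a0, r0, a1, r1)
    """
    z = np.asarray(z, dtype=float)
    m = z.size
    # Stack the responses as (S_1, T_1, ..., S_m, T_m), as for Y_i in the text.
    y = np.column_stack([s, t]).astype(float).ravel()
    X = np.zeros((2 * m, 4))
    X[0::2, 0], X[0::2, 2] = 1.0, z        # S rows: alpha0 + alpha1 * z
    X[1::2, 1], X[1::2, 3] = 1.0, z        # T rows: gamma0 + gamma1 * z
    U = X.copy()                           # random effects enter like the fixed effects
    R = np.kron(np.eye(m), np.asarray(sigma, dtype=float))  # m diagonal blocks of sigma
    keep = ~np.isnan(y)                    # drop the unobserved T rows
    y, X, U, R = y[keep], X[keep], U[keep], R[np.ix_(keep, keep)]
    D = np.asarray(D, dtype=float)
    V = U @ D @ U.T + R
    eta_hat = D @ U.T @ np.linalg.solve(V, y - X @ np.asarray(beta, dtype=float))
    return beta[3] + eta_hat[3]
```

Because missing T rows are simply dropped, the same function covers completely missing, partially missing and completely observed T, mirroring the flexibility of the HD method described above.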

3.4. Empirical Bayes Estimate and Conditional Posterior Variance (EB-CPV)

Let r be the number of patients in the new trial for whom both S and T are observed. The empirical Bayes estimate of δTn can be obtained as the posterior mode when we assume flat priors for the fixed effects and multivariate normal priors for the random effects [26]; its expression is identical to the HD estimate in equation (9) [26]. When β, D and σ are known, the conditional posterior variance (CPV) of δTn can approximate the variance of $\hat\delta_{Tn}$ [28]. We obtain the CPV of δTn as (details in Appendix C):

| (11) |

where Ψd is a function only of the between-trial covariances and Φe is a function only of the within-trial covariances. The elements of Ψd and Φe are listed below:

When T is completely missing in the nth trial, i.e., r = 0, the CPV simplifies to:

| (12) |

an expression equivalent to the BMBRG variance formula in (5). The CPV formula can therefore be viewed as a generalization of the BMBRG variance formula. Note that the CPV underestimates the prediction variance because it treats β, D, σ, a0n and a1n as known quantities. Morris [29] and Ghosh and Rao [28] showed that a better estimator of the prediction variance can be obtained by adding to the CPV a second term that accounts for the uncertainty in all parameters.

3.5. Bayesian Estimation (denoted by Bayes)

An alternative way to obtain the distributions of the parameters of interest is fully Bayesian estimation, which is also applicable when T is either partially or completely missing. We assume flat priors for the fixed effects, i.e., p(α0) ∝ 1, p(γ0) ∝ 1, p(α1) ∝ 1 and p(γ1) ∝ 1, and vague priors for the remaining parameters, specifically $\sigma^{-1} \sim W(a, E)$ and $D^{-1} \sim W(c, F)$, where W denotes the Wishart distribution. We use a = 3, c = 5, $E = (a + 1)^{-1} I_2$ and $F = (c + 1)^{-1} I_4$. A data augmentation method is used to implement the procedure (details in Appendix B). The Bayesian method naturally accounts for the uncertainty associated with estimating every parameter [27], but it can be sensitive to the prior specification. Although extensive simulations evaluating this method are computationally intensive, analyzing a single data set with it is entirely feasible.
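The Wishart priors can be made concrete with a small NumPy sketch that draws (σ, D) from them. The text does not state its Wishart parameterization; here we assume the common convention in which W(ν, S) with integer ν ≥ dim has mean νS and can be generated as a sum of ν outer products of N(0, S) vectors. The helper names are hypothetical, and the data augmentation sampler itself is not shown.

```python
import numpy as np


def wishart_draw(df, scale, rng):
    """One draw from Wishart(df, scale) for integer df >= dim (mean = df * scale)."""
    chol = np.linalg.cholesky(scale)
    x = rng.standard_normal((df, scale.shape[0])) @ chol.T   # rows ~ N(0, scale)
    return x.T @ x                                           # sum of outer products


def draw_prior_covariances(rng, a=3, c=5):
    """Draw (sigma, D) from sigma^{-1} ~ W(a, E) and D^{-1} ~ W(c, F)."""
    E = np.eye(2) / (a + 1)               # E = (a + 1)^{-1} I_2
    F = np.eye(4) / (c + 1)               # F = (c + 1)^{-1} I_4
    sigma = np.linalg.inv(wishart_draw(a, E, rng))
    D = np.linalg.inv(wishart_draw(c, F, rng))
    return sigma, D
```

Under this assumed convention, the prior mean of σ⁻¹ is aE = 0.75 I₂, so the prior centers the error precision near the identity while remaining diffuse.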

4. Efficiency Gain and Correlation

In this section, we study the precision of the predicted treatment effect $\hat\delta_{Tn}$ and the factors that affect it, particularly the correlation between S and T and the fraction of missing T.

4.1. Correlation

In a multiple-trial setting under the bivariate mixed model, the treatment-adjusted individual-level (within-trial) association between S and T is measured by

$$R^2_{\text{indiv}} = \frac{\sigma_{st}^2}{\sigma_{ss}\sigma_{tt}}.$$

The trial-level association between S and T is defined by Buyse et al [10] as

$$R^2_{\text{trial}} = \frac{1}{d_{rr}}\,(d_{sr}, d_{ar}) \begin{pmatrix} d_{ss} & d_{sa} \\ d_{sa} & d_{aa} \end{pmatrix}^{-1} \begin{pmatrix} d_{sr} \\ d_{ar} \end{pmatrix}.$$

This between-trial measure assesses how well the treatment effect on T in the new trial can be predicted from that on S. While $R^2_{\text{trial}}$ was identified as the key factor determining the efficiency gain from S by Buyse et al [10] and Gail et al [22], we shall see that $R^2_{\text{indiv}}$ plays an even more important role than $R^2_{\text{trial}}$ in obtaining a substantial efficiency gain from S for the estimated treatment effect on T when T is partially observed.
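Both association measures can be computed directly from σ and D. A minimal sketch, assuming D is ordered as the random effects (a0i, r0i, a1i, r1i) and σ as (S, T); the function names are illustrative only.

```python
import numpy as np


def r2_indiv(sigma):
    """Individual-level R^2 = sigma_st^2 / (sigma_ss * sigma_tt), sigma ordered (S, T)."""
    sigma = np.asarray(sigma, dtype=float)
    return sigma[0, 1] ** 2 / (sigma[0, 0] * sigma[1, 1])


def r2_trial(D):
    """Trial-level R^2 of Buyse et al, with D ordered (a0, r0, a1, r1)."""
    D = np.asarray(D, dtype=float)
    idx = [0, 2]                          # a0 and a1
    d_r = D[idx, 3]                       # (d_sr, d_ar)
    return d_r @ np.linalg.solve(D[np.ix_(idx, idx)], d_r) / D[3, 3]
```

Note that $d_{rr}(1 - R^2_{\text{trial}})$ equals the BMBRG variance (5), which is why the trial-level measure governs precision when T is entirely missing.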

4.2. Prediction Precision and Correlation

We examine the impact of $R^2_{\text{trial}}$ and $R^2_{\text{indiv}}$ on the prediction precision using the CPV formula in equation (11). We note that when there is an equal number of patients per treatment group in the new trial, the elements of Φe in CPV simplify to

When T is completely missing in the new trial, the CPV simplifies to $d_{rr}(1 - R^2_{\text{trial}})$; hence, the factors that determine the precision of the predicted treatment effect on T are $R^2_{\text{trial}}$ and drr, both of which are between-trial quantities. When T is partially observed, additional important factors arise at the within-trial level, including $R^2_{\text{indiv}}$, σtt and r. Because the within-trial covariances in Φe are usually much smaller than the between-trial covariances in Ψd, Φe usually dominates and Ψd has a negligible impact on the CPV. Although the CPV underestimates the prediction variance, our simulation studies show that it usually accounts for the majority of the total variance, so a comparison between (11) and (12) suffices to provide algebraic intuition about the prediction variance.

5. Simulations

5.1. The Setup

We conduct simulation studies to evaluate the bias, efficiency and confidence-interval coverage rates of the predicted treatment effect in a new trial using the above methods. For comparison, we also estimate δTn from the observed T alone by the simple difference in means, without any distributional assumption (denoted by SIMPLE):

$$\hat\delta_{Tn} = \frac{1}{m_{n1}}\sum_k T_{nk1} - \frac{1}{m_{n0}}\sum_l T_{nl0},$$

where Tnk1 represents T on patient k in the Z = 1 group in the nth trial and similarly for Tnl0, and mn1 is the number of patients in the Z = 1 group in the nth trial and similarly for mn0, with sums and counts taken over patients whose T is observed.
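A minimal sketch of the SIMPLE estimator, assuming missing T values are coded as NaN and ignored. The text does not state how the SIMPLE standard error is computed; the unpooled two-sample formula used here is an assumption.

```python
import numpy as np


def simple_estimate(z, t):
    """Difference in arm means of the observed T, with an unpooled standard error."""
    z, t = np.asarray(z), np.asarray(t, dtype=float)
    obs = ~np.isnan(t)                    # use only patients whose T is observed
    t1, t0 = t[obs & (z == 1)], t[obs & (z == 0)]
    est = t1.mean() - t0.mean()
    se = np.sqrt(t1.var(ddof=1) / t1.size + t0.var(ddof=1) / t0.size)
    return est, se
```

For instance, `simple_estimate([0, 0, 0, 1, 1, 1], [1, 3, np.nan, 4, 6, np.nan])` uses only the four observed values and returns an estimate of 3.0 with a standard error of √2.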

We generate 500 data sets from the bivariate mixed model in (1), assuming an equal number of patients per trial, mi = m. The parameter specifications are βT = (1, 2, 1, 1), dss = 0.5, dtt = 0.2, daa = 3.5, drr = 1.6, σss = 1 and σtt = 0.3. To examine the impact of the trial-level association, we use three correlation matrices for the random effects, corresponding to $R^2_{\text{trial}}$ = 0.1, 0.5 and 0.8, respectively. To examine the impact of the individual-level association, we set $R^2_{\text{indiv}}$ to 0.1, 0.5 and 0.9. We also vary n, m, and the percentage of missing T in the new trial (denoted by p). Because δTn is not fixed but follows a known distribution, each data set has its own true treatment effect δTn; its average across the 500 data sets is denoted by $\bar\delta_{Tn}$. For each data set and each method, we obtain $\hat\delta_{Tn}$, its standard error, its 95% confidence interval, and an indicator of whether this interval contains δTn. Let $\bar{\hat\delta}_{Tn}$ denote the average of $\hat\delta_{Tn}$ across the 500 data sets. We evaluate each method by its average bias $\bar{\hat\delta}_{Tn} - \bar\delta_{Tn}$, the average standard error (SE), the root mean squared error $\mathrm{RMSE} = \{\frac{1}{500}\sum(\hat\delta_{Tn} - \delta_{Tn})^2\}^{1/2}$, and the coverage rate (CR) over all simulated data sets. Because, as we will see, all estimates are essentially unbiased, the relative efficiency (RE) of two estimators can be approximated by the inverse of the ratio of their RMSE².
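The performance summaries can be computed as below. This is a sketch rather than the authors' code: the 1.96 normal quantile for the 95% interval and the function names are assumptions.

```python
import numpy as np


def performance(delta_true, delta_hat, se, z=1.96):
    """Bias, RMSE, average SE and coverage rate (%) over simulated data sets."""
    delta_true, delta_hat, se = map(np.asarray, (delta_true, delta_hat, se))
    err = delta_hat - delta_true
    covered = np.abs(err) <= z * se       # interval delta_hat +/- z * SE contains truth
    return {
        "bias": err.mean(),
        "rmse": np.sqrt(np.mean(err ** 2)),
        "se": se.mean(),
        "cr": 100 * covered.mean(),
    }


def relative_efficiency(rmse_ref, rmse_other):
    """RE of 'other' relative to 'ref': the inverse ratio of their RMSE^2."""
    return rmse_ref ** 2 / rmse_other ** 2
```

Comparing an average SE with the RMSE in this way is what reveals the variance underestimation discussed for CPV, BMBRG, GPHC and HD.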

5.2. Method Evaluation

Table I presents the bias, RMSE, SE and CR of $\hat\delta_{Tn}$ for SIMPLE, HD, BMBRG, GPHC, Bayes and EB-CPV under various combinations of n, m and the percentage of missing T, with $R^2_{\text{trial}}$ and $R^2_{\text{indiv}}$ held fixed. When T is completely or 50% observed in the new trial, SIMPLE and HD give unbiased estimates with similar RMSE and confidence intervals with nominal or close-to-nominal coverage rates; in contrast, CPV generally underestimates the prediction variance (i.e., SE < RMSE).

Table I.

Simulation results based on 500 data sets. βT = (1, 2, 1, 1), dss = 0.5, dtt = 0.2, daa = 3.5, drr = 1.6, σss = 1, σtt = 0.3.

| n | m | % Missing | Method | Bias | RMSE | SE | CR (%) |
|---|---|---|---|---|---|---|---|
| 10 | 100 | 0% | SIMPLE | −0.005 | 0.111 | 0.109 | 95.0 |
| | | | HD | −0.005 | 0.112 | 0.106 | 94.8 |
| | | | Bayes | −0.006 | 0.112 | 0.109 | 94.8 |
| | | | EB-CPV | −0.005* | 0.112* | 0.093† | 90.8 |
| | | 50% | SIMPLE | −0.002 | 0.149 | 0.139 | 95.4 |
| | | | HD | 0.000 | 0.143 | 0.139 | 94.0 |
| | | | Bayes | −0.004 | 0.145 | 0.145 | 94.8 |
| | | | EB-CPV | 0.000* | 0.143* | 0.132† | 91.2 |
| | | 100% | BMBRG | −0.013 | 1.193 | 0.736 | 76.6 |
| | | | GPHC | −0.010 | 1.125 | 0.755 | 80.2 |
| | | | HD | −0.011 | 1.125 | 0.795 | 82.0 |
| | | | Bayes | −0.037 | 1.139 | 1.105 | 94.8 |
| 40 | 100 | 0% | SIMPLE | −0.005 | 0.111 | 0.109 | 95.0 |
| | | | HD | −0.005 | 0.110 | 0.108 | 95.0 |
| | | | EB-CPV | −0.005* | 0.110* | 0.093† | 91.4 |
| | | 50% | SIMPLE | −0.002 | 0.149 | 0.155 | 95.4 |
| | | | HD | 0.003 | 0.140 | 0.142 | 95.8 |
| | | | EB-CPV | 0.003* | 0.140* | 0.130† | 93.2 |
| | | 100% | BMBRG | −0.008 | 0.965 | 0.887 | 92.8 |
| | | | GPHC | −0.008 | 0.965 | 0.877 | 92.6 |
| | | | HD | −0.008 | 0.965 | 0.869 | 92.4 |
| 40 | 300 | 0% | SIMPLE | 0.002 | 0.063 | 0.063 | 94.2 |
| | | | HD | 0.002 | 0.063 | 0.063 | 94.4 |
| | | | EB-CPV | 0.002* | 0.063* | 0.066† | 90.9 |
| | | 50% | SIMPLE | −0.002 | 0.089 | 0.090 | 94.4 |
| | | | HD | 0.000 | 0.085 | 0.083 | 93.6 |
| | | | EB-CPV | 0.000* | 0.085* | 0.077† | 91.4 |
| | | 100% | BMBRG | −0.002 | 0.920 | 0.868 | 93.8 |
| | | | GPHC | −0.002 | 0.919 | 0.871 | 93.8 |
| | | | HD | −0.002 | 0.919 | 0.882 | 94.0 |
| 55 | 100 | 0% | SIMPLE | −0.005 | 0.111 | 0.109 | 95.0 |
| | | | HD | −0.005 | 0.110 | 0.108 | 95.0 |
| | | | EB-CPV | −0.005* | 0.110* | 0.094† | 90.8 |
| | | 50% | SIMPLE | −0.002 | 0.149 | 0.155 | 95.4 |
| | | | HD | 0.002 | 0.140 | 0.142 | 95.8 |
| | | | EB-CPV | 0.002* | 0.140* | 0.131† | 93.4 |
| | | 100% | BMBRG | −0.033 | 0.950 | 0.883 | 93.8 |
| | | | GPHC | −0.033 | 0.948 | 0.898 | 94.0 |
| | | | HD | −0.033 | 0.948 | 0.898 | 94.2 |

When T is completely missing in the new trial, BMBRG, GPHC and HD all underestimate the variance of $\hat\delta_{Tn}$. When the number of trials is relatively large (n = 40, 55), the underestimation is minor; with a small number of trials (n = 10), however, it can be more severe and the coverage rates can fall below 85%. Although HD is expected to have better CR than GPHC, and GPHC better than BMBRG, because they account for more of the parameter uncertainty, the advantages of HD and GPHC over BMBRG are small and all three methods give similar CRs. The Bayes method gives more accurate estimates of the variance, with coverage rates around the nominal 95% level.

SIMPLE and HD give estimates with similar precision, which shows that the efficiency gain from the bivariate normal assumption is small. When T is partially or completely observed, increasing m improves the precision of the estimates, whereas increasing n does not improve it much. When T is completely missing, increasing n and m yields only a minor gain in precision.

5.3. $R^2_{\text{trial}}$, $R^2_{\text{indiv}}$, Percentage of Missingness and Information Recovery from S

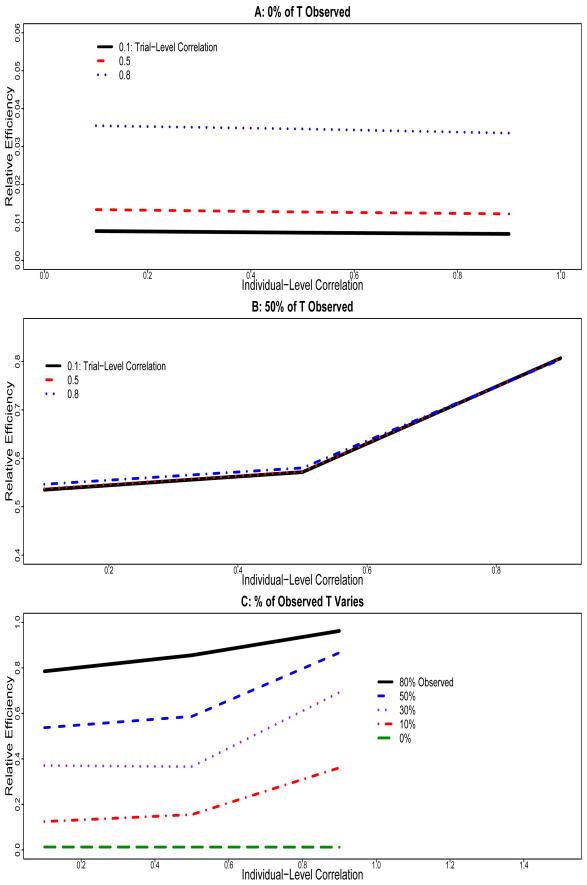

Figure 1A shows the relative efficiency of $\hat\delta_{Tn}$ from the HD method when T is completely missing in the new trial, compared with the estimate before any deletion of T. Relative efficiency is defined as the inverse of the ratio of the two variances. We vary $R^2_{\text{trial}}$ and $R^2_{\text{indiv}}$ and let n = 40 and m = 100. Increases in $R^2_{\text{indiv}}$ have a negligible impact on the precision, whereas increasing $R^2_{\text{trial}}$ improves the precision more than any other factor. These findings agree with the algebraic intuition from the CPV variance formula in (12). Relative to the estimate based on completely observed data, the relative efficiency rises from 0.7% to 1.2% to 3.4% as $R^2_{\text{trial}}$ increases from 0.1 to 0.5 to 0.8. Hence, when we rely entirely on S and summary statistics from previous trials to predict δTn, the information recovered is often limited and the precision of $\hat\delta_{Tn}$ is usually insufficient to be clinically useful.
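A back-of-the-envelope check of the Figure 1A numbers. Combining the BMBRG variance (5) with Buyse et al's definition of $R^2_{\text{trial}}$ gives a between-trial variance of $d_{rr}(1 - R^2_{\text{trial}})$ when T is completely missing; because it ignores estimation uncertainty, it is a lower bound on the prediction variance. With complete data, the variance of the difference in arm means is roughly σtt(1/m1 + 1/m0) = 0.3 × (1/50 + 1/50) = 0.012, close to the squared SIMPLE RMSE in Table I (0.111² ≈ 0.0123). The sketch assumes 50 patients per arm and that 0.1, 0.5 and 0.8 are values of $R^2_{\text{trial}}$; the resulting ratios are therefore upper bounds on the relative efficiency, not reproductions of the figure.

```python
def re_upper_bound(r2_trial, d_rr=1.6, sigma_tt=0.3, m1=50, m0=50):
    """Upper bound on the relative efficiency when T is completely missing.

    Numerator: approximate complete-data variance of the difference in arm means.
    Denominator: d_rr * (1 - R^2_trial), the between-trial part of the
    prediction variance, which ignores estimation uncertainty.
    """
    var_full = sigma_tt * (1 / m1 + 1 / m0)
    var_missing_lower = d_rr * (1 - r2_trial)
    return var_full / var_missing_lower


for r2 in (0.1, 0.5, 0.8):
    print(f"R2_trial = {r2}: RE <= {100 * re_upper_bound(r2):.2f}%")
```

The bounds, about 0.83%, 1.5% and 3.75%, sit just above the reported 0.7%, 1.2% and 3.4%, consistent with the between-trial term dominating the prediction variance.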

Figure 1.

Simulation results based on 500 data sets. Relative efficiency of the new treatment effect estimate using S when T is not completely observed to that when T is completely observed. A: 0% of T Observed in the new trial; B: 50% of T observed in the new trial; C: Percentage of Observed T Varies in the new trial.

Figure 1B presents the relative efficiency of $\hat\delta_{Tn}$ from the HD method when 50% of T is missing, compared with the estimate before any deletion of T. A high $R^2_{\text{indiv}}$ can lead to a large efficiency gain from the use of S: when $R^2_{\text{indiv}}$ is large (e.g., 0.7 or 0.9), most of the information on δTn is recovered from S and the precision of the estimate is close to that obtained when T is completely observed. In contrast, the magnitude of $R^2_{\text{trial}}$ has little impact on the efficiency gain from S. These observations agree with the CPV variance formula in (11).

Figure 1C shows the relative efficiency of $\hat\delta_{Tn}$ when T is partially or completely missing, compared with the estimate before any deletion of T. Naturally, the higher the proportion of observed T, the smaller the RMSE and thus the greater the precision of the treatment effect prediction. Interestingly, there is a substantial efficiency gain from S even with a small fraction of observed T, particularly when $R^2_{\text{indiv}}$ is high. For example, when 30% of T are observed and $R^2_{\text{indiv}}$ is high, the information lost to missingness is almost completely recovered from S.

6. Data Analysis: a Glaucoma Study

The extent of information recovery from S in predicting the treatment effect on T in a new trial is illustrated using the Collaborative Initial Glaucoma Treatment Study (CIGTS) [30]. Glaucoma is a group of diseases that cause vision loss and is a leading cause of blindness. High pressure in the eyes, i.e., intraocular pressure (IOP), is a major risk factor for glaucoma. The CIGTS is a randomized multi-center clinical trial comparing the effects of two types of treatment, surgery and medicine, on reducing IOP among glaucoma patients. Patients were enrolled between 1993 and 1997. A total of 607 patients were included in the study, of whom 307 were randomly assigned to the medicine group. IOP (in mmHg) was measured at several time points after treatment. For the purpose of this paper, we take the IOP measurement at month 96 as T and that at month 12 as S, and we assume that the IOP measurements are normally distributed. To mimic a meta-analysis of data from different trials, we treat the centers in the CIGTS as independent trials testing a similar set of treatments. A preliminary analysis of these data shows that the estimated between-trial covariance matrix $\hat D$ is not positive definite. Mimicking the approach of Gail et al [22], we therefore enlarge the data by simulating Sij and Tij from bivariate normal distributions for each trial and treatment group, using the trial- and treatment-specific means and variance-covariance matrices from the real data. Nonetheless, our results are generalizable. Of the 14 CIGTS centers, we delete five (5, 7, 12, 13 and 14), either because they had too few observations or because their within-center covariance matrices were not positive definite. We also delete two outlying values greater than 35 mmHg. For the remaining centers (n = 9), we increase the sample sizes to 335, 176, 385, 264, 539, 368, 286, 528 and 319.
The trial- and treatment-specific means and correlations of S and T are listed in Table II.
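The rescaling step can be sketched as follows: for each center and treatment arm, pseudo-observations of (S, T) are drawn from a bivariate normal with the observed arm-specific means and covariance matrix. The input format (a list of dicts), the Z coding (0 = medicine, 1 = surgery) and the function name are hypothetical, and any covariance matrices passed in must come from the real data, since Table II reports means and correlations but not variances.

```python
import numpy as np


def make_pseudodata(group_stats, rng):
    """Simulate (center, Z, S, T) rows from bivariate normals, one group at a time.

    group_stats: iterable of dicts with keys
      'center' (label), 'z' (0 = medicine, 1 = surgery), 'n' (rows to draw),
      'mean' (mean S, mean T) and 'cov' (2x2 covariance of S and T).
    """
    blocks = []
    for g in group_stats:
        st = rng.multivariate_normal(g["mean"], g["cov"], size=g["n"])
        blocks.append(np.column_stack([np.full(g["n"], g["center"]),
                                       np.full(g["n"], g["z"]), st]))
    return np.vstack(blocks)
```

For example, the medicine arm of Center 1 would use `"mean": [17.63, 16.52]` from Table II together with its observed covariance matrix.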

Table II.

Description of Pseudodata in Glaucoma study: Treatment-Specific Means and Individual-Level Correlations for Each Center

| Center | Sample size | Medicine (mean S, mean T) | Surgery (mean S, mean T) | Individual-level correlation (medicine) | Individual-level correlation (surgery) |
|---|---|---|---|---|---|
| 1 | 670 | (17.63, 16.52) | (13.76, 14.59) | 0.367 | 0.608 |
| 2 | 352 | (17.22, 16.42) | (14.63, 12.98) | −0.455 | 0.467 |
| 3 | 770 | (19.27, 17.58) | (15.81, 16.17) | 0.589 | 0.548 |
| 4 | 528 | (17.17, 15.51) | (10.93, 12.88) | 0.176 | 0.540 |
| 5 | 1078 | (18.52, 18.67) | (14.99, 15.32) | 0.435 | 0.407 |
| 6 | 736 | (18.62, 18.89) | (15.13, 17.11) | −0.16 | −0.0056 |
| 7 | 572 | (18.35, 15.34) | (14.59, 14.53) | 0.177 | 0.396 |
| 8 | 1056 | (18.59, 16.16) | (13.60, 13.72) | 0.31 | 0.95 |
| 9 | 638 | (17.56, 16.82) | (14.19, 14.61) | 0.042 | 0.756 |

The HD method is used to fit the rescaled data for which is positive definite and the estimates of and , denoted by and , are obtained as 0.25 and 0.15, respectively. We randomly select Center 8 as the new trial and delete some proportion of T in Center 8 to examine the extent of efficiency gain through the use of S. The values of T are deleted completely at random [24]. The results are listed in Table III. Without missing T (the SIMPLE analysis in Table III), the estimated treatment effect is −2.45 with a standard error of 0.29. When T is completely missing, the estimate is −1.58 with a standard error of 0.79. When 20% or 50% of T are missing, the precision obtained by using S is comparable to that based on completely observed T. Even with 80% missing, the SE is substantially smaller than when 100% of T is missing. For further illustration, we treat Center 9 as a new trial and obtain similar results. With this rescaled data, we have artificially increased the sample size approximately fivefold for each trial; hence, the power to detect the treatment effect is much larger than with the original data. When δTn is predicted solely based on S, the relative efficiency is only about 10% of that when T is not missing; the prediction reaches the 0.05 significance level for Center 9 but is not quite significant for Center 8. In this particular study, S is completely observed by the end of 1998, but T only starts to be observed in 2001; thus, by relying solely on S to predict δTn, we can substantially shorten the trial length, but the result is only of borderline significance. In practice, many trials do not have such a strong effect, so when δTn is predicted solely based on S, the substantial loss in precision often results in failure to detect a real treatment effect. In the CIGTS, by October 2002, about 20% of T would have been observed and the treatment effect is clearly significant, illustrating the substantial increase in the precision of the estimated treatment effect gained by utilizing a small fraction of T. There is also a considerable time saving compared to collecting T on all subjects, which would have required follow-up to 2005.
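The relative efficiencies quoted above can be checked approximately from the standard errors in Table III. The sketch below takes relative efficiency as a ratio of variances, (SE_reference / SE_scenario)², and uses the HD fit with no missing T as the reference; that choice of reference and the helper names (`TABLE_III_SE`, `efficiency_table`) are assumptions made for illustration only.

```python
# Relative efficiency of each missing-T scenario in Table III, taken here as
# var(reference estimate) / var(scenario estimate) = (SE_ref / SE_scenario)^2.
# SE values are copied from Table III; using the HD "No missing" fit as the
# reference is an assumption made for this illustration.

TABLE_III_SE = {
    8: {"SIMPLE": 0.29, "No missing": 0.22, "100% missing": 0.79,
        "90% missing": 0.47, "80% missing": 0.39, "50% missing": 0.29,
        "20% missing": 0.23},
    9: {"SIMPLE": 0.30, "No missing": 0.27, "100% missing": 0.82,
        "90% missing": 0.61, "80% missing": 0.49, "50% missing": 0.36,
        "20% missing": 0.30},
}


def relative_efficiency(se_reference: float, se_scenario: float) -> float:
    """Ratio of variances; values near 1 mean little information was lost."""
    return (se_reference / se_scenario) ** 2


def efficiency_table(center: int, reference: str = "No missing") -> dict:
    """Relative efficiency of every 'x% missing' row against the reference row."""
    ses = TABLE_III_SE[center]
    return {label: relative_efficiency(ses[reference], se)
            for label, se in ses.items()
            if label.endswith("% missing")}


if __name__ == "__main__":
    for center in (8, 9):
        for label, eff in efficiency_table(center).items():
            print(f"Center {center}, {label}: relative efficiency {eff:.2f}")
```

With this reference, the 100%-missing scenario gives roughly 0.08 for Center 8 and 0.11 for Center 9; using the SIMPLE standard errors as the reference instead gives about 0.13 for both centers.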

Table III.

Estimated treatment effect on IOP at the 96th month utilizing information from early IOP measures at the 12th month in the glaucoma study.

| Center / analysis | Estimate | Standard Error | p-value |
|---|---|---|---|
| Center 8 | | | |
| SIMPLE† | −2.45 | 0.29 | < .0001 |
| No missing‡ | −2.33 | 0.22 | < .0001 |
| 100% missing‡ | −1.58 | 0.79 | 0.063 |
| 90% missing‡ | −1.50 | 0.47 | 0.0059 |
| 80% missing‡ | −2.37 | 0.39 | < .0001 |
| 50% missing‡ | −2.61 | 0.29 | < .0001 |
| 20% missing‡ | −2.19 | 0.23 | < .0001 |
| Center 9 | | | |
| SIMPLE† | −2.21 | 0.30 | < .0001 |
| No missing‡ | −2.32 | 0.27 | < .0001 |
| 100% missing‡ | −2.68 | 0.82 | 0.0053 |
| 90% missing‡ | −2.19 | 0.61 | 0.0023 |
| 80% missing‡ | −2.30 | 0.49 | < .0002 |
| 50% missing‡ | −2.04 | 0.36 | < .0001 |
| 20% missing‡ | −2.15 | 0.30 | < .0001 |

† Based on complete data before any deletion.

‡ HD method was used.

7. DISCUSSION

In this report, we examine the role of biomarkers as auxiliary variables in predicting the treatment effect and identify situations when biomarkers can be beneficial in a multiple-trial setting. While previous literature on the use of biomarkers as substitutes for the true endpoints has been mostly negative and the proposed surrogate measures are often not useful in practice, we show that S can be useful as an auxiliary variable, helping to provide information and enhancing the inference on T. Although a high correlation between S and T does not qualify S as a good surrogate [13], we show that the correlation is a critical measure in determining the extent of information recovery from S.

In a multiple-trial setting, when T is completely unobserved, the individual-level correlation has little impact on the amount of information recovered from S; on the other hand, the higher the trial-level correlation, the higher the efficiency gain from S. However, even with a relatively high trial-level correlation, the treatment effect predicted solely from data in other trials and the biomarker in the new trial is usually too imprecise to be clinically useful. Conversely, when the treatment effect on T predicted solely from S is precise enough to detect the treatment difference, the benefit of reducing the trial length can be enormous. Examples include situations where the statistical power to detect the treatment effect is very large or where the trial-level correlation is close to 1, such as the ovarian cancer example in [10]. However, these cases are usually rare in practice. In contrast, when T is partially observed in the new trial, we find that a high individual-level correlation is a very important determinant of the precision of the treatment effect predicted with the help of S, whereas the impact of the trial-level correlation is negligible. With even a small fraction of T and a high individual-level correlation, the information on the treatment effect is mostly recovered and the prediction precision is close to that when T is completely observed. It appears that some data on T are essential to provide the basis for individual-level predictions of T from S and to take advantage of the distributional assumption between S and T, and hence to give a much more efficient estimate of the treatment effect.
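Why only the trial-level correlation matters when T is entirely missing can be seen in a deliberately simplified version of the meta-analytic model (this is an illustration, not the paper's full model). Assume that the true trial-level effects (δSi, δTi) are bivariate normal across trials with known variances d_ss, d_tt and covariance d_st, and that the new trial contributes only an estimate of δSn with sampling variance v_s, which shrinks as the trial grows. The conditional-normal formula then gives the prediction variance of δTn. All names here are hypothetical notation.

```python
# Simplified trial-level prediction when T is entirely missing in the new trial.
# Hypothetical notation: d_ss, d_tt, d_st are the between-trial variances and
# covariance of the true effects (delta_S, delta_T); v_s is the sampling
# variance of the estimated delta_S in the new trial. All are treated as known.


def trial_level_r2(d_ss: float, d_tt: float, d_st: float) -> float:
    """Squared trial-level correlation between delta_S and delta_T."""
    return d_st ** 2 / (d_ss * d_tt)


def predicted_effect_variance(d_ss: float, d_tt: float, d_st: float,
                              v_s: float) -> float:
    """Var(delta_Tn | estimated delta_Sn) for bivariate normal trial effects.

    The estimate of delta_Sn is delta_Sn plus independent sampling noise with
    variance v_s, so its variance is d_ss + v_s and its covariance with
    delta_Tn is d_st; the conditional-normal formula gives the result.
    """
    if v_s < 0:
        raise ValueError("v_s must be non-negative")
    return d_tt - d_st ** 2 / (d_ss + v_s)


if __name__ == "__main__":
    d_ss, d_tt, d_st = 1.0, 1.0, 0.9  # illustrative values; R^2_trial = 0.81
    for v_s in (0.5, 0.1, 0.01, 0.0):  # v_s shrinks as the new trial grows
        var = predicted_effect_variance(d_ss, d_tt, d_st, v_s)
        print(f"v_s={v_s:<5} predicted variance={var:.3f}")
    floor = d_tt * (1 - trial_level_r2(d_ss, d_tt, d_st))
    print(f"floor d_tt * (1 - R^2_trial) = {floor:.3f}")
```

As v_s goes to 0 the variance approaches d_tt(1 − R²_trial) rather than 0, so a larger new trial cannot compensate for a modest trial-level correlation, and the individual-level correlation does not enter at all. The sketch also treats d_ss, d_st and d_tt as known; ignoring their estimation error plausibly contributes to the variance underestimation noted below when few trials are available.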

We compare the BMBRG, GPHC and HD methods when T is completely missing. Each method gave unbiased estimates, but the variances were underestimated, particularly when the number of trials was small. A bootstrap [22], a fully Bayesian, or a measurement-error approach [15] could remedy this problem. When T is partially observed, we use two methods: HD and EB-CPV. We find that the underestimation of the variance from the HD method becomes negligible, but CPV consistently underestimates the variance. We note that we only consider T missing completely at random, although all methods are also applicable when the missing-data mechanism is missing at random [24].

In conclusion, biomarkers would seem to have a useful role as auxiliary variables. Future research should focus on their roles as auxiliary variables and identify scenarios when biomarkers can increase the precision of the treatment effect. For design purposes, our results suggest that it is often important to collect at least some data on the true endpoint and more information on biomarkers which have high adjusted individual-level correlations with the true endpoint. With appropriate utilization of high quality biomarkers in estimating the treatment effect when the true endpoint is not completely observed, one can reach a desired level of precision earlier, hence shortening the study period and reducing the cost. In our study, we consider continuous S and T. For future research, it would also be interesting to investigate the factors that impact the efficiency gain and the extent of it when S and T are other types of data such as binary, categorical and time to an event.
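The design recommendation can be made roughly quantitative with a classical double-sampling (regression-estimator) approximation, borrowed here as a simplification; it is not the HD or EB-CPV method used in this paper. Assume a single trial, (S, T) bivariate normal within each arm with correlation rho (playing the role of the adjusted individual-level correlation), S measured on every subject, T observed on a fraction p of subjects completely at random, and the regression of T on S known. The variance of the estimated arm mean of T is then proportional to rho² + (1 − rho²)/p, against 1 when all T are observed; because the two arms are independent, the same ratio applies to the treatment difference. The function names are hypothetical.

```python
# Design sketch: how much T must be observed when S is measured on everyone?
# Classical double-sampling approximation for one arm:
#   Var ~ sigma_T^2 * [rho^2 / n + (1 - rho^2) / (p * n)],
# where rho is the within-arm (adjusted) correlation of S and T and p is the
# fraction of subjects with T observed, missing completely at random.
# Estimation error in the regression of T on S is ignored.


def two_phase_efficiency(rho: float, p: float) -> float:
    """Efficiency relative to observing T on every subject (p = 1)."""
    if not -1.0 < rho < 1.0:
        raise ValueError("rho must lie strictly between -1 and 1")
    if not 0.0 < p <= 1.0:
        raise ValueError("p must lie in (0, 1]")
    return 1.0 / (rho ** 2 + (1.0 - rho ** 2) / p)


def fraction_needed(rho: float, target: float) -> float:
    """Smallest observed fraction p that attains the target efficiency."""
    if not -1.0 < rho < 1.0:
        raise ValueError("rho must lie strictly between -1 and 1")
    if not 0.0 < target <= 1.0:
        raise ValueError("target must lie in (0, 1]")
    # Solve rho^2 + (1 - rho^2) / p = 1 / target for p.
    return (1.0 - rho ** 2) / (1.0 / target - rho ** 2)


if __name__ == "__main__":
    for rho in (0.5, 0.8, 0.9, 0.95):
        print(f"rho={rho}: efficiency with 20% of T = "
              f"{two_phase_efficiency(rho, 0.2):.2f}, "
              f"fraction for efficiency 0.8 = {fraction_needed(rho, 0.8):.2f}")
```

Under these assumptions, reaching 80% of full-data efficiency needs roughly 43% of T when rho = 0.9, roughly 28% when rho = 0.95, but 75% when rho = 0.5, which illustrates why markers with a high adjusted individual-level correlation are worth collecting alongside some T.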

ACKNOWLEDGEMENTS

The authors would like to thank Dr. Brenda Gillespie for providing us with the CIGTS data. This research was supported by National Institutes of Health Grant CA129102.

8. APPENDIX A: Henderson Method [23]

Let Y = Xβ+Uη+ε, where the vectors Y, η and ε and the matrix X are obtained from stacking the vectors Yi, ηi and εi and the matrices Xi, respectively, underneath each other, and where U is the block-diagonal matrix with blocks Ui on the main diagonal and zeros elsewhere. Let and Σ be block-diagonal with blocks D and Σi on the main diagonal and zeros elsewhere. We have the following relationships: , var(ε) = Σ, cov(η, ε) = 0 and we let . The estimates of , Σ and are denoted by , and . Henderson [23] proposed a method to obtain estimates of β and η by solving the mixed model equation as follows:

The solution can be written as:

The covariance matrix of is

McLean and Sanders (1988) [33] and McLean, Sanders and Stroup (1991) [34] show that C can also be written as

where

In practice, the estimate of C is often obtained by substituting and Σ in C with their estimates, as we have done in this paper. From the above, we can obtain the expression for the mean and variance for as follows:
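A small numerical sketch may make the steps above concrete. It uses the standard textbook form of Henderson's mixed model equations, [X′Σ⁻¹X, X′Σ⁻¹U; U′Σ⁻¹X, U′Σ⁻¹U + D⁻¹](β; η) = (X′Σ⁻¹Y; U′Σ⁻¹Y), and checks that the solution agrees with the GLS estimate of β and the BLUP η = DU′V⁻¹(Y − Xβ), with V = UDU′ + Σ, and that the upper-left block of C equals (X′V⁻¹X)⁻¹. The toy data, the known D and Σ, and all helper names are hypothetical; the paper's model stacks S and T jointly, which this toy does not attempt.

```python
# Toy check of Henderson's mixed model equations (MME) in pure Python.
# Model: Y = X beta + U eta + eps, var(eta) = D (block-diagonal),
# var(eps) = Sigma; D and Sigma are treated as known here.


def transpose(a):
    return [list(row) for row in zip(*a)]


def matmul(a, b):
    cols = transpose(b)
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def add(a, b):
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def inverse(a):
    """Gauss-Jordan inverse with partial pivoting."""
    n = len(a)
    aug = [[float(v) for v in row] + [float(i == j) for j in range(n)]
           for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[piv][col]) < 1e-12:
            raise ValueError("matrix is singular")
        aug[col], aug[piv] = aug[piv], aug[col]
        lead = aug[col][col]
        aug[col] = [v / lead for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [v - f * w for v, w in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def henderson_mme(X, U, Y, D, Sigma):
    """Solve the MME; return beta-hat, eta-hat and C (inverse MME matrix)."""
    si = inverse(Sigma)
    xts, uts = matmul(transpose(X), si), matmul(transpose(U), si)
    top = [r1 + r2 for r1, r2 in zip(matmul(xts, X), matmul(xts, U))]
    bottom = [r1 + r2 for r1, r2 in
              zip(matmul(uts, X), add(matmul(uts, U), inverse(D)))]
    C = inverse(top + bottom)
    sol = matmul(C, matmul(xts, Y) + matmul(uts, Y))  # stacked right-hand side
    p = len(X[0])
    return [r[0] for r in sol[:p]], [r[0] for r in sol[p:]], C


def gls_blup(X, U, Y, D, Sigma):
    """Closed form: GLS beta and BLUP eta = D U' V^{-1} (Y - X beta)."""
    vi = inverse(add(matmul(matmul(U, D), transpose(U)), Sigma))
    xtv = matmul(transpose(X), vi)
    beta = matmul(inverse(matmul(xtv, X)), matmul(xtv, Y))
    resid = add(Y, [[-v[0]] for v in matmul(X, beta)])
    eta = matmul(matmul(matmul(D, transpose(U)), vi), resid)
    return [r[0] for r in beta], [r[0] for r in eta]


def example_data():
    """Hypothetical toy data: 2 trials x 3 subjects, random trial intercepts."""
    X = [[1, 0], [1, 1], [1, 0], [1, 0], [1, 1], [1, 1]]  # intercept, treatment
    U = [[1, 0], [1, 0], [1, 0], [0, 1], [0, 1], [0, 1]]  # trial membership
    Y = [[1.2], [2.9], [0.8], [2.1], [3.7], [4.0]]
    D = [[0.5, 0.0], [0.0, 0.5]]
    Sigma = [[float(i == j) for j in range(6)] for i in range(6)]
    return X, U, Y, D, Sigma


if __name__ == "__main__":
    beta, eta, _ = henderson_mme(*example_data())
    print("beta:", beta, "eta:", eta)
```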

9. APPENDIX B: BAYESIAN ESTIMATION

Iterate the following two steps until the parameters reach convergence:

Step 1: Impute missing Tnj’s from a normal distribution with mean and variance:

Step 2: Apply Gibbs sampling to the complete data to estimate the parameters:

where,

From the distributions of β and ηi, we can obtain the distribution of δTn.
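Step 1 can be illustrated with the usual conditional-normal formula. The sketch assumes, as a hypothetical reading of model (1) consistent with the parameterization in Appendix C, that for a subject in trial n with treatment z, S = μ0Sn + δSn z + eS and T = μ0Tn + δTn z + eT, with (eS, eT) bivariate normal with variances sigma_ss, sigma_tt and covariance sigma_st. Then T given S is normal with mean μ0Tn + δTn z + (sigma_st/sigma_ss)(S − μ0Sn − δSn z) and variance sigma_tt − sigma_st²/sigma_ss. Only Step 1 is sketched; the Gibbs update of Step 2 is not. Parameter and function names are illustrative.

```python
import math
import random

# Step 1 sketch: draw missing T from its conditional normal given S.
# Assumed (hypothetical) within-trial model for a subject in trial n:
#   S = mu0_s + delta_s * z + e_S,   T = mu0_t + delta_t * z + e_T,
# with (e_S, e_T) bivariate normal, variances sigma_ss, sigma_tt and
# covariance sigma_st. Parameter names are illustrative, not the paper's.


def conditional_t_given_s(s, z, mu0_s, delta_s, mu0_t, delta_t,
                          sigma_ss, sigma_st, sigma_tt):
    """Mean and variance of T given S = s and treatment indicator z."""
    slope = sigma_st / sigma_ss
    mean = mu0_t + delta_t * z + slope * (s - (mu0_s + delta_s * z))
    var = sigma_tt - sigma_st ** 2 / sigma_ss
    return mean, var


def impute_missing_t(records, params, rng):
    """Return copies of records with each missing 't' (None) drawn from the
    conditional normal; observed values of 't' are left unchanged."""
    completed = []
    for rec in records:
        rec = dict(rec)  # copy so the caller's data are not modified
        if rec["t"] is None:
            mean, var = conditional_t_given_s(rec["s"], rec["z"], **params)
            rec["t"] = rng.gauss(mean, math.sqrt(var))
        completed.append(rec)
    return completed


if __name__ == "__main__":
    params = dict(mu0_s=0.0, delta_s=1.0, mu0_t=10.0, delta_t=2.0,
                  sigma_ss=4.0, sigma_st=3.0, sigma_tt=9.0)
    data = [{"s": 3.0, "z": 1, "t": 13.1}, {"s": -1.0, "z": 0, "t": None}]
    print(impute_missing_t(data, params, random.Random(2008)))
```

In the full iteration, the completed data from this step would be passed to the Gibbs sampler of Step 2, and the updated parameters fed back into the next imputation.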

10. APPENDIX C: CONDITIONAL POSTERIOR VARIANCE OF δTn

Let α0 + a0i = μ0Si, γ0 + r0i = μ0Ti, α1 + a1i = δSi and γ1 + r1i = δTi. We can rewrite the model (1) as

Assume there are r observations with both S and T observed and mn – r observations with just S observed in the nth trial. The likelihood can be written as:

which is proportional to the posterior density when we assume flat priors for the fixed effects and multivariate normal distributions for the random effects. The conditional posterior distributions of μ0Tn and δTn given the data and all other parameters are proportional to:

(13)

where

The covariance contribution for μ0Tn and δTn from term B is .

We define . From (13),

A is proportional to a bivariate normal density. The covariance contribution from term A is defined as , where

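Combining the variance contributions from terms A and B, we can obtain the conditional posterior covariance for μ0Tn and δTn as: . The corresponding conditional posterior variance for δTn is obtained by pre-multiplying this covariance matrix by (0 1) and post-multiplying it by (0 1)T, that is, it is the second diagonal element of the matrix.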
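One way to carry out this combination numerically is sketched below. It assumes, as the text states for A and reads the same way for B, that each term is proportional to a bivariate normal density in (μ0Tn, δTn) with covariance matrices cov_a and cov_b; the product of two such kernels is again bivariate normal with precision equal to the sum of the two precisions. This is a standard Gaussian identity offered as an illustration, not a reproduction of the authors' derivation, and the function names are hypothetical.

```python
# Sketch for Appendix C: combine two Gaussian kernels in (mu0_Tn, delta_Tn)
# and read off the conditional posterior variance of delta_Tn.
# Assumption: terms A and B are each proportional to a bivariate normal
# density in (mu0_Tn, delta_Tn) with covariance matrices cov_a and cov_b.
# Their product is bivariate normal with precision = sum of precisions.


def inv2(m):
    """Inverse of a 2 x 2 matrix given as [[a, b], [c, d]]."""
    (a, b), (c, d) = m
    det = a * d - b * c
    if det == 0:
        raise ValueError("matrix is singular")
    return [[d / det, -b / det], [-c / det, a / det]]


def combine_kernels(cov_a, cov_b):
    """Covariance matrix of the product of two bivariate normal kernels."""
    prec_a, prec_b = inv2(cov_a), inv2(cov_b)
    return inv2([[prec_a[i][j] + prec_b[i][j] for j in range(2)]
                 for i in range(2)])


def delta_variance(cov):
    """(0 1) cov (0 1)^T, i.e. the variance of the second component."""
    w = (0.0, 1.0)
    return sum(w[i] * cov[i][j] * w[j] for i in range(2) for j in range(2))


if __name__ == "__main__":
    cov_a = [[2.0, 1.0], [1.0, 2.0]]  # illustrative values only
    cov_b = [[1.0, 0.0], [0.0, 4.0]]
    print(delta_variance(combine_kernels(cov_a, cov_b)))
```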

REFERENCES

- 1. Buyse M, Molenberghs G. Criteria for the validation of surrogate endpoints in randomized experiments. Biometrics. 1998;54:1014–1029.

- 2. Baker SG, Kramer BS. Biomarker, Surrogate Endpoints, and Early Detection Imaging Tests: Reducing Confusion. http://www.icsa.org/bulletin/Bulletin-1-2004-Contents/A3-25-controverstial-issues-v4.doc.

- 3. Prentice RL. Surrogate endpoints in clinical trials: definition and operational criteria. Statistics in Medicine. 1989;8:431–440. doi: 10.1002/sim.4780080407.

- 4. Hsu C, Taylor JMG, Murray S, Commenges D. Survival analysis using auxiliary variables via nonparametric multiple imputation. Statistics in Medicine. 2006;25:3503–3517. doi: 10.1002/sim.2452.

- 5. Freedman LS, Graubard BI, Schatzkin A. Statistical validation of intermediate endpoints for chronic disease. Statistics in Medicine. 1992;11:167–178. doi: 10.1002/sim.4780110204.

- 6. Liang KY, Zeger SL. Longitudinal data analysis using generalized linear models. Biometrika. 1986;73:13–22.

- 7. Lin DY, Fleming TR, DeGruttola V. Estimating the proportion of treatment effect captured by a surrogate marker. Statistics in Medicine. 1997;16:1515–1527. doi: 10.1002/(sici)1097-0258(19970715)16:13<1515::aid-sim572>3.0.co;2-1.

- 8. Bycott PW, Taylor JMG. An evaluation of a measure of the proportion of the treatment effect explained by a surrogate marker. Controlled Clinical Trials. 1998;19:555–568. doi: 10.1016/s0197-2456(98)00039-7.

- 9. Wang Y, Taylor JMG. A measure of the proportion of treatment effect explained by a surrogate marker. Biometrics. 2002;58:803–812. doi: 10.1111/j.0006-341x.2002.00803.x.

- 10. Buyse M, Molenberghs G, Burzykowski T, Renard D, Geys H. The validation of surrogate endpoints in meta-analyses of randomized experiments. Biostatistics. 2000;1:49–67. doi: 10.1093/biostatistics/1.1.49.

- 11. De Gruttola V, Fleming T, Lin DY, Coombs R. Perspective: validating surrogate markers - are we being naïve? The Journal of Infectious Diseases. 1997;175:237–246. doi: 10.1093/infdis/175.2.237.

- 12. Fleming TR, DeMets DL. Surrogate endpoints in clinical trials: are we being misled? Annals of Internal Medicine. 1996;125:605–613. doi: 10.7326/0003-4819-125-7-199610010-00011.

- 13. Baker SG, Kramer BS. A perfect correlate does not a surrogate make. BMC Medical Research Methodology. 2003;3:16. doi: 10.1186/1471-2288-3-16.

- 14. Cook RJ, Lawless JF. Some comments on efficiency gains from auxiliary information for right-censored data. Journal of Statistical Planning and Inference. 2001;96:191–202.

- 15. Burzykowski T, Molenberghs G, Buyse M. The Evaluation of Surrogate Endpoints. Springer; New York: 2004. Chapter 18.

- 16. Pepe MS, Reilly M, Fleming TR. Auxiliary outcome and the mean score method. Journal of Statistical Planning and Inference. 1994;43:137–160.

- 17. Robins JM, Rotnitzky A. Recovery of information and adjustment for dependent censoring using surrogate markers. In: Jewell N, Dietz K, Farewell V, editors. AIDS Epidemiology: Methodological Issues. Birkhäuser; Boston: 1992. pp. 297–331.

- 18. Malani HM. A modification of the redistribution to the right algorithm using disease markers. Biometrika. 1995;82:515–526.

- 19. Murray S, Tsiatis AA. Nonparametric survival estimation using prognostic longitudinal covariates. Biometrics. 1996;52:137–151.

- 20. Kosorok MR, Fleming TR. Using surrogate failure time data to increase cost effectiveness in clinical trials. Biometrika. 1993;80:823–833.

- 21. Venkatraman ES, Begg CB. Properties of a nonparametric test for early comparison of treatments in clinical trials in the presence of surrogate endpoints. Biometrics. 1999;55:1171–1176. doi: 10.1111/j.0006-341x.1999.01171.x.

- 22. Gail M, Pfeiffer R, Van Houwelingen HC, Carroll RJ. On meta-analytic assessment of surrogate outcomes. Biostatistics. 2000;1:231–246. doi: 10.1093/biostatistics/1.3.231.

- 23. Henderson CR. Best linear unbiased estimation and prediction under a selection model. Biometrics. 1975;31:423–447.

- 24. Little RJA, Rubin DB. Statistical Analysis with Missing Data. 2nd ed. Wiley; New York: 2002.

- 25. Laird NM, Ware JH. Random-effects models for longitudinal data. Biometrics. 1982;38:963–974.

- 26. Robinson GK. That BLUP is a good thing: the estimation of random effects. Statistical Science. 1991;6:15–51.

- 27. Louis TA, Zelterman D. Bayesian approaches to research synthesis. In: Cooper H, Hedges LV, editors. The Handbook of Research Synthesis. Russell Sage Foundation; New York: 1994.

- 28. Ghosh M, Rao JNK. Small area estimation: an appraisal. Statistical Science. 1994;9:55–76.

- 29. Morris CN. Parametric empirical Bayes inference: theory and applications (with discussion). Journal of the American Statistical Association. 1983;78:47–65.

- 30. Musch DC, Lichter PR, Guire KE, Standardi CL; CIGTS Investigators. The Collaborative Initial Glaucoma Treatment Study (CIGTS): study design, methods, and baseline characteristics of enrolled patients. Ophthalmology. 1999;106:653–662. doi: 10.1016/s0161-6420(99)90147-1.

- 31. Searle SR. Linear Models. Wiley; New York: 1971.

- 32. SAS Institute Inc. Cary, NC, USA: 2003.

- 33. McLean RA, Sanders WL. Approximating degrees of freedom for standard errors in mixed linear models. Proceedings of the Statistical Computing Section, American Statistical Association, New Orleans. 1988:50–59.

- 34. McLean RA, Sanders WL, Stroup WW. A unified approach to mixed linear models. The American Statistician. 1991;45:54–64.