Abstract

The relation between the timing of language input and development of neural organization for language processing in adulthood has been difficult to tease apart because language is ubiquitous in the environment of nearly all infants. However, within the congenitally deaf population are individuals who do not experience language until after early childhood. Here, we investigated the neural underpinnings of American Sign Language (ASL) in 2 adolescents who had no sustained language input until they were approximately 14 years old. Using anatomically constrained magnetoencephalography, we found that recently learned signed words mainly activated right superior parietal, anterior occipital, and dorsolateral prefrontal areas in these 2 individuals. This spatiotemporal activity pattern was significantly different from the left fronto-temporal pattern observed in young deaf adults who acquired ASL from birth, and from that of hearing young adults learning ASL as a second language for a similar length of time as the cases. These results provide direct evidence that the timing of language experience over human development affects the organization of neural language processing.

Keywords: age of acquisition, anatomically constrained magnetoencephalography, critical period, language processing, sign language

Introduction

One of the most challenging questions in neurolinguistics is the role that early language input plays in the development of the left-hemisphere canonical network for language processing (Penfield and Roberts 1959). The left hemisphere shows adult-like activations from a very young age (Dehaene-Lambertz et al. 2002; Imada et al. 2006; Travis et al. 2011). However, the degree to which such neural activation patterns are contingent upon language experience is unknown because nearly all hearing children experience language at, or even before, birth (Moon and Fifer 2000). Congenital deafness often has the effect of delaying the onset of language acquisition, and many deaf children born to hearing parents do not receive functional language input until they receive special services or interact with other deaf individuals who use sign language. These circumstances thus offer a unique opportunity to investigate the effects of delayed onset of “first” language (L1) acquisition on the classic network for language processing. Here, we ask how an extreme delay in L1 input affects the organization of linguistic processing in the brain, which requires that we first consider how age of acquisition (AoA) affects second language (L2) and sign language learning and neural processing.

The most common means of investigating the effects of delayed AoA on the neural processing of language is by studying L2 acquisition. Most neuroimaging studies agree that the L2 is acquired and processed through neural mechanisms similar to those that support the L1, with differences observed in more extended activity of the brain system supporting L1 (for review see Abutalebi 2008). A number of studies also show that a less proficient and/or a late acquired L2 engages the right hemisphere to a greater extent than L1 (Dehaene et al. 1997; Perani et al. 1998; Wartenburger et al. 2003; Leonard et al. 2010, 2011).

Studies using event-related potentials (ERPs) indicate that responses to L2 typically exhibit slightly delayed latencies compared with L1 (Alvarez et al. 2003; Moreno and Kutas 2005). Two recent magnetoencephalography (MEG) studies on Spanish-English bilinguals replicate these findings, indicating that the representations of L1 and L2 are largely overlapping in the left-hemisphere frontal regions, but that L2 additionally recruits bilateral posterior and right-hemisphere frontal areas (Leonard et al. 2010, 2011). Many behavioral studies with L2 learners confirm the existence of a negative correlation between L2 AoA and language outcome at various levels of linguistic structure (Birdsong 1992; White and Genesee 1996; Flege et al. 1999). While it is generally agreed that earlier acquisition of L2 is “better,” there is disagreement as to the exact nature of AoA effects on L2 learning. The disagreement arises from the fact that the magnitude of the AoA effects is variable and near-native L2 acquisition is sometimes possible despite late AoA (Birdsong and Molis 2001).

Near-normal language proficiency does not occur when L1 acquisition is delayed, as demonstrated by a number of studies of deaf signers with varying L1 AoA. Sign languages are linguistically equivalent to spoken languages (Klima and Bellugi 1979; Sandler and Lillo-Martin 2006) and, similar to spoken language, early onset of sign language results in native proficiency and the capability to subsequently acquire L2s (Mayberry et al. 2002). Delays in sign language acquisition, on the other hand, have been associated with low levels of language proficiency. Specifically, as acquisition begins at older ages, language processing becomes dissociated from meaning and more tied to the perceptual form of words; syntactic abilities decrease, and sentence and narrative comprehension decline (Mayberry and Fischer 1989; Newport 1990; Mayberry and Eichen 1991; Boudreault and Mayberry 2006). These effects are greatest in those cases where no functional language has been available until late childhood or even early teenage years (Boudreault and Mayberry 2006). While few, if any, such individuals have been followed longitudinally, psycholinguistic studies with adult deaf life-long signers with late childhood to adolescent AoA show that language processing deficits are severe and long term (for discussion see Mayberry 2010).

In rare cases, some deaf individuals do not have access to meaningful spoken language, because they are deaf and, due to various circumstances in their upbringing combined with social and educational factors, have not been exposed to any kind of sign language. Deaf individuals who are not in significant contact with a signed or spoken language typically use gesture prior to their exposure to language (Morford 2003). Such individuals have been termed homesigners, because they typically develop an idiosyncratic gesture system (called homesign) to communicate with their caregivers and/or families (Goldin-Meadow 2003). In the USA, homesigners typically begin receiving special services at a very young age and enter school and experience language (spoken or signed) by age 5 or younger. This may not be the case in other parts of the world where the use of homesign without any formal language may extend into adolescence or adulthood, for example, in the case of homesigners in Latin American countries where special services may be sparse or nonexistent (Senghas and Coppola 2001; Coppola and Newport 2005). Rare cases of homesigners in the USA also do not receive any formal language instruction until adolescence, mostly due to unusual family or social circumstances that include a lack of schooling at the typical age of 5 years.

We studied 2 such deaf adolescents named Shawna and Carlos (pseudonyms) who had not been in contact with any formal language (spoken or signed) in childhood and had just begun to acquire American Sign Language (ASL) at age of approximately 14 years, 2–3 years prior to participating in the study. Shawna and Carlos were thus unlike the previously described North American homesigners (Goldin-Meadow 2003) in that they were not immersed in a language environment until they were teenagers and, importantly, received very little schooling, and no special services or intervention until age of approximately 14 years. Their backgrounds thus resemble those of first-generation homesigners in Latin American countries (Senghas and Coppola 2001; Coppola and Newport 2005).

Shawna's and Carlos' backgrounds have been described elsewhere (Ferjan Ramirez et al. 2013). Briefly, they had begun to acquire ASL, their L1, through full immersion at age of approximately 14 years when they were placed in a group home for deaf children where they resided together at the time of our study. The group home was managed by deaf and hearing professionals, all highly proficient ASL signers, who worked with the adolescents every day and exclusively through ASL. Despite their clear lack of linguistic stimulation and schooling in childhood, however, both had an otherwise healthy upbringing, unlike previously described cases of social isolation and/or abuse (Koluchova 1972; Curtiss 1976). Shawna lived with hearing guardians who did not use any sign language and was reportedly kept at home and not sent to school until age of 12 years. Prior to first receiving special services at age 14;7, she had attended school for a total of 16 months, during which she was switched among a number of deaf and hearing schools. She reportedly relied on behavior and limited use of gesture to communicate. Carlos was born in a Latin American country and lived there until the age of 11 years with his large biological family all of whom were hearing. In his home country, he enrolled in a deaf school at a young age, but stopped attending after a few months because the school was of poor quality according to the parental report. At age 11 years, he immigrated to the USA with a relative and was placed in a classroom for mentally retarded children where the use of sign language was limited. Upon receiving special services at age 13;8 he knew only a few ASL signs and relied on some use of gestures and whole-body pantomime to communicate.

Beyond the description given here, whether Shawna or Carlos developed sophistication with homesign gestures is unknown. However, the professionals (deaf and hearing signers) who have worked with them since their initial arrival at the group home believed that this is unlikely because the cases were not observed to use homesign to communicate with deaf peers or adults (Ferjan Ramirez et al. 2013). It is also interesting to note that, after 1–2 years of ASL immersion, Shawna and Carlos used very little gesture and almost exclusively used ASL to communicate. Thus, their homesign gestures, if they were used prior to group home placement, were no longer used soon after a formal language became available. It is important to understand that even those cases reported to have developed complex homesign systems prior to exposure to conventional languages show marked deficits in later language development (Morford 2003), suggesting that homesign does not serve as an L1 in terms of supporting future conventional language acquisition (Morford and Hänel-Faulhaber 2011). The professionals at the group home also reported that Shawna and Carlos had no knowledge of any conventional spoken language, were illiterate, and were unable to lip-read upon placement in the group home. The limited schooling they received thus seems to have had little effect on their language development.

About 1 year prior to participating in the current study, Shawna and Carlos were administered the Test of Nonverbal Intelligence, Third Edition (TONI-3). The TONI-3 is typically used with children and adults between ages 6 and 90. Their age-adjusted scaled scores were 1 to 1.5 standard deviations below the mean. These results, however, should be interpreted with caution because of the participants' atypical life and lack of school experience. As discussed by Mayberry (2002), the nonverbal intelligence quotient (IQ) scores of late L1 learners who have suffered from educational deprivation tend to be low when they first become immersed in a conventional language. As documented by Morford (2003), however, IQ scores show significant increases over time as more education and linguistic input are received.

In preparation for the present neuroimaging study, we estimated the size and composition of Shawna's and Carlos' vocabularies using the MacArthur-Bates Communicative Developmental Inventory (CDI) for ASL (Anderson and Reilly 2002), which we cross-validated by further analyzing their spontaneous ASL production. (We have previously reported the results of our analyses of Shawna's and Carlos' language after 1–2 years of ASL immersion in Ferjan Ramirez et al. 2013.) Shawna knew 47% of the signs on the ASL-CDI list, and Carlos knew 75% of the list total. In addition, their vocabularies included several signs that are not part of the ASL-CDI list. Their ASL vocabulary composition was similar to that of child L1 learners, with a preponderance of nouns, followed by predicates, and relatively few grammatical words (Bates et al. 1994; Anderson and Reilly 2002). Further, Shawna and Carlos, like young deaf and hearing children who acquire language from birth, produced short utterances (Newport and Meier 1985; Bates et al. 1998). Shawna's mean length of utterance in sign units was 2.4, and Carlos' mean length of utterance was 2.8. Their utterances were predominantly declarative and simple and included examples such as SCHOOL FOOD LIKE or LETTER BRING. (Examples are given as English glosses because ASL has no written form; for more examples, see Ferjan Ramirez et al. 2013.) They did not use conjunction, subordination, conditionals, or wh-questions. As in child L1 (Bates and Goodman 1997), their syntactic development was consistent with their vocabulary size and composition. These analyses suggested that the language acquisition of Shawna and Carlos, although begun extraordinarily late in development, was highly structured and shared basic characteristics with that of young child language learners.

With these ASL acquisition findings in mind, the present study asks how Shawna and Carlos neurally represent their newly acquired ASL words. Given that their language acquisition looks child-like, one hypothesis is that their neural language representation will look child-like as well. Recent neuroimaging studies suggest that infant language learners activate the canonical left-hemisphere fronto-temporal network when presented with language stimuli. The occurrence of adult-like activations has been reported in French- (Dehaene-Lambertz et al. 2002), English- (Travis et al. 2011), and Finnish-learning infants (Imada et al. 2006) between the ages of 3 and 18 months. These results suggest that the language network is functional for language processing from an early age. We asked whether these canonical patterns of neural activation would also appear in the cases whose initial language immersion occurred in adolescence rather than infancy.

Deaf babies who experience sign language from birth have not yet been studied with neuroimaging methods. However, given the parallels between sign and spoken languages (Klima and Bellugi 1979; Sandler and Lillo-Martin 2006), there is no reason to assume that the infant neural representation of sign language would diverge from that of spoken language. Evidence from aphasia (Hickok et al. 1996), cortical stimulation (Corina et al. 1999), and neuroimaging (Petitto et al. 2000; Sakai et al. 2005; MacSweeney et al. 2006; MacSweeney, Capek, et al. 2008; Mayberry et al. 2011; Leonard et al. 2012) suggests that, when acquired from birth by deaf native signers, the neural patterns associated with sign language processing look much like those associated with spoken language processing. Interestingly, Newman et al. (2002) suggest that this may not be the case for “hearing” native signers. In agreement with spoken language studies on L2 acquisition, the canonical language areas are also the main sites of neural activity in deaf individuals who acquire British Sign Language at a later age, following acquisition of a spoken/written language (as indicated by their reading scores; MacSweeney, Waters, et al. 2008). These findings confirm that the canonical language network is supramodal in nature (Marinkovic et al. 2003), further demonstrating its robustness for linguistic processing. The question considered here is whether the predisposition of this network to process language is independent of the timing of linguistic experience over development; if this is the case, then Shawna's and Carlos' neural activations in response to ASL signs should look like those of infants and adults with early L1 onset.

Alternatively, Shawna and Carlos may exhibit neural activation patterns that diverge from the canonical one. This would suggest that early language experience is required to bring about the functionality of the left-hemisphere language network, that is, that there is a critical period when language input must occur for this network to become functional. Such findings would explain why delayed L1 acquisition has severe and long-term negative effects on language acquisition and processing (Mayberry and Fischer 1989; Newport 1990; Mayberry and Eichen 1991; Boudreault and Mayberry 2006). One functional magnetic resonance imaging (fMRI) study with deaf nonnative signers suggests that delayed exposure to L1 significantly alters the adult neural representation of language (Mayberry et al. 2011). Specifically, Mayberry and colleagues scanned 23 life-long deaf signers who were first immersed in ASL at ages ranging from birth to 14 years. On an ASL grammaticality judgment task and on a phonemic hand judgment task, early language exposure correlated with greater positive hemodynamic activity in the classical language areas (such as the left inferior frontal gyrus (IFG), left insula, left dorsolateral prefrontal cortex, and left superior temporal sulcus), and greater negative (below baseline) activity in the perceptual areas of the left lingual and middle occipital gyri. As age of L1 exposure increased, this pattern reversed, suggesting that linguistic representations may rely to a greater extent on posterior brain areas, and to a lesser extent on the classical language areas, when the L1 is acquired late. These neuroimaging results accord with previous psycholinguistic findings and show that delays in L1 AoA significantly affect language processing, even after 20 years of language use. What is currently unknown is how the human brain processes a language that it has just begun to acquire for the first time in adolescence. Such individuals have never before been neuroimaged.

Materials and Methods

Participants

Cases

Two cases were studied whose language input was delayed until adolescence. The cases' backgrounds are described in the Introduction. The present neuroimaging results for the cases are compared with those of 2 carefully selected control groups: 12 young deaf adults who acquired sign language from birth (native signers), and 11 young hearing adults who studied ASL in college (L2 signers). The 2 control groups studied here, unlike the cases, had ideal language acquisition circumstances from birth. The native group serves to establish a baseline of how ASL is processed in the deaf brain when acquired from birth. The L2 group serves as a control in establishing how ASL is processed in the hearing brain when acquisition begins later in life, and full proficiency has not yet been achieved. Like the cases, the L2 learners began to acquire ASL in adolescence or young adulthood, had used it for only a limited period of time, and were not highly proficient at the time of study. Importantly, and unlike the cases, the L2 control participants experienced language (English) from birth, and the L1 control participants were proficient L2 learners of English. The results from the control groups have been reported in detail elsewhere (Leonard et al. unpublished data) and are only reported here insofar as they are relevant and necessary to the interpretation of the 2 cases.

Deaf Native Signers

Twelve healthy right-handed congenitally deaf native signers (6 females, 17–36 years) with no history of neurological or psychological impairment were recruited for participation. All had profound hearing loss from birth and acquired ASL from their deaf parents.

Hearing L2 ASL Learners

Eleven hearing native English speakers also participated (10 females; 19–33 years). All were healthy adults with normal hearing and no history of neurological or psychological impairment. All participants had 4–5 academic quarters (40–50 weeks) of college-level ASL instruction, and used ASL on a regular basis at the time of the study. Participants completed a self-assessment questionnaire to rate their ASL proficiency on a scale from 1 to 10, where 1 meant “not at all” and 10 meant “perfectly.” For ASL comprehension, the average score was 7.1 ± 1.2, and the ASL production was 6.5 ± 1.9.

While the participants in the control groups were older than the cases, this should not have a significant effect on our results because our dependent measure, the N400 semantic congruity effect, has been shown to be particularly stable in the age range tested here (Holcomb et al. 1992; Kutas and Iragui 1998). The N400 effect undergoes a change in amplitude between ages 5 and 15 years (Holcomb et al. 1992), and then again with normal aging (Kutas and Iragui 1998). However, in the age range of our participants (16–33 years), the N400 changes are very small to nonexistent.

Stimuli and Task

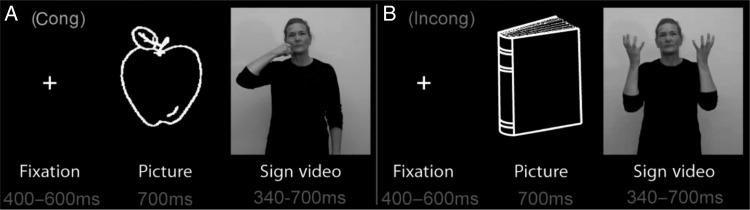

We developed a stimulus set of ASL words that Shawna and Carlos knew well (Ferjan Ramirez et al. 2013) along with a task they were able to perform with high accuracy in the scanner. The cases and all control participants performed a semantic decision task that took advantage of decades of research on an event-related neural response between 200 and 600 ms after the onset of meaningful stimuli, known as the N400 (Kutas and Hillyard 1980; Kutas and Federmeier 2000, 2011) or N400m in MEG (Halgren et al. 2002). While we recorded MEG, participants saw a line drawing of an object for 700 ms, followed by a sign (mean length: 515.3 ms; length range: 340–700 ms) that either matched (congruent; e.g., “cat-cat”) or mismatched (incongruent; e.g., “cat-ball”) the picture in meaning (Fig. 1). To measure accuracy and maintain attention, participants pressed a button when the sign matched the picture; response hand was counterbalanced across blocks within participants. Responding only to congruent trials makes the task easy to perform, which was important for successful testing of the cases, who lack experience in performing complex cognitive tasks. Responding only to congruent trials could theoretically lead to important differences in neural responses to congruent and incongruent conditions; however, previous studies in our laboratory (Travis et al. 2011, 2012), as well as additional analyses conducted in the present study (see Supplementary Fig. S2), indicate that the neural response to button press does not affect the N400 semantic congruity effect. The number of stimuli was high, allowing us to obtain statistically significant results for individual participants. To ensure that the cases were able to perform the task with high accuracy, we worked with them extensively prior to scanning so that they understood the task instructions and were comfortable with the scanners.

Figure 1.

Schematic diagram of task design. Each picture and sign appeared in both the congruent (A) and incongruent (B) conditions. Averages of congruent versus incongruent trials thereby compared responses with exactly the same stimuli.

All signs were highly imageable concrete nouns selected from ASL developmental inventories (Schick 1997; Anderson and Reilly 2002) and picture naming data (Bates et al. 2003; Ferjan Ramirez et al. 2013). Stimulus signs were reviewed by a panel of 6 deaf and hearing fluent signers to ensure accurate production and familiarity. Fingerspelling or compound nouns were excluded. Each sign video was edited to begin when all phonological parameters (handshape, location, movement, and orientation) were in place, and was ended when the movement was completed. Each sign appeared in both the congruent and incongruent conditions and, if a trial from one condition was rejected due to artifacts in the MEG signal, the corresponding trial from the other condition was also eliminated to ensure that sensory processing across congruent and incongruent trials included in the averages was identical. Native signers saw 6 blocks of 102 trials each, and L2 signers saw 3 blocks of 102 trials each because they were also scanned on the same task in the auditory and written English modality (3 blocks for each; see Leonard et al. unpublished data). Our previous work with MEG sensor data and anatomically constrained MEG (aMEG) analyses suggests that 300 trials (150 in each condition) are sufficient to capture clean and reliable single-subject responses. Shawna saw 5 blocks of 102 trials because she was not familiar with the rest of the words (Ferjan Ramirez et al. 2013). Carlos saw 5 blocks of the 102 trials due to equipment malfunction during one of the blocks. Prior to testing, Carlos and Shawna participated in a separate acclimation session during which they were familiarized with the MEG and MRI scanners and practiced the task. Before scanning began, all participants performed a practice run in the scanner. The practice run implemented a separate set of stimuli that was not part of the experimental stimuli. All controls and both cases understood the task quickly. No participant required repetitions of the practice block in the MEG.

Procedure

Using the above-described experimental paradigm with spoken words in hearing subjects, we previously found a typical N400m evoked as the difference in the magnitude of the neural response to congruent vs. incongruent trials (Travis et al. 2011). In the present study, we estimated the cortical generators of this semantic effect using aMEG, a noninvasive neurophysiological technique that combines MEG and high-resolution structural MRI (Dale et al. 2000). MEG was recorded in a magnetically shielded room (IMEDCO-AG, Switzerland), with the head in a Neuromag Vectorview helmet-shaped dewar containing 102 magnetometers and 204 planar gradiometers (Elekta AB, Helsinki, Finland). Data were collected at a continuous sampling rate of 1000 Hz with minimal filtering (0.1–200 Hz). The positions of 4 nonmagnetic coils affixed to the subjects' heads were digitized along with the main fiducial points such as the nose, nasion, and preauricular points for subsequent coregistration with high-resolution MRI images. Structural MRI was acquired on the same day after MEG, and participants were allowed to sleep or rest in the MRI scanner.

aMEG has previously been used successfully with 12- to 18-month-old infants (Travis et al. 2011) and it was likewise suitable for use with these cases whose language was beginning to develop. Importantly, and unlike hemodynamic techniques, aMEG allows us to focus on the spatial and temporal aspects of word processing and to estimate the spatiotemporal distribution of specific neural stages of single-word (sign) comprehension. Using aMEG, we have previously shown that, when learned from birth, sign languages are processed in a left fronto-temporal brain network (Leonard et al. 2012), similar to the network used by hearing subjects to understand speech, concordant with other neuroimaging studies (Petitto et al. 2000; Sakai et al. 2005; MacSweeney et al. 2006; MacSweeney, Capek, et al. 2008; Mayberry et al. 2011).

Anatomically Constrained MEG Analysis

The data were analyzed using a multimodal imaging approach that constrains the MEG activity to the cortical surface as determined by high-resolution structural MRI (Dale et al. 2000). This noise-normalized linear inverse technique has been used extensively across a variety of paradigms, particularly language tasks that benefit from a distributed source analysis (Marinkovic et al. 2003; Leonard et al. 2010), and has been validated by direct intracranial recordings (Halgren et al. 1994; McDonald et al. 2010).

The cortical surface was obtained with a T1-weighted structural MRI and was reconstructed using FreeSurfer (http://surfer.nmr.mgh.harvard.edu/). A boundary element method forward solution was derived from the inner skull boundary (Oostendorp and Van Oosterom 1992), and the cortical surface was downsampled to approximately 2500 dipole locations per hemisphere (Dale et al. 1999; Fischl et al. 1999). The orientation-unconstrained MEG activity of each dipole was estimated every 4 ms, and the noise sensitivity at each location was estimated from the average prestimulus baseline from −190 to −20 ms. aMEG was performed on the waveforms produced by subtracting congruent from incongruent trials.
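The last two steps of this paragraph (forming the incongruent minus congruent waveform and scaling it by prestimulus noise) can be illustrated on a single source timecourse. The sketch below is a deliberately simplified stand-in, not the noise-normalized linear inverse of Dale et al. (2000), which works from the sensor noise covariance. The function names are hypothetical, and the use of a baseline mean and sample standard deviation is an assumption made for illustration.

```python
from statistics import mean, stdev

def subtraction_waveform(incongruent, congruent):
    """Pointwise incongruent minus congruent difference for one source."""
    if len(incongruent) != len(congruent):
        raise ValueError("condition averages must have the same length")
    return [i - c for i, c in zip(incongruent, congruent)]

def baseline_normalize(waveform, times_ms, baseline_ms=(-190, -20)):
    """Express a waveform in units of its prestimulus variability.

    Assumption: noise is summarized by the sample standard deviation of
    the baseline window (-190 to -20 ms in the text), inclusive at both ends.
    """
    lo, hi = baseline_ms
    base = [v for v, t in zip(waveform, times_ms) if lo <= t <= hi]
    if len(base) < 2:
        raise ValueError("need at least two baseline samples")
    mu, sigma = mean(base), stdev(base)
    if sigma == 0:
        raise ValueError("baseline has zero variance")
    return [(v - mu) / sigma for v in waveform]
```

After this scaling, the baseline window has zero mean and unit standard deviation, so poststimulus values read as multiples of the prestimulus noise level.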

The data were inspected for bad channels (channels with excessive noise, no signal, or unexplained artifacts), which were excluded from further analyses. Additionally, trials with large (>3000 fT/cm for gradiometers) transients were rejected. Blink artifacts were removed using independent components analysis (Delorme and Makeig 2004) by pairing each MEG channel with the electrooculogram channel, and rejecting the independent component that contained the blink. For the cases, fewer than 9% of trials were rejected due to either artifacts or cross-condition balancing. For native signers, fewer than 3% of trials were rejected; for L2 signers, fewer than 2% were rejected.
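The transient rejection described here, together with the cross-condition balancing described under Stimuli and Task, can be sketched as follows. This is an illustration under stated assumptions rather than the pipeline actually used: the data layout (one trial per stimulus per condition, stored as per-channel sample lists in fT/cm) and the function names are hypothetical, and a "transient" is taken to mean any absolute gradiometer value above the threshold.

```python
THRESHOLD_FT_PER_CM = 3000.0  # gradiometer rejection threshold from the text

def has_large_transient(trial, threshold=THRESHOLD_FT_PER_CM):
    """trial: list of channels, each a list of samples in fT/cm (assumed layout)."""
    return any(abs(sample) > threshold for channel in trial for sample in channel)

def balanced_clean_trials(congruent, incongruent, threshold=THRESHOLD_FT_PER_CM):
    """Keep a stimulus only if its trial is clean in BOTH conditions.

    congruent, incongruent: dicts mapping a stimulus id to its trial.
    Dropping the partner of every rejected trial keeps the sensory
    content of the two condition averages identical.
    """
    kept = [
        stim for stim in congruent
        if stim in incongruent
        and not has_large_transient(congruent[stim], threshold)
        and not has_large_transient(incongruent[stim], threshold)
    ]
    return ({s: congruent[s] for s in kept}, {s: incongruent[s] for s in kept})
```

Because each rejection removes a pair of trials, the rejection percentages reported for each group include trials that were themselves artifact-free but whose partner was not.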

Individual subject aMEG movies were constructed from the averaged data in the trial epoch for each condition using only data from the gradiometers; these data were combined across subjects by taking the mean activity at each vertex on the cortical surface and by plotting it on the FreeSurfer fsaverage brain (version 450) at each latency. Vertices were matched across participants by morphing the reconstructed cortical surfaces into a common sphere, optimally matching gyral–sulcal patterns and minimizing shear (Sereno and Dale 1996; Fischl et al. 1999). All statistical comparisons were made on region of interest (ROI) timecourses, which were selected based on information from the average incongruent–congruent subtraction across all subjects.

Results

Behavioral Results

Both the native and L2 signer control groups performed the task with high accuracy and fast reaction times (94%, 619 ms, and 89%, 719 ms, respectively, from the onset of the signed stimulus; see Table 1). Shawna and Carlos performed within one standard deviation of the L2 group (84%, 811 ms, and 85%, 733 ms, respectively). The neural results were unchanged when only correctly answered trials were included in the MEG analyses.

Table 1.

Participant background information and task performance: mean (SD)

| Participant(s) | Gender | Age | Age of language onset | Age of ASL acquisition | Accuracy (proportion correct) | RT (ms) |
|---|---|---|---|---|---|---|
| Native signers | 6M, 6F | 30 (6.4) | Birth | Birth | 0.94 (0.04) | 619.1 (97.5) |
| L2 learners | 1M, 10F | 22;5 (3.8) | Birth | 20 (3.9) | 0.89 (0.05) | 719.5 (92.7) |
| Shawna | F | 16;9 | 14;7 | 14;7 | 0.84 | 811.4 |
| Carlos | M | 16;10 | 13;8 | 13;8 | 0.85 | 733.1 |

RT, reaction time.

Anatomically Constrained MEG Results

We examined aMEG responses to ASL signs at the group level (2 control groups) and at individual levels (2 cases and 2 representative control participants) from 300 to 350 ms after sign onset, a time window during which lexico-semantic encoding is known to occur in spoken and sign languages (Kutas and Hillyard 1980; Kutas and Federmeier 2000, 2011; Marinkovic et al. 2003; Leonard et al. 2012). The N400 is a broad stimulus-related brain activity in the 200- to 600-ms poststimulus time window (Kutas and Federmeier 2000, 2011). In our previous studies on lexico-semantic processing using spoken, written, and sign language stimuli, we have observed that the onset of this effect is approximately 220 ms poststimulus, and the peak activity occurs slightly before 400 ms poststimulus. The 300- to 350-ms poststimulus time window was selected because we have previously observed that the semantic effect in picture-priming paradigms with spoken and signed stimuli is strongest at this time (see Leonard et al. 2012; Travis et al. 2012). Similar results were obtained using a broader time window (Supplementary Fig. S1).
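Summarizing a timecourse over the 300- to 350-ms window can be sketched as a simple mean over the samples that fall inside it. The function name is hypothetical, and treating both window edges as inclusive is an assumption; the 4-ms sample spacing in the usage example follows the estimation step given in the Methods.

```python
def window_mean(timecourse, times_ms, start_ms=300, end_ms=350):
    """Mean of the samples whose latency lies in [start_ms, end_ms]."""
    values = [v for v, t in zip(timecourse, times_ms) if start_ms <= t <= end_ms]
    if not values:
        raise ValueError("no samples fall inside the requested window")
    return sum(values) / len(values)

# Usage: latencies every 4 ms from -200 to 600 ms.
times = list(range(-200, 604, 4))
```

With 4-ms spacing on this grid, the window contains 13 samples (300, 304, ..., 348 ms), so the summary value is an average over a small number of source estimates.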

Given their sparse language exposure throughout childhood, we hypothesized that Shawna's and Carlos' neural activation patterns would diverge significantly from those of both control groups. Specifically, we expected that ASL processing in Shawna and Carlos would occur in more posterior and right-hemisphere areas, based on previous neuroimaging studies of late L1 acquisition of sign language (Mayberry et al. 2011) and of L2 acquisition of spoken languages (Abutalebi 2008; Leonard et al. 2010, 2011). We further expected that neural activations in the classical left-hemisphere language network would be weaker in both cases than in both control groups, based on previous research (Mayberry et al. 2011).

To directly compare the strength of semantically modulated neural activity in Shawna and Carlos with that of the control groups, we first considered the neural activation patterns in 9 bilateral ROIs. ROIs were selected by considering the aMEG movies of grand-average activity across the whole brain of all 25 subjects (all 12 native signers, all 11 L2 signers, and the 2 cases). These movies are a measure of signal-to-noise ratio (SNR), being the F-ratio of explained variance over unexplained variance. The strongest clusters of neural activity across all the subjects and conditions were selected for statistical comparisons, thereby producing empirically derived ROIs that were independent of our predictions.

Table 2 presents normalized aMEG values for the subtraction of incongruent–congruent trials for both control groups and for Carlos and Shawna. We defined as “significantly different” those ROIs in which Shawna's or Carlos' aMEG values were >2.5 standard deviations away from the mean value of each control group. We applied a strict significance threshold (a z-score of 2.5 corresponds to a two-tailed P-value of 0.0124), because we conducted comparisons in multiple ROIs. As summarized in Table 2, both cases exhibited greater activity than the control groups in several right-hemisphere ROIs. Specifically, Carlos showed greater activity than native signers in right lateral occipito-temporal (LOT) and posterior superior temporal sulci (pSTS), and greater activity than the L2 signers in the right intraparietal sulcus (IPS). Similarly, Shawna showed greater activity than the native signers in right IFG, IPS, and pSTS, and greater activity than the L2 signers in the right IPS.

Table 2.

ROI analyses

Control groups, mean (SD)

| ROI | Native LH | Native RH | L2 LH | L2 RH |
|---|---|---|---|---|
| AI | 0.39 (0.14) | 0.40 (0.18) | 0.33 (0.12) | 0.36 (0.13) |
| IFG | 0.29 (0.12) | 0.30 (0.12) | 0.26 (0.12) | 0.28 (0.14) |
| IPS | 0.37 (0.10) | 0.32 (0.13) | 0.36 (0.13) | 0.28 (0.08) |
| IT | 0.43 (0.12) | 0.35 (0.11) | 0.36 (0.13) | 0.34 (0.18) |
| LOT | 0.29 (0.12) | 0.29 (0.10) | 0.30 (0.16) | 0.32 (0.15) |
| PT | 0.54 (0.14) | 0.45 (0.17) | 0.45 (0.16) | 0.43 (0.18) |
| STS | 0.43 (0.08) | 0.41 (0.18) | 0.32 (0.09) | 0.36 (0.16) |
| TP | 0.45 (0.16) | 0.46 (0.15) | 0.34 (0.14) | 0.38 (0.16) |
| pSTS | 0.33 (0.09) | 0.27 (0.07) | 0.34 (0.16) | 0.32 (0.15) |

Cases

| ROI | Carlos LH | Carlos RH | Shawna LH | Shawna RH |
|---|---|---|---|---|
| AI | 0.27 | 0.43 | 0.38 | 0.43 |
| IFG | 0.26 | 0.31 | 0.34 | 0.60 (a) |
| IPS | 0.31 | 0.54 (b) | 0.41 | 0.66 (a, b) |
| IT | 0.50 | 0.43 | 0.41 | 0.27 |
| LOT | 0.43 | 0.57 (a) | 0.17 | 0.29 |
| PT | 0.33 | 0.57 | 0.52 | 0.33 |
| STS | 0.26 | 0.40 | 0.47 | 0.23 |
| TP | 0.42 | 0.51 | 0.27 | 0.20 |
| pSTS | 0.26 | 0.47 (a) | 0.35 | 0.54 (a) |

LH, left hemisphere; RH, right hemisphere.

(a) 2.5 standard deviations from native mean.

(b) 2.5 standard deviations from L2 mean.
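The ROI criterion can be checked by hand from the values in Table 2. The following is a minimal sketch of that arithmetic, not the authors' analysis code. It uses only the right-hemisphere columns, where all flagged ROIs fall. Because the published values are rounded to 2 decimals, the sketch rounds each z-score to 2 decimals and flags values at or above 2.5; this tie-handling rule is an assumption made here. The names `z_score`, `flagged`, and `two_sided_p` are illustrative.

```python
from statistics import NormalDist

# Right-hemisphere (mean, SD) per control group, copied from Table 2.
NATIVE_RH = {
    "AI": (0.40, 0.18), "IFG": (0.30, 0.12), "IPS": (0.32, 0.13),
    "IT": (0.35, 0.11), "LOT": (0.29, 0.10), "PT": (0.45, 0.17),
    "STS": (0.41, 0.18), "TP": (0.46, 0.15), "pSTS": (0.27, 0.07),
}
L2_RH = {
    "AI": (0.36, 0.13), "IFG": (0.28, 0.14), "IPS": (0.28, 0.08),
    "IT": (0.34, 0.18), "LOT": (0.32, 0.15), "PT": (0.43, 0.18),
    "STS": (0.36, 0.16), "TP": (0.38, 0.16), "pSTS": (0.32, 0.15),
}
# Right-hemisphere values for the 2 cases, copied from Table 2.
CASES_RH = {
    "Carlos": {"AI": 0.43, "IFG": 0.31, "IPS": 0.54, "IT": 0.43, "LOT": 0.57,
               "PT": 0.57, "STS": 0.40, "TP": 0.51, "pSTS": 0.47},
    "Shawna": {"AI": 0.43, "IFG": 0.60, "IPS": 0.66, "IT": 0.27, "LOT": 0.29,
               "PT": 0.33, "STS": 0.23, "TP": 0.20, "pSTS": 0.54},
}
THRESHOLD = 2.5


def z_score(value, mean, sd):
    """Distance of a case value from a group mean, in group SD units."""
    return round((value - mean) / sd, 2)


def flagged(case_values, group, threshold=THRESHOLD):
    """ROIs where the case lies at least `threshold` SDs from the group mean."""
    return sorted(
        roi for roi, value in case_values.items()
        if abs(z_score(value, *group[roi])) >= threshold
    )


def two_sided_p(z):
    """Two-tailed P-value for a z-score under a standard normal distribution."""
    return 2 * (1 - NormalDist().cdf(abs(z)))


for name, values in CASES_RH.items():
    print(name, "vs native:", flagged(values, NATIVE_RH))
    print(name, "vs L2:", flagged(values, L2_RH))
print("P for z = 2.5:", round(two_sided_p(THRESHOLD), 4))
```

With these inputs the flagged ROIs match the (a) and (b) markers in Table 2, and the two-tailed P-value for z = 2.5 rounds to 0.0124.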

These results partly confirmed our hypotheses. As expected, Shawna and Carlos exhibited stronger activity than the controls in a number of right-hemisphere ROIs. Also in agreement with our hypotheses, 2 of the significant ROIs were located in posterior parts of the brain (pSTS and LOT). The finding that both Shawna and Carlos exhibited stronger activity than the L2 signers in the right IPS, and that Shawna's right IPS activity was also stronger than that of the native group, was unexpected. The hypothesis that Shawna and Carlos would exhibit weaker activity than the control groups in the classical left-hemisphere language regions (e.g. IFG or STS) was not confirmed.

The next step of our analysis was to look at the activation patterns across the entire brain, including the areas outside the ROIs. Because the ROIs were derived based on the grand average of all participants (the cases and both control groups), it is possible that some brain areas that were strongly activated in Shawna and Carlos were not selected as ROIs. An analysis of activations across the entire brain surface allowed us to focus on Shawna's and Carlos' individual neural activation patterns. We first qualitatively compared the aMEGs associated with the incongruent versus congruent contrast of the cases with those of the control groups and 2 individual control participants. We then examined whether differences between congruent and incongruent conditions were due to larger signals in one or the other direction by examining the MEG sensor level data directly. Planar gradiometers were examined, which, unlike other MEG sensors, are most sensitive to the immediately underlying cortex.

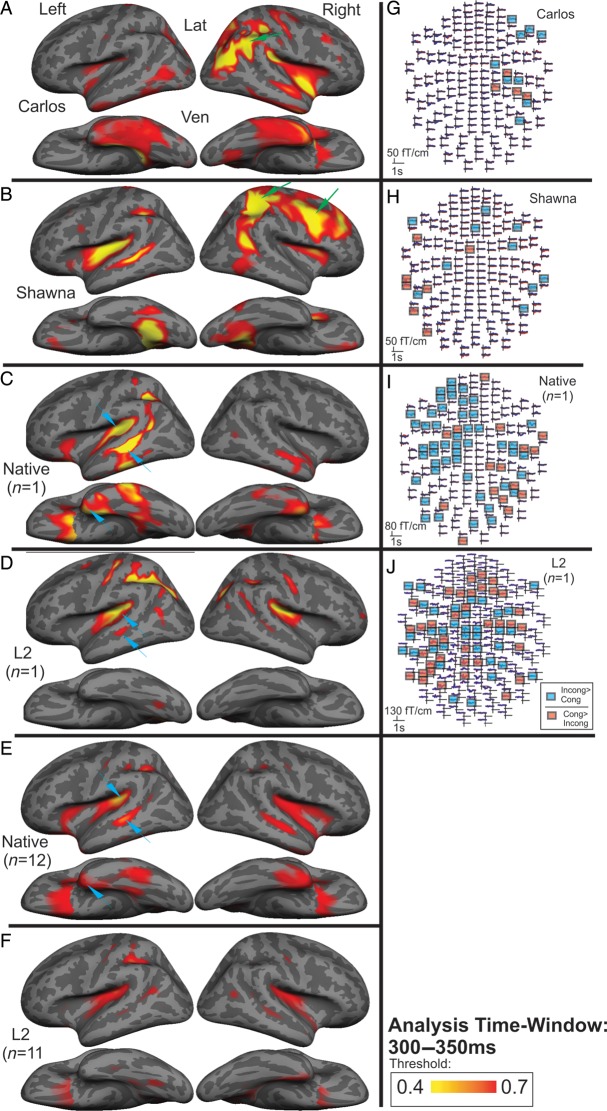

The aMEG maps in Figure 2 represent the strength of the congruent–incongruent activities across the whole brain for Carlos (panel A) and Shawna (panel B), 2 representative control participants (panel C: 17-year-old native signer and panel D: 19-year-old L2 signer), and both control groups (panel E, native signers and panel F, L2 signers). The 2 control participants (panels C,D) were selected for analyses at the individual level based on being closest in age to Carlos and Shawna. Recall that the aMEG maps are essentially a measure of SNR. The areas shown in yellow and red represent those brain regions where the SNR is larger than the baseline. The maps are normalized within each control group or each individual, allowing for a qualitative comparison of overall congruent–incongruent activity patterns.

Figure 2.

(A–F) Contrasting semantic activation patterns to signs in cases who first experienced language at approximately 14 years old, compared with native and L2 signers. During semantic processing (300–350 ms), (A) Carlos and (B) Shawna show the strongest effect in the right occipito-parietal cortex (blue arrows). Shawna also shows left superior temporal and right frontal activity. (C) A representative native signer (17-year-old female, accuracy: 97%, reaction time (RT): 573 ms) and (D) a representative L2 signer (19-year-old female, accuracy: 94%, RT: 584.8 ms) show semantic effects in the left fronto-temporal language areas, as does the native signer group (E). The L2 group (F) also shows similar patterns of activity, but with overall smaller subtraction effects. Maps are normalized to strongest activity for each participant or group. (G–J) Individual MEG sensor data. The cases lack a strong incongruent > congruent effect in the left fronto-temporal regions. Blue channels: significant incongruent > congruent activity between 300 and 350 ms; red channels: significant congruent > incongruent effects at the same time. (G) Carlos has the strongest incongruent > congruent effects in right-hemisphere channels (blue channels); (H) Shawna also shows the most incongruent > congruent effects in right occipito-temporo-parietal channels (blue channels). In the cases, the semantic effect in the left (Shawna) and right (Carlos) temporal cortices seen in panels A and B is mostly due to congruent > incongruent activity (red channels, panels G and H). (I) A native signer shows strong incongruent > congruent effects in left fronto-temporal channels (blue channels). (J) An L2 signer also shows predominantly left-lateralized semantic effects (blue channels). Statistical significance was determined by a random-effects resampling procedure (Maris and Oostenveld 2007) and reflects time periods where incongruent and congruent conditions diverge at P < 0.01. The 2 control participants are the same individuals as those whose aMEGs are displayed in panels C and D.

We previously showed that, consistent with other neuroimaging studies of sign language, signs elicited activity in native signers in a left-lateralized fronto-temporal network including the temporal pole (TP), planum temporale (PT), and STS, and to a lesser extent in the homologous right-hemisphere areas (Fig. 2E, data from Leonard et al. 2012). Consistent with previous studies on L2 acquisition (Abutalebi 2008), this canonical language network was also activated in L2 signers (Fig. 2F; Leonard et al., unpublished data).

The same left-lateralized fronto-temporal activations are observed when we look at the aMEG maps of the 2 individual control participants (Fig. 2C,D). Note that the normalized aMEG values of the 2 control participants were also compared with the average aMEG values of their respective groups in each of the 18 ROIs, and no significant differences were found (i.e. there were no ROIs where the individual control subjects were >2.5 standard deviations away from the respective group mean). Taken together, these results corroborate previous research showing that the left fronto-temporal areas process word meaning independently of modality (spoken, written, or signed) (Marinkovic et al. 2003) and hearing status (Leonard et al. 2012). Importantly, in the participants who acquired language from birth (native and L2 signers), we were able to observe these canonical activations at the individual and group levels.

Consistent with the fact that they were developing language and were able to understand the stimulus signs (Ferjan Ramirez et al. 2013), Carlos and Shawna exhibited the semantic modulation effect (the N400 effect). MEG channels with significant semantic effects for the 2 cases and the 2 representative control participants are highlighted in red and blue in Figure 2, panels G (Carlos), H (Shawna), I (native signer), and J (L2 signer). Using a random-effects resampling procedure (Maris and Oostenveld 2007), we determined in which MEG channels the incongruent > congruent and the congruent > incongruent effects were significant (at P < 0.01). Channels with significant congruent > incongruent activity are shown in red, and channels with significant incongruent > congruent activity are shown in blue.
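The logic of a resampling test on condition differences can be illustrated with a simplified label-shuffling permutation test. The following is a minimal sketch under stated assumptions, not the authors' analysis code and not the full procedure of Maris and Oostenveld (2007): it treats a single channel, assumes one amplitude per trial averaged over the 300 to 350 ms window, and uses invented numbers. The function name `permutation_p_value` and the trial values are hypothetical.

```python
import random
from statistics import mean


def permutation_p_value(incongruent, congruent, n_permutations=2000, seed=0):
    """Two-sided label-shuffling test on the difference of condition means.

    Returns the observed difference (incongruent minus congruent) and the
    proportion of shuffles whose absolute difference is at least as large.
    """
    rng = random.Random(seed)
    observed = mean(incongruent) - mean(congruent)
    pooled = list(incongruent) + list(congruent)
    n_inc = len(incongruent)
    extreme = 0
    for _ in range(n_permutations):
        # Shuffling the pooled trials reassigns condition labels at random.
        rng.shuffle(pooled)
        diff = mean(pooled[:n_inc]) - mean(pooled[n_inc:])
        if abs(diff) >= abs(observed):
            extreme += 1
    # Add-one correction keeps the estimated P-value above zero.
    return observed, (extreme + 1) / (n_permutations + 1)


# Hypothetical single-channel amplitudes (arbitrary units), one per trial.
incongruent_trials = [5.0 + 0.1 * i for i in range(20)]
congruent_trials = [1.0 + 0.1 * i for i in range(20)]

difference, p_value = permutation_p_value(incongruent_trials, congruent_trials)
direction = "incongruent > congruent" if difference > 0 else "congruent > incongruent"
print(direction, "significant at P < 0.01:", p_value < 0.01)
```

In this sketch the sign of the observed difference plays the role of the blue versus red coding in Figure 2, and the P < 0.01 cutoff mirrors the threshold stated in the text.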

By simultaneously inspecting the MEG sensor data (Fig. 2, panels G,H) and aMEGs (Fig. 2, panels A,B), it is clear that the localization patterns of semantically modulated activity in Shawna and Carlos were quite different from those observed in the control participants. While both cases exhibited semantic effects in parts of the classical left-hemisphere language network and the homologous areas in the right hemisphere (e.g. left PT/STS for Shawna, right PT/STS for Carlos), examination of the MEG sensor data revealed that this was predominantly due to congruent > incongruent activity (channels highlighted in red). That is, although the aMEG data suggest that the cases' left-hemisphere activations were in similar locations to those of the control participants, the nature of these activations was quite different because the majority of the left-hemisphere effects shown in the cases were in the opposite direction to those shown in the control participants (Fig. 2, panels G–J). In Shawna and Carlos, the signature of word comprehension (incongruent > congruent responses, channels highlighted in blue) primarily localized to the right superior parietal, anterior occipital, and dorsolateral prefrontal areas that were not activated in the controls.

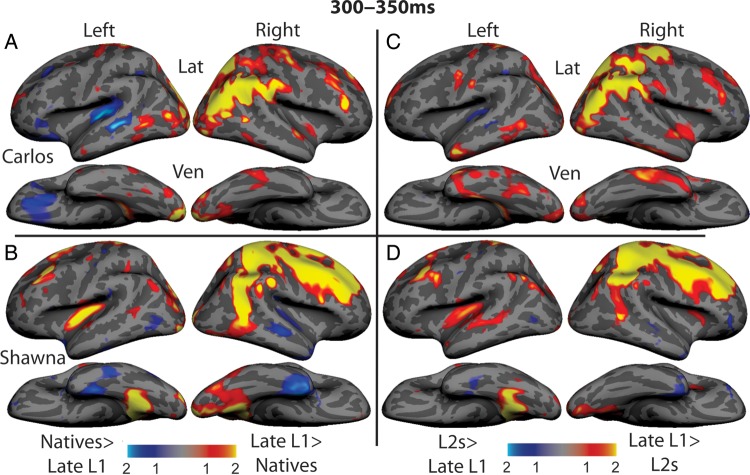

For the final step of our analyses, we mapped the z-score of the aMEG for each case compared with each of the control groups. Since the aMEG is calculated from the difference in activity evoked between congruent and incongruent signs and is always positive, large z-scores reflect areas where the magnitude of the responses may be unusual in the cases; their polarity (congruent larger vs. incongruent larger) is uncertain, but can be inferred from the sensor data noted above. Figure 3 shows that Carlos' neural activity for sign-word meaning was greater than that of the native signers (panel A) and that of the L2 learners (panel C) predominantly in the right parieto-occipital cortex. Native signers exhibited greater activity than Carlos in the left PT and STS. Shawna's neural activity for sign-word meaning was greater than that of the native (panel B) and L2 signers (panel D) in the right parietal and frontal cortices and in the left PT. Both control groups exhibited stronger activity than Shawna in portions of the right and left temporal lobes.

Figure 3.

Z-score maps showing brain areas where semantic modulation is greater in the 2 cases compared with the control groups (yellow and red) and areas where semantic modulation is greater in the control groups compared with the 2 cases (blue): (A) Carlos versus native signers, (B) Shawna versus native signers, (C) Carlos versus L2 signers, and (D) Shawna versus L2 signers. The cases exhibit stronger activity than the control participants predominantly in the right-hemisphere parietal cortex, with additional areas in the right occipital cortex (Carlos) and right frontal cortex (Shawna).

Discussion

The present study is the first to consider the neural underpinnings of language in adolescents learning a first language after a childhood of sparse language input and, as such, provides novel insights into the nature of a critical period for language. Previous research suggests that childhood environmental, social, and linguistic deprivation severely limits subsequent language development (Koluchova 1972; Curtiss 1976; Windsor et al. 2011). The cases studied here provide unique insights into the role of language experience in the organization of neural processing because they were linguistically, but not physically or emotionally, deprived.

The cases are roughly analogous to uneducated homesigners from other parts of the world previously described in the literature (Senghas and Coppola 2001; Morford 2003; Coppola and Newport 2005). Prior case studies with such individuals show that when sign language input becomes available, they quickly replace their idiosyncratic gestures with signs (Emmorey et al. 1994; Morford 2003). This was confirmed in our prior analyses of the language development of Shawna and Carlos; after 1–2 years of language acquisition, they had a limited, noun-biased ASL vocabulary, and were able to produce short, simple utterances, much like young children who acquire language from birth (Ferjan Ramirez et al. 2013). The question we asked here was how (where and when) the cases process their newly acquired words in the brain. To answer this question, we compared their neural processing with that of 2 control groups: one deaf group who acquired ASL from birth, and one hearing group who acquired English from birth and had been learning ASL for a similar length of time as the cases.

Consistent with previous research (Hickok et al. 1996; Petitto et al. 2000; Sakai et al. 2005; Abutalebi 2008; MacSweeney, Capek, et al. 2008; Mayberry et al. 2011; Leonard et al. 2012), the present aMEG results for the native and L2 signers show that when either spoken or sign languages are acquired from birth, word meaning is processed primarily in the classical left-hemisphere fronto-temporal language network. This network is well established to be the main site of neural generators of the N400 response across modalities (Halgren et al. 1994; Marinkovic et al. 2003) and is involved in processing word meaning in L2 learners (Leonard et al. 2010, 2011) as well as in infants (Dehaene-Lambertz et al. 2002; Imada et al. 2006; Travis et al. 2011).

In contrast, the results for the cases indicate that a paucity of language experience throughout childhood significantly disrupts the organization of this canonical language network. The cases were able to learn and process word meaning despite their atypical childhood experience, as demonstrated by both their accurate behavioral performance and their strongly modulated neural processing of words due to semantic priming. However, the cortical localization of this activity and its polarity diverged significantly from the pattern of the deaf and hearing controls (native and L2 signers). Both cases showed the classical incongruent > congruent responses (i.e. semantic priming decreasing the neural response) in some brain areas, but these responses localized mainly to the right-hemisphere superior parietal, anterior occipital, and dorsolateral prefrontal cortices, areas that were not activated when any of the control participants (deaf or hearing, native or L2 learners) processed signs. These striking results demonstrate that the timing of functional language experience during human development has marked effects on the organization of the neural network underlying word comprehension.

Areas outside the classical left-hemisphere language network have previously been linked to the processing of later-acquired or less-proficient languages. Relatively strong right-hemisphere activations have previously been reported in less-proficient L2 learners and in L2 learners who began their L2 learning at a late age (Dehaene et al. 1997; Perani et al. 1998). In addition, 2 MEG studies reported greater right-hemisphere activations in ex-illiterates, compared with control subjects, when reading words (Castro-Caldas et al. 2009) or listening to words (Nunes et al. 2009). This series of findings indicates greater right-hemisphere involvement when a language skill is learned after childhood. Modulations within nonclassical brain regions have also been previously reported during language tasks performed by infants (Travis et al. 2011). From low-level phonetic processing (Kuhl 2010) to syntax (Mayberry et al. 2011), there is a general pattern of broader, more extensive neural activity at early stages of linguistic and biological development. Anterior occipital regions have previously been described as markers of underdeveloped language in normally developing populations (Mayberry et al. 2011). For example, when performing language tasks, toddlers show greater hemodynamic activation in occipital areas when compared with older children (Redcay et al. 2008), and children show greater hemodynamic activation in occipital regions than adults (Brown et al. 2005).

Previous findings from a range of language learning situations thus predict that a highly delayed onset of language acquisition and lower proficiency would result in more activity in right frontal and occipito-temporal areas. This was apparent to some extent for the 2 cases. However, unlike the cases studied here, normally developing infants and children, L2 learners, and ex-illiterates all show activation in the classical neural language network, reflecting the common timing of their initial language experience, namely early life. Previous studies do not illuminate how the developing brain copes with a paucity of language experience over childhood in the absence of emotional and physical deprivation. Our results show that the patterns of neural organization for language arising from this unique developmental situation are unlike those associated with language learning in infants (Dehaene-Lambertz et al. 2002; Imada et al. 2006; Travis et al. 2011), children (Brown et al. 2005; Redcay et al. 2008), L2 learners (Leonard et al. 2010, 2011), or ex-illiterates (Castro-Caldas et al. 2009; Nunes et al. 2009).

Shawna and Carlos showed responses in posterior visual areas similar to those of deaf signers whose L1 acquisition begins in late childhood (Mayberry et al. 2011). The previously studied late L1 learners had a mean length of ASL experience of 19 years, in contrast to the present cases, who had only 2–3 years of ASL experience. The cases uniquely showed increased activity in right occipito-parietal and frontal regions, which could be due either to the fact that they were comparatively more linguistically deprived throughout childhood than the previously studied late L1 learners, or to the fact that they had comparatively less language experience at the time of neuroimaging. Longitudinal studies are required to adjudicate between these alternative possibilities.

The distinctive superior parietal activity we observed in both cases suggests that the adolescent brain meets the challenge of learning language for the first time in a different fashion from that of either infant L1 learners or older L2 learners. It is generally accepted that planning, generating, and analyzing skilled manual movements engage the parietal cortex (Buccino et al. 2001). We might thus hypothesize that the activation patterns observed in Shawna and Carlos arise from a childhood of watching the gestures hearing people commonly produce. However, hemodynamic studies of native speakers show that semantic aspects of co-speech gestures are processed in brain areas typically associated with spoken language comprehension, including the left IFG (Skipper et al. 2007; Willems et al. 2007) and superior temporal sulcus (Holle et al. 2008), and not in the right superior parietal cortex.

The superior parietal areas that were activated when Shawna and Carlos identified the meanings of ASL signs are part of the so-called dorsal stream. A well-established neural framework indicates that human action recognition begins in the visual cortex and then continues through either the dorsal or the ventral stream depending on how meaningful the action is (Goodale and Milner 1992). Meaningful actions, such as opening a bottle or drawing a line, are processed primarily by the ventral stream (for review see Decety and Grezes 1999), consistent with the theory that the ventral stream accesses the semantic knowledge associated with visual patterns. In contrast, meaningless actions primarily engage the dorsal pathway, which is theorized to be involved in the analysis of the visual attributes of unfamiliar movements and the generation of visual-to-motor transformations. Consistent with the dual stream model, hearing adults have been found to primarily engage the dorsal stream when watching ASL signs, which were meaningless visual actions for them (Decety et al. 1997; Grezes et al. 1998). The dual stream model has also been applied to language processing. Listening to meaningful spoken language primarily engages the ventral stream, but the dorsal stream is recruited when articulatory re-mapping is used to aid language performance (Hickok and Poeppel 2004).

The strong parietal activations for sign processing that we observe in both cases suggest that their lexical processing involves articulatory re-mapping and visual-to-motor transformations of signs in order to access sign meaning. Crucially, however, neither the deaf native nor the hearing L2 control groups showed such dorsal parietal activations. Previous research has found that late L1 learners have unique phonological recognition patterns for signs in comparison with deaf and hearing adults who had infant language exposure (Morford and Carlson 2011; Hall et al. 2012), and that these effects extend to sentence processing (Mayberry et al. 2002; Mayberry et al. 2011). The present results suggest that when the adolescent brain acquires language for the first time, it uses different strategies than those employed by either the infant language learner or the older L2 learner.

Infants are exquisitely sensitive to the dynamic patterns of the ambient language in the environment and learn the basic phonetic structure of words, consonant (Werker and Tees 1984), and vowel (Kuhl et al. 1992) features, before the end of the first year of life. Note that this early passive learning precedes the ability to produce words. This early tuning to the phonetic structure of words may both enable and be enabled by the neural architecture and connections of the ventral pathway and the classic language system for language processing (Kuhl 2004). The cases studied here, and late L1 learners we previously studied, experienced sustained dynamic language patterns only well after infancy when their expressive-motor and receptive-perceptual systems had already been developed without the synchronizing constraints of word structure where phonetic form and meaning are inextricably linked. Under such learning conditions, an alternative strategy, such as visuo-motor transformations and remapping of visual–motor forms, may be necessary to recognize word meaning, a mechanism suggested by the activation of the dorsal stream in the cases.

In addition to learning the phonetic structure of words for the first time, the cases must also map their prior world knowledge onto the specific semantic structure and categories of their new L1, learning that native and L2 learners accomplished in early childhood. Although the cases, especially Shawna, did show some neural responses in the classical language network, they were qualitatively different from those of the native signers in that they were increased rather than decreased by semantic priming. Such responses were also observed in the age-matched L2 control signer (Fig. 2, panel J). In the cases, the congruent > incongruent responses were mainly in the anteroventral temporal lobe (AVTL), which in typically developing individuals contains neurons that respond to the semantic categories of words across modalities (Chan et al. 2011) and is hypothesized to function as a “semantic hub” (Patterson et al. 2007). We might predict that as time passes and the cases create a stronger semantic network with the requisite phonetic representations, the more typical incongruent > congruent modulation may appear in their AVTL. In the same vein, the congruent > incongruent modulation may be a signature of new language learning because it has also been reported in 12 month olds, but not in 14 or 19 month olds, undergoing a picture-priming ERP study (Friedrich and Friederici 2004, 2005). Interestingly, similar neural responses have been observed in response to nonwords (Holcomb and Neville 1990) and to “grooming” gestures inserted in ASL sentences (Grosvald et al. 2012). Both nonwords and grooming gestures lack phonetic structure (and lexical meaning).

Finally, we observed that Carlos' and Shawna's neural activation patterns were not identical to one another. For example, Shawna showed the semantic modulation effect in the right frontal cortex, which was absent in Carlos. These differences should not be surprising given their backgrounds. Language in the ambient environment constrains learning: Infants induce the phonetic and semantic structure of words within a similar developmental timeframe across languages and cultures (Ambridge and Lieven 2011). Without external language to guide the developing brain, the result may be more neural variation. Future studies are necessary to discover the extent of variation in neural activation patterns when the adolescent brain first begins to learn language and whether it reduces as more language is acquired.

Our results provide initial direct evidence that the timing of language experience during human development significantly affects the organization of neural language processing in later life. The cases reported here exhibited neural activity in brain areas that have previously been associated with learning language at a late age, in addition to unique activation patterns heretofore unobserved. Longitudinal studies are necessary to determine whether the neural patterns we find here will become more focal in the left anteroventral and superior temporal cortices as more language is learned, or whether they will remain right-lateralized with the strongest activity in areas not typically associated with lexico-semantic processing.

Supplementary Material

Supplementary material can be found at: http://www.cercor.oxfordjournals.org/.

Funding

The research reported in this publication was supported in part by NSF grant BCS-0924539, NIH grant T-32 DC00041, an innovative research award from the Kavli Institute for Mind and Brain, and NIH grant R01DC012797. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

Notes

We thank D. Hagler, A. Lieberman, A. Dale, K. Travis, T. Brown, M. Hall, and P. Lott for assistance. Conflict of Interest: None declared.

References

- Abutalebi J. Neural aspects of second language representation and language control. Acta Psychol. 2008;128:466–478. doi: 10.1016/j.actpsy.2008.03.014.

- Alvarez RP, Holcomb PJ, Grainger J. Accessing word meaning in two languages: an event-related brain potential study of beginning bilinguals. Brain Lang. 2003;87:290–304. doi: 10.1016/s0093-934x(03)00108-1.

- Ambridge B, Lieven EV. Child language acquisition: contrasting theoretical approaches. Malden, MA: Cambridge University Press; 2011.

- Anderson D, Reilly J. The MacArthur Communicative Development Inventory: normative data for American Sign Language. J Deaf Stud Deaf Educ. 2002;7:83–106. doi: 10.1093/deafed/7.2.83.

- Bates E, Bretherton I, Snyder L. From first words to grammar: individual differences and dissociable mechanisms. New York: Cambridge University Press; 1998.

- Bates E, D'Amico S, Jacobsen T, Szekely A, Andonova E, Devescovi A, Herron D, Lu CC, Pechman T, Pleh C, et al. Timed picture naming in seven languages. Psychon Bull Rev. 2003;10(2):344–380. doi: 10.3758/bf03196494.

- Bates E, Goodman JC. On the inseparability of grammar and the lexicon: evidence from acquisition, aphasia and real-time processing. Lang Cogn Proc. 1997;12:507–584.

- Bates E, Marchman V, Thal D, Fenson L, Dale P, Reznick JS, Reilly J, Hartung J. Developmental and stylistic variation in the composition of early vocabulary. J Child Lang. 1994;35:85–123. doi: 10.1017/s0305000900008680.

- Birdsong D. Ultimate attainment in second language acquisition. Language. 1992;64:706–755.

- Birdsong D, Molis M. On the evidence for maturational constraints in second language acquisition. J Mem Lang. 2001;44:235–249.

- Boudreault P, Mayberry RI. Grammatical processing in American Sign Language: age of first-language acquisition effects in relation to syntactic structure. Lang Cogn Proc. 2006;21:608–635.

- Brown TT, Lugar HM, Coalson RS, Miezin FM, Petersen SE, Schlaggar BL. Developmental changes in human cerebral functional organization for word generation. Cereb Cortex. 2005;15(3):275–290. doi: 10.1093/cercor/bhh129.

- Buccino G, Binkovski F, Fink GR, Fadiga L, Gallese V, Seitz RJ, Zilles K, Rizzolatti G, Freund HJ. Action observation activates premotor and parietal areas in a somatotopic manner: an fMRI study. Eur J Neurosci. 2001;13:400–404.

- Castro-Caldas A, Nunes MV, Maestu F, Ortiz T, Simoes R, Fernandes R, de la Guia E, Garcia E, Goncalves M. Learning orthography in adulthood: a magnetoencephalographic study. J Neuropsychol. 2009;3:17–30. doi: 10.1348/174866408X289953.

- Chan AM, Baker JM, Eskandar E, Schomer D, Ulbert I, Marinkovic K, Cash SS, Halgren E. First-pass selectivity for semantic categories in the human anteroventral temporal lobe. J Neurosci. 2011;31(49):18119–18129. doi: 10.1523/JNEUROSCI.3122-11.2011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Coppola M, Newport EL. Grammatical subject in home sign: abstract linguistic structure in adult primary gesture systems without linguistic input. Proc Natl Acad Sci USA. 2005;102(52):19249–19253. doi: 10.1073/pnas.0509306102. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Corina D, McBurney S, Dodrill C, Hinshaw K, Brinkley J, Ojemann G. Functional roles of Broca's area and SMG: evidence from cortical stimulation mapping in a deaf signer. NeuroImage. 1999;10:570–581. doi: 10.1006/nimg.1999.0499. [DOI] [PubMed] [Google Scholar]

- Curtiss S. Genie: a psycholinguistic study of a modern-day “wild child”. New York: Academic Press; 1976. [Google Scholar]

- Dale AM, Fischl BR, Sereno MI. Cortical surface-based analysis. I. Segmentation and surface reconstruction. NeuroImage. 1999;9:179–194. doi: 10.1006/nimg.1998.0395. [DOI] [PubMed] [Google Scholar]

- Dale AM, Liu AK, Fischl B, Buckner RL. Dynamic statistical parametric mapping: combining fMRI and MEG for high-resolution imaging of cortical activity. Neuron. 2000;26:55–67. doi: 10.1016/s0896-6273(00)81138-1. [DOI] [PubMed] [Google Scholar]

- Decety J, Grezes J. Neural mechanisms subserving the perception of human action. Trends Cogn Sci. 1999;3(5):172–178. doi: 10.1016/s1364-6613(99)01312-1. [DOI] [PubMed] [Google Scholar]

- Decety J, Grezes J, Perani D, Jeannerod M, Procyk E, Grassi F, Fazio F. Brain activity during observation of actions: influence of action content and subject's strategy. Brain. 1997;120:1763–1777. doi: 10.1093/brain/120.10.1763. [DOI] [PubMed] [Google Scholar]

- Dehaene S, Dupoux E, Mehler J, Cohen L, Paulesu E, Perani D, van de Moortele PF, Lehericy S, Le Bihan D. Anatomical variability in the cortical representation of first and second language. Neuroreport. 1997;8(17):3809–3815. doi: 10.1097/00001756-199712010-00030. [DOI] [PubMed] [Google Scholar]

- Dehaene-Lambertz G, Dehaene S, Hertz-Pannier L. Functional neuroimaging of speech perception in infants. Science. 2002;298:2013–2015. doi: 10.1126/science.1077066. [DOI] [PubMed] [Google Scholar]

- Delorme A, Makeig S. EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J Neurosci Methods. 2004;134:9–21. doi: 10.1016/j.jneumeth.2003.10.009. [DOI] [PubMed] [Google Scholar]

- Emmorey K, Grant R, Ewan B. A new case of linguistic isolation: preliminary report. Paper presented at the 19th annual Boston University Conference on Language Development; Boston (MA). 1994. [Google Scholar]

- Ferjan Ramirez N, Lieberman AM, Mayberry RI. The initial stages of first-language acquisition begun in adolescence: when late looks early. J Child Lang. 2013;40(2):391–414. doi: 10.1017/S0305000911000535. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fischl B, Sereno MI, Tootell RB, Dale AM. High-resolution intersubject averaging and a coordinate system for the cortical surface. Hum Brain Mapp. 1999;8(4):272–284. doi: 10.1002/(SICI)1097-0193(1999)8:4<272::AID-HBM10>3.0.CO;2-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Flege JE, Yeni-Komshian GH, Liu S. Age constraints on second-language acquisition. J Mem Lang. 1999;41:78–104. [Google Scholar]

- Friedrich M, Friederici AD. N400-like semantic congruity effects in 19-month-olds: processing known words in picture contexts. J Cogn Neurosci. 2004;16:1465–1477. doi: 10.1162/0898929042304705. [DOI] [PubMed] [Google Scholar]

- Friedrich M, Friederici AD. Phonotactic knowledge and lexical-semantic processing in one-year-olds: brain responses to words and nonsense words in picture contexts. J Cogn Neurosci. 2005;17(11):1785–1802. doi: 10.1162/089892905774589172. [DOI] [PubMed] [Google Scholar]

- Goldin-Meadow S. The resilience of language. New York: Psychology Press; 2003. [Google Scholar]

- Goodale MA, Milner AD. Separate visual pathways for perception and action. Trends Neurosci. 1992;15(1):20–25. doi: 10.1016/0166-2236(92)90344-8. [DOI] [PubMed] [Google Scholar]

- Grezes J, Costes N, Decety J. Top-down effect of strategy on the perception of human biological motion: a PET investigation. Cogn Neuropsychol. 1998;15:553–582. doi: 10.1080/026432998381023. [DOI] [PubMed] [Google Scholar]

- Grosvald M, Gutierrez E, Hafer S, Corina D. Dissociating linguistic and non-linguistic gesture processing: electrophysiological evidence from American Sign Language. Brain Lang. 2012;121(1):12–24. doi: 10.1016/j.bandl.2012.01.005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Halgren E, Baudena P, Heit G, Clarke JM, Marinkovic K. Spatio-temporal stages in face and word processing. 1. Depth-recorded potentials in the human occipital, temporal and parietal lobes. J Physiol Paris. 1994;88:1–50. doi: 10.1016/0928-4257(94)90092-2. [DOI] [PubMed] [Google Scholar]

- Halgren E, Dhond RP, Christenson N, Van Petten C, Marinkovic K, Lewine JD, Dale AM. N400-like magnetoencephalography responses modulated by semantic context, word frequency, and lexical class in sentences. NeuroImage. 2002;17:1101–1116. doi: 10.1006/nimg.2002.1268. [DOI] [PubMed] [Google Scholar]

- Hall ML, Ferreira VS, Mayberry RI. Phonological similarity judgments in ASL: evidence for maturational constraints on phonetic perception in sign. Sign Lang Linguist. 2012;15:104–127. [Google Scholar]

- Hickok G, Bellugi U, Klima ES. The neurobiology of signed language and its implications for the neural organization of language. Nature. 1996;381:699–702. doi: 10.1038/381699a0. [DOI] [PubMed] [Google Scholar]

- Hickok G, Poeppel D. Dorsal and ventral streams: a framework for understanding aspects of the functional anatomy of language. Cognition. 2004;92:67–99. doi: 10.1016/j.cognition.2003.10.011. [DOI] [PubMed] [Google Scholar]

- Holcomb PJ, Coffey SA, Neville HJ. Visual and auditory sentence processing: a developmental analysis using event-related brain potentials. Dev Neuropsychol. 1992;8(2–3):203–231. [Google Scholar]

- Holcomb PJ, Neville HJ. Auditory and visual semantic priming in lexical decision: a comparison using event-related brain potentials. Lang Cogn Process. 1990;5(4):281–312. [Google Scholar]

- Holle H, Gunter TC, Ruschemeyer SA, Hennenlotter A, Iacoboni M. Neural correlates of the processing of co-speech gestures. NeuroImage. 2008;39:2010–2024. doi: 10.1016/j.neuroimage.2007.10.055. [DOI] [PubMed] [Google Scholar]

- Imada T, Zhang Y, Cheour M, Taulu S, Ahonen A, Kuhl P. Infant speech perception activates Broca's area: a developmental magnetoencephalography study. Neuroreport. 2006;17(10):957–962. doi: 10.1097/01.wnr.0000223387.51704.89. [DOI] [PubMed] [Google Scholar]

- Klima ES, Bellugi U. The signs of language. Cambridge, MA: Harvard University Press; 1979. [Google Scholar]

- Koluchova J. Severe deprivation in twins: a case study. J Child Psychol Psychiatry. 1972;13:107–114. doi: 10.1111/j.1469-7610.1972.tb01124.x. [DOI] [PubMed] [Google Scholar]

- Kuhl P. Brain mechanisms in early language acquisition. Neuron. 2010;67:713–727. doi: 10.1016/j.neuron.2010.08.038. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kuhl P. Early language acquisition: cracking the speech code. Nat Rev Neurosci. 2004;5(11):831–843. doi: 10.1038/nrn1533. [DOI] [PubMed] [Google Scholar]

- Kuhl P, Williams KA, Lacerda F, Stevens KN, Lindblom B. Linguistic experience alters phonetic perception in infants by 6 months of age. Science. 1992;255:606–608. doi: 10.1126/science.1736364. [DOI] [PubMed] [Google Scholar]

- Kutas M, Federmeier KD. Electrophysiology reveals semantic memory use in language comprehension. Trends Cogn Sci. 2000;4(12):463–470. doi: 10.1016/s1364-6613(00)01560-6. [DOI] [PubMed] [Google Scholar]

- Kutas M, Federmeier KD. Thirty years and counting: finding meaning in the N400 component of the event-related brain potential (ERP). Annu Rev Psychol. 2011;62:621–647. doi: 10.1146/annurev.psych.093008.131123. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kutas M, Hillyard SA. Reading senseless sentences: brain potentials reflect semantic incongruity. Science. 1980;207:203–205. doi: 10.1126/science.7350657. [DOI] [PubMed] [Google Scholar]

- Kutas M, Iragui V. The N400 in a semantic categorization task across 6 decades. Electroencephalogr Clin Neurophysiol. 1998;108(5):456–471. doi: 10.1016/s0168-5597(98)00023-9. [DOI] [PubMed] [Google Scholar]

- Leonard MK, Brown TT, Travis KE, Gharapetian L, Hagler DJ, Jr, Dale AM, Elman J, Halgren E. Spatiotemporal dynamics of bilingual word processing. NeuroImage. 2010;49(4):3286–3294. doi: 10.1016/j.neuroimage.2009.12.009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Leonard MK, Ferjan Ramirez N, Torres C, Travis KE, Hatrak M, Mayberry RI, Halgren E. Signed words in the congenitally deaf evoke typical late lexico-semantic responses with no early visual responses in left superior temporal cortex. J Neurosci. 2012;32(28):9700–9705. doi: 10.1523/JNEUROSCI.1002-12.2012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Leonard MK, Torres C, Travis KE, Brown TT, Hagler DJ, Jr, Dale AM, Elman J, Halgren E. Language proficiency modulates the recruitment of non-classical language areas in bilinguals. PLoS One. 2011;6(3):e18240. doi: 10.1371/journal.pone.0018240. [DOI] [PMC free article] [PubMed] [Google Scholar]

- MacSweeney M, Campbell R, Woll B, Brammer MJ, Giampietro V, David AS, Calvert GA, McGuire PK. Lexical and sentential processing in British Sign Language. Hum Brain Mapp. 2006;27:63–76. doi: 10.1002/hbm.20167. [DOI] [PMC free article] [PubMed] [Google Scholar]

- MacSweeney M, Capek C, Campbell R, Woll B. The signing brain: the neurobiology of sign language. Trends Cogn Sci. 2008;12(11):432–440. doi: 10.1016/j.tics.2008.07.010. [DOI] [PubMed] [Google Scholar]

- MacSweeney M, Waters D, Brammer MJ, Woll B, Goswami U. Phonological processing in deaf signers and the impact of age of first language acquisition. NeuroImage. 2008;40:1369–1379. doi: 10.1016/j.neuroimage.2007.12.047. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Marinkovic K, Dhond RP, Dale AM, Glessner M, Carr V, Halgren E. Spatiotemporal dynamics of modality-specific and supramodal word processing. Neuron. 2003;38(3):487–497. doi: 10.1016/s0896-6273(03)00197-1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Maris E, Oostenveld R. Nonparametric statistical testing of EEG- and MEG-data. J Neurosci Methods. 2007;164(1):177–190. doi: 10.1016/j.jneumeth.2007.03.024. [DOI] [PubMed] [Google Scholar]

- Mayberry RI. Cognitive development of deaf children: the interface of language and perception in neuropsychology. In: Segalowitz SJ, Rapin I, editors. Handbook of neuropsychology. 2nd ed. Part II. Vol. 8. Amsterdam: Elsevier; 2002. pp. 71–107. [Google Scholar]

- Mayberry RI. Early language acquisition and adult language ability: what sign language reveals about the critical period for language. In: Marschark M, Spencer P, editors. Oxford handbook of deaf studies, language, and education. Vol. 2. Oxford University Press; 2010. pp. 281–291. [Google Scholar]

- Mayberry RI, Chen J-K, Witcher P, Klein D. Age of acquisition effects on the functional organization of language in the adult brain. Brain Lang. 2011;119:16–29. doi: 10.1016/j.bandl.2011.05.007. [DOI] [PubMed] [Google Scholar]

- Mayberry RI, Eichen E. The long-lasting advantage of learning sign language in childhood: another look at the critical period for language acquisition. J Mem Lang. 1991;30:486–512. [Google Scholar]

- Mayberry RI, Fischer S. Looking through phonological shape to sentence meaning: the bottleneck of non-native sign language processing. Mem Cognit. 1989;17:740–754. doi: 10.3758/bf03202635. [DOI] [PubMed] [Google Scholar]

- Mayberry RI, Lock E, Kazmi H. Linguistic ability and early language exposure. Nature. 2002;417:38. doi: 10.1038/417038a. [DOI] [PubMed] [Google Scholar]

- McDonald CR, Thesen T, Carlson C, Blumberg M, Girard HM, Trongnetrpunya A, Sherfey JS, Devinsky O, Kuzniecky R, Doyle WK, et al. Multimodal imaging of repetition priming: using fMRI, MEG, and intracranial EEG to reveal spatiotemporal profiles of word processing. NeuroImage. 2010;53(2):707–717. doi: 10.1016/j.neuroimage.2010.06.069. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Moon C, Fifer WP. Evidence of transnatal auditory learning. J Perinatol. 2000;20:S37–S44. doi: 10.1038/sj.jp.7200448. [DOI] [PubMed] [Google Scholar]

- Moreno EM, Kutas M. Processing semantic anomalies in two languages: an electrophysiological exploration in both languages of Spanish-English bilinguals. Cogn Brain Res. 2005;22:205–220. doi: 10.1016/j.cogbrainres.2004.08.010. [DOI] [PubMed] [Google Scholar]

- Morford JP. Grammatical development in adolescent first-language learners. Linguistics. 2003;41:681–721. [Google Scholar]

- Morford JP, Carlson ML. Sign perception and recognition in non-native signers of ASL. Lang Learn Dev. 2011;7(2):149–168. doi: 10.1080/15475441.2011.543393. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Morford JP, Hänel-Faulhaber B. Homesigners as late learners: connecting the dots from delayed acquisition in childhood to sign language processing in adulthood. Lang Ling Compass. 2011;5(8):525–537. [Google Scholar]

- Newman AJ, Bavelier D, Corina D, Jezzard P, Neville HJ. A critical period for right hemisphere recruitment in American Sign Language processing. Nat Neurosci. 2002;5(1):76–80. doi: 10.1038/nn775. [DOI] [PubMed] [Google Scholar]

- Newport EL. Maturational constraints on language learning. Cogn Sci. 1990;14:11–28. [Google Scholar]

- Newport EL, Meier R. The acquisition of American Sign Language. In: Slobin D, editor. The cross-linguistic study of language acquisition. Vol. 1. Hillsdale, NJ: Lawrence Erlbaum Associates; 1985. [Google Scholar]

- Nunes MV, Castro-Caldas A, Del Rio D, Maestu F, Ortiz T. The ex-illiterate brain. Critical period, cognitive reserve and HAROLD model. Dement Neuropsychol. 2009;3(3):222–227. doi: 10.1590/S1980-57642009DN30300008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Oostendorp TF, Van Oosterom A. Source parameter estimation using realistic geometry in bioelectricity and biomagnetism. In: Nenonen J, Rajala HM, Katila T, editors. Biomagnetic localization and 3D modeling. Helsinki: Helsinki University of Technology; 1992. Report TKK-F-A689. [Google Scholar]

- Patterson K, Nestor PJ, Rogers TT. Where do you know what you know? The representation of semantic knowledge in the human brain. Nat Rev Neurosci. 2007;8(12):976–987. doi: 10.1038/nrn2277. [DOI] [PubMed] [Google Scholar]

- Penfield W, Roberts WL. Speech and brain mechanisms. Princeton, NJ: Princeton University Press; 1959. [Google Scholar]

- Perani D, Paulesu E, Galles NS, Dupoux E, Dehaene S, Bettinardi V, Cappa SF, Fazio F, Mehler J. The bilingual brain. Proficiency and age of acquisition of the second language. Brain. 1998;121(10):1841–1852. doi: 10.1093/brain/121.10.1841. [DOI] [PubMed] [Google Scholar]